GPU Programming
David Monismith
Based on notes taken from the Udacity Parallel Programming Course

GPU Programming Paradigm
• General-purpose CPUs saw clock speed increases for many years.
• Since about 2005 the trend has been to add more CPU cores, approximately doubling the number of cores every 2 years.
• GPU manufacturers have taken a different approach, adding many small processors to their chips so that many (thousands of) operations can be performed per cycle.

Modern GPUs
• 1000s of arithmetic logic units
• 100s or 1000s of processors
• 10,000 or more threads running concurrently
• Allow for general-purpose processing: GPGPU (general purpose graphics processing unit)
• Recall from our discussion of computer architecture that advances in CPU/GPU manufacturing (increases in the number of transistors on a chip) allow for smaller dies that require less power and are (hopefully) faster.
• Also recall that the power wall has prevented drastic increases in clock speed over the past 10 years.

Why GPGPUs?
• Instead of building faster processors, build more!
• CPUs are very flexible (good) but have complex control units, which are difficult to design and expensive in terms of power.
• GPUs have simple control hardware, which leaves more transistors for computation and makes them more power efficient, but they are more difficult to program than CPUs.

GPGPU Focus
• The GPU focus is on throughput: the number of jobs completed per unit time.
• The CPU focus is on latency: minimizing the amount of time it takes to run a single job.
• This is similar to comparing a 747-800 to a Concorde.
• The Concorde will arrive at the destination faster (lower latency), but the 747-800 can transport more people per unit time (higher throughput) because it has more seats.

GPU vs CPU
• CPU: 16-core Intel Xeon (Haswell)
  – 8 AVX operations per core
  – 2 threads per core (Hyper-Threading)
  – 16 * 8 * 2 = 256 operations in parallel
• GPU: NVIDIA Tesla K80
  – 4992 CUDA cores
  – At least 4992 operations in parallel

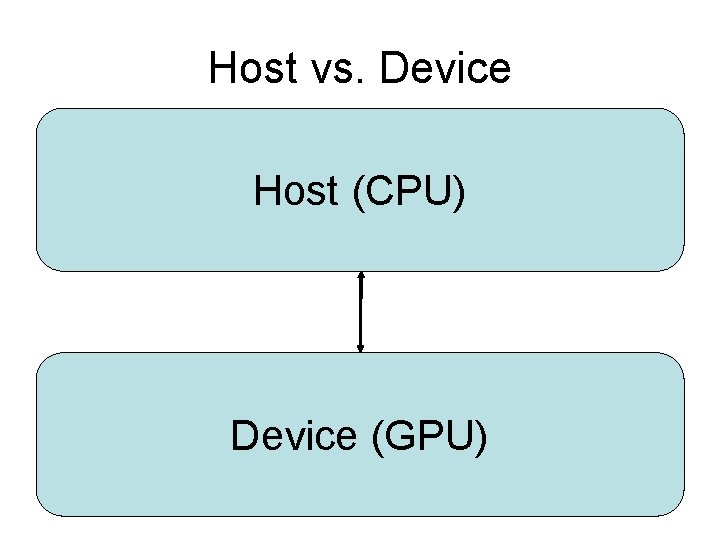

Host vs. Device
• Host (CPU)
• Device (GPU)

CUDA
• Allows for programming both host and device with one program.
• Supports many languages, including C.
• Part of the problem may run on the host and the other part may run on the device.
• The device is assumed to be a coprocessor.
• The device and host are assumed to have their own memories.

CUDA Memory Operations
• Data may be moved from device to host or from host to device with one function: cudaMemcpy
• Memory may be allocated on the GPU with another function: cudaMalloc
• The host launches kernel functions on the GPU.
  – A kernel is code that will run on the GPU.

GPU Program Format
• Allocate storage on the GPU with cudaMalloc
• Copy input data from CPU to GPU with cudaMemcpy
• Launch a kernel on the GPU for data processing
• Copy data back from GPU to CPU with cudaMemcpy after processing is complete

Writing a Kernel Function
• Kernel functions look like serial programs.
• They are written as if they run on a single thread.
• The GPU runs a kernel function on many threads.
• Keep in mind that GPUs are meant to launch many threads in parallel.

CPU Example
• Multiply all values in an array by 7

    arr[128] = {1, …, 128};
    for(int i = 0; i < 128; i++)
        arr[i] = arr[i] * 7;

• May include instruction-level parallelism and may be parallelized with OpenMP or MPI.

GPU Example
• CPU
  – Allocates memory, copies data to and from the GPU, and launches the kernel function.
  – The kernel launch specifies the degree of parallelism.
  – arrayMult<<<1, 128>>>(arrOut, arrIn);
• GPU
  – The programmer must express the work of a single thread on the GPU.
  – Keep in mind that, just as in OpenMP/MPI, each thread on the GPU has an identifier.
  – It is reasonable to have one thread work on one element of an array on a GPU.

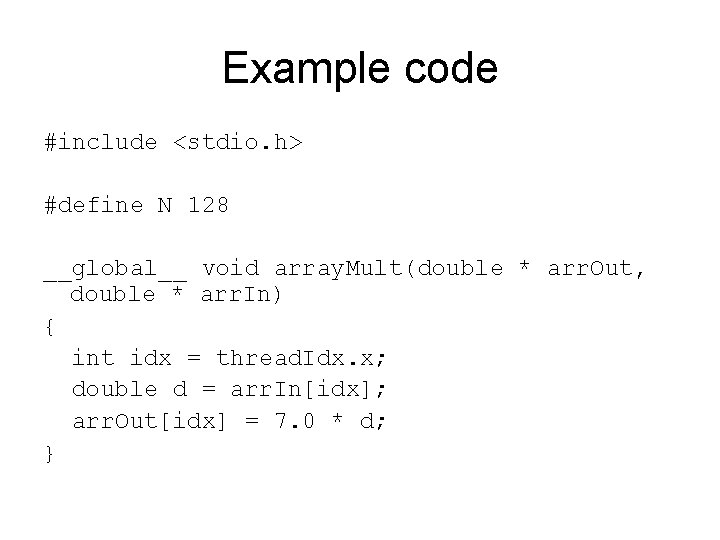

Example code

    #include <stdio.h>
    #define N 128

    __global__ void arrayMult(double * arrOut, double * arrIn)
    {
        int idx = threadIdx.x;
        double d = arrIn[idx];
        arrOut[idx] = 7.0 * d;
    }

Example Code, Continued

    int main(int argc, char ** argv)
    {
        double hostIn[N];
        double hostOut[N];

        //Generate input data on host (CPU).
        for(int i = 0; i < N; i++)
            hostIn[i] = (double) i;

        //Declare GPU pointers
        double * devIn;
        double * devOut;
        cudaMalloc((void **) &devIn, N*sizeof(double));
        cudaMalloc((void **) &devOut, N*sizeof(double));

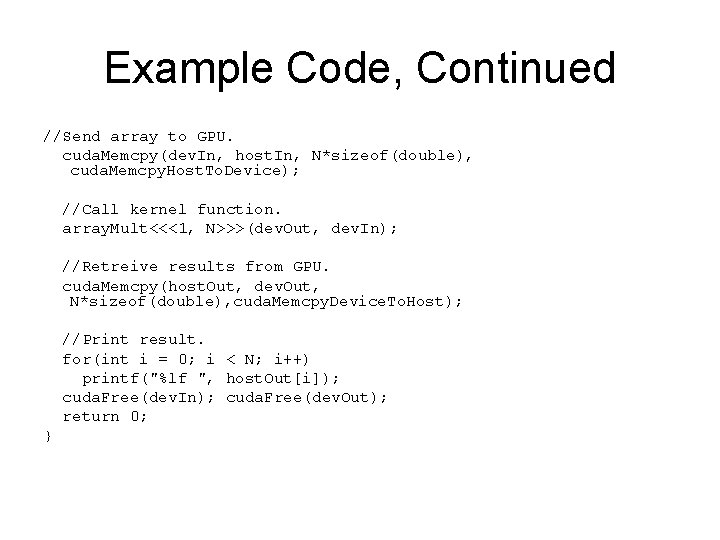

Example Code, Continued

        //Send array to GPU.
        cudaMemcpy(devIn, hostIn, N*sizeof(double), cudaMemcpyHostToDevice);

        //Call kernel function.
        arrayMult<<<1, N>>>(devOut, devIn);

        //Retrieve results from GPU.
        cudaMemcpy(hostOut, devOut, N*sizeof(double), cudaMemcpyDeviceToHost);

        //Print result.
        for(int i = 0; i < N; i++)
            printf("%lf ", hostOut[i]);

        //Release GPU memory.
        cudaFree(devIn);
        cudaFree(devOut);
        return 0;
    }
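
The example above ignores the return values of the CUDA calls. The following is a minimal sketch of one way to check them, not part of the original slides. The macro CHECK_CUDA and the wrapper multiplyBySevenChecked are hypothetical names introduced here for illustration; cudaGetErrorString, cudaGetLastError, and cudaDeviceSynchronize are CUDA runtime functions.

```cuda
#include <stdio.h>
#include <stdlib.h>
#include <cuda_runtime.h>

// Hypothetical helper macro (not part of CUDA): print a message and exit
// if a CUDA runtime call returns anything other than cudaSuccess.
#define CHECK_CUDA(call)                                             \
    do {                                                             \
        cudaError_t err_ = (call);                                   \
        if (err_ != cudaSuccess) {                                   \
            fprintf(stderr, "CUDA error at %s:%d: %s\n",             \
                    __FILE__, __LINE__, cudaGetErrorString(err_));   \
            exit(EXIT_FAILURE);                                      \
        }                                                            \
    } while (0)

// Same kernel as in the example: one thread per array element.
__global__ void arrayMult(double *arrOut, const double *arrIn)
{
    int idx = threadIdx.x;
    arrOut[idx] = 7.0 * arrIn[idx];
}

// Hypothetical wrapper: multiplies n host values by 7 on the GPU.
// Assumes n does not exceed the threads-per-block limit (single block).
void multiplyBySevenChecked(const double *hostIn, double *hostOut, int n)
{
    double *devIn = NULL;
    double *devOut = NULL;
    size_t bytes = (size_t)n * sizeof(double);

    CHECK_CUDA(cudaMalloc((void **)&devIn, bytes));
    CHECK_CUDA(cudaMalloc((void **)&devOut, bytes));
    CHECK_CUDA(cudaMemcpy(devIn, hostIn, bytes, cudaMemcpyHostToDevice));

    arrayMult<<<1, n>>>(devOut, devIn);
    CHECK_CUDA(cudaGetLastError());       // catches invalid launch configurations
    CHECK_CUDA(cudaDeviceSynchronize());  // waits for the kernel and reports errors raised while it ran

    CHECK_CUDA(cudaMemcpy(hostOut, devOut, bytes, cudaMemcpyDeviceToHost));
    CHECK_CUDA(cudaFree(devIn));
    CHECK_CUDA(cudaFree(devOut));
}
```

A kernel launch does not return an error code itself, which is why the sketch queries cudaGetLastError right after the launch.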

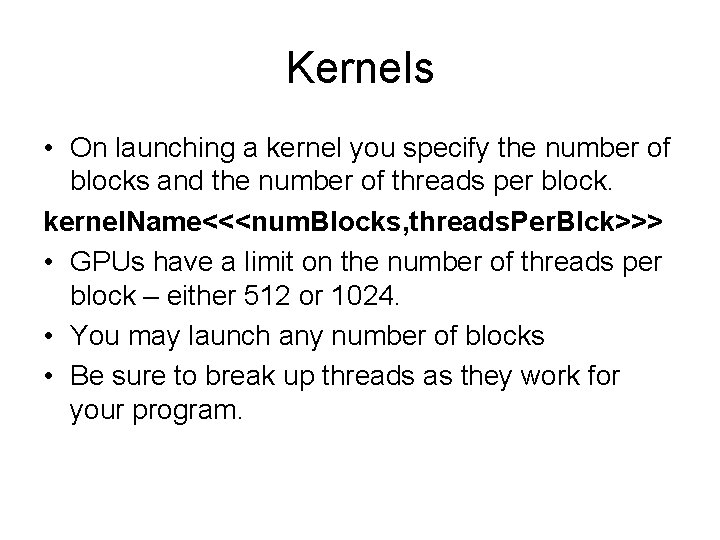

Kernels
• On launching a kernel you specify the number of blocks and the number of threads per block.
  kernelName<<<numBlocks, threadsPerBlck>>>
• GPUs have a limit on the number of threads per block: 512 on older devices or 1024 on newer ones.
• You may launch a very large number of blocks, up to the device's grid-size limit.
• Be sure to divide threads among blocks in a way that suits your program.
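
Rather than hard-coding the thread limit, it can be read from the device at run time. The sketch below is an illustration, not part of the original slides; the function name maxThreadsPerBlock is hypothetical, while cudaGetDeviceProperties and the cudaDeviceProp fields it reads are part of the CUDA runtime API.

```cuda
#include <stdio.h>
#include <cuda_runtime.h>

// Hypothetical helper: returns the threads-per-block limit of the given
// device, or -1 if the device properties cannot be read.
int maxThreadsPerBlock(int device)
{
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, device) != cudaSuccess)
        return -1;

    // maxGridSize[0] is the largest number of blocks allowed in the x dimension.
    printf("%s: up to %d threads per block, up to %d blocks in x\n",
           prop.name, prop.maxThreadsPerBlock, prop.maxGridSize[0]);
    return prop.maxThreadsPerBlock;
}
```

Calling maxThreadsPerBlock(0) queries the first GPU in the system, assuming one is present.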

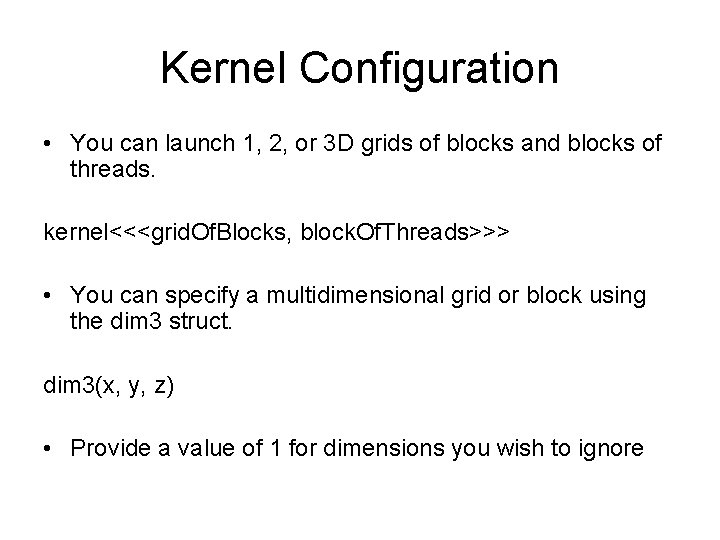

Kernel Configuration
• You can launch 1D, 2D, or 3D grids of blocks and blocks of threads.
  kernel<<<gridOfBlocks, blockOfThreads>>>
• You can specify a multidimensional grid or block using the dim3 struct.
  dim3(x, y, z)
• Provide a value of 1 for dimensions you wish to ignore.
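
As an illustration of dim3, the 128-element array from the earlier example can be treated as an 8 x 16 grid of values handled by one two-dimensional block. This is a sketch under that assumption; the names ROWS, COLS, arrayMult2D, and launchArrayMult2D are hypothetical and do not appear in the original slides.

```cuda
#include <cuda_runtime.h>

#define ROWS 8
#define COLS 16

// Each thread handles one element, located by its (x, y) position in the block.
__global__ void arrayMult2D(double *arrOut, const double *arrIn)
{
    int idx = threadIdx.y * COLS + threadIdx.x;  // row-major offset
    arrOut[idx] = 7.0 * arrIn[idx];
}

// Launches a single block of COLS x ROWS threads on device pointers.
void launchArrayMult2D(double *devOut, const double *devIn)
{
    dim3 gridOfBlocks(1, 1, 1);          // one block; unused dimensions set to 1
    dim3 blockOfThreads(COLS, ROWS, 1);  // 16 * 8 = 128 threads
    arrayMult2D<<<gridOfBlocks, blockOfThreads>>>(devOut, devIn);
}
```

The pointers passed to launchArrayMult2D are expected to refer to device memory of at least ROWS * COLS doubles, allocated with cudaMalloc as in the earlier example.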

Grids, Blocks, and Threads
• threadIdx – thread within a block, accessed with threadIdx.x, threadIdx.y, etc.
• blockDim – size of a block
• blockIdx – block number within a grid
• gridDim – size of the grid
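
These variables are commonly combined to give each thread a unique global index when an array is too large for one block. The sketch below assumes a one-dimensional launch; the names arrayMultLarge, blocksNeeded, and launchArrayMultLarge are hypothetical and introduced only for illustration.

```cuda
#include <cuda_runtime.h>

// Each thread computes its global index from its block and thread numbers.
// The bounds check matters because the last block may have spare threads.
__global__ void arrayMultLarge(double *arrOut, const double *arrIn, int n)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n)
        arrOut[idx] = 7.0 * arrIn[idx];
}

// Number of blocks needed to cover n elements (integer division rounded up).
int blocksNeeded(int n, int threadsPerBlock)
{
    return (n + threadsPerBlock - 1) / threadsPerBlock;
}

// Launches enough 256-thread blocks to cover n elements of device memory.
void launchArrayMultLarge(double *devOut, const double *devIn, int n)
{
    int threadsPerBlock = 256;  // an arbitrary choice below the per-block limit
    arrayMultLarge<<<blocksNeeded(n, threadsPerBlock), threadsPerBlock>>>(devOut, devIn, n);
}
```

For example, 1000 elements with 256 threads per block need 4 blocks, so 1024 threads are launched and the last 24 do nothing.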

Map Paradigm
• We now have the ability to map elements to a kernel function.
• Note that the map function below is not part of CUDA; it is just an idea.
• map(elements, function)
• Example – map(hostArr, arrayMult)
• GPUs are optimized for performing map operations (i.e., embarrassingly parallel operations).
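
One way the map idea could be written in CUDA is with a templated kernel that applies a function object to every element. This is only a sketch of the idea, not an existing CUDA facility: TimesSeven, mapKernel, and mapOnGpu are hypothetical names, and error checking is omitted for brevity.

```cuda
#include <cuda_runtime.h>

// Hypothetical function object: the operation applied to each element.
struct TimesSeven {
    __host__ __device__ double operator()(double x) const { return 7.0 * x; }
};

// Generic map kernel: thread idx applies f to element idx.
template <typename F>
__global__ void mapKernel(double *out, const double *in, int n, F f)
{
    int idx = blockIdx.x * blockDim.x + threadIdx.x;
    if (idx < n)
        out[idx] = f(in[idx]);
}

// Hypothetical host wrapper: copies the input to the GPU, applies f to
// every element, and copies the results back.
template <typename F>
void mapOnGpu(const double *hostIn, double *hostOut, int n, F f)
{
    double *devIn = nullptr;
    double *devOut = nullptr;
    size_t bytes = (size_t)n * sizeof(double);

    cudaMalloc((void **)&devIn, bytes);
    cudaMalloc((void **)&devOut, bytes);
    cudaMemcpy(devIn, hostIn, bytes, cudaMemcpyHostToDevice);

    int threadsPerBlock = 256;
    int numBlocks = (n + threadsPerBlock - 1) / threadsPerBlock;
    mapKernel<<<numBlocks, threadsPerBlock>>>(devOut, devIn, n, f);

    // cudaMemcpy waits for the kernel to finish before copying.
    cudaMemcpy(hostOut, devOut, bytes, cudaMemcpyDeviceToHost);
    cudaFree(devIn);
    cudaFree(devOut);
}
```

With these definitions, the example from the slide would read mapOnGpu(hostArr, hostOut, 128, TimesSeven()). Each output element depends only on the matching input element, which is what makes the operation embarrassingly parallel.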
