GPU Memory Details Martin Kruli by Martin Kruli

GPU Memory Details Martin Kruliš by Martin Kruliš (v 1. 2) 11. 2020 1

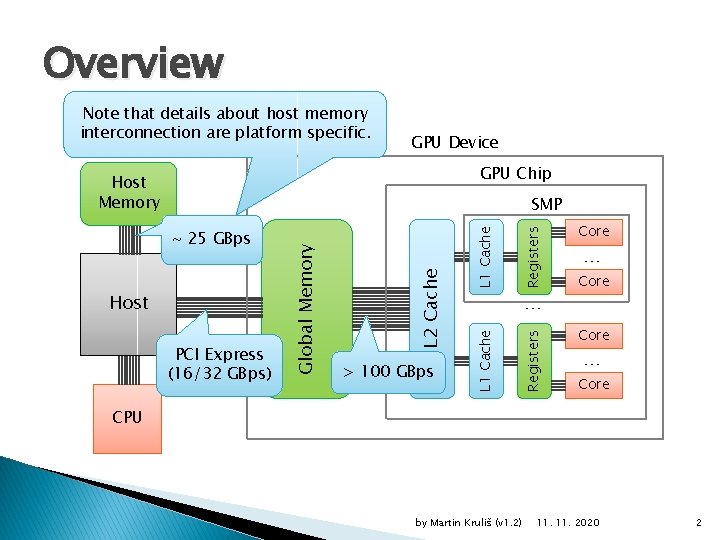

Overview Note that details about host memory interconnection are platform specific. GPU Device GPU Chip Host Memory > 100 GBps L 1 Cache Registers PCI Express (16/32 GBps) … L 1 Cache Host L 2 Cache ~ 25 GBps Global Memory SMP Core … Core CPU by Martin Kruliš (v 1. 2) 11. 2020 2

Host-Device Transfers � PCIe Transfers ◦ Much slower than internal GPU data transfers ◦ Issued explicitly by host code �cuda. Memcpy(dst, src, size, direction); �With one exception, when the GPU memory is mapped to the host memory space �The transfer call has significant overhead �Bulk transfers are preferred � Overlapping ◦ Up to 2 async. transfers while the GPU is computing ◦ We will get to this later… by Martin Kruliš (v 1. 2) 11. 2020 3

Global Memory � Global Memory Properties ◦ Off-chip, but on the GPU device ◦ High bandwidth and high latency �~ 100 GBps, 400 -600 of clock cycles ◦ Operated in transactions �Continuous aligned segments of 32 B - 128 B �Number of transaction depends on caching model, GPU architecture, and memory access pattern by Martin Kruliš (v 1. 2) 11. 2020 4

Global Memory � Global Memory Caching ◦ Data are cached in L 2 cache �Relatively small (e. g. , 2 MB on Maxwell GPUs) ◦ On CC < 3. 0 (Fermi) also cached in L 1 cache �Configurable by compiler flag �-Xptxas -dlcm=ca (Cache Always, i. e. also in L 1, default) �-Xptxas -dlcm=cg (Cache Global, i. e. L 2 only) ◦ CC 3. x (Kepler) reserves L 1 for local memory caching and registry spilling ◦ CC 5. x (Maxwell) separates L 1 cache from shared memory and unifies it with texture cache by Martin Kruliš (v 1. 2) 11. 2020 5

Global Memory � Coalesced Transfers ◦ Number of transactions caused by global memory access depends on the pattern of the access ◦ Certain access patterns are optimized ◦ CC 1. x �Threads sequentially access aligned memory block �Subsequent threads access subsequent words ◦ CC 2. 0 and later �Threads access aligned memory block �Access within the block can be permuted by Martin Kruliš (v 1. 2) 11. 2020 6

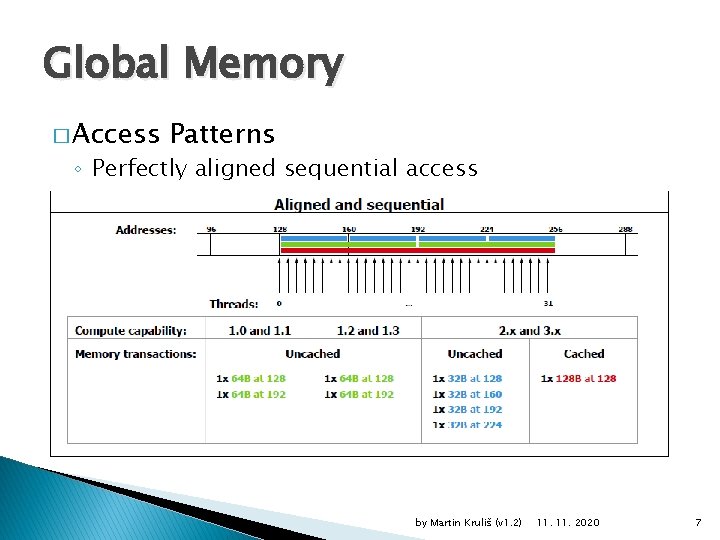

Global Memory � Access Patterns ◦ Perfectly aligned sequential access by Martin Kruliš (v 1. 2) 11. 2020 7

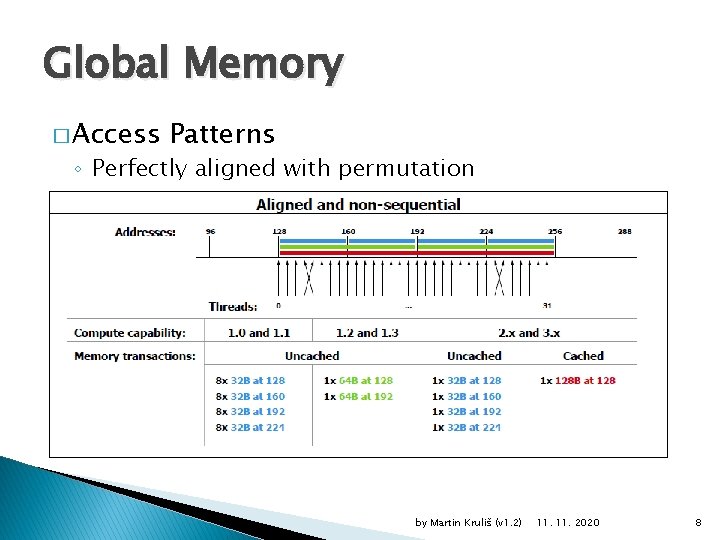

Global Memory � Access Patterns ◦ Perfectly aligned with permutation by Martin Kruliš (v 1. 2) 11. 2020 8

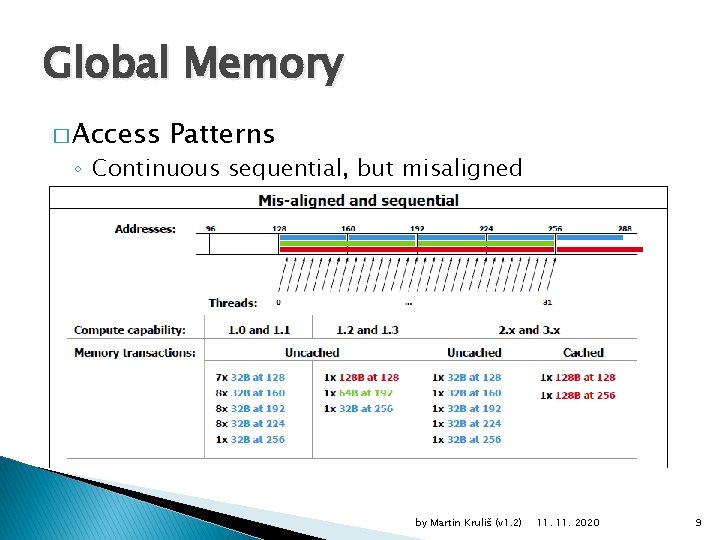

Global Memory � Access Patterns ◦ Continuous sequential, but misaligned by Martin Kruliš (v 1. 2) 11. 2020 9

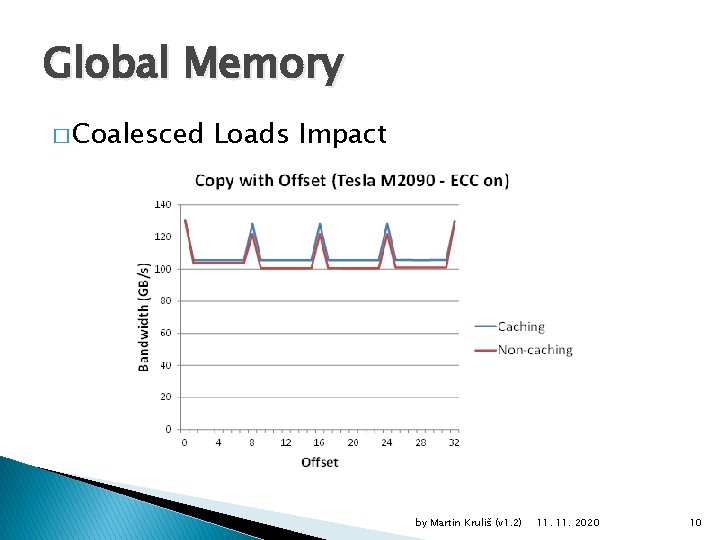

Global Memory � Coalesced Loads Impact by Martin Kruliš (v 1. 2) 11. 2020 10

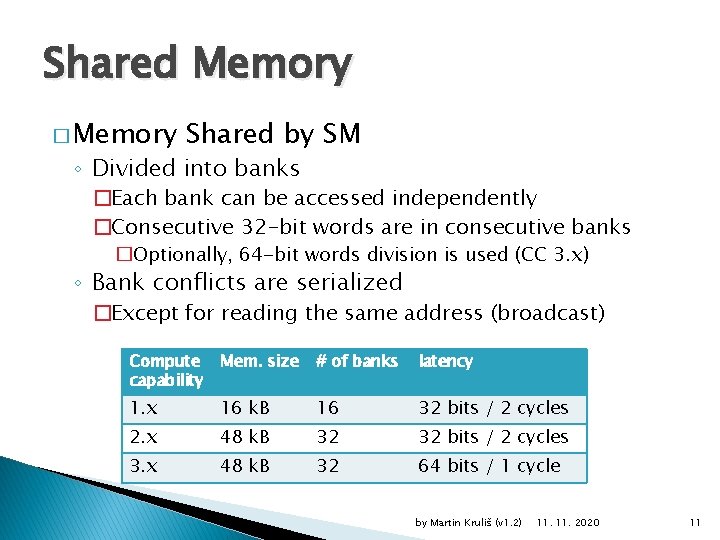

Shared Memory � Memory Shared by SM ◦ Divided into banks �Each bank can be accessed independently �Consecutive 32 -bit words are in consecutive banks �Optionally, 64 -bit words division is used (CC 3. x) ◦ Bank conflicts are serialized �Except for reading the same address (broadcast) Compute capability Mem. size # of banks latency 1. x 16 k. B 16 32 bits / 2 cycles 2. x 48 k. B 32 32 bits / 2 cycles 3. x 48 k. B 32 64 bits / 1 cycle by Martin Kruliš (v 1. 2) 11. 2020 11

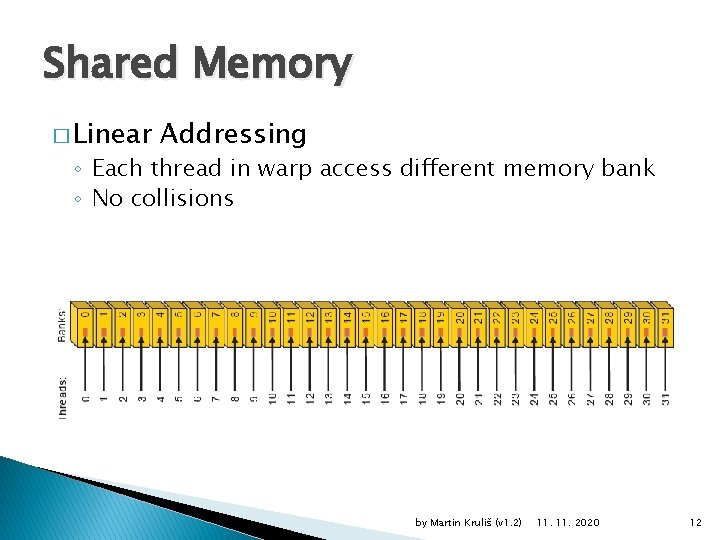

Shared Memory � Linear Addressing ◦ Each thread in warp access different memory bank ◦ No collisions by Martin Kruliš (v 1. 2) 11. 2020 12

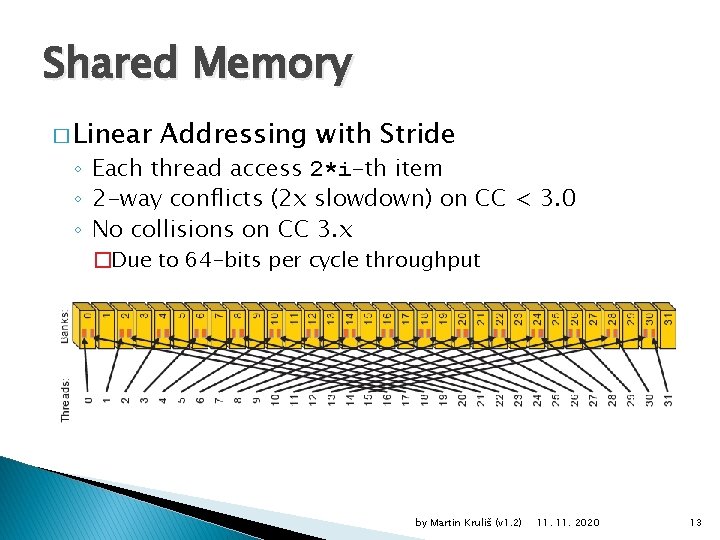

Shared Memory � Linear Addressing with Stride ◦ Each thread access 2*i-th item ◦ 2 -way conflicts (2 x slowdown) on CC < 3. 0 ◦ No collisions on CC 3. x �Due to 64 -bits per cycle throughput by Martin Kruliš (v 1. 2) 11. 2020 13

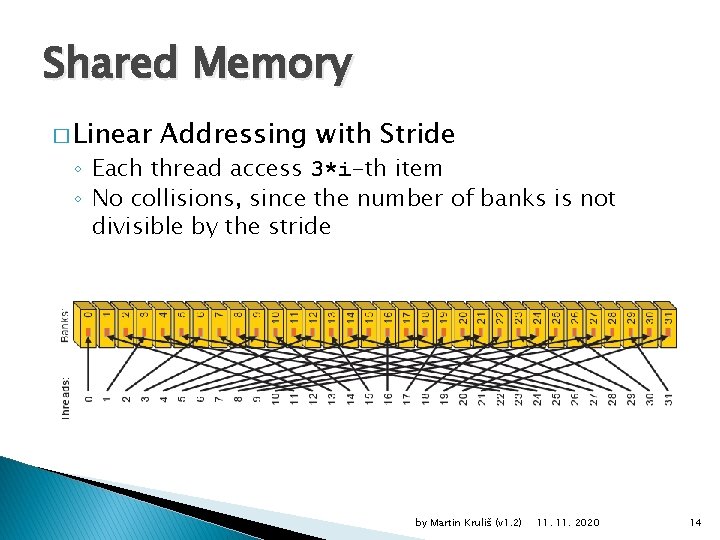

Shared Memory � Linear Addressing with Stride ◦ Each thread access 3*i-th item ◦ No collisions, since the number of banks is not divisible by the stride by Martin Kruliš (v 1. 2) 11. 2020 14

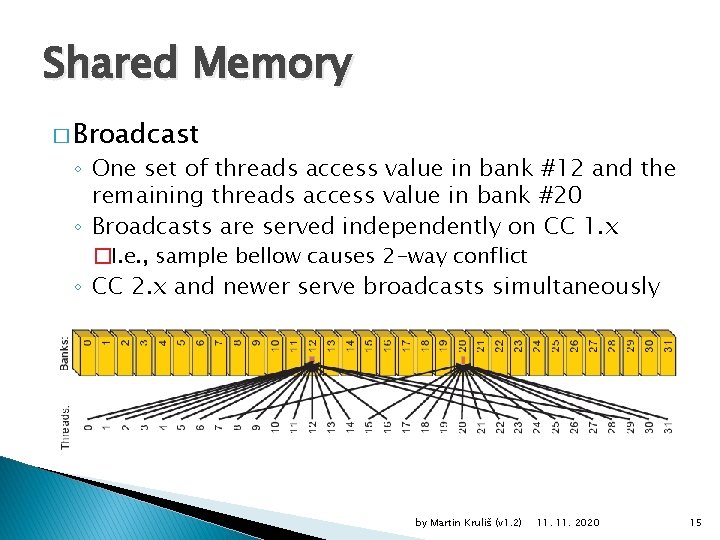

Shared Memory � Broadcast ◦ One set of threads access value in bank #12 and the remaining threads access value in bank #20 ◦ Broadcasts are served independently on CC 1. x �I. e. , sample bellow causes 2 -way conflict ◦ CC 2. x and newer serve broadcasts simultaneously by Martin Kruliš (v 1. 2) 11. 2020 15

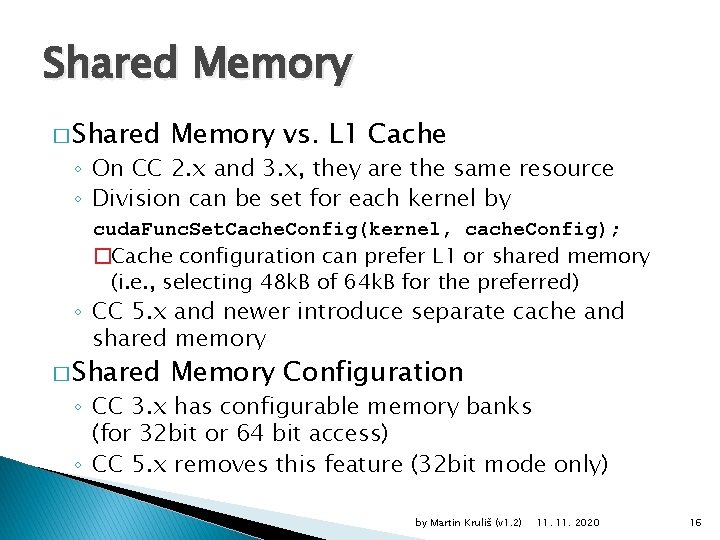

Shared Memory � Shared Memory vs. L 1 Cache ◦ On CC 2. x and 3. x, they are the same resource ◦ Division can be set for each kernel by cuda. Func. Set. Cache. Config(kernel, cache. Config); �Cache configuration can prefer L 1 or shared memory (i. e. , selecting 48 k. B of 64 k. B for the preferred) ◦ CC 5. x and newer introduce separate cache and shared memory � Shared Memory Configuration ◦ CC 3. x has configurable memory banks (for 32 bit or 64 bit access) ◦ CC 5. x removes this feature (32 bit mode only) by Martin Kruliš (v 1. 2) 11. 2020 16

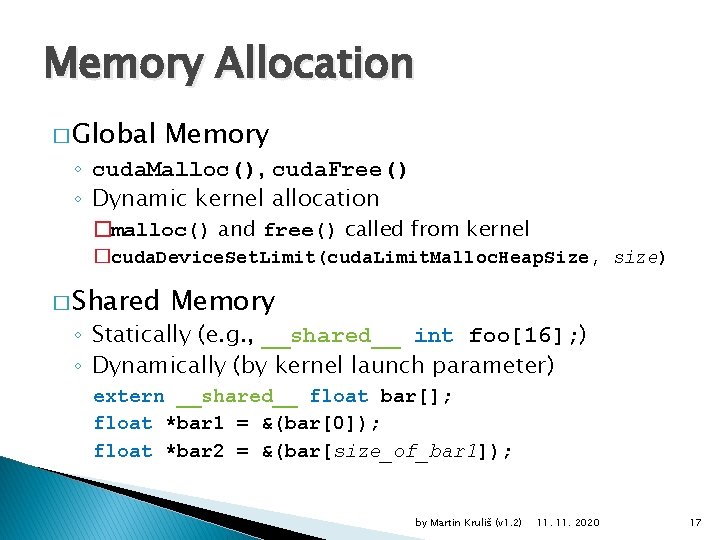

Memory Allocation � Global Memory ◦ cuda. Malloc(), cuda. Free() ◦ Dynamic kernel allocation �malloc() and free() called from kernel �cuda. Device. Set. Limit(cuda. Limit. Malloc. Heap. Size, size) � Shared Memory ◦ Statically (e. g. , __shared__ int foo[16]; ) ◦ Dynamically (by kernel launch parameter) extern __shared__ float bar[]; float *bar 1 = &(bar[0]); float *bar 2 = &(bar[size_of_bar 1]); by Martin Kruliš (v 1. 2) 11. 2020 17

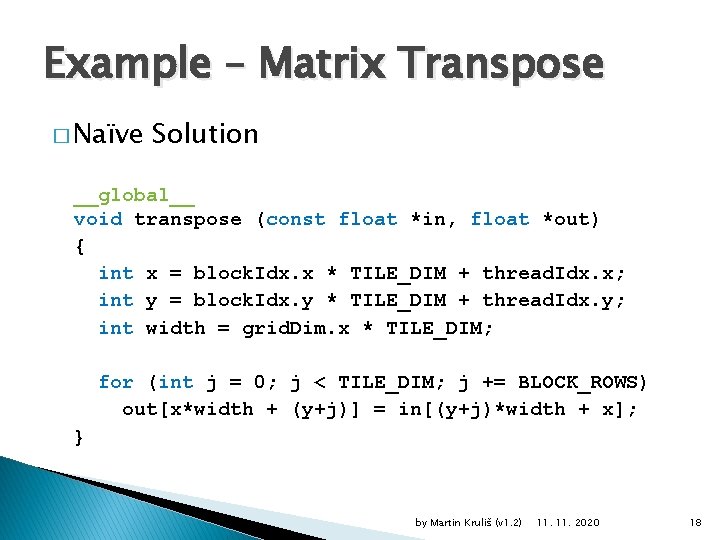

Example – Matrix Transpose � Naïve Solution __global__ void transpose (const float *in, float *out) { int x = block. Idx. x * TILE_DIM + thread. Idx. x; int y = block. Idx. y * TILE_DIM + thread. Idx. y; int width = grid. Dim. x * TILE_DIM; for (int j = 0; j < TILE_DIM; j += BLOCK_ROWS) out[x*width + (y+j)] = in[(y+j)*width + x]; } by Martin Kruliš (v 1. 2) 11. 2020 18

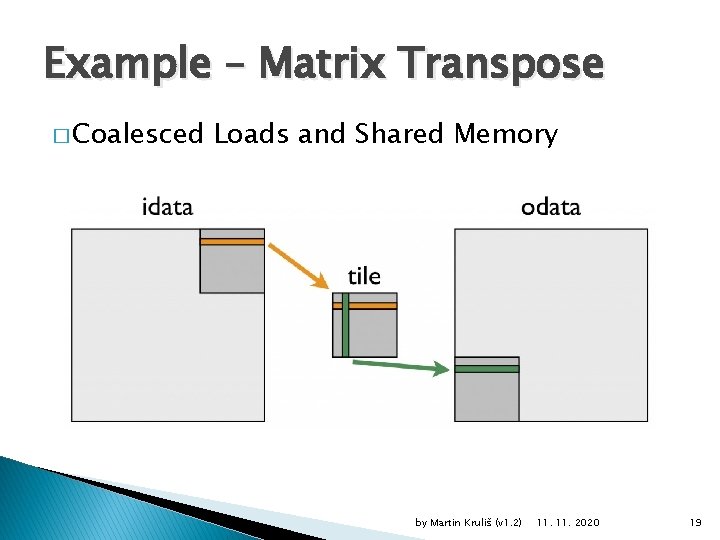

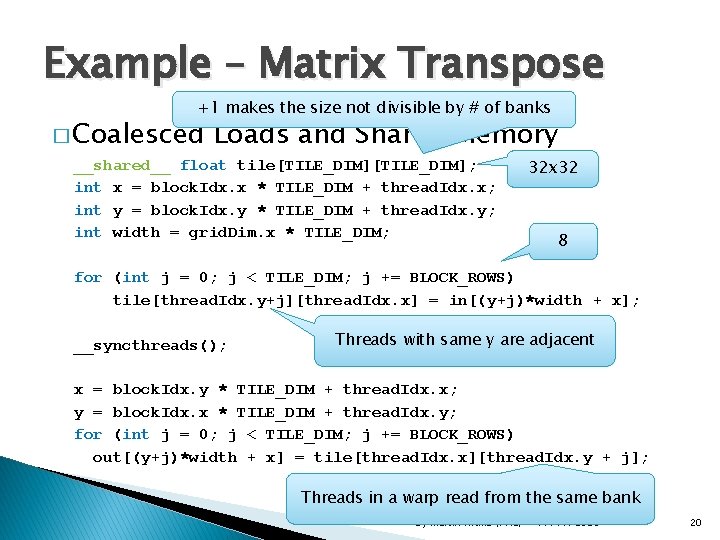

Example – Matrix Transpose � Coalesced Loads and Shared Memory by Martin Kruliš (v 1. 2) 11. 2020 19

Example – Matrix Transpose +1 makes the size not divisible by # of banks � Coalesced Loads and Shared Memory __shared__ float tile[TILE_DIM]; int x = block. Idx. x * TILE_DIM + thread. Idx. x; int y = block. Idx. y * TILE_DIM + thread. Idx. y; int width = grid. Dim. x * TILE_DIM; 32 x 32 8 for (int j = 0; j < TILE_DIM; j += BLOCK_ROWS) tile[thread. Idx. y+j][thread. Idx. x] = in[(y+j)*width + x]; __syncthreads(); Threads with same y are adjacent x = block. Idx. y * TILE_DIM + thread. Idx. x; y = block. Idx. x * TILE_DIM + thread. Idx. y; for (int j = 0; j < TILE_DIM; j += BLOCK_ROWS) out[(y+j)*width + x] = tile[thread. Idx. x][thread. Idx. y + j]; Threads in a warp read from the same bank by Martin Kruliš (v 1. 2) 11. 2020 20

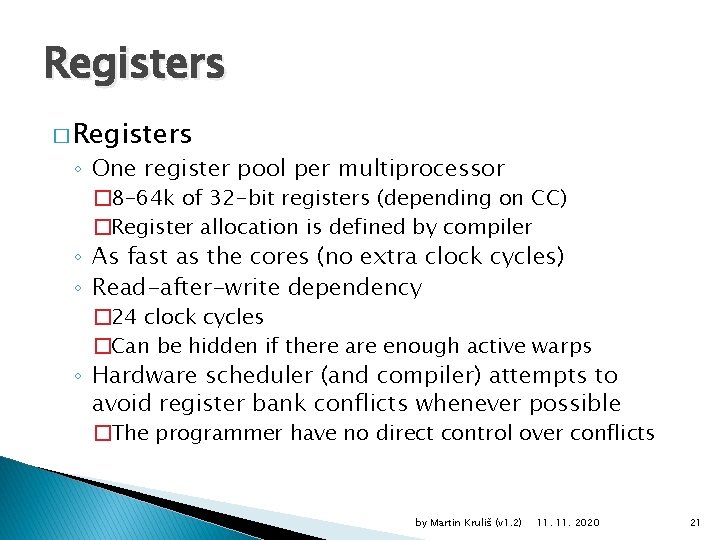

Registers � Registers ◦ One register pool per multiprocessor � 8 -64 k of 32 -bit registers (depending on CC) �Register allocation is defined by compiler ◦ As fast as the cores (no extra clock cycles) ◦ Read-after-write dependency � 24 clock cycles �Can be hidden if there are enough active warps ◦ Hardware scheduler (and compiler) attempts to avoid register bank conflicts whenever possible �The programmer have no direct control over conflicts by Martin Kruliš (v 1. 2) 11. 2020 21

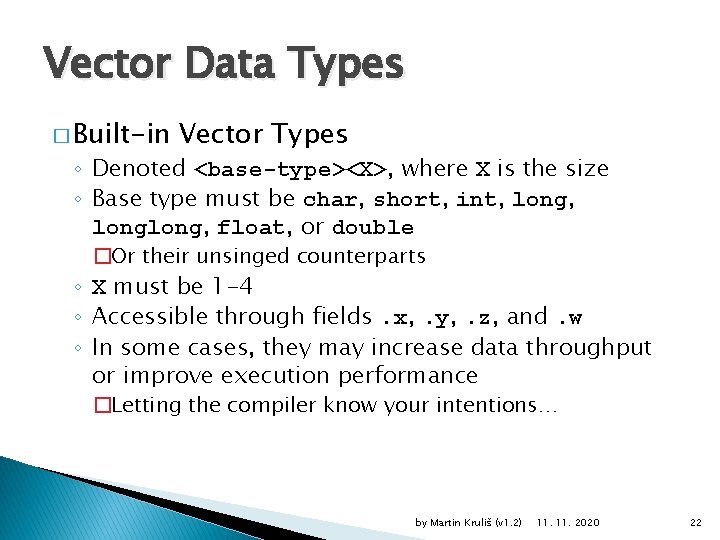

Vector Data Types � Built-in Vector Types ◦ Denoted <base-type><X>, where X is the size ◦ Base type must be char, short, int, longlong, float, or double �Or their unsinged counterparts ◦ X must be 1 -4 ◦ Accessible through fields. x, . y, . z, and. w ◦ In some cases, they may increase data throughput or improve execution performance �Letting the compiler know your intentions… by Martin Kruliš (v 1. 2) 11. 2020 22

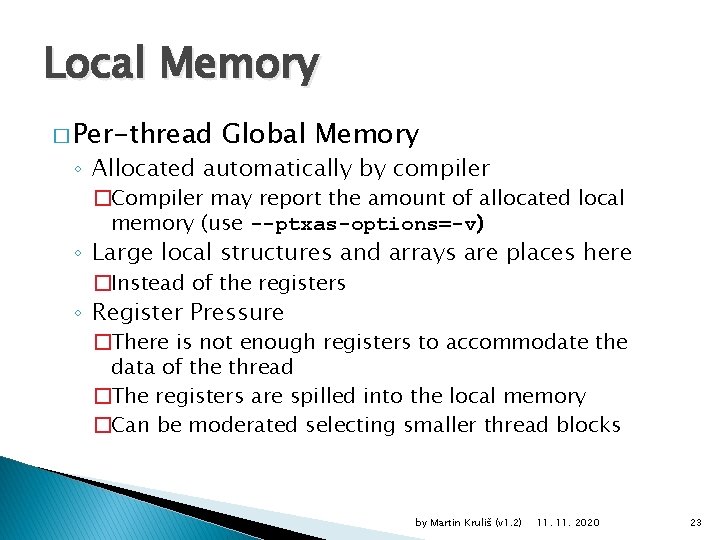

Local Memory � Per-thread Global Memory ◦ Allocated automatically by compiler �Compiler may report the amount of allocated local memory (use --ptxas-options=-v) ◦ Large local structures and arrays are places here �Instead of the registers ◦ Register Pressure �There is not enough registers to accommodate the data of the thread �The registers are spilled into the local memory �Can be moderated selecting smaller thread blocks by Martin Kruliš (v 1. 2) 11. 2020 23

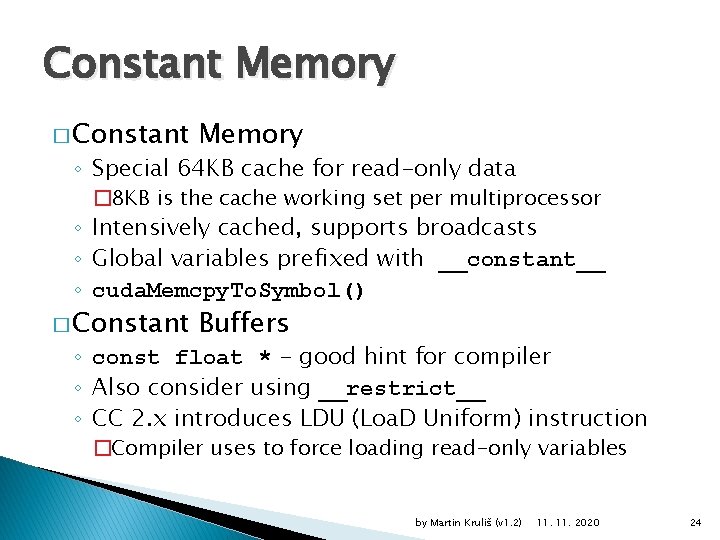

Constant Memory � Constant Memory ◦ Special 64 KB cache for read-only data � 8 KB is the cache working set per multiprocessor ◦ Intensively cached, supports broadcasts ◦ Global variables prefixed with __constant__ ◦ cuda. Memcpy. To. Symbol() � Constant Buffers ◦ const float * - good hint for compiler ◦ Also consider using __restrict__ ◦ CC 2. x introduces LDU (Loa. D Uniform) instruction �Compiler uses to force loading read-only variables by Martin Kruliš (v 1. 2) 11. 2020 24

Texture Memory � Texture Memory ◦ Regular memory designated as texture �Loaded via texture cache (specialized circuitry) ◦ Texture cache is optimized for 2 D spatial locality �But textures may be 1 D-3 D ◦ Additional functionality �Fast data interpolation (nearest neighbor/linear) �Normalized coordinate system �Indexing by floats (not integers) �Including support for level of detail �Handling the boundary cases by Martin Kruliš (v 1. 2) 11. 2020 25

Implications and Guidelines � Memory Caching ◦ The structures should be designed to utilize caches in best way possible �The workset of active blocks should fit L 2 cache ◦ Provide maximum information for the compiler �Vector types, const, … ◦ Re-using already loaded data �In shared memory, in registers � Data Alignment ◦ Operate efficiently on 32 bit/64 bit values only ◦ Align data structures to suitable powers of 2 by Martin Kruliš (v 1. 2) 11. 2020 26

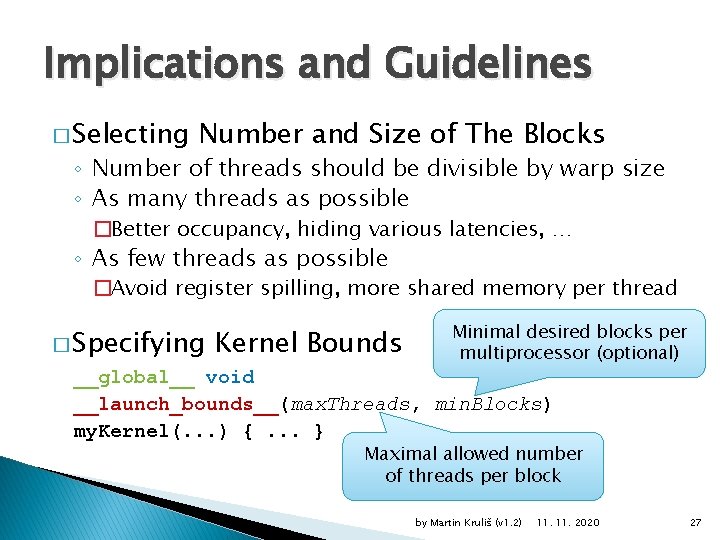

Implications and Guidelines � Selecting Number and Size of The Blocks ◦ Number of threads should be divisible by warp size ◦ As many threads as possible �Better occupancy, hiding various latencies, … ◦ As few threads as possible �Avoid register spilling, more shared memory per thread � Specifying Kernel Bounds Minimal desired blocks per multiprocessor (optional) __global__ void __launch_bounds__(max. Threads, min. Blocks) my. Kernel(. . . ) {. . . } Maximal allowed number of threads per block by Martin Kruliš (v 1. 2) 11. 2020 27

Discussion by Martin Kruliš (v 1. 2) 11. 2020 28

- Slides: 28