GPU Lab 1 Discussion A MATRIX-MATRIX MULTIPLICATION EXAMPLE

A simple main function for the matrix multiplication example

    int main(void) {
        // 1. Allocate and initialize the matrices M, N, P
        //    I/O to read the input matrices M and N

        // 2. M*N on the device
        MatrixMultiplication(M, N, P, width);

        // 3. I/O to write the output matrix P
        //    Free matrices M, N, P
        …
        return 0;
    }

Step 1: Allocate and copy

    // Allocate device memory for M, N and P
    // Copy M and N to the allocated device memory locations

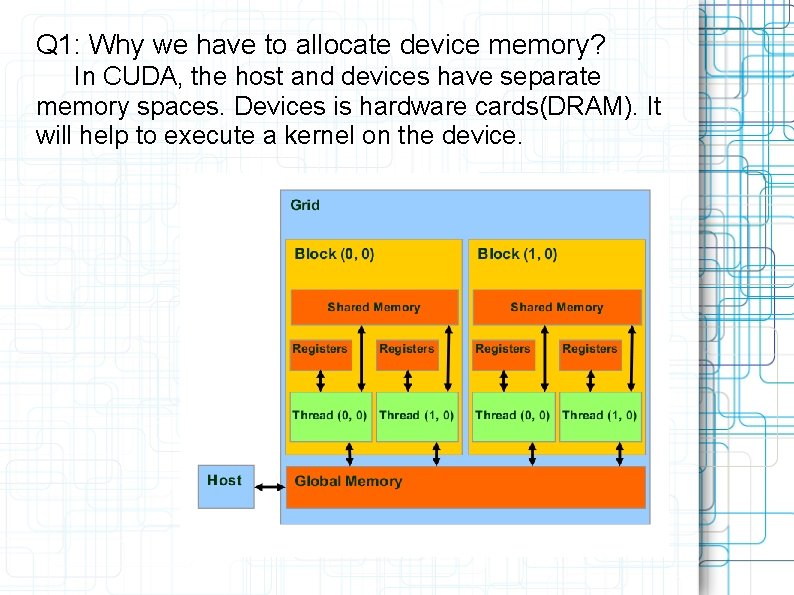

Q1: Why do we have to allocate device memory? In CUDA, the host and the device have separate memory spaces. The device is a hardware card with its own DRAM. To execute a kernel on the device, we allocate device memory and copy the input data from host memory into it.

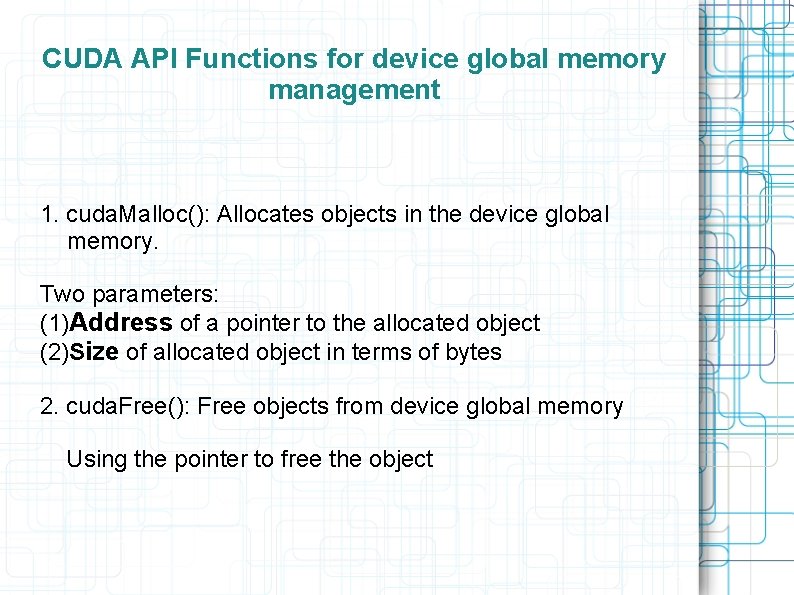

CUDA API functions for device global memory management

1. cudaMalloc(): allocates an object in the device global memory. It takes two parameters:
   (1) the address of a pointer to the allocated object
   (2) the size of the allocated object in bytes
2. cudaFree(): frees an object from the device global memory. It takes the pointer to the object to be freed.

The program provides the following functions for allocation and copying:

    // Allocate M, N, P on the device
    Matrix AllocateMatrix(int height, int width, int init);

    // Transfer pertinent data from the host memory to the device memory
    void CopyToDeviceMatrix(Matrix Mdevice, const Matrix Mhost);

    // Copy P from the device memory when the matrix multiplication is done
    void CopyFromDeviceMatrix(Matrix Mhost, const Matrix Mdevice);

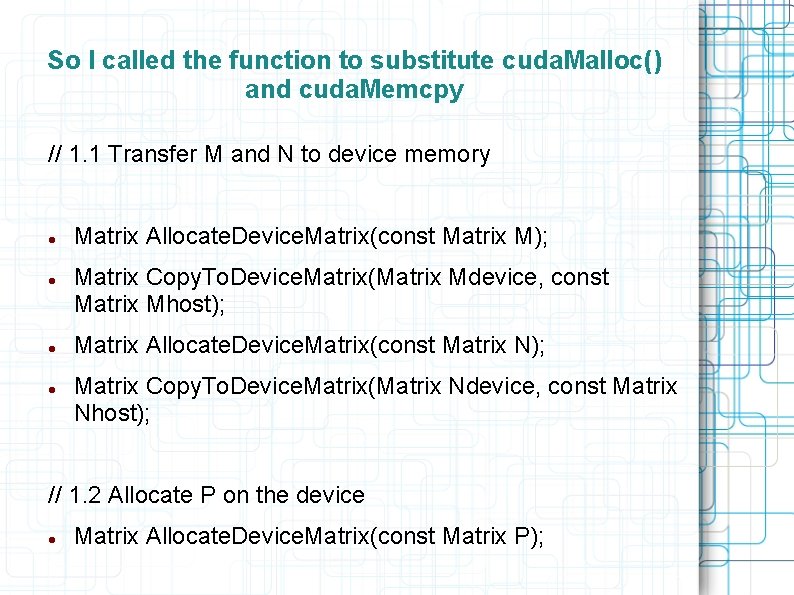

So I called these functions in place of cudaMalloc() and cudaMemcpy():

    // 1.1 Transfer M and N to device memory
    Matrix Mdevice = AllocateDeviceMatrix(M);
    CopyToDeviceMatrix(Mdevice, M);
    Matrix Ndevice = AllocateDeviceMatrix(N);
    CopyToDeviceMatrix(Ndevice, N);

    // 1.2 Allocate P on the device
    Matrix Pdevice = AllocateDeviceMatrix(P);

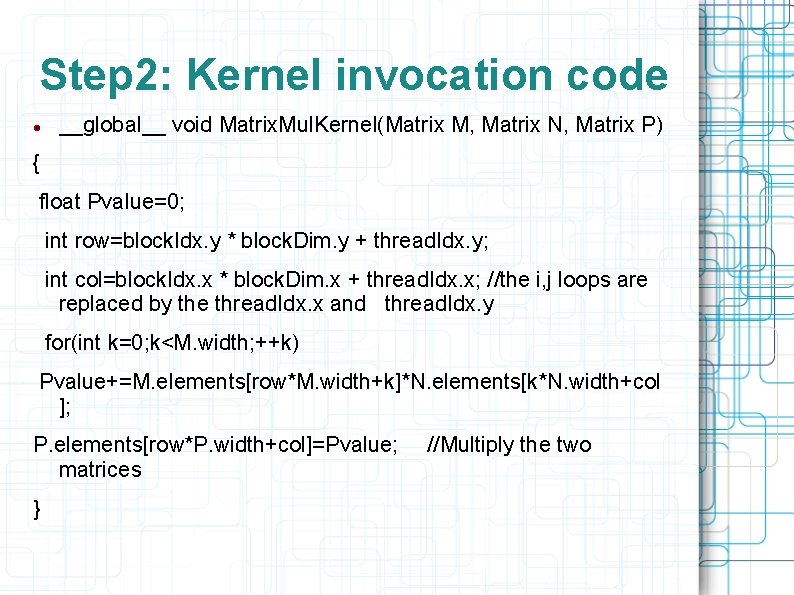

Step 2: Kernel invocation code

    // Multiply the two matrices
    __global__ void MatrixMulKernel(Matrix M, Matrix N, Matrix P)
    {
        float Pvalue = 0;
        int row = blockIdx.y * blockDim.y + threadIdx.y;
        int col = blockIdx.x * blockDim.x + threadIdx.x;
        // The i, j loops are replaced by threadIdx.x and threadIdx.y
        for (int k = 0; k < M.width; ++k)
            Pvalue += M.elements[row * M.width + k] * N.elements[k * N.width + col];
        P.elements[row * P.width + col] = Pvalue;
    }

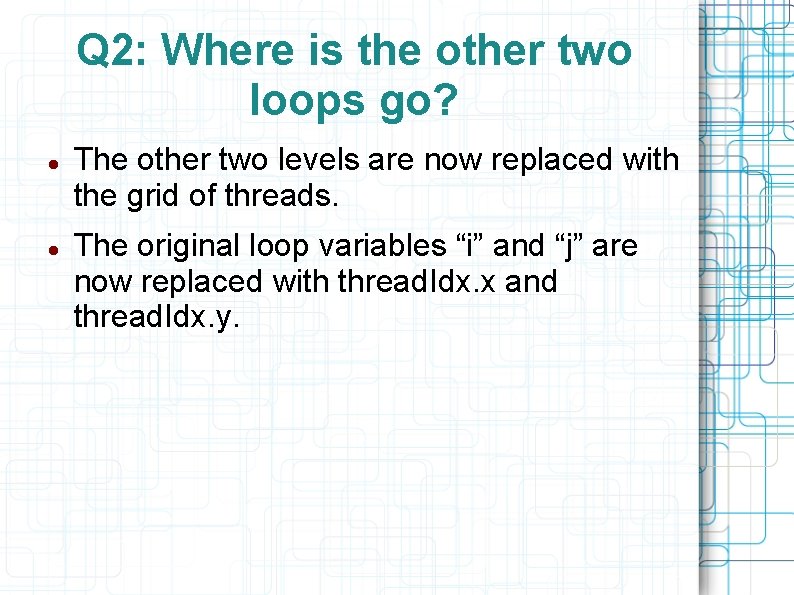

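Q2: Where do the other two loops go? The two outer loop levels are replaced by the grid of threads. The original loop variables "i" and "j" are replaced by threadIdx.y and threadIdx.x (combined with blockIdx and blockDim to form row and col), so each thread computes a single element of P and only the loop over k remains.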
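To make the replacement of the two outer loops concrete, here is a minimal CPU-only C++ sketch. It is not the lab's code: it assumes square width x width matrices stored row-major in std::vector<float>, and the names MatMulSequential, ThreadBody, MatMulEmulatedGrid and the Idx struct are hypothetical. The first function is the usual three-level loop. The second runs the kernel body once per (block, thread) index pair, which a GPU would do in parallel.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Sequential reference: three nested loops over i, j and k.
std::vector<float> MatMulSequential(const std::vector<float>& M,
                                    const std::vector<float>& N, int width) {
    assert(M.size() == static_cast<std::size_t>(width) * width);
    assert(N.size() == M.size());
    std::vector<float> P(M.size(), 0.0f);
    for (int i = 0; i < width; ++i)
        for (int j = 0; j < width; ++j) {
            float Pvalue = 0.0f;
            for (int k = 0; k < width; ++k)
                Pvalue += M[i * width + k] * N[k * width + j];
            P[i * width + j] = Pvalue;
        }
    return P;
}

// Hypothetical stand-in for CUDA's built-in index variables.
struct Idx { int x, y; };

// Body of one emulated thread: only the k loop remains.
void ThreadBody(Idx blockIdx, Idx blockDim, Idx threadIdx,
                const std::vector<float>& M, const std::vector<float>& N,
                std::vector<float>& P, int width) {
    int row = blockIdx.y * blockDim.y + threadIdx.y;
    int col = blockIdx.x * blockDim.x + threadIdx.x;
    if (row >= width || col >= width) return;  // guard added for this emulation
    float Pvalue = 0.0f;
    for (int k = 0; k < width; ++k)
        Pvalue += M[row * width + k] * N[k * width + col];
    P[row * width + col] = Pvalue;
}

// Emulates a launch: these loops only enumerate the threads on the CPU.
std::vector<float> MatMulEmulatedGrid(const std::vector<float>& M,
                                      const std::vector<float>& N, int width,
                                      Idx gridDim, Idx blockDim) {
    assert(M.size() == static_cast<std::size_t>(width) * width);
    std::vector<float> P(M.size(), 0.0f);
    for (int by = 0; by < gridDim.y; ++by)
        for (int bx = 0; bx < gridDim.x; ++bx)
            for (int ty = 0; ty < blockDim.y; ++ty)
                for (int tx = 0; tx < blockDim.x; ++tx)
                    ThreadBody({bx, by}, blockDim, {tx, ty}, M, N, P, width);
    return P;
}
```

Calling MatMulEmulatedGrid(M, N, 2, Idx{1, 1}, Idx{2, 2}) on two 2 x 2 matrices should give the same result as MatMulSequential(M, N, 2), since every (row, col) pair is visited exactly once in both versions.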

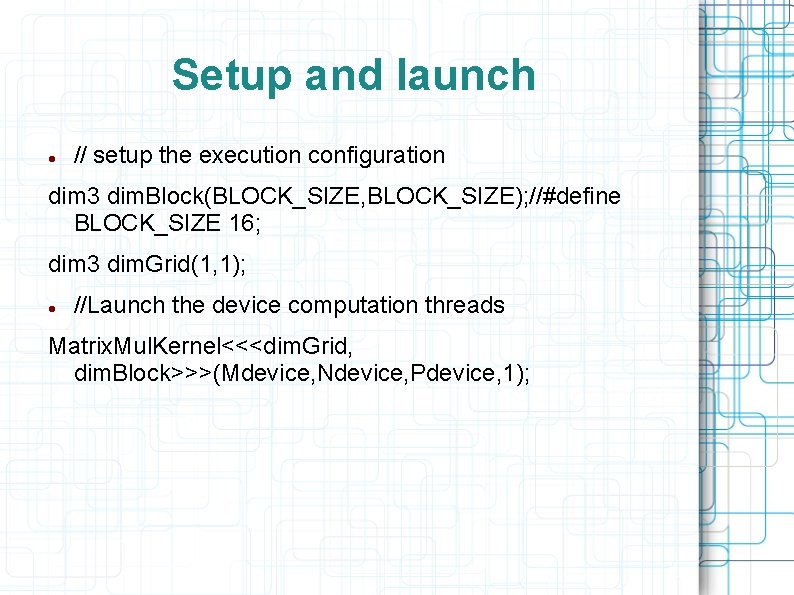

Setup and launch

    // Set up the execution configuration (BLOCK_SIZE is #defined as 16)
    dim3 dimBlock(BLOCK_SIZE, BLOCK_SIZE);
    dim3 dimGrid(1, 1);

    // Launch the device computation threads
    MatrixMulKernel<<<dimGrid, dimBlock>>>(Mdevice, Ndevice, Pdevice);
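The configuration above launches a single block of BLOCK_SIZE x BLOCK_SIZE threads, so it covers exactly a 16 x 16 output matrix. As a hedged illustration of how the grid size relates to the matrix width, the following C++ sketch computes how many blocks per dimension would be needed for other widths. The helper names BlocksNeeded and ThreadsLaunched are hypothetical and not part of the lab code, and a kernel launched this way would also need a bounds check on row and col when width is not a multiple of the block size.

```cpp
#include <cassert>

// Number of blocks per grid dimension needed to cover `width` elements
// with `blockSize` threads per block (ceiling division).
int BlocksNeeded(int width, int blockSize) {
    assert(width > 0 && blockSize > 0);
    return (width + blockSize - 1) / blockSize;
}

// Total threads launched along one dimension; may exceed `width`,
// in which case the surplus threads must not write to P.
int ThreadsLaunched(int width, int blockSize) {
    return BlocksNeeded(width, blockSize) * blockSize;
}
```

For width 16 and block size 16 this gives one block per dimension, matching dimGrid(1, 1) in the slides.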

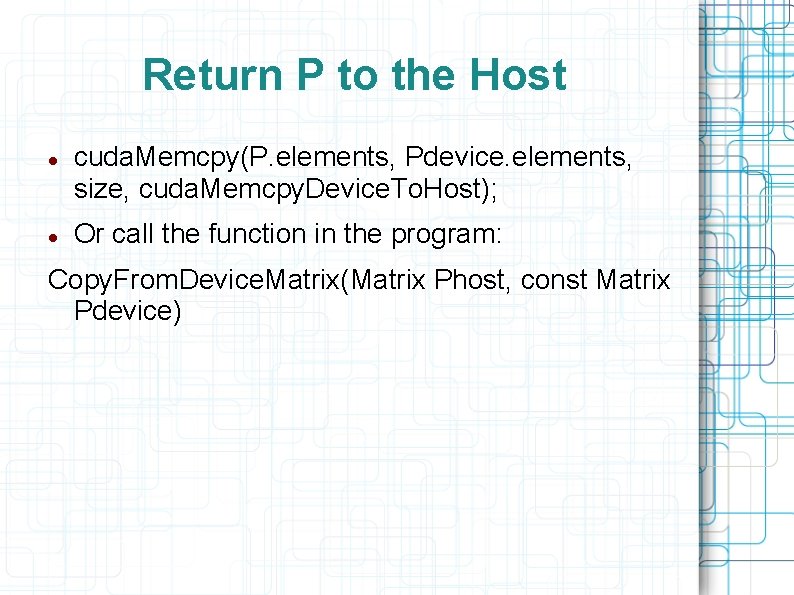

Return P to the host

    cudaMemcpy(P.elements, Pdevice.elements, size, cudaMemcpyDeviceToHost);

Or call the function provided in the program:

    CopyFromDeviceMatrix(P, Pdevice);

The last step: free the device pointers

    cudaFree(Mdevice.elements);
    cudaFree(Ndevice.elements);
    cudaFree(Pdevice.elements);

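The Questions

1. How many times is each element of the input matrix loaded during the execution of the kernel? The answer is width. For a given element, one of its indices is fixed (the row "i" for an element of M, the column "j" for an element of N), so it is needed by the width threads that compute that row or column of P. Each of those threads loads it exactly once, for a total of width loads.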
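The load count can be checked with a small CPU-only C++ sketch that walks the same index pattern as the kernel and counts reads instead of multiplying. This is an illustration under the assumption of square width x width matrices in row-major order; LoadCounts and CountLoads are hypothetical names, not part of the lab code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

struct LoadCounts {
    std::vector<int> m;  // m[i * width + k]: number of reads of M element (i, k)
    std::vector<int> n;  // n[k * width + j]: number of reads of N element (k, j)
};

// Visits every (row, col) pair once, as one thread per output element would,
// and records which input elements the k loop reads.
LoadCounts CountLoads(int width) {
    assert(width > 0);
    std::size_t total = static_cast<std::size_t>(width) * width;
    LoadCounts counts{std::vector<int>(total, 0), std::vector<int>(total, 0)};
    for (int row = 0; row < width; ++row)
        for (int col = 0; col < width; ++col)
            for (int k = 0; k < width; ++k) {
                ++counts.m[row * width + k];  // read of M[row][k]
                ++counts.n[k * width + col];  // read of N[k][col]
            }
    return counts;
}
```

For any width, every entry of both count arrays should equal width, which matches the answer above.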

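2. What is the memory-access to floating-point computation ratio in each thread? The ratio is one. Each iteration of the k loop performs two global memory loads (one element of M and one element of N) and two floating-point operations (one multiply and one add), so the ratio is 2:2 = 1.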
