GPU Computing CIS543 Lecture 03 Introduction to CUDA

![Formatted Output Format specifiers take the form: %[flags][width][. precision][size]type Flags: `#' ` ' `0' Formatted Output Format specifiers take the form: %[flags][width][. precision][size]type Flags: `#' ` ' `0'](https://slidetodoc.com/presentation_image/33bf6018fbb16b806514dada9739f077/image-19.jpg)

- Slides: 24

GPU Computing CIS-543 Lecture 03: Introduction to CUDA Dr. Muhammad Abid, DCIS, PIEAS GPU Computing, PIEAS

Programmer's View of Computing System To a CUDA programmer, the computing system consists of a host and one or more devices Simplified Detailed GPU Computing, PIEAS

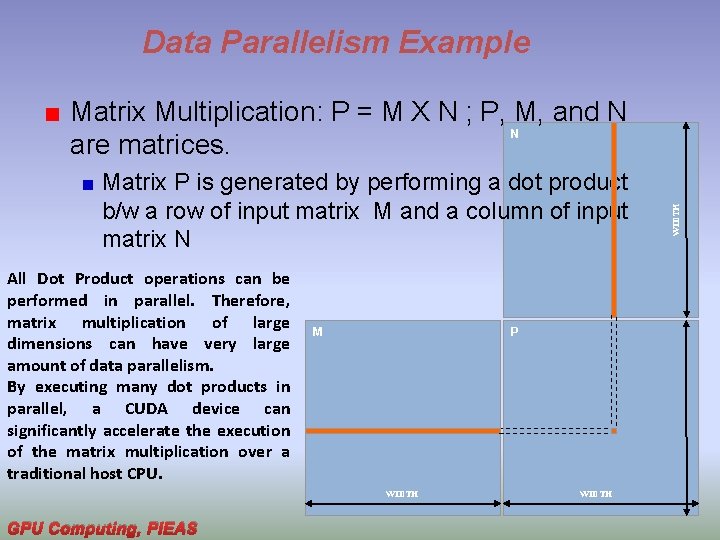

Data Parallelism GPUs expedite the execution of a program sections exhibiting a rich amount of data parallelism Data parallelism refers to the program property whereby many arithmetic operations can be safely performed on the data structures in a simultaneous manner Many software applications exhibit this property, ranging from image processing to Bioinformatics, to molecular dynamics, to physics simulations, etc. GPU Computing, PIEAS

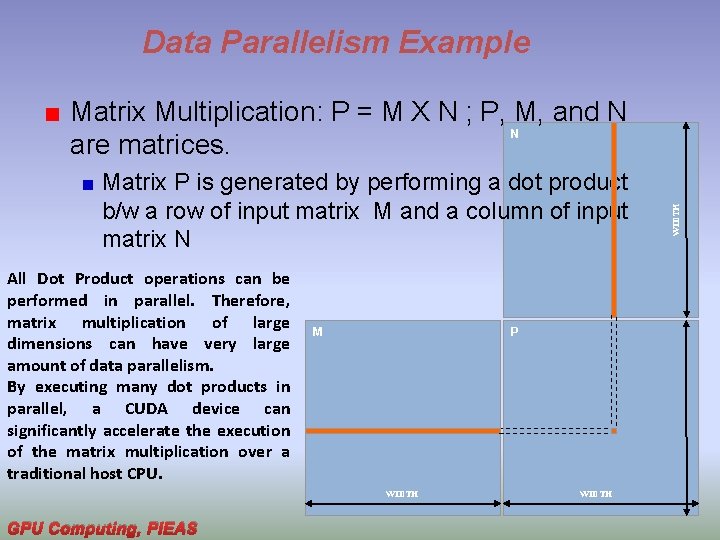

Data Parallelism Example Matrix P is generated by performing a dot product b/w a row of input matrix M and a column of input matrix N All Dot Product operations can be performed in parallel. Therefore, matrix multiplication of large dimensions can have very large amount of data parallelism. By executing many dot products in parallel, a CUDA device can significantly accelerate the execution of the matrix multiplication over a traditional host CPU. M P WIDTH GPU Computing, PIEAS WIDTH Matrix Multiplication: P = M X N ; P, M, and N N are matrices.

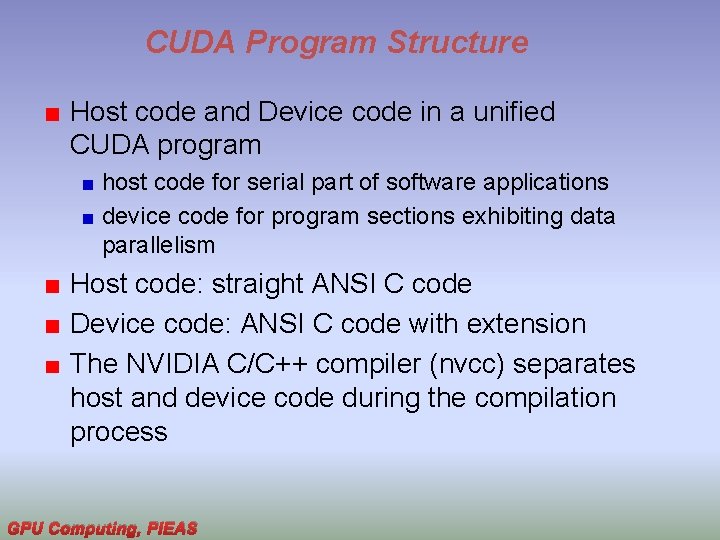

CUDA Program Structure Host code and Device code in a unified CUDA program host code for serial part of software applications device code for program sections exhibiting data parallelism Host code: straight ANSI C code Device code: ANSI C code with extension The NVIDIA C/C++ compiler (nvcc) separates host and device code during the compilation process GPU Computing, PIEAS

Kernel Functions Data-parallel function in CUDA known as kernel function or simply kernel generates a large number of threads to exploit data parallelism. Matrix multiplication kernel can generate thousands of threads where each thread computes dot product. For this case M = N = P = 1000 X 1000, kernel will generate 1, 000 threads when invoked/ called. CUDA threads are of much lighter weight than the CPU threads. take very few cycles to generate and schedule due to efficient hardware support. GPU Computing, PIEAS

Kernel Functions A kernel specifies the code to be executed by all threads during a parallel phase. Because all of these threads execute the same code, CUDA programming is an instance of the well-known single-program, multiple-data (SPMD) parallel programming style GPU Computing, PIEAS

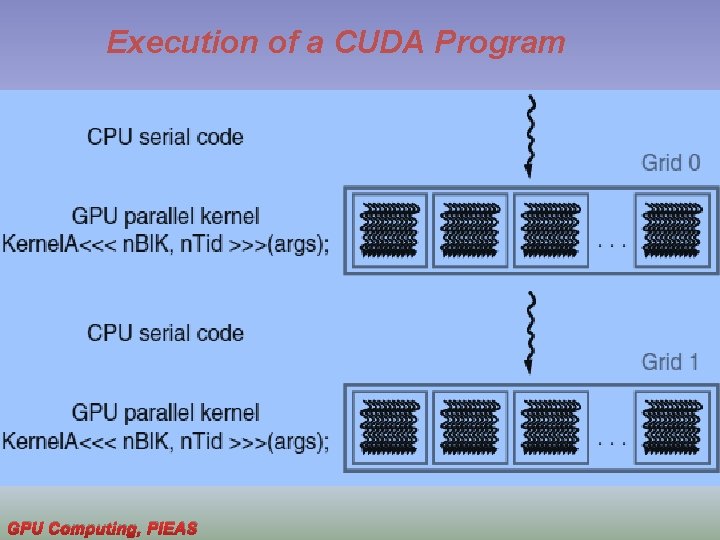

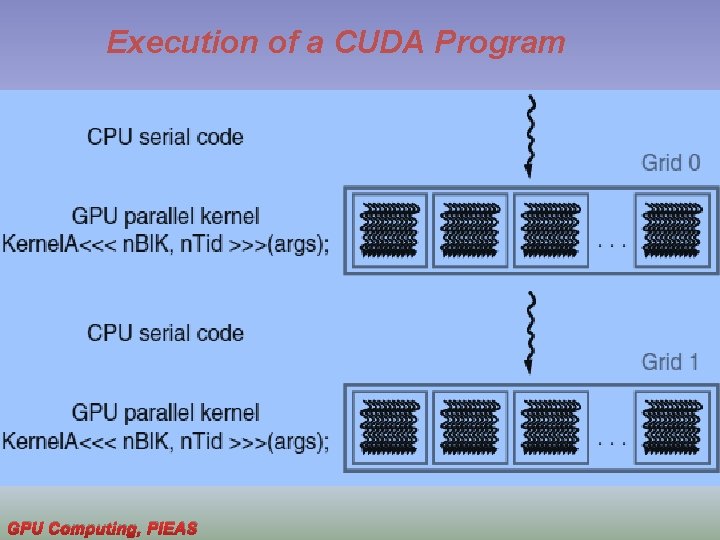

Execution of a CUDA Program GPU Computing, PIEAS

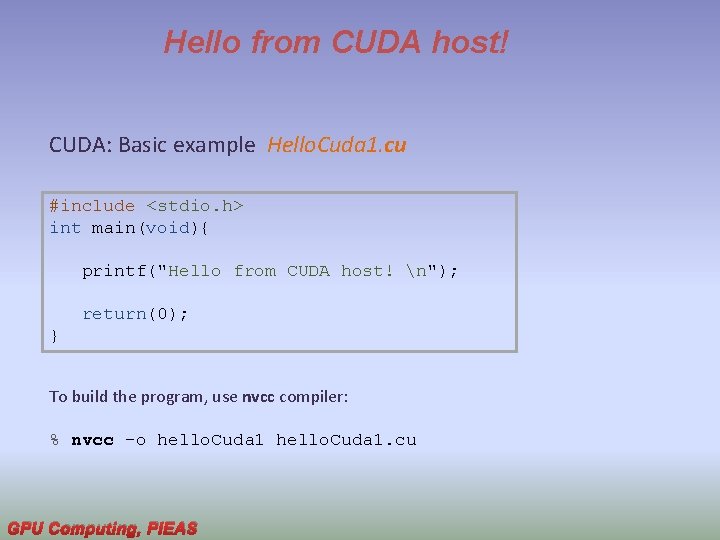

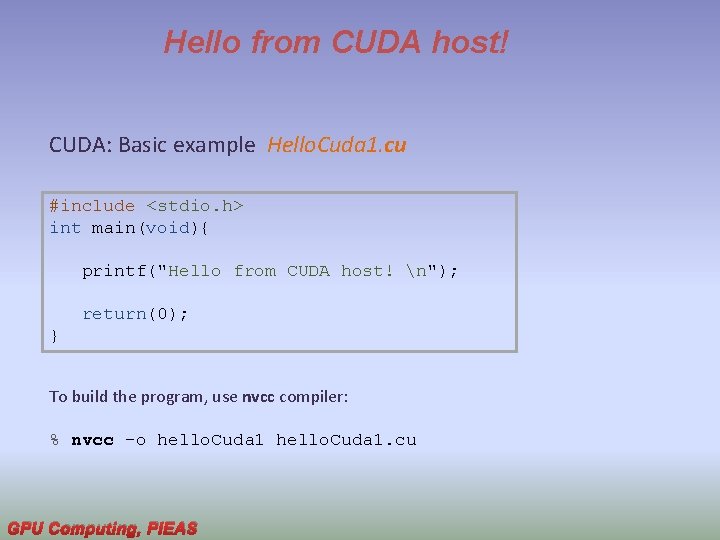

Hello from CUDA host! CUDA: Basic example Hello. Cuda 1. cu #include <stdio. h> int main(void){ printf("Hello from CUDA host! n"); return(0); } To build the program, use nvcc compiler: % nvcc -o hello. Cuda 1. cu GPU Computing, PIEAS

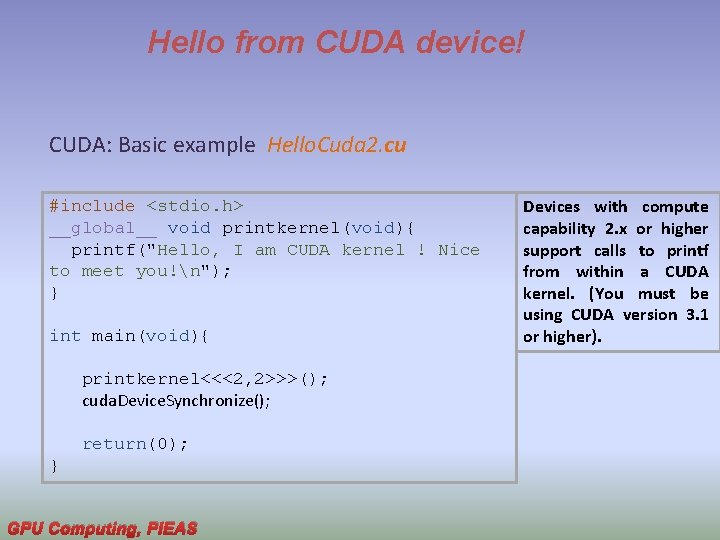

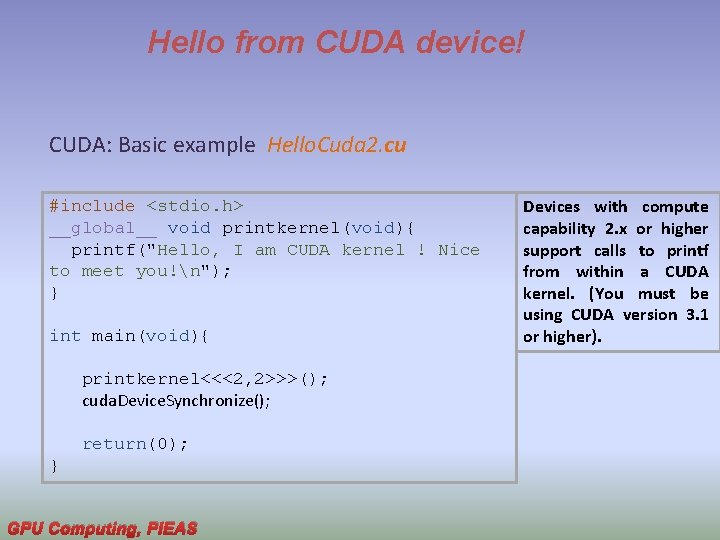

Hello from CUDA device! CUDA: Basic example Hello. Cuda 2. cu #include <stdio. h> __global__ void printkernel(void){ printf("Hello, I am CUDA kernel ! Nice to meet you!n"); } int main(void){ printkernel<<<2, 2>>>(); cuda. Device. Synchronize(); return(0); } GPU Computing, PIEAS Devices with compute capability 2. x or higher support calls to printf from within a CUDA kernel. (You must be using CUDA version 3. 1 or higher).

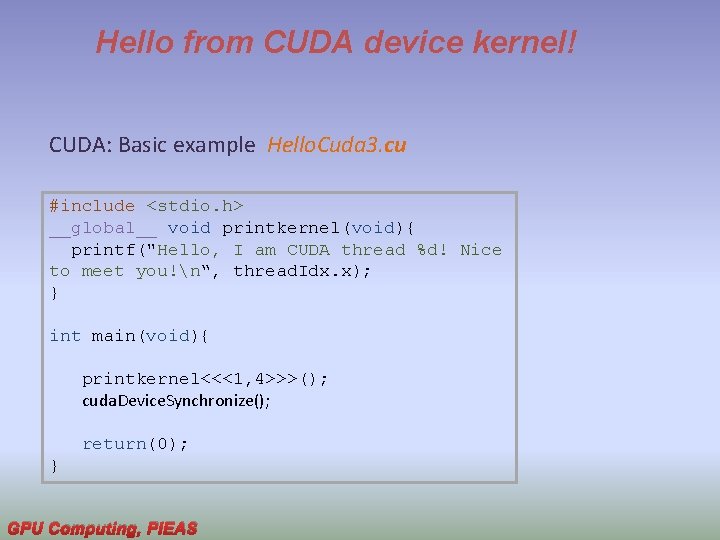

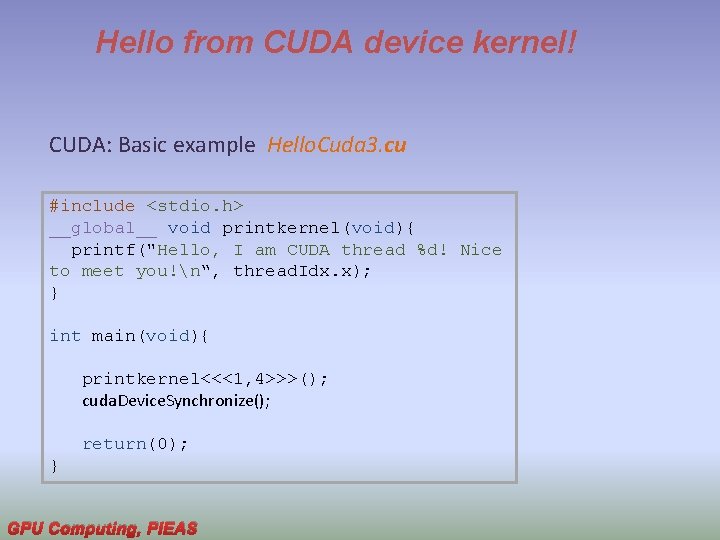

Hello from CUDA device kernel! CUDA: Basic example Hello. Cuda 3. cu #include <stdio. h> __global__ void printkernel(void){ printf("Hello, I am CUDA thread %d! Nice to meet you!n“, thread. Idx. x); } int main(void){ printkernel<<<1, 4>>>(); cuda. Device. Synchronize(); return(0); } GPU Computing, PIEAS

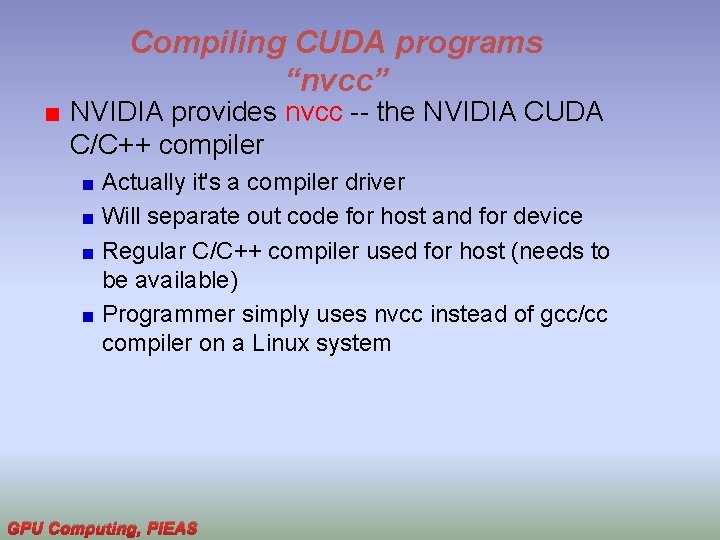

Compiling CUDA programs “nvcc” NVIDIA provides nvcc -- the NVIDIA CUDA C/C++ compiler Actually it's a compiler driver Will separate out code for host and for device Regular C/C++ compiler used for host (needs to be available) Programmer simply uses nvcc instead of gcc/cc compiler on a Linux system GPU Computing, PIEAS

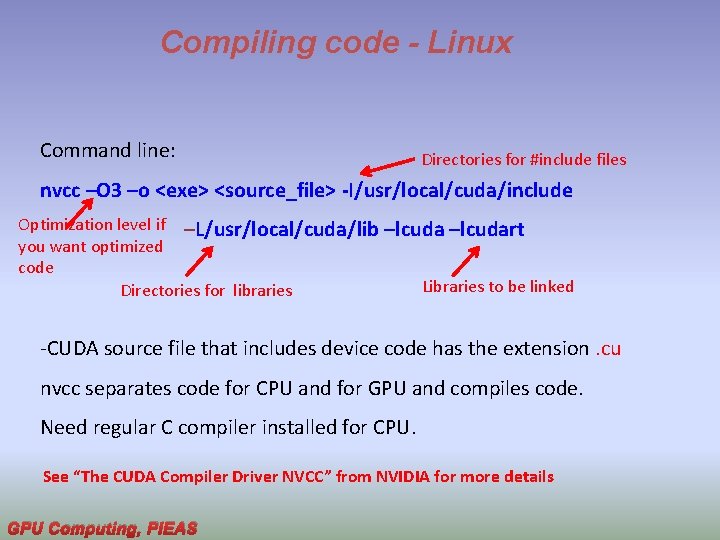

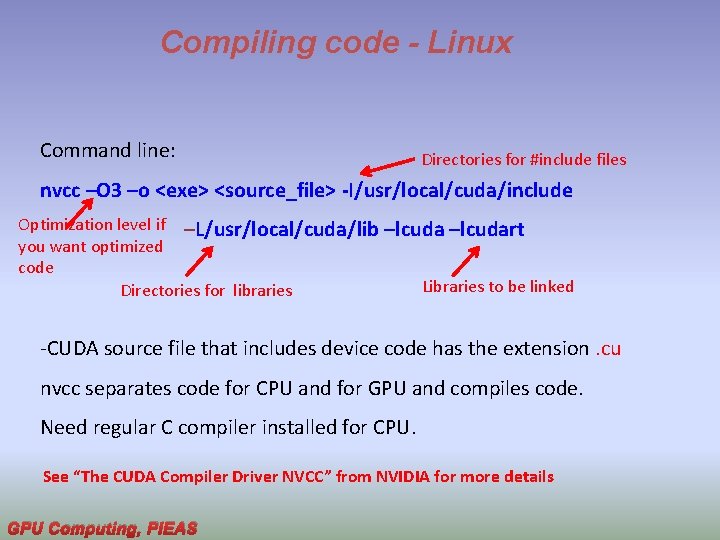

Compiling code - Linux Command line: Directories for #include files nvcc –O 3 –o <exe> <source_file> -I/usr/local/cuda/include Optimization level if –L/usr/local/cuda/lib –lcudart you want optimized code Libraries to be linked Directories for libraries -CUDA source file that includes device code has the extension. cu nvcc separates code for CPU and for GPU and compiles code. Need regular C compiler installed for CPU. See “The CUDA Compiler Driver NVCC” from NVIDIA for more details GPU Computing, PIEAS

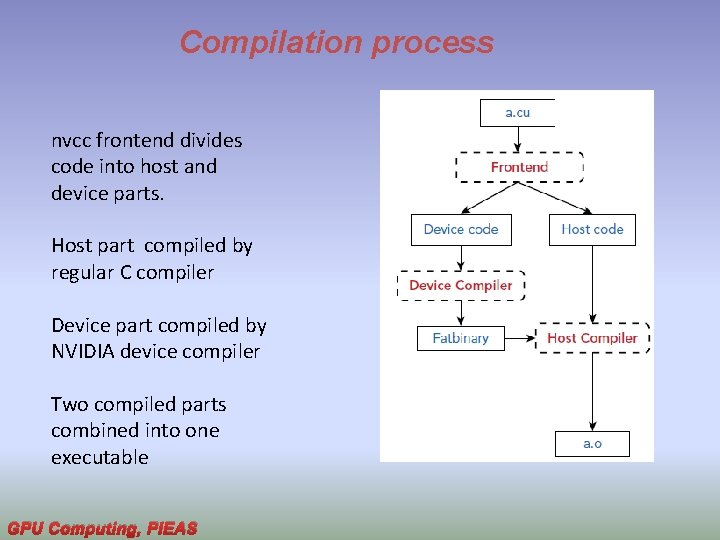

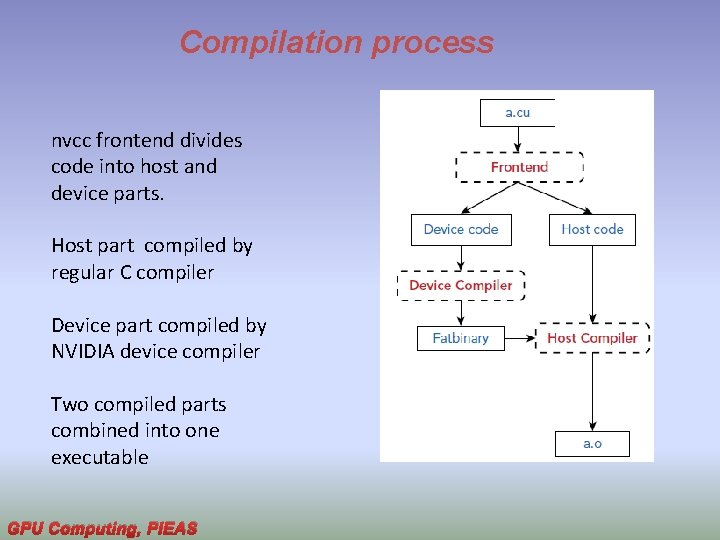

Compilation process nvcc frontend divides code into host and device parts. Host part compiled by regular C compiler Device part compiled by NVIDIA device compiler Two compiled parts combined into one executable GPU Computing, PIEAS

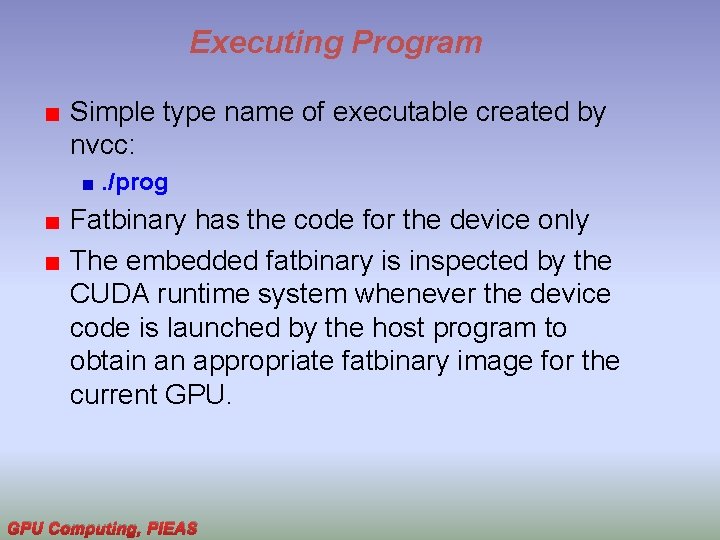

Executing Program Simple type name of executable created by nvcc: . /prog Fatbinary has the code for the device only The embedded fatbinary is inspected by the CUDA runtime system whenever the device code is launched by the host program to obtain an appropriate fatbinary image for the current GPU Computing, PIEAS

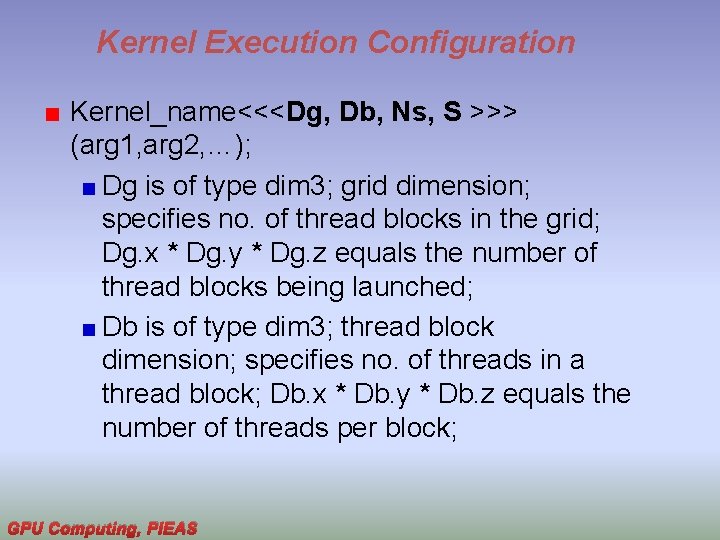

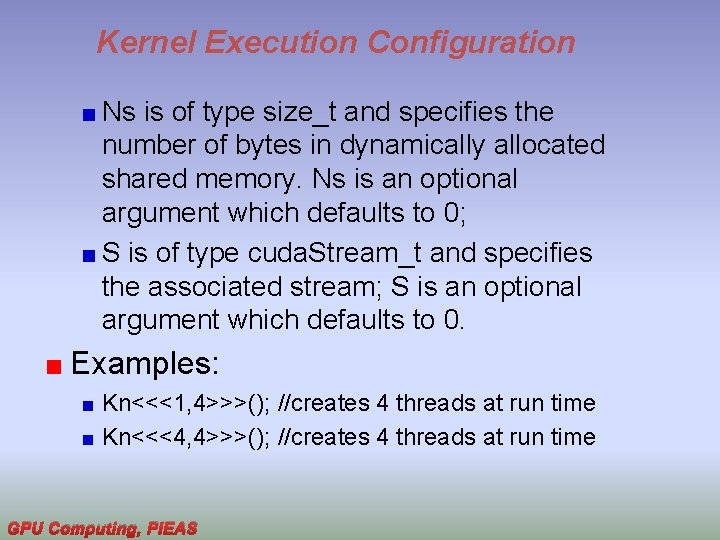

Kernel Execution Configuration Kernel_name<<<Dg, Db, Ns, S >>> (arg 1, arg 2, …); Dg is of type dim 3; grid dimension; specifies no. of thread blocks in the grid; Dg. x * Dg. y * Dg. z equals the number of thread blocks being launched; Db is of type dim 3; thread block dimension; specifies no. of threads in a thread block; Db. x * Db. y * Db. z equals the number of threads per block; GPU Computing, PIEAS

Kernel Execution Configuration Ns is of type size_t and specifies the number of bytes in dynamically allocated shared memory. Ns is an optional argument which defaults to 0; S is of type cuda. Stream_t and specifies the associated stream; S is an optional argument which defaults to 0. Examples: Kn<<<1, 4>>>(); //creates 4 threads at run time Kn<<<4, 4>>>(); //creates 4 threads at run time GPU Computing, PIEAS

Formatted Output only supported by devices of compute capability 2. x and higher int printf(const char *format[, arg, . . . ]); prints formatted output from a kernel to a host-side output stream. The printf() command is executed as any other device-side function: per-thread, and in the context of the calling thread. GPU Computing, PIEAS

![Formatted Output Format specifiers take the form flagswidth precisionsizetype Flags 0 Formatted Output Format specifiers take the form: %[flags][width][. precision][size]type Flags: `#' ` ' `0'](https://slidetodoc.com/presentation_image/33bf6018fbb16b806514dada9739f077/image-19.jpg)

Formatted Output Format specifiers take the form: %[flags][width][. precision][size]type Flags: `#' ` ' `0' `+' `-' Width: `*' `0 -9' Precision: `0 -9' Size: `h' `ll' Type: `%cdioux. Xpe. Efg. Ga. As Note: CUDA's printf()will accept any combination of flag, width, precision, size and type, whether or not overall they form a valid format specifier. Printf reference GPU Computing, PIEAS

Formatted Output Limitations Final formatting of the printf() output takes place on the host system format string must be understood by the hostsystem's compiler and C library. The printf() command can accept at most 32 arguments in addition to the format string. Additional arguments beyond this will be ignored, and the format specifier output as -is. GPU Computing, PIEAS

Formatted Output Limitations Make sure compiling system and running system support the same data type size. Differing size of the long type: a kernel which is compiled on a machine with long type size 8 B but then run on a machine with 4 B long will see corrupted output for all format strings which include "%ld". It is recommended that the compilation platform matches the execution platform to ensure safety. GPU Computing, PIEAS

Formatted Output Limitations The output buffer for printf(): set to a fixed size before kernel launch. circular and if more output is produced during kernel execution than can fit in the buffer, older output is overwritten. GPU Computing, PIEAS

Output Buffer Flushed only when one of these actions is performed: Kernel launch via <<<>>> or cu. Launch. Kernel() (at the start of the launch, and if the CUDA_LAUNCH_BLOCKING environment variable is set to 1, at the end of the launch as well), Synchronization via cuda. Device. Synchronize(), cu. Ctx. Synchronize(), cuda. Stream. Synchronize(), cuda. Event. Synchronize(), or cu. Event. Synchronize(), GPU Computing, PIEAS

Output Buffer Memory copies via any blocking version of cuda. Memcpy() or cu. Memcpy(), Module loading/unloading via cu. Module. Load() or cu. Module. Unload(), Context destruction via cuda. Device. Reset() or cu. Ctx. Destroy(). Prior to executing a stream callback added by cuda. Stream. Add. Callback or cu. Stream. Add. Callback. Note: buffer is not flushed automatically when the program exits. GPU Computing, PIEAS