Google Protocol Buffers as serialization method to transfer high-volume, low-latency event data

Casablanca Developer Forum
Nokia Mobile Networks Management and Orchestration Team
Ben Cheung, Tomasz Kaminski, Damian Nowak
June 21st, 2018

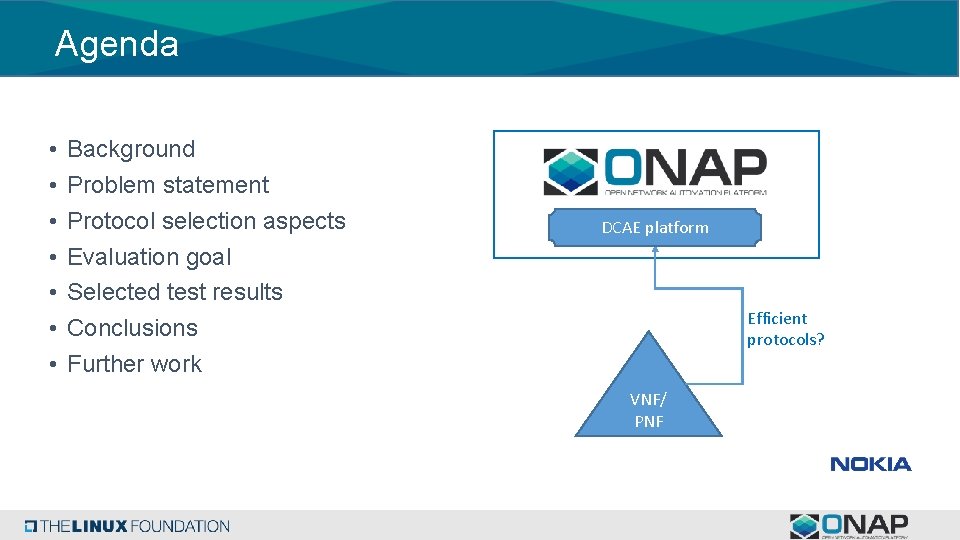

Agenda
• Background
• Problem statement
• Protocol selection aspects
• Evaluation goal
• Selected test results
• Conclusions
• Further work

(Diagram labels: VNF/PNF, "Efficient protocols?", DCAE platform)

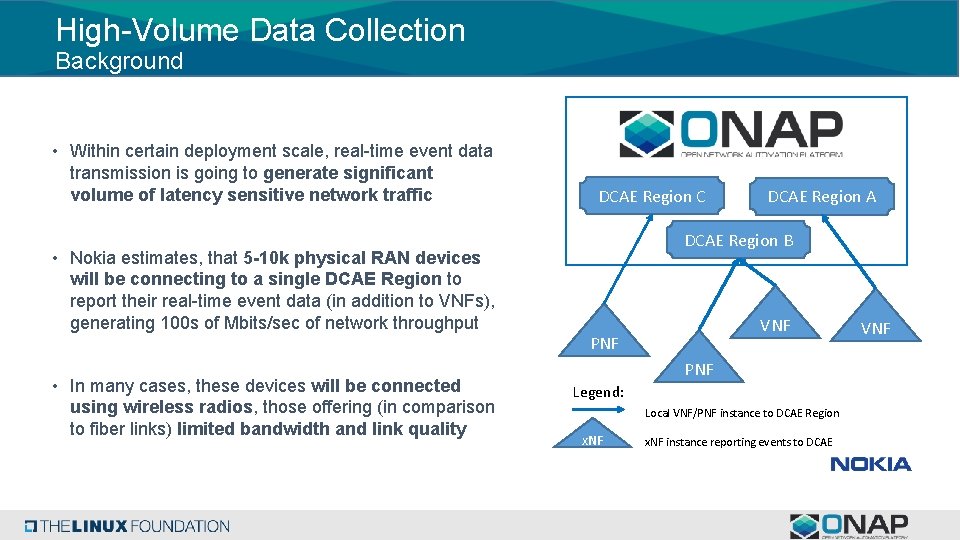

High-Volume Data Collection: Background
• At a certain deployment scale, real-time event data transmission will generate a significant volume of latency-sensitive network traffic.
• Nokia estimates that 5-10k physical RAN devices will connect to a single DCAE Region to report their real-time event data (in addition to VNFs), generating hundreds of Mbit/s of network throughput.
• In many cases these devices will be connected over wireless radio links, which offer limited bandwidth and link quality compared with fiber links.

(Diagram: VNF and PNF instances reporting events to DCAE Regions A, B and C. Legend: VNF/PNF instance local to DCAE Region x; NF instance reporting events to DCAE.)

High-Volume Data Collection: Problem Statement
ONAP collectors in DCAE require a protocol that allows efficient serialization (optimized for bandwidth and latency) and streaming transmission of event data over potentially unreliable networks.

High-Volume Data Collection: Protocol selection aspects (1/2)
• Human readability versus transmission efficiency
• Low bandwidth/latency requirements
• Lightweight to serialize/deserialize
• Easy integration within analytics applications
  § Support for different programming languages
  § Existing know-how among analytics application developers
• Support for datagram extensions (backward/forward compatibility)
• Telco market/application adoption, with active development running in open source
• Previous ONAP adoption/roadmap alignment

High-Volume Data Collection: Evaluated Protocols
Nokia has selected the following protocols for comparison (based on the criteria listed in slide 5):
• Persistent TCP socket/HTTP/JSON
  § Existing protocol used by the DCAE/VES collector
  § ASCII based: contents are human readable using e.g. protocol analysers
  § De facto standard in modern, micro-service based applications
  § Not as efficient in data serialization as binary protocols
• Persistent TCP socket/GPB (Google Protocol Buffers)
  § Existing protocol/serialization method, well known and adopted in the industry
  § Binary based: very efficient in data serialization
  § Targeted at streaming large amounts of data
  § Human readability not a requirement due to the data volume
  § Could potentially be secured with TLS
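To make the "binary versus ASCII" point concrete, the sketch below hand-encodes one report in the Protocol Buffers wire format (base-128 varints and length-delimited fields) and compares its size with a JSON rendering of the same values. The schema, the field numbers, the `nodeId`/`counters` names and the counter values are all hypothetical and are not taken from the study; a real deployment would use classes generated from a `.proto` file rather than manual encoding.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a Protocol Buffers base-128 varint."""
    if value < 0:
        raise ValueError("this sketch only handles non-negative integers")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # continuation bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def length_delimited(field_number: int, payload: bytes) -> bytes:
    """Wire type 2 field: tag, payload length, payload."""
    tag = encode_varint((field_number << 3) | 2)
    return tag + encode_varint(len(payload)) + payload


def encode_report(node_id: str, counters: list[int]) -> bytes:
    # Hypothetical schema (an assumption for illustration):
    #   string node_id = 1; repeated uint64 counters = 2 [packed = true];
    packed = b"".join(encode_varint(c) for c in counters)
    return length_delimited(1, node_id.encode("utf-8")) + length_delimited(2, packed)


node_id = "bts-0001"                             # made-up identifier
counters = [i * 37 % 5000 for i in range(300)]   # made-up values, 300 per report

gpb_bytes = encode_report(node_id, counters)
json_bytes = json.dumps({"nodeId": node_id, "counters": counters}).encode("utf-8")

print(f"GPB wire size:  {len(gpb_bytes)} bytes")
print(f"JSON text size: {len(json_bytes)} bytes")
```

The size gap in this toy case depends entirely on the invented schema and values, so it should not be read as a reproduction of the measured results.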

High-Volume Data Collection: Benchmark/comparison study conducted by Nokia to evaluate binary encoding

Mechanisms behave differently under different conditions, which is why it is important to test a mechanism in its specific context.

Nokia's evaluation goal was to compare the JSON and GPB data serialization methods in their target use-case: event data collection from a large, distributed base of physical devices, such as a Radio Access Network (RAN).

The results of the study are also relevant for other applications and devices that generate significant amounts of event data (faults, measurements, …).

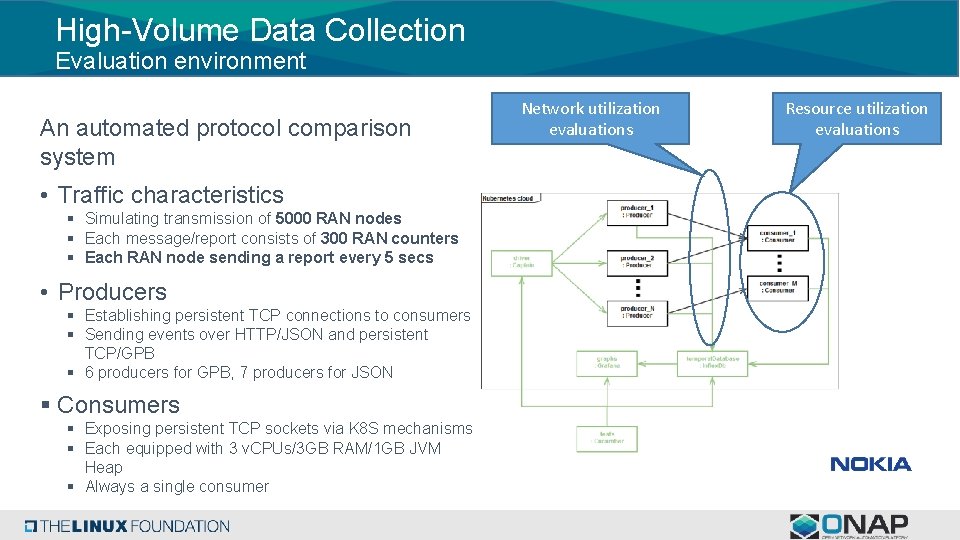

High-Volume Data Collection: Evaluation environment
An automated protocol comparison system
• Traffic characteristics
  § Simulating transmission from 5000 RAN nodes
  § Each message/report consists of 300 RAN counters
  § Each RAN node sends a report every 5 seconds
• Producers
  § Establishing persistent TCP connections to consumers
  § Sending events over HTTP/JSON and persistent TCP/GPB
  § 6 producers for GPB, 7 producers for JSON
• Consumers
  § Exposing persistent TCP sockets via K8s mechanisms
  § Each equipped with 3 vCPUs/3 GB RAM/1 GB JVM heap
  § Always a single consumer

(Diagram labels: Network utilization evaluations, Resource utilization evaluations)
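Because Protocol Buffers messages are not self-delimiting, sending many of them over one persistent TCP connection requires some framing. The slides do not say which framing the test system used, so the sketch below assumes a simple 4-byte big-endian length prefix; `frame`, `read_exact` and `read_frames` are hypothetical helper names, and an in-memory stream stands in for the socket.

```python
import io
import struct

# Assumed framing (not from the slides): 4-byte big-endian payload length.
HEADER = struct.Struct(">I")


def frame(payload: bytes) -> bytes:
    """Prefix a serialized message with its length."""
    return HEADER.pack(len(payload)) + payload


def read_exact(stream, size: int) -> bytes:
    """Read exactly `size` bytes, tolerating short reads from the stream."""
    chunks = []
    remaining = size
    while remaining:
        chunk = stream.read(remaining)
        if not chunk:
            raise EOFError("stream closed in the middle of a frame")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def read_frames(stream):
    """Yield message payloads until the stream ends on a frame boundary."""
    while True:
        header = stream.read(HEADER.size)
        if not header:
            return  # clean end of stream
        if len(header) < HEADER.size:
            header += read_exact(stream, HEADER.size - len(header))
        (length,) = HEADER.unpack(header)
        yield read_exact(stream, length)


# Three back-to-back messages on one "connection", including an empty one.
wire = io.BytesIO(frame(b"report-1") + frame(b"") + frame(b"report-3"))
frames = list(read_frames(wire))
print(frames)
```

With a real connection, the same reader could be fed from `sock.makefile("rb")`, so one socket carries an unbounded sequence of reports without a per-message HTTP exchange.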

High-Volume Data Collection: Test objects
Test object A: Compare message transmission efficiency, using a stable load of messages.
Keywords: Network bandwidth, message latency
Test object B: Compare message processing efficiency, using a stable load of messages.
Keywords: Resource consumption, message serialization latency
Load: 5000 PNFs (BTS), 5 sec report generation, 300 RAN counters
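The stable load used for both test objects follows directly from the three parameters above. A minimal calculation, assuming reports are spread evenly over each 5-second interval (the variable names are illustrative):

```python
nodes = 5000                # simulated PNFs (BTS)
report_interval_s = 5       # each node sends one report every 5 seconds
counters_per_report = 300   # RAN counters in each report

# Average aggregate rate seen by the single consumer.
messages_per_second = nodes / report_interval_s
counter_values_per_second = messages_per_second * counters_per_report

print(f"{messages_per_second:.0f} reports/s")
print(f"{counter_values_per_second:.0f} counter values/s")
```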

High-Volume Data Collection: Test objects
Test object A: Compare message transmission efficiency, using a stable load of messages.
Keywords: Network bandwidth, message latency
Load: 5000 PNFs (BTS), 5 sec report generation, 300 RAN counters

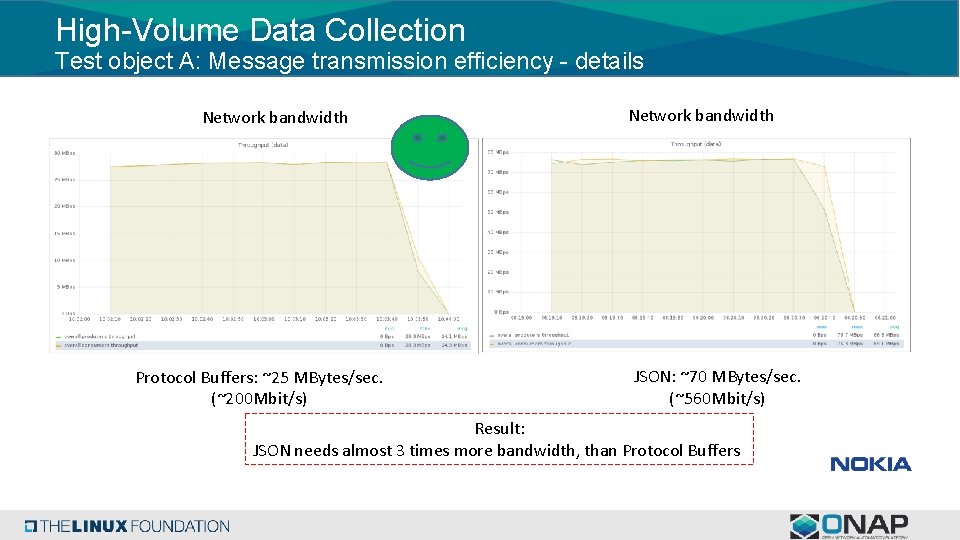

High-Volume Data Collection: Test object A, message transmission efficiency (details)
Network bandwidth, Protocol Buffers: ~25 MBytes/sec (~200 Mbit/s)
Network bandwidth, JSON: ~70 MBytes/sec (~560 Mbit/s)
Result: JSON needs almost 3 times more bandwidth than Protocol Buffers
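The unit conversion on this slide, and a rough per-report cost on the wire, can be checked as follows. The per-report figure is a back-of-the-envelope estimate, not a measurement from the study: it assumes decimal units, assumes the reported bandwidth covers the traffic of all 5000 simulated nodes, and includes whatever TCP/HTTP overhead was counted in the measurement.

```python
# Approximate figures reported on the slide (MBytes/sec on the wire).
bandwidth_mbytes_per_s = {"GPB": 25, "JSON": 70}

# From the traffic model: 5000 nodes, one report every 5 seconds.
messages_per_second = 5000 / 5


def to_mbit_per_s(mbytes_per_s):
    """Convert MBytes/sec to Mbit/s (8 bits per byte)."""
    return mbytes_per_s * 8


def kbytes_per_message(mbytes_per_s):
    # Assumption: 1 MByte = 1000 kBytes, and the bandwidth figure covers
    # the reports of all simulated nodes.
    return mbytes_per_s * 1000 / messages_per_second


for name, mbytes in bandwidth_mbytes_per_s.items():
    print(
        f"{name}: {to_mbit_per_s(mbytes)} Mbit/s, "
        f"~{kbytes_per_message(mbytes):.0f} kBytes per report on the wire"
    )
```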

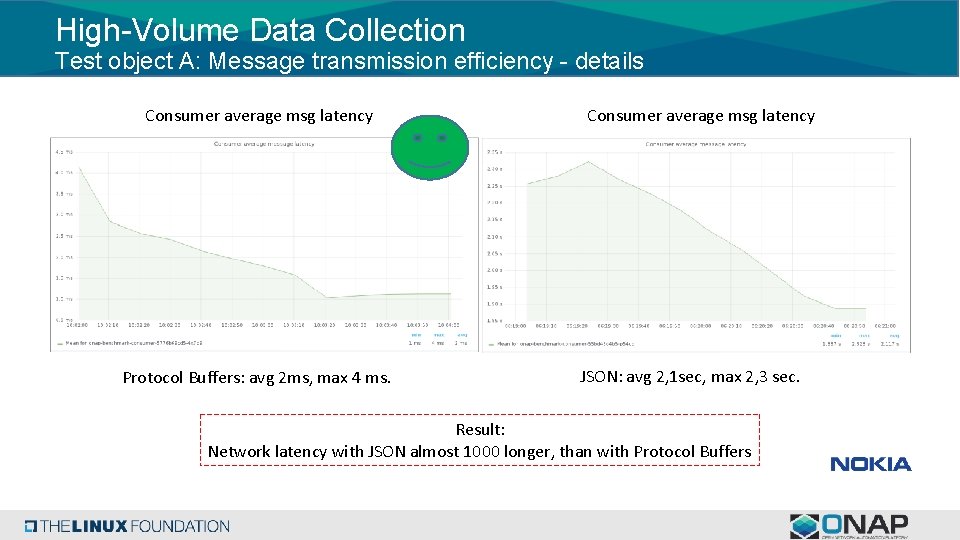

High-Volume Data Collection: Test object A, message transmission efficiency (details)
Consumer average message latency, Protocol Buffers: avg 2 ms, max 4 ms
Consumer average message latency, JSON: avg 2.1 sec, max 2.3 sec
Result: Network latency with JSON is about 1000 times longer than with Protocol Buffers

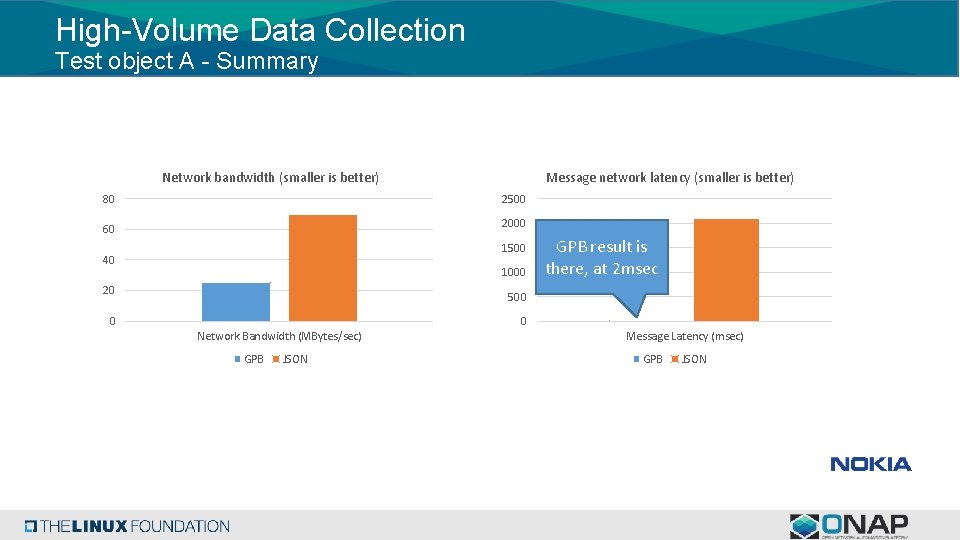

High-Volume Data Collection: Test object A - Summary
(Charts, GPB versus JSON, smaller is better: Network bandwidth in MBytes/sec; Message network latency in msec. Chart annotation: the GPB latency result is there, at 2 msec.)

High-Volume Data Collection: Test objects
Test object B: Compare message processing efficiency, using a stable load of messages.
Keywords: Resource consumption, message serialization latency
Load: 5000 PNFs (BTS), 5 sec report generation, 300 RAN counters

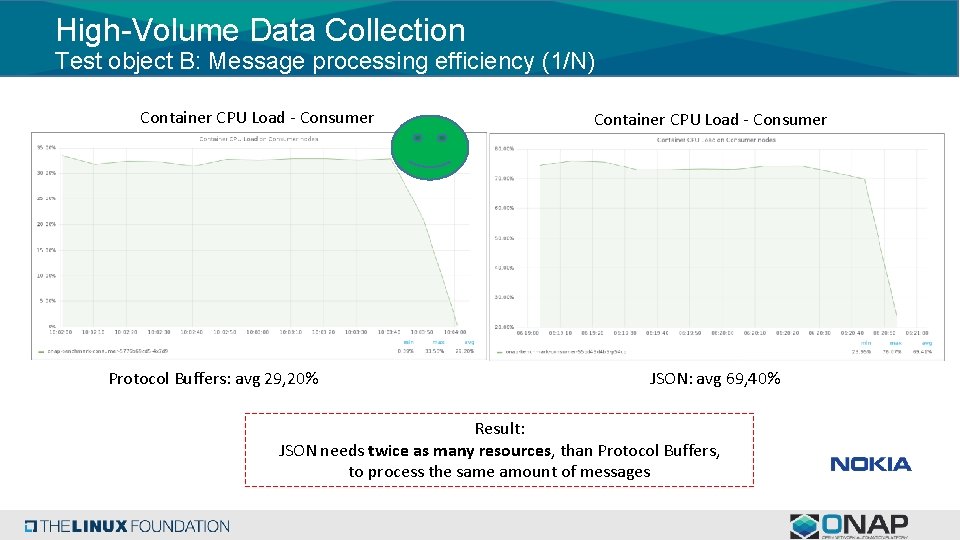

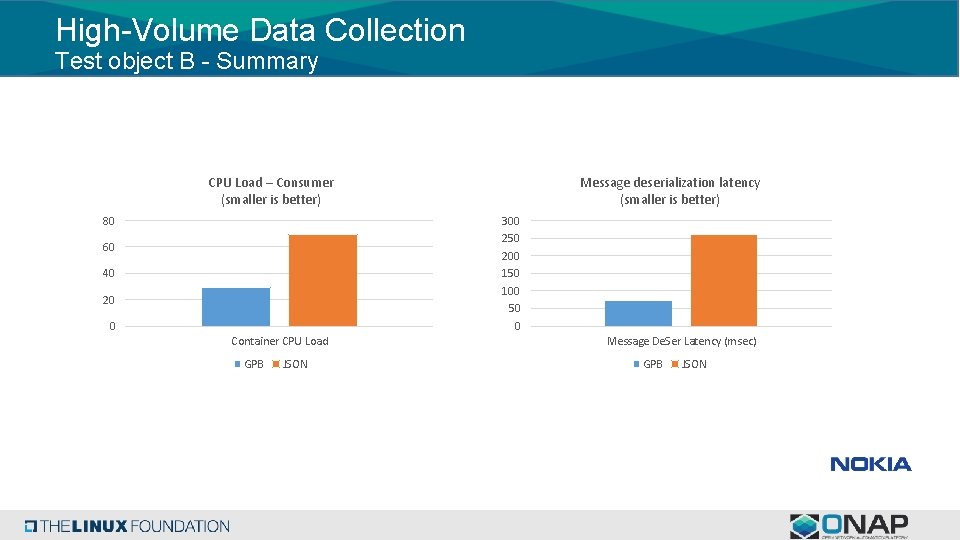

High-Volume Data Collection: Test object B, message processing efficiency (1/N)
Container CPU load, consumer, Protocol Buffers: avg 29.20%
Container CPU load, consumer, JSON: avg 69.40%
Result: JSON needs more than twice as many CPU resources as Protocol Buffers to process the same amount of messages

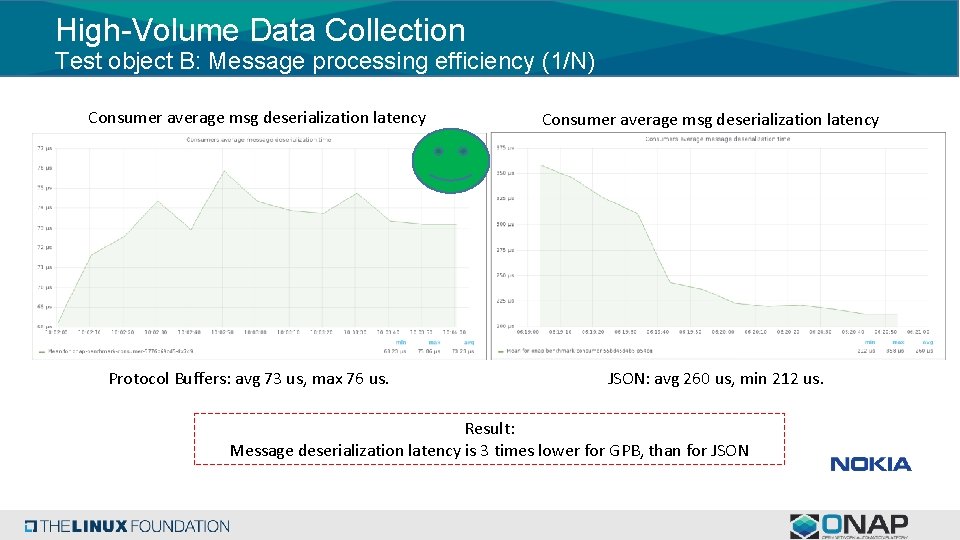

High-Volume Data Collection: Test object B, message processing efficiency (1/N)
Consumer average message deserialization latency, Protocol Buffers: avg 73 µs, max 76 µs
Consumer average message deserialization latency, JSON: avg 260 µs, min 212 µs
Result: Message deserialization latency is more than 3 times lower for GPB than for JSON

High-Volume Data Collection: Test object B - Summary
(Charts, GPB versus JSON, smaller is better: Container CPU load, consumer; Message DeSer latency, with the axis labelled msec although the values reported for this test are in µs.)

High-Volume Data Collection: Conclusions
In the context of the RAN real-time PM collection use-case, Google Protocol Buffers, in comparison to JSON serialization:
✓ Requires almost 3 times less bandwidth
  ✓ Which is important for remote PNF sites and smaller cloud instances
✓ Introduces 3 orders of magnitude smaller network latency and more than 3 times smaller DeSer latency
  ✓ Which matters when data is latency sensitive and loses relevance quickly
✓ Requires less than 50% of the CPU time on the consumer end (using JSON as reference)
  ✓ Which matters when collector and analytics applications are CPU intensive and each CPU has a cost
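The headline ratios in these conclusions can be recomputed from the averages reported on the detail slides. The sketch below only restates that arithmetic; the dictionary layout and the `json_over_gpb` helper are illustrative.

```python
import math

# Averages reported on the detail slides.
bandwidth_mbytes_per_s = {"GPB": 25, "JSON": 70}
message_latency_ms = {"GPB": 2, "JSON": 2100}      # 2.1 sec for JSON
deser_latency_us = {"GPB": 73, "JSON": 260}
consumer_cpu_percent = {"GPB": 29.20, "JSON": 69.40}


def json_over_gpb(metric):
    """How many times larger the JSON figure is than the GPB figure."""
    return metric["JSON"] / metric["GPB"]


bandwidth_ratio = json_over_gpb(bandwidth_mbytes_per_s)
latency_ratio = json_over_gpb(message_latency_ms)
latency_orders_of_magnitude = math.log10(latency_ratio)
deser_ratio = json_over_gpb(deser_latency_us)
gpb_cpu_share = consumer_cpu_percent["GPB"] / consumer_cpu_percent["JSON"]

print(f"Bandwidth:       JSON / GPB = {bandwidth_ratio:.1f}x")
print(f"Message latency: JSON / GPB = {latency_ratio:.0f}x "
      f"(~{latency_orders_of_magnitude:.1f} orders of magnitude)")
print(f"DeSer latency:   JSON / GPB = {deser_ratio:.1f}x")
print(f"Consumer CPU:    GPB uses {gpb_cpu_share:.0%} of the JSON load")
```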

High-Volume Data Collection: Final Conclusion
Nokia's analysis demonstrates that GPB serialization is much more efficient for high-volume, low-latency event transmission, and Nokia recommends that ONAP support the collection of GPB-serialized events over persistent connections.
Nokia is also committed to supporting the introduction of this capability into ONAP.

High-Volume Data Collection: Further work
Nokia is contributing an open-source High-Volume variant of the VES collector:
✓ Based on Google Protocol Buffers as the serialization mechanism
✓ Compatible with existing VES mechanisms (Common Header fields, …)
✓ Targeted at providing an opportunity to collect any high-volume data
✓ Available for integration with PNFs and VNFs
✓ Integrated with DMaaP for data distribution within the DCAE platform

Thank You!
