Goals of IDS Detect wide variety of intrusions

- Slides: 77

Goals of IDS
- Detect wide variety of intrusions
  - Previously known and unknown attacks
  - Suggests need to learn/adapt to new attacks or changes in behavior
- Detect intrusions in timely fashion
  - May need to be real-time, especially when system responds to intrusion
    - Problem: analyzing commands may impact response time of system
  - May suffice to report intrusion occurred a few minutes or hours ago

2004 CS 395: Computer Security 1

Goals of IDS
- Present analysis in simple, easy-to-understand format
  - Ideally a binary indicator
  - Usually more complex, allowing analyst to examine suspected attack
  - User interface critical, especially when monitoring many systems
- Be accurate
  - Minimize false positives, false negatives
  - Minimize time spent verifying attacks, looking for them

Models of Intrusion Detection
- Anomaly detection
  - What is usual is known
  - What is unusual is bad
- Misuse detection
  - What is bad is known
- Specification-based detection
  - We know what is good
  - What is not good is bad

Anomaly Detection
- Analyzes a set of characteristics of system and compares their values with expected values; reports when computed statistics do not match expected statistics
  - Threshold metrics
  - Statistical moments
  - Markov model

Threshold Metrics
- Counts number of events that occur
  - Between m and n events (inclusive) expected to occur
  - If number falls outside this range, anomalous
- Example
  - Windows: lock user out after k failed sequential login attempts. Range is [0, k − 1].
    - k or more failed logins deemed anomalous
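
A minimal sketch of the threshold metric above, applied to the lockout example. It assumes login outcomes arrive as an ordered list of booleans for one user; the function names and the value of `k` are illustrative, not how Windows actually implements lockout.

```python
def is_anomalous(count: int, m: int, n: int) -> bool:
    """Threshold metric: between m and n events (inclusive) are expected."""
    return not (m <= count <= n)


def lockout_triggered(outcomes: list[bool], k: int) -> bool:
    """Return True once k or more sequential failed logins are seen.

    outcomes holds True for a successful login, False for a failure.
    The expected range for the run of consecutive failures is [0, k - 1].
    """
    consecutive_failures = 0
    for succeeded in outcomes:
        # A success resets the run of sequential failures.
        consecutive_failures = 0 if succeeded else consecutive_failures + 1
        if is_anomalous(consecutive_failures, 0, k - 1):
            return True
    return False


# With k = 3: two failures, a success, then one failure stays within [0, 2],
# but three failures in a row fall outside it.
print(lockout_triggered([False, False, True, False], k=3))  # False
print(lockout_triggered([False, False, False], k=3))        # True
```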

Difficulties
- Appropriate threshold may depend on nonobvious factors
  - Typing skill of users
  - If keyboards are US keyboards and most users are French, typing errors very common
    - Dvorak vs. non-Dvorak within the US

Statistical Moments
- Analyzer computes mean and standard deviation (first two moments), other measures of correlation (higher moments)
  - If measured values fall outside expected interval for particular moments, anomalous
- Potential problem
  - Profile may evolve over time; solution is to weight data appropriately or alter rules to take changes into account
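
A small sketch of a moments-based check, assuming the "expected interval" is the mean plus or minus k standard deviations of past observations. The choice of k = 2 and the sample profile are illustrative assumptions, not values from the slides.

```python
import statistics


def outside_expected_interval(history: list[float], value: float, k: float = 2.0) -> bool:
    """Flag value if it lies more than k standard deviations from the
    mean of history (uses only the first two moments)."""
    mean = statistics.mean(history)
    sd = statistics.pstdev(history)  # population standard deviation
    return abs(value - mean) > k * sd


daily_logins = [10, 12, 11, 9, 13]  # illustrative profile, not real data
print(outside_expected_interval(daily_logins, 12))  # False: within 2 sd of 11
print(outside_expected_interval(daily_logins, 20))  # True: 9 above the mean
```

To let the profile evolve, the history could be restricted to a sliding window or weighted toward recent values, as the IDES example that follows does.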

Example: IDES
- Developed at SRI International to test Denning’s model
  - Represent users, login session, other entities as ordered sequence of statistics $\langle q_{0,j}, \ldots, q_{n,j} \rangle$
  - $q_{i,j}$ (statistic $i$ for day $j$) is count or time interval
  - Weighting favors recent behavior over past behavior
    - $A_{k,j}$ is sum of counts making up metric of $k$th statistic on $j$th day
    - $q_{k,l+1} = A_{k,l+1} - A_{k,l} + 2^{-rt} q_{k,l}$, where $t$ is number of log entries or total time since start, and $r$ is a factor determined through experience
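
The IDES weighting step can be sketched as a single function. Here the $A$ values are read as running totals (our reading of the difference $A_{k,l+1} - A_{k,l}$), and `r`, `t` are simply passed in; the sample numbers are made up.

```python
def ides_update(a_next: float, a_curr: float, q_curr: float, r: float, t: float) -> float:
    """One step of q_{k,l+1} = A_{k,l+1} - A_{k,l} + 2^(-r*t) * q_{k,l}.

    a_next, a_curr: running totals A for statistic k on days l+1 and l.
    q_curr: the weighted statistic for day l.
    The factor 2^(-r*t) shrinks older history, so recent behavior dominates.
    """
    return (a_next - a_curr) + 2 ** (-r * t) * q_curr


# Illustrative numbers: 4 new events today, previous weighted value 5,
# and r * t = 1, so the old value is halved before today's count is added.
print(ides_update(a_next=9, a_curr=5, q_curr=5, r=1.0, t=1.0))  # 6.5
```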

Example: Haystack
- Let $A_n$ be $n$th count or time interval statistic
- Defines bounds $T_L$ and $T_U$ such that 90% of values for the $A_i$ lie between $T_L$ and $T_U$
- Haystack computes $A_{n+1}$
  - Then checks that $T_L \le A_{n+1} \le T_U$
  - If false, anomalous
- Thresholds updated
  - $A_i$ can change rapidly; as long as thresholds met, all is well
  - System is adaptive: as values of variables change, so do thresholds
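
A sketch of Haystack-style adaptive bounds. The slides do not say how $T_L$ and $T_U$ are computed; this version assumes they are the empirical 5th and 95th percentiles of past values, so roughly 90% of the $A_i$ fall between them. The class name and sample history are hypothetical.

```python
import statistics


class HaystackStatistic:
    """Adaptive bounds for one count or time-interval statistic.

    Assumption: T_L and T_U are the 5th and 95th percentiles of past values.
    """

    def __init__(self, history: list[float]):
        self.history = list(history)

    def bounds(self) -> tuple[float, float]:
        cuts = statistics.quantiles(self.history, n=20, method="inclusive")
        return cuts[0], cuts[-1]  # 5th and 95th percentiles

    def check(self, value: float) -> bool:
        """Return True if T_L <= value <= T_U fails (anomalous)."""
        t_lower, t_upper = self.bounds()
        anomalous = not (t_lower <= value <= t_upper)
        # Thresholds adapt: every new value feeds the next bounds computation.
        self.history.append(value)
        return anomalous


stat = HaystackStatistic(list(range(100)))  # made-up history: 0, 1, ..., 99
print(stat.bounds())    # approximately (4.95, 94.05)
print(stat.check(50))   # False
print(stat.check(500))  # True
```

Appending flagged values lets an attacker slowly drag the bounds; a stricter variant would append only in-range values.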

Potential Problems
- Assumes behavior of processes and users can be modeled statistically
  - Ideal: matches a known distribution such as the Gaussian (normal) distribution
  - Otherwise, must use techniques like clustering to determine moments, characteristics that show anomalies, etc.
- Real-time computation a problem too

Markov Model
- Past state affects current transition
- Anomalies based upon sequences of events, and not on occurrence of single event
- Events preceding the current time have put system into particular state
  - When next event occurs, system transitions into new state
  - Over time, transition probabilities are developed
  - When an event occurs that causes a transition with low probability, event is deemed anomalous
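
A first-order Markov model makes this concrete: estimate transition probabilities from clean training data, then flag transitions whose probability falls below a cutoff. The cutoff of 0.1 is an assumption, and the training string simply reuses the event sequence from the TIM example.

```python
from collections import Counter, defaultdict


def train(sequence):
    """Estimate first-order transition probabilities P(next | current)
    from non-anomalous training data."""
    counts = defaultdict(Counter)
    for current, nxt in zip(sequence, sequence[1:]):
        counts[current][nxt] += 1
    return {
        state: {nxt: n / sum(c.values()) for nxt, n in c.items()}
        for state, c in counts.items()
    }


def low_probability_transitions(model, sequence, threshold=0.1):
    """Transitions whose trained probability is below threshold.
    Transitions never seen in training have probability 0."""
    flagged = []
    for current, nxt in zip(sequence, sequence[1:]):
        p = model.get(current, {}).get(nxt, 0.0)
        if p < threshold:
            flagged.append((current, nxt, p))
    return flagged


model = train("abcdedeabcabc")
print(model["c"])                                  # {'d': 0.5, 'a': 0.5}
print(low_probability_transitions(model, "abcf"))  # [('c', 'f', 0.0)]
```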

Markov Model
- Problem: need to train system to establish valid sequences
  - Use known training data that is not anomalous
  - The more training data, the better the model
  - Training data should cover all possible normal uses of system
- Approach heralded misuse detection, and was used to develop an effective anomaly detection mechanism

Example: TIM
- Time-based Inductive Learning (AI technique)
- System given type of event to be predicted; develops set of temporally related conditions that predict time that event will occur with respect to the set
- Ex. R: A → B (p)
  - Says that B should follow A with probability p

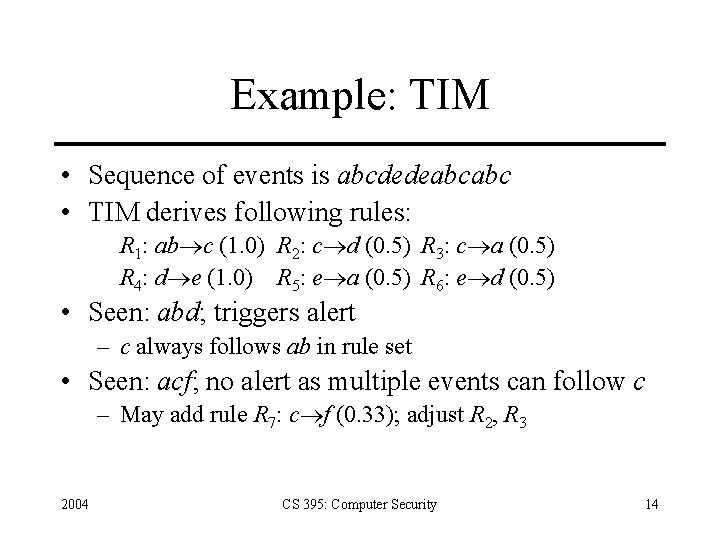

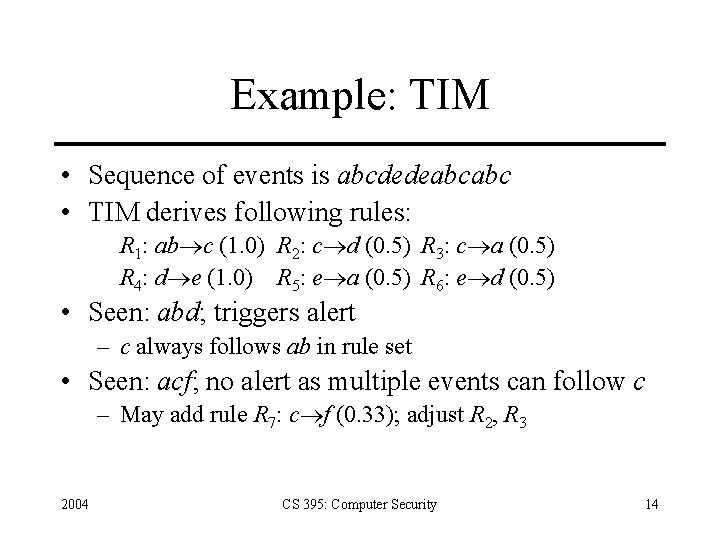

Example: TIM
- Sequence of events is abcdedeabcabc
- TIM derives following rules:
  - R1: ab → c (1.0)
  - R2: c → d (0.5)
  - R3: c → a (0.5)
  - R4: d → e (1.0)
  - R5: e → a (0.5)
  - R6: e → d (0.5)
- Seen: abd; triggers alert
  - c always follows ab in rule set
- Seen: acf; no alert, as multiple events can follow c
  - May add rule R7: c → f (0.33); adjust R2, R3
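
The rule probabilities above can be reproduced by counting what follows each antecedent in the training sequence. This sketch takes the antecedents (ab, c, d, e) from the rule set rather than discovering them as TIM does, and alerts only when a probability-1.0 rule is violated, matching the abd and acf cases.

```python
from collections import Counter


def derive_rule(sequence: str, antecedent: str) -> dict[str, float]:
    """Estimate P(next event | antecedent just occurred) from a training sequence."""
    followers = Counter(
        sequence[i + len(antecedent)]
        for i in range(len(sequence) - len(antecedent))
        if sequence.startswith(antecedent, i)
    )
    total = sum(followers.values())
    return {event: n / total for event, n in followers.items()}


TRAINING = "abcdedeabcabc"
# Antecedents taken from rules R1-R6 (not inferred, as real TIM would).
RULES = {ante: derive_rule(TRAINING, ante) for ante in ("ab", "c", "d", "e")}


def check(observed: str) -> bool:
    """Alert when a rule with a single possible successor is violated."""
    for ante, successors in RULES.items():
        for i in range(len(observed) - len(ante)):
            if observed.startswith(ante, i):
                nxt = observed[i + len(ante)]
                if len(successors) == 1 and nxt not in successors:
                    return True
    return False


print(RULES["ab"], RULES["c"])  # {'c': 1.0} {'d': 0.5, 'a': 0.5}
print(check("abd"))  # True: ab -> c has probability 1.0
print(check("acf"))  # False: several events may follow c
```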

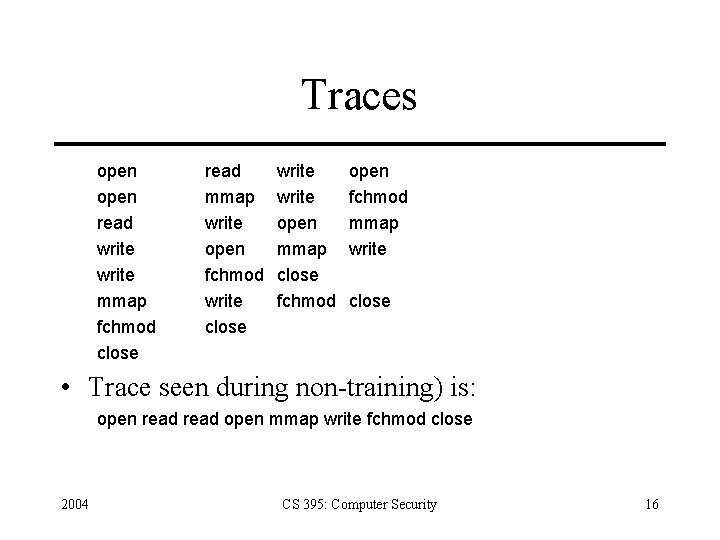

Sequences of System Calls
- Forrest: define normal behavior in terms of sequences of system calls (traces)
- Experiments show it distinguishes sendmail and lpd from other programs
- Training trace is: open read write open mmap write fchmod close
- Produces following database (assuming trace length is 4):

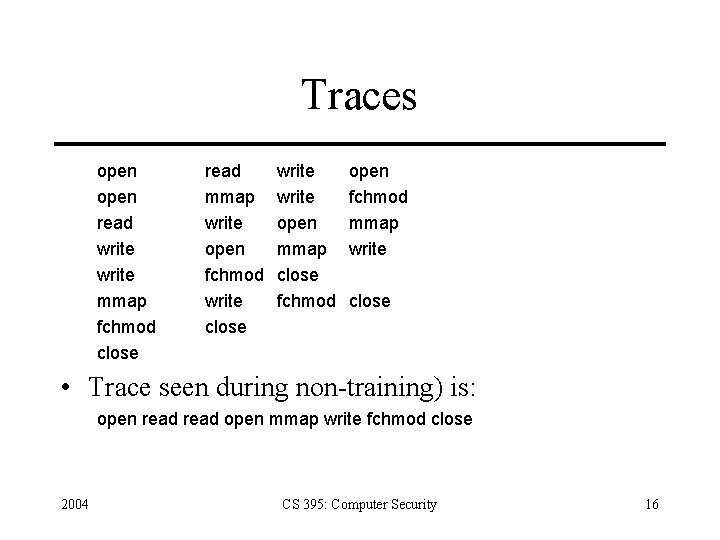

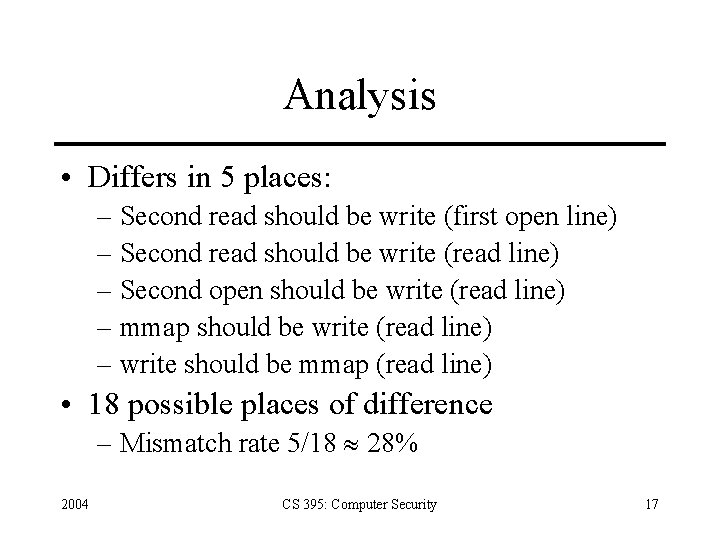

Traces

| call | position 1 | position 2 | position 3 |
|---|---|---|---|
| open | read, mmap | write | open, fchmod |
| read | write | open | mmap |
| write | open, fchmod | mmap, close | write |
| mmap | write | fchmod | close |
| fchmod | close | | |
| close | | | |

- Trace seen during non-training is: open read open mmap write fchmod close

Analysis
- Differs in 5 places:
  - Position 2 after open: open should be write (open line)
  - Position 3 after open: mmap should be open or fchmod (open line)
  - Position 1 after read: open should be write (read line)
  - Position 2 after read: mmap should be open (read line)
  - Position 3 after read: write should be mmap (read line)
- 18 possible places of difference
  - Mismatch rate 5/18 ≈ 28%
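
The database and the mismatch count can be computed directly. This sketch assumes "trace length 4" means each call plus the three calls that follow it, which reproduces the table; it counts mismatches only and leaves the choice of denominator to the analysis above.

```python
from collections import defaultdict


def build_database(trace, k=4):
    """For each call, record which calls appear at offsets 1..k-1 after it."""
    db = defaultdict(lambda: defaultdict(set))
    for i, call in enumerate(trace):
        for offset in range(1, k):
            if i + offset < len(trace):
                db[call][offset].add(trace[i + offset])
    return db


def mismatches(db, trace, k=4):
    """List (call, offset, seen) triples never observed during training."""
    found = []
    for i, call in enumerate(trace):
        for offset in range(1, k):
            if i + offset < len(trace):
                seen = trace[i + offset]
                if seen not in db[call][offset]:
                    found.append((call, offset, seen))
    return found


training = "open read write open mmap write fchmod close".split()
observed = "open read open mmap write fchmod close".split()
db = build_database(training)
for m in mismatches(db, observed):
    print(m)  # five mismatches: two on the open line, three on the read line
```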

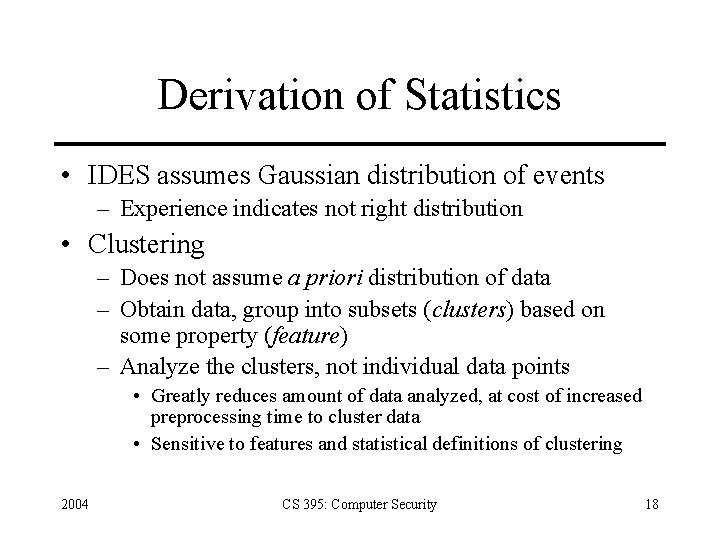

Derivation of Statistics
- IDES assumes Gaussian distribution of events
  - Experience indicates not right distribution
- Clustering
  - Does not assume a priori distribution of data
  - Obtain data, group into subsets (clusters) based on some property (feature)
  - Analyze the clusters, not individual data points
- Greatly reduces amount of data analyzed, at cost of increased preprocessing time to cluster data
- Sensitive to features and statistical definitions of clustering

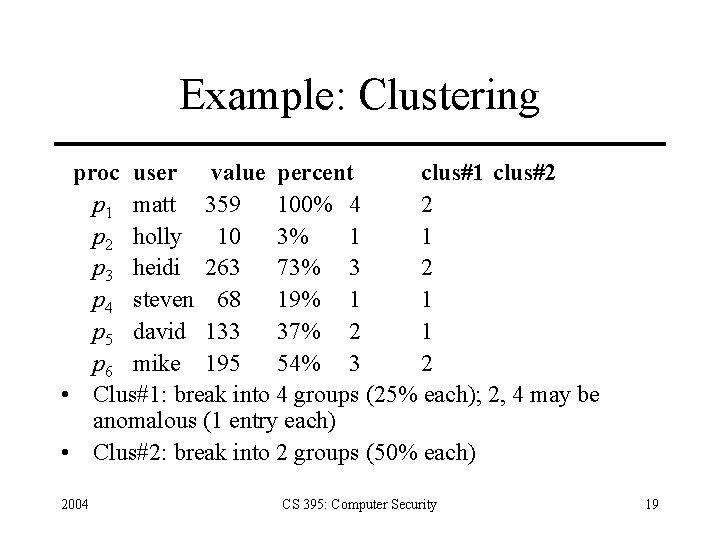

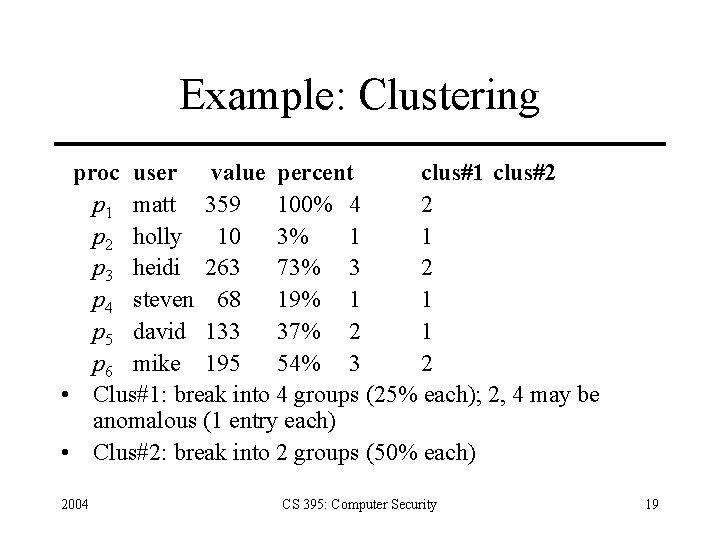

Example: Clustering

| proc | user | value | percent | clus#1 | clus#2 |
|---|---|---|---|---|---|
| p1 | matt | 359 | 100% | 4 | 2 |
| p2 | holly | 10 | 3% | 1 | 1 |
| p3 | heidi | 263 | 73% | 3 | 2 |
| p4 | steven | 68 | 19% | 1 | 1 |
| p5 | david | 133 | 37% | 2 | 1 |
| p6 | mike | 195 | 54% | 3 | 2 |

- Clus#1: break into 4 groups (25% each); 2, 4 may be anomalous (1 entry each)
- Clus#2: break into 2 groups (50% each)
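
The two clusterings in the table can be reproduced by binning each value by its percentage of the largest value into equal-width groups. The helper names are hypothetical; flagging single-member clusters follows the slide's remark about groups 2 and 4.

```python
import math
from collections import Counter


def cluster(values: list[float], n_groups: int) -> list[int]:
    """Assign each value to one of n_groups equal-width bins by its
    percentage of the largest value (bin 1 = lowest)."""
    top = max(values)
    return [max(1, math.ceil(v / top * n_groups)) for v in values]


def sparse_clusters(labels: list[int]) -> list[int]:
    """Clusters with a single member are candidate anomalies."""
    sizes = Counter(labels)
    return sorted(c for c, size in sizes.items() if size == 1)


users = ["matt", "holly", "heidi", "steven", "david", "mike"]
values = [359, 10, 263, 68, 133, 195]
clus1 = cluster(values, 4)
print(clus1)                   # [4, 1, 3, 1, 2, 3]
print(sparse_clusters(clus1))  # [2, 4]
print(cluster(values, 2))      # [2, 1, 2, 1, 1, 2]
```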

Finding Features
- Which features best show anomalies?
  - CPU use may not, but I/O use may
- Use training data
  - Anomalous data marked
  - Feature selection program picks features, clusters that best reflect anomalous data

Example
- Analysis of network traffic for features enabling classification as anomalous
- 7 features
  - Index number
  - Length of time of connection
  - Packet count from source to destination
  - Packet count from destination to source
  - Number of data bytes from source to destination
  - Number of data bytes from destination to source
  - Expert system warning of how likely an attack

Feature Selection
- 3 types of algorithms used to select best feature set
  - Backwards sequential search: assume full set, delete features until error rate minimized
    - Best: all features except index (error rate 0.011%)
  - Beam search: order possible clusters from best to worst, then search from best
    - As new potential clusters generated, they are added to list
    - Produced same error rate and feature set size as backwards sequential search
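
Backwards sequential search can be sketched as greedy backward elimination over any error function. In practice the error would come from a classifier evaluated on marked training data; here `toy_error` is a made-up stand-in in which the index feature only adds noise, and the feature names are hypothetical shorthands for the seven features listed earlier.

```python
from typing import Callable, FrozenSet, Iterable, Tuple


def backward_sequential_search(
    features: Iterable[str],
    error_rate: Callable[[FrozenSet[str]], float],
) -> Tuple[FrozenSet[str], float]:
    """Start from the full feature set and repeatedly drop the single feature
    whose removal lowers the error most; stop when no removal helps."""
    current = frozenset(features)
    best_error = error_rate(current)
    while len(current) > 1:
        candidate, candidate_error = min(
            ((current - {f}, error_rate(current - {f})) for f in current),
            key=lambda pair: pair[1],
        )
        if candidate_error >= best_error:
            break  # local minimum reached
        current, best_error = candidate, candidate_error
    return current, best_error


FEATURES = ["index", "duration", "pkts_src", "pkts_dst", "bytes_src", "bytes_dst", "expert"]


def toy_error(subset: FrozenSet[str]) -> float:
    # Made-up error model: "index" adds noise; each other missing feature costs accuracy.
    missing = len(set(FEATURES) - {"index"} - subset)
    return 0.10 + 0.05 * ("index" in subset) + 0.20 * missing


chosen, err = backward_sequential_search(FEATURES, toy_error)
print(sorted(chosen), round(err, 3))  # every feature except "index", 0.1
```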

Feature Selection
- 3 types of algorithms used to select best feature set
  - Random sequential search: begin with random feature set, add and delete features
    - Slowest
    - Produced same results as other two

Results
- If following features used, classification error less than 0.02%:
  - Length of time of connection
  - Number of packets from destination
  - Number of data bytes from source
- Identifying type of connection (like SMTP)
  - Best feature set omitted index, number of data bytes from destination (error rate 0.007%)
  - Other types of connections done similarly, but used different sets

Misuse Modeling
- Determines whether a sequence of instructions being executed is known to violate the site security policy
  - Descriptions of known or potential exploits grouped into rule sets
  - IDS matches data against rule sets; on success, potential attack found
- Cannot detect attacks unknown to developers of rule sets
  - No rules to cover them
  - Sometimes even miss variations of known attacks

Example: IDIOT
- Event: change in system state. Can be result of a single action, or a series of actions resulting in a single observable record
- Five features of attacks:
  - Existence: attack creates file or other entity
  - Sequence: attack causes several events sequentially
  - Partial order: attack causes 2 or more sequences of events, and events form partial order under temporal relation
  - Duration: something exists for interval of time
  - Interval: events occur exactly n units of time apart

IDIOT Representation
- Sequences of events may be interlaced
- Use colored Petri nets to capture this
  - Each signature corresponds to a particular colored Petri automaton (CPA)
  - Nodes are tokens; edges, transitions
  - Final state of signature is compromised state
- Example: mkdir attack
  - Edges protected by guards (expressions)
  - Tokens move from node to node as guards satisfied

IDIOT Analysis

IDIOT Features
- New signatures can be added dynamically
  - Partially matched signatures need not be cleared and rematched
- Ordering the CPAs allows you to order the checking for attack signatures
  - Useful when you want a priority ordering
  - Can order initial branches of CPA to find sequences known to occur often

Example: STAT
- Analyzes state transitions
  - Need to keep only data relevant to security
  - Example: look at process gaining root privileges; how did it get them?
- Example: attack giving setuid to root shell
  - `ln target ./-s`
  - `-s`

State Transition Diagram
- Now add postconditions for attack under the appropriate state

Final State Diagram
- Conditions met when system enters states s1 and s2; USER is effective UID of process
- Note final postcondition is USER is no longer effective UID; usually done with new EUID of 0 (root) but works with any EUID

USTAT
- USTAT is prototype STAT system
  - Uses BSM to get system records
  - Preprocessor gets events of interest, maps them into USTAT’s internal representation
    - Failed system calls ignored as they do not change state
- Inference engine determines when compromising transition occurs

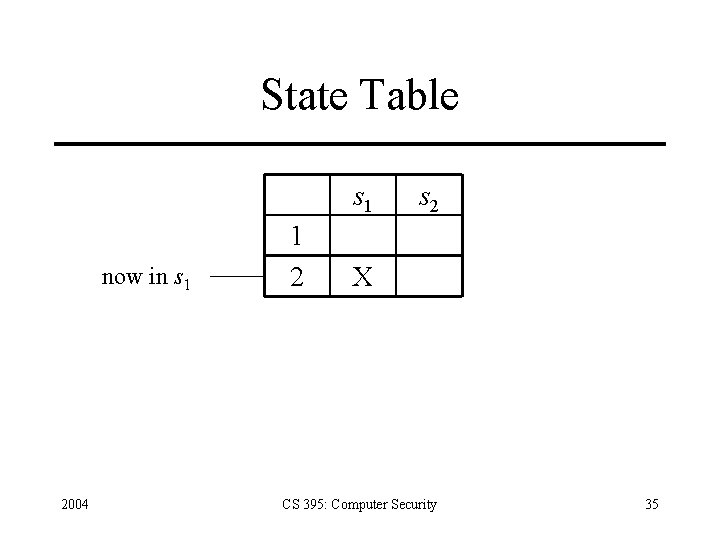

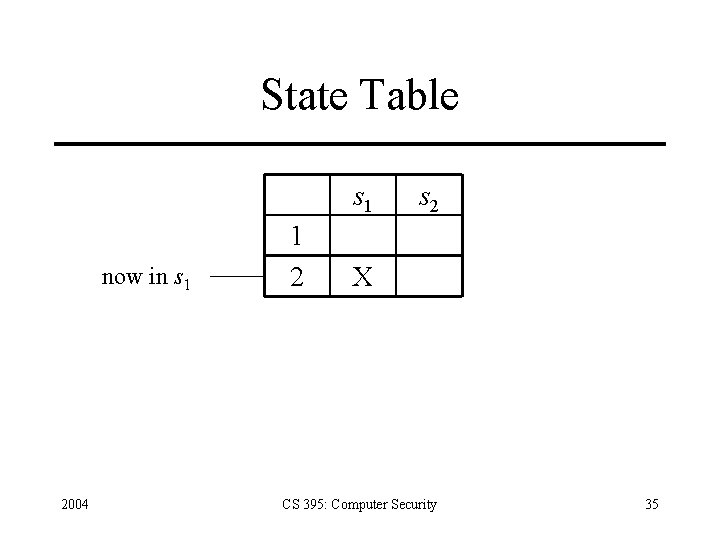

How Inference Engine Works
- Constructs series of state table entries corresponding to transitions
- Example: rule base has single rule above
  - Initial table has 1 row, 2 columns (corresponding to s1 and s2)
  - Transition moves system into s1
  - Engine adds second row, with "X" in first column as in state s1
  - Transition moves system into s2
  - Rule fires as in compromised transition
- Does not clear row until conditions of that state false

State Table (now in s1)

| | s1 | s2 |
|---|---|---|
| 1 | | |
| 2 | X | |
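
A toy version of the inference engine's state table, for one rule with ordered states. It abstracts away the pre- and postconditions: the caller reports which state the system entered, and `clear` stands in for "conditions of that state became false". All names here are hypothetical.

```python
class StateTable:
    """Toy STAT-style inference engine for one rule with states s1..sn.
    Each row is one (partial) match; "X" in column i means that match is
    currently in state s_{i+1}."""

    def __init__(self, n_states: int):
        self.n_states = n_states
        self.rows = [[""] * n_states]  # initial row: no state reached yet

    def transition(self, state: int) -> bool:
        """Record a transition into s_state (1-based).
        Returns True when the final, compromising transition occurs."""
        col = state - 1
        for row in list(self.rows):  # snapshot, since new rows may be appended
            marked = [i for i, mark in enumerate(row) if mark == "X"]
            expected_col = marked[-1] + 1 if marked else 0
            if expected_col != col:
                continue
            if col == self.n_states - 1:
                return True  # rule fires
            new_row = [""] * self.n_states
            new_row[col] = "X"
            self.rows.append(new_row)
        return False

    def clear(self, state: int) -> None:
        """Drop rows in s_state once that state's conditions become false."""
        col = state - 1
        self.rows = [row for row in self.rows if row[col] != "X"]


table = StateTable(2)
print(table.transition(1))  # False: second row added with X under s1
print(table.rows)           # [['', ''], ['X', '']]
print(table.transition(2))  # True: compromising transition into s2
```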

Example: NFR (Network Flight Recorder)
- Built to make adding new rules easy
- Architecture:
  - Packet sucker: reads packets from network
  - Decision engine: uses filters to extract information
  - Backend: writes data generated by filters to disk
    - Query backend allows administrators to extract raw, postprocessed data from this file
    - Query backend is separate from NFR process

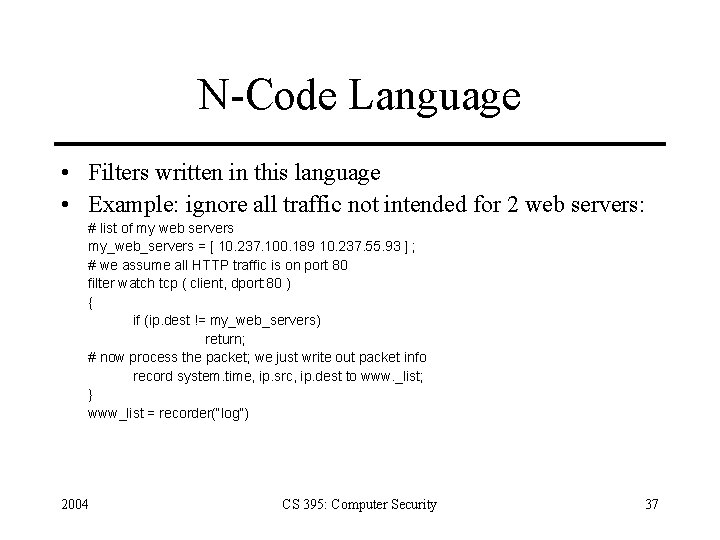

N-Code Language
- Filters written in this language
- Example: ignore all traffic not intended for 2 web servers:

```
# list of my web servers
my_web_servers = [ 10.237.100.189 10.237.55.93 ] ;
# we assume all HTTP traffic is on port 80
filter watch tcp ( client, dport:80 )
{
    if (ip.dest != my_web_servers)
        return;
    # now process the packet; we just write out packet info
    record system.time, ip.src, ip.dest to www_list;
}
www_list = recorder("log")
```

Specification Modeling
- Determines whether execution of sequence of instructions violates specification
- Only need to check programs that alter protection state of system
- System traces, or sequences of events $t_1, \ldots, t_i, t_{i+1}, \ldots$, are basis of this
  - Event $t_i$ occurs at time $C(t_i)$
  - Events in a system trace are totally ordered

System Traces
- Notion of subtrace (subsequence of a trace) allows you to handle threads of a process, processes of a system
- Notion of merge of traces $U$, $V$ when trace $U$ and trace $V$ are merged into a single trace
- Filter $p$ maps trace $T$ to subtrace $T'$ such that, for all events $t_i \in T'$, $p(t_i)$ is true

Examples
- Subject S composed of processes p, q, r, with traces $T_p$, $T_q$, $T_r$, has $T_s = T_p \oplus T_q \oplus T_r$ (the merge of the three traces)
- Filtering function: apply to system trace
  - On process, program, host, user as 4-tuple
    - ⟨ANY, emacs, ANY, bishop⟩ lists events with program "emacs", user "bishop"
    - ⟨ANY, ANY, nobhill, ANY⟩ lists events on host "nobhill"
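
Filters and merges over traces can be sketched with events as records ordered by time $C(t_i)$. The `Event` fields follow the ⟨process, program, host, user⟩ tuple above. `ANY` as a `None` wildcard and the sample traces are assumptions made for illustration.

```python
import heapq
from typing import NamedTuple, Optional


class Event(NamedTuple):
    time: int      # C(t_i); events within a trace are totally ordered by time
    process: str
    program: str
    host: str
    user: str


ANY = None  # wildcard entry in a filter 4-tuple


def make_filter(process: Optional[str] = ANY, program: Optional[str] = ANY,
                host: Optional[str] = ANY, user: Optional[str] = ANY):
    """Build predicate p from a <process, program, host, user> 4-tuple."""
    pattern = (process, program, host, user)

    def p(e: Event) -> bool:
        fields = (e.process, e.program, e.host, e.user)
        return all(want is ANY or want == got for want, got in zip(pattern, fields))

    return p


def apply_filter(trace: list[Event], p) -> list[Event]:
    """Subtrace T' of T: exactly the events t_i for which p(t_i) is true."""
    return [e for e in trace if p(e)]


def merge(*traces: list[Event]) -> list[Event]:
    """Merge time-ordered traces into one time-ordered trace."""
    return list(heapq.merge(*traces, key=lambda e: e.time))


# Hypothetical traces for processes p and q of one subject.
t_p = [Event(1, "p", "emacs", "nobhill", "bishop"), Event(4, "p", "emacs", "nobhill", "bishop")]
t_q = [Event(2, "q", "vi", "other", "alice"), Event(3, "q", "emacs", "other", "bishop")]
t_s = merge(t_p, t_q)
print([e.time for e in t_s])  # [1, 2, 3, 4]
print([e.time for e in apply_filter(t_s, make_filter(program="emacs", user="bishop"))])  # [1, 3, 4]
print([e.time for e in apply_filter(t_s, make_filter(host="nobhill"))])  # [1, 4]
```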

Example: Apply to rdist
- Ko, Levitt, Ruschitzka defined PE-grammar to describe accepted behavior of program
- rdist creates temp file, copies contents into it, changes its protection mask and owner, copies it into place
  - Attack: during copy, delete temp file and place symbolic link with same name as temp file
  - rdist then changes mode and ownership of the file the link points to, giving it those of the program being distributed

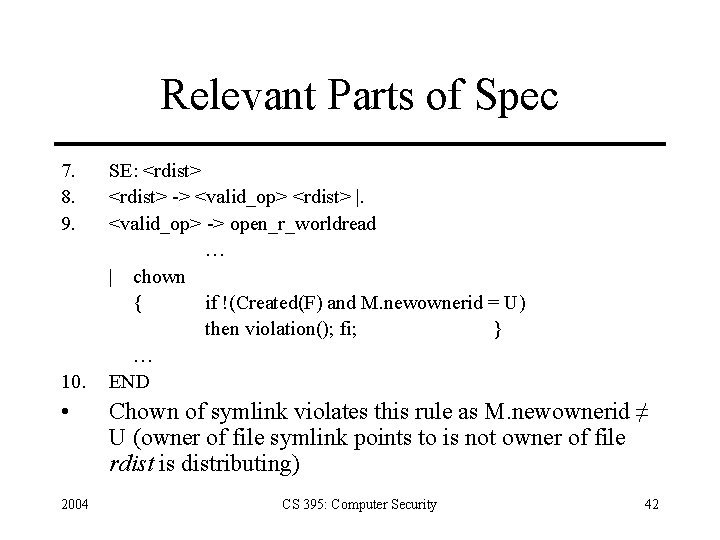

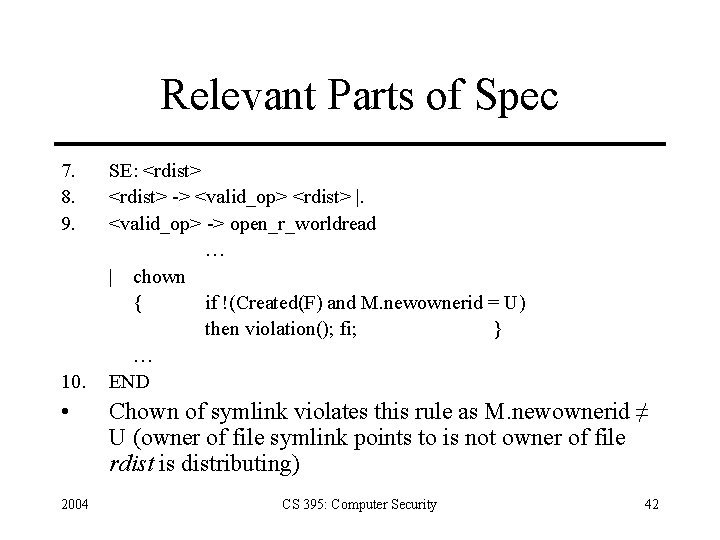

Relevant Parts of Spec

```
SE: <rdist>
<rdist> -> <valid_op> <rdist> | .
<valid_op> -> open_r_worldread
    …
  | chown
    { if !(Created(F) and M.newownerid = U)
        then violation(); fi; }
    …
END
```

- Chown of symlink violates this rule as M.newownerid ≠ U (owner of file symlink points to is not owner of file rdist is distributing)

Comparison and Contrast
- Misuse detection: if all policy rules known, easy to construct rulesets to detect violations
  - Usual case is that much of policy is unspecified, so rulesets describe attacks, and are not complete
- Anomaly detection: detects unusual events, but these are not necessarily security problems
- Specification-based vs. misuse: spec assumes if specifications followed, policy not violated; misuse assumes if policy as embodied in rulesets followed, policy not violated

IDS Architecture
- Basically, a sophisticated audit system
  - Agent like logger; it gathers data for analysis
  - Director like analyzer; it analyzes data obtained from the agents according to its internal rules
  - Notifier obtains results from director, and takes some action
    - May simply notify security officer
    - May reconfigure agents, director to alter collection, analysis methods
    - May activate response mechanism

Agents
- Obtains information and sends to director
- May put information into another form
  - Preprocessing of records to extract relevant parts
- May delete unneeded information
- Director may request agent send other information

Example
- IDS uses failed login attempts in its analysis
- Agent scans login log every 5 minutes, sends to director for each new failed login attempt:
  - Time of failed login
  - Account name and entered password
- Director requests all records of login (failed or not) for particular user
  - Suspecting a brute-force cracking attempt
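
A sketch of the agent/director exchange in this example. It assumes a hypothetical in-memory log format and a director threshold of 5 failures per account. The 5-minute schedule is left out (`scan()` is what would run on each pass), and so is the entered-password field.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class LoginRecord:
    time: int        # hypothetical log format: seconds since some epoch
    account: str
    succeeded: bool


class Agent:
    """Scans the login log and forwards only failed logins not sent before."""

    def __init__(self, log: list[LoginRecord]):
        self.log = log
        self.next_index = 0  # first log entry not yet scanned

    def scan(self) -> list[LoginRecord]:
        new = self.log[self.next_index:]
        self.next_index = len(self.log)
        return [rec for rec in new if not rec.succeeded]

    def all_records_for(self, account: str) -> list[LoginRecord]:
        """Director's follow-up request: every login record, failed or not."""
        return [rec for rec in self.log if rec.account == account]


class Director:
    """Counts failed logins per account; asks the agent for more data when suspicious."""

    def __init__(self, agent: Agent, limit: int = 5):
        self.agent = agent
        self.limit = limit  # assumed threshold for "suspicious"
        self.failures: dict[str, int] = defaultdict(int)

    def analyze(self) -> list[tuple[str, list[LoginRecord]]]:
        suspects = []
        for rec in self.agent.scan():
            self.failures[rec.account] += 1
            if self.failures[rec.account] == self.limit:
                # Suspected brute-force attempt: pull the user's full login history.
                suspects.append((rec.account, self.agent.all_records_for(rec.account)))
        return suspects


log = [LoginRecord(t, "root", False) for t in range(5)] + [LoginRecord(9, "jane", True)]
director = Director(Agent(log))
for account, records in director.analyze():
    print(account, len(records))  # root 5
```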

Host-Based Agent
- Obtain information from logs
  - May use many logs as sources
  - May be security-related or not
  - May be virtual logs if agent is part of the kernel
    - Very non-portable
- Agent generates its information
  - Scans information needed by IDS, turns it into equivalent of log record
  - Typically, check policy; may be very complex

Network-Based Agents
- Detects network-oriented attacks
  - Denial of service attack introduced by flooding a network
- Monitor traffic for a large number of hosts
- Examine the contents of the traffic itself
- Agent must have same view of traffic as destination
  - TTL tricks, fragmentation may obscure this
- End-to-end encryption defeats content monitoring
  - Not traffic analysis, though

Network Issues
- Network architecture dictates agent placement
  - Ethernet or broadcast medium: one agent per subnet
  - Point-to-point medium: one agent per connection, or agent at distribution/routing point
- Focus is usually on intruders entering network
  - If few entry points, place network agents behind them
  - Does not help if inside attacks to be monitored

Aggregation of Information
- Agents produce information at multiple layers of abstraction
  - Application-monitoring agents provide one view (usually one line) of an event
  - System-monitoring agents provide a different view (usually many lines) of an event
  - Network-monitoring agents provide yet another view (involving many network packets) of an event

Director
- Reduces information from agents
  - Eliminates unnecessary, redundant records
- Analyzes remaining information to determine if attack under way
  - Analysis engine can use a number of techniques, discussed before, to do this
- Usually run on separate system
  - Does not impact performance of monitored systems
  - Rules, profiles not available to ordinary users

Example
- Jane logs in to perform system maintenance during the day
- She logs in at night to write reports
- One night she begins recompiling the kernel
- Agent #1 reports logins and logouts
- Agent #2 reports commands executed
  - Neither agent spots discrepancy
  - Director correlates logs, spots it at once

Adaptive Directors
- Modify profiles, rulesets to adapt their analysis to changes in system
  - Usually use machine learning or planning to determine how to do this
- Example: use neural nets to analyze logs
  - Network adapted to users’ behavior over time
  - Used learning techniques to improve classification of events as anomalous
    - Reduced number of false alarms

Notifier
- Accepts information from director
- Takes appropriate action
  - Notify system security officer
  - Respond to attack
- Often GUIs
  - Well-designed ones use visualization to convey information

GrIDS GUI
- GrIDS interface showing the progress of a worm as it spreads through network
- Left is early in spread
- Right is later on

Other Examples
- Courtney detected SATAN attacks
  - Added notification to system log
  - Could be configured to send email or paging message to system administrator
- IDIP protocol coordinates IDSes to respond to attack
  - If an IDS detects attack over a network, notifies other IDSes on cooperative firewalls; they can then reject messages from the source

Organization of an IDS
- Monitoring network traffic for intrusions
  - NSM system
- Combining host and network monitoring
  - DIDS
- Making the agents autonomous
  - AAFID system

Monitoring Networks: NSM
- Develops profile of expected usage of network, compares current usage
- Has 3-D matrix for data
  - Axes are source, destination, service
  - Each connection has unique connection ID
  - Contents are number of packets sent over that connection for a period of time, and sum of data
  - NSM generates expected connection data
  - Expected data masks data in matrix, and anything left over is reported as an anomaly
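
The masking idea can be sketched with a sparse matrix keyed by (source, destination, service). This version tracks only packet counts (not data sums) and treats "left over" as observed traffic in excess of expectation; the hosts and counts are made up.

```python
from collections import Counter


def build_matrix(connections):
    """3-D matrix: (source, destination, service) -> total packets seen."""
    matrix = Counter()
    for src, dst, service, packets in connections:
        matrix[(src, dst, service)] += packets
    return matrix


def leftover(observed: Counter, expected: Counter, tolerance: int = 0) -> dict:
    """Mask observed cells with expected cells; what remains is reported."""
    report = {}
    for cell, count in observed.items():
        excess = count - expected.get(cell, 0)
        if excess > tolerance:
            report[cell] = excess
    return report


expected = build_matrix([("S1", "D1", "SMTP", 100), ("S1", "D2", "FTP", 20)])
observed = build_matrix([("S1", "D1", "SMTP", 90), ("S1", "D2", "FTP", 20),
                         ("S1", "D3", "telnet", 40)])
print(leftover(observed, expected))  # {('S1', 'D3', 'telnet'): 40}
```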

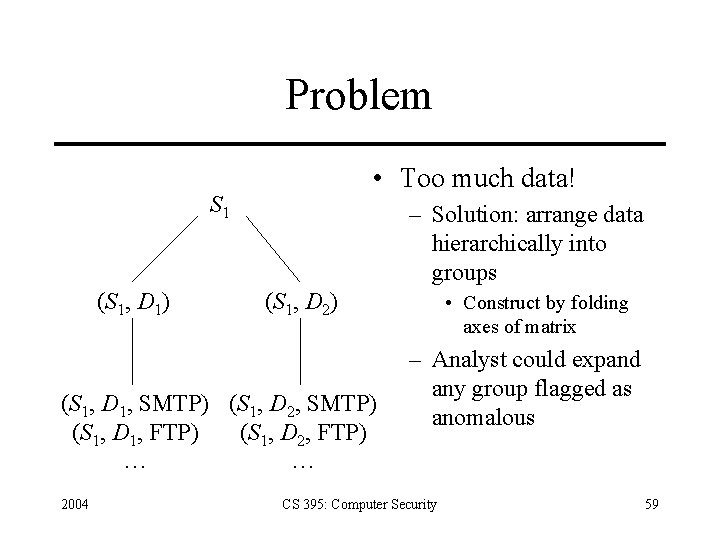

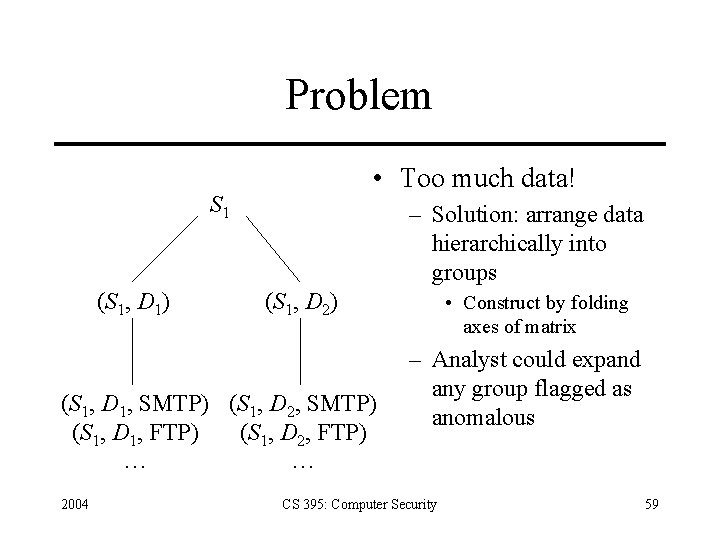

Problem
- Too much data!
  - Solution: arrange data hierarchically into groups
    - S1
      - (S1, D1)
        - (S1, D1, SMTP)
        - (S1, D1, FTP)
        - …
      - (S1, D2)
        - (S1, D2, SMTP)
        - (S1, D2, FTP)
        - …
- Construct by folding axes of matrix
  - Analyst could expand any group flagged as anomalous

Signatures
- Analyst can write rule to look for specific occurrences in matrix
  - Repeated telnet connections lasting only as long as set-up indicates failed login attempt
- Analyst can write rules to match against network traffic
  - Used to look for excessive logins, attempt to communicate with non-existent host, single host communicating with 15 or more hosts
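
The last signature, a single host communicating with 15 or more hosts, reduces to counting distinct peers per source. Connections are assumed to be (source, destination) pairs, and the addresses below are made up.

```python
from collections import defaultdict


def fan_out_alerts(connections, limit=15):
    """Return source hosts seen communicating with `limit` or more distinct hosts."""
    peers = defaultdict(set)
    for src, dst in connections:
        peers[src].add(dst)
    return sorted(src for src, dsts in peers.items() if len(dsts) >= limit)


traffic = [("10.0.0.7", f"10.0.1.{i}") for i in range(15)] + [("10.0.0.2", "10.0.0.3")]
print(fan_out_alerts(traffic))  # ['10.0.0.7']
```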

Other
- Graphical interface independent of the NSM matrix analyzer
- Detected many attacks
  - But false positives too
- Still in use in some places
  - Signatures have changed, of course
- Also demonstrated intrusion detection on network is feasible
  - Did no content analysis, so would work even with encrypted connections

Combining Host and Network Monitoring (DIDS)
- DIDS: Distributed Intrusion Detection System
  - Combines network monitoring of NSM with host-based intrusion detection
- Why? Network would miss intruder logging into account without password, but host-based might catch this after further action. Host would miss attacker who telnets with several user names, but network would catch this.

DIDS
- DIDS director: centralized analysis engine
  - Rule-based expert system that made inferences about both hosts and entire system
- Agents on hosts and monitoring network
- Example correlation: intruder accesses first system as Alice, second as Bob. DIDS would notice if Alice connected to a remote host and logged in to second system as Bob. Would then consider Alice and Bob to be same entity.

Autonomous Agents
- Autonomous agent: process that can act independently of system of which it is part
- Different species of agent, each with own internal model and rules. When these are violated, agent notifies other agents (of its own and other species)
  - Hybrid vigor: an attacker who compromises one type of agent may know nothing about other types
  - Agent division of labor can make each type relatively simple

Autonomous Agents
- Eliminates single point of failure (cooperation of agents creates distributed control)
- Scalability: agents can migrate through network and process data on multiple systems
- Disadvantage: communication costs
  - Simple agents means more agents needed to monitor system, hence greater communication costs
  - Communications must be secured
  - Distributed computation must be secured

AAFID
- Autonomous Agents For Intrusion Detection
- Each host has agents and transceiver
  - Transceiver controls agents (initiates and terminates them), collates info, forwards to monitor (if no monitor on host)
- Filters: provide access to system resources in system-independent form
  - Agents subscribe to filters (possibly many to a single filter)

AAFID
- Monitors form the distributed control
  - Communication with transceivers and other monitors
  - System must ensure consistency among monitors
- Implemented prototype runs on Linux and Solaris systems
  - Written in Perl
  - Slow, but demonstrated that autonomous agent IDS is practical

Intrusion Response
- Goal: handle attempted attack in way such that damage is minimized. May also thwart attack.
- Incident Prevention
- Intrusion Handling
  - Containment
  - Eradication
  - Follow-Up

Incident Prevention
- Want to detect and stop attacks before they succeed (so attack needs to be identified before it completes)
- Jailing: allow attacker to think they have succeeded, when in reality they are in confined area
  - Berferd
- Somayaji and Forrest
  - Record anomalous system calls in locality frame buffer
  - When locality frame count (LFC) exceeds predefined parameter, system calls delayed by $d \cdot 2^{\mathrm{LFC}}$ for $d$ a tunable parameter
  - If maximum LFC exceeded, system call blocked
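
The delay rule can be sketched as a sliding window of recent system calls. The window size of 128 calls, the default thresholds, and `d` are illustrative assumptions. Anomaly detection itself is out of scope: the caller passes in whether each call was anomalous.

```python
from collections import deque


class DelayResponder:
    """Delay-based response sketch after Somayaji and Forrest.

    Assumptions: the locality frame holds the last `frame_size` system calls,
    and d and both thresholds are illustrative tunables.
    """

    def __init__(self, d=0.01, delay_threshold=1, max_lfc=10, frame_size=128):
        self.d = d
        self.delay_threshold = delay_threshold
        self.max_lfc = max_lfc
        self.frame = deque(maxlen=frame_size)  # 1 = anomalous call, 0 = normal

    def on_syscall(self, anomalous: bool) -> tuple[str, float]:
        """Return ("allow", 0.0), ("delay", seconds) or ("block", 0.0)."""
        self.frame.append(1 if anomalous else 0)
        lfc = sum(self.frame)  # locality frame count
        if lfc > self.max_lfc:
            return ("block", 0.0)
        if lfc > self.delay_threshold:
            return ("delay", self.d * 2 ** lfc)
        return ("allow", 0.0)


r = DelayResponder(d=1.0, delay_threshold=1, max_lfc=3)
print([r.on_syscall(True) for _ in range(4)])
# [('allow', 0.0), ('delay', 4.0), ('delay', 8.0), ('block', 0.0)]
```

Because the delay doubles with each additional anomaly in the frame, a burst of anomalous calls slows a process sharply, while isolated anomalies cost little.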

Intrusion Handling
- Preparation: establishes procedures and mechanisms for detecting and responding to attacks
- Identification
- Containment: limit damage of attack
- Eradication: stop attack and block further similar attacks
- Recovery: restore system to secure state
- Follow-up: take action against adversary, identify problems in handling of incident, record lessons learned

Containment
- Passive monitoring: record actions for later use
  - Marginally useful
  - May reveal goals of attacker
  - System remains vulnerable, and intruder could attack other systems through this one
- Constrain actions of attacker
  - Considerably more difficult
  - Minimize protection domain while preventing attack from succeeding
  - May not know goals of attack (so the previous point requires guessing that may be wrong)

Honeypots
- Also called decoy servers
  - Offer many targets for attackers
  - Instrumented and closely monitored
  - Designed to trick attacker into revealing goals
  - When attack detected, system takes action to shift attacker onto honeypot system
- Ex. Stoll: created large fake file detailing nuclear weapons technology to ensure that attacker remained connected long enough for international trace

Deception Tool Kit (DTK)
- Creates false network interface that allows sysadmin to present any desired configuration to incoming connections
- When probed, DTK returns wide range of vulnerabilities
- Sysadmin can configure illusory systems and servers in order to determine attacker goals and ability
- Experiments indicate this could be effective response to keep attackers from targeting real systems

Honeynet Project
- Created to learn about hacker community
- Interested in motives, techniques, and tools of attackers
- Two phases
  - Identify common threats against specific OSs and configurations
  - Develop honeypot network that is easy to deploy, hard to detect, and efficient at collecting data

Eradication Phase
- This means stopping the attack
- Need to deny access to system, either through breaking of network connection or termination of involved processes
- Wrappers: common means of implementing blocking
  - Wrappers implement various forms of access control
  - Wrappers kick in when attack detected
  - Fraser, Badger, Feldman used loadable kernel modules to place wrappers in kernels of UNIX systems
    - Could also be used to write audit logs, generate system call counts, etc.
  - Can also control network access (e.g. wrap a database)

Firewalls
- Can be thought of as kind of wrapper (e.g. consider proxy server)
- We’ve seen the difficulties with these
- E.g. Java applets
  - Change `<applet>` tag
  - Remove files with CA FE BA BE hex sequence
  - Disallow files with given extensions (problem with classes in .zip files)
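
The last two applet defenses can be combined in one content filter: block by extension, and block any payload containing the Java class-file magic bytes 0xCAFEBABE. The function name and the default extension list are assumptions.

```python
JAVA_CLASS_MAGIC = bytes.fromhex("CAFEBABE")


def should_block(filename: str, payload: bytes,
                 blocked_extensions: tuple[str, ...] = (".class",)) -> bool:
    """Firewall-style content filter for Java applets.

    Blocks a download if its name ends in a blocked extension or if the
    raw bytes contain the class-file magic number 0xCAFEBABE.
    """
    if filename.lower().endswith(blocked_extensions):
        return True
    return JAVA_CLASS_MAGIC in payload


print(should_block("Applet.class", b""))                      # True: extension
print(should_block("data.bin", b"\x00\xca\xfe\xba\xbe\x00"))  # True: magic bytes
print(should_block("notes.txt", b"hello"))                    # False
```

A class compressed inside a .zip archive carries neither the .class name nor the raw magic bytes, so both checks miss it. That is the problem the slide notes.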

IDIP
- Intruder Detection and Isolation Protocol
  - Provides protocol for coordinated responses to attacks
- Boundary controller: system that can block connections from entering a perimeter
  - Typically firewalls or routers
  - Neighbors if directly connected