Global Trees A Framework for Linked Data Structures

Global Trees: A Framework for Linked Data Structures on Distributed Memory Parallel Systems D. Brian Larkins, James Dinan, Sriram Krishnamoorthy, Srinivasan Parthasarthy, Atanas Rountev, P. Sadayappan

Background • Trees and graphs can concisely represent relationships between data • Data sets are becoming increasingly large and can require compute-intensive processing • Developing efficient, memory hierarchyaware applications is hard

Sample Applications • n-body simulation • Fast Multipole Methods (FMM) • multiresolution analysis • clustering and classification • frequent pattern mining

Key Contributions • Efficient fine-grained data access with a global view of data • Exploit linked structure to provide fast global pointer dereferencing • High-level, locality-aware, parallel operations on linked data structures • Application-driven customization • Empirical validation of the approach

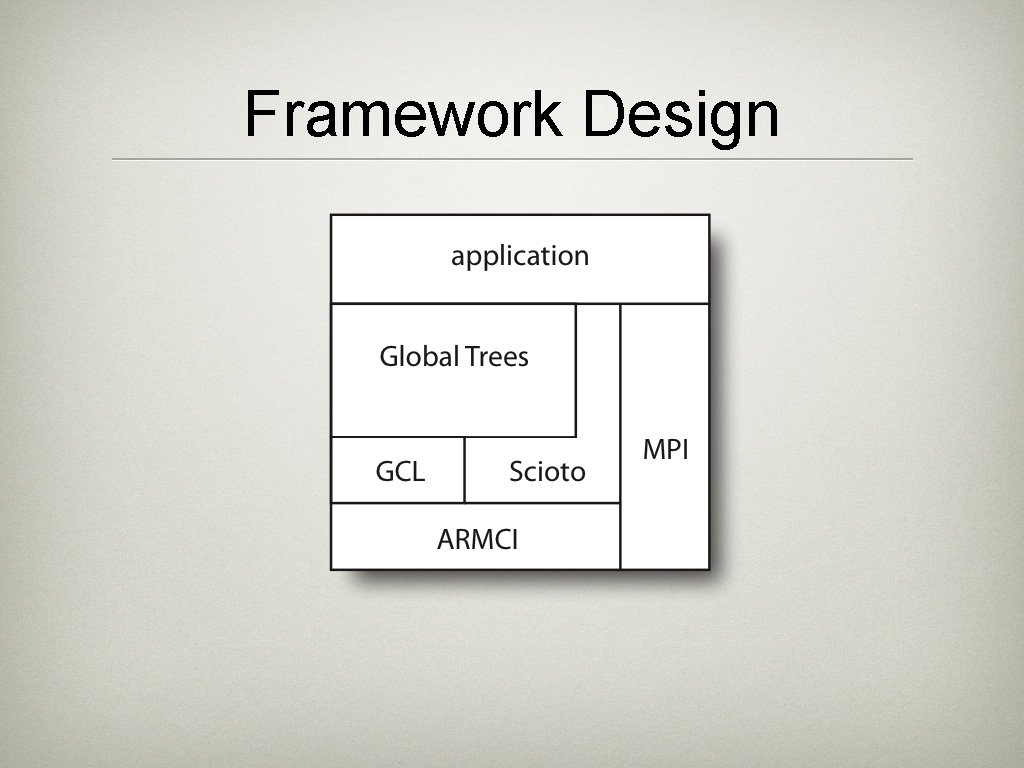

Framework Design

Global Chunk Layer (GCL) • API and run-time library for managing chunks - built on ARMCI • Abstracts common functionality for handling irregular, linked data • Provides a global namespace with access and modification operations • Extensible and highly customizable to maximize functionality and performance

Chunks A chunk is: • Contiguous memory segment • Globally accessible • Physically local to only one process • Collection of user-defined elements • Unit of data transfer

Programming Model • SPMD with MIMD-style parallelism • Global pointers permit fine-grained access • Chunks allow coarse-grained data movement • Uses get/compute/put model for globally shared data access • Provides both uniform global view and chunked global view of data

![Global Pointers c } } p c = &p. child[i] + p. child[i]. ci Global Pointers c } } p c = &p. child[i] + p. child[i]. ci](http://slidetodoc.com/presentation_image_h2/a904c1d1ae6b2903f044743e3cd115cd/image-9.jpg)

Global Pointers c } } p c = &p. child[i] + p. child[i]. ci + p. child[i]. no 4252 + -4252 + 4340

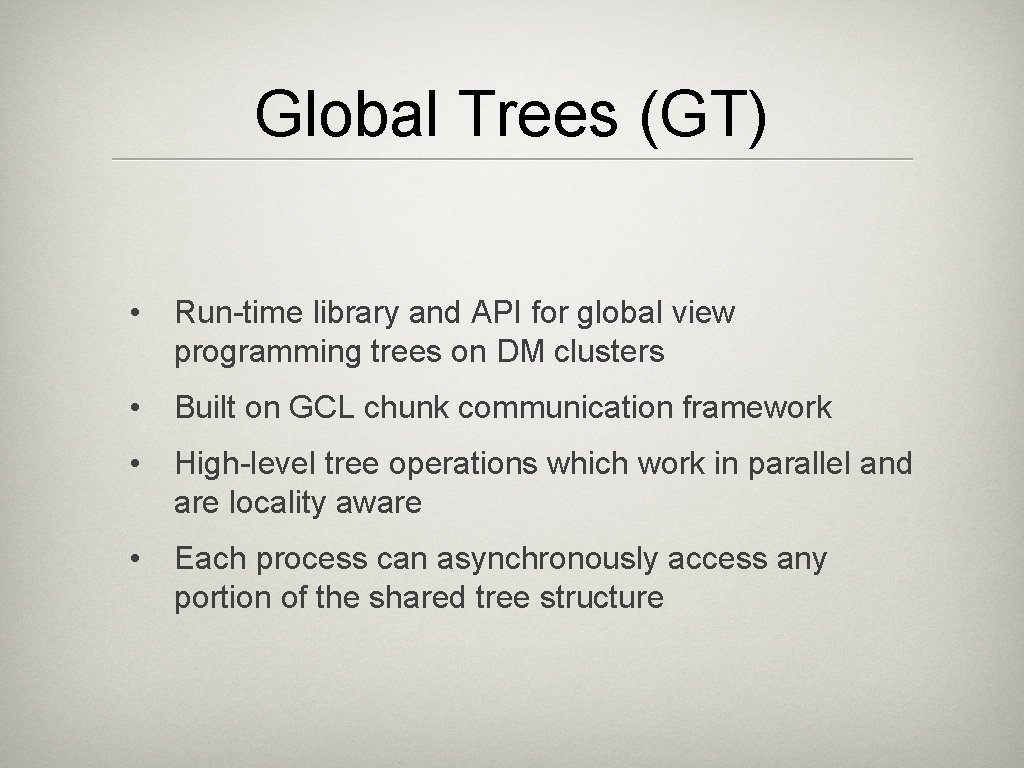

Global Trees (GT) • Run-time library and API for global view programming trees on DM clusters • Built on GCL chunk communication framework • High-level tree operations which work in parallel and are locality aware • Each process can asynchronously access any portion of the shared tree structure

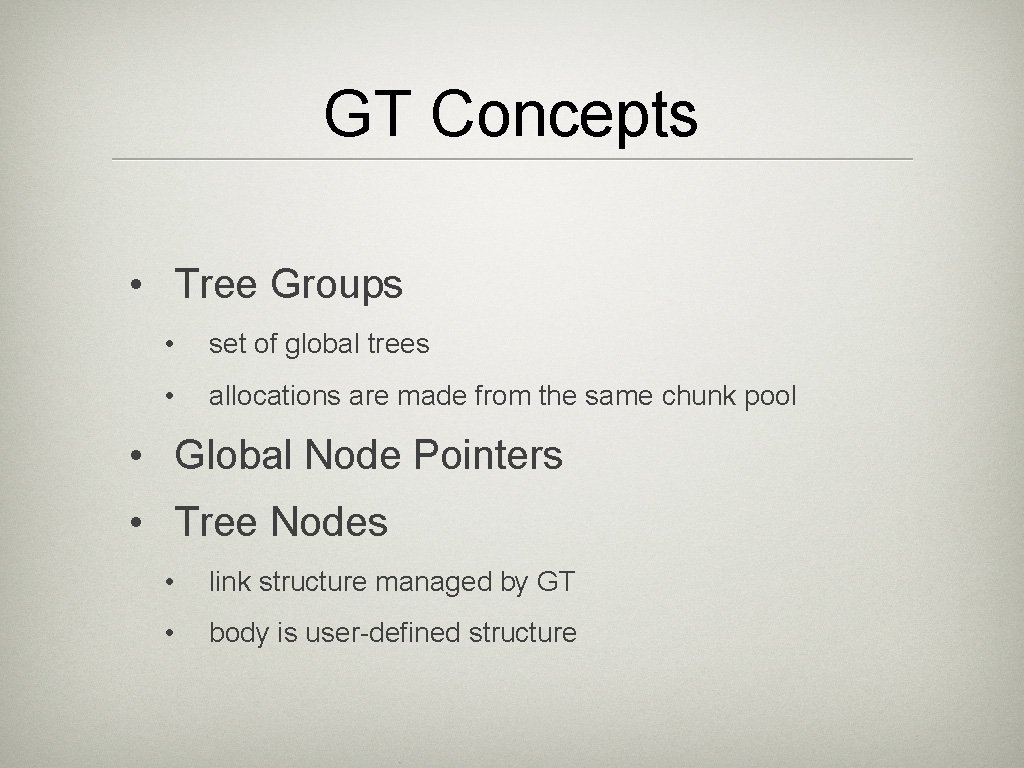

GT Concepts • Tree Groups • set of global trees • allocations are made from the same chunk pool • Global Node Pointers • Tree Nodes • link structure managed by GT • body is user-defined structure

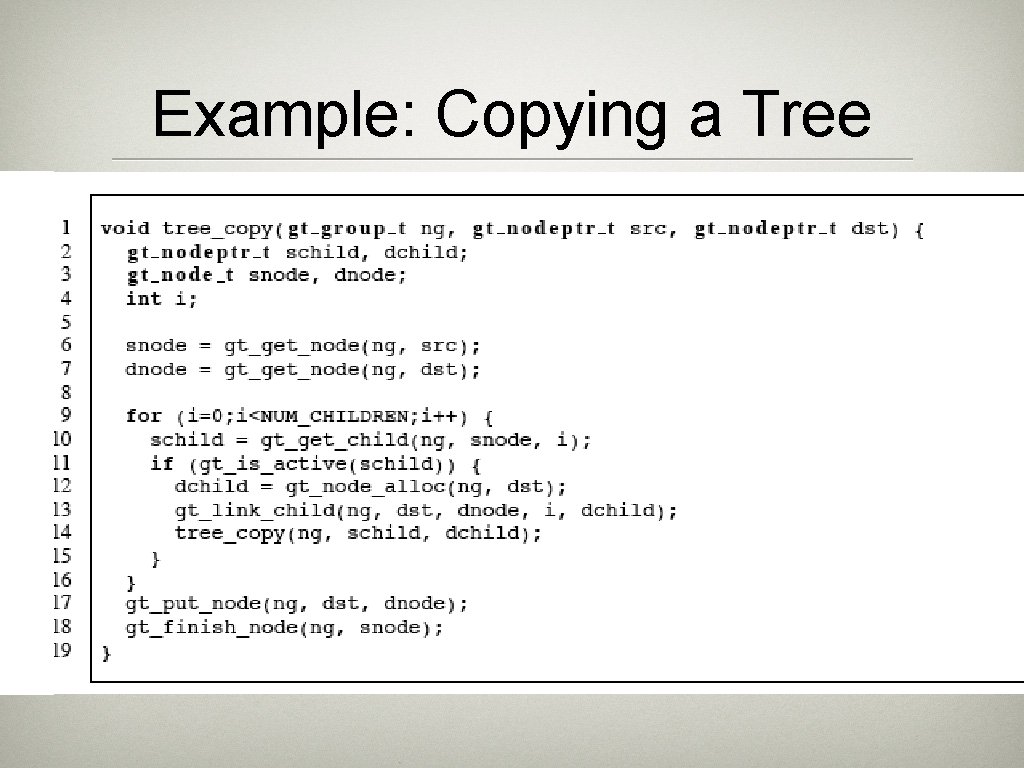

Example: Copying a Tree

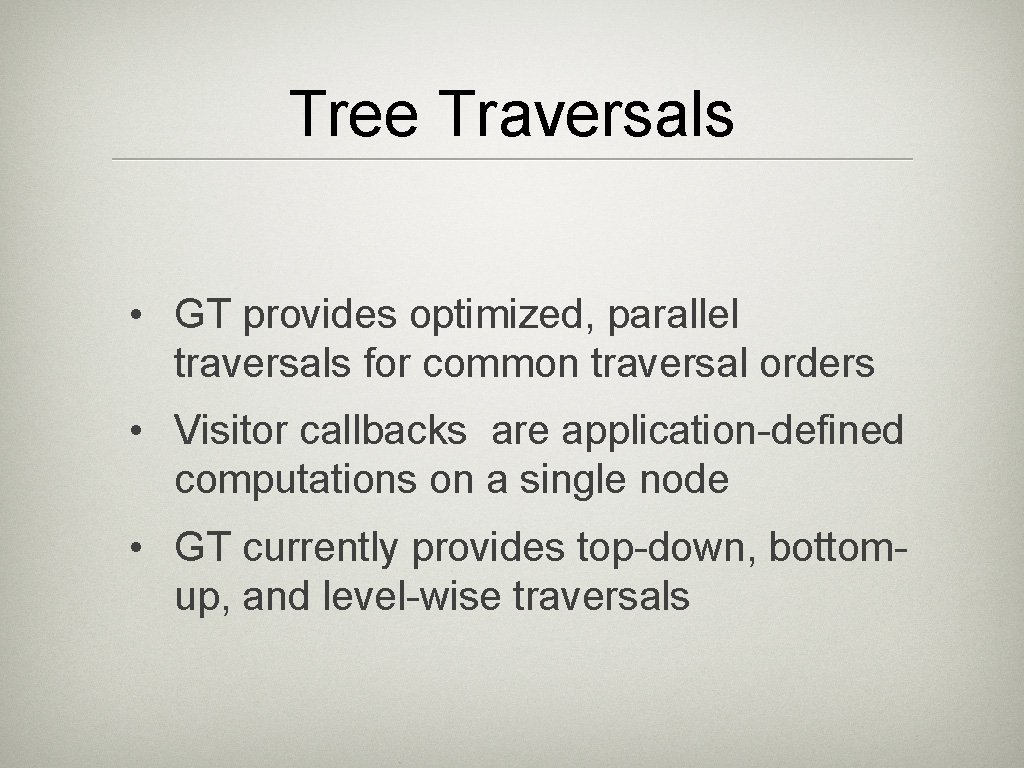

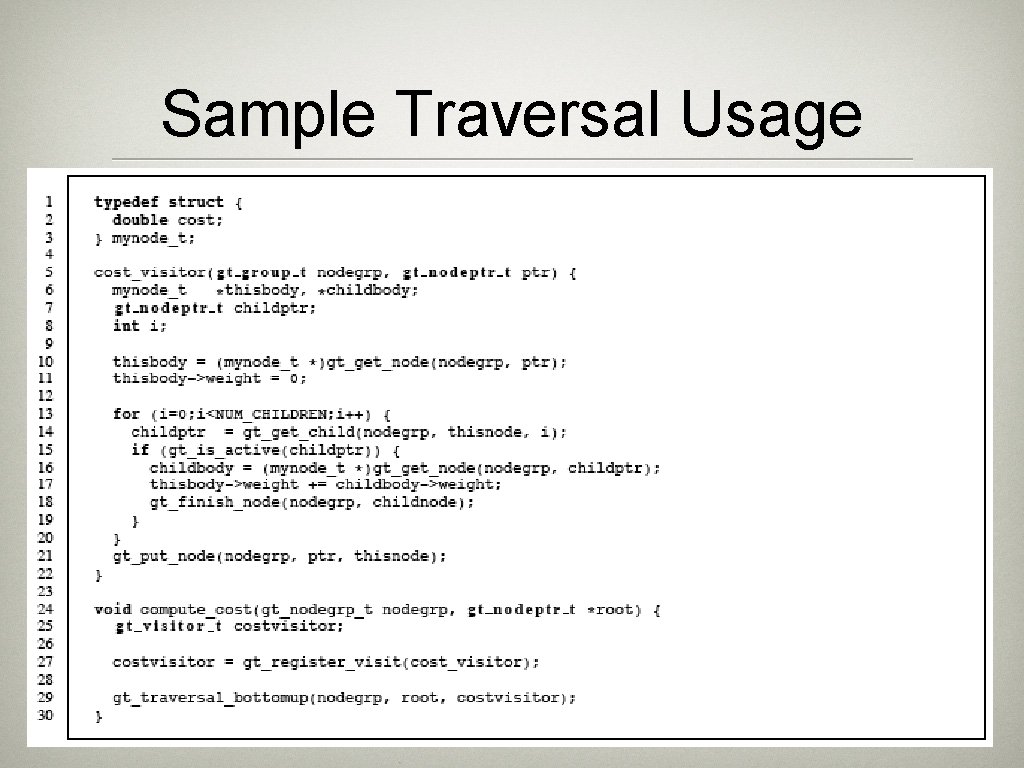

Tree Traversals • GT provides optimized, parallel traversals for common traversal orders • Visitor callbacks are application-defined computations on a single node • GT currently provides top-down, bottomup, and level-wise traversals

Sample Traversal Usage

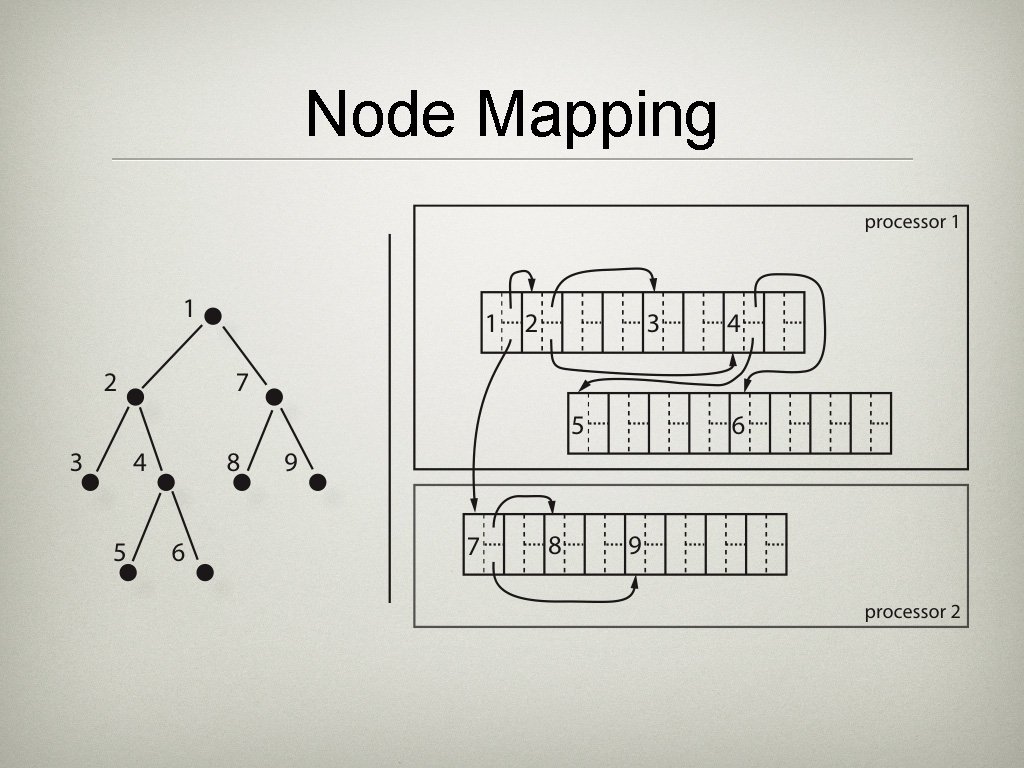

Node Mapping

Custom Allocation • No single mapping of data elements to chunks will be optimal • GT/GCL supports custom allocators to improve spatial locality • Allocators can use a hint from call-site and can keep state between calls • Default allocation is local-open

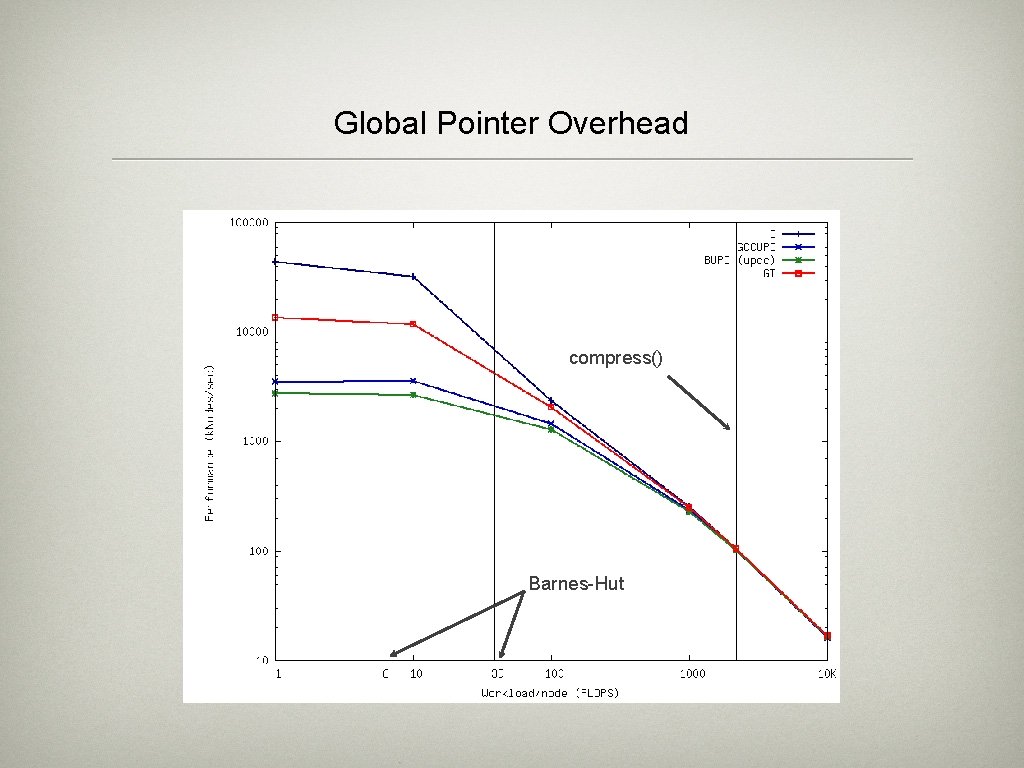

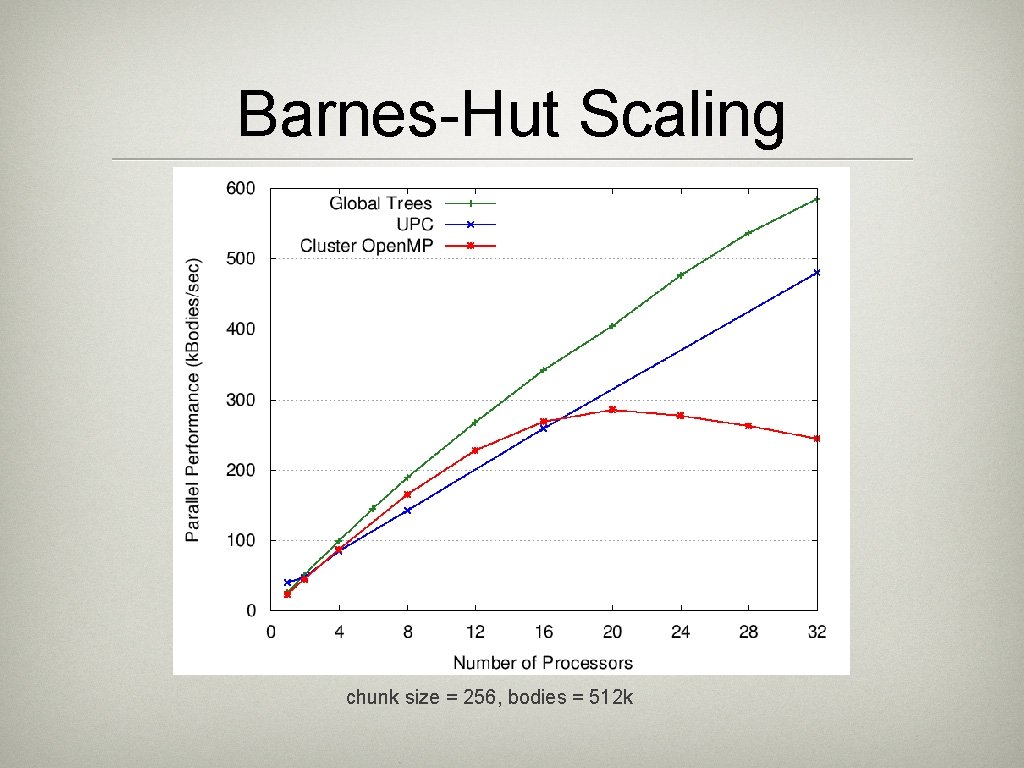

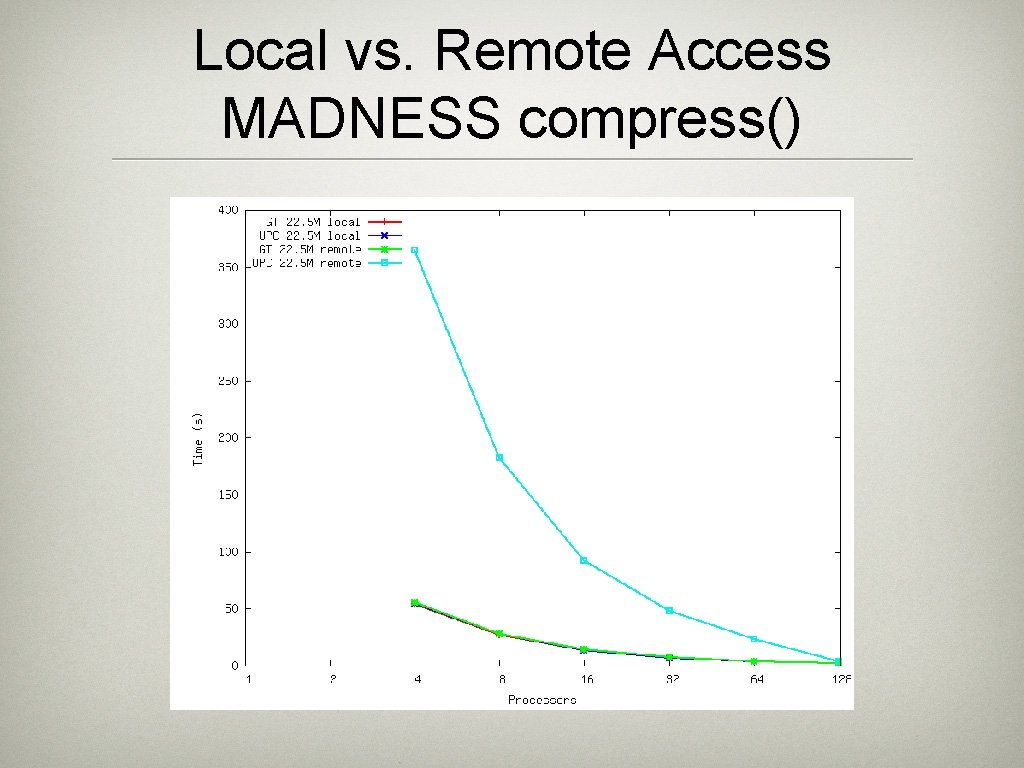

Experimental Results • Evaluate using: • Barnes-Hut from SPLASH-2 • Compression operation from MADNESS • GT compared with: • Intel’s Cluster Open. MP and Tread. Marks runtime • UPC

Global Pointer Overhead compress() Barnes-Hut

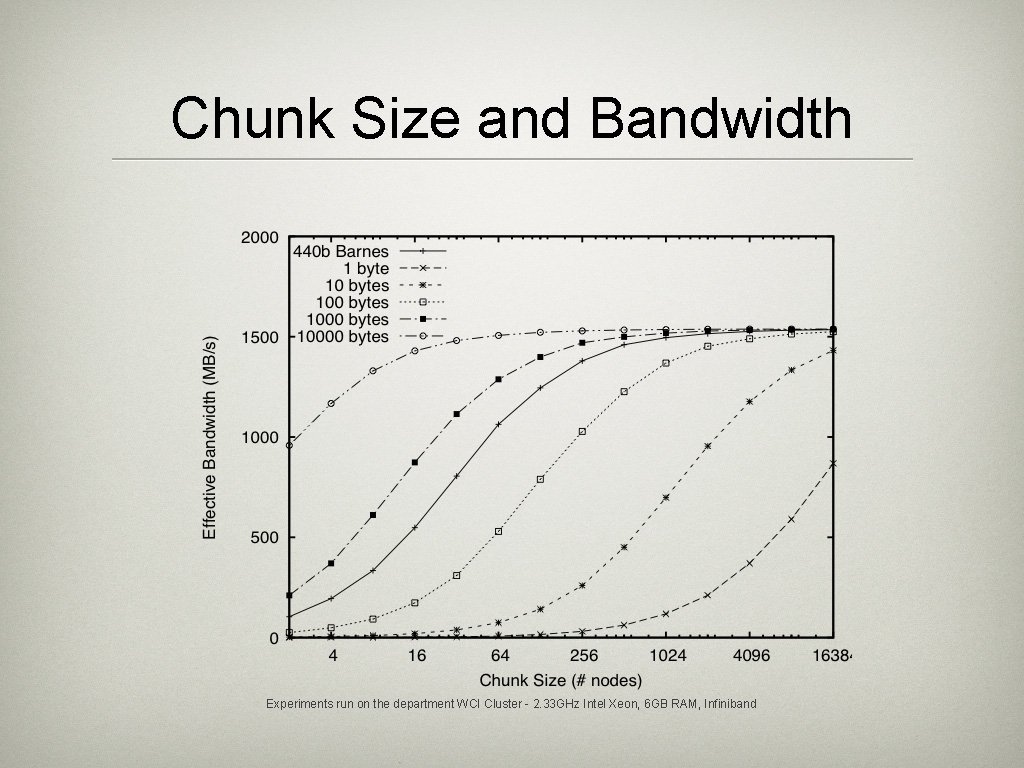

Chunk Size and Bandwidth Experiments run on the department WCI Cluster - 2. 33 GHz Intel Xeon, 6 GB RAM, Infiniband

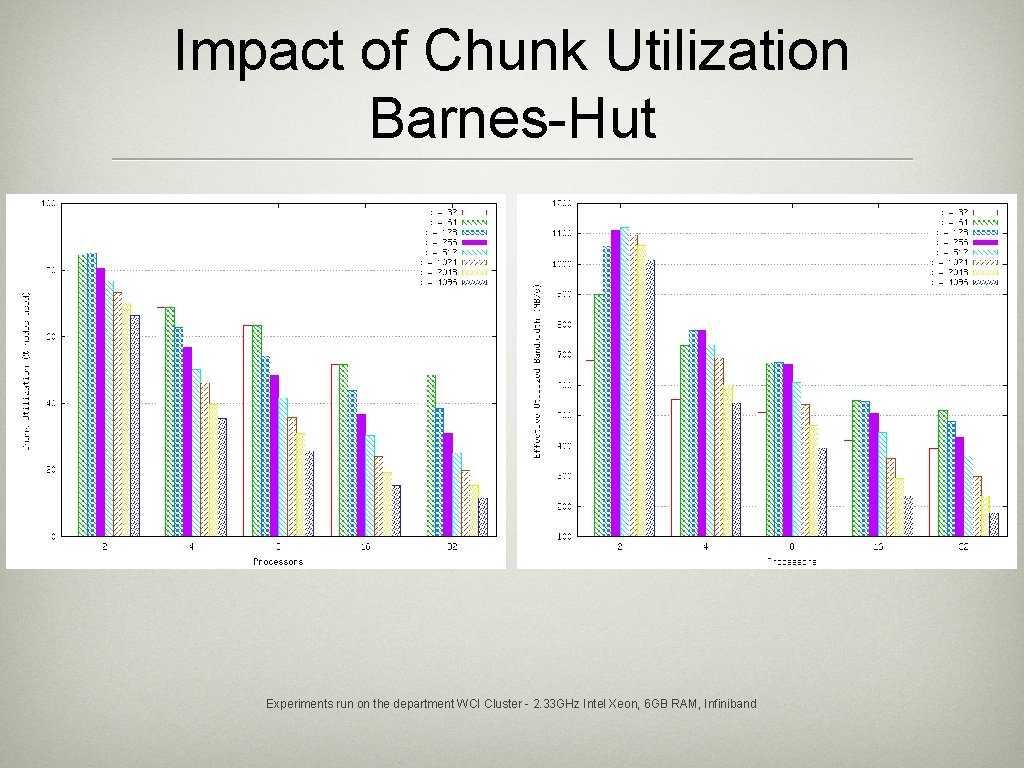

Impact of Chunk Utilization Barnes-Hut Experiments run on the department WCI Cluster - 2. 33 GHz Intel Xeon, 6 GB RAM, Infiniband

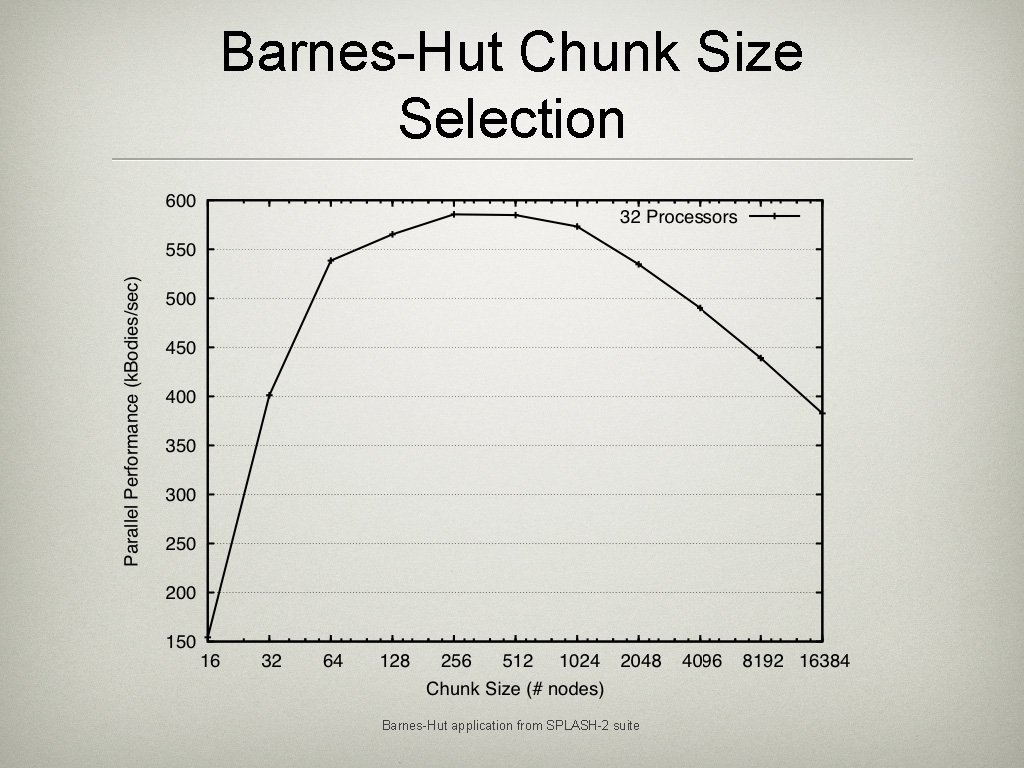

Barnes-Hut Chunk Size Selection Barnes-Hut application from SPLASH-2 suite

Barnes-Hut Scaling chunk size = 256, bodies = 512 k

Local vs. Remote Access MADNESS compress()

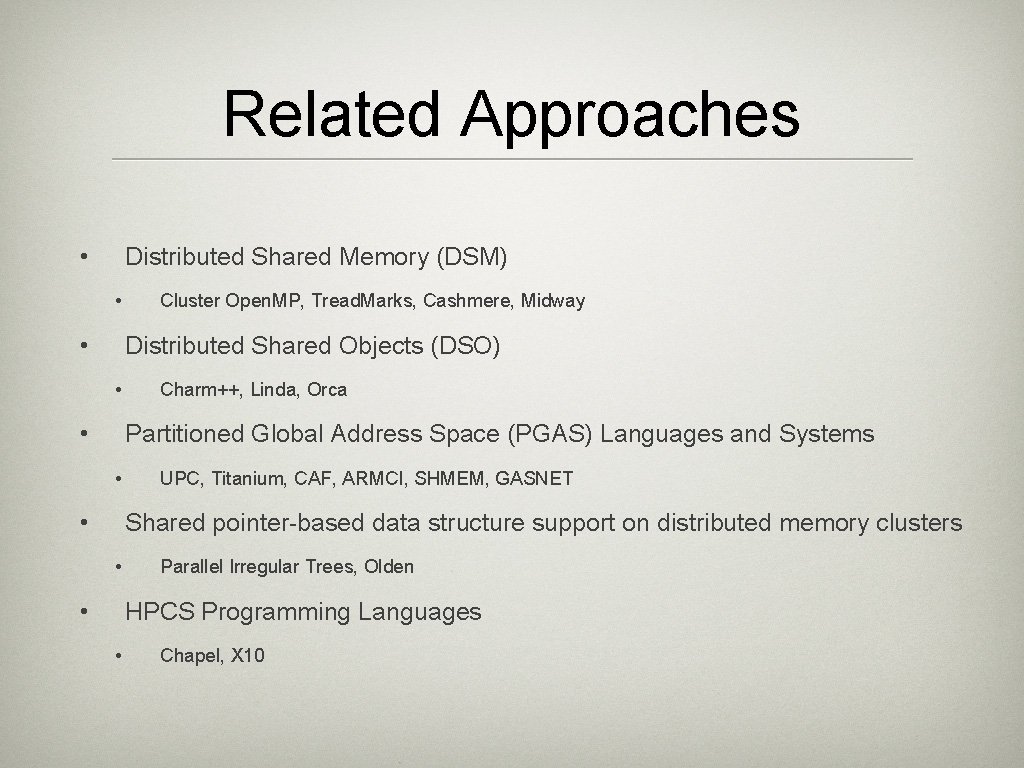

Related Approaches • Distributed Shared Memory (DSM) • • Cluster Open. MP, Tread. Marks, Cashmere, Midway Distributed Shared Objects (DSO) • • Charm++, Linda, Orca Partitioned Global Address Space (PGAS) Languages and Systems • • UPC, Titanium, CAF, ARMCI, SHMEM, GASNET Shared pointer-based data structure support on distributed memory clusters • • Parallel Irregular Trees, Olden HPCS Programming Languages • Chapel, X 10

Future Work • Global Graphs • GT data reference locality tools • More applications

Questions email: larkins@cse. ohio-state. edu

- Slides: 26