Genetic algorithms for neural networks: An introduction

- Slides: 30


Genetic algorithms

- Why use genetic algorithms?
  - “What aren’t genetic algorithms?”
- What are genetic algorithms?
- What to avoid
- Using genetic algorithms with neural networks

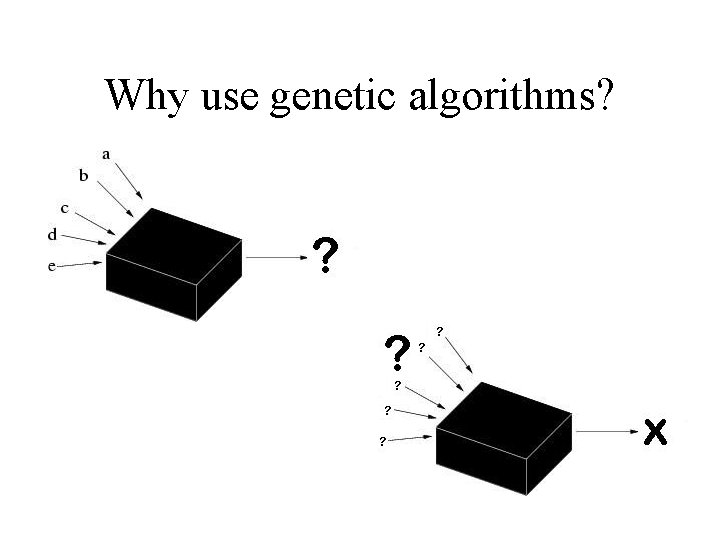

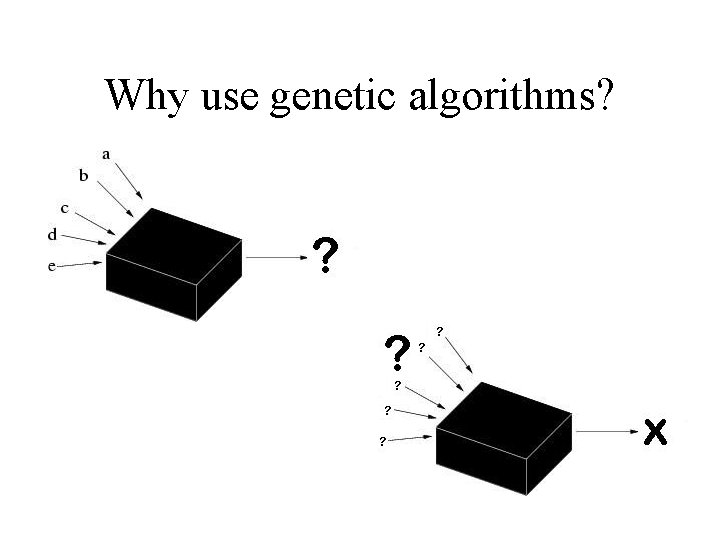

Why use genetic algorithms?

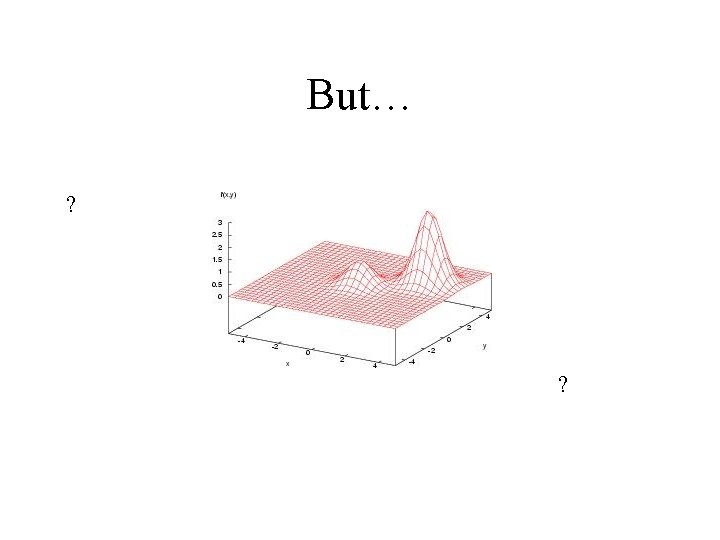

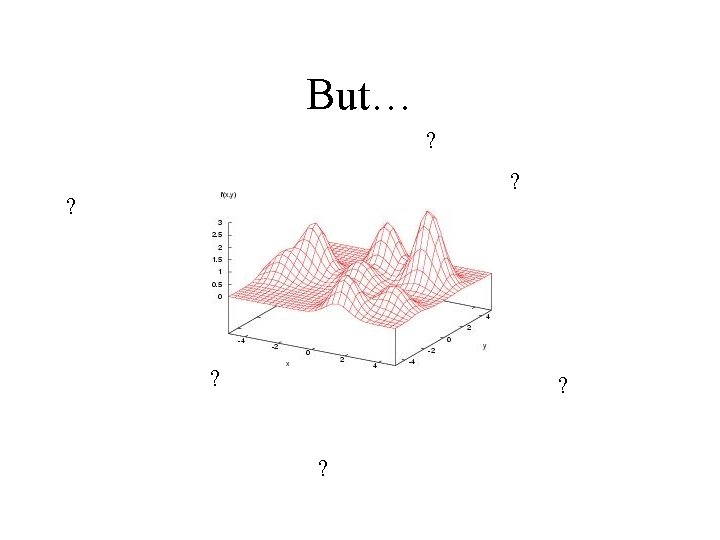

What aren’t genetic algorithms?

- Hill-climbing algorithms
- Enumerative algorithms
- Random searches
  - Guesses
  - Ask an expert
- Educated guesses

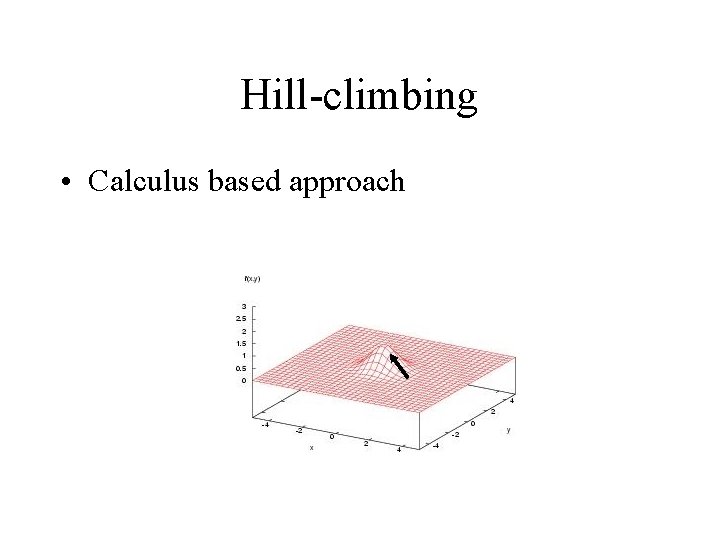

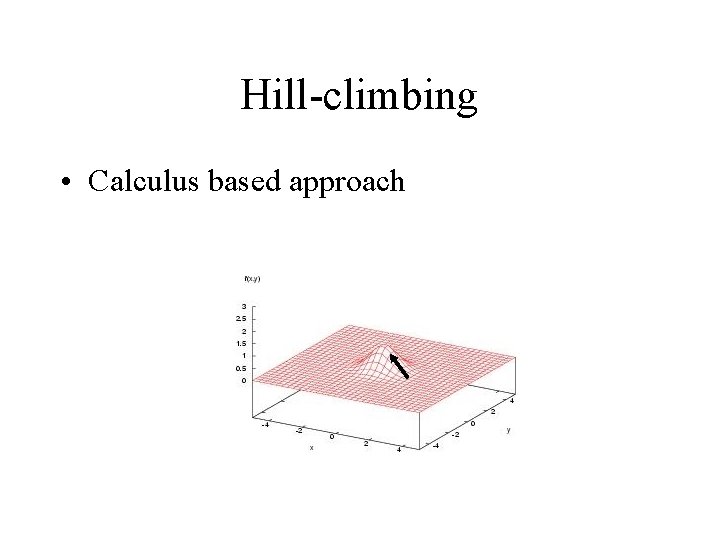

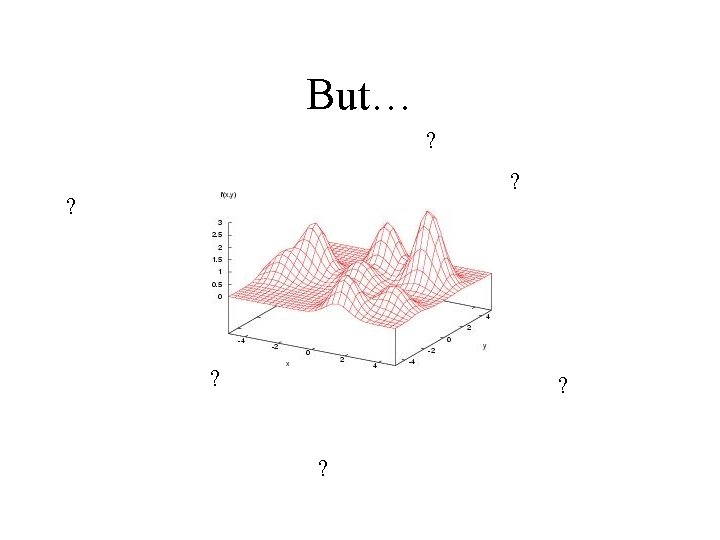

Hill-climbing

- Calculus-based approach

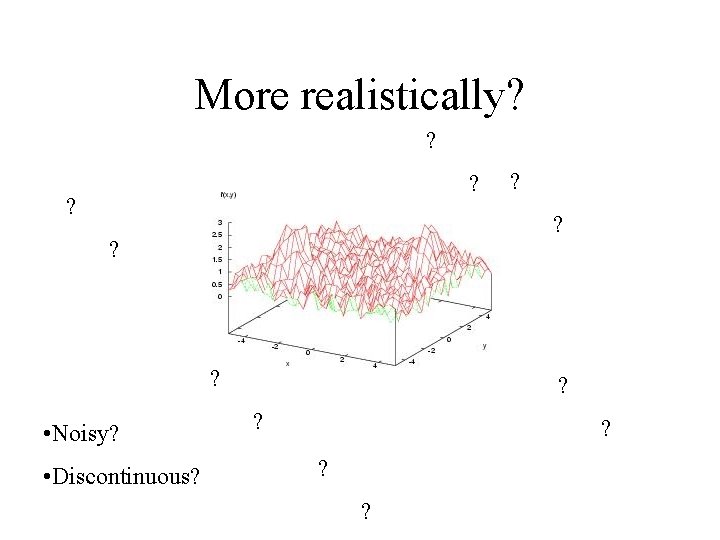

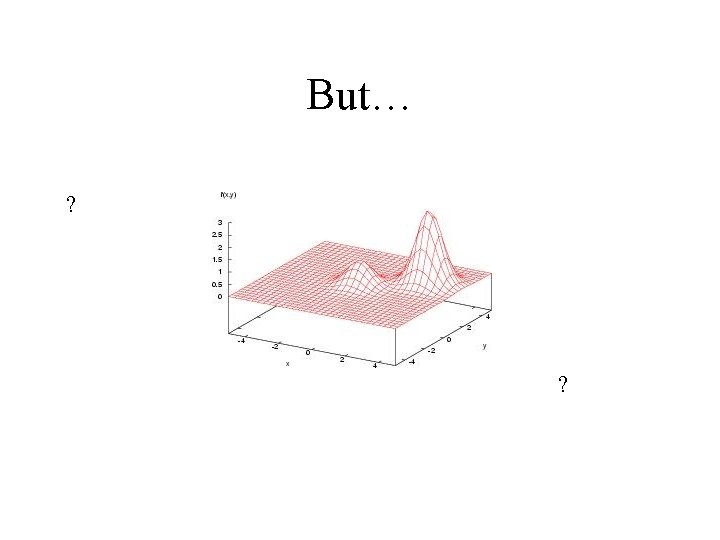

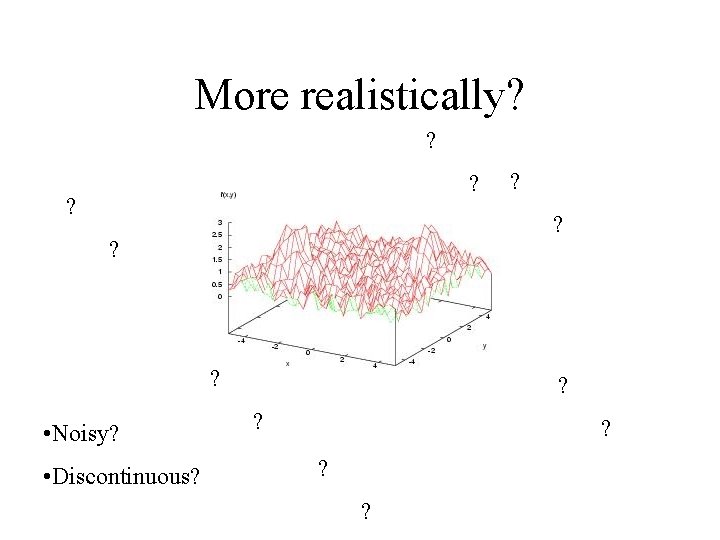

More realistically?

- Noisy?
- Discontinuous?

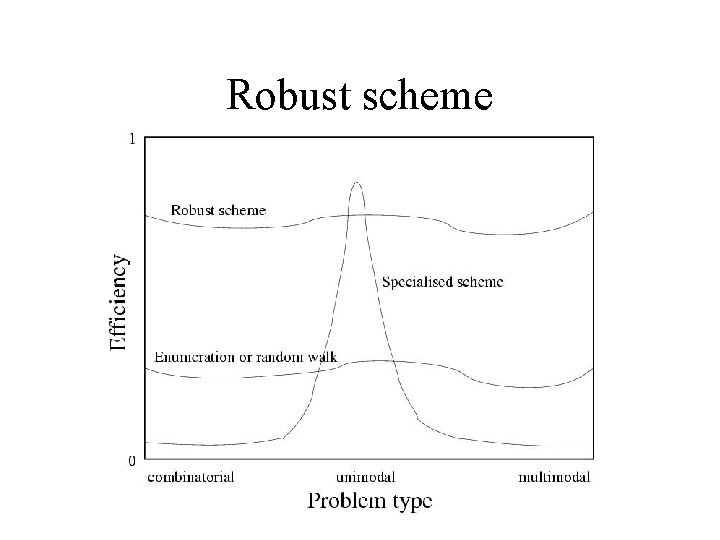

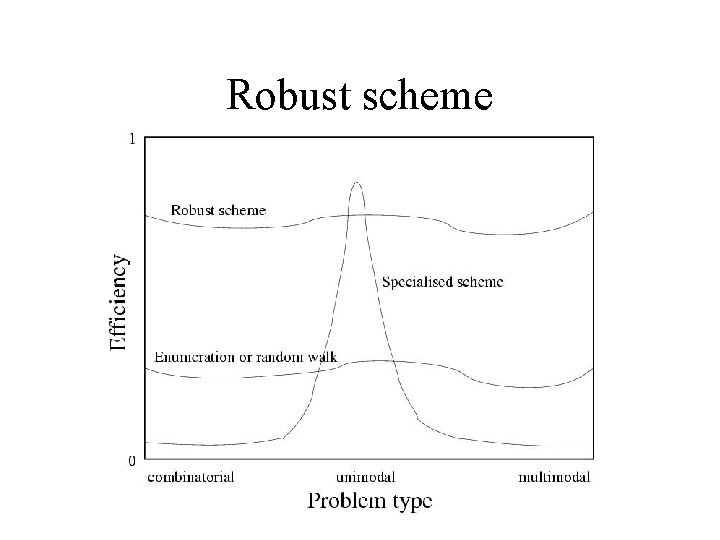

Robust scheme

Genetic algorithms

- Cope with non-linear functions
- Cope with large numbers of variables efficiently
- Cope with modelling uncertainties
- Do not require knowledge of the function

What are genetic algorithms?

Figure labels: Chromosome, Population

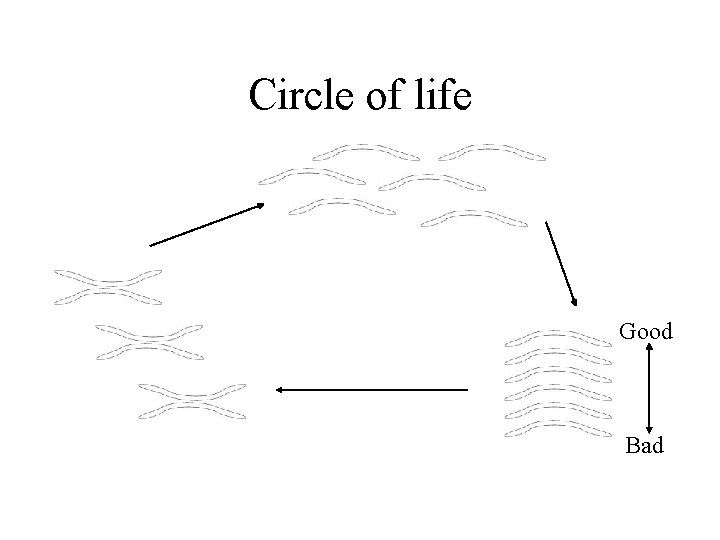

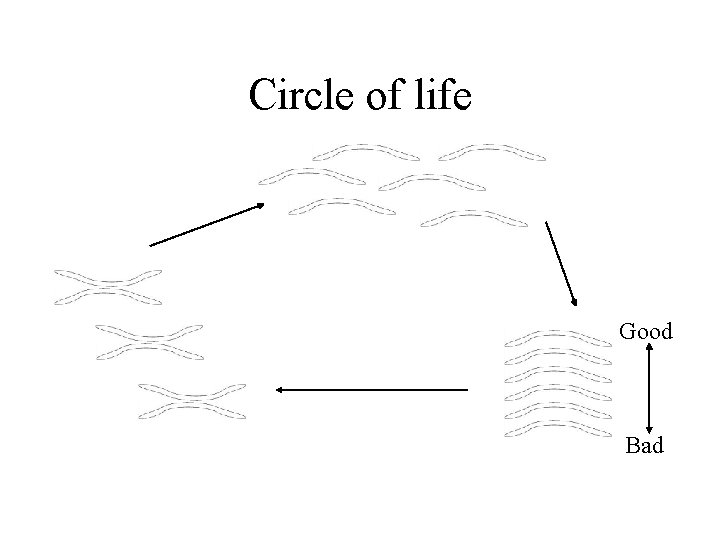

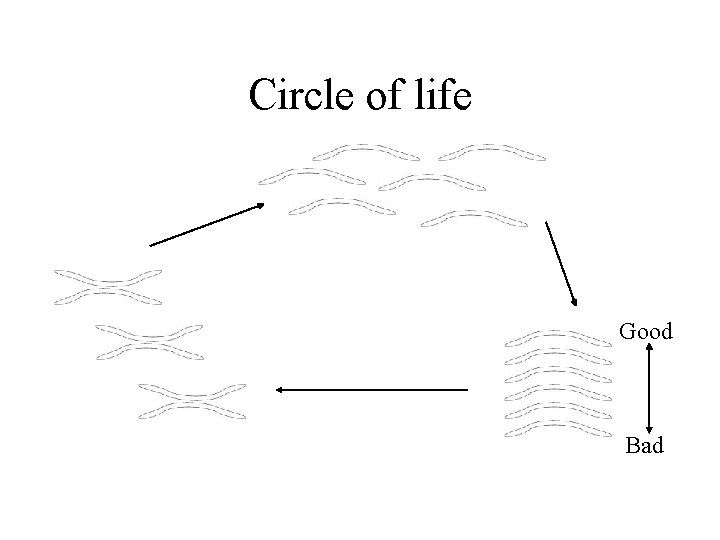

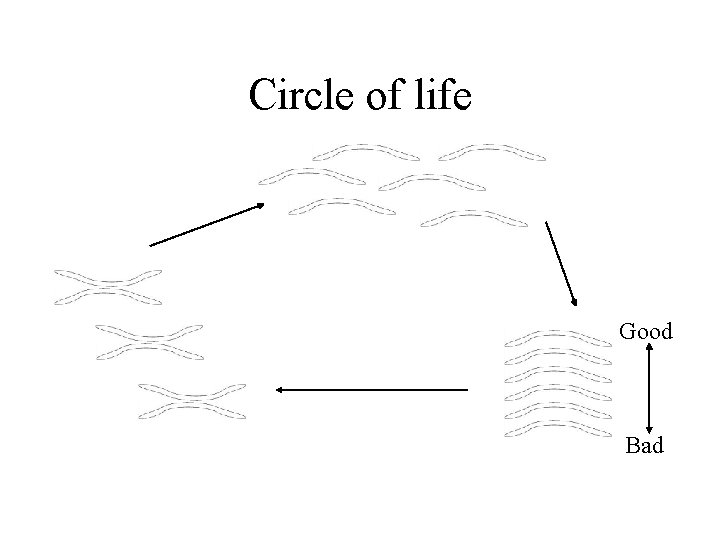

Circle of life (figure labels: Good, Bad)

Chromosome

- A collection of genes: $[x_{i1}, x_{i2}, x_{i3}, x_{i4}, \dots]$

Fitness

- Chromosomes are ranked by a fitness factor
- The likelihood of breeding is proportional to fitness
- The action of the algorithm is to maximise fitness

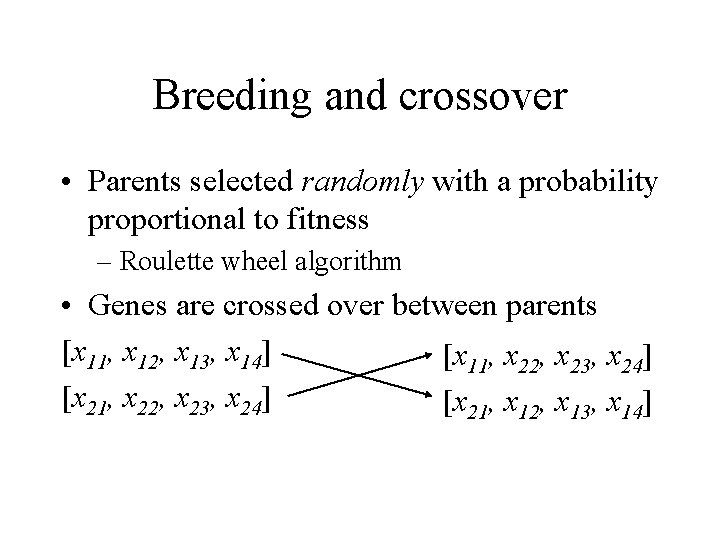

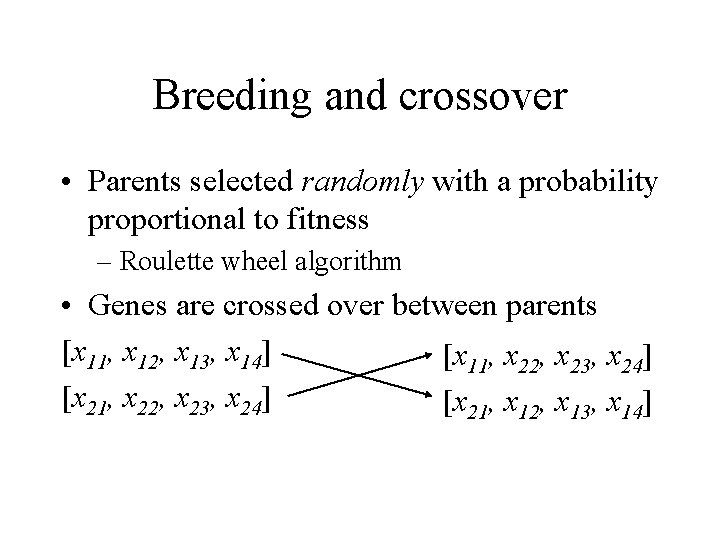

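Breeding and crossover

- Parents are selected randomly, with a probability proportional to fitness
  - Roulette wheel algorithm
- Genes are crossed over between parents, e.g. parent $[x_{11}, x_{12}, x_{13}, x_{14}]$ bred with a second parent carrying genes $x_{2j}$ gives the children $[x_{11}, x_{22}, x_{23}, x_{24}]$ and $[x_{21}, x_{12}, x_{13}, x_{14}]$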
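Roulette wheel selection and the single-point crossover shown on this slide can be sketched in a few lines of Python. This is a minimal illustration rather than the presenter's code: the function names, the `point` argument and the assumption that all fitness values are non-negative are choices made here.

```python
import random

def roulette_select(population, fitnesses, rng=random):
    """Pick one chromosome with probability proportional to its fitness.

    Assumes non-negative fitness values (an assumption of this sketch).
    """
    total = sum(fitnesses)
    spin = rng.uniform(0.0, total)       # where the wheel stops
    running = 0.0
    for chromosome, fitness in zip(population, fitnesses):
        running += fitness               # each slot is as wide as its fitness
        if spin < running:
            return chromosome
    return population[-1]                # guard against rounding at the edge

def crossover(parent_a, parent_b, point):
    """Swap every gene after position `point` between the two parents."""
    child_a = parent_a[:point] + parent_b[point:]
    child_b = parent_b[:point] + parent_a[point:]
    return child_a, child_b
```

Calling `crossover` with `point=1` on two four-gene parents reproduces the pattern on the slide: each child keeps its first gene and takes the other three from the other parent.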

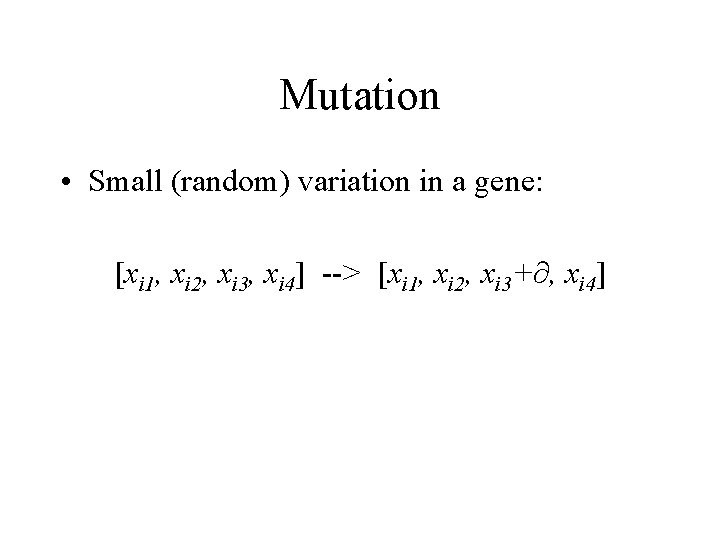

Mutation

- Small (random) variation in a gene: $[x_{i1}, x_{i2}, x_{i3}, x_{i4}] \rightarrow [x_{i1}, x_{i2}, x_{i3} + \delta, x_{i4}]$
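As an illustration of mutation, the sketch below shifts one randomly chosen gene by a small random amount. Drawing the shift from a Gaussian and the `scale` parameter are assumptions of this example; the slide only says the variation is small and random.

```python
import random

def mutate(chromosome, scale=0.1, rng=random):
    """Return a copy of the chromosome with one gene shifted by a small delta."""
    mutated = list(chromosome)             # leave the original untouched
    index = rng.randrange(len(mutated))    # which gene to perturb
    mutated[index] += rng.gauss(0.0, scale)  # delta drawn from N(0, scale^2), an assumed choice
    return mutated
```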

Circle of life (figure labels: Good, Bad)

Genetic algorithms

- Work on populations, not single points
- Use an objective function (fitness) only, rather than derivatives or other information
- Use probabilistic rules rather than deterministic rules
- Operate on an encoded set of values (a chromosome) rather than the values themselves

Potential problems

- GA deceptive functions
- Premature and postmature convergence
- Excessive mutation
- Application to constrained problems
  - Neural networks, particularly
- The meaning of fitness

Deceptive functions and premature convergence

- A function which selects for one gene when a combination would be better
- Can eliminate “better” genes
- Avoided by
  - Elitism
  - Multiple populations
  - Mutation (which reintroduces genes)
  - Fitness scaling

Postmature convergence

- When all of a population performs well, selection pressures wane
- Avoided by fitness scaling (be careful!)
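The slides do not say which fitness scaling scheme is meant. One common option is linear scaling, which stretches the fitness values so that the best chromosome gets a fixed multiple of the average. The sketch below assumes that scheme; `pressure` is a hypothetical parameter name.

```python
def scale_fitness(fitnesses, pressure=2.0):
    """Linearly rescale fitness so that max = pressure * average.

    The average is preserved unless values are clamped at zero.
    """
    avg = sum(fitnesses) / len(fitnesses)
    best = max(fitnesses)
    if best == avg:                       # all equal: nothing to stretch
        return list(fitnesses)
    a = (pressure - 1.0) * avg / (best - avg)
    b = avg * (best - pressure * avg) / (best - avg)
    # Clamp at zero: negative values would break roulette wheel selection.
    return [max(0.0, a * f + b) for f in fitnesses]
```

For a population with nearly identical fitness values, such as 0.90, 0.91 and 0.92, this restores a clear difference in breeding probability. The clamping step shows why care is needed: weak chromosomes can end up with no chance of breeding at all, which pushes the population back towards premature convergence.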

Excessive mutation

- Too little mutation = loss of genes
- Too much mutation = random walk

Application to constrained problems

- Neural networks and genetic algorithms are by nature unconstrained, i.e. they can take any value
- Must avoid unphysical values
- Restrict mutation
- Punish through fitness function
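The two remedies named here, restricting mutation and punishing through the fitness function, might look like the following. The `bounds` list of `(low, high)` pairs and the multiplicative `penalty` are assumptions made for this sketch, not details given in the slides.

```python
def clip_to_bounds(chromosome, bounds):
    """Restrict mutation: pull every gene back inside its physical range."""
    return [min(max(gene, low), high)
            for gene, (low, high) in zip(chromosome, bounds)]

def penalised_fitness(raw_fitness, chromosome, bounds, penalty=0.5):
    """Punish through fitness: scale fitness down once per out-of-range gene."""
    violations = sum(1 for gene, (low, high) in zip(chromosome, bounds)
                     if not low <= gene <= high)
    return raw_fitness * penalty ** violations
```

Clipping guarantees that no unphysical chromosome is ever evaluated. The penalty lets such chromosomes exist but makes them unlikely to breed.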

The meaning of fitness

- Genetic algorithms maximise fitness
- Therefore fitness must be carefully defined
- What are you actually trying to do?

When to stop?

- How long do we run the algorithm for?
  - Until we find a solution
  - Until a fixed number of generations has been produced
  - Until there is no further improvement
  - Until we run out of time or money…?
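The first three stopping rules can be combined into a single check, sketched below. The argument names and the `patience` window used to detect "no further improvement" are hypothetical. A time or money budget would be one more condition of the same kind.

```python
def should_stop(best_history, target_fitness, max_generations, patience):
    """Decide whether to stop, given the best fitness of each generation so far."""
    if not best_history:
        return False
    if best_history[-1] >= target_fitness:        # a solution has been found
        return True
    if len(best_history) >= max_generations:      # generation budget used up
        return True
    if len(best_history) > patience:
        recent = max(best_history[-patience:])
        earlier = max(best_history[:-patience])
        if recent <= earlier:                     # no improvement in the window
            return True
    return False
```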

Genetic algorithms for Bayesian neural networks

- Generally want to find an optimised input set for a particular defined output

Define “fitness”

- Need a function that includes target and uncertainty:
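The formula from this slide is not reproduced in the text, so the function below is only a hypothetical stand-in, not the presenter's definition. It illustrates the stated requirement: fitness should rise as the prediction approaches the target and as the network's uncertainty (error bar) shrinks.

```python
def fitness(prediction, uncertainty, target):
    """Hypothetical fitness: 1.0 for an exact, certain hit, falling towards 0.

    The "1.0 +" in the denominator is an assumed choice that avoids
    division by zero when the prediction is exact and certain.
    """
    return 1.0 / (1.0 + abs(prediction - target) + uncertainty)
```

With this form, a prediction that hits the target but carries a large error bar scores lower than an equally accurate prediction with a small one.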

Define the chromosome

- Set of inputs to the network, except:
  - Derived inputs must be removed
    - e.g. if you have both t and ln(t), or T and exp(-1/T), only one can be included
  - Prevents unphysical input sets being found
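To make the point about derived inputs concrete, the sketch below stores only t and T in the chromosome and computes ln(t) and exp(-1/T) when the network inputs are needed. The input ordering is invented for illustration, and both genes are assumed to be positive.

```python
import math

def decode(chromosome):
    """Expand the independent genes (t, T) into the full network input vector.

    Assumes t > 0 and T > 0; the input order is hypothetical.
    """
    t, T = chromosome
    return [t, math.log(t), T, math.exp(-1.0 / T)]
```

Because ln(t) is always computed from t, the algorithm can never propose an input set in which the two disagree.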

Create the populations

- Chromosomes are randomly generated
  - (avoid non-physical values)
  - Population size must be considered
  - 20 is a good start
- Best to use more than one population
  - Trade-off between coverage and time
  - Three is good
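A minimal sketch of this step follows, using the suggested defaults of three populations of 20 chromosomes. Drawing each gene uniformly from a `(low, high)` range is an assumed way of avoiding non-physical values; the `bounds` argument is hypothetical.

```python
import random

def random_chromosome(bounds, rng=random):
    """Draw each gene uniformly from its allowed (physical) range."""
    return [rng.uniform(low, high) for low, high in bounds]

def create_populations(bounds, n_populations=3, size=20, rng=random):
    """Build several independent populations of random chromosomes."""
    return [[random_chromosome(bounds, rng) for _ in range(size)]
            for _ in range(n_populations)]
```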

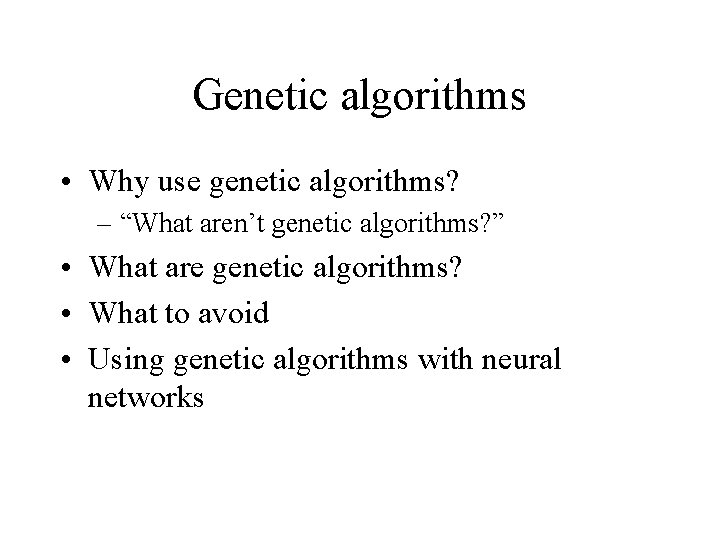

Run the algorithm!

1. Decode chromosomes to NN inputs (i.e. calculate any other inputs)
2. Make predictions for each chromosome
   1. If the target is met or enough generations have happened, stop
3. Calculate fitness for each chromosome
4. Preserve the best chromosome (elitism)
5. Breed 18 new chromosomes by crossbreeding
6. Mutate one (non-elite) gene at random
7. Create new chromosome at random
8. Go back to 1
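The numbered steps can be put together as a single loop for one population. This is a sketch under several assumptions, not the presenter's implementation: `predict`, `fitness` and `decode` are hypothetical callables supplied by the user, `predict` returns a `(prediction, uncertainty)` pair, fitness values are positive, there are at least two genes, and the target is expressed as a fitness threshold.

```python
import random

def run_ga(predict, fitness, decode, bounds, size=20,
           max_generations=50, target_fitness=None, rng=random):
    """Evolve one population; returns the best chromosome and its fitness."""
    def new_chromosome():
        return [rng.uniform(low, high) for low, high in bounds]

    population = [new_chromosome() for _ in range(size)]
    best, best_fit = None, float("-inf")
    for _ in range(max_generations):          # "enough generations" limit
        # Steps 1-3: decode, predict, and score every chromosome.
        fits = [fitness(*predict(decode(c))) for c in population]
        elite = max(range(size), key=fits.__getitem__)
        if fits[elite] > best_fit:
            best, best_fit = list(population[elite]), fits[elite]
        # Step 2.1: stop early once the target is met.
        if target_fitness is not None and best_fit >= target_fitness:
            break
        # Step 4: elitism, the best chromosome survives unchanged.
        next_population = [list(population[elite])]
        # Step 5: breed size - 2 children (18 when size is 20).
        while len(next_population) < size - 1:
            a, b = rng.choices(population, weights=fits, k=2)
            point = rng.randrange(1, len(bounds))
            next_population.append(a[:point] + b[point:])
        # Step 6: mutate one gene of one non-elite chromosome, kept in bounds.
        victim = rng.randrange(1, len(next_population))
        gene = rng.randrange(len(bounds))
        low, high = bounds[gene]
        shifted = next_population[victim][gene] + rng.gauss(0.0, 0.1 * (high - low))
        next_population[victim][gene] = min(max(shifted, low), high)
        # Step 7: add one fresh random chromosome; step 8: loop again.
        next_population.append(new_chromosome())
        population = next_population
    return best, best_fit
```

With the default size of 20, each generation holds one elite, 18 bred children and one random newcomer. To follow the advice about multiple populations, the function could simply be called several times and the best result kept.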