Generation of Referring Expressions: the State of the Art

- Slides: 17

Generation of Referring Expressions: the State of the Art
HIT Summer School, Harbin 2010
Kees van Deemter, Computing Science, University of Aberdeen

Introductory remarks about the course

I am Kees van Deemter…
- Reader in Computing Science, University of Aberdeen (2004-now)
- Principal Research Fellow, ITRI, University of Brighton (1997-2004)
- Research Scientist, Philips Electronics/IPO (1984-1997)
- PhD, University of Amsterdam, 1991
- Research interests:
  - Formal semantics of natural language (ambiguity, vagueness)
  - Generation of text
  - Multimodality (speech, graphics)

This course
- An exploration of referring expressions from the perspective of Natural Language Generation (NLG)
  - Generation of Referring Expressions (GRE)
- The key question: how can we find the “best” referring expression in a given situation?
- The ideal answer to this question is an algorithm (i.e., a recipe for cooking up the best referring expression)

Some simple examples
- Assume that nothing has ever been said
- Your task is to refer to an object...

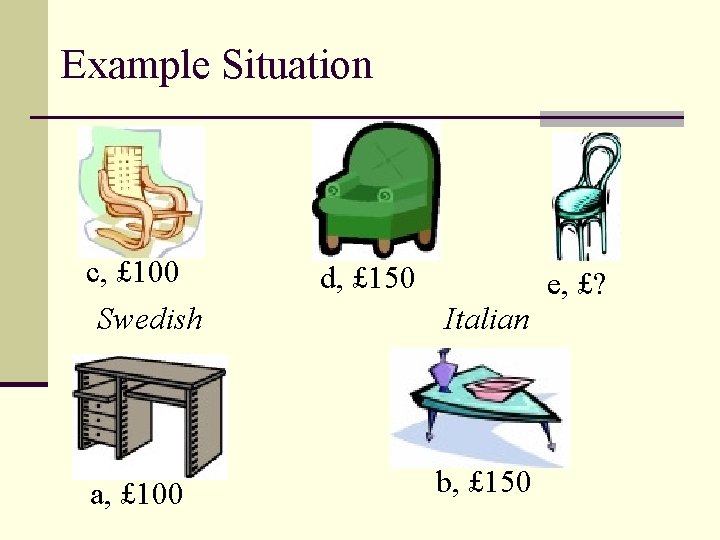

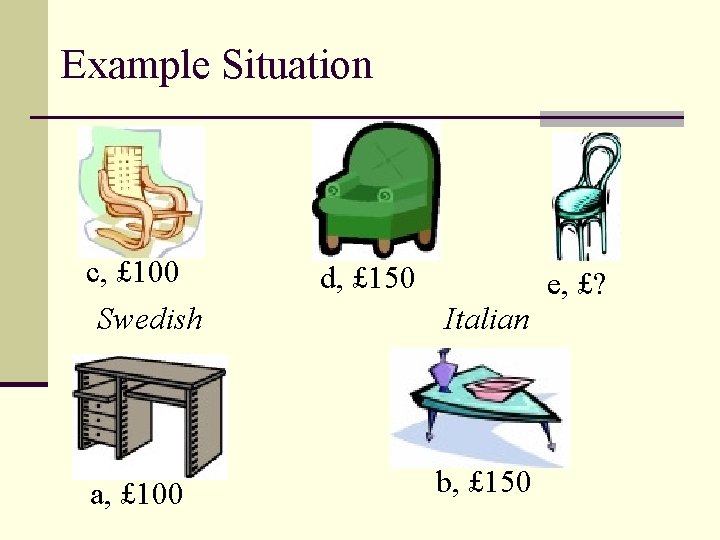

Example situation (figure): Swedish items a and c, both £100; Italian items b and d, both £150, and Italian item e, price unknown (£?).

Formalised
- Type: furniture (abcde), desk (ab), chair (cde)
- Origin: Sweden (ac), Italy (bde)
- Colours: dark (ade), light (bc), brown (a)
- Price (£): 100 (ac), 150 (bd), 250 ({})
- Contains: wood ({}), metal (abcde), cotton (d)

Assumption: all this is mutual knowledge.
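Thinking in terms of sets, the formalised situation can be written down directly. The Python sketch below is illustrative only: `PROPERTIES`, `extension` and `distinguishes` are hypothetical names, and it assumes a description is simply a collection of attribute-value pairs. The objects a description is true of are then the intersection of the sets for its properties.

```python
# Hypothetical encoding of the example situation: each (attribute, value)
# pair maps to the set of objects that have that property.
DOMAIN = frozenset("abcde")

PROPERTIES = {
    ("type", "furniture"): frozenset("abcde"),
    ("type", "desk"): frozenset("ab"),
    ("type", "chair"): frozenset("cde"),
    ("origin", "Sweden"): frozenset("ac"),
    ("origin", "Italy"): frozenset("bde"),
    ("colour", "dark"): frozenset("ade"),
    ("colour", "light"): frozenset("bc"),
    ("colour", "brown"): frozenset("a"),
    ("price", 100): frozenset("ac"),
    ("price", 150): frozenset("bd"),
    ("price", 250): frozenset(),
    ("contains", "wood"): frozenset(),
    ("contains", "metal"): frozenset("abcde"),
    ("contains", "cotton"): frozenset("d"),
}


def extension(description):
    """Return the objects that have every property in `description`.

    Starts from the whole domain and intersects it with the set of
    each property in turn.
    """
    result = DOMAIN
    for prop in description:
        result &= PROPERTIES[prop]
    return result


def distinguishes(description, target):
    """True if the description is true of `target` and of nothing else."""
    return extension(description) == {target}


print(sorted(extension([("type", "desk"), ("origin", "Sweden")])))  # ['a']
print(sorted(extension([("type", "chair")])))  # ['c', 'd', 'e']
```

An empty description denotes the whole domain, and each added property can only shrink the set of candidates; that monotonicity is what makes it natural to build descriptions one property at a time.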

Game
1. Describe object a.
2. Describe object d.
3. Describe object e.

Game
1. Describe object a: {desk, Sweden}, {grey}
2. Describe object d: {chair, 150}
3. Describe object e: {chair, neither 100 nor 150}
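Three of these answers can be checked mechanically. The Python sketch below uses hypothetical helper names and assumes a description is a list of positive properties plus an optional list of negated ones, so that "neither 100 nor 150" becomes set difference. It also runs a brute-force search over combinations of positive properties, under the assumption that "best" simply means "fewest properties"; that is one possible criterion, not the course's answer. The search finds no positive description of e at all, because every property of e (furniture, chair, Italy, dark, metal) is also a property of d; this is why the answer for e needs negation.

```python
from itertools import combinations

DOMAIN = frozenset("abcde")

# Hypothetical encoding of the formalised example situation.
PROPERTIES = {
    ("type", "furniture"): frozenset("abcde"),
    ("type", "desk"): frozenset("ab"),
    ("type", "chair"): frozenset("cde"),
    ("origin", "Sweden"): frozenset("ac"),
    ("origin", "Italy"): frozenset("bde"),
    ("colour", "dark"): frozenset("ade"),
    ("colour", "light"): frozenset("bc"),
    ("colour", "brown"): frozenset("a"),
    ("price", 100): frozenset("ac"),
    ("price", 150): frozenset("bd"),
    ("price", 250): frozenset(),
    ("contains", "wood"): frozenset(),
    ("contains", "metal"): frozenset("abcde"),
    ("contains", "cotton"): frozenset("d"),
}


def extension(positive, negative=()):
    """Objects having all `positive` properties and none of the `negative` ones."""
    result = DOMAIN
    for prop in positive:
        result &= PROPERTIES[prop]  # conjunction = intersection
    for prop in negative:
        result -= PROPERTIES[prop]  # negation = set difference
    return result


def shortest_positive_description(target):
    """Brute force: a smallest combination of positive properties that is
    true of `target` alone, or None if no such combination exists."""
    props = list(PROPERTIES)
    for size in range(1, len(props) + 1):
        for combo in combinations(props, size):
            if extension(combo) == {target}:
                return combo
    return None


print(sorted(extension([("type", "desk"), ("origin", "Sweden")])))  # ['a']
print(sorted(extension([("type", "chair"), ("price", 150)])))  # ['d']
print(sorted(extension([("type", "chair")], [("price", 100), ("price", 150)])))  # ['e']

print(shortest_positive_description("d"))  # (('contains', 'cotton'),)
print(shortest_positive_description("e"))  # None
```

The search returns {cotton} for d, which is shorter than the answer given above; whether the shortest description is also the best one is exactly the kind of question this course examines. Exhaustive search is feasible here only because the domain has 14 properties (about 16,000 combinations).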

Questions
- When is it a good idea to add “logically redundant” information to a referring expression?
- How can we determine whether an algorithm is good?
- Reference serves to pick out an object (i.e., to individuate it). What does it mean to offer a useful description of an object?
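One way to make "logically redundant" precise, assuming the set encoding of the example situation: a property in a distinguishing description is redundant if the description still picks out the target once that property is dropped. The Python sketch below (helper names are hypothetical) lists such properties. It covers only the logical side of the first question, not when adding them is a good idea.

```python
DOMAIN = frozenset("abcde")

# Hypothetical encoding of part of the example situation.
PROPERTIES = {
    ("type", "desk"): frozenset("ab"),
    ("type", "chair"): frozenset("cde"),
    ("origin", "Sweden"): frozenset("ac"),
    ("origin", "Italy"): frozenset("bde"),
    ("colour", "brown"): frozenset("a"),
    ("price", 100): frozenset("ac"),
    ("price", 150): frozenset("bd"),
    ("contains", "metal"): frozenset("abcde"),
    ("contains", "cotton"): frozenset("d"),
}


def extension(description):
    """Objects that have every property in `description` (set intersection)."""
    result = DOMAIN
    for prop in description:
        result &= PROPERTIES[prop]
    return result


def redundant_properties(description, target):
    """Properties whose removal (one at a time) leaves `description`
    still true of `target` alone."""
    if extension(description) != {target}:
        raise ValueError("description does not distinguish the target")
    return [
        prop
        for prop in description
        if extension([p for p in description if p != prop]) == {target}
    ]


desk_sweden = [("type", "desk"), ("origin", "Sweden")]
print(redundant_properties(desk_sweden, "a"))  # []
print(redundant_properties(desk_sweden + [("contains", "metal")], "a"))
# [('contains', 'metal')]
```

Because properties are tested one at a time, several can each be redundant without all being removable together: in {desk, Sweden, brown} for a, each of the three is redundant on its own, yet dropping all three leaves an empty description that fits every object.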

Prerequisites
- The most rudimentary understanding of computing will suffice
- You need to be able to think in terms of sets and their associated operations (equivalently: propositions and Boolean operators)
- Caveat: some important issues will not be covered...

Limitations of the course
- Issues concerning algorithmic frameworks (Conceptual Graphs, Description Logic) will be bypassed
- Relational/recursive NPs will not be discussed in depth (Dale and Haddock 1991)
  - “(the pen on (the table in (the corner)))”
- Perhaps the most important omission is how discourse affects reference:
  - anaphora and salience will not play a large role

Another perspective on the course
- 2003-2007: EPSRC project “Towards a Unified Algorithm for the Generation of Referring Expressions” (TUNA)
- This course asks what we have learned from TUNA and its aftermath
- The project tried to go beyond identification of the referent, towards the generation of useful descriptions

Plan of the course
1. GRE and its place in computational linguistics
2. A seminal paper on GRE: Dale & Reiter 1995
3. Testing Dale and Reiter’s claims (TUNA project)
4. Project description

Reading material (see course web page): Krahmer and Van Deemter [submitted], Computational Generation of Referring Expressions: a Survey (particularly sections 1, 2 and 5).

Motivation/assumptions

Why study referring expressions?
- Great practical relevance: even the simplest NLG systems have to do GRE
- GRE is one of the best-understood tasks in NLG
- Links with many areas of Cognitive Science and AI

Time to move on... to a brief overview of NLG