GDC Tutorial 2005 Building MultiPlayer Games Case Study

GDC Tutorial, 2005. Building Multi-Player Games Case Study: The Sims Online Lessons Learned, Larry Mellon

TSO: Overview
- Initial team: little to no MMP experience
  - Engineering estimate: switching from 4-8 player peer-to-peer to MMP client/server would take no additional development time!
- No code / architecture / tool support for:
  - Long-term, continually changing nature of game
  - Non-deterministic execution, dual platform (Win32 / Linux)
- Overall process designed for single-player complexity, small development team
  - Limited nightly builds, minimal daily testing
  - Limited design reviews, limited scalability testing, no “maintainable/extensible” implementation requirement

TSO: Case Study Outline (Lessons Learned)
- Poorly designed SP → MP → MMP transitions
- Scaling
  - Team & code size, data set size
  - Build & distribution
  - Architecture: logical & code
- Visibility: development & operations
- Testability: development, release, load
- Multi-player, non-determinism
- Persistent user data vs. code/content updates
- Patching / new content / custom content

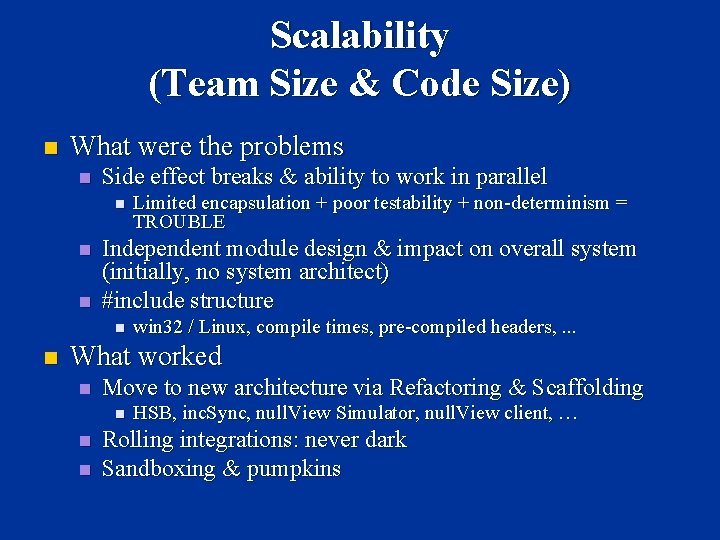

Scalability (Team Size & Code Size)
- What were the problems
  - Side-effect breaks & ability to work in parallel
    - Independent module design & impact on overall system (initially, no system architect)
    - #include structure
    - Limited encapsulation + poor testability + non-determinism = TROUBLE
  - Win32 / Linux, compile times, pre-compiled headers, ...
- What worked
  - Move to new architecture via Refactoring & Scaffolding
    - HSB, incSync, nullView Simulator, nullView client, …
  - Rolling integrations: never dark
  - Sandboxing & pumpkins

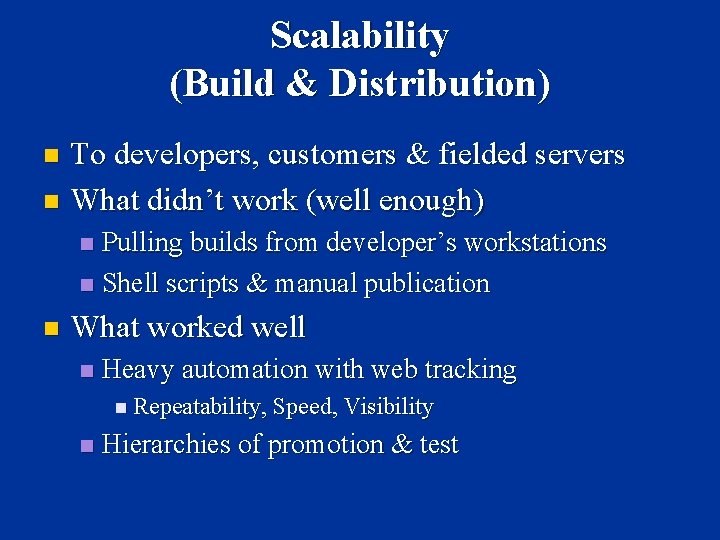

Scalability (Build & Distribution)
- To developers, customers & fielded servers
- What didn’t work (well enough)
  - Pulling builds from developers’ workstations
  - Shell scripts & manual publication
- What worked well
  - Heavy automation with web tracking
    - Repeatability, speed, visibility
  - Hierarchies of promotion & test

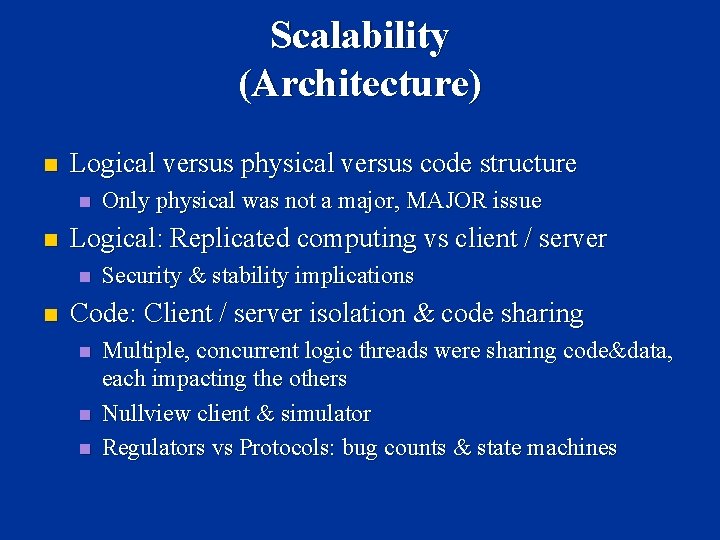

Scalability (Architecture)
- Logical versus physical versus code structure
  - Only physical was not a major, MAJOR issue
- Logical: replicated computing vs. client/server
  - Security & stability implications
- Code: client/server isolation & code sharing
  - Multiple, concurrent logic threads were sharing code & data, each impacting the others
  - nullView client & simulator
  - Regulators vs. Protocols: bug counts & state machines

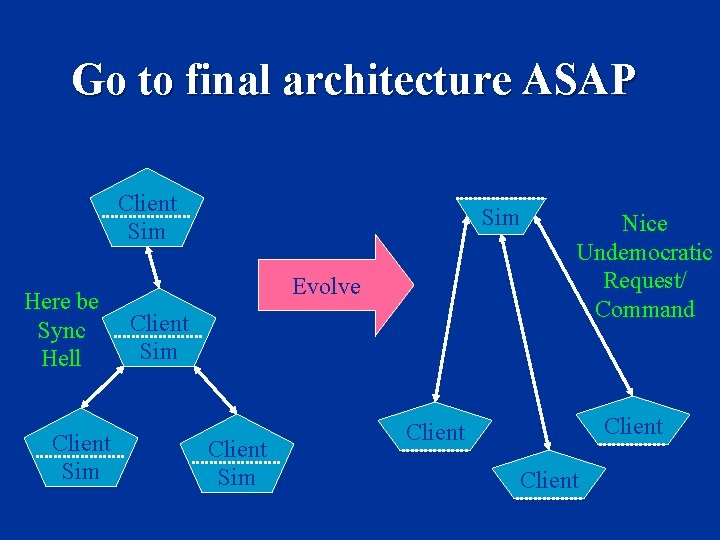

Go to final architecture ASAP
[Diagram: under "Multiplayer", every client runs its own Sim ("Here be Sync Hell"); this evolves to "Client/Server", where clients send requests/commands to a single Sim ("Nice, undemocratic").]

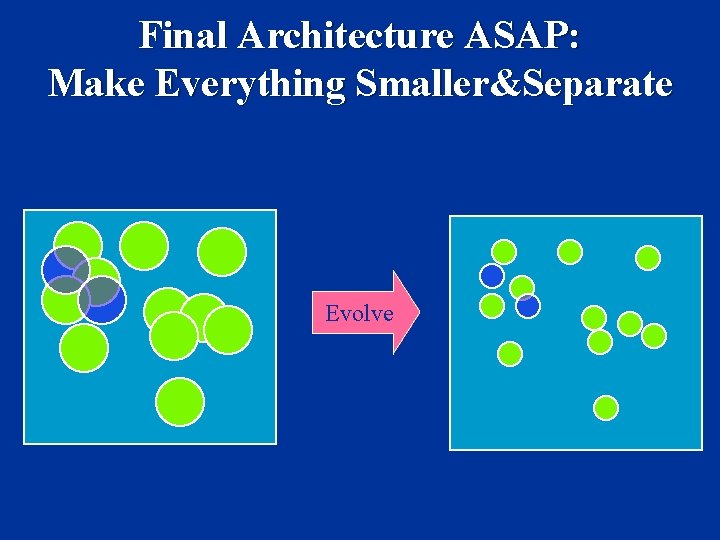

Final Architecture ASAP: Make Everything Smaller & Separate
[Diagram label: Evolve]

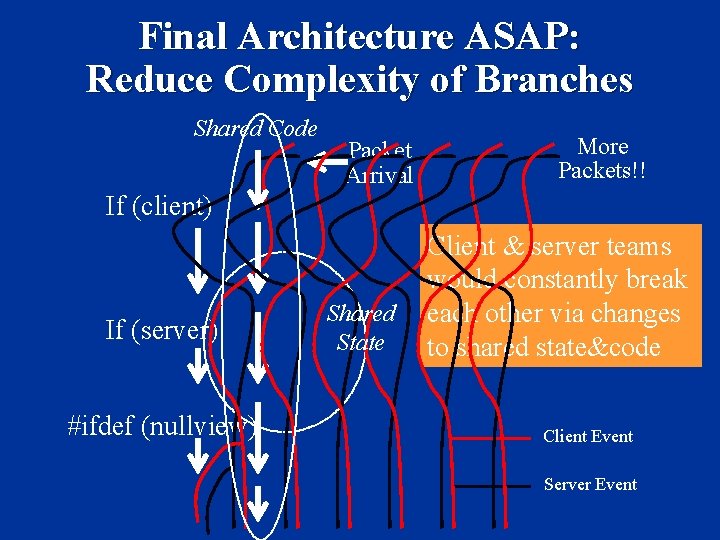

Final Architecture ASAP: Reduce Complexity of Branches
- Client & server teams would constantly break each other via changes to shared state & code
- [Diagram labels: Packet Arrival, More Packets!!, Shared Code, if (client), if (server), #ifdef (nullview), Shared State, Client Event, Server Event]

Final Architecture ASAP: “Refactoring”
- Decomposed into multiple DLLs
- Found the Simulator interfaces
- Reference counting
- Client/server subclassing
- How it helped:
  - Reduced coupling. Even reduced compile times!
  - Developers in different modules broke each other less often.
  - We went everywhere and learned the code base.
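
The slides do not show what client/server subclassing looked like in TSO code. As a rough illustration only, the sketch below keeps the shared packet-dispatch path free of client/server branches and pushes role-specific behavior into subclasses. Every name here (`PacketHandler`, `ClientHandler`, `ServerHandler`, the packet fields) is hypothetical.

```python
from abc import ABC, abstractmethod


class PacketHandler(ABC):
    """Shared dispatch logic; role-specific behavior lives in subclasses."""

    def __init__(self):
        self.log = []

    def on_packet(self, packet: dict) -> None:
        # Shared code path: no `if client` / `if server` branching here.
        self.log.append(packet["type"])
        self.handle(packet)

    @abstractmethod
    def handle(self, packet: dict) -> None:
        ...


class ClientHandler(PacketHandler):
    def __init__(self):
        super().__init__()
        self.view_state = {}

    def handle(self, packet: dict) -> None:
        # The client only mirrors what the server tells it.
        self.view_state[packet["key"]] = packet["value"]


class ServerHandler(PacketHandler):
    def __init__(self):
        super().__init__()
        self.sim_state = {}
        self.outbox = []

    def handle(self, packet: dict) -> None:
        # The server owns the authoritative state and queues an update.
        self.sim_state[packet["key"]] = packet["value"]
        self.outbox.append(
            {"type": "update", "key": packet["key"], "value": packet["value"]}
        )


server = ServerHandler()
client = ClientHandler()
server.on_packet({"type": "request", "key": "door", "value": "open"})
for update in server.outbox:
    client.on_packet(update)
```

With this shape, a change to the server's `handle` cannot alter client behavior, which is the kind of isolation the slide credits with fewer cross-team breaks.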

Final Architecture ASAP: It Had to Always Run
- Initially clients wouldn’t behave predictably
  - We could not even play test
  - Game design was demoralized
- We needed a bridge, now!

Final Architecture ASAP: Incremental Sync
- A quick temporary solution…
  - Couldn’t wait for final system to be finished
  - High overhead, couldn’t ship it
- We took partial state snapshots on the server and restored to them on the client
- How it helped:
  - Could finally see the game as it would be.
  - Allowed parallel game design and coding.
  - Bought time to lay in the “right” stuff.
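
The snapshot-and-restore idea can be sketched in a few lines. This is a toy model under stated assumptions, not the TSO implementation: state is a plain dictionary, a "partial" snapshot is a chosen subset of its top-level keys, and the function names are invented for illustration.

```python
import copy


def take_partial_snapshot(server_state: dict, keys: list) -> dict:
    """Copy only the requested slices of the server's state."""
    return {k: copy.deepcopy(server_state[k]) for k in keys if k in server_state}


def restore_snapshot(client_state: dict, snapshot: dict) -> None:
    """Overwrite the client's copy of each snapshotted slice, discarding drift."""
    for key, value in snapshot.items():
        client_state[key] = copy.deepcopy(value)


# The client has drifted from the server on "objects" and "clock".
server_state = {"objects": {"lamp": "on"}, "avatars": {"alice": (3, 4)}, "clock": 120}
client_state = {"objects": {"lamp": "off"}, "avatars": {"alice": (3, 4)}, "clock": 118}

snapshot = take_partial_snapshot(server_state, ["objects", "clock"])
restore_snapshot(client_state, snapshot)
```

Copying whole slices on every sync is simple but expensive, which is consistent with the slide's note that the approach had too much overhead to ship.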

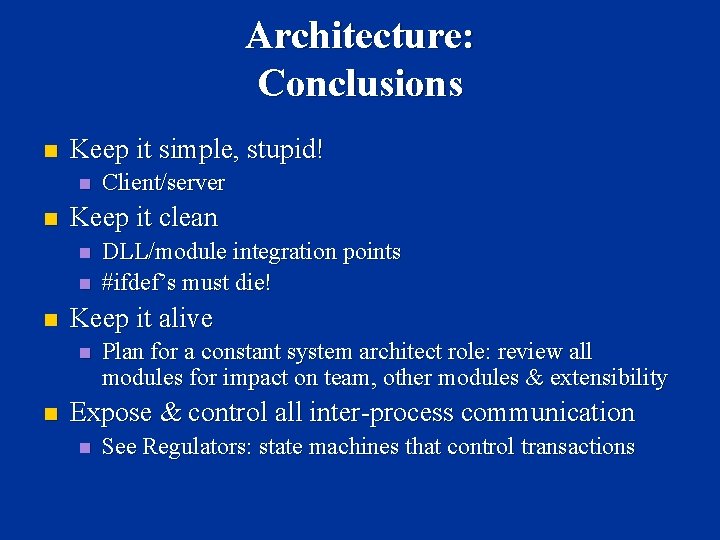

Architecture: Conclusions
- Keep it simple, stupid!
  - Client/server
- Keep it clean
  - DLL/module integration points
  - #ifdef’s must die!
- Keep it alive
  - Plan for a constant system architect role: review all modules for impact on team, other modules & extensibility
  - Expose & control all inter-process communication
    - See Regulators: state machines that control transactions
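
The deck describes Regulators only as state machines that control transactions. The sketch below is one possible reading of that idea, with hypothetical states, events and class names: every legal transition is listed in a table, and any event that is not legal in the current state is rejected loudly instead of being silently handled.

```python
from enum import Enum, auto


class State(Enum):
    IDLE = auto()
    AWAITING_REPLY = auto()
    DONE = auto()
    FAILED = auto()


# Every legal (state, event) pair is listed; anything else is rejected.
TRANSITIONS = {
    (State.IDLE, "send_request"): State.AWAITING_REPLY,
    (State.AWAITING_REPLY, "reply_ok"): State.DONE,
    (State.AWAITING_REPLY, "reply_error"): State.FAILED,
    (State.AWAITING_REPLY, "timeout"): State.FAILED,
}


class Regulator:
    """Drives one transaction through an explicit, inspectable state machine."""

    def __init__(self, name: str):
        self.name = name
        self.state = State.IDLE
        self.history = [State.IDLE]

    def fire(self, event: str) -> State:
        key = (self.state, event)
        if key not in TRANSITIONS:
            raise ValueError(
                f"{self.name}: event {event!r} not allowed in {self.state.name}"
            )
        self.state = TRANSITIONS[key]
        self.history.append(self.state)
        return self.state


buy = Regulator("buy_object")
buy.fire("send_request")
buy.fire("reply_ok")
```

Because the transition table is data, it can be logged, reviewed and tested on its own, which fits the slide's goal of exposing and controlling inter-process communication.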

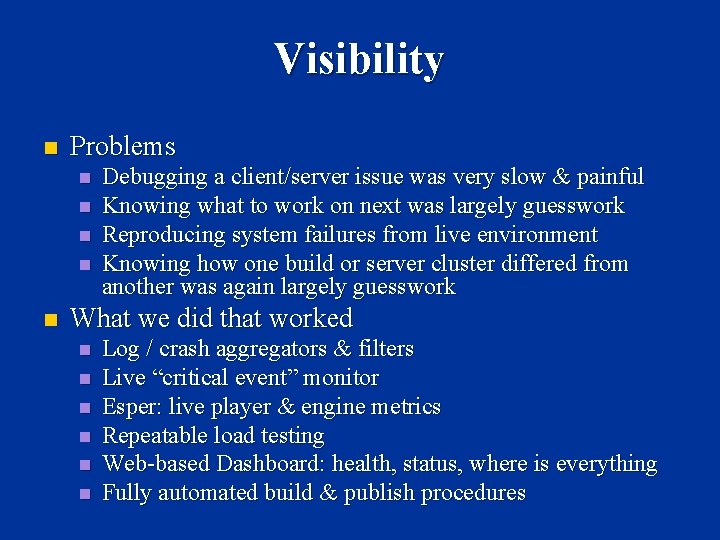

Visibility
- Problems
  - Debugging a client/server issue was very slow & painful
  - Knowing what to work on next was largely guesswork
  - Reproducing system failures from live environment
  - Knowing how one build or server cluster differed from another was again largely guesswork
- What we did that worked
  - Log / crash aggregators & filters
  - Live “critical event” monitor
  - Esper: live player & engine metrics
  - Repeatable load testing
  - Web-based Dashboard: health, status, where is everything
  - Fully automated build & publish procedures

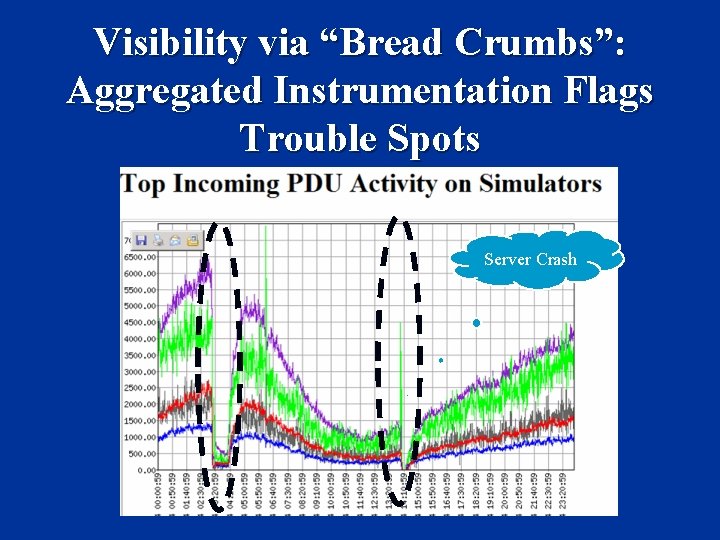

Visibility via “Bread Crumbs”: Aggregated Instrumentation Flags Trouble Spots
[Chart annotation: Server Crash]

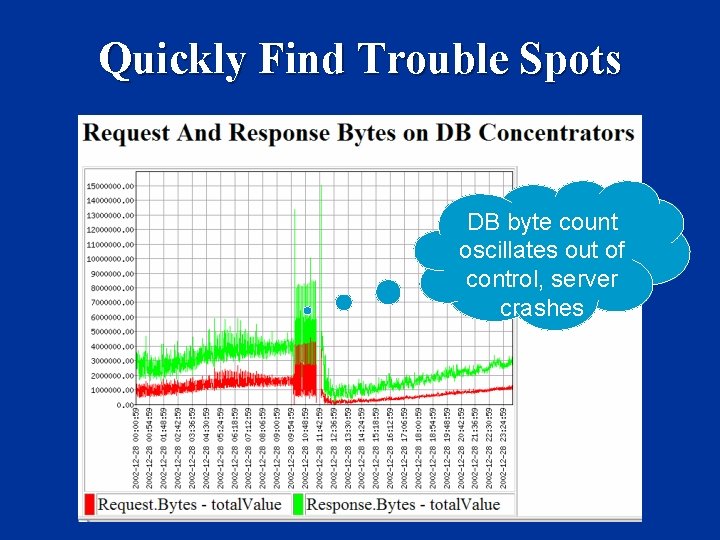

Quickly Find Trouble Spots: DB byte count oscillates out of control, server crashes

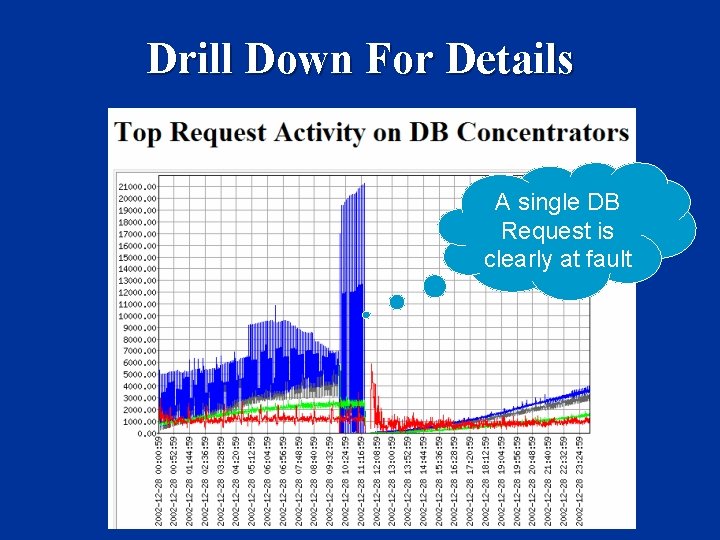

Drill Down For Details: a single DB request is clearly at fault
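
The summary-then-drill-down workflow on these three slides can be illustrated with a small aggregation sketch. The record layout, subsystem names and byte counts below are invented for the example; the real TSO instrumentation format is not shown in the deck.

```python
from collections import defaultdict

# Hypothetical instrumentation records: (subsystem, request name, bytes transferred).
records = [
    ("db", "load_avatar", 2_000),
    ("db", "save_lot", 950_000),
    ("db", "save_lot", 1_200_000),
    ("net", "chat", 300),
    ("db", "load_avatar", 2_100),
    ("net", "move", 120),
]


def summarize(records, key_index):
    """Sum byte counts grouped by the chosen field of each record."""
    totals = defaultdict(int)
    for record in records:
        totals[record[key_index]] += record[2]
    return dict(totals)


def worst(totals):
    """Return the key with the largest total."""
    return max(totals, key=totals.get)


by_subsystem = summarize(records, 0)   # summary view
trouble = worst(by_subsystem)          # flag the trouble spot
details = summarize([r for r in records if r[0] == trouble], 1)  # drill down
culprit = worst(details)
```

Here the summary view points at the `db` subsystem, and the drill-down isolates `save_lot` as the single request responsible for almost all of the traffic.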

Testability
- Development, release, load: all show-stopper problems
  - QA coordination / speed / cost
  - Repeatability, non-determinism
  - Need for many, many tests per day, each with multiple inputs (two to two thousand players per test)

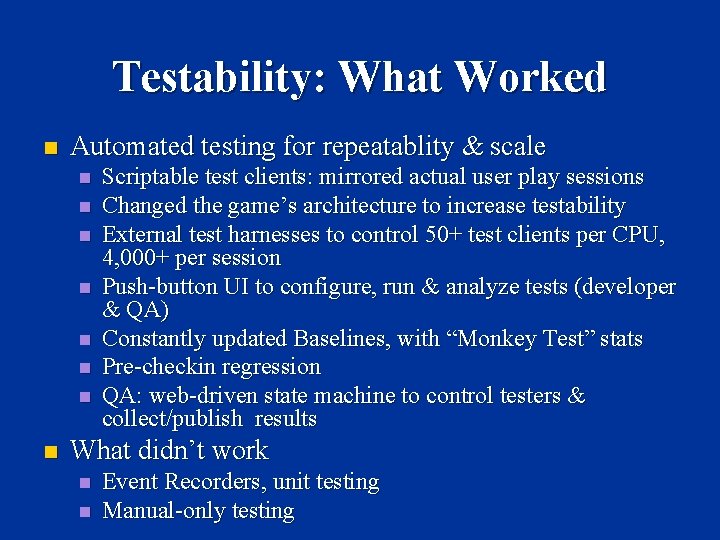

Testability: What Worked
- Automated testing for repeatability & scale
  - Scriptable test clients: mirrored actual user play sessions
  - Changed the game’s architecture to increase testability
  - External test harnesses to control 50+ test clients per CPU, 4,000+ per session
  - Push-button UI to configure, run & analyze tests (developer & QA)
  - Constantly updated Baselines, with “Monkey Test” stats
- Pre-checkin regression
- QA: web-driven state machine to control testers & collect/publish results
- What didn’t work
  - Event Recorders, unit testing
  - Manual-only testing

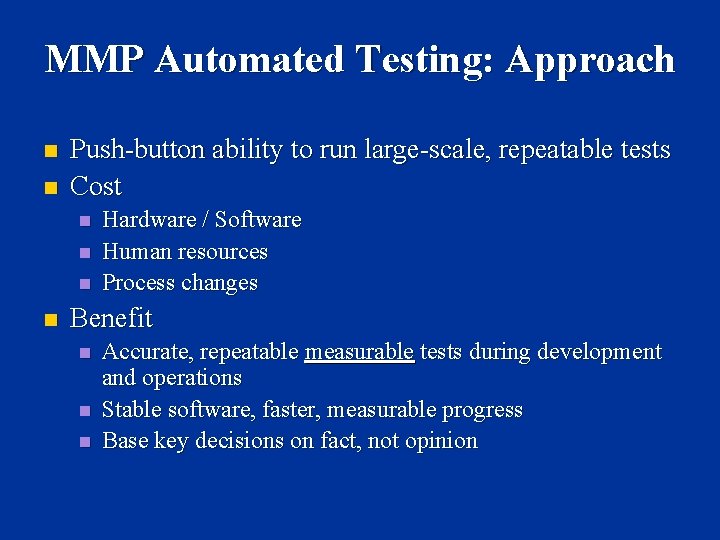

MMP Automated Testing: Approach
- Push-button ability to run large-scale, repeatable tests
- Cost
  - Hardware / software
  - Human resources
  - Process changes
- Benefit
  - Accurate, repeatable, measurable tests during development and operations
  - Stable software, faster, measurable progress
  - Base key decisions on fact, not opinion

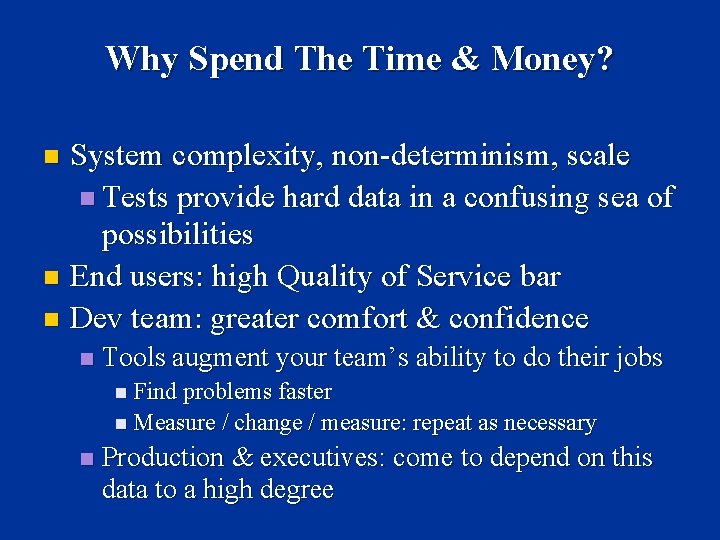

Why Spend The Time & Money?
- System complexity, non-determinism, scale
  - Tests provide hard data in a confusing sea of possibilities
- End users: high Quality of Service bar
- Dev team: greater comfort & confidence
- Tools augment your team’s ability to do their jobs
  - Find problems faster
  - Measure / change / measure: repeat as necessary
- Production & executives: come to depend on this data to a high degree

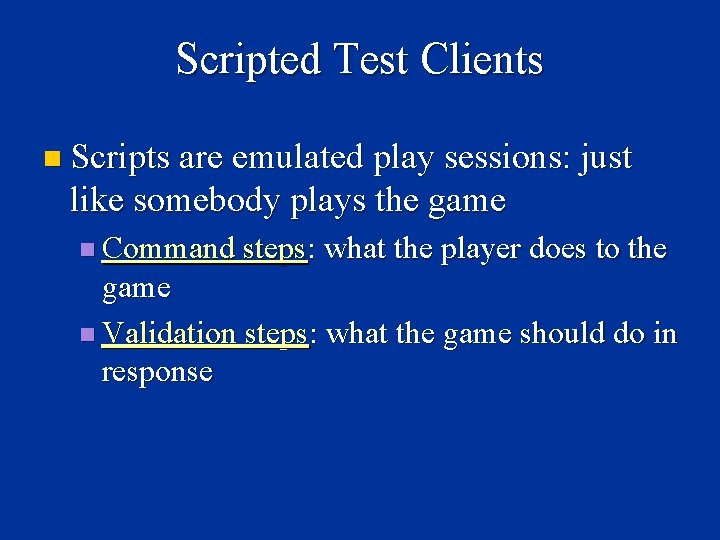

Scripted Test Clients
- Scripts are emulated play sessions: just like somebody plays the game
  - Command steps: what the player does to the game
  - Validation steps: what the game should do in response
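
A minimal sketch of the command-step / validation-step split might look like the following. The script format, the `FakeGameClient` class and its commands are all assumptions made for illustration; the deck does not show TSO's actual scripting language.

```python
class FakeGameClient:
    """Stand-in for the client-side game logic (hypothetical, not TSO code)."""

    def __init__(self):
        self.state = {"room": "lobby", "inventory": []}

    def execute(self, command, arg):
        if command == "enter":
            self.state["room"] = arg
        elif command == "pick_up":
            self.state["inventory"].append(arg)
        else:
            raise ValueError(f"unknown command: {command}")


def run_script(client, script):
    """Run steps in order; return descriptions of any failed validations."""
    failures = []
    for step in script:
        kind = step[0]
        if kind == "command":
            # Command step: what the player does to the game.
            _, command, arg = step
            client.execute(command, arg)
        elif kind == "validate":
            # Validation step: what the game should do in response.
            _, key, expected = step
            actual = client.state[key]
            if actual != expected:
                failures.append(f"{key}: expected {expected!r}, got {actual!r}")
        else:
            raise ValueError(f"unknown step kind: {kind}")
    return failures


script = [
    ("command", "enter", "kitchen"),
    ("validate", "room", "kitchen"),
    ("command", "pick_up", "pizza"),
    ("validate", "inventory", ["pizza"]),
    ("validate", "room", "lobby"),  # deliberately wrong, to show a reported failure
]
failures = run_script(FakeGameClient(), script)
```

Collecting failures instead of stopping at the first one lets a single run report every broken expectation in a play session.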

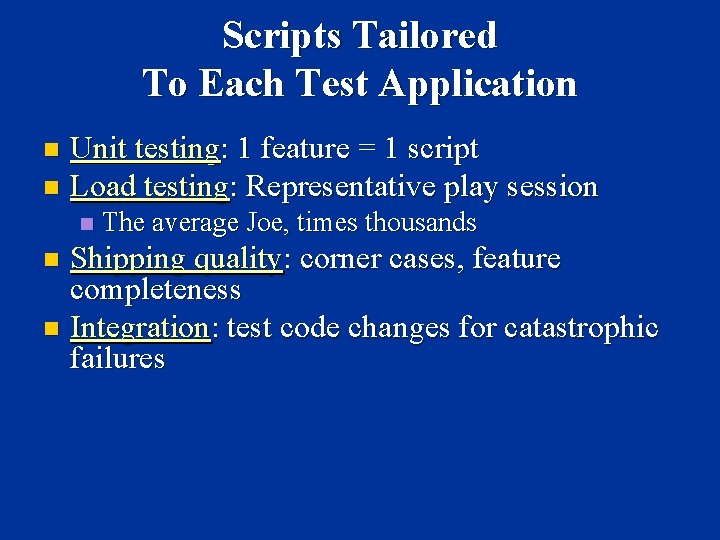

Scripts Tailored To Each Test Application
- Unit testing: 1 feature = 1 script
- Load testing: representative play session
  - The average Joe, times thousands
- Shipping quality: corner cases, feature completeness
- Integration: test code changes for catastrophic failures

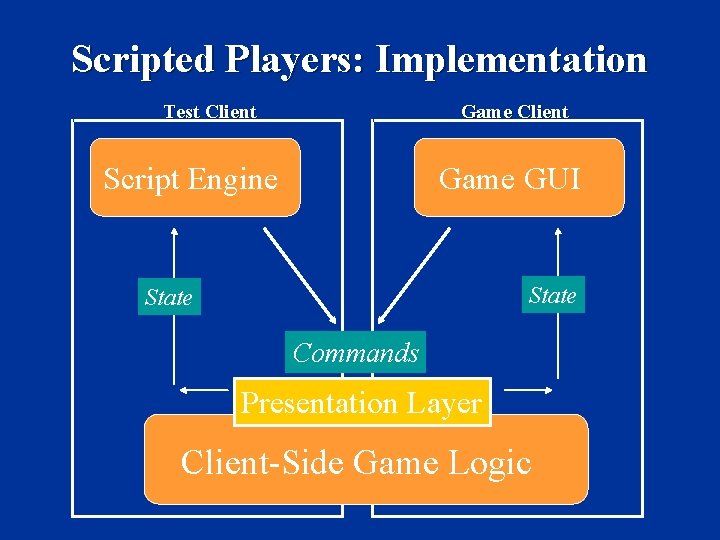

Scripted Players: Implementation
[Diagram: the Test Client uses a Script Engine where the Game Client uses the Game GUI; both exchange State and Commands through the Presentation Layer with the Client-Side Game Logic.]

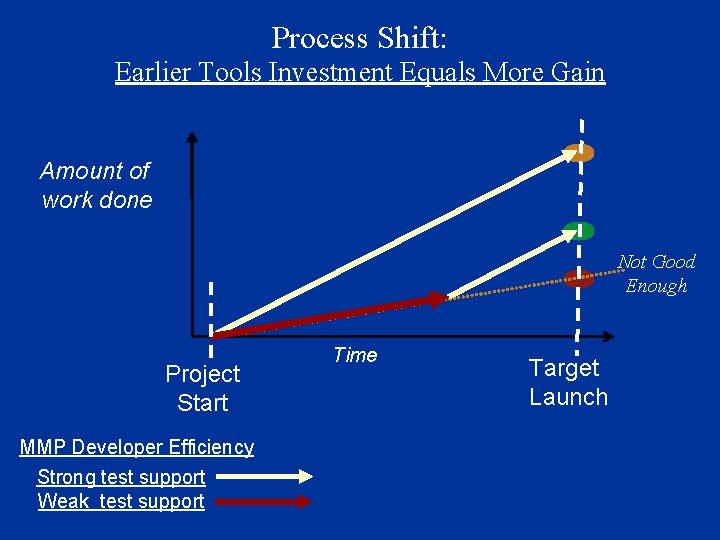

Process Shift: Earlier Tools Investment Equals More Gain
[Chart “MMP Developer Efficiency”: amount of work done (y-axis) over time (x-axis), from Project Start to Target Launch, comparing strong and weak test support; annotation: “Not Good Enough”.]

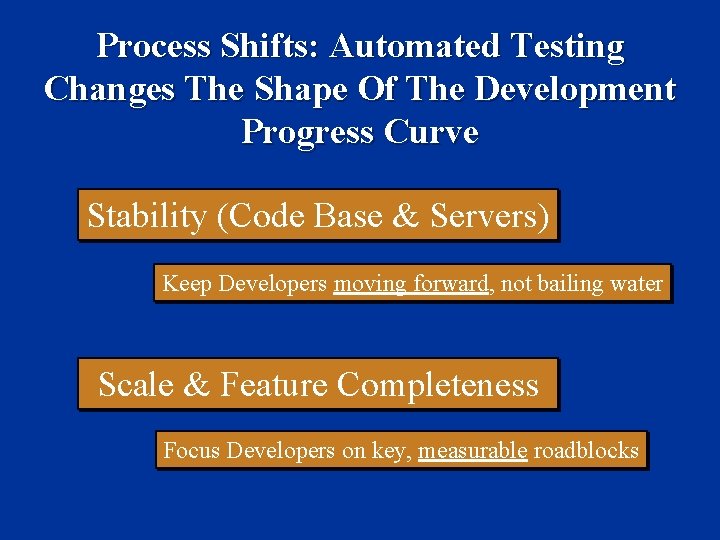

Process Shifts: Automated Testing Changes The Shape Of The Development Progress Curve
- Stability (code base & servers): keep developers moving forward, not bailing water
- Scale & feature completeness: focus developers on key, measurable roadblocks

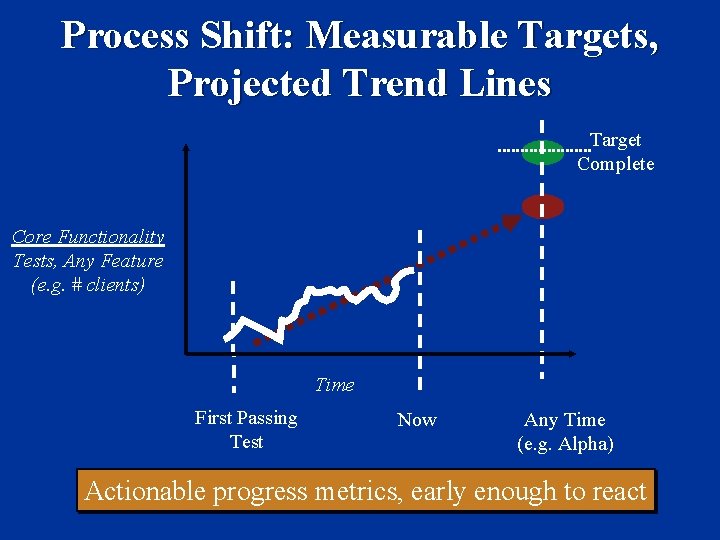

Process Shift: Measurable Targets, Projected Trend Lines
- Actionable progress metrics, early enough to react
- [Chart: core functionality tests for any feature (e.g. # clients) plotted over time, from First Passing Test through Now, with a trend line projected to any date (e.g. Alpha) and compared against the Target (Complete).]
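
A projected trend line of this kind can be approximated with an ordinary least-squares fit over past test results. The sketch below assumes a linear trend and uses made-up numbers; neither the fitting method nor the data comes from the slides.

```python
def fit_line(points):
    """Ordinary least-squares fit; returns (slope, intercept)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def projected_day(points, target):
    """Day on which the fitted trend reaches the target, or None if not improving."""
    slope, intercept = fit_line(points)
    if slope <= 0:
        return None
    return (target - intercept) / slope


# Hypothetical data: (day, max concurrent test clients that passed).
history = [(0, 100), (10, 300), (20, 500), (30, 700)]
day = projected_day(history, target=2000)
```

Comparing the projected day against a milestone date such as Alpha gives the early warning the slide asks for: if the projection lands after the milestone, there is still time to react.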

Process Shift: Load Testing (Before Paying Customers Show Up)
- Expose issues that only occur at scale
- Establish hardware requirements
- Establish play is acceptable @ scale

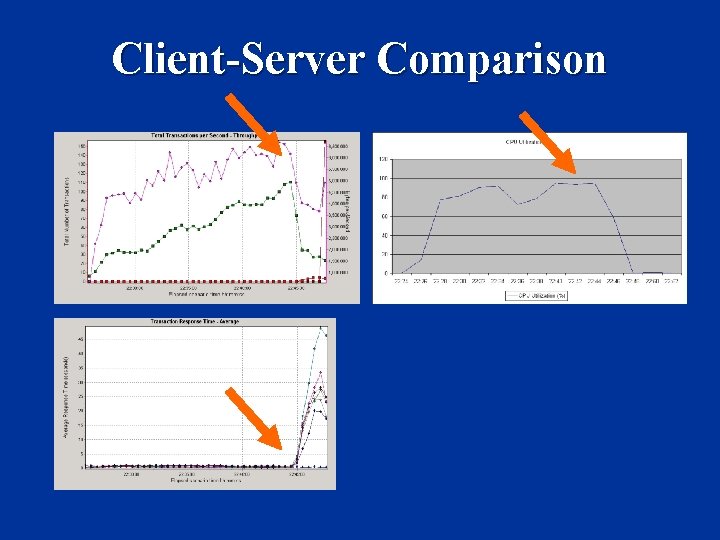

Client-Server Comparison

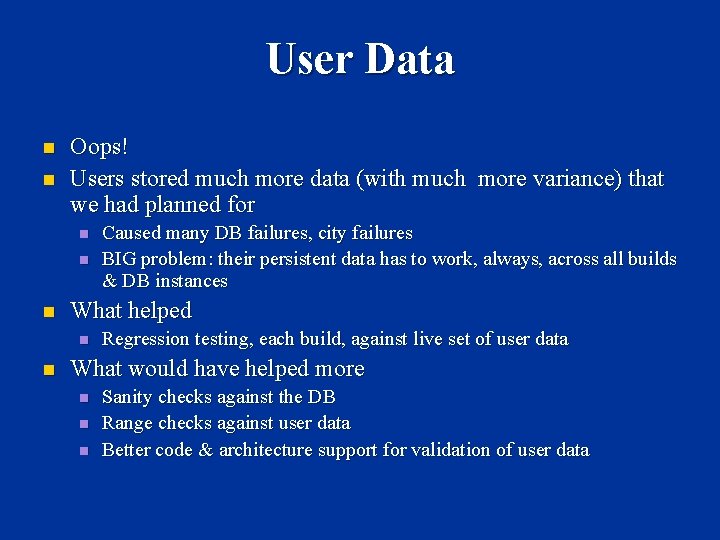

User Data
- Oops! Users stored much more data (with much more variance) than we had planned for
  - Caused many DB failures, city failures
  - BIG problem: their persistent data has to work, always, across all builds & DB instances
- What helped
  - Regression testing, each build, against live set of user data
- What would have helped more
  - Sanity checks against the DB
  - Range checks against user data
  - Better code & architecture support for validation of user data
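
As an illustration of the range checks listed under "what would have helped more", the sketch below validates a user record against a table of limits. The field names and limits are hypothetical; real limits would have to come from the game's design and observed live data.

```python
# Hypothetical limits; real limits would come from the game's design.
LIMITS = {
    "simoleans": (0, 10_000_000),
    "object_count": (0, 500),
    "name_length": (1, 32),
}


def check_record(record: dict) -> list:
    """Return human-readable problems; an empty list means the record passed."""
    problems = []
    for field, (low, high) in LIMITS.items():
        if field not in record:
            problems.append(f"{field}: missing")
            continue
        value = record[field]
        if not low <= value <= high:
            problems.append(f"{field}: {value} outside [{low}, {high}]")
    return problems


good = {"simoleans": 1200, "object_count": 40, "name_length": 8}
bad = {"simoleans": -5, "object_count": 9000}

good_problems = check_record(good)
bad_problems = check_record(bad)
```

Running a check like this against a copy of live user data on every build would combine the regression testing that helped with the range checks the team wished it had.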

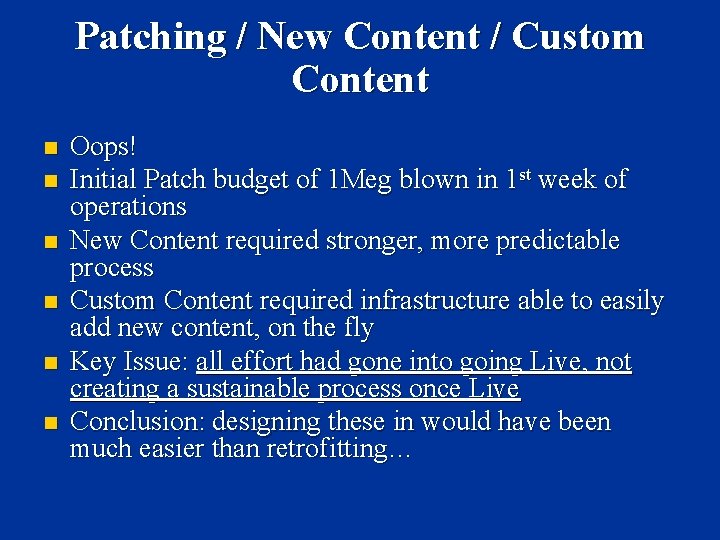

Patching / New Content / Custom Content
- Oops! Initial patch budget of 1 Meg blown in 1st week of operations
- New content required stronger, more predictable process
- Custom content required infrastructure able to easily add new content, on the fly
- Key issue: all effort had gone into going Live, not creating a sustainable process once Live
- Conclusion: designing these in would have been much easier than retrofitting…

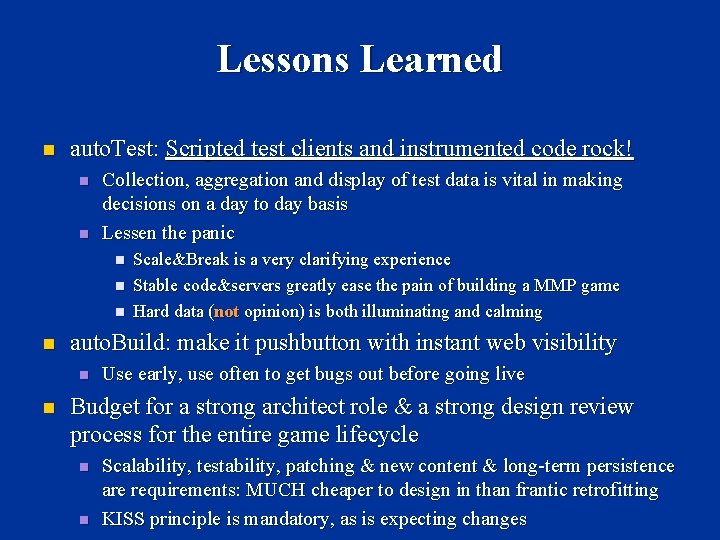

Lessons Learned
- autoTest: scripted test clients and instrumented code rock!
  - Collection, aggregation and display of test data is vital in making decisions on a day-to-day basis
  - Lessen the panic
    - Scale & Break is a very clarifying experience
    - Stable code & servers greatly ease the pain of building an MMP game
    - Hard data (not opinion) is both illuminating and calming
- autoBuild: make it push-button with instant web visibility
  - Use early, use often to get bugs out before going live
- Budget for a strong architect role & a strong design review process for the entire game lifecycle
  - Scalability, testability, patching & new content & long-term persistence are requirements: MUCH cheaper to design in than frantic retrofitting
  - KISS principle is mandatory, as is expecting changes

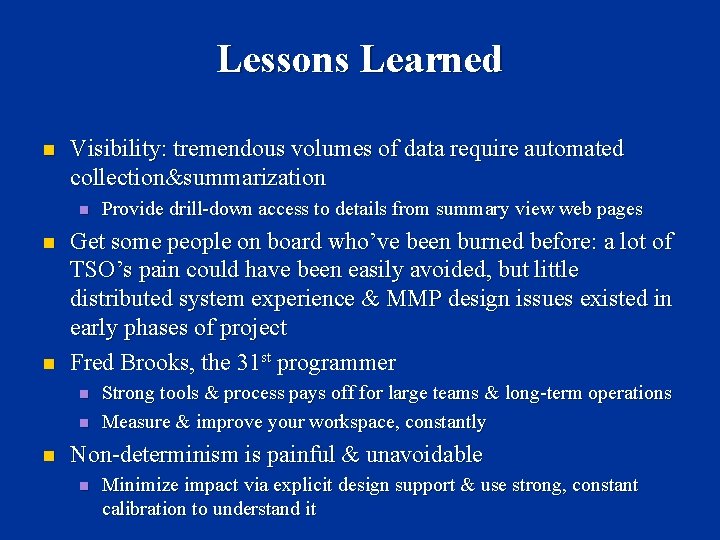

Lessons Learned
- Visibility: tremendous volumes of data require automated collection & summarization
  - Provide drill-down access to details from summary view web pages
- Get some people on board who’ve been burned before: a lot of TSO’s pain could have been easily avoided, but little distributed-system experience or awareness of MMP design issues existed in early phases of project
- Fred Brooks, the 31st programmer
  - Strong tools & process pay off for large teams & long-term operations
  - Measure & improve your workspace, constantly
- Non-determinism is painful & unavoidable
  - Minimize impact via explicit design support & use strong, constant calibration to understand it

Biggest Wins
- Code isolation
- Scaffolding
- Tools: Build / Test / Measure, Information Management
- Pre-checkin regression / load testing

Biggest Losses
- Architecture: massively peer to peer
- Early lack of tools
- #ifdef across platform / function
- “Critical Path” dependencies

More details: www.maggotranch.com/MMP (3 TSO Lessons Learned talks)

- Slides: 38