GCC Compiler Optimizations (GCO)

- Slides: 12

GCC Compiler Optimizations (GCO) The effects of various compiler optimizations on benchmarks and analysis of the hardware/software interface.

GCO – Outline
● Motivation
● Background
● Methodology
● Results
● Future Direction

GCO – Motivation
● Proebsting's Law: Compiler Advances Double Computing Power Every 18 Years (http://research.microsoft.com/~toddpro/papers/law.htm)
● Applications that run on many hundreds of computers stand to see significant time and power savings at zero hardware cost.
● Compilers are the future. Moore's Law continues to hold, but as the number of transistors grows, greater speed benefits eventually come from multiprocessing rather than from bigger processors.

GCO – Background
● GCC, the GNU Compiler Collection (originally the GNU C Compiler), is the standard compiler that ships with, and is used to build, nearly every Linux distribution (www.gnu.org)
● There are many strategies for optimizing software, some of which push the program further from its pristine state than others
● GCC has a large set of flags that give software developers control over how compilation is done (http://gcc.gnu.org/onlinedocs/gcc/Optimize-Options.html). This is done because GCC is simply a compiler, not an optimization system.

GCO – Methodology
● GCC has on the order of 50 different flags that can be passed to help it optimize a program
● It also comes with a number of preset optimization levels such as -O1, -O3, -O99
● These presets are simply the compiler writers' guesses as to which flags yield improvements almost universally
● These presets do not take into account flags that help in some cases and hurt in others
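One way to see which flags a preset actually switches on is to ask GCC itself with `gcc -Q -O2 --help=optimizers`, which prints one line per optimization flag. The sketch below parses that report. It assumes each relevant line has the form `-fname [enabled]` or `-fname [disabled]`; the exact layout can vary between GCC versions, and the function names here are hypothetical helpers, not part of GCC.

```python
import re
import subprocess

# Assumed line layout: "  -fsome-flag      [enabled]" or "[disabled]".
# Lines with any other shape (for example value-carrying options) are skipped.
_FLAG_LINE = re.compile(r"^\s*(-f[\w-]+)\s+\[(enabled|disabled)\]\s*$")


def parse_optimizer_report(text):
    """Map each flag found in the report text to True (enabled) or False."""
    flags = {}
    for line in text.splitlines():
        match = _FLAG_LINE.match(line)
        if match:
            flags[match.group(1)] = match.group(2) == "enabled"
    return flags


def report_for_preset(preset, gcc="gcc"):
    """Run `gcc -Q <preset> --help=optimizers` and parse its output."""
    result = subprocess.run(
        [gcc, "-Q", preset, "--help=optimizers"],
        capture_output=True, text=True, check=True,
    )
    return parse_optimizer_report(result.stdout)


def flags_left_off(report):
    """Flags the preset leaves disabled: candidates to try on their own."""
    return sorted(flag for flag, enabled in report.items() if not enabled)
```

For example, `flags_left_off(report_for_preset("-O2"))` would list the flags that `-O2` does not enable on the installed compiler, which is a starting point for choosing flags to test separately.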

GCO – Methodology
1) Baseline: Compile and run with -O0 (no optimization)
2) Presets: Compile and run again with the different -O presets (1, 2, 3, 9, 99)
3) Orthogonal Flags: Starting from the -O0 baseline, try flags other than those included in -O1, -O2, etc.
4) Combinations: Take the best orthogonal flags and presets and try all combinations of them
5) The fastest of those will be declared our winner and dissected
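The steps above can be sketched as a small generator of compiler invocations. This is a minimal illustration, not the scripts used for the slides: the function names, the `bench.c` source file, and the two example flags (`-funroll-loops`, `-ffast-math`) are assumptions chosen for the example.

```python
from itertools import combinations


def flag_subsets(flags):
    """Yield every non-empty subset of `flags`, smallest subsets first."""
    for size in range(1, len(flags) + 1):
        for subset in combinations(flags, size):
            yield list(subset)


def stage_configs(presets, orthogonal_flags):
    """Flag sets for steps 1-3: baseline, each preset, each flag on -O0."""
    baseline = [["-O0"]]
    preset_runs = [[preset] for preset in presets]
    flag_runs = [["-O0", flag] for flag in orthogonal_flags]
    return baseline + preset_runs + flag_runs


def combination_configs(best_presets, best_flags):
    """Flag sets for step 4: each best preset with every subset of best flags."""
    return [
        [preset, *subset]
        for preset in best_presets
        for subset in flag_subsets(best_flags)
    ]


def gcc_command(flags, source="bench.c", output="bench"):
    """Build the argv list for one compile; file names are placeholders."""
    return ["gcc", *flags, source, "-o", output]
```

Calling `combination_configs(["-O2", "-O3"], ["-funroll-loops", "-ffast-math"])` yields six flag sets. The number of subsets doubles with every extra flag, which is why step 4 restricts itself to the best performers from the earlier steps.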

GCO – Methodology
● To get some idea of why the higher optimization levels perform better, we decided to simulate the worst performer (usually -O0) and the best performer.
● This lets us see metrics such as cache hits and misses, branch prediction, etc.
● By comparing the two we gain insight into how the optimizations affect the machine code output and what this means in terms of the architecture.

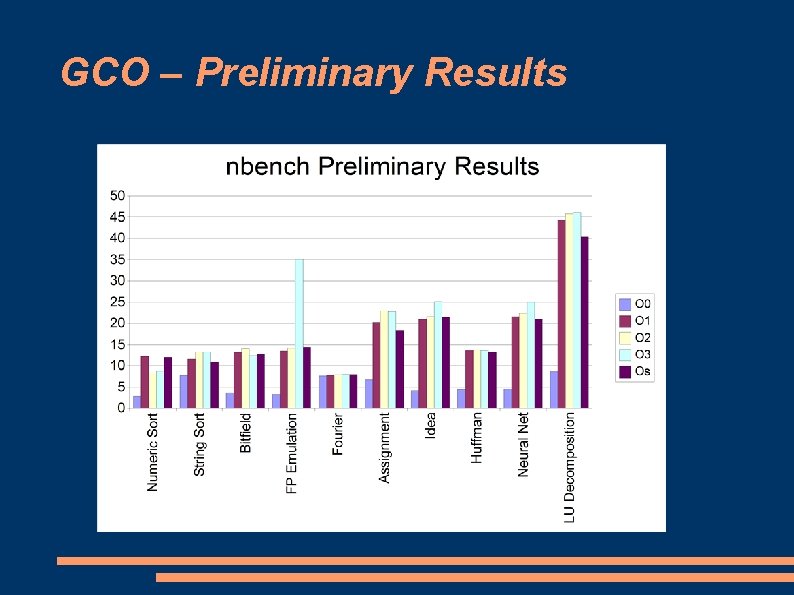

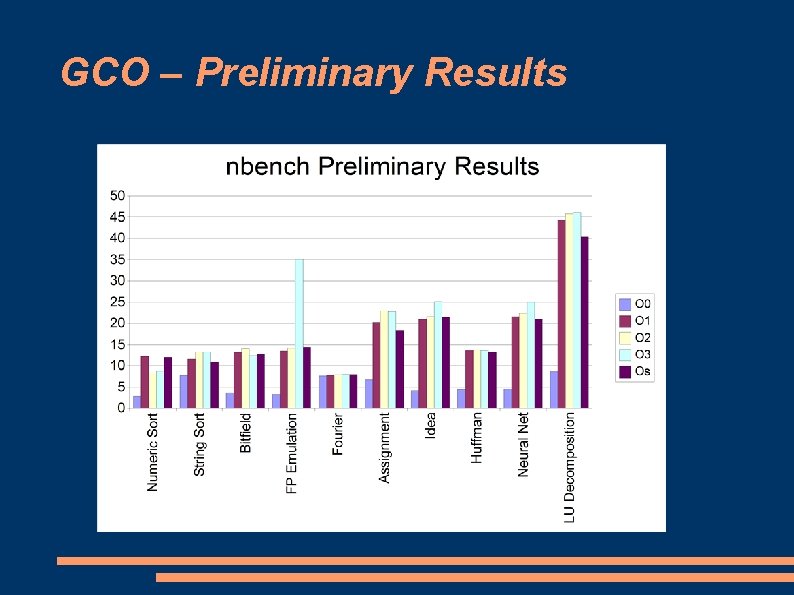

GCO – Preliminary Results
● nBench is a Linux/Unix benchmarking tool that primarily exercises the CPU
● We performed the steps of our proposed methodology to help us refine our process
● This conveniently also gave us some results to discuss in our presentation

GCO – Preliminary Results

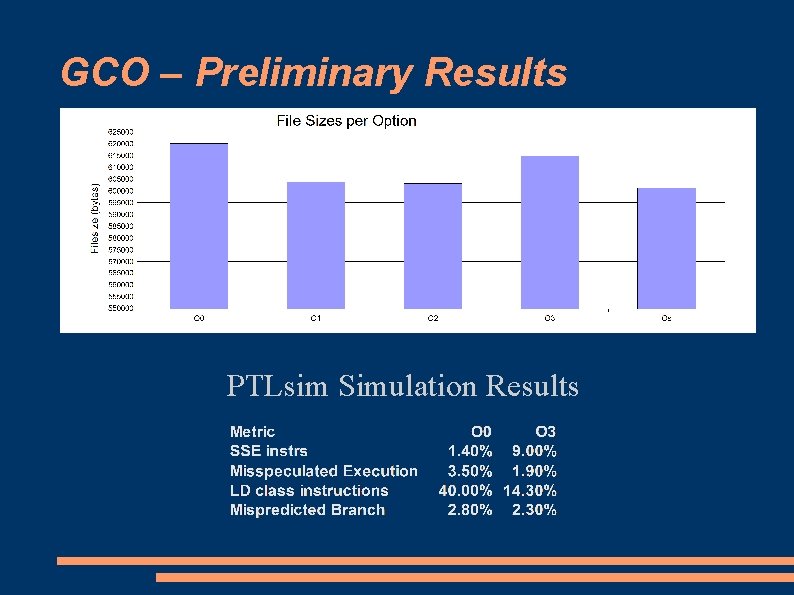

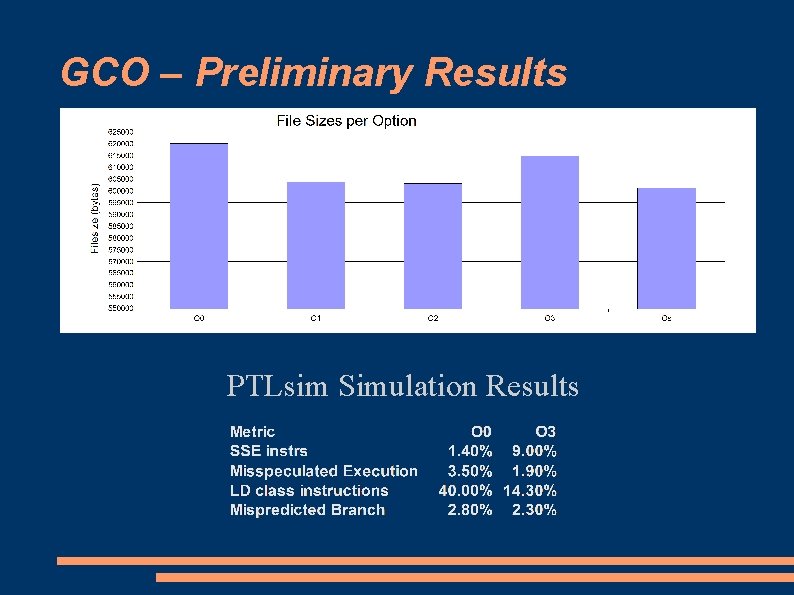

GCO – Preliminary Results: PTLsim Simulation Results

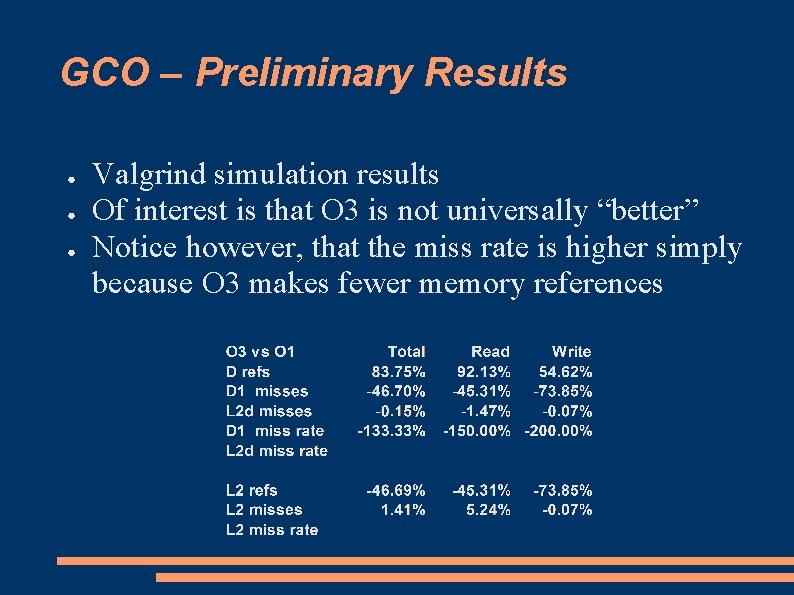

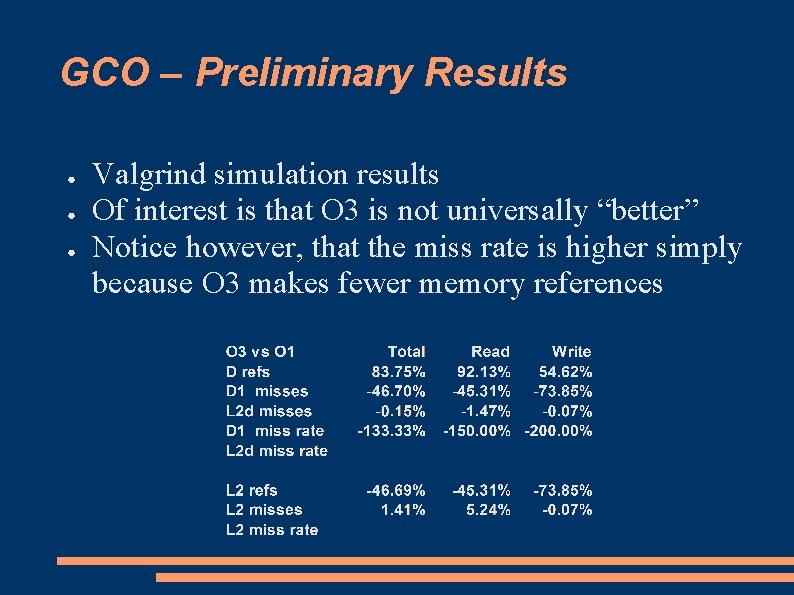

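GCO – Preliminary Results
● Valgrind simulation results
● Of interest is that -O3 is not universally “better”
● Notice, however, that the -O3 miss rate is higher simply because -O3 makes fewer memory references: the rate is misses divided by references, so a smaller denominator raises it even when the miss count does not grow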
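The miss-rate point is easy to check with arithmetic. The counts below are invented purely for illustration and are not the measurements shown in the slides; they only show how a build can miss less often in absolute terms and still report a higher miss rate.

```python
def miss_rate(misses, references):
    """Cache miss rate as a fraction of all memory references."""
    return misses / references


# Invented counts for illustration only (NOT results from the slides).
o0 = {"references": 1_000_000, "misses": 10_000}
o3 = {"references": 400_000, "misses": 8_000}

o0_rate = miss_rate(o0["misses"], o0["references"])
o3_rate = miss_rate(o3["misses"], o3["references"])

# The hypothetical -O3 build has fewer misses in total, yet its rate is
# higher because the reference count shrank faster than the miss count.
print(f"-O0: {o0['misses']} misses, rate {o0_rate:.1%}")
print(f"-O3: {o3['misses']} misses, rate {o3_rate:.1%}")
```

For this reason, absolute miss counts and total run time are usually a fairer basis for comparison than the rate alone.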

GCO – Future Direction
● For our final report we're going to include SPEC 2000 and several other benchmarks
● Another area of interest would be to automate the process so that the fastest possible options could be found by setting up a run and leaving it for several days
● We'd also be interested in looking at the overall energy used to obtain the results, to see whether more aggressive optimization helps or hurts power efficiency
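The automation idea could start from something as small as the sketch below: compile with each flag set, time the binary, and keep the fastest. It assumes a single-file benchmark named `bench.c` and a `gcc` on the PATH; both names, and all function names, are placeholders rather than anything from the original project.

```python
import subprocess
import time


def time_command(argv, runs=3):
    """Best wall-clock time in seconds over `runs` executions of argv."""
    best = float("inf")
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(argv, check=True, stdout=subprocess.DEVNULL)
        best = min(best, time.perf_counter() - start)
    return best


def build_and_time(flags, source="bench.c", binary="./bench"):
    """Compile `source` with `flags`, then time the resulting binary."""
    subprocess.run(["gcc", *flags, source, "-o", binary], check=True)
    return time_command([binary])


def fastest(flag_sets, measure=build_and_time):
    """Return (flags, seconds) for the flag set with the lowest time."""
    timings = [(flags, measure(flags)) for flags in flag_sets]
    return min(timings, key=lambda pair: pair[1])
```

Taking the best of several runs is one simple way to damp noise from other activity on the machine. The `measure` parameter is there so that a different cost, such as an energy reading, could be substituted for run time without changing the search loop.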