
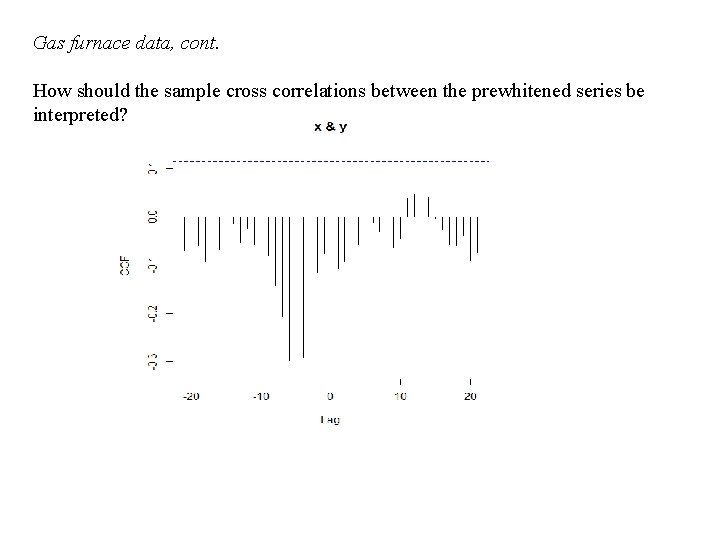

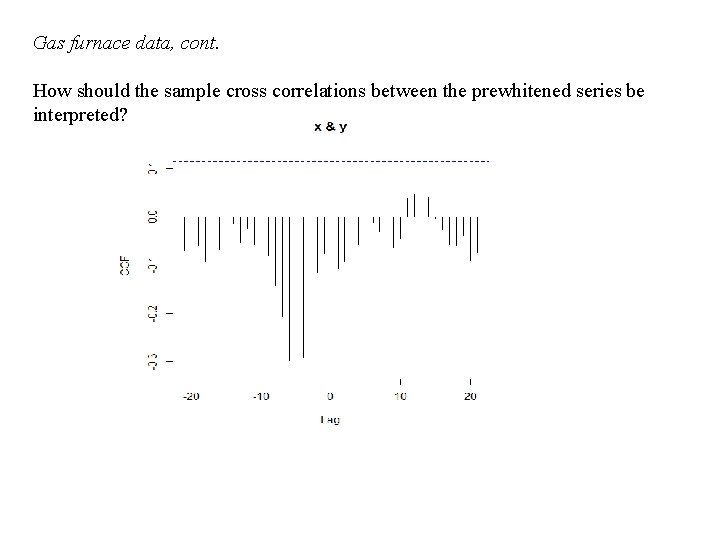

Gas furnace data, cont. How should the sample cross-correlations between the prewhitened series be interpreted?

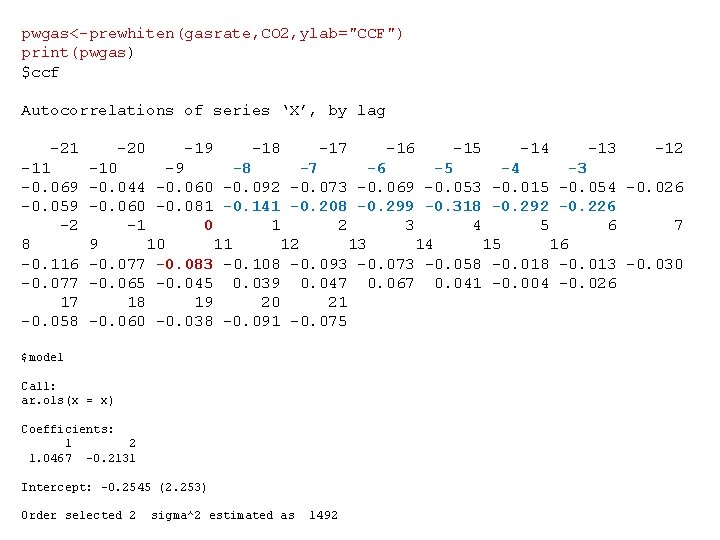

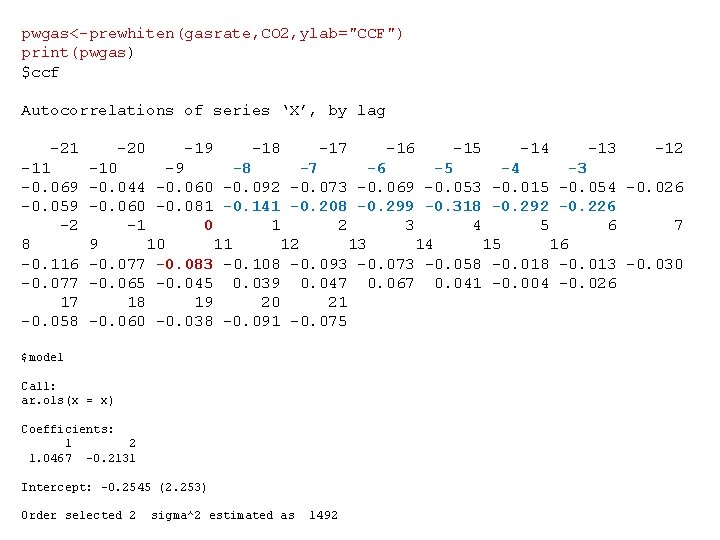

```r
pwgas <- prewhiten(gasrate, CO2, ylab = "CCF")
print(pwgas)
```

```
$ccf

Autocorrelations of series ‘X’, by lag

   -21    -20    -19    -18    -17    -16    -15    -14    -13    -12
-0.069 -0.044 -0.060 -0.092 -0.073 -0.069 -0.053 -0.015 -0.054 -0.026
   -11    -10     -9     -8     -7     -6     -5     -4     -3
-0.059 -0.060 -0.081 -0.141 -0.208 -0.299 -0.318 -0.292 -0.226
    -2     -1      0      1      2      3      4      5      6      7
-0.116 -0.077 -0.083 -0.108 -0.093 -0.073 -0.058 -0.013 -0.030 -0.065
     8   9-16 (one value missing in the source)
-0.077  -0.045  0.039  0.047  0.067  0.041 -0.004 -0.026
    17     18     19     20     21
-0.058 -0.060 -0.038 -0.091 -0.075

$model

Call:
ar.ols(x = x)

Coefficients:
      1        2
 1.0467  -0.2131

Intercept: -0.2545 (2.253)

Order selected 2  sigma^2 estimated as  1492
```
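The prewhitening step can be sketched in base R. This is a minimal illustration, not TSA's `prewhiten()` itself: it assumes that an AR model fitted with `ar.ols()` is an adequate filter for the input, applies that same filter to both series, and then computes the sample CCF. The helper name `prewhiten_ccf` and the simulated series are hypothetical. Under R's convention, the lag-k value of `ccf(x, y)` estimates Corr(x[t+k], y[t]), so an output that responds to the input three steps later produces its largest spike at k = –3.

```r
# Minimal prewhitening sketch (hypothetical helper, base R only).
prewhiten_ccf <- function(x, y, lag.max = 15) {
  fit <- ar.ols(x)                        # AR(p) filter for the input; p chosen by AIC
  phi <- as.numeric(fit$ar)
  p <- length(phi)
  pw <- function(z) {
    # one-sided filter: z_t - phi_1 z_{t-1} - ... - phi_p z_{t-p}
    f <- stats::filter(z, c(1, -phi), method = "convolution", sides = 1)
    as.numeric(f)[(p + 1):length(z)]      # drop the p leading NAs
  }
  ccf(pw(x), pw(y), lag.max = lag.max, plot = FALSE)
}

# Simulated example (hypothetical): y_t depends on x_{t-3}
set.seed(1)
n <- 300
x_full <- as.numeric(arima.sim(list(ar = c(1.0, -0.25)), n = n + 3))
x <- x_full[4:(n + 3)]
y <- 2 * x_full[1:n] + rnorm(n)           # y[t] = 2 * x[t - 3] + noise
res <- prewhiten_ccf(x, y)
lags <- drop(res$lag)
vals <- drop(res$acf)
print(lags[which.max(abs(vals))])         # lag of the largest |CCF| spike
```

Filtering both series with the input's AR filter leaves the input close to white noise, so the CCF spikes reflect the transfer relationship rather than the input's own autocorrelation.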

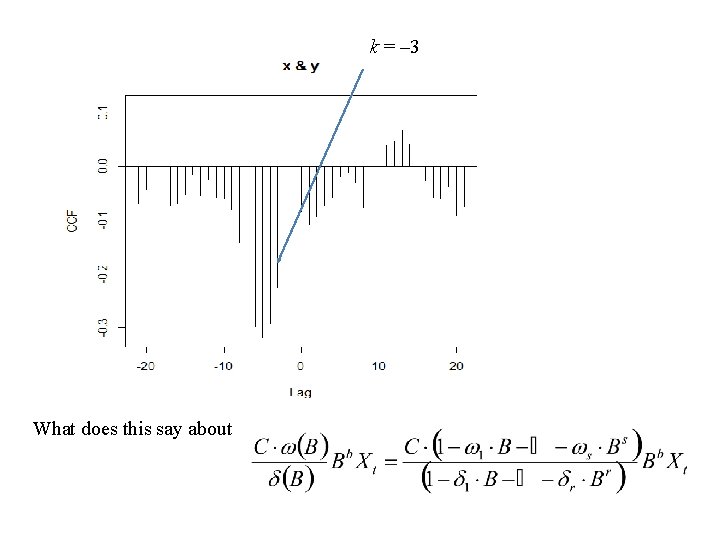

k = – 3 What does this say about

Since the first significant spike occurs at k = –3, it is natural to set b = 3.

Rules of thumb:
• The order of the numerator polynomial, s, is decided by how many significant spikes there are after the first significant spike and before the spikes start to die down. Here s = 2.
• The order of the denominator polynomial, r, is decided by how the spikes die down: if they decay exponentially, choose r = 1; if they follow a damped sine wave, choose r = 2; if they cut off after lag b + s, choose r = 0. Here r = 1.
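The rules of thumb follow from the shape of the impulse-response weights v_k of a rational transfer function: zero before lag b, a pattern set by the numerator at lags b to b + s, and after that a die-down governed by the denominator. The sketch below computes these weights, assuming the Box-Jenkins sign convention ω(B) = ω0 − ω1B − ... − ωsB^s and δ(B) = 1 − δ1B − ... − δrB^r; the helper name and the parameter values are hypothetical.

```r
# Impulse-response weights v_k of v(B) = B^b * omega(B) / delta(B), where
#   omega(B) = w0 - w1*B - ... - ws*B^s  and  delta(B) = 1 - d1*B - ... - dr*B^r
# (Box-Jenkins sign convention, assumed here). Hypothetical helper.
impulse_weights <- function(omega, delta, b, n_lags = 15) {
  s <- length(omega) - 1
  r <- length(delta)
  v <- numeric(n_lags + 1)               # v[k + 1] stores v_k, k = 0, ..., n_lags
  for (k in 0:n_lags) {
    j <- k - b
    if (j < 0) next                      # v_k = 0 for k < b (pure delay)
    val <- if (j == 0) omega[1] else if (j <= s) -omega[j + 1] else 0
    for (i in seq_len(r)) {              # denominator recursion: + d_i * v_{k-i}
      if (k - i >= 0) val <- val + delta[i] * v[k - i + 1]
    }
    v[k + 1] <- val
  }
  setNames(v, 0:n_lags)
}

# b = 3, s = 2, r = 1 with hypothetical parameter values
v <- impulse_weights(omega = c(2, -1, 0.5), delta = 0.6, b = 3, n_lags = 12)
round(v, 4)  # zero up to lag 2, free pattern at lags 3-5, then v_k = 0.6 * v_{k-1}
```

With r = 1 the weights beyond lag b + s shrink by the factor δ1 at each step, which is the exponential die-down that the rule of thumb associates with r = 1.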

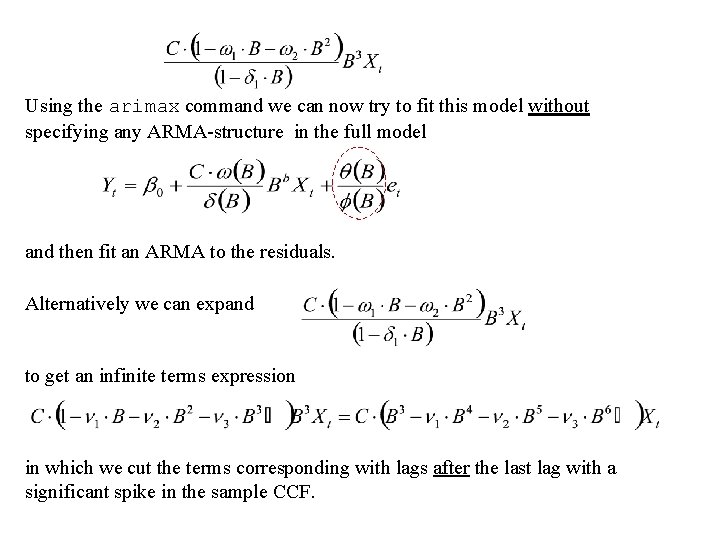

Using the arimax command, we can now try to fit this model without specifying any ARMA structure in the full model, and then fit an ARMA model to the residuals. Alternatively, we can expand the rational transfer function into an expression with infinitely many terms, and cut the terms corresponding to lags after the last lag with a significant spike in the sample CCF.

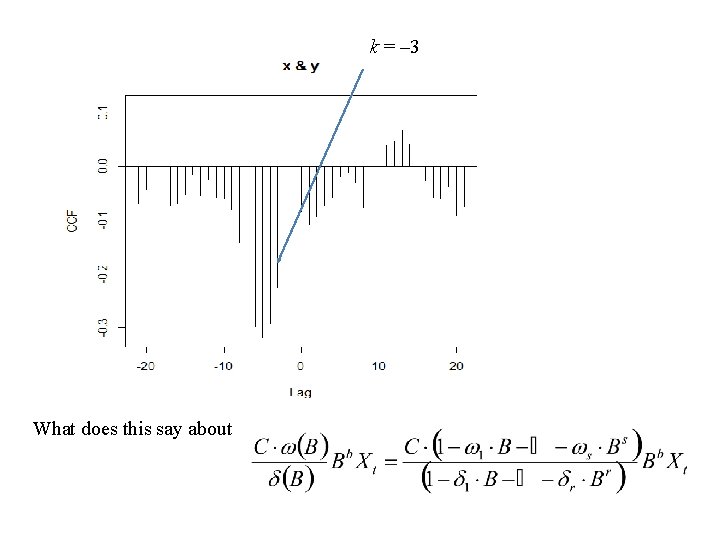

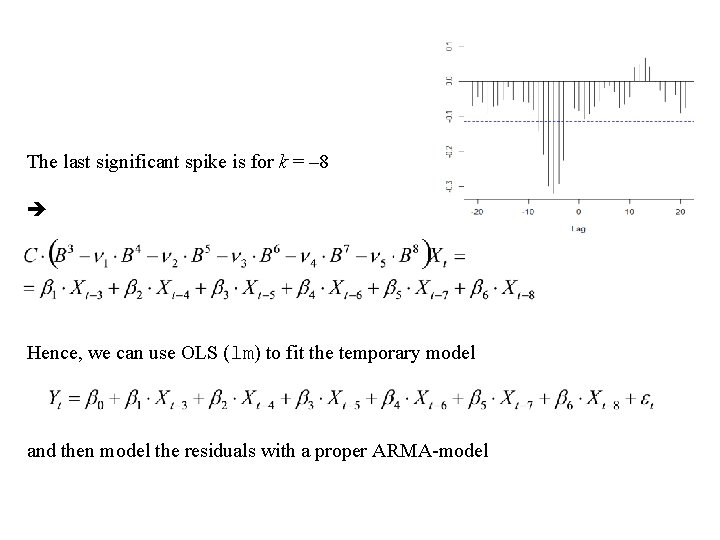

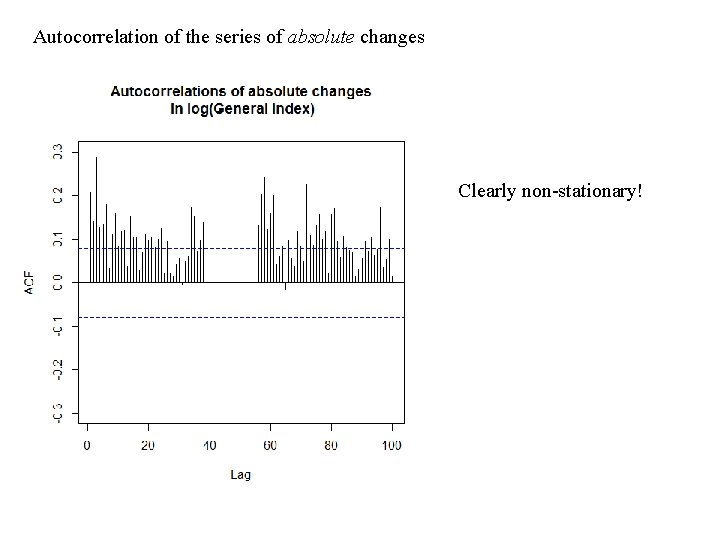

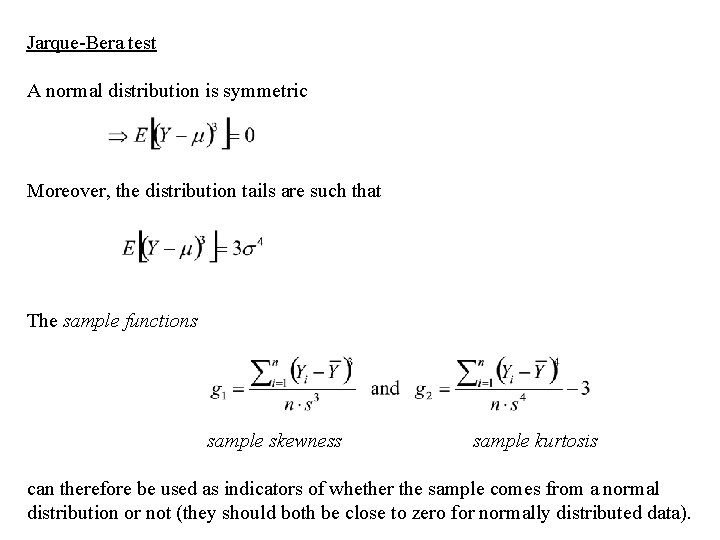

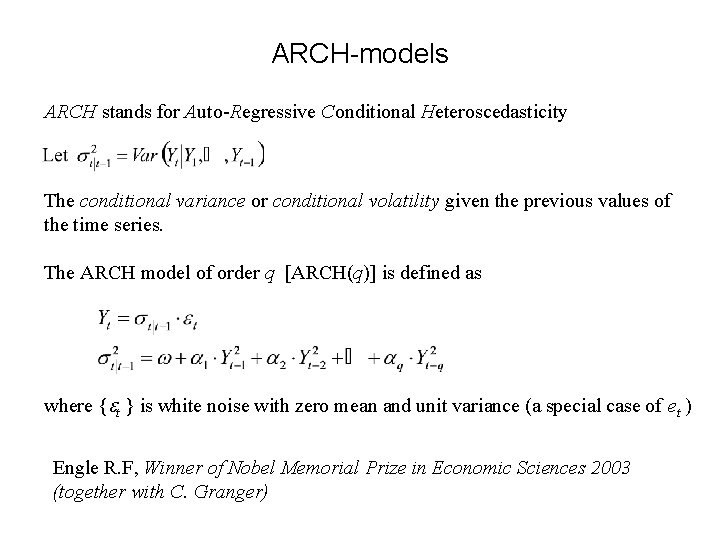

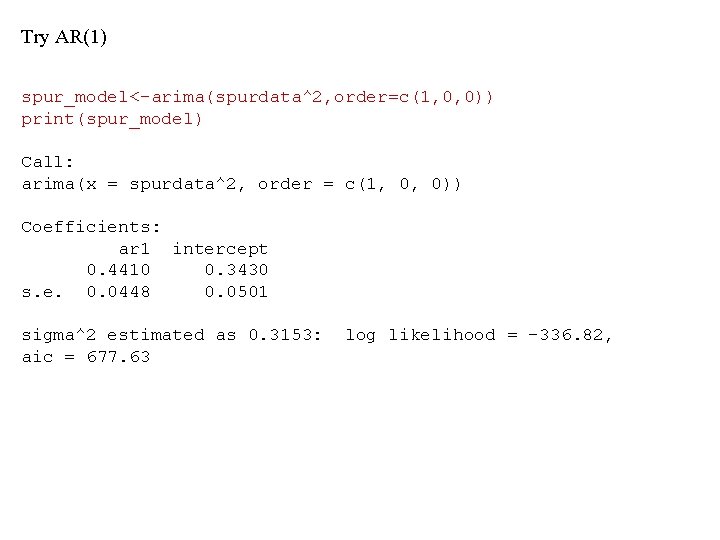

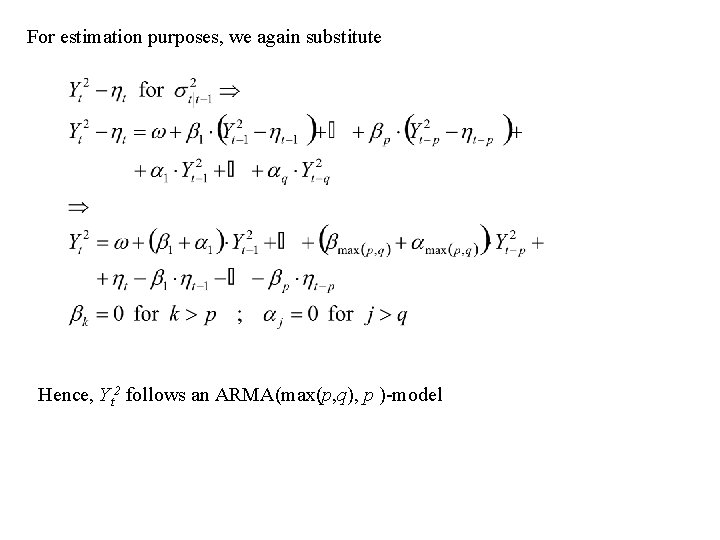

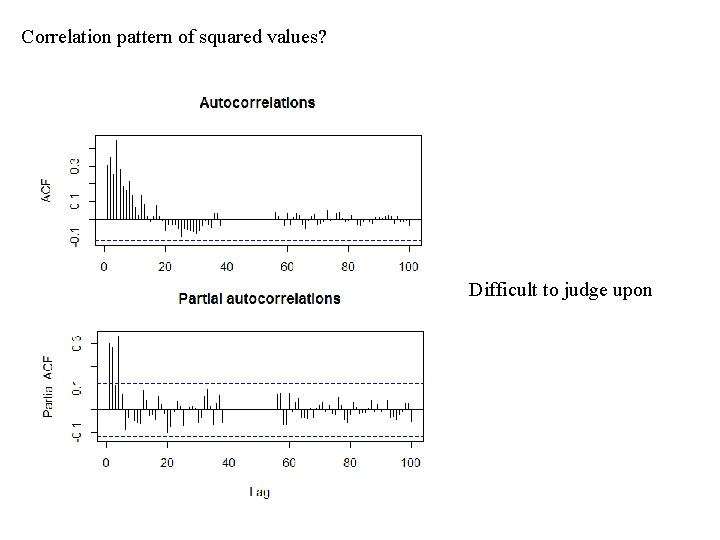

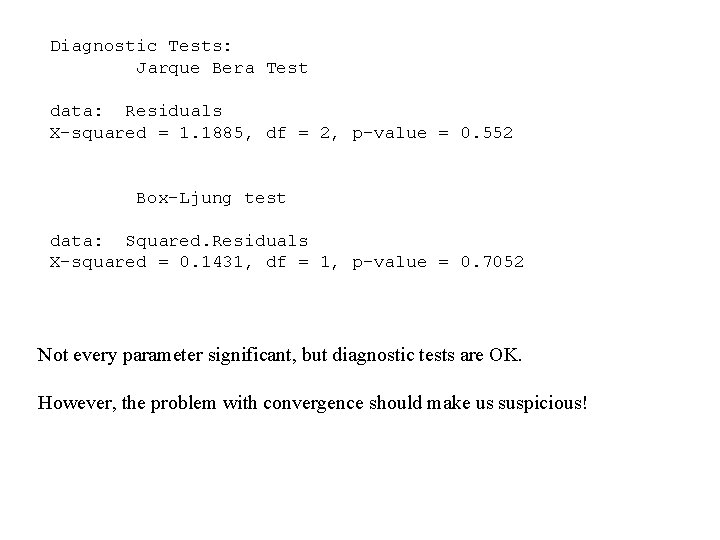

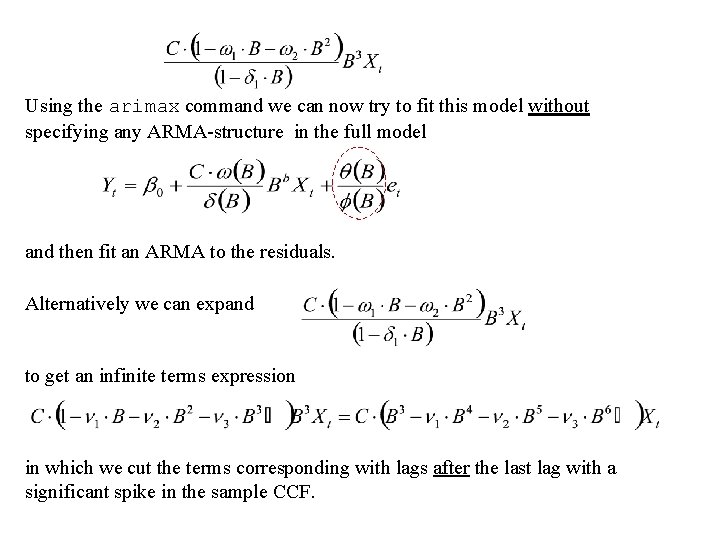

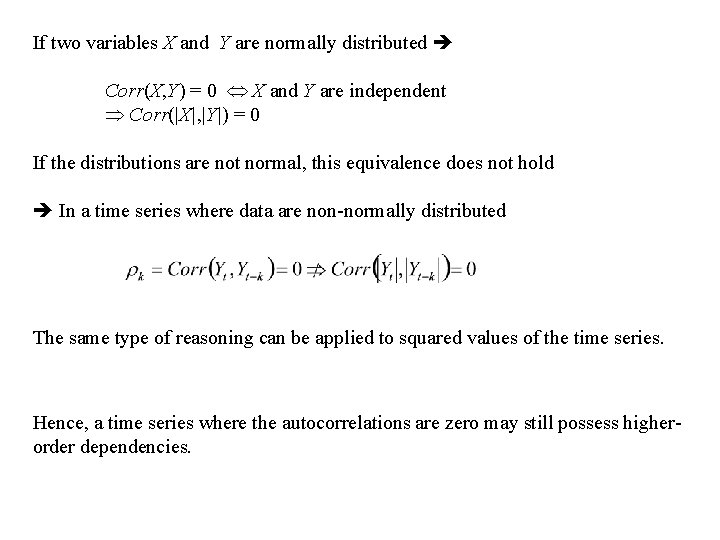

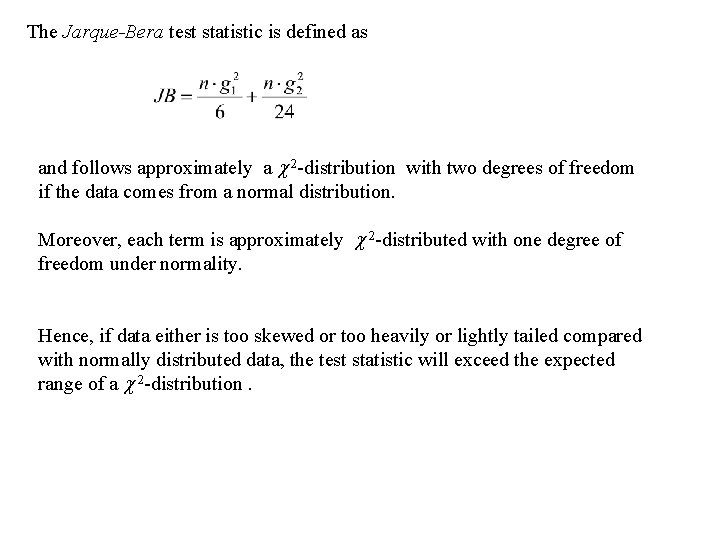

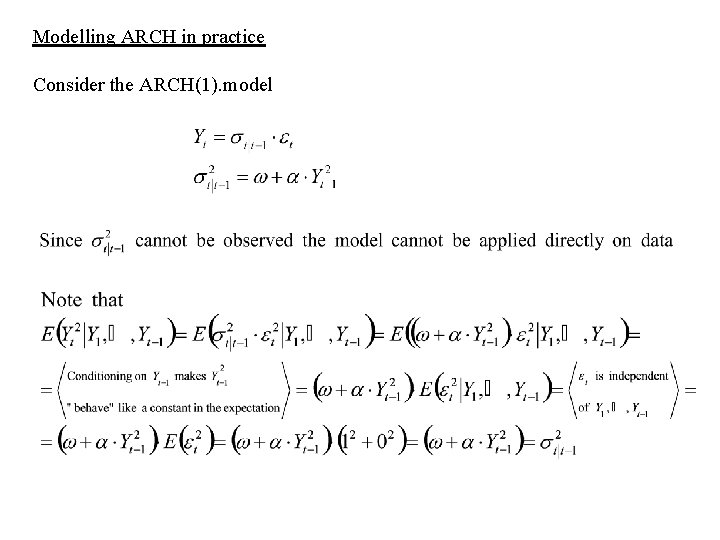

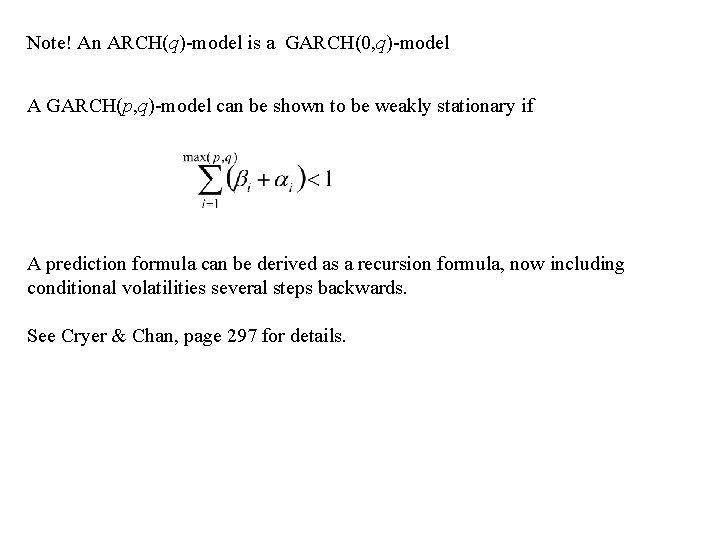

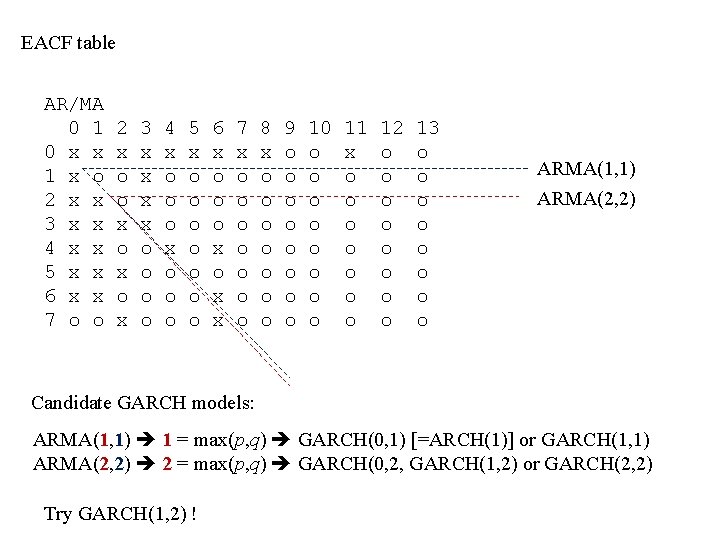

The last significant spike is at k = –8. Hence, we can use OLS (lm) to fit the temporary model and then model its residuals with a suitable ARMA model.

![R code: model1_temp2 <- lm(CO2[1:288] ~ gasrate[4:291] + ... + gasrate[9:296]); summary(model1_temp2)](https://slidetodoc.com/presentation_image/49db6ef41092f408e06328f3000084a7/image-7.jpg)

```r
model1_temp2 <- lm(CO2[1:288] ~ gasrate[4:291] + gasrate[5:292] + gasrate[6:293] +
                     gasrate[7:294] + gasrate[8:295] + gasrate[9:296])
summary(model1_temp2)
```

```
Call:
lm(formula = CO2[1:288] ~ gasrate[4:291] + gasrate[5:292] + gasrate[6:293] +
    gasrate[7:294] + gasrate[8:295] + gasrate[9:296])

Residuals:
    Min      1Q  Median      3Q     Max
-7.1044 -2.1646 -0.0916  2.2088  6.9429

Coefficients:
               Estimate Std. Error t value Pr(>|t|)
(Intercept)     53.3279     0.1780 299.544  < 2e-16 ***
gasrate[4:291]  -2.7983     0.9428  -2.968  0.00325 **
gasrate[5:292]   3.2472     2.0462   1.587  0.11365
gasrate[6:293]  -0.8936     2.3434  -0.381  0.70325
gasrate[7:294]  -0.3074     2.3424  -0.131  0.89570
gasrate[8:295]  -0.6443     2.0453  -0.315  0.75300
gasrate[9:296]   0.3574     0.9427   0.379  0.70491
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

Residual standard error: 3.014 on 281 degrees of freedom
Multiple R-squared:  0.1105,  Adjusted R-squared:  0.09155
F-statistic:  5.82 on 6 and 281 DF,  p-value: 9.824e-06
```
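A temporary regression like this needs each lagged input lined up correctly with the response. One way to make the lag direction explicit is to build the design matrix with `embed()`. The sketch below uses a hypothetical helper, `lagged_design()`, in which column `x_lagl` holds x_{t−l} for response y_t. Under R's `ccf` convention (the lag-k value of `ccf(x, y)` estimates Corr(x[t+k], y[t])), significant spikes at k = –3, ..., –8 correspond to the regressors x_{t−3}, ..., x_{t−8}. The data are simulated, not the gas furnace series.

```r
# Hypothetical helper: data frame with y_t and x_{t-l} for each lag l in `lags`
lagged_design <- function(x, y, lags) {
  stopifnot(length(x) == length(y))
  m <- max(lags)
  X <- embed(x, m + 1)                   # row i: x[i+m], x[i+m-1], ..., x[i]
  X <- X[, lags + 1, drop = FALSE]       # column l+1 holds x lagged l steps
  colnames(X) <- paste0("x_lag", lags)
  data.frame(y = y[(m + 1):length(y)], X)
}

# Check the alignment on toy data: y at time 5 is paired with x[2] and x[1]
d <- lagged_design(1:10, 101:110, lags = 3:4)
head(d, 2)

# Simulated example: y_t = -2 x_{t-3} + noise, regressed on lags 3..8
set.seed(2)
x <- rnorm(300)
y <- c(rep(NA, 3), -2 * x[1:297]) + rnorm(300, sd = 0.5)
fit <- lm(y ~ ., data = lagged_design(x, y, lags = 3:8))
round(coef(fit), 3)
```

The first m = max(lags) observations are lost, just as the index ranges in the `lm` call above drop observations at one end of the series.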

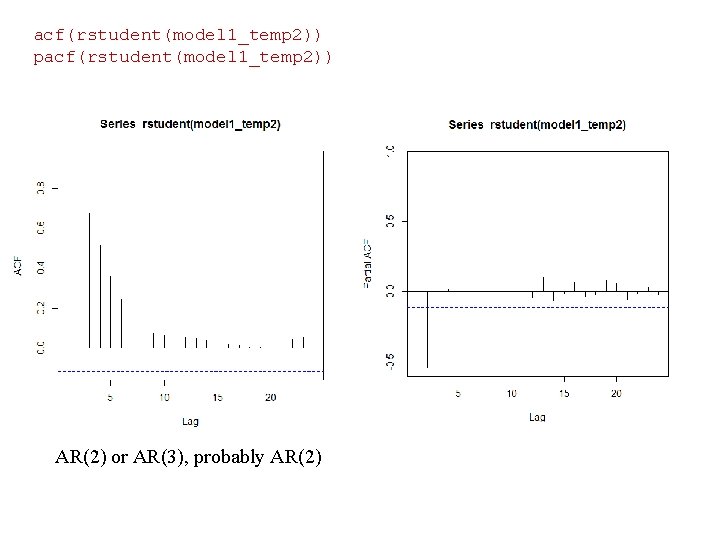

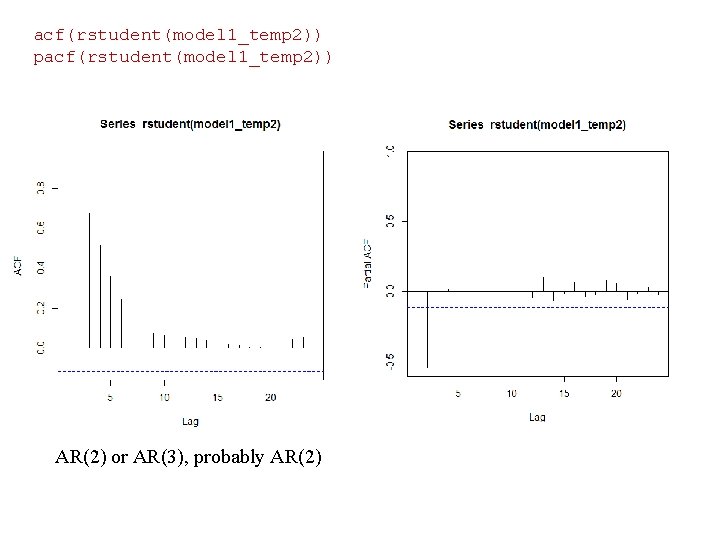

```r
acf(rstudent(model1_temp2))
pacf(rstudent(model1_temp2))
```

The residual ACF and PACF suggest AR(2) or AR(3), probably AR(2).

![R code: model1_gasfurnace <- arima(CO2[1:288], order = c(2, 0, 0), xreg = data.frame(gasrate_3 = gasrate[4:291], gasrate_4 = gasrate[5:292], ...))](https://slidetodoc.com/presentation_image/49db6ef41092f408e06328f3000084a7/image-9.jpg)

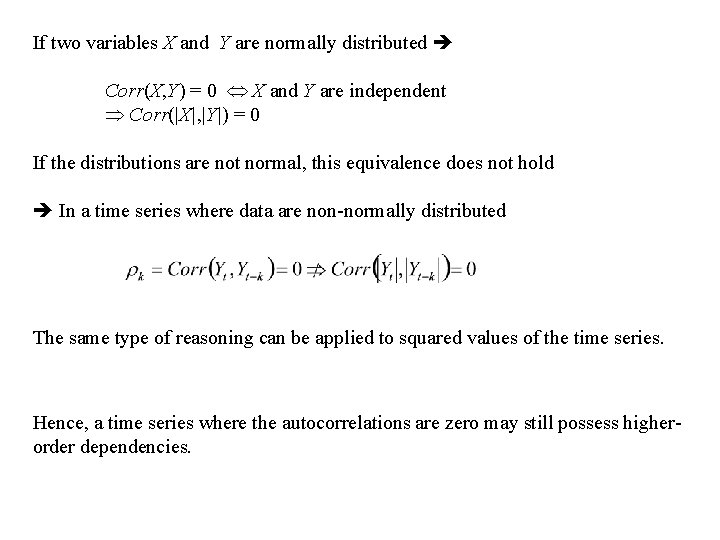

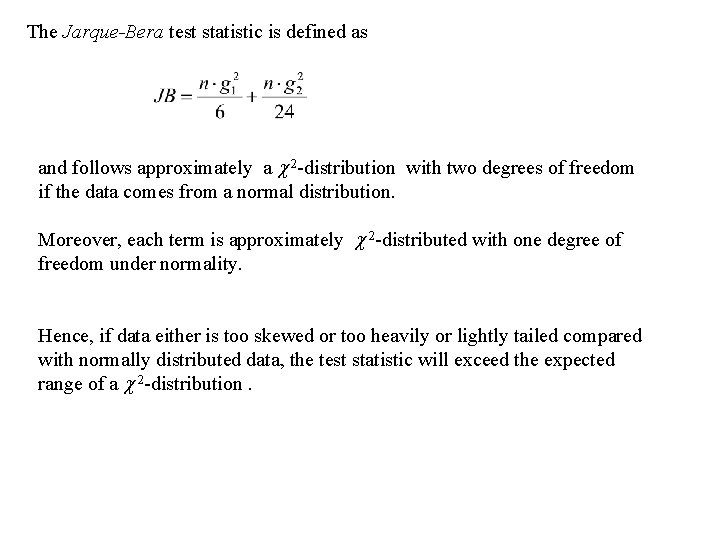

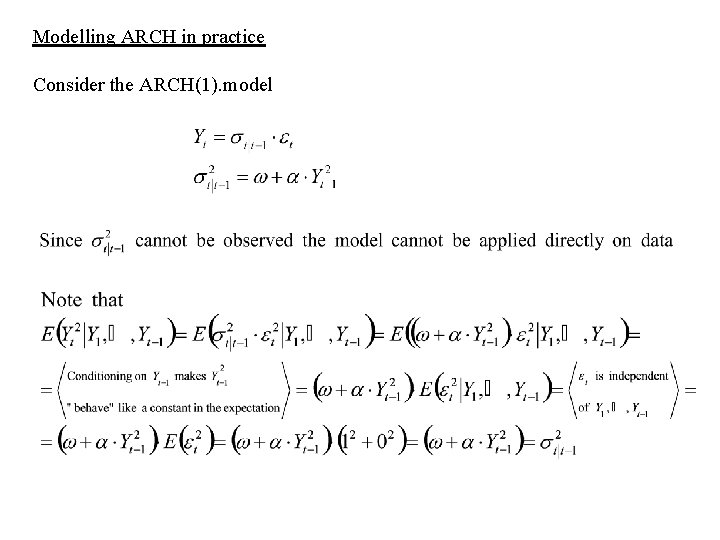

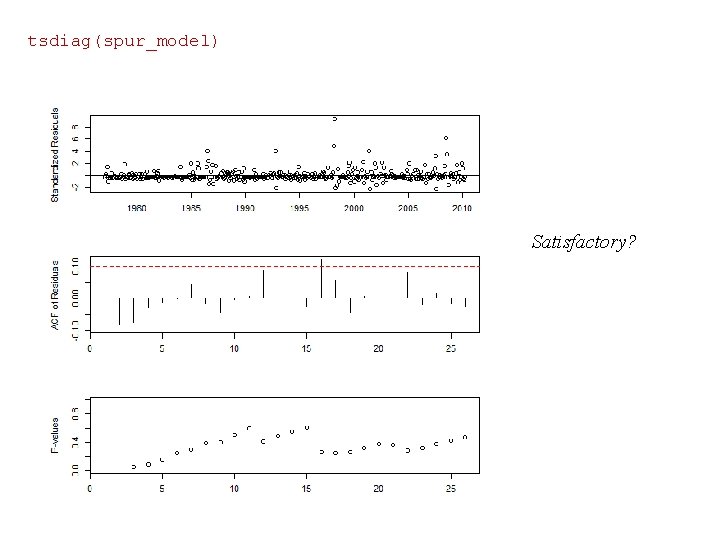

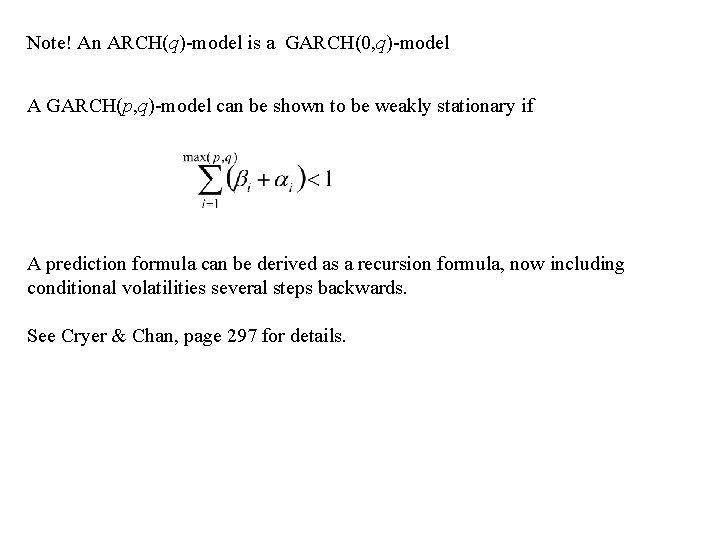

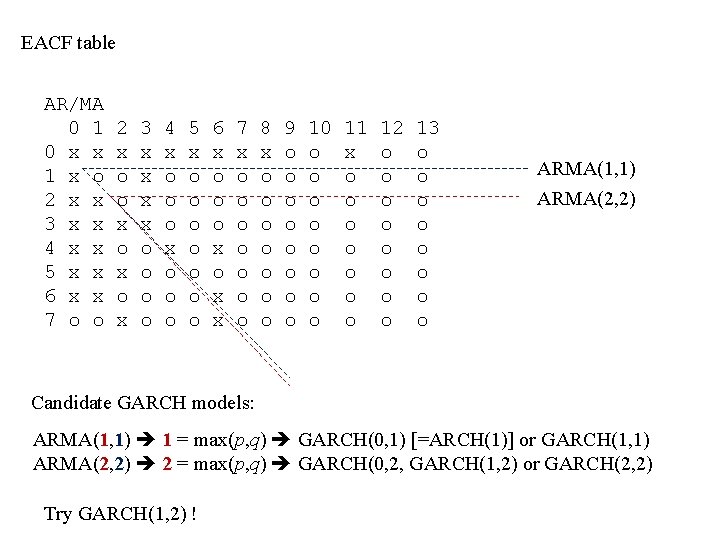

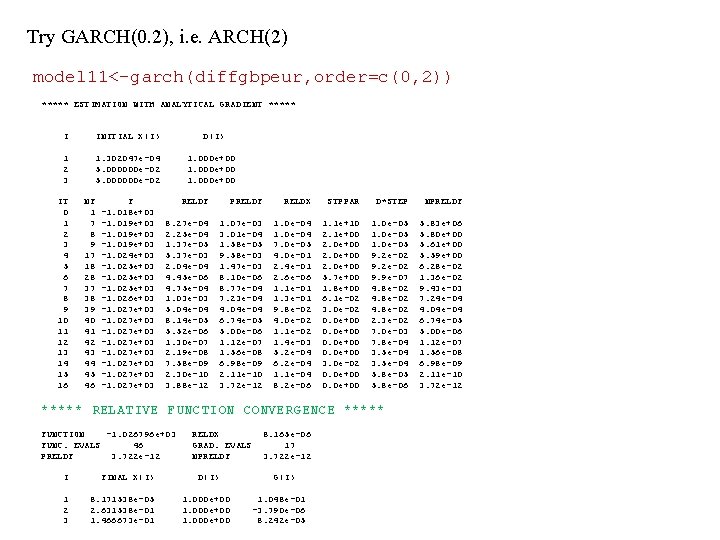

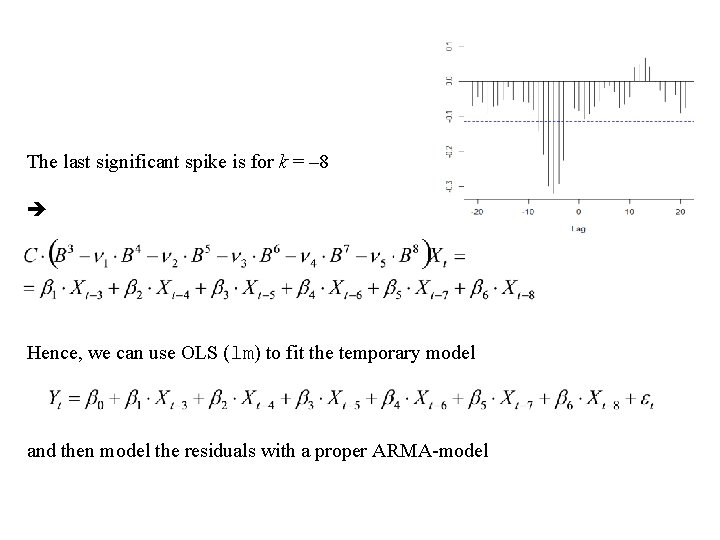

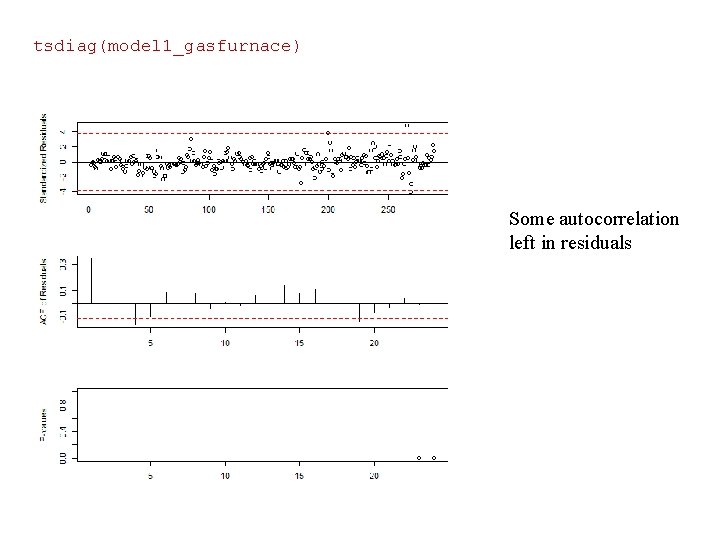

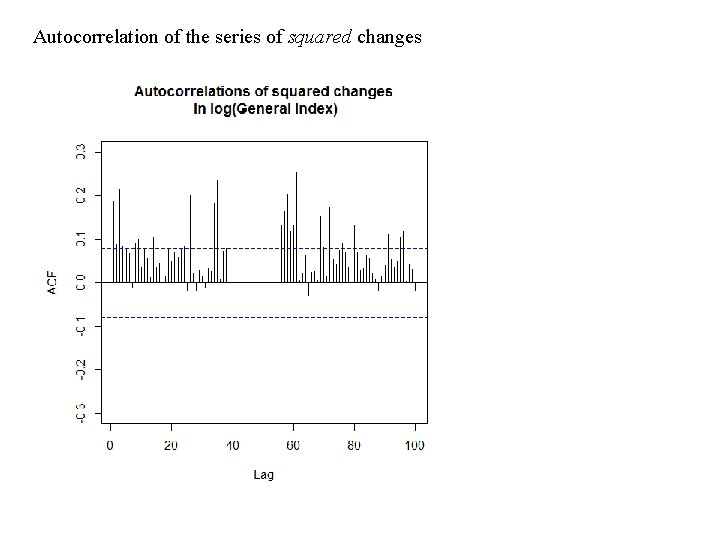

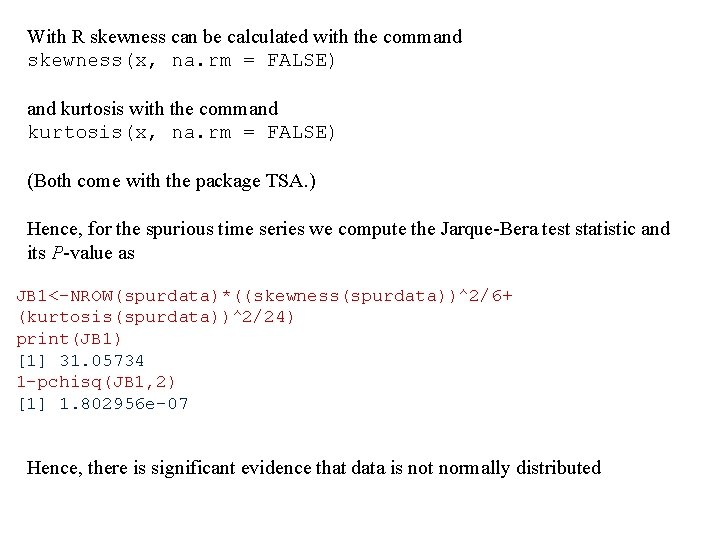

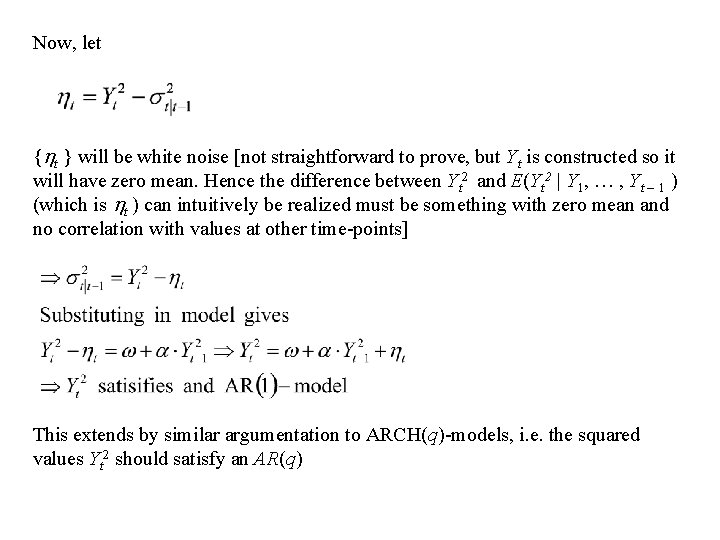

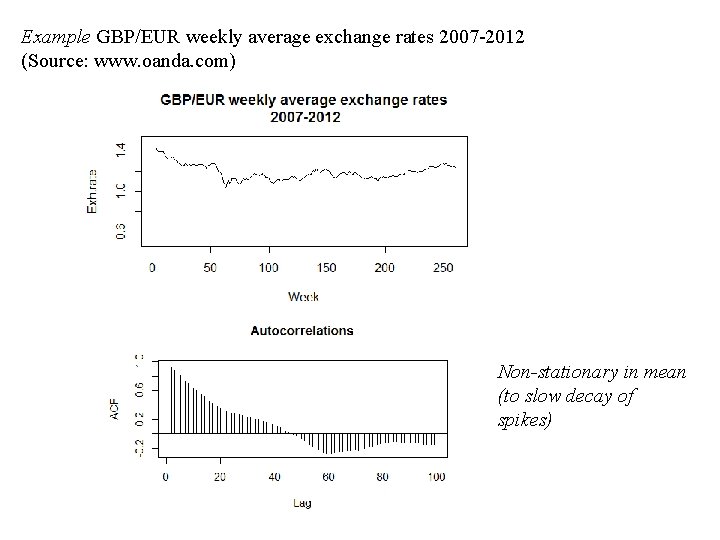

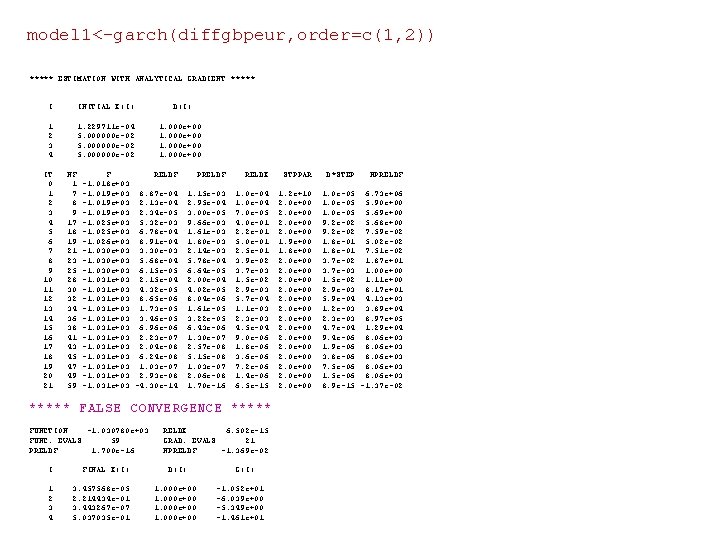

```r
model1_gasfurnace <- arima(CO2[1:288], order = c(2, 0, 0),
  xreg = data.frame(gasrate_3 = gasrate[4:291], gasrate_4 = gasrate[5:292],
                    gasrate_5 = gasrate[6:293], gasrate_6 = gasrate[7:294],
                    gasrate_7 = gasrate[8:295], gasrate_8 = gasrate[9:296]))
print(model1_gasfurnace)
```

Note! When only xreg is used, we can stick to the command arima.

```
Call:
arimax(x = CO2[1:288], order = c(2, 0, 0), xreg = data.frame(gasrate_3 = gasrate[4:291],
    gasrate_4 = gasrate[5:292], gasrate_5 = gasrate[6:293], gasrate_6 = gasrate[7:294],
    gasrate_7 = gasrate[8:295], gasrate_8 = gasrate[9:296]))

Coefficients:
         ar1      ar2  intercept  gasrate_3  gasrate_4  gasrate_5
      1.8314  -0.8977    53.3845    -0.3382    -0.2347    -0.1517
s.e.  0.0259   0.0263     0.3257     0.1151     0.1145     0.1111
      gasrate_6  gasrate_7  gasrate_8
        -0.3673    -0.2474    -0.3395
s.e.     0.1109     0.1139     0.1119

sigma^2 estimated as 0.1341:  log likelihood = -122.35,  aic = 262.71
```

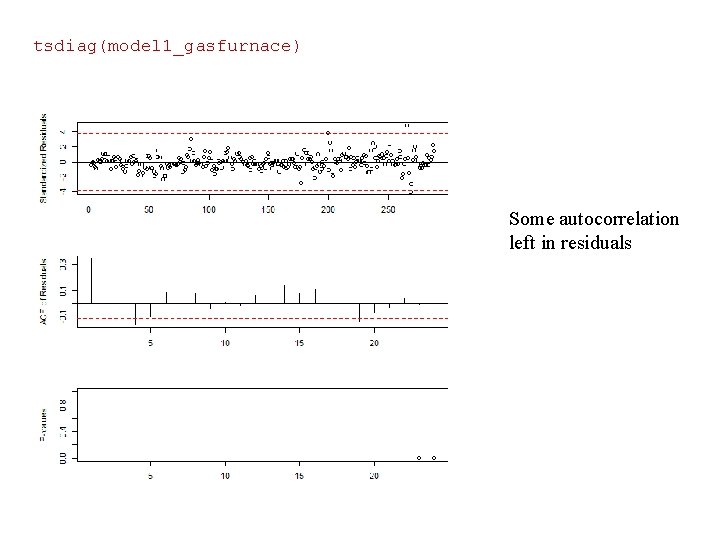

```r
tsdiag(model1_gasfurnace)
```

Some autocorrelation is left in the residuals.

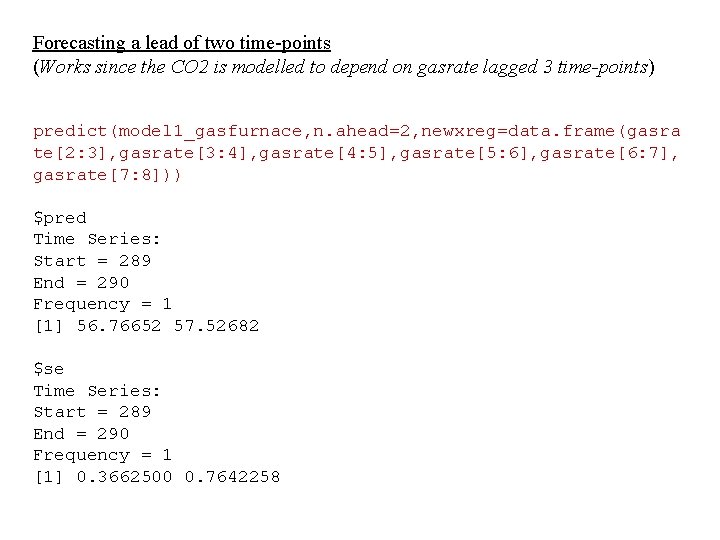

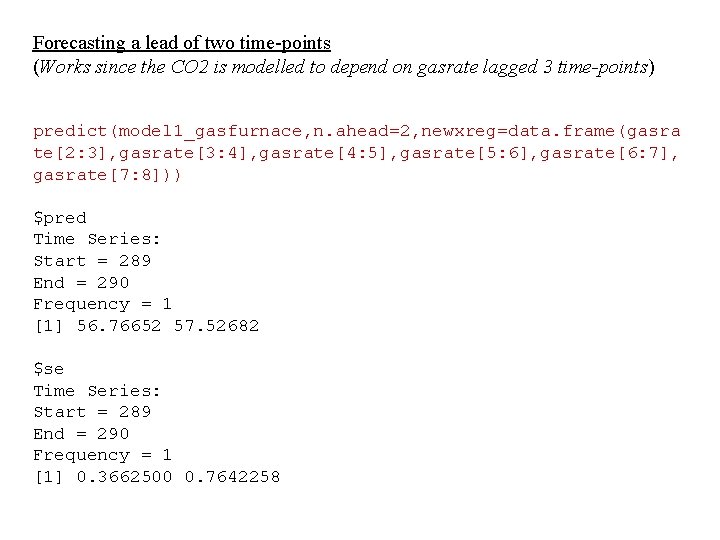

Forecasting a lead of two time points (this works since CO2 is modelled to depend on gasrate lagged 3 time points):

```r
predict(model1_gasfurnace, n.ahead = 2,
        newxreg = data.frame(gasrate[2:3], gasrate[3:4], gasrate[4:5],
                             gasrate[5:6], gasrate[6:7], gasrate[7:8]))
```

```
$pred
Time Series:
Start = 289
End = 290
Frequency = 1
[1] 56.76652 57.52682

$se
Time Series:
Start = 289
End = 290
Frequency = 1
[1] 0.3662500 0.7642258
```

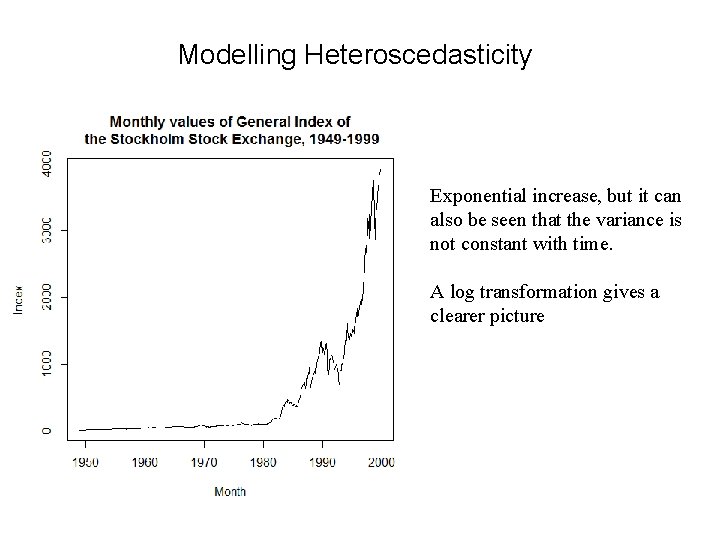

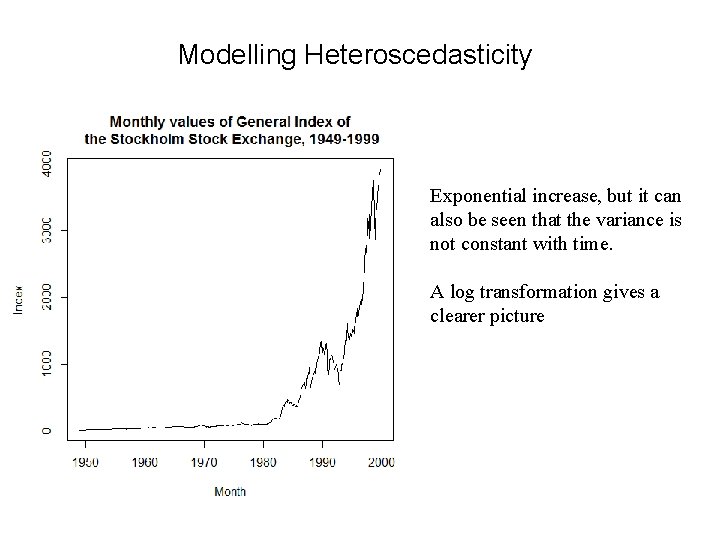

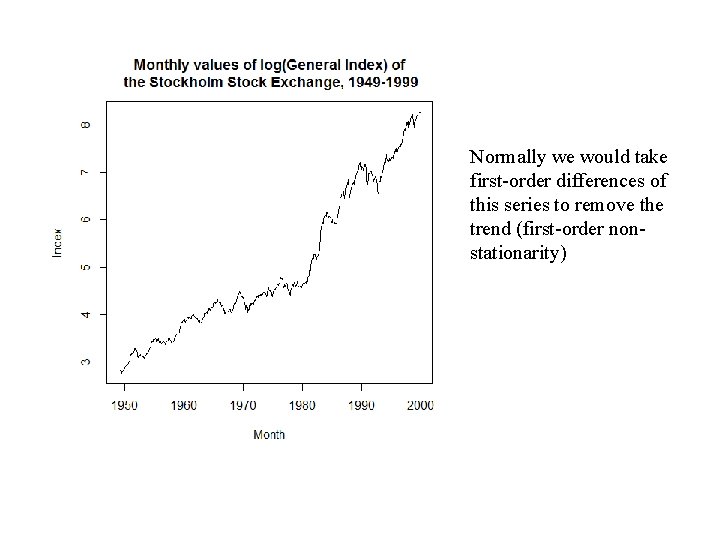

Modelling heteroscedasticity. The series shows exponential increase, but it can also be seen that the variance is not constant over time. A log transformation gives a clearer picture.

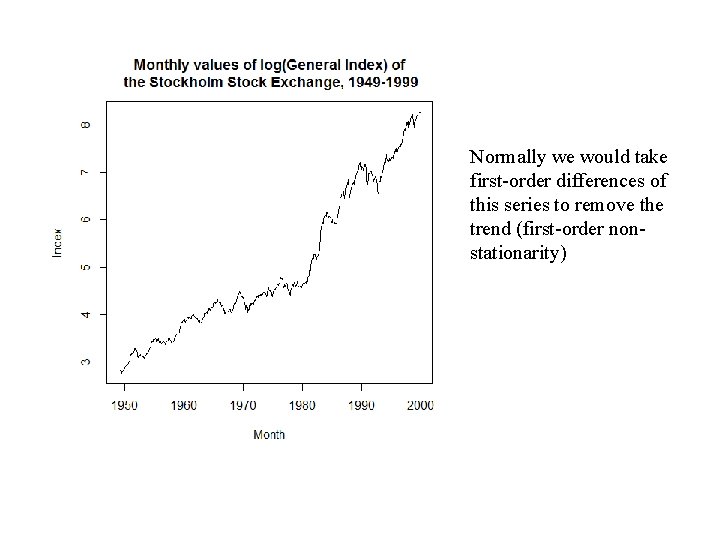

Normally we would take first-order differences of this series to remove the trend (first-order nonstationarity)
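For an exponentially growing series, taking logs and then first-order differences gives (approximately) the relative change per period. A tiny base-R check, using hypothetical index values that grow by exactly 10% per period:

```r
index <- c(100, 110, 121, 133.1)          # hypothetical values, +10% each period
difflog <- diff(log(index))               # changes in log(index)
print(difflog)                            # each equals log(1.1), about 0.0953
print(exp(difflog) - 1)                   # exact relative changes: 0.1 each
```

For small changes, log(1 + x) ≈ x, which is why changes in log values are read as percentage changes.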

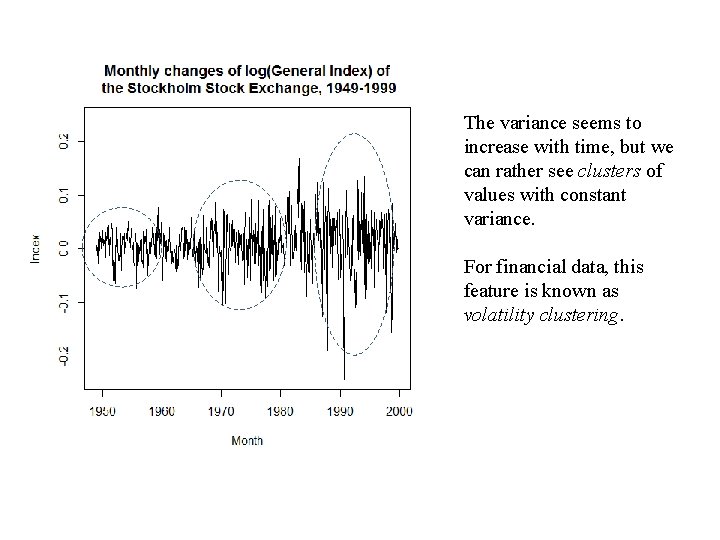

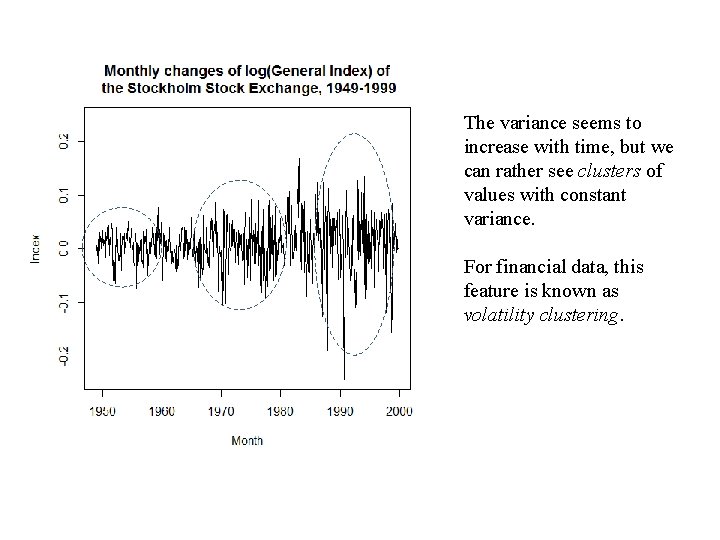

At first sight the variance seems to increase with time, but on closer inspection we see clusters of values with roughly constant variance. In financial data, this feature is known as volatility clustering.

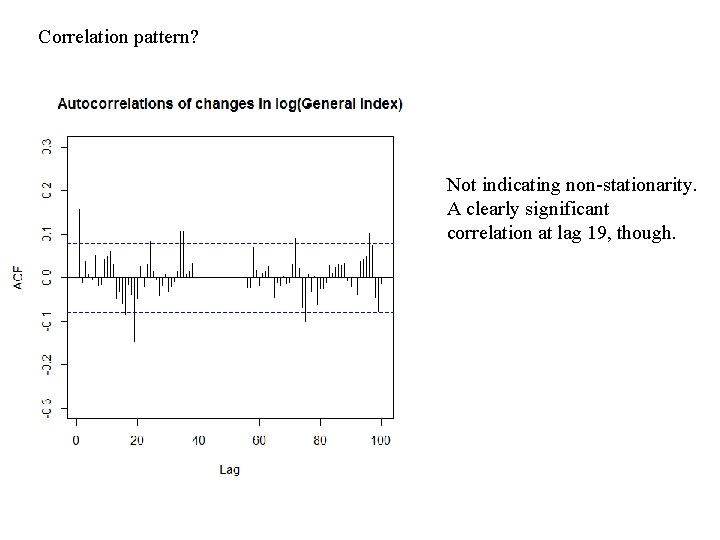

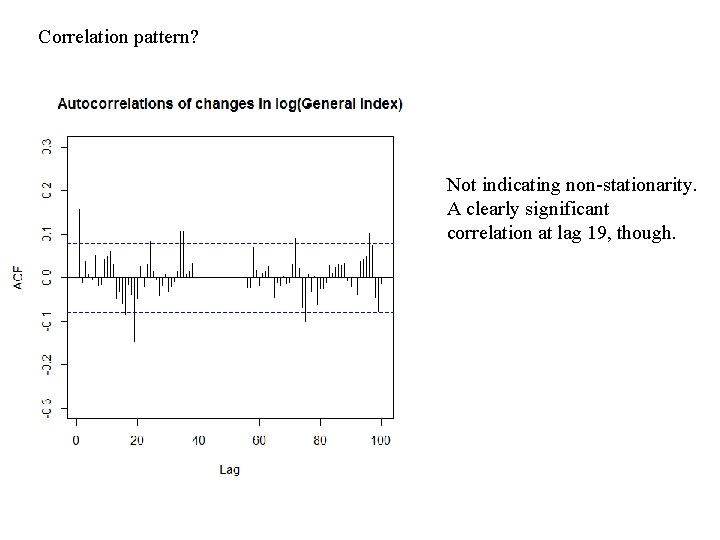

Correlation pattern? Not indicating non-stationarity. A clearly significant correlation at lag 19, though.

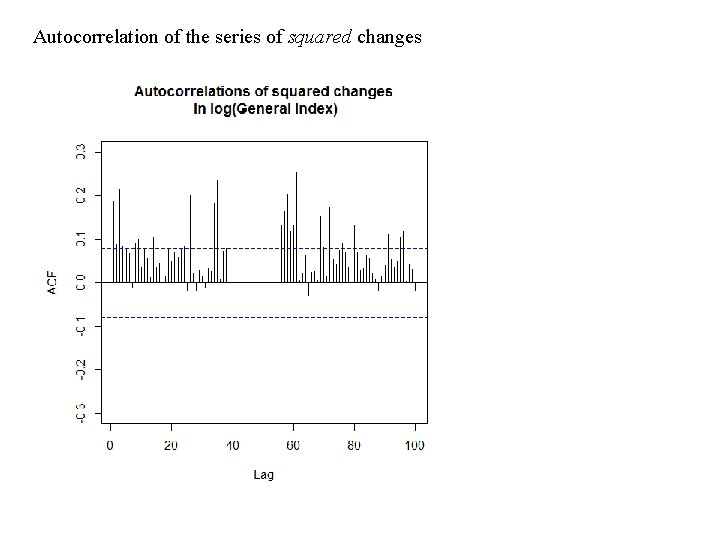

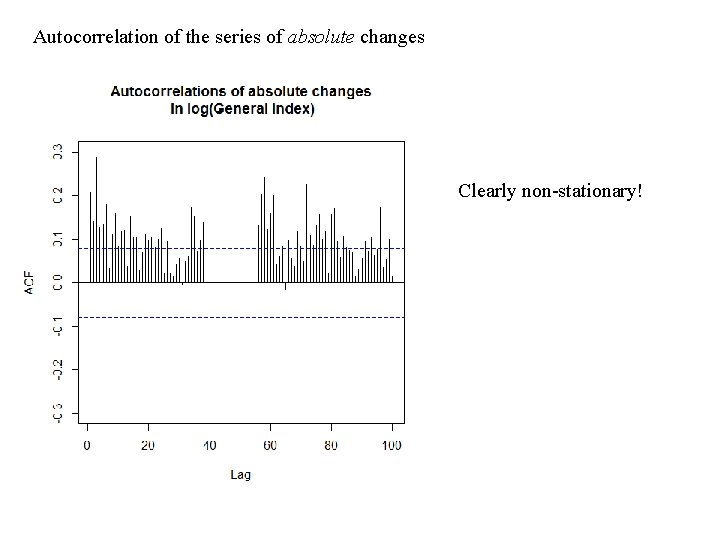

Autocorrelation of the series of absolute changes: clearly non-stationary!

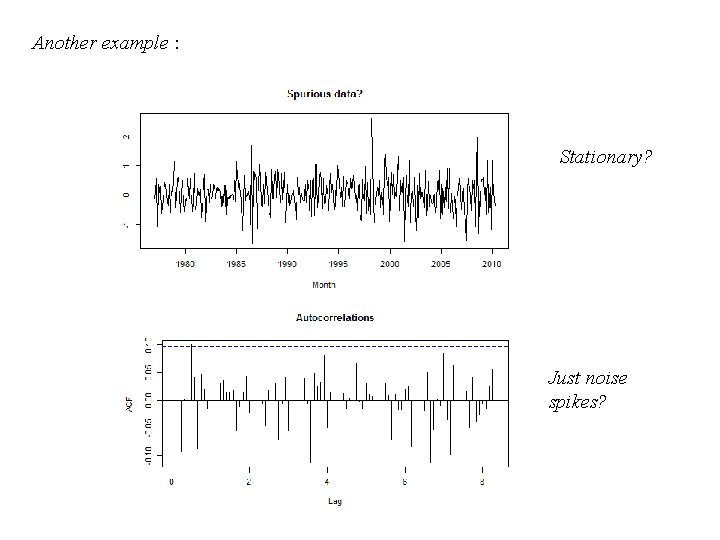

If two variables X and Y are jointly normally distributed, then Corr(X, Y) = 0 ⇔ X and Y are independent ⇒ Corr(|X|, |Y|) = 0. If the distributions are not normal, this equivalence does not hold. In a time series where the data are non-normally distributed, the absolute values |Y_t| may therefore be autocorrelated even though the values Y_t themselves are not. The same type of reasoning can be applied to squared values of the time series. Hence, a time series whose autocorrelations are zero may still possess higher-order dependencies.
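A short simulation makes the point concrete. The sketch below generates an ARCH(1) process, a model introduced later in these slides, with hypothetical parameters ω = 0.2 and α = 0.25. The values themselves are uncorrelated, but their squares are not.

```r
# Simulate an ARCH(1) process: Y_t = sigma_t * eps_t, sigma_t^2 = omega + alpha * Y_{t-1}^2
simulate_arch1 <- function(n, omega, alpha, burn = 500) {
  eps <- rnorm(n + burn)                  # iid N(0, 1) innovations
  y <- numeric(n + burn)
  s2 <- omega / (1 - alpha)               # start at the unconditional variance
  for (t in seq_len(n + burn)) {
    if (t > 1) s2 <- omega + alpha * y[t - 1]^2
    y[t] <- sqrt(s2) * eps[t]
  }
  y[-seq_len(burn)]                       # discard burn-in
}

set.seed(123)
y <- simulate_arch1(5000, omega = 0.2, alpha = 0.25)  # hypothetical parameters
band <- 2 / sqrt(length(y))                          # approximate 95% white-noise band
r_y  <- acf(y,   lag.max = 5, plot = FALSE)$acf[-1]  # lags 1..5 of Y_t
r_y2 <- acf(y^2, lag.max = 5, plot = FALSE)$acf[-1]  # lags 1..5 of Y_t^2
round(rbind(r_y, r_y2), 3)
```

The first row should stay inside the ±band limits, while the lag-1 autocorrelation of the squares lies well outside them.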

Autocorrelation of the series of squared changes

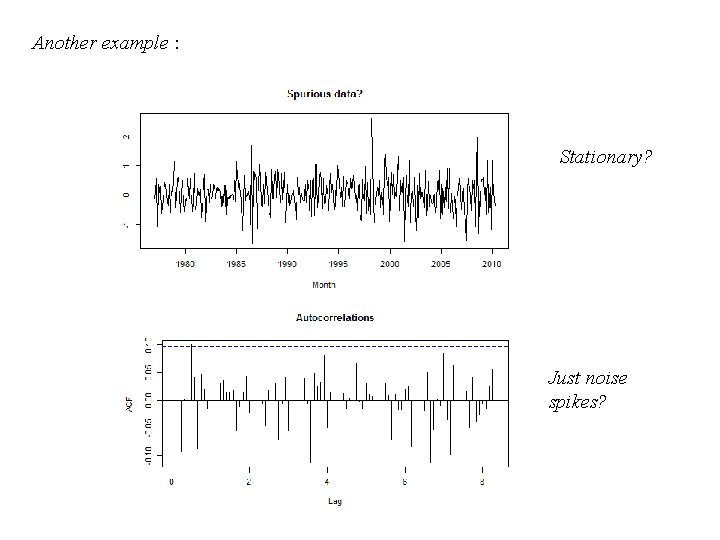

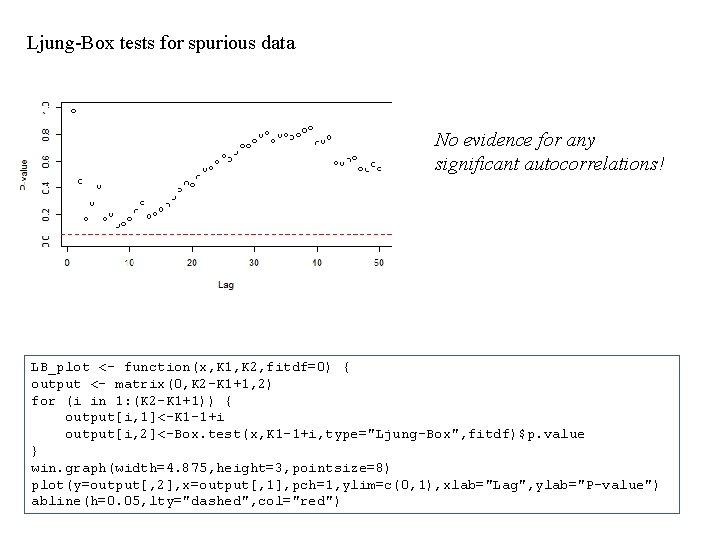

Another example: stationary? Just noise spikes?

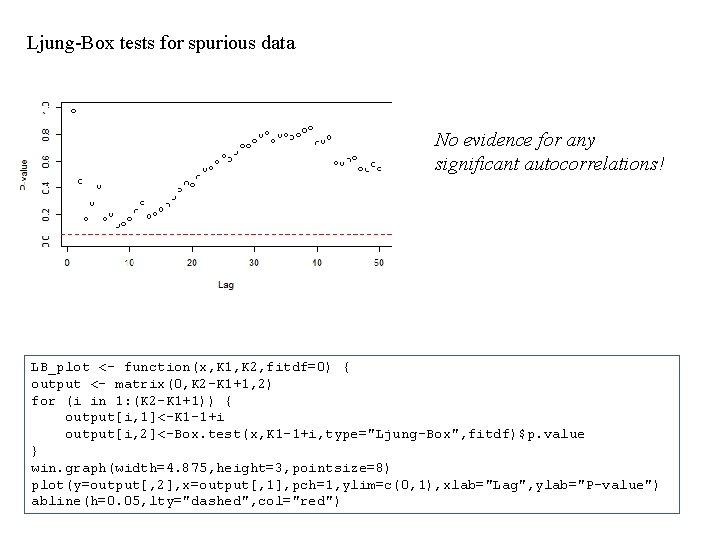

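Ljung-Box tests for the spurious data: no evidence of any significant autocorrelations!

```r
# Plot Ljung-Box P-values for lags K1, ..., K2
LB_plot <- function(x, K1, K2, fitdf = 0) {
  output <- matrix(0, K2 - K1 + 1, 2)
  for (i in 1:(K2 - K1 + 1)) {
    output[i, 1] <- K1 - 1 + i
    output[i, 2] <- Box.test(x, lag = K1 - 1 + i, type = "Ljung-Box",
                             fitdf = fitdf)$p.value
  }
  # win.graph() exists only on Windows; elsewhere the current device is used
  if (.Platform$OS.type == "windows") win.graph(width = 4.875, height = 3, pointsize = 8)
  plot(y = output[, 2], x = output[, 1], pch = 1, ylim = c(0, 1),
       xlab = "Lag", ylab = "P-value")
  abline(h = 0.05, lty = "dashed", col = "red")
  invisible(output)
}
```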

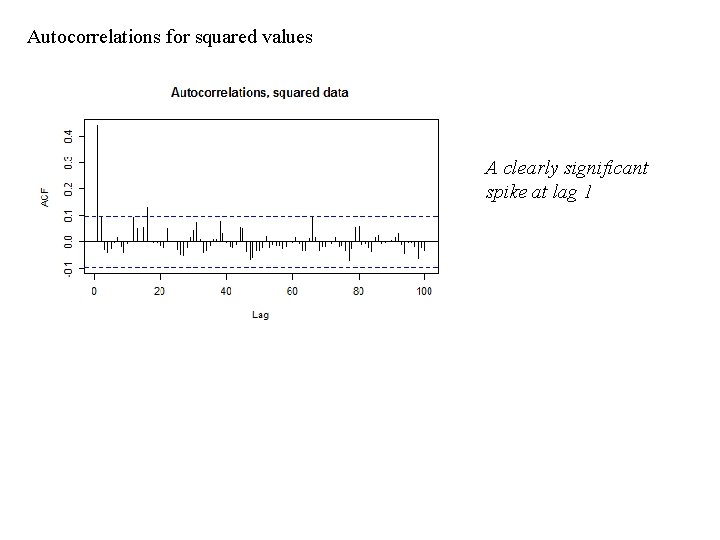

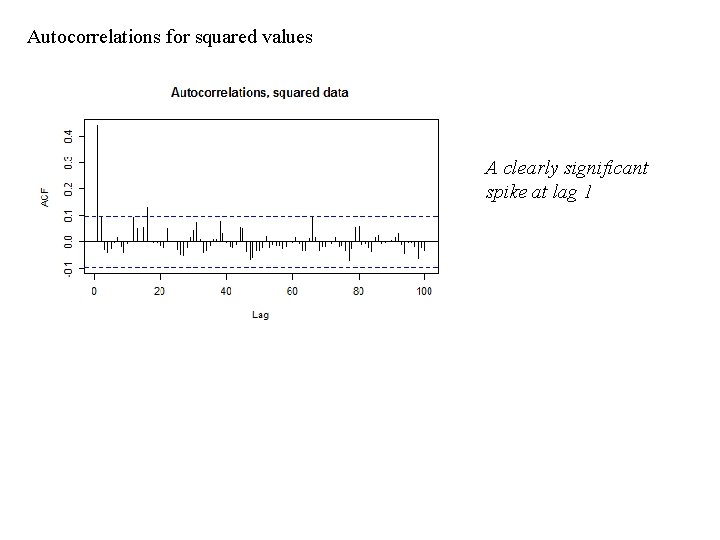

Autocorrelations for squared values: a clearly significant spike at lag 1.

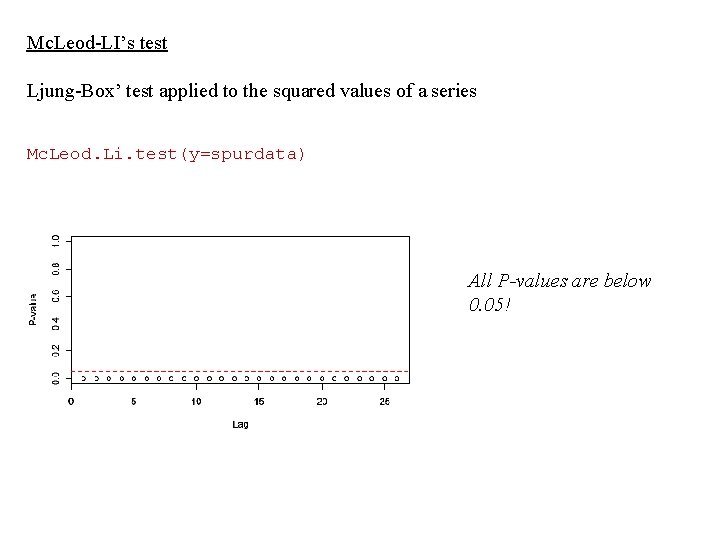

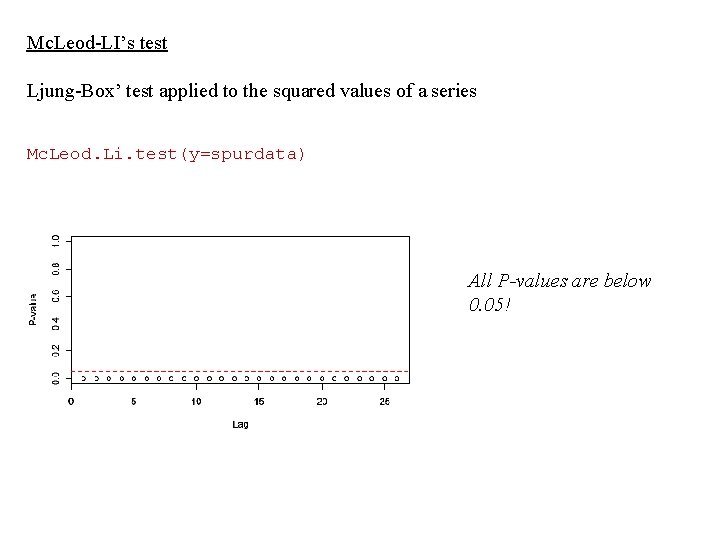

McLeod-Li's test: the Ljung-Box test applied to the squared values of a series.

```r
McLeod.Li.test(y = spurdata)
```

All P-values are below 0.05!
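The idea behind McLeod-Li's test can be sketched with base R's `Box.test()` applied to the squared series at lags 1, 2, ..., K. This is a hypothetical helper, not TSA's `McLeod.Li.test()`; that function may differ in details (for example, it can be applied to the residuals of a fitted model). The usage example runs it on a simulated ARCH(1) series with hypothetical parameters ω = 0.2 and α = 0.25.

```r
# McLeod-Li idea: Ljung-Box test applied to the squared series, for lags 1..max_lag
mcleod_li_pvalues <- function(x, max_lag = 12) {
  sapply(seq_len(max_lag), function(k)
    Box.test(x^2, lag = k, type = "Ljung-Box")$p.value)
}

# Usage on a simulated ARCH(1) series (hypothetical omega = 0.2, alpha = 0.25)
set.seed(42)
n <- 2000
eps <- rnorm(n)
y <- numeric(n)
y[1] <- sqrt(0.2 / 0.75) * eps[1]
for (t in 2:n) y[t] <- sqrt(0.2 + 0.25 * y[t - 1]^2) * eps[t]
p <- mcleod_li_pvalues(y, max_lag = 12)
round(p, 4)
```

P-values below 0.05 indicate autocorrelation in the squared values, i.e. conditional heteroscedasticity.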

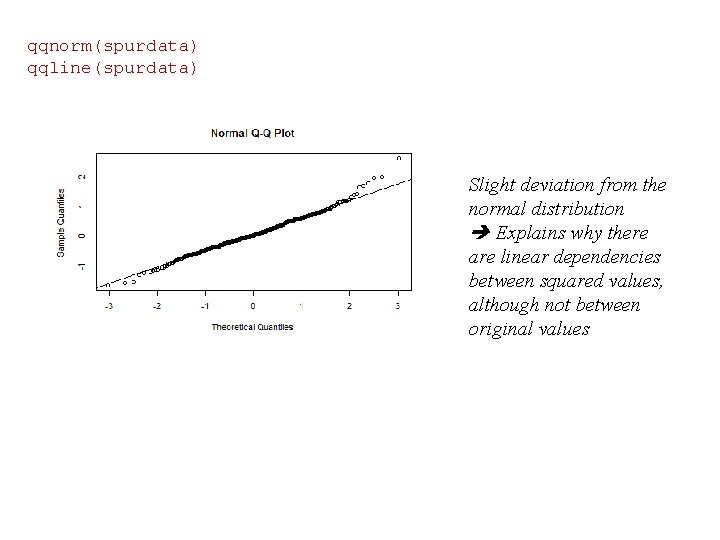

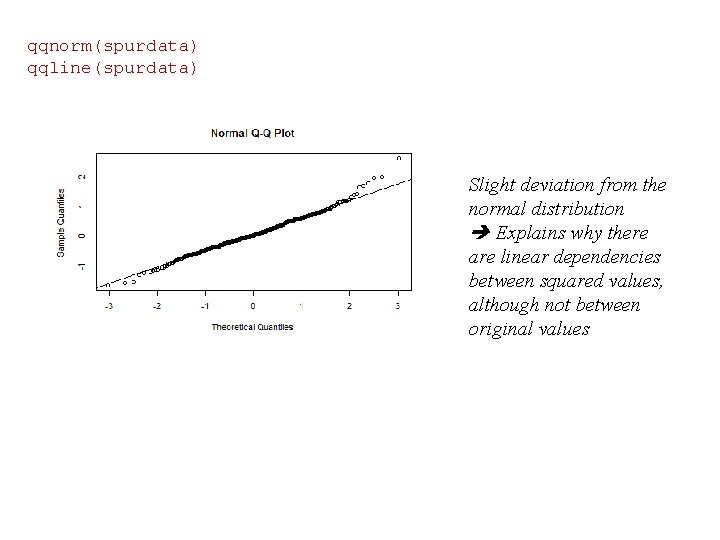

```r
qqnorm(spurdata)
qqline(spurdata)
```

Slight deviation from the normal distribution. This explains why there can be linear dependencies between the squared values although there are none between the original values.

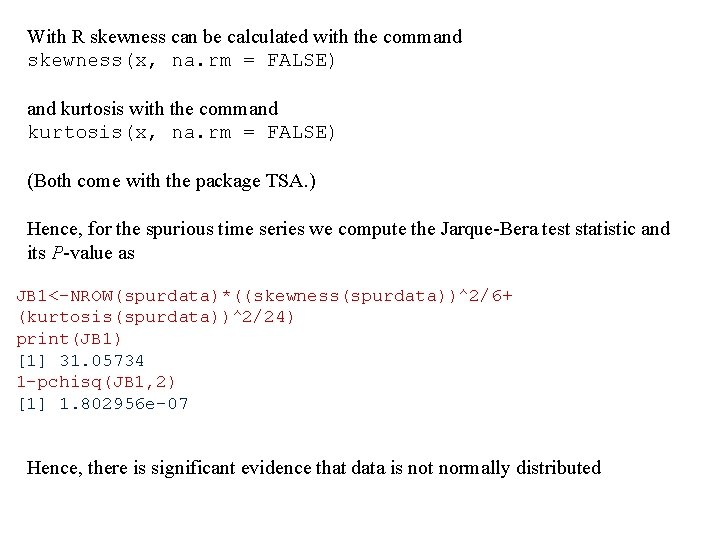

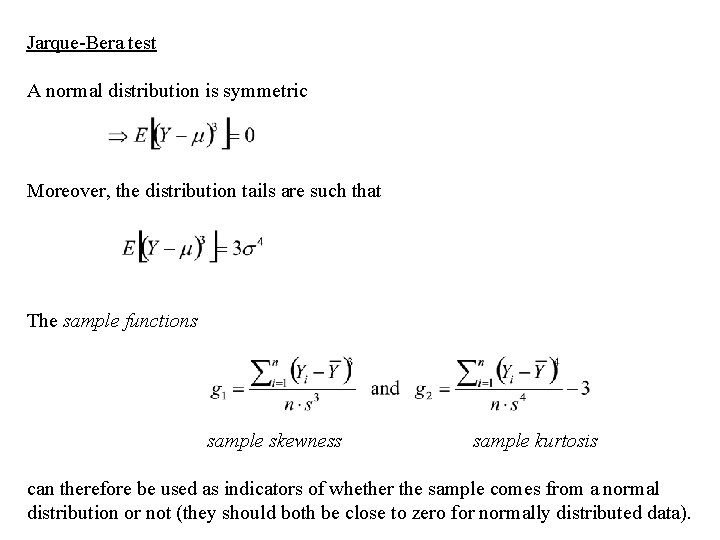

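Jarque-Bera test. A normal distribution is symmetric, i.e. $E[(Y-\mu)^3] = 0$. Moreover, its tails are such that $E[(Y-\mu)^4]/\sigma^4 = 3$. The sample functions

sample skewness: $g_1 = \dfrac{\sum_{t=1}^{n}(Y_t-\bar{Y})^3}{n\,\hat{\sigma}^3}$

sample kurtosis: $g_2 = \dfrac{\sum_{t=1}^{n}(Y_t-\bar{Y})^4}{n\,\hat{\sigma}^4} - 3$

where $\hat{\sigma}$ is the sample standard deviation, can therefore be used as indicators of whether the sample comes from a normal distribution or not (both should be close to zero for normally distributed data).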

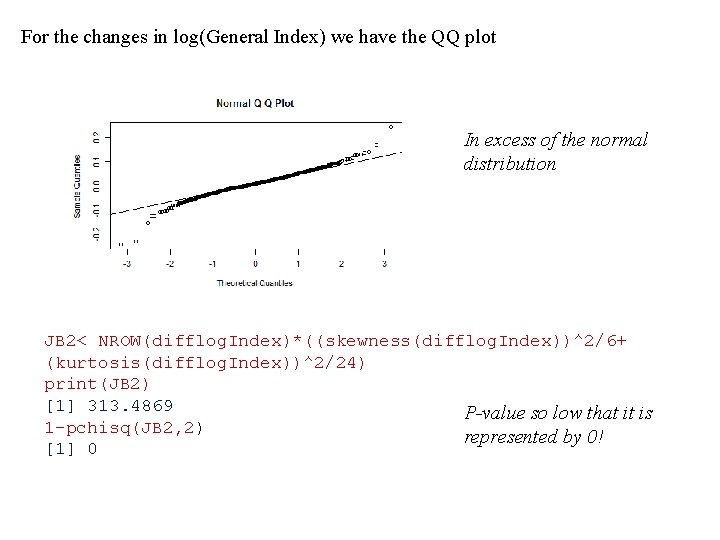

The Jarque-Bera test statistic is defined as

$$JB = \frac{n\,g_1^2}{6} + \frac{n\,g_2^2}{24}$$

and follows approximately a $\chi^2$ distribution with two degrees of freedom if the data come from a normal distribution. Moreover, each term is approximately $\chi^2$-distributed with one degree of freedom under normality. Hence, if the data are either too skewed or too heavy- or light-tailed compared with normally distributed data, the test statistic will exceed the expected range of a $\chi^2_2$ distribution.

With R, skewness can be calculated with the command `skewness(x, na.rm = FALSE)` and kurtosis with the command `kurtosis(x, na.rm = FALSE)` (both come with the package TSA). Hence, for the spurious time series we compute the Jarque-Bera test statistic and its P-value as

```r
JB1 <- NROW(spurdata) * ((skewness(spurdata))^2 / 6 + (kurtosis(spurdata))^2 / 24)
print(JB1)
# [1] 31.05734
1 - pchisq(JB1, 2)
# [1] 1.802956e-07
```

Hence, there is significant evidence that the data are not normally distributed.
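The statistic can also be computed without TSA. The sketch below uses the moment-based $g_1$ and $g_2$ defined above, so its values may differ slightly from TSA's `skewness()`/`kurtosis()` if those use other estimator definitions; the helper name is hypothetical. For two degrees of freedom the P-value is exactly exp(−JB/2), and computing it with `lower.tail = FALSE` avoids the rounding in `1 - pchisq(...)` that makes a very small P-value print as 0.

```r
# Jarque-Bera statistic from moment-based sample skewness and excess kurtosis.
jarque_bera <- function(x) {
  x <- x[!is.na(x)]
  n <- length(x)
  d <- x - mean(x)
  m2 <- mean(d^2)
  g1 <- mean(d^3) / m2^1.5               # sample skewness
  g2 <- mean(d^4) / m2^2 - 3             # sample excess kurtosis
  stat <- n * (g1^2 / 6 + g2^2 / 24)
  # upper-tail probability computed directly, avoiding 1 - pchisq(...) rounding to 0
  c(statistic = stat, p.value = pchisq(stat, df = 2, lower.tail = FALSE))
}

jarque_bera(c(-1, 1))                    # toy input: statistic 1/3
pchisq(31.05734, df = 2, lower.tail = FALSE)   # same P-value as for JB1 above
pchisq(313.4869, df = 2, lower.tail = FALSE)   # tiny but positive
```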

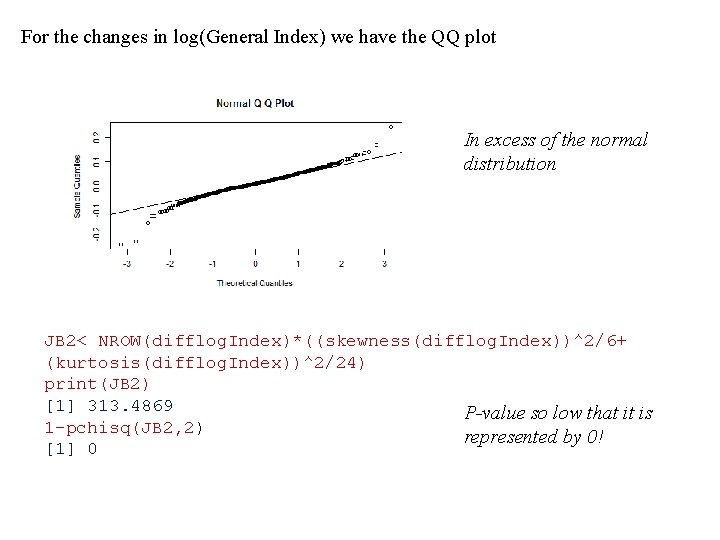

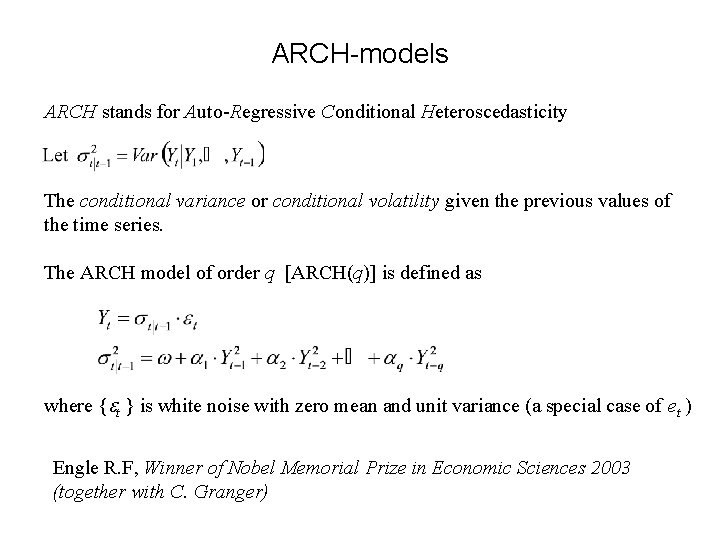

For the changes in log(General Index), the QQ plot shows tails in excess of those of the normal distribution.

```r
JB2 <- NROW(difflog.Index) * ((skewness(difflog.Index))^2 / 6 + (kurtosis(difflog.Index))^2 / 24)
print(JB2)
# [1] 313.4869
1 - pchisq(JB2, 2)
# [1] 0
```

The P-value is so low that it is represented by 0!

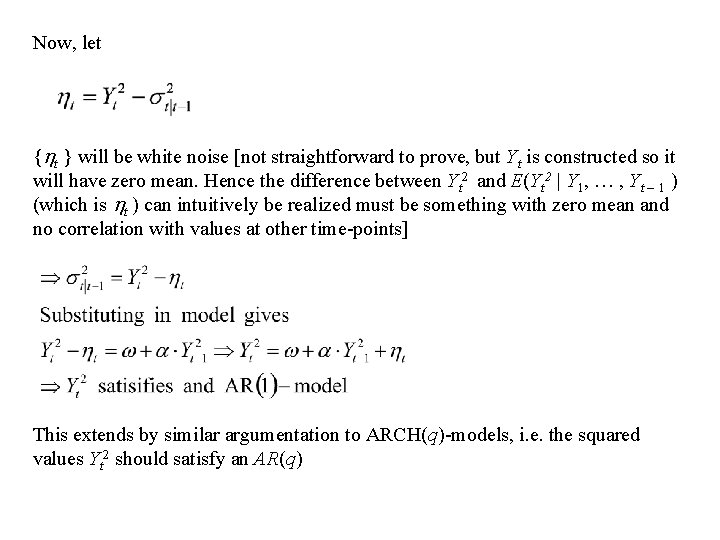

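ARCH models. ARCH stands for AutoRegressive Conditional Heteroscedasticity. The conditional variance, or conditional volatility, $\sigma^2_{t|t-1} = \operatorname{Var}(Y_t \mid Y_1, \dots, Y_{t-1})$ is the variance of $Y_t$ given the previous values of the time series. The ARCH model of order q [ARCH(q)] is defined as

$$Y_t = \sigma_{t|t-1}\,\varepsilon_t, \qquad \sigma^2_{t|t-1} = \omega + \alpha_1 Y_{t-1}^2 + \dots + \alpha_q Y_{t-q}^2,$$

where $\{\varepsilon_t\}$ is white noise with zero mean and unit variance (a special case of $e_t$). ARCH models were introduced by R. F. Engle, winner of the Nobel Memorial Prize in Economic Sciences 2003 (together with C. Granger).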

Modelling ARCH in practice. Consider the ARCH(1) model $Y_t = \sigma_{t|t-1}\varepsilon_t$, $\sigma^2_{t|t-1} = \omega + \alpha Y_{t-1}^2$.

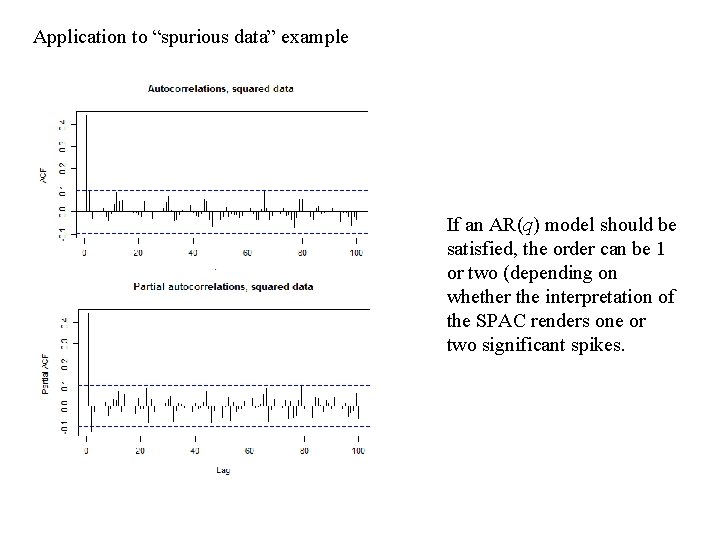

Now, let $\eta_t = Y_t^2 - \sigma^2_{t|t-1}$, so that $Y_t^2 = \omega + \alpha Y_{t-1}^2 + \eta_t$. Then $\{\eta_t\}$ will be white noise. [This is not straightforward to prove, but $Y_t$ is constructed so that it has zero mean. Hence the difference between $Y_t^2$ and $E(Y_t^2 \mid Y_1, \dots, Y_{t-1})$, which is $\eta_t$, can intuitively be seen to be something with zero mean and no correlation with values at other time points.] By similar argumentation this extends to ARCH(q) models, i.e. the squared values $Y_t^2$ should satisfy an AR(q) model.

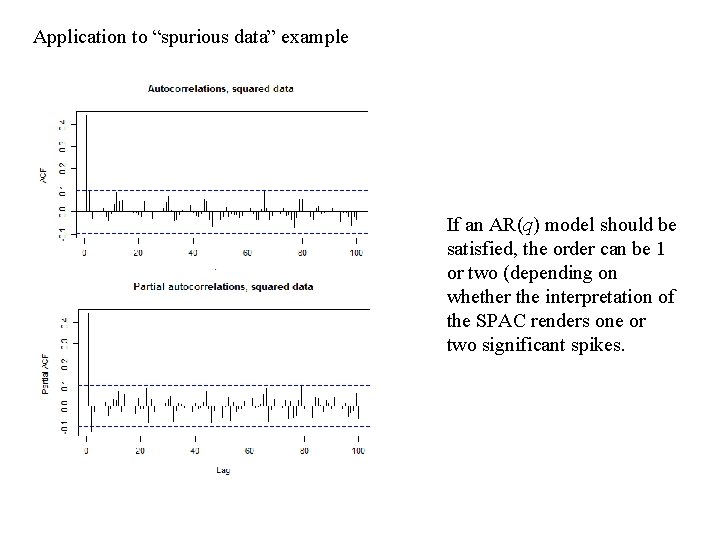

Application to the “spurious data” example. If an AR(q) model should be satisfied, the order can be 1 or 2 (depending on whether the interpretation of the SPAC renders one or two significant spikes).

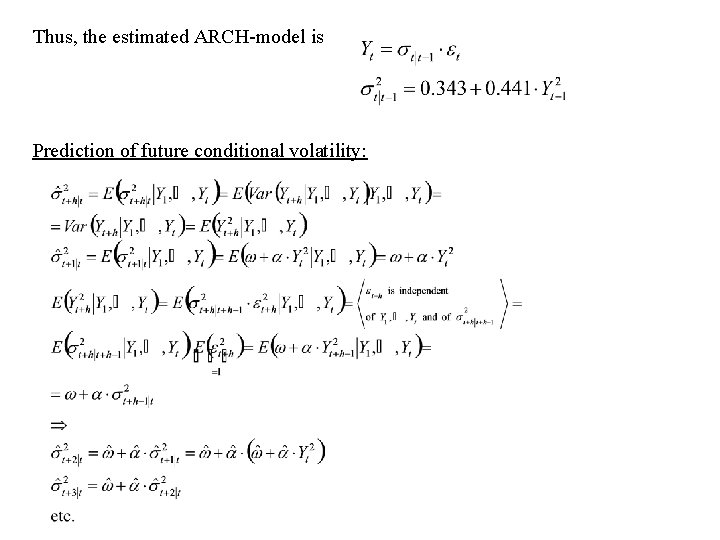

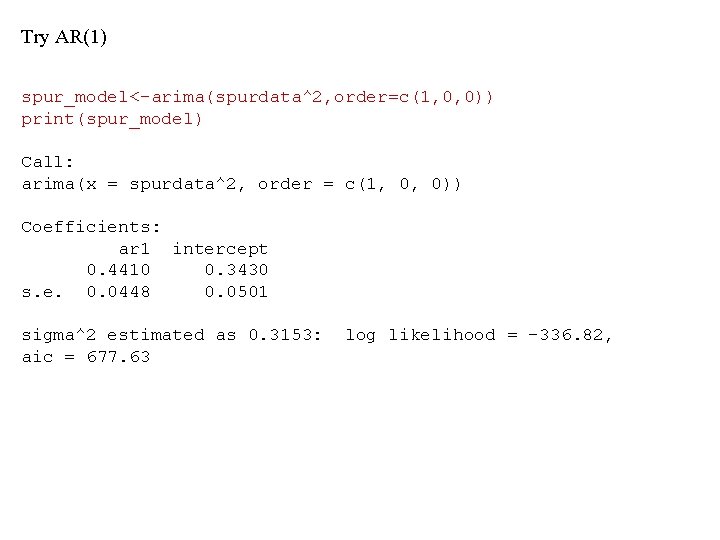

Try AR(1):

```r
spur_model <- arima(spurdata^2, order = c(1, 0, 0))
print(spur_model)
```

```
Call:
arima(x = spurdata^2, order = c(1, 0, 0))

Coefficients:
         ar1  intercept
      0.4410     0.3430
s.e.  0.0448     0.0501

sigma^2 estimated as 0.3153:  log likelihood = -336.82,  aic = 677.63
```
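To see that fitting an AR(1) to the squared values does estimate the ARCH(1) parameters, one can run a quick simulation check. This sketch assumes hypothetical true values ω = 0.2 and α = 0.25. Because `arima()` reports the mean of the process as "intercept", the ARCH intercept is recovered as ω = μ(1 − α).

```r
set.seed(7)
n <- 10000; omega <- 0.2; alpha <- 0.25     # hypothetical true values
eps <- rnorm(n)
y <- numeric(n)
y[1] <- sqrt(omega / (1 - alpha)) * eps[1]
for (t in 2:n) y[t] <- sqrt(omega + alpha * y[t - 1]^2) * eps[t]

fit <- arima(y^2, order = c(1, 0, 0))         # AR(1) for the squared series
alpha_hat <- unname(coef(fit)["ar1"])
mu_hat    <- unname(coef(fit)["intercept"])   # arima's "intercept" is the mean
omega_hat <- mu_hat * (1 - alpha_hat)         # intercept of the AR(1) recursion
c(alpha_hat = alpha_hat, omega_hat = omega_hat)
```

The AR(1) fit is a convenient moment-type estimator; dedicated ARCH/GARCH fitting (as with `garch()` later in these slides) maximizes the likelihood of the original series instead.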

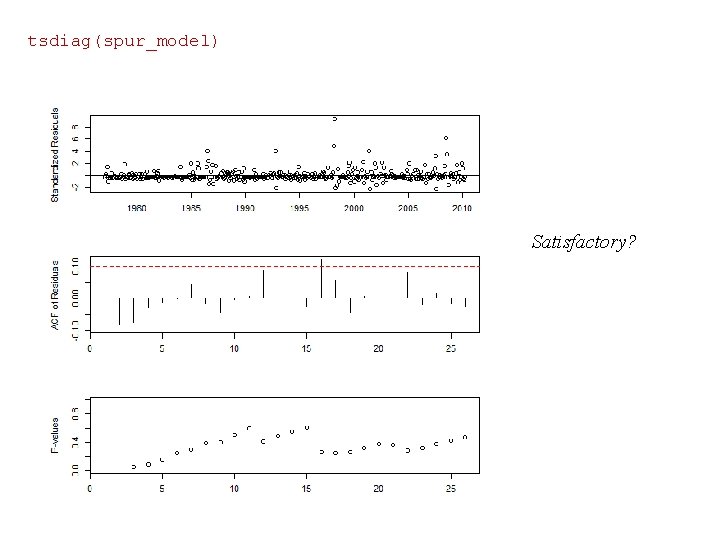

```r
tsdiag(spur_model)
```

Satisfactory?

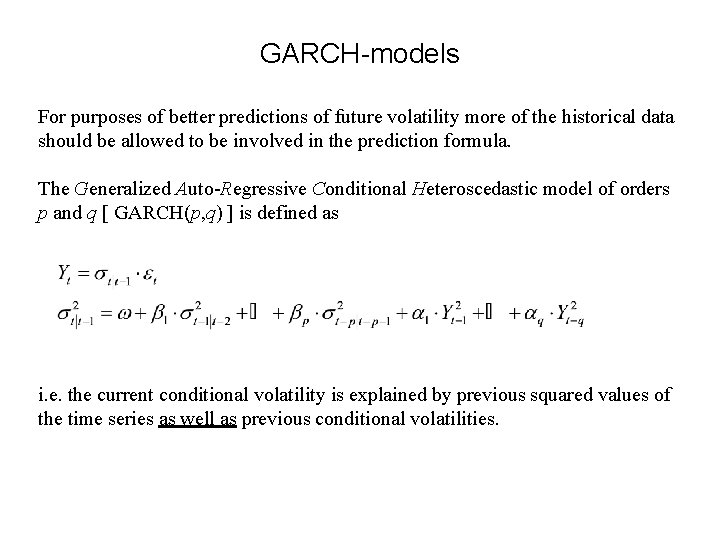

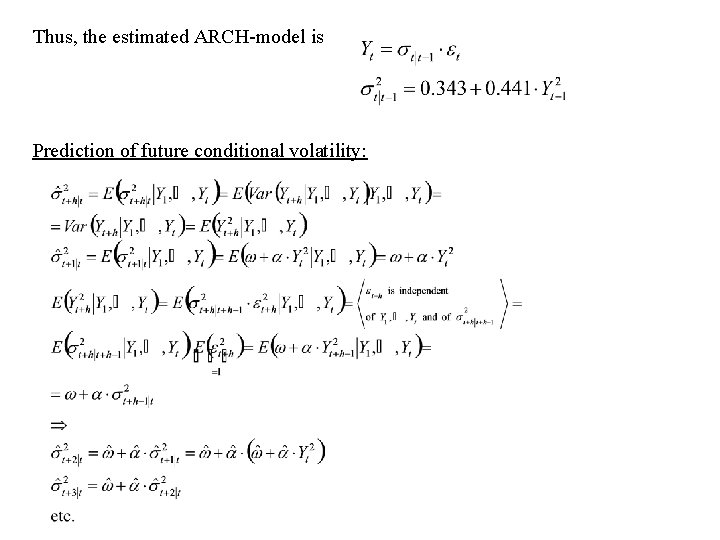

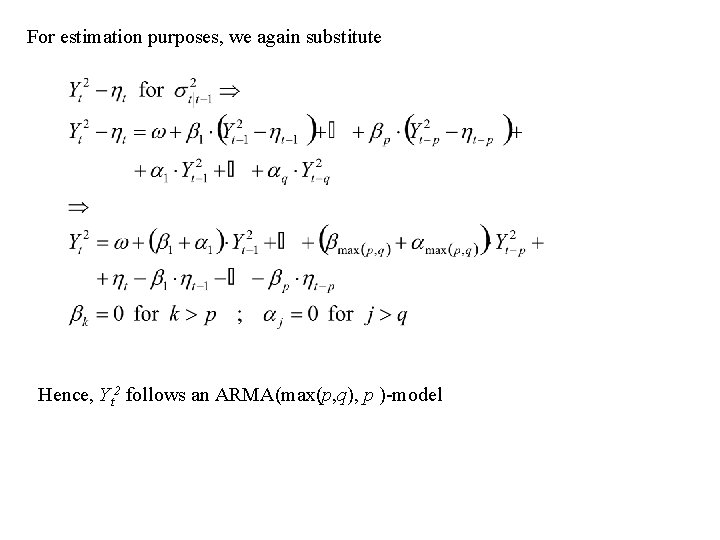

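Thus, the estimated ARCH(1) model is $\hat{\sigma}^2_{t|t-1} = \hat{\omega} + \hat{\alpha}\,Y_{t-1}^2$ with $\hat{\alpha} = 0.4410$ and $\hat{\omega} = 0.3430\,(1 - 0.4410) \approx 0.1917$ (arima reports the mean of the AR(1) process as "intercept"). Prediction of future conditional volatility: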
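Multi-step predictions of the conditional volatility follow a simple recursion: the one-step prediction uses the last observed squared value, and for h ≥ 2 the unknown $Y^2_{t+h-1}$ is replaced by its own prediction. A minimal sketch using the estimates from `print(spur_model)`; the helper name and the last observation Y_t = 2 are hypothetical.

```r
# Estimates read from print(spur_model): ar1 = 0.4410, intercept (mean of Y_t^2) = 0.3430
alpha <- 0.4410
omega <- 0.3430 * (1 - alpha)                 # about 0.1917

# h-step conditional variance predictions for an ARCH(1):
#   sigma^2_{t+1|t} = omega + alpha * Y_t^2
#   sigma^2_{t+h|t} = omega + alpha * sigma^2_{t+h-1|t},  h >= 2
arch1_forecast <- function(y_last, h, omega, alpha) {
  s2 <- numeric(h)
  s2[1] <- omega + alpha * y_last^2
  for (j in seq_len(h)[-1]) s2[j] <- omega + alpha * s2[j - 1]
  s2
}

round(arch1_forecast(y_last = 2, h = 6, omega = omega, alpha = alpha), 4)
```

The predictions converge geometrically (rate α) to the unconditional variance ω/(1 − α), which here is the fitted mean 0.3430.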

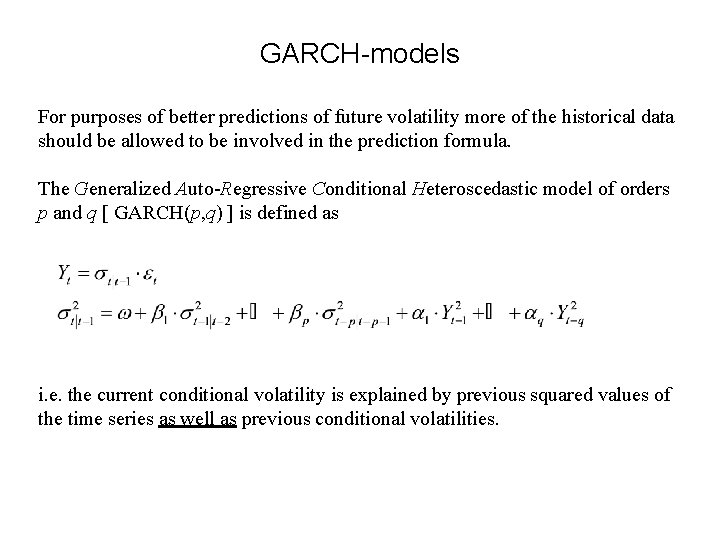

GARCH models. For better predictions of future volatility, more of the historical data should be allowed to enter the prediction formula. The Generalized AutoRegressive Conditional Heteroscedastic model of orders p and q [GARCH(p, q)] is defined as $Y_t = \sigma_{t|t-1}\varepsilon_t$ with

$$\sigma^2_{t|t-1} = \omega + \beta_1\sigma^2_{t-1|t-2} + \dots + \beta_p\sigma^2_{t-p|t-p-1} + \alpha_1 Y_{t-1}^2 + \dots + \alpha_q Y_{t-q}^2,$$

i.e. the current conditional volatility is explained by previous squared values of the time series as well as previous conditional volatilities.

For estimation purposes, we again substitute $\sigma^2_{t|t-1} = Y_t^2 - \eta_t$. Hence, $Y_t^2$ follows an ARMA(max(p, q), p) model.
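For GARCH(1, 1) the substitution can be written out in full; it shows where the AR order max(p, q) = 1 and the MA order p = 1 come from (with $\eta_t = Y_t^2 - \sigma^2_{t|t-1}$ as in the ARCH case):

$$
\begin{aligned}
\sigma^2_{t|t-1} &= \omega + \beta\,\sigma^2_{t-1|t-2} + \alpha\,Y_{t-1}^2,\\
Y_t^2 - \eta_t &= \omega + \beta\,(Y_{t-1}^2 - \eta_{t-1}) + \alpha\,Y_{t-1}^2,\\
Y_t^2 &= \omega + (\alpha + \beta)\,Y_{t-1}^2 + \eta_t - \beta\,\eta_{t-1}.
\end{aligned}
$$

So $Y_t^2$ is an ARMA(1, 1) process with AR coefficient $\alpha + \beta$ and MA coefficient $\beta$ (in the minus-sign MA convention).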

Note! An ARCH(q) model is a GARCH(0, q) model. A GARCH(p, q) model can be shown to be weakly stationary if $\sum_{i=1}^{q}\alpha_i + \sum_{j=1}^{p}\beta_j < 1$. A prediction formula can be derived as a recursion formula, now including conditional volatilities several steps backwards. See Cryer & Chan, page 297 for details.

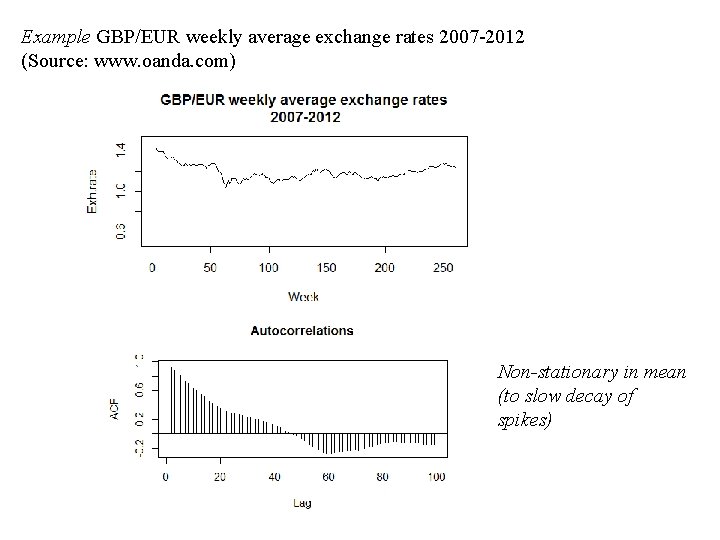

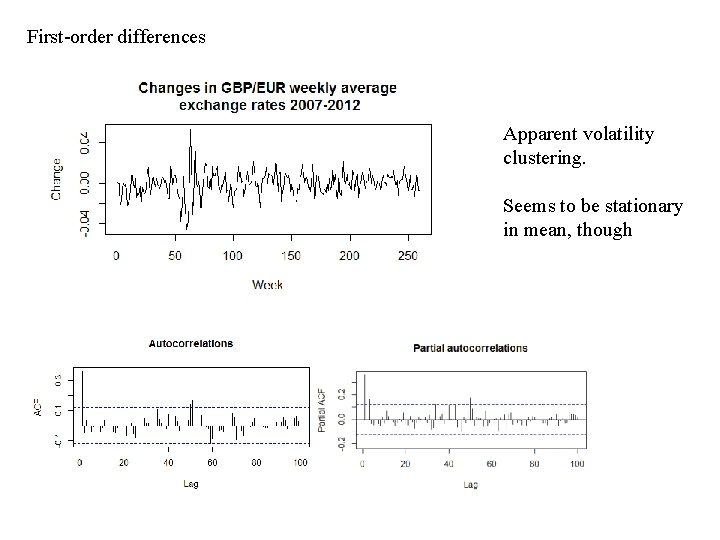

Example: GBP/EUR weekly average exchange rates 2007–2012 (source: www.oanda.com). Non-stationary in mean (too slow decay of the spikes).

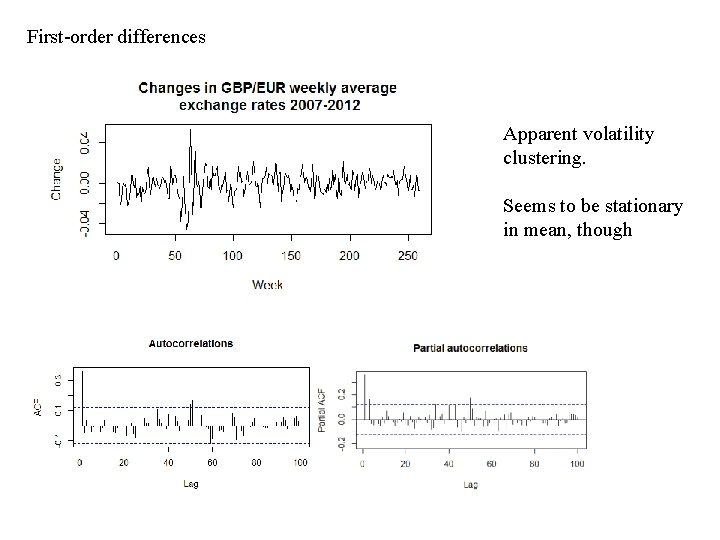

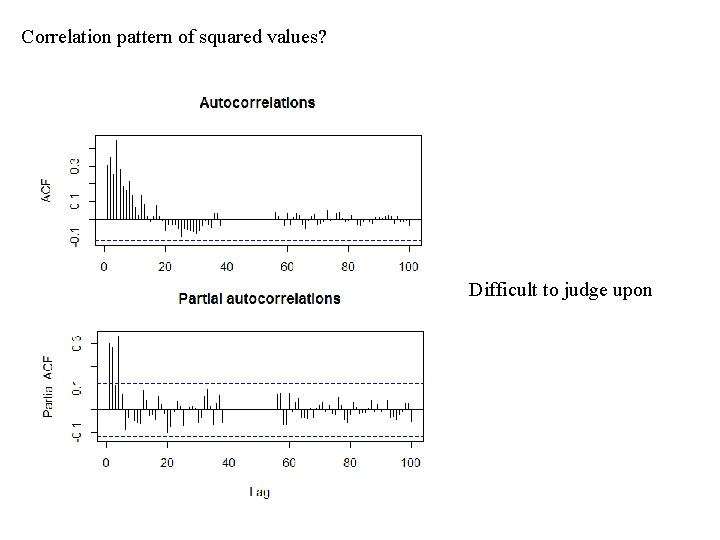

First-order differences: apparent volatility clustering. They seem to be stationary in mean, though.

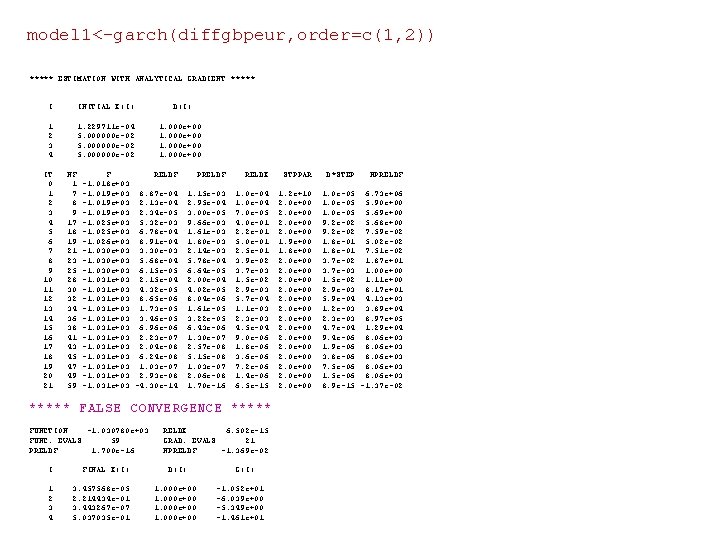

Correlation pattern of squared values? Difficult to judge.

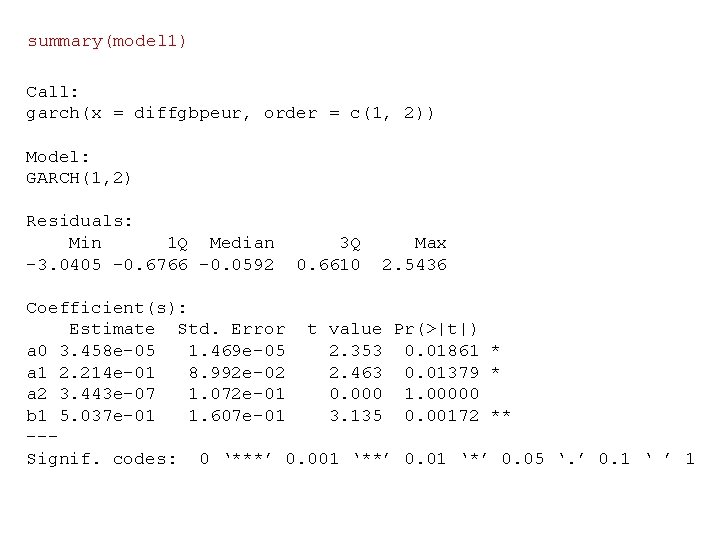

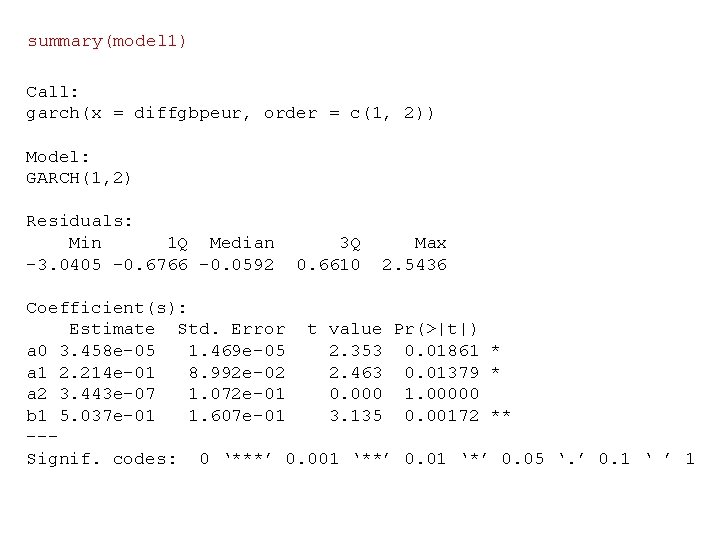

EACF table for the squared values (AR orders 0–7, MA orders 0–13). The pattern of the table points to ARMA(1, 1) or ARMA(2, 2).

Candidate GARCH models:
• ARMA(1, 1): 1 = max(p, q), giving GARCH(0, 1) [= ARCH(1)] or GARCH(1, 1)
• ARMA(2, 2): 2 = max(p, q), giving GARCH(0, 2), GARCH(1, 2) or GARCH(2, 2)

Try GARCH(1, 2)!

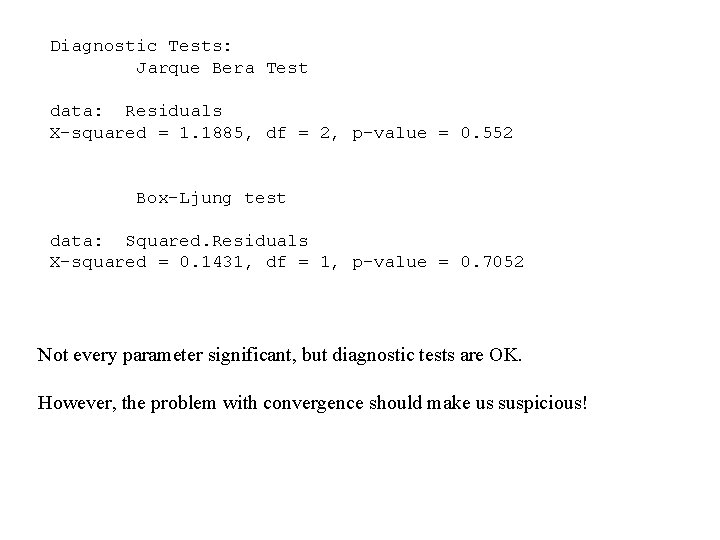

```r
model1 <- garch(diffgbpeur, order = c(1, 2))
```

The optimizer output starts and ends with:

```
***** ESTIMATION WITH ANALYTICAL GRADIENT *****
...
***** FALSE CONVERGENCE *****

FUNCTION    -1.030780e+03   RELDX        6.502e-15
FUNC. EVALS       59        GRAD. EVALS     21
PRELDF       1.700e-16      NPRELDF     -1.369e-02

 I     FINAL X(I)
 1     3.457568e-05
 2     2.214434e-01
 3     3.443267e-07
 4     5.037035e-01
```

```r
summary(model1)
```

```
Call:
garch(x = diffgbpeur, order = c(1, 2))

Model:
GARCH(1,2)

Residuals:
    Min      1Q  Median      3Q     Max
-3.0405 -0.6766 -0.0592  0.6610  2.5436

Coefficient(s):
    Estimate  Std. Error  t value Pr(>|t|)
a0 3.458e-05   1.469e-05    2.353  0.01861 *
a1 2.214e-01   8.992e-02    2.463  0.01379 *
a2 3.443e-07   1.072e-01    0.000  1.00000
b1 5.037e-01   1.607e-01    3.135  0.00172 **
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
```

```
Diagnostic Tests:
        Jarque Bera Test

data:  Residuals
X-squared = 1.1885, df = 2, p-value = 0.552

        Box-Ljung test

data:  Squared.Residuals
X-squared = 0.1431, df = 1, p-value = 0.7052
```

Not every parameter is significant, but the diagnostic tests are OK. However, the convergence problem should make us suspicious!

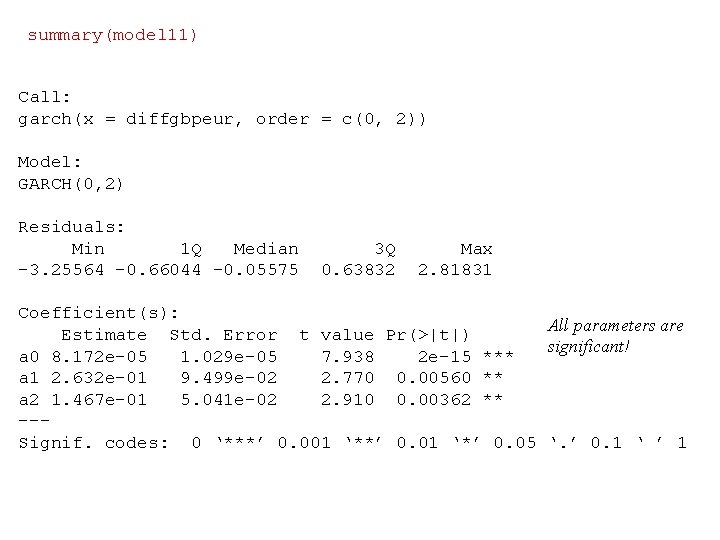

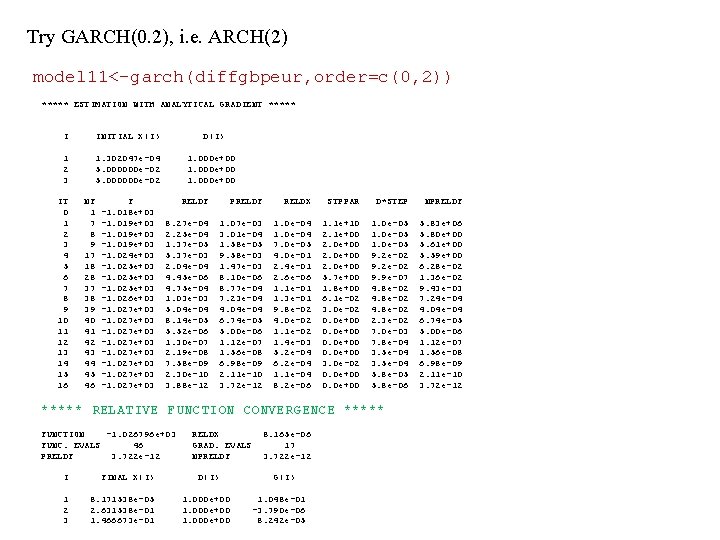

Try GARCH(0, 2), i.e. ARCH(2):

```r
model11 <- garch(diffgbpeur, order = c(0, 2))
```

The optimizer output starts and ends with:

```
***** ESTIMATION WITH ANALYTICAL GRADIENT *****
...
***** RELATIVE FUNCTION CONVERGENCE *****

FUNCTION    -1.026796e+03   RELDX        8.165e-06
FUNC. EVALS       46        GRAD. EVALS     17
PRELDF       3.722e-12      NPRELDF      3.722e-12

 I     FINAL X(I)
 1     8.171538e-05
 2     2.631538e-01
 3     1.466673e-01
```

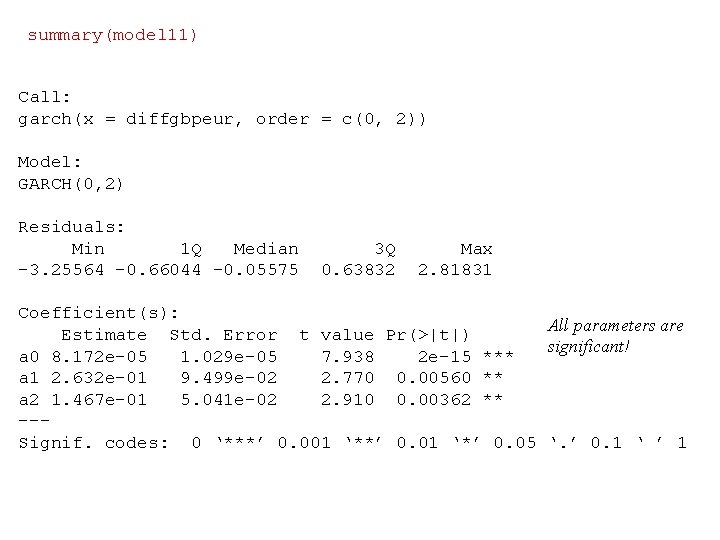

```r
summary(model11)
```

```
Call:
garch(x = diffgbpeur, order = c(0, 2))

Model:
GARCH(0,2)

Residuals:
     Min       1Q   Median       3Q      Max
-3.25564 -0.66044 -0.05575  0.63832  2.81831

Coefficient(s):
    Estimate  Std. Error  t value Pr(>|t|)
a0 8.172e-05   1.029e-05    7.938    2e-15 ***
a1 2.632e-01   9.499e-02    2.770  0.00560 **
a2 1.467e-01   5.041e-02    2.910  0.00362 **
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
```

All parameters are significant!

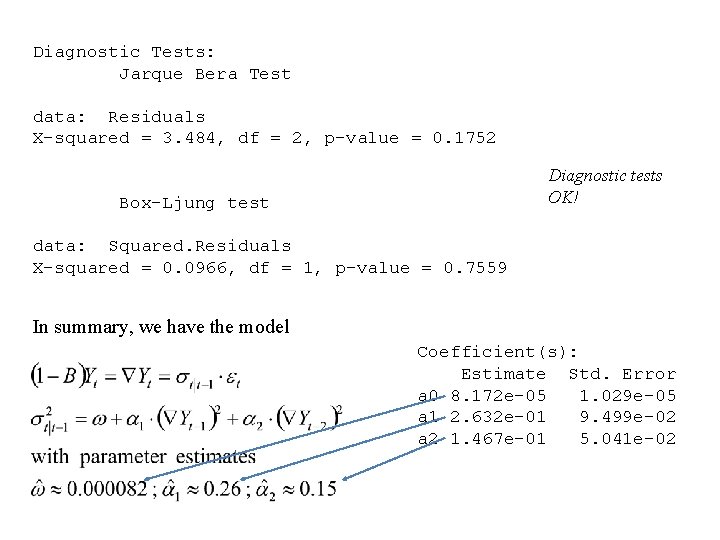

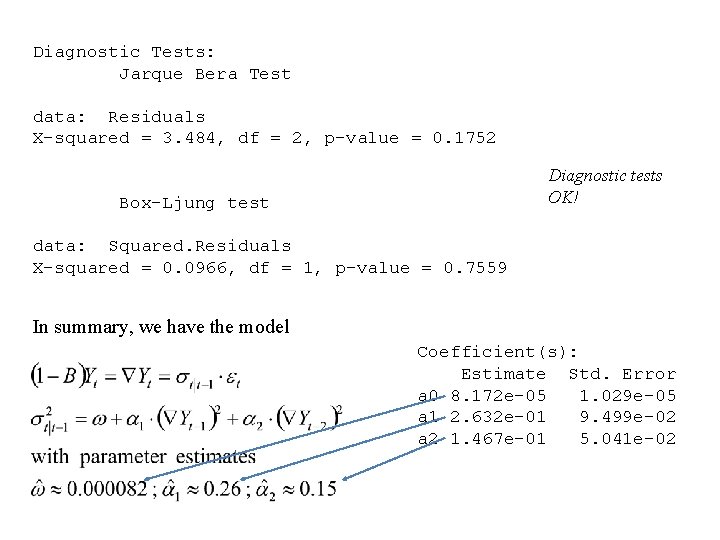

```
Diagnostic Tests:
        Jarque Bera Test

data:  Residuals
X-squared = 3.484, df = 2, p-value = 0.1752

        Box-Ljung test

data:  Squared.Residuals
X-squared = 0.0966, df = 1, p-value = 0.7559
```

Diagnostic tests OK! In summary, we have the model

$$\hat{\sigma}^2_{t|t-1} = 8.172\cdot 10^{-5} + 0.2632\,Y_{t-1}^2 + 0.1467\,Y_{t-2}^2,$$

where $Y_t$ denotes the first-order differences (diffgbpeur), with

```
Coefficient(s):
    Estimate  Std. Error
a0 8.172e-05   1.029e-05
a1 2.632e-01   9.499e-02
a2 1.467e-01   5.041e-02
```

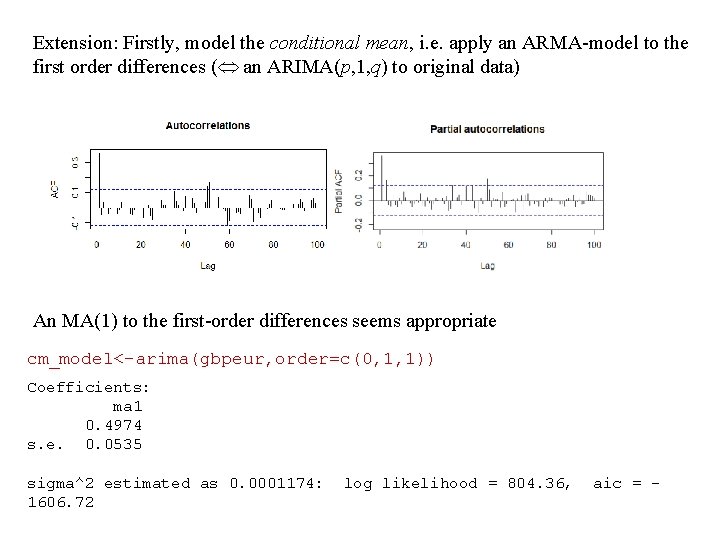

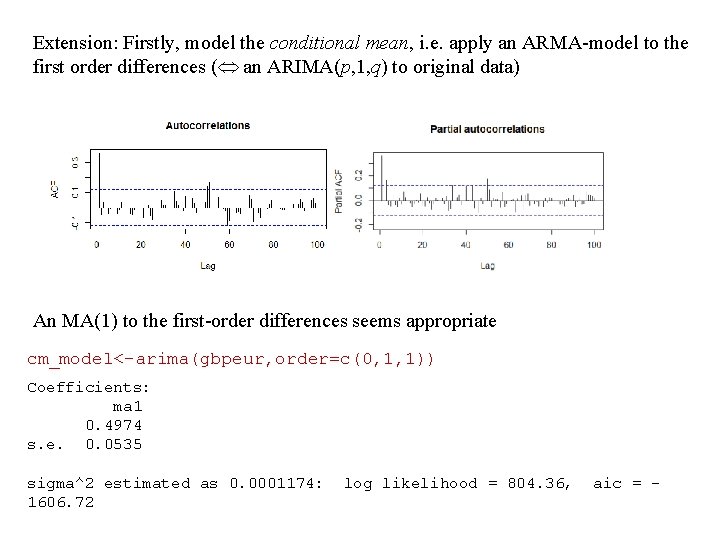

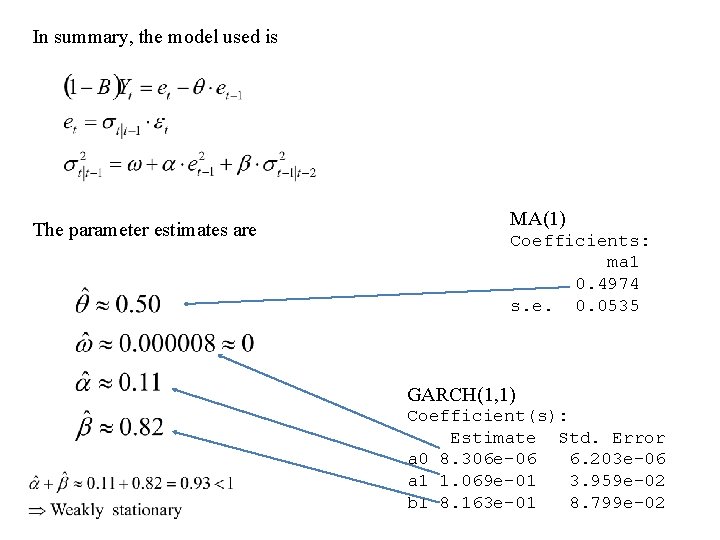

Extension: firstly, model the conditional mean, i.e. apply an ARMA model to the first-order differences (an ARIMA(p, 1, q) to the original data). An MA(1) for the first-order differences seems appropriate.

```r
cm_model <- arima(gbpeur, order = c(0, 1, 1))
```

```
Coefficients:
         ma1
      0.4974
s.e.  0.0535

sigma^2 estimated as 0.0001174:  log likelihood = 804.36,  aic = -1606.72
```

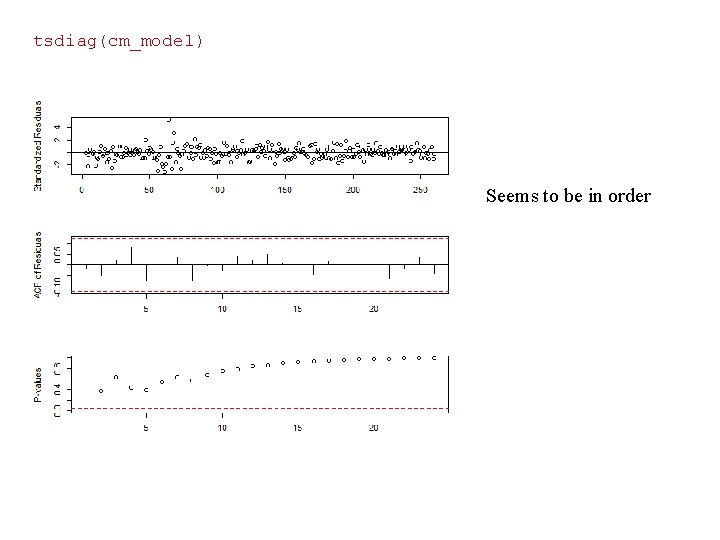

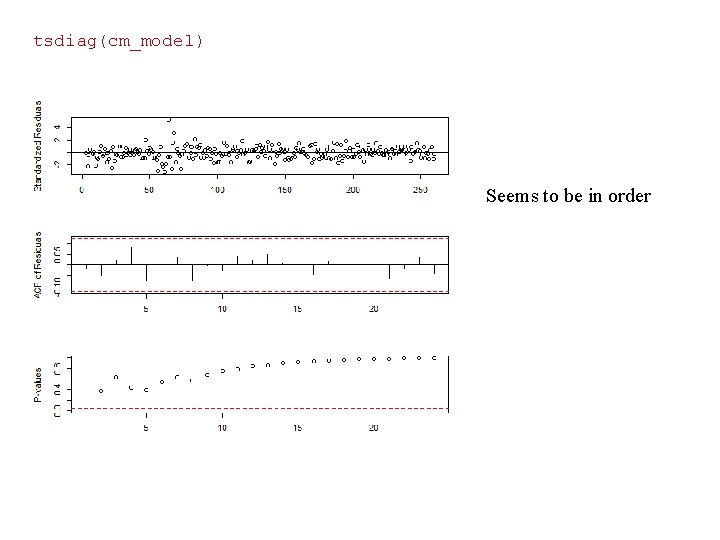

```r
tsdiag(cm_model)
```

Seems to be in order.

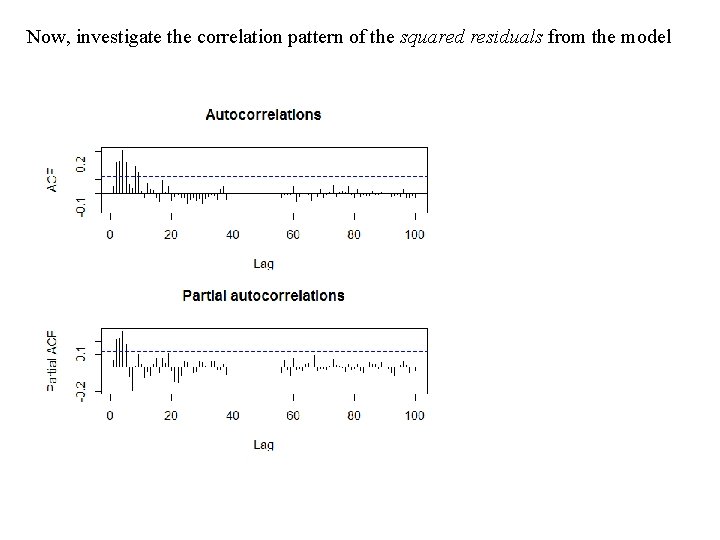

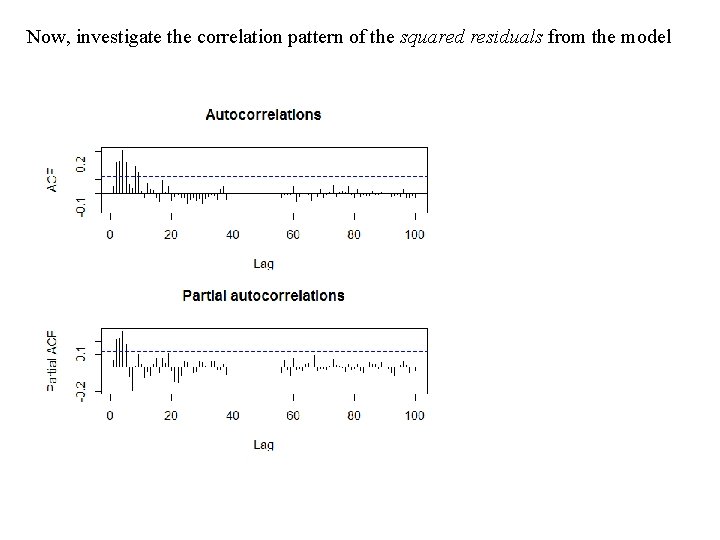

Now, investigate the correlation pattern of the squared residuals from the model

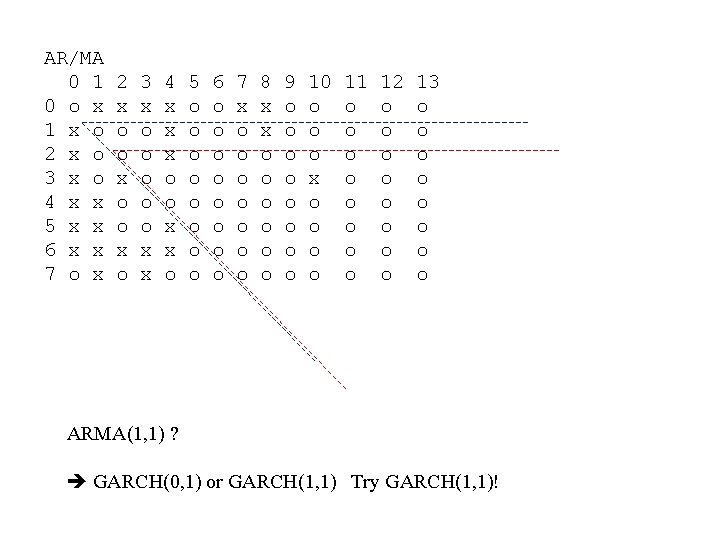

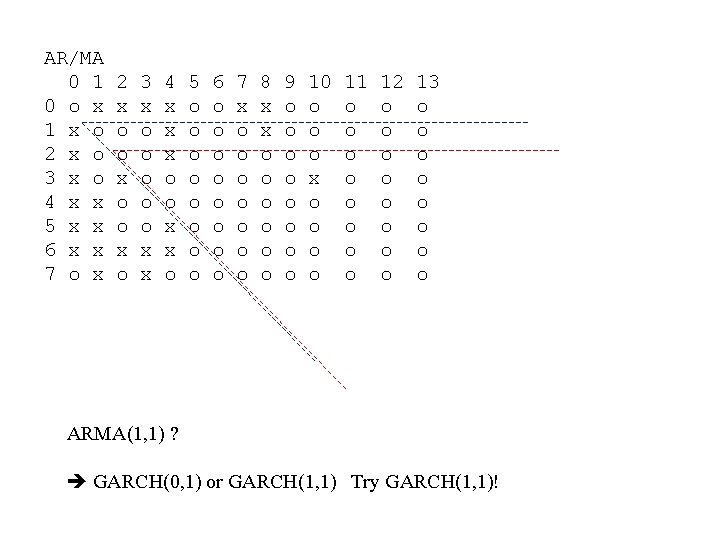

EACF table for the squared residuals (AR orders 0–7, MA orders 0–13). The pattern of the table points to ARMA(1, 1)?, i.e. GARCH(0, 1) or GARCH(1, 1). Try GARCH(1, 1)!

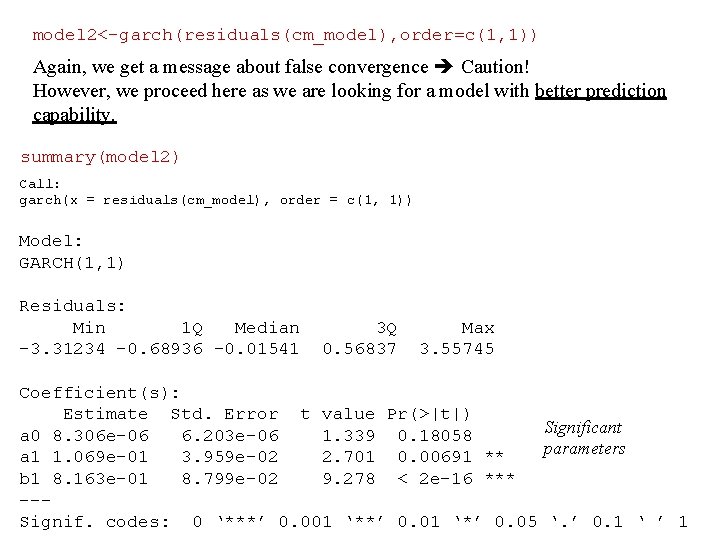

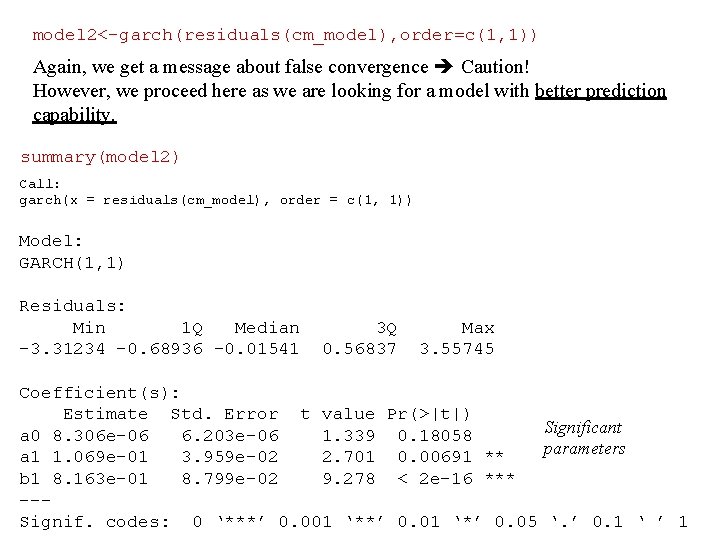

```r
model2 <- garch(residuals(cm_model), order = c(1, 1))
```

Again, we get a message about false convergence. Caution! However, we proceed here, as we are looking for a model with better prediction capability.

```r
summary(model2)
```

```
Call:
garch(x = residuals(cm_model), order = c(1, 1))

Model:
GARCH(1,1)

Residuals:
     Min       1Q   Median       3Q      Max
-3.31234 -0.68936 -0.01541  0.56837  3.55745

Coefficient(s):
    Estimate  Std. Error  t value Pr(>|t|)
a0 8.306e-06   6.203e-06    1.339  0.18058
a1 1.069e-01   3.959e-02    2.701  0.00691 **
b1 8.163e-01   8.799e-02    9.278  < 2e-16 ***
---
Signif. codes:  0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
```

The parameters a1 and b1 are significant.

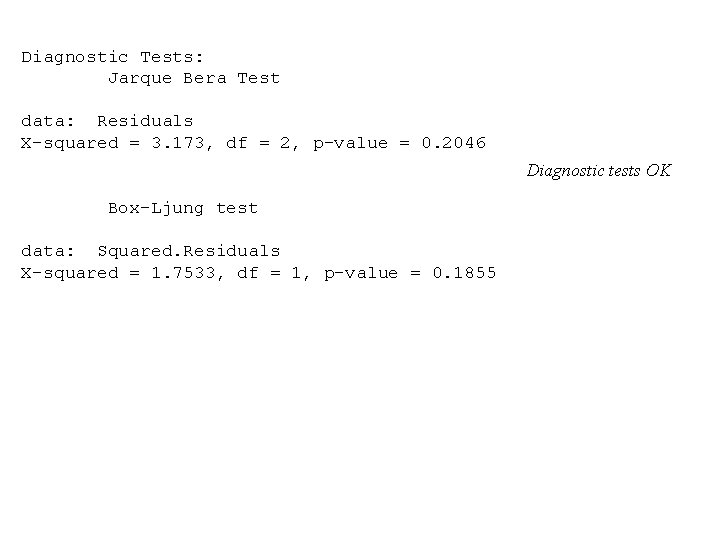

```
Diagnostic Tests:
        Jarque Bera Test

data:  Residuals
X-squared = 3.173, df = 2, p-value = 0.2046

        Box-Ljung test

data:  Squared.Residuals
X-squared = 1.7533, df = 1, p-value = 0.1855
```

Diagnostic tests OK.

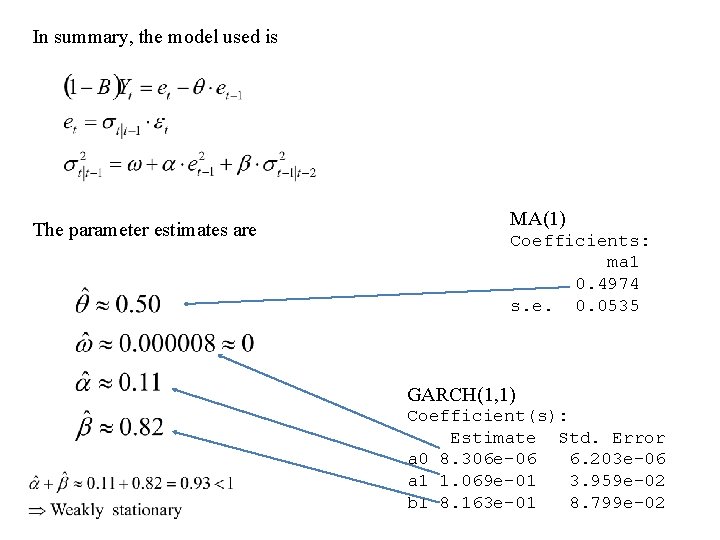

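In summary, the model used is

$$\nabla Y_t = e_t + \theta\,e_{t-1}, \qquad e_t = \sigma_{t|t-1}\varepsilon_t, \qquad \sigma^2_{t|t-1} = \omega + \alpha_1 e_{t-1}^2 + \beta_1\sigma^2_{t-1|t-2},$$

where $Y_t$ is the GBP/EUR series and the MA sign convention is that of R's arima. The parameter estimates are:

• MA(1): ma1 = 0.4974 (s.e. 0.0535)
• GARCH(1, 1): a0 = 8.306e-06 (s.e. 6.203e-06), a1 = 1.069e-01 (s.e. 3.959e-02), b1 = 8.163e-01 (s.e. 8.799e-02)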
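Two quantities help interpret the fitted GARCH(1, 1): the persistence a1 + b1, which must be below 1 for weak stationarity, and the long-run variance a0/(1 − a1 − b1) toward which volatility predictions revert. The sketch below computes both from the estimates of `summary(model2)`, together with the standard GARCH(1, 1) prediction recursion (Cryer & Chan give the general case). The helper name and the last residual and variance values in the usage line are hypothetical.

```r
# Estimates from summary(model2): GARCH(1,1) for the MA(1) residuals
a0 <- 8.306e-06; a1 <- 1.069e-01; b1 <- 8.163e-01

persistence <- a1 + b1                        # must be < 1 for weak stationarity
uncond_var  <- a0 / (1 - persistence)         # long-run (unconditional) variance

# Predictions: sigma^2_{t+1|t} = a0 + a1 * e_t^2 + b1 * sigma^2_{t|t-1},
# then sigma^2_{t+h|t} = a0 + (a1 + b1) * sigma^2_{t+h-1|t} for h >= 2
garch11_forecast <- function(e_last, s2_last, h, a0, a1, b1) {
  s2 <- numeric(h)
  s2[1] <- a0 + a1 * e_last^2 + b1 * s2_last
  for (j in seq_len(h)[-1]) s2[j] <- a0 + (a1 + b1) * s2[j - 1]
  s2
}

c(persistence = persistence, uncond_var = uncond_var)
# Hypothetical last residual and last conditional variance
garch11_forecast(e_last = 0.02, s2_last = 2e-4, h = 5, a0, a1, b1)
```

With persistence 0.9232, a volatility shock decays slowly: the gap to the long-run variance shrinks by only about 8% per week.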