Game Playing (Chapter 5)

- Slides: 24


Game playing
§ Search applied to a problem against an adversary
  - some actions are not under the control of the problem-solver
  - there is an opponent (hostile agent)
§ Since it is a search problem, we must specify states & operations/actions
  - initial state = current board; operators = legal moves; goal state = game over; utility function = value for the outcome of the game
  - usually, (board) games have well-defined rules & the entire state is accessible
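
The four ingredients listed above (initial state, operators, goal test, utility function) can be sketched for tic-tac-toe as follows. This is only an illustration: the board encoding (a 9-tuple of "X", "O", " ") and all function names are assumptions made for this sketch, not something the slides prescribe.

```python
from typing import List, Optional, Tuple

Board = Tuple[str, ...]  # 9 cells in row-major order: "X", "O", or " "

# The eight winning lines (rows, columns, diagonals) as index triples.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def initial_state() -> Board:
    """Initial state = the current (here: empty) board."""
    return (" ",) * 9


def to_move(board: Board) -> str:
    """X moves first, so X is to move whenever the piece counts are equal."""
    return "X" if board.count("X") == board.count("O") else "O"


def legal_moves(board: Board) -> List[int]:
    """Operators = legal moves: any empty square."""
    return [i for i, cell in enumerate(board) if cell == " "]


def apply_move(board: Board, square: int) -> Board:
    """Return the board after the player to move marks `square`."""
    cells = list(board)
    cells[square] = to_move(board)
    return tuple(cells)


def winner(board: Board) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def is_terminal(board: Board) -> bool:
    """Goal test = game over: someone has won or the board is full."""
    return winner(board) is not None or " " not in board


def utility(board: Board) -> int:
    """Value of a finished game from X's point of view: +1 win, -1 loss, 0 draw."""
    return {"X": 1, "O": -1, None: 0}[winner(board)]
```

Because the whole board is visible to both players, `legal_moves` and `utility` need nothing beyond the board itself.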

Basic idea
§ Consider all possible moves for yourself
§ Consider all possible moves for your opponent
§ Continue this process until a point is reached where we know the outcome of the game
§ From this point, propagate the best move back
  - choose best move for yourself at every turn
  - assume your opponent will make the optimal move on their turn
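
For a game as small as tic-tac-toe this idea can be carried out all the way to the end of the game. The sketch below is one way to do it, assuming a 9-character string board and the hypothetical helper names `winner`, `game_value` and `best_move`; memoization via `functools.lru_cache` is added only to keep the run time short.

```python
from functools import lru_cache
from typing import Optional

LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),
         (0, 3, 6), (1, 4, 7), (2, 5, 8),
         (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def player_to_move(board: str) -> str:
    return "X" if board.count("X") == board.count("O") else "O"


@lru_cache(maxsize=None)
def game_value(board: str) -> int:
    """Outcome for X if both sides play optimally: +1 win, 0 draw, -1 loss."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if " " not in board:
        return 0  # full board, nobody won
    player = player_to_move(board)
    values = [game_value(board[:i] + player + board[i + 1:])
              for i, cell in enumerate(board) if cell == " "]
    # X picks the best outcome for X; O is assumed to pick the worst one for X.
    return max(values) if player == "X" else min(values)


def best_move(board: str) -> int:
    """Square whose resulting position has the best backed-up value."""
    player = player_to_move(board)
    moves = [i for i, cell in enumerate(board) if cell == " "]

    def score(i: int) -> int:
        return game_value(board[:i] + player + board[i + 1:])

    return max(moves, key=score) if player == "X" else min(moves, key=score)
```

Calling `game_value(" " * 9)` searches every reachable position from the empty board; with best play on both sides the result is a draw.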

Example
§ Tic-tac-toe (Nilsson’s book)

Problem
§ For interesting games, it is simply not computationally possible to look at all possible moves
  - in chess, there are on average 35 choices per turn
  - on average, there are about 50 moves per player (about 100 turns in total)
  - thus, the number of possibilities to consider is 35^100
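
To get a feel for the size of 35^100, the arithmetic can be checked directly, since Python integers have arbitrary precision. The variable names are chosen for this sketch only.

```python
import math

branching_factor = 35   # average number of choices per turn (from the slide)
plies = 2 * 50          # about 50 moves per player, two players

positions = branching_factor ** plies
digits = len(str(positions))
exponent = plies * math.log10(branching_factor)

print(f"35^100 has {digits} digits, i.e. about 10^{exponent:.1f} positions")
```

The count has 155 decimal digits, which is far beyond what any exhaustive search could enumerate.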

Solution
§ Given that we can only look ahead k moves and that we can’t see all the way to the end of the game, we need a heuristic function that substitutes for looking to the end of the game
  - this is usually called a static board evaluator (SBE)
  - a perfect static board evaluator would tell us which moves lead to a win, a loss or a draw
  - possible for tic-tac-toe, but not for chess

Creating an SBE approximation
§ Typically, made up of rules of thumb
  - for example, in most chess books each piece is given a value
    • pawn = 1; rook = 5; queen = 9; etc.
  - further, there are other important characteristics of a position
    • e.g., center control
  - we put all of these factors into one function, potentially weighting each aspect differently, to determine the value of a position
    • board_value = w1 * material_balance + w2 * center_control + … [the coefficients w1, w2, … might change as the game goes on]
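
A weighted-sum evaluator of this kind might look like the sketch below. Several things here are assumptions made for illustration: the board is a dict from square name to piece letter (uppercase = my pieces, lowercase = the opponent's), knight and bishop are valued at 3 (a common convention, not stated on the slide), the king is given 0, "center control" is reduced to occupancy of the four central squares, and the default weights are arbitrary.

```python
from typing import Dict

# pawn, rook and queen values come from the slide; the rest are assumptions.
PIECE_VALUES = {"p": 1, "n": 3, "b": 3, "r": 5, "q": 9, "k": 0}
CENTER_SQUARES = {"d4", "d5", "e4", "e5"}


def material_balance(board: Dict[str, str]) -> int:
    """My material minus the opponent's material."""
    total = 0
    for piece in board.values():
        value = PIECE_VALUES[piece.lower()]
        total += value if piece.isupper() else -value
    return total


def center_control(board: Dict[str, str]) -> int:
    """Crude stand-in: central squares I occupy minus those the opponent occupies."""
    score = 0
    for square in CENTER_SQUARES:
        piece = board.get(square)
        if piece is not None:
            score += 1 if piece.isupper() else -1
    return score


def board_value(board: Dict[str, str],
                w_material: float = 1.0,
                w_center: float = 0.25) -> float:
    """Weighted sum of the individual features; the weights are hypothetical."""
    return (w_material * material_balance(board)
            + w_center * center_control(board))
```

Passing different weights at different stages of the game is one way to let the coefficients change as the game goes on.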

Compromise
§ If we could search to the end of the game, then choosing a move would be relatively easy
  - just use minimax
§ Or, if we had a perfect scoring function (SBE), we wouldn’t have to do any search (just choose the best move from the current state: one-step lookahead)
§ Since neither is feasible for interesting games, we combine the two ideas

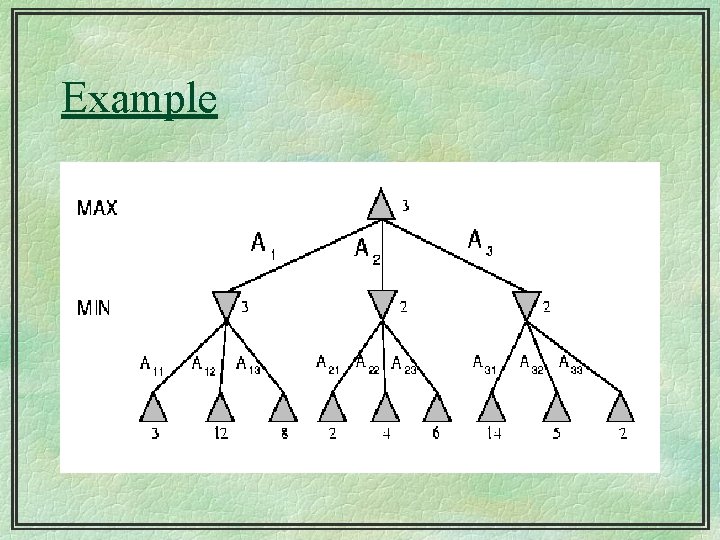

Basic idea
§ Build the game tree as deep as possible given the time constraints
§ Apply an approximate SBE to the leaves
§ Propagate scores back up to the root & use this information to choose a move
§ Example

Score percolation: MINIMAX
§ When it is my turn, I will choose the move that maximizes the (approximate) SBE score
§ When it is my opponent’s turn, they will choose the move that minimizes the SBE score
  - because we are dealing with competitive games, what is good for me is bad for my opponent & what is bad for me is good for my opponent
  - assume the opponent plays optimally [worst-case assumption]

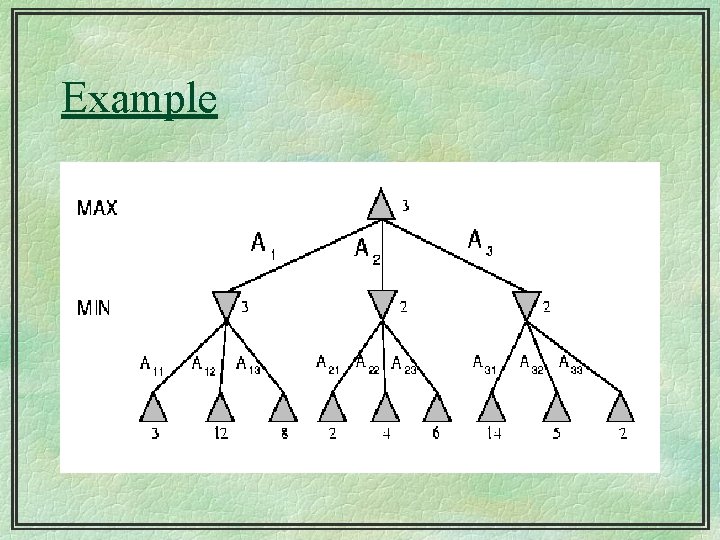

MINIMAX algorithm
§ Start at the leaves of the tree and apply the SBE
§ If it is my turn, choose the maximum SBE score for each sub-tree
§ If it is my opponent’s turn, choose the minimum score for each sub-tree
§ The scores on the leaves are how good the board appears from that point
§ Example
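
A depth-limited version of these steps can be sketched as below. The game is passed in through two callbacks, `successors` and `evaluate` (the SBE); those names, and the toy binary tree used in the usage note, are assumptions for illustration only.

```python
from typing import Callable, List, Tuple, TypeVar

State = TypeVar("State")


def minimax(state: State, depth: int, maximizing: bool,
            successors: Callable[[State], List[State]],
            evaluate: Callable[[State], float]) -> float:
    """Back up SBE scores from the search horizon to `state`."""
    children = successors(state)
    if depth == 0 or not children:
        return evaluate(state)  # leaf of the search: apply the SBE
    values = [minimax(child, depth - 1, not maximizing, successors, evaluate)
              for child in children]
    # My turn: take the maximum. Opponent's turn: take the minimum.
    return max(values) if maximizing else min(values)


def choose_move(state: State, depth: int,
                successors: Callable[[State], List[State]],
                evaluate: Callable[[State], float]) -> Tuple[State, float]:
    """Return (best successor, backed-up score) for the maximizing player."""
    scored = [(minimax(child, depth - 1, False, successors, evaluate), child)
              for child in successors(state)]
    best_score, best_child = max(scored, key=lambda pair: pair[0])
    return best_child, best_score


# Toy game: nodes 0..14 of a complete binary tree; SBE[n] is the static score.
SBE = [0, 4, 2, 4, 8, 9, 7, 3, 5, 6, 9, 1, 2, 0, -1]


def toy_successors(n: int) -> List[int]:
    return [2 * n + 1, 2 * n + 2] if n < 7 else []


def toy_evaluate(n: int) -> float:
    return SBE[n]
```

With these toy scores, `choose_move(0, 2, toy_successors, toy_evaluate)` prefers node 2, while searching one ply deeper with depth 3 prefers node 1: the SBE is only an approximation, so a deeper look can change the decision.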

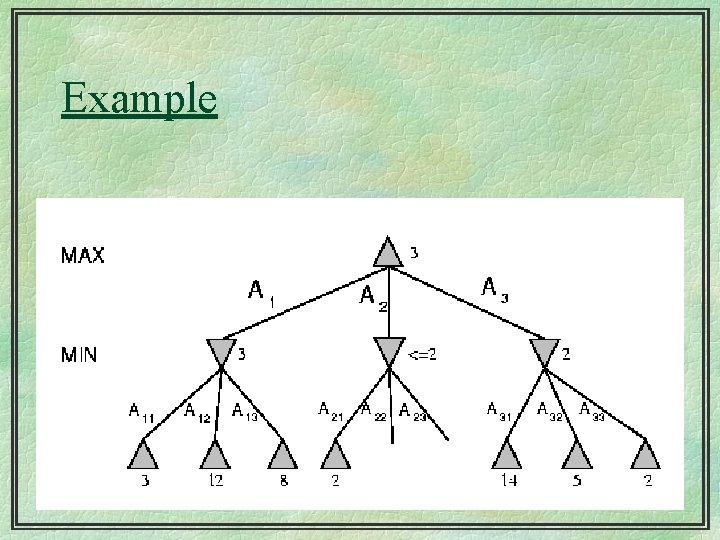

Example

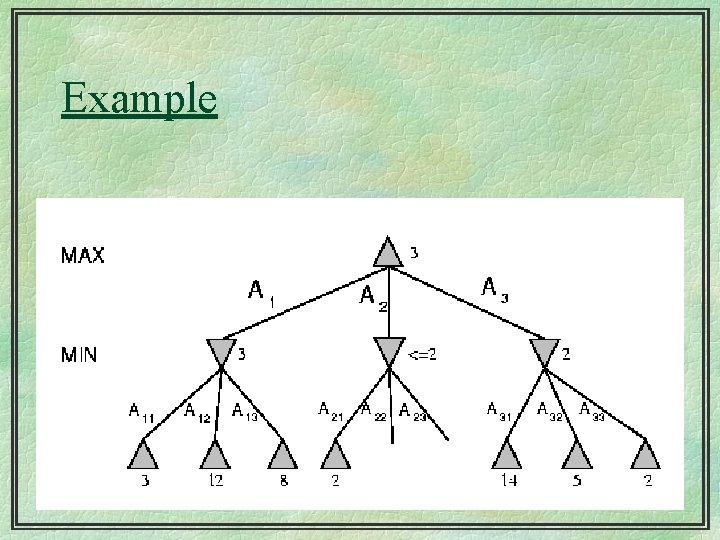

Alpha-beta pruning
§ While minimax is an effective algorithm, it can be inefficient
  - one reason for this is that it does unnecessary work
  - it evaluates sub-trees where the value of the sub-tree is irrelevant
  - alpha-beta pruning gets the same answer as minimax but it eliminates some useless work

Example

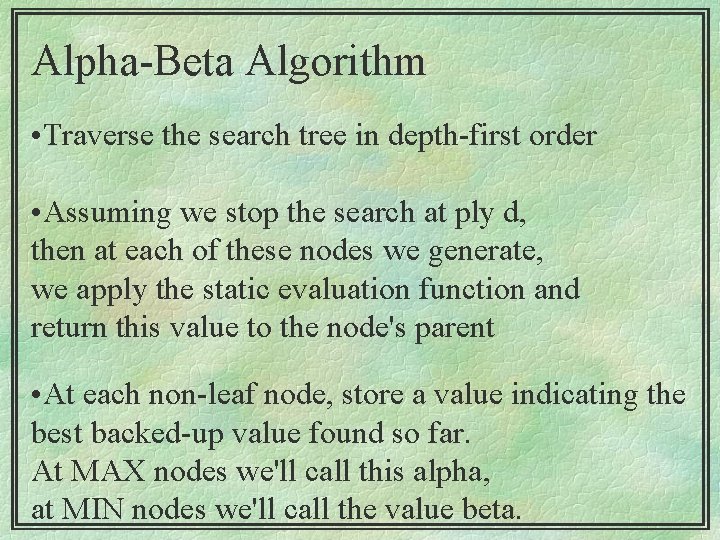

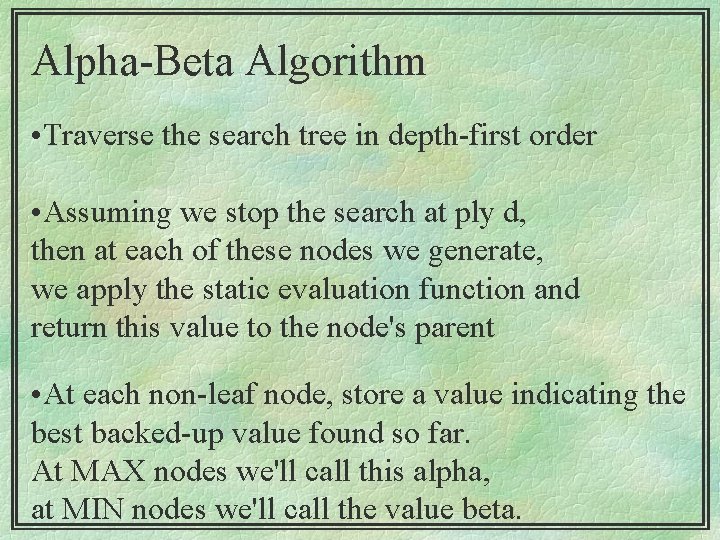

Alpha-Beta Algorithm
• Traverse the search tree in depth-first order
• Assuming we stop the search at ply d, at each node we generate at that depth we apply the static evaluation function and return this value to the node's parent
• At each non-leaf node, store a value indicating the best backed-up value found so far. At MAX nodes we'll call this value alpha; at MIN nodes we'll call it beta.

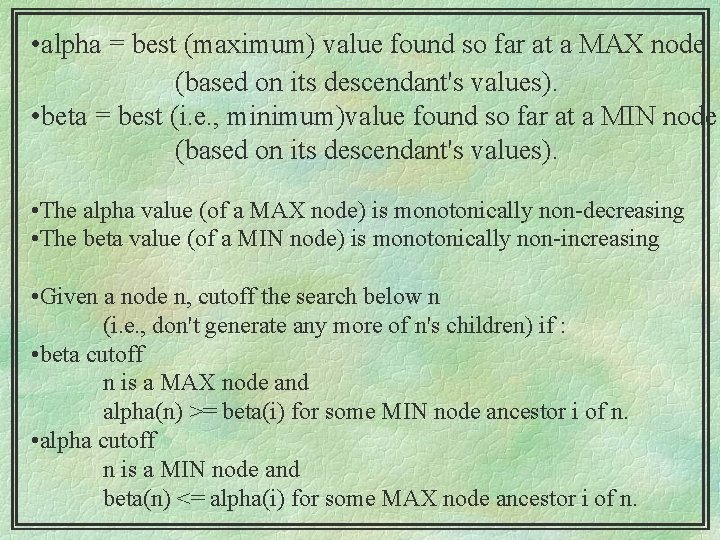

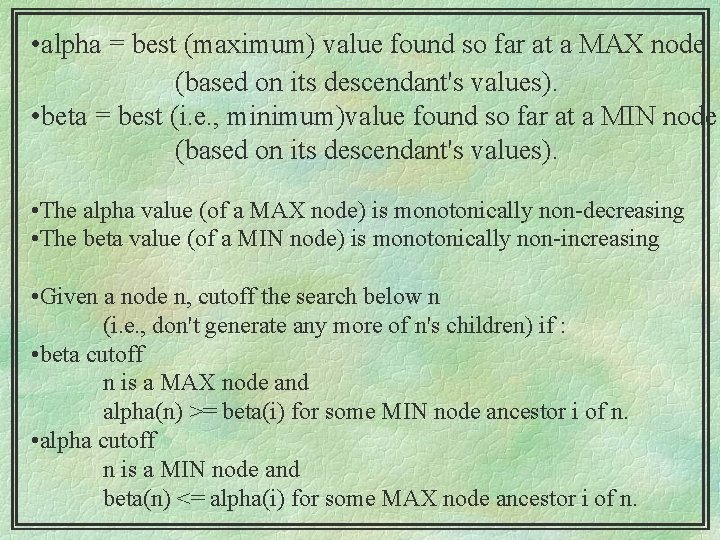

• alpha = best (i.e., maximum) value found so far at a MAX node (based on its descendants' values).
• beta = best (i.e., minimum) value found so far at a MIN node (based on its descendants' values).
• The alpha value (of a MAX node) is monotonically non-decreasing
• The beta value (of a MIN node) is monotonically non-increasing
• Given a node n, cut off the search below n (i.e., don't generate any more of n's children) if:
  - beta cutoff: n is a MAX node and alpha(n) >= beta(i) for some MIN node ancestor i of n.
  - alpha cutoff: n is a MIN node and beta(n) <= alpha(i) for some MAX node ancestor i of n.

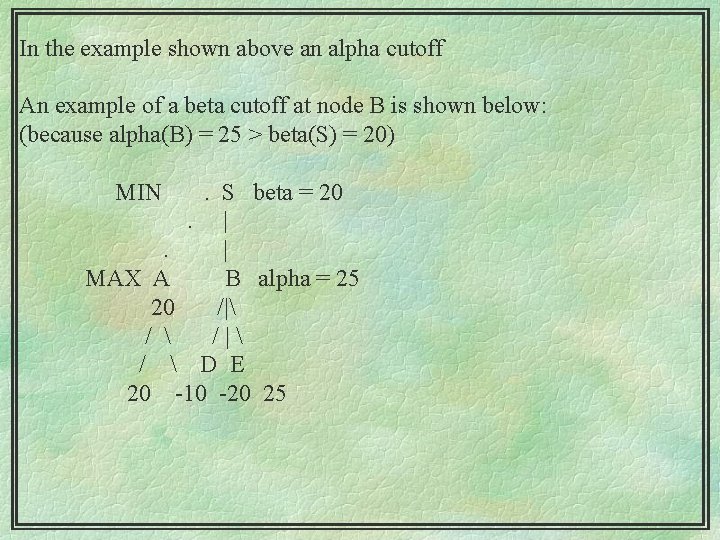

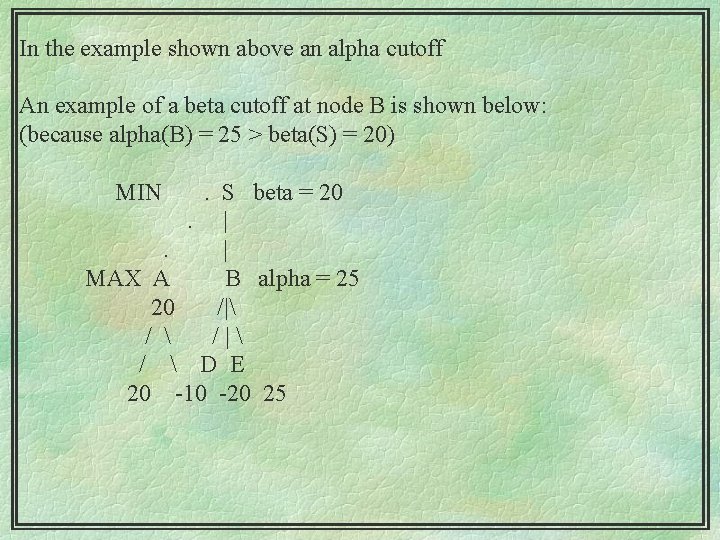

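The example shown above illustrates an alpha cutoff. An example of a beta cutoff at node B (because alpha(B) = 25 > beta(S) = 20) is shown below:
[Tree diagram: MIN node S with beta = 20; below it the MAX nodes A (value 20) and B (alpha = 25); nodes D and E on the next level; values 20, -10, -20, 25 along the bottom row.]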

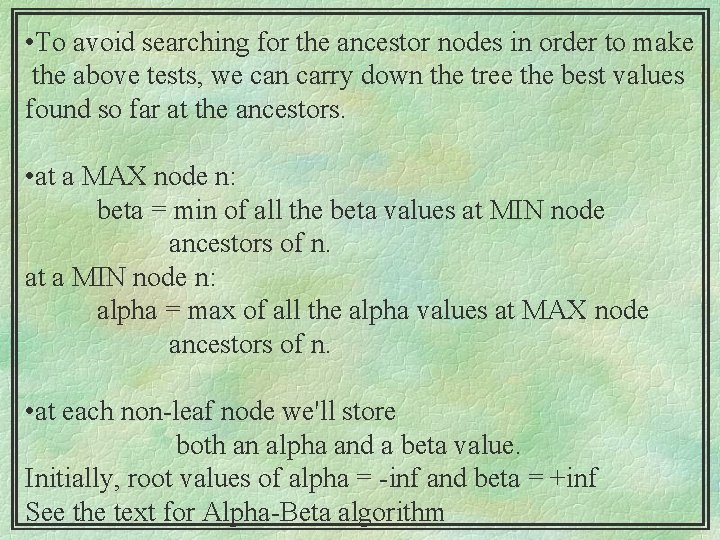

• To avoid searching for the ancestor nodes in order to make the above tests, we can carry down the tree the best values found so far at the ancestors.
  - at a MAX node n: beta = min of all the beta values at MIN node ancestors of n.
  - at a MIN node n: alpha = max of all the alpha values at MAX node ancestors of n.
• At each non-leaf node we'll store both an alpha and a beta value.
• Initially, the root has alpha = -inf and beta = +inf.
• See the text for the Alpha-Beta algorithm.
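
Carrying alpha and beta down the tree leads to the usual recursive formulation. The sketch below is a minimal version, not the textbook's listing: it assumes the tree is given as nested Python lists whose leaves are already SBE scores, and the optional `visited` list is a hypothetical addition that records which leaves were actually evaluated.

```python
import math
from typing import List, Optional, Union

Tree = Union[int, float, list]  # a leaf score, or a list of sub-trees


def alphabeta(node: Tree, maximizing: bool,
              alpha: float = -math.inf, beta: float = math.inf,
              visited: Optional[List[float]] = None) -> float:
    """Minimax value of `node`, skipping sub-trees that cannot matter."""
    if not isinstance(node, list):
        if visited is not None:
            visited.append(node)  # record each leaf we really evaluate
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, visited))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # beta cutoff: a MIN ancestor already has something better
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, visited))
        beta = min(beta, value)
        if beta <= alpha:
            break  # alpha cutoff: a MAX ancestor already has something better
    return value
```

As a usage example in the spirit of the beta cutoff at node B, take a MIN node S whose first MAX child has leaves 20, -10, -20 and whose second MAX child starts with a leaf of 25 (this leaf layout is an assumption). `alphabeta([[20, -10, -20], [25, 7, 99]], maximizing=False)` returns 20, and the leaves 7 and 99 are never evaluated because alpha = 25 >= beta = 20 after the first leaf of the second child.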

Use
§ We project ahead k moves, but we only make one move (the best one)
§ After our opponent moves, we project ahead k moves again, so we are possibly repeating some work
§ However, since most of the work is at the leaves anyway, the amount of work we redo isn’t significant (think of iterative deepening)
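
The search-one-move-then-search-again loop can be sketched on a toy game. Everything here is an assumption chosen to keep the example small: the game is a subtraction game (take 1 to 3 sticks from a pile; whoever takes the last stick wins), positions at the search horizon are scored 0, and the function names are hypothetical.

```python
from typing import Callable


def value(pile: int, depth: int, my_turn: bool) -> int:
    """Depth-limited minimax value from my point of view (+1 win, -1 loss)."""
    if pile == 0:
        # The player who just moved took the last stick and won.
        return -1 if my_turn else 1
    if depth == 0:
        return 0  # horizon reached: outcome unknown, score it as neutral
    results = [value(pile - take, depth - 1, not my_turn)
               for take in (1, 2, 3) if take <= pile]
    return max(results) if my_turn else min(results)


def choose_take(pile: int, k: int) -> int:
    """Project ahead k plies and return only the single best move."""
    takes = [take for take in (1, 2, 3) if take <= pile]
    return max(takes, key=lambda take: value(pile - take, k - 1, False))


def play(pile: int, k: int, opponent: Callable[[int], int]) -> str:
    """Alternate turns; I re-run the k-ply search from scratch every turn."""
    my_turn = True
    while True:
        take = choose_take(pile, k) if my_turn else opponent(pile)
        pile -= take
        if pile == 0:
            return "me" if my_turn else "opponent"
        my_turn = not my_turn
```

For instance, `play(5, 5, lambda pile: 1)` pits the searcher against an opponent that always takes one stick. Each call to `choose_take` repeats part of the previous search, which is the redundancy the slide refers to.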

Alpha-beta performance
§ Best-case: can search to twice the depth during a fixed amount of time [O(b^(d/2)) vs. O(b^d)]
§ Worst-case: no savings
  - alpha-beta pruning & minimax always return the same answer
  - the difference is the amount of work they do
  - effectiveness depends on the order in which successors are examined
    • want to examine the best first
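
The effect of successor ordering can be seen by counting leaf evaluations on the same small tree under two orderings. This is a sketch under assumptions: trees are nested lists of leaf scores, and `reorder` cheats by using full minimax values to sort the children, which a real program cannot do and would approximate with a heuristic.

```python
import math
from typing import List, Tuple, Union

Tree = Union[int, float, list]


def minimax(node: Tree, maximizing: bool) -> float:
    if not isinstance(node, list):
        return node
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def reorder(node: Tree, maximizing: bool, best_first: bool) -> Tree:
    """Sort every node's children best-first (or worst-first) for the mover."""
    if not isinstance(node, list):
        return node
    children = [reorder(child, not maximizing, best_first) for child in node]
    # Best for MAX is the highest value; best for MIN is the lowest value.
    descending = (maximizing == best_first)
    return sorted(children, key=lambda c: minimax(c, not maximizing),
                  reverse=descending)


def alphabeta(node: Tree, maximizing: bool, alpha: float, beta: float,
              counter: List[int]) -> float:
    if not isinstance(node, list):
        counter[0] += 1  # one more leaf evaluated
        return node
    value = -math.inf if maximizing else math.inf
    for child in node:
        score = alphabeta(child, not maximizing, alpha, beta, counter)
        if maximizing:
            value = max(value, score)
            alpha = max(alpha, value)
        else:
            value = min(value, score)
            beta = min(beta, value)
        if alpha >= beta:
            break  # remaining children cannot change the result
    return value


def leaves_examined(tree: Tree) -> Tuple[float, int]:
    """Return (root value, number of leaves evaluated) with MAX at the root."""
    counter = [0]
    value = alphabeta(tree, True, -math.inf, math.inf, counter)
    return value, counter[0]


tree = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
best = reorder(tree, maximizing=True, best_first=True)
worst = reorder(tree, maximizing=True, best_first=False)
```

On this tree (b = 3, d = 2) both orderings back up the same root value, but the best-first ordering evaluates 5 of the 9 leaves while the worst-first ordering evaluates all 9.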

Refinements
§ Waiting for quiescence
  - avoids the horizon effect
    • disaster is lurking just beyond our search depth
    • on the nth move (the maximum depth I can see) I take your rook, but on the (n+1)th move (a depth to which I don’t look) you checkmate me
  - solution
    • when predicted values are changing frequently, search deeper in that part of the tree (quiescence search)
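
The rook-then-checkmate scenario can be reproduced with a small sketch. It is a simplified form of quiescence extension, not the scheme used by real chess programs: positions are hypothetical dicts with a static `score`, a `quiet` flag and optional `children`, and a non-quiet position at the horizon is searched for up to `extra` additional plies.

```python
from typing import Any, Dict

Position = Dict[str, Any]


def search(node: Position, depth: int, maximizing: bool, extra: int = 2) -> float:
    """Depth-limited minimax that keeps going below non-quiet horizon nodes."""
    children = node.get("children", [])
    if not children:
        return node["score"]
    if depth <= 0:
        # At the horizon: stop if the position is quiet or the budget is spent.
        if node.get("quiet", True) or extra == 0:
            return node["score"]
        extra -= 1  # spend one extra ply on this unstable line
    values = [search(child, depth - 1, not maximizing, extra)
              for child in children]
    return max(values) if maximizing else min(values)


# I can take a rook (static score +5), but the reply is checkmate (-1000).
take_rook = {"score": 5, "quiet": False, "children": [{"score": -1000}]}
quiet_move = {"score": 1, "quiet": True, "children": [{"score": 1}]}
root = {"score": 0, "children": [take_rook, quiet_move]}
```

With `extra=0` a 1-ply search from `root` trusts the static score of the capture; with the default budget it looks one ply further in the unstable line and finds the checkmate.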

Secondary search
§ Find the best move by looking to depth d
§ Look k steps beyond this best move to see if it still looks good
§ No? Look further at the second-best move, etc.
  - in general, do a deeper search at parts of the tree that look “interesting”
§ Picture

Book moves
§ Build a database of opening moves, end games, tough examples, etc.
§ If the current state is in the database, use the knowledge in the database to determine the quality of a state
§ If it’s not in the database, just do alpha-beta pruning
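
The lookup-then-search policy amounts to a few lines. In this sketch the book is assumed to be a plain dict from a hashable state to a stored move, and `search` stands for whatever alpha-beta routine is available; both are placeholders rather than a real database format.

```python
from typing import Callable, Dict, Hashable, TypeVar

Move = TypeVar("Move")


def choose_move(state: Hashable,
                book: Dict[Hashable, Move],
                search: Callable[[Hashable], Move]) -> Move:
    """Use the stored book knowledge when the state is known, else search."""
    if state in book:
        return book[state]
    return search(state)


# Hypothetical one-entry opening book, keyed by a string position name.
opening_book = {"start": "e2e4"}
```

`choose_move("start", opening_book, some_search)` answers from the book without calling `some_search`; any state missing from the book is passed on to the search.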

AI & games
§ Initially felt to be a great AI testbed
§ It turned out, however, that brute-force search is better than a lot of knowledge engineering
  - scaling up by dumbing down
    • perhaps then intelligence doesn’t have to be humanlike
  - more high-speed hardware issues than AI issues
  - however, still good test-beds for learning