FUNDAMENTALS OF DEEP LEARNING BY RAUF UR RAHIM

PRE-REQ • A LITTLE KNOWLEDGE OF THE PYTHON PROGRAMMING LANGUAGE • AI IN GENERAL • A STRONG INTENTION

WHAT WE WILL COVER? • BOOK “DEEP LEARNING WITH PYTHON” BY FRANÇOIS CHOLLET • WHAT IS DEEP LEARNING • MATHEMATICAL BUILDING BLOCKS OF DEEP LEARNING • GETTING STARTED WITH NEURAL NETWORKS • FUNDAMENTALS OF MACHINE LEARNING

WHAT WE WILL COVER • FUNDAMENTALS OF ARTIFICIAL INTELLIGENCE, MACHINE LEARNING & DEEP LEARNING • A BRIEF HISTORY OF MACHINE LEARNING AND ALGORITHMS • INTRODUCTION TO DEEP LEARNING AND NEURAL NETWORK OPERATIONS • THE RISE OF DEEP LEARNING

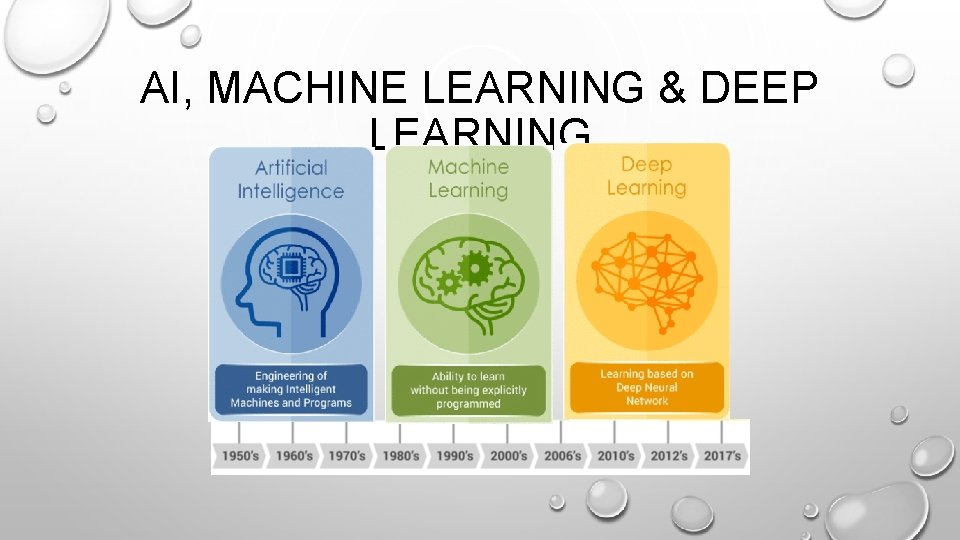

AI, MACHINE LEARNING & DEEP LEARNING

EXAMPLE OF AI SYSTEM

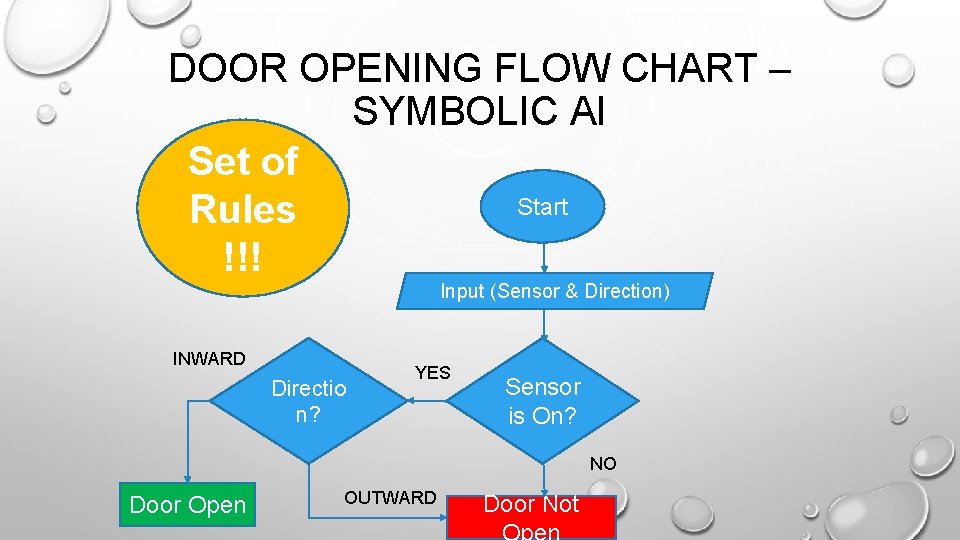

DOOR OPENING FLOW CHART – SYMBOLIC AI (SET OF RULES) • Start • Input (Sensor & Direction) • Direction? OUTWARD: Door Not Open; INWARD: check the sensor • Sensor is On? YES: Door Open; NO: Door Not Open

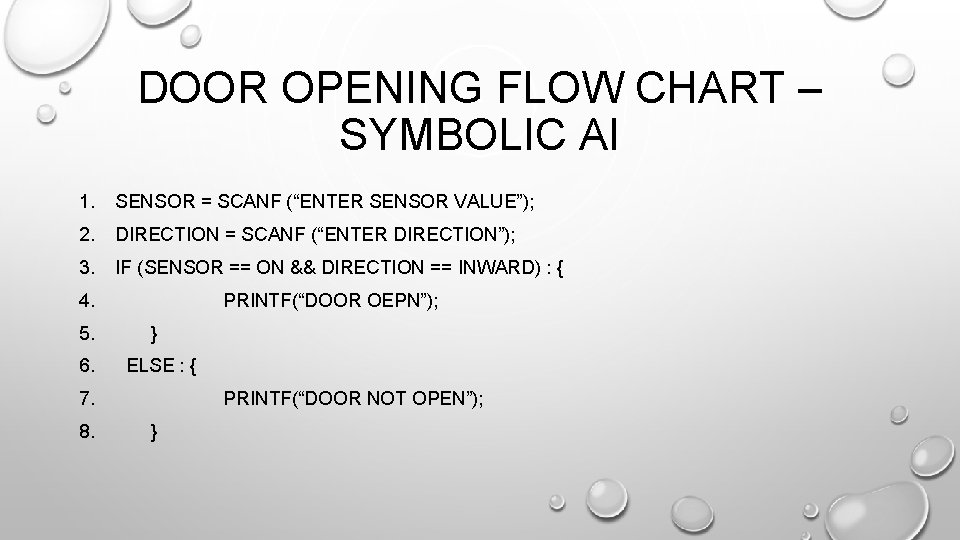

DOOR OPENING FLOW CHART – SYMBOLIC AI

    SENSOR = SCANF(“ENTER SENSOR VALUE”);
    DIRECTION = SCANF(“ENTER DIRECTION”);
    IF (SENSOR == ON && DIRECTION == INWARD) {
        PRINTF(“DOOR OPEN”);
    } ELSE {
        PRINTF(“DOOR NOT OPEN”);
    }

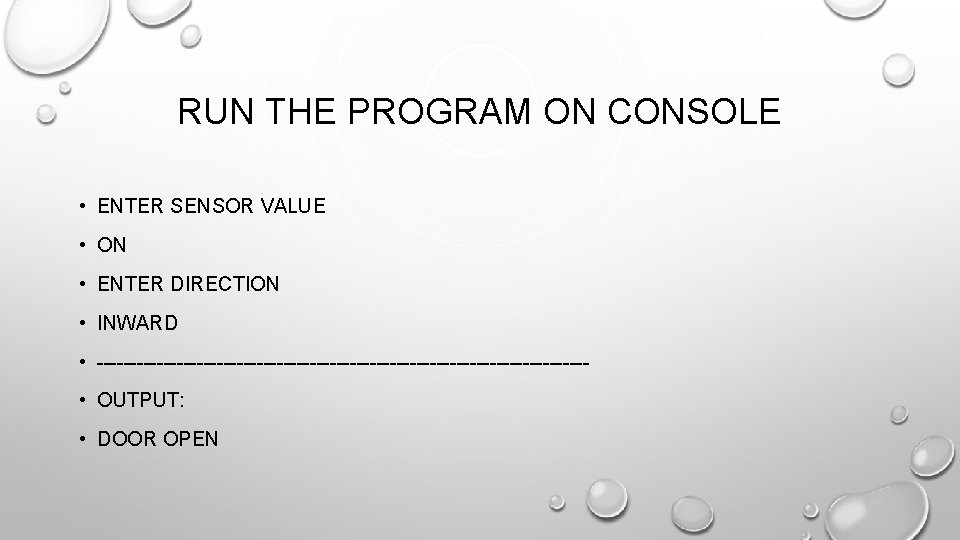

RUN THE PROGRAM ON CONSOLE • ENTER SENSOR VALUE • ON • ENTER DIRECTION • INWARD • ------------------------------------- • OUTPUT: • DOOR OPEN
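The rule-based program above could be sketched in Python roughly as follows. This is an illustrative translation rather than the original slide code; the function name `door_state` and the lowercase string comparison are assumptions made for the example.

```python
def door_state(sensor: str, direction: str) -> str:
    """Hand-written rule: the door opens only when the sensor is on
    and the movement direction is inward."""
    sensor_on = sensor.strip().lower() == "on"
    moving_inward = direction.strip().lower() == "inward"
    if sensor_on and moving_inward:
        return "DOOR OPEN"
    return "DOOR NOT OPEN"


# Try every combination of the two inputs.
for sensor in ("on", "off"):
    for direction in ("inward", "outward"):
        print(sensor, direction, "->", door_state(sensor, direction))
```

The programmer writes the rule by hand here; the program only applies it to the input data.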

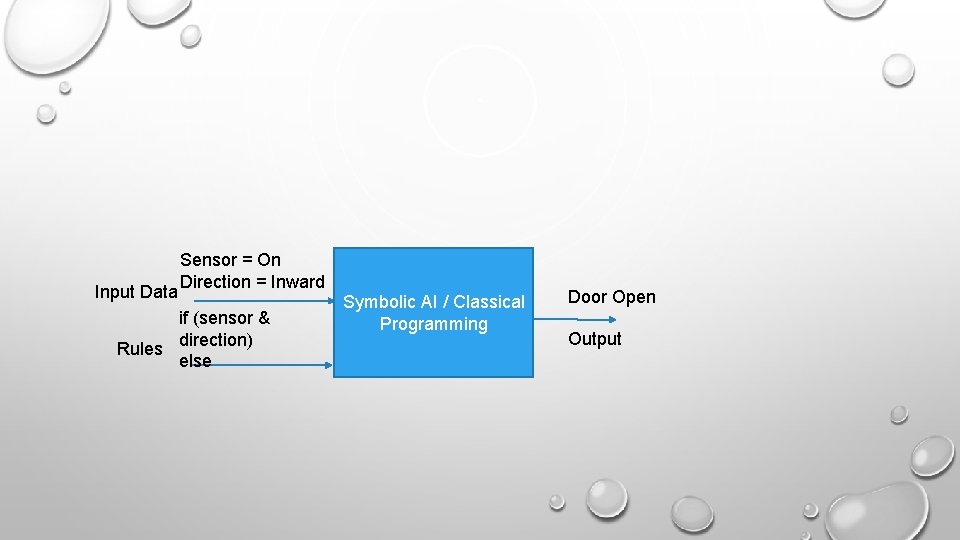

Symbolic AI / Classical Programming • Input Data: Sensor = On, Direction = Inward • Rules: if (sensor & direction) / else • Output: Door Open

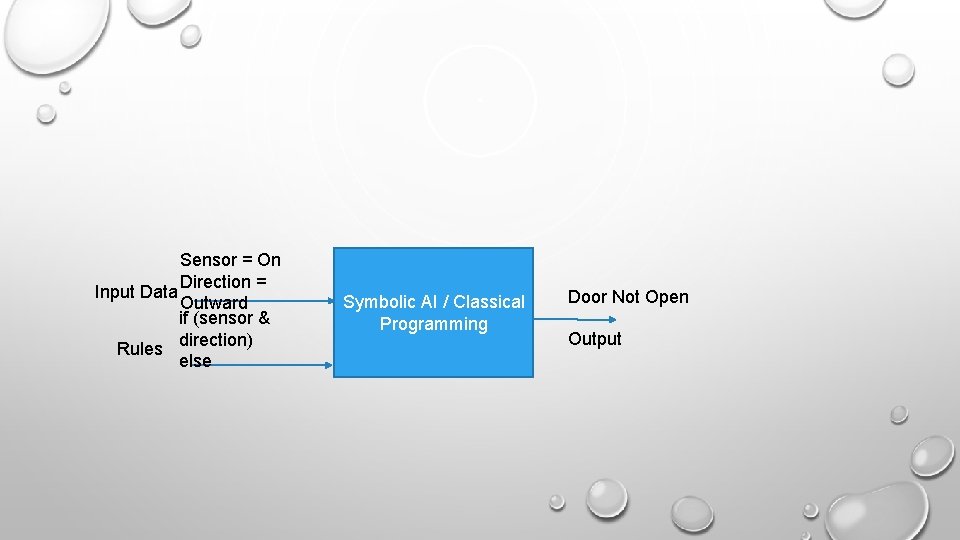

Symbolic AI / Classical Programming • Input Data: Sensor = On, Direction = Outward • Rules: if (sensor & direction) / else • Output: Door Not Open

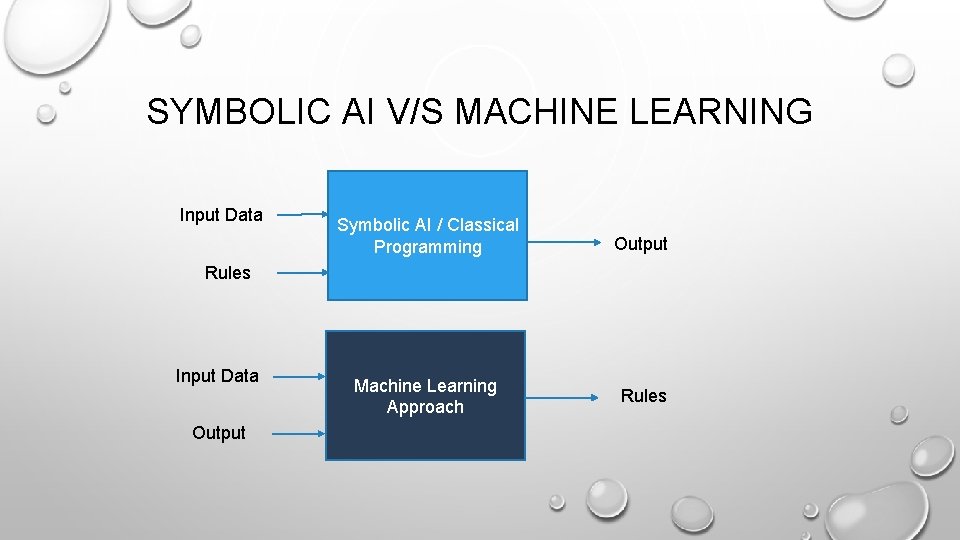

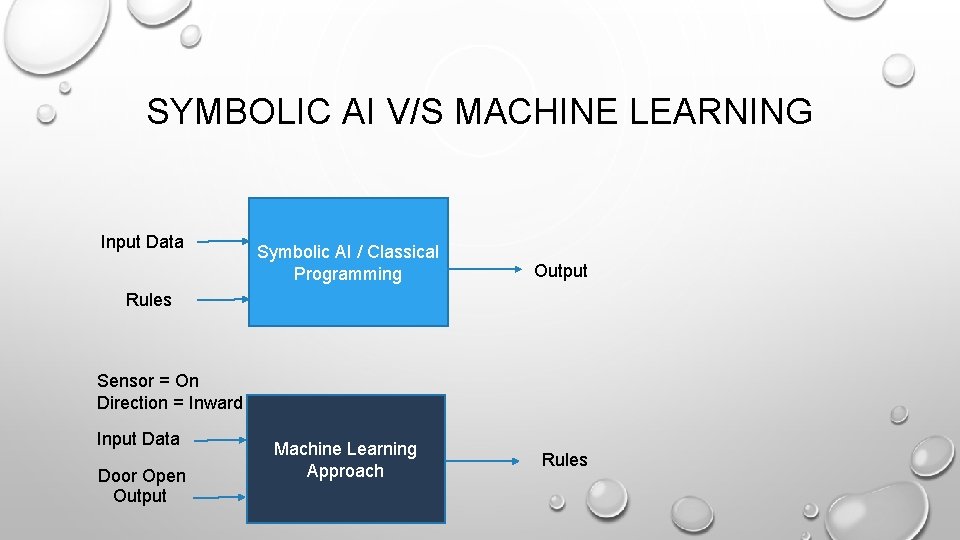

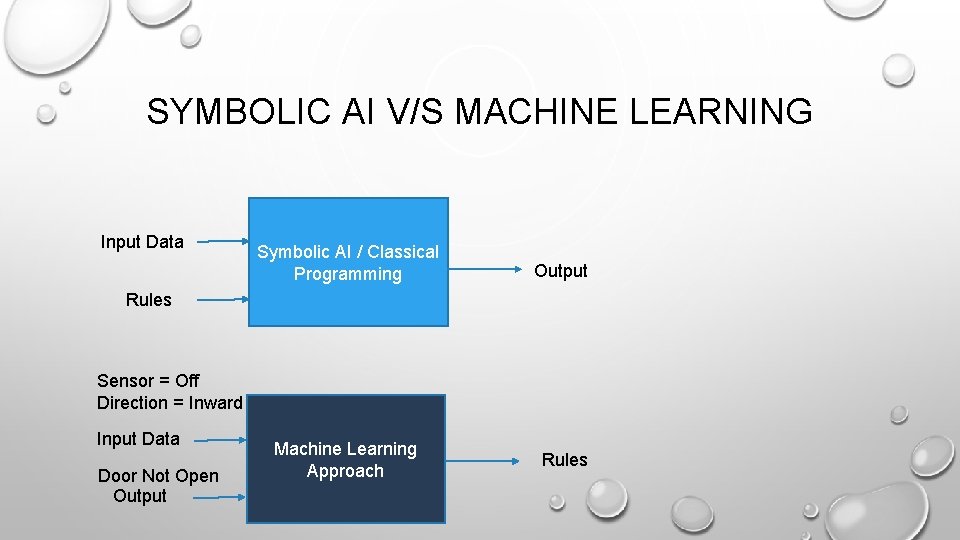

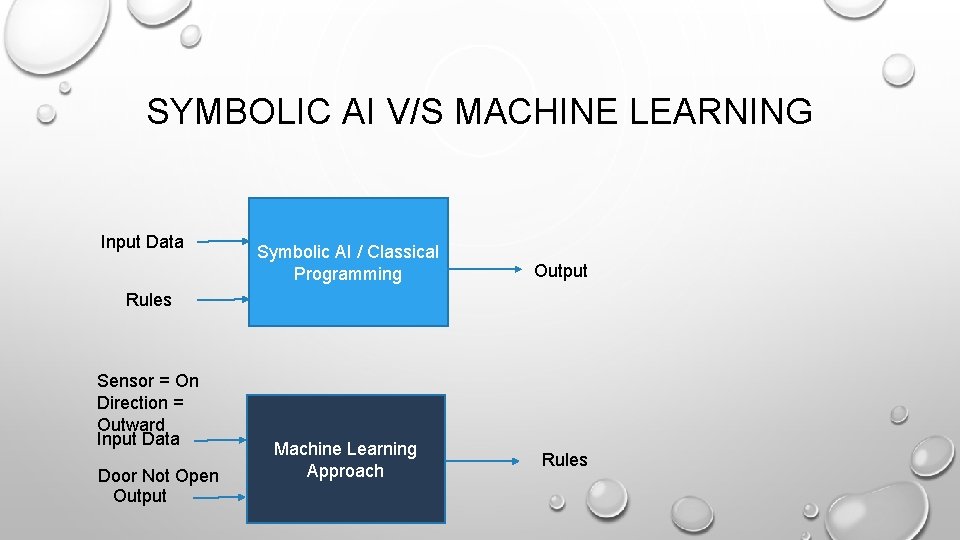

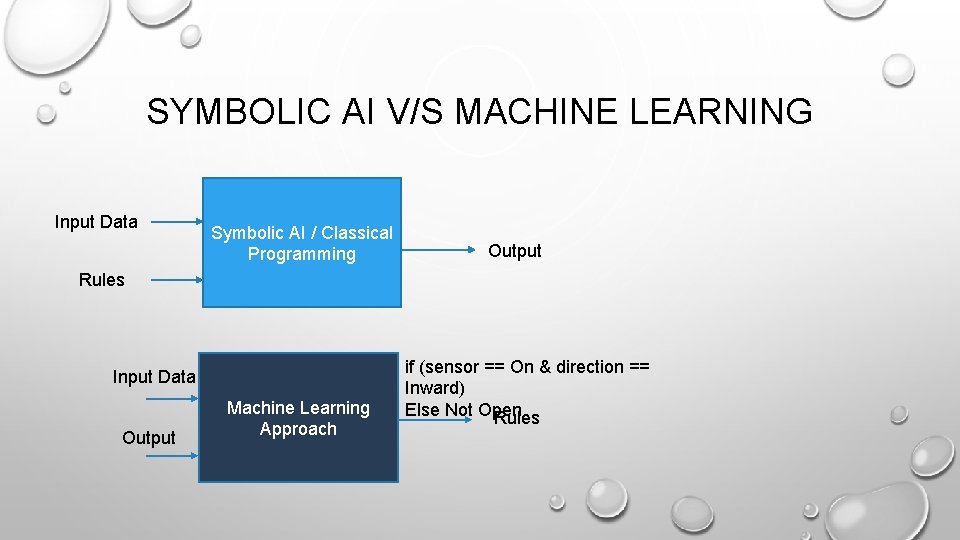

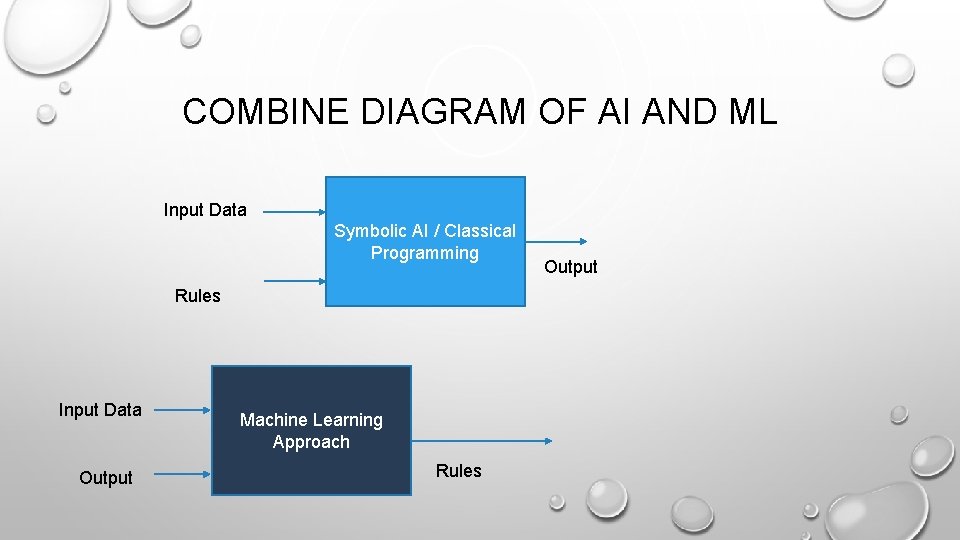

SYMBOLIC AI V/S MACHINE LEARNING • Symbolic AI / Classical Programming: Input Data + Rules → Output • Machine Learning Approach: Input Data + Output → Rules

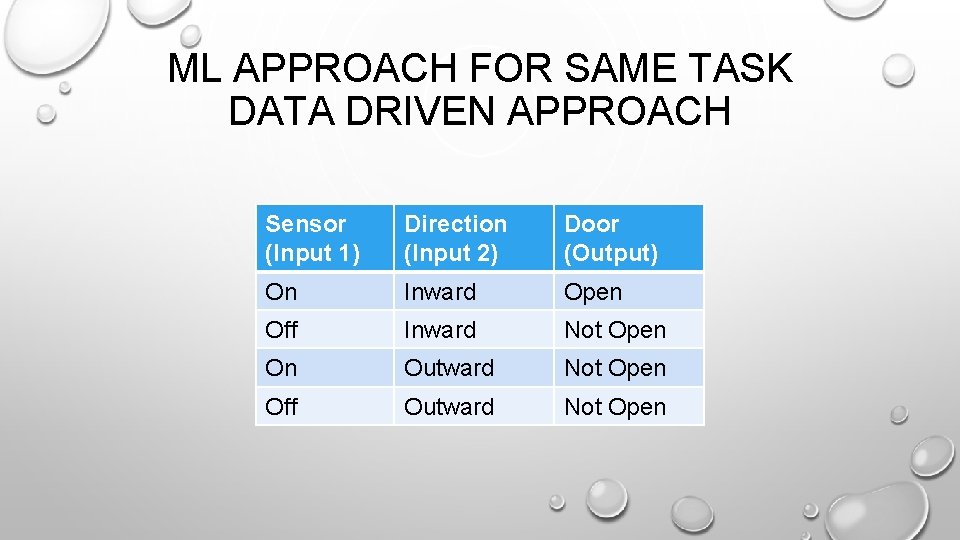

ML APPROACH FOR SAME TASK • DATA-DRIVEN APPROACH

| Sensor (Input 1) | Direction (Input 2) | Door (Output) |
| --- | --- | --- |
| On | Inward | Open |
| Off | Inward | Not Open |
| On | Outward | Not Open |
| Off | Outward | Not Open |

SYMBOLIC AI V/S MACHINE LEARNING • Symbolic AI / Classical Programming: Input Data + Rules → Output • Machine Learning Approach: Input Data (Sensor = On, Direction = Inward) + Output (Door Open) → Rules

SYMBOLIC AI V/S MACHINE LEARNING • Symbolic AI / Classical Programming: Input Data + Rules → Output • Machine Learning Approach: Input Data (Sensor = Off, Direction = Inward) + Output (Door Not Open) → Rules

SYMBOLIC AI V/S MACHINE LEARNING • Symbolic AI / Classical Programming: Input Data + Rules → Output • Machine Learning Approach: Input Data (Sensor = On, Direction = Outward) + Output (Door Not Open) → Rules

SYMBOLIC AI V/S MACHINE LEARNING • Symbolic AI / Classical Programming: Input Data + Rules → Output • Machine Learning Approach: Input Data + Output → Rules: if (sensor == On & direction == Inward) Open, else Not Open

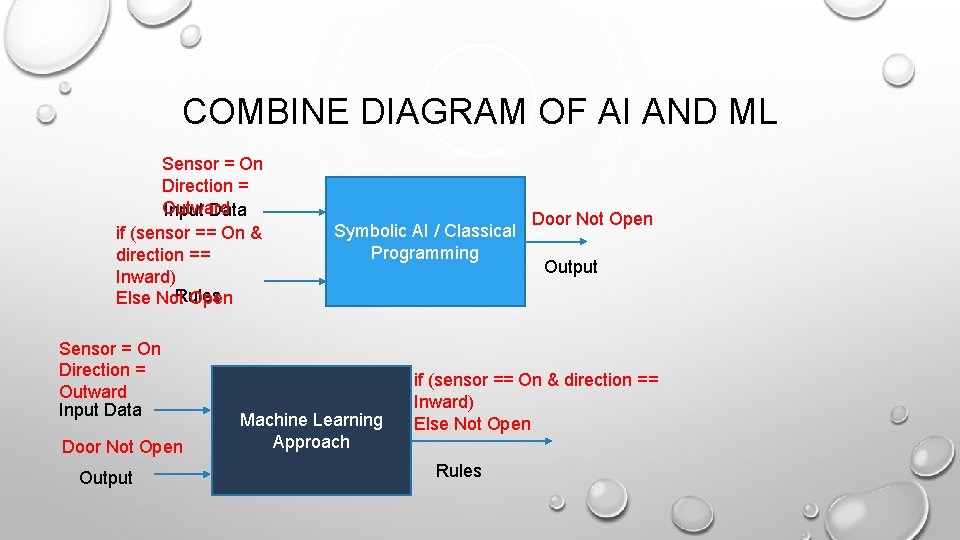

COMBINED DIAGRAM OF AI AND ML • Machine Learning Approach: Input Data (Sensor = On, Direction = Outward) + Output (Door Not Open) → Rules: if (sensor == On & direction == Inward) Open, else Not Open • Symbolic AI / Classical Programming: Input Data (Sensor = On, Direction = Outward) + Rules: if (sensor == On & direction == Inward) Open, else Not Open → Output (Door Not Open)

COMBINED DIAGRAM OF AI AND ML • Machine Learning Approach: Input Data + Output → Rules • Symbolic AI / Classical Programming: Input Data + Rules → Output

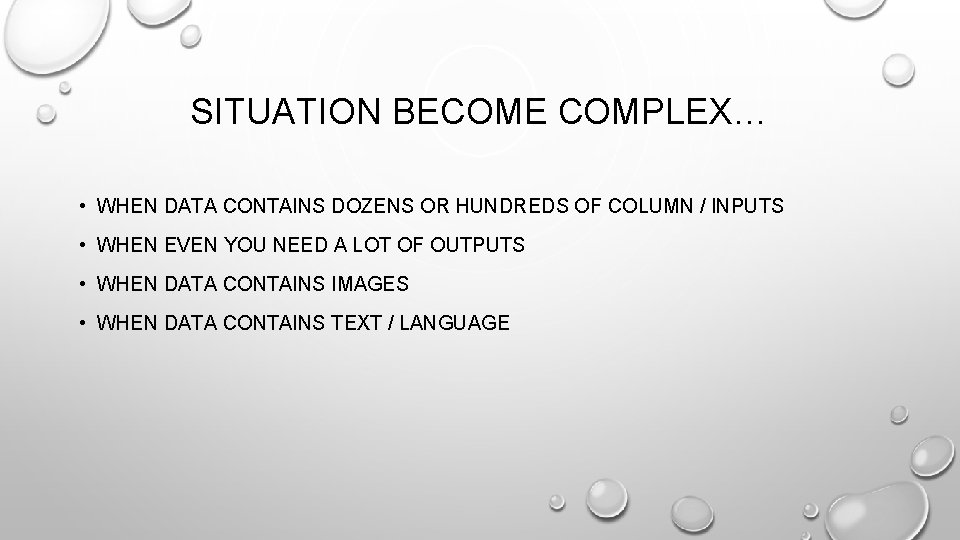

THE SITUATION BECOMES COMPLEX… • WHEN DATA CONTAINS DOZENS OR HUNDREDS OF COLUMNS / INPUTS • WHEN YOU NEED MANY OUTPUTS • WHEN DATA CONTAINS IMAGES • WHEN DATA CONTAINS TEXT / LANGUAGE

3 ESSENTIAL THINGS IN MACHINE LEARNING 1. INPUT DATA POINTS 2. EXAMPLES OF THE EXPECTED OUTPUT 3. A WAY TO MEASURE HOW WELL THE ALGORITHM IS DOING

2. A BRIEF HISTORY OF MACHINE LEARNING & ALGORITHMS • SINCE ITS INCEPTION, ML HAS GAINED THE ATTENTION OF INDUSTRY • DEEP LEARNING IS NOT ALWAYS THE RIGHT TOOL, FOR EXAMPLE WHEN THE DATASET IS LIMITED • IF ALL YOU HAVE IS A DEEP-LEARNING HAMMER, EVERY MACHINE-LEARNING PROBLEM STARTS TO LOOK LIKE A NAIL • LET US LOOK AT A FEW ML ALGORITHMS

2.1 PROBABILISTIC MODELING • WORKS BASED ON THE PRINCIPLES OF STATISTICS • NAIVE BAYES, BUILT ON BAYES' THEOREM, IS A COMMON EXAMPLE • LOGISTIC REGRESSION IS A CLOSELY RELATED EXAMPLE
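To give a feel for the statistics behind probabilistic models, here is a small sketch of Bayes' theorem for a two-class problem. The function name and all probabilities are hypothetical, chosen only for illustration.

```python
def posterior(prior, likelihood, likelihood_if_not):
    """Bayes' theorem: P(class | evidence) from the class prior and the
    probability of the evidence with and without the class."""
    evidence = likelihood * prior + likelihood_if_not * (1.0 - prior)
    return likelihood * prior / evidence


# Hypothetical numbers: 20% of messages are spam, a given word appears in
# 60% of spam messages and in 5% of non-spam messages.
p_spam_given_word = posterior(prior=0.2, likelihood=0.6, likelihood_if_not=0.05)
print(round(p_spam_given_word, 3))
```

With these made-up numbers the posterior comes out at about 0.75: seeing the word raises the estimated probability of spam from 20% to roughly 75%.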

2.2 EARLY NEURAL NETWORKS • IDEAS OF NEURAL NETWORKS WERE INVESTIGATED IN TOY FORMS AS EARLY AS THE 1950S • THE APPROACH TOOK DECADES TO GET STARTED • BREAKTHROUGH IN THE 1980S, WHEN SCIENTISTS WORKED ON • BACKPROPAGATION • GRADIENT-DESCENT OPTIMIZATION • FIRST SUCCESSFUL PRACTICAL APPLICATION IN 1989, WHEN HANDWRITTEN CHARACTERS WERE RECOGNIZED

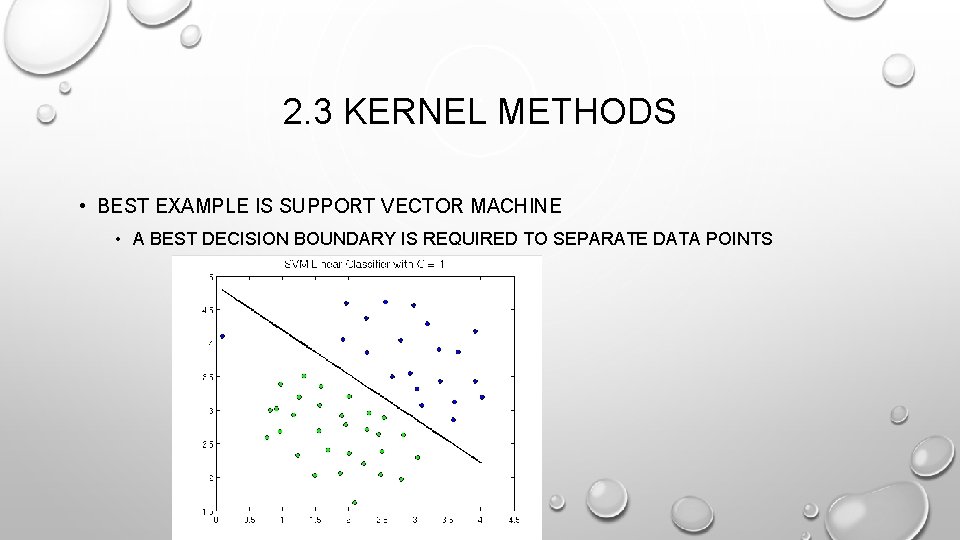

2.3 KERNEL METHODS • THE BEST-KNOWN EXAMPLE IS THE SUPPORT VECTOR MACHINE (SVM) • AN SVM LOOKS FOR A GOOD DECISION BOUNDARY THAT SEPARATES DATA POINTS OF DIFFERENT CLASSES

2. 4 DECISION TREES, RANDOM FORESTS AND GRADIENT BOOSTING MACHINES

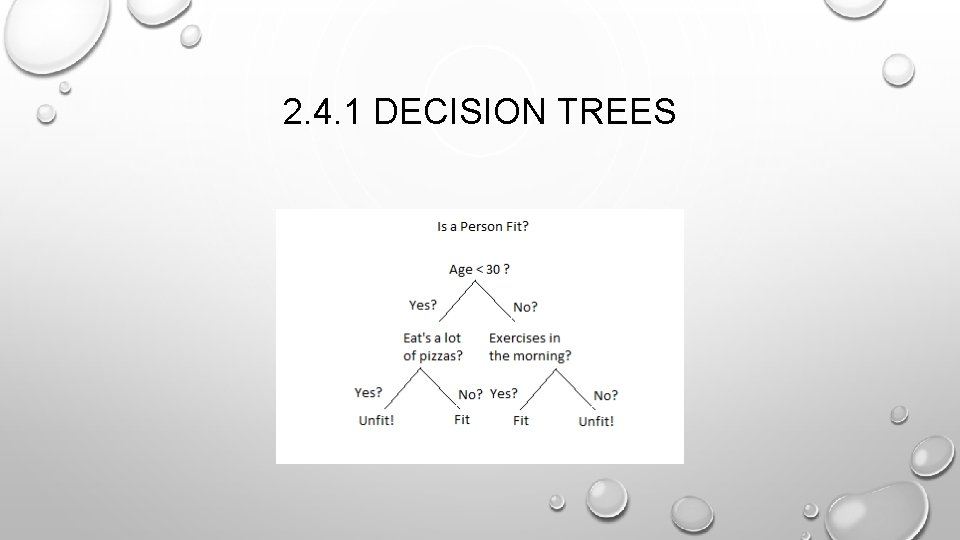

2. 4. 1 DECISION TREES

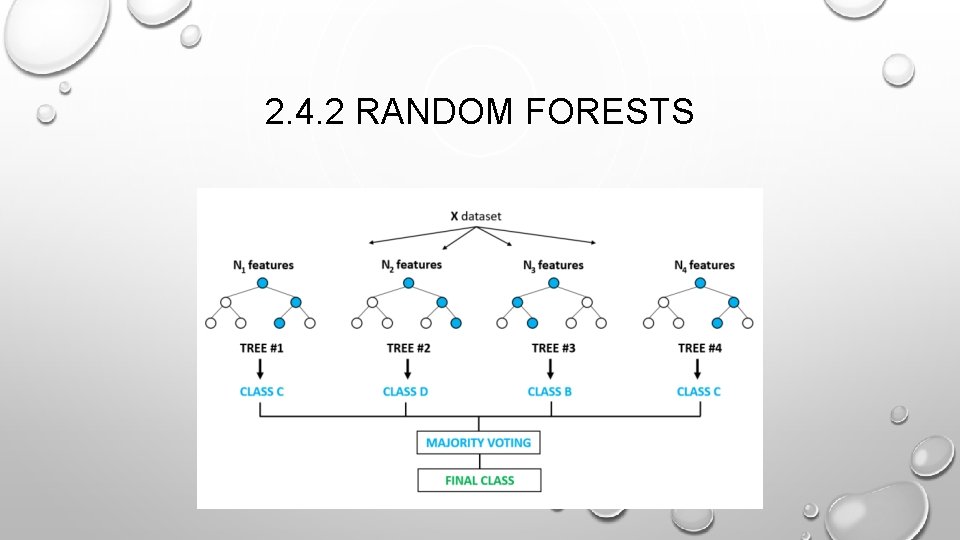

2. 4. 2 RANDOM FORESTS

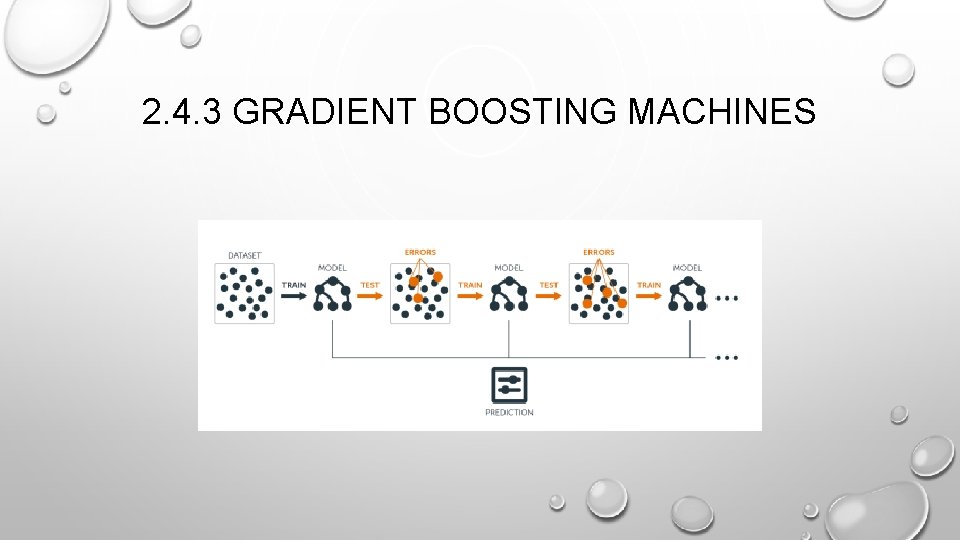

2. 4. 3 GRADIENT BOOSTING MACHINES

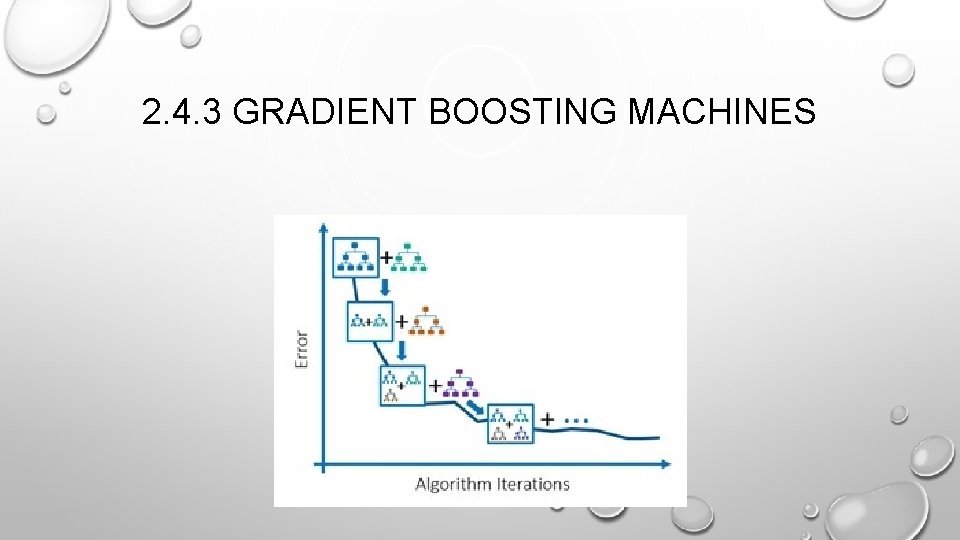

2.5 THE MODERN MACHINE-LEARNING LANDSCAPE • KAGGLE COMPETITIONS AS A BENCHMARK • WHAT KINDS OF ALGORITHMS WIN? • WHICH TOOLS ARE BEING USED? • TWO COMMON APPROACHES: • GRADIENT BOOSTING MACHINES • DEEP LEARNING • GRADIENT BOOSTING FOR STRUCTURED DATA (SHALLOW LEARNING) • DEEP LEARNING FOR PERCEPTUAL PROBLEMS SUCH AS TEXT, IMAGES, VIDEOS

3. DEEP LEARNING • AN ADVANCED FIELD OF MACHINE LEARNING • MULTILAYERED NEURAL NETWORKS • NO NEED FOR MANUAL FEATURE ENGINEERING • NOT JUST CATEGORIZATION BUT ALSO HUMAN-LIKE CAPABILITIES SUCH AS LEARNING AND ADAPTING

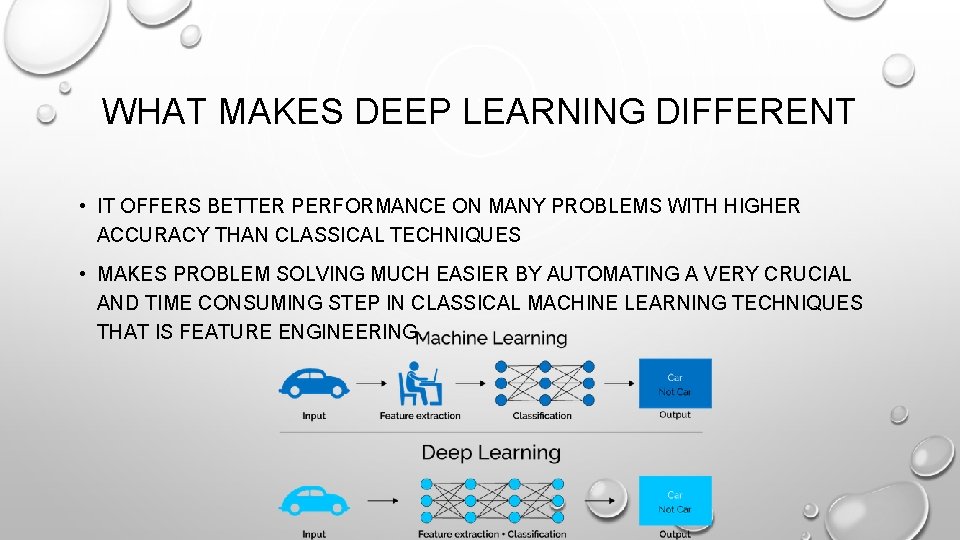

WHAT MAKES DEEP LEARNING DIFFERENT • ON MANY PROBLEMS IT OFFERS HIGHER ACCURACY THAN CLASSICAL TECHNIQUES • IT MAKES PROBLEM SOLVING MUCH EASIER BY AUTOMATING FEATURE ENGINEERING, A CRUCIAL AND TIME-CONSUMING STEP IN CLASSICAL MACHINE LEARNING

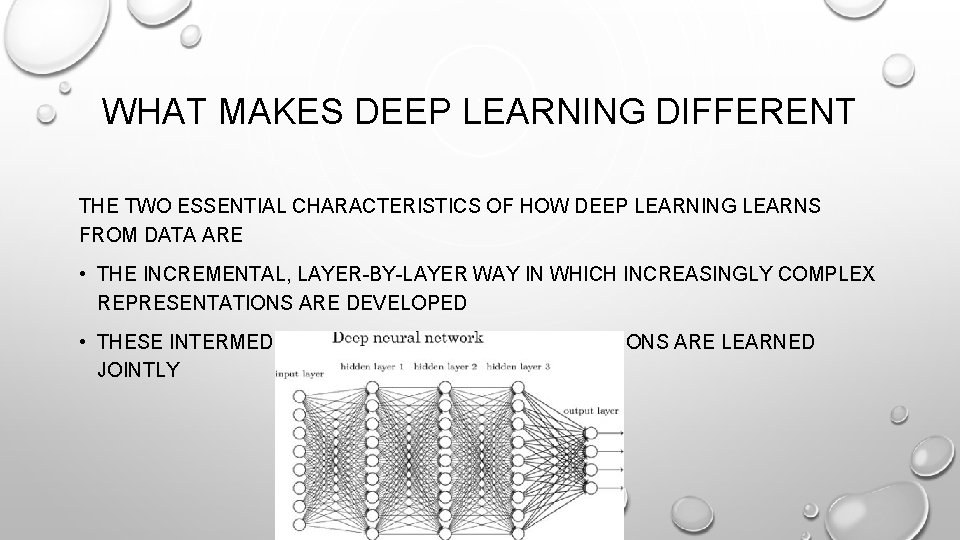

WHAT MAKES DEEP LEARNING DIFFERENT • THE TWO ESSENTIAL CHARACTERISTICS OF HOW DEEP LEARNING LEARNS FROM DATA ARE: • THE INCREMENTAL, LAYER-BY-LAYER WAY IN WHICH INCREASINGLY COMPLEX REPRESENTATIONS ARE DEVELOPED • THESE INTERMEDIATE INCREMENTAL REPRESENTATIONS ARE LEARNED JOINTLY

HUMAN BRAIN V/S NEURAL NETWORKS

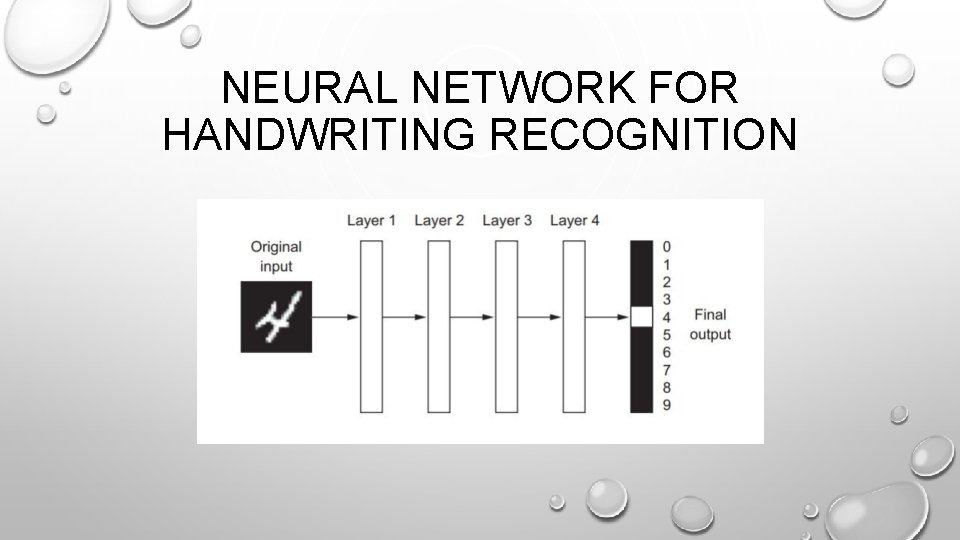

NEURAL NETWORK FOR HANDWRITING RECOGNITION

HUMAN LEARNING BEFORE DEEP LEARNING

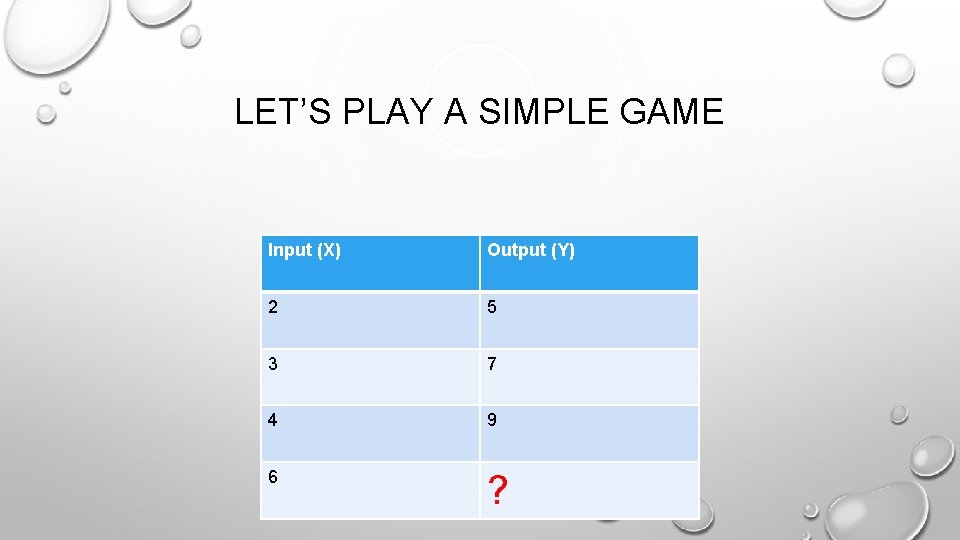

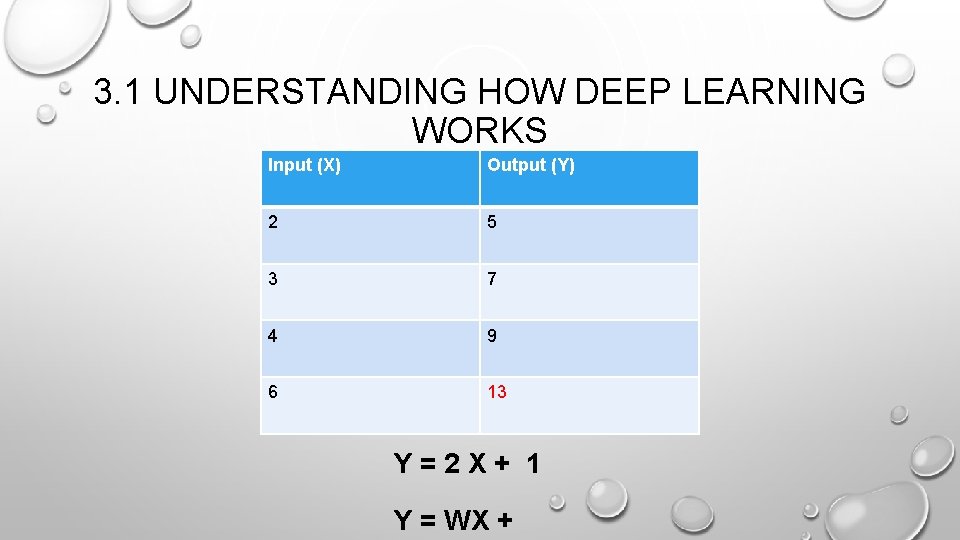

LET’S PLAY A SIMPLE GAME

| Input (X) | Output (Y) |
| --- | --- |
| 2 | 5 |
| 3 | 7 |
| 4 | 9 |
| 6 | ? |

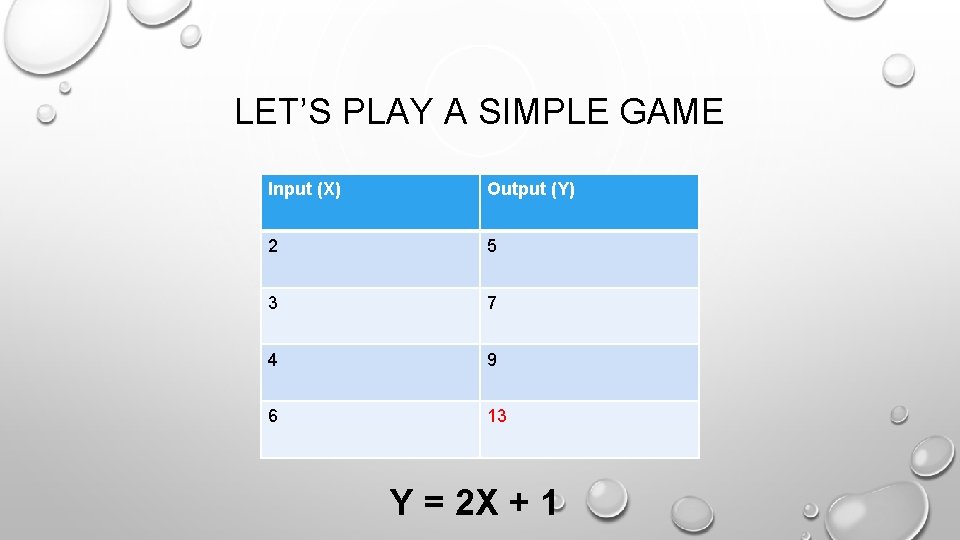

LET’S PLAY A SIMPLE GAME

| Input (X) | Output (Y) |
| --- | --- |
| 2 | 5 |
| 3 | 7 |
| 4 | 9 |
| 6 | 13 |

Y = 2X + 1

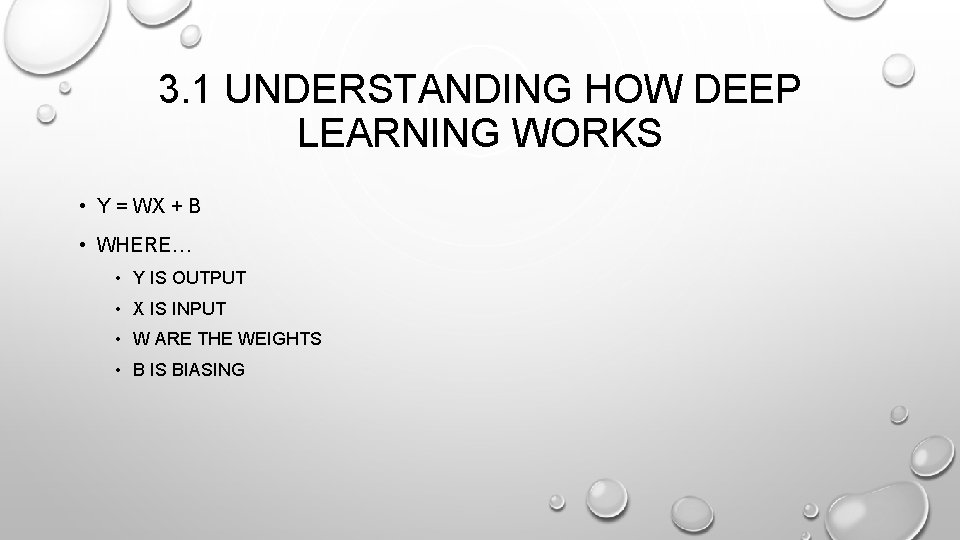

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS • Y = WX + B • WHERE… • Y IS THE OUTPUT • X IS THE INPUT • W ARE THE WEIGHTS • B IS THE BIAS
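The formula Y = WX + B can be written as a one-line function. The sketch below plugs in W = 2 and B = 1, the values that fit the simple game data; the function name `predict` is an assumption for illustration.

```python
def predict(x, w, b):
    """Single-input linear model: output = weight * input + bias."""
    return w * x + b


inputs = [2, 3, 4, 6]
outputs = [predict(x, w=2, b=1) for x in inputs]
print(outputs)  # [5, 7, 9, 13]
```

Learning, in this picture, means finding the values of W and B from the data instead of being told them.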

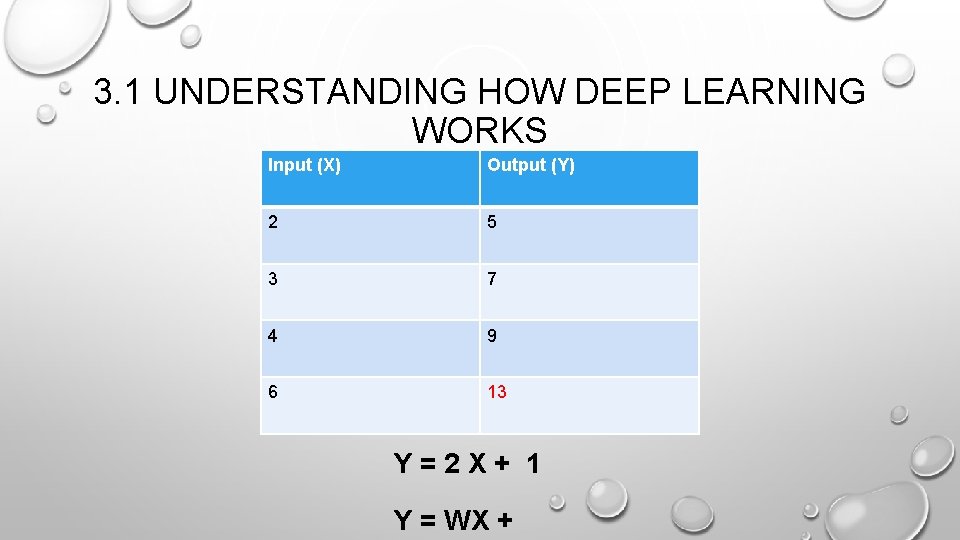

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS

| Input (X) | Output (Y) |
| --- | --- |
| 2 | 5 |
| 3 | 7 |
| 4 | 9 |
| 6 | 13 |

Y = 2X + 1 • Y = WX + B

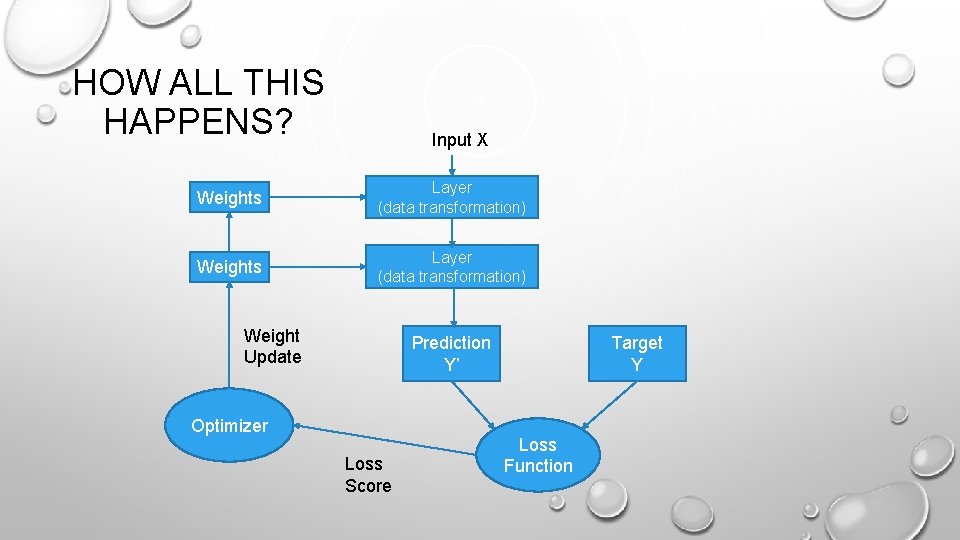

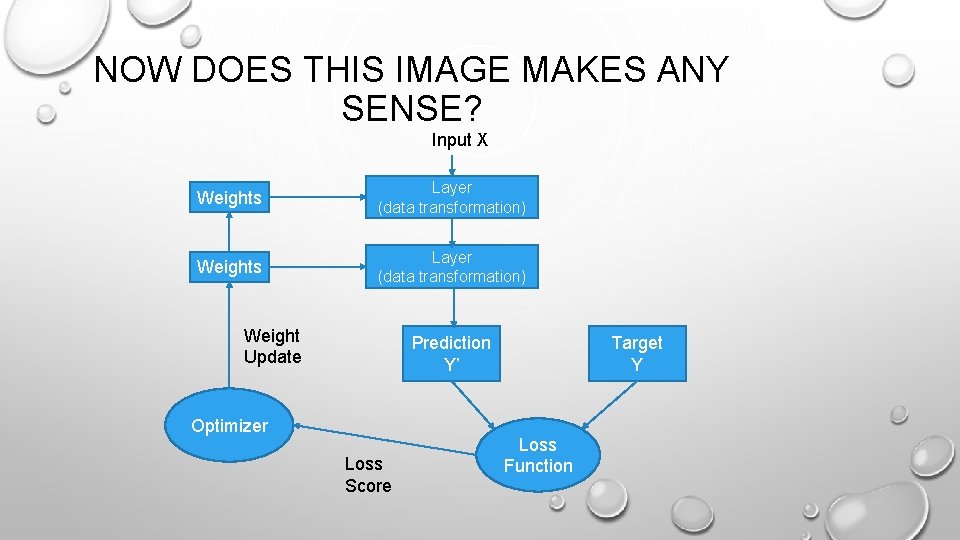

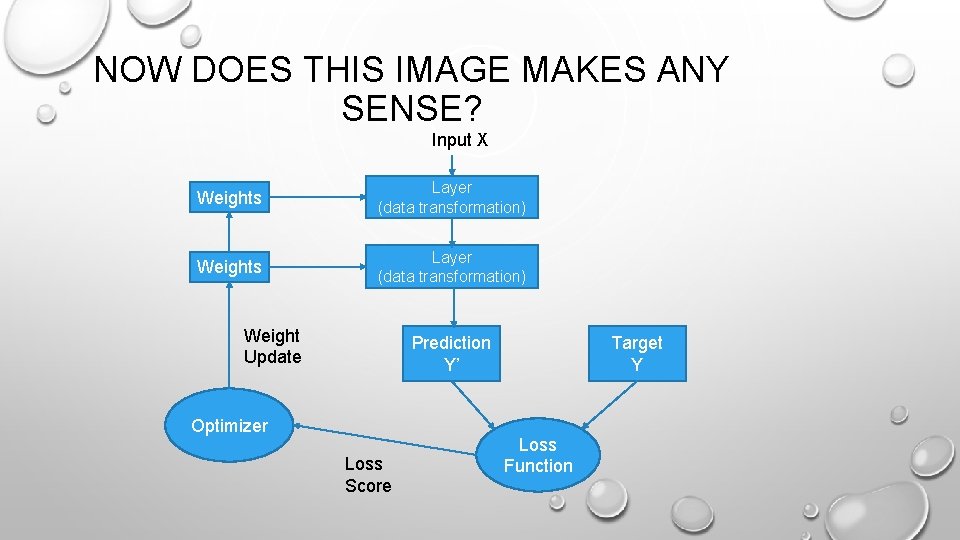

HOW DOES ALL THIS HAPPEN? • Input X → Layer (data transformation, using the Weights) → Prediction Y’ • Prediction Y’ + Target Y → Loss Function → Loss Score • Loss Score → Optimizer → Weight Update → Weights
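The loop in the diagram can be sketched in plain Python on the simple game data. This is a minimal illustration, assuming a mean-squared-error loss and plain gradient descent as the optimizer; the learning rate, step count, and names are choices made for this example, not values from the slides.

```python
xs = [2.0, 3.0, 4.0, 6.0]   # Input X
ys = [5.0, 7.0, 9.0, 13.0]  # Target Y


def train(xs, ys, learning_rate=0.02, steps=5000):
    """Fit y = w * x + b by repeating: predict, score the loss, update."""
    w, b = 0.0, 0.0  # starting weights (arbitrary choice)
    n = len(xs)
    for _ in range(steps):
        # Layer (data transformation): compute predictions Y'.
        preds = [w * x + b for x in xs]
        # Loss function: mean squared error; these are its gradients
        # with respect to w and b.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # Optimizer: move the weights a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(xs, ys)
print(round(w, 3), round(b, 3))
```

Under these assumptions the loop should settle very close to W = 2 and B = 1, the rule hidden in the data.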

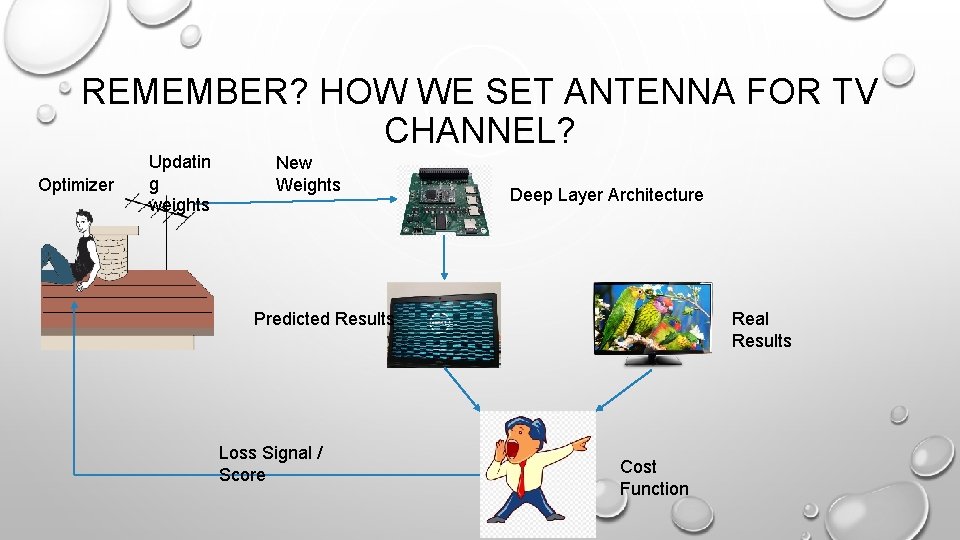

REMEMBER HOW WE SET THE ANTENNA FOR A TV CHANNEL? • Deep Layer Architecture → Predicted Results • Predicted Results + Real Results → Cost Function → Loss Signal / Score • Loss Signal / Score → Optimizer → Updating Weights → New Weights

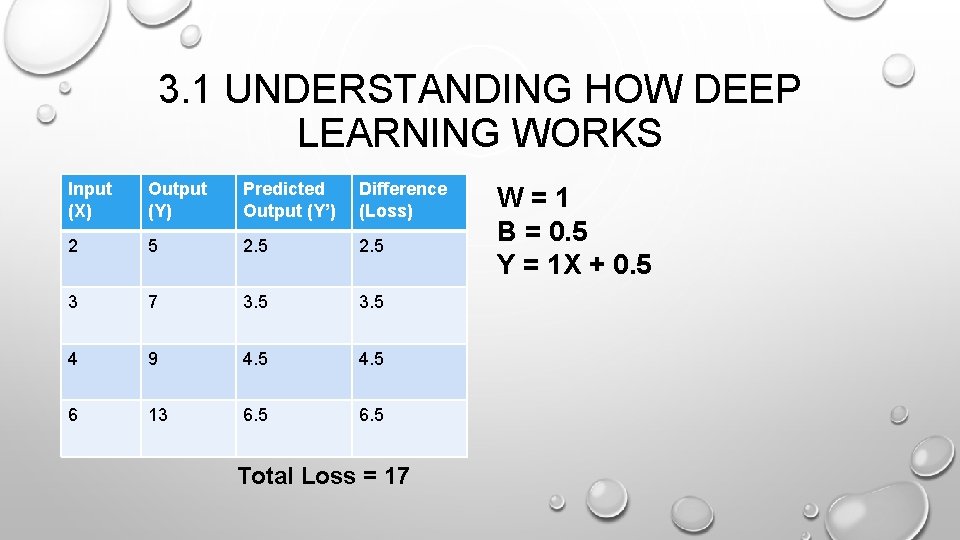

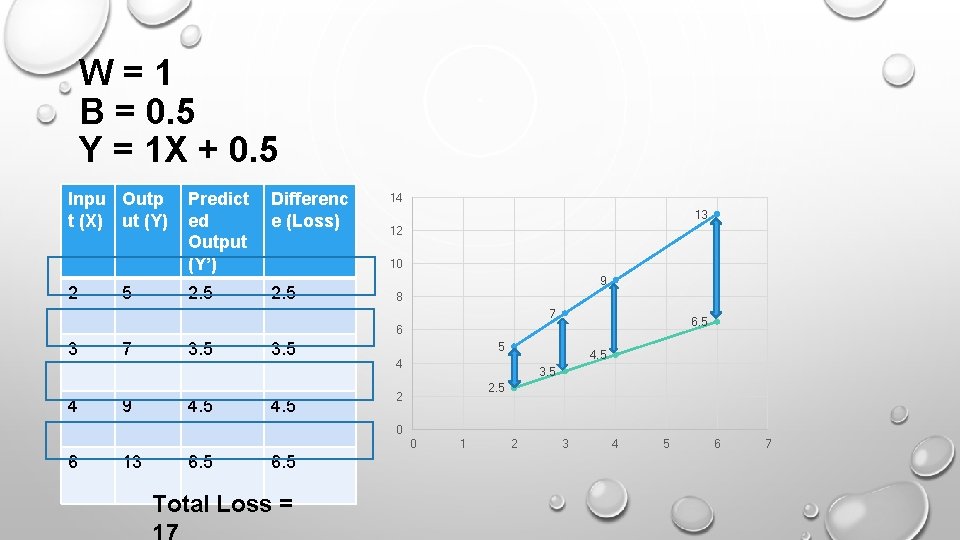

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS • W = 1, B = 0.5 • Y = 1X + 0.5

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 2.5 | 2.5 |
| 3 | 7 | 3.5 | 3.5 |
| 4 | 9 | 4.5 | 4.5 |
| 6 | 13 | 6.5 | 6.5 |

Total Loss = 17

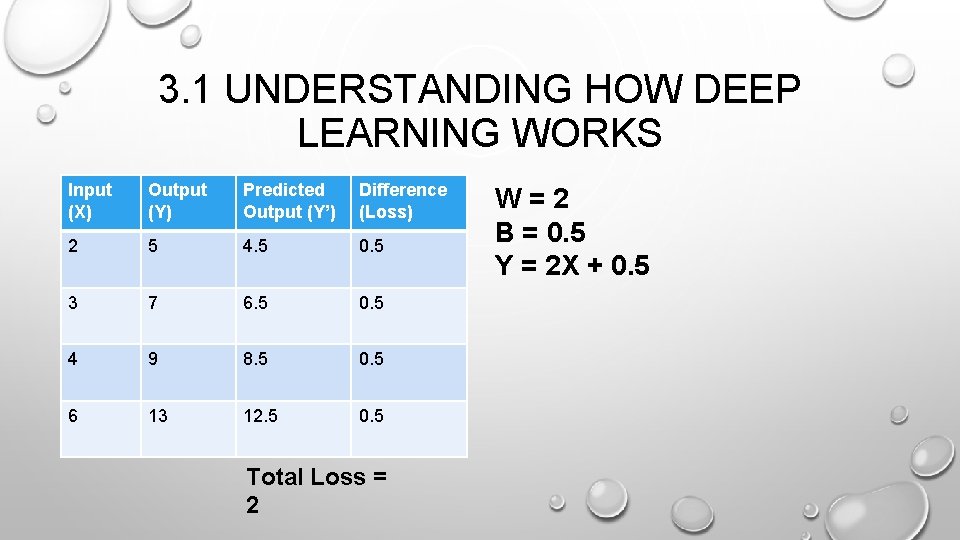

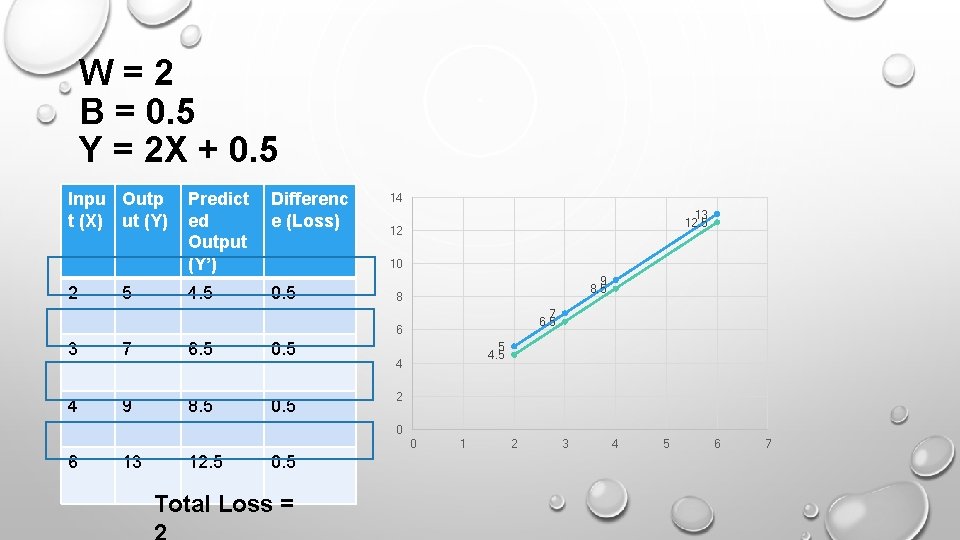

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS • W = 2, B = 0.5 • Y = 2X + 0.5

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 4.5 | 0.5 |
| 3 | 7 | 6.5 | 0.5 |
| 4 | 9 | 8.5 | 0.5 |
| 6 | 13 | 12.5 | 0.5 |

Total Loss = 2

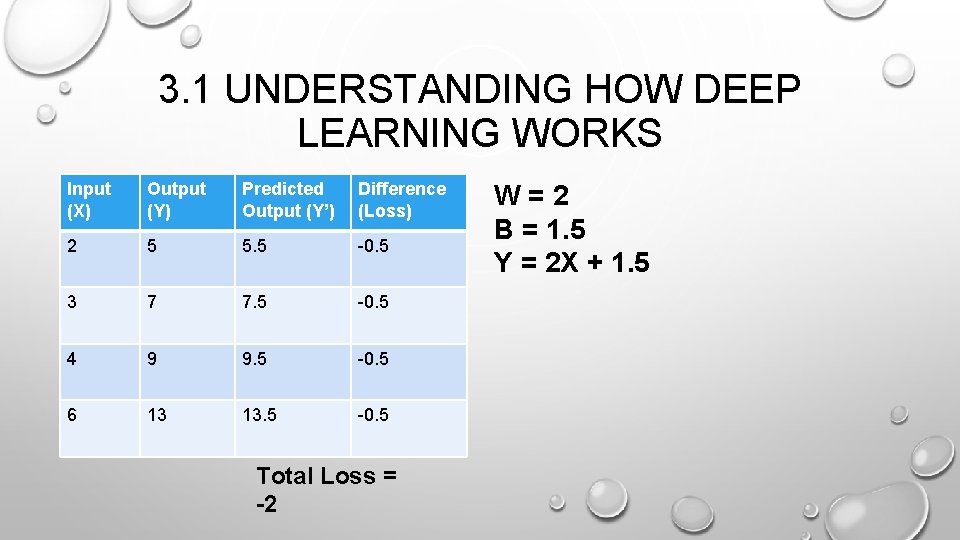

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS • W = 2, B = 1.5 • Y = 2X + 1.5

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 5.5 | -0.5 |
| 3 | 7 | 7.5 | -0.5 |
| 4 | 9 | 9.5 | -0.5 |
| 6 | 13 | 13.5 | -0.5 |

Total Loss = -2

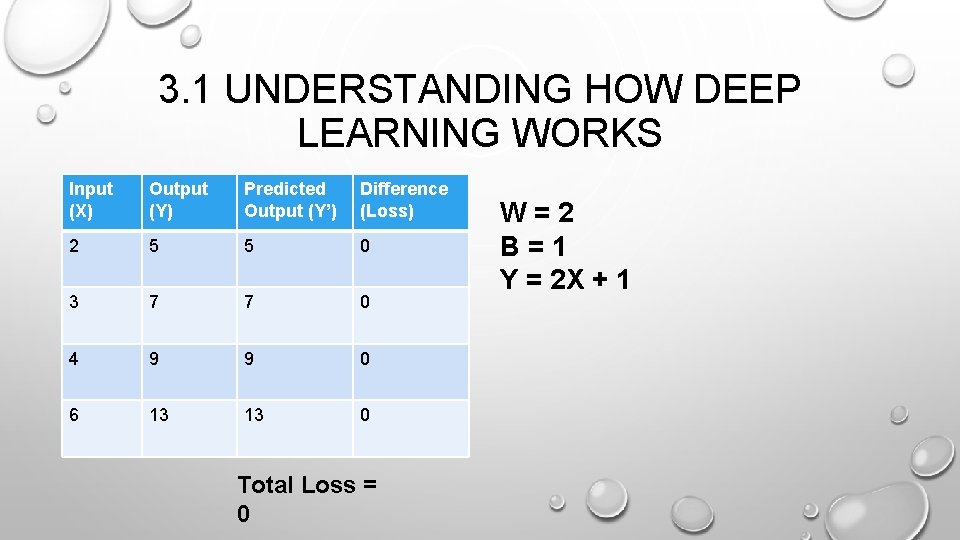

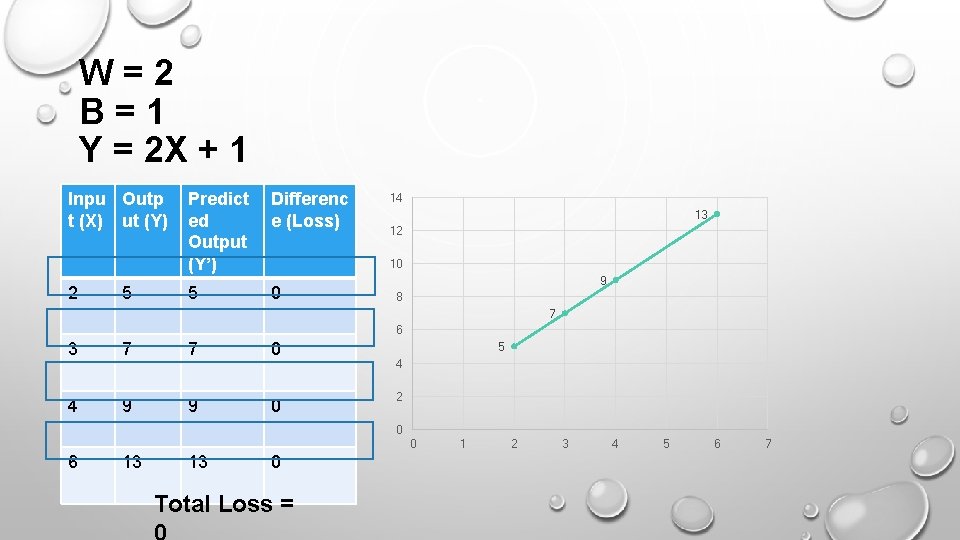

3.1 UNDERSTANDING HOW DEEP LEARNING WORKS • W = 2, B = 1 • Y = 2X + 1

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 5 | 0 |
| 3 | 7 | 7 | 0 |
| 4 | 9 | 9 | 0 |
| 6 | 13 | 13 | 0 |

Total Loss = 0
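The loss tables for the different weight settings can be reproduced with a few lines of Python. This is a sketch that follows the slides' convention of summing the signed differences (target minus prediction); the absolute-value variant is an addition for comparison, and the function names are assumptions.

```python
DATA = [(2, 5), (3, 7), (4, 9), (6, 13)]  # (input X, target Y)


def total_loss(w, b):
    """Sum of signed differences, target minus prediction."""
    return sum(y - (w * x + b) for x, y in DATA)


def total_abs_loss(w, b):
    """Sum of absolute differences, so errors cannot cancel each other."""
    return sum(abs(y - (w * x + b)) for x, y in DATA)


for w, b in [(1, 0.5), (2, 0.5), (2, 1.5), (2, 1)]:
    print(f"W={w} B={b} signed={total_loss(w, b)} absolute={total_abs_loss(w, b)}")
```

A signed total can be zero even when individual predictions are wrong, because positive and negative errors cancel. That is why practical loss functions usually sum absolute or squared differences.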

NOW DOES THIS IMAGE MAKE ANY SENSE? • Input X → Layer (data transformation, using the Weights) → Prediction Y’ • Prediction Y’ + Target Y → Loss Function → Loss Score • Loss Score → Optimizer → Weight Update → Weights

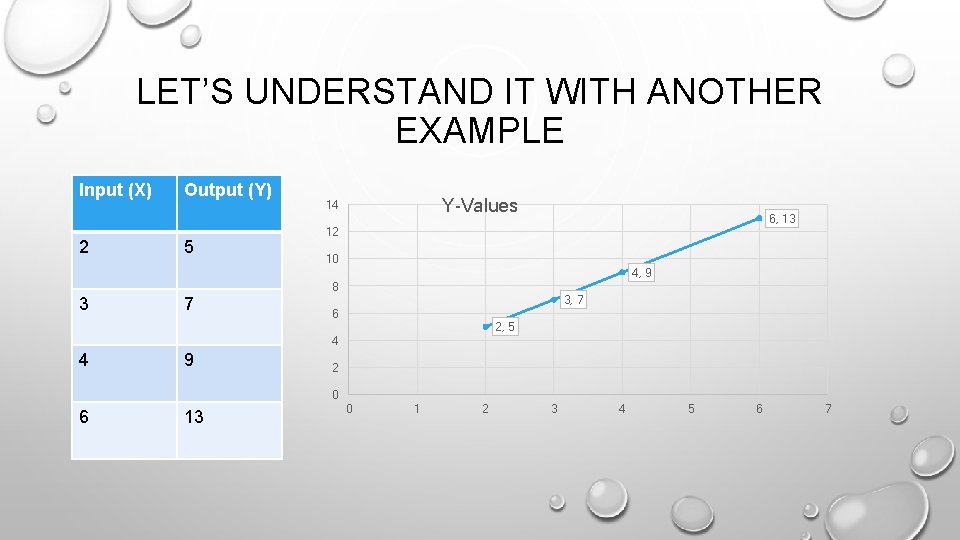

LET’S UNDERSTAND IT WITH ANOTHER EXAMPLE

| Input (X) | Output (Y) |
| --- | --- |
| 2 | 5 |
| 3 | 7 |
| 4 | 9 |
| 6 | 13 |

[Scatter plot of the data points (2, 5), (3, 7), (4, 9), (6, 13); X axis 0 to 7, Y-Values axis 0 to 14]

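W = 1, B = 0.5 • Y = 1X + 0.5

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 2.5 | 2.5 |
| 3 | 7 | 3.5 | 3.5 |
| 4 | 9 | 4.5 | 4.5 |
| 6 | 13 | 6.5 | 6.5 |

Total Loss = 17

[Plot of target outputs and predicted outputs; X axis 0 to 7, Y-Values axis 0 to 14]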

W = 2, B = 0.5 • Y = 2X + 0.5

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 4.5 | 0.5 |
| 3 | 7 | 6.5 | 0.5 |
| 4 | 9 | 8.5 | 0.5 |
| 6 | 13 | 12.5 | 0.5 |

Total Loss = 2

[Plot of target outputs and predicted outputs; X axis 0 to 7, Y-Values axis 0 to 14]

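W = 2, B = 1 • Y = 2X + 1

| Input (X) | Output (Y) | Predicted Output (Y’) | Difference (Loss) |
| --- | --- | --- | --- |
| 2 | 5 | 5 | 0 |
| 3 | 7 | 7 | 0 |
| 4 | 9 | 9 | 0 |
| 6 | 13 | 13 | 0 |

Total Loss = 0

[Plot of target outputs and predicted outputs, which now coincide; X axis 0 to 7, Y-Values axis 0 to 14]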

3. 2 WHAT DEEP LEARNING HAS ACHIEVED SO FAR

3. 2. 1 NEAR-HUMAN-LEVEL IMAGE CLASSIFICATION

3. 2. 2 NEAR-HUMAN-LEVEL SPEECH RECOGNITION

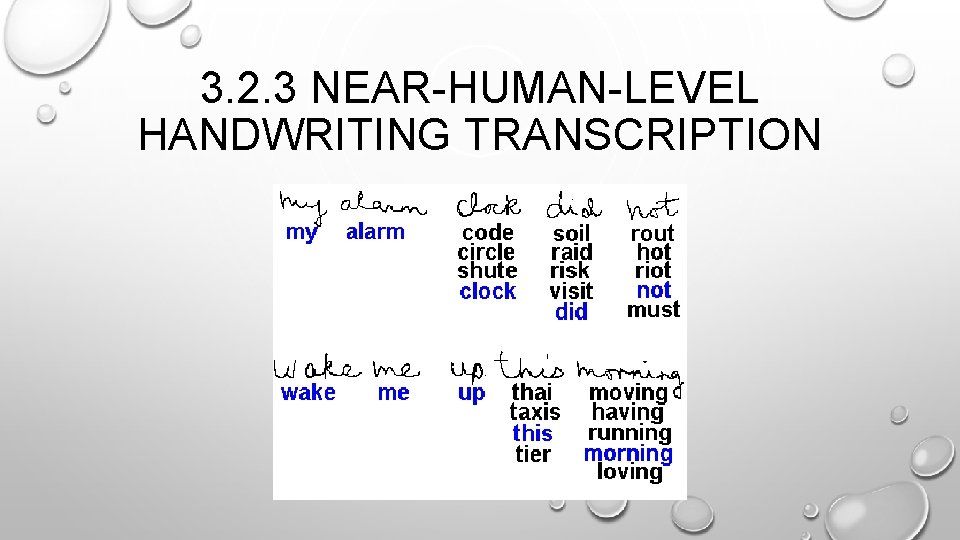

3. 2. 3 NEAR-HUMAN-LEVEL HANDWRITING TRANSCRIPTION

3. 2. 4 IMPROVED MACHINE TRANSLATION

3. 2. 5 IMPROVED TEXT-TO-SPEECH CONVERSION

3. 2. 6 DIGITAL ASSISTANTS SUCH AS GOOGLE NOW AND AMAZON ALEXA

3. 2. 7 NEAR-HUMAN-LEVEL AUTONOMOUS DRIVING

3. 2. 8 IMPROVED AD TARGETING, AS USED BY GOOGLE, BAIDU, AND BING

3. 2. 9 IMPROVED SEARCH RESULTS ON THE WEB

3. 2. 10 ABILITY TO ANSWER NATURALLANGUAGE QUESTIONS

3. 2. 11 SUPERHUMAN GO PLAYING

4. WHY DEEP LEARNING? WHY NOW? • KEY IDEAS SUCH AS CNNS WERE ALREADY WELL UNDERSTOOD IN 1989 • LSTM WAS DEVELOPED IN 1997 • SO WHAT CHANGED IN THESE TWO DECADES? • HARDWARE • DATASETS AND BENCHMARKS • ALGORITHMIC ADVANCES

4.1 HARDWARE • EXPONENTIAL GROWTH IN MEMORY AND COMPUTATIONAL POWER • COMPUTER VISION (CV) AND SPEECH RECOGNITION NEED MORE COMPUTING POWER • CPU, GPU, TPU

4.2 DATA • GAME CHANGER: THE INTERNET, DRIVEN BY 3G, 4G, 5G • ANYONE CAN UPLOAD AND DOWNLOAD DATA • YOUTUBE FOR VIDEOS, WIKIPEDIA FOR NATURAL LANGUAGE: WE HAVE A TREASURE OF DATA

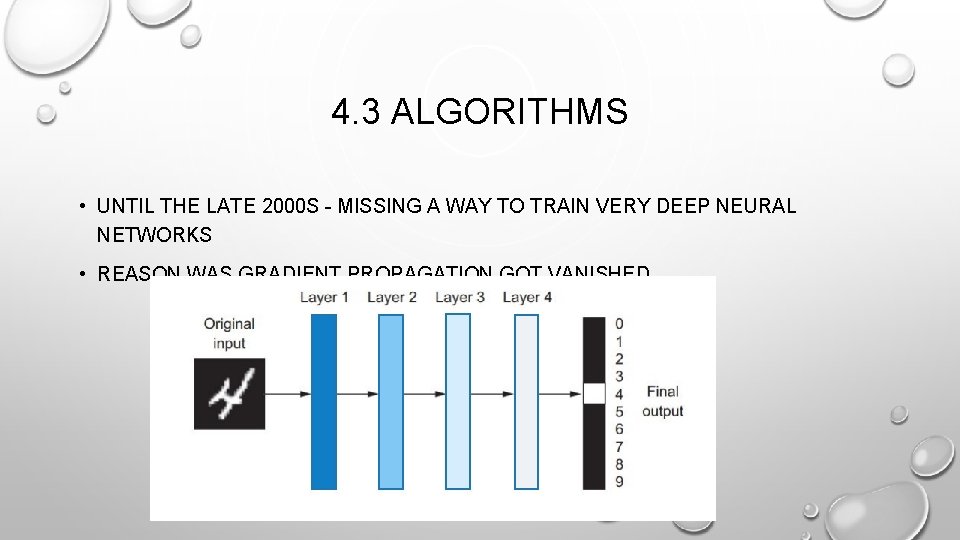

4.3 ALGORITHMS • UNTIL THE LATE 2000S, A RELIABLE WAY TO TRAIN VERY DEEP NEURAL NETWORKS WAS MISSING • THE REASON: THE GRADIENT SIGNAL FADED AWAY (VANISHED) AS IT WAS PROPAGATED BACK THROUGH MANY LAYERS

4.3 ALGORITHMS • BETTER ACTIVATION FUNCTIONS • BETTER WEIGHT-INITIALIZATION SCHEMES • BETTER OPTIMIZATION SCHEMES
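A rough way to see why gradients vanish, and why better activation functions help, is to multiply the activation derivative once per layer. The sketch below is a deliberate simplification: it assumes every weight equals 1 and every layer sees the same input value, so only the effect of the activation function remains. All names are hypothetical.

```python
import math


def sigmoid_derivative(z):
    """Derivative of the sigmoid; its largest possible value is 0.25 (at z = 0)."""
    s = 1.0 / (1.0 + math.exp(-z))
    return s * (1.0 - s)


def relu_derivative(z):
    """Derivative of ReLU: 1 for positive inputs, 0 otherwise."""
    return 1.0 if z > 0 else 0.0


def gradient_scale(derivative, z, depth):
    """Factor by which a gradient shrinks after passing back through
    `depth` identical activations (weights assumed to be 1)."""
    return derivative(z) ** depth


for depth in (1, 5, 10, 20):
    print(
        depth,
        gradient_scale(sigmoid_derivative, 0.0, depth),
        gradient_scale(relu_derivative, 1.0, depth),
    )
```

Even in the sigmoid's best case the factor is 0.25 per layer, so after ten layers less than a millionth of the signal is left, while the ReLU factor stays at 1 for active units.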

4.4 A NEW WAVE OF INVESTMENT • $2.6T IN VALUE BY 2020 IN MARKETING AND SALES • $2T IN MANUFACTURING AND SUPPLY CHAIN PLANNING • GARTNER PREDICTS BUSINESS VALUE OF $3.9T IN 2022 • IDC PREDICTS WORLDWIDE SPENDING ON COGNITIVE AND ARTIFICIAL INTELLIGENCE SYSTEMS WILL REACH $77.6B IN 2022

4.5 THE DEMOCRATIZATION OF DEEP LEARNING • EARLY DEEP LEARNING WAS DIFFICULT TO PROGRAM • THEANO, TENSORFLOW • KERAS HAS GAINED THE ATTENTION OF RESEARCHERS & DEVELOPERS • UNNECESSARY CODING IS HIDDEN

4.6 WILL IT LAST? • WHAT WILL HAPPEN IN 20 YEARS? • WHATEVER WE USE THEN WILL LIKELY BE AN ADVANCED FORM OF TODAY'S NEURAL NETWORKS • THE IMPORTANT PROPERTIES OF DEEP LEARNING FALL INTO 3 CATEGORIES: • SIMPLICITY • SCALABILITY • VERSATILITY AND REUSABILITY

- Slides: 77