FT-MPICH: Providing fault tolerance for MPI parallel applications

- Slides: 26

FT-MPICH: Providing fault tolerance for MPI parallel applications

Prof. Heon Y. Yeom, Distributed Computing Systems Lab, Seoul National University

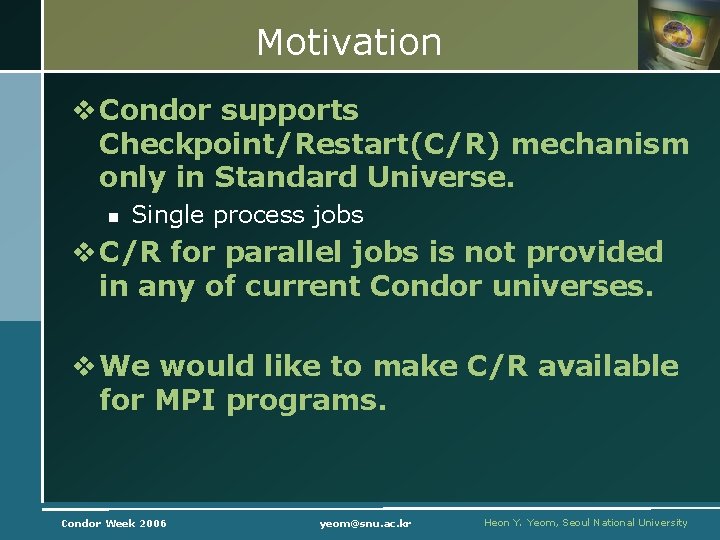

Motivation

- Condor supports the checkpoint/restart (C/R) mechanism only in the Standard Universe.
  - Single-process jobs
- C/R for parallel jobs is not provided in any of the current Condor universes.
- We would like to make C/R available for MPI programs.

Condor Week 2006, yeom@snu.ac.kr, Heon Y. Yeom, Seoul National University

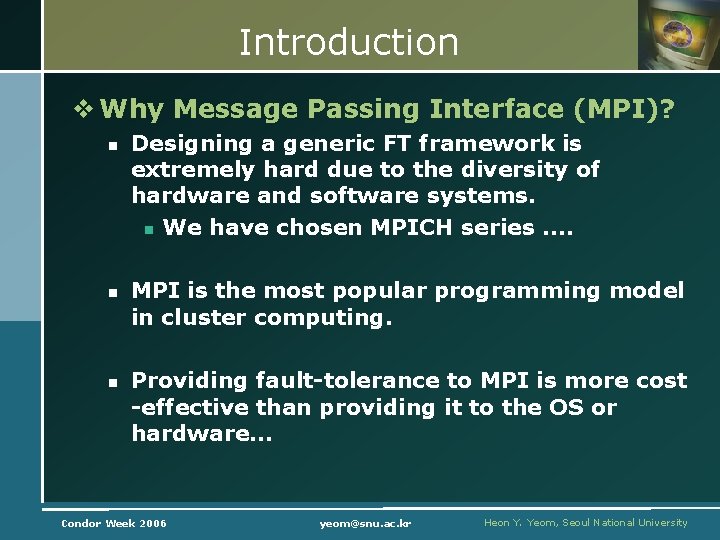

Introduction

- Why Message Passing Interface (MPI)?
  - Designing a generic FT framework is extremely hard due to the diversity of hardware and software systems.
    - We have chosen the MPICH series.
  - MPI is the most popular programming model in cluster computing.
  - Providing fault tolerance to MPI is more cost-effective than providing it to the OS or hardware…

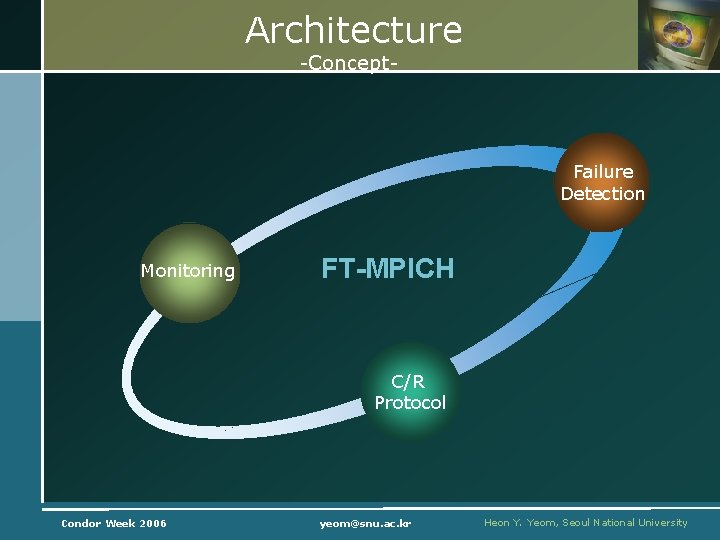

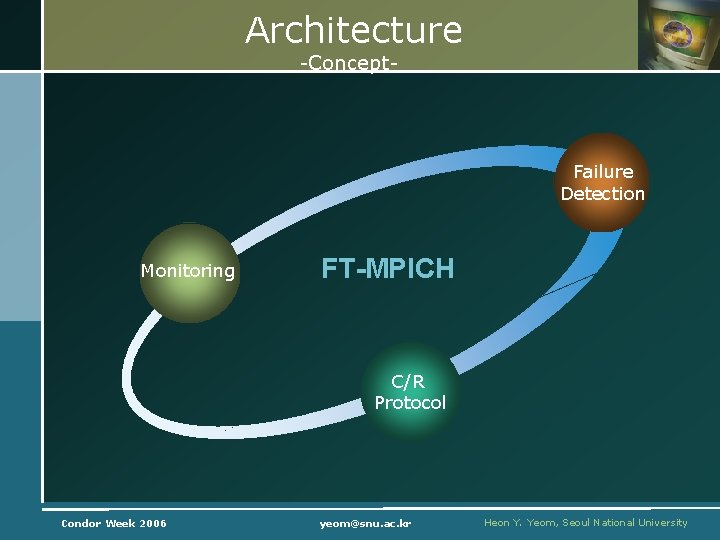

Architecture (Concept)

Diagram labels: FT-MPICH, Failure Detection, Monitoring, C/R Protocol

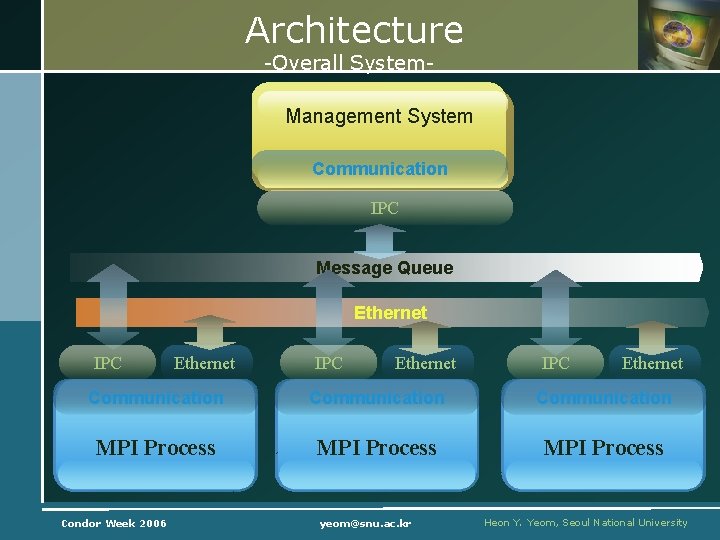

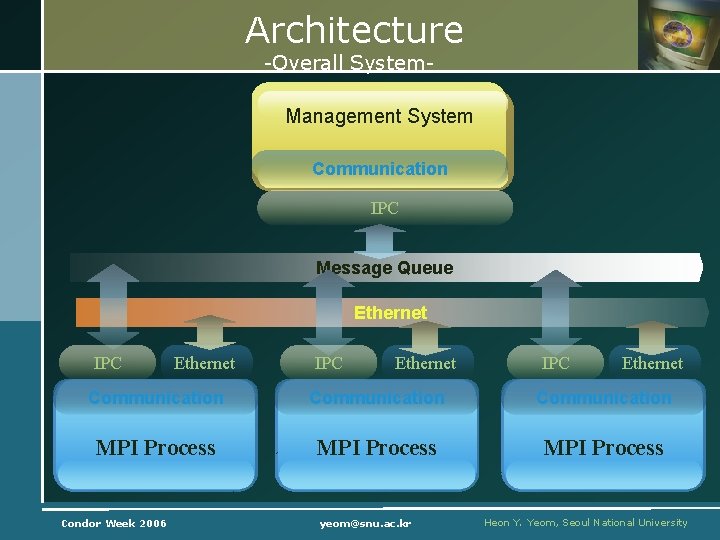

Architecture (Overall System)

Diagram labels: Management System, Communication, MPI Process, IPC, Message Queue, Ethernet

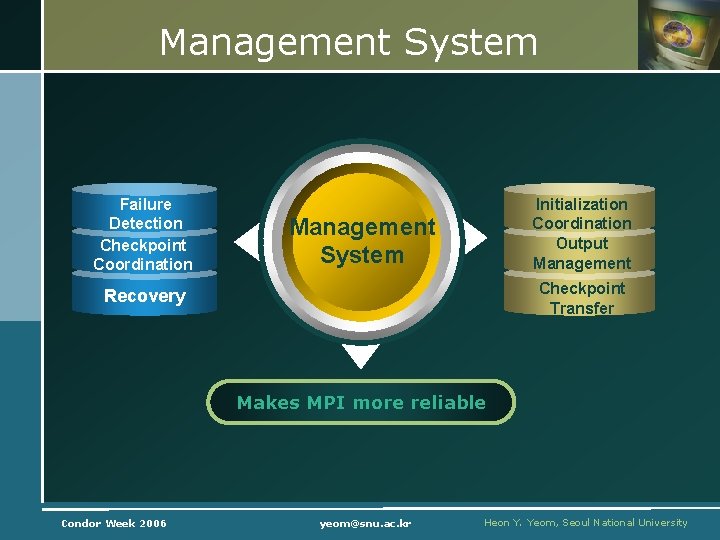

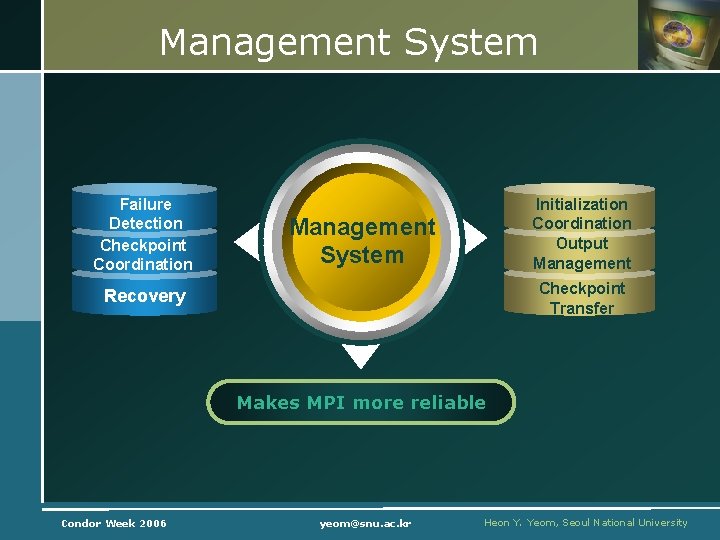

Management System

- Failure detection
- Checkpoint coordination
- Initialization coordination
- Output management
- Checkpoint transfer
- Recovery

Makes MPI more reliable

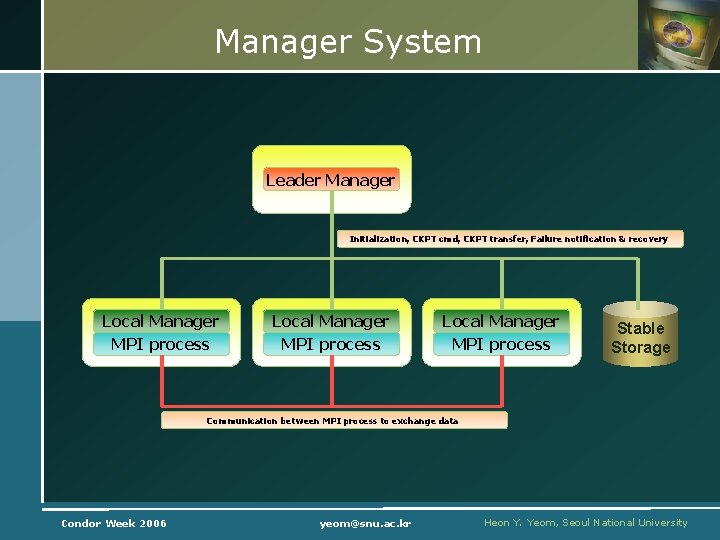

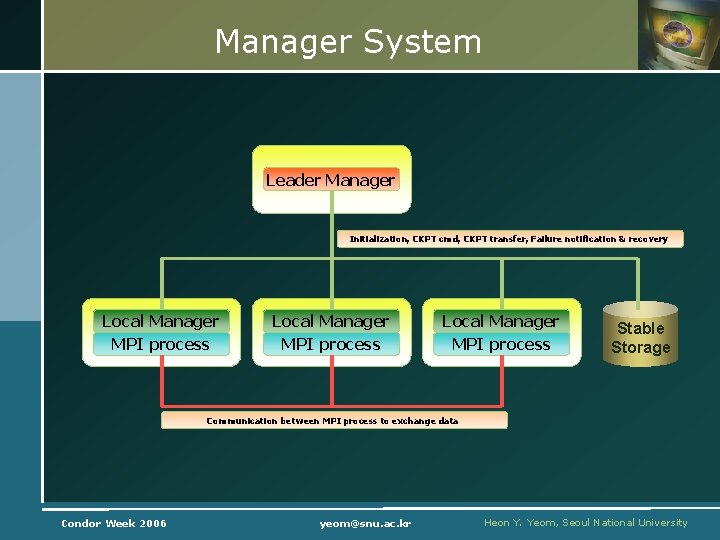

Manager System

Diagram labels:

- Leader Manager
- Local Manager
- MPI process
- Stable storage
- Initialization, CKPT cmd, CKPT transfer, failure notification & recovery
- Communication between MPI processes to exchange data

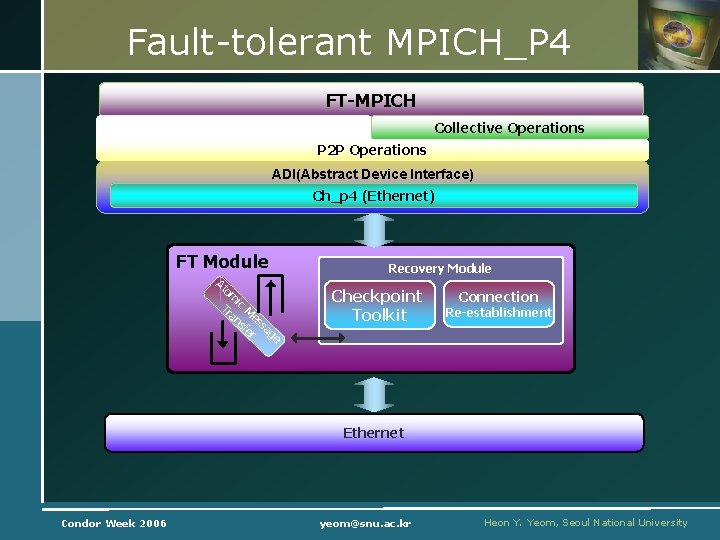

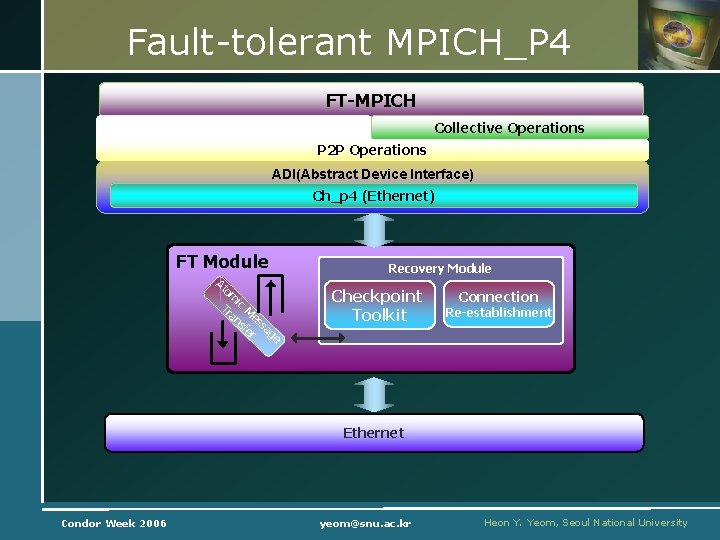

Fault-tolerant MPICH_P4

FT-MPICH diagram labels:

- Collective Operations
- P2P Operations
- ADI (Abstract Device Interface)
- ch_p4 (Ethernet)
- FT Module
- Atomic Message Transfer
- Recovery Module
- Connection Re-establishment
- Checkpoint Toolkit
- Ethernet

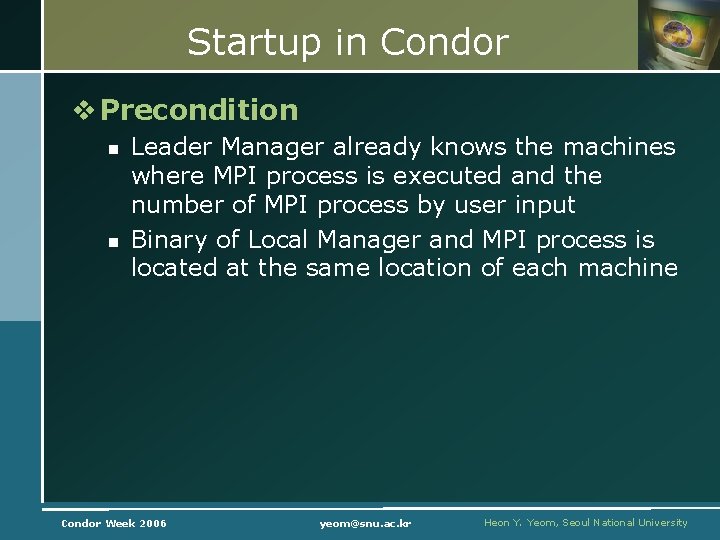

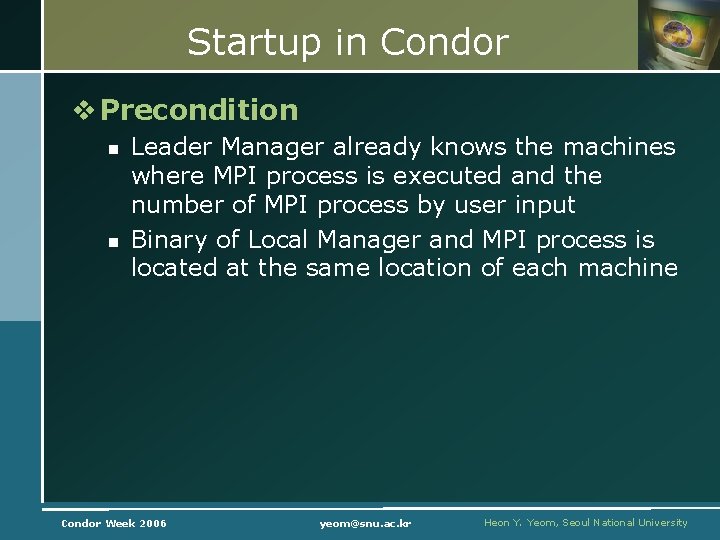

Startup in Condor

- Precondition
  - From user input, the Leader Manager already knows the machines where the MPI processes are executed and the number of MPI processes.
  - The binaries of the Local Manager and the MPI process are located at the same location on each machine.

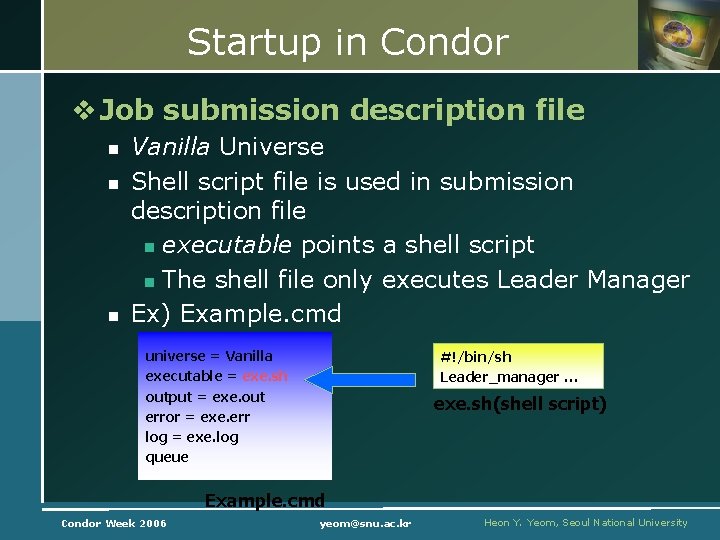

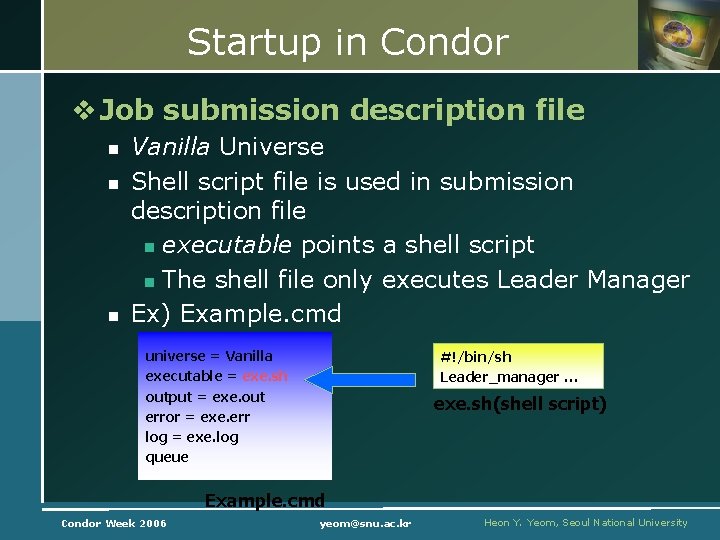

Startup in Condor

- Job submission description file
  - Vanilla Universe
  - A shell script file is used in the submission description file
    - executable points to a shell script
    - The shell script only executes the Leader Manager

Ex) Example.cmd

    universe = Vanilla
    executable = exe.sh
    output = exe.out
    error = exe.err
    log = exe.log
    queue

exe.sh (shell script)

    #!/bin/sh
    Leader_manager …

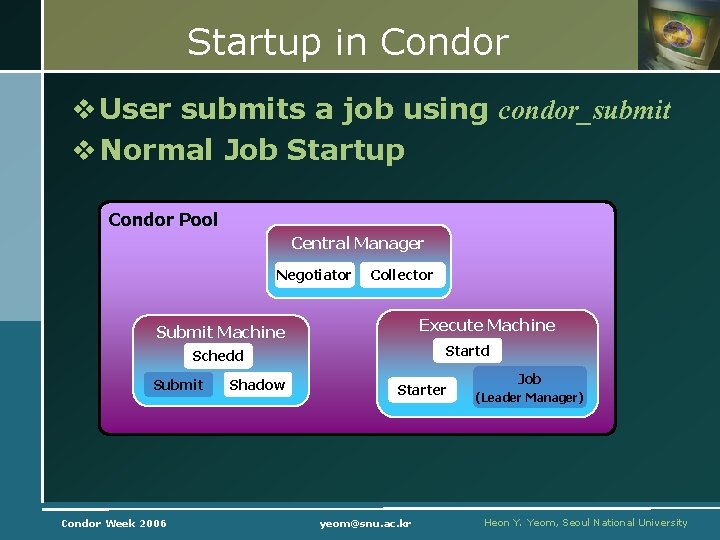

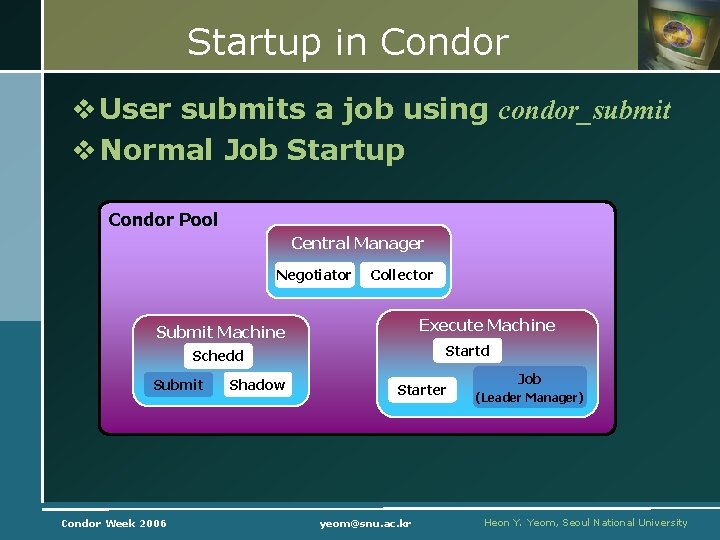

Startup in Condor

- User submits a job using condor_submit
- Normal job startup

Diagram (Condor Pool): Central Manager (Negotiator, Collector); Submit Machine (Schedd, Shadow, Submit); Execute Machine (Startd, Starter, Job (Leader Manager))

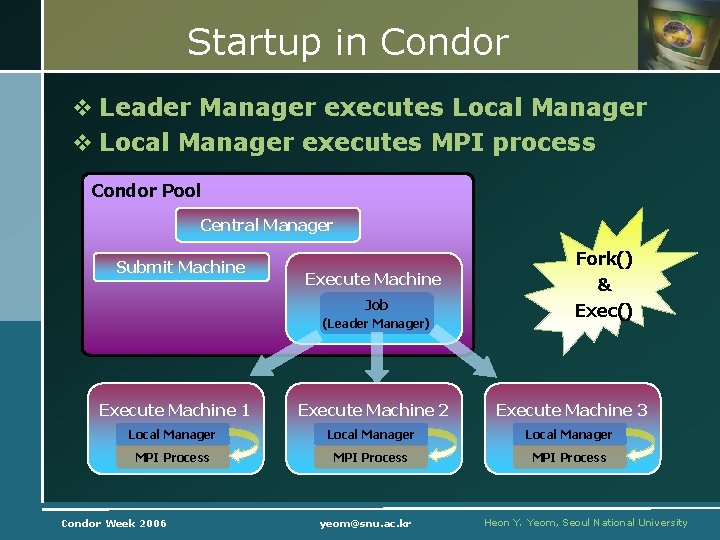

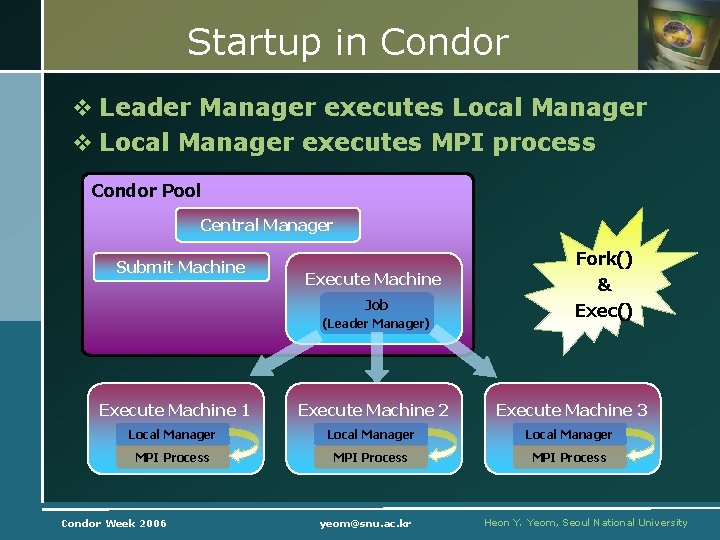

Startup in Condor

- The Leader Manager executes the Local Managers
- Each Local Manager executes an MPI process

Diagram labels: Condor Pool, Central Manager, Submit Machine, Execute Machine, Job (Leader Manager), fork() & exec(), Execute Machine 1, Execute Machine 2, Execute Machine 3, Local Manager, MPI Process

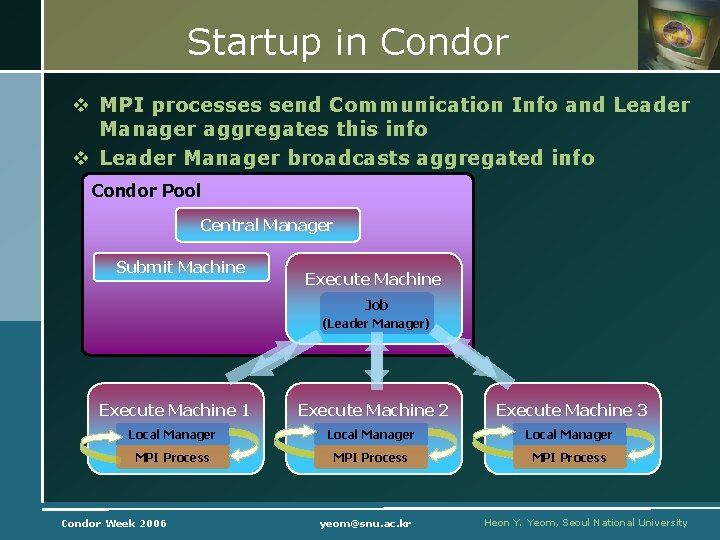

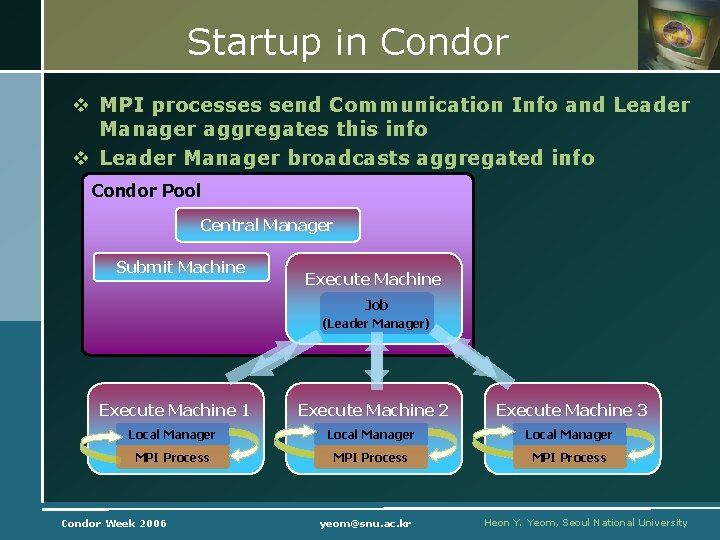

Startup in Condor

- MPI processes send their communication info, and the Leader Manager aggregates it
- The Leader Manager broadcasts the aggregated info

Diagram labels: Condor Pool, Central Manager, Submit Machine, Execute Machine, Job (Leader Manager), Execute Machine 1, Execute Machine 2, Execute Machine 3, Local Manager, MPI Process
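The aggregate-then-broadcast step can be pictured with a minimal Python sketch. It assumes the communication info is an (IP, port) pair per rank, which matches the later connection re-establishment slide. The class and method names (`LeaderManager`, `report`, `broadcast`) are hypothetical and do not come from FT-MPICH.

```python
from typing import Dict, List, Tuple

Endpoint = Tuple[str, int]  # (ip, port); assumed shape of the communication info


class LeaderManager:
    """Hypothetical stand-in for the aggregation role of the Leader Manager."""

    def __init__(self, num_procs: int) -> None:
        self.num_procs = num_procs
        self.table: Dict[int, Endpoint] = {}

    def report(self, rank: int, endpoint: Endpoint) -> None:
        # One MPI process reports where it can be reached.
        self.table[rank] = endpoint

    def ready(self) -> bool:
        # Aggregation is complete once every rank has reported.
        return len(self.table) == self.num_procs

    def broadcast(self) -> List[Dict[int, Endpoint]]:
        # Hand every rank its own copy of the full table.
        if not self.ready():
            raise RuntimeError("some ranks have not reported yet")
        return [dict(self.table) for _ in range(self.num_procs)]
```

In this sketch a rank can open connections to its peers only after it receives the full table, which is why the broadcast waits for every report.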

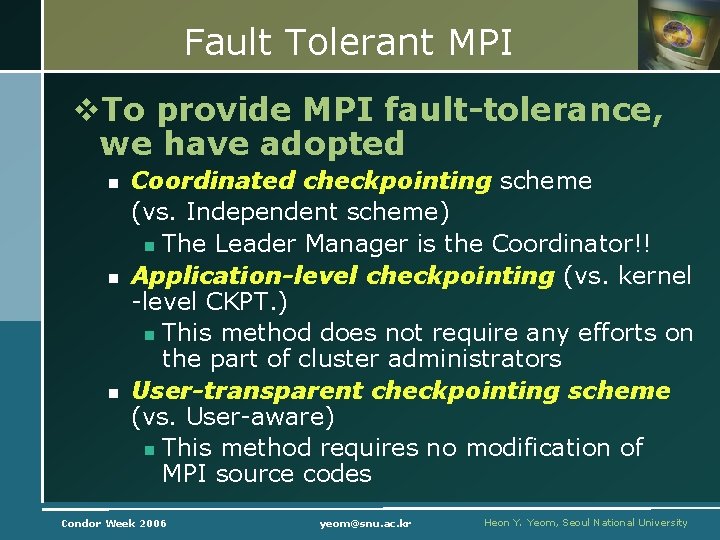

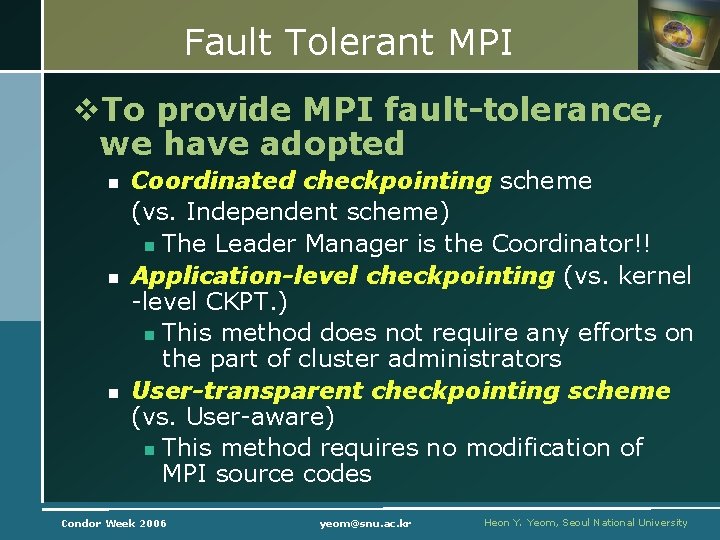

Fault Tolerant MPI

- To provide MPI fault tolerance, we have adopted:
  - Coordinated checkpointing scheme (vs. independent scheme)
    - The Leader Manager is the coordinator!
  - Application-level checkpointing (vs. kernel-level checkpointing)
    - This method does not require any effort on the part of cluster administrators
  - User-transparent checkpointing scheme (vs. user-aware)
    - This method requires no modification of MPI source code

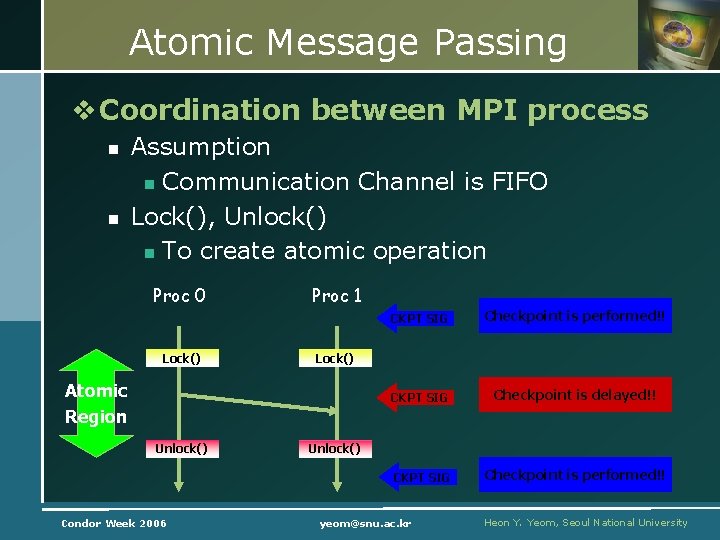

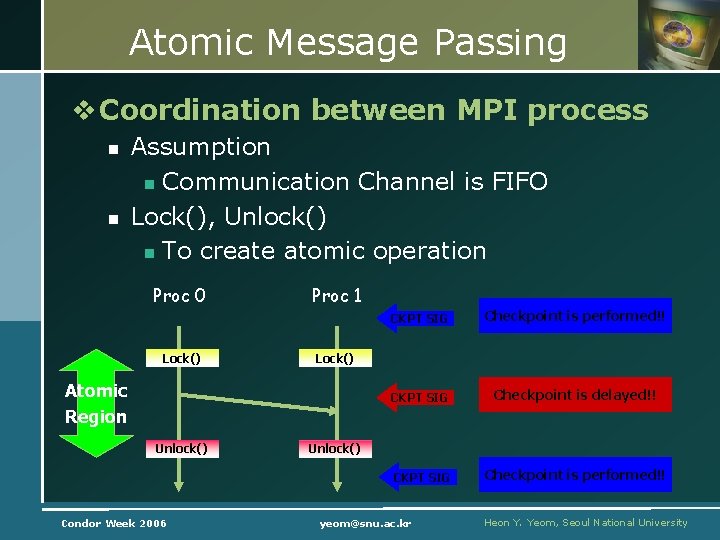

Atomic Message Passing

- Coordination between MPI processes
  - Assumption
    - The communication channel is FIFO
  - Lock(), Unlock()
    - To create an atomic operation

Diagram (Proc 0, Proc 1): a CKPT SIG that arrives outside the atomic region triggers the checkpoint ("Checkpoint is performed!!"); a CKPT SIG that arrives between Lock() and Unlock() does not ("Checkpoint is delayed!!").
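A minimal Python sketch of the deferral idea follows. It assumes that a checkpoint request arriving inside the atomic region is remembered and carried out at `unlock()`; the slide only says the checkpoint is delayed. The class `AtomicRegion` and its method names are hypothetical, and a real implementation would hook a signal handler instead of calling `on_ckpt_signal()` directly.

```python
from typing import Callable


class AtomicRegion:
    """Hypothetical model of Lock()/Unlock() guarding a message transfer."""

    def __init__(self, do_checkpoint: Callable[[], None]) -> None:
        self._do_checkpoint = do_checkpoint
        self._locked = False
        self._pending = False

    def lock(self) -> None:
        # Enter the atomic region: checkpoints must not interrupt it.
        self._locked = True

    def unlock(self) -> None:
        # Leave the atomic region and run any checkpoint that was delayed.
        self._locked = False
        if self._pending:
            self._pending = False
            self._do_checkpoint()

    def on_ckpt_signal(self) -> None:
        # Stand-in for the CKPT SIG handler.
        if self._locked:
            self._pending = True   # delayed (assumed: until unlock)
        else:
            self._do_checkpoint()  # performed immediately
```

Keeping the handler this small is deliberate: it only records the request, so the message transfer inside the region is never observed half-done by the checkpoint.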

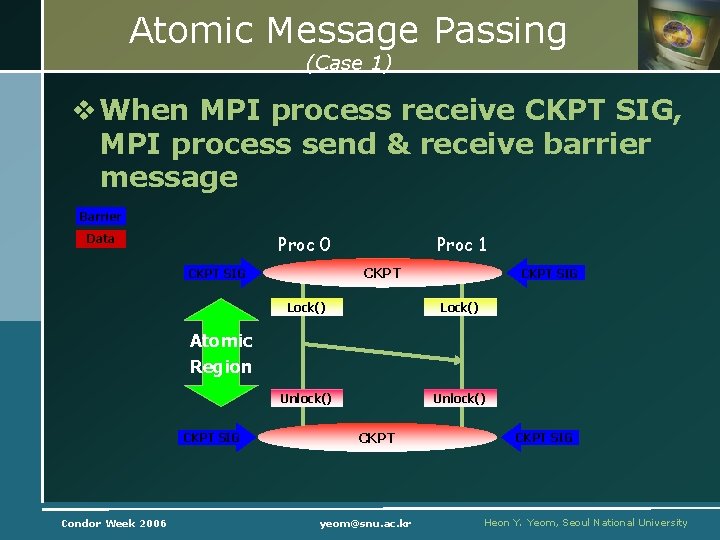

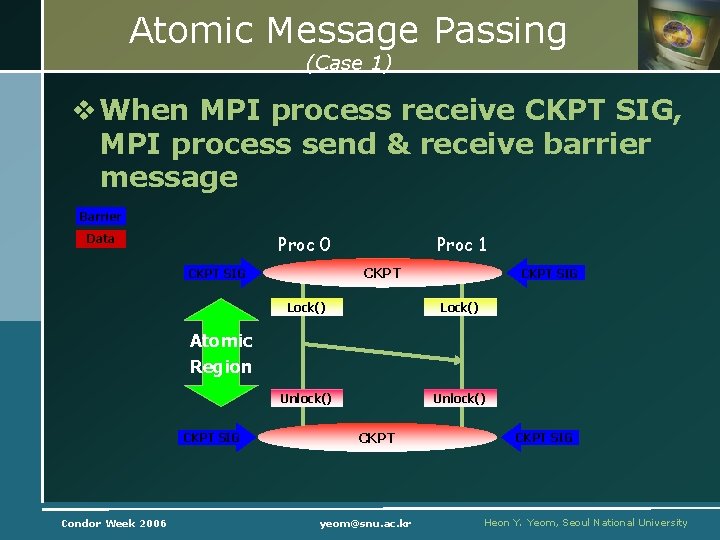

Atomic Message Passing (Case 1)

- When an MPI process receives CKPT SIG, it sends and receives a barrier message

Diagram labels: Proc 0, Proc 1, Barrier, Data, CKPT SIG, Lock(), Unlock(), Atomic Region, CKPT

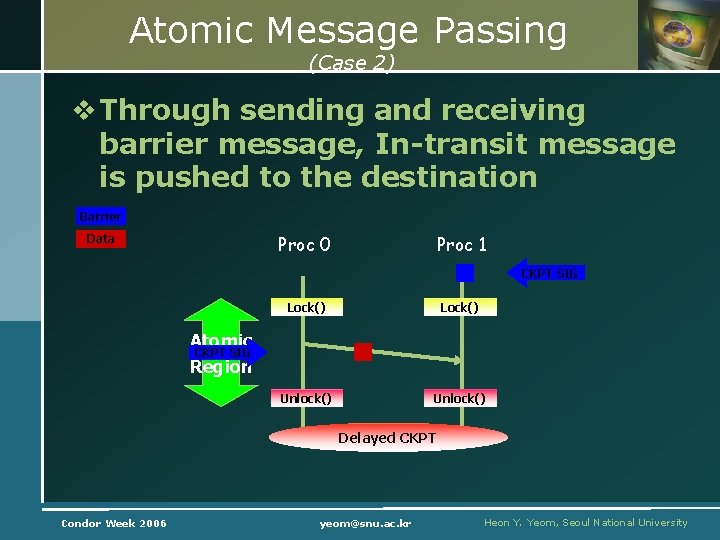

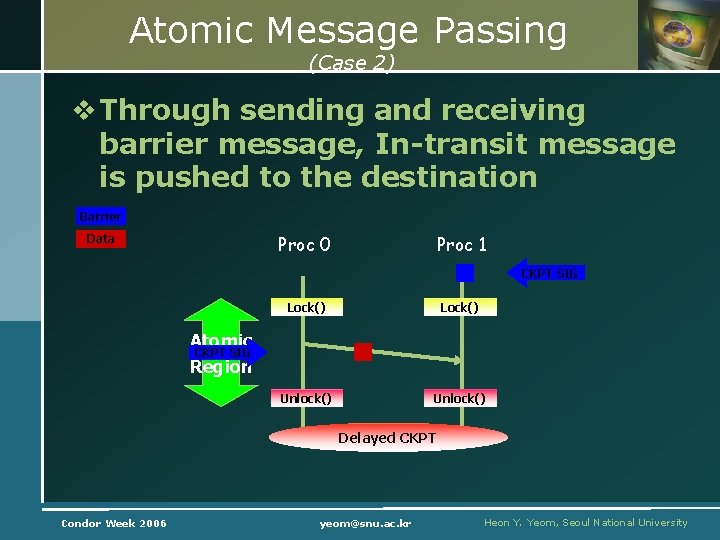

Atomic Message Passing (Case 2)

- By sending and receiving barrier messages, in-transit messages are pushed to the destination

Diagram labels: Proc 0, Proc 1, Barrier, Data, CKPT SIG, Lock(), Unlock(), Atomic Region, Delayed CKPT

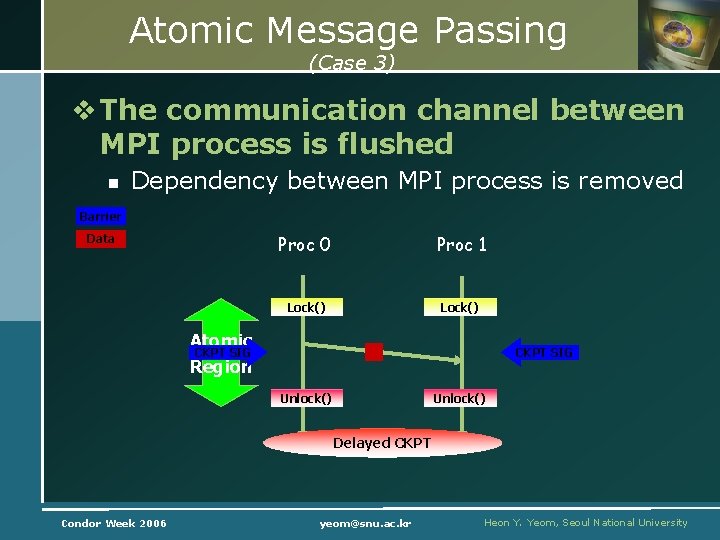

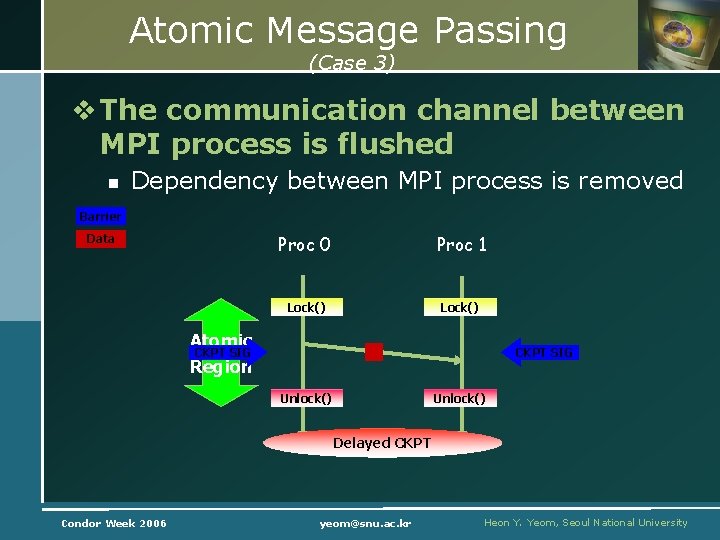

Atomic Message Passing (Case 3)

- The communication channel between MPI processes is flushed
  - Dependency between MPI processes is removed

Diagram labels: Proc 0, Proc 1, Barrier, Data, CKPT SIG, Lock(), Unlock(), Atomic Region, Delayed CKPT
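The three cases rely on one property: on a FIFO channel, a barrier message cannot overtake data sent before it. The Python sketch below simulates a single direction of one channel to show why receiving the barrier implies the channel is empty. `FifoChannel`, `BARRIER`, and `flush_with_barrier` are hypothetical names, and the in-memory queue stands in for a socket.

```python
from collections import deque
from typing import Any, Deque, List

BARRIER = object()  # hypothetical marker for the barrier message


class FifoChannel:
    """In-memory stand-in for a FIFO connection between two MPI processes."""

    def __init__(self) -> None:
        self._queue: Deque[Any] = deque()

    def send(self, msg: Any) -> None:
        self._queue.append(msg)

    def recv(self) -> Any:
        return self._queue.popleft()

    def in_transit(self) -> int:
        return len(self._queue)


def flush_with_barrier(channel: FifoChannel, delivered: List[Any]) -> None:
    # Sender side: push the barrier behind any data already in flight.
    channel.send(BARRIER)
    # Receiver side: deliver everything that arrives before the barrier.
    while True:
        msg = channel.recv()
        if msg is BARRIER:
            return  # FIFO order guarantees nothing older is still in transit
        delivered.append(msg)
```

Once every channel has been flushed this way, no checkpoint can record a message as received without also recording it as sent, which is the dependency the slide says is removed.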

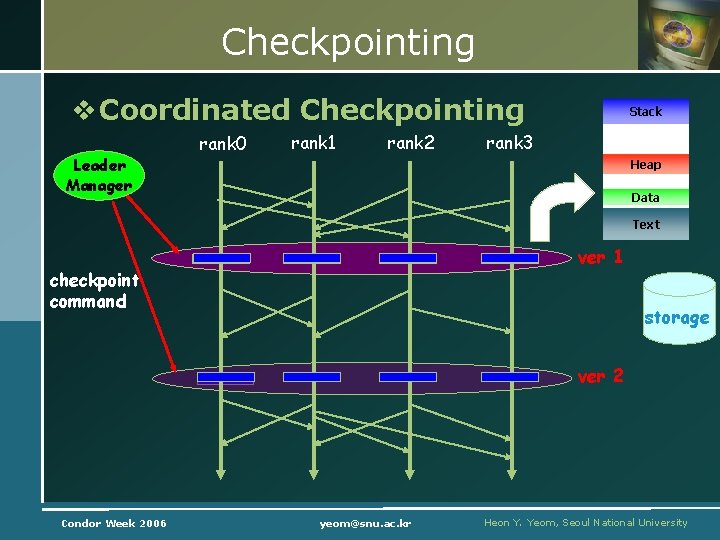

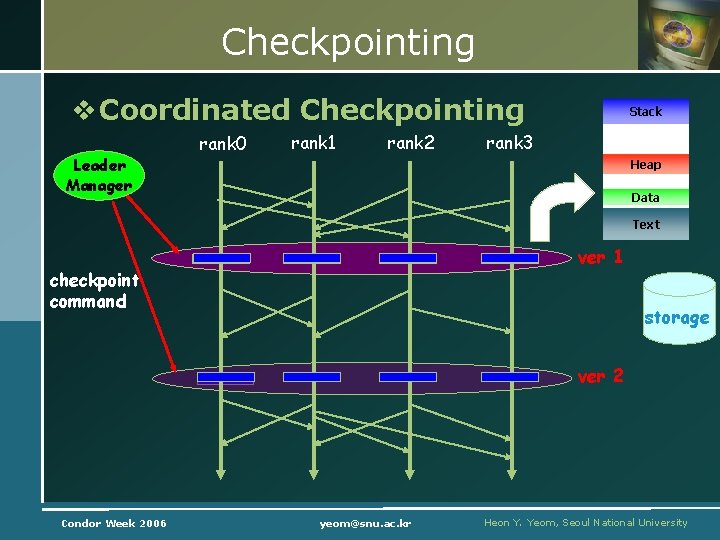

Checkpointing

- Coordinated checkpointing

Diagram labels: Leader Manager, checkpoint command, rank 0, rank 1, rank 2, rank 3, process image (Stack, Heap, Data, Text), storage, ver 1, ver 2
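The versioned storage in the diagram can be sketched in Python as below. The sketch assumes that a version counts as usable only after every rank has saved its image for it; the slides do not spell out a commit rule. `Coordinator` and its attributes are hypothetical names, and strings stand in for process images.

```python
from typing import Dict, Iterable, Tuple


class Coordinator:
    """Hypothetical model of the Leader Manager driving one checkpoint round."""

    def __init__(self, ranks: Iterable[int]) -> None:
        self.ranks = list(ranks)
        self.storage: Dict[Tuple[int, int], str] = {}  # (version, rank) -> image
        self.committed = 0  # latest version saved by every rank

    def checkpoint(self, states: Dict[int, str], failed: Iterable[int] = ()) -> int:
        version = self.committed + 1
        failed_set = set(failed)
        for rank in self.ranks:
            if rank in failed_set:
                continue  # this rank never wrote its image
            self.storage[(version, rank)] = states[rank]
        # Commit only if the version is complete across all ranks.
        if all((version, rank) in self.storage for rank in self.ranks):
            self.committed = version
        return self.committed
```

For example, if rank 1 fails during the second round, `committed` stays at 1 and the partial version 2 is overwritten by the next successful round.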

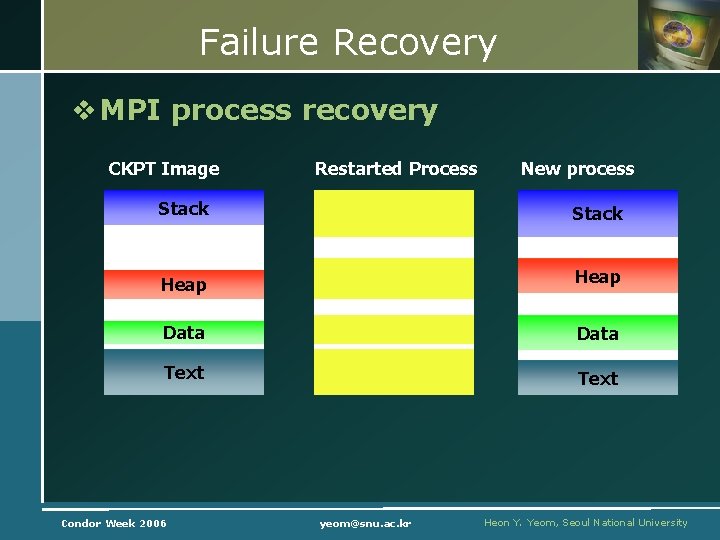

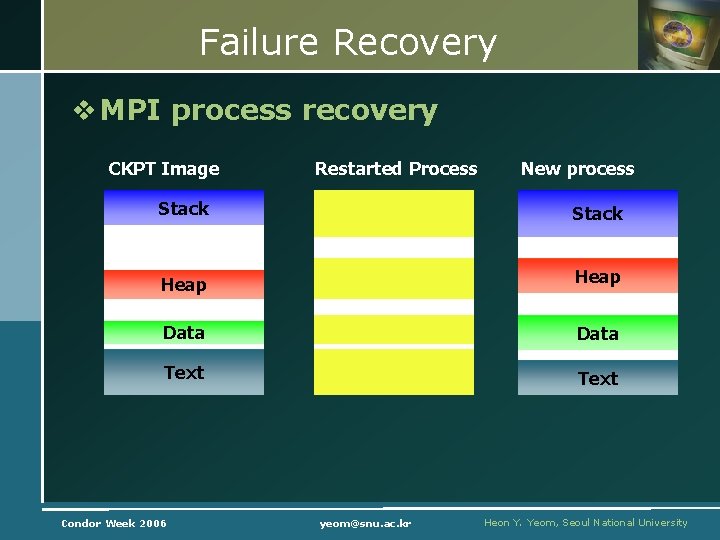

Failure Recovery

- MPI process recovery

Diagram labels: CKPT Image (Stack, Heap, Data, Text), New process, Restarted Process

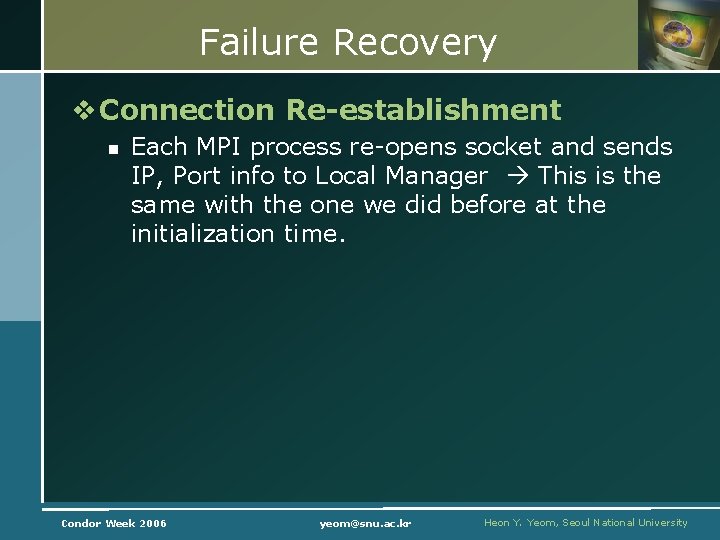

Failure Recovery

- Connection re-establishment
  - Each MPI process re-opens its socket and sends its IP and port info to the Local Manager
  - This is the same procedure that was performed at initialization time
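As a rough illustration of re-opening a socket and learning the new endpoint, the Python sketch below binds a fresh listening socket and builds the report that would go to the Local Manager. The function names, the loopback address, and the dictionary layout of the report are assumptions made for this example; the actual FT-MPICH message format is not given in the slides.

```python
import socket
from typing import Dict, Tuple, Union


def reopen_listener(host: str = "127.0.0.1") -> Tuple[socket.socket, Tuple[str, int]]:
    # Port 0 asks the OS for any free port, since the old one may be gone
    # after a restart (assumption for this sketch).
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.bind((host, 0))
    sock.listen()
    ip, port = sock.getsockname()
    return sock, (ip, port)


def make_report(rank: int, endpoint: Tuple[str, int]) -> Dict[str, Union[int, str]]:
    # Hypothetical payload sent to the Local Manager.
    ip, port = endpoint
    return {"rank": rank, "ip": ip, "port": port}
```

Because the report has the same content as at startup, the manager side can reuse its initialization path to redistribute the endpoints.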

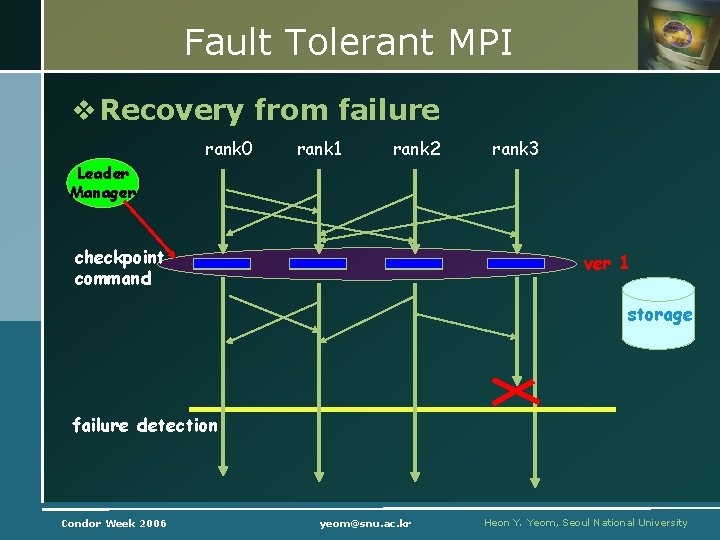

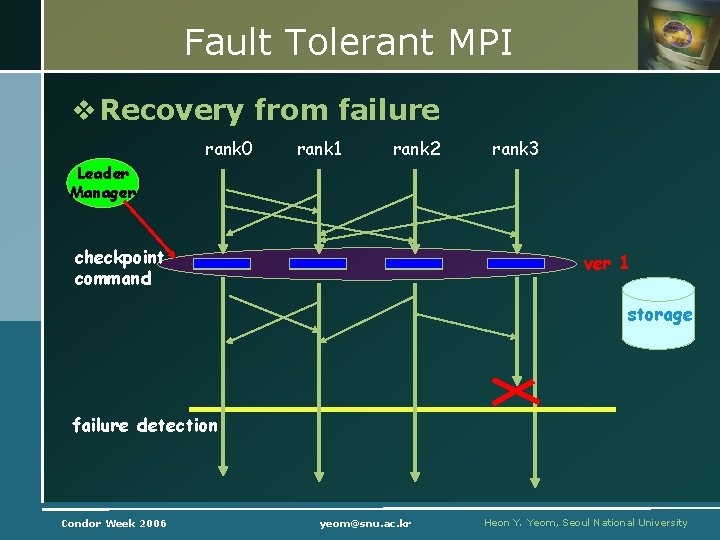

Fault Tolerant MPI

- Recovery from failure

Diagram labels: Leader Manager, failure detection, checkpoint command, rank 0, rank 1, rank 2, rank 3, storage, ver 1
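Under a coordinated scheme, recovery usually means restarting from the most recent checkpoint version that every rank completed. The Python sketch below shows that selection. It assumes all ranks roll back together, which is the common behaviour for coordinated checkpointing but is not stated on the slide. The function names and the `(version, rank)` storage layout are hypothetical.

```python
from typing import Dict, Iterable, Optional, Tuple

Storage = Dict[Tuple[int, int], str]  # (version, rank) -> saved image


def latest_complete_version(storage: Storage, ranks: Iterable[int]) -> Optional[int]:
    rank_list = list(ranks)
    versions = sorted({version for (version, _) in storage}, reverse=True)
    for version in versions:
        # A version is usable only if every rank saved an image for it.
        if all((version, rank) in storage for rank in rank_list):
            return version
    return None


def recover(storage: Storage, ranks: Iterable[int]) -> Tuple[int, Dict[int, str]]:
    rank_list = list(ranks)
    version = latest_complete_version(storage, rank_list)
    if version is None:
        raise RuntimeError("no complete checkpoint to recover from")
    # Every rank restarts from the same version (assumed rollback policy).
    return version, {rank: storage[(version, rank)] for rank in rank_list}
```

Skipping incomplete versions matters when a failure strikes in the middle of a checkpoint round, because a partially written version is not a consistent global state.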

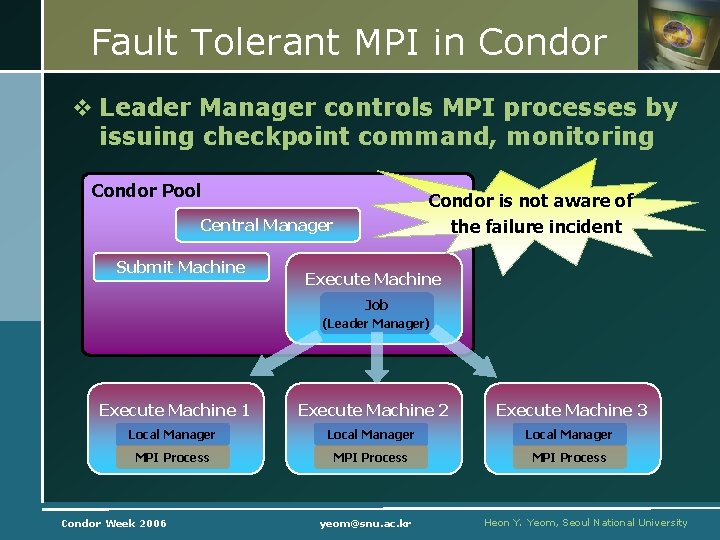

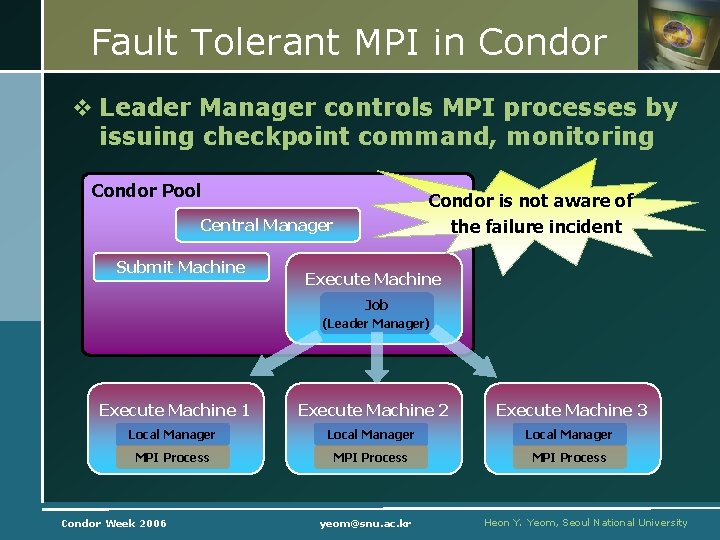

Fault Tolerant MPI in Condor

- The Leader Manager controls the MPI processes by issuing checkpoint commands and monitoring them
- Condor is not aware of the failure incident

Diagram labels: Condor Pool, Central Manager, Submit Machine, Execute Machine, Job (Leader Manager), Execute Machine 1, Execute Machine 2, Execute Machine 3, Local Manager, MPI Process

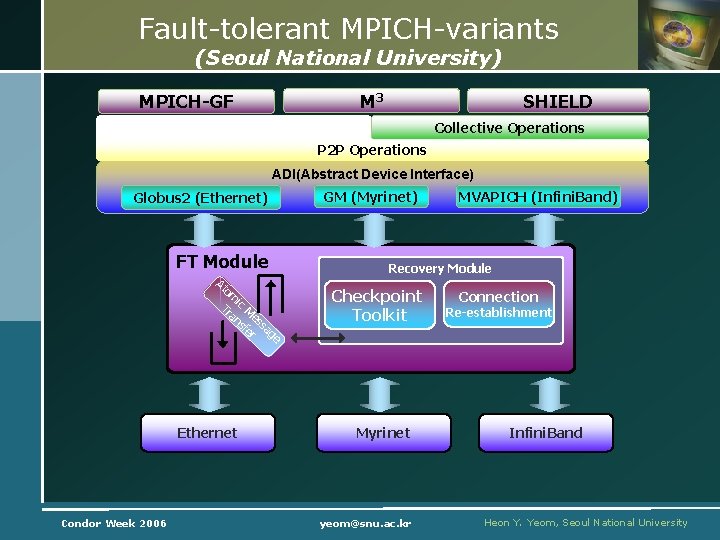

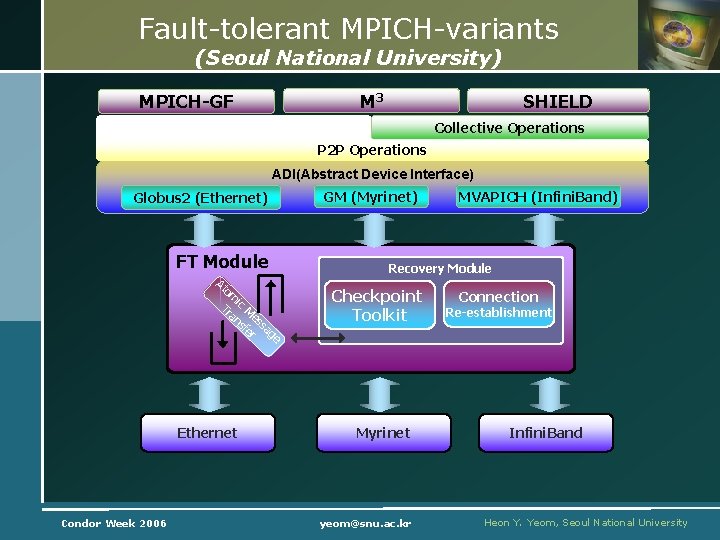

Fault-tolerant MPICH variants (Seoul National University)

- Variants: M3, MPICH-GF, SHIELD
- Shared upper layers: Collective Operations, P2P Operations, ADI (Abstract Device Interface)
- Devices: Globus2 (Ethernet), GM (Myrinet), MVAPICH (InfiniBand)
- Other labels: FT Module, Atomic Message Transfer, Recovery Module, Connection Re-establishment, Checkpoint Toolkit
- Networks: Ethernet, Myrinet, InfiniBand

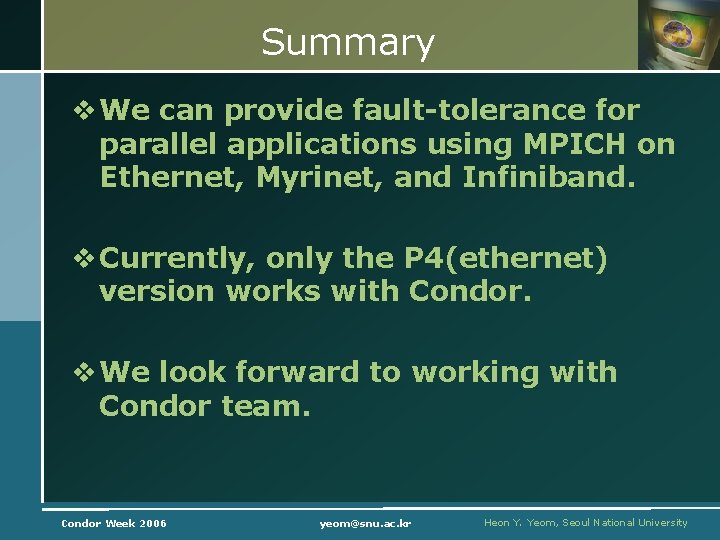

Summary

- We can provide fault tolerance for parallel applications using MPICH on Ethernet, Myrinet, and InfiniBand.
- Currently, only the P4 (Ethernet) version works with Condor.
- We look forward to working with the Condor team.