FTK SIMULATION G. Volpi HPC working group meeting

- Slides: 17


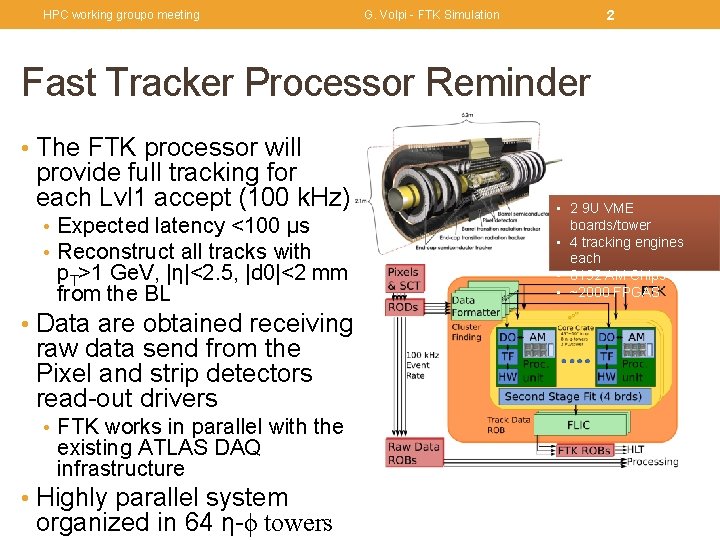

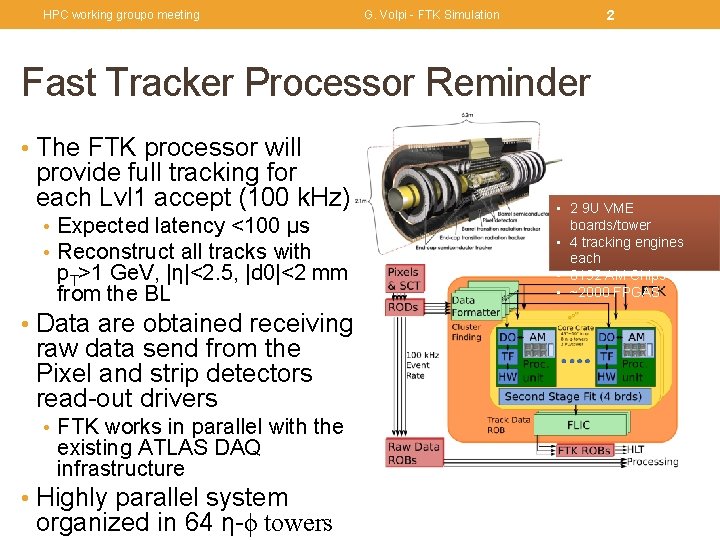

Fast Tracker Processor Reminder

- The FTK processor will provide full tracking for each Level-1 accept (100 kHz)
- Expected latency < 100 µs
- Reconstructs all tracks with pT > 1 GeV, |η| < 2.5, |d0| < 2 mm from the BL
- Data are obtained by receiving the raw data sent from the Pixel and strip detector read-out drivers
- FTK works in parallel with the existing ATLAS DAQ infrastructure
- Highly parallel system organized in 64 η-ϕ towers
- 2 9U VME boards/tower
- 4 tracking engines each
- 8192 AM chips
- ~2000 FPGAs

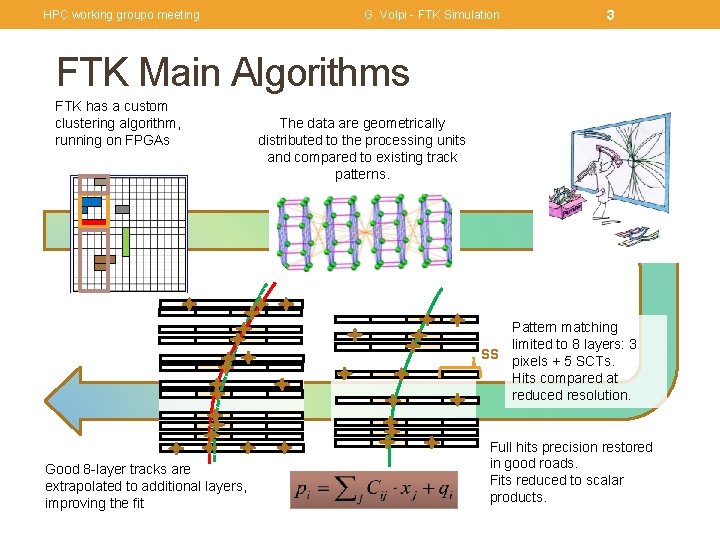

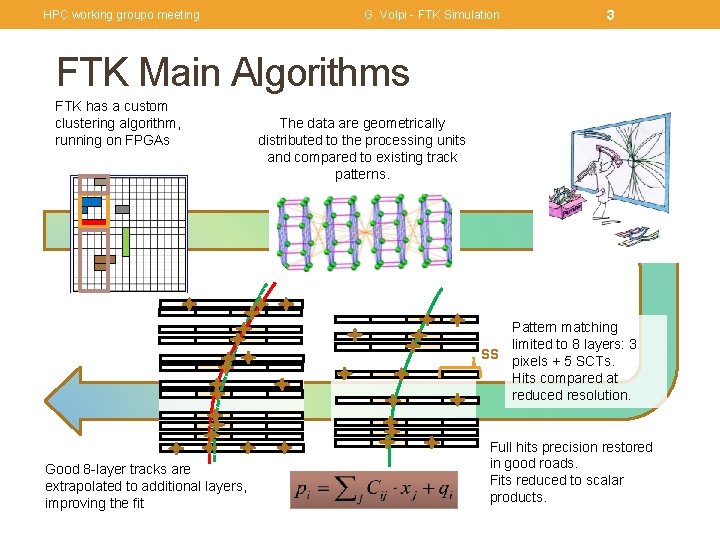

FTK Main Algorithms

- FTK has a custom clustering algorithm, running on FPGAs.
- The data are geometrically distributed to the processing units and compared to existing track patterns.
- Pattern matching is limited to 8 layers: 3 SS pixels + 5 SCTs. Hits are compared at reduced resolution.
- Good 8-layer tracks are extrapolated to additional layers, improving the fit.
- Full hit precision is restored in good roads. Fits are reduced to scalar products.

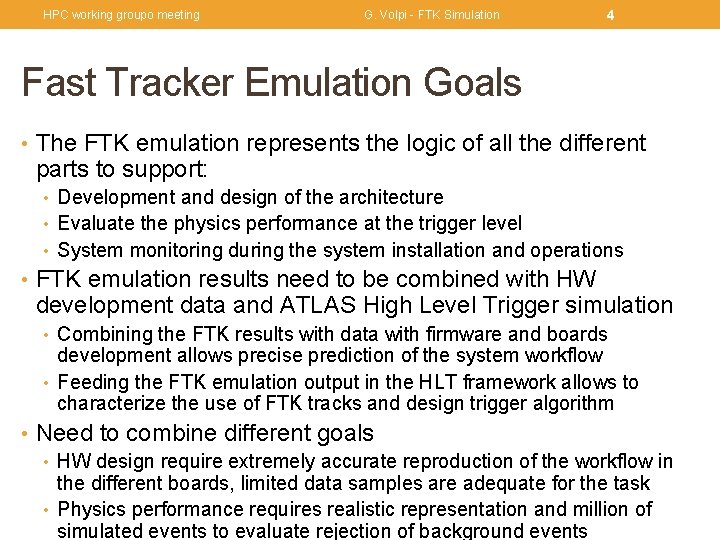

Fast Tracker Emulation Goals

- The FTK emulation represents the logic of all the different parts in order to support:
  - Development and design of the architecture
  - Evaluation of the physics performance at the trigger level
  - System monitoring during installation and operations
- FTK emulation results need to be combined with HW development data and the ATLAS High Level Trigger (HLT) simulation
  - Combining the FTK results with data from firmware and board development allows precise prediction of the system workflow
  - Feeding the FTK emulation output into the HLT framework makes it possible to characterize the use of FTK tracks and to design trigger algorithms
- Different goals need to be combined
  - HW design requires an extremely accurate reproduction of the workflow in the different boards; limited data samples are adequate for the task
  - Physics performance requires a realistic representation and millions of simulated events to evaluate the rejection of background events

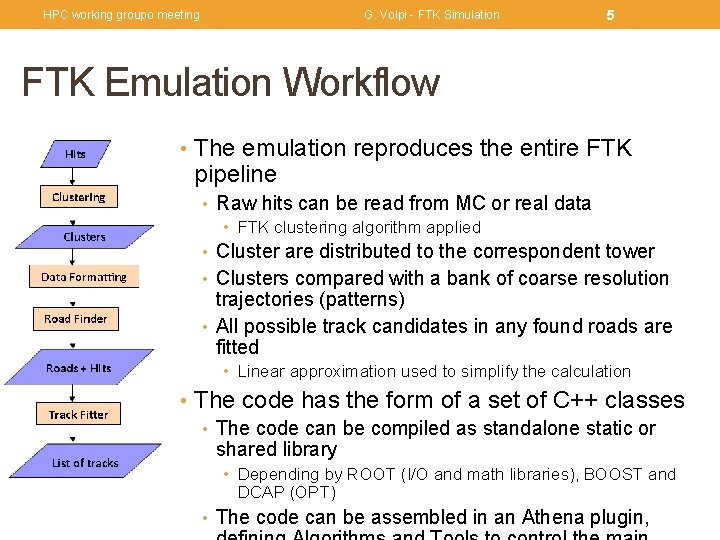

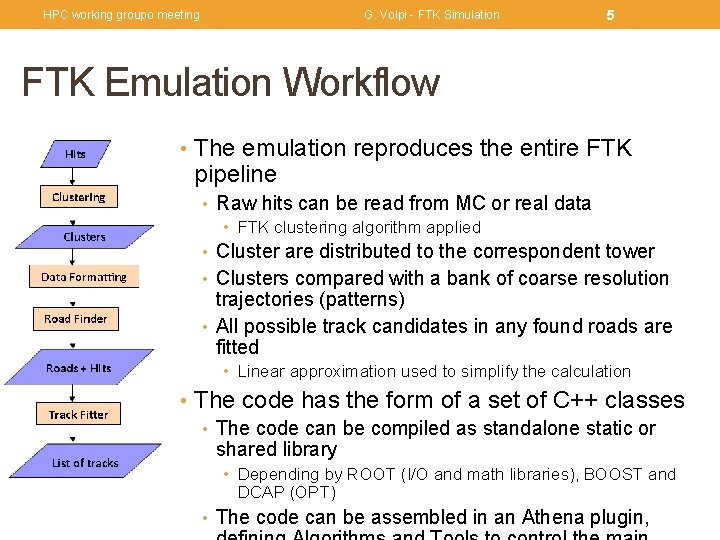

FTK Emulation Workflow

- The emulation reproduces the entire FTK pipeline
  - Raw hits can be read from MC or real data
  - The FTK clustering algorithm is applied
  - Clusters are distributed to the corresponding tower
  - Clusters are compared with a bank of coarse-resolution trajectories (patterns)
  - All possible track candidates in any found road are fitted
  - A linear approximation is used to simplify the calculation
- The code has the form of a set of C++ classes
  - The code can be compiled as a standalone static or shared library
  - It depends on ROOT (I/O and math libraries), BOOST and DCAP (optional)
  - The code can be assembled in an Athena plugin,

FTK Full Emulation Reminder

- The FTK full emulation was initially developed from an existing standalone code describing the functionality of the main components
  - Most detailed available representation of the hardware
  - Not an exact replica of the boards' firmware, but very close
  - Differences are expected to be reduced, or removed, where this is possible and feasible
- Implementing algorithms designed for custom HW is resource intensive on standard computing resources
  - The whole AM system will have ~25 TB/s of data I/O and will be able to make about 4*10^17 32-bit comparisons per second; a single large FPGA can make up to 1 TFlop/s
  - It is hard to reach similar numbers, for these very specific tasks, on regular CPU-based systems
- The full simulation is used for a very broad range of studies:
  - HW dataflow, required to predict the impact of HW decisions

FTK Full Emulation Resources

- Large use of memory and disk resources to load the values that will be loaded in the AM and in the FPGAs used for the track fitting
  - 10^9 patterns, 9 integers each: 36 bytes per pattern, ~35 GB in memory
  - 10^6 1st-stage fit constants, 11*12 floats, 500 MB in memory
  - 1.6*10^6 2nd-stage fit constants, 16*17 floats, 1.7 GB in memory
  - Other auxiliary configuration data will be within 100 MB
  - Disk usage is about 50% less, due to compression
- The requirements don't allow running the emulation in a single job
  - A general strategy was defined to split the simulation into small orthogonal parts and then merge the results
  - The current splitting follows the subdivision into 64 towers, with the possibility of further segmentation (currently 4 partitions in each tower, a.k.a. 4 sub-regions)
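The memory figures quoted on this slide can be roughly cross-checked with a few lines of arithmetic. The sketch below assumes 4-byte integers and floats (the slide only gives 36 bytes for 9 integers, which is consistent with that) and, purely for illustration, an equal split of the configuration over 64 towers x 4 sub-regions. The function and variable names are hypothetical and are not part of the FTK code.

```python
# Back-of-the-envelope check of the configuration sizes quoted on the slide.
# Assumption: every integer and float occupies 4 bytes.
BYTES_PER_WORD = 4

def size_bytes(n_items: int, words_per_item: int) -> int:
    """Memory needed for n_items entries of words_per_item 4-byte words."""
    return n_items * words_per_item * BYTES_PER_WORD

pattern_bank = size_bytes(10**9, 9)             # 10^9 patterns, 9 integers each
fit_stage1 = size_bytes(10**6, 11 * 12)         # 1st-stage fit constants
fit_stage2 = size_bytes(1_600_000, 16 * 17)     # 2nd-stage fit constants
total = pattern_bank + fit_stage1 + fit_stage2

# Assumption: equal split over 64 towers x 4 sub-regions (illustration only).
n_jobs = 64 * 4
per_job = total / n_jobs

for name, value in [("pattern bank", pattern_bank),
                    ("1st-stage constants", fit_stage1),
                    ("2nd-stage constants", fit_stage2),
                    ("total", total),
                    ("per job (equal split)", per_job)]:
    print(f"{name:>22}: {value / 1e9:8.3f} GB")
```

The results land close to the quoted ~35 GB, 500 MB and 1.7 GB, which suggests the slide numbers are rounded sizes of this kind.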

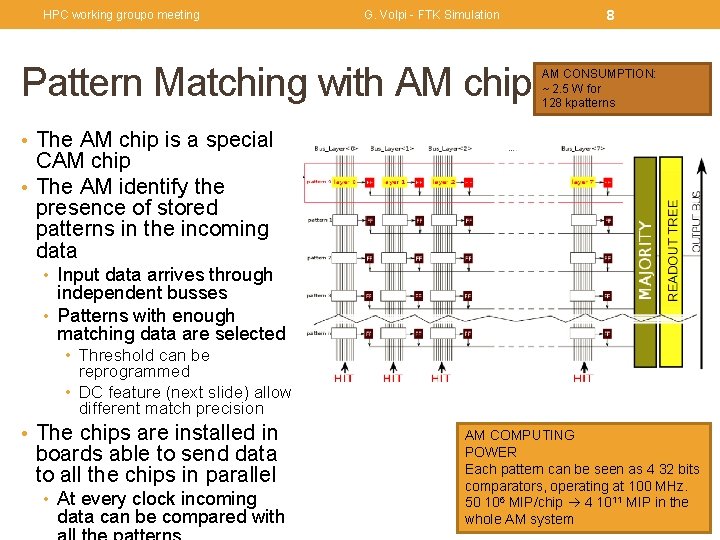

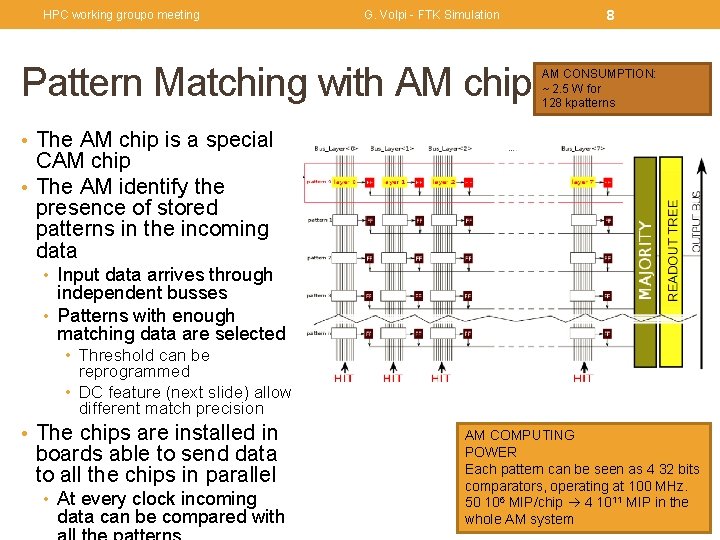

Pattern Matching with AM chip

- The AM chip is a special CAM chip
- The AM identifies the presence of stored patterns in the incoming data
  - Input data arrive through independent buses
  - Patterns with enough matching data are selected
  - The threshold can be reprogrammed
  - The DC feature (next slide) allows different match precision
- The chips are installed in boards able to send data to all the chips in parallel
  - At every clock cycle, incoming data can be compared with

AM CONSUMPTION: ~2.5 W for 128 kpatterns

AM COMPUTING POWER: each pattern can be seen as 4 32-bit comparators operating at 100 MHz. 50*10^6 MIP/chip, 4*10^11 MIP in the whole AM system.
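The computing-power figures can be reproduced from the numbers given in the slides (128 kpatterns per chip, 4 comparators per pattern, 100 MHz, 8192 AM chips). The sketch below is only an arithmetic check; it assumes that "128 k" means 128,000 and that "MIP" is to be read as millions of comparisons per second, neither of which is stated explicitly in the source.

```python
# Arithmetic check of the AM computing power quoted on the slide.
PATTERNS_PER_CHIP = 128_000      # assumption: "128 kpatterns" read as 128,000
COMPARATORS_PER_PATTERN = 4      # 4 32-bit comparators per pattern
CLOCK_HZ = 100_000_000           # 100 MHz
N_CHIPS = 8192                   # AM chips in the whole system

def comparisons_per_second(n_chips: int = 1) -> int:
    """32-bit comparisons per second performed by n_chips AM chips."""
    return n_chips * PATTERNS_PER_CHIP * COMPARATORS_PER_PATTERN * CLOCK_HZ

per_chip = comparisons_per_second()
whole_system = comparisons_per_second(N_CHIPS)

# Assumption: "MIP" is read as millions of comparisons per second.
print(f"per chip:     {per_chip / 1e6:.3g} million comparisons/s")
print(f"whole system: {whole_system / 1e6:.3g} million comparisons/s")
print(f"whole system: {whole_system:.3g} comparisons/s")
```

Under these assumptions the per-chip value is about 5*10^7 and the system value about 4*10^11 (in millions per second), matching the slide, and the system total of about 4*10^17 comparisons per second agrees with the figure quoted for the whole AM system.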

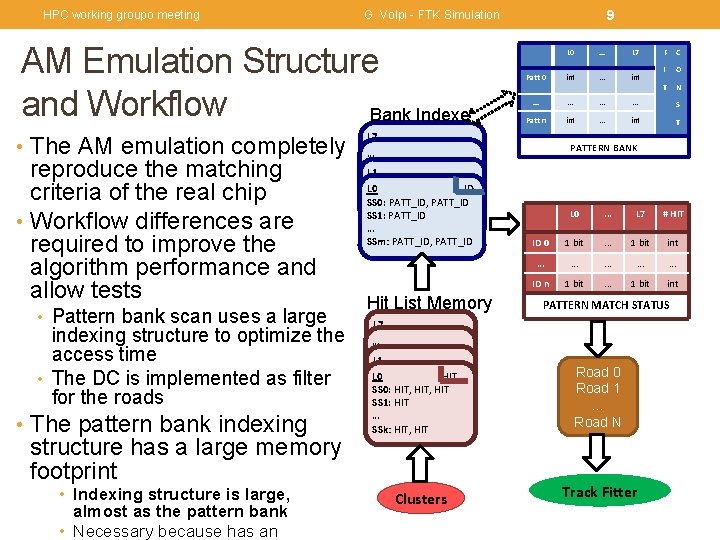

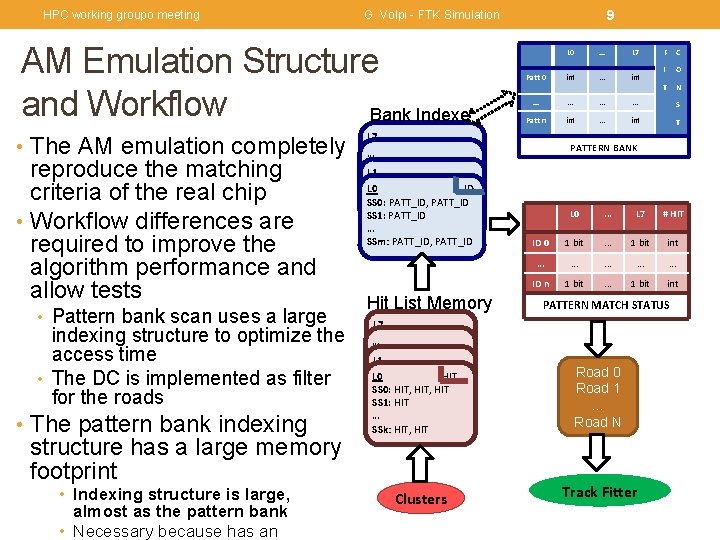

AM Emulation Structure and Workflow

- The AM emulation completely reproduces the matching criteria of the real chip
- Workflow differences are required to improve the algorithm performance and allow tests
  - The pattern bank scan uses a large indexing structure to optimize the access time
  - The DC is implemented as a filter for the roads
- The pattern bank indexing structure has a large memory footprint
  - The indexing structure is almost as large as the pattern bank
  - Necessary because has an

Diagram labels:

- PATTERN BANK: patterns Patt 0 ... Patt n, each stored as one int per layer L0 ... L7
- Bank Indexes: for each layer L0 ... L7, a list of pattern IDs per superstrip (SS 0: PATT_ID, PATT_ID; SS 1: PATT_ID; ... SS m: PATT_ID, PATT_ID)
- Hit List Memory: for each layer L0 ... L7, a list of hits per superstrip (SS 0: HIT, HIT; SS 1: HIT; ... SS k: HIT, HIT)
- PATTERN MATCH STATUS: for each pattern ID 0 ... ID n, 1 bit per layer L0 ... L7 and an int (# HIT)
- Data flow: Clusters, then Road 0, Road 1 ... Road N, then Track Fitter
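The structures named in the diagram (pattern bank, per-layer bank indexes, pattern match status) suggest an inverted-index lookup. The following is a minimal sketch of that idea, not the actual FTK emulation code: the function names, the use of Python dictionaries, and the default threshold of 7 matched layers out of 8 are all assumptions made for illustration.

```python
from collections import defaultdict

N_LAYERS = 8  # pattern matching uses 8 layers

def build_index(bank):
    """Build one map per layer: superstrip ID -> list of pattern IDs.

    bank is a list of patterns, each a tuple of N_LAYERS superstrip IDs.
    """
    index = [defaultdict(list) for _ in range(N_LAYERS)]
    for patt_id, pattern in enumerate(bank):
        for layer, ss in enumerate(pattern):
            index[layer][ss].append(patt_id)
    return index

def find_roads(bank, index, fired, threshold=7):
    """Return the IDs of patterns with at least `threshold` matched layers.

    fired is a list of N_LAYERS sets holding the superstrips with hits.
    The match status is kept as one bit per layer for each pattern.
    """
    status = [0] * len(bank)
    for layer in range(N_LAYERS):
        for ss in fired[layer]:
            # Only patterns containing this superstrip are touched.
            for patt_id in index[layer].get(ss, ()):
                status[patt_id] |= 1 << layer
    return [pid for pid, mask in enumerate(status)
            if bin(mask).count("1") >= threshold]

# Toy bank: pattern 0 matches 8 layers, pattern 1 matches 7, pattern 2 only 3.
bank = [(1, 1, 1, 1, 1, 1, 1, 1),
        (2, 2, 2, 2, 2, 2, 2, 9),
        (3, 3, 3, 5, 5, 5, 5, 5)]
fired = [{1, 2, 3}, {1, 2, 3}, {1, 2, 3}, {1, 2}, {1, 2}, {1, 2}, {1, 2}, {1, 2}]
roads = find_roads(bank, build_index(bank), fired)
print(roads)
```

The index stores every pattern ID once per layer, so its size is comparable to the bank itself, which is in line with the memory footprint remark on the slide.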

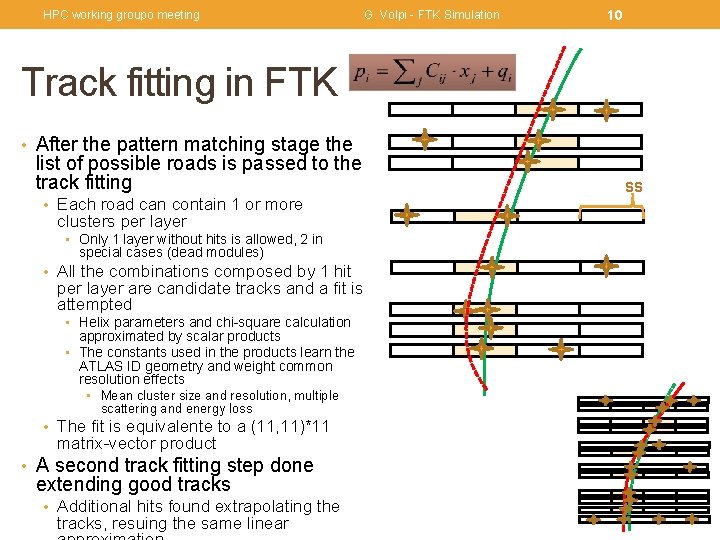

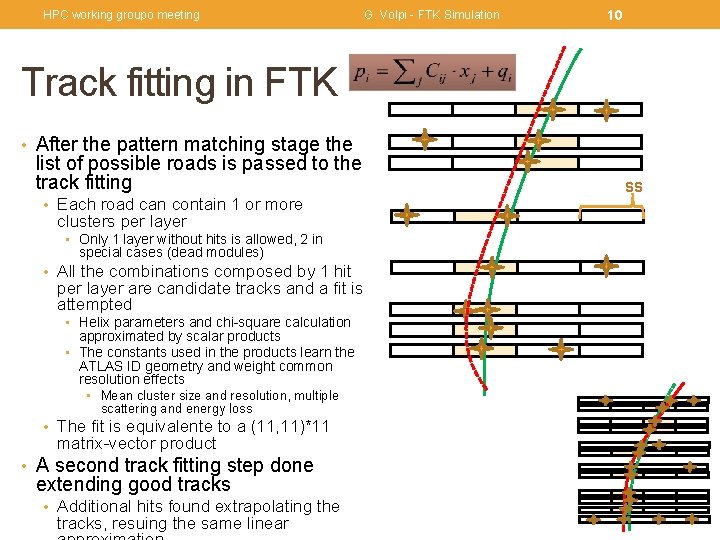

Track fitting in FTK

- After the pattern matching stage, the list of possible roads is passed to the track fitting
  - Each road can contain 1 or more clusters per layer
  - Only 1 layer without hits is allowed, 2 in special cases (dead modules)
  - All the combinations composed of 1 hit per layer are candidate tracks, and a fit is attempted for each
- Helix parameters and chi-square calculation are approximated by scalar products
  - The constants used in the products encode the ATLAS ID geometry and weight common resolution effects
  - Mean cluster size and resolution, multiple scattering and energy loss
  - The fit is equivalent to a (11, 11)*11 matrix-vector product
- A second track fitting step is done by extending good tracks
  - Additional hits are found by extrapolating the tracks, reusing the same linear SS
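A linearized fit of this kind can be sketched as a matrix-vector product plus an offset, applied to every combination of one hit per layer. The code below is an illustrative sketch only: the split of the output rows into helix parameters followed by chi-square components, the offset vector, and all names are assumptions (an 11x11 matrix plus 11 offsets would be consistent with the 11*12 floats per constant set quoted for the first stage, but the source does not spell this out).

```python
from itertools import product

def linear_fit(coords, matrix, offsets):
    """Evaluate matrix @ coords + offsets; each output is one scalar product."""
    return [sum(a * x for a, x in zip(row, coords)) + off
            for row, off in zip(matrix, offsets)]

def fit_track(coords, matrix, offsets, n_params=5):
    """Split the linear outputs into track parameters and a chi-square.

    Assumption: the first n_params rows give the helix parameters and the
    remaining rows give components whose squares sum to the chi-square.
    """
    out = linear_fit(coords, matrix, offsets)
    params = out[:n_params]
    chi2 = sum(c * c for c in out[n_params:])
    return params, chi2

def candidates(road_hits):
    """Yield flat coordinate lists for all one-hit-per-layer combinations.

    road_hits holds, for each layer, a list of hits (tuples of coordinates).
    """
    for combo in product(*road_hits):
        yield [x for hit in combo for x in hit]

# Toy example with 2 coordinates, 1 parameter and 1 chi-square component.
toy_matrix = [[1, 0], [0, 2]]
toy_offsets = [0.5, -8]
toy_params, toy_chi2 = fit_track([3, 4], toy_matrix, toy_offsets, n_params=1)
toy_combos = list(candidates([[(1, 2), (3, 4)], [(5,)]]))
print(toy_params, toy_chi2, toy_combos)
```

Because the number of candidates is the product of the hit multiplicities per layer, roads with several clusters per layer multiply the number of fits quickly, which is one reason the fit is kept to simple scalar products.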

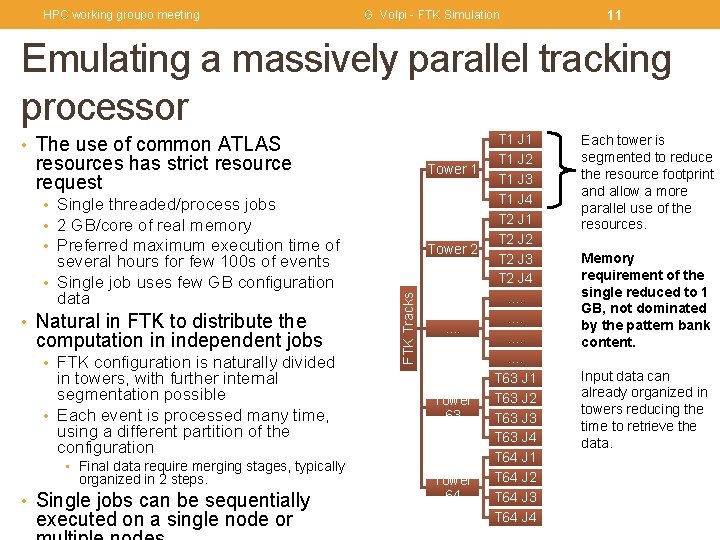

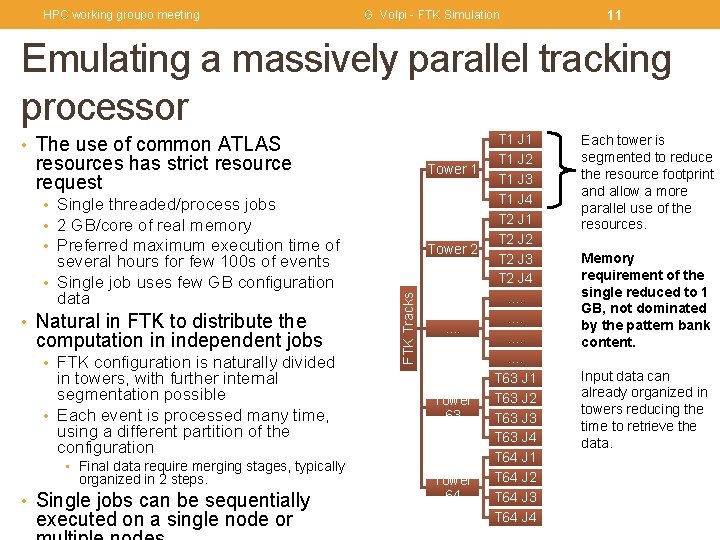

Emulating a massively parallel tracking processor

- The use of common ATLAS resources comes with strict resource requirements
  - Single-threaded/single-process jobs
  - 2 GB/core of real memory
  - Preferred maximum execution time of several hours for a few 100s of events
- It is natural in FTK to distribute the computation over independent jobs
  - The FTK configuration is naturally divided into towers, with further internal segmentation possible
  - Each event is processed many times, each time using a different partition of the configuration
  - Final data require merging stages, typically organized in 2 steps
  - Single jobs can be sequentially executed on a single node or
  - A single job uses a few GB of configuration data

Diagram labels: Tower 1, Tower 2 ... Tower 63, Tower 64; jobs T1J1, T1J2, T1J3, T1J4, T2J1 ... T64J4; output FTK Tracks.

Each tower is segmented to reduce the resource footprint and allow a more parallel use of the resources. The memory requirement of a single job is reduced to 1 GB, not dominated by the pattern bank content. Input data can already be organized in towers, reducing the time to retrieve the data.
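The job layout in the diagram (64 towers, 4 jobs per tower, labels T1J1 to T64J4) and a two-step merge can be sketched as follows. This is a hypothetical illustration: the slide only says that merging is typically organized in 2 steps, so reading them as "sub-regions into towers" and then "towers into the event" is an assumption, as are all names and the representation of tracks as plain lists.

```python
N_TOWERS = 64
N_SUBREGIONS = 4

def job_labels():
    """Labels of all independent jobs, following the diagram's TxJy scheme."""
    return [f"T{t}J{j}"
            for t in range(1, N_TOWERS + 1)
            for j in range(1, N_SUBREGIONS + 1)]

def merge_two_steps(results):
    """Merge per-job track lists in two steps.

    results maps (tower, subregion) -> list of tracks found by that job.
    Step 1 merges the sub-regions of each tower; step 2 merges the towers.
    Assumption: this ordering of the two steps is illustrative only.
    """
    per_tower = {}
    for (tower, _subregion), tracks in sorted(results.items()):
        per_tower.setdefault(tower, []).extend(tracks)
    event_tracks = []
    for tower in sorted(per_tower):
        event_tracks.extend(per_tower[tower])
    return per_tower, event_tracks

labels = job_labels()
toy_results = {(1, 1): ["a"], (1, 2): ["b"], (2, 1): [], (2, 4): ["c"]}
toy_per_tower, toy_event = merge_two_steps(toy_results)
print(len(labels), labels[0], labels[-1], toy_per_tower, toy_event)
```

With this layout each event is processed by 256 independent jobs, each loading only its own partition of the configuration.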

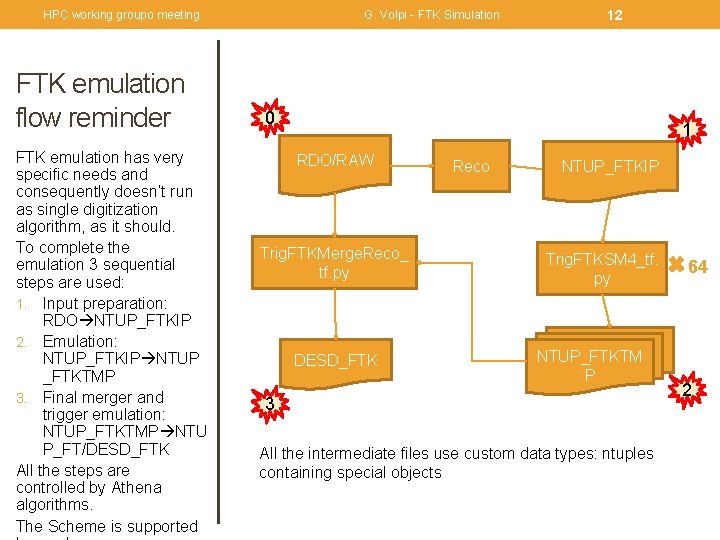

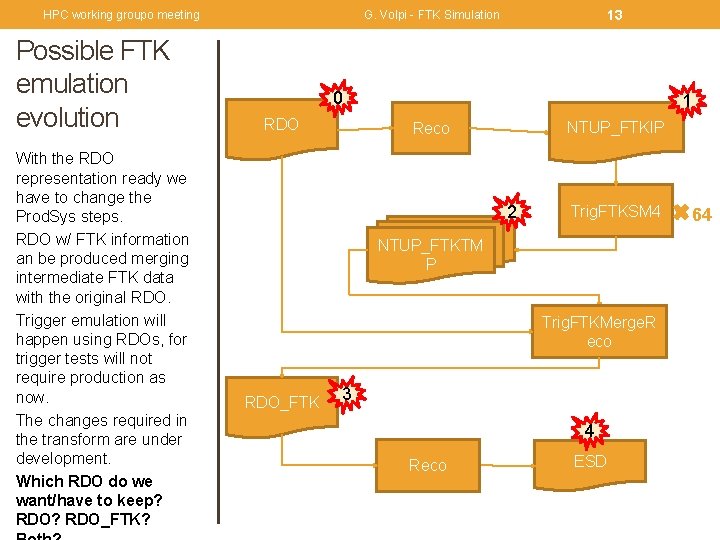

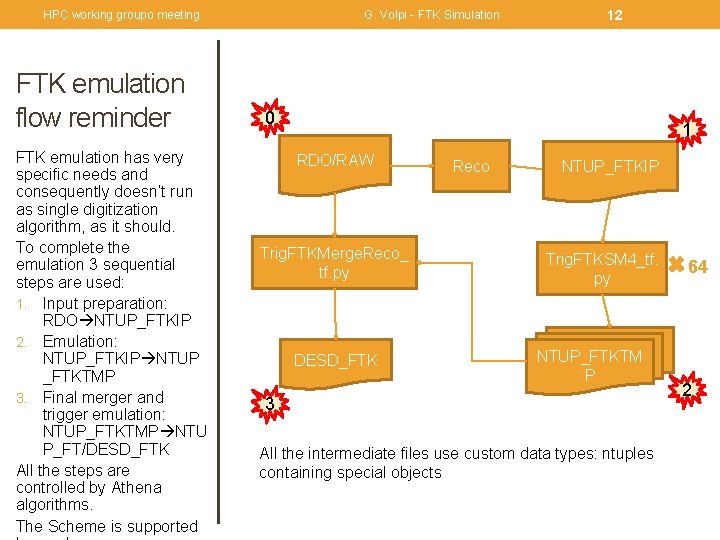

FTK emulation flow reminder

FTK emulation has very specific needs and consequently doesn't run as a single digitization algorithm, as it should. To complete the emulation, 3 sequential steps are used:

1. Input preparation: RDO → NTUP_FTKIP
2. Emulation: NTUP_FTKIP → NTUP_FTKTMP
3. Final merger and trigger emulation: NTUP_FTKTMP → NTUP_FT/DESD_FTK

All the steps are controlled by Athena algorithms. The Scheme is supported

All the intermediate files use custom data types: ntuples containing special objects.

Diagram labels: RDO/RAW, Reco, NTUP_FTKIP, TrigFTKSM4_tf.py, NTUP_FTKTMP, TrigFTKMergeReco_tf.py, DESD_FTK; steps numbered 0 to 3, with the number 64 marked on the diagram.

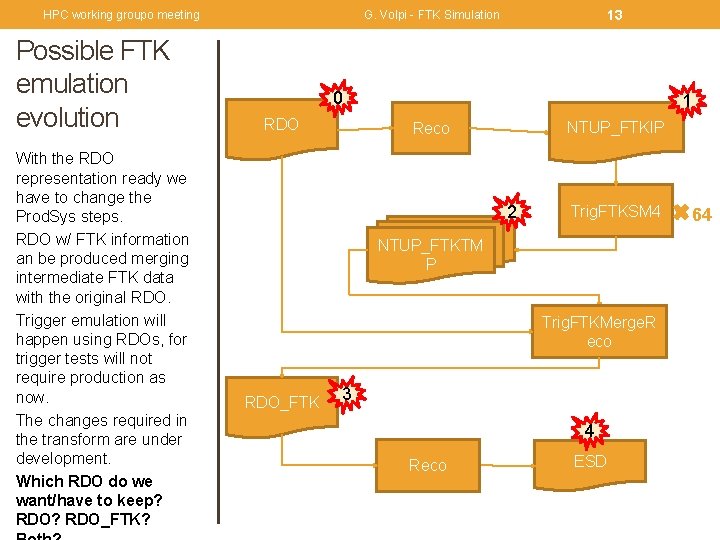

Possible FTK emulation evolution

With the RDO representation ready, we have to change the ProdSys steps. RDOs with FTK information can be produced by merging intermediate FTK data with the original RDO. Trigger emulation will happen using RDOs, so trigger tests will not require a production as they do now. The changes required in the transform are under development. Which RDO do we want/have to keep? RDO_FTK?

Diagram labels: RDO, Reco, NTUP_FTKIP, TrigFTKSM4, NTUP_FTKTMP, TrigFTKMergeReco, RDO_FTK, Reco, ESD; steps numbered 0 to 4, with the number 64 marked on the diagram.

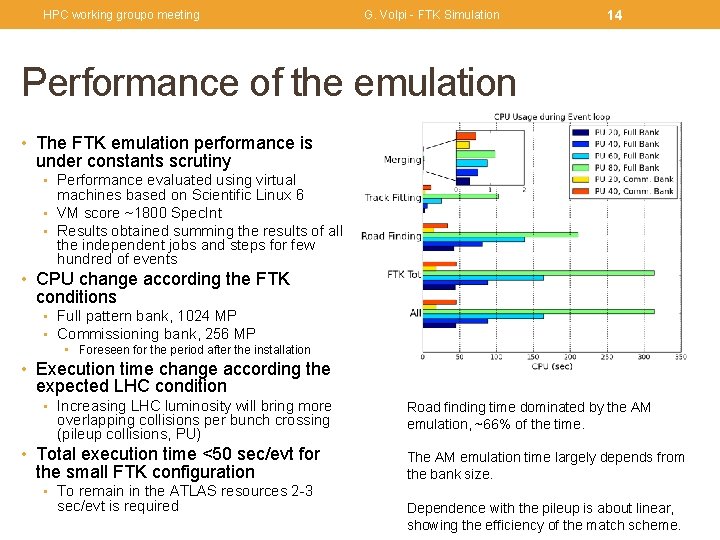

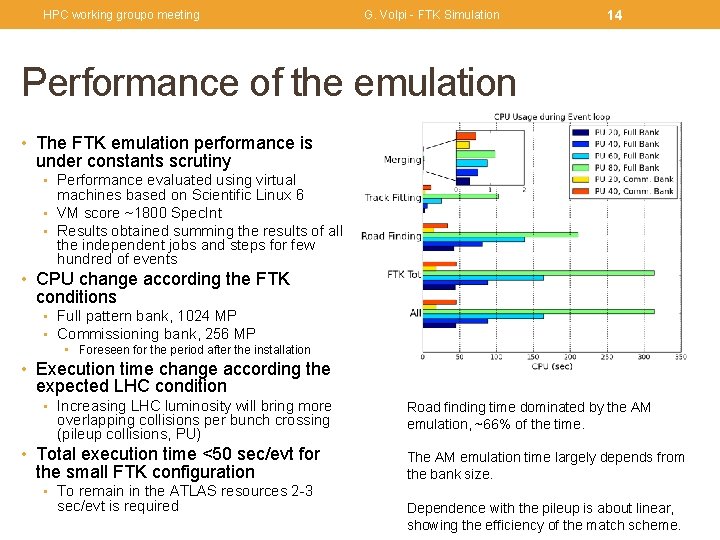

Performance of the emulation

- The FTK emulation performance is under constant scrutiny
  - Performance is evaluated using virtual machines based on Scientific Linux 6
  - VM score ~1800 SpecInt
  - Results are obtained by summing the results of all the independent jobs and steps for a few hundred events
- CPU usage changes according to the FTK conditions
  - Full pattern bank, 1024 MP
  - Commissioning bank, 256 MP
  - Foreseen for the period after the installation
- Execution time changes according to the expected LHC conditions
  - Increasing LHC luminosity will bring more overlapping collisions per bunch crossing (pileup collisions, PU)
- Total execution time <50 sec/evt for the small FTK configuration
- To remain within the ATLAS resources, 2-3 sec/evt is required

Road finding time is dominated by the AM emulation, ~66% of the time. The AM emulation time largely depends on the bank size.

The dependence on the pileup is about linear, showing the efficiency of the match scheme.

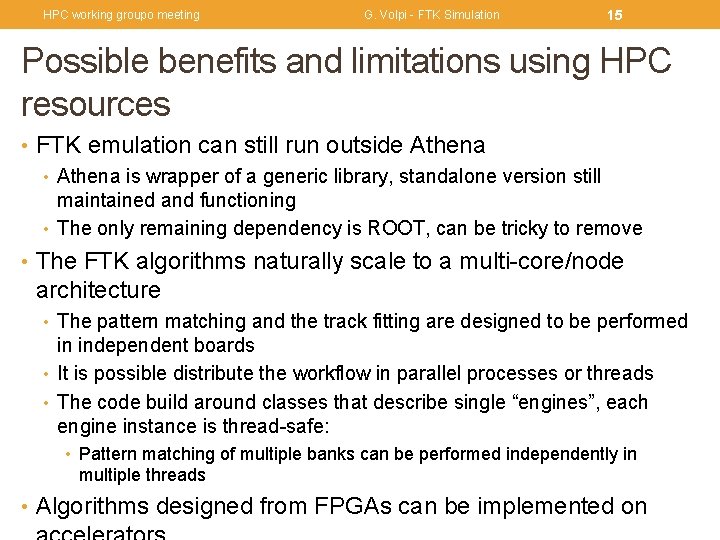

Possible benefits and limitations using HPC resources

- FTK emulation can still run outside Athena
  - Athena is a wrapper of a generic library; the standalone version is still maintained and functioning
  - The only remaining dependency is ROOT, which can be tricky to remove
- The FTK algorithms naturally scale to a multi-core/multi-node architecture
  - The pattern matching and the track fitting are designed to be performed in independent boards
  - It is possible to distribute the workflow over parallel processes or threads
  - The code is built around classes that describe single "engines"; each engine instance is thread-safe:
  - Pattern matching of multiple banks can be performed independently in multiple threads
  - Algorithms designed for FPGAs can be implemented on
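The "one engine per bank, one thread per engine" idea can be illustrated with a small sketch. The actual FTK code is C++; the Python below only shows the structure, with a hypothetical `PatternEngine` class whose instances own their bank and share no mutable state. In CPython, threads mainly illustrate the decomposition rather than deliver a speed-up for pure-Python loops.

```python
from concurrent.futures import ThreadPoolExecutor

class PatternEngine:
    """Hypothetical engine owning one pattern bank (no shared mutable state)."""

    def __init__(self, bank, threshold):
        self.bank = bank            # list of tuples of superstrip IDs
        self.threshold = threshold  # minimum number of matched layers

    def match(self, fired):
        """Return IDs of patterns with at least `threshold` matched layers."""
        roads = []
        for patt_id, pattern in enumerate(self.bank):
            n_matched = sum(1 for layer, ss in enumerate(pattern)
                            if ss in fired[layer])
            if n_matched >= self.threshold:
                roads.append(patt_id)
        return roads

def match_all(engines, fired, max_workers=4):
    """Run every engine on the same input in its own thread, keeping order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda engine: engine.match(fired), engines))

# Toy example: two independent banks with 3 layers, threshold of 2 layers.
engines = [PatternEngine([(1, 1, 1), (2, 2, 2)], threshold=2),
           PatternEngine([(1, 9, 9), (1, 1, 9)], threshold=2)]
fired = [{1}, {1}, {1}]
parallel_roads = match_all(engines, fired)
print(parallel_roads)
```

Since each engine reads only its own bank and the shared, read-only input, the parallel result is identical to running the engines one after the other.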

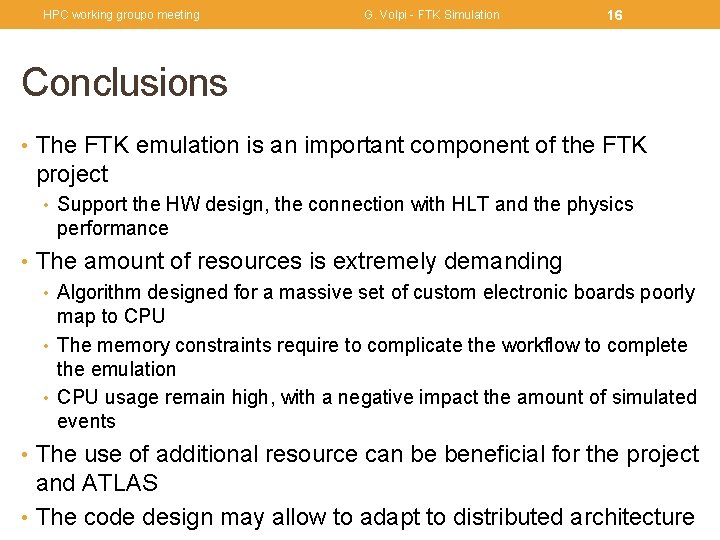

Conclusions

- The FTK emulation is an important component of the FTK project
  - It supports the HW design, the connection with the HLT and the physics performance
- The amount of resources required is extremely demanding
  - Algorithms designed for a massive set of custom electronic boards map poorly to CPUs
  - The memory constraints require complicating the workflow to complete the emulation
  - CPU usage remains high, with a negative impact on the number of simulated events
- The use of additional resources can be beneficial for the project and for ATLAS
  - The code design may allow adapting to a distributed architecture

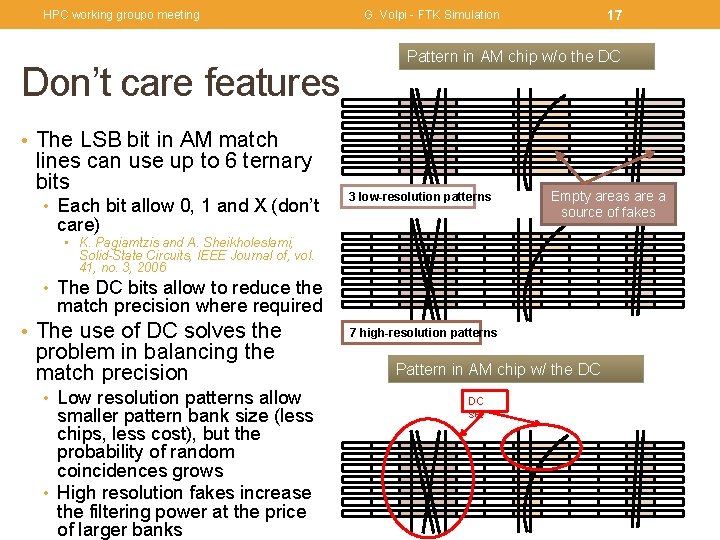

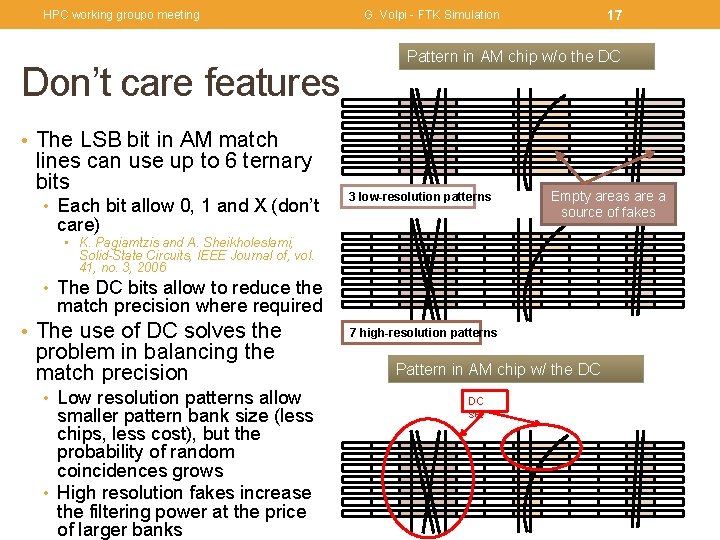

Don't care features

- The LSB bit in AM match lines can use up to 6 ternary bits
  - Each bit allows 0, 1 and X (don't care)
  - K. Pagiamtzis and A. Sheikholeslami, IEEE Journal of Solid-State Circuits, vol. 41, no. 3, 2006
- The DC bits allow reducing the match precision where required
- The use of DC solves the problem of balancing the match precision
  - Low-resolution patterns allow a smaller pattern bank size (fewer chips, lower cost), but the probability of random coincidences grows
  - High-resolution patterns increase the filtering power at the price of larger banks

Figure labels: Pattern in AM chip w/o the DC: 3 low-resolution patterns (empty areas are a source of fakes) or 7 high-resolution patterns. Pattern in AM chip w/ the DC: DC set.
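Ternary matching with don't-care bits can be sketched with bit masks. This is an illustration of the general ternary CAM idea, not the AM chip logic itself: representing a stored layer value as a pair (value, dc_mask), where bits set in the mask are ignored in the comparison, is an assumption, and the function names are hypothetical.

```python
def ternary_match(hit_ss: int, value: int, dc_mask: int) -> bool:
    """True if hit_ss equals value on every bit not marked as don't care.

    Bits set to 1 in dc_mask are the X (don't care) bits.
    """
    return ((hit_ss ^ value) & ~dc_mask) == 0

def covered(value: int, dc_mask: int, n_bits: int):
    """List all n_bits-wide superstrip IDs accepted by (value, dc_mask)."""
    return [ss for ss in range(1 << n_bits)
            if ternary_match(ss, value, dc_mask)]

# With no DC bit set, only the exact high-resolution superstrip matches.
exact = covered(0b1010, 0b0000, n_bits=4)
# With the two least significant bits set to X, four superstrips match.
wide = covered(0b1010, 0b0011, n_bits=4)
print(exact, wide)
```

Each don't-care bit doubles the area covered by a pattern on that layer, so the match precision can be lowered only where needed instead of storing a full set of high-resolution patterns or accepting a uniformly coarse one.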