
From Measuring Impact to Measuring Contribution: Rethinking approach to evaluating complex Resilience Programmes

Contribution Analysis (CA) and Qualitative Comparative Analysis (QCA) approaches

Presented By: Grace Igweta

October 2019

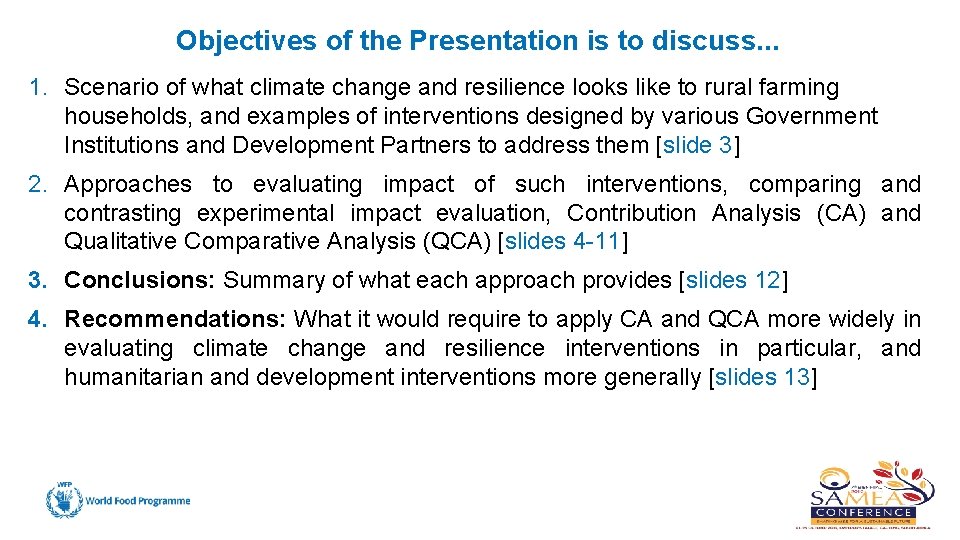

Objectives of the Presentation: to discuss...

1. A scenario of what climate change and resilience look like to rural farming households, and examples of interventions designed by various Government Institutions and Development Partners to address them [slide 3]
2. Approaches to evaluating the impact of such interventions, comparing and contrasting experimental impact evaluation, Contribution Analysis (CA) and Qualitative Comparative Analysis (QCA) [slides 4-11]
3. Conclusions: Summary of what each approach provides [slide 12]
4. Recommendations: What it would require to apply CA and QCA more widely in evaluating climate change and resilience interventions in particular, and humanitarian and development interventions more generally [slide 13]

Understanding Climate Change & Resilience | Rural HHs

Scenario: Due to climate change, rainfall is unpredictable (it comes late, or is too little or too much). Crops like maize that used to do well no longer do, which leads to low productivity and reduced household incomes, and in turn to deepening poverty, poor household food consumption and malnutrition. During shocks (droughts or floods), families are forced to apply negative coping strategies, including children dropping out of school, families selling productive assets, etc.

Interventions

1. Distribution of drought resistant seeds [FAO/Ministry]
2. Climate Services information [IMO/Meteo Depart/Others]
3. Farmer field schools [FAO]
4. Post-harvest loss reduction and access to market [WFP]
5. Health & Nutrition education [UNFPA, WFP, UNICEF]
6. Empowerment of women smallholder farmers [WFP]
7. Micro-credits [MFIs, NGOs]
8. Savings & Loans [CBOs/NGOs]
9. Public Works [MOPWs, WFP]
10. Insurance [WFP/firms]

Results chain (labels from the slide diagram): Outputs; Immediate Outcomes?; Intermediate Outcomes; Impact. Results shown in the diagram:

- Harvesting practices
- Use of food storage/handling practices and facilities
- Sell surplus to increase income to meet other household needs
- Re-invest
- Diversify livelihoods
- Household food consumption

I am Resilient if:

1. I can feed my family throughout the year…
2. My children are well nourished
3. My children attend school throughout the year
4. My family has access to health services
5. To achieve the above, I don’t apply negative coping strategies
6. And so on…

What is the role of M&E in shaping this, sustainably?

![[1] Experimental Approach to Impact Evaluation: How it works](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-4.jpg)

[1] Experimental Approach to Impact Evaluation: How it works

Impact Evaluation: What is the impact (or causal effect) of a program on an outcome of interest? It looks for the changes in outcome that are directly attributable to the program. It estimates the so-called counterfactual, that is, what the outcome would have been for program participants if they had not participated in the program [World Bank, 2011, pg 7].

Results reported as… Average effect size of the intervention on outcome(s) of interest

Some issues with this approach

1. Idealisation: the assumption that randomization will ensure that all factors likely to influence outcome(s) are distributed identically between treatment and control [ignores human agency?]
2. Causality: the focus is on the effects of the causes rather than on causal processes [it does not say what it is about the programme or context that explains the outcomes]
3. Non-compliance/attrition after randomization
4. Does not account for conversion factors: people’s ability to translate resources distributed by interventions into desired outcomes
5. Average treatment effects: may not tell for whom or how the intervention works; they mask disparities in outcomes and may not explain them
6. Spill-over effects: may or may not be accounted for
7. External validity: the evaluation itself is an intervention [it causes changes]; generalisability of results
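To illustrate issue 5 above, here is a minimal sketch of how an average effect can mask disparities between groups. The household records, the subgroup names (`owns_land`, `landless`) and the outcome scores are all hypothetical and made up for illustration; the estimator is a plain difference in means, not a full impact evaluation design.

```python
from statistics import mean

# Hypothetical household records: (arm, subgroup, outcome score).
# All values are invented for illustration only.
records = [
    ("treatment", "owns_land", 8), ("treatment", "owns_land", 9),
    ("treatment", "landless", 4), ("treatment", "landless", 3),
    ("control", "owns_land", 5), ("control", "owns_land", 4),
    ("control", "landless", 4), ("control", "landless", 3),
]


def average_effect(rows):
    """Difference in mean outcome between treatment and control rows."""
    treated = [y for arm, _, y in rows if arm == "treatment"]
    control = [y for arm, _, y in rows if arm == "control"]
    return mean(treated) - mean(control)


# Average effect over everyone, as an experiment would typically report it.
overall = average_effect(records)

# The same estimator applied within each subgroup.
subgroups = sorted({sub for _, sub, _ in records})
by_subgroup = {
    sub: average_effect([r for r in records if r[1] == sub])
    for sub in subgroups
}

print("overall:", overall)
print("by subgroup:", by_subgroup)
```

In this made-up data the overall average hides the fact that one subgroup gains and the other does not, which is the kind of disparity the slide refers to.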

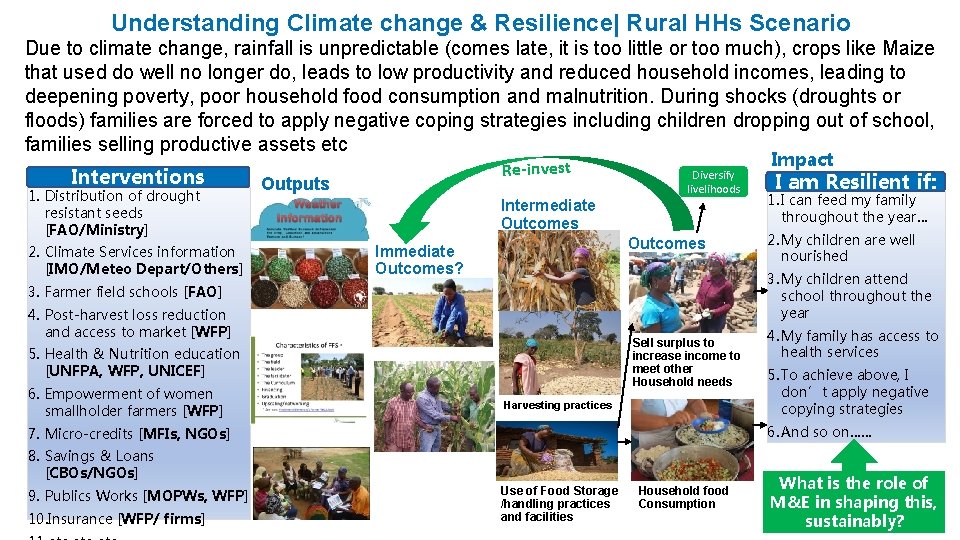

Experimental Approach to Impact Evaluation: Conclusions

Experimentation has its place: for small pilots to test if they work, testing alternatives, experimenting with short-duration projects, etc. There are sophisticated statistical tools to deal with many of the issues. However, for complex interventions, it has limitations.

- Human agency matters: it is people that make or break interventions, and randomization may not always address some critical issues related to human agency:
  - spillovers may be ‘just the way things work’ within the project space;
  - project staff may not culturally agree with randomization principles;
  - participants may have religious/cultural concerns about project approaches, motives, etc., which they find out AFTER they have been targeted through randomization;
  - power relations within the intervention space.
- Understanding what makes an intervention work, fail or work differently can:
  - technically, help redesign interventions to make them work better and/or work in different contexts;
  - politically, explain why certain groups failed to benefit, were non-compliant, monopolized the benefits, etc. This is critical if the interventions are to address, rather than reproduce, inequalities already present.
  - These issues affect the political economy of scaling up or extending.

Evaluation is both Technical & Political

![[2] Contribution Analysis: Key Concepts](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-6.jpg)

[2] Contribution Analysis: Key Concepts

Contribution Analysis (CA) is a theory-based approach to evaluating programs delivered in complex and dynamic settings [Mayne, 2001]. It was developed in response to three critical evaluation problems:

- Attribution: how to assess the impact of one particular programme when there are multiple interventions targeting the same population and there is no clear way of allocating or identifying who was exposed to which combination of interventions, or of determining the level of exposure.
- Evaluability: the extent to which an intervention can be evaluated reliably and credibly when, as is often the case, it is not well specified (unclear objectives and outcomes, target population, timeline for expected changes, etc.).
- Complexity: the intervention being evaluated has multiple components, involves multiple partners and stakeholders, has an emergent/evolving focus and is being implemented within a dynamic setting.

![[2] Contribution Analysis Approach: How it works](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-7.jpg)

[2] Contribution Analysis Approach: How it works

CA is an iterative process (Planning; Performance Story + Contribution Story):

1. Set the cause-effect issue
2. Develop postulated theory of change
3. Gather existing evidence
4. Assemble and assess contribution story
5. Seek out additional evidence
6. Revise and strengthen the contribution story
7. In complex settings, assemble and assess the contribution story for each sub-TOC and the contribution story for any general TOC

It is reasonable to conclude that the intervention is contributing to/influencing the desired outcomes if:

1. There is a reasoned theory of change for the intervention (expected TOC): the assumptions about why the intervention is expected to work make sense, are plausible, may be supported by evidence and are agreed by key players.
2. The activities of the intervention were implemented: there is no point assessing the contribution of something that did not happen (sufficient level of implementation?).
3. The theory of change is supported and confirmed by evidence (observed TOC): the chain of expected results occurred. The theory of change has not been disproved.
4. Other contextual factors that are known to affect the desired outcomes have been assessed and are either shown not to have made a significant contribution, or their relative role is recognized.

Results reported as… Evidence and argumentation from which it is reasonable to conclude whether or not the intervention has made an important contribution, and why, with some level of confidence.
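The four conditions for a reasonable contribution claim can be read as a checklist. The sketch below is one hypothetical way to record an evaluator's judgement against them; the class name, field names and helper functions are assumptions made for illustration, and in practice each judgement rests on evidence and argumentation rather than a simple yes/no flag.

```python
from dataclasses import dataclass


@dataclass
class ContributionEvidence:
    """Hypothetical record of the four conditions listed on the slide."""
    reasoned_theory_of_change: bool      # 1. plausible, agreed TOC exists
    activities_implemented: bool         # 2. the intervention actually happened
    theory_supported_by_evidence: bool   # 3. expected chain of results observed
    other_factors_assessed: bool         # 4. contextual factors accounted for


def unmet_conditions(evidence: ContributionEvidence) -> list[str]:
    """Names of the conditions that are not yet satisfied."""
    return [name for name, ok in vars(evidence).items() if not ok]


def contribution_claim_is_reasonable(evidence: ContributionEvidence) -> bool:
    """True only when all four conditions hold."""
    return not unmet_conditions(evidence)


# Example: implementation confirmed, but rival explanations not yet assessed.
draft = ContributionEvidence(True, True, True, False)
print(contribution_claim_is_reasonable(draft))
print(unmet_conditions(draft))
```

Listing the unmet conditions mirrors the iterative nature of CA: they point to where additional evidence is needed before the contribution story is revised.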

![[2] Contribution Analysis Approach: Conclusions](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-8.jpg)

[2] Contribution Analysis Approach: Conclusions

Strengths of CA

- Conducting analysis of contextual and intervening conditions can clarify the logic of interventions and indicate the likelihood of the programme leading to outcomes
- Assesses programme implementation fidelity beyond measuring outputs and outcomes [performance story]
- It is structured and allows use of existing M&E data/evidence already collected [M&E data does not go to waste]
- Examines alternative explanations for the observed outcomes [does not seek to claim impact, seeks to explain impact or lack thereof]

Issues with CA

- Seen as a weak substitute for the traditional experimental design approaches to assessing causality. Approach not fully developed
- Simplicity of the conceptual framework can make it difficult to judge what level of evidence is required, or even the level of effort
- Because CA focuses on the results, sustainability may not be captured in the contribution story unless there was explicit postulation of a theory towards sustainability
- It is iterative and continuous, and may not sit well with traditional ‘thinking’ of evaluation as a one-off assignment; it sits well when thought of as part of M&E for improving performance
- Not necessarily parsimonious: requires in-depth analysis, mixing of methods, iterations

![[3] Qualitative Comparative Analysis (QCA): Definition and Key Concepts](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-9.jpg)

[3] Qualitative Comparative Analysis (QCA): Definition and Key Concepts

Qualitative Comparative Analysis (QCA) is a theory-based and case-based approach that enables systematic comparison of cases, identifying key factors which are responsible for the success of an intervention, allowing for a more nuanced understanding of how different combinations of factors lead to success, and of the influence context has on intervention success [Baptist and Befani, 2015].

- Outcome is the desired change that is being tested/evaluated (e.g. increased productivity)
- Condition is a factor used to explain the presence/absence of an outcome (e.g. improved seeds, farmer training)
- Configuration is the combination of conditions which describes a group of empirically observed or hypothetical cases (e.g. combining distribution of improved seeds with farmer training and provision of timely weather information leads to increased productivity)

Set Membership/QCA Variants

- Crisp set (csQCA): cases have either full membership (scored 1) or non-membership (scored 0), e.g. a farmer either received improved seeds (scored 1) or did not receive improved seeds (scored 0)
- Fuzzy set (fsQCA): degrees of membership are expressed between 0 (non-membership) and 1 (full membership); above 0 and below 0.5 is more out than in; above 0.5 and up to 1 is more in than out, e.g. productivity did not increase (0); increased by 1%-10% (0.33); increased by 10%-50% (0.66); increased by more than 50% (1)
- Multi-value sets (mvQCA): cases are members of categories, e.g. education level of head of farming household is Primary (scored as 1), Secondary (scored as 2), Higher (scored as …
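The crisp and fuzzy scoring examples above can be sketched as small calibration functions. This is an illustrative sketch only: the function names are hypothetical, and the treatment of boundary values (for example, placing an increase of exactly 10% in the 0.33 band, and scoring any increase below 1% as 0.33) is an assumption, since the slide does not specify it.

```python
def crisp_received_seeds(received: bool) -> int:
    """Crisp set: 1 = received improved seeds, 0 = did not."""
    return 1 if received else 0


def fuzzy_productivity_score(pct_change: float) -> float:
    """Fuzzy set membership in 'increased productivity'.

    Bands follow the slide's example; boundary handling is assumed.
    """
    if pct_change <= 0:
        return 0.0   # productivity did not increase
    if pct_change <= 10:
        return 0.33  # increased by up to 10%
    if pct_change <= 50:
        return 0.66  # increased by more than 10% and up to 50%
    return 1.0       # increased by more than 50%


def more_in_than_out(score: float) -> bool:
    """Scores above 0.5 count as more in the set than out of it."""
    return score > 0.5


# Hypothetical productivity changes (in percent) for four farms.
for change in (-5, 8, 30, 75):
    score = fuzzy_productivity_score(change)
    print(change, score, more_in_than_out(score))
```

In a real study the thresholds would come from the benchmarking and calibration step, informed by theory and knowledge of the cases, rather than from fixed cut-offs like these.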

![[3] Qualitative Comparative Analysis (QCA): How it works](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-10.jpg)

[3] Qualitative Comparative Analysis (QCA): How it works

1. Assess whether QCA is appropriate
2. Assess whether QCA is feasible
3. Develop/refine Theory of Change and Hypothesis
4. Identify cases and outcomes of interest
5. Develop set of factors to test each outcome
6. Collect data
7. Re-assess the set of factors and cases for each outcome
8. Benchmark and calibrate the data
9. Populate the data set
10. Analyse the data
11. Analyse findings based on original TOC and Hypothesis
12. Iteration if necessary: develop new hypothesis, add conditions/factors and repeat analysis

Example data set. This is for illustration only; the type of sets used is based on an understanding of the concept behind the outcome of interest and the postulated theory.

| Cases | Factor A [improved seeds] | Factor B [training/FFS] | Factor C [weather information] | Outcome of Interest: Improved productivity |
| --- | --- | --- | --- | --- |
| Case 1 | 0 | 1 | 1 | 0 |
| Case 2 | 1 | | | 0.66 |
| Case 3 | 1 | 0 | 0 | 0.33 |
| … | … | … | … | … |
| Case N | 1 | 1 | 1 | 0.66 |

Set Membership

- Factors: 0 = Did not receive; 1 = Received (e.g. received training)
- Outcome: 0 = increased by 1%-10%; 0.33 = increased by 10%-50%; 0.66 = increased by more than 50%

Results reported as… Necessary and sufficient conditions/factors to achieve the outcome of interest
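As a rough sketch of the "analyse the data" step, the code below builds a truth table from a small crisp-set data set and computes the consistency measures commonly used in set-theoretic analysis: sufficiency as sum(min(x, y)) / sum(x) and necessity as sum(min(x, y)) / sum(y). The six cases and their scores are hypothetical and are not the cases from the slide; dedicated QCA software would also handle minimisation and coverage, which are omitted here.

```python
from collections import defaultdict

# Hypothetical crisp-set cases: (seeds, training, weather_info, outcome).
cases = {
    "A": (1, 1, 1, 1),
    "B": (1, 1, 0, 1),
    "C": (1, 0, 1, 0),
    "D": (0, 1, 1, 0),
    "E": (1, 1, 1, 1),
    "F": (0, 0, 0, 0),
}

# Truth table: each observed configuration mapped to the outcomes seen for it.
truth_table = defaultdict(list)
for seeds, training, weather, outcome in cases.values():
    truth_table[(seeds, training, weather)].append(outcome)


def sufficiency_consistency(x, y):
    """Share of membership in condition x that is also in outcome y."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(x)


def necessity_consistency(x, y):
    """Share of membership in outcome y that is also in condition x."""
    return sum(min(a, b) for a, b in zip(x, y)) / sum(y)


outcome = [c[3] for c in cases.values()]
seeds = [c[0] for c in cases.values()]
# Membership in a conjunction (seeds AND training) is the minimum of the two.
seeds_and_training = [min(c[0], c[1]) for c in cases.values()]

print(dict(truth_table))
print("seeds sufficient:", sufficiency_consistency(seeds, outcome))
print("seeds necessary:", necessity_consistency(seeds, outcome))
print("seeds AND training sufficient:",
      sufficiency_consistency(seeds_and_training, outcome))
```

In this invented data, improved seeds appear necessary but not sufficient on their own, while the combination of seeds and training is consistently sufficient, which is the kind of finding QCA reports. The same formulas apply unchanged to fuzzy scores between 0 and 1.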

![[3] Qualitative Comparative Analysis (QCA): Conclusions](http://slidetodoc.com/presentation_image_h2/49f2be73f7c39706035f45fabe6ebbb5/image-11.jpg)

[3] Qualitative Comparative Analysis (QCA): Conclusions

Strengths of QCA

1. Potentially strong on both external and internal validity, increasing generalisability and replicability
2. It is parsimonious
3. Works where it is impossible to establish a counterfactual [qualitative assessment of impact]
4. Provides clarity when testing theories of change
5. Able to evaluate and identify multiple pathways to outcomes of interest [equifinality], i.e. which packages of factors are necessary and sufficient for a successful outcome, which are most critical, and which are necessary but not sufficient
6. Combines strengths of qualitative and quantitative research methods by linking theory and evidence
7. Able to address complex settings with multiple interventions and actors, refocusing evaluation attention on context, systems and institutions

Issues with QCA

- Relatively new; few evaluators experienced in its application outside academia [mostly in Europe]
- Does not work well with large N (>50)
- Works only when there is either a detailed TOC or one can be developed to identify conditions/factors related to outcome(s)
- Requires highly specialist skills and knowledge, including of set theory and associated software
- Issues can arise in how datasets are calibrated and analysis is conducted, thus compromising results
- Requires synthesising qualitative data into scores, thus losing the richness
- Requires deep understanding of the cases, factors and context

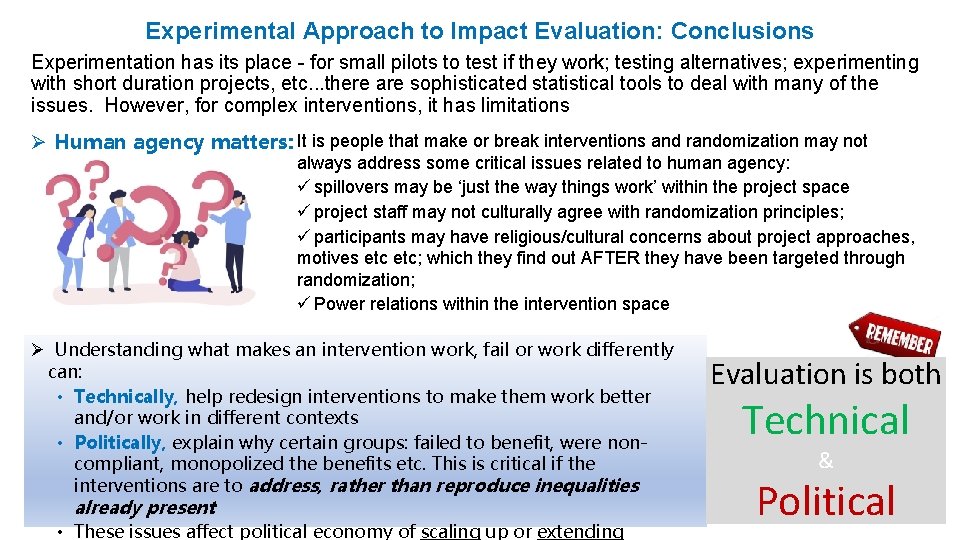

Conclusions

- Experimental IE reports on… Average effect size of the intervention on outcome(s) of interest
- QCA tells us… What are the necessary and sufficient conditions/factors to achieve the outcome of interest
- CA gives us… Evidence and argumentation from which it is reasonable to conclude whether or not the intervention has made an important contribution, and why, with some level of confidence

1. Wouldn’t it be nice if we could get the best of the three approaches?
2. Why has it taken time to get CA and QCA accepted as part of the common approaches in the humanitarian and development space?
3. Does anyone know any African-based evaluators regularly applying CA or QCA in their work?
4. Is it worthwhile to create communities of practice around CA and QCA?

Recommendations

1. Evaluators and evaluation commissioners should acknowledge that each approach has its place: no supremacy, no hierarchy of evidence, none is better than the other
2. Interventions to address climate change and build resilience are complex; evaluation of these interventions should choose approaches and designs that do justice to complexity
3. Use mixed methods genuinely, systematically and meaningfully. Some evaluation contexts can at least mix CA and QCA; others can mix experiments with CA/QCA
4. Break path dependency [experienced evaluators may be too comfortable in their ways/methods?]: involve younger/emerging evaluators willing to try new methods
5. Evaluation commissioners should allow for methodological innovation and provide resources
6. Continue conversation and work around Evaluation Made in Africa from a methodological development viewpoint [i.e. the epistemological angle of MAE]
7. Continued collaboration between academics & practitioners; SAMEA provides space for that

How do we keep the conversation going beyond the 2019 SAMEA conference?

References and other readings

- Baptist, C. and Befani, B. (2015). “Qualitative Comparative Analysis – A Rigorous Qualitative Method for Assessing Impact”. Coffey.
- Davis, B., Di Giuseppe, S., & Zezza, A. (2017). Are African households (not) leaving agriculture? Patterns of households’ income sources in rural Sub-Saharan Africa. Food Policy, 67, 153-174.
- Kabeer, N. (2019). Randomized Control Trials and Qualitative Evaluations of a Multifaceted Programme for Women in Extreme Poverty: Empirical Findings and Methodological Reflections. Journal of Human Development and Capabilities, 20(2), 197-217. DOI: 10.1080/19452829.2018.1536696
- Mayne, J. (2001). Addressing attribution through contribution analysis: using performance measures sensibly. Canadian Journal of Program Evaluation, 16(1), 1-24.
- Mayne, J. (2011). Addressing Cause and Effect in Simple and Complex Settings through Contribution Analysis. In Evaluating the Complex, R. Schwartz, K. Forss, and M. Marra (Eds.), Transaction Publishers.
- Wimbush, E., and Beeston, C. (2010). Contribution analysis: What is it and what does it offer impact evaluation. The Evaluator, 19-24.
- World Bank (2011). Impact Evaluation in Practice. https://www.worldbank.org/en/programs/sief-trustfund/publication/impact-evaluation-in-practice

- Slides: 14