FPGAs for the Masses: Hardware Acceleration without Hardware Design

- Slides: 28

FPGAs for the Masses: Hardware Acceleration without Hardware Design

David B. Thomas
dt10@doc.ic.ac.uk

Contents

- Motivation for hardware acceleration
  - Increase performance, reduce power
  - Types of hardware accelerator
- Research achievements
  - Accelerated Finance research group
  - Research direction and publications
- Highlighted contribution: Contessa
  - Domain specific language for Monte-Carlo
  - Push-button compilation to hardware
- Conclusion

Motivation

- Increasing demand for High Performance Computing
  - Everyone wants more compute-power
  - Finer time-steps; larger data-sets; better models
- Decreasing single-threaded performance
  - Emphasis on multi-core CPUs and parallelism
  - Do computational biologists need to learn PThreads?
- Increasing focus on power and space
  - Boxes are cheap: 16 node clusters are very affordable
  - Where do you put them? Who is paying for power?
- How can we use hardware acceleration to help?

Types of Hardware Accelerator

- GPU: Graphics Processing Unit
  - Many-core - 30 SIMD processors per device
  - High bandwidth, low complexity memory – no caches
- MPPA: Massively Parallel Processor Array
  - Grid of simple processors
  - 300 tiny RISC CPUs
  - Point-to-point connections on 2-D grid
- FPGA: Field Programmable Gate Array
  - Fine-grained grid of logic and small RAMs
  - Build whatever you want

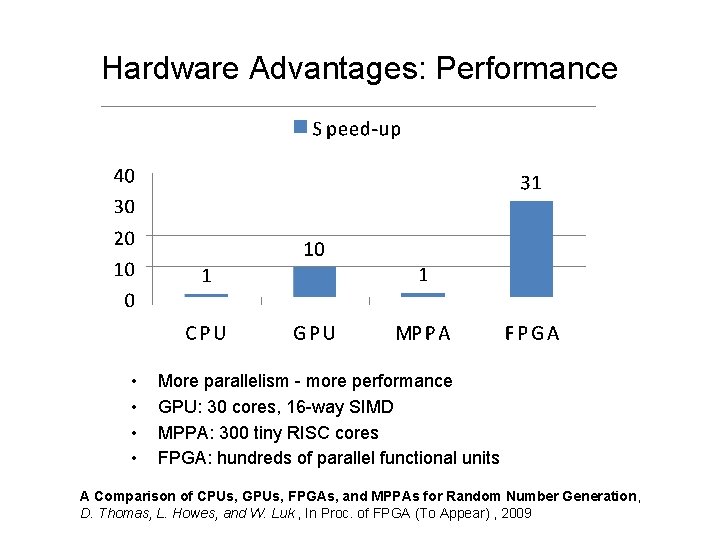

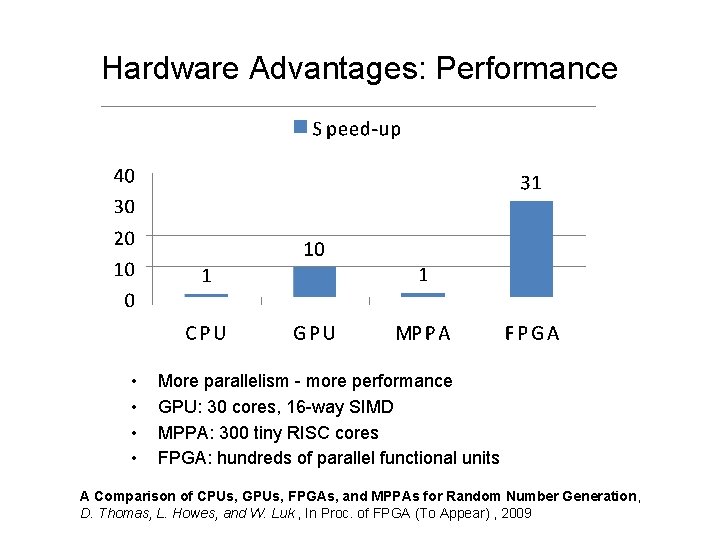

Hardware Advantages: Performance

- More parallelism - more performance
- GPU: 30 cores, 16-way SIMD
- MPPA: 300 tiny RISC cores
- FPGA: hundreds of parallel functional units

A Comparison of CPUs, GPUs, FPGAs, and MPPAs for Random Number Generation, D. Thomas, L. Howes, and W. Luk, in Proc. of FPGA (to appear), 2009

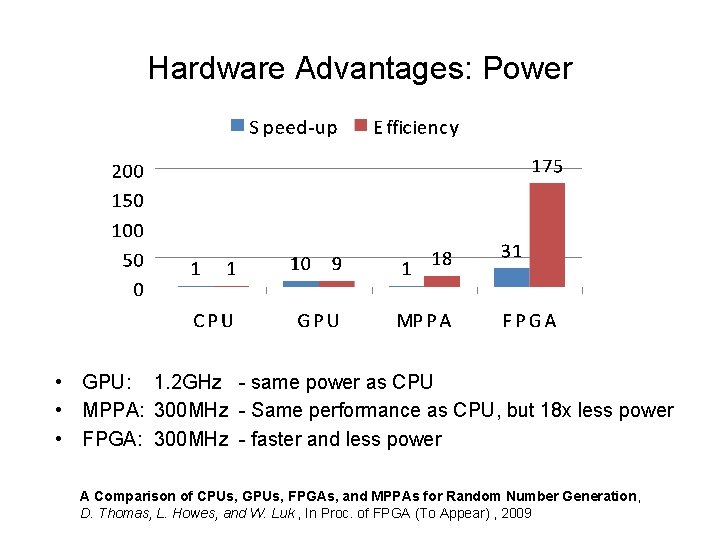

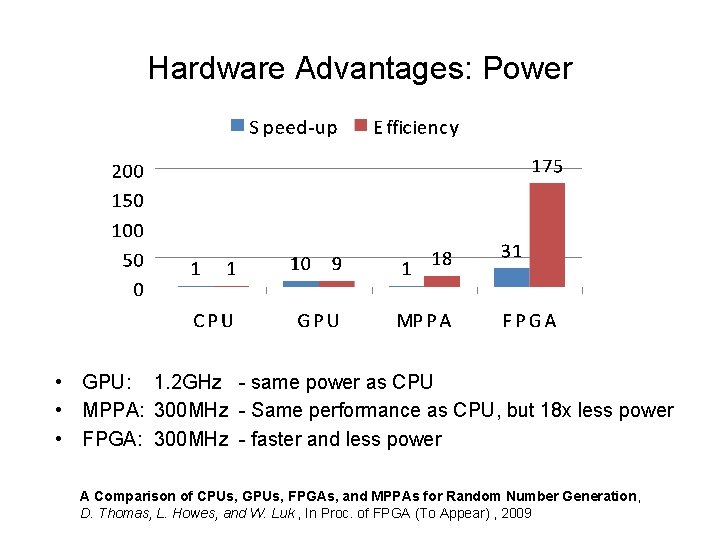

Hardware Advantages: Power

- GPU: 1.2 GHz - same power as CPU
- MPPA: 300 MHz - same performance as CPU, but 18x less power
- FPGA: 300 MHz - faster and less power

A Comparison of CPUs, GPUs, FPGAs, and MPPAs for Random Number Generation, D. Thomas, L. Howes, and W. Luk, in Proc. of FPGA (to appear), 2009

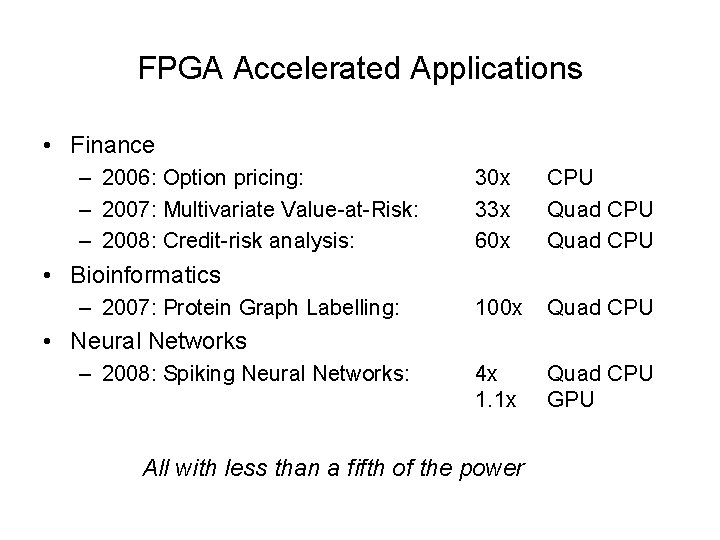

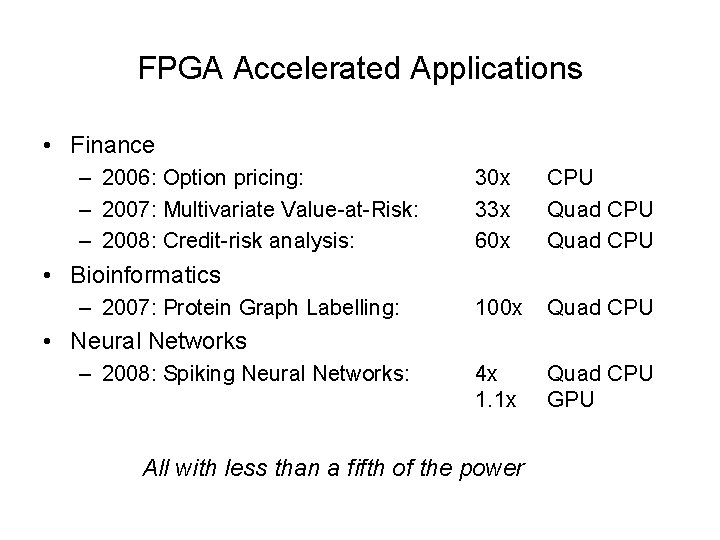

FPGA Accelerated Applications

- Finance
  - 2006: Option pricing
  - 2007: Multivariate Value-at-Risk
  - 2008: Credit-risk analysis
- Bioinformatics
  - 2007: Protein Graph Labelling
- Neural Networks
  - 2008: Spiking Neural Networks
- Speed-up figures, in slide order: 30x, 33x, 60x (CPU, Quad CPU); 100x (Quad CPU); 4x, 1.1x (Quad CPU, GPU)
- All with less than a fifth of the power

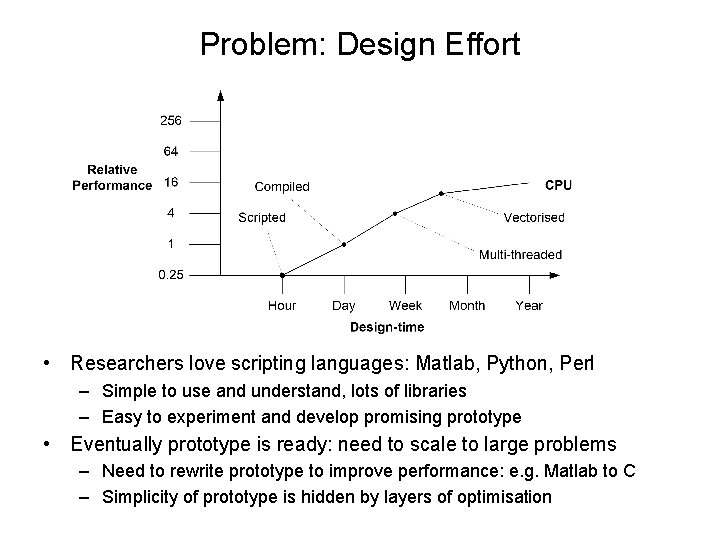

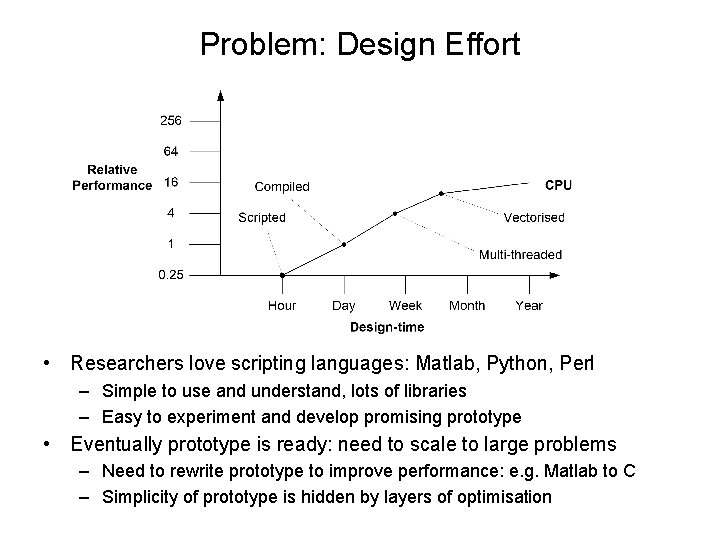

Problem: Design Effort

- Researchers love scripting languages: Matlab, Python, Perl
  - Simple to use and understand, lots of libraries
  - Easy to experiment and develop a promising prototype
- Eventually the prototype is ready: need to scale to large problems
  - Need to rewrite prototype to improve performance: e.g. Matlab to C
  - Simplicity of prototype is hidden by layers of optimisation

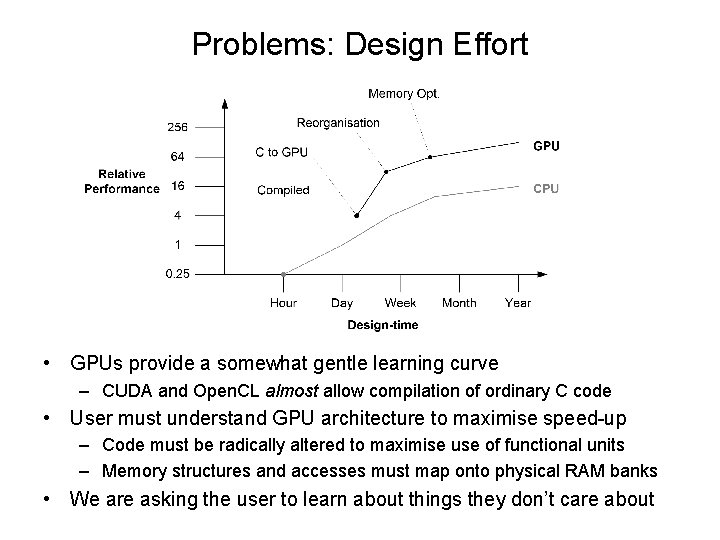

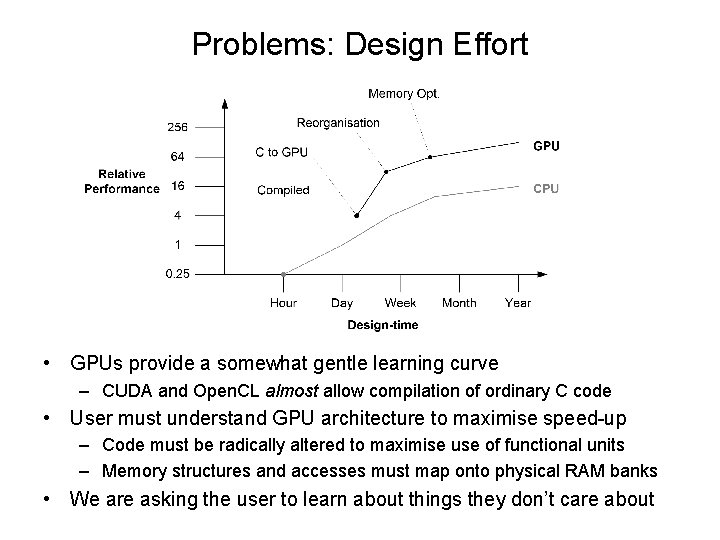

Problems: Design Effort

- GPUs provide a somewhat gentle learning curve
  - CUDA and OpenCL almost allow compilation of ordinary C code
- User must understand GPU architecture to maximise speed-up
  - Code must be radically altered to maximise use of functional units
  - Memory structures and accesses must map onto physical RAM banks
- We are asking the user to learn about things they don’t care about

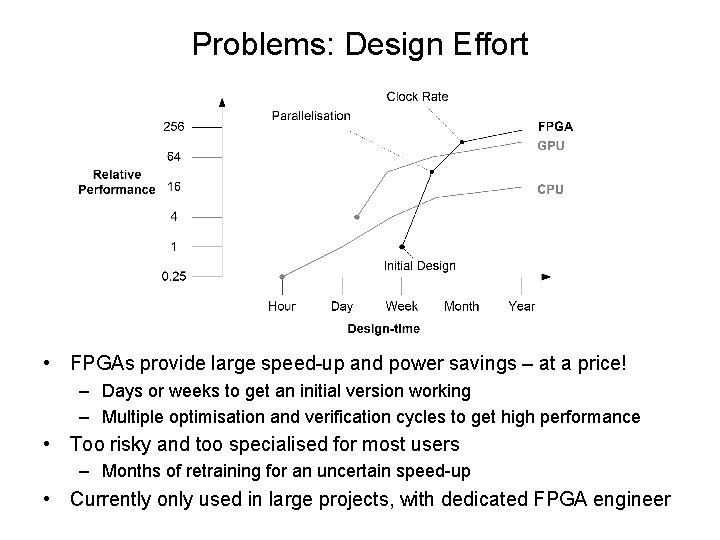

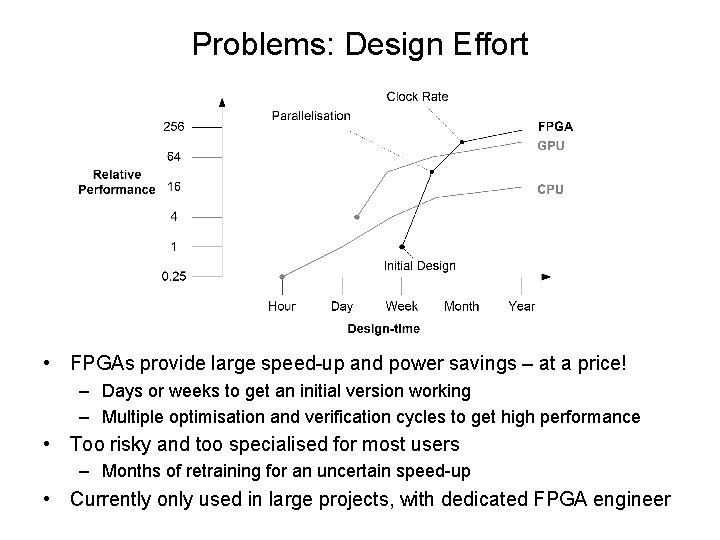

Problems: Design Effort

- FPGAs provide large speed-up and power savings – at a price!
  - Days or weeks to get an initial version working
  - Multiple optimisation and verification cycles to get high performance
- Too risky and too specialised for most users
  - Months of retraining for an uncertain speed-up
- Currently only used in large projects, with a dedicated FPGA engineer

Goal: FPGAs for the Masses

- Accelerate niche applications with limited user-base
  - Don’t have to wait for traditional “heroic” optimisation
- Single-source description
  - The prototype code is the final code
- Encourage experimentation
  - Give users freedom to tweak and modify
- Target platforms at multiple scales
  - Individual user; Research group; Enterprise
- Use domain specific knowledge about applications
  - Identify bottlenecks: optimise them
  - Identify design patterns: automate them
  - Don’t try to do general purpose “C to hardware”

Accelerated Finance Research Project

- Independent sub-group in Computer Systems section
  - EPSRC project: 3 years, £670K
    - “Optimising hardware acceleration for financial computation”
  - Team of four: Me, Wayne Luk, 2 PhD students
- Active engagement with financial institutes
  - Six month feasibility study for Morgan Stanley
  - PhD student funded by J.P. Morgan
- Established a lead in financial computing using FPGAs
  - 7 journal papers, 17 refereed conference papers
  - Book chapter in “GPU Gems 3”

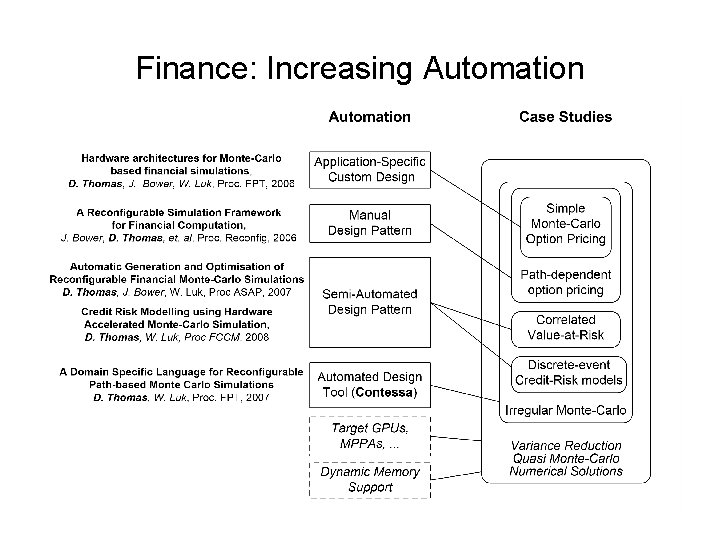

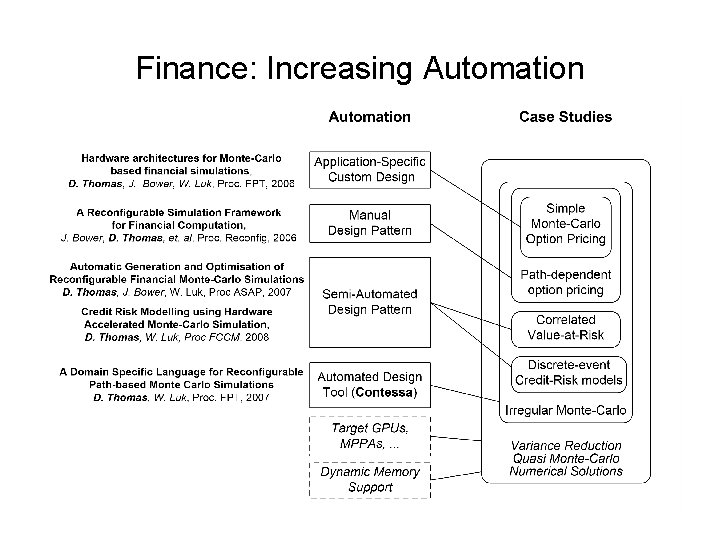

Finance: Increasing Automation

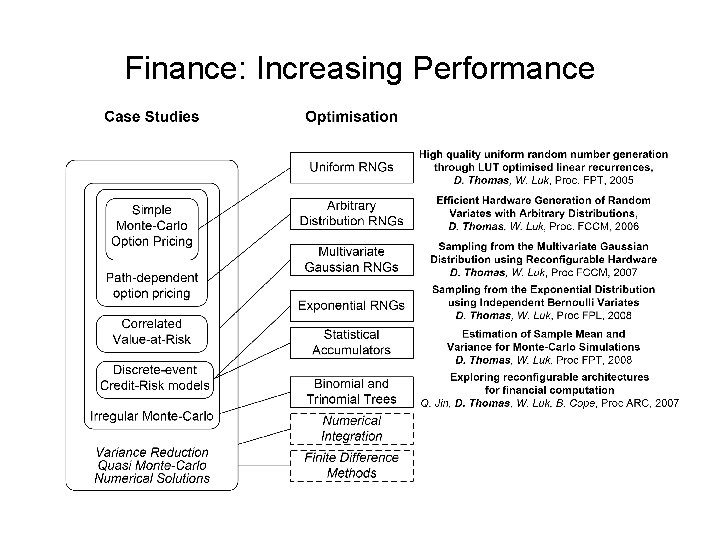

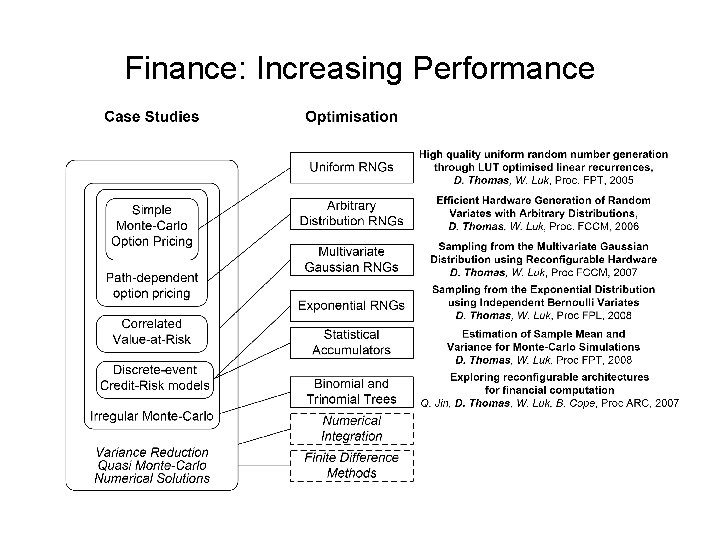

Finance: Increasing Performance

Contessa: Overall Goals

- Language for Monte-Carlo applications
- One description for all platforms
  - FPGA family independent
  - Hardware accelerator card independent
- “Good” performance across all platforms
- No hardware knowledge needed
- Quick to compile
- It Just Works: no verification against software

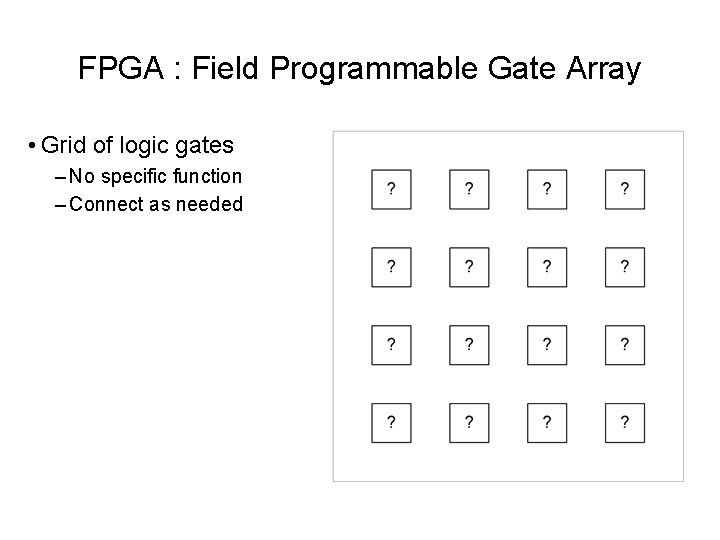

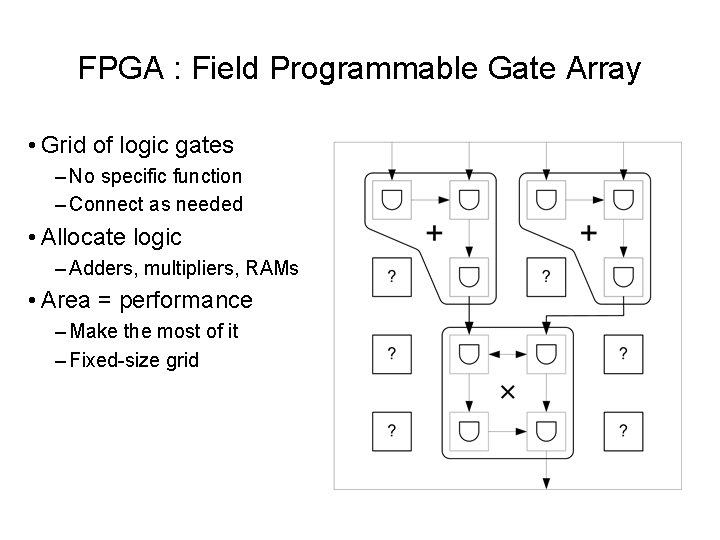

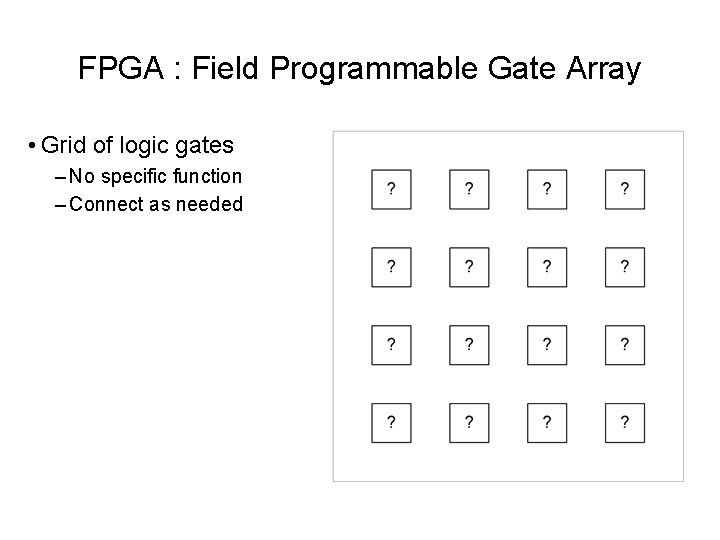

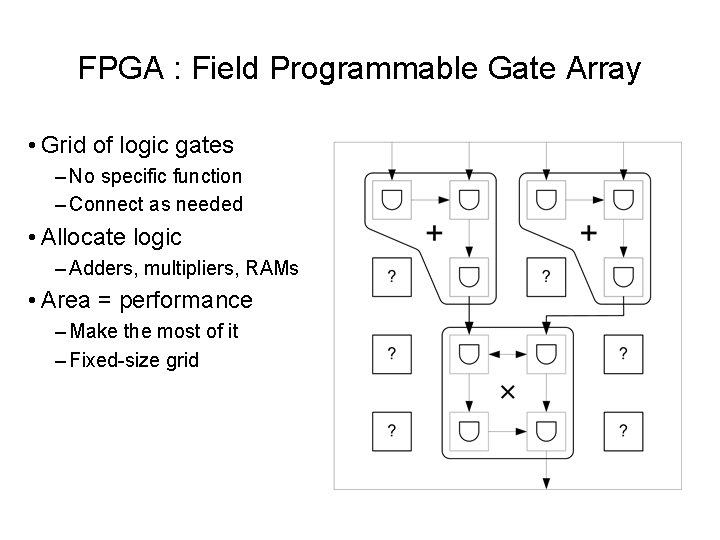

FPGA: Field Programmable Gate Array

- Grid of logic gates
  - No specific function
  - Connect as needed

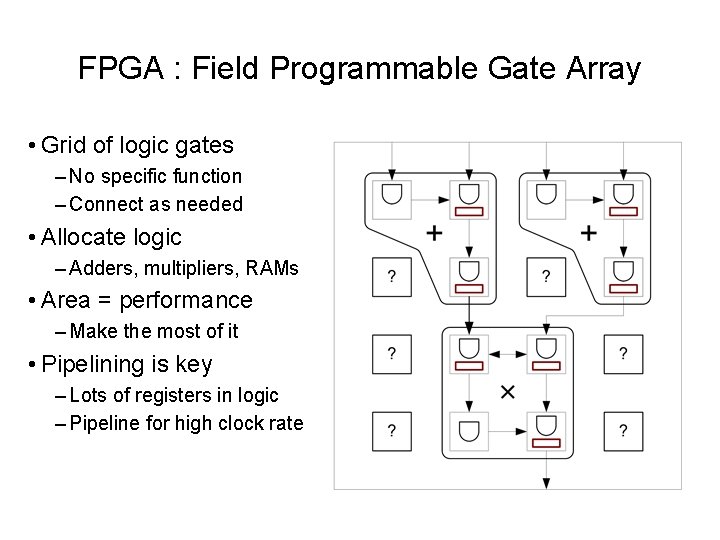

FPGA: Field Programmable Gate Array

- Grid of logic gates
  - No specific function
  - Connect as needed
- Allocate logic
  - Adders, multipliers, RAMs
- Area = performance
  - Make the most of it
  - Fixed-size grid

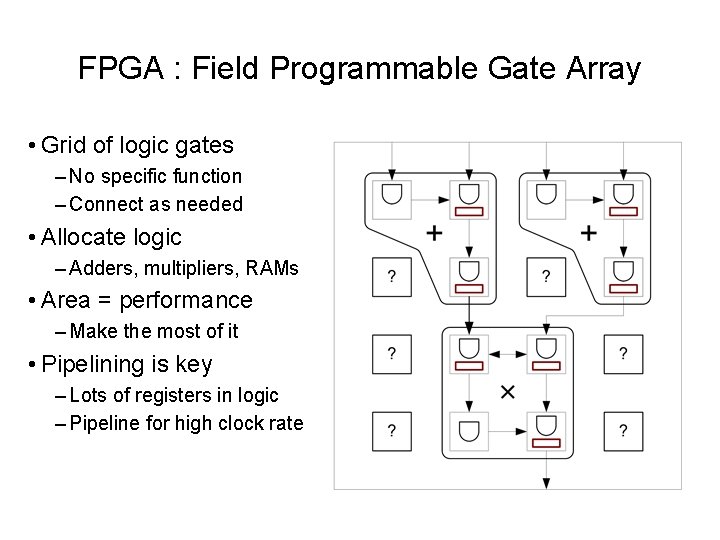

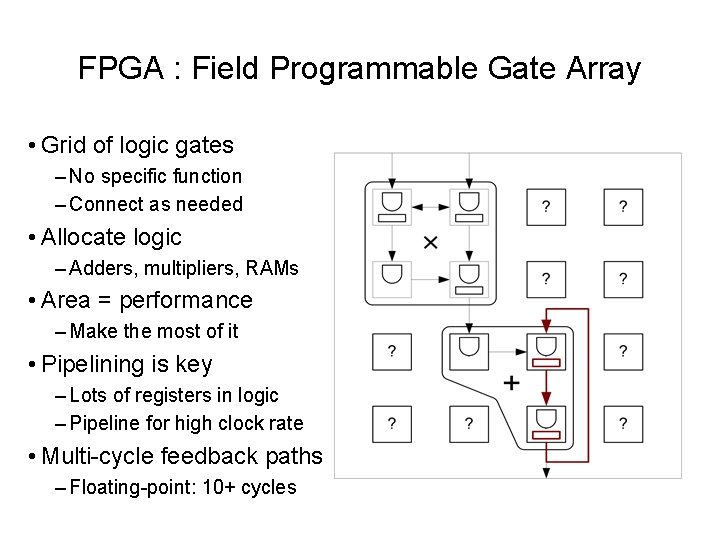

FPGA: Field Programmable Gate Array

- Grid of logic gates
  - No specific function
  - Connect as needed
- Allocate logic
  - Adders, multipliers, RAMs
- Area = performance
  - Make the most of it
- Pipelining is key
  - Lots of registers in logic
  - Pipeline for high clock rate

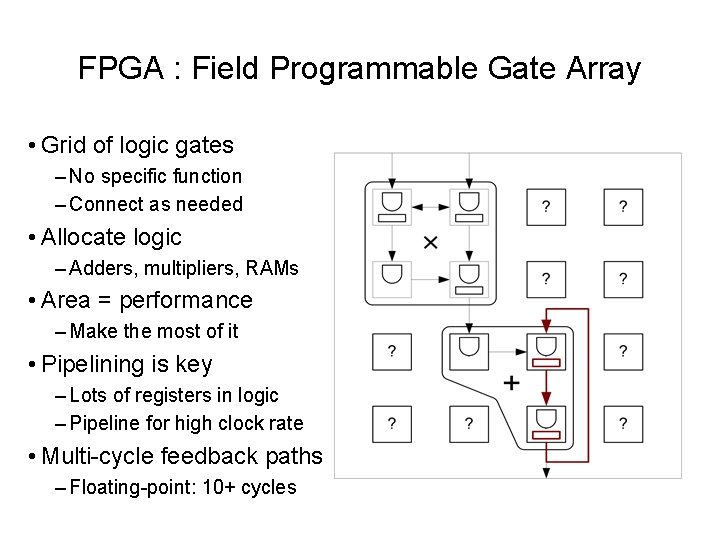

FPGA: Field Programmable Gate Array

- Grid of logic gates
  - No specific function
  - Connect as needed
- Allocate logic
  - Adders, multipliers, RAMs
- Area = performance
  - Make the most of it
- Pipelining is key
  - Lots of registers in logic
  - Pipeline for high clock rate
- Multi-cycle feedback paths
  - Floating-point: 10+ cycles
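To see why multi-cycle feedback paths matter, the following is a minimal Python sketch rather than anything taken from the slides. It assumes a single pipelined unit that can accept one operation per cycle, and threads whose next operation depends on their previous result arriving `latency` cycles later. The function name and the simple first-ready scheduling policy are illustrative assumptions.

```python
def pipeline_utilisation(latency, threads, cycles=10_000):
    """Fraction of cycles in which a new operation enters the pipeline."""
    # ready_at[i] is the first cycle at which thread i may issue its next
    # operation, i.e. when the result it depends on has left the pipeline.
    ready_at = [0] * threads
    issued = 0
    for cycle in range(cycles):
        for i in range(threads):
            if ready_at[i] <= cycle:
                ready_at[i] = cycle + latency  # result returns `latency` cycles later
                issued += 1
                break  # the unit accepts only one operation per cycle
    return issued / cycles


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(n, "threads:", pipeline_utilisation(latency=10, threads=n))
```

Under these assumptions a single thread keeps a 10-cycle pipeline only one tenth busy, and the unit is fully used once there are at least as many independent threads as pipeline stages in the feedback loop.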

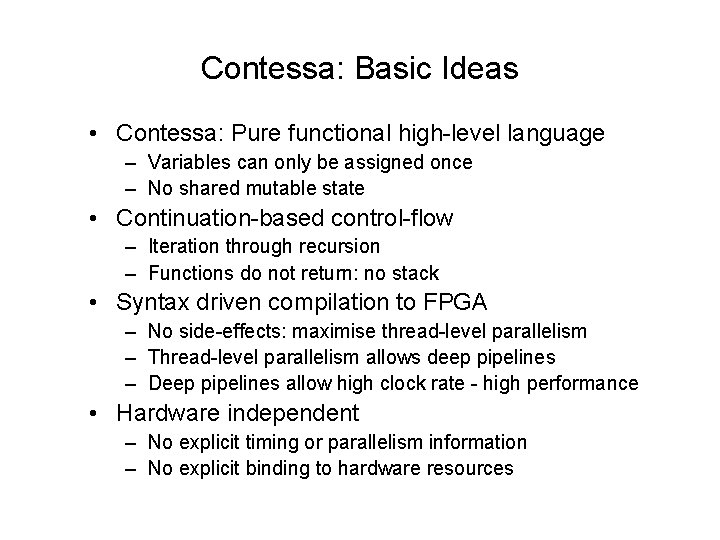

Contessa: Basic Ideas

- Contessa: Pure functional high-level language
  - Variables can only be assigned once
  - No shared mutable state
- Continuation-based control-flow
  - Iteration through recursion
  - Functions do not return: no stack
- Syntax driven compilation to FPGA
  - No side-effects: maximise thread-level parallelism
  - Thread-level parallelism allows deep pipelines
  - Deep pipelines allow high clock rate - high performance
- Hardware independent
  - No explicit timing or parallelism information
  - No explicit binding to hardware resources
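The idea of iteration through recursion without a stack can be mimicked in ordinary software. The sketch below is Python, not Contessa, and the names `sum_loop`, `sum_step` and `run` are made up for illustration. It contrasts a loop that mutates its variables with a single-assignment version in which each iteration hands back the next call as a (target, arguments) pair.

```python
def sum_loop(n):
    # Imperative version: `total` and `i` are reassigned on every iteration.
    total = 0
    for i in range(n):
        total += i
    return total


def sum_step(i, n, total):
    # Single-assignment version: each "iteration" receives fresh argument
    # values and mutates nothing. Rather than calling itself and returning,
    # it hands back the next continuation as (target, arguments).
    if i < n:
        return (sum_step, (i + 1, n, total + i))
    return (None, (total,))  # no successor: this thread is finished


def run(target, args):
    # Driver loop: invokes continuations one after another, so the call
    # stack never grows no matter how many iterations are performed.
    while target is not None:
        target, args = target(*args)
    return args[0]


if __name__ == "__main__":
    print(sum_loop(5), run(sum_step, (0, 5, 0)))
```

Because `sum_step` never waits for a callee to return, the complete state of the computation at any point is just the pair it hands back, which is the property that allows such a state to be stored or moved.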

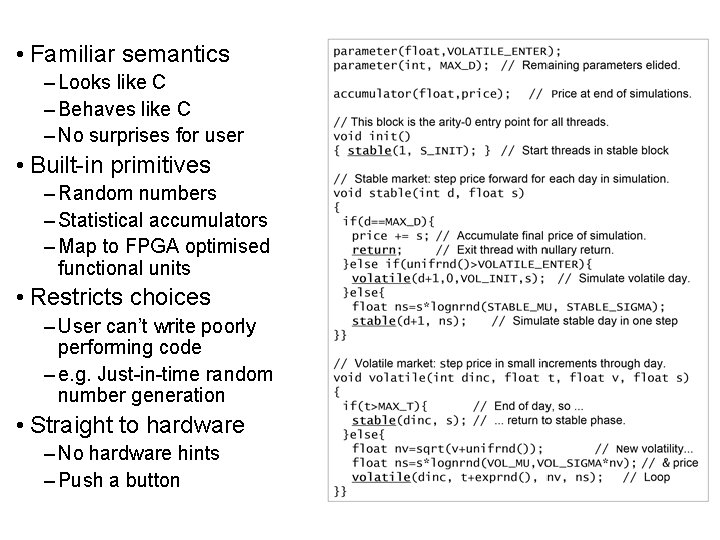

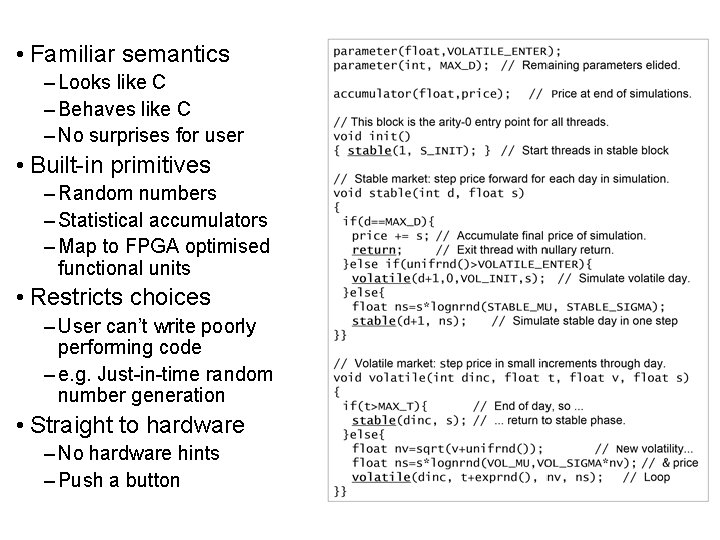

- Familiar semantics
  - Looks like C
  - Behaves like C
  - No surprises for user
- Built-in primitives
  - Random numbers
  - Statistical accumulators
  - Map to FPGA optimised functional units
- Restricts choices
  - User can’t write poorly performing code
  - e.g. Just-in-time random number generation
- Straight to hardware
  - No hardware hints
  - Push a button

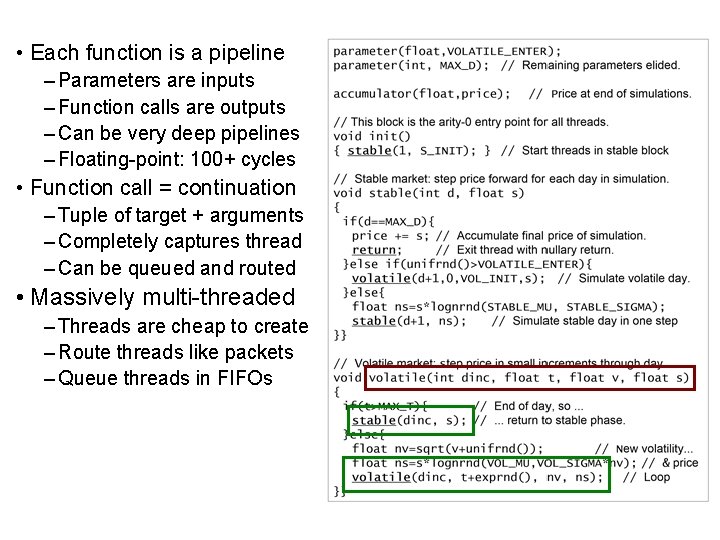

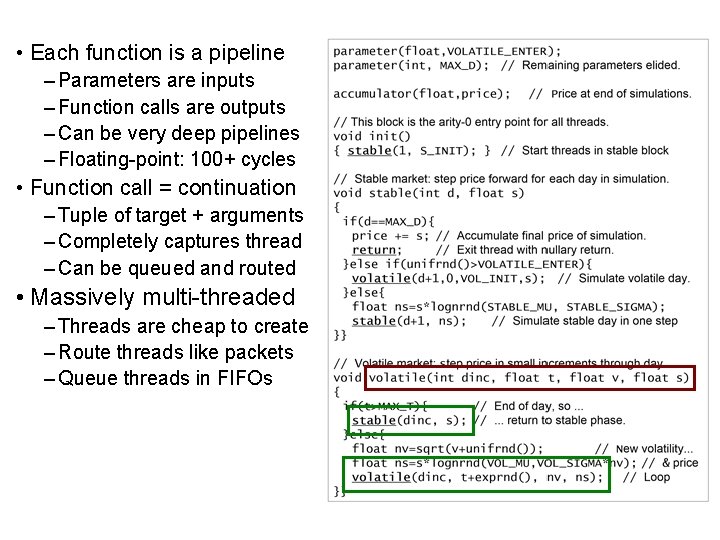

- Each function is a pipeline
  - Parameters are inputs
  - Function calls are outputs
  - Can be very deep pipelines
  - Floating-point: 100+ cycles
- Function call = continuation
  - Tuple of target + arguments
  - Completely captures thread
  - Can be queued and routed
- Massively multi-threaded
  - Threads are cheap to create
  - Route threads like packets
  - Queue threads in FIFOs
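A software analogy may help make the "function call = continuation" idea concrete. The Python sketch below assumes one FIFO per function and a simple round-robin scheduler. The function bodies, the names `simulate`, `step` and `acc`, and the scheduling policy are all illustrative assumptions, not a description of how the Contessa compiler lays out hardware.

```python
from collections import deque


def simulate(num_threads, num_steps):
    totals = []

    def step(t, s):
        # Emits a continuation: (name of target function, its arguments).
        if t < num_steps:
            return ("step", (t + 1, s + 0.5))
        return ("acc", (s,))

    def acc(s):
        totals.append(s)
        return None  # no continuation: the thread terminates here

    functions = {"step": step, "acc": acc}
    fifos = {name: deque() for name in functions}

    # Creating a thread is just pushing an argument tuple into a FIFO.
    for i in range(num_threads):
        fifos["step"].append((0, float(i)))

    # Each pass lets every function consume at most one queued thread and
    # routes the resulting continuation to its target FIFO, like a packet.
    while any(fifos.values()):
        for name, fifo in fifos.items():
            if fifo:
                continuation = functions[name](*fifo.popleft())
                if continuation is not None:
                    target, next_args = continuation
                    fifos[target].append(next_args)
    return totals


if __name__ == "__main__":
    print(simulate(num_threads=3, num_steps=4))
```

Each queued tuple is the whole thread state, so many threads can be in flight at once without any shared stack.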

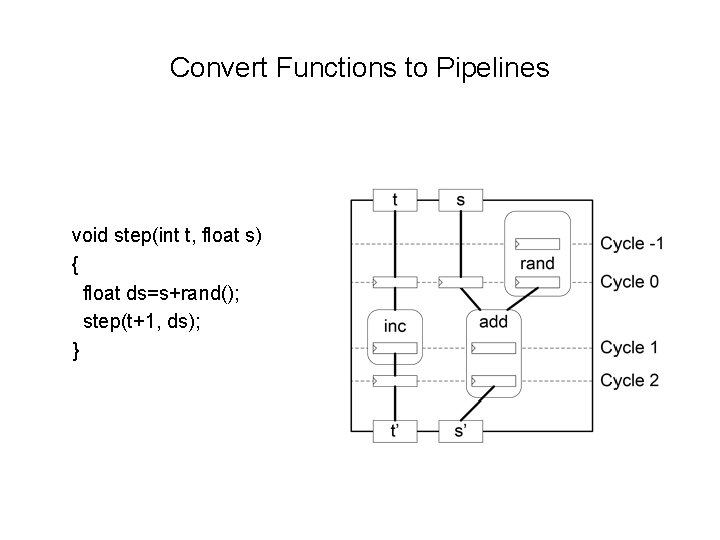

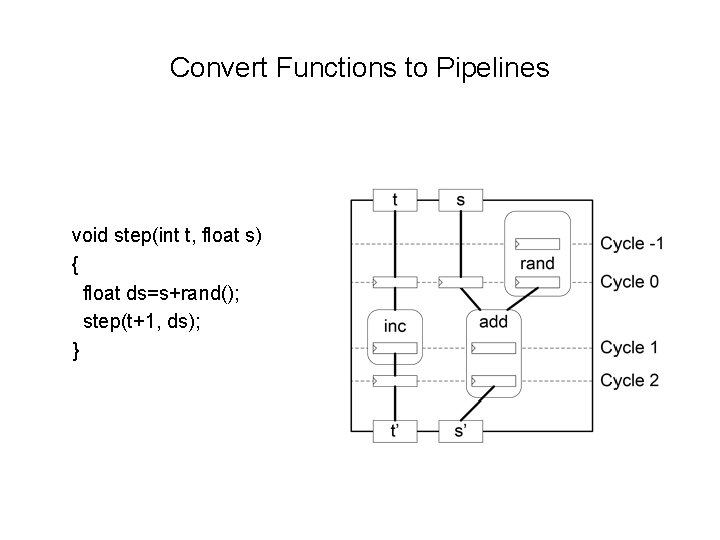

Convert Functions to Pipelines

    void step(int t, float s) {
        float ds = s + rand();
        step(t + 1, ds);
    }
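As a rough cycle-level picture of what turning `step` into a pipeline could mean, here is a Python sketch. The three-stage split, the dictionary used as a thread record, and the helper names `build_step_stages`, `clock` and `run` are all assumptions made for illustration. Where the stage boundaries fall in real hardware would be decided by the compiler.

```python
import random


def build_step_stages(rng):
    # Assumed three-stage split of step(t, s); each stage is combinational
    # logic that transforms the thread record passing through it.
    return [
        lambda th: dict(th, r=rng.random()),           # stage 0: draw rand()
        lambda th: dict(th, ds=th["s"] + th["r"]),     # stage 1: ds = s + rand()
        lambda th: {"t": th["t"] + 1, "s": th["ds"]},  # stage 2: emit step(t+1, ds)
    ]


def clock(stages, regs, new_input):
    # regs[i] holds the thread waiting at the input of stage i (None = bubble).
    # In one clock cycle every stage works on a different thread.
    outputs = [None if th is None else stage(th) for stage, th in zip(stages, regs)]
    return [new_input] + outputs[:-1], outputs[-1]


def run(inputs, rng):
    stages = build_step_stages(rng)
    regs = [None] * len(stages)
    emitted = []
    # Feed one thread per cycle, then idle cycles to drain the pipeline.
    for th in list(inputs) + [None] * len(stages):
        regs, out = clock(stages, regs, th)
        if out is not None:
            emitted.append(out)
    return emitted


if __name__ == "__main__":
    threads = [{"t": 0, "s": 10.0}, {"t": 5, "s": 20.0}]
    print(run(threads, random.Random(1)))
```

The parameters enter at one end and the arguments of the outgoing call leave at the other, with a new thread accepted every cycle regardless of how many stages the body needs.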

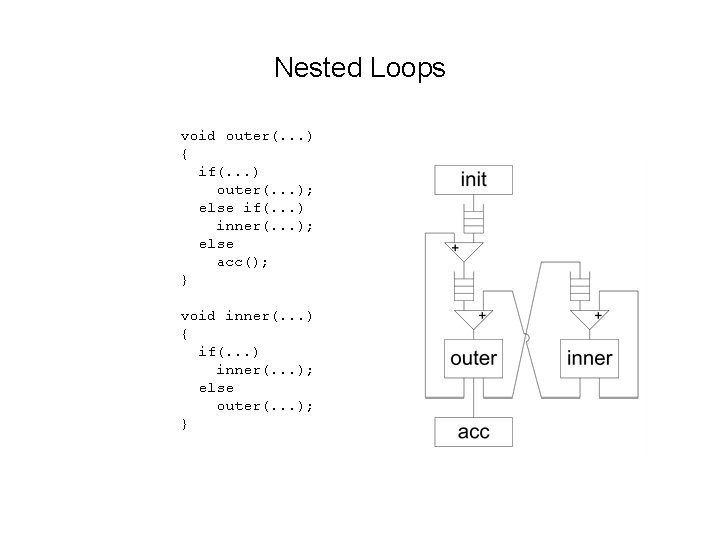

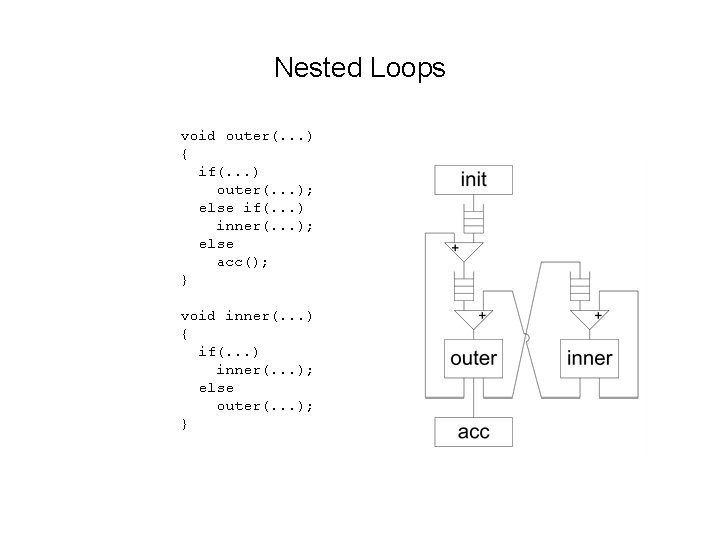

Nested Loops

    void outer(...) {
        if (...) outer(...);
        else if (...) inner(...);
        else acc();
    }

    void inner(...) {
        if (...) inner(...);
        else outer(...);
    }
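The elided conditions and arguments on the slide are not reproduced here. As one hypothetical instance of the same shape, the Python sketch below expresses a doubly nested summation as two mutually recursive continuation functions. The loop bounds, the `i * j` body, and the driver `run` are invented for illustration, and this version omits the slide's branch in which `outer` calls itself directly.

```python
def outer(i, rows, cols, total):
    # Outer loop header: either enter the inner loop for row i, or finish.
    if i < rows:
        return (inner, (i, 0, rows, cols, total))
    return (acc, (total,))


def inner(i, j, rows, cols, total):
    # Inner loop: iterate over j, then hand control back to the outer loop.
    if j < cols:
        return (inner, (i, j + 1, rows, cols, total + i * j))
    return (outer, (i + 1, rows, cols, total))


def acc(total):
    # Final accumulation point: no successor, so the thread ends here.
    return (None, (total,))


def run(target, args):
    # Follows continuations until a function names no successor.
    while target is not None:
        target, args = target(*args)
    return args[0]


if __name__ == "__main__":
    print(run(outer, (0, 3, 4, 0)))
```

Control simply bounces between the two functions, so loop nesting becomes a cycle in the graph of functions rather than a nested structure inside one of them.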

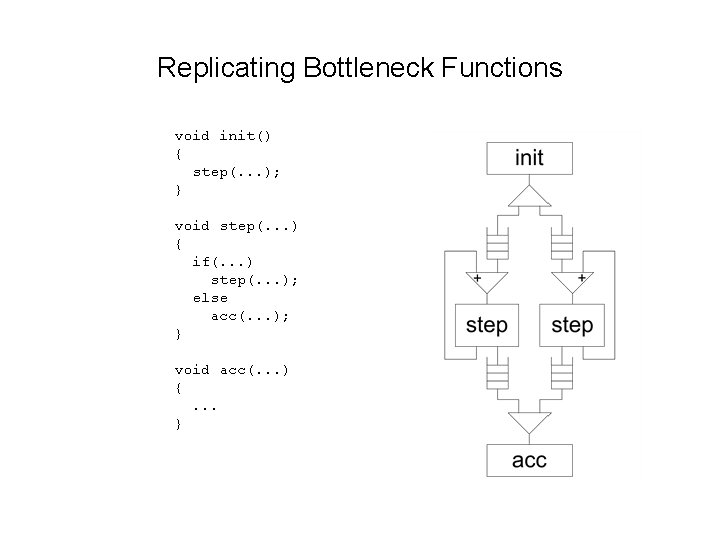

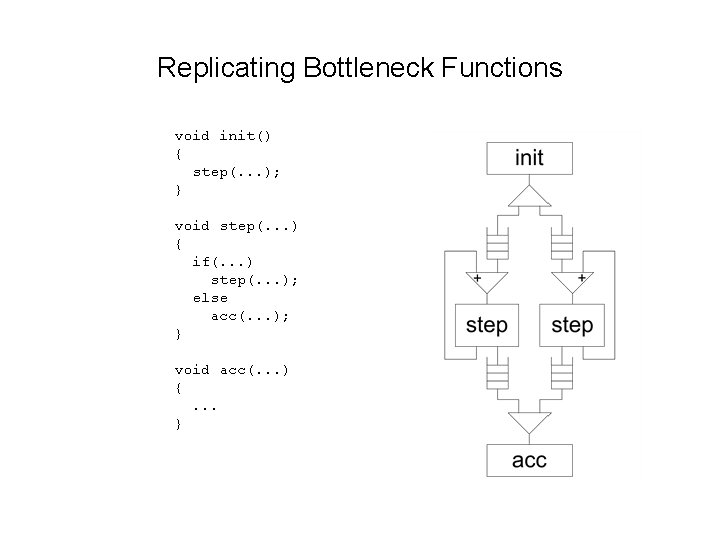

Replicating Bottleneck Functions

    void init() {
        step(...);
    }

    void step(...) {
        if (...) step(...);
        else acc(...);
    }

    void acc(...) { ... }
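A back-of-envelope model shows why replicating `step` might pay off. This Python sketch assumes each replica accepts one thread per cycle, that every thread visits `step` a fixed number of times, and that `init` and `acc` cost nothing. The function name and all of these simplifications are assumptions for illustration, not measurements.

```python
from collections import deque


def cycles_to_finish(num_threads, steps_per_thread, replicas):
    # pending holds, for each live thread, how many step() visits remain.
    pending = deque([steps_per_thread] * num_threads)
    cycles = 0
    while pending:
        cycles += 1
        # Each replica takes at most one thread from the shared queue per
        # cycle; the count is fixed up front so that a thread re-queued in
        # this cycle is not served twice in the same cycle.
        for _ in range(min(replicas, len(pending))):
            remaining = pending.popleft() - 1
            if remaining > 0:
                pending.append(remaining)  # loops back into step()
            # otherwise the thread leaves for acc(), modelled as free
    return cycles


if __name__ == "__main__":
    for k in (1, 4, 16):
        print(k, "replicas:", cycles_to_finish(8, 10, k))
```

In this toy model the cycle count falls in proportion to the number of replicas until there are more replicas than threads, after which extra copies sit idle.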

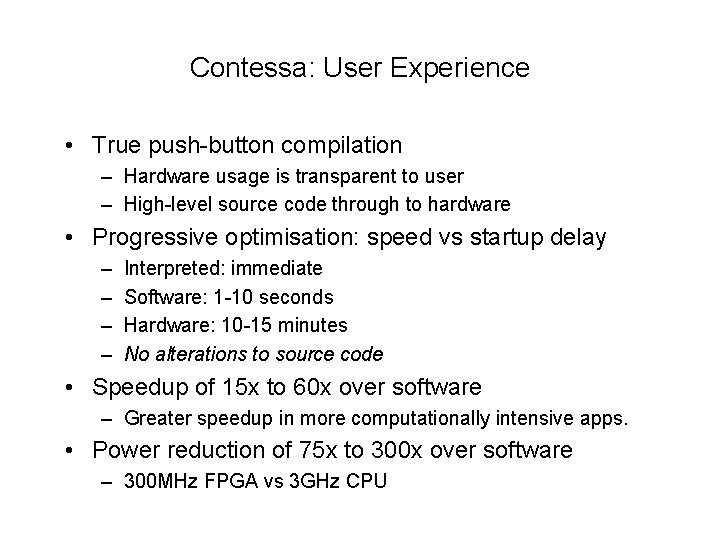

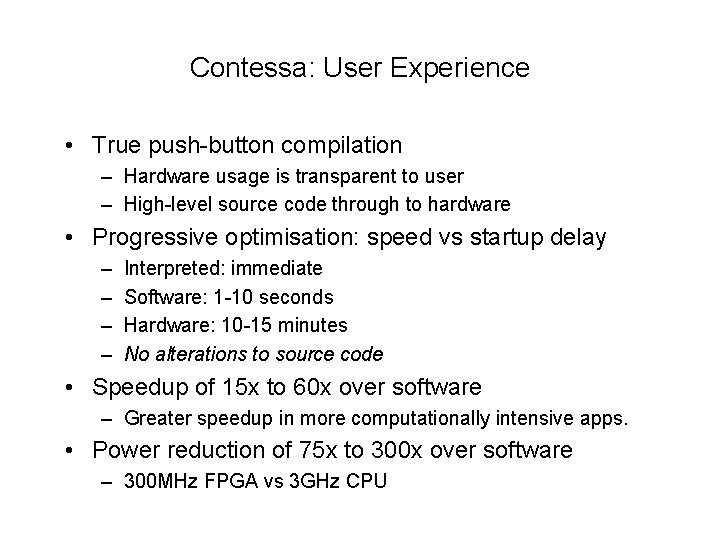

Contessa: User Experience

- True push-button compilation
  - Hardware usage is transparent to user
  - High-level source code through to hardware
- Progressive optimisation: speed vs startup delay
  - Interpreted: immediate
  - Software: 1-10 seconds
  - Hardware: 10-15 minutes
  - No alterations to source code
- Speedup of 15x to 60x over software
  - Greater speedup in more computationally intensive apps.
- Power reduction of 75x to 300x over software
  - 300 MHz FPGA vs 3 GHz CPU

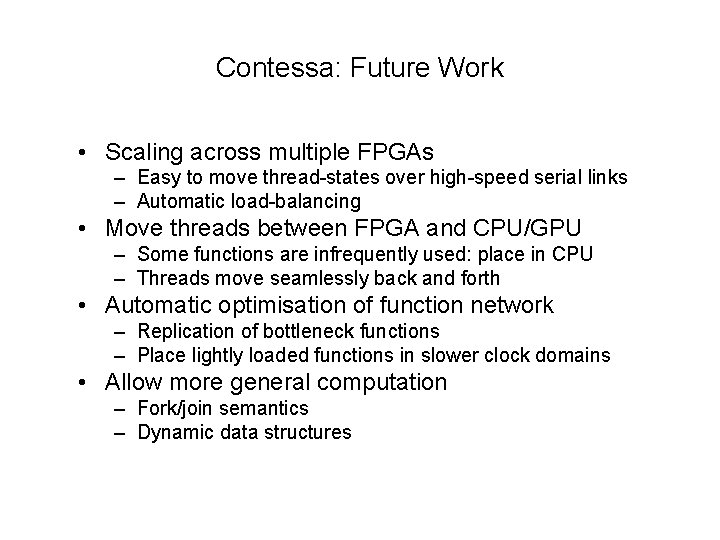

Contessa: Future Work

- Scaling across multiple FPGAs
  - Easy to move thread-states over high-speed serial links
  - Automatic load-balancing
- Move threads between FPGA and CPU/GPU
  - Some functions are infrequently used: place in CPU
  - Threads move seamlessly back and forth
- Automatic optimisation of function network
  - Replication of bottleneck functions
  - Place lightly loaded functions in slower clock domains
- Allow more general computation
  - Fork/join semantics
  - Dynamic data structures

Conclusion

- Goal: hardware acceleration of applications
  - Increase performance, reduce power
  - Make hardware acceleration more widely available
- Achievements: accelerated finance on FPGAs
  - Three year EPSRC project: 25 papers (so far)
  - Speedups of 100x over quad CPU, using less power
  - Domain specific language for financial Monte-Carlo
- Future: ease-of-use and generality
  - Target more platforms, hybrids: CPU+FPGA+GPU
  - DSLs for other domains: bioinformatics, neural nets