Foundations of Secure Computation, Arpita Patra

- Slides: 15

Foundations of Secure Computation Arpita Patra © Arpita Patra

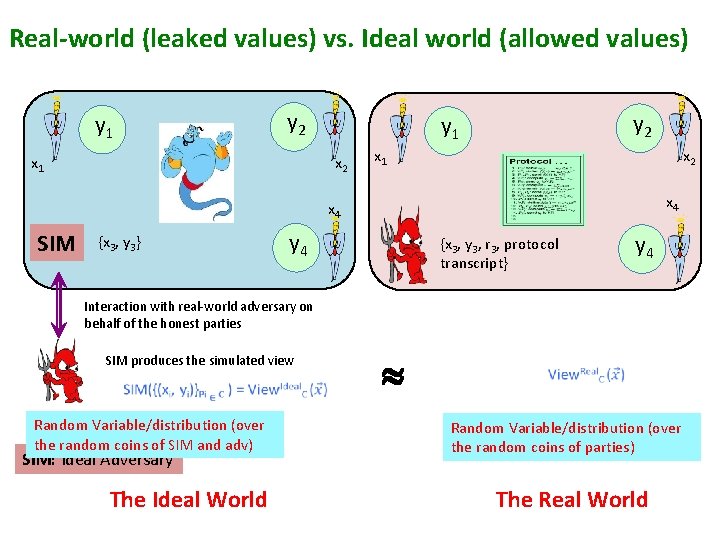

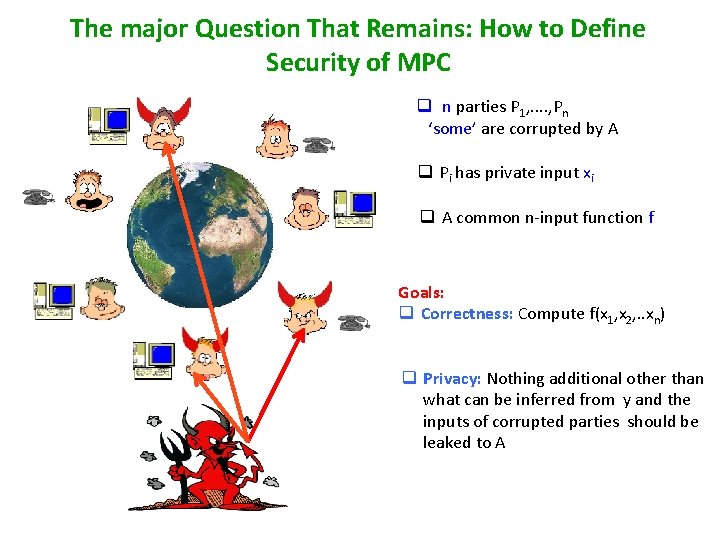

The Major Question That Remains: How to Define Security of MPC

- $n$ parties $P_1, \ldots, P_n$; 'some' are corrupted by an adversary $A$
- $P_i$ has private input $x_i$
- A common $n$-input function $f$

Goals:

- Correctness: compute $y = f(x_1, x_2, \ldots, x_n)$
- Privacy: nothing beyond what can be inferred from $y$ and the inputs of the corrupted parties should be leaked to $A$
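The privacy goal allows whatever follows from the output and the corrupted inputs. The sketch below illustrates that allowed leakage by brute force for a sum function over a tiny domain. The helper name `consistent_honest_inputs`, the domain, and the choice of the sum function are assumptions made for illustration, not part of the slides.

```python
from itertools import product

def consistent_honest_inputs(f, corrupted, y, n, domain):
    """List every assignment of honest inputs that is consistent with
    the corrupted parties' inputs and the output y.

    corrupted: dict {party_index: input} for the corrupted parties.
    """
    honest = [i for i in range(n) if i not in corrupted]
    matches = []
    for values in product(domain, repeat=len(honest)):
        xs = [None] * n
        for i, x in corrupted.items():
            xs[i] = x
        for i, x in zip(honest, values):
            xs[i] = x
        if f(xs) == y:
            matches.append(dict(zip(honest, values)))
    return matches

domain = range(4)            # illustrative input domain {0, 1, 2, 3}
total = lambda xs: sum(xs)   # illustrative function f

# Two parties, P0 corrupted with input 1, output y = 3:
# the honest input is fully determined by the allowed values.
two_party = consistent_honest_inputs(total, {0: 1}, 3, n=2, domain=domain)

# Three parties, same corrupted input and output:
# only the sum of the honest inputs is determined.
three_party = consistent_honest_inputs(total, {0: 1}, 3, n=3, domain=domain)
```

With two parties the output alone pins down the honest input, and no protocol can hide it; with three parties several honest input pairs remain possible. In both cases this is leakage the definition permits, because it is inferable from $y$ and the corrupted inputs.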

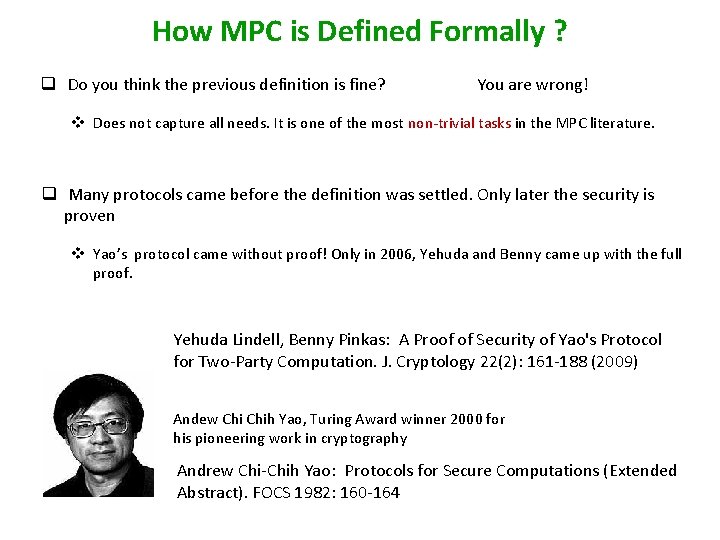

How Is MPC Defined Formally?

- Do you think the previous definition is fine? You are wrong!
  - It does not capture all needs. Defining security is one of the most non-trivial tasks in the MPC literature.
- Many protocols came before the definition was settled; their security was proven only later.
  - Yao's protocol came without proof! A full proof was given only much later, by Yehuda Lindell and Benny Pinkas.

Andrew Chi-Chih Yao, Turing Award winner (2000) for his pioneering work in cryptography.

References:

- Andrew Chi-Chih Yao: Protocols for Secure Computations (Extended Abstract). FOCS 1982: 160-164
- Yehuda Lindell, Benny Pinkas: A Proof of Security of Yao's Protocol for Two-Party Computation. J. Cryptology 22(2): 161-188 (2009)

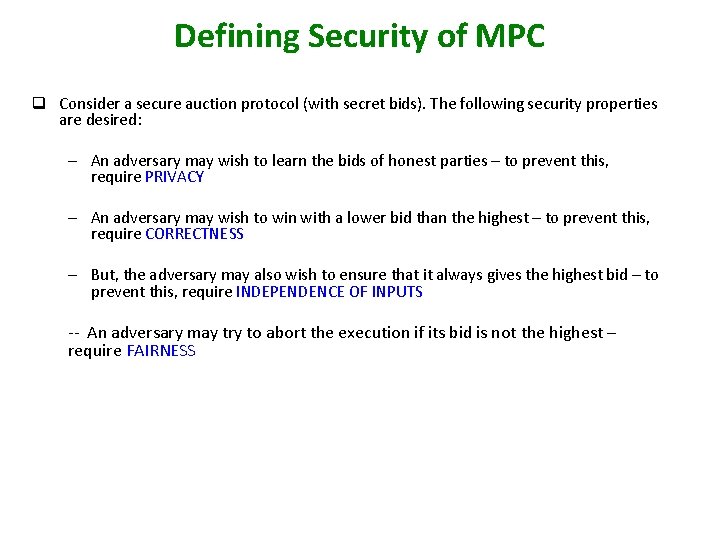

Defining Security of MPC

Consider a secure auction protocol (with secret bids). The following security properties are desired:

- An adversary may wish to learn the bids of honest parties. To prevent this, require PRIVACY.
- An adversary may wish to win with a lower bid than the highest. To prevent this, require CORRECTNESS.
- But the adversary may also wish to ensure that it always gives the highest bid. To prevent this, require INDEPENDENCE OF INPUTS.
- An adversary may try to abort the execution if its bid is not the highest. To prevent this, require FAIRNESS.

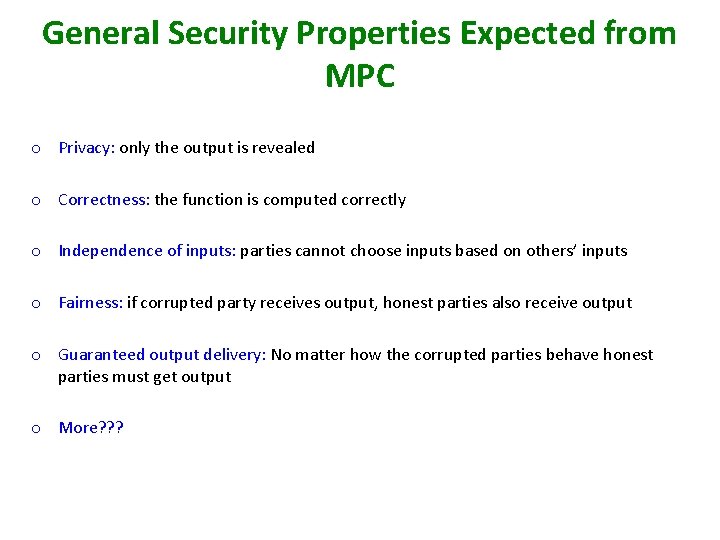

General Security Properties Expected from MPC

- Privacy: only the output is revealed
- Correctness: the function is computed correctly
- Independence of inputs: parties cannot choose inputs based on others' inputs
- Fairness: if a corrupted party receives output, honest parties also receive output
- Guaranteed output delivery: no matter how the corrupted parties behave, honest parties must get output
- More?

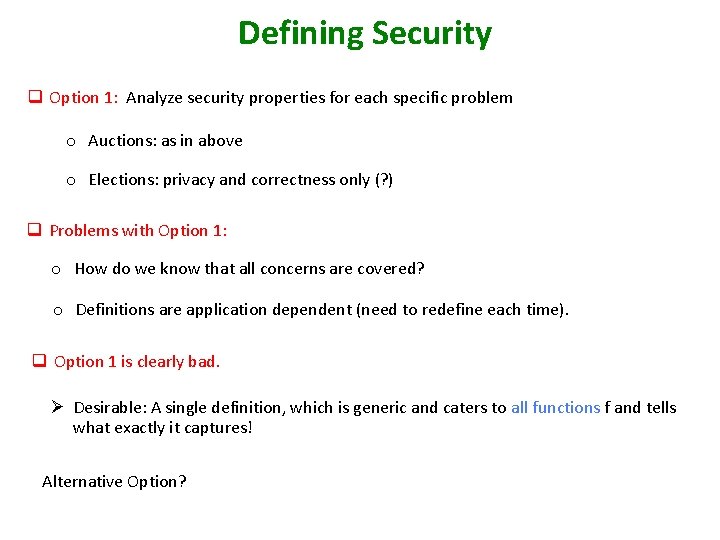

Defining Security

- Option 1: Analyze security properties for each specific problem
  - Auctions: as above
  - Elections: privacy and correctness only (?)
- Problems with Option 1:
  - How do we know that all concerns are covered?
  - Definitions are application dependent (need to redefine each time).
- Option 1 is clearly bad.
  - Desirable: a single definition that is generic, caters to all functions $f$, and tells exactly what it captures!

Alternative option?

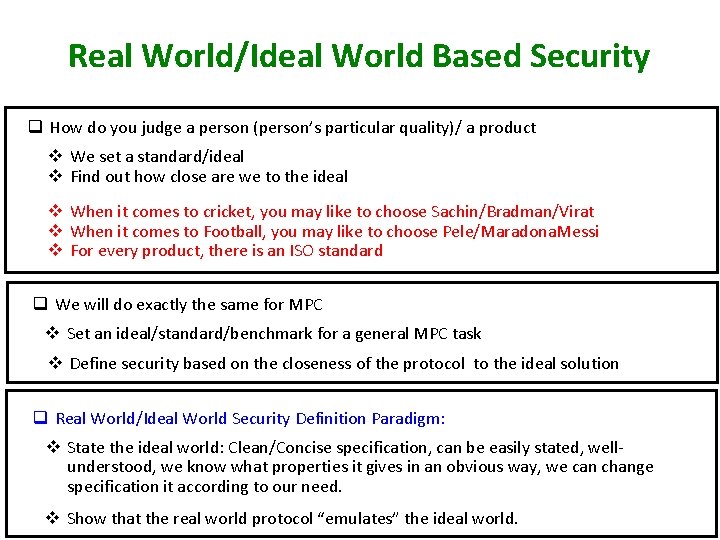

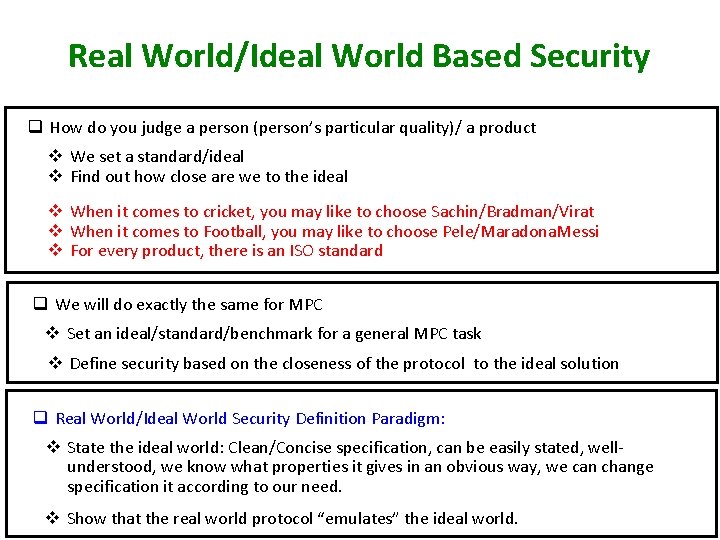

Real World/Ideal World Based Security

- How do you judge a person (a particular quality of a person) or a product?
  - We set a standard/ideal
  - We find out how close we are to the ideal
  - When it comes to cricket, you may like to choose Sachin/Bradman/Virat
  - When it comes to football, you may like to choose Pele/Maradona/Messi
  - For every product, there is an ISO standard
- We will do exactly the same for MPC
  - Set an ideal/standard/benchmark for a general MPC task
  - Define security based on the closeness of the protocol to the ideal solution
- Real World/Ideal World Security Definition Paradigm:
  - State the ideal world: a clean, concise specification that can be easily stated and is well understood. We know in an obvious way what properties it gives, and we can change the specification according to our need.
  - Show that the real-world protocol "emulates" the ideal world.

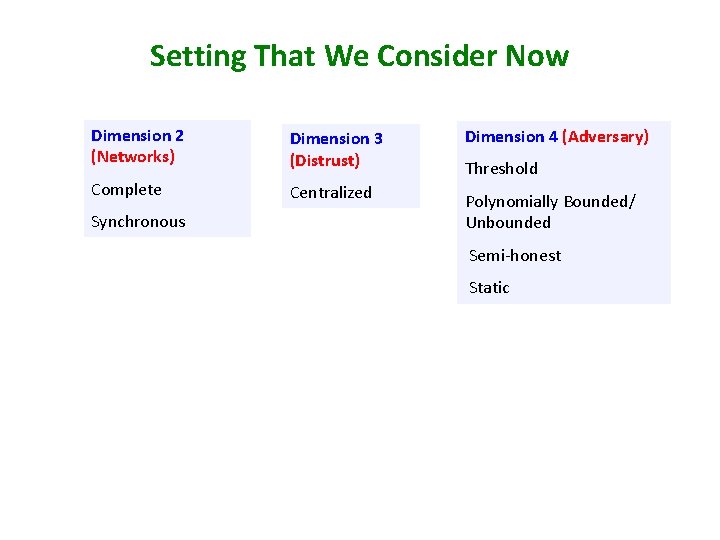

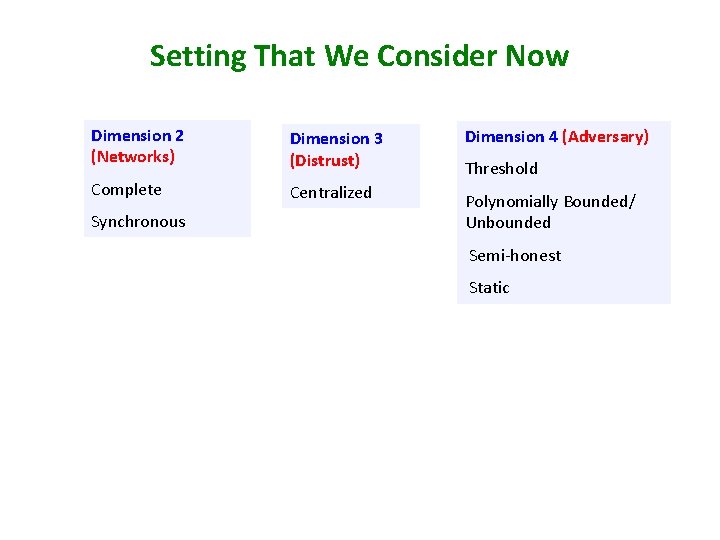

Setting That We Consider Now

| Dimension 2 (Networks) | Dimension 3 (Distrust) | Dimension 4 (Adversary) |
| --- | --- | --- |
| Complete | Centralized | Polynomially bounded/unbounded |
| Synchronous | Threshold | Semi-honest |
| | | Static |

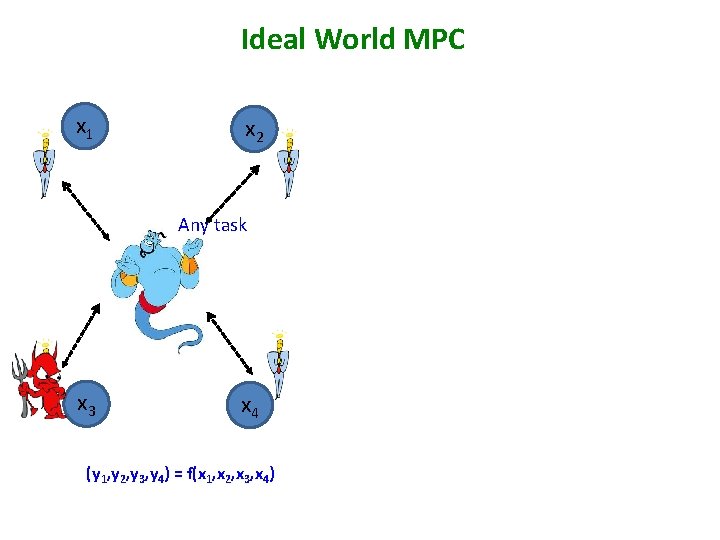

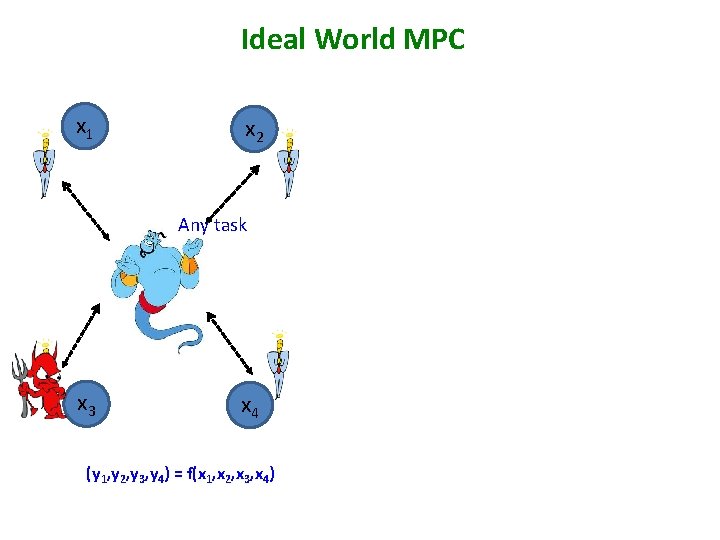

Ideal World MPC

Figure: inputs $x_1, x_2, x_3, x_4$ go into a box labeled "Any task", which computes $(y_1, y_2, y_3, y_4) = f(x_1, x_2, x_3, x_4)$.
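A minimal sketch of the ideal world, assuming the "Any task" box plays the role of a trusted party that collects all inputs, evaluates $f$ once, and returns to each party only its own output. The function names `ideal_world` and `sealed_bid_auction`, the bids, and the tie-breaking rule are hypothetical choices for illustration.

```python
def ideal_world(f, inputs):
    """Trusted party: take every input, evaluate f once, and hand
    party i only its own output y_i."""
    outputs = f(inputs)
    return {i: outputs[i] for i in range(len(inputs))}

def sealed_bid_auction(bids):
    """Illustrative f: every party learns the winner's index and the
    winning bid. Ties go to the lowest index (an arbitrary choice)."""
    winner = max(range(len(bids)), key=lambda i: (bids[i], -i))
    result = (winner, bids[winner])
    return [result] * len(bids)

# Four parties submit secret bids x_1..x_4 (indices 0..3 in the code).
outputs = ideal_world(sealed_bid_auction, [30, 55, 42, 17])
```

In this sketch no party ever sees another party's bid, the result is computed by the trusted party so it is correct by construction, and every input is fixed before any output exists. These are the properties a real protocol is later asked to emulate.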

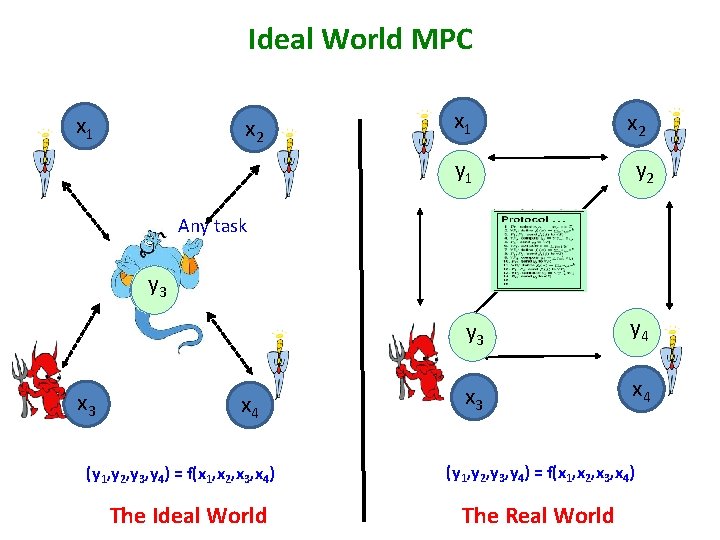

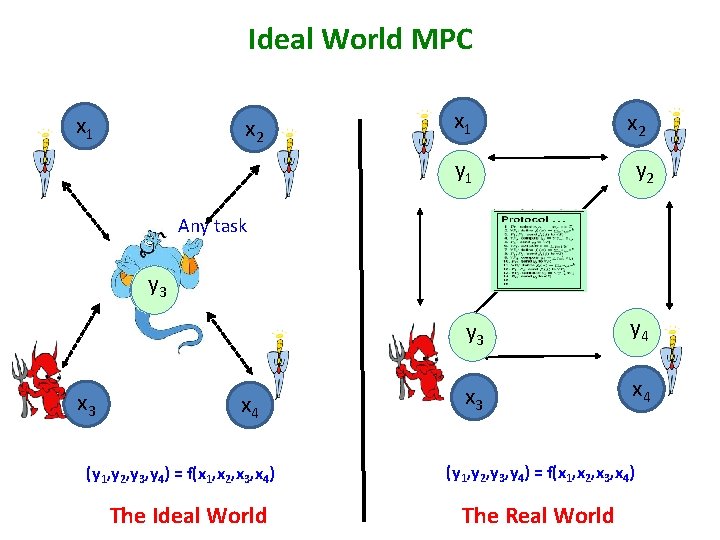

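Ideal World MPC

Figure with two panels, "The Ideal World" and "The Real World". In both, the parties hold inputs $x_1, x_2, x_3, x_4$ and obtain outputs $y_1, y_2, y_3, y_4$ with $(y_1, y_2, y_3, y_4) = f(x_1, x_2, x_3, x_4)$. In the ideal world the inputs go into the "Any task" box, which returns the outputs.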

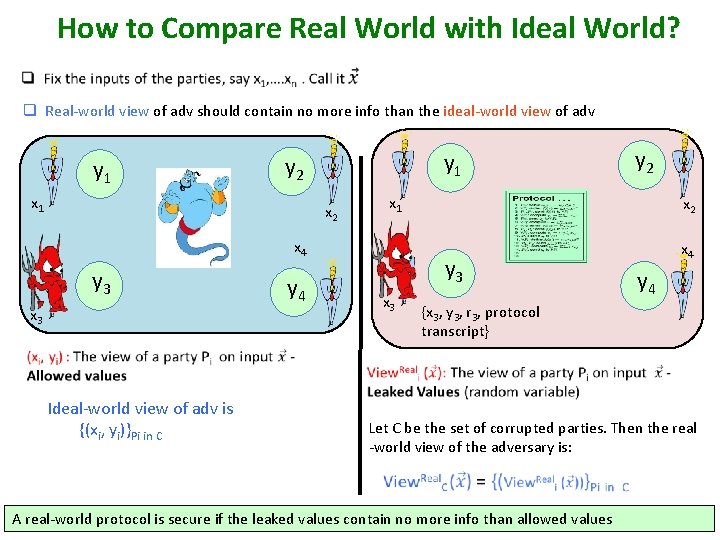

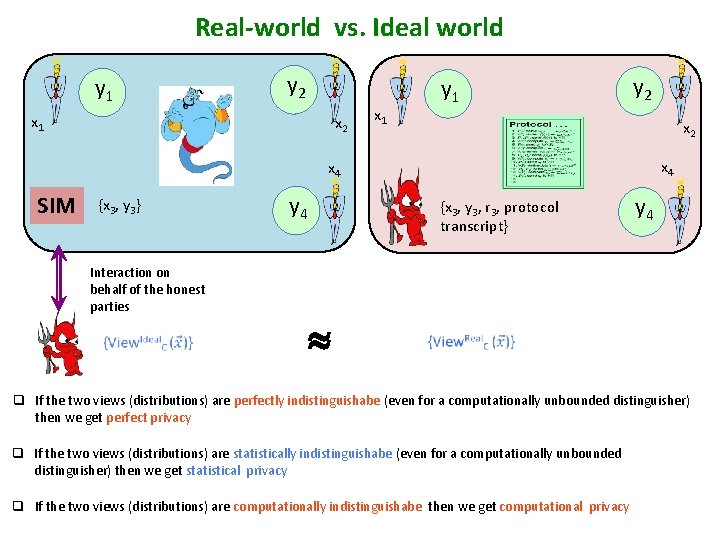

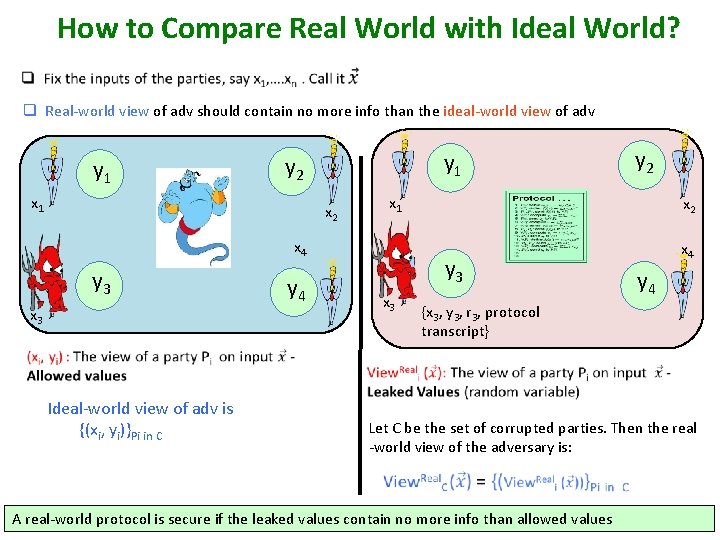

How to Compare Real World with Ideal World?

- The real-world view of the adversary should contain no more information than its ideal-world view.
- Let $C$ be the set of corrupted parties. The ideal-world view of the adversary is $\{(x_i, y_i)\}_{P_i \in C}$.
- The real-world view of the adversary, in the pictured example where $P_3$ is corrupted, is $\{x_3, y_3, r_3, \text{protocol transcript}\}$, where $r_3$ denotes the random coins of $P_3$.
- A real-world protocol is secure if the leaked values (real-world view) contain no more information than the allowed values (ideal-world view).

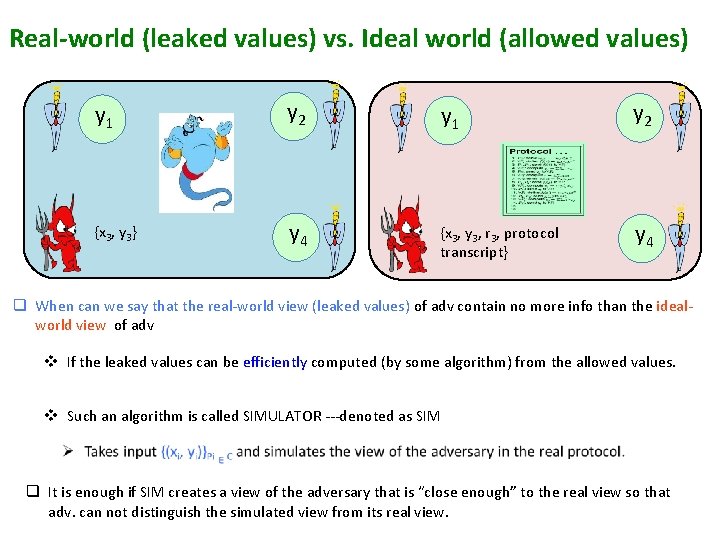

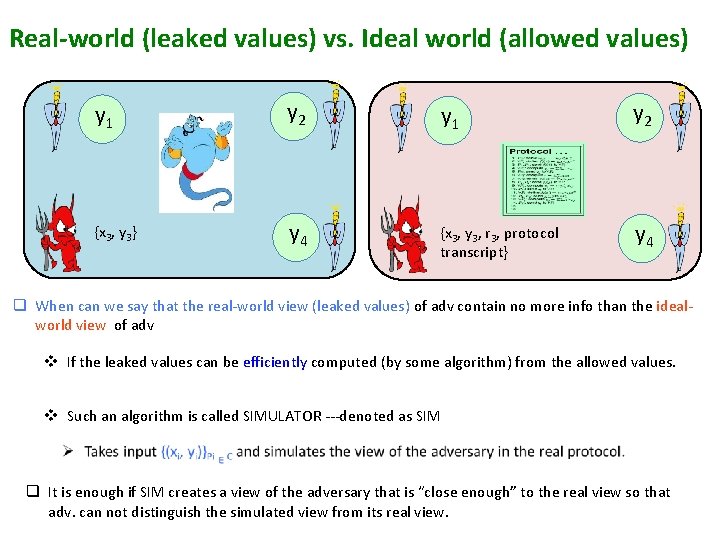

Real-world (leaked values) vs. Ideal world (allowed values)

Figure: the allowed values are $\{x_3, y_3\}$; the leaked values are $\{x_3, y_3, r_3, \text{protocol transcript}\}$.

- When can we say that the real-world view (leaked values) of the adversary contains no more information than its ideal-world view?
  - If the leaked values can be efficiently computed (by some algorithm) from the allowed values.
  - Such an algorithm is called a SIMULATOR, denoted SIM.
- It is enough if SIM creates a view of the adversary that is "close enough" to the real view, so that the adversary cannot distinguish the simulated view from its real view.
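To make the simulator idea concrete, here is a sketch for a toy three-party sum protocol with a single semi-honest, statically corrupted party. The protocol is an assumption chosen for illustration and does not come from the slides: each party splits its input into additive shares modulo `Q`, sends one share to each other party, and every party broadcasts the sum of the shares it holds. The simulator receives only the allowed values (the corrupted input and the output). The modulus is kept tiny so that the real and simulated view distributions can be compared exactly by enumerating all coin tosses.

```python
from collections import Counter
from fractions import Fraction
from itertools import product

Q = 3          # tiny modulus so that every coin toss can be enumerated
N = 3          # parties P0, P1, P2 (0-based indices)
CORRUPT = 2    # the semi-honest adversary controls P2

def real_view(xs, coins):
    """Run the toy sum protocol and return the corrupted party's view.

    coins[i] holds the two shares P_i sends to the other parties;
    P_i keeps the share that makes its three shares add up to x_i.
    """
    shares = [[0] * N for _ in range(N)]   # shares[i][j]: sent from P_i to P_j
    for i in range(N):
        others = [j for j in range(N) if j != i]
        for j, s in zip(others, coins[i]):
            shares[i][j] = s
        shares[i][i] = (xs[i] - sum(coins[i])) % Q
    partial = [sum(shares[i][j] for i in range(N)) % Q for j in range(N)]
    y = sum(partial) % Q
    received = tuple(shares[i][CORRUPT] for i in range(N) if i != CORRUPT)
    broadcast = tuple(partial[j] for j in range(N) if j != CORRUPT)
    return (xs[CORRUPT], coins[CORRUPT], received, broadcast, y)

def sim_view(x_c, y, own_coins, fake_received, fake_t0):
    """SIM: build a view from the allowed values (x_c, y) and fresh coins only."""
    kept = (x_c - sum(own_coins)) % Q
    t_c = (kept + sum(fake_received)) % Q
    fake_t1 = (y - t_c - fake_t0) % Q      # forces the broadcasts to sum to y
    return (x_c, own_coins, fake_received, (fake_t0, fake_t1), y)

def distribution(views):
    """Exact probability of each view under uniformly random coins."""
    counts = Counter(views)
    total = sum(counts.values())
    return {v: Fraction(c, total) for v, c in counts.items()}

def views_match(xs):
    pairs = list(product(range(Q), repeat=2))
    real = distribution(real_view(xs, coins) for coins in product(pairs, repeat=N))
    y = sum(xs) % Q
    sim = distribution(
        sim_view(xs[CORRUPT], y, own, rec, t0)
        for own in pairs for rec in pairs for t0 in range(Q)
    )
    return real == sim

# Check every input combination: the simulated view has exactly the
# same distribution as the real view.
all_match = all(views_match(xs) for xs in product(range(Q), repeat=N))
```

Note that `sim_view` never touches the honest inputs. It replaces the shares received from honest parties and one honest broadcast with uniform values and solves for the last broadcast so that the transcript stays consistent with the output. In this toy setting the two distributions are identical, not merely close.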

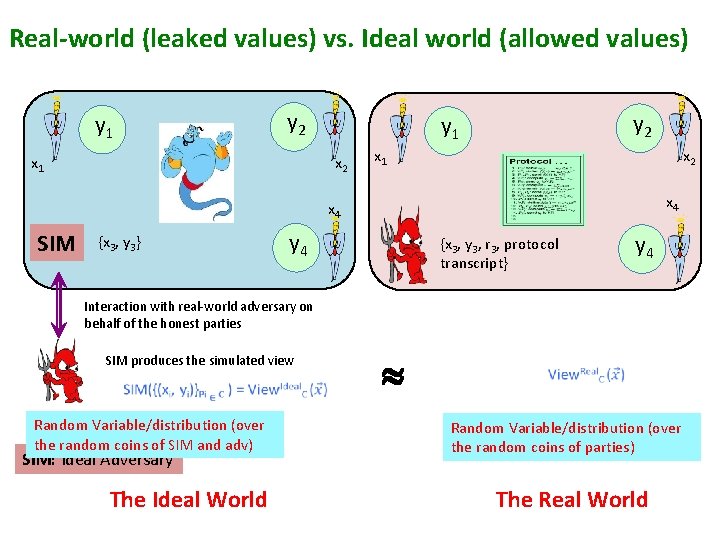

Real-world (leaked values) vs. Ideal world (allowed values)

Figure with two panels:

- The Ideal World: SIM, the ideal adversary, holds the allowed values $\{x_3, y_3\}$ and interacts with the real-world adversary on behalf of the honest parties. SIM produces the simulated view, a random variable/distribution over the random coins of SIM and the adversary.
- The Real World: the adversary's view is $\{x_3, y_3, r_3, \text{protocol transcript}\}$, a random variable/distribution over the random coins of the parties.

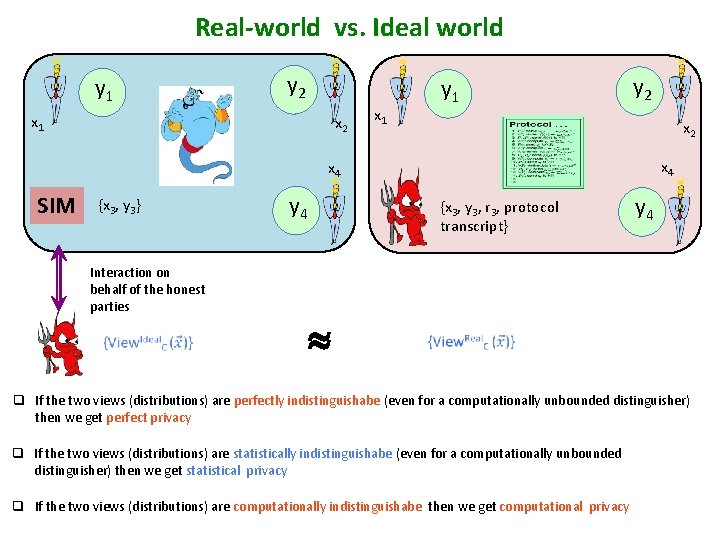

Real-world vs. Ideal world

Figure: SIM, holding $\{x_3, y_3\}$ and interacting on behalf of the honest parties, produces the simulated view; the real view is $\{x_3, y_3, r_3, \text{protocol transcript}\}$.

- If the two views (distributions) are perfectly indistinguishable (even for a computationally unbounded distinguisher), then we get perfect privacy.
- If the two views (distributions) are statistically indistinguishable (even for a computationally unbounded distinguisher), then we get statistical privacy.
- If the two views (distributions) are computationally indistinguishable, then we get computational privacy.
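The three notions can be stated as follows. This is one common formalization, sketched under assumed notation that does not appear in the slides: $\mathsf{REAL}_\kappa$ and $\mathsf{SIM}_\kappa$ are the real and simulated views for security parameter $\kappa$, $\mathsf{negl}$ is a negligible function, and $D$ is a distinguisher.

```latex
\begin{align*}
\text{Perfect:} \quad
  & \Pr[\mathsf{REAL}_\kappa = v] = \Pr[\mathsf{SIM}_\kappa = v]
    \quad \text{for every view } v \\
\text{Statistical:} \quad
  & \tfrac{1}{2} \sum_{v} \bigl| \Pr[\mathsf{REAL}_\kappa = v]
    - \Pr[\mathsf{SIM}_\kappa = v] \bigr| \le \mathsf{negl}(\kappa) \\
\text{Computational:} \quad
  & \bigl| \Pr[D(\mathsf{REAL}_\kappa) = 1]
    - \Pr[D(\mathsf{SIM}_\kappa) = 1] \bigr| \le \mathsf{negl}(\kappa)
    \quad \text{for every polynomial-time } D
\end{align*}
```

The first two conditions bound the advantage of any distinguisher, however powerful, which is why they hold even against a computationally unbounded one. The third restricts attention to efficient distinguishers only.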
