Foundations of Dilated Convolutional Neural Networks Application to

- Slides: 33

• Foundations of Dilated Convolutional Neural Networks
• Application to Retail Demand Forecasting

Timeline of time series forecasting methods (figure):

• Statistical Methods (1950s, early 1970s, late 1970s, 1980s): Exponential Smoothing, ARIMA, Bayesian Approach, GARCH
• Classic Machine Learning (1995, 1996, 1997, 1999): Support Vector Regression, Boosted Decision Trees, Random Forest, Lasso Regression
• Deep Learning, surge of DNN methods (2014, 2016): LSTM, Seq2Seq LSTM, GRU, Dilated CNN

J. De Gooijer and R. Hyndman. 25 Years of Time Series Forecasting.

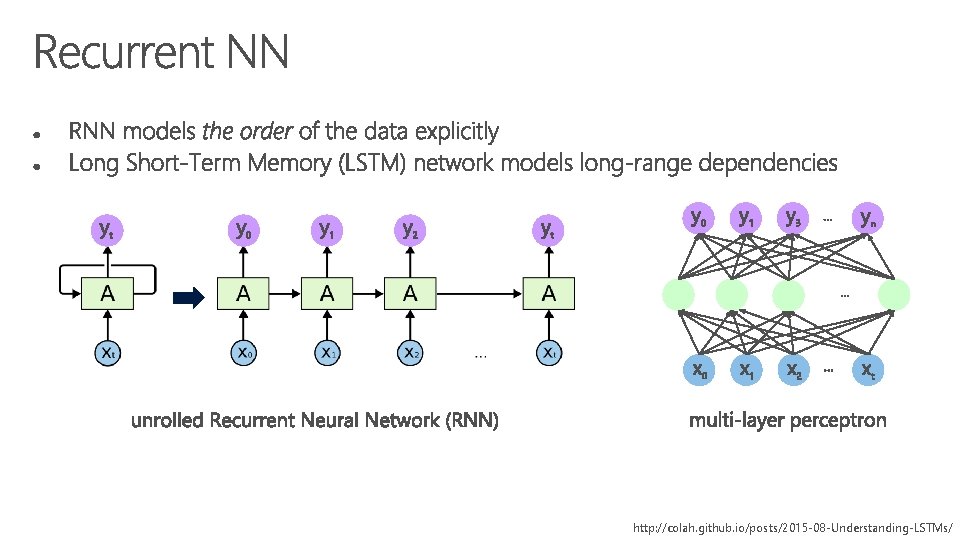

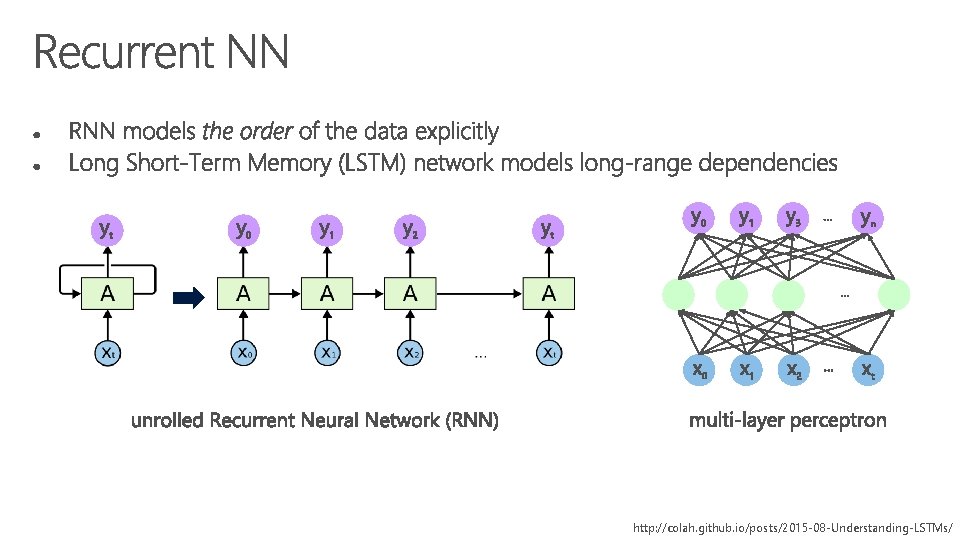

http://colah.github.io/posts/2015-08-Understanding-LSTMs/

• Overview of Time Series Forecasting Methods
• Application to Retail Demand Forecasting

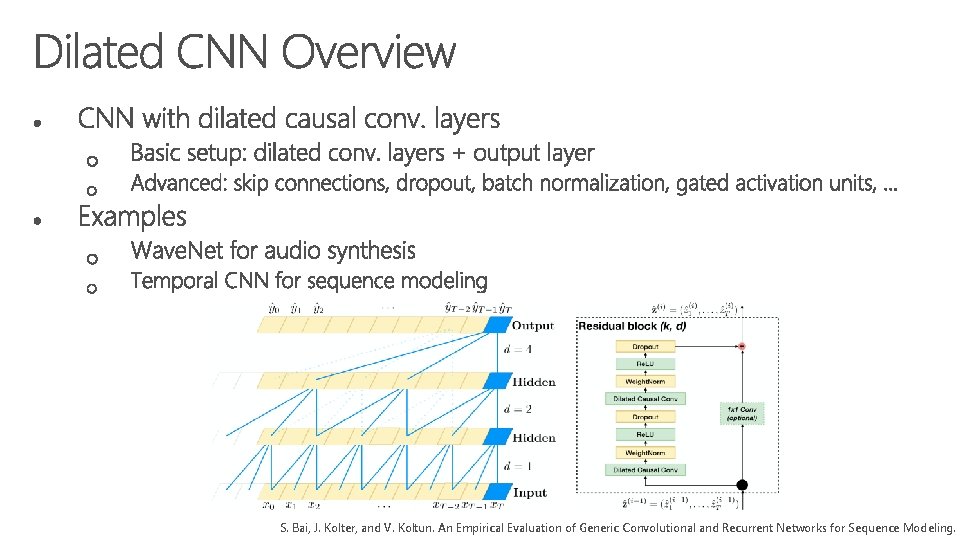

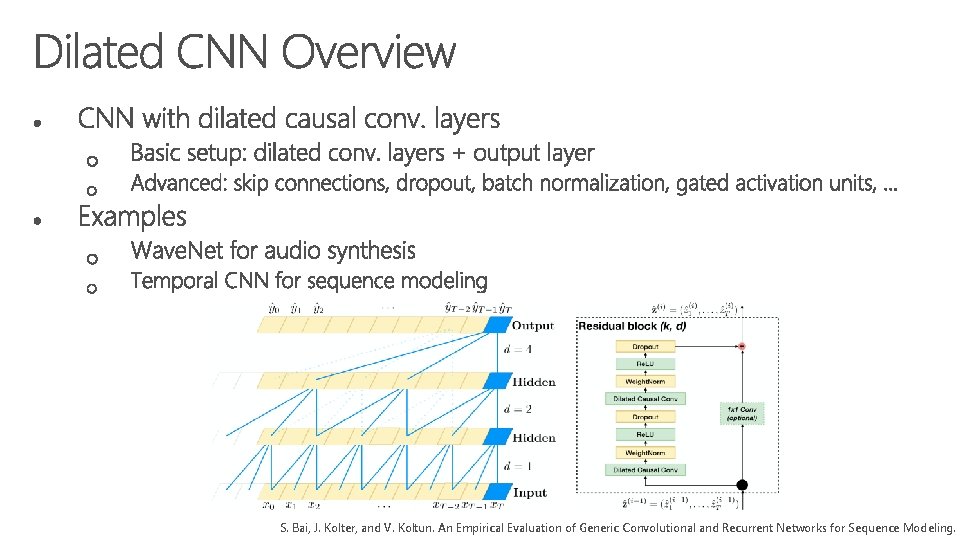

S. Bai, J. Kolter, and V. Koltun. An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling.

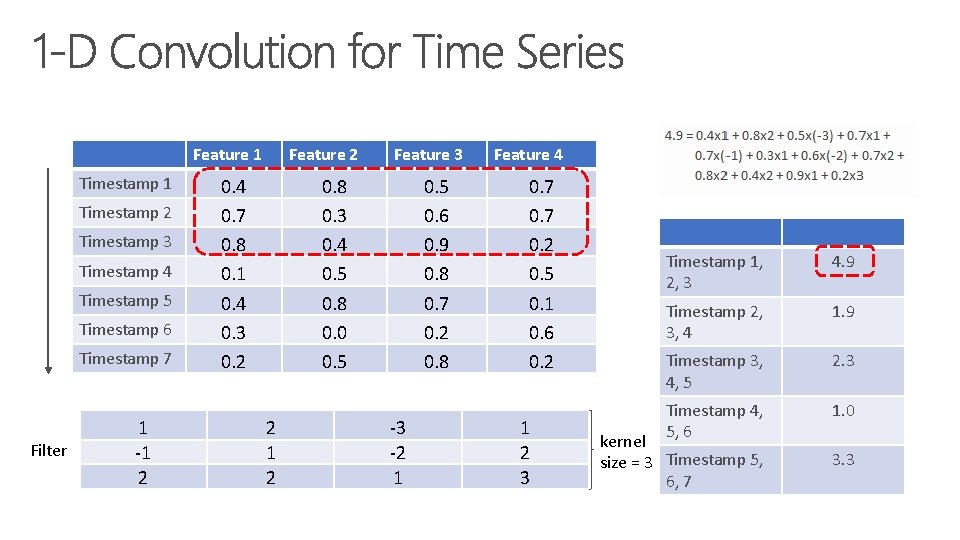

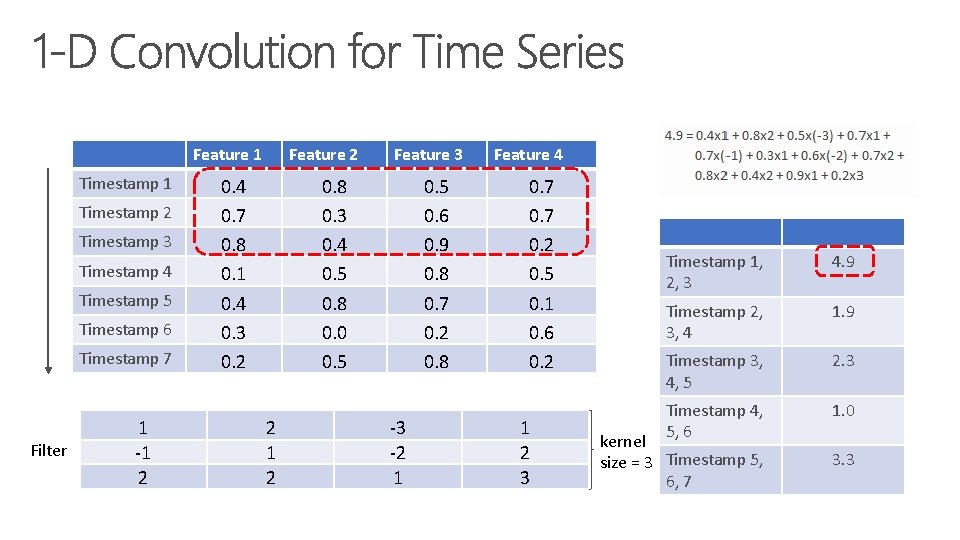

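1D convolution over time, one filter, kernel size = 3

Input (4 features × 7 timestamps):

| | Timestamp 1 | Timestamp 2 | Timestamp 3 | Timestamp 4 | Timestamp 5 | Timestamp 6 | Timestamp 7 |
|---|---|---|---|---|---|---|---|
| Feature 1 | 0.4 | 0.7 | 0.8 | 0.1 | 0.4 | 0.3 | 0.2 |
| Feature 2 | 0.8 | 0.3 | 0.4 | 0.5 | 0.8 | 0.0 | 0.5 |
| Feature 3 | 0.5 | 0.6 | 0.9 | 0.8 | 0.7 | 0.2 | 0.8 |
| Feature 4 | 0.7 | 0.7 | 0.2 | 0.5 | 0.1 | 0.6 | 0.2 |

Filter (4 features × 3 kernel positions):

| | Position 1 | Position 2 | Position 3 |
|---|---|---|---|
| Feature 1 | 1 | -1 | 2 |
| Feature 2 | 2 | 1 | 2 |
| Feature 3 | -3 | -2 | 1 |
| Feature 4 | 1 | 2 | 3 |

Output (one value per window of 3 timestamps):

| Window | Output |
|---|---|
| Timestamp 1, 2, 3 | 4.9 |
| Timestamp 2, 3, 4 | 1.9 |
| Timestamp 3, 4, 5 | 2.3 |
| Timestamp 4, 5, 6 | 1.0 |
| Timestamp 5, 6, 7 | 3.3 |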


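1D convolution over time, two filters, kernel size = 3

Input (4 features × 7 timestamps):

| | Timestamp 1 | Timestamp 2 | Timestamp 3 | Timestamp 4 | Timestamp 5 | Timestamp 6 | Timestamp 7 |
|---|---|---|---|---|---|---|---|
| Feature 1 | 0.4 | 0.7 | 0.8 | 0.1 | 0.4 | 0.3 | 0.2 |
| Feature 2 | 0.8 | 0.3 | 0.4 | 0.5 | 0.8 | 0.0 | 0.5 |
| Feature 3 | 0.5 | 0.6 | 0.9 | 0.8 | 0.7 | 0.2 | 0.8 |
| Feature 4 | 0.7 | 0.7 | 0.2 | 0.5 | 0.1 | 0.6 | 0.2 |

Filter 1 (4 features × 3 kernel positions):

| | Position 1 | Position 2 | Position 3 |
|---|---|---|---|
| Feature 1 | 1 | -1 | 2 |
| Feature 2 | 2 | 1 | 2 |
| Feature 3 | -3 | -2 | 1 |
| Feature 4 | 1 | 2 | 3 |

Filter 2 (weights only partly recoverable): 1 1 0 1; 2 1 0 1

Output (each filter produces one output channel):

| Window | Filter 1 | Filter 2 |
|---|---|---|
| Timestamp 1, 2, 3 | 4.9 | 7.4 |
| Timestamp 2, 3, 4 | 1.9 | 7.3 |
| Timestamp 3, 4, 5 | 2.3 | 6.8 |
| Timestamp 4, 5, 6 | 1.0 | 5.1 |
| Timestamp 5, 6, 7 | 3.3 | 5.7 |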
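The Filter 1 outputs in the example above can be checked with a short sketch. It slides a 4 × 3 filter across the 4 × 7 input and sums the elementwise products in each window. The function name `conv1d_valid` is hypothetical, and the first value of Feature 4 is not legible in the extracted slide; 0.7 is assumed here because it is the value that reproduces the first output, 4.9.

```python
# Input from the example: 4 features (rows) x 7 timestamps (columns).
# ASSUMPTION: the first Feature 4 value (0.7) is inferred, not read from the slide.
X = [
    [0.4, 0.7, 0.8, 0.1, 0.4, 0.3, 0.2],
    [0.8, 0.3, 0.4, 0.5, 0.8, 0.0, 0.5],
    [0.5, 0.6, 0.9, 0.8, 0.7, 0.2, 0.8],
    [0.7, 0.7, 0.2, 0.5, 0.1, 0.6, 0.2],
]

# Filter 1: one row of 3 weights per feature (kernel size = 3).
FILTER_1 = [
    [1, -1, 2],
    [2, 1, 2],
    [-3, -2, 1],
    [1, 2, 3],
]


def conv1d_valid(x, w):
    """Slide filter w over x along time with no padding and stride 1.

    Each output is the sum over all features and kernel positions of
    input value times filter weight, rounded to one decimal place.
    """
    n_steps = len(x[0])
    kernel_size = len(w[0])
    outputs = []
    for start in range(n_steps - kernel_size + 1):
        total = 0.0
        for f in range(len(x)):
            for j in range(kernel_size):
                total += x[f][start + j] * w[f][j]
        outputs.append(round(total, 1))
    return outputs


outputs = conv1d_valid(X, FILTER_1)
print(outputs)  # [4.9, 1.9, 2.3, 1.0, 3.3]
```

Seven timestamps and a kernel of size 3 give 7 - 3 + 1 = 5 windows, which matches the five output rows. A second filter would be applied the same way and would add a second output channel.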

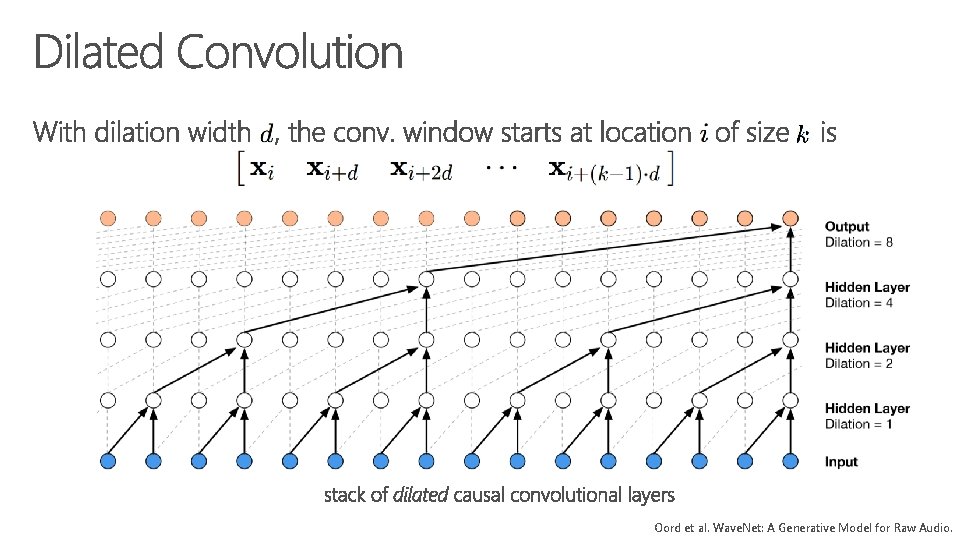

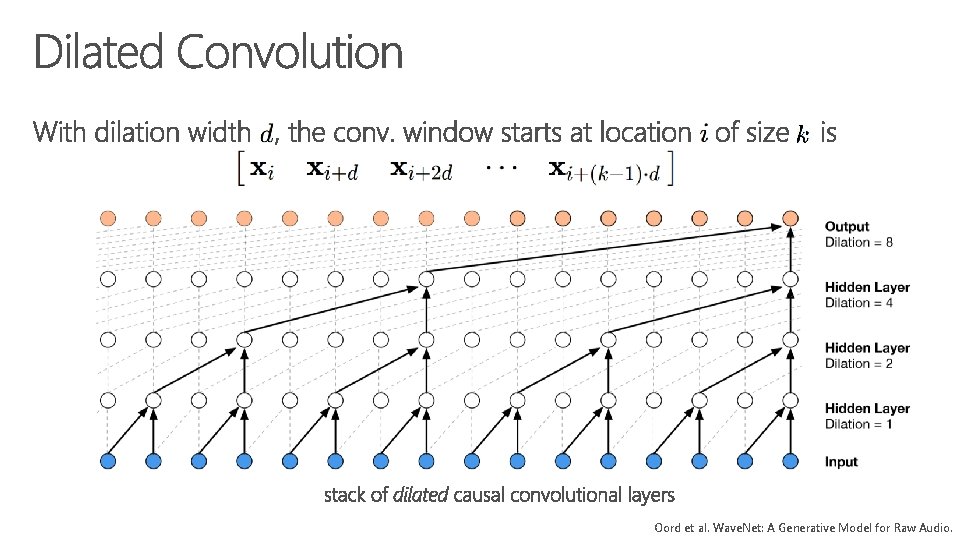

Oord et al. WaveNet: A Generative Model for Raw Audio.
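A minimal sketch of the dilated causal convolution used in WaveNet-style stacks, assuming a single channel, kernel size 2, and dilations 1, 2, 4, 8 (these settings and the function names are illustrative, not taken from the slides). It shows that each output depends only on current and past inputs, and that the receptive field grows to 1 + (kernel size - 1) × (sum of dilations).

```python
def dilated_causal_conv1d(x, w, dilation):
    """Single-channel dilated causal convolution with implicit left zero-padding.

    y[t] = sum_j w[j] * x[t - (k - 1 - j) * dilation], where k = len(w).
    Indices before the start of the sequence contribute zero, so y[t]
    never looks at inputs later than t.
    """
    k = len(w)
    out = []
    for t in range(len(x)):
        total = 0.0
        for j in range(k):
            idx = t - (k - 1 - j) * dilation
            if idx >= 0:
                total += w[j] * x[idx]
        out.append(total)
    return out


def receptive_field(kernel_size, dilations):
    """Number of input steps that can influence one output of the stack."""
    return 1 + (kernel_size - 1) * sum(dilations)


# Push a unit impulse at t = 0 through four stacked layers and count how
# many output steps it reaches; that count equals the receptive field.
dilations = [1, 2, 4, 8]
h = [1.0] + [0.0] * 19
for d in dilations:
    h = dilated_causal_conv1d(h, [1.0, 1.0], d)

reach = sum(1 for v in h if v != 0.0)
print(reach, receptive_field(2, dilations))  # 16 16
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth, while the number of weights grows only linearly.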


K. He, X. Zhang, S. Ren, and J. Sun. Deep Residual Learning for Image Recognition.

S. Bai, J. Kolter, and V. Koltun. An Empirical Evaluation of Generic Convolutional and Recurrent Networks for Sequence Modeling.

Chang et al. Dilated Recurrent Neural Networks.

• Overview of Time Series Forecasting Methods
• Foundations of Dilated Convolutional Neural Networks

https://cran.r-project.org/web/packages/bayesm.pdf

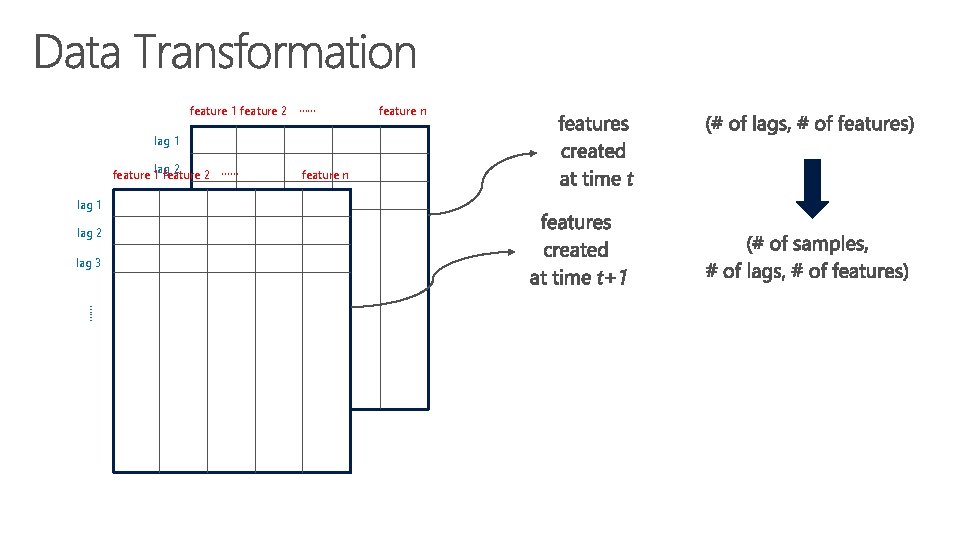

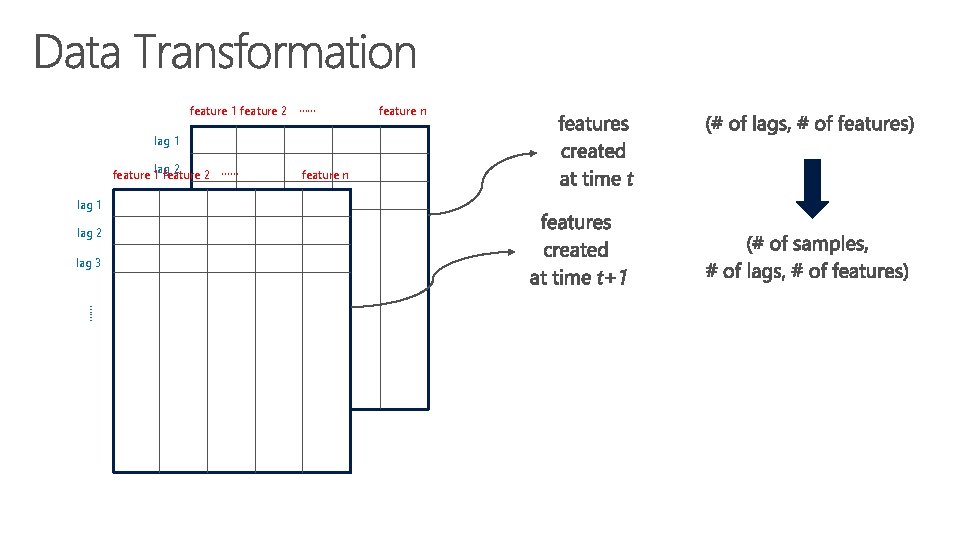

Input layout (figure): feature 1, feature 2, …, feature n, each with lag 1, lag 2, lag 3, …
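The layout above appears to pair each feature with lagged copies of itself. As a rough sketch of that idea for a single series, the hypothetical helper `make_lag_features` below builds one input row per time step from the values at lags 1, 2, and 3. The sales numbers are made up for illustration and are not from the talk's dataset.

```python
def make_lag_features(series, lags):
    """Build (rows, targets) where each row holds the lagged values for one step.

    For time step t, the row is [series[t - lag] for lag in lags] and the
    target is series[t]. Steps without a full history are skipped.
    """
    max_lag = max(lags)
    rows, targets = [], []
    for t in range(max_lag, len(series)):
        rows.append([series[t - lag] for lag in lags])
        targets.append(series[t])
    return rows, targets


# Illustrative weekly sales values (made up).
sales = [10, 12, 13, 15, 14, 18]
rows, targets = make_lag_features(sales, lags=[1, 2, 3])

for row, target in zip(rows, targets):
    print(row, "->", target)
```

With several features, the same construction would be repeated per feature and the resulting columns placed side by side.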

https://docs.microsoft.com/en-us/azure/machine-learning/service/how-to-tune-hyperparameters

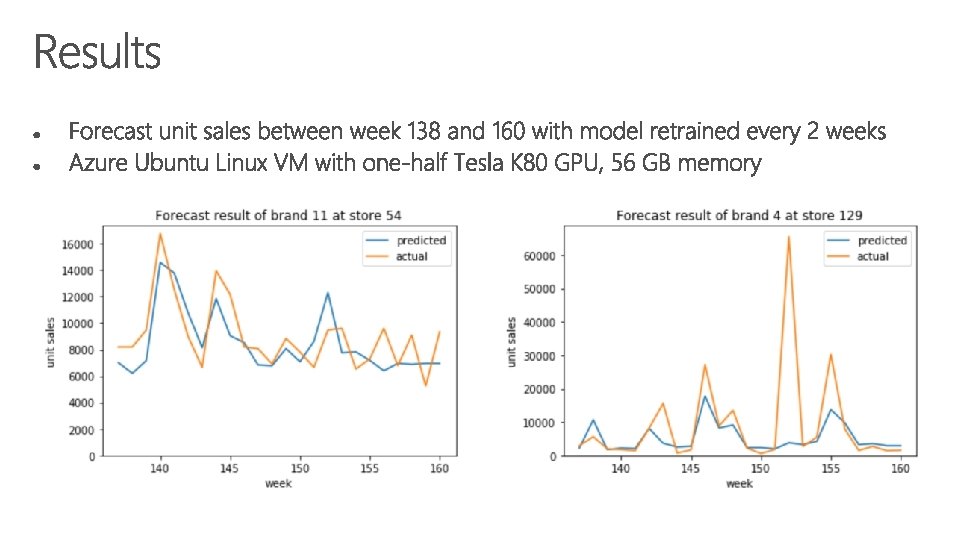

| Method | MAPE | Running time | Machine |
|---|---|---|---|
| Dilated CNN | 37.09% | 413 s | GPU Linux VM |
| Seq2Seq RNN | 37.68% | 669 s | GPU Linux VM |
| Naive | 109.67% | 114.06 s | CPU Linux VM |
| ETS | 70.99% | 277.01 s | CPU Linux VM |
| ARIMA | 70.80% | 265.94 s | CPU Linux VM |

Results are the median of 5 runs.
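For reference, MAPE (mean absolute percentage error) is usually computed as the mean of |actual - forecast| / |actual|, expressed as a percentage. The sketch below uses that standard definition with made-up numbers; the slides do not state exactly how the metric was aggregated across stores and products, so the function is an assumption rather than the evaluation code behind the table.

```python
def mape(actual, forecast):
    """Mean absolute percentage error, in percent.

    Assumes every actual value is non-zero, since the error is divided by it.
    """
    if len(actual) != len(forecast):
        raise ValueError("actual and forecast must have the same length")
    if any(a == 0 for a in actual):
        raise ValueError("MAPE is undefined when an actual value is zero")
    terms = [abs((a - f) / a) for a, f in zip(actual, forecast)]
    return 100.0 * sum(terms) / len(terms)


# Made-up example: errors of 10%, 10%, and 20% average to about 13.33%.
example = mape([100, 200, 50], [110, 180, 60])
print(f"{example:.2f}%")

# MAPE can exceed 100% when forecasts overshoot small actual values.
overshoot = mape([10], [25])
print(f"{overshoot:.2f}%")
```

The second call shows why a value above 100%, such as the Naive row in the table, is possible: the metric has no upper bound when forecasts are far above the actual values.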
