Formal Methods for Privacy

Jeannette M. Wing
Assistant Director, Computer and Information Science and Engineering Directorate
and President’s Professor of Computer Science, Carnegie Mellon University

Formal Methods 2009, Eindhoven, The Netherlands
November 4, 2009

Broader Context: Trustworthy Systems

- Trustworthy = Reliability + Security + Privacy + Usability

FM 2009 2 Jeannette M. Wing

Broader Context: Trustworthy Systems

- Trustworthy = Reliability + Security + Privacy + Usability
  - Reliability: Does it do the right thing?
  - Security: How vulnerable is it to attack?
  - Privacy: Does it protect a person’s information?
  - Usability: Can a human use it easily?

Technical Progress: Reliability

- Formal definitions, theories, models, logics, languages, algorithms, etc. for stating and proving notions of correctness.
- Tools for analyzing systems, from code to architecture, for desired and undesired properties.
- Use of languages, tools, etc. in industry.
  - “Reliable” [= “good enough”] systems in practice: telephony, the Internet, desktop software, your automobile
- Examples:
  - Strongly typed programming languages rule out entire classes of errors.
  - Database systems are built to satisfy ACID properties: atomicity, consistency, isolation, durability.
  - Byzantine fault tolerance: n ≥ 3t + 1 nodes are needed to tolerate t faulty nodes.
  - Impossibility results, e.g., distributed consensus with 1 faulty node

Current challenge: Nature and scale of systems and their operating environments are more complex, forcing us to revisit these fundamental results. E.g., cyber-physical systems, safety-critical systems.

Technical Progress: Security

- Formal definitions, theories, models, logics, languages, algorithms, etc. for stating and proving notions of security.
- Tools for analyzing systems, from code to architecture, for desired and undesired properties.
- Use of languages, tools, etc. in industry.
  - Secure [= “secure enough”] systems in practice: telephony, the Internet, desktop software, your automobile (today)
- Examples:
  - Cryptography
  - Systems designed to informally satisfy CIA properties (confidentiality, integrity, availability).
  - Logic of authentication [Burrows, Abadi, Needham 89], logic for access control [Lampson, Abadi, Burrows, Wobber 92]

Current challenges: (1) Assumptions have changed; revisit the blue. (2) Fill in the gray. (3) Nature and scale of systems and their operating environments are more complex, forcing us to revisit the fundamentals. E.g., today’s crypto rests (mostly) on RSA, i.e., hardness of factoring.

![Technical Progress: Privacy?](https://slidetodoc.com/presentation_image_h/738035e4f55a07169d8bd98a0338eca6/image-6.jpg)

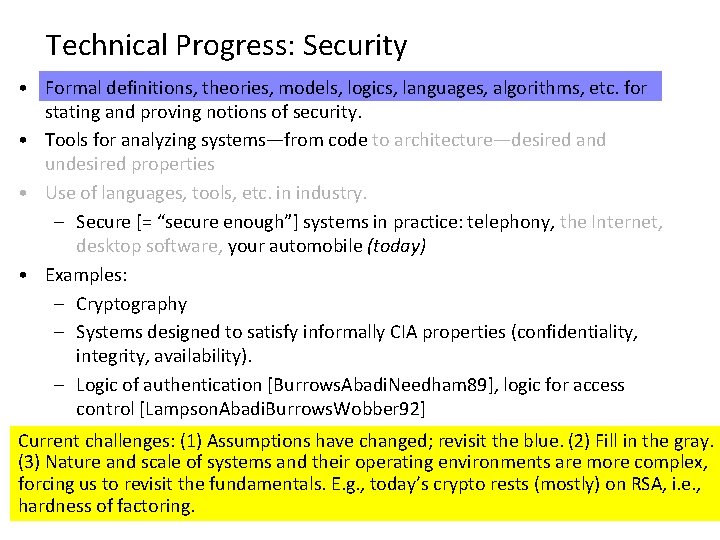

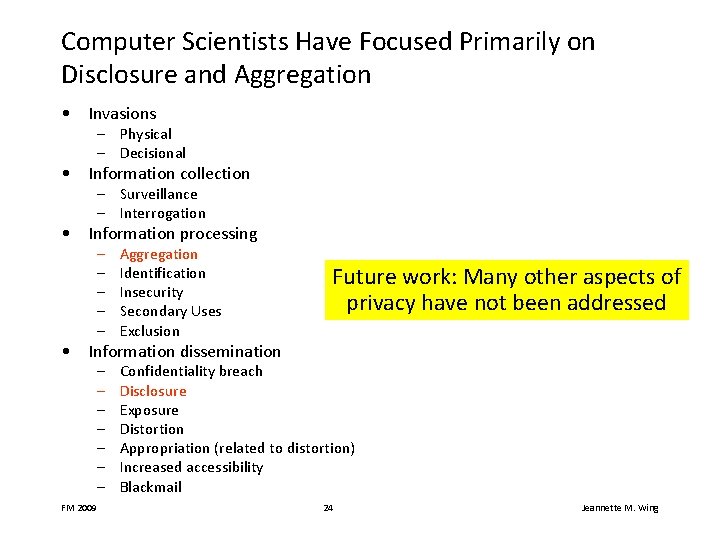

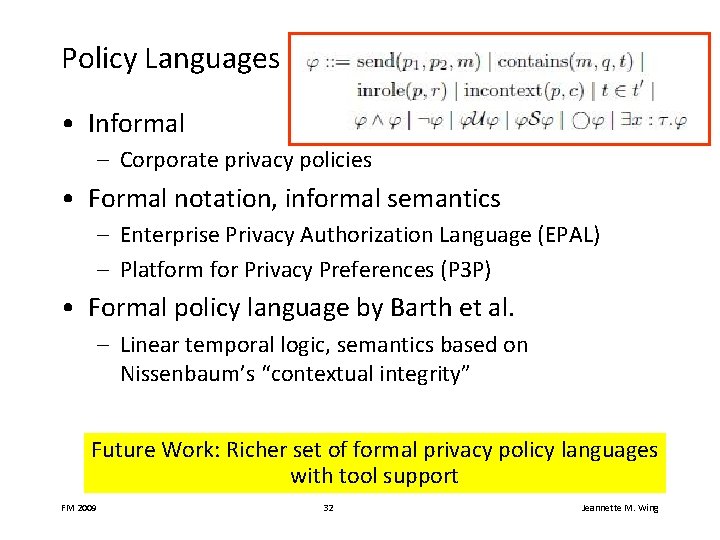

Technical Progress: Privacy?

Examples:

- Privacy-preserving data mining [Dwork, Nissim 04]
- Private matching [Li, Tygar, Hellerstein 05]
- Differential privacy [Dwork et al. 06]
- Privacy policy language [Barth, Datta, Mitchell, Nissenbaum 06]
- Privacy in statistical databases [Fienberg et al. 04, 06]
- Non-interference, confidentiality [Goguen, Meseguer 82; Tschantz, Wing 08]

Aspects to Privacy

- Technical
- Philosophical
- Legal
- Political
- Societal

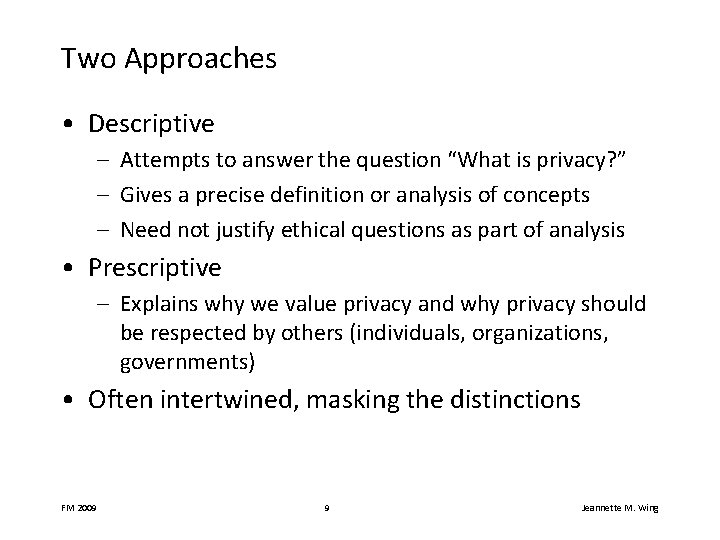

Philosophical Views on Privacy Rights

Two Approaches

- Descriptive
  - Attempts to answer the question “What is privacy?”
  - Gives a precise definition or analysis of concepts
  - Need not justify ethical questions as part of analysis
- Prescriptive
  - Explains why we value privacy and why privacy should be respected by others (individuals, organizations, governments)
- Often intertwined, masking the distinctions

Descriptive (What is Privacy?)

- Restrictions on the access of other people to an individual’s information.

vs.

- An individual’s control over personal information.

Prescriptive (Value of Privacy)

- Fundamental human right

vs.

- Instrumental: value of privacy derives from the value of other, more fundamental values privacy allows
  - E.g., autonomy, fairness, intimate relations

![Philosophical Views on Privacy Violations [Solove 2006]](https://slidetodoc.com/presentation_image_h/738035e4f55a07169d8bd98a0338eca6/image-12.jpg)

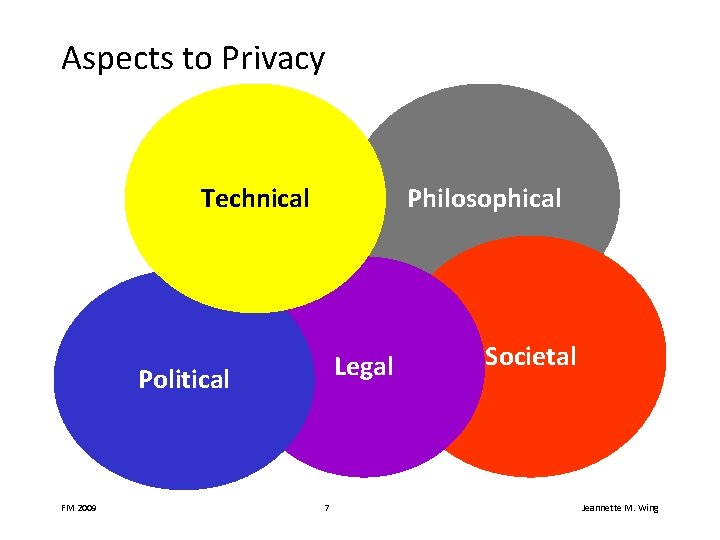

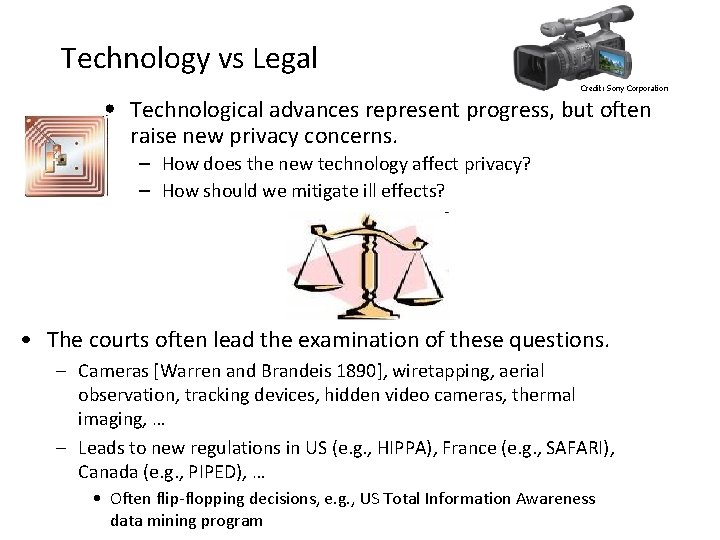

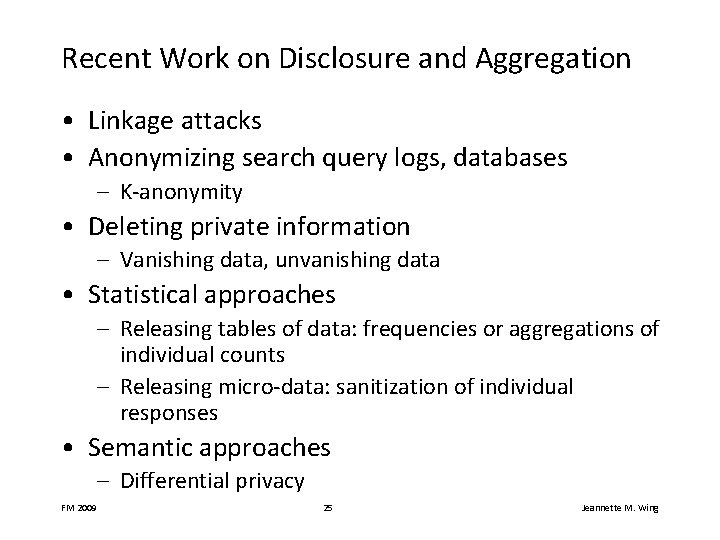

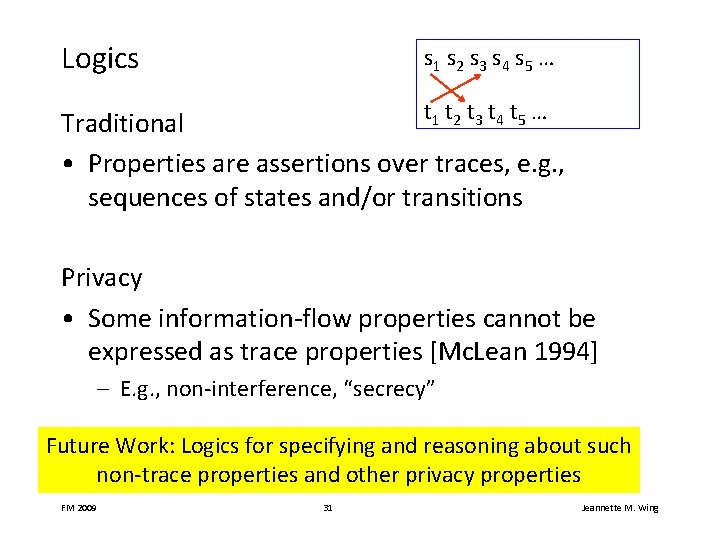

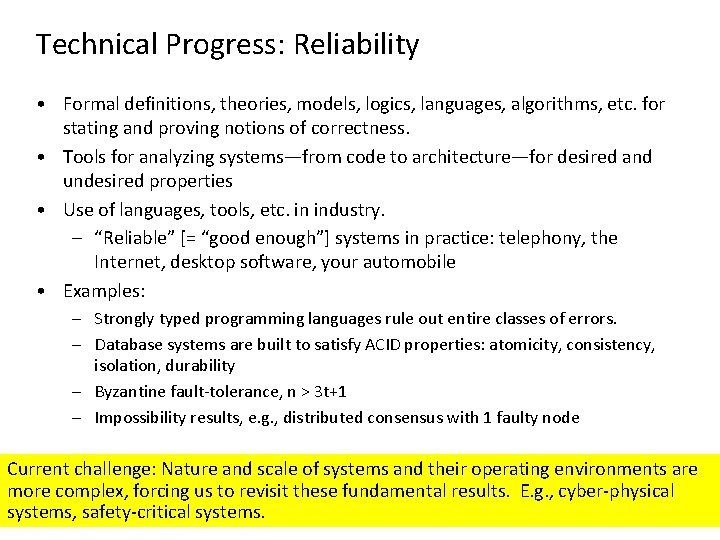

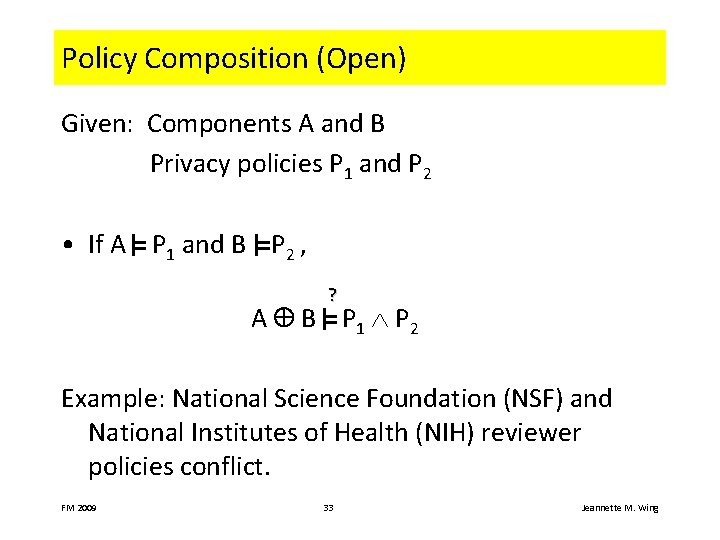

Philosophical Views on Privacy Violations [Solove 2006]

Model

(Diagram: Data Subject and Data Holder)

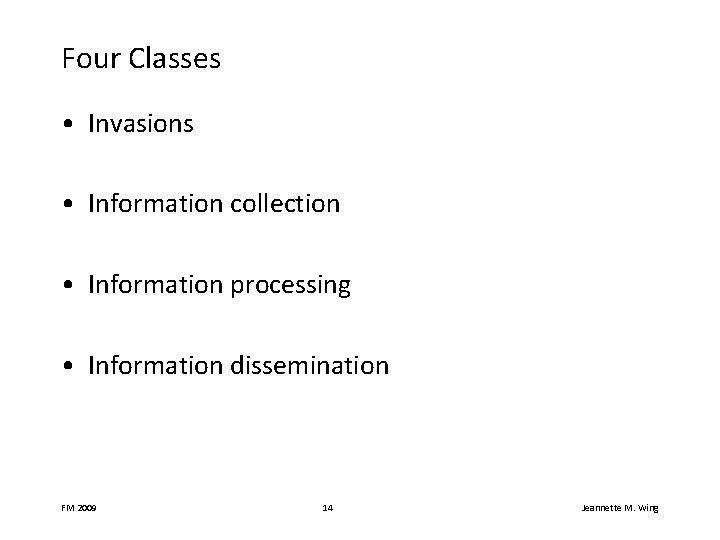

Four Classes

- Invasions
- Information collection
- Information processing
- Information dissemination

Invasions

- Physical intrusions
  - Trespassing
  - Blocking passage
- Decisional interference
  - Interfering with personal decisions
  - E.g., use of contraceptives, abortion, sodomy

Perhaps reducible to violations of other rights:

- Property and security (intrusions)
- Autonomy and liberty (decisional interference)

Information Collection

- Surveillance
- Interrogation

Making observations

- Makes people uncomfortable about how collected information will be used, even if never used.
- Puts people in awkward positions of having to refuse to answer questions.
- Even in the absence of violations, collection should be controlled in order to prevent other violations, e.g., blackmail.

Image credit: Apple, Inc.

Information Processing (I)

- Aggregation
  - Combines diffuse pieces of information
  - Enables inferences otherwise unavailable
- Identification
  - Links information with a person
  - Enables inferences, alters how a person is treated
- Insecurity
  - Makes information more available to those unauthorized
  - Leads to identity theft, distortion of data
- Secondary Uses
  - Makes information available for purposes not originally intended
- Exclusion
  - Inability of data subject to know what records are kept, to view them, to know how they are used, or to correct them

Image credit: Abingdon Press

Information Processing (II)

- All types create uncertainty on the part of the data subject
- Even in the absence of abuse, uncertainty can cause people to live in fear of how information may be used

Information Dissemination

- Confidentiality breach: Trusted data holder provides information about data subject, e.g., doctor-patient, lawyer-client, priest-parishioner
- Disclosure: Making private information known outside the group of individuals expected to know it
- Exposure: Embarrassing information shared, stripping data subject of dignity
- Increased accessibility: Data holder makes previously available information more easily acquirable
- Blackmail: Threat of dissemination unless demand is met, creating a power relationship with no social benefits
- Distortion: Presentation of false information about person, harming not just subject, but also third parties no longer able to accurately judge subject’s character
- Appropriation (related to distortion): Associates a person with a cause/product he did not agree to endorse

Technology Raises New Privacy Concerns

Technology vs Legal

- Technological advances represent progress, but often raise new privacy concerns.
  - How does the new technology affect privacy?
  - How should we mitigate ill effects?
- The courts often lead the examination of these questions.
  - Cameras [Warren and Brandeis 1890], wiretapping, aerial observation, tracking devices, hidden video cameras, thermal imaging, …
  - Leads to new regulations in US (e.g., HIPAA), France (e.g., SAFARI), Canada (e.g., PIPEDA), …
- Often flip-flopping decisions, e.g., US Total Information Awareness data mining program

Image credit: Sony Corporation

Technology Helps Preserve Privacy

Diversity of Technical Approaches

- Technology can make some violations impossible
  - Cryptography
    - One-time pads guarantee perfect secrecy
    - Voting schemes that prevent attacks of coercion
  - Formal verification
    - Secure operating system kernels
- Technology can mitigate attacks of intrusion
  - Intrusion detection systems, spam filters
- Technology can preserve degrees of privacy
  - Onion routing for anonymity
  - Privacy-preserving data mining
- Technology can provide assurance
  - Logics of knowledge for reasoning about secrecy and anonymity

Computer Scientists Have Focused Primarily on Disclosure and Aggregation

- Invasions
  - Physical
  - Decisional
- Information collection
  - Surveillance
  - Interrogation
- Information processing
  - Aggregation
  - Identification
  - Insecurity
  - Secondary Uses
  - Exclusion
- Information dissemination
  - Confidentiality breach
  - Disclosure
  - Exposure
  - Distortion
  - Appropriation (related to distortion)
  - Increased accessibility
  - Blackmail

Future work: Many other aspects of privacy have not been addressed

Recent Work on Disclosure and Aggregation

- Linkage attacks
- Anonymizing search query logs, databases
  - K-anonymity
- Deleting private information
  - Vanishing data, unvanishing data
- Statistical approaches
  - Releasing tables of data: frequencies or aggregations of individual counts
  - Releasing micro-data: sanitization of individual responses
- Semantic approaches
  - Differential privacy
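As an illustration of the k-anonymity idea listed above, here is a minimal sketch, not taken from the talk. It assumes the usual reading that a table is k-anonymous when every combination of quasi-identifier values is shared by at least k rows. The function name `is_k_anonymous`, the column names, and the sample records are all hypothetical.

```python
from collections import Counter


def is_k_anonymous(rows, quasi_identifiers, k):
    """Return True if every quasi-identifier combination occurs in >= k rows."""
    groups = Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)
    return all(count >= k for count in groups.values())


# Hypothetical, already-generalized records (zip codes and ages coarsened).
records = [
    {"zip": "152**", "age": "20-29", "diagnosis": "flu"},
    {"zip": "152**", "age": "20-29", "diagnosis": "asthma"},
    {"zip": "152**", "age": "30-39", "diagnosis": "flu"},
    {"zip": "152**", "age": "30-39", "diagnosis": "diabetes"},
]

print(is_k_anonymous(records, ["zip", "age"], 2))               # True
print(is_k_anonymous(records, ["zip", "age", "diagnosis"], 2))  # False
```

The second call shows why the choice of quasi-identifiers matters: the same table passes or fails depending on which columns an adversary is assumed to be able to link against.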

![Vanishing Data: Overcoming New Risks to Privacy (University of Washington) [USENIX June 2009]](https://slidetodoc.com/presentation_image_h/738035e4f55a07169d8bd98a0338eca6/image-26.jpg)

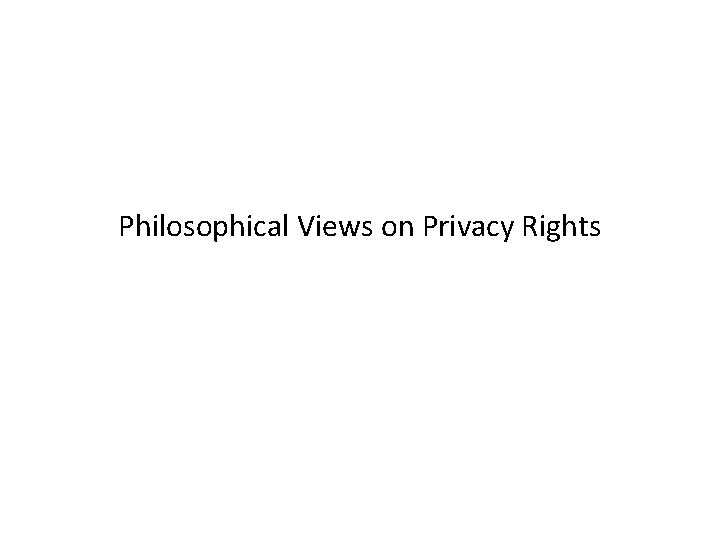

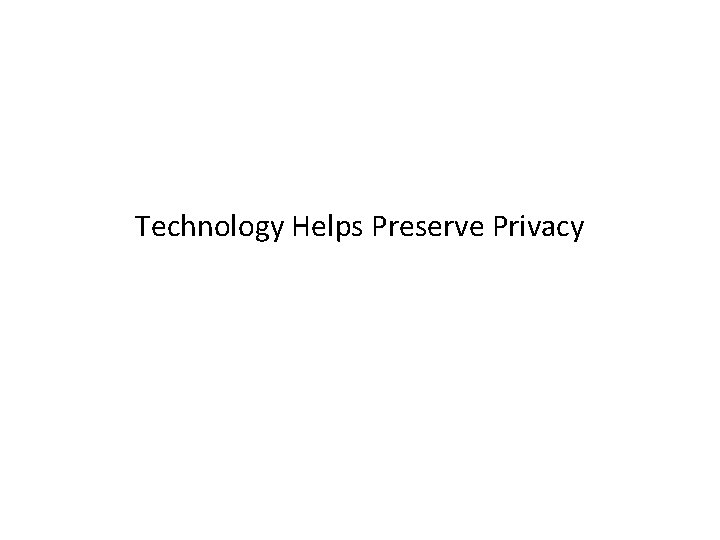

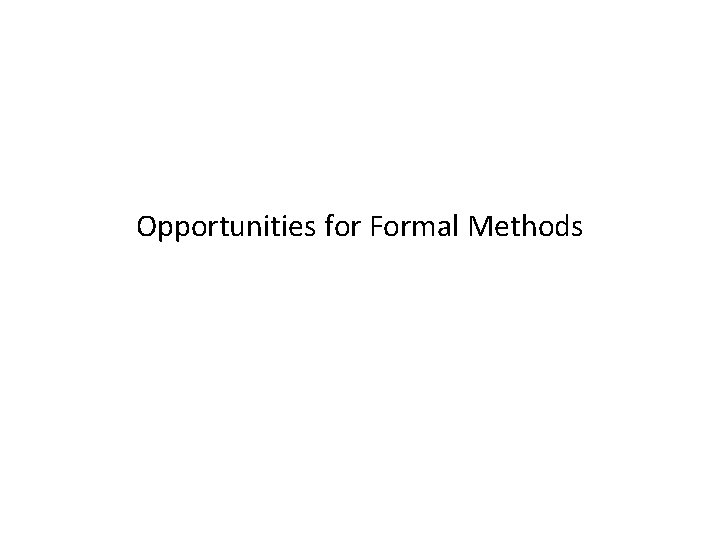

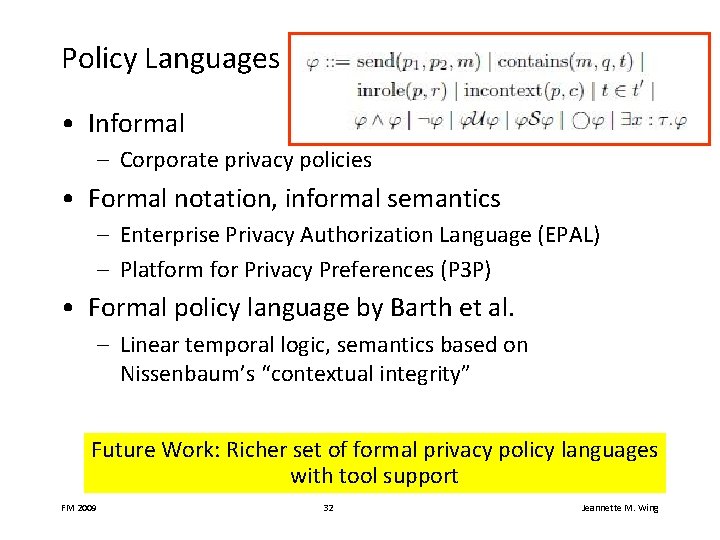

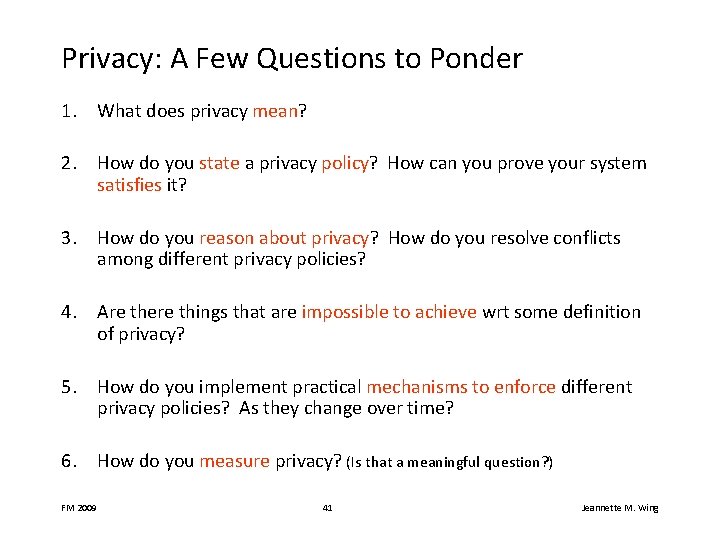

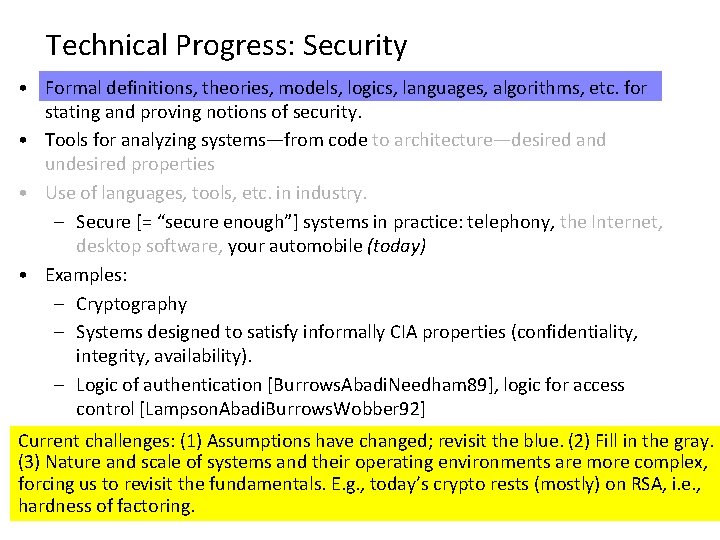

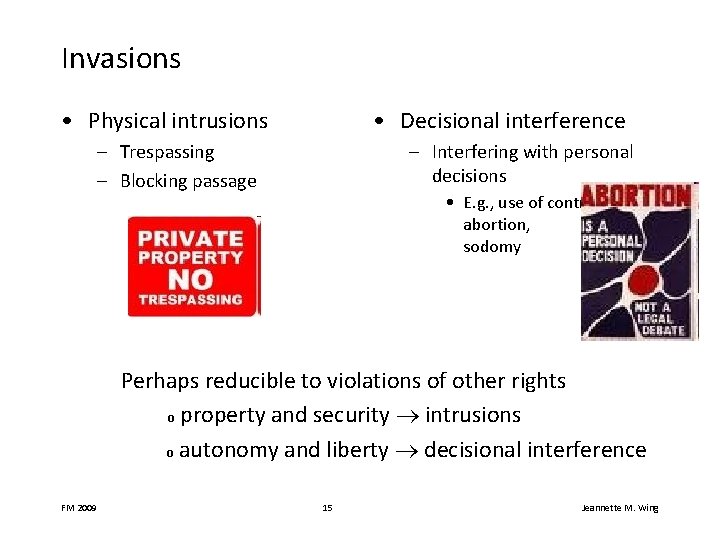

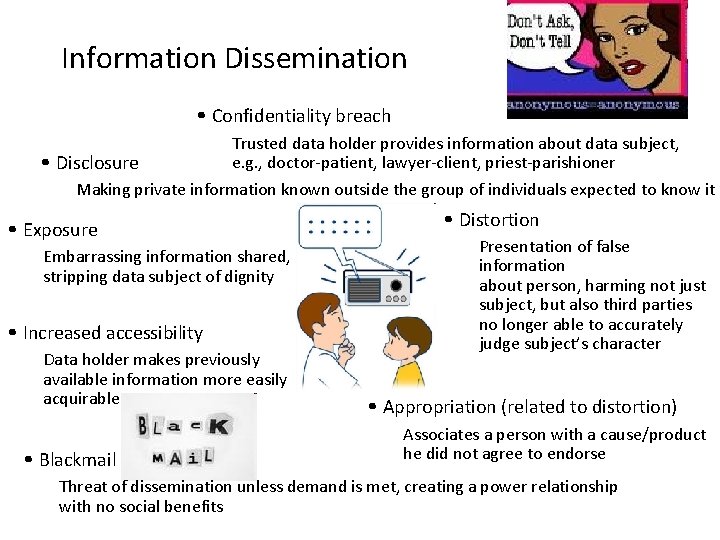

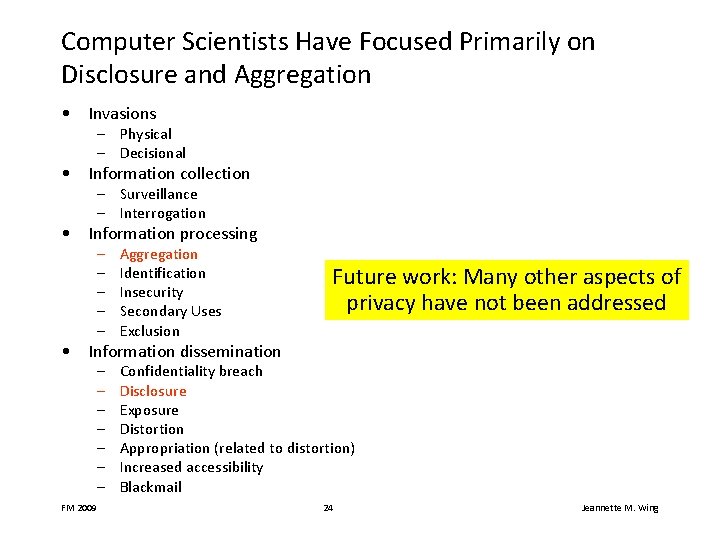

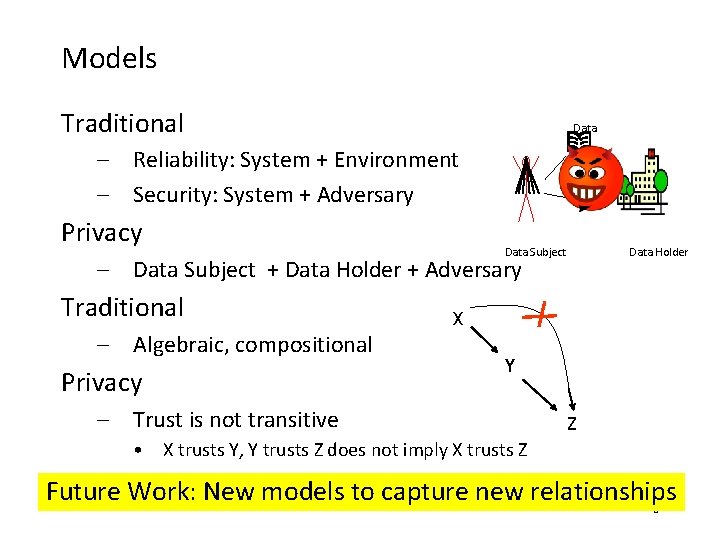

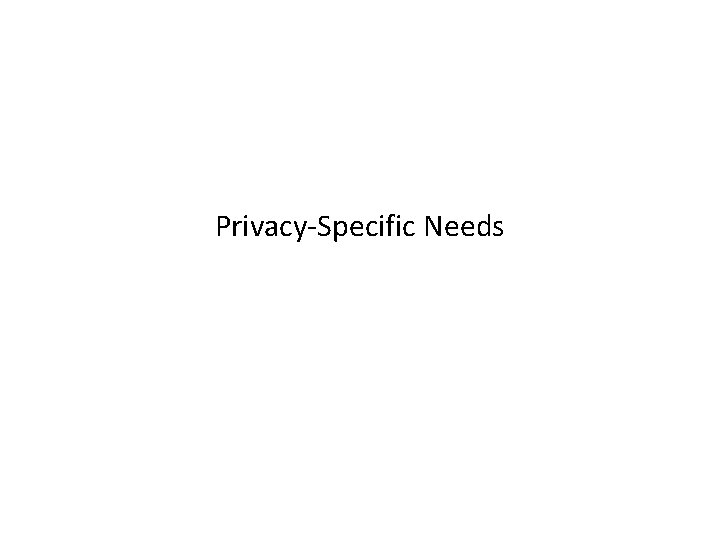

Vanishing Data: Overcoming New Risks to Privacy (University of Washington) [USENIX June 2009]

- Yesterday (Data on Personal/Company Machines): Private Data
- Tomorrow (Data in “The Cloud”): Data passes through ISPs; Data stored in the “Cloud”
- Sept 18: Unvanishing Data (Princeton and Rice) breaks Vanishing Data

![Differential Privacy](https://slidetodoc.com/presentation_image_h/738035e4f55a07169d8bd98a0338eca6/image-27.jpg)

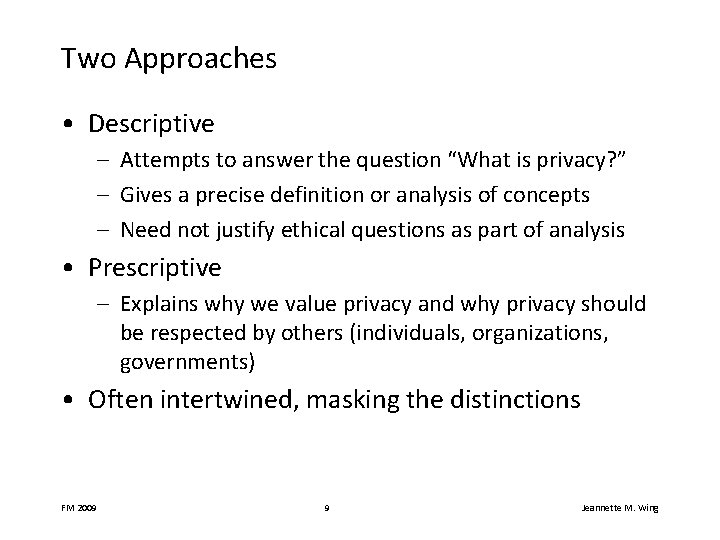

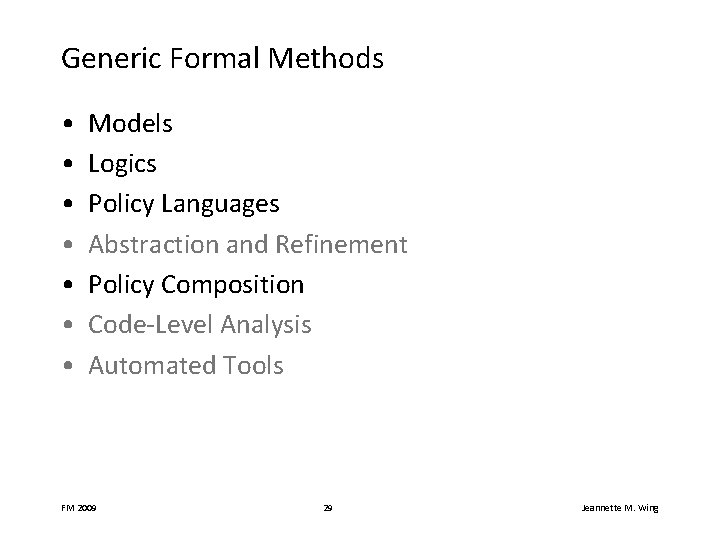

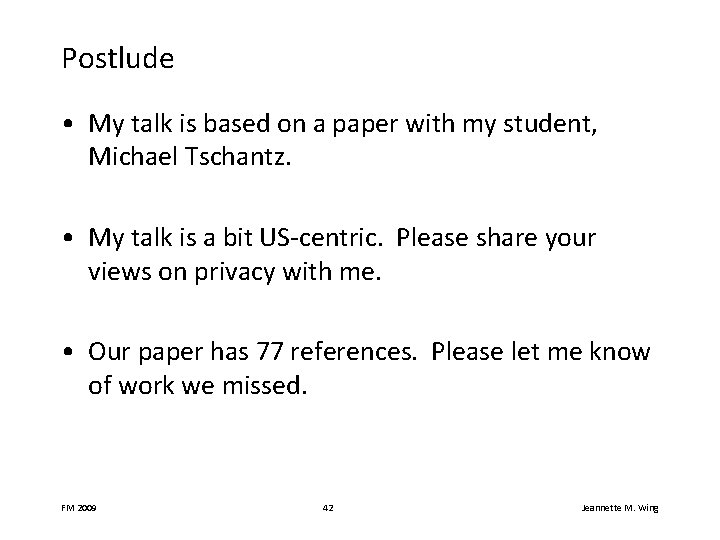

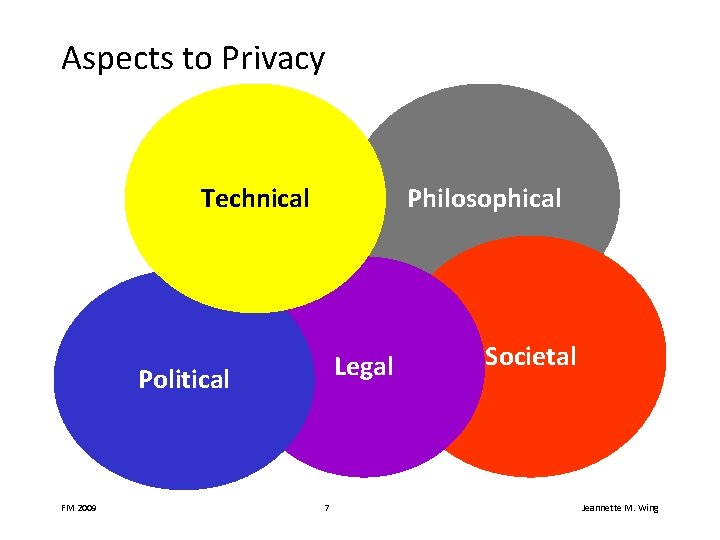

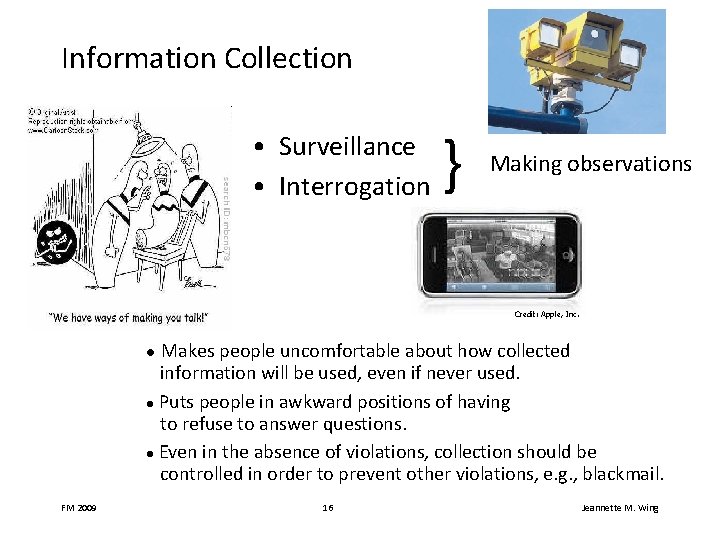

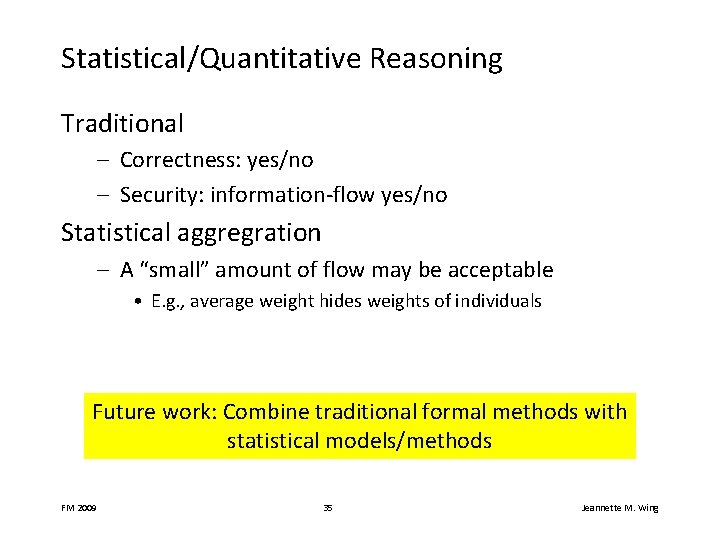

Differential Privacy

Motivation: Dalenius [1977] proposes the privacy requirement that an adversary with aggregate information learns nothing about any of the data subjects that he could not have known without the aggregate information.

- Dwork [2006] proves that this requirement is impossible to satisfy.
- Dwork et al. [2006] introduce a tunable probabilistic differential privacy requirement.
  - Add noise to value of statistic.
  - Adversary cannot learn about any one individual.
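The “add noise to value of statistic” step can be sketched with the Laplace mechanism, a standard way to meet the requirement of Dwork et al.: for data sets D and D' differing in one individual, the probability of any output changes by at most a factor of e^ε. The sketch below is an illustration under stated assumptions, not code from the talk. It assumes a counting query (sensitivity 1), and the names `laplace_noise`, `private_count`, and the sample ages are hypothetical.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records, predicate, epsilon, rng):
    """Noisy count of records satisfying `predicate`.

    Adding or removing one record changes a count by at most 1
    (sensitivity 1), so the noise scale is 1 / epsilon.
    """
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


rng = random.Random(0)                 # seeded only to make the demo repeatable
ages = [23, 35, 41, 29, 52, 67, 38]    # hypothetical data
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)  # the true count (3) plus Laplace noise of scale 2
```

Here ε is the tunable parameter: a smaller ε means more noise and stronger privacy, while a larger ε means a more accurate answer and weaker privacy.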

Opportunities for Formal Methods

Generic Formal Methods

- Models
- Logics
- Policy Languages
- Abstraction and Refinement
- Policy Composition
- Code-Level Analysis
- Automated Tools

Models

- Traditional
  - Reliability: System + Environment
  - Security: System + Adversary
- Privacy
  - Data Subject + Data Holder + Adversary
- Traditional
  - Algebraic, compositional
- Privacy
  - Trust is not transitive: “X trusts Y” and “Y trusts Z” do not imply “X trusts Z”

(Diagrams: Data, Data Holder, Data Subject; parties X, Y, Z)

Future Work: New models to capture new relationships

Logics

(Diagram: a trace of states s1, s2, s3, s4, s5, … connected by transitions t1, t2, t3, t4, t5, …)

- Traditional
  - Properties are assertions over traces, e.g., sequences of states and/or transitions
- Privacy
  - Some information-flow properties cannot be expressed as trace properties [McLean 1994]
  - E.g., non-interference, “secrecy”

Future Work: Logics for specifying and reasoning about such non-trace properties and other privacy properties
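To see why non-interference is not a property of a single trace, consider the following sketch. It is a simplified illustration under stated assumptions: programs are deterministic functions of one secret (“high”) input and one public (“low”) input, and all names (`leaky`, `safe`, `is_noninterferent`) are hypothetical. The check has to compare pairs of runs that agree on the low input, which is why no assertion over one run at a time can express it.

```python
def leaky(high, low):
    """Public output depends on the secret: it reveals whether high > 0."""
    return low + (1 if high > 0 else 0)


def safe(high, low):
    """Public output ignores the secret entirely."""
    return low * 2


def is_noninterferent(program, highs, lows):
    """Check that varying the high input never changes the low-visible output.

    For each fixed low input, all runs (one per high input) must agree.
    """
    for low in lows:
        outputs = {program(high, low) for high in highs}
        if len(outputs) > 1:
            return False
    return True


print(is_noninterferent(safe, range(3), range(3)))   # True
print(is_noninterferent(leaky, range(3), range(3)))  # False
```

Every individual run of `leaky` looks harmless in isolation; the leak only shows up when two runs with different secrets are compared.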

Policy Languages

- Informal
  - Corporate privacy policies
- Formal notation, informal semantics
  - Enterprise Privacy Authorization Language (EPAL)
  - Platform for Privacy Preferences (P3P)
- Formal policy language by Barth et al.
  - Linear temporal logic, semantics based on Nissenbaum’s “contextual integrity”

Future Work: Richer set of formal privacy policy languages with tool support

Policy Composition (Open)

Given: Components A and B; privacy policies P1 and P2

- If A satisfies P1 and B satisfies P2, does the composition of A and B satisfy the composition of P1 and P2?

Example: National Science Foundation (NSF) and National Institutes of Health (NIH) reviewer policies conflict.
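One way to see why this question is open is a toy model in which each component satisfies its own policy but the composed system does not. The sketch below is an illustration only and is unrelated to the actual NSF or NIH policies. It assumes components are sets of directed data flows and policies are predicates over those flows; the names `reachable`, `p1`, `p2`, and the node labels are hypothetical.

```python
def reachable(flows, source):
    """Return every node that data starting at `source` can reach."""
    seen = {source}
    frontier = [source]
    while frontier:
        node = frontier.pop()
        for src, dst in flows:
            if src == node and dst not in seen:
                seen.add(dst)
                frontier.append(dst)
    return seen


def p1(flows):
    """Hypothetical policy P1: the subject's data never reaches 'public'."""
    return "public" not in reachable(flows, "subject")


def p2(flows):
    """Hypothetical policy P2: B sends data only to 'public'."""
    return all(dst == "public" for src, dst in flows if src == "B")


component_a = {("subject", "A"), ("A", "B")}   # A collects data and shares it with B
component_b = {("B", "public")}                # B publishes whatever it holds
composed = component_a | component_b

print(p1(component_a), p2(component_b))  # True True
print(p1(composed), p2(composed))        # False True
```

Each component is fine against its own policy, yet once the flows are joined the subject's data reaches the public through B, so the composition violates P1.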

Privacy-Specific Needs

Statistical/Quantitative Reasoning

- Traditional
  - Correctness: yes/no
  - Security: information-flow yes/no
- Statistical aggregation
  - A “small” amount of flow may be acceptable
  - E.g., average weight hides weights of individuals

Future work: Combine traditional formal methods with statistical models/methods
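The average-weight example also shows why the amount of flow has to be reasoned about quantitatively rather than as yes/no. A single average reveals little about any one person, but two averages that differ by one individual reveal that individual's weight exactly. The sketch below uses made-up weights and hypothetical names to illustrate this differencing effect.

```python
def average(values):
    return sum(values) / len(values)


# Hypothetical weights in kilograms; the last entry is the target individual.
weights = [62.0, 75.5, 81.0, 58.5, 90.0]

avg_all = average(weights)                 # released statistic 1
avg_without_last = average(weights[:-1])   # released statistic 2

# Multiply each average by its group size to recover the sums, then subtract.
recovered = avg_all * len(weights) - avg_without_last * (len(weights) - 1)
print(recovered)  # equals the last individual's weight, up to rounding
```

Each release on its own looks like an acceptable “small” flow; it is the combination that leaks, which is one reason statistical models are needed alongside traditional information-flow analysis.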

Broader Context: Trustworthy Systems

- Trustworthy = Reliability + Security + Privacy + Usability

Tradeoffs among all four.

Privacy and Usability

Clicking Your Way Through Privacy (Firefox)

Do You Read These? What Are They Saying?

Windows Media Player 10 Privacy Statement

This privacy statement goes on for seven screenfuls!

Privacy: A Few Questions to Ponder

1. What does privacy mean?
2. How do you state a privacy policy? How can you prove your system satisfies it?
3. How do you reason about privacy? How do you resolve conflicts among different privacy policies?
4. Are there things that are impossible to achieve with respect to some definition of privacy?
5. How do you implement practical mechanisms to enforce different privacy policies? As they change over time?
6. How do you measure privacy? (Is that a meaningful question?)

Postlude

- My talk is based on a paper with my student, Michael Tschantz.
- My talk is a bit US-centric. Please share your views on privacy with me.
- Our paper has 77 references. Please let me know of work we missed.

Thank you!

Credits

- Copyrighted material used under Fair Use. If you are the copyright holder and believe your material has been used unfairly, or if you have any suggestions, feedback, or support, please contact: jsoleil@nsf.gov
- Except where otherwise indicated, permission is granted to copy, distribute, and/or modify all images in this document under the terms of the GNU Free Documentation license, Version 1.2 or any later version published by the Free Software Foundation; with no Invariant Sections, no Front-Cover Texts, and no Back-Cover Texts. A copy of the license is included in the section entitled “GNU Free Documentation license” (http://commons.wikimedia.org/wiki/Commons:GNU_Free_Documentation_License)
- The inclusion of a logo does not express or imply the endorsement by NSF of the entities' products, services or enterprises