
- Slides: 41

Formal Computational Skills Matrices 1

Overview
Motivation: matrices have many mathematical uses, e.g.
• Writing network operations
• Solving linear equations
• Calculating transformations
• Changing coordinate systems
By the end you should be able to:
• Add/subtract/multiply 2 matrices
• Add/subtract/multiply 2 vectors
• Use matrix inverses to solve linear equations
• Write network operations with matrices
Advanced topics we will also discuss:
• Matrices as transformations
• Eigenvectors and eigenvalues of a matrix

Today’s Topics: Matrix/Vector Basics
• Matrix definitions (square matrix, identity, etc.)
• Matrix addition/subtraction/multiplication
• Matrix inverse
• Vector definitions
• Vectors as geometric objects

Tomorrow’s Topics: Uses of Matrices
• Matrices as sets of linear equations
• Networks as matrices
• Solving sets of linear equations
• (Briefly) Matrix operations as transformations
• (Briefly) Eigenvectors and eigenvalues of a matrix

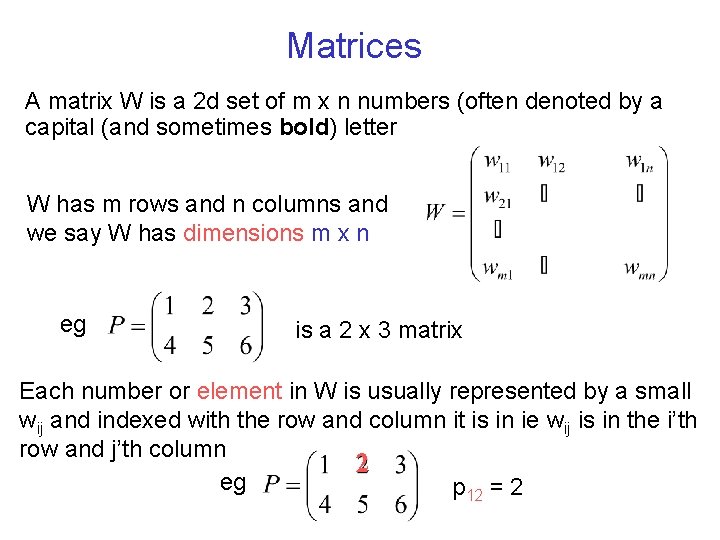

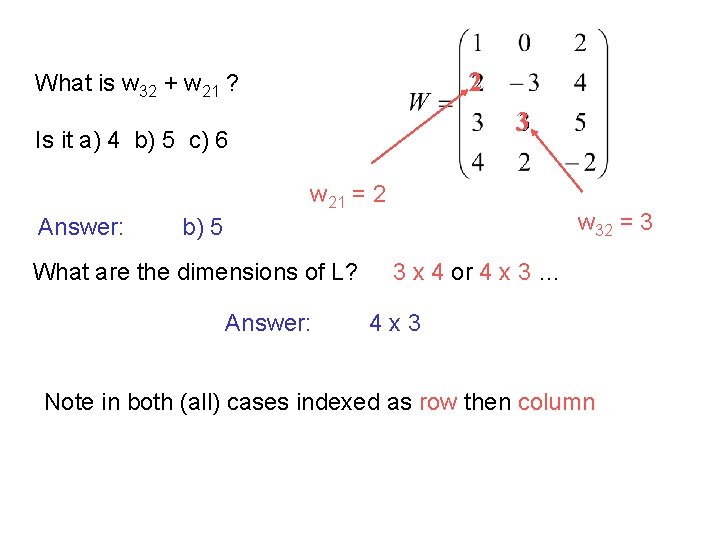

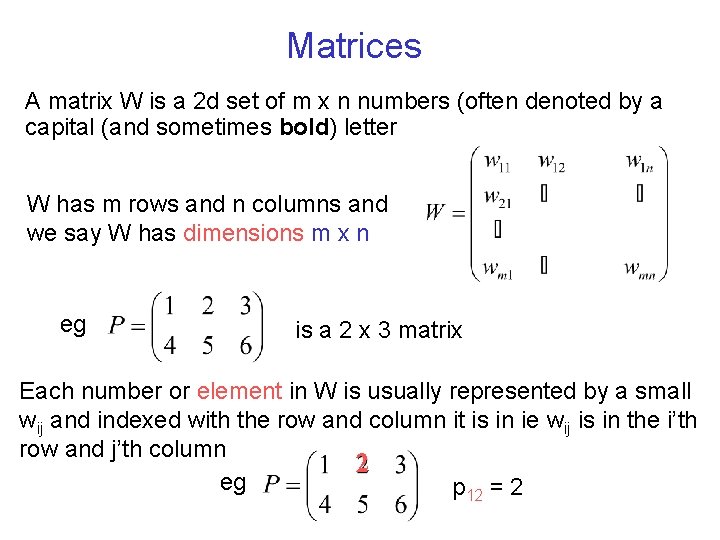

Matrices
A matrix W is a 2D array of m × n numbers, often denoted by a capital (and sometimes bold) letter. W has m rows and n columns, and we say W has dimensions m × n; e.g. a matrix with 2 rows and 3 columns is a 2 × 3 matrix.
Each number, or element, in W is usually represented by a small $w_{ij}$, indexed by the row and column it is in, i.e. $w_{ij}$ is in the i’th row and j’th column. E.g. in the example matrix P, $p_{12} = 2$.

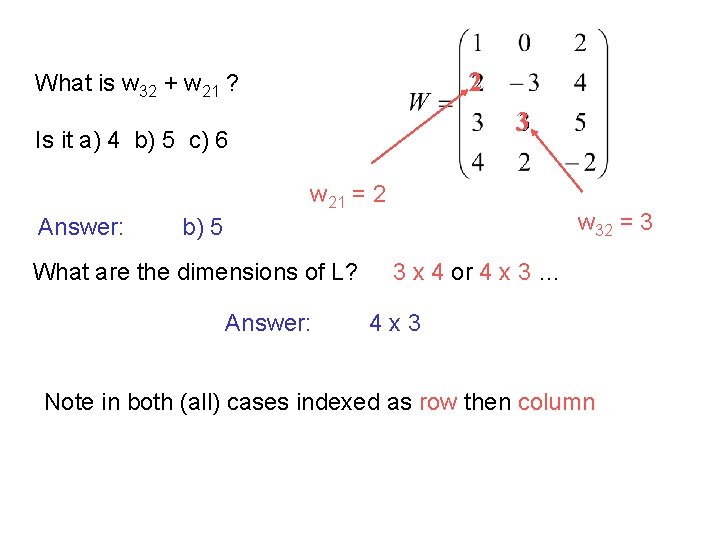
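A minimal sketch in plain Python (no libraries assumed), storing a matrix as a list of rows. The matrix W and the helpers `dims` and `elem` are hypothetical, made up for illustration. The thing to watch is that the notes index from 1 while Python indexes from 0, but both go row first, then column.

```python
# A made-up 2 x 3 matrix, stored as a list of rows
W = [[1, 2, 3],
     [4, 5, 6]]

def dims(M):
    """Return (m, n): the number of rows and columns of M."""
    return len(M), len(M[0])

def elem(M, i, j):
    """Return m_ij with 1-based, row-then-column indexing as in the notes."""
    return M[i - 1][j - 1]  # shift to Python's 0-based indices

print(dims(W))        # (2, 3): a 2 x 3 matrix
print(elem(W, 1, 2))  # 2: row 1, column 2
print(elem(W, 2, 1))  # 4: row 2, column 1
```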

Question: what is $w_{32} + w_{21}$? Is it a) 4, b) 5 or c) 6?
Answer: $w_{32} = 3$ and $w_{21} = 2$, so b) 5.
Question: what are the dimensions of L: 3 × 4 or 4 × 3?
Answer: 4 × 3.
Note that in all cases elements are indexed row first, then column.

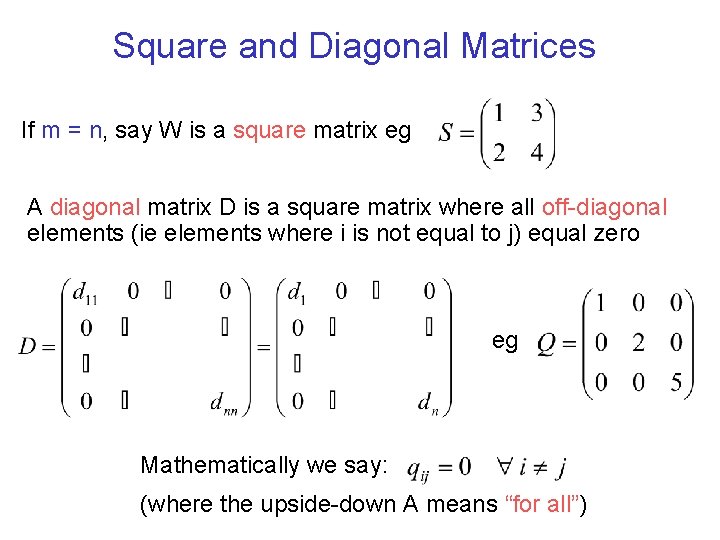

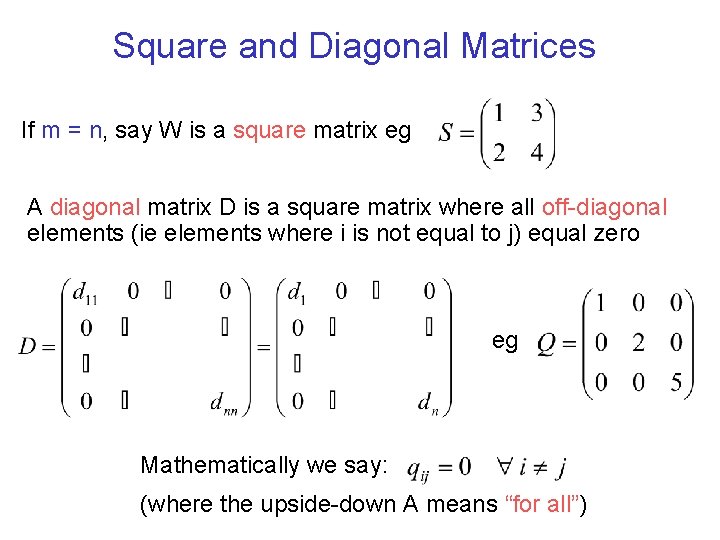

Square and Diagonal Matrices
If m = n, we say W is a square matrix.
A diagonal matrix D is a square matrix where all off-diagonal elements (i.e. elements where i is not equal to j) equal zero. Mathematically we say:
$d_{ij} = 0 \quad \forall\, i \neq j$
(where the upside-down A, $\forall$, means “for all”).

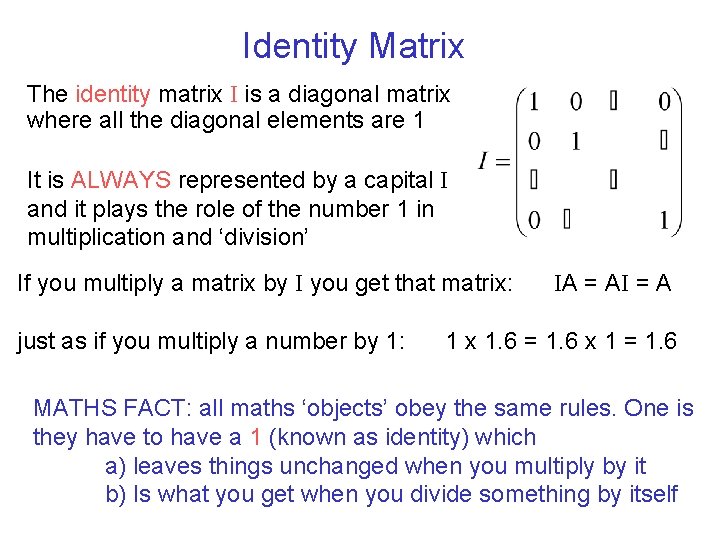

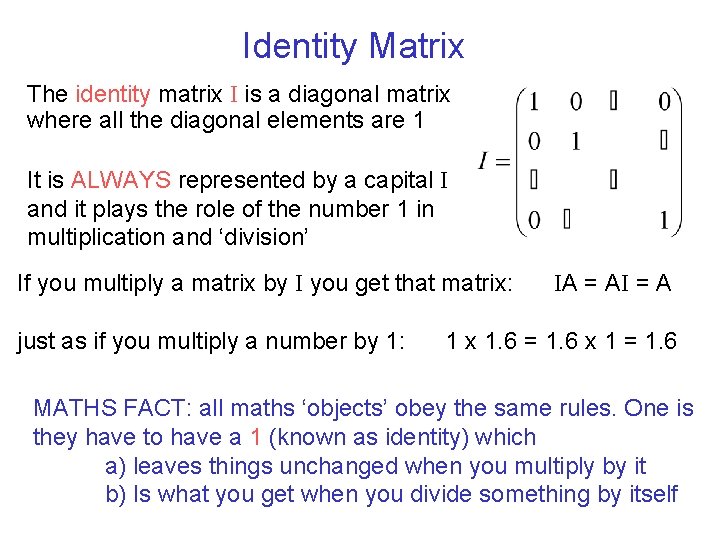
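The definitions above translate directly into checks. A minimal plain-Python sketch, assuming list-of-rows matrices; `is_square`, `is_diagonal` and the example matrices are hypothetical.

```python
def is_square(M):
    """True if M has as many columns as rows (m = n)."""
    return all(len(row) == len(M) for row in M)

def is_diagonal(M):
    """True if M is square and d_ij = 0 for all i != j."""
    n = len(M)
    return is_square(M) and all(
        M[i][j] == 0 for i in range(n) for j in range(n) if i != j
    )

D = [[3, 0, 0],
     [0, -1, 0],
     [0, 0, 2]]
S = [[1, 2],
     [3, 4]]
R = [[1, 0, 0],
     [0, 1, 0]]   # 2 x 3: not square, so not diagonal either
print(is_square(S), is_diagonal(D), is_diagonal(S), is_diagonal(R))  # True True False False
```

Note that the diagonal elements themselves may be anything (including zero); only the off-diagonal ones are checked.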

Identity Matrix
The identity matrix I is a diagonal matrix whose diagonal elements are all 1. It is ALWAYS represented by a capital I, and it plays the role of the number 1 in multiplication and ‘division’.
If you multiply a matrix by I you get that matrix back, just as multiplying a number by 1 leaves it unchanged:
$IA = AI = A$, just as $1 \times 1.6 = 1.6 \times 1 = 1.6$
MATHS FACT: all maths ‘objects’ obey the same rules. One is that they have to have a 1 (known as the identity) which a) leaves things unchanged when you multiply by it, and b) is what you get when you divide something by itself.

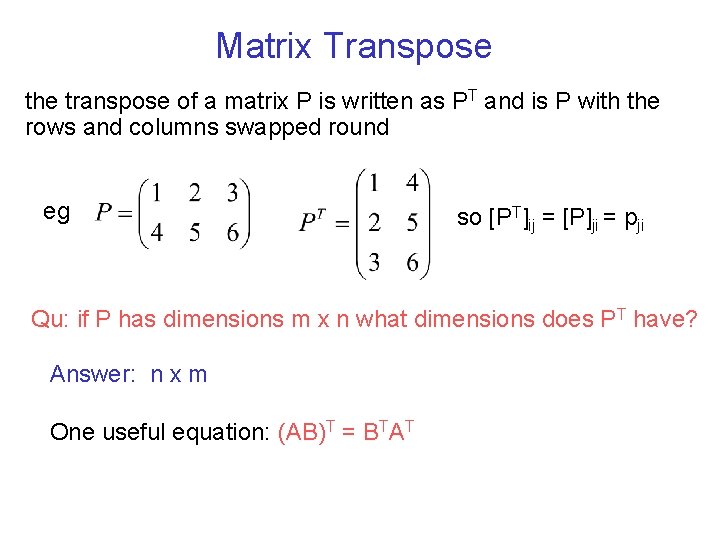

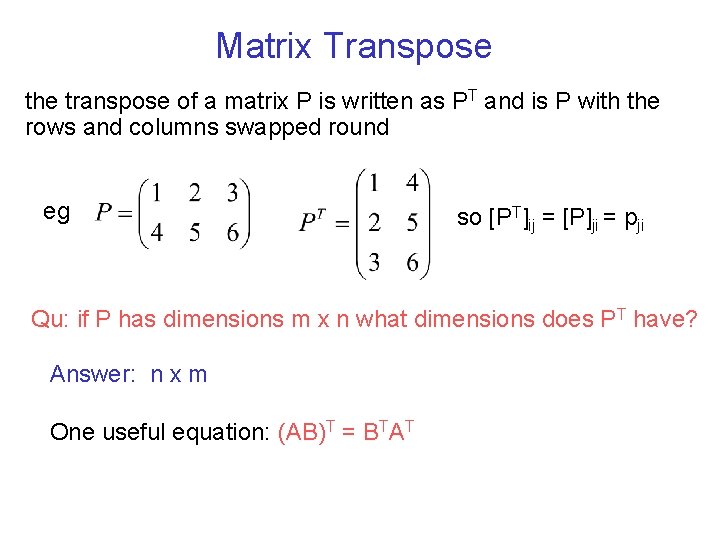
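A quick numerical check of $IA = AI = A$, as a plain-Python sketch; `identity`, `matmul` and the matrix A are hypothetical names and values chosen for illustration.

```python
def identity(n):
    """n x n identity: 1 on the diagonal, 0 everywhere else."""
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def matmul(A, B):
    """Matrix product AB for list-of-rows matrices (inner dimensions must match)."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[2, 5],
     [1, 3]]
I = identity(2)
print(matmul(I, A) == A, matmul(A, I) == A)  # True True: IA = AI = A
```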

Matrix Transpose
The transpose of a matrix P is written $P^T$ and is P with the rows and columns swapped round, so $[P^T]_{ij} = [P]_{ji} = p_{ji}$.
Question: if P has dimensions m × n, what dimensions does $P^T$ have? Answer: n × m.
One useful equation: $(AB)^T = B^T A^T$.

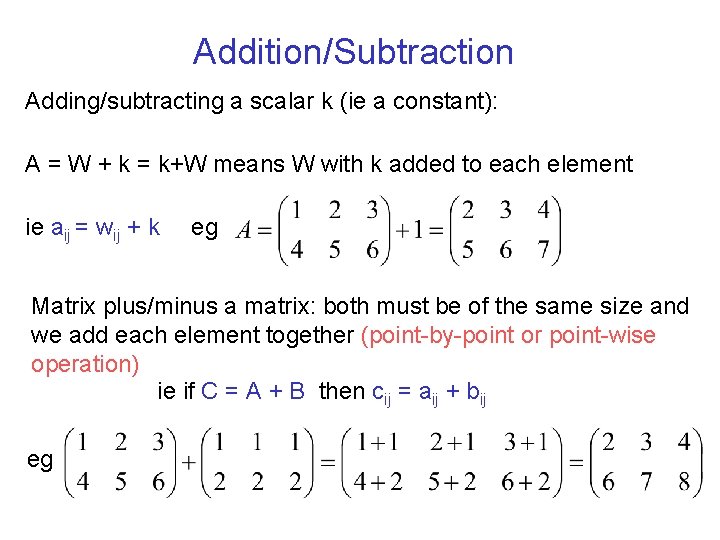

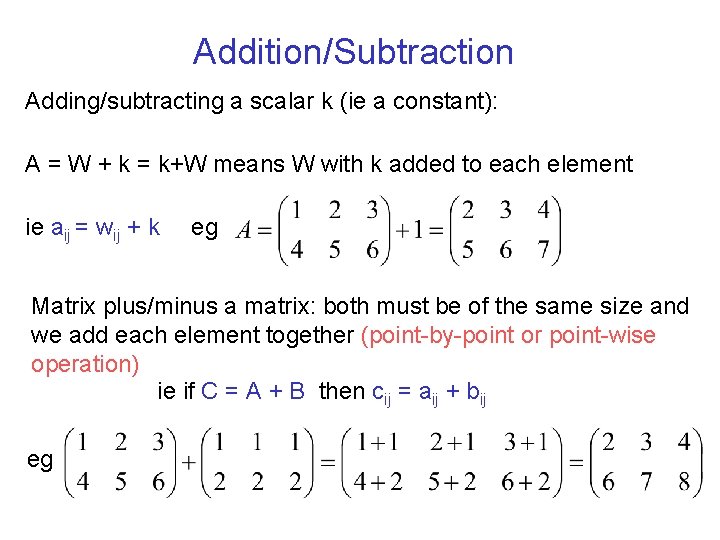
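A plain-Python sketch of the transpose, plus a check of $(AB)^T = B^T A^T$ on one made-up pair of matrices (a spot check, not a proof). `transpose` and `matmul` are hypothetical helper names.

```python
def transpose(P):
    """[P^T]_ij = p_ji: the rows of P become the columns of P^T."""
    return [list(col) for col in zip(*P)]

def matmul(A, B):
    """Matrix product AB for list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

P = [[1, 2, 3],
     [4, 5, 6]]        # 2 x 3
PT = transpose(P)      # 3 x 2
print(PT)              # [[1, 4], [2, 5], [3, 6]]

A = [[1, 2], [3, 4]]
B = [[0, 1], [2, 3]]
# (AB)^T equals B^T A^T: note the order is reversed
print(transpose(matmul(A, B)) == matmul(transpose(B), transpose(A)))  # True
```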

Addition/Subtraction
Adding/subtracting a scalar k (i.e. a constant): $A = W + k = k + W$ means W with k added to each element, i.e. $a_{ij} = w_{ij} + k$.
Matrix plus/minus a matrix: both must be the same size, and we add (or subtract) corresponding elements (a point-by-point, or point-wise, operation), i.e. if $C = A + B$ then $c_{ij} = a_{ij} + b_{ij}$.

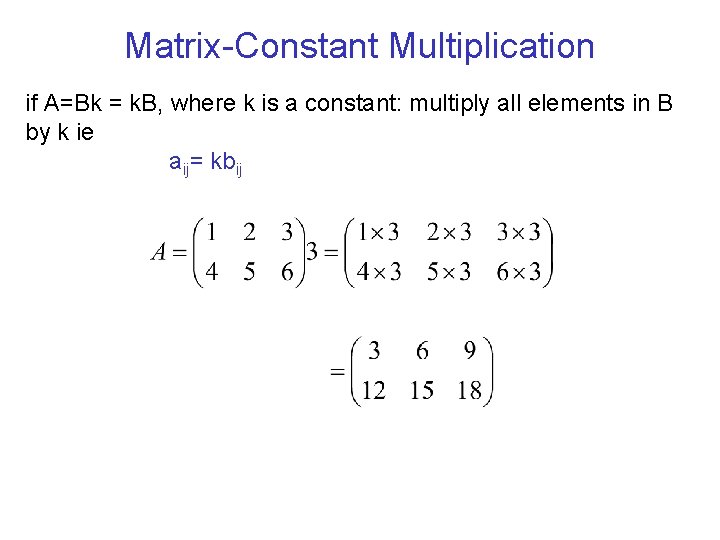

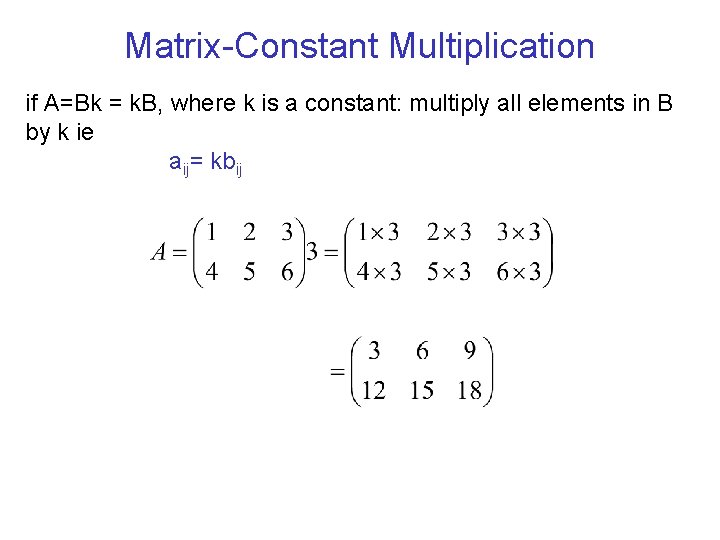

Matrix-Constant Multiplication
If $A = Bk = kB$, where k is a constant, multiply all elements of B by k, i.e. $a_{ij} = k\,b_{ij}$.

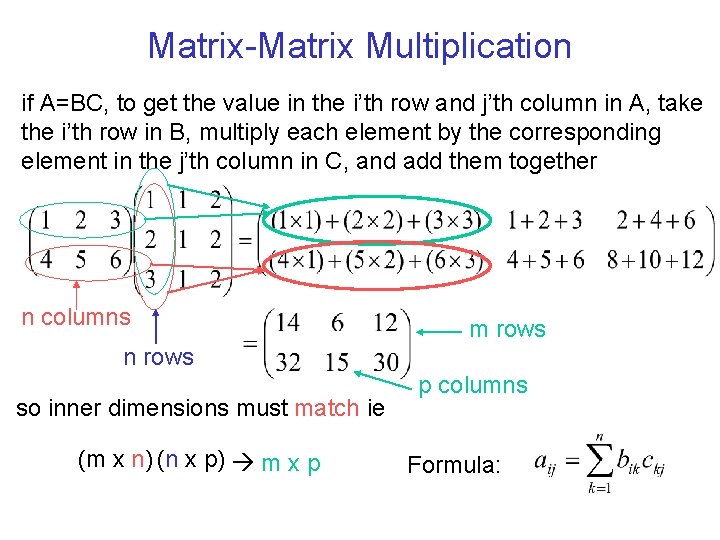

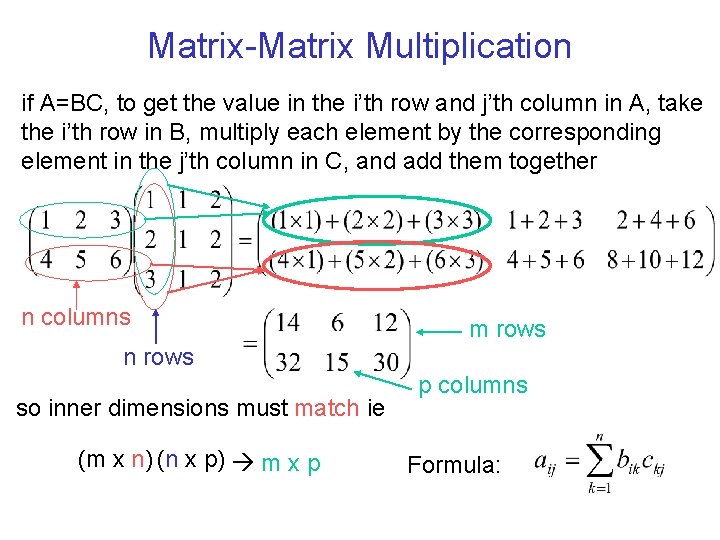
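The point-wise operations above (scalar addition, matrix addition, multiplication by a constant) in a minimal plain-Python sketch; the helper names and example values are hypothetical.

```python
def add_scalar(W, k):
    """a_ij = w_ij + k"""
    return [[w + k for w in row] for row in W]

def add(A, B):
    """c_ij = a_ij + b_ij; A and B must be the same size."""
    if len(A) != len(B) or any(len(r) != len(s) for r, s in zip(A, B)):
        raise ValueError("matrices must have the same dimensions")
    return [[a + b for a, b in zip(r, s)] for r, s in zip(A, B)]

def scale(B, k):
    """a_ij = k * b_ij"""
    return [[k * b for b in row] for row in B]

A = [[1, 2], [3, 4]]
B = [[10, 20], [30, 40]]
print(add_scalar(A, 1))         # [[2, 3], [4, 5]]
print(add(A, B))                # [[11, 22], [33, 44]]
print(scale(A, 3))              # [[3, 6], [9, 12]]
print(add(B, scale(A, -1)))     # subtraction B - A: [[9, 18], [27, 36]]
```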

Matrix-Matrix Multiplication
If $A = BC$, to get the value in the i’th row and j’th column of A, take the i’th row of B, multiply each element by the corresponding element in the j’th column of C, and add the products together.
If B has m rows and n columns, C must have n rows (and, say, p columns), so the inner dimensions must match: (m × n)(n × p) gives an m × p result.
Formula: $a_{ij} = \sum_{k=1}^{n} b_{ik} c_{kj}$

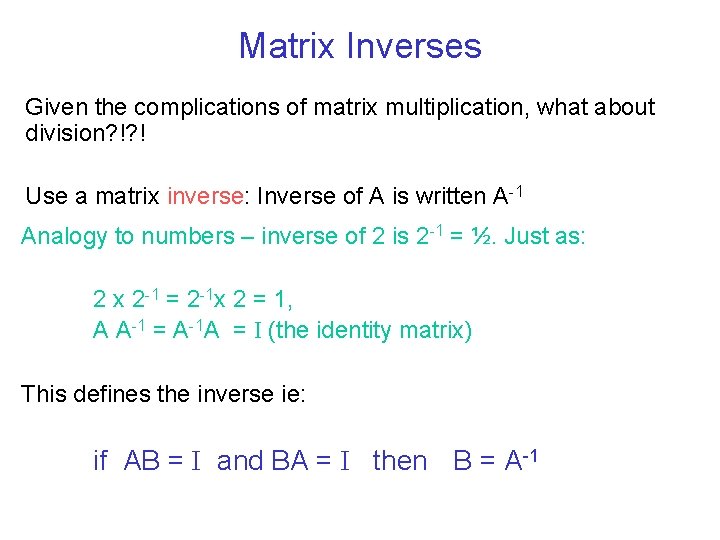

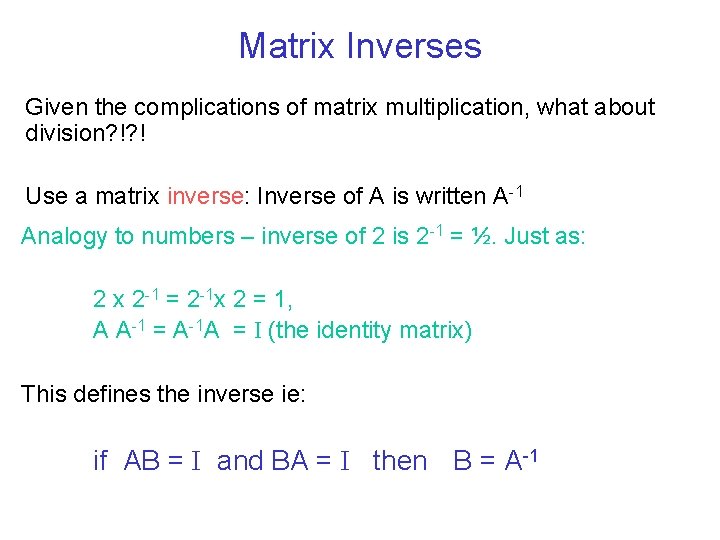
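The formula $a_{ij} = \sum_k b_{ik} c_{kj}$ written out as three explicit loops, as a plain-Python sketch (a library would be used in practice). `matmul` and the example matrices are hypothetical.

```python
def matmul(B, C):
    """A = BC with a_ij = sum_k b_ik * c_kj; (m x n)(n x p) gives m x p."""
    m, n = len(B), len(B[0])
    if len(C) != n:
        raise ValueError("inner dimensions must match")
    p = len(C[0])
    A = [[0] * p for _ in range(m)]
    for i in range(m):              # i'th row of B
        for j in range(p):          # j'th column of C
            for k in range(n):      # multiply corresponding elements and add
                A[i][j] += B[i][k] * C[k][j]
    return A

B = [[1, 2, 3],
     [4, 5, 6]]        # 2 x 3
C = [[1, 0],
     [0, 1],
     [2, 2]]           # 3 x 2
print(matmul(B, C))    # 2 x 2: [[7, 8], [16, 17]]
print(len(matmul(C, B)), len(matmul(C, B)[0]))  # CB is 3 x 3
```

Swapping the order gives a matrix of a different size altogether, an early hint that matrix multiplication is not commutative.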

Matrix Inverses
Given the complications of matrix multiplication, what about division? Use a matrix inverse: the inverse of A is written $A^{-1}$.
Analogy to numbers: the inverse of 2 is $2^{-1} = ½$. Just as $2 \times 2^{-1} = 2^{-1} \times 2 = 1$, we have $AA^{-1} = A^{-1}A = I$ (the identity matrix).
This defines the inverse, i.e. if $AB = I$ and $BA = I$ then $B = A^{-1}$.

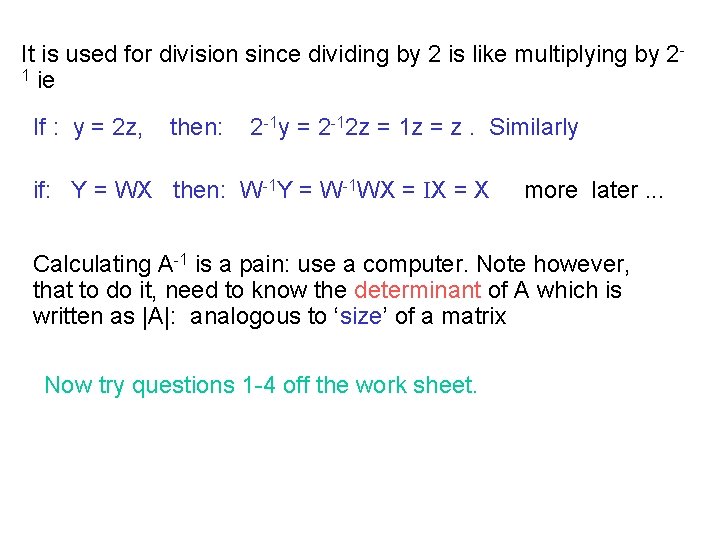

It is used for division, since dividing by 2 is like multiplying by $2^{-1}$, i.e. if $y = 2z$ then $2^{-1}y = 2^{-1}2z = 1z = z$. Similarly, if $Y = WX$ then $W^{-1}Y = W^{-1}WX = IX = X$ (more later…).
Calculating $A^{-1}$ is a pain: use a computer. Note, however, that doing so requires the determinant of A, written $|A|$, which is analogous to the ‘size’ of a matrix.
Now try questions 1-4 on the worksheet.

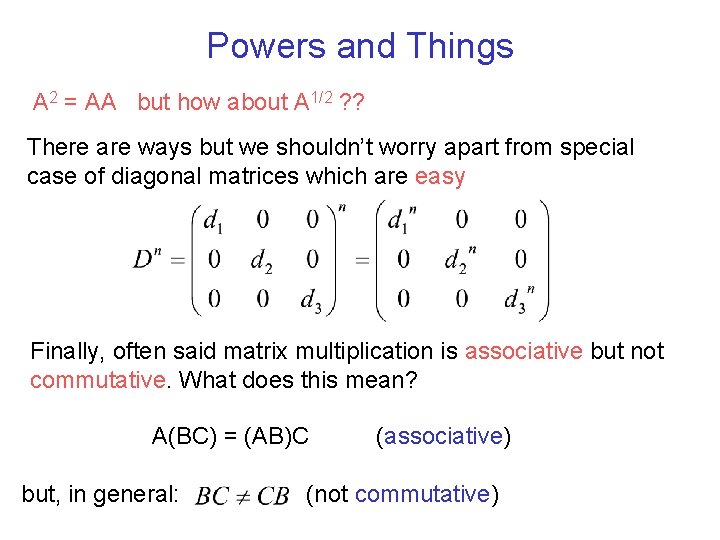

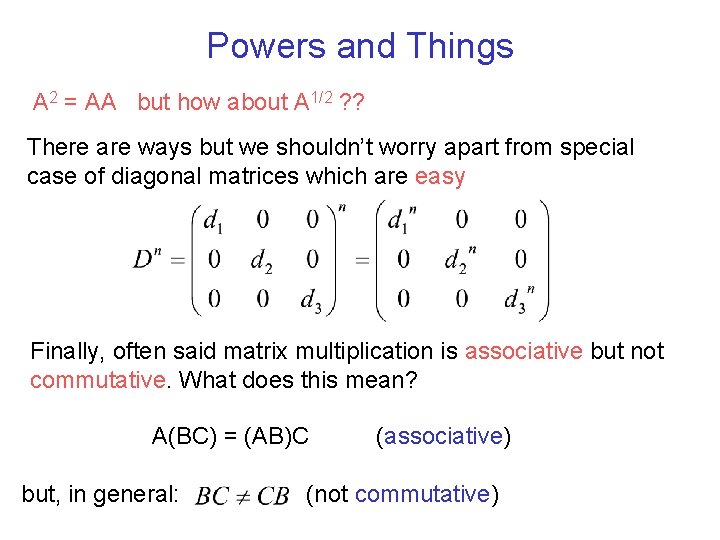
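For the 2 × 2 case only, the inverse has a well-known closed form that shows where the determinant comes in: for $A = \begin{pmatrix} a & b \\ c & d \end{pmatrix}$, $|A| = ad - bc$ and $A^{-1} = \frac{1}{|A|}\begin{pmatrix} d & -b \\ -c & a \end{pmatrix}$. A plain-Python sketch (the helper names and matrix are hypothetical; larger matrices should be left to a library routine):

```python
def inverse_2x2(A):
    """Inverse of a 2 x 2 matrix via its determinant |A| = ad - bc."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular: |A| = 0, so no inverse")
    return [[d / det, -b / det],
            [-c / det, a / det]]

def matmul(A, B):
    """Matrix product AB for list-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]

A = [[4, 7],
     [2, 6]]               # |A| = 24 - 14 = 10
Ainv = inverse_2x2(A)
print(matmul(A, Ainv))     # the identity, up to floating-point rounding
print(matmul(Ainv, A))
```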

Powers and Things
$A^2 = AA$, but how about $A^{1/2}$? There are ways to define it, but we needn’t worry about them, apart from the special case of diagonal matrices, which are easy (for non-negative diagonal elements, take the square root of each diagonal element).
Finally, it is often said that matrix multiplication is associative but not commutative. What does this mean?
$A(BC) = (AB)C$ (associative) but, in general, $AB \neq BA$ (not commutative).

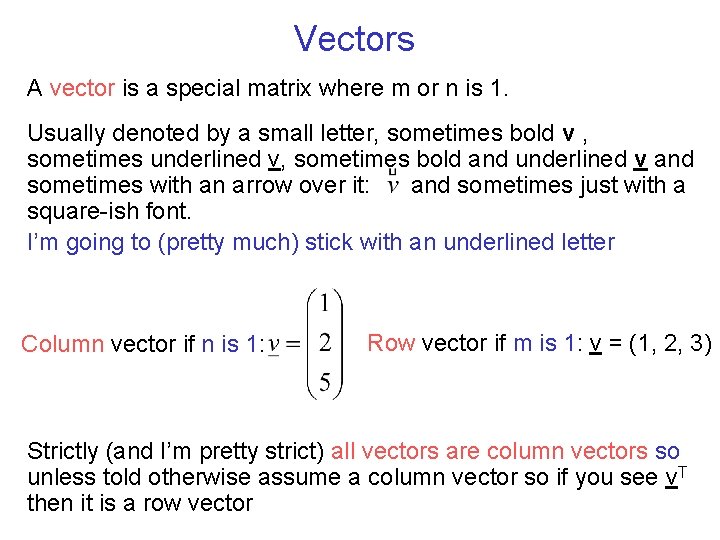

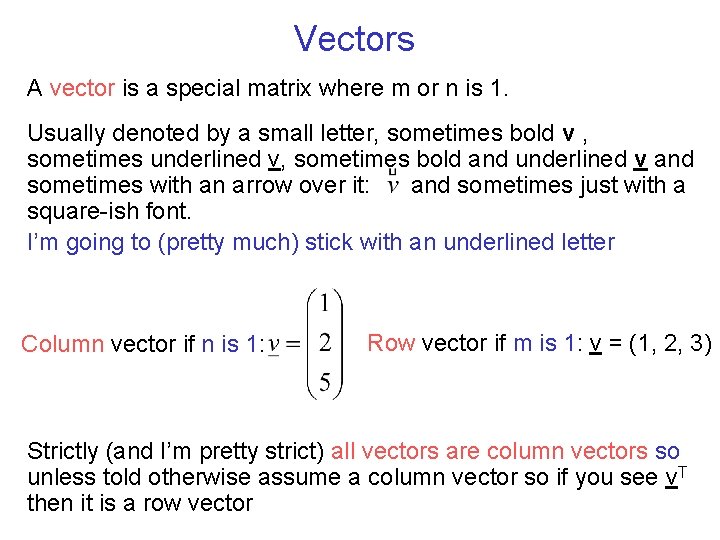
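A plain-Python sketch of both points: powers of a diagonal matrix act element by element on the diagonal, and one made-up pair of matrices with $AB \neq BA$ (while associativity still holds). All names and values here are hypothetical.

```python
def matmul(A, B):
    """Matrix product AB for list-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*B)] for row in A]

def diag_power(D, p):
    """D^p for a diagonal D: raise each diagonal element to the power p."""
    n = len(D)
    return [[D[i][i] ** p if i == j else 0 for j in range(n)] for i in range(n)]

D = [[4, 0],
     [0, 9]]
R = diag_power(D, 0.5)             # a square root of D
print(R)                           # [[2.0, 0], [0, 3.0]]
print(matmul(R, R) == [[4, 0], [0, 9]])  # True: R R = D

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[2, 0], [1, 1]]
print(matmul(A, B))  # [[2, 1], [4, 3]]
print(matmul(B, A))  # [[3, 4], [1, 2]]: AB != BA (not commutative)
print(matmul(A, matmul(B, C)) == matmul(matmul(A, B), C))  # True (associative)
```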

Vectors
A vector is a special matrix where m or n is 1. It is usually denoted by a small letter: sometimes bold, sometimes underlined, sometimes bold and underlined, sometimes with an arrow over it ($\vec{v}$), and sometimes just in a square-ish font. I’m going to (pretty much) stick with an underlined letter.
Column vector if n is 1; row vector if m is 1, e.g. $v = (1, 2, 3)$.
Strictly (and I’m pretty strict), all vectors are column vectors, so unless told otherwise assume a column vector; if you see $v^T$, it is a row vector.

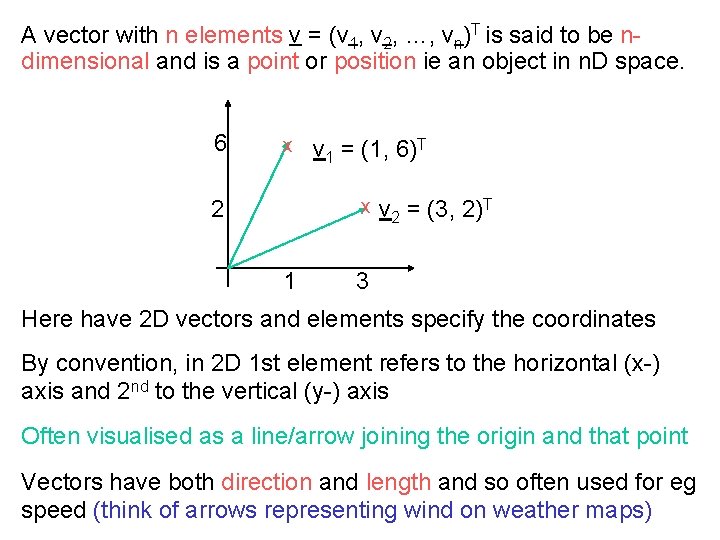

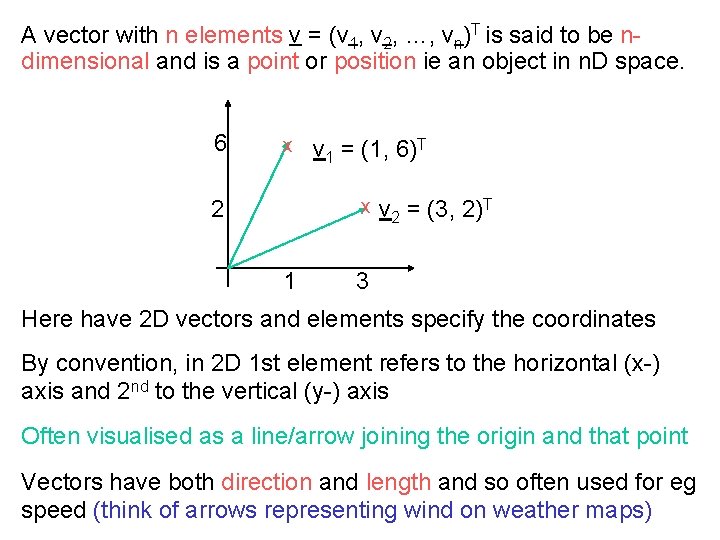

A vector with n elements, $v = (v_1, v_2, \ldots, v_n)^T$, is said to be n-dimensional and is a point or position, i.e. an object in n-D space.
[Figure: the 2D vectors $v_1 = (1, 6)^T$ and $v_2 = (3, 2)^T$ drawn as arrows from the origin]
Here we have 2D vectors, and the elements specify the coordinates. By convention, in 2D the 1st element refers to the horizontal (x-) axis and the 2nd to the vertical (y-) axis.
A vector is often visualised as a line/arrow joining the origin to that point. Vectors have both direction and length, so they are often used for e.g. velocity (think of the arrows representing wind on weather maps).

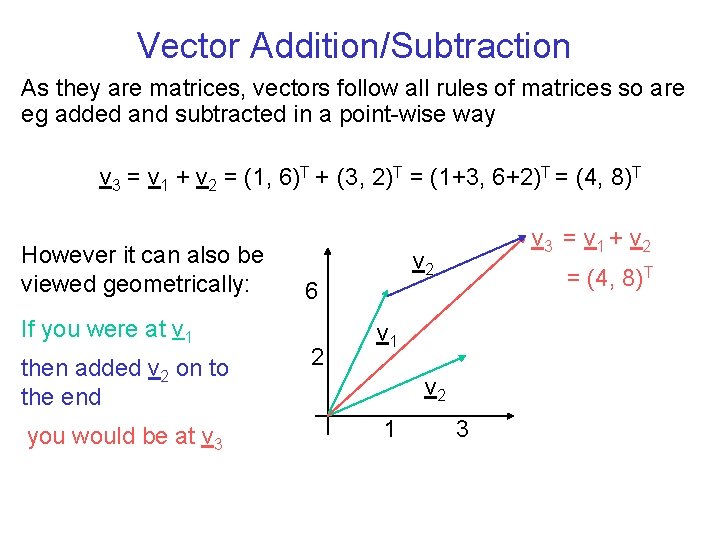

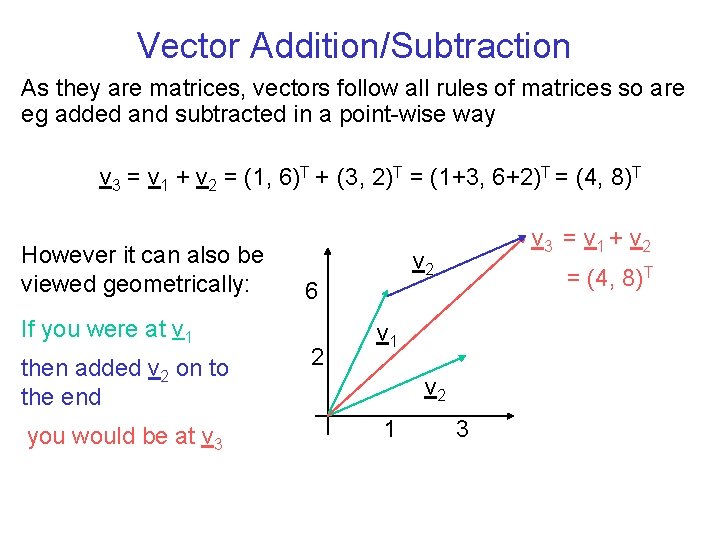

Vector Addition/Subtraction
As vectors are matrices, they follow all the rules of matrices, so e.g. they are added and subtracted point-wise:
$v_3 = v_1 + v_2 = (1, 6)^T + (3, 2)^T = (1+3, 6+2)^T = (4, 8)^T$
However, addition can also be viewed geometrically: if you were at $v_1$ and then added $v_2$ on to the end, you would be at $v_3$.
[Figure: $v_2$ drawn from the tip of $v_1$, ending at $v_3 = v_1 + v_2 = (4, 8)^T$]

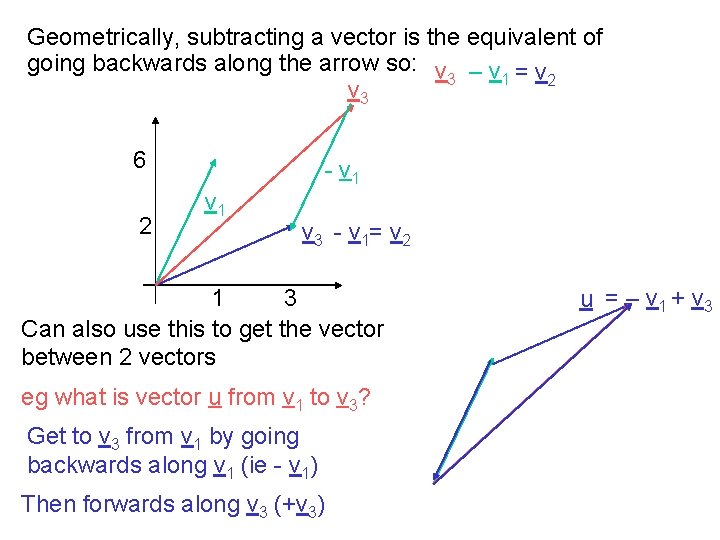

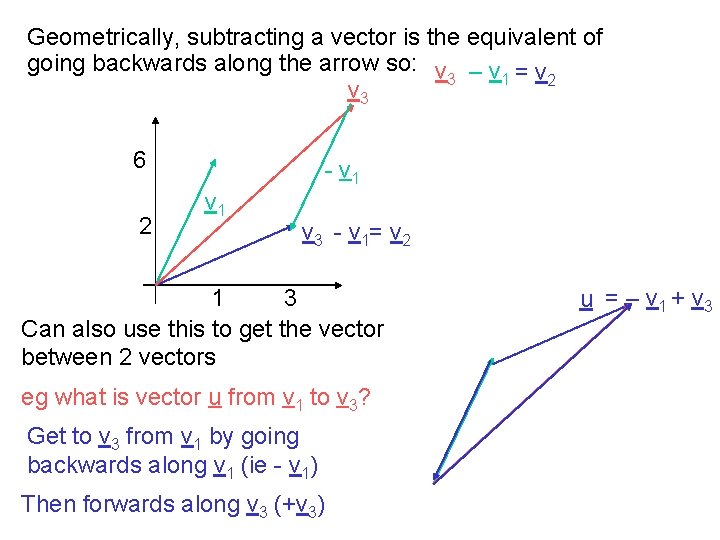

Geometrically, subtracting a vector is equivalent to going backwards along its arrow, so $v_3 - v_1 = v_2$.
[Figure: $-v_1$ drawn from the tip of $v_3$, ending at $v_3 - v_1 = v_2$]
We can also use this to get the vector between 2 vectors, e.g. what is the vector u from $v_1$ to $v_3$? Get to $v_3$ from $v_1$ by going backwards along $v_1$ (i.e. $-v_1$), then forwards along $v_3$ ($+v_3$):
$u = -v_1 + v_3$

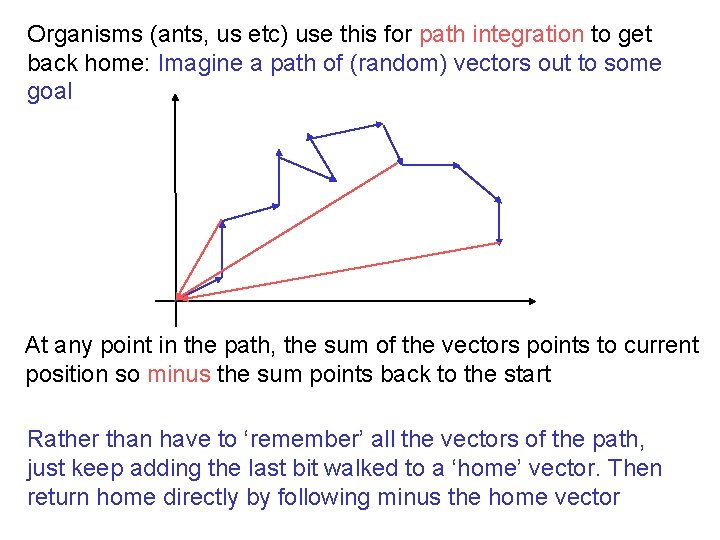

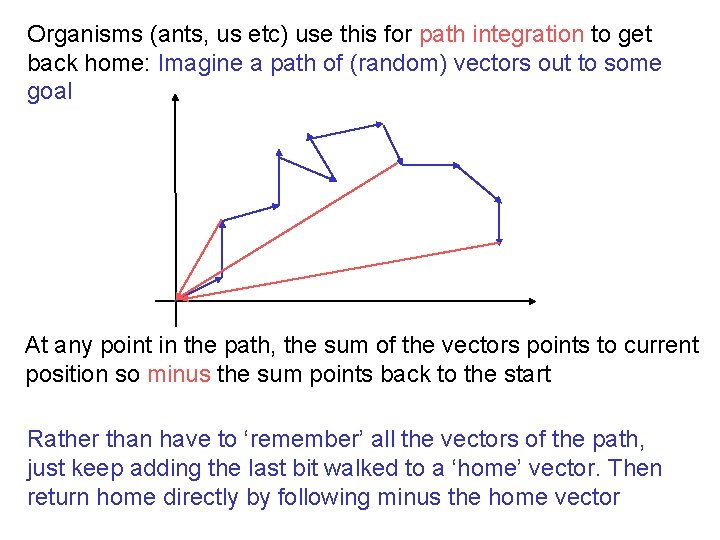
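The worked addition and subtraction above, reproduced in a plain-Python sketch with vectors as lists; `vadd` and `vsub` are hypothetical helper names.

```python
def vadd(u, v):
    """Point-wise addition of two vectors with the same number of elements."""
    return [a + b for a, b in zip(u, v)]

def vsub(u, v):
    """Point-wise subtraction u - v."""
    return [a - b for a, b in zip(u, v)]

v1 = [1, 6]
v2 = [3, 2]
v3 = vadd(v1, v2)
print(v3)            # [4, 8]
u = vsub(v3, v1)     # vector from v1 to v3: -v1 + v3
print(u)             # [3, 2], which is v2
```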

Organisms (ants, us, etc.) use this for path integration to get back home. Imagine a path of (random) vectors out to some goal. At any point on the path, the sum of the vectors points to the current position, so minus the sum points back to the start.
Rather than having to ‘remember’ all the vectors of the path, just keep adding the last bit walked to a ‘home’ vector. Then return home directly by following minus the home vector.

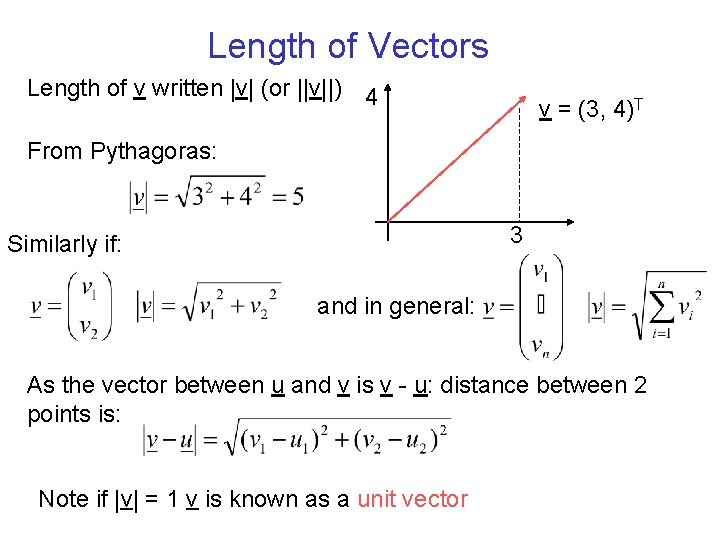

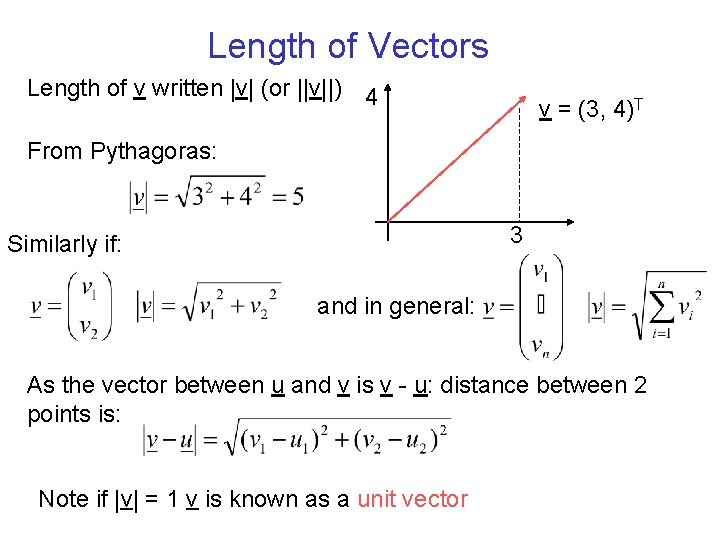
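A minimal simulation sketch of path integration under made-up assumptions: 20 random 2D steps (seeded so the run is repeatable) and a walker that keeps only a running home vector. The list `steps` exists only to generate the path; the loop never looks back at earlier steps.

```python
import random

random.seed(1)  # repeatable made-up path
steps = [(random.uniform(-1, 1), random.uniform(-1, 1)) for _ in range(20)]

# Path integration: keep ONE running 'home' vector and add each bit walked to it
home = [0.0, 0.0]
for dx, dy in steps:
    home = [home[0] + dx, home[1] + dy]  # = sum of steps so far = current position

way_back = [-home[0], -home[1]]          # minus the home vector points to the start
end = [home[0] + way_back[0], home[1] + way_back[1]]
print(end)                               # [0.0, 0.0]: straight back home
```

The memory cost is one vector however long the path is, which is the point of the strategy.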

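Length of Vectors
The length of v is written $|v|$ (or $\|v\|$). E.g. for $v = (3, 4)^T$, from Pythagoras: $|v| = \sqrt{3^2 + 4^2} = 5$.
Similarly in higher dimensions, and in general, for $v = (v_1, \ldots, v_n)^T$: $|v| = \sqrt{v_1^2 + v_2^2 + \cdots + v_n^2} = \sqrt{\sum_{i=1}^{n} v_i^2}$
As the vector between u and v is $v - u$, the distance between 2 points is: $|v - u| = \sqrt{\sum_{i=1}^{n} (v_i - u_i)^2}$
Note: if $|v| = 1$, v is known as a unit vector.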

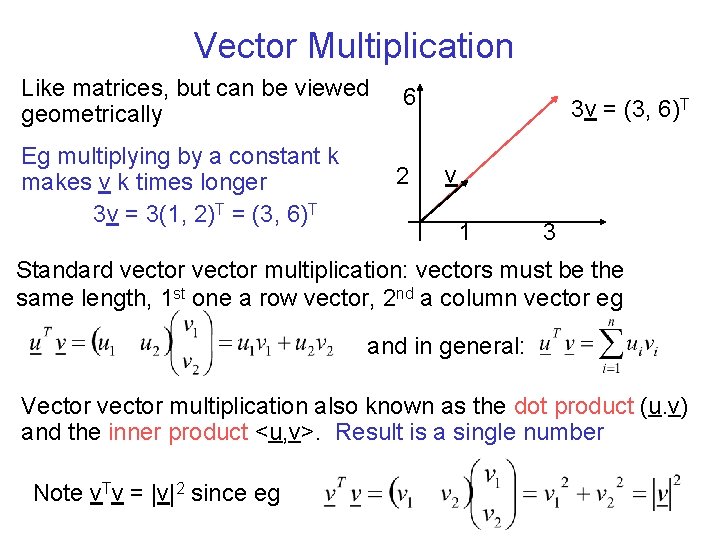

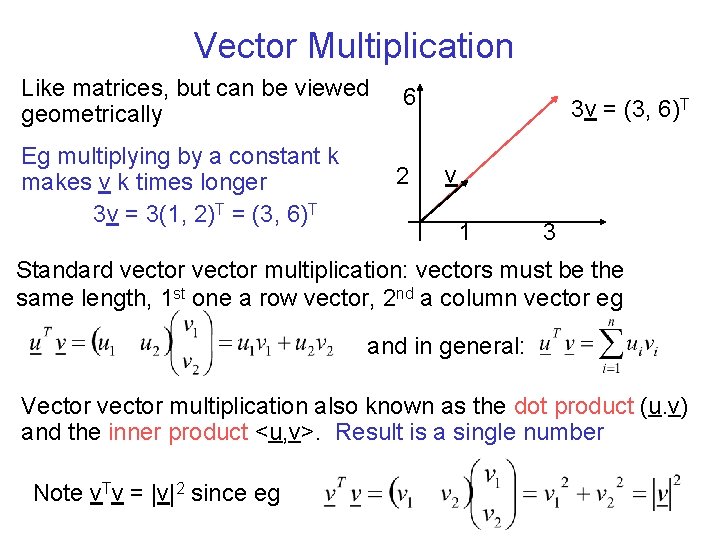
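The length, distance and unit-vector formulas as a plain-Python sketch; `length`, `distance` and `unit` are hypothetical helper names.

```python
import math

def length(v):
    """|v| = sqrt(sum of squared elements): Pythagoras in n dimensions."""
    return math.sqrt(sum(x * x for x in v))

def distance(u, v):
    """Distance between points u and v is the length of v - u."""
    return length([b - a for a, b in zip(u, v)])

def unit(v):
    """Scale a non-zero v to length 1."""
    n = length(v)
    return [x / n for x in v]

print(length([3, 4]))            # 5.0
print(distance([1, 6], [4, 8]))  # sqrt(3^2 + 2^2), about 3.606
print(length(unit([3, 4])))      # 1.0, up to rounding
```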

Vector Multiplication
This works like matrix multiplication, but can also be viewed geometrically. E.g. multiplying by a constant k > 0 makes v k times longer: $3v = 3(1, 2)^T = (3, 6)^T$.
[Figure: $v = (1, 2)^T$ and $3v = (3, 6)^T$ pointing in the same direction]
Standard vector multiplication: the vectors must have the same number of elements, the 1st a row vector and the 2nd a column vector, and in general: $u^T v = \sum_{i=1}^{n} u_i v_i$
Vector-vector multiplication is also known as the dot product ($u \cdot v$) and the inner product ($\langle u, v \rangle$). The result is a single number.
Note that $v^T v = |v|^2$, since $v^T v = \sum_{i=1}^{n} v_i^2$.

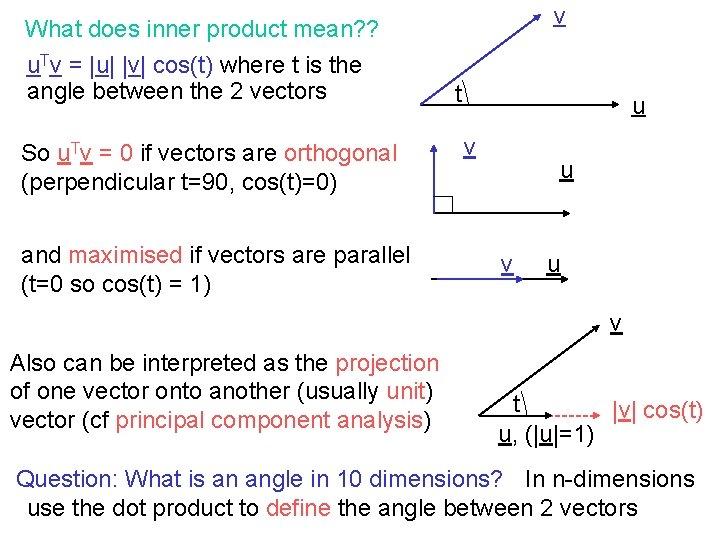

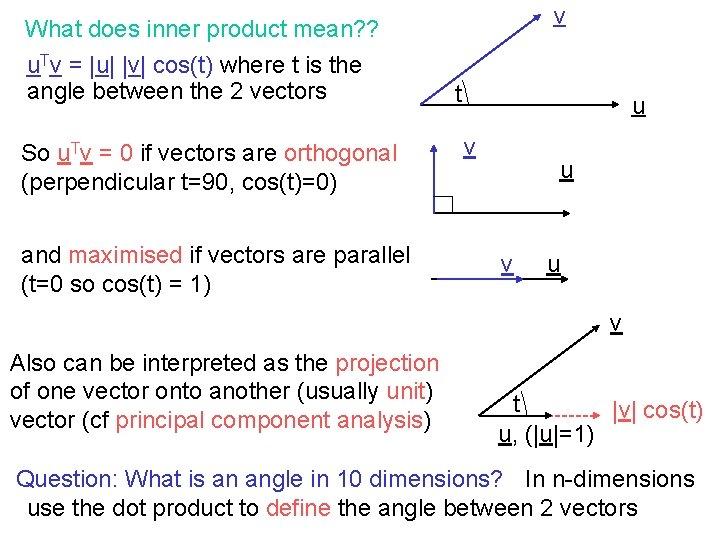
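The dot product $u^T v = \sum_i u_i v_i$ and the identity $v^T v = |v|^2$, as a plain-Python sketch; `dot` is a hypothetical helper name.

```python
import math

def dot(u, v):
    """u^T v = sum_i u_i v_i; the vectors must have the same number of elements."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same number of elements")
    return sum(a * b for a, b in zip(u, v))

v = [1, 2]
print([3 * x for x in v])           # 3v = [3, 6]
print(dot([1, 2, 3], [4, 5, 6]))    # 1*4 + 2*5 + 3*6 = 32
print(dot(v, v), math.sqrt(dot(v, v)))  # v^T v = |v|^2 = 5, so |v| = sqrt(5)
```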

What does the inner product mean?
$u^T v = |u|\,|v| \cos(t)$, where t is the angle between the 2 vectors. So $u^T v = 0$ if the vectors are orthogonal (perpendicular: t = 90°, cos(t) = 0) and, for fixed lengths, it is maximised if the vectors are parallel (t = 0, so cos(t) = 1).
[Figure: vectors u and v separated by angle t]
It can also be interpreted as the projection of one vector onto another (usually unit) vector (cf. principal component analysis): if $|u| = 1$, $u^T v = |v| \cos(t)$.
[Figure: projection of v onto a unit vector u, of length $|v| \cos(t)$]
Question: what is an angle in 10 dimensions? In n dimensions, we use the dot product to define the angle between 2 vectors: $\cos(t) = \dfrac{u^T v}{|u|\,|v|}$

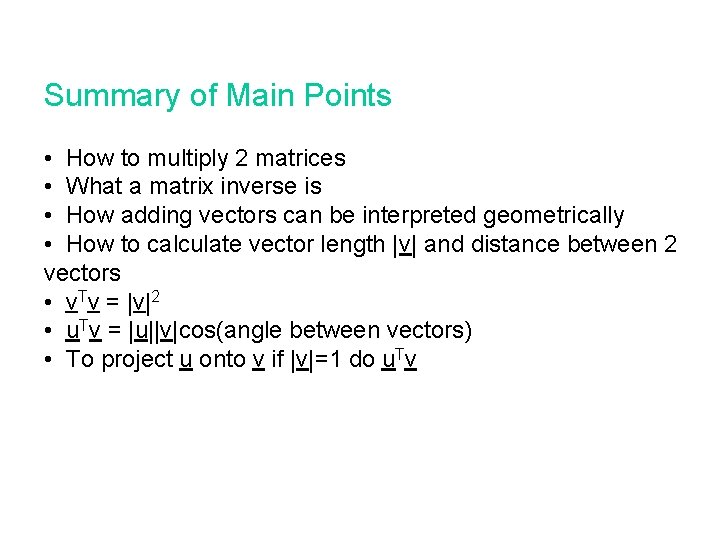
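A plain-Python sketch that uses the dot product to define the angle in any number of dimensions, and to project onto a unit vector. The helper names and example vectors are hypothetical; the clamp guards against rounding pushing cos(t) just outside [-1, 1].

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def length(v):
    return math.sqrt(dot(v, v))

def angle_deg(u, v):
    """Angle t from cos(t) = u^T v / (|u||v|), in any number of dimensions."""
    c = dot(u, v) / (length(u) * length(v))
    c = max(-1.0, min(1.0, c))  # guard against rounding just outside [-1, 1]
    return math.degrees(math.acos(c))

print(angle_deg([1, 0], [0, 1]))   # 90 degrees: orthogonal, dot product 0
print(angle_deg([1, 2], [2, 4]))   # about 0: parallel
u = [1, 0]                         # unit vector along the x-axis
v = [3, 4]
print(dot(u, v))                   # 3 = |v| cos(t): projection of v onto u
x = [1] * 10
y = [1] * 5 + [0] * 5
print(angle_deg(x, y))             # about 45 degrees, in 10 dimensions
```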

Summary of Main Points
• How to multiply 2 matrices
• What a matrix inverse is
• How adding vectors can be interpreted geometrically
• How to calculate vector length $|v|$ and the distance between 2 vectors
• $v^T v = |v|^2$
• $u^T v = |u|\,|v| \cos(\text{angle between vectors})$
• To project u onto v, if $|v| = 1$, compute $u^T v$

Formal Computational Skills Matrices 2

Yesterday’s Topics: Matrix/Vector Basics
• Matrix definitions (square matrix, identity, etc.)
• Matrix addition/subtraction/multiplication
• Matrix inverse
• Vector definitions
• Vectors as geometric objects

Today’s Topics: Uses of Matrices
• Matrices as sets of equations
• Networks written as matrices
• Solving sets of linear equations
• (Briefly) Matrix operations as transformations
• (Briefly) Eigenvectors and eigenvalues of a matrix

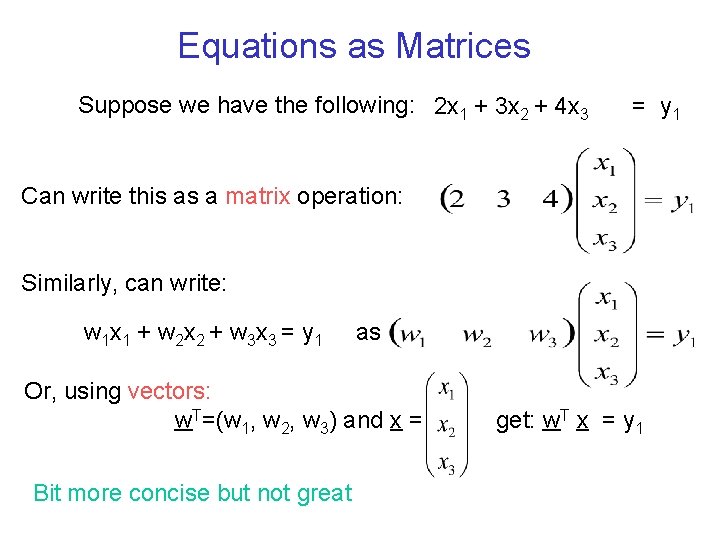

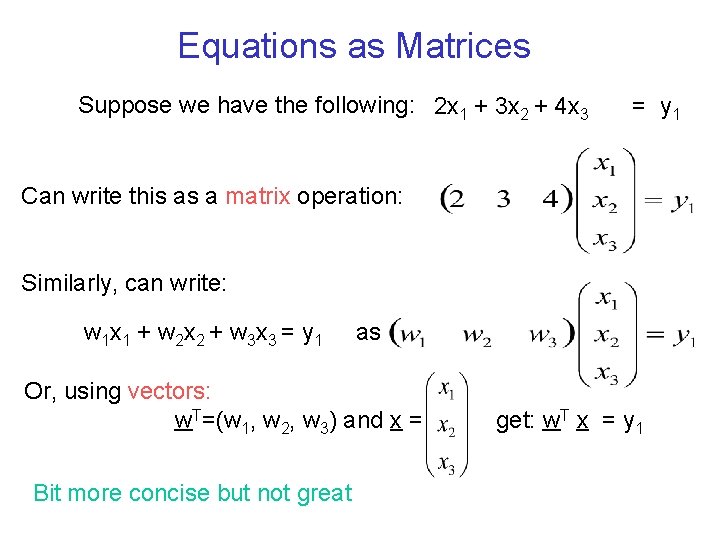

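Equations as Matrices
Suppose we have the following: $2x_1 + 3x_2 + 4x_3 = y_1$
We can write this as a matrix operation: $\begin{pmatrix} 2 & 3 & 4 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = y_1$
Similarly, we can write $w_1 x_1 + w_2 x_2 + w_3 x_3 = y_1$ as $\begin{pmatrix} w_1 & w_2 & w_3 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = y_1$
Or, using vectors $w^T = (w_1, w_2, w_3)$ and $x = (x_1, x_2, x_3)^T$, we get $w^T x = y_1$: a bit more concise, but not a big improvement yet.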

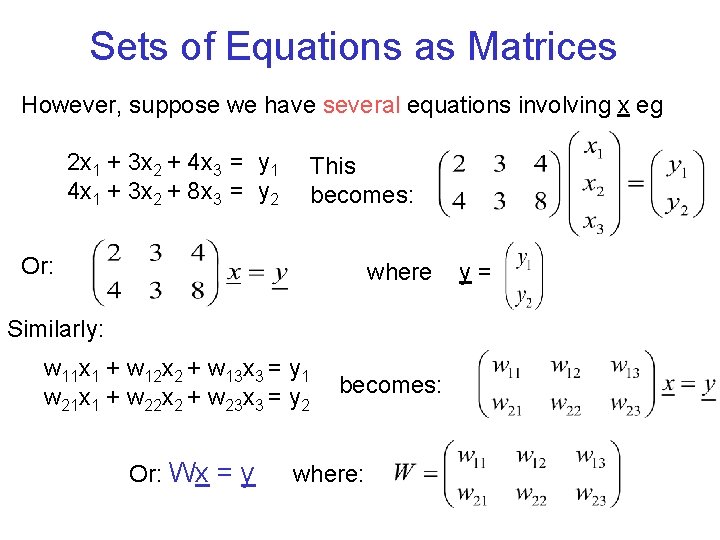

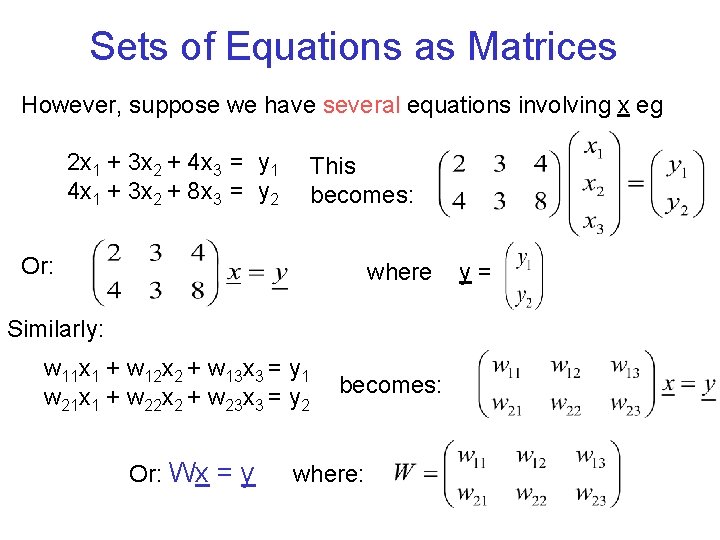

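Sets of Equations as Matrices
However, suppose we have several equations involving x, e.g.
$2x_1 + 3x_2 + 4x_3 = y_1$
$4x_1 + 3x_2 + 8x_3 = y_2$
This becomes $\begin{pmatrix} 2 & 3 & 4 \\ 4 & 3 & 8 \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} y_1 \\ y_2 \end{pmatrix}$, or $Wx = y$, where $W = \begin{pmatrix} 2 & 3 & 4 \\ 4 & 3 & 8 \end{pmatrix}$.
Similarly:
$w_{11} x_1 + w_{12} x_2 + w_{13} x_3 = y_1$
$w_{21} x_1 + w_{22} x_2 + w_{23} x_3 = y_2$
becomes $Wx = y$, where $W = \begin{pmatrix} w_{11} & w_{12} & w_{13} \\ w_{21} & w_{22} & w_{23} \end{pmatrix}$ and $y = \begin{pmatrix} y_1 \\ y_2 \end{pmatrix}$.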

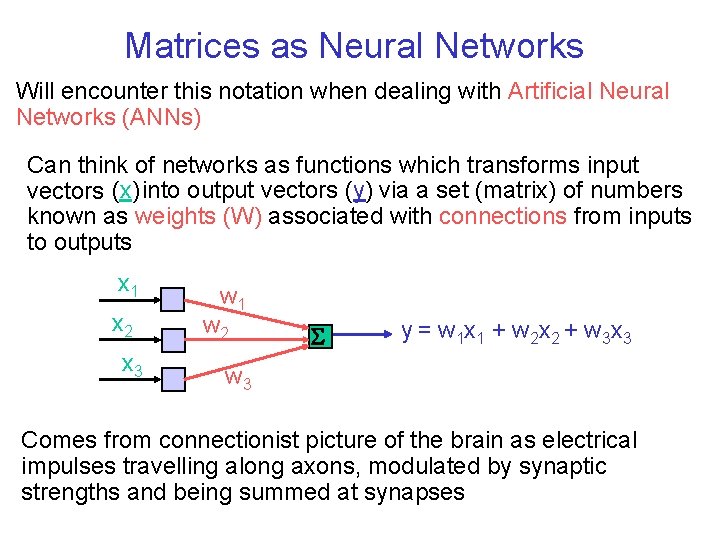

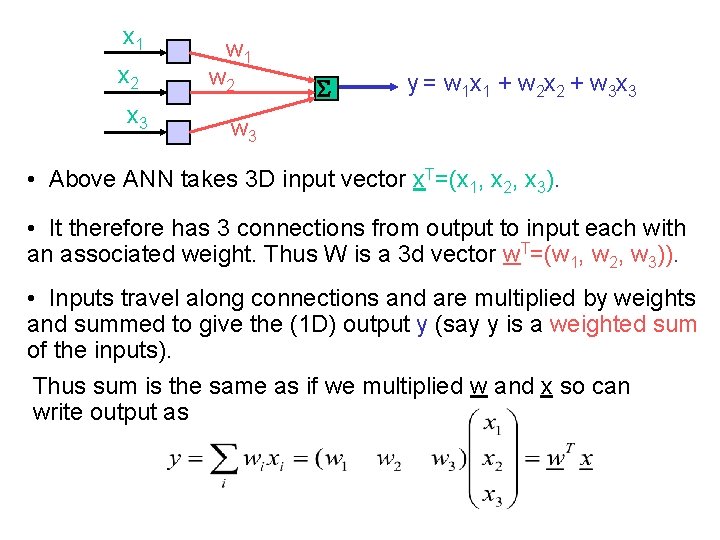

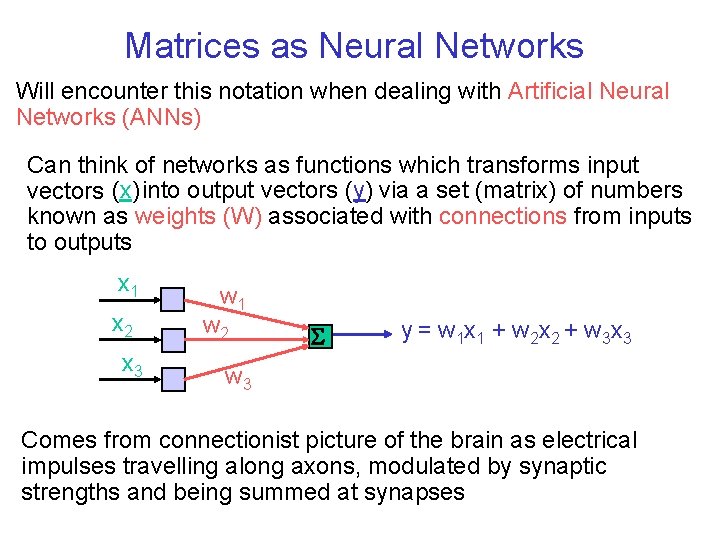
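Evaluating the left-hand sides of the two equations above as a single matrix-vector product, in plain Python. The values chosen for $x_1, x_2, x_3$ are hypothetical, and so is the helper name `matvec`.

```python
def matvec(W, x):
    """y = Wx: each y_i is row i of W multiplied by x."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

W = [[2, 3, 4],
     [4, 3, 8]]
x = [1, 1, 1]                   # made-up values for x1, x2, x3
print(matvec(W, x))             # [9, 15]: y1 = 2+3+4, y2 = 4+3+8
print(matvec([[2, 3, 4]], x))   # a single equation, w^T x = y1: [9]
```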

Matrices as Neural Networks
You will encounter this notation when dealing with Artificial Neural Networks (ANNs). We can think of networks as functions which transform input vectors (x) into output vectors (y) via a set (matrix) of numbers known as weights (W), associated with the connections from inputs to outputs.
[Figure: inputs $x_1, x_2, x_3$ connected via weights $w_1, w_2, w_3$ to a summing unit Σ, giving $y = w_1 x_1 + w_2 x_2 + w_3 x_3$]
This comes from the connectionist picture of the brain: electrical impulses travel along axons, are modulated by synaptic strengths, and are summed by the receiving neuron.

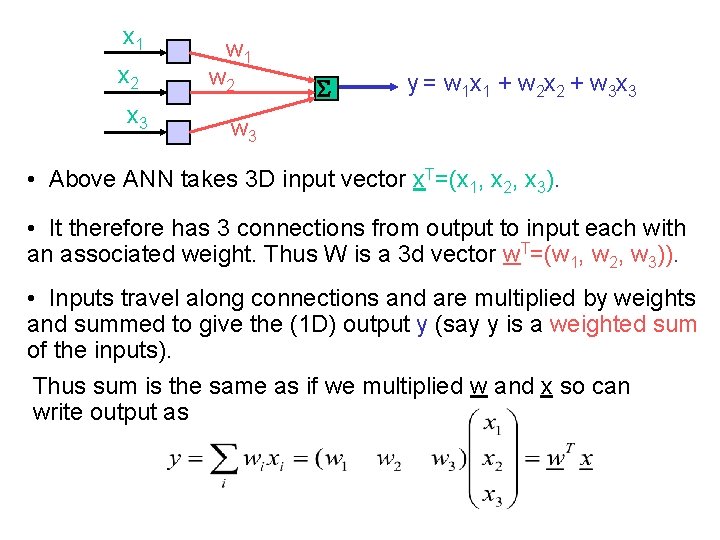

• The ANN above takes a 3D input vector, $x^T = (x_1, x_2, x_3)$.
• It therefore has 3 connections from input to output, each with an associated weight. Thus the weights form a 3D vector, $w^T = (w_1, w_2, w_3)$.
• Inputs travel along the connections, are multiplied by the weights and are summed to give the (1D) output y (we say y is a weighted sum of the inputs). This sum is the same as multiplying w and x, so we can write the output as $y = w^T x$.

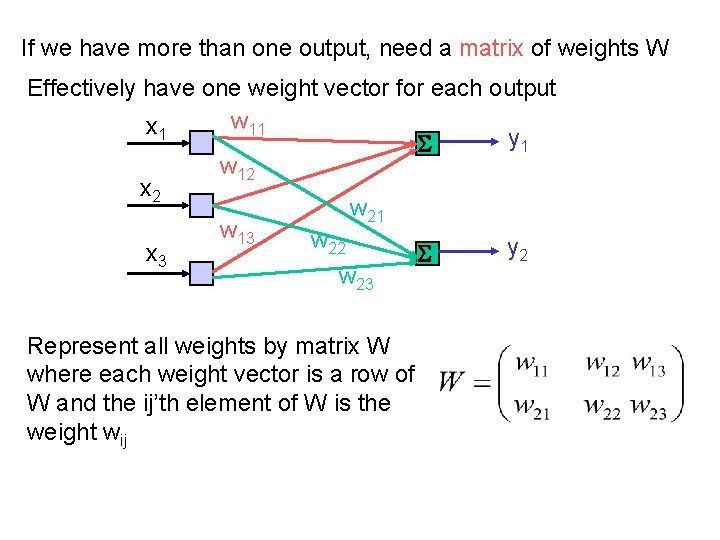

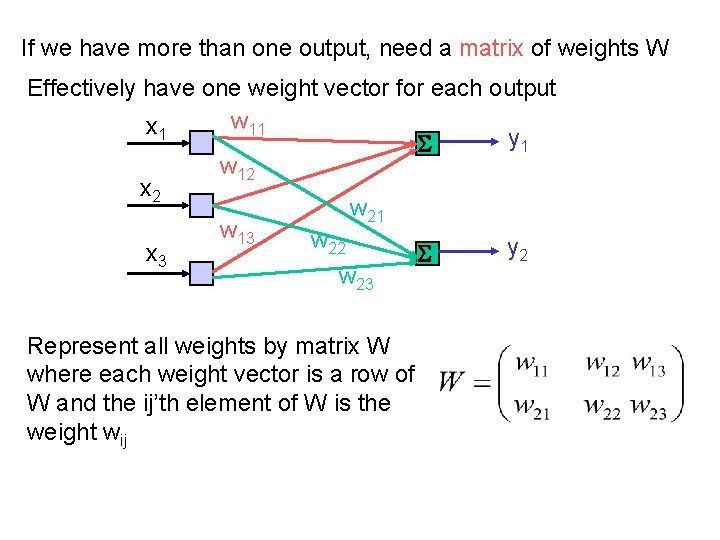
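The single-output network as a weighted sum, $y = w^T x$, in a minimal plain-Python sketch. The weights, inputs and the name `neuron` are hypothetical.

```python
def neuron(w, x):
    """Single-output network: y = w^T x, the weighted sum of the inputs."""
    return sum(wi * xi for wi, xi in zip(w, x))

w = [0.5, -1.0, 2.0]   # made-up weights w1, w2, w3
x = [1.0, 2.0, 3.0]    # input vector x = (x1, x2, x3)^T
print(neuron(w, x))    # 0.5 - 2.0 + 6.0 = 4.5
```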

If we have more than one output, we need a matrix of weights W: effectively, we have one weight vector for each output.
[Figure: inputs $x_1, x_2, x_3$ connected to two summing units, giving $y_1$ (weights $w_{11}, w_{12}, w_{13}$) and $y_2$ (weights $w_{21}, w_{22}, w_{23}$)]
We represent all the weights by a matrix W, where each weight vector is a row of W and the ij’th element of W is the weight $w_{ij}$.

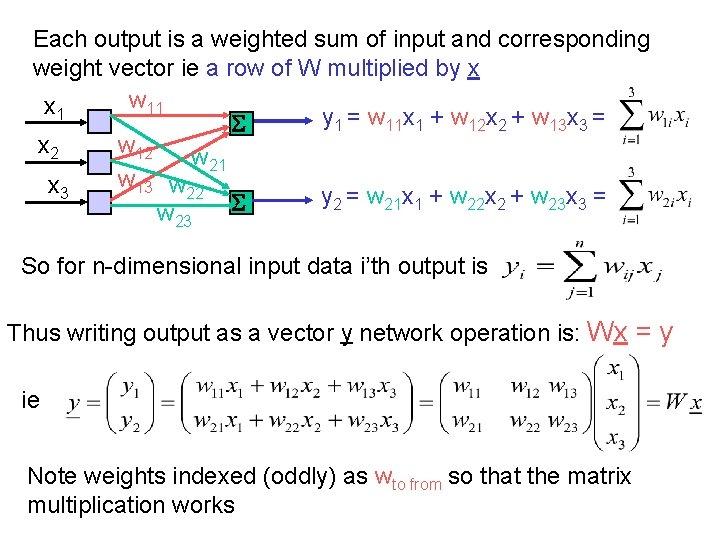

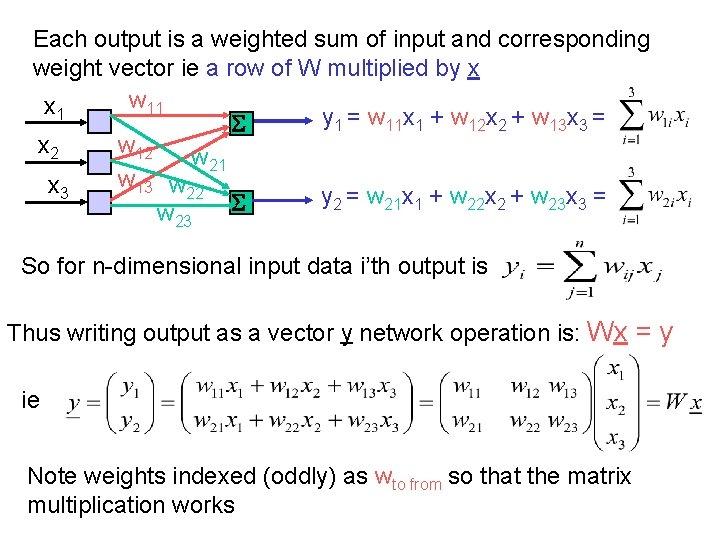

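Each output is a weighted sum of the input and the corresponding weight vector, i.e. a row of W multiplied by x:
$y_1 = w_{11} x_1 + w_{12} x_2 + w_{13} x_3$
$y_2 = w_{21} x_1 + w_{22} x_2 + w_{23} x_3$
So, for n-dimensional input data, the i’th output is $y_i = \sum_{j=1}^{n} w_{ij} x_j$.
Thus, writing the outputs as a vector y, the network operation is $Wx = y$, i.e. $\begin{pmatrix} w_{11} & w_{12} & w_{13} \\ w_{21} & w_{22} & w_{23} \end{pmatrix} \begin{pmatrix} x_1 \\ x_2 \\ x_3 \end{pmatrix} = \begin{pmatrix} y_1 \\ y_2 \end{pmatrix}$
Note that the weights are indexed (oddly) as $w_{\text{to},\,\text{from}}$ so that the matrix multiplication works.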

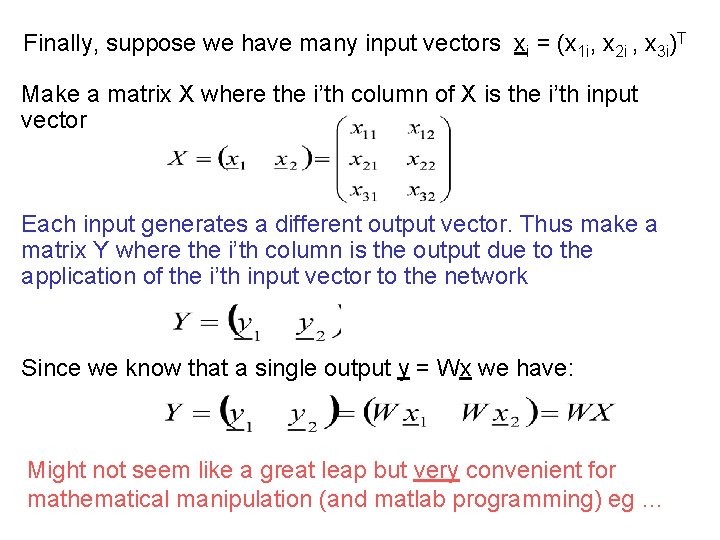

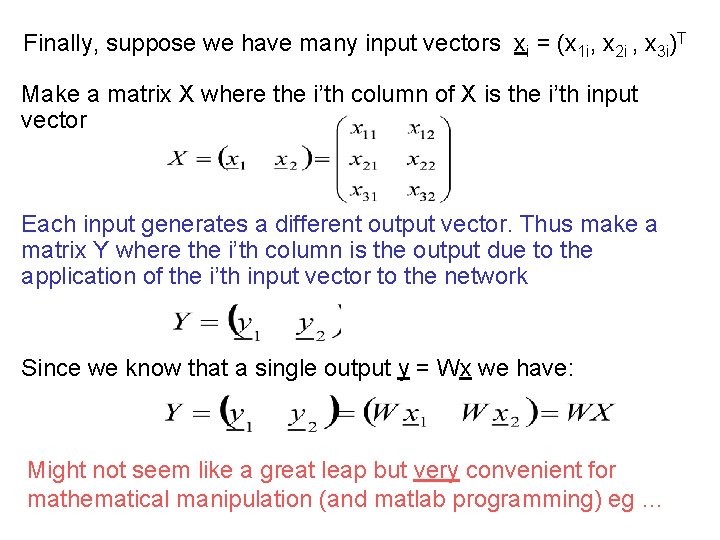
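The formula $y_i = \sum_j w_{ij} x_j$ for a two-output network, as a plain-Python sketch. Row i of W holds the weights into output i, following the $w_{\text{to},\,\text{from}}$ convention; the weights, inputs and the name `network` are hypothetical.

```python
def network(W, x):
    """Outputs y = Wx, where W[i][j] is the weight from input j to output i."""
    return [sum(W[i][j] * x[j] for j in range(len(x))) for i in range(len(W))]

W = [[1, 0, 2],     # weights into output y1: w11, w12, w13
     [0, 1, -1]]    # weights into output y2: w21, w22, w23
x = [3, 4, 5]
print(network(W, x))   # [13, -1]
```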

Finally, suppose we have many input vectors $x_i = (x_{1i}, x_{2i}, x_{3i})^T$. Make a matrix X where the i’th column of X is the i’th input vector.
Each input generates a different output vector, so make a matrix Y where the i’th column is the output due to applying the i’th input vector to the network. Since we know that a single output is $y = Wx$, we have $WX = Y$.
This might not seem like a great leap, but it is very convenient for mathematical manipulation (and MATLAB programming), e.g. …

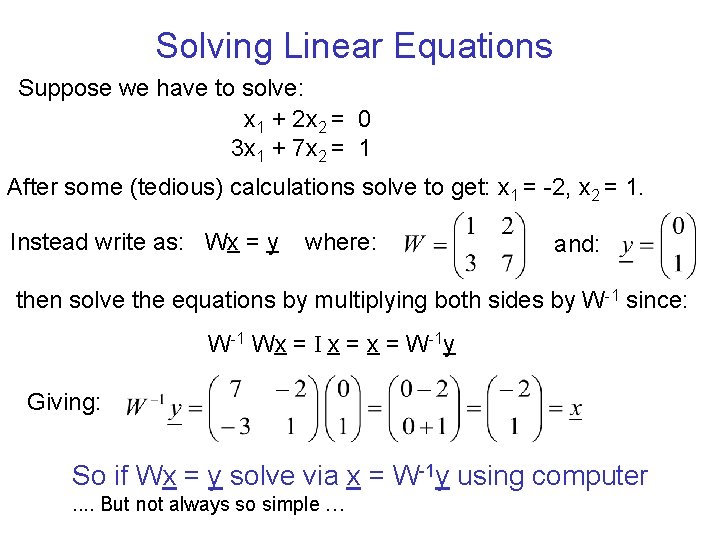

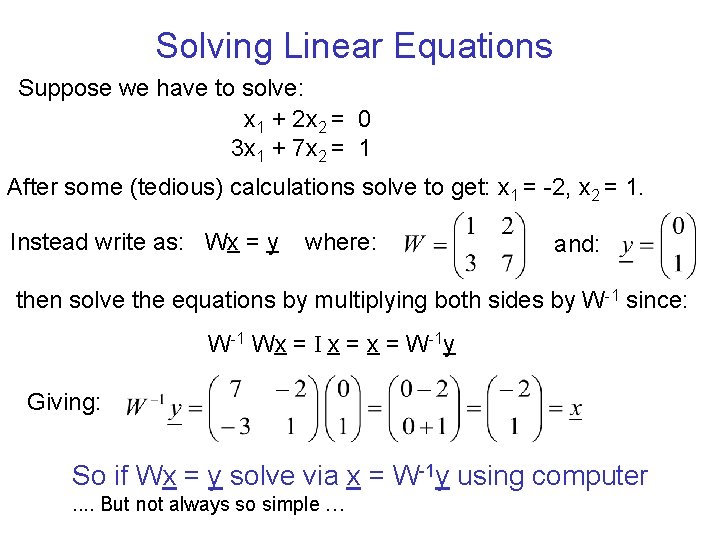
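A plain-Python sketch showing that one product $WX = Y$ handles a whole batch of inputs at once. The weights and input vectors are made up, and `matmul` and `column` are hypothetical helper names.

```python
def matmul(A, B):
    """Matrix product AB for list-of-rows matrices."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def column(M, i):
    """The i'th column of M (0-based) as a list."""
    return [row[i] for row in M]

W = [[1, 0, 2],
     [0, 1, -1]]
# Each COLUMN of X is one input vector: x_1 = (3, 4, 5)^T and x_2 = (1, 1, 1)^T
X = [[3, 1],
     [4, 1],
     [5, 1]]
Y = matmul(W, X)    # each column of Y is the output for the matching column of X
print(Y)            # [[13, 3], [-1, 0]]

# Same answer as pushing the second input through the network on its own
single = [sum(w * xj for w, xj in zip(row, column(X, 1))) for row in W]
print(single == column(Y, 1))  # True
```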

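Solving Linear Equations
Suppose we have to solve:
$x_1 + 2x_2 = 0$
$3x_1 + 7x_2 = 1$
After some (tedious) calculations, we get $x_1 = -2$, $x_2 = 1$.
Instead, write this as $Wx = y$, where $W = \begin{pmatrix} 1 & 2 \\ 3 & 7 \end{pmatrix}$ and $y = \begin{pmatrix} 0 \\ 1 \end{pmatrix}$, then solve the equations by multiplying both sides by $W^{-1}$, since $W^{-1}Wx = Ix = x = W^{-1}y$.
Giving: $x = W^{-1}y = \begin{pmatrix} 7 & -2 \\ -3 & 1 \end{pmatrix} \begin{pmatrix} 0 \\ 1 \end{pmatrix} = \begin{pmatrix} -2 \\ 1 \end{pmatrix}$
So if $Wx = y$, solve via $x = W^{-1}y$ using a computer. But it is not always so simple…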

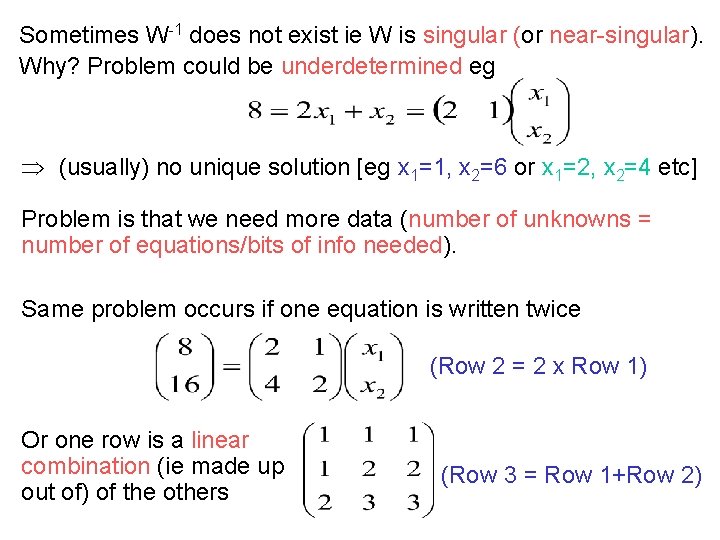

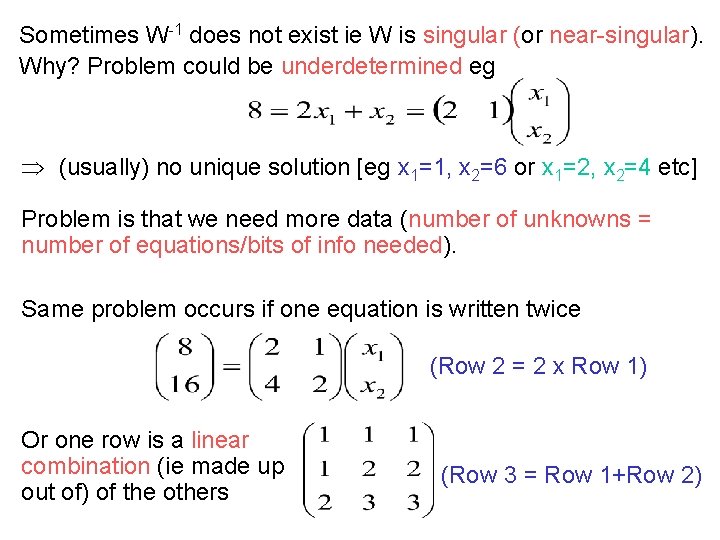
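The worked example above reproduced in plain Python, using the 2 × 2 closed-form inverse (valid only for 2 × 2 matrices; in practice a library solver would be used). `inverse_2x2` and `matvec` are hypothetical helper names.

```python
def inverse_2x2(A):
    """2 x 2 inverse via the determinant |A| = ad - bc."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("W is singular: no inverse")
    return [[d / det, -b / det], [-c / det, a / det]]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

# x1 + 2 x2 = 0 and 3 x1 + 7 x2 = 1, written as Wx = y
W = [[1, 2],
     [3, 7]]
y = [0, 1]
x = matvec(inverse_2x2(W), y)   # x = W^-1 y
print(x)                        # [-2.0, 1.0]
print(matvec(W, x))             # [0.0, 1.0]: plugging back in recovers y
```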

Sometimes $W^{-1}$ does not exist, i.e. W is singular (or near-singular). Why?
The problem could be underdetermined (fewer independent equations than unknowns), e.g. ⇒ (usually) no unique solution [e.g. $x_1 = 1, x_2 = 6$ or $x_1 = 2, x_2 = 4$, etc.]. The problem is that we need more data: the number of unknowns must equal the number of independent equations (bits of information).
The same problem occurs if one equation is effectively written twice (e.g. Row 2 = 2 × Row 1), or if one row is a linear combination of (i.e. made up out of) the others (e.g. Row 3 = Row 1 + Row 2).

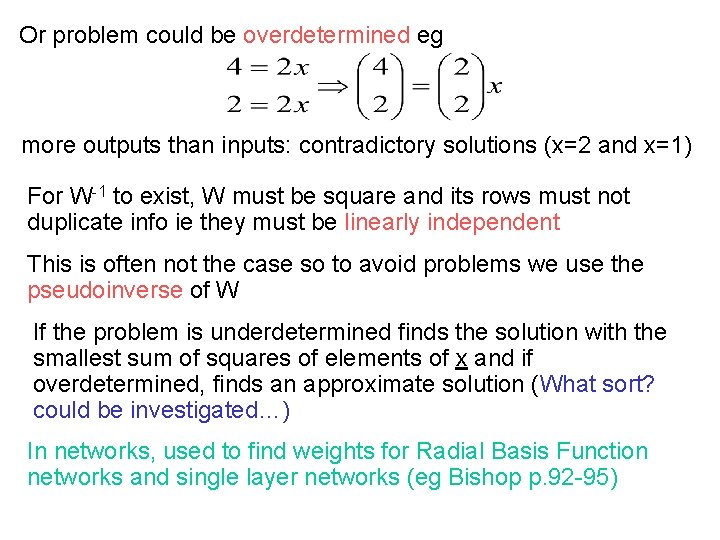

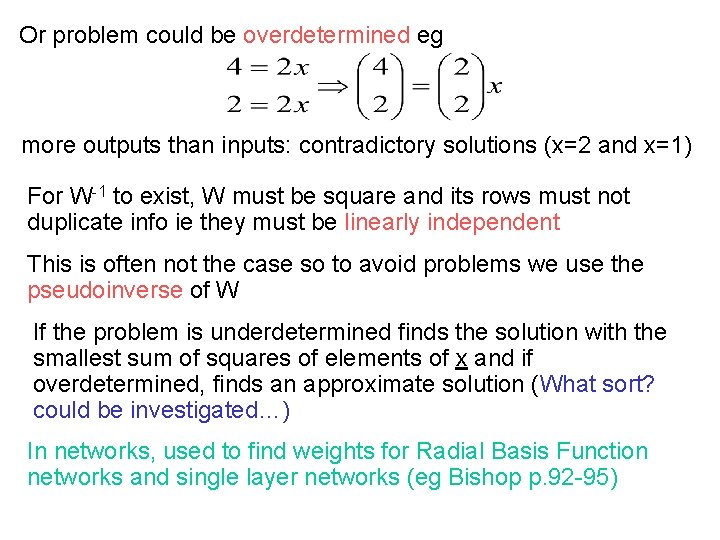
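A zero determinant is the standard test for a singular square matrix. A plain-Python sketch for the 2 × 2 and 3 × 3 cases, with made-up matrices built to match the two situations above; `det_2x2` and `det_3x3` are hypothetical helper names.

```python
def det_2x2(M):
    (a, b), (c, d) = M
    return a * d - b * c

def det_3x3(M):
    """Cofactor expansion along the first row."""
    (a, b, c), (d, e, f), (g, h, i) = M
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

# Row 2 = 2 x Row 1: the second equation adds no new information
print(det_2x2([[1, 2], [2, 4]]))                    # 0 -> singular, no inverse
# Row 3 = Row 1 + Row 2: a linear combination of the other rows
print(det_3x3([[1, 0, 2], [0, 1, 1], [1, 1, 3]]))   # 0 -> singular
print(det_2x2([[1, 2], [3, 7]]))                    # 1 -> invertible
```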

Or the problem could be overdetermined, e.g. more equations (outputs) than unknowns (inputs), giving contradictory solutions (x = 2 and x = 1).
For $W^{-1}$ to exist, W must be square and its rows must not duplicate information, i.e. they must be linearly independent. This is often not the case, so to avoid problems we use the pseudoinverse of W. If the problem is underdetermined, it finds the solution with the smallest sum of squares of the elements of x; if overdetermined, it finds an approximate solution (what sort? this could be investigated…).
In networks, it is used to find the weights for Radial Basis Function networks and single-layer networks (e.g. Bishop, pp. 92-95).

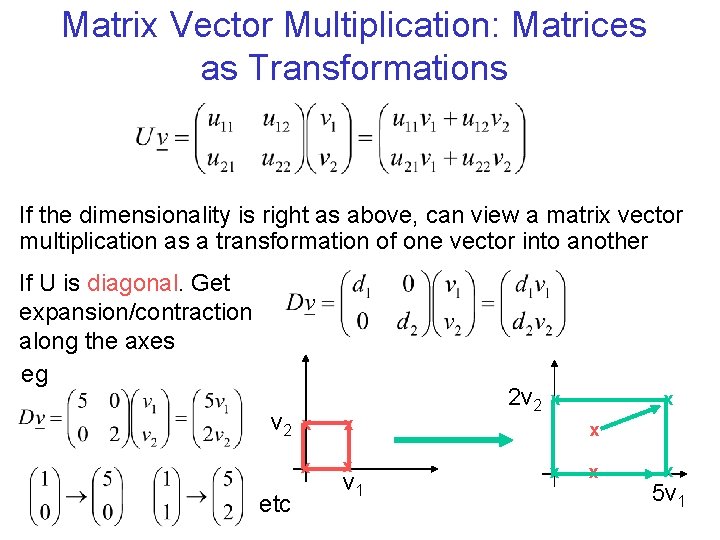

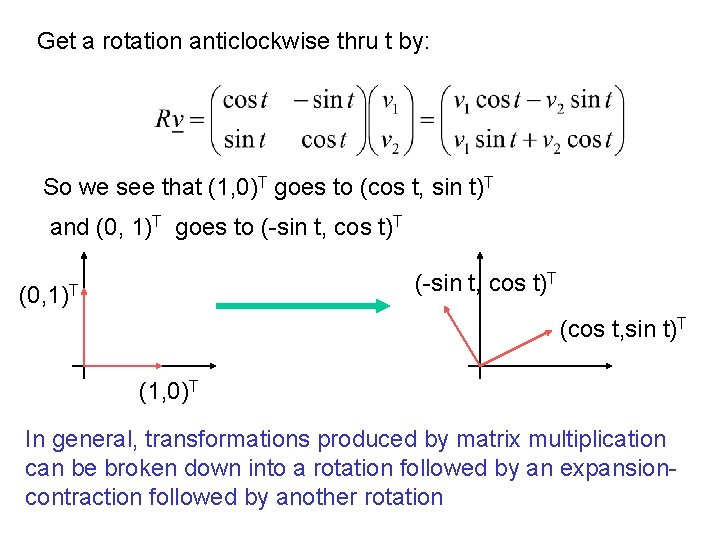

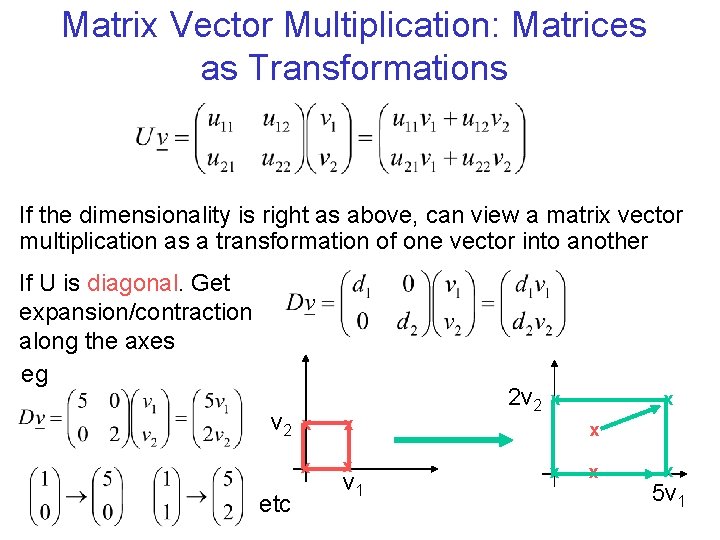
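In practice the pseudoinverse comes from a library (e.g. `pinv` in MATLAB, or `numpy.linalg.pinv` in NumPy). The plain-Python sketch below covers only the overdetermined case, assuming the columns of W are linearly independent; then the pseudoinverse solution is $x = (W^T W)^{-1} W^T y$, the least-squares solution. It does not handle the underdetermined case, and `solve` and `least_squares` are hypothetical helper names.

```python
def transpose(M):
    return [list(c) for c in zip(*M)]

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def solve(A, b):
    """Solve the square system Ax = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for k in range(n):
        p = max(range(k, n), key=lambda r: abs(M[r][k]))  # choose the pivot row
        if abs(M[p][k]) < 1e-12:
            raise ValueError("matrix is singular")
        M[k], M[p] = M[p], M[k]
        for r in range(k + 1, n):
            f = M[r][k] / M[k][k]
            M[r] = [a - f * c for a, c in zip(M[r], M[k])]
    x = [0.0] * n
    for k in reversed(range(n)):  # back-substitution
        x[k] = (M[k][n] - sum(M[k][j] * x[j] for j in range(k + 1, n))) / M[k][k]
    return x

def least_squares(W, y):
    """x = (W^T W)^-1 W^T y, assuming the columns of W are linearly independent."""
    WT = transpose(W)
    WTy = [sum(a * b for a, b in zip(row, y)) for row in WT]
    return solve(matmul(WT, W), WTy)

# Contradictory equations x = 2 and x = 1
print(least_squares([[1], [1]], [2, 1]))   # [1.5]: minimises the squared error
# Fitting y = a + b t through three made-up points (t, y) = (0, 1), (1, 2), (2, 4)
print(least_squares([[1, 0], [1, 1], [1, 2]], [1, 2, 4]))  # about [0.833, 1.5]
```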

Matrix-Vector Multiplication: Matrices as Transformations
If the dimensionality is right (as above), we can view a matrix-vector multiplication as a transformation of one vector into another.
If the matrix U is diagonal, we get expansion/contraction along the axes.
[Figure: vectors $v_1$ and $v_2$ expanded/contracted along the coordinate axes by a diagonal matrix]

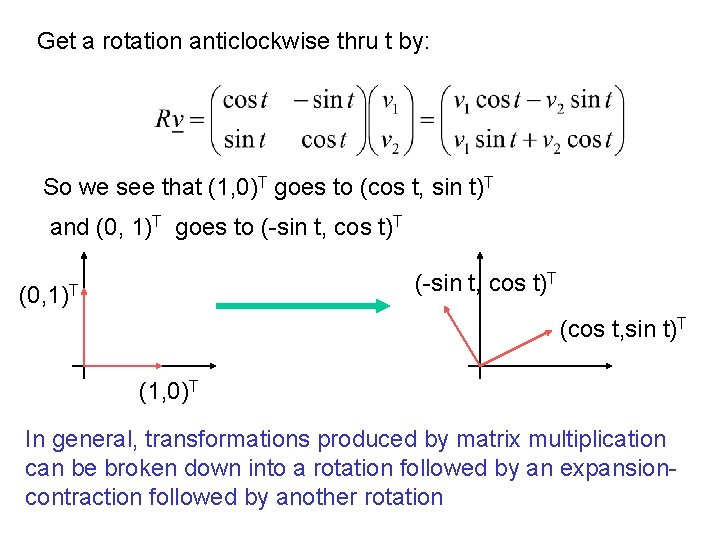
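A plain-Python sketch of a diagonal matrix acting as an expansion/contraction along the axes. The diagonal values of U are hypothetical (stretch x by 3, contract y by half), as is the helper name `matvec`.

```python
def matvec(M, v):
    """y = Mv for a list-of-rows matrix M."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]

U = [[3, 0],
     [0, 0.5]]   # made-up diagonal U: expand along x by 3, contract along y by half
for v in ([1, 0], [0, 1], [2, 2]):
    print(v, "->", matvec(U, v))  # each coordinate is scaled separately
```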

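Get a rotation anticlockwise through t by: $\begin{pmatrix} \cos t & -\sin t \\ \sin t & \cos t \end{pmatrix}$
So we see that $(1, 0)^T$ goes to $(\cos t, \sin t)^T$ and $(0, 1)^T$ goes to $(-\sin t, \cos t)^T$.
[Figure: the unit vectors $(1, 0)^T$ and $(0, 1)^T$ rotated anticlockwise through t]
In general, the transformation produced by matrix multiplication can be broken down into a rotation, followed by an expansion/contraction along the axes, followed by another rotation (strictly, the ‘rotations’ may also include reflections; this is the singular value decomposition).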

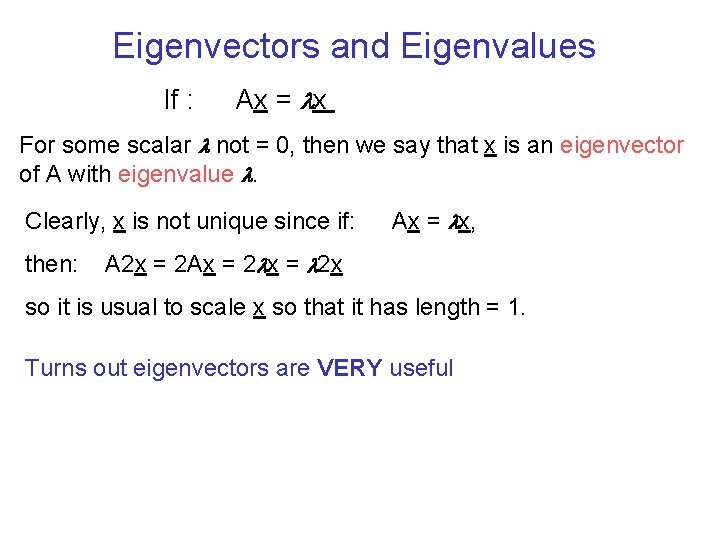

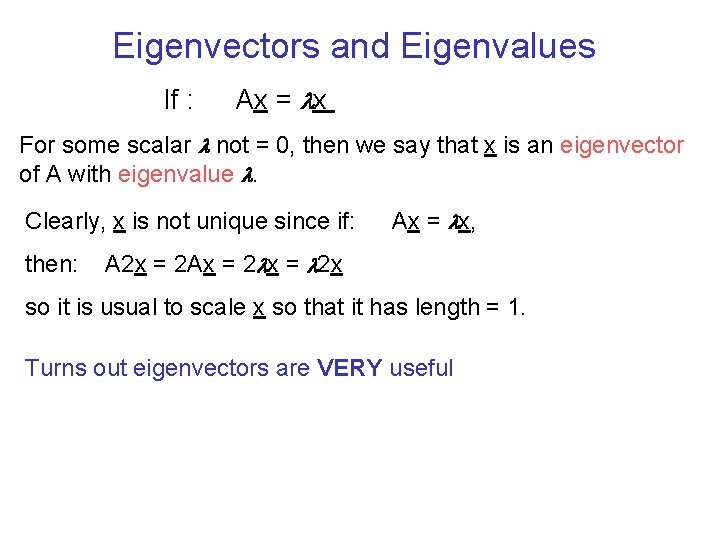
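A plain-Python sketch of the rotation matrix, checking where $(1, 0)^T$ and $(0, 1)^T$ go for t = 90°, and that rotation leaves lengths unchanged. `rotation` and `matvec` are hypothetical helper names.

```python
import math

def rotation(t):
    """Anticlockwise rotation through angle t (in radians)."""
    return [[math.cos(t), -math.sin(t)],
            [math.sin(t),  math.cos(t)]]

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

R = rotation(math.pi / 2)      # 90 degrees anticlockwise
print(matvec(R, [1, 0]))       # about [0, 1] = (cos t, sin t)
print(matvec(R, [0, 1]))       # about [-1, 0] = (-sin t, cos t)
w = matvec(rotation(0.3), [3, 4])
print(math.hypot(*w))          # about 5.0: rotation preserves length
```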

Eigenvectors and Eigenvalues
If $Ax = \lambda x$ for some scalar λ and non-zero vector x, then we say that x is an eigenvector of A with eigenvalue λ.
Clearly, x is not unique, since if $Ax = \lambda x$ then $A(2x) = 2Ax = 2\lambda x = \lambda(2x)$, so it is usual to scale x so that it has length 1.
It turns out eigenvectors are VERY useful.

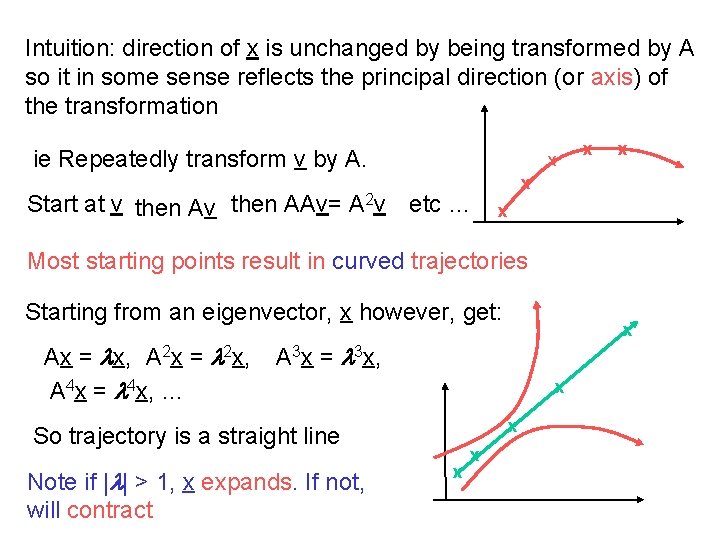

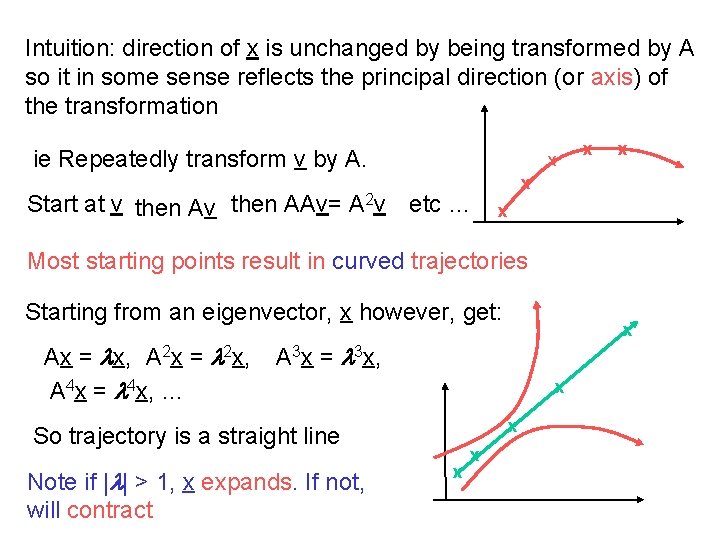
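Checking $Ax = \lambda x$ directly on a made-up symmetric matrix, as a plain-Python sketch; A, the test vectors and `matvec` are hypothetical.

```python
import math

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[2, 1],
     [1, 2]]              # a made-up example matrix
x = [1, 1]
print(matvec(A, x))       # [3, 3] = 3x: an eigenvector with eigenvalue 3
x2 = [2 * a for a in x]
print(matvec(A, x2))      # [6, 6] = 3(2x): 2x is an eigenvector too, same eigenvalue
print(matvec(A, [1, 0]))  # [2, 1]: not a multiple of (1, 0), so not an eigenvector

n = math.sqrt(sum(a * a for a in x))
x_unit = [a / n for a in x]   # the usual choice: scale to length 1
```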

Intuition: the direction of x is unchanged by being transformed by A, so in some sense it reflects a principal direction (or axis) of the transformation.
E.g. repeatedly transform v by A: start at v, then go to Av, then $AAv = A^2v$, etc. Most starting points result in curved trajectories.
Starting from an eigenvector x, however, we get $Ax = \lambda x$, $A^2x = \lambda^2 x$, $A^3x = \lambda^3 x$, $A^4x = \lambda^4 x$, …, so the trajectory is a straight line.
[Figure: repeated transformation by A, giving a curved trajectory from a general start v and a straight line from an eigenvector x]
Note: if $|\lambda| > 1$, x expands; if $|\lambda| < 1$, it contracts.

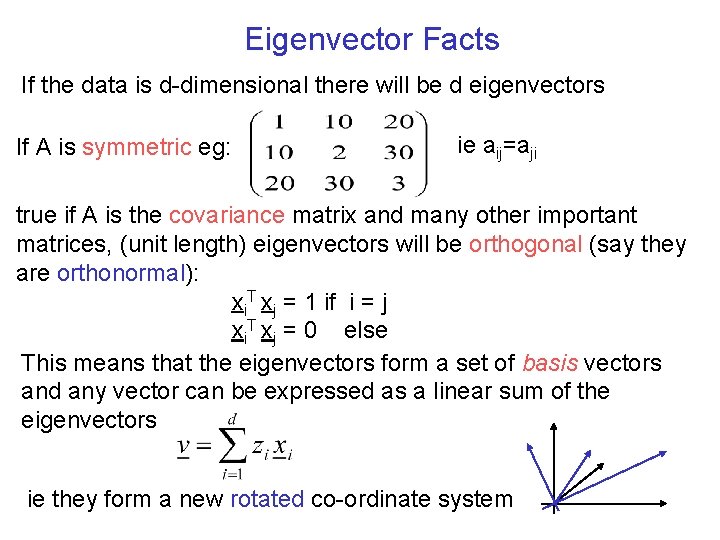

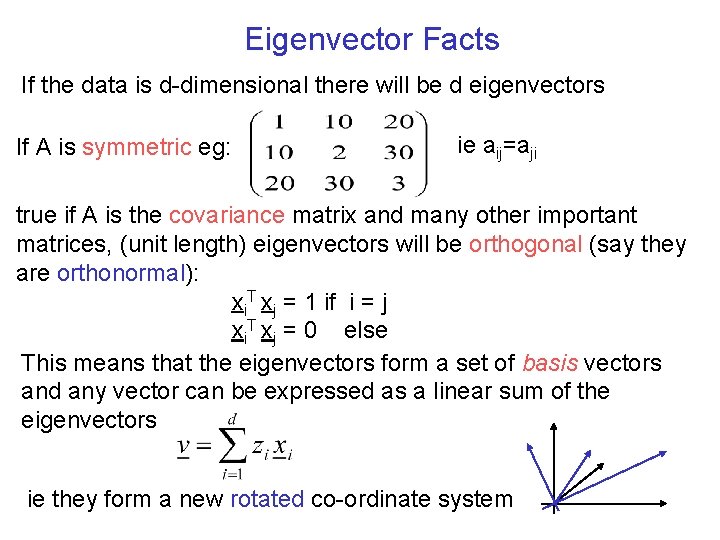
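A plain-Python sketch of the trajectories, using a made-up matrix whose eigenvector (1, 1) has eigenvalue 3. The slope of each point, seen from the origin, shows whether the direction changes: it keeps changing from a general start, but stays fixed along the eigenvector. `trajectory` and `matvec` are hypothetical helper names.

```python
def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

def trajectory(A, v, steps):
    """The points v, Av, A^2 v, ..., A^steps v."""
    pts = [v]
    for _ in range(steps):
        v = matvec(A, v)
        pts.append(v)
    return pts

A = [[2, 1],
     [1, 2]]
for start in ([1, 0], [1, 1]):
    pts = trajectory(A, start, 3)
    print(pts)
    print([p[1] / p[0] for p in pts])  # slope of each point from the origin
# From (1, 0) the slope drifts (0, 0.5, 0.8, ...): a curved path.
# From the eigenvector (1, 1) it stays 1: a straight line, expanding since |3| > 1.
```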

Eigenvector Facts
If A is a d × d matrix (e.g. the covariance matrix of d-dimensional data), there will typically be d eigenvectors (always, if A is symmetric).
If A is symmetric, i.e. $a_{ij} = a_{ji}$ (true if A is the covariance matrix, and for many other important matrices), its (unit-length) eigenvectors can be chosen to be orthogonal (we say they are orthonormal):
$x_i^T x_j = 1$ if $i = j$, and $x_i^T x_j = 0$ otherwise.
This means that the eigenvectors form a set of basis vectors, and any vector can be expressed as a linear combination of the eigenvectors, i.e. they form a new, rotated co-ordinate system.
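A plain-Python sketch of these facts for one made-up symmetric matrix whose unit eigenvectors are $(1, 1)^T/\sqrt{2}$ (eigenvalue 3) and $(1, -1)^T/\sqrt{2}$ (eigenvalue 1). It checks that they are orthonormal, then rebuilds an arbitrary vector from its coordinates in the eigenvector basis. The names and values are hypothetical.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def matvec(M, v):
    return [sum(m * x for m, x in zip(row, v)) for row in M]

A = [[2, 1],
     [1, 2]]                 # a made-up symmetric matrix: a_ij = a_ji
s = 1 / math.sqrt(2)
e1, e2 = [s, s], [s, -s]     # its unit eigenvectors (eigenvalues 3 and 1)
print(matvec(A, e1), [3 * a for a in e1])  # A e1 = 3 e1, up to rounding
print(matvec(A, e2), e2)                   # A e2 = 1 e2

print(dot(e1, e1), dot(e2, e2), dot(e1, e2))  # about 1, 1, 0: orthonormal

# Any vector is a combination of the eigenvectors: v = (e1^T v) e1 + (e2^T v) e2
v = [3.0, -1.0]
c1, c2 = dot(e1, v), dot(e2, v)   # coordinates of v in the rotated system
rebuilt = [c1 * a + c2 * b for a, b in zip(e1, e2)]
print(rebuilt)                    # about [3.0, -1.0]
```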

Summary of Main Points
• When dealing with networks, $Wx = y$ means “outputs y are a weighted sum of input x”, that is, y is the network output given input x
• Similarly, $WX = Y$ means “each column of Y is the network output operating on the corresponding column of X”
• The matrix inverse can be used to solve linear equations, but the pseudoinverse is more robust
• In networks, use the pseudoinverse to calculate the ‘best’ weights that will transform training input vectors into known target vectors
• Matrix-vector multiplication can be seen as a transformation (e.g. rotations and expansions)
• If $Ax = \lambda x$, x is an eigenvector with eigenvalue λ
• Eigenvectors and eigenvalues tell us about the main axes and behaviour of matrix transformations