Force field adaptation can be learned using vision in the absence of proprioceptive error

A. Melendez-Calderon, L. Masia, R. Gassert, G. Sandini, E. Burdet

Motor Control Reading Group
Michele Rotella
August 30, 2013

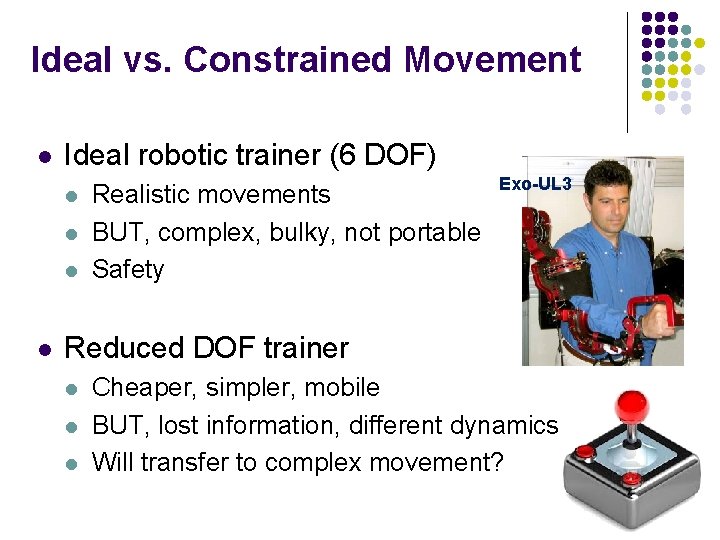

Ideal vs. Constrained Movement
- Ideal robotic trainer (6 DOF)
  - Realistic movements
  - BUT complex, bulky, not portable
  - Safety
  - Exo-UL 3
- Reduced DOF trainer
  - Cheaper, simpler, mobile
  - BUT lost information, different dynamics
  - Will training transfer to complex movement?

Research Question!
- Can performance gains in a constrained environment transfer to an unconstrained (real movement) environment?
- If mechanical constraints limit arm movement, can vision replace proprioceptive information in learning new arm dynamics?

Integration of Sensory Modes
- Vision
  - Used successfully by deafferented patients
  - Type influences performance
- Proprioception
  - Dynamics learned in muscle space
  - Importance?
  - Influences speed of learning
  - Not necessary to

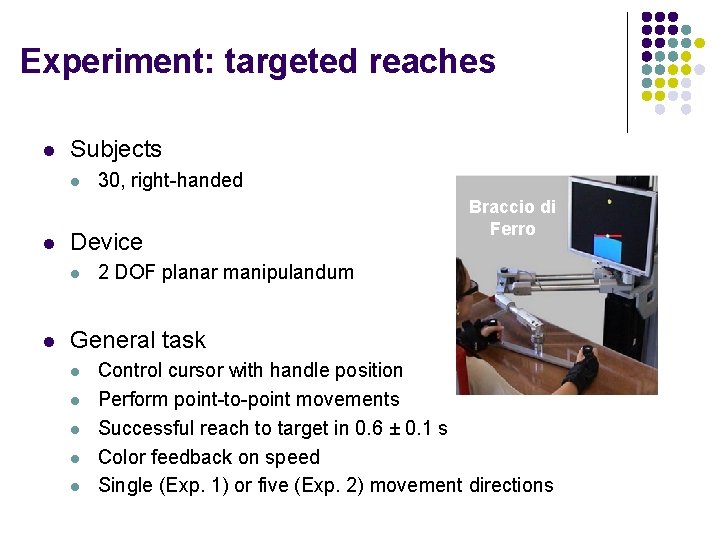

Experiment: targeted reaches
- Subjects
  - 30, right-handed
- Device
  - Braccio di Ferro, 2 DOF planar manipulandum
- General task
  - Control cursor with handle position
  - Perform point-to-point movements
  - Successful reach to target in 0.6 ± 0.1 s
  - Color feedback on speed
  - Single (Exp. 1) or five (Exp. 2) movement directions
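The timing criterion above can be sketched as a small classifier. This is only an illustration: the slide gives the 0.6 ± 0.1 s window and says that color feedback was given on speed, but the function name and the three labels below are hypothetical, and the actual colors used are not stated.

```python
# Hypothetical sketch of the speed criterion: a reach counts as well timed
# when its duration falls inside 0.6 +/- 0.1 s. Labels are assumptions.
TARGET_DURATION_S = 0.6
TOLERANCE_S = 0.1


def speed_feedback(duration_s: float) -> str:
    """Classify a reach duration relative to the 0.6 +/- 0.1 s window."""
    if duration_s < TARGET_DURATION_S - TOLERANCE_S:
        return "too fast"
    if duration_s > TARGET_DURATION_S + TOLERANCE_S:
        return "too slow"
    return "ok"


if __name__ == "__main__":
    for d in (0.45, 0.62, 0.80):
        print(d, speed_feedback(d))
```

In an experiment, each label would presumably map to a cursor or target color shown after the trial.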

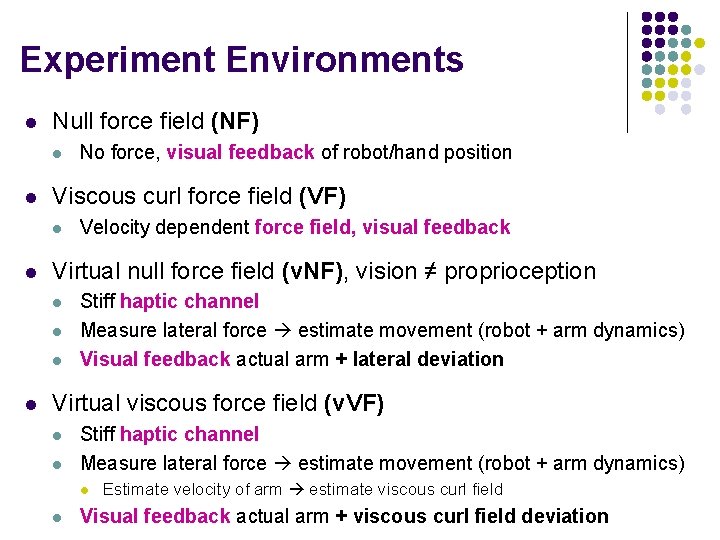

Experiment Environments
- Null force field (NF)
  - No force, visual feedback of robot/hand position
- Viscous curl force field (VF)
  - Velocity-dependent force field, visual feedback
- Virtual null force field (vNF), vision ≠ proprioception
  - Stiff haptic channel
  - Measure lateral force → estimate movement (robot + arm dynamics)
  - Visual feedback: actual arm + lateral deviation
- Virtual viscous force field (vVF)
  - Stiff haptic channel
  - Measure lateral force → estimate movement (robot + arm dynamics)
  - Estimate velocity of arm → estimate viscous curl field
  - Visual feedback: actual arm + viscous curl field deviation
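The environments above can be sketched in a few lines. The first function is the standard form of a viscous curl field, a force perpendicular to hand velocity. The second is a heavily simplified stand-in for the virtual environments: it replaces the robot + arm dynamics model with a point mass, which is an assumption made only for illustration. The gain, mass, sign conventions, and function names are all hypothetical and not taken from the slides.

```python
# Hypothetical sketch; gain (N*s/m), mass (kg), and signs are assumptions.

def curl_field_force(vx: float, vy: float, gain: float = 15.0):
    """Viscous curl field F = B v with B = [[0, gain], [-gain, 0]].

    The returned force is always perpendicular to the velocity.
    """
    return (gain * vy, -gain * vx)


def virtual_lateral_deviation(lateral_force, forward_velocity, dt,
                              mass=1.5, gain=0.0):
    """Estimate the lateral deviation to display in a haptic channel.

    lateral_force: force samples measured against the channel wall (N).
    forward_velocity: real hand velocity along the channel (m/s).
    gain = 0 mimics the virtual null field; gain > 0 adds the lateral
    push of a virtual viscous curl field. A point mass stands in for the
    robot + arm model (assumption). Uses semi-implicit Euler integration.
    """
    x, v = 0.0, 0.0
    deviation = []
    for f, v_fwd in zip(lateral_force, forward_velocity):
        a = (f + gain * v_fwd) / mass  # lateral acceleration of the mass
        v += a * dt
        x += v * dt
        deviation.append(x)
    return deviation


if __name__ == "__main__":
    print(curl_field_force(0.0, 0.5))
    print(virtual_lateral_deviation([1.5, 1.5], [0.0, 0.0], dt=0.1))
```

The displayed cursor would then be the real position along the channel plus this estimated lateral deviation.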

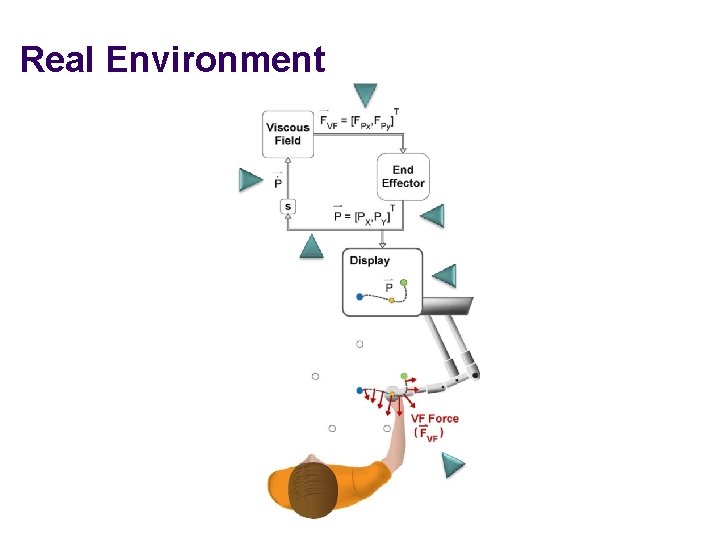

Real Environment

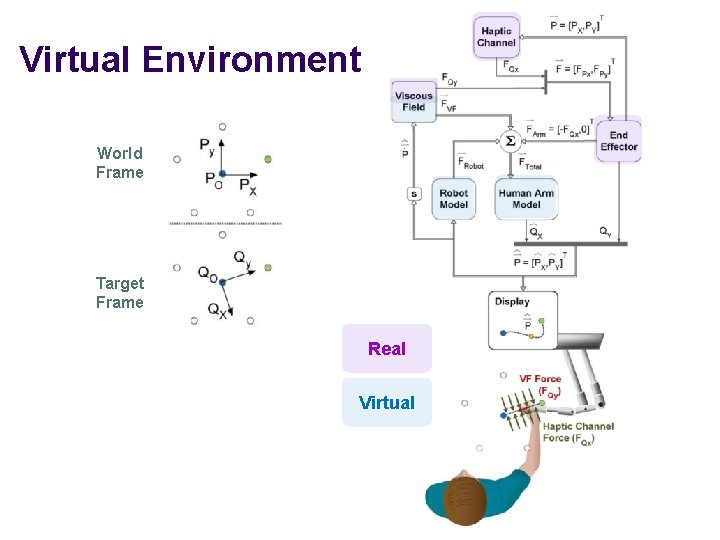

Virtual Environment

Figure labels: World frame, target frame; real, virtual.

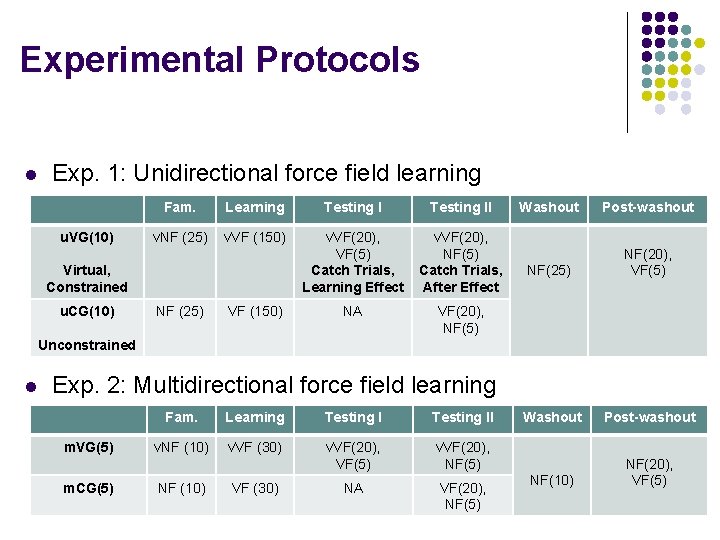

Experimental Protocols
- Exp. 1: Unidirectional force field learning
  - uVG(10), virtual, constrained
    - Fam.: vNF(25)
    - Learning: vVF(150)
    - Testing I: vVF(20), VF(5) (catch trials, learning effect)
    - Testing II: vVF(20), NF(5) (catch trials, after effect)
  - uCG(10), unconstrained
    - Fam.: NF(25)
    - Learning: VF(150)
    - Testing I: NA
    - Testing II: VF(20), NF(5)
  - Washout: NF(25)
  - Post-washout: NF(20), VF(5)
- Exp. 2: Multidirectional force field learning
  - mVG(5)
    - Fam.: vNF(10)
    - Learning: vVF(30)
    - Testing I: vVF(20), VF(5)
    - Testing II: vVF(20), NF(5)
  - mCG(5)
    - Fam.: NF(10)
    - Learning: VF(30)
    - Testing I: NA
    - Testing II: VF(20), NF(5)
  - Washout: NF(10)
  - Post-washout: NF(20), VF(5)
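A testing block such as 20 trials in one environment plus 5 catch trials in another can be generated as below. This is a sketch under assumptions: the slide gives only the counts, so the random interleaving, the function name, and the string labels are hypothetical.

```python
import random

# Hypothetical sketch: build one testing block with interleaved catch
# trials. Random placement of catch trials is an assumption.

def make_block(main_env: str, n_main: int, catch_env: str, n_catch: int,
               seed=None):
    """Return a shuffled list of environment labels for one block."""
    rng = random.Random(seed)  # local generator keeps blocks reproducible
    trials = [main_env] * n_main + [catch_env] * n_catch
    rng.shuffle(trials)
    return trials


if __name__ == "__main__":
    # Virtual field trials with real-field catch trials (20 + 5).
    block = make_block("vVF", 20, "VF", 5, seed=1)
    print(block)
```

A real protocol might also forbid consecutive catch trials; that constraint is not modeled here.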

Data Analysis & Expected Results
- Performance metrics
  - Feed-forward control: aiming error at 150 ms
  - Directional error: aiming error at 300 ms
  - Pearson's correlation coefficient between mean trajectories
- Between-group analysis
  - t-tests between groups
- Hypothesis
  - Over time, directional error decreases, catch trial error increases
  - Similar trajectories for vVF and VF
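The metrics above might be computed roughly as follows. This is a sketch under stated assumptions: aiming error is taken here as the signed angle between the start-to-hand direction at the probe time and the start-to-target direction, time is measured from the first sample, and the function names are hypothetical. The slides do not give the exact definitions.

```python
import math

# Hypothetical sketch of the performance metrics; definitions are assumed.

def aiming_error_deg(times_s, xs, ys, target_xy, t_probe_s=0.150):
    """Signed angle (degrees) between hand direction and target direction.

    The hand direction is taken at the first sample at or after t_probe_s.
    """
    idx = next(i for i, t in enumerate(times_s) if t >= t_probe_s)
    hand = math.atan2(ys[idx] - ys[0], xs[idx] - xs[0])
    goal = math.atan2(target_xy[1] - ys[0], target_xy[0] - xs[0])
    diff = (hand - goal + math.pi) % (2 * math.pi) - math.pi  # wrap angle
    return math.degrees(diff)


def pearson_r(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


if __name__ == "__main__":
    t = [0.0, 0.1, 0.2, 0.3]
    x = [0.0, 0.01, 0.02, 0.02]
    y = [0.0, 0.01, 0.02, 0.05]
    print(aiming_error_deg(t, x, y, target_xy=(0.0, 0.1)))
    print(aiming_error_deg(t, x, y, target_xy=(0.0, 0.1), t_probe_s=0.300))
    print(pearson_r(x, y))
```

Calling the first function with `t_probe_s=0.300` gives the later probe used for directional error; `pearson_r` would be applied to the lateral coordinates of two mean trajectories resampled to the same length.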

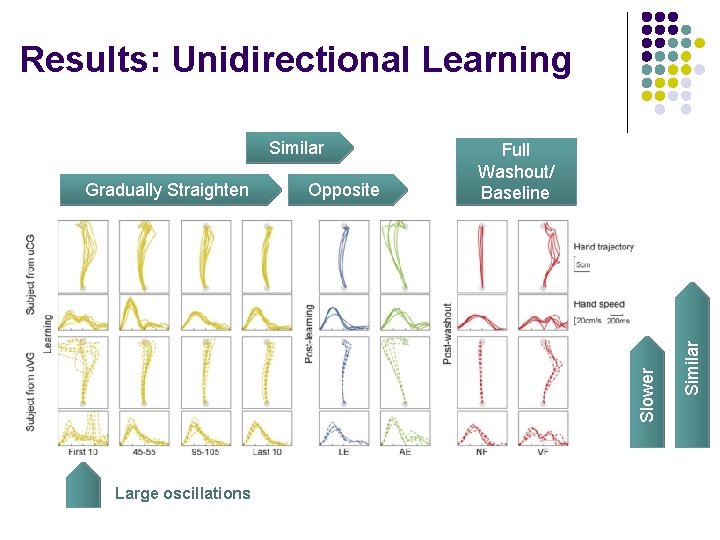

Results: Unidirectional Learning

Figure annotations: opposite; slower; gradually straighten; full washout / baseline; large oscillations; similar.

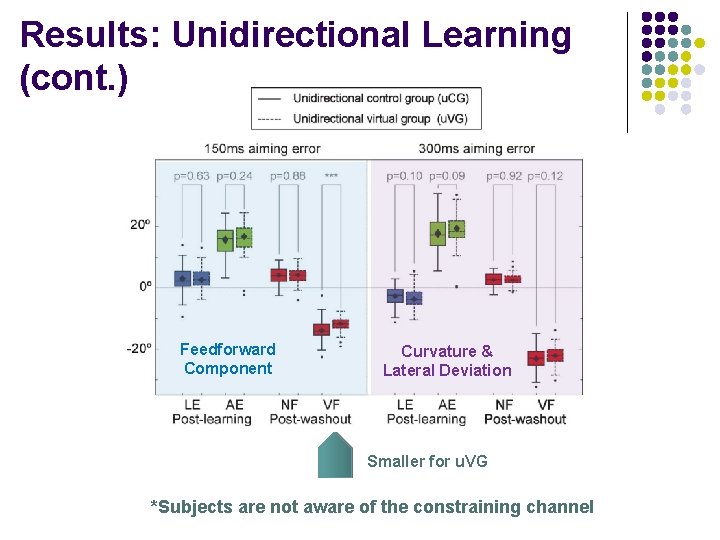

Results: Unidirectional Learning (cont.)
- Feedforward component
- Curvature & lateral deviation: smaller for uVG
- Subjects are not aware of the constraining channel

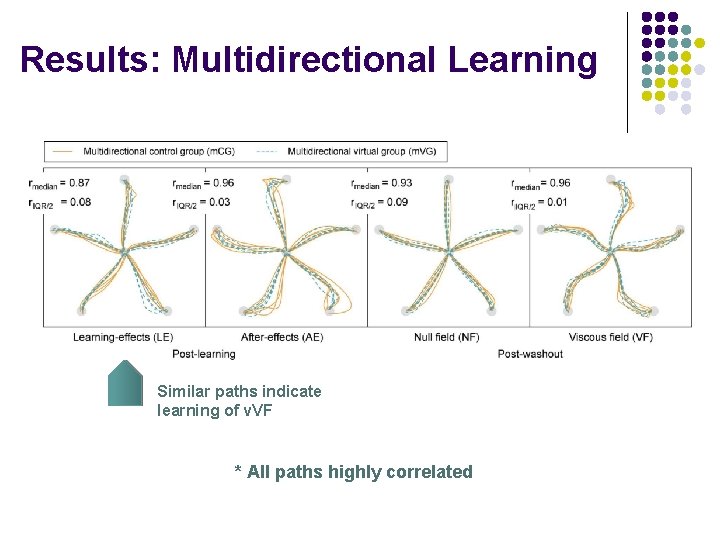

Results: Multidirectional Learning
- Similar paths indicate learning of vVF
- All paths highly correlated

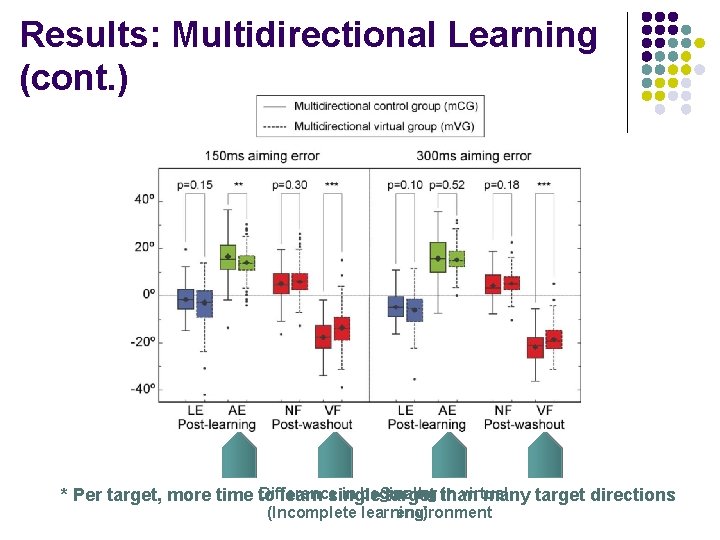

Results: Multidirectional Learning (cont.)
- Difference in beginning
- Smaller in virtual environment
- Per target, more time to learn a single target than many target directions (incomplete learning)

Discussion
- Can learn new dynamics without proprioceptive error
  - Visual feedback shows arm dynamics
- Uni- vs. multidirectional task
  - Unidirectional: no difference between uVG and uCG
  - Multidirectional: different aftereffects, incomplete learning
- Transfer of learning in a virtual environment to real movement
  - But, some proprioception + force feedback from channel
- Maybe the CNS favored visual information over proprioception based on reliability

Applications
- Sport training
  - Complex movements with simple (take-home) devices
- Rehabilitation
  - Simple devices, safer, cheaper
  - Stroke patients have impaired feed-forward control
  - Create visual feedback that could correct lateral forces

Thoughts…
- Direct connection to our isometric studies!
  - We totally constrain movement
  - Consider a visual perturbation
  - We use simple dynamics that do not necessarily represent the arm
- How realistic do the virtual dynamics have to be for training?
  - Actual arm dynamics?
  - How much error in the arm model?
  - Virtual dynamics of another system?

Thoughts…
- Why could subjects not tell when their arm was constrained?
  - How would results change if people could see their hand?
- How can we manipulate how much someone relies on a certain type of feedback?
  - This has come up before!
- Why did the required reaching length change between uni- and multi-directional experiments?

- Slides: 18