FloatPIM: In-Memory Acceleration of Deep Neural Network Training with High Precision

- Slides: 19

FloatPIM: In-Memory Acceleration of Deep Neural Network Training with High Precision. Mohsen Imani, Saransh Gupta, Yeseong Kim, Tajana Rosing. University of California San Diego, System Energy Efficiency Lab.

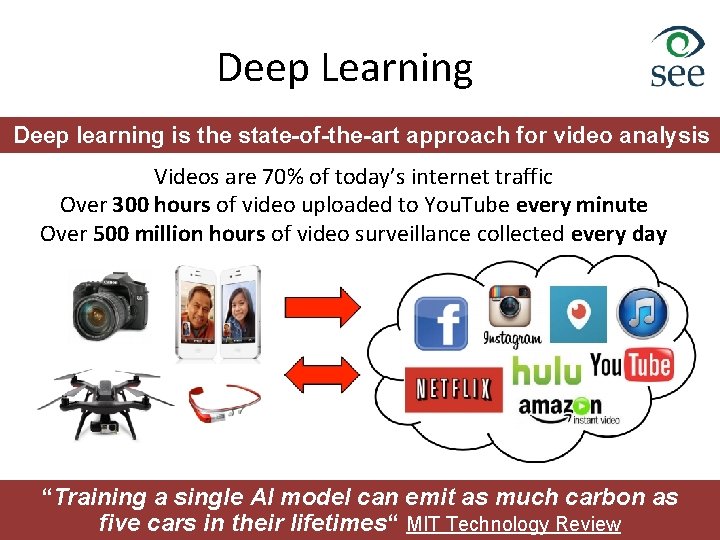

Deep Learning: Deep learning is the state-of-the-art approach for video analysis. Videos are 70% of today's internet traffic; over 300 hours of video are uploaded to YouTube every minute, and over 500 million hours of video surveillance are collected every day. “Training a single AI model can emit as much carbon as five cars in their lifetimes” (MIT Technology Review). Slide from: V. Sze presentation, MIT '17.

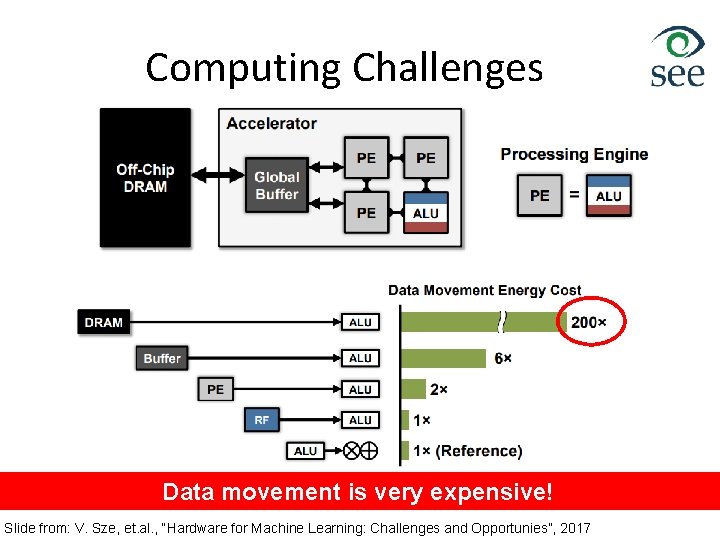

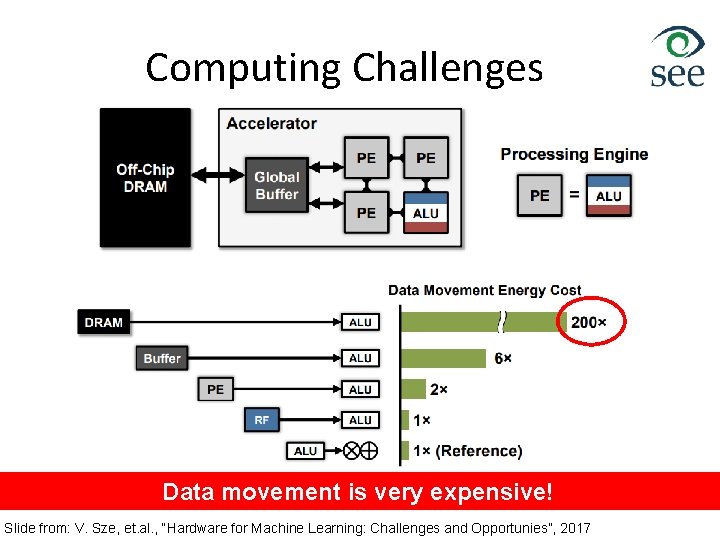

Computing Challenges: Data movement is very expensive! Slide from: V. Sze et al., “Hardware for Machine Learning: Challenges and Opportunities”, 2017.

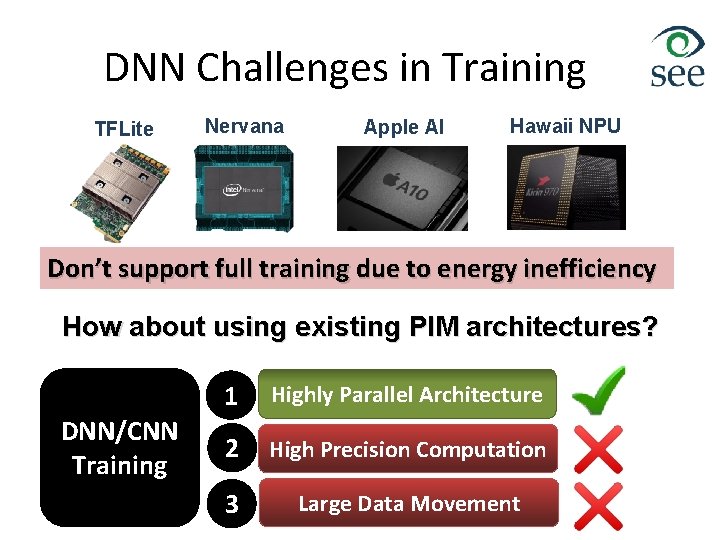

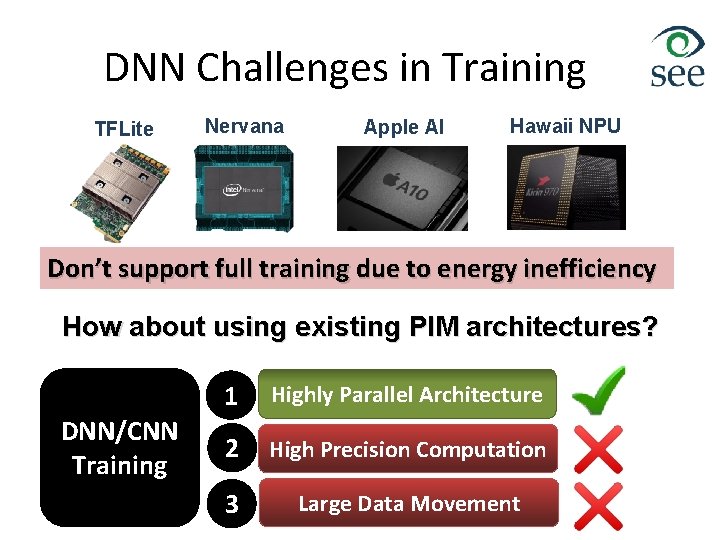

DNN Challenges in Training: Existing solutions (TFLite, Nervana, Apple AI, Huawei NPU) don't support full training due to energy inefficiency. How about using existing PIM architectures? DNN/CNN training demands:

1. A highly parallel architecture
2. High-precision computation
3. Handling of large data movement

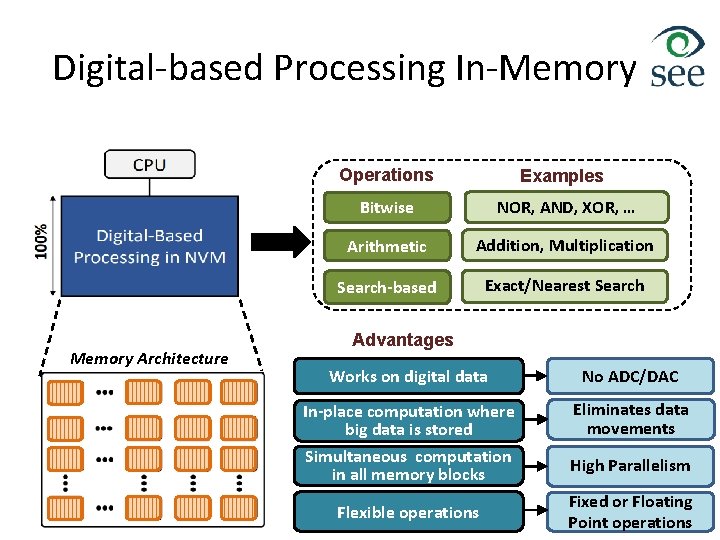

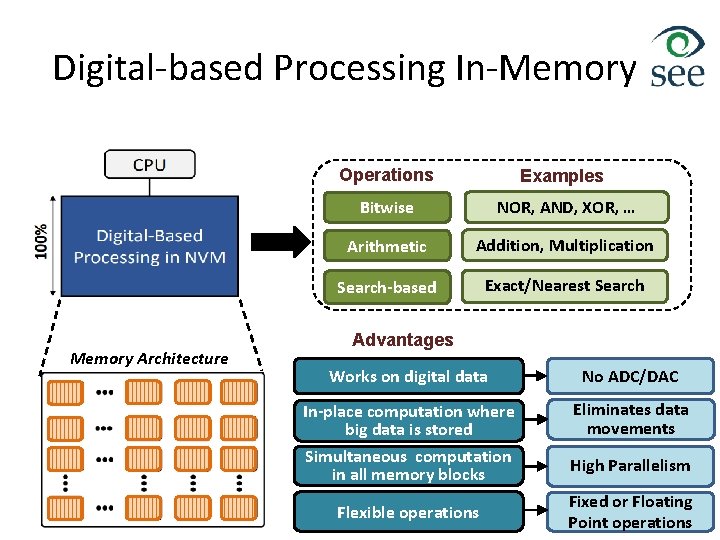

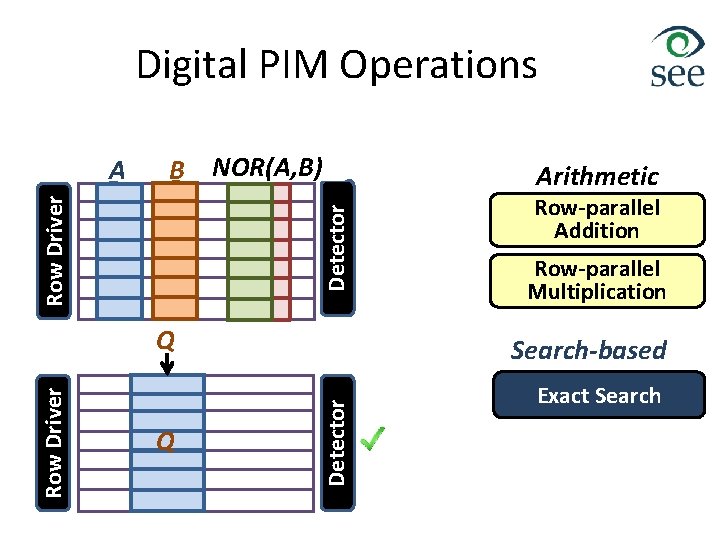

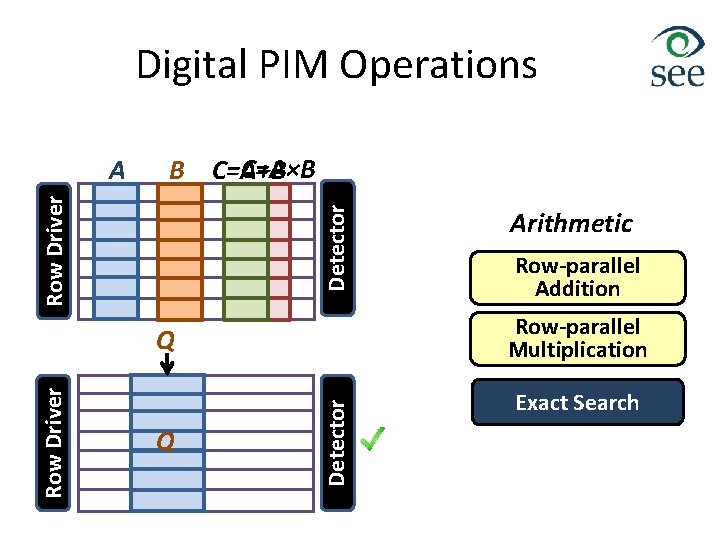

Digital-based Processing In-Memory Architecture

Operation examples:

- Bitwise: NOR, AND, XOR, …
- Arithmetic: addition, multiplication
- Search-based: exact/nearest search

Advantages:

- Works on digital data: no ADC/DAC
- In-place computation where big data is stored
- Simultaneous computation in all memory blocks
- Eliminates data movement
- Flexible operations
- High parallelism
- Fixed- or floating-point operations
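Search-based operations compare a query against every stored row at once. A minimal sketch of what the row detectors report, assuming rows are equal-length bit strings and that "nearest" means the smallest Hamming distance (the slide does not name a metric); the function names are hypothetical.

```python
def exact_search(rows, query):
    # Every row is compared with the query simultaneously in PIM;
    # the detector flags the rows that match exactly.
    return [i for i, r in enumerate(rows) if r == query]


def nearest_search(rows, query):
    # Assumed metric: Hamming distance between each row and the query.
    dists = [sum(a != b for a, b in zip(r, query)) for r in rows]
    # Return the index of the closest row (first one on ties).
    return dists.index(min(dists))


rows = ["1010", "1100", "1011"]
print(exact_search(rows, "1011"), nearest_search(rows, "0100"))
```

In hardware the comparison happens in all rows in one step; the Python loops only stand in for that parallelism.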

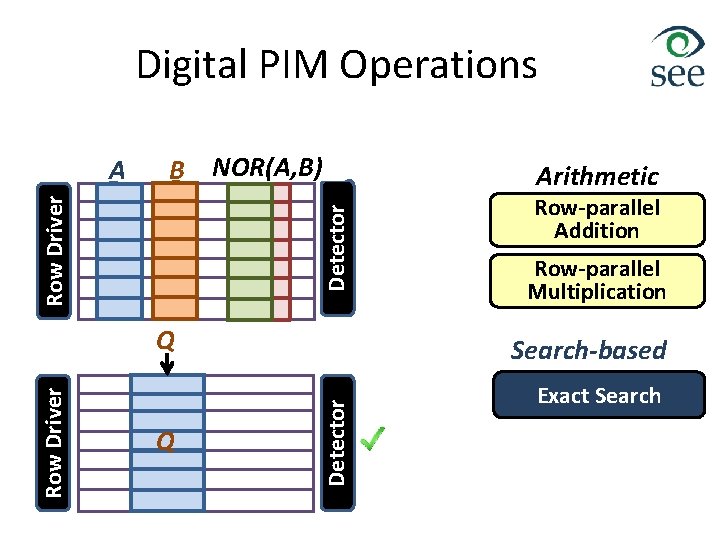

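Digital PIM Operations: Each crossbar block has a row driver and a detector. The basic operation is row-parallel NOR(A, B), which is extended to arithmetic (row-parallel addition C = A + B and row-parallel multiplication C = A × B) and to search-based operations (exact search for a query Q).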
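Row-parallel arithmetic can be built from NOR alone. As a sketch under our own assumptions (not FloatPIM's exact gate sequence): NOR is functionally complete, so NOT, OR, AND and XOR can be composed from it, and a bit-serial ripple-carry adder then adds two columns of integers, with every gate evaluated in all rows at once. Bit-columns are modeled as Python lists, and all function names are hypothetical.

```python
def nor(a, b):
    # The single primitive: bitwise NOR of two bit-columns, one result per row.
    return [1 - (x | y) for x, y in zip(a, b)]


def not_(a):
    return nor(a, a)


def or_(a, b):
    return not_(nor(a, b))


def and_(a, b):
    # a AND b == NOR(NOT a, NOT b)
    return nor(not_(a), not_(b))


def xor(a, b):
    # a XOR b == (a OR b) AND NOT(a AND b), still NOR-only underneath
    return and_(or_(a, b), not_(and_(a, b)))


def row_parallel_add(A, B, nbits):
    """Add A[r] + B[r] for every row r, one bit position per step (LSB first)."""
    rows = len(A)
    carry = [0] * rows
    result = [0] * rows
    for i in range(nbits):
        a = [(x >> i) & 1 for x in A]
        b = [(y >> i) & 1 for y in B]
        ab = xor(a, b)
        s = xor(ab, carry)                          # sum bit for all rows
        carry = or_(and_(a, b), and_(carry, ab))    # carry bit for all rows
        result = [r | (bit << i) for r, bit in zip(result, s)]
    # The final carry becomes the extra most significant bit.
    return [r | (c << nbits) for r, c in zip(result, carry)]


print(row_parallel_add([3, 5, 255, 0], [4, 7, 1, 0], 8))
```

The number of sequential steps depends on the bit width, not on the number of rows, which is why row parallelism pays off for large data.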

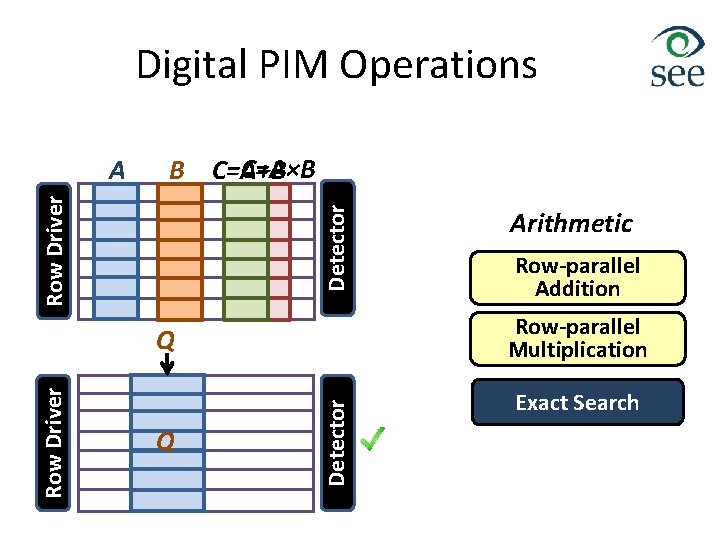

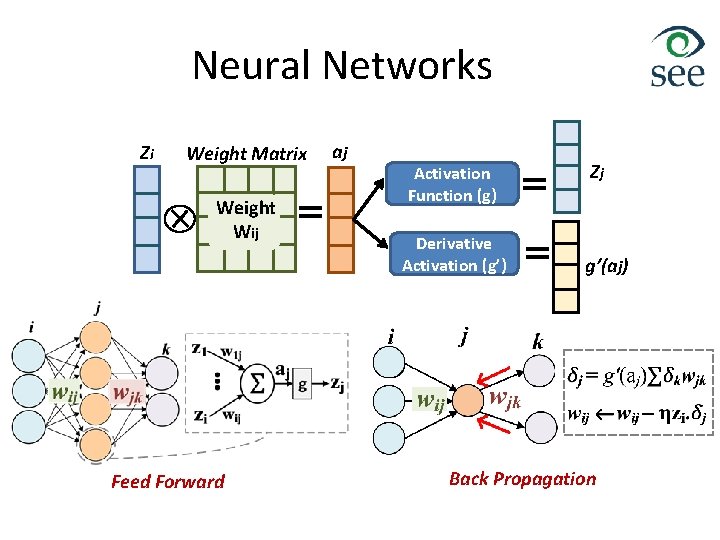

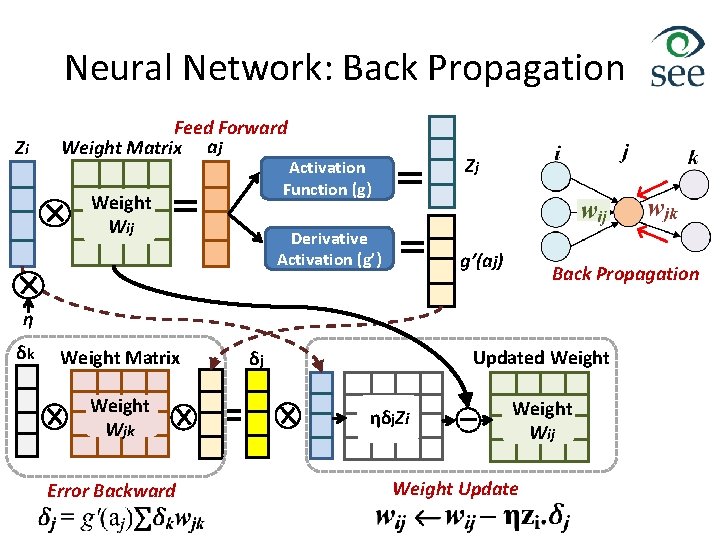

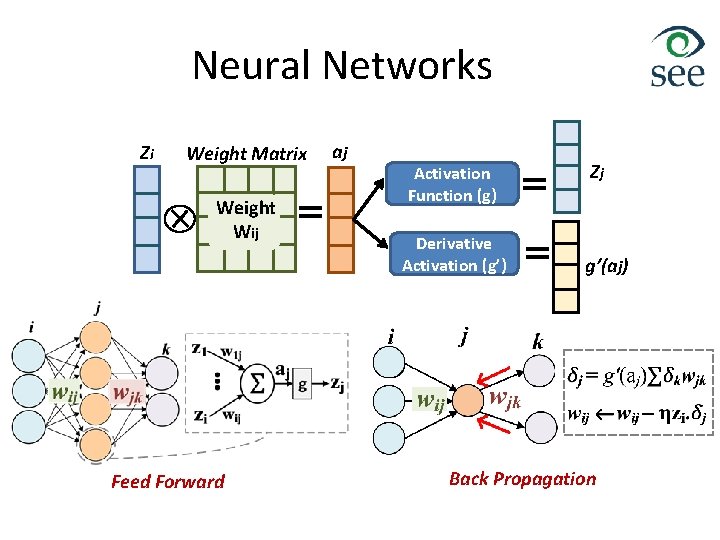

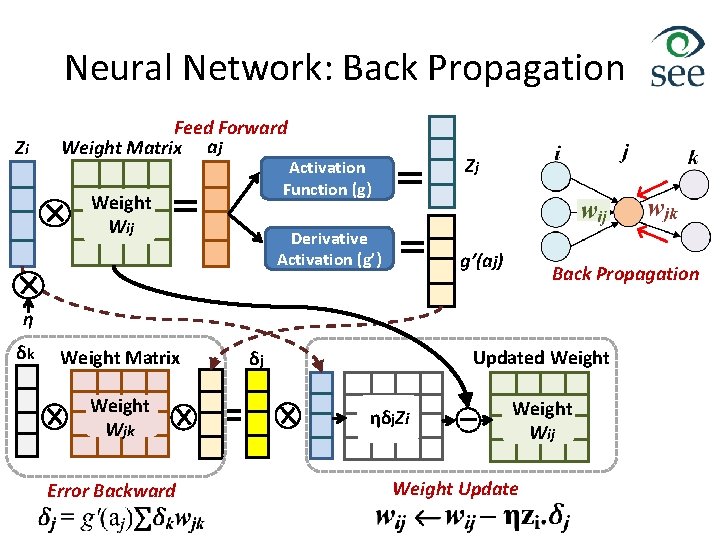

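Neural Networks: In feed forward, the inputs $Z_i$ are multiplied by the weight matrix ($W_{ij}$) to give $a_j = \sum_i W_{ij} Z_i$, and the activation function g produces $Z_j = g(a_j)$. The derivative of the activation, $g'(a_j)$, is also computed, since back propagation needs it.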
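A single fully connected layer can be sketched as follows, assuming a sigmoid activation g (the slide does not name one) and `W[i][j]` as the weight from input i to output j. The function keeps $g'(a_j)$ alongside $Z_j$, matching the values the slide says are needed later for back propagation.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def feed_forward(Z_in, W):
    """One layer: a_j = sum_i Z_in[i] * W[i][j], Z_j = g(a_j), plus g'(a_j).

    g is assumed to be a sigmoid for this sketch.
    """
    n_in, n_out = len(W), len(W[0])
    a = [sum(Z_in[i] * W[i][j] for i in range(n_in)) for j in range(n_out)]
    Z_out = [sigmoid(x) for x in a]
    # For a sigmoid, g'(a) = g(a) * (1 - g(a)), so it follows from Z_out.
    g_prime = [z * (1.0 - z) for z in Z_out]
    return Z_out, g_prime


print(feed_forward([1.0, 0.0], [[0.0, 0.0], [5.0, 5.0]]))
```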

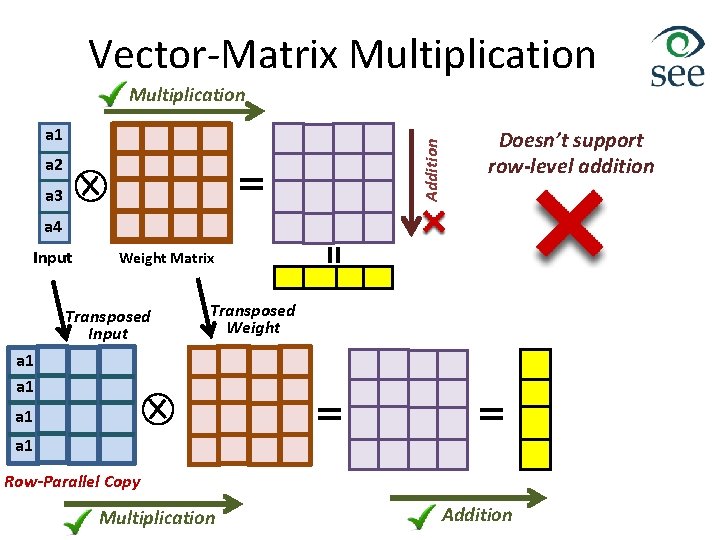

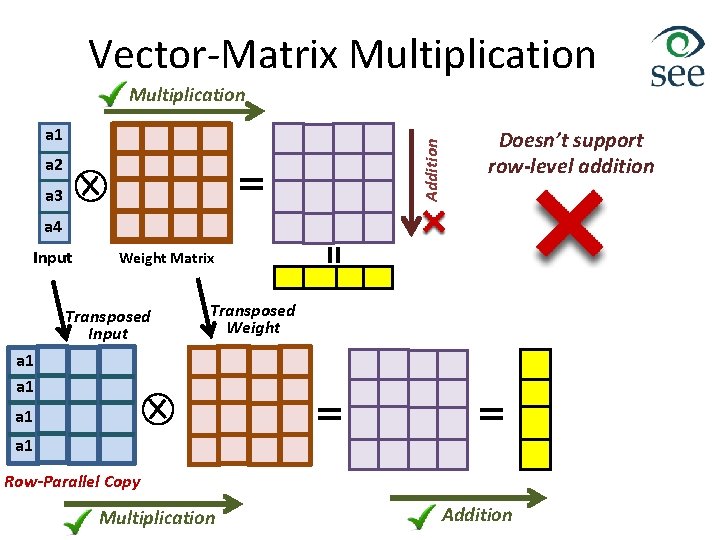

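Vector-Matrix Multiplication: The crossbar doesn't support row-level addition, i.e., it cannot sum values stored in different rows, so the naive layout cannot accumulate the outputs $a_1$ to $a_4$. Instead, the input and the weight matrix are stored transposed: a row-parallel copy places the input next to the weights in every row, and row-parallel multiplication and addition then produce all outputs at once.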
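The transposed layout can be simulated in plain Python. A minimal sketch, assuming row j of a block holds column j of W (the "transposed weight") together with a row-parallel copy of the input, so that every multiplication and addition pairs two columns inside each row and runs across all rows at once; the function name is hypothetical.

```python
def pim_vector_matrix(Z, W):
    """Compute a_j = sum_i Z[i] * W[i][j] using one memory row per output j."""
    n_in, n_out = len(W), len(W[0])
    # Row j stores the j-th weight column, i.e., one row of W transposed.
    weight_rows = [[W[i][j] for i in range(n_in)] for j in range(n_out)]
    # Row-parallel copy: each row receives its own copy of the input vector.
    input_rows = [list(Z) for _ in range(n_out)]
    # Row-parallel multiplication: for each column i, all rows multiply at once.
    products = [[input_rows[j][i] * weight_rows[j][i] for i in range(n_in)]
                for j in range(n_out)]
    # Row-parallel addition: accumulate the product columns inside each row.
    a = [0] * n_out
    for i in range(n_in):          # one sequential step per column...
        for j in range(n_out):     # ...applied to every row simultaneously in PIM
            a[j] += products[j][i]
    return a


print(pim_vector_matrix([1, 2], [[1, 2, 3], [4, 5, 6]]))
```

With this layout the number of sequential steps grows with the input length, not with the number of outputs, because every output row works in parallel.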

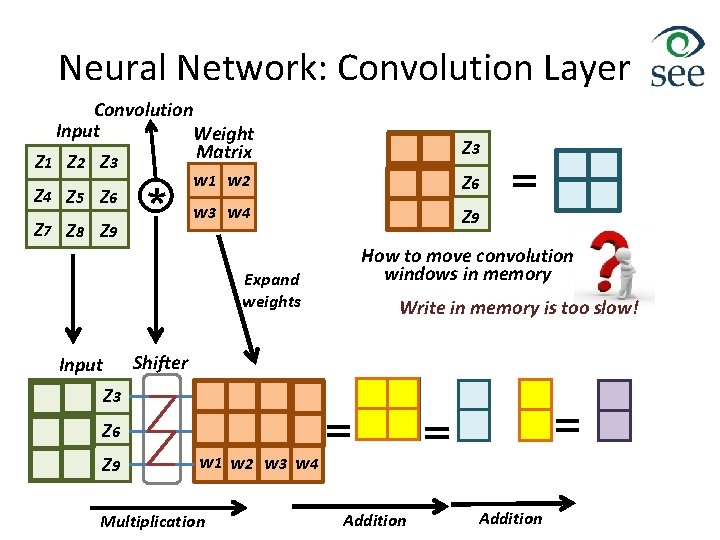

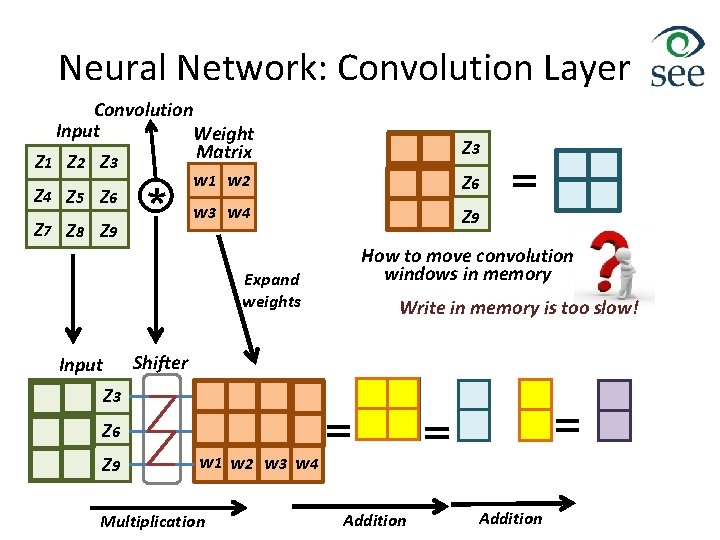

Neural Network: Convolution Layer. A 3×3 input ($Z_1$ to $Z_9$) is convolved with a 2×2 weight kernel ($w_1$ to $w_4$). The weights are expanded so that they sit next to the input values they multiply. The challenge is how to move the convolution window in memory, since writing to memory is too slow; instead, a shifter realigns the stored input rows for each window position, followed by row-parallel multiplication and addition.
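The idea of shifting data instead of rewriting it can be checked with a shift-and-accumulate formulation: for each kernel offset, one shifted view of the input is multiplied by a single weight and accumulated into all output positions at once. This is a sketch under our own assumptions (valid padding, stride 1, cross-correlation as used in DNN layers), not FloatPIM's exact memory layout; the function names are hypothetical.

```python
def shift(Z, di, dj, out_h, out_w):
    # Realign the input so that element (r, c) of the view is Z[r + di][c + dj].
    return [[Z[r + di][c + dj] for c in range(out_w)] for r in range(out_h)]


def conv2d_shift_accumulate(Z, w):
    kh, kw = len(w), len(w[0])
    out_h, out_w = len(Z) - kh + 1, len(Z[0]) - kw + 1
    out = [[0] * out_w for _ in range(out_h)]
    for di in range(kh):
        for dj in range(kw):
            view = shift(Z, di, dj, out_h, out_w)
            # One multiply-add step covering every output position at once.
            for r in range(out_h):
                for c in range(out_w):
                    out[r][c] += w[di][dj] * view[r][c]
    return out


Z = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
print(conv2d_shift_accumulate(Z, [[1, 2], [3, 4]]))
```

The loop over kernel offsets is short (four steps for a 2×2 kernel), while each step touches every output position, which is the part a row-parallel memory can do simultaneously.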

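Neural Network: Back Propagation. Feed forward computes $a_j = \sum_i W_{ij} Z_i$ and $Z_j = g(a_j)$, keeping $g'(a_j)$. Back propagation then runs two steps:

- Error backward: the error $\delta_k$ of the next layer is sent back through the weight matrix $W_{jk}$, giving $\delta_j = g'(a_j) \sum_k W_{jk}\,\delta_k$.
- Weight update: each weight $W_{ij}$ is adjusted by the learning-rate-scaled term $\eta\,\delta_j Z_i$, giving the updated weight $W_{ij} \leftarrow W_{ij} - \eta\,\delta_j Z_i$.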
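Both back-propagation steps for one layer fit in a few lines. A minimal sketch, assuming δ denotes the derivative of the loss with respect to a (so the update subtracts $\eta\,\delta_j Z_i$) and that `W_jk[j][k]` and `W_ij[i][j]` are indexed like the equations; the function name is hypothetical.

```python
def backprop_layer(delta_k, W_jk, g_prime_j, Z_i, W_ij, eta):
    """Return delta_j and the updated W_ij for one layer."""
    n_j, n_k = len(g_prime_j), len(delta_k)
    # Error backward: delta_j = g'(a_j) * sum_k W_jk * delta_k
    delta_j = [g_prime_j[j] * sum(W_jk[j][k] * delta_k[k] for k in range(n_k))
               for j in range(n_j)]
    # Weight update: W_ij <- W_ij - eta * delta_j * Z_i
    W_new = [[W_ij[i][j] - eta * delta_j[j] * Z_i[i] for j in range(n_j)]
             for i in range(len(Z_i))]
    return delta_j, W_new


print(backprop_layer([1.0], [[2.0], [3.0]], [0.5, 1.0], [1.0], [[0.0, 0.0]], 0.1))
```

Note that the update needs $g'(a_j)$ and $Z_i$ from the forward pass, which is why they are kept in memory during feed forward.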

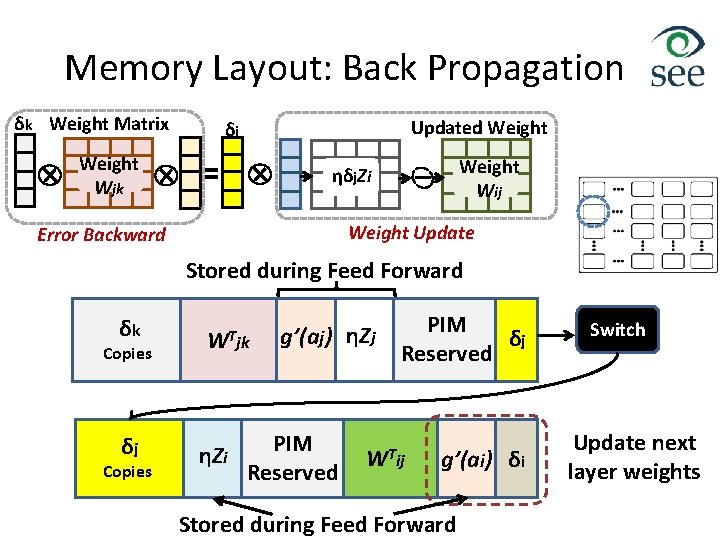

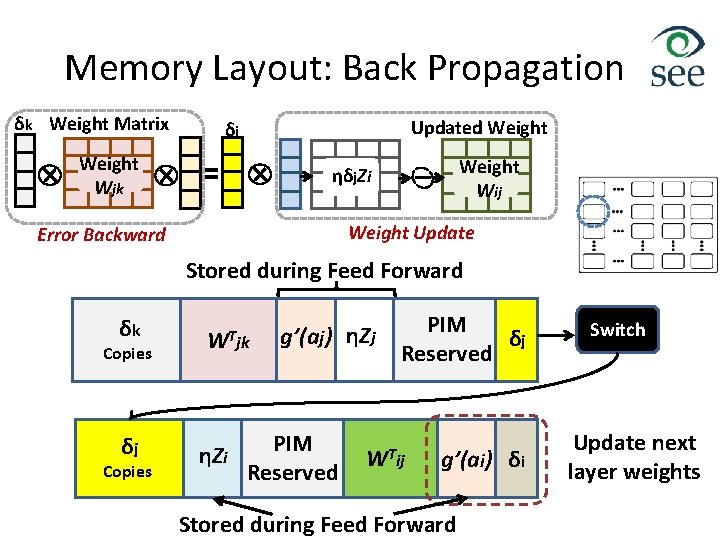

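Memory Layout: Back Propagation. Each block keeps, alongside columns reserved for PIM computation, copies of the incoming error $\delta_k$, the transposed weights $W^T_{jk}$, and the values stored during feed forward (the η-scaled activations $\eta Z_i$, $\eta Z_j$ and the derivatives $g'(a_j)$). It computes the error backward ($\delta_j$) and the weight update ($\eta\,\delta_j Z_i$). Copies of $\delta_j$ then pass through a switch to the block holding $W^T_{ij}$ and $g'(a_i)$ (also stored during feed forward), which computes $\delta_i$; the switch also carries the data used to update the next layer's weights.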

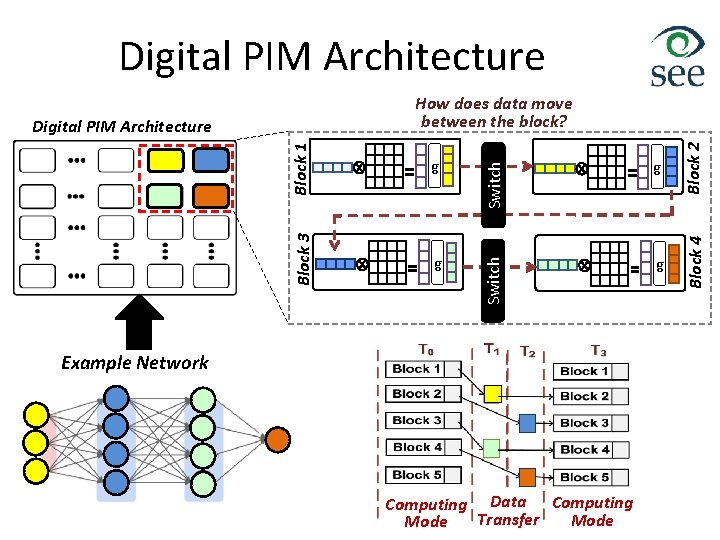

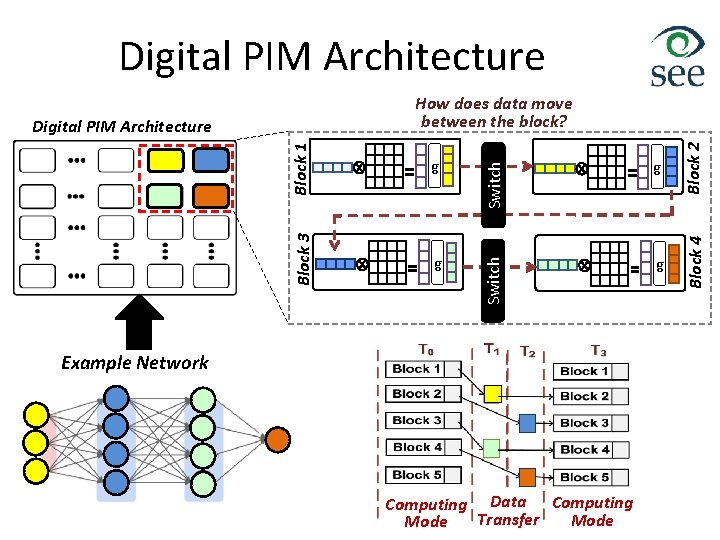

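Digital PIM Architecture: The memory is divided into blocks (Block 1 to Block 4 in the example network, each computing a layer's operations, including the activation g) connected through switches. How does data move between the blocks? The architecture alternates between computing mode, in which each block computes in place, and data transfer mode, in which the switches pass results to the next block.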

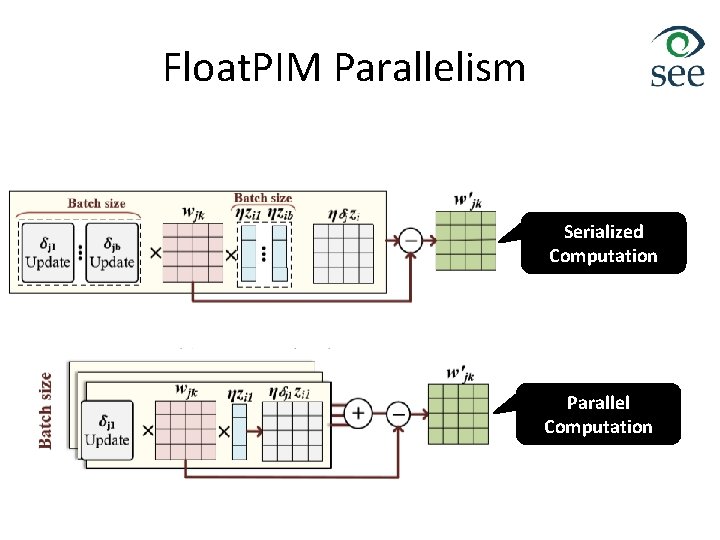

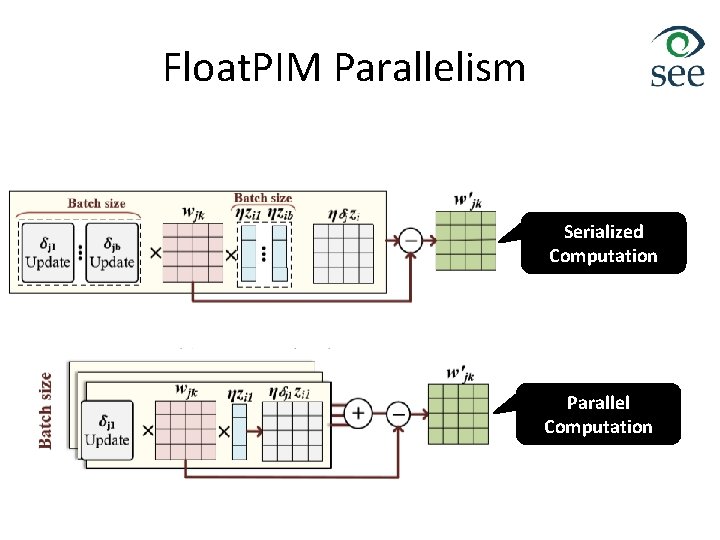

FloatPIM Parallelism: serialized computation vs. parallel computation.
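The gap between serialized and parallel computation can be illustrated with a simple pipeline model. This is a sketch under assumptions that are ours, not the slide's: each layer occupies one block, one block step (compute plus transfer) takes one time unit, and in the parallel case different inputs occupy different blocks at the same time. All function names are hypothetical.

```python
def serialized_steps(num_inputs, num_layers):
    # Only one block is busy at a time: each input walks through all
    # layers before the next input starts.
    return num_inputs * num_layers


def pipelined_steps(num_inputs, num_layers):
    # Each block works on a different input in the same step; after a fill
    # phase of num_layers - 1 steps, one input finishes per step.
    return num_layers + num_inputs - 1


def schedule(num_inputs, num_layers):
    """For each time step, map block (layer) index -> input index being processed."""
    steps = []
    for t in range(pipelined_steps(num_inputs, num_layers)):
        busy = {}
        for layer in range(num_layers):
            x = t - layer
            if 0 <= x < num_inputs:
                busy[layer] = x
        steps.append(busy)
    return steps


print(serialized_steps(8, 4), pipelined_steps(8, 4))
print(schedule(2, 2))
```

Under this model the parallel schedule approaches one input per step as the batch grows, while the serialized schedule always pays the full depth of the network per input.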

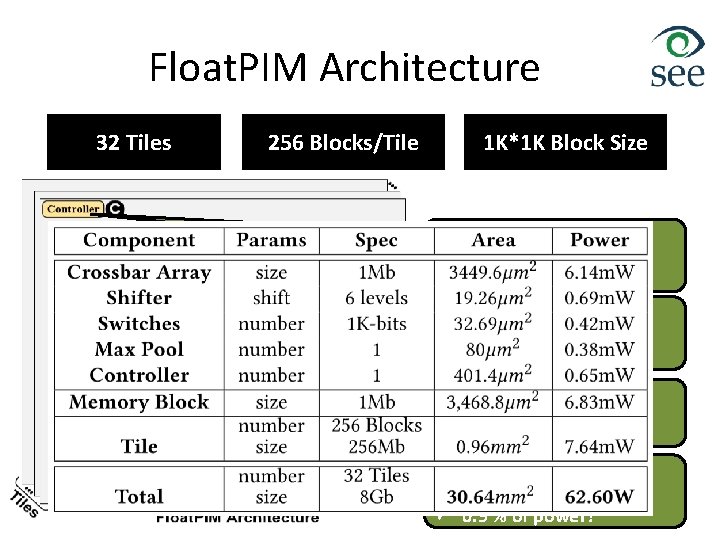

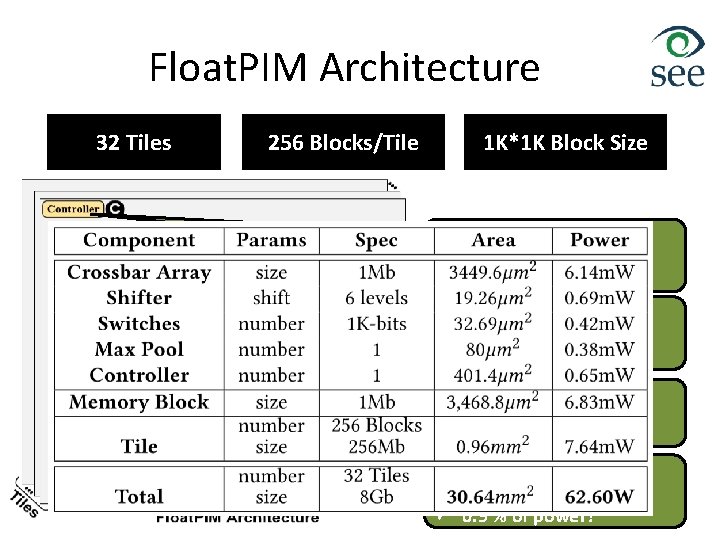

FloatPIM Architecture: 32 tiles, 256 blocks per tile, 1K×1K block size.

- Controller per tile: 11.5% of area, 9.7% of power
- Crossbar array (1K×1K): 99% of area, 89% of power
- 6-level barrel shifter: 0.5% of area, ~10% of power
- Switches: 6.3% of area, 0.9% of power
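A barrel shifter with six levels can, in the usual logarithmic design, shift by any amount from 0 to 63: stage k applies a fixed shift of 2^k positions when bit k of the shift amount is set. A minimal sketch of that structure, assuming a rotating variant (bits shifted out re-enter at the other end); whether FloatPIM's shifter rotates or fills with zeros is not stated in the slides, and the function name is hypothetical.

```python
def barrel_rotate(word, amount, levels=6):
    """Rotate the list `word` left by `amount` positions using `levels` stages.

    Stage k rotates by 2**k positions when bit k of `amount` is 1, so a
    6-level shifter covers every amount from 0 to 63.
    """
    if not 0 <= amount < 2 ** levels:
        raise ValueError("amount out of range for this many levels")
    out = list(word)
    n = len(out)
    for k in range(levels):
        if (amount >> k) & 1 and n:
            s = (2 ** k) % n
            out = out[s:] + out[:s]  # fixed rotation applied by stage k
    return out


print(barrel_rotate(list(range(8)), 5))
```

Each stage is a fixed wiring pattern selected by one control bit, so the delay grows with the number of levels (6) rather than with the shift amount.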

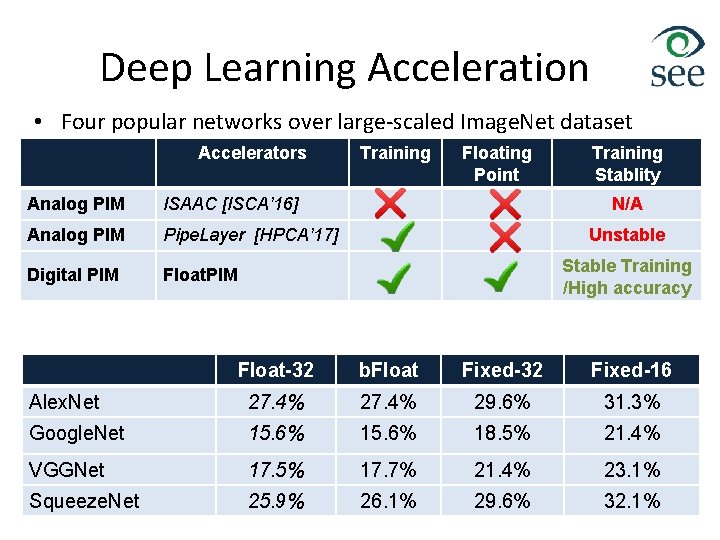

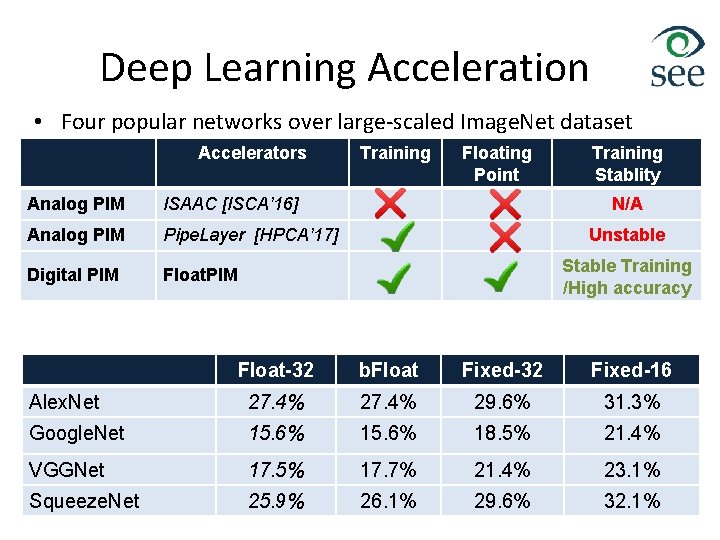

Deep Learning Acceleration: four popular networks on the large-scale ImageNet dataset.

Accelerators compared on training support, floating-point training, and training stability:

- ISAAC [ISCA'16] (analog PIM): training stability N/A
- PipeLayer [HPCA'17] (analog PIM): unstable training
- FloatPIM (digital PIM): stable training, high accuracy

Error rate by data format (Float-32, bFloat, Fixed-32, Fixed-16):

- AlexNet: 27.4%, 29.6%, 31.3% (three values given)
- GoogleNet: 15.6%, 18.5%, 21.4% (three values given)
- VGGNet: 17.5%, 17.7%, 21.4%, 23.1%
- SqueezeNet: 25.9%, 26.1%, 29.6%, 32.1%

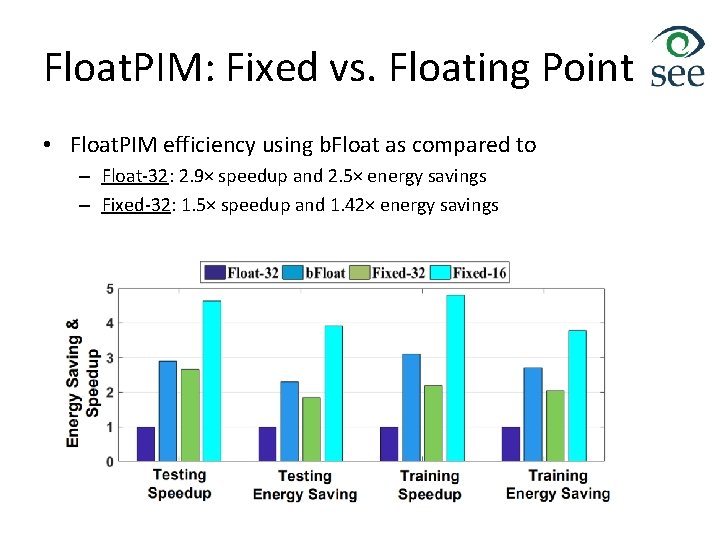

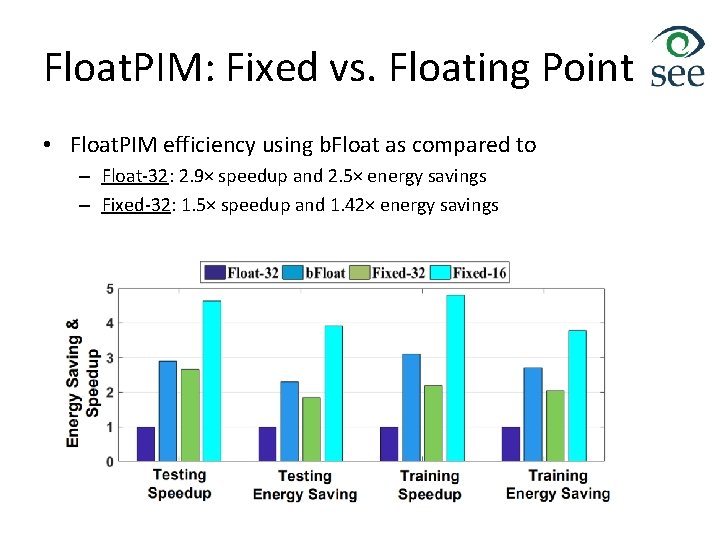

FloatPIM: Fixed vs. Floating Point. FloatPIM efficiency using bFloat, compared to:

- Float-32: 2.9× speedup and 2.5× energy savings
- Fixed-32: 1.5× speedup and 1.42× energy savings
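bFloat (bfloat16) keeps float32's sign bit and 8-bit exponent but only 7 mantissa bits, so it covers the same range with fewer bits to process. A minimal sketch of float32-to-bfloat16 conversion with round-to-nearest-even, using only Python's `struct` module; it handles finite values only (NaN is not special-cased), and the function names are ours, not FloatPIM's.

```python
import struct


def float32_bits(x):
    # Reinterpret x (rounded to float32) as an unsigned 32-bit integer.
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_float32(b):
    return struct.unpack("<f", struct.pack("<I", b))[0]


def to_bfloat16(x):
    """Round x to bfloat16 (1 sign, 8 exponent, 7 mantissa bits), returned as a float.

    Uses round-to-nearest-even on the 16 dropped bits; finite inputs only.
    """
    b = float32_bits(x)
    rounding_bias = 0x7FFF + ((b >> 16) & 1)  # ties go to the even upper half
    b = ((b + rounding_bias) >> 16) << 16     # drop the low 16 bits
    return bits_float32(b & 0xFFFFFFFF)


print(to_bfloat16(3.14159))
```

The kept upper 16 bits are exactly a bfloat16 word; the low half is zeroed so the value can still be printed as an ordinary float.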

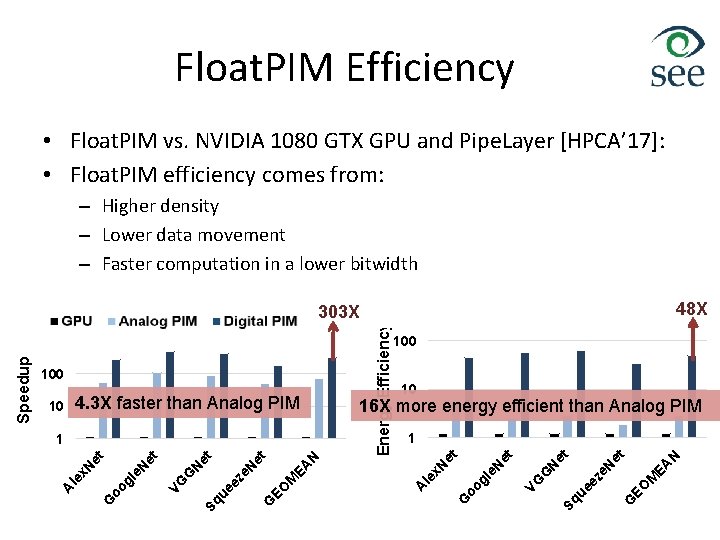

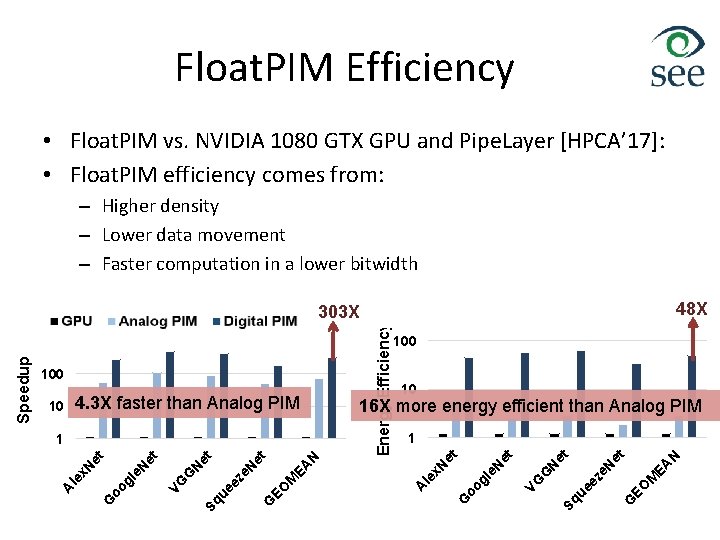

FloatPIM Efficiency: FloatPIM vs. NVIDIA GTX 1080 GPU and PipeLayer [HPCA'17]:

- 303× speedup and 48× energy efficiency over the GPU
- 4.3× faster and 16× more energy efficient than analog PIM

FloatPIM's efficiency comes from:

- Higher density
- Lower data movement
- Faster computation in a lower bitwidth

(Figure: speedup and energy efficiency, log scale, for AlexNet, VGGNet, GoogleNet, SqueezeNet and GEOMEAN.)
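The chart's GEOMEAN bars summarize per-network ratios such as speedups. Assuming GEOMEAN is the usual geometric mean (the per-network values themselves are not recoverable from the text), the aggregation can be sketched as follows; the function name is hypothetical and the example input is a toy value, not a FloatPIM result.

```python
import math


def geomean(ratios):
    """Geometric mean of positive ratios: exp(mean(log(r)))."""
    if not ratios or any(r <= 0 for r in ratios):
        raise ValueError("ratios must be a non-empty list of positive numbers")
    return math.exp(sum(math.log(r) for r in ratios) / len(ratios))


# Toy input only: a 2x and an 8x speedup average to 4x geometrically.
print(geomean([2.0, 8.0]))
```

The geometric mean is the conventional way to average ratios, because a 2× gain and a 2× loss cancel out instead of skewing the average upward.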

Conclusion:

- Several challenges exist in analog-based computing in today's PIM technology
- Proposed the idea of a digital-based PIM architecture that:
  - Exploits analog characteristics of NVMs to support row-parallel NOR operations
  - Extends them to row-parallel arithmetic (addition/multiplication)
- Maps the entire DNN training/inference to a crossbar memory with minimal changes to the memory
- Results compared to:
  - NVIDIA GTX 1080 GPU: 302× faster and 48× more energy efficient
  - Analog PIM [HPCA'17]: 4.3× faster and 16× more energy efficient