Flight Time Allocation Using Reinforcement Learning Ville Mattila

- Slides: 28

Flight Time Allocation Using Reinforcement Learning
Ville Mattila and Kai Virtanen
Systems Analysis Laboratory, Helsinki University of Technology
www.sal.tkk.fi
Ville.A.Mattila@tkk.fi

Abstract

Fighter aircraft are maintained periodically on the basis of accumulated usage hours. In a fleet of aircraft, the timing of the maintenance therefore depends on the allocation of flight time. The timing is also subject to a number of uncertainties such as failures of the aircraft. A fleet with limited maintenance resources is faced with a design problem in assigning the aircraft to flight missions so that the overall amount of maintenance needs will not exceed the maintenance capacity. We consider the assignment of aircraft to flight missions as a Markov Decision Problem over a finite time horizon. The average availability of aircraft is taken as the optimization criterion. We describe the fleet operations with a simulation model. An efficient assignment policy is found using a Reinforcement Learning technique called Q-learning, which presents actions to the simulation and observes the resulting system behavior. We compare the performance of the Q-learning algorithm to a set of heuristic assignment rules using problems involving varying numbers of aircraft and types of periodic maintenance. Moreover, we consider the possibilities of practical implementation of the produced solutions.

1. The Flight Time Allocation Problem

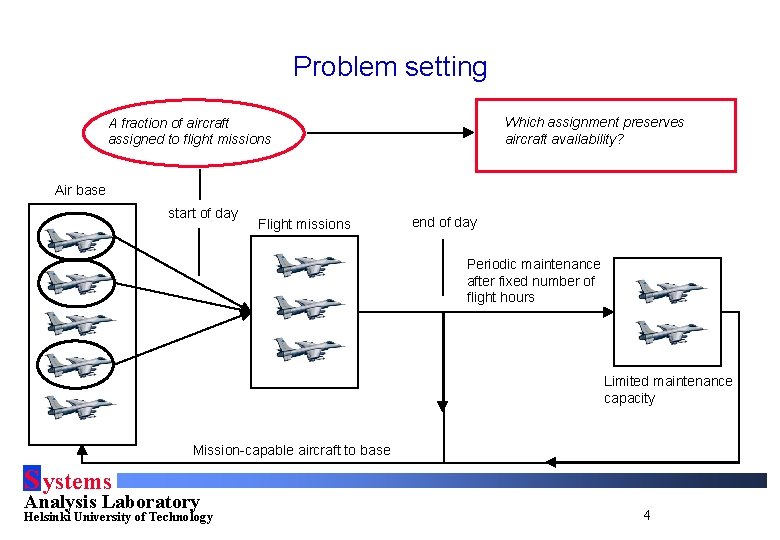

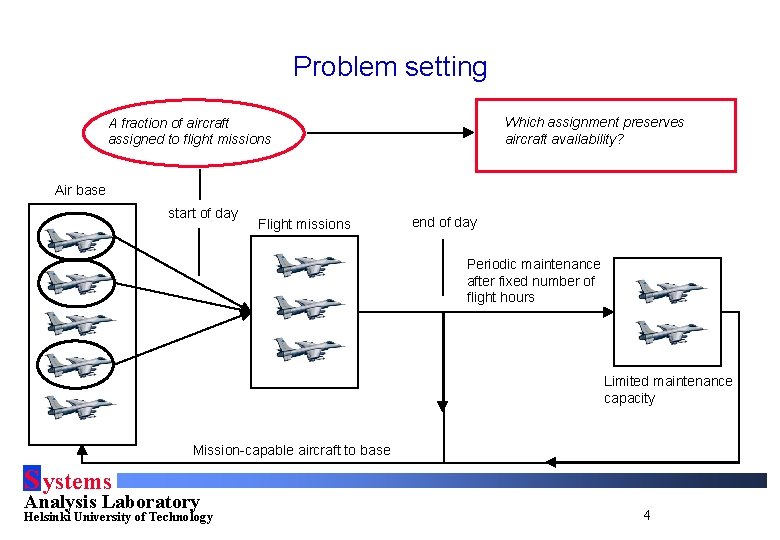

Problem setting

Which assignment preserves aircraft availability?

Diagram: air base → (start of day) a fraction of aircraft assigned to flight missions → flight missions → (end of day) periodic maintenance after a fixed number of flight hours, with limited maintenance capacity → mission-capable aircraft to base.

The flight time allocation problem

• The timing of periodic maintenance depends on the assignment of aircraft to flight missions, i.e., the allocation of flight time
→ Problem: How to allocate flight time so that aircraft availability is preserved

Availability as performance indicator

• Availability: the proportion of mission-capable aircraft to the total size of the fleet
• One of the primary performance indicators of operational capability in actual maintenance-related decision making
• We consider average availability over a finite time horizon
  – Need to study operational capability given a certain initial state
  – Operational environment remains the same for a limited amount of time

Difficulty of flight time allocation

• Uncertainties
  – Maintenance duration
  – Accumulated flight hours during missions
  – Unplanned maintenance through failure repairs
  – Unplanned maintenance through battle damage repairs
• Dimension of the problem
  – Potentially a large number of aircraft
  – Different types of periodic maintenance
  – Multiple maintenance facilities of different levels

2. Problem formulation

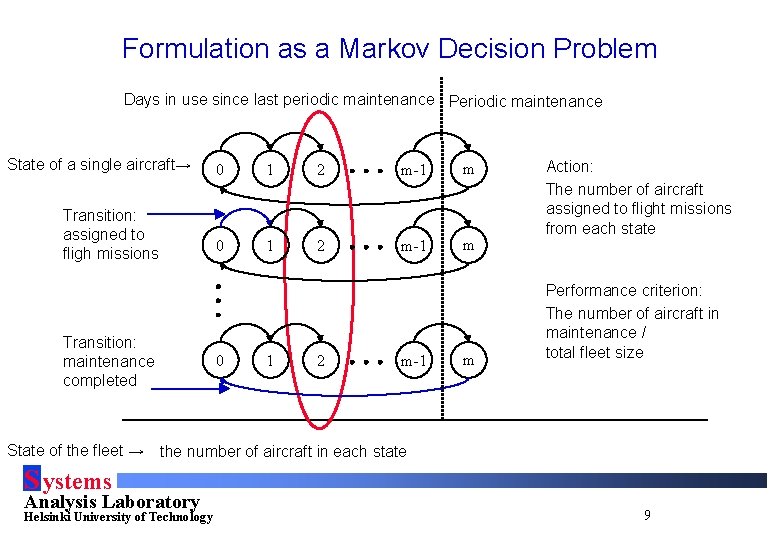

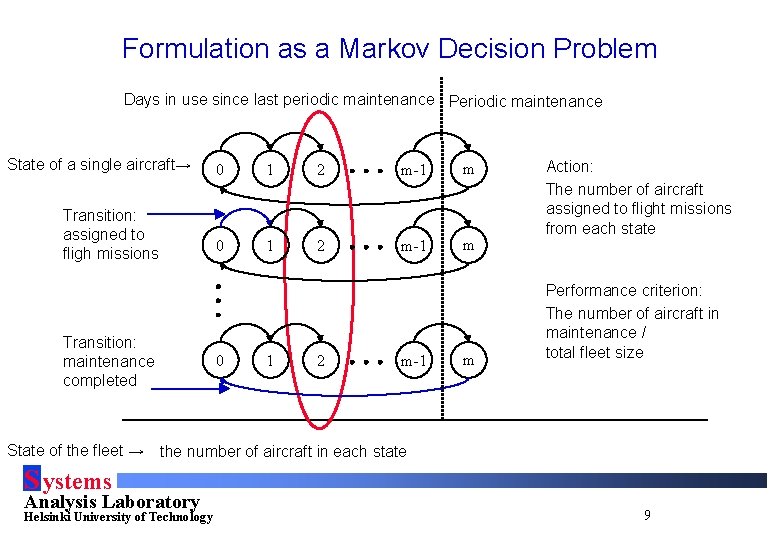

Formulation as a Markov Decision Problem

• State of a single aircraft: days in use since last periodic maintenance (0, 1, 2, …, m-1), or periodic maintenance (m)
• Transitions: assigned to flight missions; maintenance completed
• State of the fleet: the number of aircraft in each state
• Action: the number of aircraft assigned to flight missions from each state
• Performance criterion: the number of aircraft in maintenance / total fleet size

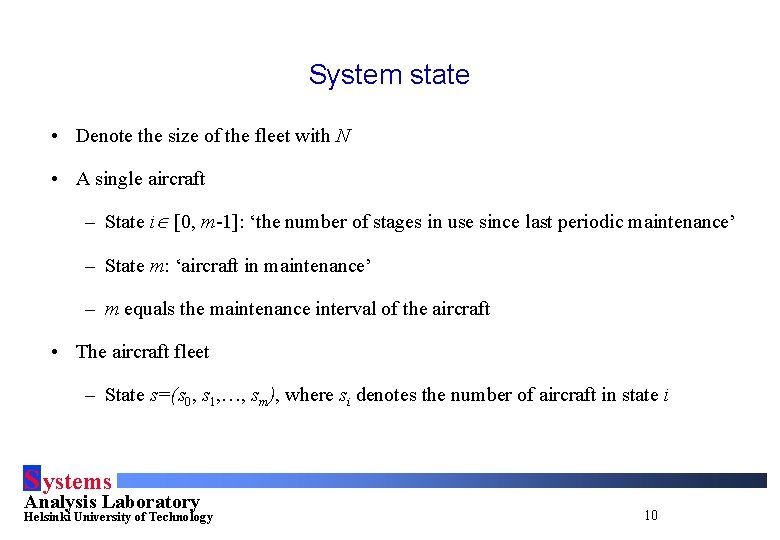

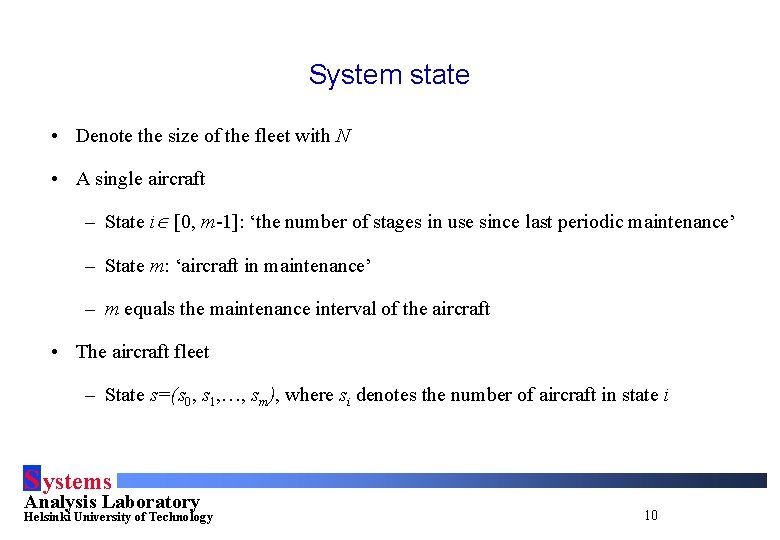

System state

• Denote the size of the fleet by $N$
• A single aircraft
  – State $i \in \{0, 1, \dots, m-1\}$: 'the number of stages in use since last periodic maintenance'
  – State $m$: 'aircraft in maintenance'
  – $m$ equals the maintenance interval of the aircraft
• The aircraft fleet
  – State $s = (s_0, s_1, \dots, s_m)$, where $s_i$ denotes the number of aircraft in state $i$
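To get a feel for the size of the fleet state space, the state vector can be enumerated directly. The sketch below is illustrative only: the function name `fleet_states` is hypothetical, and it assumes that every aircraft is in exactly one of the m+1 single-aircraft states, so that the components of s sum to N.

```python
from itertools import product
from math import comb


def fleet_states(N, m):
    """Yield every fleet state s = (s_0, ..., s_m) whose components sum to N.

    Assumption of this sketch: each aircraft occupies exactly one of the
    m + 1 single-aircraft states, so sum(s) == N.
    """
    for head in product(range(N + 1), repeat=m):
        in_maintenance = N - sum(head)
        if in_maintenance >= 0:
            yield head + (in_maintenance,)


states = list(fleet_states(N=4, m=2))
# The count equals the number of ways to split N aircraft over m + 1 states.
print(len(states), comb(4 + 2, 2))
```

The count grows as the binomial coefficient C(N+m, m), which is one way to see why the dimension of the problem becomes a concern for larger fleets and longer maintenance intervals.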

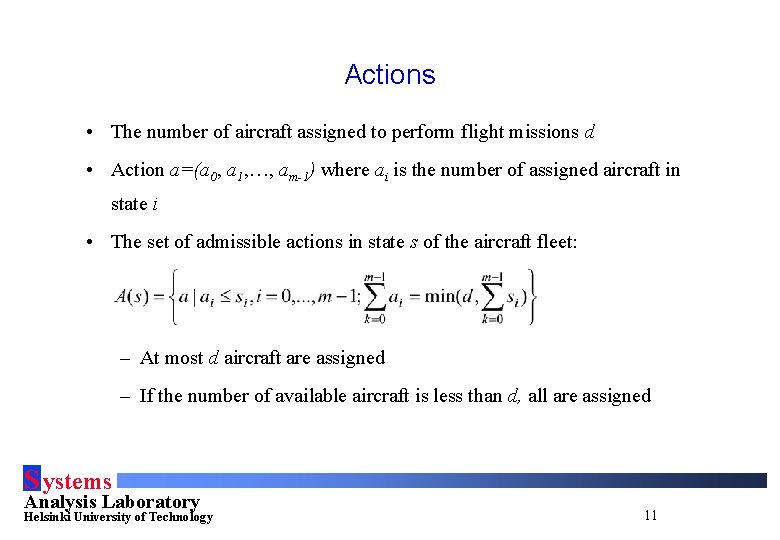

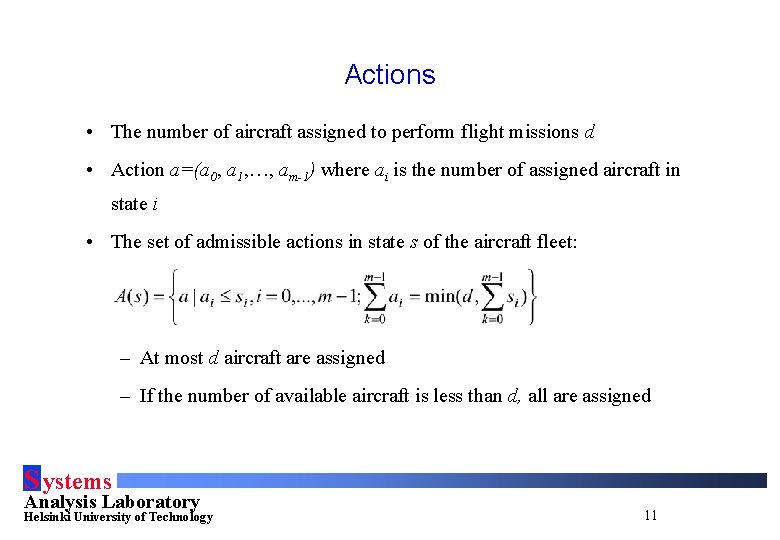

Actions

• $d$: the number of aircraft assigned to perform flight missions
• Action $a = (a_0, a_1, \dots, a_{m-1})$, where $a_i$ is the number of assigned aircraft in state $i$
• The set $A(s)$ of admissible actions in state $s$ of the aircraft fleet:
  – At most $d$ aircraft are assigned
  – If the number of available aircraft is less than $d$, all are assigned
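The admissible action set can be sketched as a short enumeration. This is an interpretation rather than the authors' code: it reads the two conditions as "exactly min(d, available) aircraft are assigned", and the function name `admissible_actions` is hypothetical.

```python
from itertools import product


def admissible_actions(s, d):
    """List actions a = (a_0, ..., a_{m-1}) with 0 <= a_i <= s_i.

    Assumption of this sketch: exactly min(d, available) aircraft are
    assigned, where 'available' counts aircraft outside maintenance.
    """
    usable = s[:-1]  # the last component s_m counts aircraft in maintenance
    n_assign = min(d, sum(usable))
    candidates = product(*(range(k + 1) for k in usable))
    return [a for a in candidates if sum(a) == n_assign]


print(admissible_actions((1, 2, 1), d=1))
print(admissible_actions((0, 0, 4), d=1))
```

When no aircraft is available, the only admissible action is the all-zero vector, so the action set is never empty.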

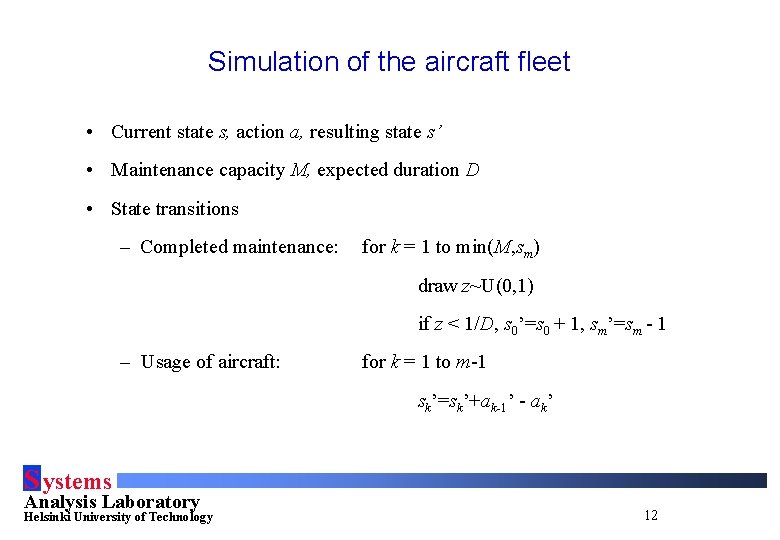

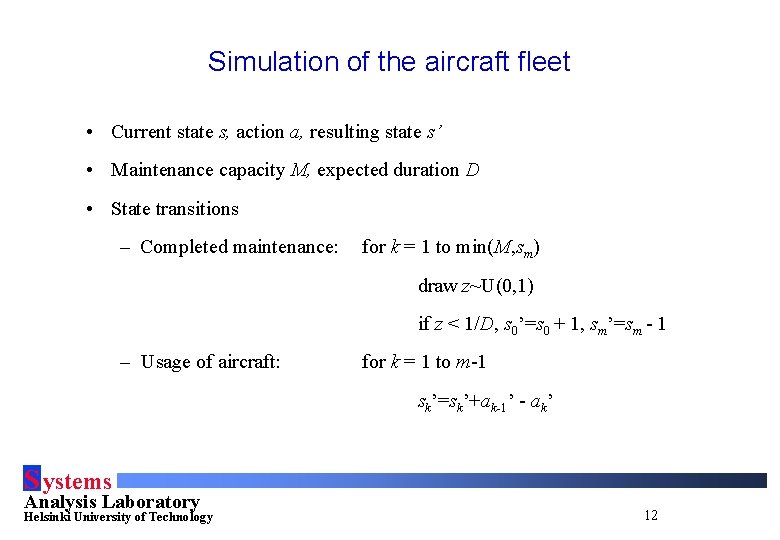

Simulation of the aircraft fleet

• Current state $s$, action $a$, resulting state $s'$
• Maintenance capacity $M$, expected duration $D$
• State transitions (with $s'$ initialized to $s$)
  – Completed maintenance: for $k = 1$ to $\min(M, s_m)$, draw $z \sim U(0, 1)$; if $z < 1/D$, set $s_0' = s_0' + 1$ and $s_m' = s_m' - 1$
  – Usage of aircraft: for $k = 1$ to $m-1$, set $s_k' = s_k' + a_{k-1} - a_k$
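One simulated stage could look like the following minimal sketch. It is not the authors' simulation model. The slide states the usage update only for the interior states, so the boundary handling (assigned aircraft leave state 0, and aircraft assigned from state m-1 enter maintenance) is an assumption here, as are the function name `step` and the choice to return availability as the reward.

```python
import random


def step(s, a, M, D, rng=random):
    """Simulate one stage and return (next_state, availability).

    s: fleet state (s_0, ..., s_m); a: action (a_0, ..., a_{m-1}).
    M: maintenance capacity; D: expected maintenance duration in stages.
    """
    m = len(s) - 1
    nxt = list(s)
    # Completed maintenance: each aircraft being serviced (at most M of them)
    # finishes with probability 1/D and returns to state 0.
    for _ in range(min(M, s[m])):
        if rng.random() < 1.0 / D:
            nxt[0] += 1
            nxt[m] -= 1
    # Usage: an aircraft assigned from state i moves to state i + 1.
    # ASSUMPTION: state m-1 feeds directly into maintenance (state m).
    for i in range(m):
        nxt[i] -= a[i]
        nxt[i + 1] += a[i]
    availability = (sum(nxt) - nxt[m]) / sum(nxt)
    return tuple(nxt), availability


print(step((1, 2, 1), (0, 1), M=1, D=2, rng=random.Random(0)))
```

With D = 1 the maintenance draw always succeeds, which gives a convenient deterministic check of the bookkeeping.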

Optimization criterion

• The immediate reward $r(s, a, s')$ is the aircraft availability in $s'$
• Optimization criterion: average availability over a finite number of stages
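Written out, the criterion might take the following form. This is a sketch under two assumptions that the slides do not state explicitly: every aircraft outside maintenance counts as mission-capable, and the stages are indexed by t = 0, …, L-1 with L the number of stages.

```latex
r(s, a, s') = \frac{N - s'_m}{N},
\qquad
\bar{r} = \frac{1}{L} \sum_{t=0}^{L-1} r(s_t, a_t, s_{t+1})
```

For instance, with N = 4 and one aircraft in maintenance after the transition, the immediate reward would be 3/4.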

3. The reinforcement learning approach

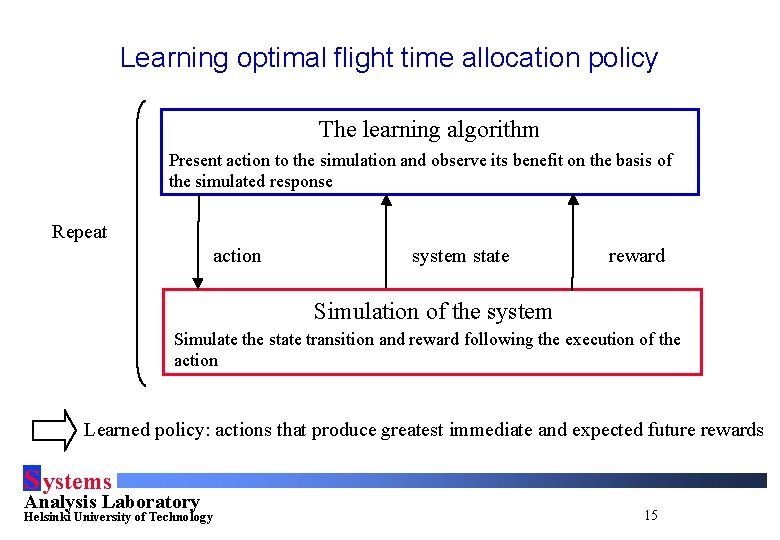

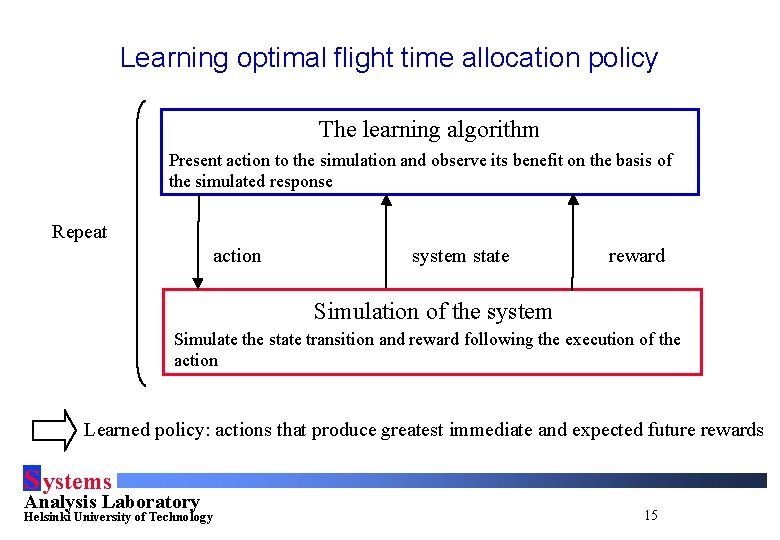

Learning optimal flight time allocation policy

• The learning algorithm: present an action to the simulation and observe its benefit on the basis of the simulated response
• Simulation of the system: simulate the state transition and reward following the execution of the action
• The algorithm passes the action to the simulation, the simulation returns the system state and reward, and the loop repeats
• Learned policy: actions that produce the greatest immediate and expected future rewards

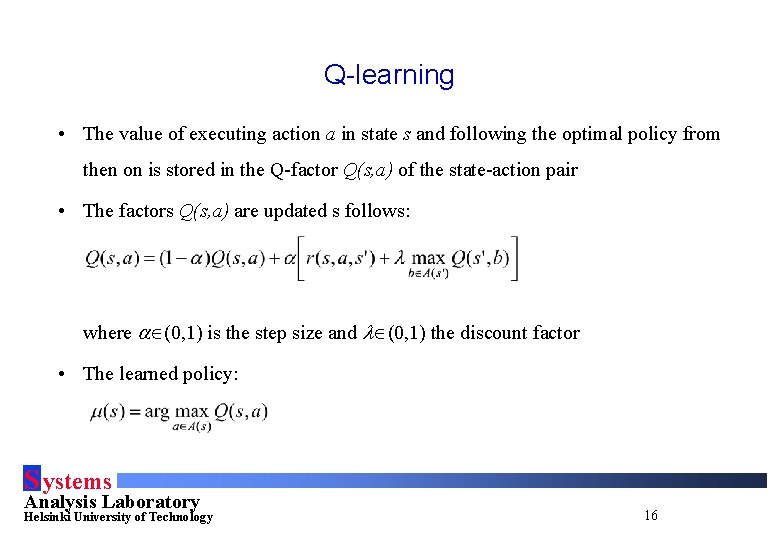

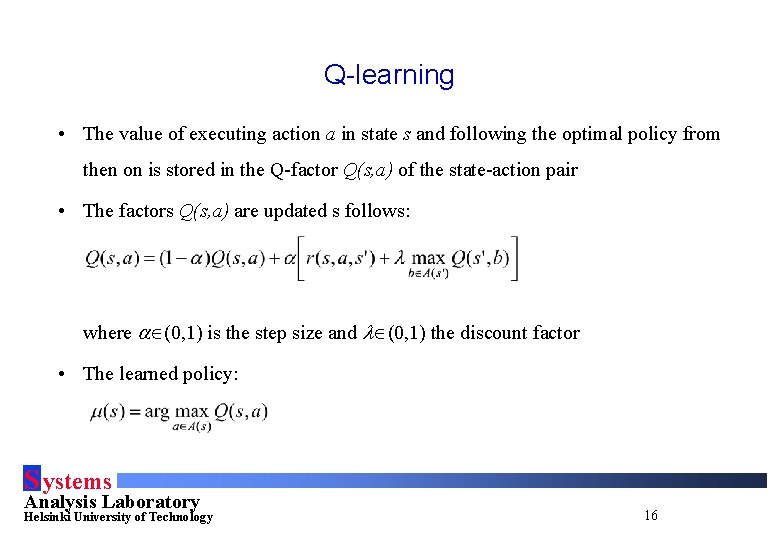

Q-learning

• The value of executing action $a$ in state $s$ and following the optimal policy from then on is stored in the Q-factor $Q(s, a)$ of the state-action pair
• The factors $Q(s, a)$ are updated as follows (the standard Q-learning update):

$$Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r(s, a, s') + \gamma \max_{a' \in A(s')} Q(s', a') - Q(s, a) \right]$$

  where $\alpha \in (0, 1)$ is the step size and $\gamma \in (0, 1)$ the discount factor
• The learned policy: $\pi(s) = \arg\max_{a \in A(s)} Q(s, a)$
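The update of the Q-factors can be sketched in code with the standard tabular Q-learning rule. The names `q_update`, `greedy_action`, `alpha` (step size) and `gamma` (discount factor) are labels chosen for this sketch, and storing the factors in a dictionary keyed by (state, action) is an assumption about the look-up table, not a detail taken from the slides.

```python
from collections import defaultdict


def q_update(Q, s, a, r, s_next, next_actions, alpha, gamma):
    """Apply one tabular Q-learning update and return the new Q(s, a)."""
    # Value of the best action available in the successor state.
    best_next = max((Q[(s_next, b)] for b in next_actions), default=0.0)
    Q[(s, a)] += alpha * (r + gamma * best_next - Q[(s, a)])
    return Q[(s, a)]


def greedy_action(Q, s, actions):
    """Learned policy: the action with the highest Q-factor in state s."""
    return max(actions, key=lambda a: Q[(s, a)])


Q = defaultdict(float)  # unseen pairs start at 0 (an assumption of this sketch)
q_update(Q, (1, 2, 1), (0, 1), 0.75, (2, 1, 1), [(0, 1), (1, 0)], alpha=0.5, gamma=0.98)
print(greedy_action(Q, (1, 2, 1), [(0, 1), (1, 0)]))
```

Keeping the table sparse means only visited pairs consume memory, although the explicit representation still grows with the number of state-action pairs.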

Details of the learning algorithm

• Action selection
  – With probability $e$, select the $a$ for which $Q(s, a)$ is highest, i.e., a greedy action
  – With probability $1 - e$, select any other $a$ randomly from $A(s)$
• Step size
  – Depends on $V(s, a)$, the number of times the pair $(s, a)$ has been visited
• Discounting
  – Q-learning is actually a technique for discounted total reward
  – It can, however, optimize average reward if the discount factor is sufficiently high
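The action selection rule can be sketched as follows. Note that e is the probability of the greedy choice here, as on the slide, and not the exploration probability. The helper names are hypothetical, and the step-size rule `1 / (1 + visits)` is purely an illustrative assumption: the slide says only that the step size depends on the visit count V(s, a).

```python
import random


def select_action(Q, s, actions, e, rng=random):
    """With probability e pick the greedy action, otherwise any other action."""
    greedy = max(actions, key=lambda a: Q.get((s, a), 0.0))
    others = [a for a in actions if a != greedy]
    if not others or rng.random() < e:
        return greedy
    return rng.choice(others)


def step_size(visits):
    """ASSUMED rule (not from the slides): decay with the visit count V(s, a).

    'visits' includes the current visit, so the result stays inside (0, 1).
    """
    return 1.0 / (1.0 + visits)


Q = {("s", "a"): 1.0, ("s", "b"): 0.0}
print(select_action(Q, "s", ["a", "b"], e=0.9, rng=random.Random(0)))
```

A decaying step size of this kind averages over noisy rewards as a pair is revisited, which is one common motivation for tying it to the visit count.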

Heuristic policies

• Can represent efficient solutions for many complex problems
• Can act as a reference for the policy produced by Q-learning
• Two simple policies are considered
  – 'advance': flight time is allocated to the aircraft with the least time to maintenance
  – 'postpone': flight time is allocated to the aircraft with the most time to maintenance
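The two heuristics can be sketched as greedy fills over the state vector. This assumes that "least time to maintenance" corresponds to the highest state index below m, and that min(d, available) aircraft are assigned; the function name `heuristic_action` is hypothetical.

```python
def heuristic_action(s, d, rule):
    """Return the action chosen by the 'advance' or 'postpone' heuristic.

    'advance' fills from the state closest to maintenance (index m-1 downward);
    'postpone' fills from the state farthest from maintenance (index 0 upward).
    """
    if rule not in ("advance", "postpone"):
        raise ValueError(f"unknown rule: {rule!r}")
    m = len(s) - 1
    order = range(m - 1, -1, -1) if rule == "advance" else range(m)
    action = [0] * m
    remaining = min(d, sum(s[:m]))
    for i in order:
        take = min(s[i], remaining)
        action[i] = take
        remaining -= take
    return tuple(action)


print(heuristic_action((1, 2, 1), d=1, rule="advance"))
print(heuristic_action((1, 2, 1), d=1, rule="postpone"))
```

Both rules are deterministic functions of the fleet state, which makes them cheap reference policies to simulate alongside a learned one.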

4. Results

Example problem

• Problem instance
  – $N = 4$: the number of aircraft
  – $m = 2$: maintenance interval
  – $d = 1$: number of aircraft assigned to flight missions
  – $M = 1$: maintenance capacity
  – $D = 2$: expected duration of maintenance
  – $L = 50$: number of stages
  – Initial state $s(0) = [1\ 2\ 1]$
• Learning parameters
  – $e = 0.9$: probability of choosing a greedy action
  – $\gamma = 0.98$: the discount factor

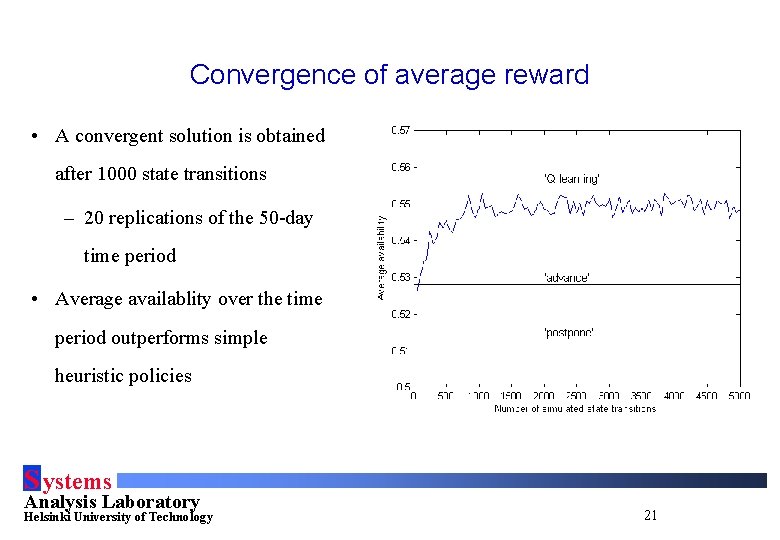

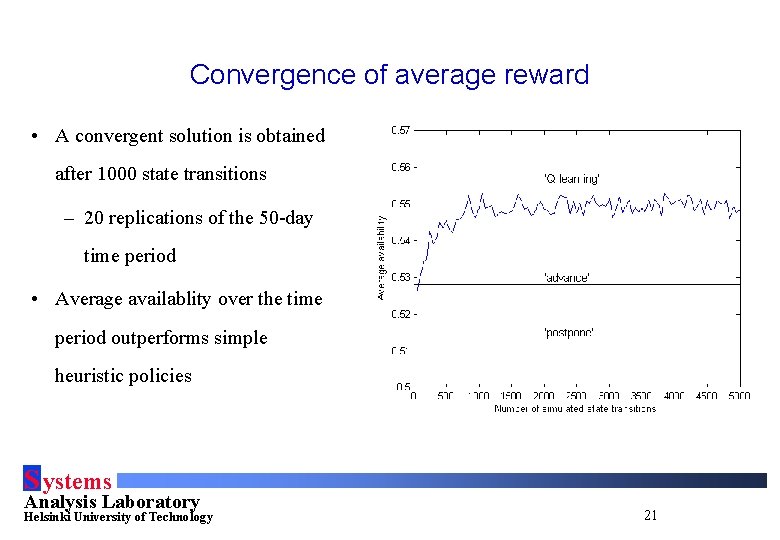

Convergence of average reward

• A convergent solution is obtained after 1000 state transitions
  – 20 replications of the 50-day time period
• The average availability over the time period exceeds that of the simple heuristic policies
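To make the experimental setup concrete, here is a compact end-to-end sketch that runs tabular Q-learning on the example instance for 20 replications of 50 stages. It is not the authors' implementation and makes no claim to reproduce the reported results. The step-size rule 1/(1+V), the boundary handling in the transition, the zero initialization of the Q-factors, the seed, and all function names are assumptions of this sketch.

```python
import random
from collections import defaultdict
from itertools import product

N, m, d, M, D, L = 4, 2, 1, 1, 2, 50  # problem instance from the slides
E_GREEDY, GAMMA = 0.9, 0.98           # learning parameters from the slides
S0 = (1, 2, 1)                        # initial state s(0)


def actions(s):
    """Admissible actions: assign exactly min(d, available) aircraft (assumed)."""
    usable = s[:m]
    k = min(d, sum(usable))
    return [a for a in product(*(range(n + 1) for n in usable)) if sum(a) == k]


def step(s, a, rng):
    """One simulated stage; returns (next state, availability in next state)."""
    nxt = list(s)
    for _ in range(min(M, s[m])):      # maintenance completes w.p. 1/D
        if rng.random() < 1.0 / D:
            nxt[0] += 1
            nxt[m] -= 1
    for i in range(m):                 # assigned aircraft advance one state
        nxt[i] -= a[i]
        nxt[i + 1] += a[i]
    return tuple(nxt), (N - nxt[m]) / N


def train(replications=20, seed=0):
    rng = random.Random(seed)
    Q, V = defaultdict(float), defaultdict(int)
    averages = []
    for _ in range(replications):
        s, total = S0, 0.0
        for _ in range(L):
            acts = actions(s)
            greedy = max(acts, key=lambda b: Q[(s, b)])
            others = [b for b in acts if b != greedy]
            explore = bool(others) and rng.random() >= E_GREEDY
            a = rng.choice(others) if explore else greedy
            s_next, r = step(s, a, rng)
            V[(s, a)] += 1
            alpha = 1.0 / (1 + V[(s, a)])  # ASSUMED step-size rule
            target = r + GAMMA * max(Q[(s_next, b)] for b in actions(s_next))
            Q[(s, a)] += alpha * (target - Q[(s, a)])
            s, total = s_next, total + r
        averages.append(total / L)
    return Q, averages


Q, averages = train()
print([round(x, 3) for x in averages])
```

The list printed at the end holds the average availability of each replication, which is the quantity whose convergence the slide refers to. The sketch ignores the finite horizon when bootstrapping at the last stage, which is a simplification.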

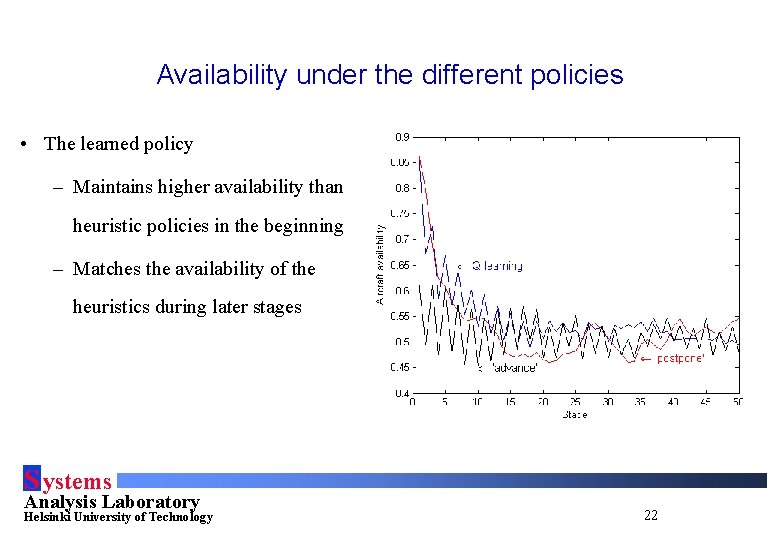

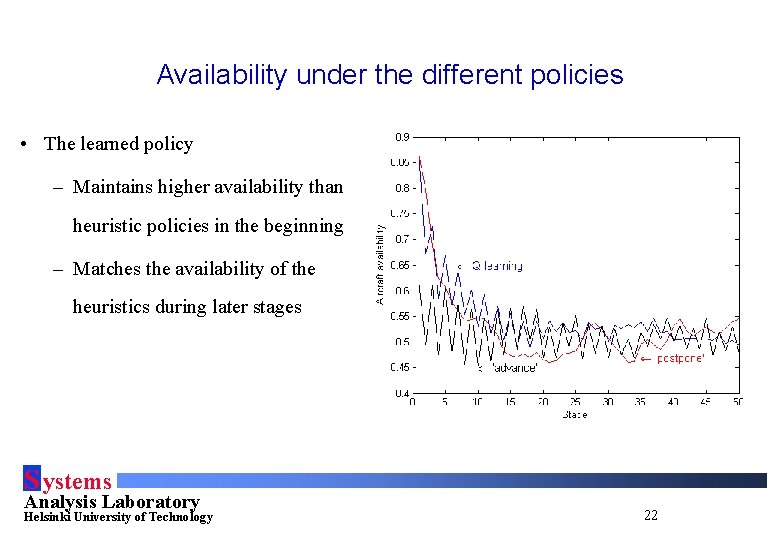

Availability under the different policies

• The learned policy
  – Maintains higher availability than the heuristic policies in the beginning
  – Matches the availability of the heuristics during later stages

Characterizing the learned policy

• Since $m$ was taken to be very small, the learned solution can be characterized in terms of the 'advance' and 'postpone' heuristic policies as follows:
  – If the number of aircraft in maintenance is equal to or more than the capacity → 'postpone'
  – If the maintenance facility is idle: if $s_2 > 1$ → 'advance', else → 'postpone'

5. Conclusions

Contributions

• Insight into a difficult problem actually faced by fleet commanders
• Flight time allocation as a means for timing maintenance
  – Has not been considered as a dynamic problem, to the best of our knowledge
  – Has not been considered with RL techniques

The reinforcement learning approach

• Results of the reinforcement learning approach for the studied problem instances are promising
  – A convergent policy is found
  – The obtained policy outperforms simple heuristic policies
  – Learning time is manageable for fleet sizes of up to 16 aircraft

Extensions to the model

• A number of extensions to the presented model are likely required in order to describe more realistic scenarios
• Of particular interest are the effects of
  – Additional uncertainties such as battle damage
  – An operational environment that evolves through time
  – Violations of the Markovian property of states

Analysis of obtained policies

• The purpose of studying the flight time allocation problem is to obtain new insight for the use of human decision makers
• Until now, Q-learning has been implemented as a look-up table version
  – Q-factors are stored explicitly → representation of the learned policy requires large storage space
  – Post-learning analysis is needed to build intuition of efficient policies
• The future challenge is to represent policies in a compact form that allows both
  – Efficient learning
  – Intuitive representation to human decision makers