
Flexible and Creative Chinese Poetry Generation Using Neural Memory

Jiyuan Zhang, NLP Group, CSLT, Tsinghua University

Outline • Introduction • The memory-augmented neural model (MNM) • The analysis of the memory mechanism • Evaluation & Experiments • Conclusions & Future work

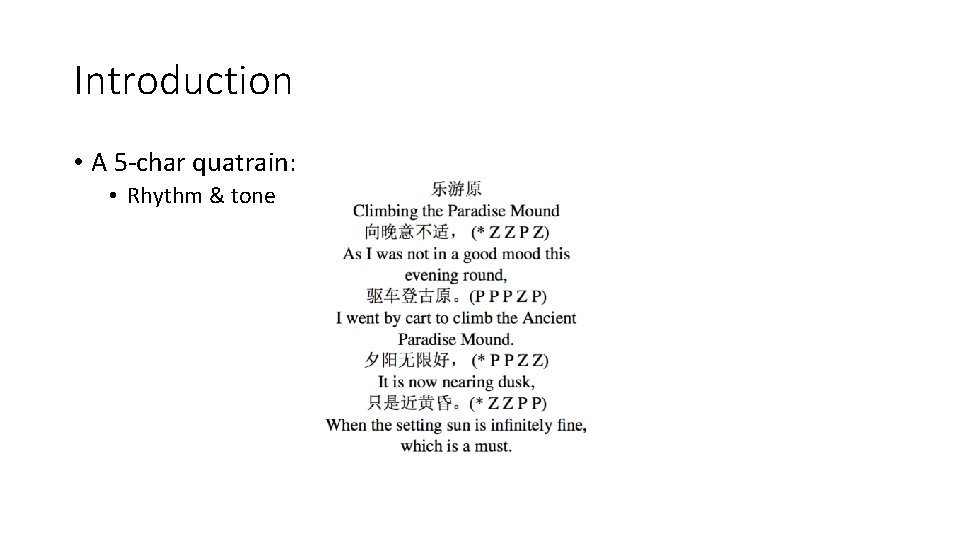

Introduction • A 5-character quatrain: • Rhythm and tone

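Introduction • Statistical or rule-based models: • For example, an n-gram model. By the chain rule, $P(T) = P(W_1 W_2 W_3 \cdots W_n) = P(W_1)\,P(W_2 \mid W_1)\,P(W_3 \mid W_1 W_2) \cdots P(W_n \mid W_1 W_2 \cdots W_{n-1})$, and an n-gram model approximates each factor by conditioning only on the previous $n-1$ words. • Neural models: • Compared to previous approaches (e.g., rule-based or statistical models), neural models tend to generate more fluent poems.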
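To make the n-gram approximation concrete, here is a minimal bigram ($n = 2$) sketch with maximum-likelihood counts and no smoothing. The function names `train_bigram` and `sentence_prob`, the `<s>` start symbol, and the toy corpus are hypothetical illustrations, not part of the original slides.

```python
from collections import Counter
from math import prod

def train_bigram(corpus):
    """Count bigrams and their histories in tokenized sentences.

    A start symbol <s> is prepended so that P(W_1) is estimated as P(W_1 | <s>).
    """
    history_counts = Counter()
    bigram_counts = Counter()
    for sentence in corpus:
        tokens = ["<s>"] + sentence
        for prev, cur in zip(tokens, tokens[1:]):
            history_counts[prev] += 1
            bigram_counts[(prev, cur)] += 1
    return history_counts, bigram_counts

def sentence_prob(sentence, history_counts, bigram_counts):
    """Approximate P(W_1 ... W_n) as the product of P(W_i | W_{i-1}) (MLE, no smoothing)."""
    tokens = ["<s>"] + sentence
    factors = []
    for prev, cur in zip(tokens, tokens[1:]):
        if history_counts[prev] == 0:
            return 0.0  # unseen history: treat the whole sentence as impossible
        factors.append(bigram_counts[(prev, cur)] / history_counts[prev])
    return prod(factors)

# Hypothetical toy corpus (not from the slides).
corpus = [
    ["spring", "rain", "falls"],
    ["spring", "wind", "falls"],
    ["autumn", "rain", "falls"],
]
history_counts, bigram_counts = train_bigram(corpus)
print(sentence_prob(["spring", "rain", "falls"], history_counts, bigram_counts))
```

Here $P(\text{spring} \mid \langle s \rangle) = 2/3$, $P(\text{rain} \mid \text{spring}) = 1/2$ and $P(\text{falls} \mid \text{rain}) = 1$, so the sentence scores $1/3$; any unseen bigram drives the score to 0, which is why practical n-gram models add smoothing.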

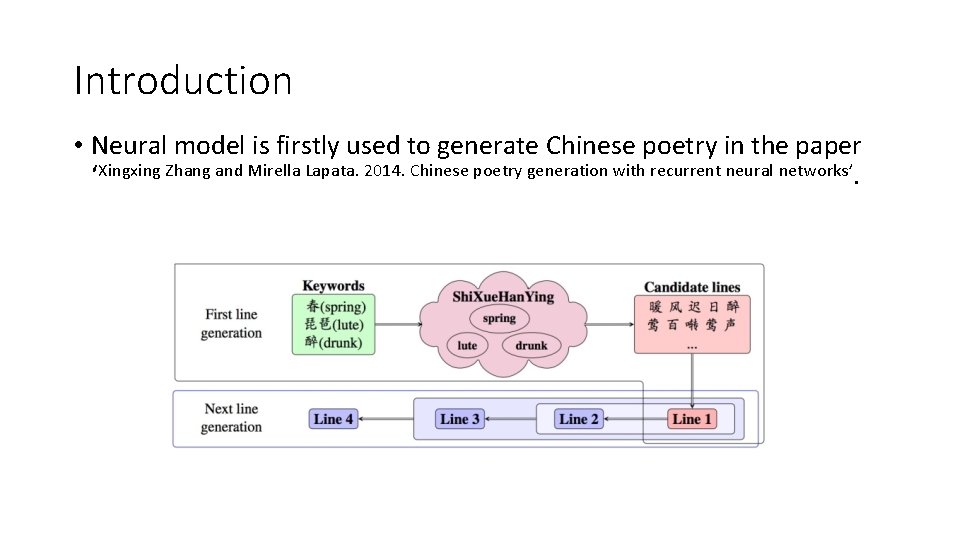

Introduction • A neural model was first used to generate Chinese poetry in ‘Xingxing Zhang and Mirella Lapata. 2014. Chinese poetry generation with recurrent neural networks’.

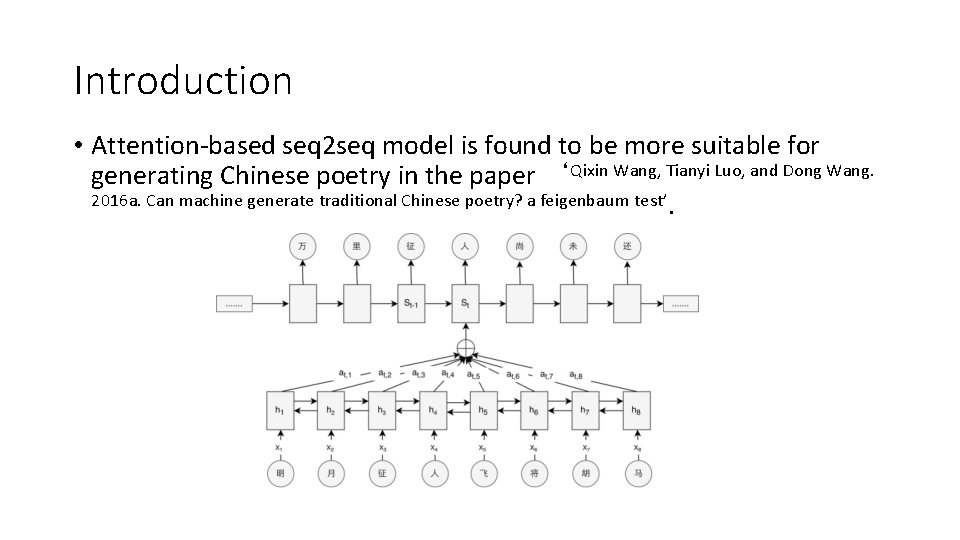

Introduction • An attention-based seq2seq model was found to be more suitable for generating Chinese poetry in ‘Qixin Wang, Tianyi Luo, and Dong Wang. 2016a. Can machine generate traditional Chinese poetry? A Feigenbaum test’.

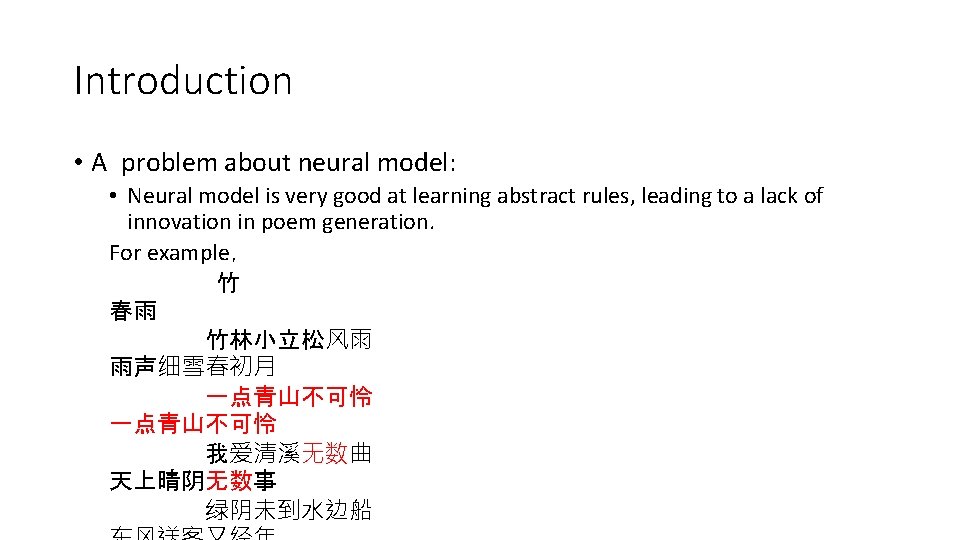

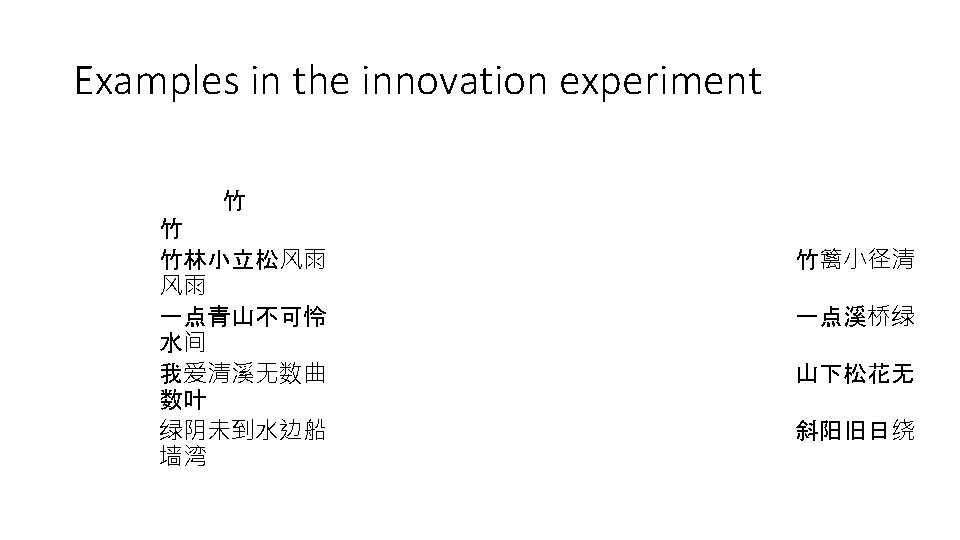

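Introduction • A problem with neural models: • Neural models are very good at learning abstract rules, but poem composition also involves innovation, which rules alone cannot capture; as a result, the generated poems lack innovation. For example, topics 竹 (bamboo) and 春雨 (spring rain): 竹林小立松风雨 雨声细雪春初月 一点青山不可怜 我爱清溪无数曲 天上晴阴无数事 绿阴未到水边船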

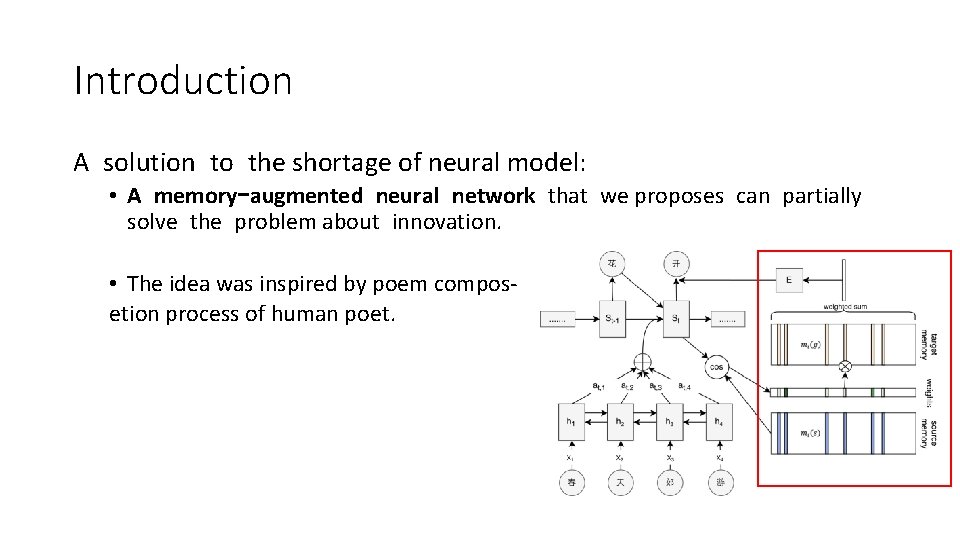

Introduction • A solution to the shortcoming of neural models: • We propose a memory-augmented neural network that partially solves the innovation problem. • The idea was inspired by the composition process of human poets.

Introduction • The aims of the memory: • Balancing linguistic accordance and aesthetic innovation. • Generating poems in different styles.


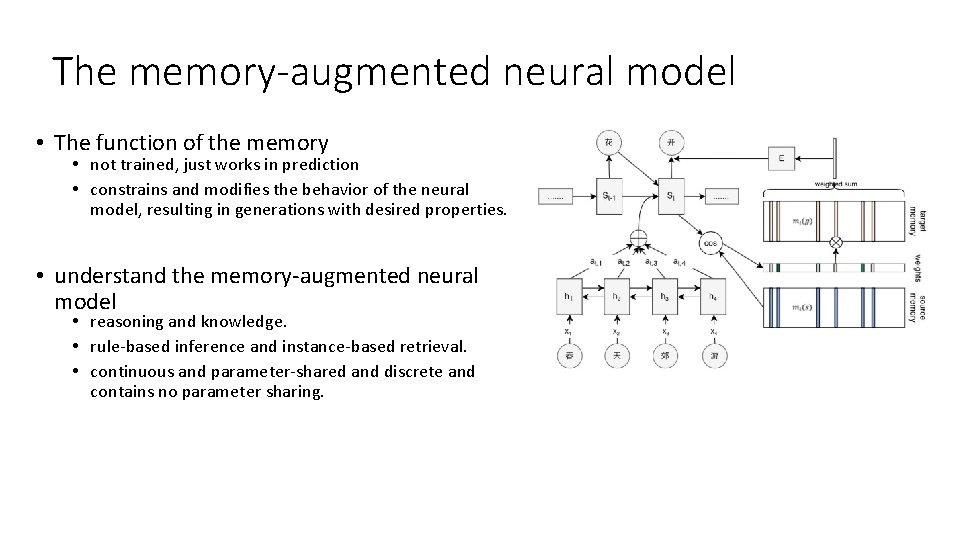

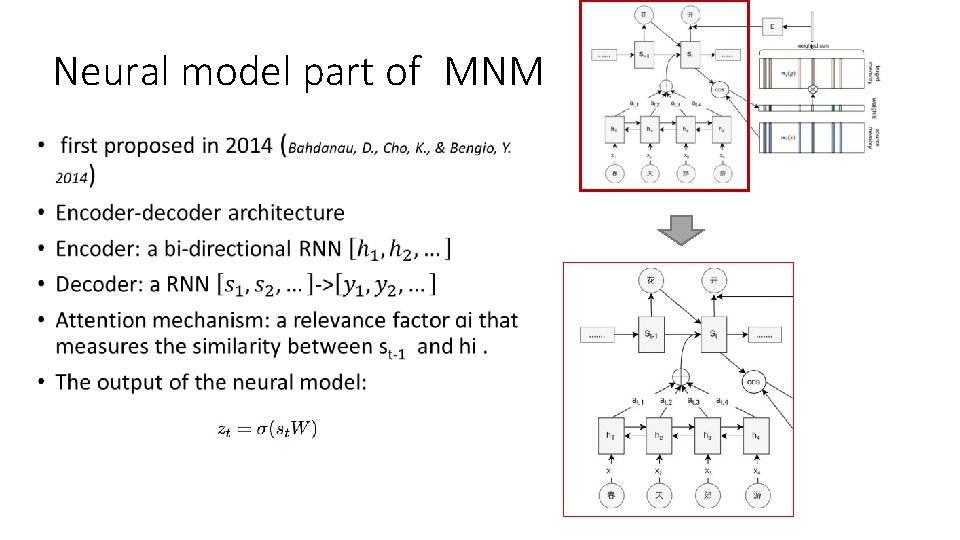

The memory-augmented neural model • The function of the memory: • It is not trained; it is used only at prediction time. • It constrains and modifies the behavior of the neural model, resulting in generations with desired properties. • Understanding the memory-augmented neural model (neural model vs. memory): • reasoning vs. knowledge • rule-based inference vs. instance-based retrieval • continuous with shared parameters vs. discrete with no parameter sharing

The neural model part of the MNM • An attention-based encoder-decoder (see figure)

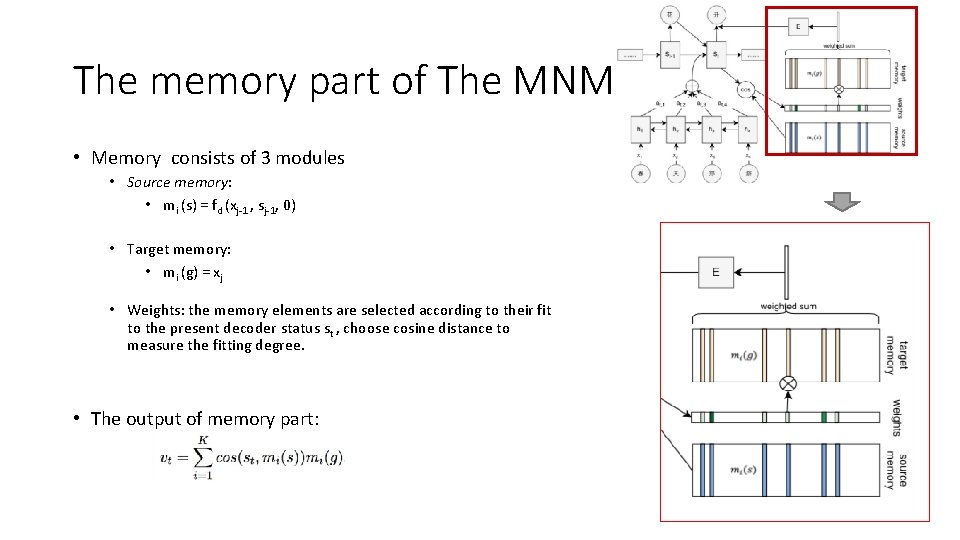

The memory part of the MNM • The memory consists of three modules: • Source memory: • $m_i^{(s)} = f_d(x_{j-1}, s_{j-1}, 0)$ • Target memory: • $m_i^{(g)} = x_j$ • Weights: memory elements are selected according to how well they fit the current decoder state $s_t$; cosine similarity is used to measure the degree of fit. • The output of the memory part:

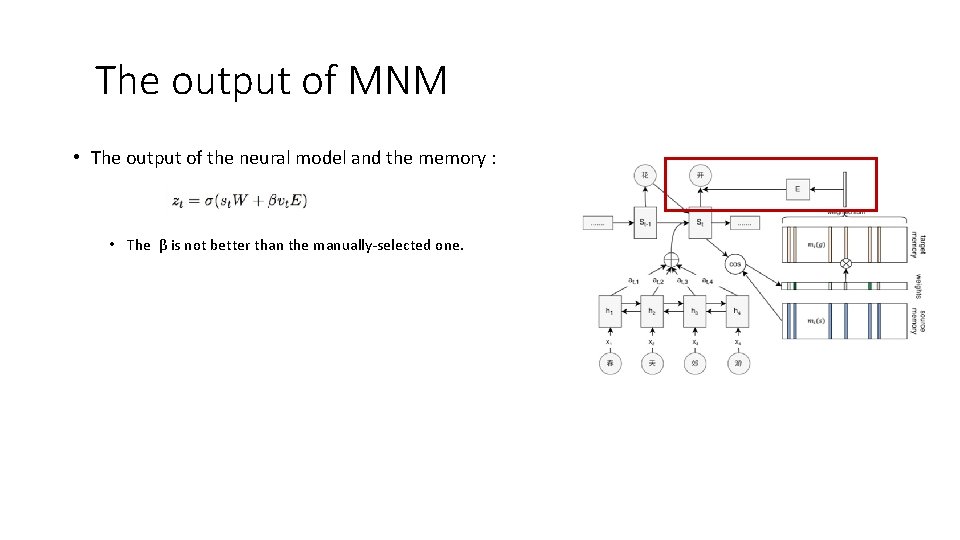
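The retrieval step above can be sketched as follows: score each stored source state $m_i^{(s)}$ against $s_t$ by cosine similarity, turn the scores into weights, and let each element vote for its target word $m_i^{(g)} = x_j$. This is a minimal sketch under stated assumptions, not the authors' implementation: the softmax normalization, the function names, and the 2-d toy vectors are all hypothetical; the exact output formula is the one in the slide figure.

```python
import math
from collections import defaultdict

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0

def memory_output(s_t, source_memory, target_memory):
    """Hypothetical memory read-out returning a distribution over words.

    source_memory[i] stands in for m_i^(s), a stored decoder state;
    target_memory[i] stands in for m_i^(g), the word x_j that followed it.
    ASSUMPTION: fit scores are softmax-normalized, and each memory element
    votes for its target word with its normalized weight.
    """
    scores = [cosine(s_t, m) for m in source_memory]
    peak = max(scores)  # subtract the max for numerical stability
    exp_scores = [math.exp(q - peak) for q in scores]
    total = sum(exp_scores)
    dist = defaultdict(float)
    for weight, word in zip(exp_scores, target_memory):
        dist[word] += weight / total
    return dict(dist)

# Toy 2-d decoder states (hypothetical values).
s_t = [1.0, 0.0]
source_memory = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.1]]
target_memory = ["月", "风", "月"]
print(memory_output(s_t, source_memory, target_memory))
```

Elements whose stored state points in the same direction as $s_t$ get the largest weights, so 月 (moon) receives most of the probability mass. Because cosine similarity lies in $[-1, 1]$, a temperature-1 softmax yields fairly flat weights; a sharper temperature or top-k selection would be alternative design choices.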

The output of the MNM • The outputs of the neural model and the memory are combined, with β weighting the memory. • A trained β is not better than a manually selected one.
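One way to picture the role of β is the sketch below, which adds the memory's next-word distribution, scaled by β, to the neural model's distribution and renormalizes. This combination rule, the function name `combine`, and the toy distributions are assumptions for illustration; the slides do not give the exact formula here.

```python
def combine(p_neural, p_memory, beta):
    """Mix the neural and memory next-word distributions.

    ASSUMPTION: the memory distribution is scaled by beta, added to the neural
    distribution, and the sum is renormalized. beta = 0 recovers the neural model.
    """
    if beta < 0:
        raise ValueError("beta must be non-negative")
    vocab = set(p_neural) | set(p_memory)
    mixed = {w: p_neural.get(w, 0.0) + beta * p_memory.get(w, 0.0) for w in vocab}
    total = sum(mixed.values())
    return {w: p / total for w, p in mixed.items()}

# Hypothetical distributions for one decoding step.
p_neural = {"山": 0.6, "月": 0.3, "风": 0.1}
p_memory = {"月": 0.8, "风": 0.2}
for beta in (0.0, 1.0):
    p = combine(p_neural, p_memory, beta)
    print(beta, max(p, key=p.get))
```

With β = 0 the most likely word stays 山 (mountain), as in the neural model alone; with β = 1 the memory shifts it to 月 (moon). Since β is a single scalar, choosing it by hand on a few settings is cheap, which fits the observation that a trained β brings no benefit.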


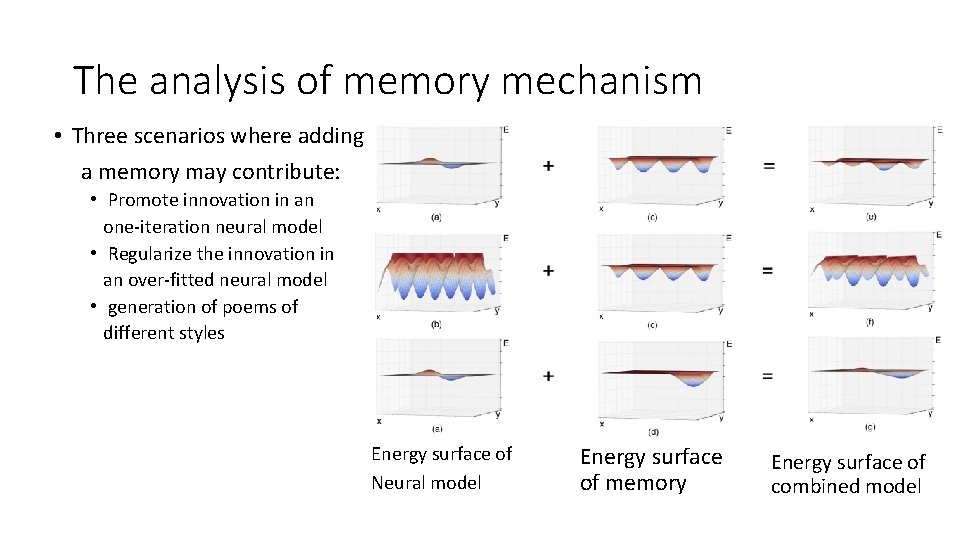

The analysis of the memory mechanism • Three scenarios where adding a memory may help: • Promoting innovation in a one-iteration neural model • Regularizing innovation in an overfitted neural model • Generating poems in different styles • [Figure: energy surfaces of the neural model, the memory, and the combined model]


Evaluation metrics • Five metrics are used to evaluate the generated poems: • Compliance: whether the regulations on tones and rhymes are satisfied • Fluency: whether the sentences read fluently and convey reasonable meaning • Theme consistency: whether the entire poem adheres to a single theme • Aesthetic innovation: whether the quatrain evokes aesthetic feeling through elaborate innovation • Scenario consistency: whether the scenario remains consistent

Evaluation process • In the innovation experiment: • Evaluators judged which of two poems was better in terms of the five metrics: Compliance, Fluency, Theme consistency, Aesthetic innovation, and Scenario consistency. • In the style-transfer experiment: • Given a poem, evaluators selected the style it belongs to and scored it on four metrics: Compliance, Fluency, Aesthetic innovation, and Scenario consistency.

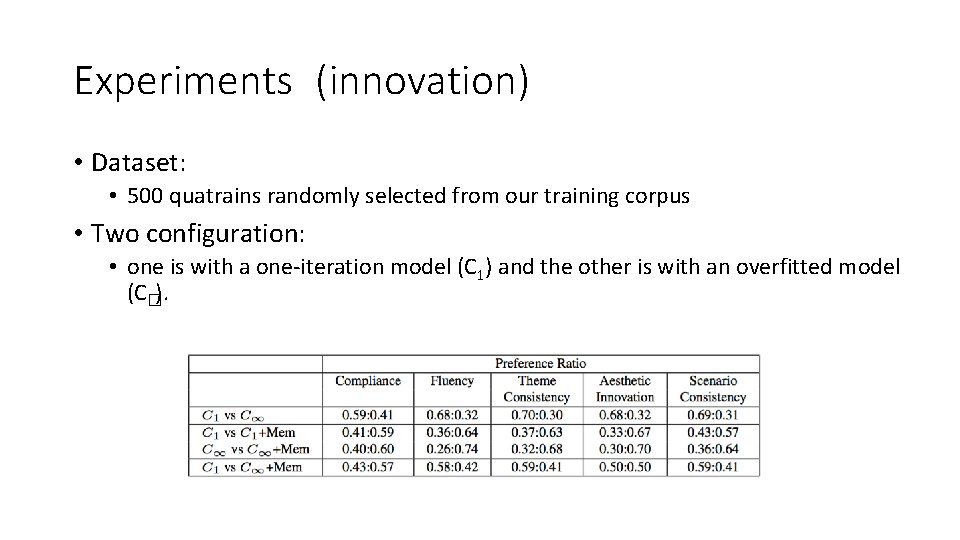

Experiments (innovation) • Dataset: • 500 quatrains randomly selected from our training corpus • Two configurations: • one with a one-iteration model (C1) and the other with an overfitted model (C∞).

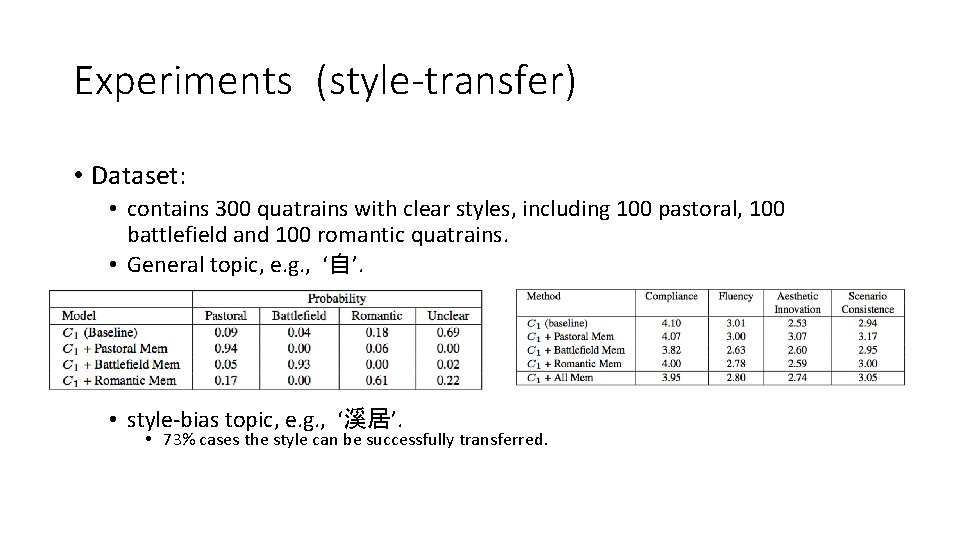

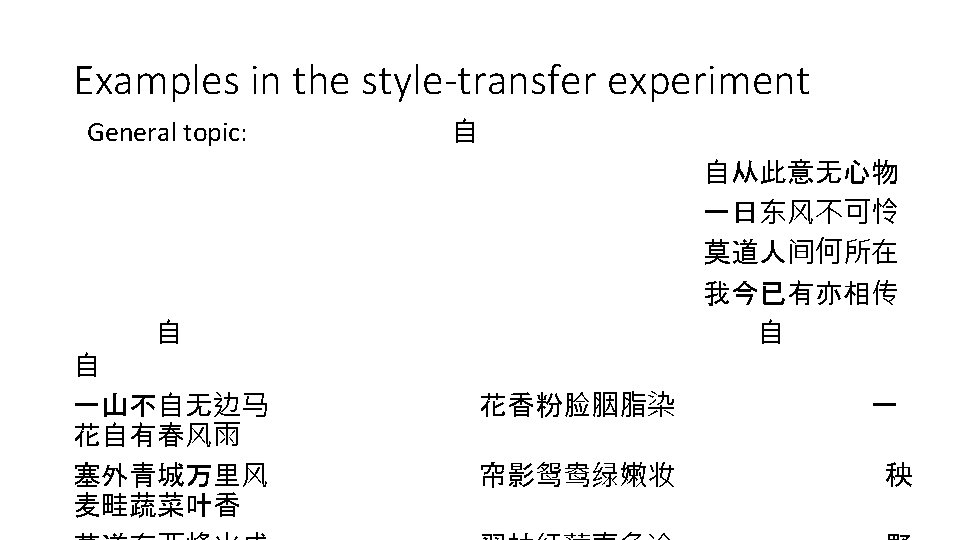

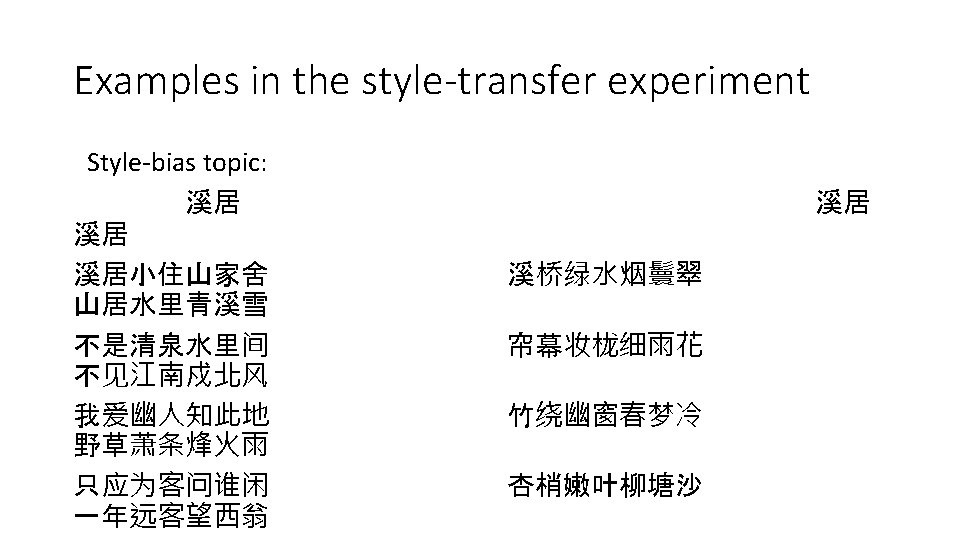

Experiments (style-transfer) • Dataset: • 300 quatrains with clear styles: 100 pastoral, 100 battlefield, and 100 romantic quatrains. • General topic, e.g., ‘自’. • Style-biased topic, e.g., ‘溪居’ (living by a stream). • In 73% of cases, the style was successfully transferred.


Conclusions • The memory can encourage creative generation for regularly-trained models. • The memory can encourage rule-compliance for overfitted models. • The memory can modify the style of the generated poems in a flexible way.

Future work • Investigating a better memory selection scheme • Other regularization methods (e.g., norm penalties or dropout) may alleviate the overfitting problem.

Thanks! Q&A

- Slides: 28