Fine Grain Cache Partitioning using Per-Instruction Working Blocks

Jason Jong Kyu Park¹, Yongjun Park², and Scott Mahlke¹
¹University of Michigan, Ann Arbor  ²Hongik University
University of Michigan Electrical Engineering and Computer Science

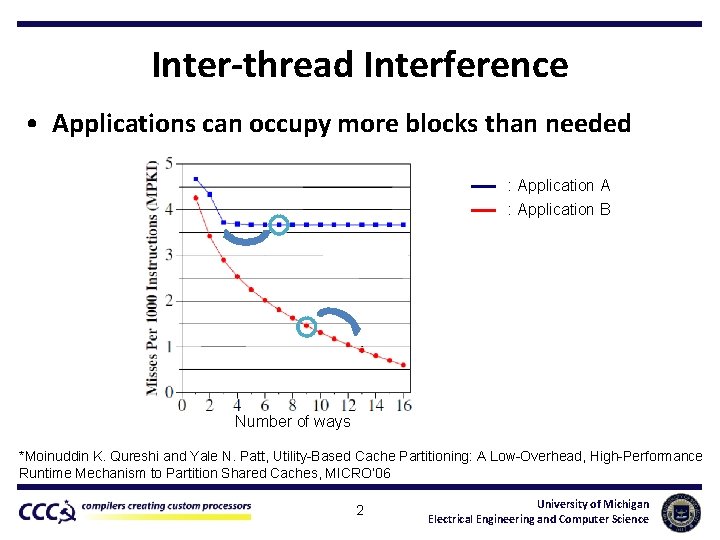

Inter-thread Interference
• Applications can occupy more cache blocks than they need
[Figure: Application A and Application B vs. number of ways*]
*Moinuddin K. Qureshi and Yale N. Patt, "Utility-Based Cache Partitioning: A Low-Overhead, High-Performance Runtime Mechanism to Partition Shared Caches," MICRO '06

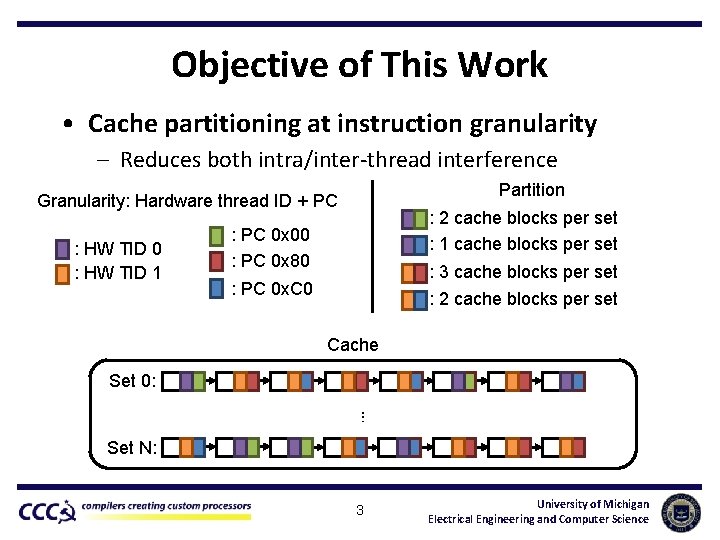

Objective of This Work
• Cache partitioning at instruction granularity
  – Reduces both intra-thread and inter-thread interference
• Partition granularity: hardware thread ID + PC
[Figure: cache sets 0 … N partitioned per hardware thread (HW TID 0, HW TID 1) versus per instruction (PC 0x00, PC 0x80, PC 0xC0), with allocations of 1 to 3 cache blocks per set]

Background: Shared Cache Partitioning
• Cache partitioning at thread granularity
[Figure: the hardware thread ID indexes a cache partition predictor table that drives the replacement policy for cache sets 0 … N; per-thread shadow tags (HW TID 0, HW TID 1) update the table]

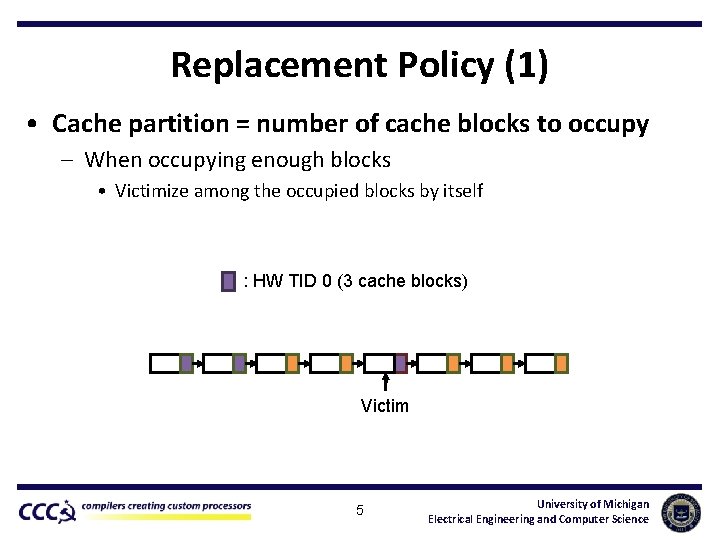

Replacement Policy (1)
• Cache partition = number of cache blocks to occupy
  – When a thread already occupies enough blocks
    • Victimize among its own blocks
[Figure: HW TID 0 (3 cache blocks), victim]

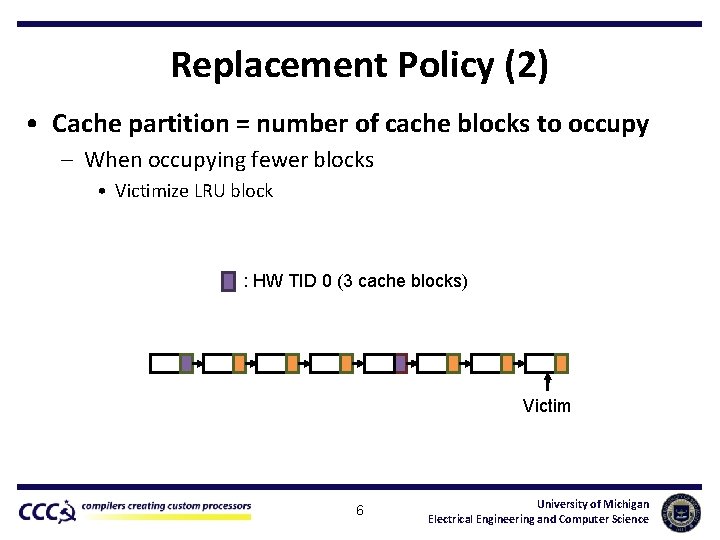

Replacement Policy (2)
• Cache partition = number of cache blocks to occupy
  – When a thread occupies fewer blocks than its partition
    • Victimize the LRU block
[Figure: HW TID 0 (3 cache blocks), victim]
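The two replacement cases can be sketched in Python. This is a minimal illustration, not the authors' implementation. The `Block` record, the `choose_victim` helper and the per-block timestamps used for recency are assumptions. "LRU block" in case (2) is taken to mean the least recently used block of the whole set.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Block:
    tag: int
    owner: int     # hardware thread ID that inserted the block (assumed field)
    last_use: int  # time of the most recent access; larger means more recent


def choose_victim(blocks: List[Block], requester: int, partition: int) -> int:
    """Index of the block to evict when thread `requester` misses in this set.

    `partition` is the number of cache blocks per set allotted to `requester`.
    """
    own = [i for i, b in enumerate(blocks) if b.owner == requester]
    if own and len(own) >= partition:
        # Case (1): already occupying enough blocks -> evict its own LRU block.
        return min(own, key=lambda i: blocks[i].last_use)
    # Case (2): occupying fewer blocks -> evict the LRU block of the set.
    return min(range(len(blocks)), key=lambda i: blocks[i].last_use)


# Usage: thread 0 owns blocks 0, 2 and 3 of a 4-way set.
ways = [Block(0xA, 0, 5), Block(0xB, 1, 1), Block(0xC, 0, 3), Block(0xD, 0, 7)]
print(choose_victim(ways, requester=0, partition=3))  # 2 (thread 0's own LRU block)
print(choose_victim(ways, requester=0, partition=4))  # 1 (LRU block of the set)
```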

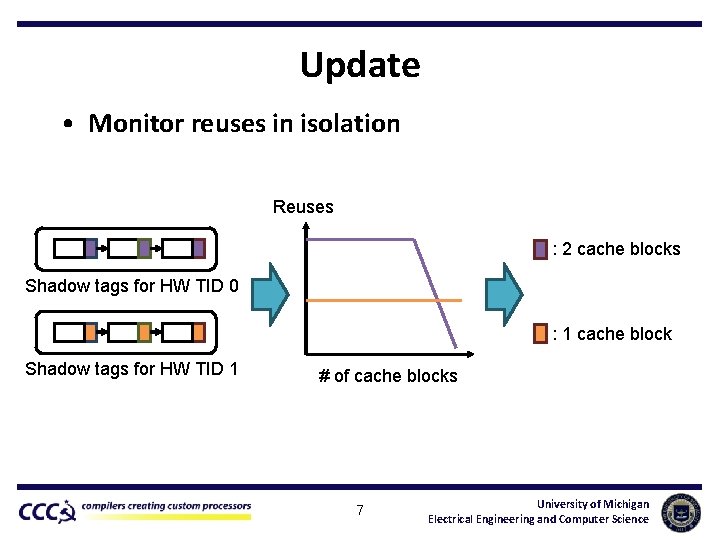

Update
• Monitor reuses in isolation
[Figure: reuses vs. # of cache blocks, measured by the shadow tags for HW TID 0 (2 cache blocks) and HW TID 1 (1 cache block)]
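One way to monitor reuses in isolation, in the spirit of the utility monitors of Qureshi and Patt cited earlier, works as follows. Each hardware thread keeps a private LRU stack of shadow tags per set, and hits are counted by stack position. The sum of the first k counters then estimates the reuses the thread would get with k cache blocks per set. The `ShadowTags` class below is a hypothetical sketch of that idea.

```python
from collections import defaultdict
from typing import Dict, List


class ShadowTags:
    """Shadow tags for one hardware thread, simulating it running alone."""

    def __init__(self, ways: int):
        self.ways = ways
        # stacks[set_index]: tags in recency order, index 0 = MRU.
        self.stacks: Dict[int, List[int]] = defaultdict(list)
        # hits[p]: reuses that hit at stack position p (0 = MRU).
        self.hits = [0] * ways

    def access(self, set_index: int, tag: int) -> None:
        stack = self.stacks[set_index]
        if tag in stack:
            pos = stack.index(tag)
            self.hits[pos] += 1
            stack.pop(pos)
        elif len(stack) == self.ways:
            stack.pop()  # drop the LRU shadow tag
        stack.insert(0, tag)  # (re)insert at the MRU position

    def reuses_with(self, blocks: int) -> int:
        """Reuses the thread would get with `blocks` cache blocks per set."""
        return sum(self.hits[:blocks])


# Usage: one set, access sequence A B A C B.
tid0 = ShadowTags(ways=4)
for tag in [0xA, 0xB, 0xA, 0xC, 0xB]:
    tid0.access(0, tag)
print([tid0.reuses_with(k) for k in range(1, 5)])  # [0, 1, 2, 2]
```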

Instruction-based LRU (ILRU)

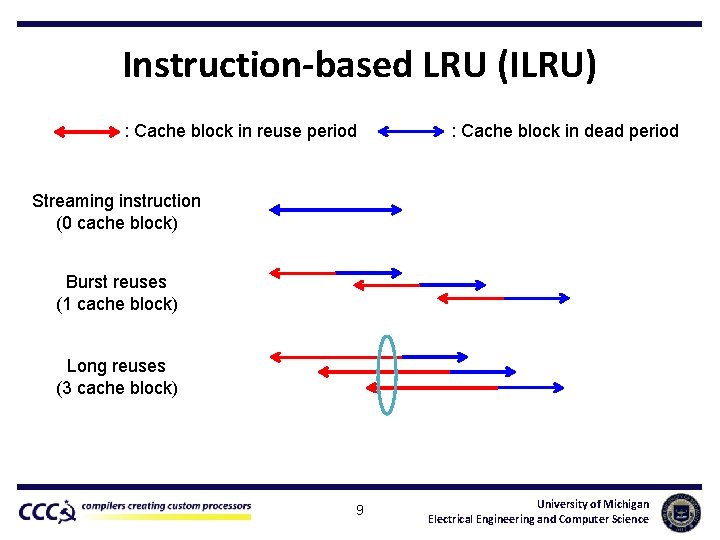

Instruction-based LRU (ILRU)
[Figure: cache blocks in their reuse period vs. dead period for a streaming instruction (0 cache blocks), burst reuses (1 cache block), and long reuses (3 cache blocks)]

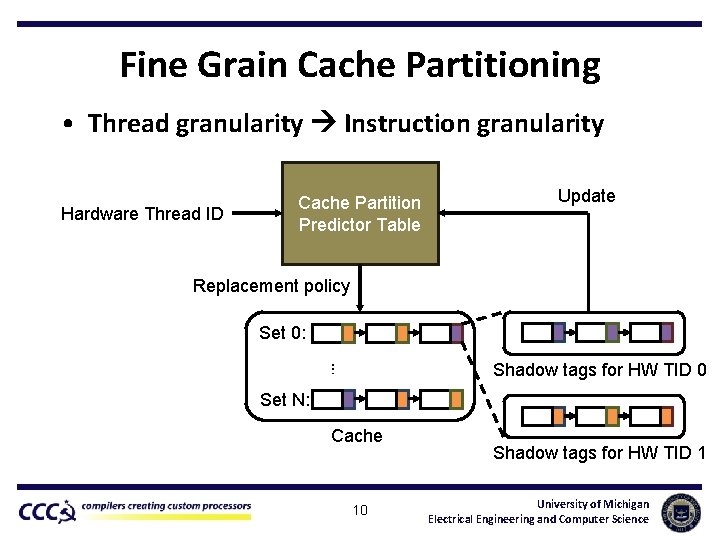

Fine Grain Cache Partitioning
• Thread granularity → instruction granularity
[Figure: the thread-granularity design: hardware thread ID, cache partition predictor table, replacement policy for sets 0 … N, and per-thread shadow tags (HW TID 0, HW TID 1) for updates]

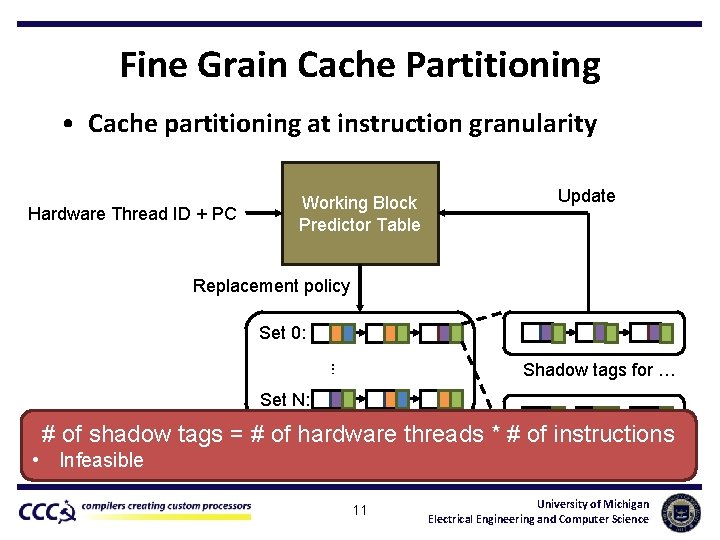

Fine Grain Cache Partitioning
• Cache partitioning at instruction granularity
[Figure: hardware thread ID + PC index a working block predictor table that drives the replacement policy for sets 0 … N; shadow tags per instruction provide the updates]
• # of shadow tags = # of hardware threads × # of instructions
  – Infeasible
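A working block predictor table (WBPT) indexed by hardware thread ID + PC could be organized like a small set-associative cache. The sketch below uses the 512-entry, 4-way sizing listed in the experimental setup. The index function, the default prediction for unseen instructions and the LRU replacement of table entries are assumptions made for illustration.

```python
from collections import OrderedDict
from typing import List, Tuple

Key = Tuple[int, int]  # (hardware thread ID, PC)


class WorkingBlockPredictorTable:
    """Set-associative WBPT: (HW TID, PC) -> predicted working blocks.

    Index function, default prediction, and LRU replacement are assumptions.
    """

    def __init__(self, entries: int = 512, ways: int = 4, default_blocks: int = 1):
        self.ways = ways
        self.num_sets = entries // ways
        self.default_blocks = default_blocks
        # One ordered map per table set; the first item is the LRU entry.
        self.sets: List["OrderedDict[Key, int]"] = [OrderedDict() for _ in range(self.num_sets)]

    def _set_for(self, tid: int, pc: int) -> "OrderedDict[Key, int]":
        # Hypothetical index: drop the two low PC bits and mix in the thread ID.
        return self.sets[((pc >> 2) ^ tid) % self.num_sets]

    def predict(self, tid: int, pc: int) -> int:
        table_set = self._set_for(tid, pc)
        if (tid, pc) in table_set:
            table_set.move_to_end((tid, pc))  # mark as most recently used
            return table_set[(tid, pc)]
        return self.default_blocks

    def update(self, tid: int, pc: int, working_blocks: int) -> None:
        table_set = self._set_for(tid, pc)
        if (tid, pc) not in table_set and len(table_set) == self.ways:
            table_set.popitem(last=False)  # evict the LRU table entry
        table_set[(tid, pc)] = working_blocks
        table_set.move_to_end((tid, pc))


# Usage
wbpt = WorkingBlockPredictorTable()
wbpt.update(tid=0, pc=0x4004, working_blocks=3)
print(wbpt.num_sets, wbpt.predict(0, 0x4004), wbpt.predict(1, 0x4004))  # 128 3 1
```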

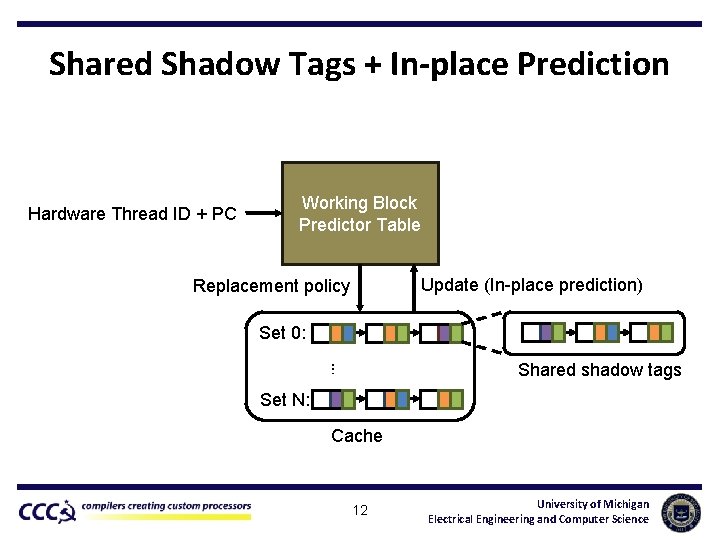

Shared Shadow Tags + In-place Prediction
[Figure: hardware thread ID + PC index the working block predictor table; shared shadow tags for sets 0 … N update it through in-place prediction; the table drives the replacement policy]

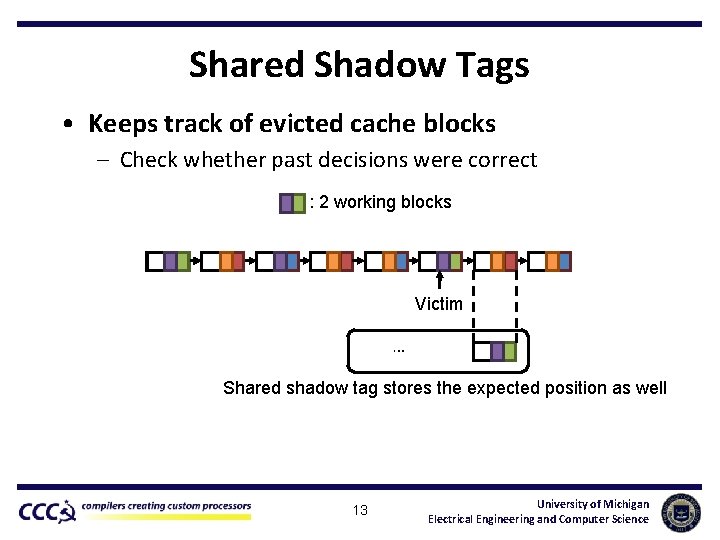

Shared Shadow Tags
• Keep track of evicted cache blocks
  – Check whether past decisions were correct
[Figure: an instruction with 2 working blocks, victim …]
• The shared shadow tags also store the expected position
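Below is a minimal model of shared shadow tags. Each cache set keeps a short list of recently evicted tags, each annotated with the inserting instruction's ID and an expected position. A later miss that matches a shadow entry signals that the earlier eviction was premature. The per-set capacity, the FIFO ordering and the `ShadowEntry`/`SharedShadowTags` names are hypothetical. The `expected_position` field is only stored here, not interpreted.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional


@dataclass
class ShadowEntry:
    tag: int
    inst_id: int            # ID of the instruction that inserted the evicted block
    expected_position: int  # stored alongside the tag; interpretation left open


class SharedShadowTags:
    """Per-set lists of recently evicted blocks, shared by all instructions."""

    def __init__(self, num_sets: int, entries_per_set: int):
        # A bounded deque drops its oldest entry automatically when full.
        self.sets = [deque(maxlen=entries_per_set) for _ in range(num_sets)]

    def record_eviction(self, set_index: int, entry: ShadowEntry) -> None:
        self.sets[set_index].appendleft(entry)  # newest eviction at the front

    def check_miss(self, set_index: int, tag: int) -> Optional[ShadowEntry]:
        """On a cache miss: a matching entry means the past eviction was wrong."""
        shadow = self.sets[set_index]
        match = next((e for e in shadow if e.tag == tag), None)
        if match is not None:
            shadow.remove(match)  # the block is being re-inserted into the cache
        return match
```

With two entries per set, a third eviction pushes the oldest one out, so only the two most recent evictions can be checked.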

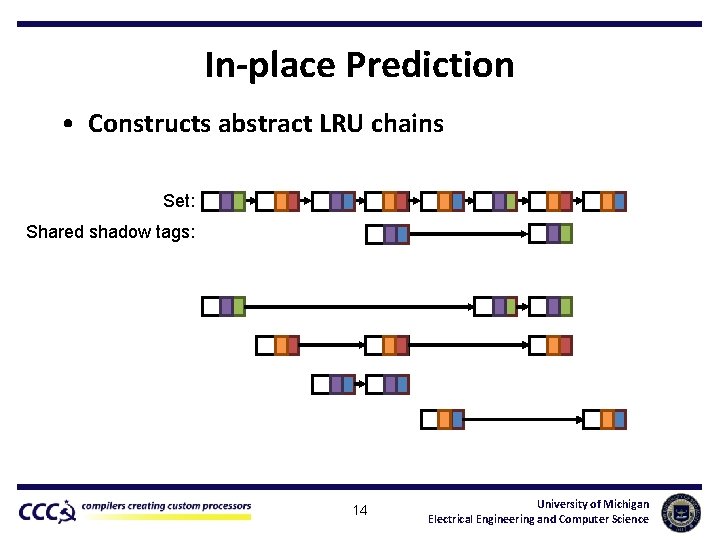

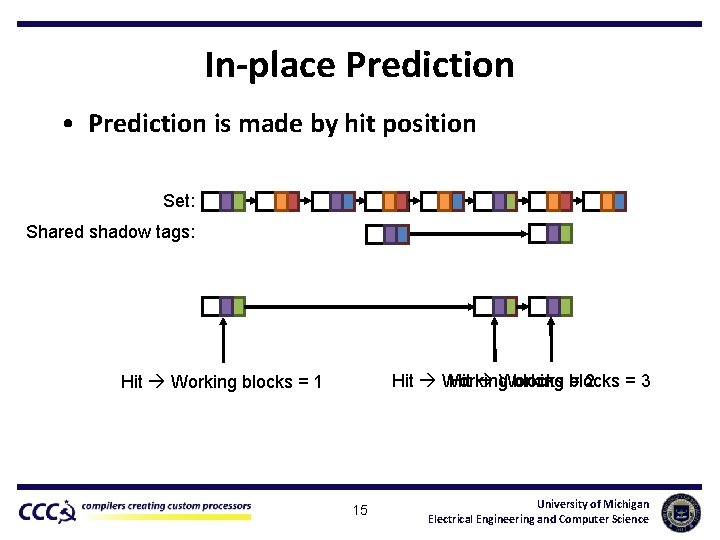

In-place Prediction
• Constructs abstract LRU chains
[Figure: a cache set and its shared shadow tags]

In-place Prediction
• Prediction is made by hit position
[Figure: hits in a cache set and its shared shadow tags, yielding predictions such as working blocks = 1, 2, and 3]
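One possible reading of these slides is sketched below; it is an assumption, not a confirmed description of ILRU. An instruction's abstract LRU chain is the recency-ordered list of its own blocks across the cache set and the shared shadow tags. A hit at rank r in that chain then implies the instruction needed r working blocks to capture the reuse. The helpers encode that reading.

```python
from typing import List, Optional, Tuple

# Recency-ordered entries of one set followed by its shared shadow tags.
# Each entry is (tag, inst_id); index 0 is the most recently used.
Chain = List[Tuple[int, int]]


def abstract_chain(chain: Chain, inst_id: int) -> List[int]:
    """The instruction's own tags, in recency order (its abstract LRU chain)."""
    return [tag for tag, owner in chain if owner == inst_id]


def predict_working_blocks(chain: Chain, tag: int, inst_id: int) -> Optional[int]:
    """Working blocks implied by a hit on `tag`: its 1-based rank in the
    instruction's abstract chain. Returns None when there is no hit."""
    own = abstract_chain(chain, inst_id)
    return own.index(tag) + 1 if tag in own else None


# Usage: instructions 1 and 2 interleave in one set plus its shadow tags.
chain = [(0xA, 1), (0xB, 2), (0xC, 1), (0xD, 2), (0xE, 1)]
print(predict_working_blocks(chain, 0xC, 1))  # 2
print(predict_working_blocks(chain, 0xB, 2))  # 1
print(predict_working_blocks(chain, 0xE, 1))  # 3
```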

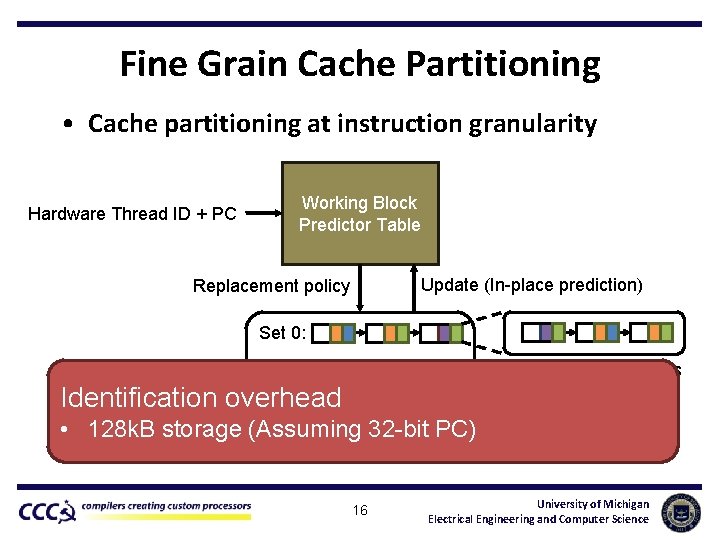

Fine Grain Cache Partitioning
• Cache partitioning at instruction granularity
[Figure: hardware thread ID + PC, working block predictor table, in-place prediction updates from shared shadow tags, replacement policy for sets 0 … N]
• Identification overhead
  – 128 kB storage (assuming a 32-bit PC)

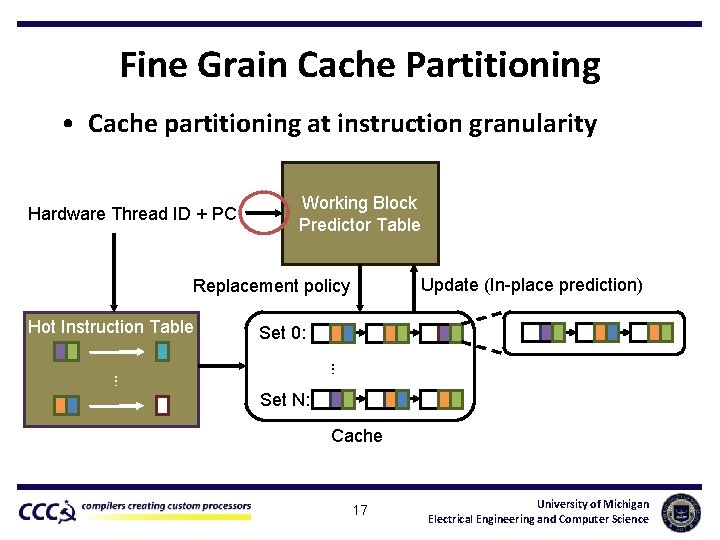

Fine Grain Cache Partitioning
• Cache partitioning at instruction granularity
[Figure: hardware thread ID + PC, working block predictor table, in-place prediction updates, replacement policy for sets 0 … N, with a hot instruction table added]

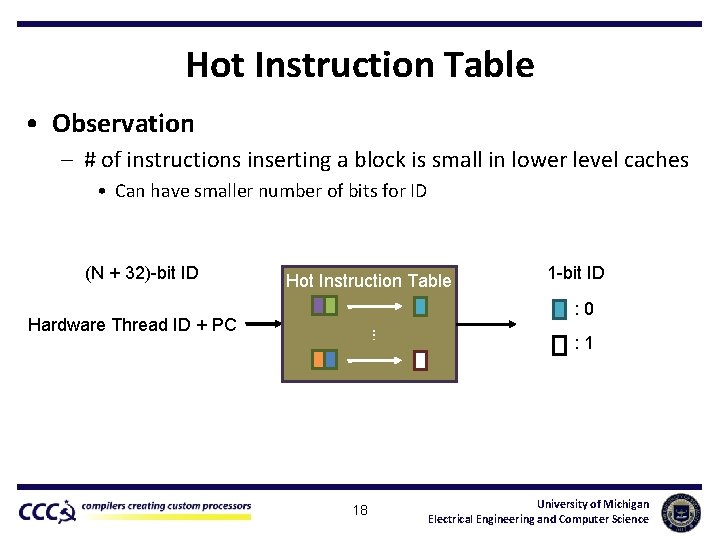

Hot Instruction Table
• Observation
  – The number of instructions inserting a block is small in lower-level caches
    • The ID can use fewer bits
[Figure: the hot instruction table maps a hardware thread ID + PC ((N + 32)-bit ID) to a short ID, e.g. a 1-bit ID of 0 or 1]

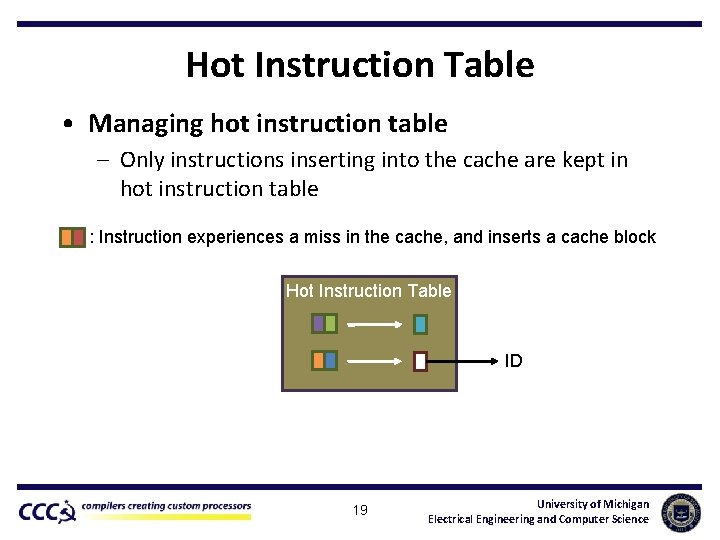

Hot Instruction Table
• Managing the hot instruction table
  – Only instructions that insert into the cache are kept in the hot instruction table
[Figure: an instruction experiences a miss in the cache and inserts a cache block, so it receives a hot instruction table ID]
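The hot instruction table can be sketched as a small map from a full (hardware thread ID, PC) identifier to a short ID with ⌈log2(capacity)⌉ bits. An entry is allocated only when an instruction misses and inserts a block. Recycling the least recently used entry's ID when the table is full is an assumption. In this sketch, a recycled ID is one way that aliasing between instructions can arise.

```python
import math
from collections import OrderedDict
from typing import Optional, Tuple


class HotInstructionTable:
    """Maps a full (HW thread ID, PC) identifier to a short ID.

    Entries are allocated only when an instruction misses and inserts a block;
    recycling the LRU entry's ID when full is an assumption.
    """

    def __init__(self, capacity: int):
        self.id_bits = max(1, math.ceil(math.log2(capacity)))
        self.entries: "OrderedDict[Tuple[int, int], int]" = OrderedDict()
        self.free_ids = list(range(capacity))

    def lookup(self, tid: int, pc: int) -> Optional[int]:
        key = (tid, pc)
        if key in self.entries:
            self.entries.move_to_end(key)  # mark as most recently used
            return self.entries[key]
        return None

    def on_insert(self, tid: int, pc: int) -> int:
        """Called when instruction (tid, pc) misses and inserts a cache block."""
        short_id = self.lookup(tid, pc)
        if short_id is None:
            if self.free_ids:
                short_id = self.free_ids.pop(0)
            else:
                _, short_id = self.entries.popitem(last=False)  # recycle LRU ID
            self.entries[(tid, pc)] = short_id
        return short_id
```

With a capacity of 2, the short ID needs a single bit, which matches the 1-bit ID example on the slide.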

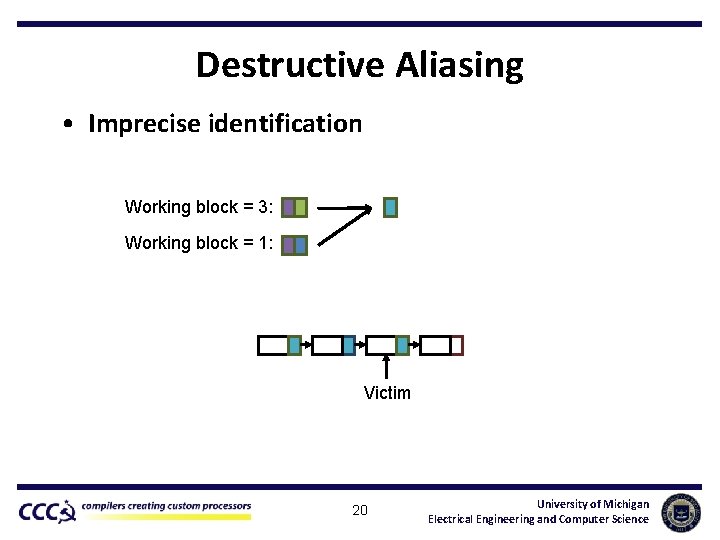

Destructive Aliasing
• Imprecise identification
[Figure: instructions with working block = 3 and working block = 1 sharing an ID, victim]

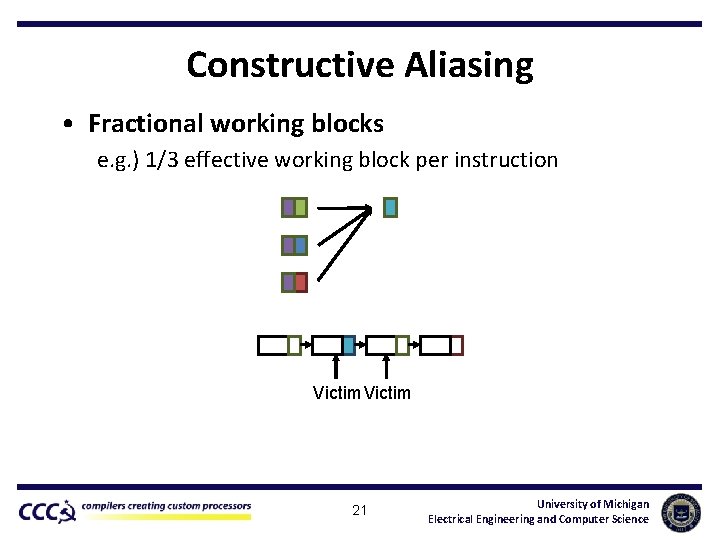

Constructive Aliasing
• Fractional working blocks
  – e.g., 1/3 effective working block per instruction
[Figure: victim]
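The "1/3 effective working block" figure can be reproduced with a small calculation. It assumes that the working blocks predicted for a shared ID are split evenly among the instructions aliasing to it. The even split and the `effective_working_blocks` helper are illustrative assumptions.

```python
from collections import Counter
from fractions import Fraction
from typing import Dict


def effective_working_blocks(alias: Dict[int, int], predicted: Dict[int, int]) -> Dict[int, Fraction]:
    """alias: PC -> shared short ID; predicted: short ID -> working blocks.

    Assumes an ID's working blocks are split evenly among its aliasing PCs.
    """
    sharers = Counter(alias.values())
    return {pc: Fraction(predicted[sid], sharers[sid]) for pc, sid in alias.items()}


# Three PCs alias to ID 0, which is predicted to need 1 working block.
shares = effective_working_blocks({0x00: 0, 0x40: 0, 0x80: 0, 0xC0: 1}, {0: 1, 1: 3})
print(shares[0x00], shares[0xC0])  # 1/3 3
```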

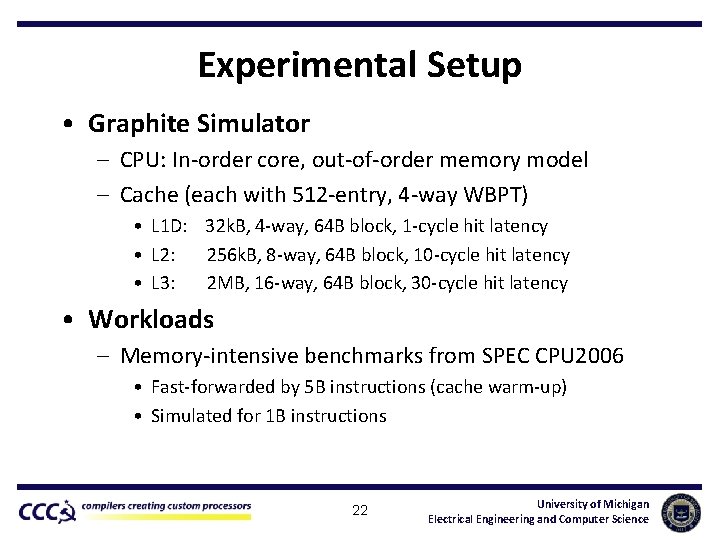

Experimental Setup
• Graphite simulator
  – CPU: in-order core, out-of-order memory model
  – Caches (each with a 512-entry, 4-way working block predictor table (WBPT))
    • L1D: 32 kB, 4-way, 64 B blocks, 1-cycle hit latency
    • L2: 256 kB, 8-way, 64 B blocks, 10-cycle hit latency
    • L3: 2 MB, 16-way, 64 B blocks, 30-cycle hit latency
• Workloads
  – Memory-intensive benchmarks from SPEC CPU2006
    • Fast-forwarded by 5B instructions (cache warm-up)
    • Simulated for 1B instructions
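The cache geometries above imply the set counts below, computed as size ÷ (ways × block size) with 1 kB = 1024 B. The 512-entry, 4-way WBPT likewise has 128 sets. The `num_sets` helper is only an arithmetic check.

```python
def num_sets(size_bytes: int, ways: int, block_bytes: int) -> int:
    """Number of sets = capacity / (associativity * block size)."""
    return size_bytes // (ways * block_bytes)


KB, MB = 1024, 1024 * 1024
caches = {
    "L1D": (32 * KB, 4, 64),
    "L2": (256 * KB, 8, 64),
    "L3": (2 * MB, 16, 64),
}
sets = {name: num_sets(*geometry) for name, geometry in caches.items()}
print(sets)                       # {'L1D': 128, 'L2': 512, 'L3': 2048}
print("WBPT:", 512 // 4, "sets")  # WBPT: 128 sets
```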

Misses per Kilo Instructions (MPKI)
• 7%, 9.1%, and 8.7% reduction (L1/L2/L3)
  – MPKI is reduced at every cache level
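MPKI is misses × 1000 ÷ instructions. If the reported percentages are relative reductions against the baseline policy's MPKI, they can be computed as below. The miss counts in the example are hypothetical, not results from this work.

```python
def mpki(misses: int, instructions: int) -> float:
    """Misses per kilo (thousand) instructions."""
    return misses * 1000 / instructions


def relative_reduction(baseline: float, improved: float) -> float:
    """Fractional MPKI reduction relative to the baseline."""
    return (baseline - improved) / baseline


# Hypothetical counts over a 1B-instruction simulation window.
base = mpki(20_000_000, 1_000_000_000)  # 20.0
ilru = mpki(18_200_000, 1_000_000_000)  # 18.2
print(f"{relative_reduction(base, ilru):.1%}")  # 9.0%
```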

Performance Comparison
• 5.3% improvement
  – DRRIP and SHiP are less effective at L1/L2

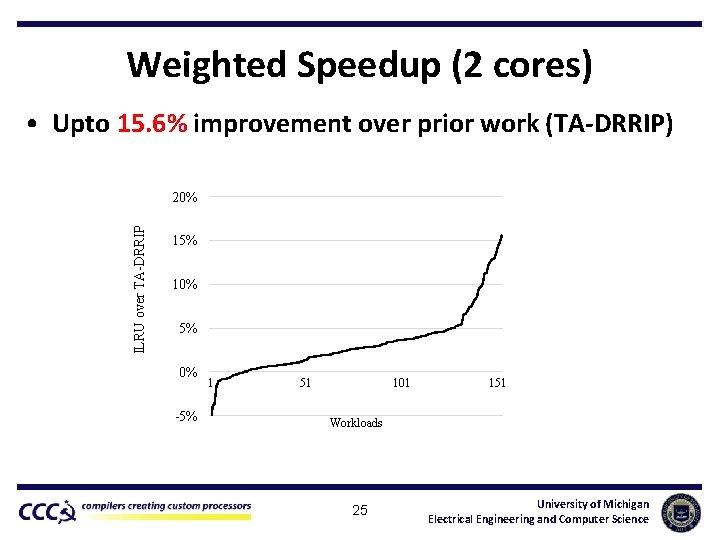

Weighted Speedup (2 cores)
• Up to 15.6% improvement over prior work (TA-DRRIP)
[Figure: improvement of ILRU over TA-DRRIP (y-axis, -5% to 20%) for each workload (x-axis)]
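Weighted speedup is commonly defined as the sum over cores of each application's shared-mode IPC divided by its IPC when running alone. Under that definition, the improvement of ILRU over TA-DRRIP for one workload is the ratio of the two weighted speedups minus one. The IPC values in the example are hypothetical.

```python
from typing import Sequence


def weighted_speedup(ipc_shared: Sequence[float], ipc_alone: Sequence[float]) -> float:
    """Sum over cores of shared-mode IPC divided by stand-alone IPC."""
    if len(ipc_shared) != len(ipc_alone):
        raise ValueError("need one stand-alone IPC per core")
    return sum(s / a for s, a in zip(ipc_shared, ipc_alone))


def improvement(ws_new: float, ws_old: float) -> float:
    """Relative gain of one policy's weighted speedup over another's."""
    return ws_new / ws_old - 1


# Hypothetical 2-core workload.
alone = [1.0, 0.8]
ws_ilru = weighted_speedup([0.9, 0.6], alone)      # ≈ 0.9 + 0.75 = 1.65
ws_tadrrip = weighted_speedup([0.8, 0.56], alone)  # ≈ 0.8 + 0.7 = 1.5
print(f"{improvement(ws_ilru, ws_tadrrip):.1%}")   # 10.0%
```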

Summary
• Cache performance with interference: ↓
  – Fine grain cache partitioning at instruction granularity
    • Improves private caches
    • Naturally extends to shared cache partitioning
• Instruction-based LRU (ILRU)
  – In-place prediction + shared shadow tags
  – Hot instruction table
• Consistent improvement for L1/L2/L3
  – 7%, 9.1%, 8.7% MPKI reduction

Questions?
