Finding surprising patterns in a time series database

Finding surprising patterns in a time series database in linear time and space. Advisor: Dr. Hsu. Graduate: Ching-Lung Chen. 9/13/2021, IDSL, Intelligent Database System Lab

Outline
• Motivation
• Objective
• Introduction
• Discretizing Time Series
• Background on string processing
• Markov models
• Suffix Trees
• Computing score by comparing trees
• TARZAN algorithm
• Experimental evaluation
• Conclusions
• Personal opinion

Motivation • In time series databases, the important problem of enumerating all surprising or interesting patterns has received far less attention than the problem of finding a specified pattern (query by content). • This problem requires a meaningful definition of “surprise” and an efficient search technique.

Objective • To develop a technique that allows a user to find surprising patterns in a massive database without having to specify in advance what a surprising pattern looks like.

Introduction • Finding surprising patterns should not be confused with the relatively simple problem of outlier detection. • We are not interested in finding individually surprising datapoints; we are interested in finding surprising patterns.

Introduction • We define a pattern as surprising if the frequency of its occurrence differs substantially from that expected by chance, given some previously seen data. • This notion has the advantage of not requiring an explicit definition of surprise, which may in any case be impossible to elicit from a domain expert.

Introduction • A suffix tree can be used to efficiently encode the frequency of all observed patterns. • We demonstrate a technique based on Markov models to calculate the expected frequency of previously unobserved patterns.

Discretizing Time Series • Suffix trees and Markov models operate on strings over a finite alphabet, whereas a time series is real-valued. The obvious solution to this problem is to discretize the time series into some finite alphabet. • The inputs are a reference time series database R, the feature window length, and the size of the desired alphabet.

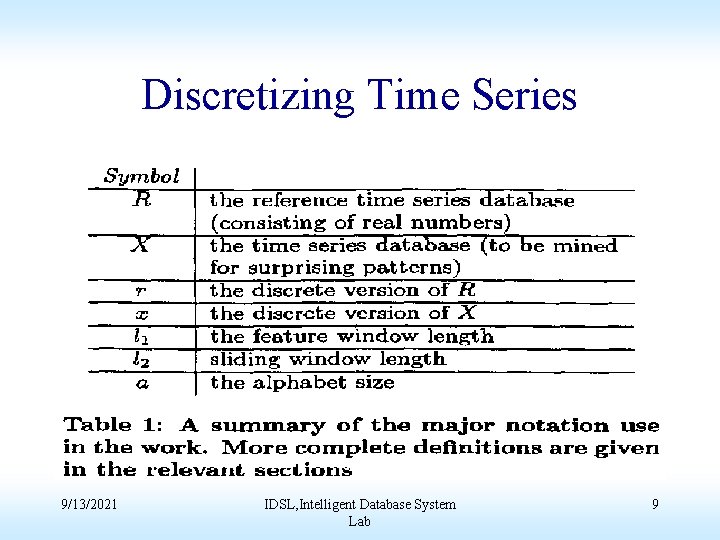

Discretizing Time Series

Discretizing Time Series • The feature window length is the length of a sliding window that is moved across the time series; a feature is extracted at each window position. • The feature extraction function, the feature window length, and the size of the desired alphabet are not fixed here; they are parameters of the method.
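A minimal Python sketch of the discretization step. Because the slides leave the feature extractor and the symbol mapping open, both are assumptions here: the feature is the slope of the least-squares line fitted to each window (a hypothetical choice), and the breakpoints are equal-frequency quantiles of the reference features, so R fixes the mapping and the same cut points are reused for the data under analysis. The function names are hypothetical.

```python
from bisect import bisect_right
from statistics import quantiles

ALPHABET = "abcdefghijklmnopqrstuvwxyz"  # supports alphabet sizes up to 26


def window_slopes(series, window):
    """Assumed feature: least-squares slope of the line fitted to each sliding window."""
    if window < 2:
        raise ValueError("window must be at least 2 to fit a line")
    t_mean = (window - 1) / 2
    t_var = sum((t - t_mean) ** 2 for t in range(window))
    slopes = []
    for start in range(len(series) - window + 1):
        seg = series[start:start + window]
        y_mean = sum(seg) / window
        cov = sum((t - t_mean) * (y - y_mean) for t, y in enumerate(seg))
        slopes.append(cov / t_var)
    return slopes


def make_breakpoints(reference_features, alphabet_size):
    """alphabet_size - 1 cut points giving roughly equal-frequency bins (assumed scheme)."""
    return quantiles(reference_features, n=alphabet_size)


def discretize(features, breakpoints):
    """Map each feature value to the symbol of the bin it falls into."""
    return "".join(ALPHABET[bisect_right(breakpoints, v)] for v in features)
```

In use, the breakpoints are computed once from R's features and then applied unchanged to the features of the database being examined, so both strings share one alphabet.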

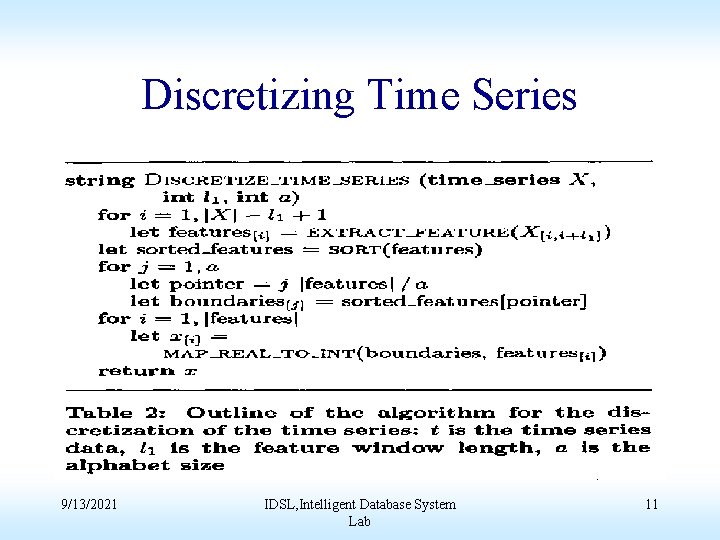

Discretizing Time Series

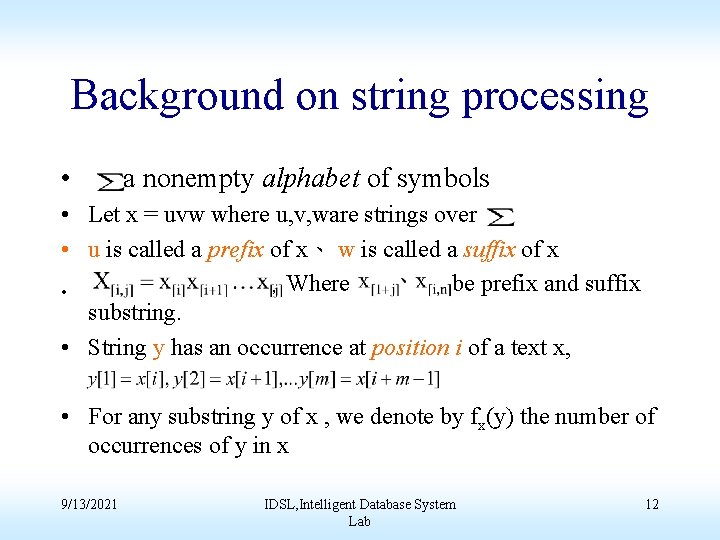

Background on string processing • Σ is a nonempty alphabet of symbols. • Let x = uvw, where u, v, w are strings over Σ. • u is called a prefix of x, w is called a suffix of x, and v is called a substring of x. • String y has an occurrence at position i of a text x if y[1] = x[i], y[2] = x[i+1], …, y[m] = x[i+m−1], where m = |y|. • For any substring y of x, we denote by fx(y) the number of occurrences of y in x.
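A small Python sketch of these definitions, using 1-based positions to match the slide's notation. The helper names are hypothetical. Note that fx(y) counts overlapping occurrences, which is also what the occurrence counts stored in the suffix tree measure.

```python
def occurrence_positions(x, y):
    """1-based positions i at which y occurs in x; overlapping occurrences count."""
    m = len(y)
    return [i + 1 for i in range(len(x) - m + 1) if x[i:i + m] == y]


def f(x, y):
    """f_x(y): the number of occurrences of y in x."""
    return len(occurrence_positions(x, y))


def split_uvw(x, i, j):
    """Split x = u v w so that v = x[i..j] (1-based, inclusive)."""
    return x[:i - 1], x[i - 1:j], x[j:]
```

For example, splitting "abaababa" with v = x[3..5] gives u = "ab" (a prefix), v = "aab" (a substring), and w = "aba" (a suffix), and "aba" occurs at positions 1, 4, and 6.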


Markov models • Let x = x[1]x[2]…x[n] be an observation of a random process and y = y[1]y[2]…y[m] an arbitrary but fixed pattern over Σ with m < n. • A stationary Markov chain of order M is completely determined by its transition matrix Π = (π(y[1], …, y[M], c)), where the entries π(y[1], …, y[M], c), for y[1], …, y[M], c ∈ Σ, are called transition probabilities: π(y[1], …, y[M], c) is the probability that the next symbol is c given that the preceding M symbols are y[1], …, y[M].

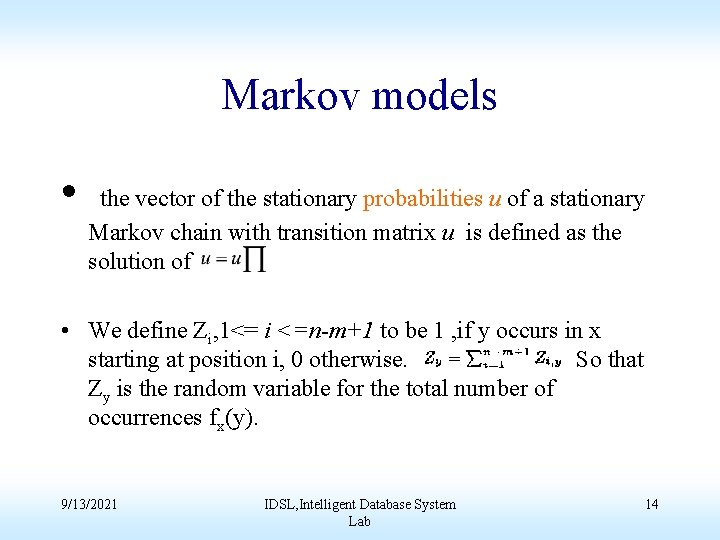

Markov models • The vector μ of the stationary probabilities of a stationary Markov chain with transition matrix Π is defined as the solution of μ = μΠ, with the entries of μ summing to 1. • We define Zi, 1 ≤ i ≤ n−m+1, to be 1 if y occurs in x starting at position i, and 0 otherwise, so that Zy = Z1 + Z2 + … + Zn−m+1 is the random variable for the total number of occurrences fx(y).
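For a first-order chain (M = 1) over a small alphabet, μ = μΠ can be solved numerically. A minimal sketch, assuming Π is given as a row-stochastic list of lists and the chain is irreducible and aperiodic; power iteration is one choice among several (solving the linear system directly works too). For order M > 1 the same idea applies with the states taken to be the length-M contexts. The second helper shows that summing the indicators Zi recovers fx(y). Names are hypothetical.

```python
def stationary_distribution(P, tol=1e-12, max_iter=100_000):
    """Solve mu = mu P with sum(mu) = 1 by power iteration.

    Assumes an irreducible, aperiodic chain so that the iteration converges.
    """
    k = len(P)
    mu = [1.0 / k] * k  # start from the uniform distribution
    for _ in range(max_iter):
        nxt = [sum(mu[a] * P[a][b] for a in range(k)) for b in range(k)]
        if max(abs(p - q) for p, q in zip(nxt, mu)) < tol:
            return nxt
        mu = nxt
    raise RuntimeError("power iteration did not converge")


def indicators(x, y):
    """Z_i for i = 1..n-m+1: 1 if y occurs in x starting at position i, else 0."""
    m = len(y)
    return [1 if x[i:i + m] == y else 0 for i in range(len(x) - m + 1)]
```

For Π = [[0.9, 0.1], [0.5, 0.5]], balancing the flow between the two states gives μ = (5/6, 1/6), which the iteration reproduces.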

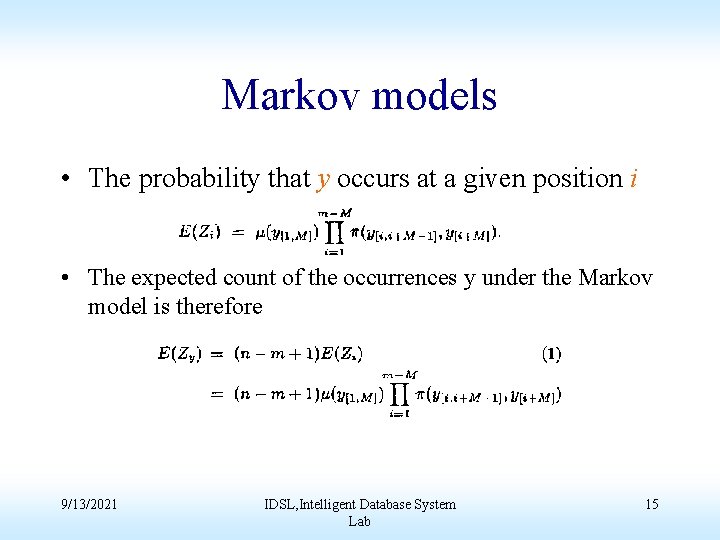

Markov models • The probability that y occurs at a given position i is the same for every i, because the chain is stationary. • The expected count of the occurrences of y under the Markov model is therefore E(Zy) = (n − m + 1) times this probability.
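A sketch of the occurrence probability and expected count for an order-M chain, assuming the standard product form: the probability that y starts at position i is the stationary probability of its first M symbols times the transition probabilities along the rest of y, and stationarity makes the expected count (n − m + 1) times that probability. Dictionaries keyed by context strings are an illustrative choice; the names are hypothetical.

```python
def prob_at_position(y, mu, P, M):
    """Probability that pattern y starts at a given position of an order-M stationary chain.

    mu maps each length-M context to its stationary probability;
    P[context][c] is the probability that symbol c follows that context.
    """
    if len(y) < M:
        raise ValueError("pattern must have at least M symbols")
    p = mu.get(y[:M], 0.0)
    for j in range(len(y) - M):
        p *= P.get(y[j:j + M], {}).get(y[j + M], 0.0)
    return p


def expected_count(y, n, mu, P, M):
    """E(Z_y): the sum of E(Z_i) over n - m + 1 start positions, all equal by stationarity."""
    return (n - len(y) + 1) * prob_at_position(y, mu, P, M)
```

With μ = {a: 5/6, b: 1/6} and transition probabilities π(a, b) = 0.1, a sequence of length n = 10 has E(Zab) = 9 · (5/6) · 0.1 = 0.75.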

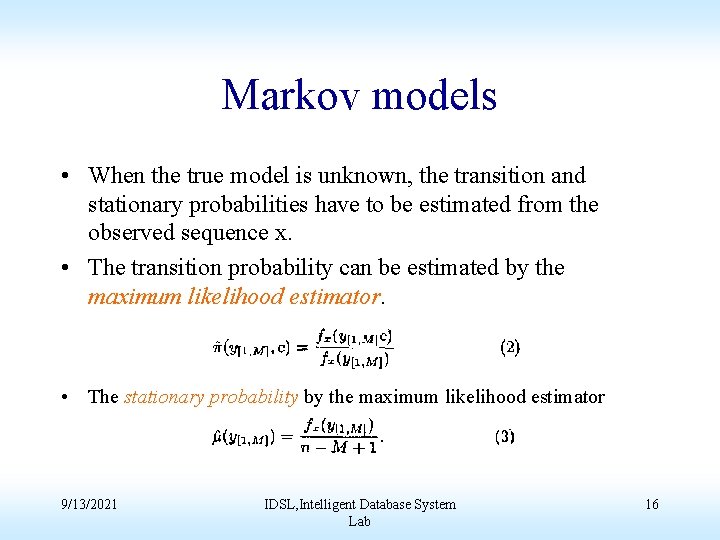

Markov models • When the true model is unknown, the transition and stationary probabilities have to be estimated from the observed sequence x. • The transition probabilities can be estimated by the maximum likelihood estimator. • The stationary probabilities can likewise be estimated by the maximum likelihood estimator.
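A sketch of count-based estimators, assuming the usual maximum likelihood forms: the transition probability from a length-M context to symbol c is the number of times the context is followed by c divided by the number of times it is followed by any symbol, and the stationary probability of a context is its count divided by the number of length-M windows, n − M + 1. Counting with `collections.Counter` stands in for reading the counts off a suffix tree; the names are hypothetical.

```python
from collections import Counter


def window_counts(x, k):
    """f_x(w) for every length-k word w of x."""
    return Counter(x[i:i + k] for i in range(len(x) - k + 1))


def estimate_markov(x, M):
    """Count-based estimates of order-M transition (P) and stationary (mu) probabilities."""
    contexts = window_counts(x, M)
    words = window_counts(x, M + 1)
    followed = Counter()  # how often each context is followed by some symbol
    for w, c in words.items():
        followed[w[:M]] += c
    P = {}
    for w, c in words.items():
        P.setdefault(w[:M], {})[w[M]] = c / followed[w[:M]]
    mu = {w: c / (len(x) - M + 1) for w, c in contexts.items()}
    return mu, P
```

For x = "aabab" and M = 1, "a" is followed by "a" once and by "b" twice, so the estimated transition probabilities out of "a" are 1/3 and 2/3, and the estimated stationary probability of "a" is 3/5.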

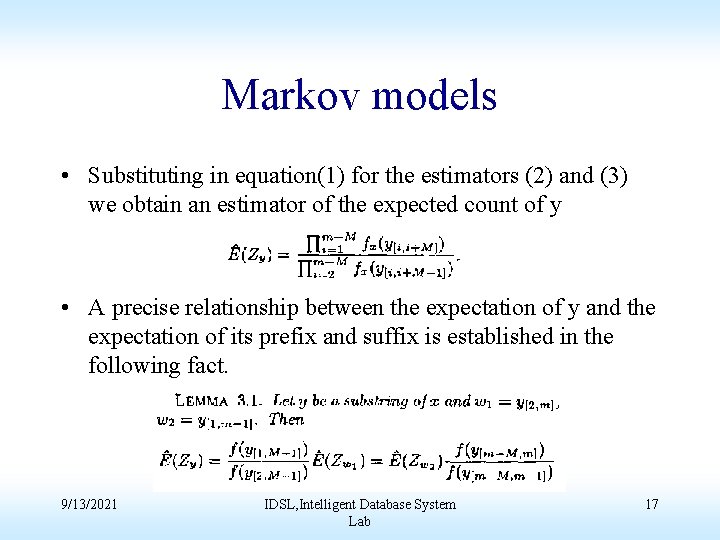

Markov models • Substituting the estimators (2) and (3) into equation (1), we obtain an estimator of the expected count of y. • A precise relationship between the expectation of y and the expectations of its prefix and suffix is established in the following fact.
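When the count-ratio estimators are substituted into the product formula for the expected count, the stationary term cancels against the first transition denominator. A sketch of the resulting estimator, assuming the transition estimate fx(y[j..j+M]) / fx(y[j..j+M−1]) (which differs from the exact maximum likelihood estimate only when the context sits at the very end of x):

Ê(Zy) = (n − m + 1)/(n − M + 1) · ∏ j=1..m−M fx(y[j..j+M]) / ∏ j=2..m−M fx(y[j..j+M−1]).

The length factor is close to 1 when n ≫ m. With the largest useful order M = m − 2, the estimate reduces to fx(y[1..m−1]) · fx(y[2..m]) / fx(y[2..m−1]) times that factor, that is, the prefix and suffix counts divided by the count of their overlap; this appears to be the relationship the fact above refers to. The code uses 0-based Python slices.

```python
def f(x, y):
    """f_x(y): number of (possibly overlapping) occurrences of y in x."""
    m = len(y)
    return sum(1 for i in range(len(x) - m + 1) if x[i:i + m] == y)


def estimated_expected_count(x, y, M):
    """Estimate E(Z_y) under an order-M Markov model fitted to x (count-ratio form)."""
    n, m = len(x), len(y)
    if not 1 <= M < m:
        raise ValueError("need 1 <= M < len(y)")
    num = 1.0
    for j in range(m - M):        # windows y[j:j+M+1], each of length M + 1
        num *= f(x, y[j:j + M + 1])
    den = 1.0
    for j in range(1, m - M):     # overlaps y[j:j+M], each of length M
        den *= f(x, y[j:j + M])
    if den == 0:                  # an overlap never occurs, so the numerator is 0 too
        return 0.0
    return (n - m + 1) / (n - M + 1) * num / den
```

For x = "abaababa" and y = "aba" with M = 1, the estimate is (6/8) · fx("ab") · fx("ba") / fx("b") = 0.75 · 3 · 3 / 3 = 2.25, while the observed count is 3.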

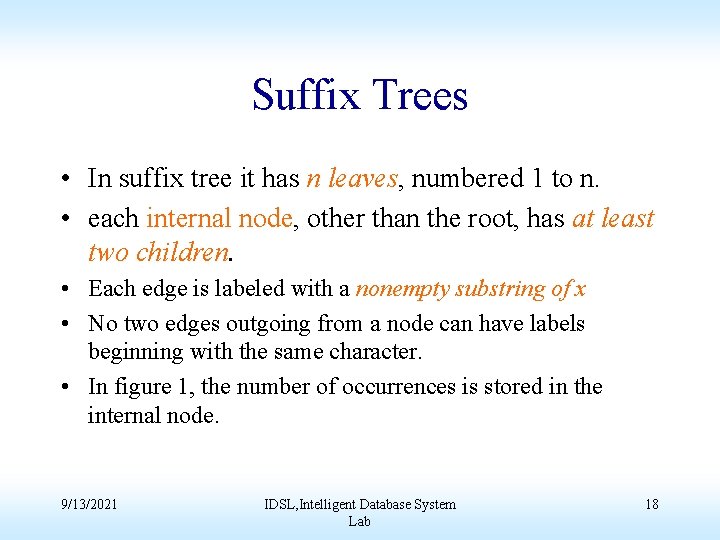

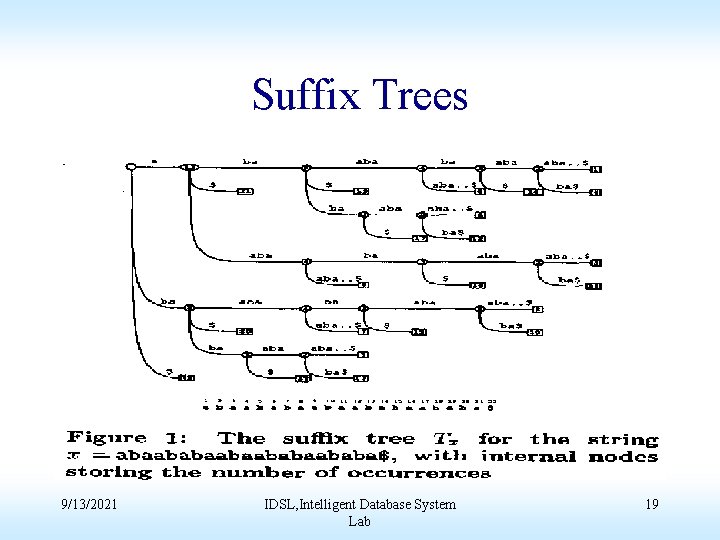

Suffix Trees • The suffix tree of a string x of length n has n leaves, numbered 1 to n; leaf i corresponds to the suffix of x starting at position i. • Each internal node, other than the root, has at least two children. • Each edge is labeled with a nonempty substring of x. • No two edges outgoing from a node can have labels beginning with the same character. • In Figure 1, the number of occurrences of each node's path label is stored in the internal node; it equals the number of leaves in that node's subtree.
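Building a true suffix tree in linear time (for example with Ukkonen's algorithm) is too long for a slide-sized example. As a plainly simpler stand-in, this sketch builds a depth-bounded suffix trie: the first max_depth symbols of every suffix are inserted, and each node counts how many suffixes pass through it. That count equals the number of occurrences of the node's path label, which is the annotation described for Figure 1. Unlike a suffix tree, the trie does not compact unary paths, so it costs O(n · max_depth) time and space rather than linear; since the scoring step never considers substrings longer than l, bounding the depth is a natural simplification. Names are hypothetical.

```python
from collections import deque


class TrieNode:
    __slots__ = ("children", "count")

    def __init__(self):
        self.children = {}  # symbol -> TrieNode
        self.count = 0      # suffixes passing through this node = f_x(path label)


def build_suffix_trie(x, max_depth):
    """Insert the first max_depth symbols of every suffix of x."""
    root = TrieNode()
    for i in range(len(x)):
        node = root
        for c in x[i:i + max_depth]:
            child = node.children.get(c)
            if child is None:
                child = node.children[c] = TrieNode()
            child.count += 1
            node = child
    return root


def count(root, w):
    """f_x(w) for |w| <= max_depth; 0 if w does not occur."""
    node = root
    for c in w:
        node = node.children.get(c)
        if node is None:
            return 0
    return node.count


def breadth_first(root):
    """Yield (path label, count) for every non-root node, shortest labels first."""
    queue = deque([("", root)])
    while queue:
        label, node = queue.popleft()
        if label:
            yield label, node.count
        for c in sorted(node.children):
            queue.append((label + c, node.children[c]))
```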

Suffix Trees

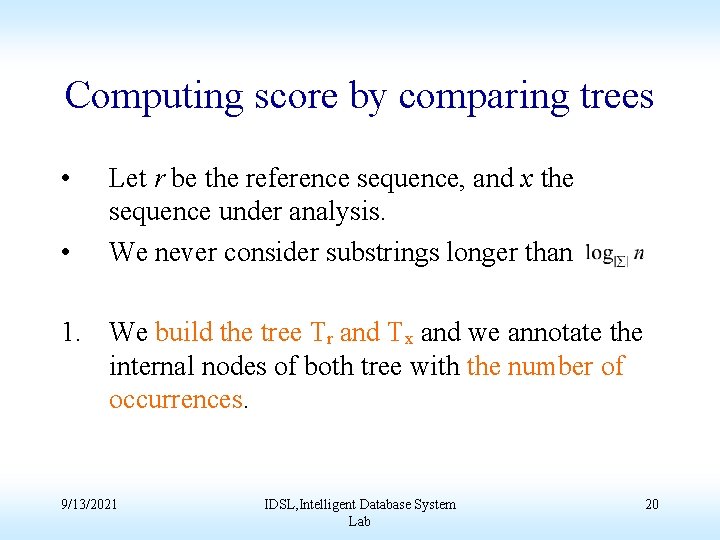

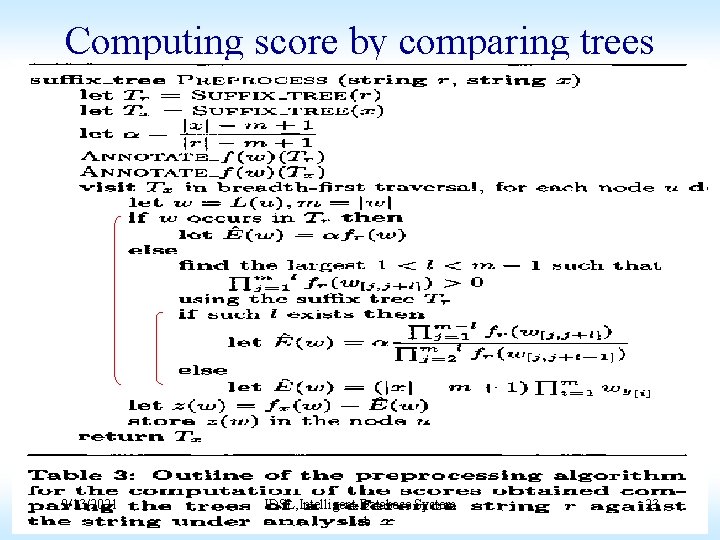

Computing score by comparing trees • Let r be the reference sequence and x the sequence under analysis. • We never consider substrings longer than l. 1. We build the trees Tr and Tx and annotate the internal nodes of both trees with the number of occurrences.

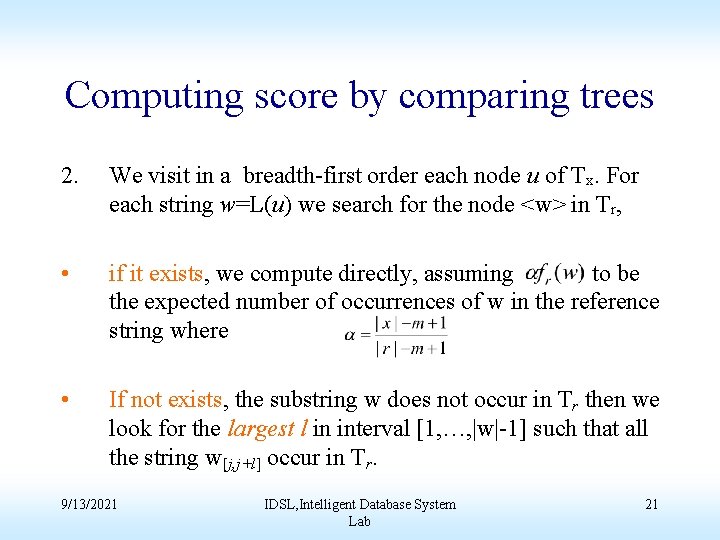

Computing score by comparing trees 2. We visit each node u of Tx in breadth-first order. For each string w = L(u), we search for the node ⟨w⟩ in Tr. • If it exists, we compute the expected number of occurrences of w directly from its frequency fr(w) in the reference string, rescaled to account for the different lengths of r and x. • If it does not exist, the substring w does not occur in r; we then look for the largest l in the interval [1, |w|−1] such that all the strings w[j, j+l] occur in Tr, and estimate the expected count from their frequencies with a Markov model of order l.

Computing score by comparing trees 3. We set the surprise z(w) to be the difference between the observed number of occurrences fx(w) and the expected number of occurrences of w computed in step 2.
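A sketch of steps 1 to 3, with `Counter` objects standing in for the annotated trees Tr and Tx (a count lookup plays the role of searching for ⟨w⟩ in Tr). Two details are assumptions not spelled out in the text: the rescaling factor α = (|x| − |w| + 1)/(|r| − |w| + 1), the ratio of the numbers of length-|w| windows in x and r, and a fallback expected count of 0 when no suitable l exists. When w is missing from r, the expected count is an order-l Markov estimate built from the counts of the length-(l+1) windows w[j, j+l] and their length-l overlaps. Names are hypothetical.

```python
from collections import Counter


def substring_counts(s, max_len):
    """f_s(w) for every substring w of s with |w| <= max_len (stands in for T_s)."""
    counts = Counter()
    for i in range(len(s)):
        for k in range(1, min(max_len, len(s) - i) + 1):
            counts[s[i:i + k]] += 1
    return counts


def expected_count(w, fr, len_r, len_x):
    """Step 2: expected occurrences of w in x, estimated from the reference counts fr."""
    alpha = (len_x - len(w) + 1) / (len_r - len(w) + 1)  # assumed rescaling factor
    if w in fr:                                           # <w> exists in T_r
        return alpha * fr[w]
    m = len(w)
    for k in range(m - 1, 1, -1):        # k = l + 1; try the largest l first
        windows = [w[j:j + k] for j in range(m - k + 1)]  # all w[j, j+l]
        if all(v in fr for v in windows):
            num = 1.0
            for v in windows:
                num *= fr[v]
            den = 1.0
            for v in windows[1:]:
                den *= fr[v[:-1]]        # length-l overlap with the previous window
            return alpha * num / den     # order-l Markov estimate
    return 0.0                           # hypothetical fallback: no usable l


def surprise_scores(r, x, max_len):
    """Step 3: z(w) = f_x(w) - expected count, for every substring of x up to max_len."""
    fr = substring_counts(r, max_len)
    fx = substring_counts(x, max_len)
    # Visiting words by increasing length mirrors a breadth-first walk of T_x.
    return {w: fx[w] - expected_count(w, fr, len(r), len(x))
            for w in sorted(fx, key=lambda s: (len(s), s))}
```

For r = "aab" and x = "aaab", the word "aaa" never occurs in r, but its windows "aa" do, so its expected count is α · fr("aa") · fr("aa") / fr("a") = 2 · 1 · 1 / 2 = 1 and its surprise is 0.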

Computing score by comparing trees

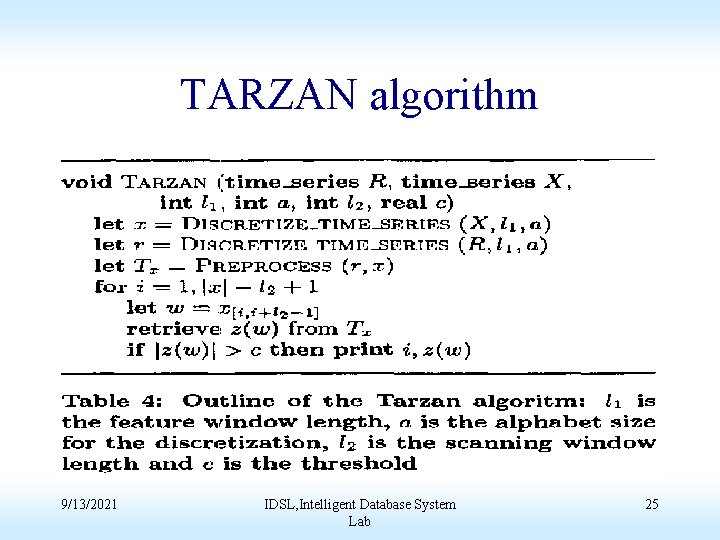

TARZAN algorithm • The inputs are the reference database R, the database to be examined X, and the three parameters that control the feature extraction and representation. • The output is the set of substrings whose surprise ratings exceed a user-defined threshold.
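An end-to-end sketch of the algorithm's structure. Several choices are assumptions rather than details from the slides: the feature is the least-squares slope of each sliding window, the alphabet breakpoints are equal-frequency quantiles of R's features, substring counters stand in for the annotated suffix trees, the counts are rescaled by α = (|x| − |w| + 1)/(|r| − |w| + 1), and the expected count falls back to 0 when no usable Markov order exists. The three parameters are taken to be the feature window length, the alphabet size, and the maximum substring length l. The threshold is applied to |z(w)| so that both over- and under-represented substrings are reported, which is one reading of "surprise ratings exceeding a threshold". All names are hypothetical.

```python
from bisect import bisect_right
from collections import Counter
from statistics import quantiles

ALPHABET = "abcdefghijklmnopqrstuvwxyz"


def window_slopes(series, window):
    """Assumed feature: least-squares slope of each sliding window (window >= 2)."""
    t_mean = (window - 1) / 2
    t_var = sum((t - t_mean) ** 2 for t in range(window))
    out = []
    for s in range(len(series) - window + 1):
        seg = series[s:s + window]
        y_mean = sum(seg) / window
        out.append(sum((t - t_mean) * (y - y_mean) for t, y in enumerate(seg)) / t_var)
    return out


def substring_counts(s, max_len):
    """Occurrence counts of all substrings up to max_len (stands in for a suffix tree)."""
    counts = Counter()
    for i in range(len(s)):
        for k in range(1, min(max_len, len(s) - i) + 1):
            counts[s[i:i + k]] += 1
    return counts


def expected_count(w, fr, len_r, len_x):
    alpha = (len_x - len(w) + 1) / (len_r - len(w) + 1)  # assumed rescaling
    if w in fr:
        return alpha * fr[w]
    for k in range(len(w) - 1, 1, -1):                    # largest l = k - 1 first
        windows = [w[j:j + k] for j in range(len(w) - k + 1)]
        if all(v in fr for v in windows):
            num = den = 1.0
            for v in windows:
                num *= fr[v]
            for v in windows[1:]:
                den *= fr[v[:-1]]
            return alpha * num / den
    return 0.0                                            # hypothetical fallback


def tarzan(R, X, window, alphabet_size, max_len, threshold):
    """Return (substring, z) pairs of X's discretized form with |z| > threshold."""
    r_feats = window_slopes(R, window)
    cuts = quantiles(r_feats, n=alphabet_size)            # breakpoints learned from R only

    def to_symbols(feats):
        return "".join(ALPHABET[bisect_right(cuts, v)] for v in feats)

    r, x = to_symbols(r_feats), to_symbols(window_slopes(X, window))
    fr, fx = substring_counts(r, max_len), substring_counts(x, max_len)
    scores = ((w, fx[w] - expected_count(w, fr, len(r), len(x))) for w in fx)
    hits = [(w, z) for w, z in scores if abs(z) > threshold]
    return sorted(hits, key=lambda wz: -abs(wz[1]))      # most surprising first
```

Because every substring of X is scored against counts from R, a flat stretch inserted into an otherwise zig-zag signal produces symbol runs that never occur in the reference, and those runs surface with large positive scores.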

TARZAN algorithm

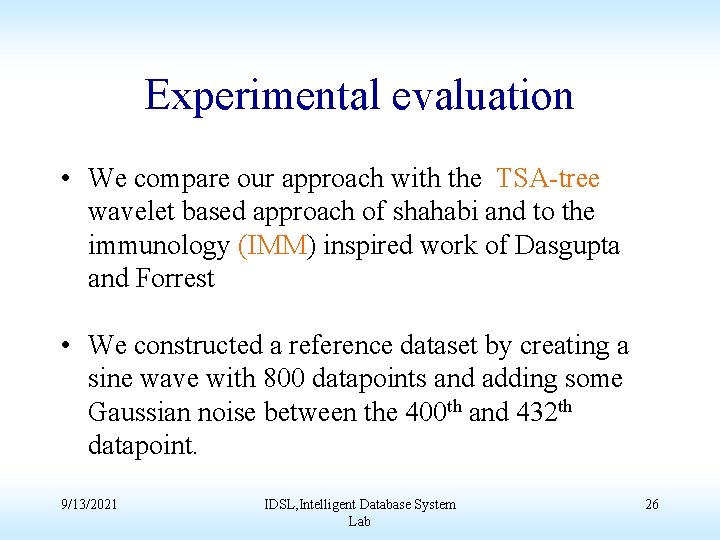

Experimental evaluation • We compare our approach with the wavelet-based TSA-tree approach of Shahabi and with the immunology-inspired (IMM) work of Dasgupta and Forrest. • We constructed a reference dataset by creating a sine wave with 800 datapoints and adding some Gaussian noise between the 400th and 432nd datapoints.

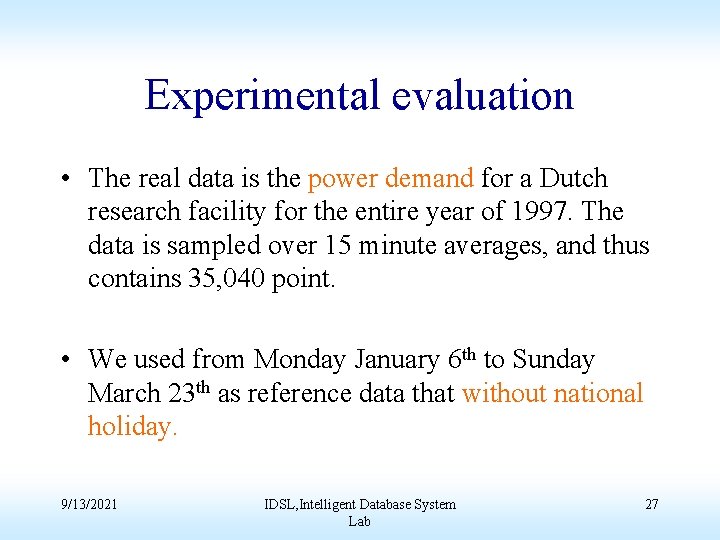

Experimental evaluation • The real data is the power demand of a Dutch research facility for the entire year of 1997. The data is sampled as 15-minute averages and thus contains 35,040 points. • As reference data we used the period from Monday, January 6th to Sunday, March 23rd, which contains no national holidays.

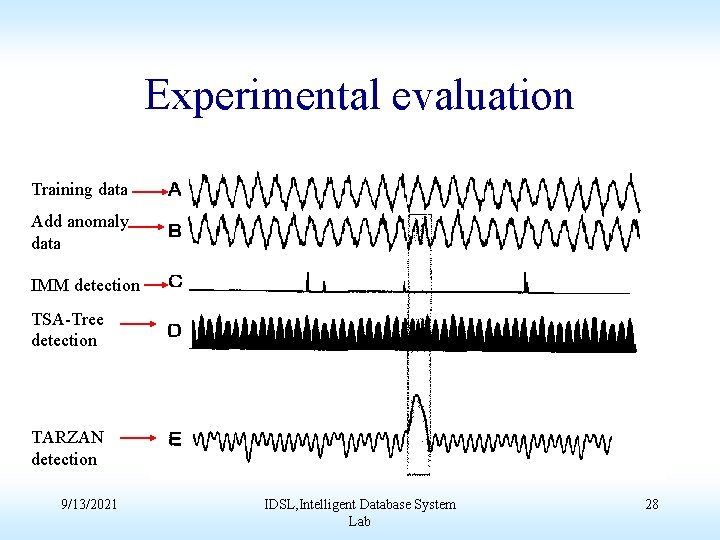

Experimental evaluation (figure panels: training data; data with an added anomaly; IMM detection; TSA-tree detection; TARZAN detection)

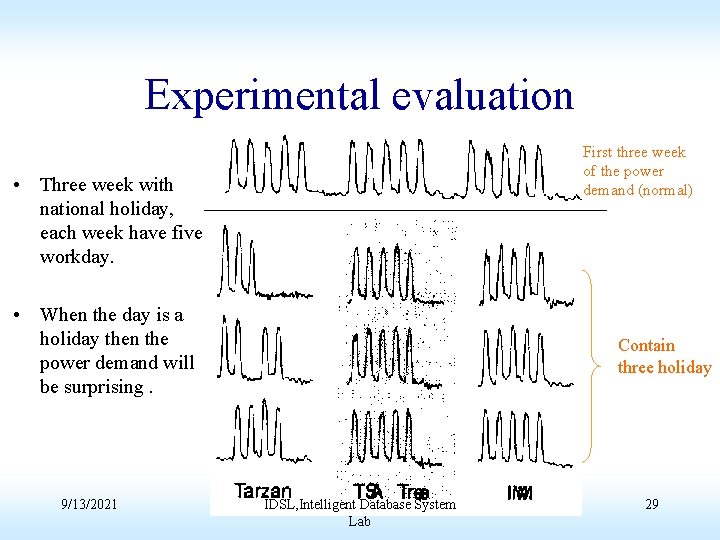

Experimental evaluation (figure: the first three weeks of power demand, which are normal, and three weeks that contain holidays) • Three weeks with national holidays; a normal week has five workdays. • When a workday is a holiday, the power demand on that day is surprising.

Related work • Several papers suggest methods to find both trends and surprises in large time series datasets. • The TSA-tree is not suitable for detecting unusual data patterns that hide inside the normal signal range.

Conclusions • We introduced TARZAN, an algorithm that detects surprising patterns in a time series database in linear space and time. • Our definition of surprising is general and domain independent. • We compared it to two other algorithms on both real and synthetic data, and found it to have much higher sensitivity and selectivity.

Personal Opinion • Maybe we can use such a method to find surprising patterns in the CIS database. • The Markov models in this paper are too difficult to understand, so I cannot give an example of them.

- Slides: 32