Finding Self-similarity in People Opportunistic Networks, Ling-Jyh Chen

- Slides: 22

Finding Self-similarity in People Opportunistic Networks • Ling-Jyh Chen, Yung-Chih Chen, Paruvelli Sreedevi, Kuan-Ta Chen, Chen-Hung Yu, Hao Chu

Motivation • Fundamental properties of opportunistic networks are still under investigation. • Observe the inter-contact time distribution to better understand network connectivity. • The long-ignored censorship issue • Regular patterns in people’s mobility

Contribution • Point out the censorship present in opportunistic traces and recover from it – Propose the Censorship Removal Algorithm (CRA) – Recover censored measurements • Show that the inter-contact time process is self-similar, as a basis for future research on opportunistic networks

Outline • Trace Description • Censorship Issue • Survival Analysis • Censorship Removal Algorithm • Self-similarity

Trace Description • UCSD campus trace – 77 days, 273 nodes involved – Client-based trace using PDAs • Dartmouth College trace – 1,777 days, 5,148 nodes involved – 77 days extracted for comparison – Interface-based trace using Wi-Fi adapters • Basic assumption for a contact – Two nodes are associated with the same AP during the same time period.

Inter-contact time • Time period between two consecutive contacts • Simplest way to observe network connectivity – Disconnection duration – Reconnection/disconnection frequency – Distribution of inter-contact time

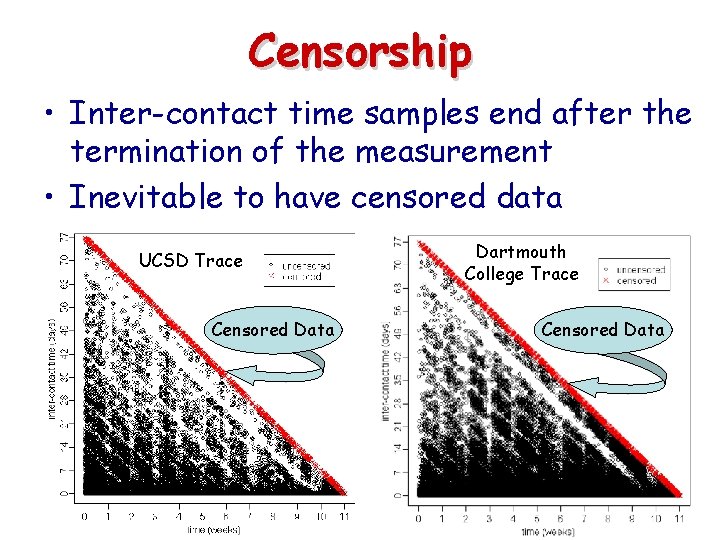

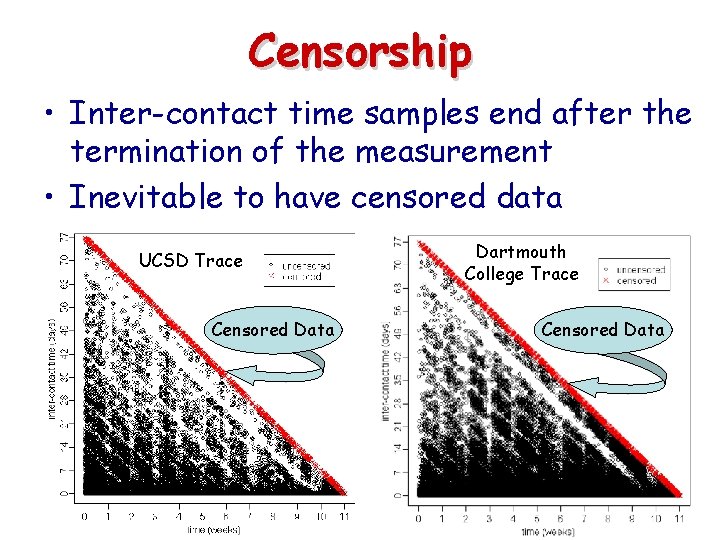
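The definition above can be sketched in a few lines of Python. This is a minimal illustration, assuming each contact of one node pair is stored as a `(start, end)` tuple; that layout and the function name `inter_contact_times` are assumptions made here, not the format of the UCSD or Dartmouth traces.

```python
from typing import List, Tuple


def inter_contact_times(contacts: List[Tuple[float, float]]) -> List[float]:
    """Gaps between consecutive contacts of a single node pair.

    `contacts` holds hypothetical (start, end) times for each contact.
    """
    ordered = sorted(contacts)
    gaps: List[float] = []
    if not ordered:
        return gaps
    prev_end = ordered[0][1]
    for start, end in ordered[1:]:
        if start > prev_end:
            # The pair was disconnected between prev_end and start.
            gaps.append(start - prev_end)
        # Overlapping contacts are merged into one connected period.
        prev_end = max(prev_end, end)
    return gaps


# Illustrative data only: two of the four contacts overlap.
example_contacts = [(0, 10), (25, 30), (28, 40), (100, 105)]
example_gaps = inter_contact_times(example_contacts)
```

Merging overlapping contacts before measuring gaps is a design choice of this sketch; it keeps a handover between two associations from being counted as a disconnection.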

Censorship • Some inter-contact time samples end only after the measurement terminates, so their full length is not observed • Censored data are inevitable • Figures: censored data in the UCSD trace; censored data in the Dartmouth College trace

Survival Analysis • An important field of study in biostatistics, medicine, … – Estimates the survival time (time until death) of censored patients – Maps to censored inter-contact time samples • Censored samples should follow the same likelihood distribution as the uncensored ones. – Kaplan-Meier estimator – Survivorship function

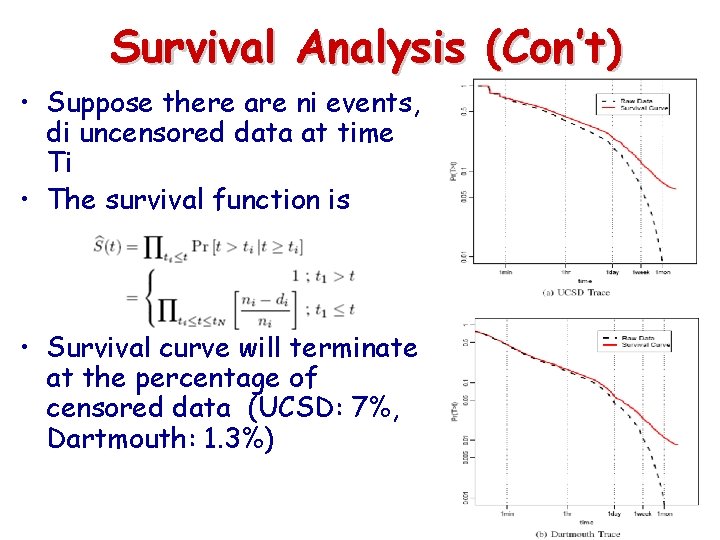

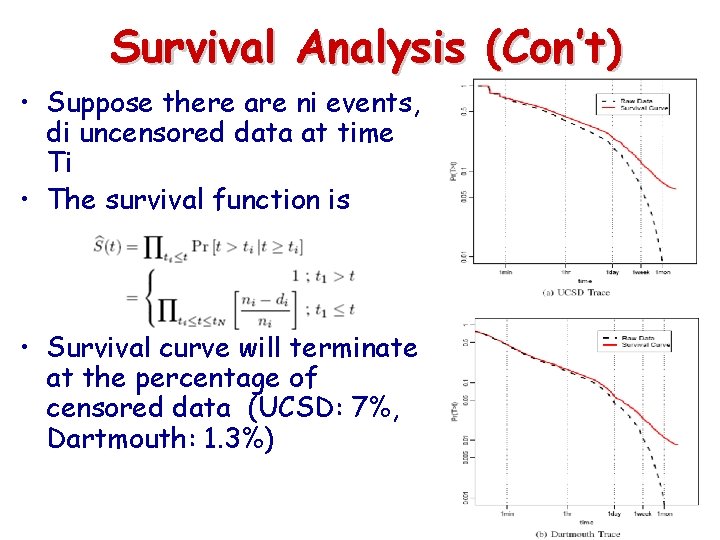

Survival Analysis (Cont’d) • Suppose there are $n_i$ events and $d_i$ uncensored data at time $T_i$ • The survival function, in the standard Kaplan-Meier form, is $\hat{S}(t) = \prod_{T_i \le t} \frac{n_i - d_i}{n_i}$ • The survival curve terminates at the percentage of censored data (UCSD: 7%, Dartmouth: 1.3%)

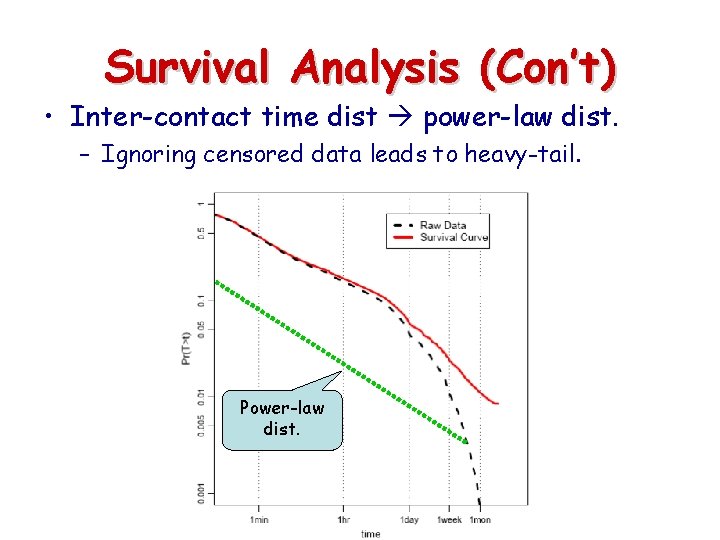

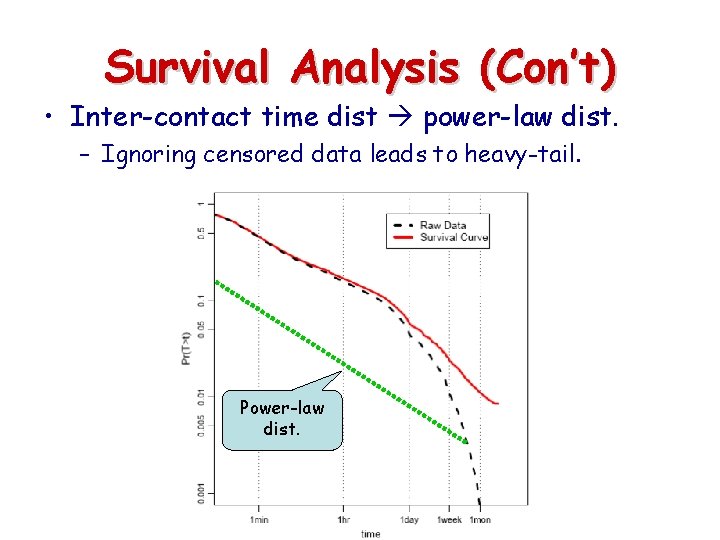
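The Kaplan-Meier estimator named in these slides can be sketched as follows. This is the textbook product-limit estimator, not code from the authors; the `(duration, censored)` sample layout and the function name `kaplan_meier` are assumptions for illustration.

```python
from typing import List, Tuple


def kaplan_meier(samples: List[Tuple[float, bool]]) -> List[Tuple[float, float]]:
    """Product-limit survival curve.

    `samples` holds hypothetical (duration, censored) pairs. Returns
    (t, S(t)) at every time t where at least one uncensored sample ends.
    """
    ordered = sorted(samples)
    at_risk = len(ordered)  # samples still under observation just before t
    survival = 1.0
    curve: List[Tuple[float, float]] = []
    i = 0
    while i < len(ordered):
        t = ordered[i][0]
        ended = 0    # uncensored samples ending exactly at t
        leaving = 0  # all samples (censored or not) leaving the risk set at t
        while i < len(ordered) and ordered[i][0] == t:
            if not ordered[i][1]:
                ended += 1
            leaving += 1
            i += 1
        if ended:
            survival *= (at_risk - ended) / at_risk
            curve.append((t, survival))
        at_risk -= leaving
    return curve


# Illustrative data only: two of the five samples are censored.
km_samples = [(2, False), (3, True), (5, False), (5, False), (8, True)]
km_curve = kaplan_meier(km_samples)
```

Because the longest sample in this toy input is censored, the curve never reaches zero, which mirrors the slides' remark that the survival curve terminates above zero when censored data are present.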

Survival Analysis (Cont’d) • Inter-contact time distribution and the power-law distribution – Ignoring censored data leads to a heavy-tailed, power-law distribution.

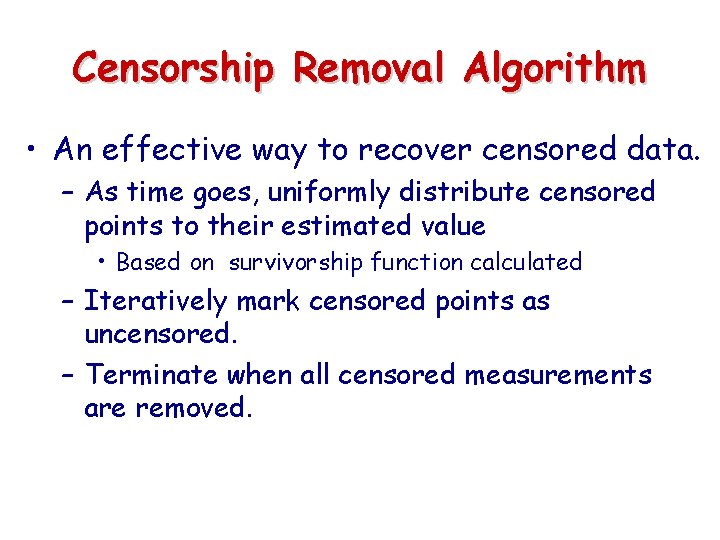

Censorship Removal Algorithm • An effective way to recover censored data – Moving forward in time, uniformly distribute the censored points to their estimated values • Based on the calculated survivorship function – Iteratively mark censored points as uncensored – Terminate when all censored measurements have been removed

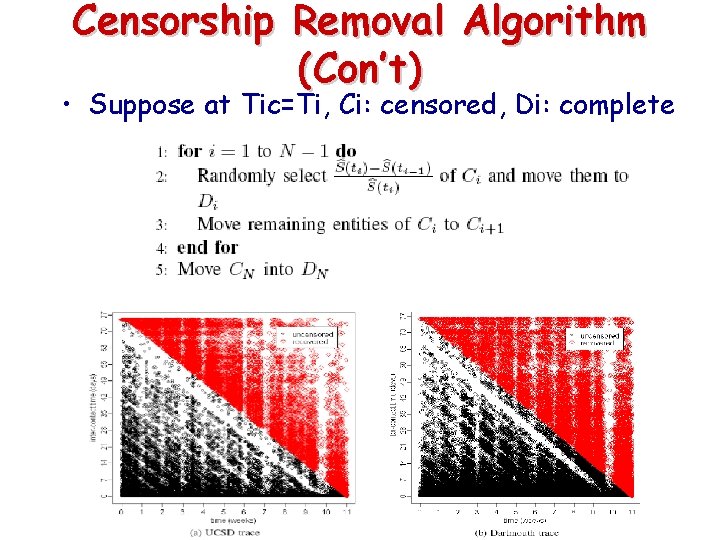

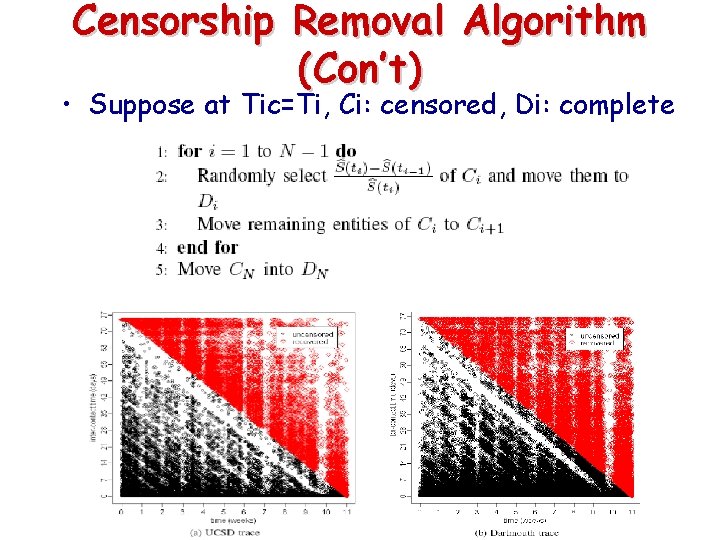

Censorship Removal Algorithm (Cont’d) • Suppose at $T_i^c = T_i$, $C_i$: censored, $D_i$: complete

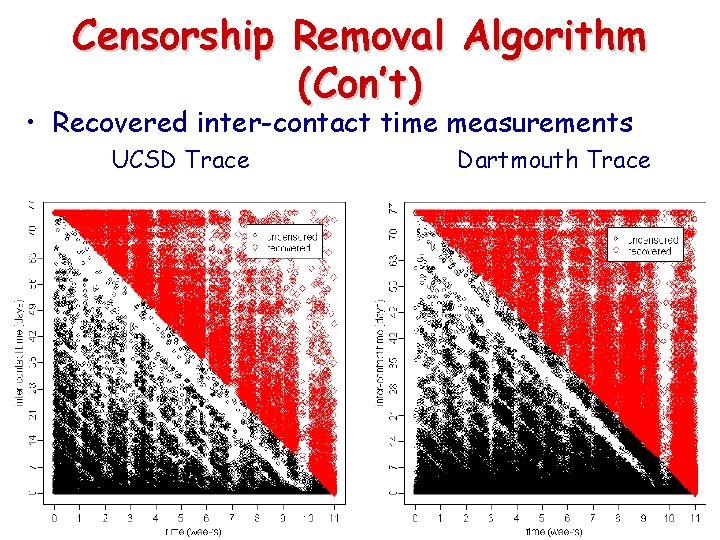

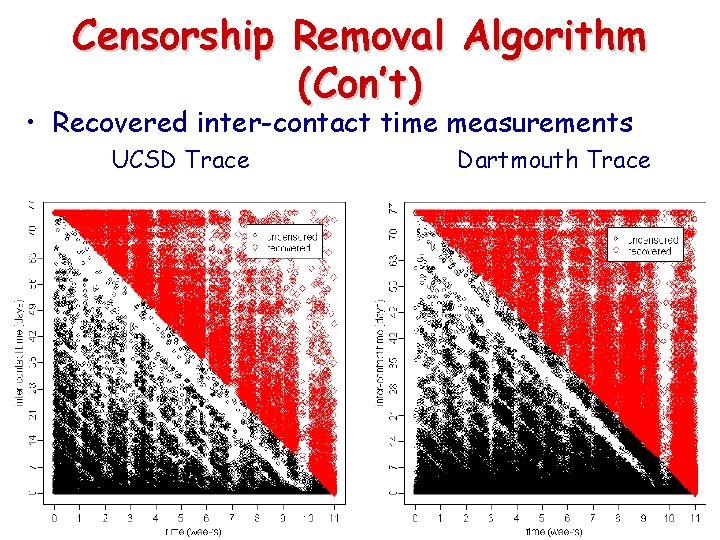
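The slides give the CRA details only as images, so the following is not the authors' algorithm. It is a simplified stand-in, written under stated assumptions, that illustrates the general idea of walking through censored points in time order, spreading them uniformly over longer observed values, and marking them as uncensored. The function name `recover_censored` and the even-rank spreading rule are hypothetical choices.

```python
from typing import List, Tuple


def recover_censored(samples: List[Tuple[float, bool]]) -> List[float]:
    """Simplified stand-in for censorship removal (NOT the authors' CRA).

    `samples` holds hypothetical (duration, censored) pairs. Censored
    samples are handled in increasing order of censoring time; each group
    sharing a censoring time is spread evenly over the ranks of the
    complete samples that lasted longer, then treated as complete.
    """
    complete = sorted(t for t, censored in samples if not censored)
    pending = sorted(t for t, censored in samples if censored)
    i = 0
    while i < len(pending):
        c = pending[i]
        j = i
        while j < len(pending) and pending[j] == c:
            j += 1
        group = j - i
        longer = [t for t in complete if t > c]
        if longer:
            # Evenly spaced ranks inside `longer` for the whole group.
            new = [longer[(2 * k + 1) * len(longer) // (2 * group)]
                   for k in range(group)]
        else:
            # Nothing longer was observed: keep the censoring time itself.
            new = [c] * group
        complete = sorted(complete + new)  # these points now count as complete
        i = j
    return complete


# Illustrative data only: two samples censored at time 3.
cra_samples = [(1, False), (4, False), (6, False), (9, False), (3, True), (3, True)]
cra_recovered = recover_censored(cra_samples)
```

A faithful implementation would place the points according to the survivorship function rather than by simple ranks; the sketch only shows the iterate-and-mark structure.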

Censorship Removal Algorithm (Cont’d) • Recovered inter-contact time measurements • Figures: UCSD trace; Dartmouth trace

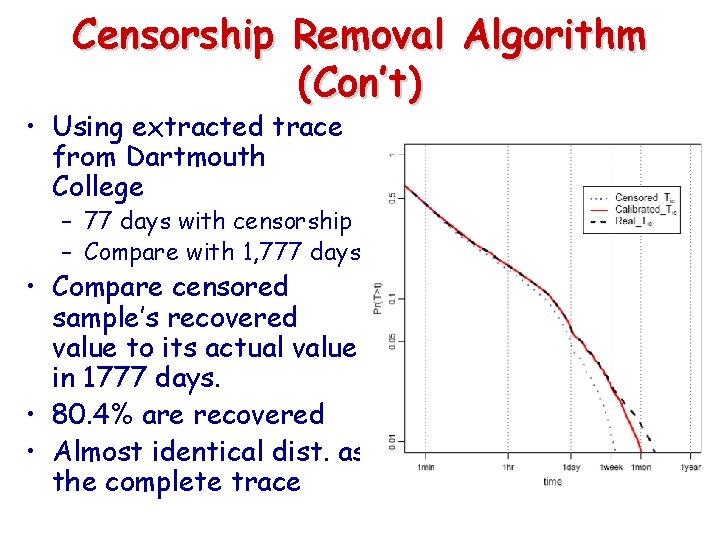

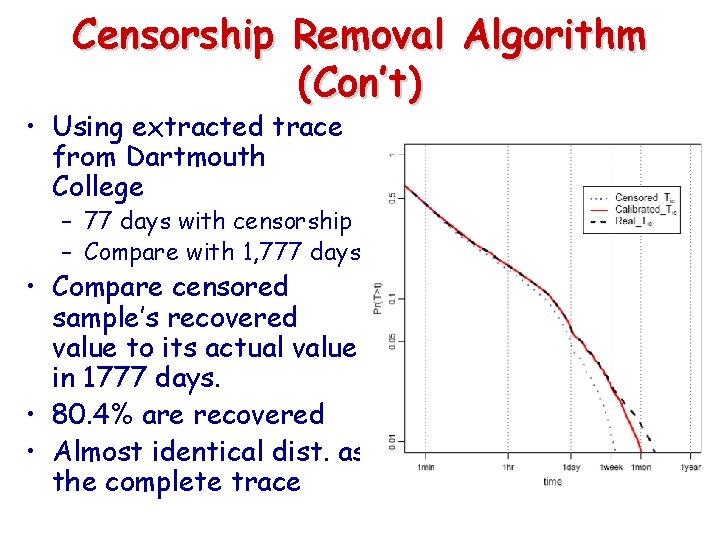

Censorship Removal Algorithm (Cont’d) • Using the trace extracted from Dartmouth College – 77 days with censorship – Compared with the full 1,777 days • Compare each censored sample’s recovered value with its actual value in the 1,777-day trace. • 80.4% are recovered • Almost identical distribution to the complete trace

Self-Similarity • What is self-similarity? – By definition, a self-similar object is exactly or approximately similar to a part of itself. • In opportunistic networks, we focus on network connectivity: inter-contact time • With the recovered measurements, we show that the inter-contact time series is a self-similar process – Periodic reconnection/disconnection – Regular patterns in people opportunistic networks

Self-Similarity • A self-similar series – Distribution should be heavy-tailed – Should satisfy three statistical analyses • Each estimates a specific parameter: the Hurst parameter (H) • Variance plot, R/S plot, periodogram plot • H should be in the range 0.5 to 1 – Results of the three methods should fall within the 95% confidence interval of the Whittle estimator

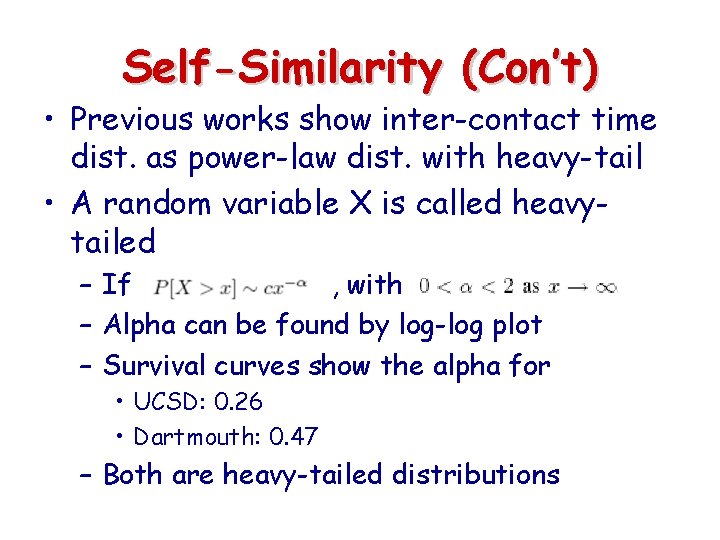

Self-Similarity (Cont’d) • Previous works show the inter-contact time distribution to be a power-law distribution with a heavy tail • A random variable X is called heavy-tailed – If $P[X > x] \sim c\,x^{-\alpha}$ as $x \to \infty$, with $0 < \alpha < 2$ – Alpha can be found from a log-log plot – Survival curves show the alpha for • UCSD: 0.26 • Dartmouth: 0.47 – Both are heavy-tailed distributions

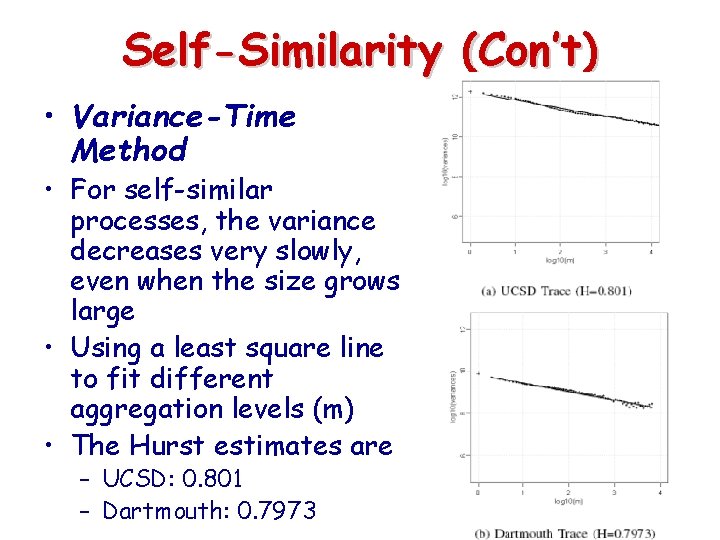

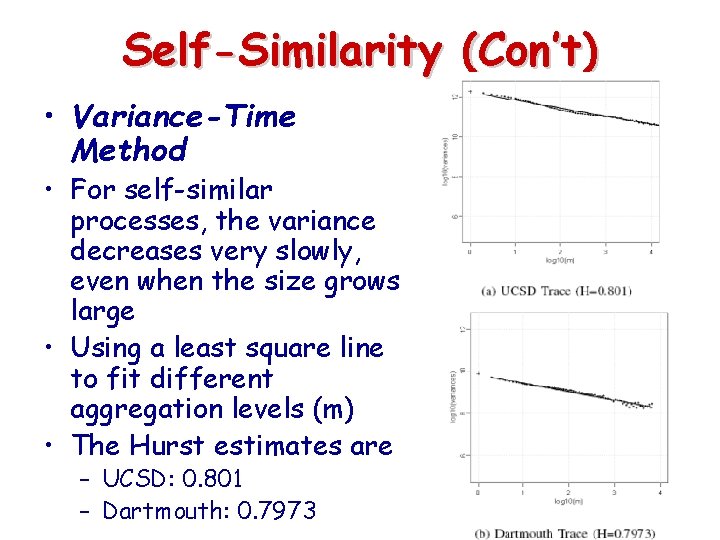
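Reading alpha off a log-log plot amounts to fitting a line to the tail of the empirical survival (complementary CDF) curve. The sketch below assumes an ordinary least-squares fit over the upper half of the sorted samples; the `tail_fraction` parameter, the function names, and the synthetic Pareto data are assumptions for illustration and are unrelated to the traces.

```python
import math
import random
from typing import List, Tuple


def least_squares_slope(points: List[Tuple[float, float]]) -> float:
    """Slope of the ordinary least-squares line through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return sxy / sxx


def tail_index(values: List[float], tail_fraction: float = 0.5) -> float:
    """Estimate alpha as minus the log-log slope of the empirical CCDF tail."""
    xs = sorted(v for v in values if v > 0)
    n = len(xs)
    first = int(n * (1.0 - tail_fraction))
    # Empirical P[X >= xs[i]] is (n - i) / n for the i-th sorted sample.
    points = [(math.log(xs[i]), math.log((n - i) / n)) for i in range(first, n)]
    return -least_squares_slope(points)


# Synthetic check: Pareto samples with a known tail index of 1.5.
random.seed(1)
synthetic = [random.paretovariate(1.5) for _ in range(20000)]
alpha_hat = tail_index(synthetic)
```

On the synthetic input the estimate should land near the true index; on real traces the result depends on where the tail is assumed to begin.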

Self-Similarity (Cont’d) • Variance-Time Method • For self-similar processes, the variance of the aggregated series decreases very slowly, even when the aggregation size grows large • Fit a least-squares line across different aggregation levels (m) • The Hurst estimates are – UCSD: 0.801 – Dartmouth: 0.7973

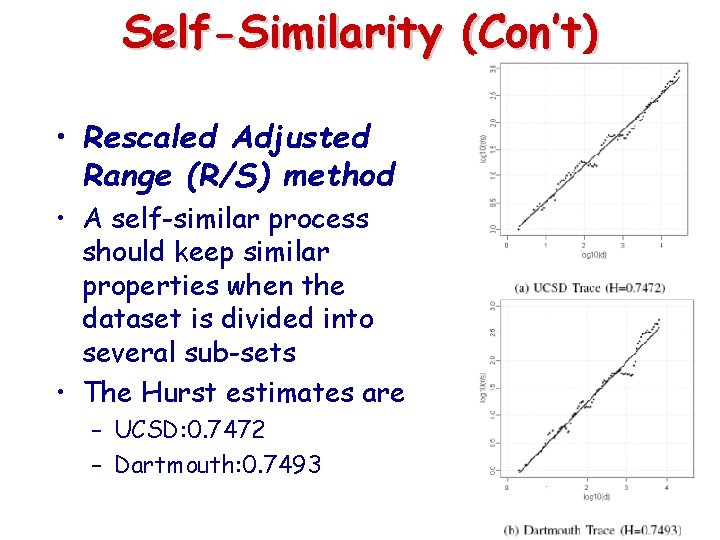

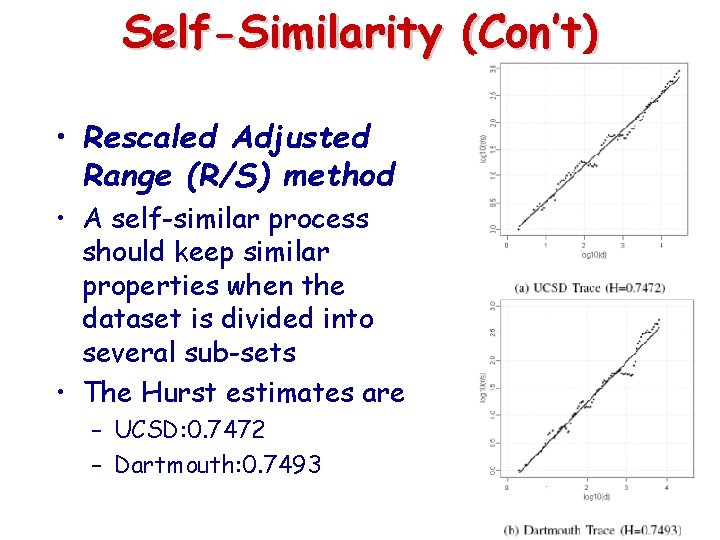
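A minimal sketch of the variance-time method, assuming the usual relation that the variance of the m-aggregated series decays as m^(-beta) with H = 1 - beta/2. The aggregation levels, function names, and the white-noise test series are assumptions for illustration.

```python
import math
import random
import statistics
from typing import List, Sequence, Tuple


def least_squares_slope(points: List[Tuple[float, float]]) -> float:
    """Slope of the ordinary least-squares line through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return sxy / sxx


def hurst_variance_time(series: Sequence[float],
                        levels: Sequence[int] = (1, 2, 4, 8, 16, 32, 64)) -> float:
    """Hurst estimate from the slope of log(variance) versus log(m)."""
    points: List[Tuple[float, float]] = []
    for m in levels:
        blocks = len(series) // m
        if blocks < 2:
            continue
        # Aggregate: replace each block of m values by its mean.
        means = [sum(series[k * m:(k + 1) * m]) / m for k in range(blocks)]
        variance = statistics.pvariance(means)
        if variance > 0:
            points.append((math.log(m), math.log(variance)))
    slope = least_squares_slope(points)  # slope equals -beta
    return 1.0 + slope / 2.0


# Synthetic check: independent Gaussian noise should give H close to 0.5.
random.seed(2)
noise = [random.gauss(0.0, 1.0) for _ in range(8192)]
h_variance_time = hurst_variance_time(noise)
```

For uncorrelated noise the variance falls as 1/m, so the slope is about -1 and H is about 0.5; a slope closer to zero corresponds to the slowly decaying variance described in the slide.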

Self-Similarity (Cont’d) • Rescaled Adjusted Range (R/S) method • A self-similar process should keep similar properties when the dataset is divided into several subsets • The Hurst estimates are – UCSD: 0.7472 – Dartmouth: 0.7493

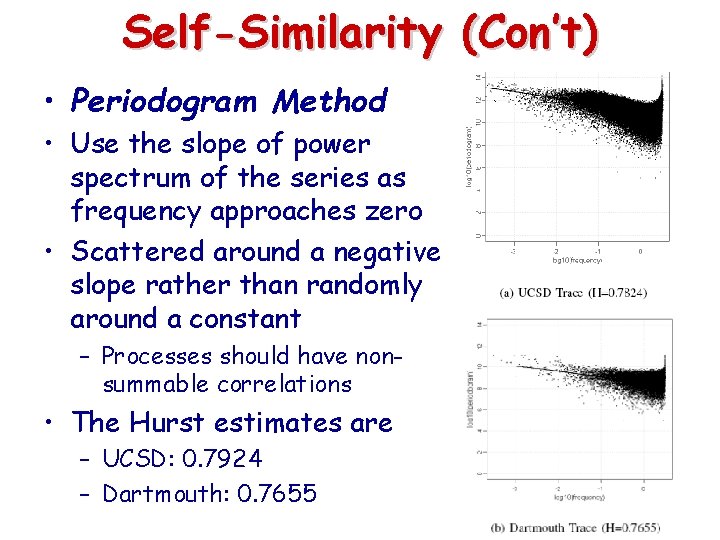

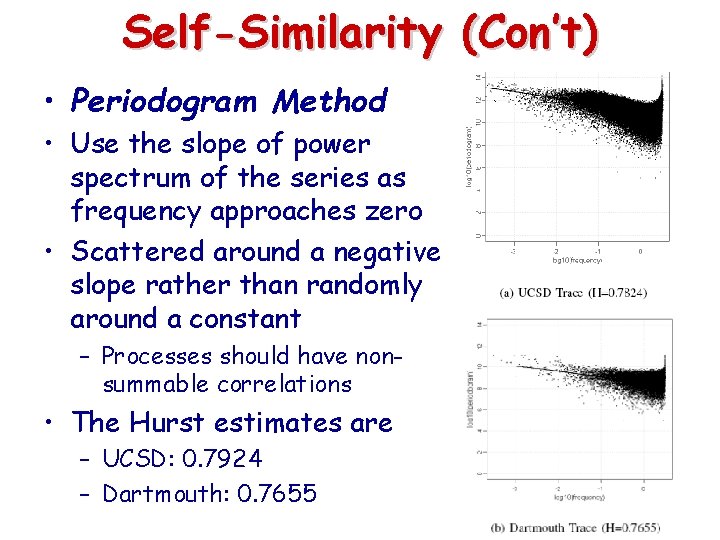
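A minimal sketch of the R/S method, assuming the classical form in which the average rescaled range of blocks of size n grows as n^H. The block sizes, function names, and the white-noise test series are assumptions for illustration.

```python
import math
import random
import statistics
from typing import List, Optional, Sequence, Tuple


def least_squares_slope(points: List[Tuple[float, float]]) -> float:
    """Slope of the ordinary least-squares line through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return sxy / sxx


def rescaled_range(block: Sequence[float]) -> Optional[float]:
    """Range of the mean-adjusted cumulative sum divided by the std deviation."""
    mean = sum(block) / len(block)
    cumulative = 0.0
    lowest = highest = 0.0
    for x in block:
        cumulative += x - mean
        lowest = min(lowest, cumulative)
        highest = max(highest, cumulative)
    deviation = statistics.pstdev(block)
    return (highest - lowest) / deviation if deviation > 0 else None


def hurst_rs(series: Sequence[float],
             sizes: Sequence[int] = (16, 32, 64, 128, 256, 512)) -> float:
    """Hurst estimate from the slope of log(mean R/S) versus log(block size)."""
    points: List[Tuple[float, float]] = []
    for size in sizes:
        ratios = []
        for k in range(len(series) // size):
            value = rescaled_range(series[k * size:(k + 1) * size])
            if value is not None:
                ratios.append(value)
        if ratios:
            points.append((math.log(size), math.log(sum(ratios) / len(ratios))))
    return least_squares_slope(points)


# Synthetic check: independent Gaussian noise, for which H is 0.5 in theory.
random.seed(3)
noise = [random.gauss(0.0, 1.0) for _ in range(8192)]
h_rs = hurst_rs(noise)
```

The R/S estimate is known to be biased upward for short blocks, so on uncorrelated noise it tends to come out somewhat above 0.5.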

Self-Similarity (Cont’d) • Periodogram Method • Uses the slope of the power spectrum of the series as the frequency approaches zero • The periodogram is scattered around a negative slope rather than randomly around a constant – Processes should have nonsummable correlations • The Hurst estimates are – UCSD: 0.7924 – Dartmouth: 0.7655

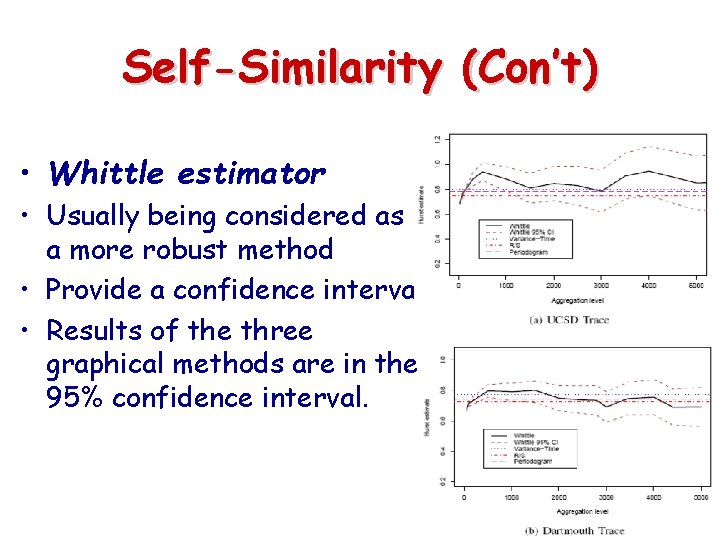

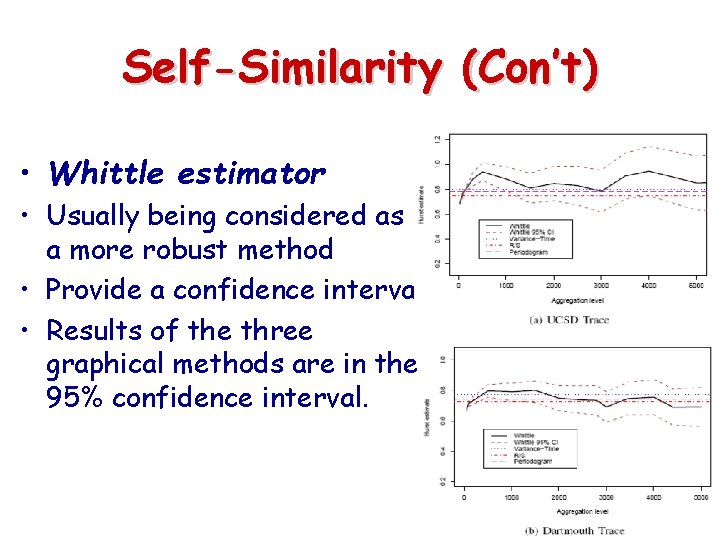
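A minimal sketch of the periodogram method, assuming the usual relation that the spectrum behaves like frequency^(1 - 2H) near zero, so H = (1 - slope) / 2 for the log-log slope at low frequencies. The choice of the lowest 10% of frequencies, the function names, and the white-noise test series are assumptions for illustration; a plain DFT is used to keep the example dependency-free.

```python
import cmath
import math
import random
from typing import List, Sequence, Tuple


def least_squares_slope(points: List[Tuple[float, float]]) -> float:
    """Slope of the ordinary least-squares line through (x, y) points."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    return sxy / sxx


def hurst_periodogram(series: Sequence[float], low_fraction: float = 0.1) -> float:
    """Hurst estimate from the low-frequency slope of the log-log periodogram."""
    n = len(series)
    mean = sum(series) / n
    centered = [x - mean for x in series]
    points: List[Tuple[float, float]] = []
    for k in range(1, max(2, int(n * low_fraction)) + 1):
        frequency = 2.0 * math.pi * k / n
        # Direct DFT at this single frequency (O(n) per frequency).
        dft = sum(x * cmath.exp(-1j * frequency * t) for t, x in enumerate(centered))
        power = abs(dft) ** 2 / (2.0 * math.pi * n)
        if power > 0:
            points.append((math.log(frequency), math.log(power)))
    slope = least_squares_slope(points)  # slope equals 1 - 2H
    return (1.0 - slope) / 2.0


# Synthetic check: white noise has a flat spectrum, so H should be near 0.5.
random.seed(4)
noise = [random.gauss(0.0, 1.0) for _ in range(2048)]
h_periodogram = hurst_periodogram(noise)
```

A flat periodogram gives a slope near zero and H near 0.5, while the negative slope described in the slide pushes H toward 1.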

Self-Similarity (Cont’d) • Whittle estimator • Usually considered a more robust method • Provides a confidence interval • Results of the three graphical methods fall within its 95% confidence interval.

Conclusion • Two major properties exist in modern people opportunistic networks – Censorship – Self-similarity • CRA helps recover more accurate datasets • Finding self-similarity helps us design routing algorithms based on specific mobility patterns and discover queuing properties of opportunistic networks
