File System: NET+OS 6 File System Architecture Design

- Slides: 39

File System

NET+OS 6 File System • Architecture • Design Goals • File System Layer Design • Storage Services Layer Design • RAM Services Layer Design • Flash Services Layer Design • File Structure • File and Directory Tables • RTC Support • Security

NET+OS 6 File System (p 2) • File System Layer Power Loss Recovery • Flash Services Layer Power Loss Recovery • Memory Requirements • File System API • Storage Services API • RAM Services API • Flash Services API

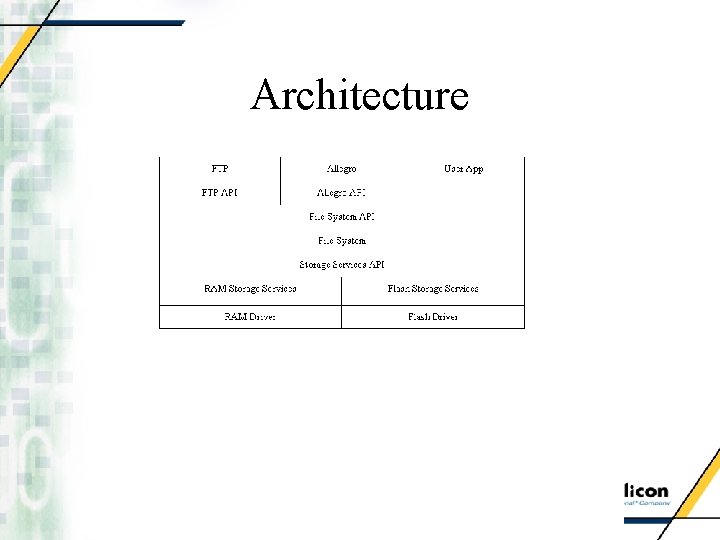

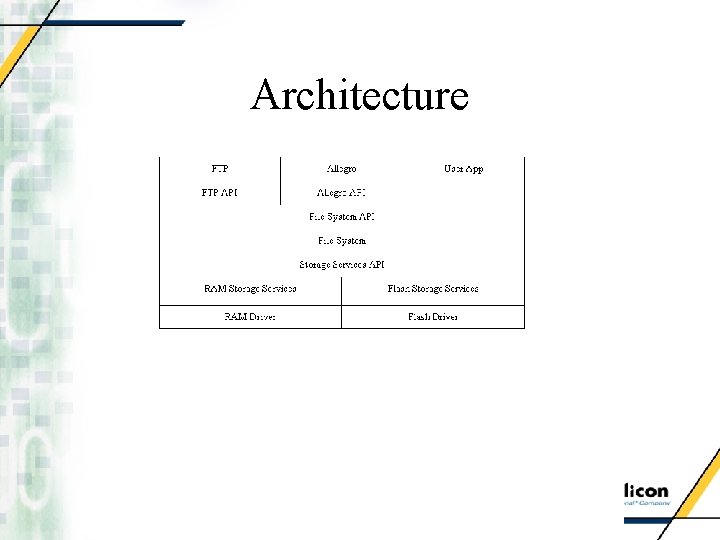

Architecture

Design Goals • Support our existing libraries (FTP and AWS) • Allow multiple file system volumes per media type (RAM or NOR Flash) • Allow users to install their own device drivers to create file system volumes on other types of devices (e.g., NAND Flash) • Power loss recovery for persistent media • Background removal of dirty blocks from Flash sectors for faster writes • Optimize for the best overall Flash I/O performance

Design Goals (p 2) • Variable block size support • Dynamically user-adjustable file I/O performance

File System Layer Design • File I/O API functions are asynchronous and send I/O requests to internal threads • Up to 8 internal threads process file I/O requests for their respective file system volumes • Each internal thread can service up to 8 file system volumes of the same media type and block size (e.g., 3 RAM volumes with 1 KB blocks serviced by one internal thread) • The user thread can poll the status of a file I/O request or provide a callback function • Supported block sizes are 512 bytes, 1 KB, 2 KB and 4 KB
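The thread-to-volume rule above can be illustrated with a small assignment routine: a new volume joins an internal thread that already serves the same media type and block size if that thread has room, otherwise it claims an unused thread. This is a sketch under assumptions; `io_thread_slot_t`, `media_type_t` and `assign_volume()` are hypothetical names, and only the limits (8 threads, 8 volumes per thread, four block sizes) come from the slide.

```c
#include <stdbool.h>

#define MAX_IO_THREADS         8  /* from the slide: up to 8 internal threads */
#define MAX_VOLUMES_PER_THREAD 8  /* from the slide: up to 8 volumes per thread */

/* Hypothetical media identifiers. */
typedef enum { MEDIA_RAM, MEDIA_NOR_FLASH } media_type_t;

/* Hypothetical bookkeeping for one internal I/O thread. */
typedef struct {
    bool         in_use;
    media_type_t media;
    unsigned     block_size;
    unsigned     volume_count;
} io_thread_slot_t;

static bool valid_block_size(unsigned size)
{
    return size == 512 || size == 1024 || size == 2048 || size == 4096;
}

/* Returns the index of the internal thread that will service a new volume,
 * or -1 if the block size is unsupported or no thread can take it. */
int assign_volume(io_thread_slot_t threads[MAX_IO_THREADS],
                  media_type_t media, unsigned block_size)
{
    if (!valid_block_size(block_size))
        return -1;

    /* Prefer a thread already serving this media type and block size. */
    for (int i = 0; i < MAX_IO_THREADS; i++) {
        io_thread_slot_t *t = &threads[i];
        if (t->in_use && t->media == media && t->block_size == block_size &&
            t->volume_count < MAX_VOLUMES_PER_THREAD) {
            t->volume_count++;
            return i;
        }
    }
    /* Otherwise claim an unused thread slot. */
    for (int i = 0; i < MAX_IO_THREADS; i++) {
        io_thread_slot_t *t = &threads[i];
        if (!t->in_use) {
            t->in_use = true;
            t->media = media;
            t->block_size = block_size;
            t->volume_count = 1;
            return i;
        }
    }
    return -1;
}
```

With a zero-initialized table, three RAM volumes with 1 KB blocks all land on thread 0, matching the slide's example, while a Flash volume with the same block size gets its own thread.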

File System Layer Design (p 2) • The user must install the functions for the specific media's services driver (e.g., the Flash Services API)

Storage Services Layer Design • The Storage Services layer is the interface between the File System API and the specific I/O services layers • It maintains an array of pointers to NAFS_IO_INTF control blocks, each of which stores the function pointers of a specific I/O services API • The file system layer passes an index into this array to select the I/O services API to use • The corresponding I/O services API function is then called
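The index-based dispatch described above can be sketched as a table of pointers to function-pointer structs. Only the name NAFS_IO_INTF comes from the slide; its two fields here, the table size, and the functions `ss_install()`, `ss_read_block()`, `ss_write_block()` and the RAM-backed demo driver are hypothetical stand-ins, not the real Storage Services API.

```c
#include <stddef.h>
#include <string.h>

#define SS_MAX_INTERFACES 8    /* hypothetical table size */
#define DEMO_BLOCK_SIZE   512  /* hypothetical block size for the demo driver */

/* Hypothetical subset of an interface control block; the real layout is
 * defined in the Storage Services Specification. */
typedef struct {
    int (*read_block)(void *volume, unsigned block, void *buf);
    int (*write_block)(void *volume, unsigned block, const void *buf);
} NAFS_IO_INTF;

/* Array of pointers to installed interfaces; the array index is the number
 * the file system layer passes down. */
static NAFS_IO_INTF *ss_intf_table[SS_MAX_INTERFACES];

int ss_install(NAFS_IO_INTF *intf)
{
    for (int i = 0; i < SS_MAX_INTERFACES; i++) {
        if (ss_intf_table[i] == NULL) {
            ss_intf_table[i] = intf;
            return i;
        }
    }
    return -1;  /* table full */
}

static NAFS_IO_INTF *ss_lookup(int index)
{
    if (index < 0 || index >= SS_MAX_INTERFACES)
        return NULL;
    return ss_intf_table[index];
}

int ss_read_block(int index, void *volume, unsigned block, void *buf)
{
    NAFS_IO_INTF *intf = ss_lookup(index);
    return intf ? intf->read_block(volume, block, buf) : -1;
}

int ss_write_block(int index, void *volume, unsigned block, const void *buf)
{
    NAFS_IO_INTF *intf = ss_lookup(index);
    return intf ? intf->write_block(volume, block, buf) : -1;
}

/* Trivial RAM-backed driver used only to exercise the dispatch. */
static int demo_read(void *volume, unsigned block, void *buf)
{
    memcpy(buf, (unsigned char *)volume + (size_t)block * DEMO_BLOCK_SIZE, DEMO_BLOCK_SIZE);
    return 0;
}

static int demo_write(void *volume, unsigned block, const void *buf)
{
    memcpy((unsigned char *)volume + (size_t)block * DEMO_BLOCK_SIZE, buf, DEMO_BLOCK_SIZE);
    return 0;
}

NAFS_IO_INTF demo_intf = { demo_read, demo_write };
```

The point of the indirection is that the file system layer only ever holds an integer; swapping RAM, NOR Flash or a user-supplied NAND driver changes which struct sits in the table, not the callers.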

RAM Services Layer Design • A RAM volume must be a contiguous region of statically or dynamically allocated RAM • File system layer blocks are mapped directly to RAM volume blocks, so the file system layer's logical block numbers are the same as the physical block numbers in the RAM volume • Each block in a RAM volume has a 20-byte block header that includes a CRC-32 checksum, a not-dirty flag and a logical block number • The layer maintains an array of pointers to each block in the volume
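A minimal sketch of the RAM layout, assuming each block is stored as its 20-byte header immediately followed by the block data, packed back to back in the contiguous region. The header field order, the two reserved words and all function names are hypothetical; the slide only gives the header size and its three named fields. The CRC routine is the standard reflected CRC-32 (polynomial 0xEDB88320), which may or may not match the variant NET+OS uses.

```c
#include <stddef.h>
#include <stdint.h>

#define RSS_HEADER_SIZE 20u  /* from the slide: 20-byte block header */

/* Hypothetical header layout; only size and named fields come from the slide. */
typedef struct {
    uint32_t crc32;          /* CRC-32 of the block data */
    uint32_t not_dirty;      /* not-dirty flag */
    uint32_t logical_block;  /* logical block number */
    uint32_t reserved[2];    /* pads the header to 20 bytes */
} rss_block_header_t;

_Static_assert(sizeof(rss_block_header_t) == RSS_HEADER_SIZE, "header must be 20 bytes");

/* Standard reflected CRC-32, computed one bit at a time. */
uint32_t crc32_calc(const void *data, size_t len)
{
    const uint8_t *p = data;
    uint32_t crc = 0xFFFFFFFFu;
    while (len--) {
        crc ^= *p++;
        for (int k = 0; k < 8; k++)
            crc = (crc >> 1) ^ (0xEDB88320u & (0u - (crc & 1u)));
    }
    return ~crc;
}

/* Fill ptrs[] with the address of each block (header + data) in a contiguous
 * RAM region; returns the number of blocks that fit. Because mapping is
 * direct, logical block i is simply ptrs[i]. */
size_t rss_build_block_table(uint8_t *region, size_t region_size,
                             size_t block_size, uint8_t **ptrs, size_t max_ptrs)
{
    size_t slot = RSS_HEADER_SIZE + block_size;
    size_t count = region_size / slot;
    if (count > max_ptrs)
        count = max_ptrs;
    for (size_t i = 0; i < count; i++)
        ptrs[i] = region + i * slot;
    return count;
}
```

For example, a 5000-byte region with 1 KB blocks holds 4 slots of 1044 bytes each; the leftover bytes are unused.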

Flash Services Layer Design • A Flash volume must consist of at least two consecutive, equal-size flash sectors • File system layer blocks are mapped indirectly to Flash volume blocks, so the file system layer's logical block numbers do not correspond to the physical block numbers in the Flash volume • Each block in a Flash volume has a 20-byte block header that includes a CRC-32 checksum, a not-dirty flag, a logical block number and a serial number

Flash Services Layer Design (p 2) • A Flash volume must always have an erased sector available to use as the transfer sector • When the volume has no more empty (erased) blocks, the valid blocks of a full sector are copied to the transfer sector • After the copy completes, the source sector is erased, which removes its dirty blocks, and it becomes the new transfer sector
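The sector transfer steps above can be modeled on a toy volume. This sketch assumes tiny hypothetical dimensions and uses an `int` per block to stand in for block contents; a real implementation would also update the logical-to-physical table for every moved block and write new headers, which is omitted here. All names are hypothetical.

```c
#define BLOCKS_PER_SECTOR 4  /* hypothetical, kept tiny for illustration */
#define NUM_SECTORS       3

typedef enum { BLK_ERASED, BLK_VALID, BLK_DIRTY } blk_state_t;

typedef struct {
    blk_state_t state[BLOCKS_PER_SECTOR];
    int         data[BLOCKS_PER_SECTOR];  /* stands in for block contents */
} sector_t;

typedef struct {
    sector_t sectors[NUM_SECTORS];
    int      transfer;  /* index of the spare, fully erased sector */
} flash_vol_t;

static void erase_sector(sector_t *s)
{
    for (int b = 0; b < BLOCKS_PER_SECTOR; b++) {
        s->state[b] = BLK_ERASED;
        s->data[b] = 0;
    }
}

/* Copy the valid blocks of 'src' into the transfer sector, erase 'src', and
 * make it the new transfer sector. Returns the number of erased blocks left
 * in the sector that just received the copy. */
int sector_transfer(flash_vol_t *v, int src)
{
    sector_t *from = &v->sectors[src];
    sector_t *to   = &v->sectors[v->transfer];
    int dst = 0;

    for (int b = 0; b < BLOCKS_PER_SECTOR; b++) {
        if (from->state[b] == BLK_VALID) {
            to->data[dst] = from->data[b];
            to->state[dst] = BLK_VALID;
            dst++;
        }
    }
    erase_sector(from);  /* dirty blocks disappear with the erase */
    v->transfer = src;   /* the old source is the new spare sector */
    return BLOCKS_PER_SECTOR - dst;
}
```

A sector with two valid and two dirty blocks therefore yields two fresh erased blocks, while the volume still keeps one fully erased sector in reserve.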

Flash Services Layer Design (p 3) • The user selects one of two sector transfer algorithms: • The most dirty sector algorithm frees the most erased blocks per sector transfer operation, potentially at the expense of wear leveling • The random dirty sector algorithm provides better wear leveling, potentially at the expense of sector transfer efficiency
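The trade-off between the two algorithms shows up clearly in how each one picks the source sector. Below is one plausible reading, assuming a per-sector dirty-block count is available and that the random algorithm chooses uniformly among sectors with at least one dirty block; the real selection rules may differ, and the function names are hypothetical.

```c
#include <stdlib.h>

/* Most dirty: pick the sector with the highest dirty-block count, skipping
 * the transfer sector. Ties go to the lowest index. Returns -1 if none. */
int pick_most_dirty(const int dirty[], int nsectors, int transfer)
{
    int best = -1;
    for (int i = 0; i < nsectors; i++) {
        if (i == transfer || dirty[i] == 0)
            continue;
        if (best < 0 || dirty[i] > dirty[best])
            best = i;
    }
    return best;
}

/* Random dirty: pick uniformly (up to rand()'s modulo bias) among sectors
 * holding at least one dirty block, skipping the transfer sector. */
int pick_random_dirty(const int dirty[], int nsectors, int transfer)
{
    int candidates = 0;
    for (int i = 0; i < nsectors; i++)
        if (i != transfer && dirty[i] > 0)
            candidates++;
    if (candidates == 0)
        return -1;

    int k = rand() % candidates;
    for (int i = 0; i < nsectors; i++) {
        if (i != transfer && dirty[i] > 0) {
            if (k == 0)
                return i;
            k--;
        }
    }
    return -1;
}
```

The most-dirty rule keeps returning the same heavily rewritten sectors, which maximizes blocks reclaimed per erase but concentrates wear; the random rule spreads erases across sectors even when a pick reclaims fewer blocks.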

Flash Services Layer Design (p 4) • Indirect block mapping requires maintaining 4 tables: • Physical block status table • Logical-to-physical block table • Sector block table • Erased block table
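The four tables listed above cooperate on every write. A sketch, assuming the sector block table holds a dirty count per sector and the erased block table is a stack of free physical block numbers; table types, sizes and function names are all hypothetical. It writes out of place: take an erased block, mark it valid, then retire the previous copy, matching the order used for power loss recovery.

```c
#include <stdint.h>

#define FSS_BLOCKS     16  /* hypothetical volume: 4 sectors x 4 blocks */
#define FSS_PER_SECTOR 4
#define FSS_SECTORS    (FSS_BLOCKS / FSS_PER_SECTOR)
#define FSS_UNMAPPED   0xFFFFu

typedef enum { PB_ERASED, PB_VALID, PB_DIRTY } pb_status_t;

typedef struct {
    uint8_t  phys_status[FSS_BLOCKS];    /* physical block status table */
    uint16_t log_to_phys[FSS_BLOCKS];    /* logical-to-physical block table */
    uint8_t  sector_dirty[FSS_SECTORS];  /* sector block table (dirty counts) */
    uint16_t erased[FSS_BLOCKS];         /* erased block table, used as a stack */
    int      erased_count;
} fss_tables_t;

void fss_init(fss_tables_t *t)
{
    t->erased_count = 0;
    for (int s = 0; s < FSS_SECTORS; s++)
        t->sector_dirty[s] = 0;
    /* Push in reverse so physical block 0 is handed out first. */
    for (int p = FSS_BLOCKS - 1; p >= 0; p--) {
        t->phys_status[p] = PB_ERASED;
        t->log_to_phys[p] = FSS_UNMAPPED;
        t->erased[t->erased_count++] = (uint16_t)p;
    }
}

/* Write logical block 'lb' out of place. Returns the new physical block, or
 * -1 when no erased block is left (a sector transfer would be needed). */
int fss_remap_write(fss_tables_t *t, uint16_t lb)
{
    if (lb >= FSS_BLOCKS || t->erased_count == 0)
        return -1;
    uint16_t np = t->erased[--t->erased_count];
    t->phys_status[np] = PB_VALID;          /* new copy is written first */
    uint16_t old = t->log_to_phys[lb];
    if (old != FSS_UNMAPPED) {
        t->phys_status[old] = PB_DIRTY;     /* then the old copy is retired */
        t->sector_dirty[old / FSS_PER_SECTOR]++;
    }
    t->log_to_phys[lb] = np;
    return np;
}
```

Rewriting the same logical block twice leaves one valid and one dirty physical block, and the sector's dirty count is exactly what the sector transfer algorithms consult.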

Flash Services Layer Design (p 5) • Each Flash volume can have a dedicated background sector compacting thread that removes dirty blocks and creates erased blocks during idle time • This improves write performance because Flash sectors have already been erased ahead of time

File Structure • Based on the Unix I-node file structure • Directories and files use the same I-node structure • Addressing up to double indirect is implemented • A 512-byte block size allows files over 8 MB and over 96 K files and directories • A 1 KB block size allows files over 64 MB and over 768 K files and directories • A 2 KB block size allows files over 512 MB and over 6400 K files and directories

File Structure (p 2) • A 4 KB block size allows files over 4096 MB (4 GB) and over 52224 K files and directories • A group ID security feature is implemented • The maximum file or directory name length is 64 characters • The maximum directory path length is 256 characters
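The file-size limits above follow from double indirect addressing if block numbers are 4 bytes wide: one indirect block holds block_size / 4 pointers, so a double indirect pointer reaches (block_size / 4)^2 data blocks. The 4-byte pointer width is an assumption, but it reproduces all four figures exactly; the direct and single indirect pointers then add a little more, which is why the slides say "over". The function name is hypothetical.

```c
#include <stdint.h>

/* Bytes addressable through one double indirect pointer, assuming 4-byte
 * block numbers: (block_size / 4)^2 blocks of block_size bytes each. */
uint64_t double_indirect_bytes(uint32_t block_size)
{
    uint64_t ptrs = block_size / 4u;  /* pointers per indirect block */
    return ptrs * ptrs * block_size;
}
```

For 512-byte blocks this gives 128 × 128 × 512 = 8,388,608 bytes (8 MB), and for 4 KB blocks 1024 × 1024 × 4096 = 2^32 bytes (4096 MB).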

Directory and File Tables • Each volume has a directory and file table that tracks open files and the directories associated with them • The table doubles as a directory and file cache because it remembers the location of previously accessed files and directories • A file is opened with exclusive rights; it cannot be opened more than once at a time • An open file cannot be deleted or renamed • The directory containing an open file cannot be deleted or renamed either
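The open-file rules above can be sketched with a fixed table of open paths. This is an illustration under assumptions, not the NET+OS table: it keys entries by full path string, ignores the caching role entirely, and the names `table_open()`, `table_close()` and `table_may_modify()` and the table size are hypothetical. Only the 256-character path limit comes from the slides.

```c
#include <stdbool.h>
#include <string.h>

#define MAX_PATH_LEN 256  /* from the slides: max directory path is 256 chars */
#define MAX_OPEN     8    /* hypothetical table size */

static char open_paths[MAX_OPEN][MAX_PATH_LEN + 1];  /* "" marks a free slot */

/* Exclusive open: fails if the path is already open or the table is full. */
bool table_open(const char *path)
{
    int free_slot = -1;
    if (path[0] == '\0' || strlen(path) > MAX_PATH_LEN)
        return false;
    for (int i = 0; i < MAX_OPEN; i++) {
        if (open_paths[i][0] == '\0') {
            if (free_slot < 0)
                free_slot = i;
        } else if (strcmp(open_paths[i], path) == 0) {
            return false;
        }
    }
    if (free_slot < 0)
        return false;
    strcpy(open_paths[free_slot], path);
    return true;
}

void table_close(const char *path)
{
    for (int i = 0; i < MAX_OPEN; i++)
        if (open_paths[i][0] != '\0' && strcmp(open_paths[i], path) == 0)
            open_paths[i][0] = '\0';
}

/* Delete/rename is refused for an open file and for the directory that
 * directly contains an open file. */
bool table_may_modify(const char *path)
{
    size_t n = strlen(path);
    for (int i = 0; i < MAX_OPEN; i++) {
        const char *p = open_paths[i];
        if (p[0] == '\0')
            continue;
        if (strcmp(p, path) == 0)
            return false;
        if (strncmp(p, path, n) == 0 && p[n] == '/' && strchr(p + n + 1, '/') == NULL)
            return false;
    }
    return true;
}
```

With "/logs/a.txt" open, a second open of the same path fails, and neither the file nor "/logs" may be renamed or deleted until it is closed.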

RTC Support • SNTP (Simple Network Time Protocol) • Hardware RTC • The file system uses time() to get the raw time, which is the number of seconds since 01/01/1970 • A file or directory timestamp must be converted to calendar time using the C library's time functions • The No RTC option prevents the file system from calling time(). Files and directories are created with a timestamp of 0, which is incremented whenever the file or directory is changed
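Converting a stored raw timestamp to calendar time uses only the standard C library, as the slide notes. A minimal sketch, assuming timestamps are seconds since 01/01/1970 UTC and that a fixed "YYYY-MM-DD HH:MM:SS" format is wanted; `format_fs_timestamp()` is a hypothetical helper name.

```c
#include <stddef.h>
#include <time.h>

/* Format a raw file system timestamp (seconds since 01/01/1970 UTC, as
 * returned by time()) as "YYYY-MM-DD HH:MM:SS". Returns 0 on success,
 * -1 if the time cannot be converted or the buffer is too small. */
int format_fs_timestamp(time_t raw, char *out, size_t out_len)
{
    /* gmtime() returns a shared static buffer; use gmtime_r() where
     * available if several threads format timestamps concurrently. */
    struct tm *tm = gmtime(&raw);
    if (tm == NULL)
        return -1;
    return strftime(out, out_len, "%Y-%m-%d %H:%M:%S", tm) == 0 ? -1 : 0;
}
```

Under the No RTC option the stored value is a change counter rather than a time, so formatting it as a date would be misleading; a value of 3 simply means the object has changed three times.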

Security • 8 group ID levels • They can be used as 8 owner IDs, where a user can only access the files and directories it created • They can be used as group IDs, where different users have different read/write access to different groups. For example, User 1 has read and write access to Group 1, but only read access to Group 2 • They can be used as combined owner and group IDs. For example, groups 1 to 4 are used as owner IDs and groups 5 to 8 as group IDs • Users can apply this feature as required

Security (p 2) • Group IDs are defined by the NAFS_GROUP1 to NAFS_GROUP8 bitmasks. When a file or directory is created, it is assigned one of these group IDs • The root directory has a group ID that is the bitwise OR of all these group ID bitmasks. Any user can see the root directory, but can only access the files and directories for which it has access permissions • Group read access masks are defined by NASYSACC_FS_GROUP1_READ to NASYSACC_FS_GROUP8_READ

Security (p 3) • Group write access masks are defined by NASYSACC_FS_GROUP1_WRITE to NASYSACC_FS_GROUP8_WRITE • A user must have read access to group X to see (list) files and directories that belong to group X • A user must have read access to group X to open, read and close files that belong to group X • A user must have read and write access to group X to open, write and close files that belong to group X

Security (p 4) • When creating a file (or directory), a user must have read and write access to the directory in which it will be created, and the group ID of the new file must be a group to which the user has both read and write access • When renaming or deleting a file or directory, a user must have read and write access to the directory containing it, and read and write access to the file or directory itself
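The access rules on these security slides reduce to bitmask intersections. In this sketch the bit values are invented for illustration: `NAFS_GROUP(n)`, `ACC_READ(n)` and `ACC_WRITE(n)` stand in for NAFS_GROUPn, NASYSACC_FS_GROUPn_READ and NASYSACC_FS_GROUPn_WRITE, whose real values are not given here. Treating a multi-group object such as the root directory as accessible when any of its groups match is an assumption consistent with "any user can see the root directory".

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical encodings: group n is bit n-1; a user's access word keeps
 * read bits in 0-7 and write bits in 8-15. */
typedef uint32_t user_access_t;
#define NAFS_GROUP(n)    ((uint8_t)(1u << ((n) - 1)))
#define ACC_READ(n)      ((user_access_t)1u << ((n) - 1))
#define ACC_WRITE(n)     ((user_access_t)1u << ((n) + 7))
#define NAFS_ROOT_GROUPS ((uint8_t)0xFFu)  /* OR of all eight groups */

static uint8_t readable_groups(user_access_t a) { return (uint8_t)(a & 0xFFu); }
static uint8_t writable_groups(user_access_t a) { return (uint8_t)((a >> 8) & 0xFFu); }

/* List, or open/read/close: read access to the object's group. */
bool can_read(user_access_t a, uint8_t obj_group)
{
    return (readable_groups(a) & obj_group) != 0;
}

/* Open/write/close: read and write access to the object's group. */
bool can_write(user_access_t a, uint8_t obj_group)
{
    return (readable_groups(a) & writable_groups(a) & obj_group) != 0;
}

/* Create: read+write on the parent, and the (single) new group must be one
 * the user can both read and write. */
bool can_create(user_access_t a, uint8_t parent_group, uint8_t new_group)
{
    return can_write(a, parent_group) && can_write(a, new_group);
}

/* Rename or delete: read+write on the parent and on the object itself. */
bool can_rename_or_delete(user_access_t a, uint8_t parent_group, uint8_t obj_group)
{
    return can_write(a, parent_group) && can_write(a, obj_group);
}
```

Encoding the slide's example user (read/write on Group 1, read-only on Group 2) as `ACC_READ(1) | ACC_WRITE(1) | ACC_READ(2)`, that user can list Group 2 files but cannot write them, and can create new files in the root directory only with Group 1 as their group.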

File System Layer Power Loss Recovery • Power loss related errors affect only the last file or directory accessed for writing • The power loss recovery algorithms are the reverse of the write algorithms used to create, write and delete files and directories • These algorithms are so tightly integrated that the write algorithms cannot be changed without affecting the power loss recovery algorithms

File System Layer Power Loss Recovery (p 2) • The power loss recovery algorithm is recursive and requires up to 4 blocks of RAM per directory level traversed. For example, if the directory tree is 10 levels deep and 1 KB blocks are used, up to 40 KB of RAM is needed by the power loss recovery algorithm • The current implementation supports power loss recovery checking for about 60 subdirectory levels
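The worst-case RAM figure above is a simple product of depth, the 4-block-per-level cost and the block size. A one-function sketch (the name is hypothetical) makes it easy to check a planned directory depth against available RAM.

```c
#include <stddef.h>

#define PLR_BLOCKS_PER_LEVEL 4u  /* from the slide: up to 4 blocks per level */

/* Worst-case RAM, in bytes, for the recursive recovery check on a
 * directory tree 'levels' deep. */
size_t plr_ram_bytes(unsigned levels, size_t block_size)
{
    return (size_t)levels * PLR_BLOCKS_PER_LEVEL * block_size;
}
```

The slide's example, 10 levels with 1 KB blocks, gives 40,960 bytes (40 KB); at the roughly 60-level limit the same block size needs 240 KB.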

Flash Services Layer Power Loss Recovery • When a block is moved because its data changed or because of a sector transfer, the serial number in its block header is incremented, and the new block is written before the old block is marked dirty • If power fails after the new block is written but before the old block is marked dirty, two instances of the same logical block exist in the Flash volume • When power is restored, the two instances are detected and the block with the larger serial number is mapped in the logical block table
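The duplicate-resolution step can be sketched as a scan over all block headers at startup. Assumptions: headers have already been read and checked (the `valid` flag stands for "not dirty and CRC correct"), serial numbers never wrap, and losers are simply reported so the caller can mark them dirty. The struct and function names are hypothetical.

```c
#include <stdint.h>

#define SCAN_UNMAPPED (-1)

/* Hypothetical summary of one physical block's header. */
typedef struct {
    uint16_t logical;  /* logical block number */
    uint32_t serial;   /* serial number, incremented on every move */
    int      valid;    /* nonzero: not dirty and CRC verified */
} scan_hdr_t;

/* Rebuild the logical-to-physical table. When two valid blocks claim the
 * same logical block, keep the one with the larger serial number and record
 * the other in stale[]. Returns the number of stale blocks recorded. */
int rebuild_l2p(const scan_hdr_t *hdr, int nphys, int *l2p, int nlog,
                int *stale, int max_stale)
{
    int nstale = 0;
    for (int l = 0; l < nlog; l++)
        l2p[l] = SCAN_UNMAPPED;

    for (int p = 0; p < nphys; p++) {
        if (!hdr[p].valid || hdr[p].logical >= nlog)
            continue;
        int lb = hdr[p].logical;
        int cur = l2p[lb];
        int loser = -1;
        if (cur == SCAN_UNMAPPED) {
            l2p[lb] = p;
        } else if (hdr[p].serial > hdr[cur].serial) {
            loser = cur;   /* older copy left behind by the power failure */
            l2p[lb] = p;
        } else {
            loser = p;
        }
        if (loser >= 0 && nstale < max_stale)
            stale[nstale++] = loser;
    }
    return nstale;
}
```

Because the new copy is always written before the old one is marked dirty, at most the older copy is ever stale, and discarding it loses no committed data.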

Flash Services Layer Power Loss Recovery (p 2) • Power loss recovery algorithms handle failures during a sector transfer operation.

Memory Requirements • The “Flash-centric” design uses RAM tables to track free blocks and free inodes instead of storing these tables on the media • This reduces the number of writes to the Flash volume. Constantly updating tables in Flash would increase the number of dirty blocks, resulting in more sector transfer operations to remove them. The design therefore minimizes writes to the Flash volume to reduce wear and avoid loss of performance

Memory Requirements (p 2) • The file system layer requires 4 bytes per free block in the volume and 4 bytes per free inode. For example, if a volume has 1000 blocks and 500 inodes, a total of (4 * 1000) + (4 * 500) = 6000 bytes (about 6 KB) of RAM is required • The RAM I/O Services module requires 4 bytes per block in the volume. For example, if a volume has 1000 blocks, a total of (4 * 1000) = 4000 bytes (about 4 KB) of RAM is required

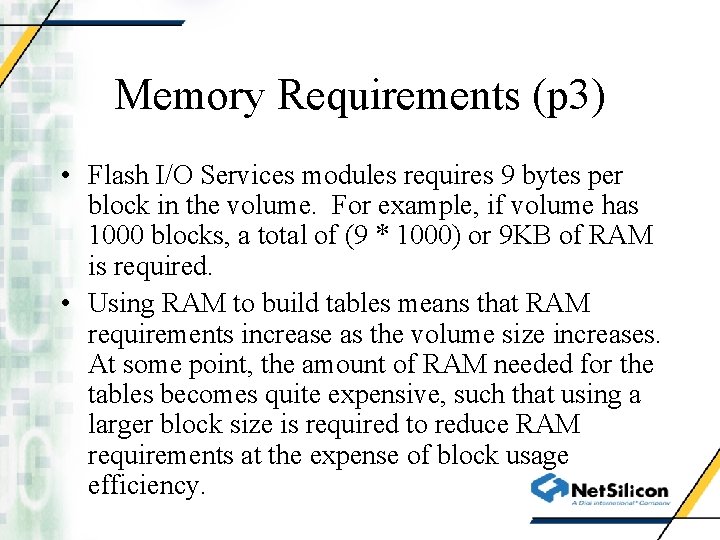

Memory Requirements (p 3) • The Flash I/O Services module requires 9 bytes per block in the volume. For example, if a volume has 1000 blocks, a total of (9 * 1000) = 9000 bytes (about 9 KB) of RAM is required • Because the tables are built in RAM, RAM requirements grow with the volume size. At some point the RAM needed for the tables becomes expensive enough that a larger block size is required to reduce it, at the expense of block usage efficiency
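The memory rules on these slides can be folded into a small calculator. The per-entry costs are taken from the slides; the function names are hypothetical, and `fss_ram_for_volume()` deliberately ignores the 20-byte per-block headers so that the effect of the block size on table RAM stands out.

```c
#include <stddef.h>

/* Per-entry RAM costs quoted on the slides. */
#define FS_BYTES_PER_FREE_BLOCK 4u
#define FS_BYTES_PER_FREE_INODE 4u
#define RSS_BYTES_PER_BLOCK     4u
#define FSS_BYTES_PER_BLOCK     9u

/* File system layer: free-block and free-inode tables. */
size_t fs_layer_ram(size_t free_blocks, size_t free_inodes)
{
    return free_blocks * FS_BYTES_PER_FREE_BLOCK +
           free_inodes * FS_BYTES_PER_FREE_INODE;
}

/* RAM and Flash I/O Services modules: per-block tables. */
size_t rss_ram(size_t blocks) { return blocks * RSS_BYTES_PER_BLOCK; }
size_t fss_ram(size_t blocks) { return blocks * FSS_BYTES_PER_BLOCK; }

/* Flash Services table RAM for a volume of a given size, ignoring the
 * per-block headers. */
size_t fss_ram_for_volume(size_t volume_bytes, size_t block_size)
{
    return fss_ram(volume_bytes / block_size);
}
```

For a 1 MB Flash volume, 512-byte blocks need 2048 × 9 = 18,432 bytes of table RAM, while 4 KB blocks need only 256 × 9 = 2,304 bytes; the price is that a small file still occupies a whole 4 KB block.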

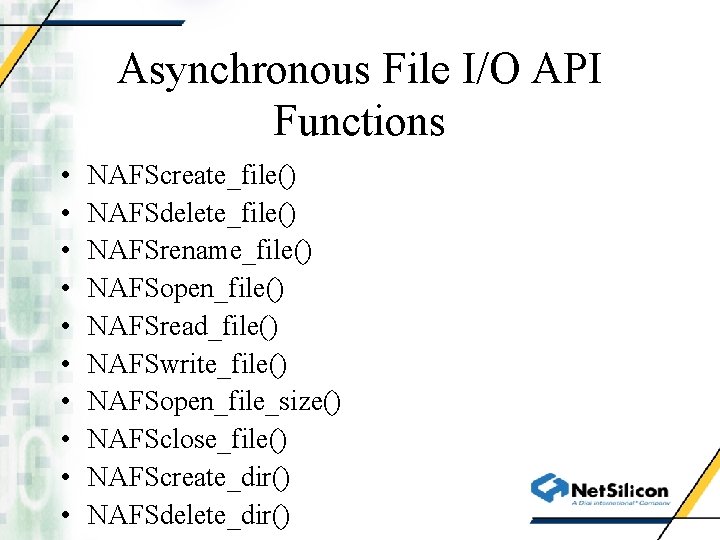

Asynchronous File I/O API Functions • NAFScreate_file() • NAFSdelete_file() • NAFSrename_file() • NAFSopen_file() • NAFSread_file() • NAFSwrite_file() • NAFSopen_file_size() • NAFSclose_file() • NAFScreate_dir() • NAFSdelete_dir()

Asynchronous File I/O API Functions (p 2) • NAFSrename_dir() • NAFSdir_entry_count() • NAFSlist_dir()

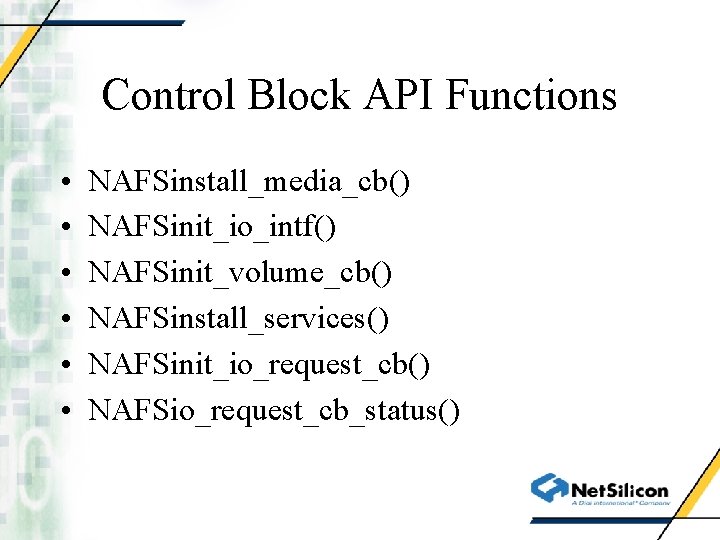

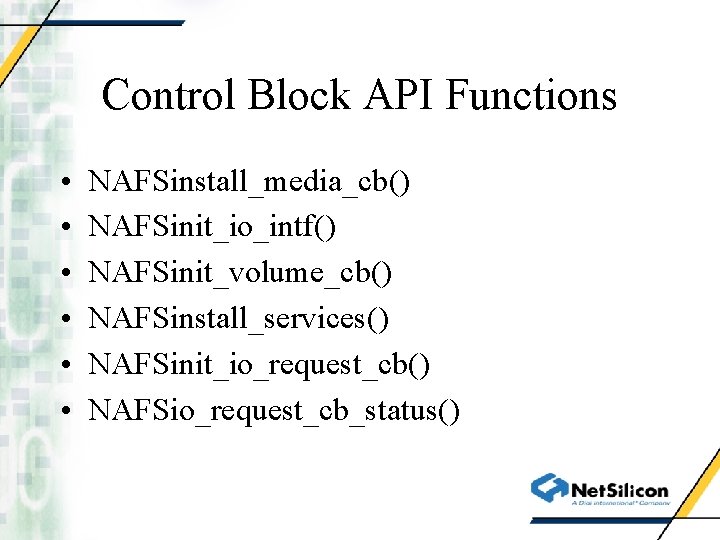

Control Block API Functions • NAFSinstall_media_cb() • NAFSinit_io_intf() • NAFSinit_volume_cb() • NAFSinstall_services() • NAFSinit_io_request_cb() • NAFSio_request_cb_status()

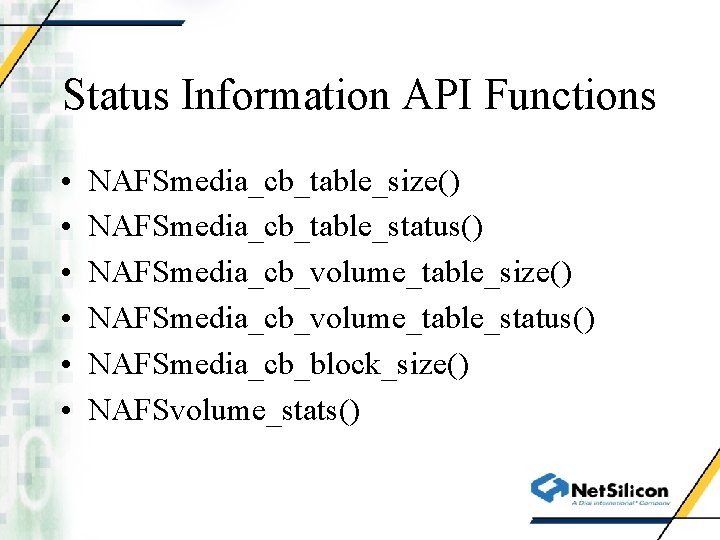

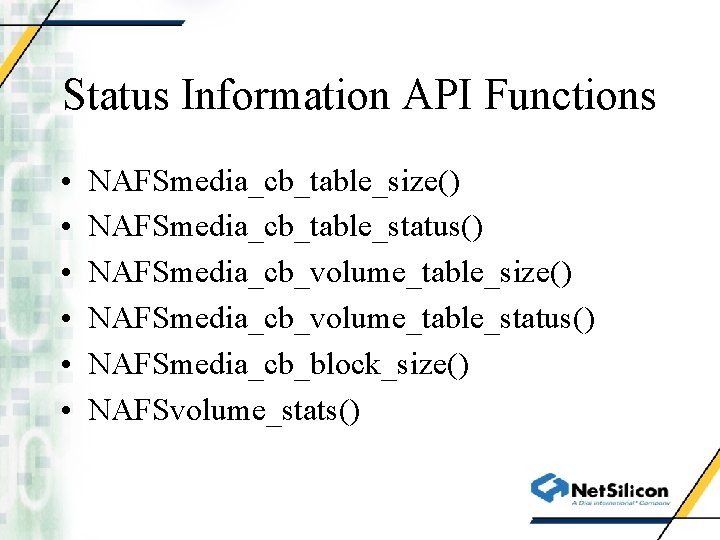

Status Information API Functions • NAFSmedia_cb_table_size() • NAFSmedia_cb_table_status() • NAFSmedia_cb_volume_table_size() • NAFSmedia_cb_volume_table_status() • NAFSmedia_cb_block_size() • NAFSvolume_stats()

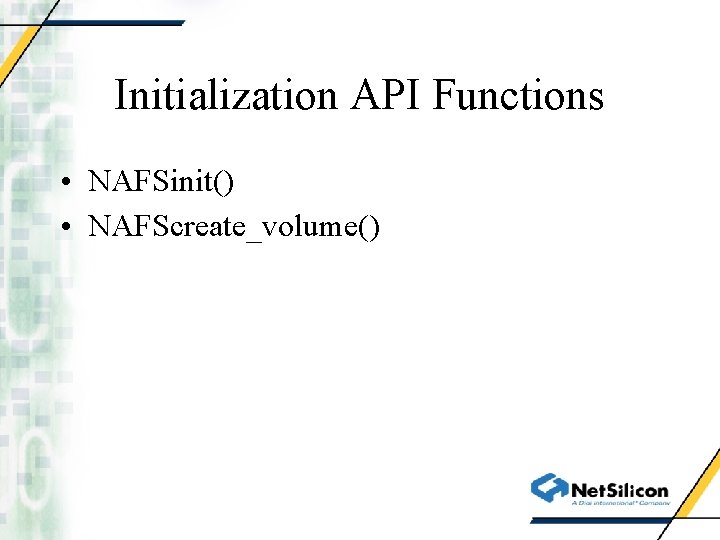

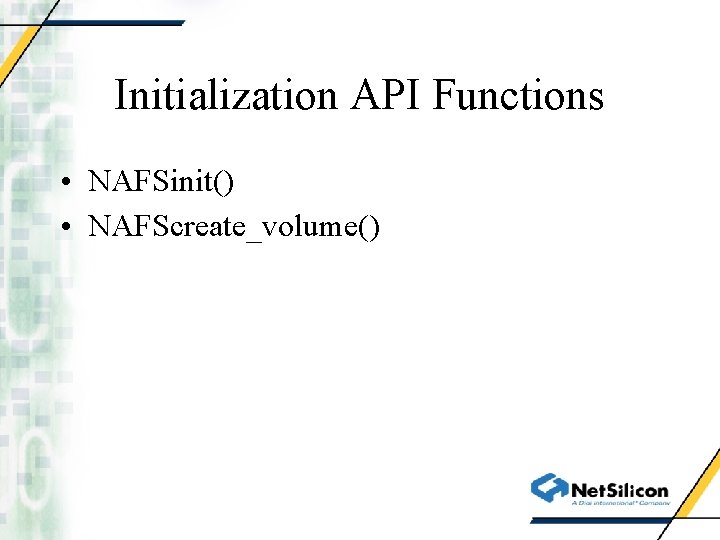

Initialization API Functions • NAFSinit() • NAFScreate_volume()

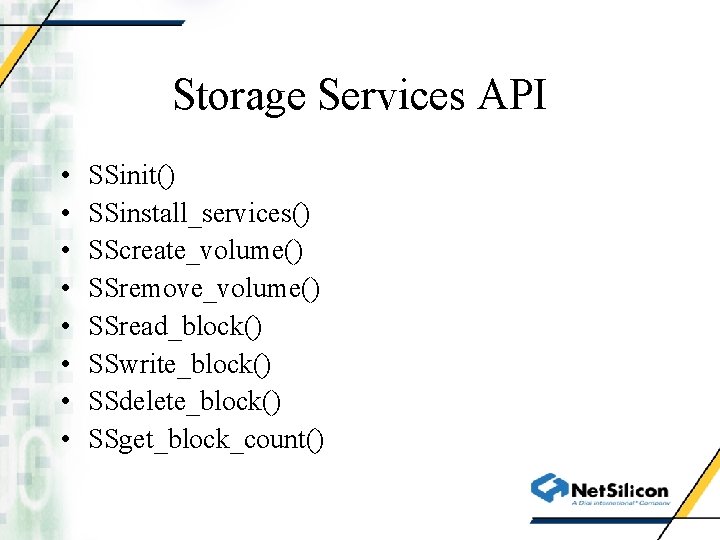

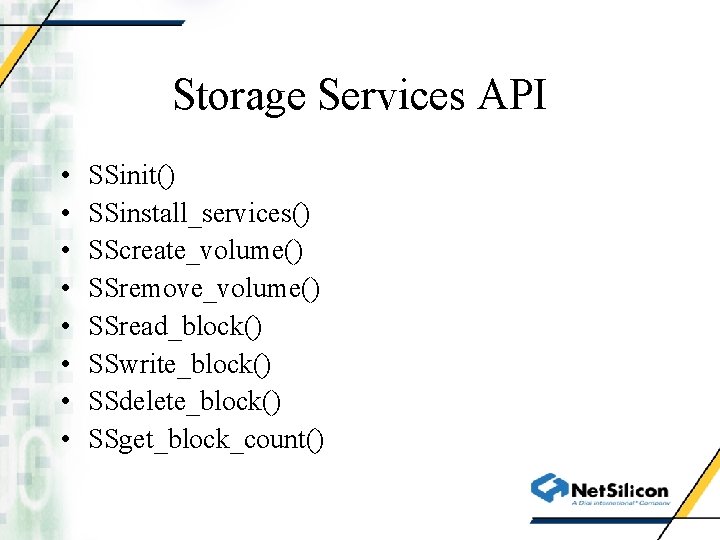

Storage Services API • SSinit() • SSinstall_services() • SScreate_volume() • SSremove_volume() • SSread_block() • SSwrite_block() • SSdelete_block() • SSget_block_count()

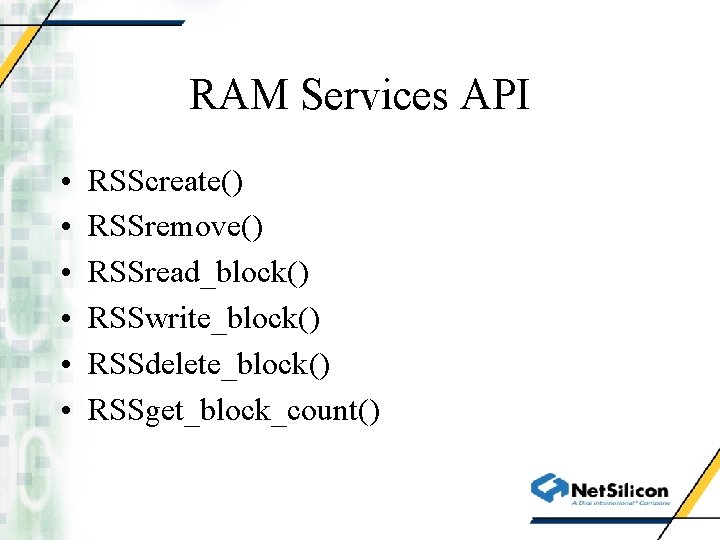

RAM Services API • RSScreate() • RSSremove() • RSSread_block() • RSSwrite_block() • RSSdelete_block() • RSSget_block_count()

Flash Services API • FSScreate() • FSSremove() • FSSread_block() • FSSwrite_block() • FSSdelete_block() • FSSget_block_count()

Recommended Readings • File System Specification • Storage Services Specification • Unix file system related material