
Feature Competitive Algorithm for Dimension Reduction of the Self-Organizing Map Input Space

- Advisor: Dr. Hsu
- Graduate: Ching-Lung Chen

6/18/2021 IDSL, Intelligent Database System Lab

Outline

- Motivation
- Objective
- Introduction
- Self-Organizing Map
- Construction of the SOM Input Feature Space
- Learning Algorithm and Convergence of the SOM
- Feature Competitive Algorithm
- Testing the FCA
- Measure retrieval performances by recall and precision
- Conclusion
- Personal Opinion

Motivation

- The dimensionality of the SOM input data space is generally high.
- High dimensionality lowers the efficiency not only of the initial learning process but also of the subsequent retrieval and relearning processes.

Objective

- To reduce the dimensionality of the SOM input space by capturing the most significant features without losing too much essential information.

Introduction

- The SOM is an unsupervised neural network that learns from its input data items and reflects their interrelationships on its output layer.
- Documents to be classified need to be represented as n-dimensional vectors. The input vectors are usually obtained by indexing the documents.

Introduction (cont. 1)

Feature selection methods fall roughly into three groups:

- Exhaustive search: tries to find the best subset among the candidate subsets.
- Heuristic search: the computational complexity is only quadratic or less in the dimension of the feature set.
- Mathematical methods: the computational complexity does not behave uniformly but depends on the individual algorithm.

Introduction (cont. 2)

The proposed FCA may be classified as a heuristic method. Unlike general heuristic methods, it uses domain knowledge in feature selection.

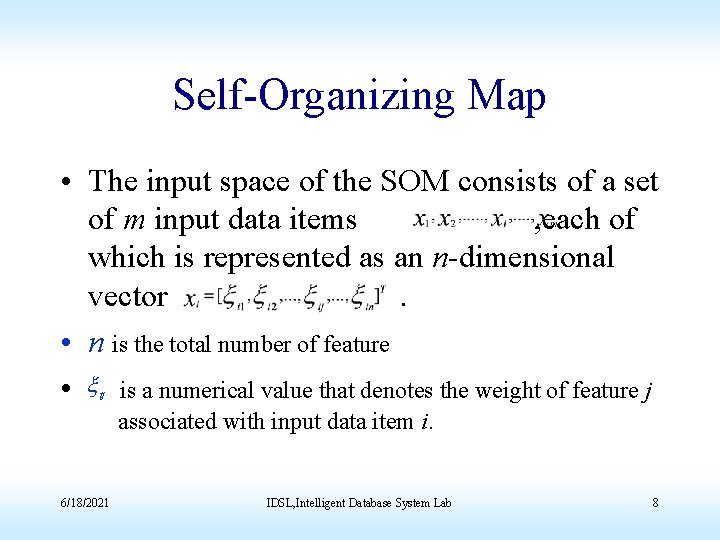

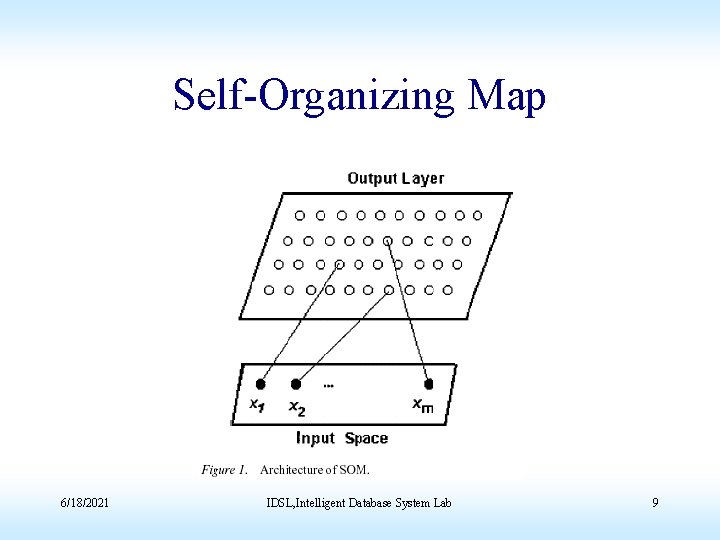

Self-Organizing Map

- The input space of the SOM consists of a set of m input data items, each of which is represented as an n-dimensional vector.
- n is the total number of features.
- $x_{ij}$ is a numerical value that denotes the weight of feature j associated with input data item i.

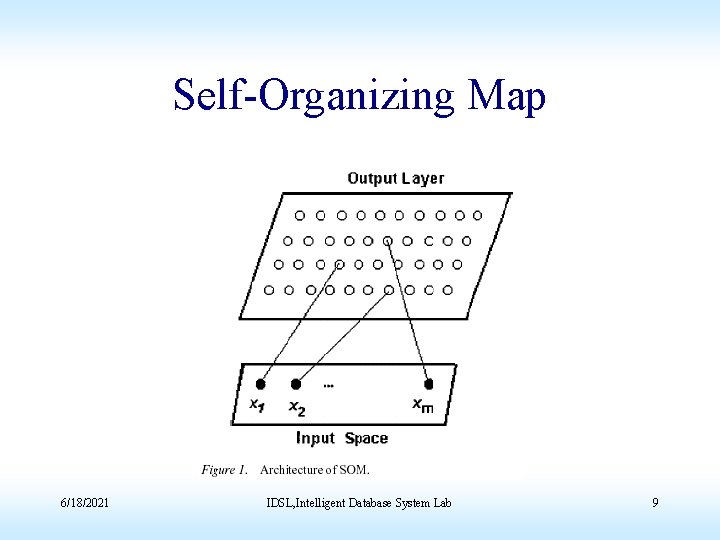

Self-Organizing Map

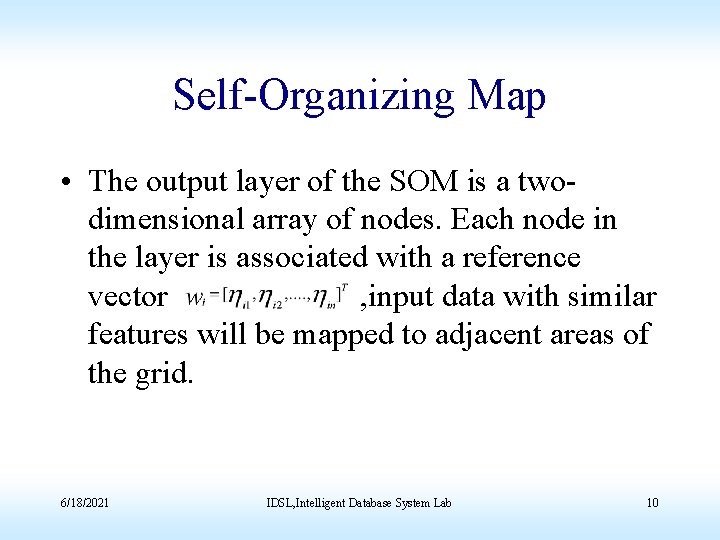

Self-Organizing Map

- The output layer of the SOM is a two-dimensional array of nodes. Each node in the layer is associated with a reference vector, and input data with similar features are mapped to adjacent areas of the grid.

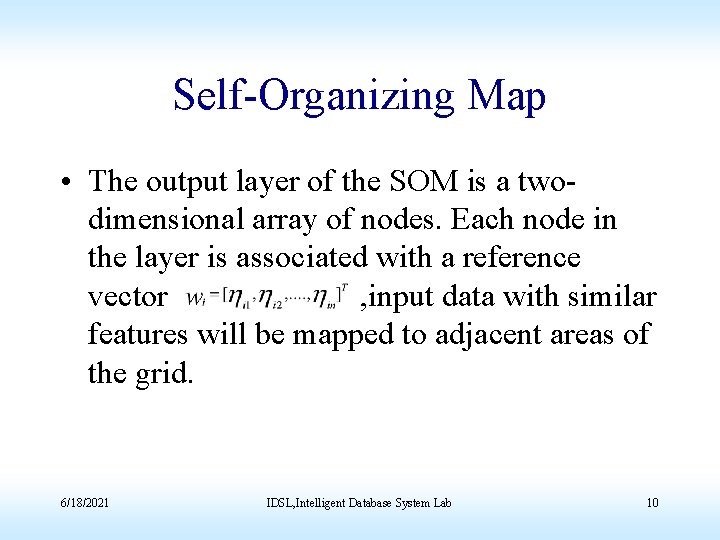

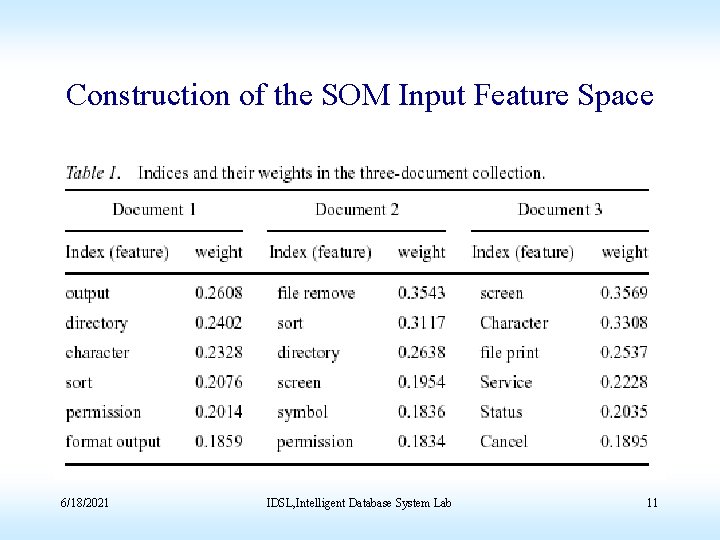

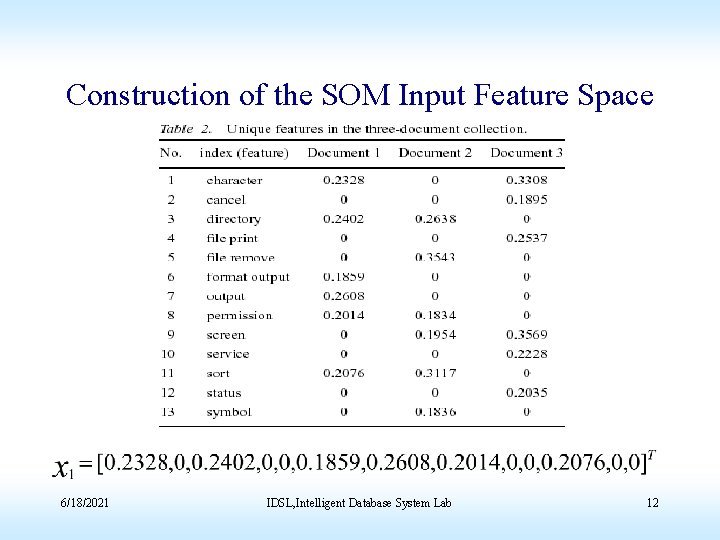

Construction of the SOM Input Feature Space

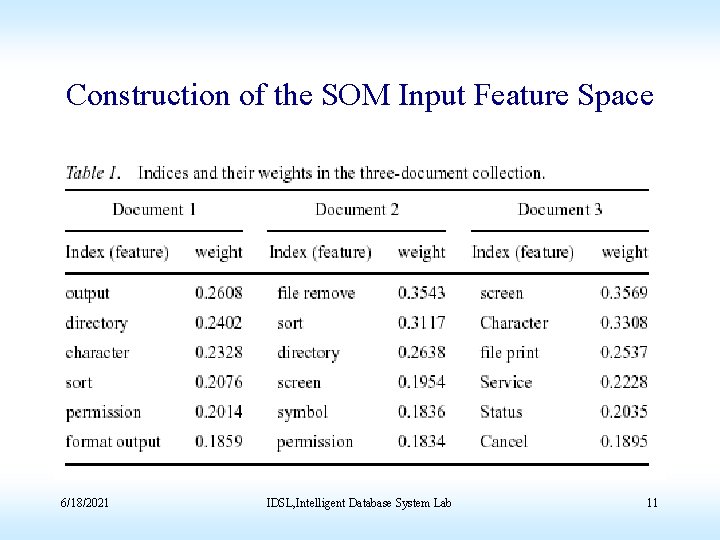

Construction of the SOM Input Feature Space

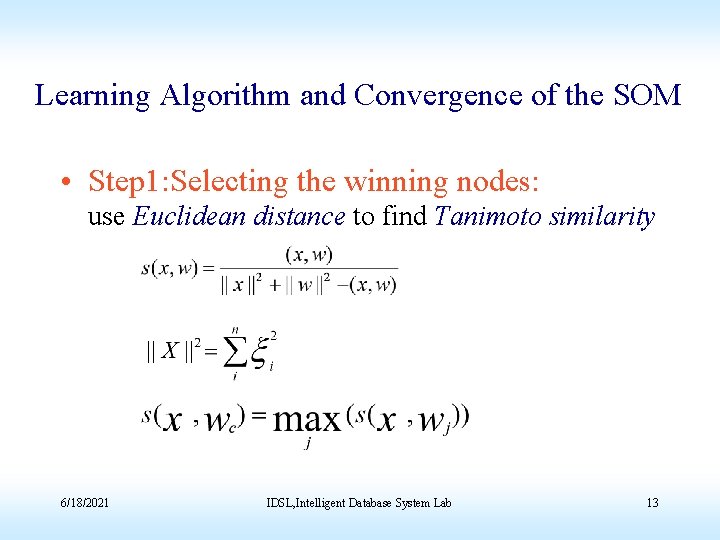

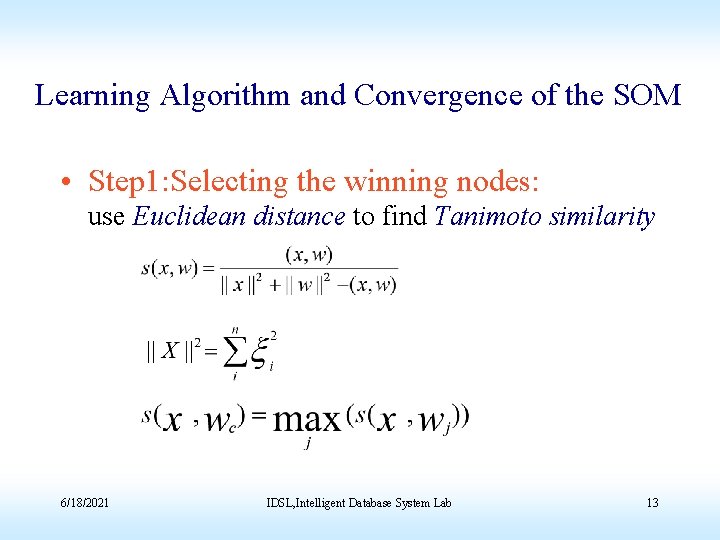

Learning Algorithm and Convergence of the SOM

- Step 1: Selecting the winning node: use Euclidean distance or Tanimoto similarity to find the node whose reference vector best matches the input.
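
The formulas for this step are images in the source, so the following is only a minimal Python sketch of winner selection under the standard textbook definitions of Euclidean distance and Tanimoto similarity, $T(x, w) = x \cdot w / (\|x\|^2 + \|w\|^2 - x \cdot w)$. The function names and the example vectors are illustrative assumptions, not taken from the slides.

```python
import math


def euclidean_distance(x, w):
    """Euclidean distance between input vector x and reference vector w."""
    return math.sqrt(sum((xi - wi) ** 2 for xi, wi in zip(x, w)))


def tanimoto_similarity(x, w):
    """Tanimoto similarity: x.w / (|x|^2 + |w|^2 - x.w)."""
    dot = sum(xi * wi for xi, wi in zip(x, w))
    denom = sum(xi * xi for xi in x) + sum(wi * wi for wi in w) - dot
    return dot / denom if denom else 0.0


def winner_by_distance(x, reference_vectors):
    """Index of the node whose reference vector is closest to x."""
    return min(range(len(reference_vectors)),
               key=lambda k: euclidean_distance(x, reference_vectors[k]))


def winner_by_similarity(x, reference_vectors):
    """Index of the node whose reference vector is most similar to x."""
    return max(range(len(reference_vectors)),
               key=lambda k: tanimoto_similarity(x, reference_vectors[k]))


# Hypothetical 3-feature document vector and three reference vectors.
x = [1.0, 0.0, 1.0]
reference_vectors = [[0.0, 1.0, 0.0], [1.0, 0.0, 0.9], [0.5, 0.5, 0.5]]
print(winner_by_distance(x, reference_vectors))
print(winner_by_similarity(x, reference_vectors))
```

In this small example both measures pick the same node; on other data they can disagree, because Tanimoto similarity depends on the overlap of the two vectors rather than on their absolute difference.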

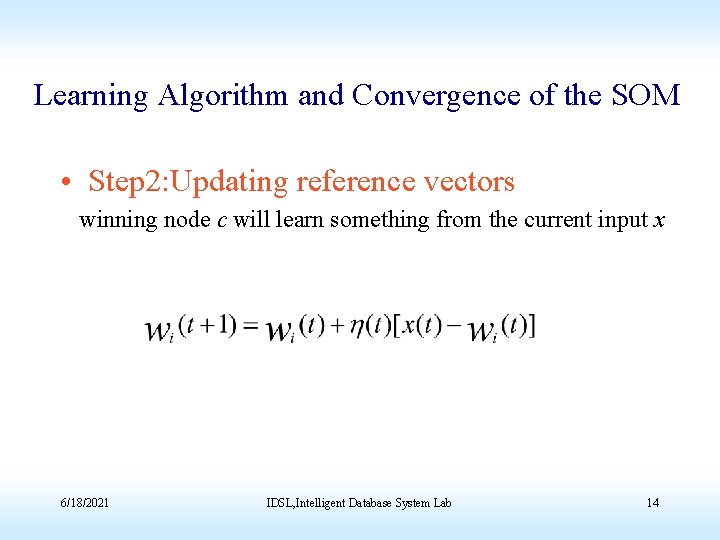

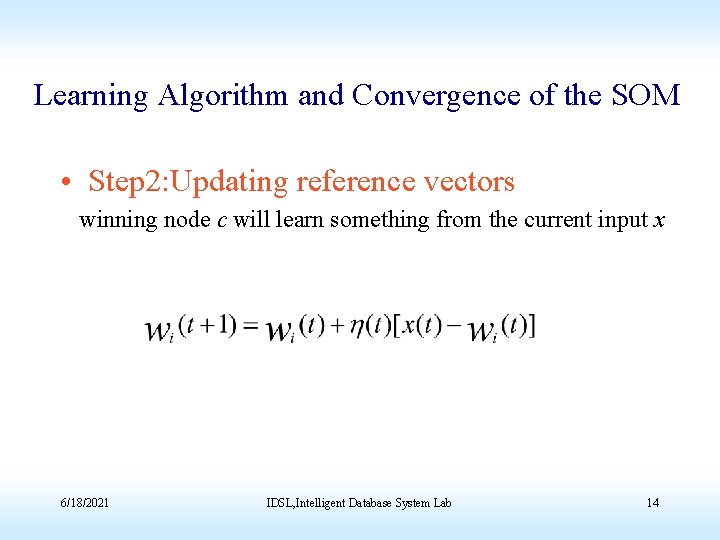

Learning Algorithm and Convergence of the SOM

- Step 2: Updating reference vectors: the winning node c learns from the current input x.
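
The update formula itself is an image in the source. As a hedged illustration, the sketch below assumes the usual Kohonen form, in which the winner's reference vector moves a fraction of the way toward the input: $w_c \leftarrow w_c + \alpha (x - w_c)$. The learning rate `alpha` and the function name are assumptions, and the neighborhood update of nearby nodes is left out for brevity.

```python
def update_reference_vector(w_c, x, alpha):
    """Move reference vector w_c toward input x by learning rate alpha.

    Assumed standard rule: w_c <- w_c + alpha * (x - w_c), 0 < alpha <= 1.
    """
    return [wi + alpha * (xi - wi) for wi, xi in zip(w_c, x)]


# Hypothetical values: the winner moves halfway toward the input.
w_c = [0.0, 0.0]
x = [1.0, 1.0]
print(update_reference_vector(w_c, x, alpha=0.5))  # [0.5, 0.5]
```

With `alpha = 1.0` the reference vector jumps straight onto the input, and with a small `alpha` it changes only slightly, which is why the learning rate is usually decreased as training proceeds.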

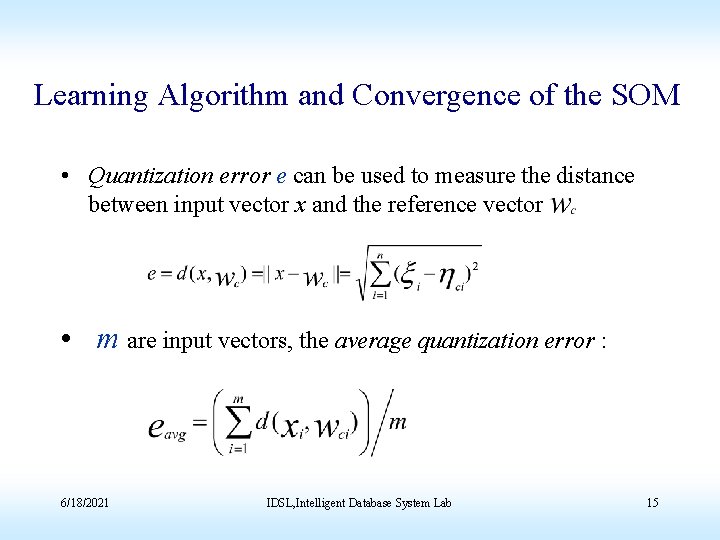

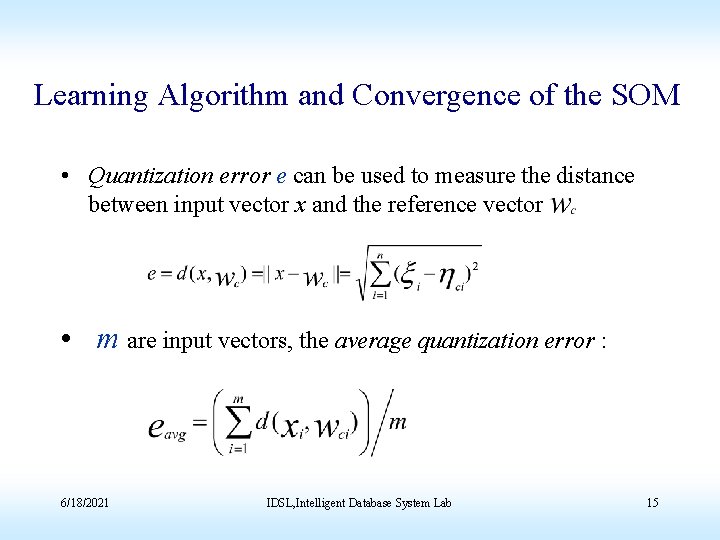

Learning Algorithm and Convergence of the SOM

- The quantization error e can be used to measure the distance between an input vector x and its reference vector.
- For m input vectors, the average quantization error is the mean of e over all inputs.
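
A small Python sketch of this measure follows. It assumes that e is the Euclidean distance from an input vector to the reference vector of its winning node, and that the average is taken over all m inputs; the slide's exact formula is an image in the source, and the function names and data are illustrative.

```python
import math


def euclidean_distance(x, w):
    """Euclidean distance between input vector x and reference vector w."""
    return math.sqrt(sum((xi - wi) ** 2 for xi, wi in zip(x, w)))


def quantization_error(x, reference_vectors):
    """Distance from x to its closest (winning) reference vector."""
    return min(euclidean_distance(x, w) for w in reference_vectors)


def average_quantization_error(inputs, reference_vectors):
    """Mean quantization error over all m input vectors."""
    errors = [quantization_error(x, reference_vectors) for x in inputs]
    return sum(errors) / len(errors)


# Hypothetical data: two inputs, two reference vectors.
inputs = [[0.0, 0.0], [1.0, 1.0]]
reference_vectors = [[0.0, 0.0], [1.0, 0.0]]
print(average_quantization_error(inputs, reference_vectors))  # 0.5
```

A falling average quantization error over training epochs is a common sign that the map is converging.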

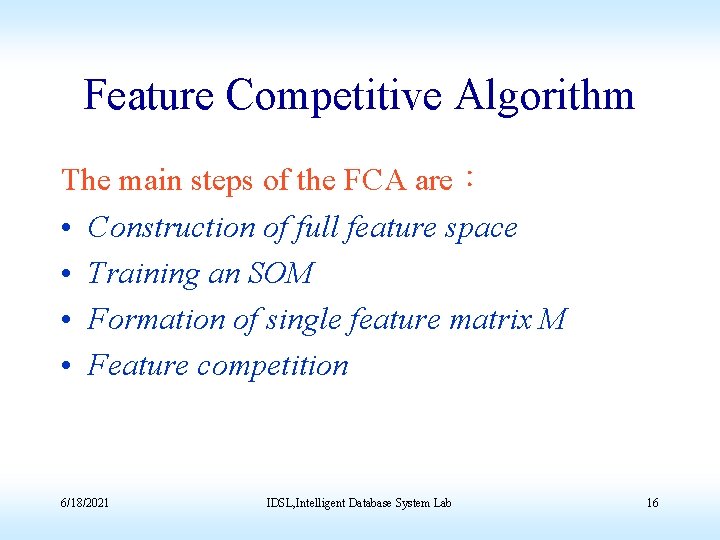

Feature Competitive Algorithm

The main steps of the FCA are:

- Construction of the full feature space
- Training an SOM
- Formation of the single feature matrix M
- Feature competition

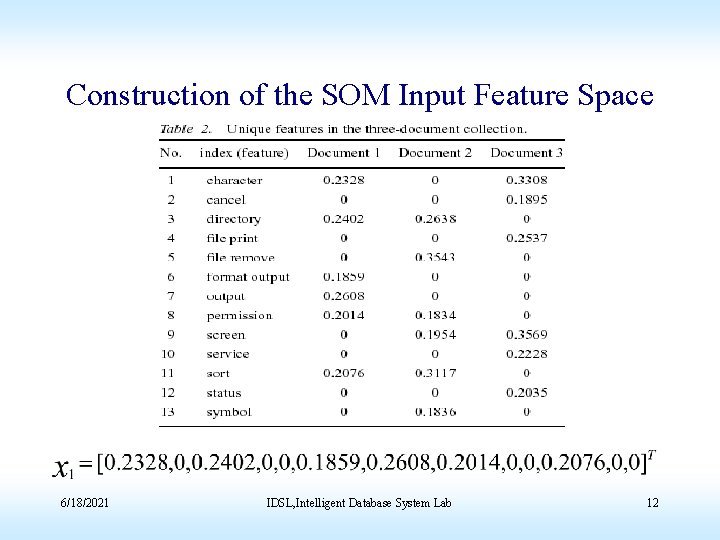

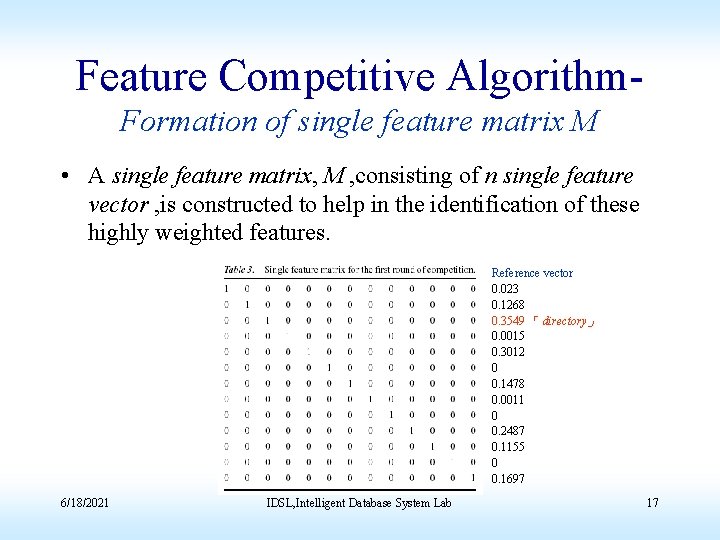

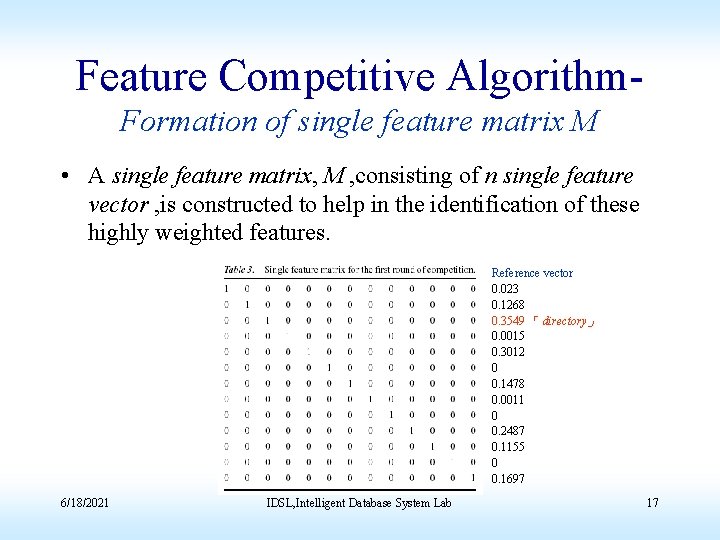

Feature Competitive Algorithm: Formation of single feature matrix M

- A single feature matrix, M, consisting of n single feature vectors, is constructed to help identify these highly weighted features.

[Figure: reference vector values for the example feature 「directory」]

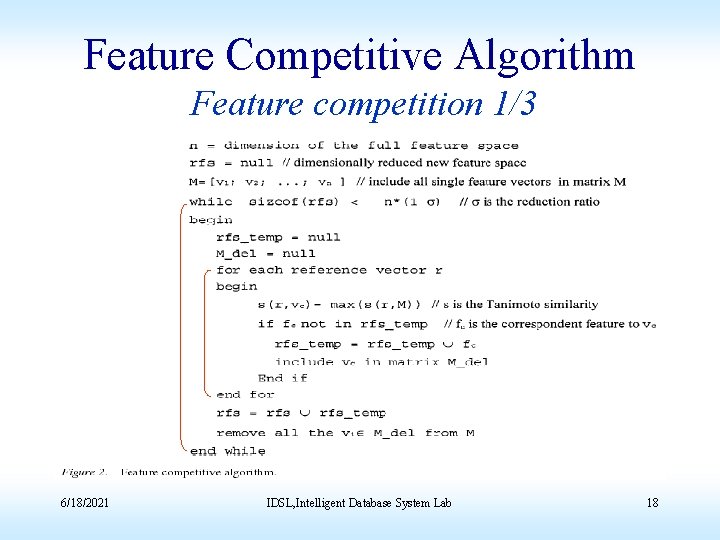

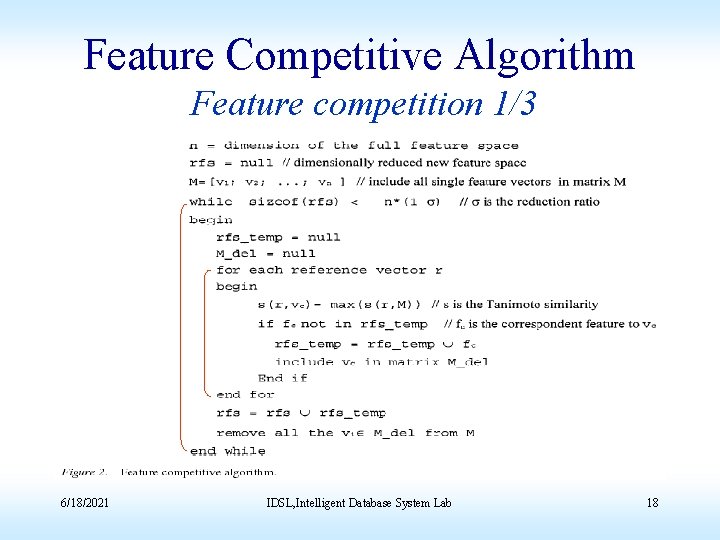

Feature Competitive Algorithm: Feature competition 1/3

![Feature Competitive Algorithm: Feature competition 2/3](https://slidetodoc.com/presentation_image_h2/7b7b45f508d2850a9d55e45bb2aaa71d/image-19.jpg)

Feature Competitive Algorithm: Feature competition 2/3

- Let [1, 3, 9, 11] be the winning features.

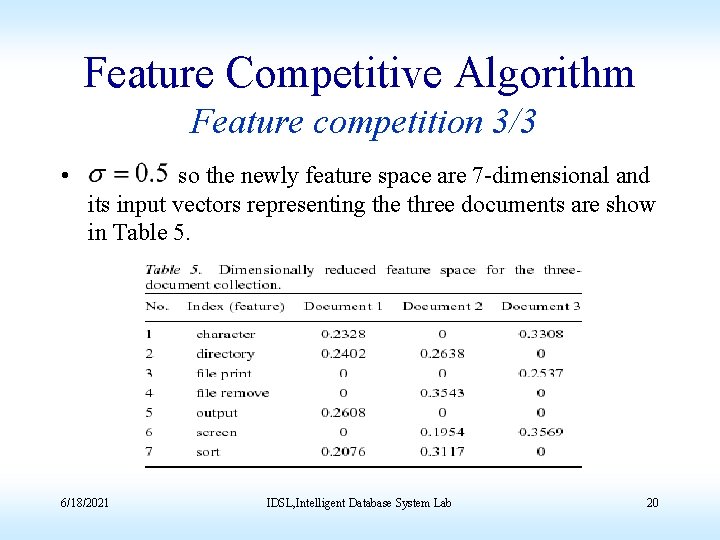

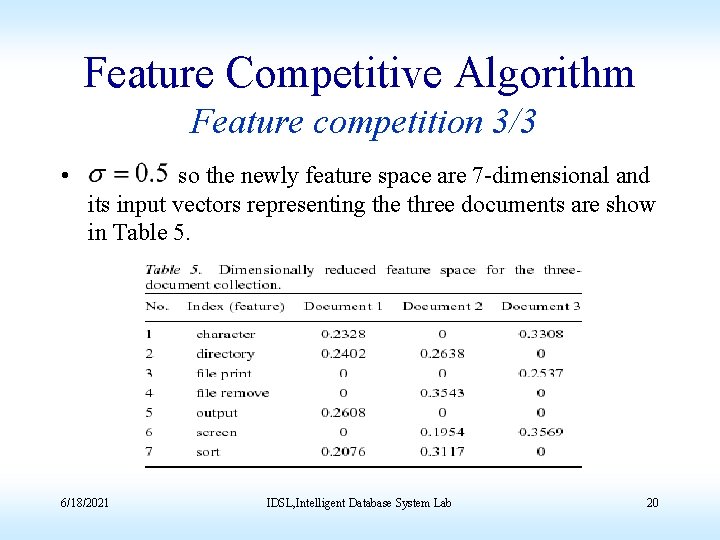

Feature Competitive Algorithm: Feature competition 3/3

- The new feature space is therefore 7-dimensional, and the input vectors representing the three documents are shown in Table 5.

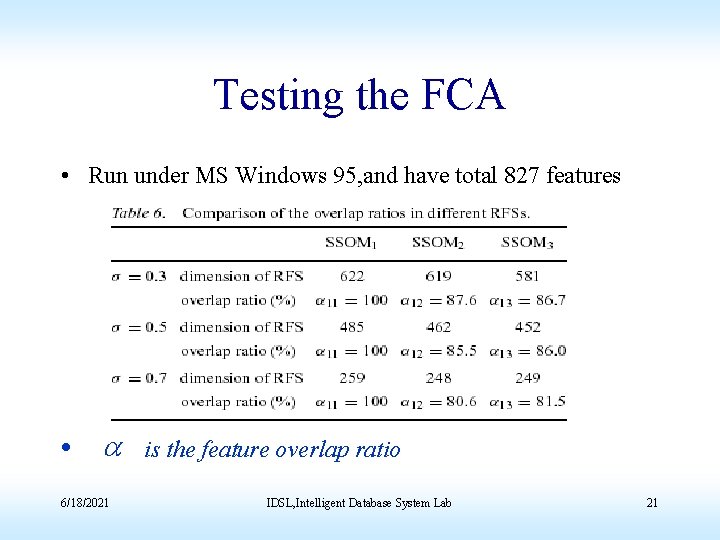

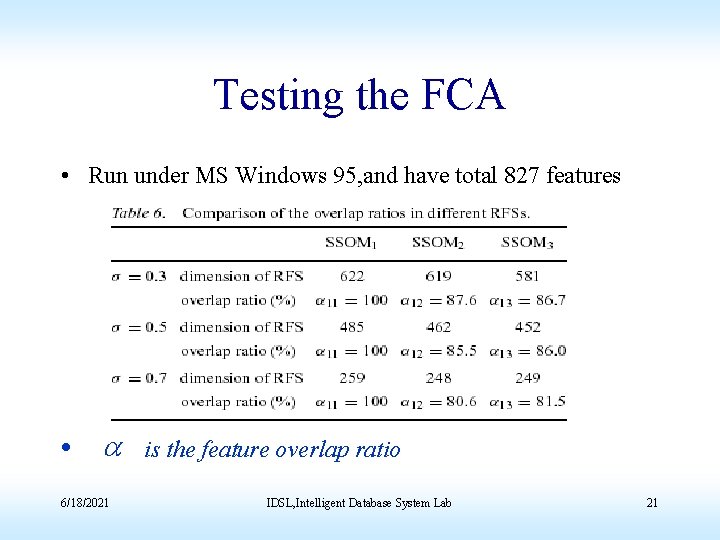

Testing the FCA

- Tests were run under MS Windows 95, with a total of 827 features.
- a is the feature overlap ratio.

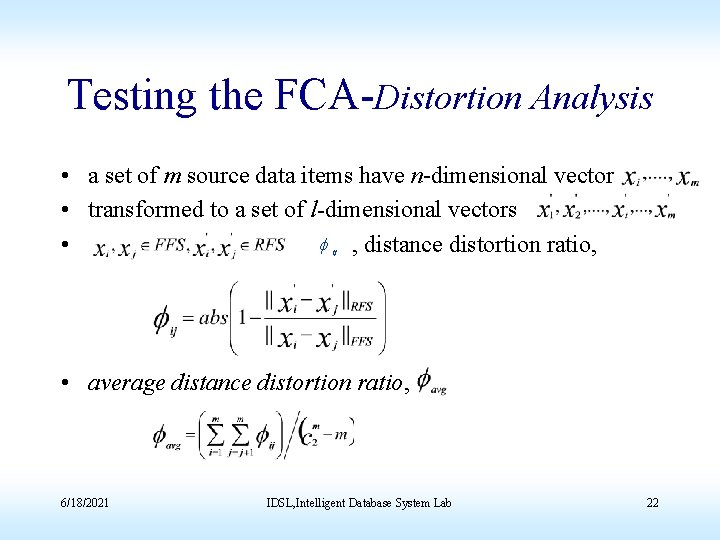

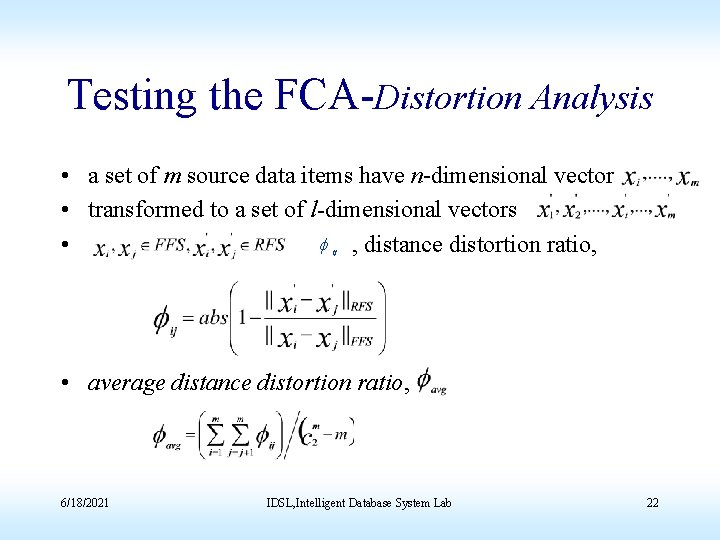

Testing the FCA: Distortion Analysis

- A set of m source data items is represented by n-dimensional vectors.
- These are transformed to a set of l-dimensional vectors.
- $f_{ij}$ is the distance distortion ratio.
- The average distance distortion ratio summarizes $f_{ij}$ over the data set.
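
The defining formulas for the distortion ratios are images in the source and are not recoverable from the text. The Python sketch below therefore rests on a loudly stated assumption: the distortion for a pair of items is taken to be the relative change in their Euclidean distance after projection onto the kept features, and the average is taken over all pairs. The author's actual definition may differ, and all names and data here are hypothetical.

```python
import math
from itertools import combinations


def euclidean_distance(a, b):
    """Euclidean distance between two vectors of equal length."""
    return math.sqrt(sum((ai - bi) ** 2 for ai, bi in zip(a, b)))


def project(vector, kept_features):
    """Keep only the listed feature indices (n dimensions down to l)."""
    return [vector[j] for j in kept_features]


def distortion_ratio(x_i, x_j, kept_features):
    """ASSUMED definition: |d_full - d_reduced| / d_full for one pair."""
    d_full = euclidean_distance(x_i, x_j)
    if d_full == 0:
        return 0.0
    d_reduced = euclidean_distance(project(x_i, kept_features),
                                   project(x_j, kept_features))
    return abs(d_full - d_reduced) / d_full


def average_distortion_ratio(vectors, kept_features):
    """Mean of the assumed pairwise distortion ratio over all pairs."""
    pairs = list(combinations(vectors, 2))
    if not pairs:
        return 0.0
    return sum(distortion_ratio(a, b, kept_features) for a, b in pairs) / len(pairs)


# Hypothetical 3-feature vectors reduced to feature 0 only.
vectors = [[0.0, 0.0, 0.0], [3.0, 4.0, 0.0], [3.0, 0.0, 0.0]]
print(average_distortion_ratio(vectors, kept_features=[0]))
```

Under this assumed definition, a value near 0 means the reduced space preserves pairwise distances well, and a value near 1 means most of the distance information was lost.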

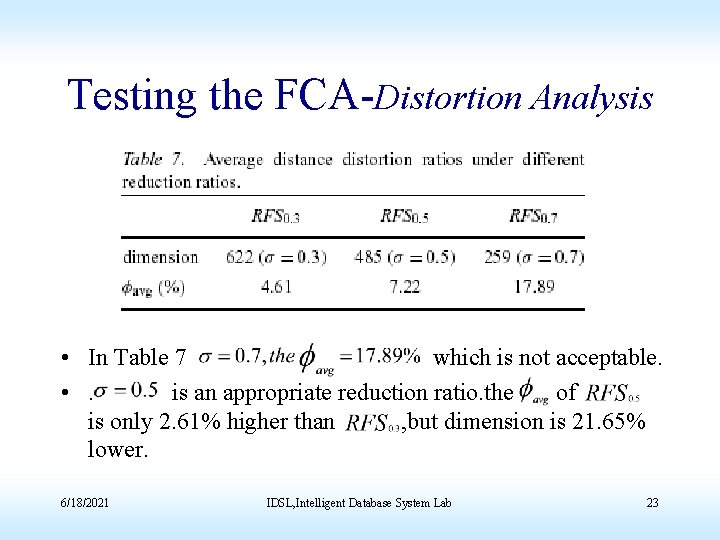

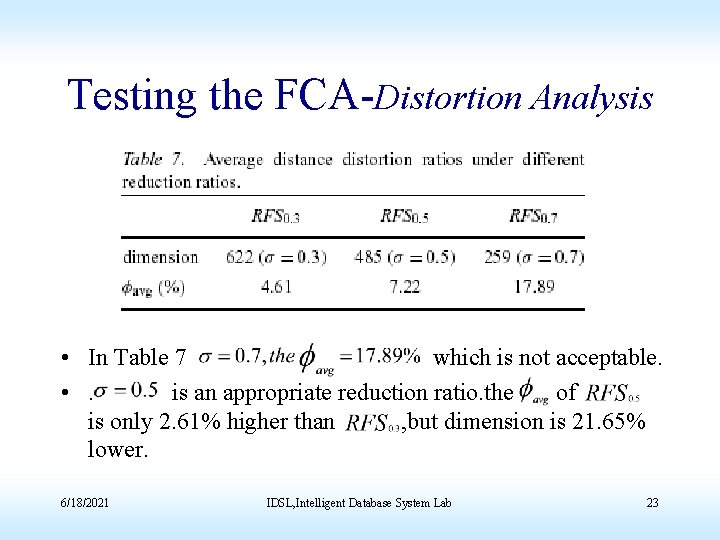

Testing the FCA: Distortion Analysis

- In Table 7, [...] which is not acceptable.
- [...] is an appropriate reduction ratio: the [...] of [...] is only 2.61% higher than [...], but the dimension is 21.65% lower.

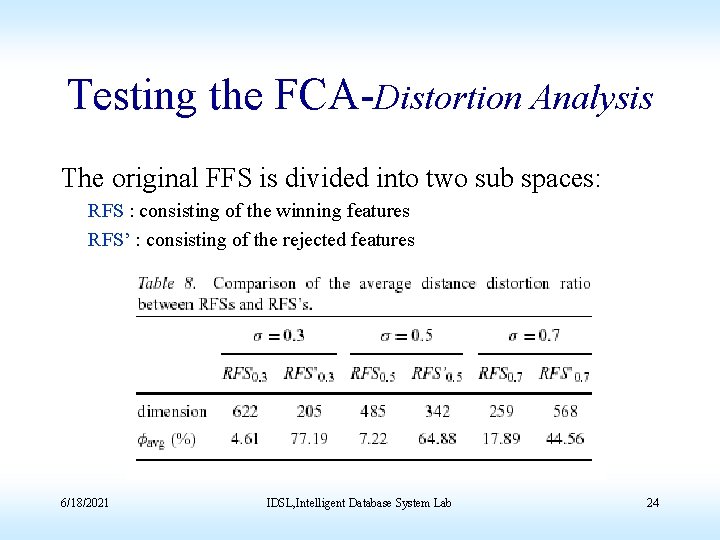

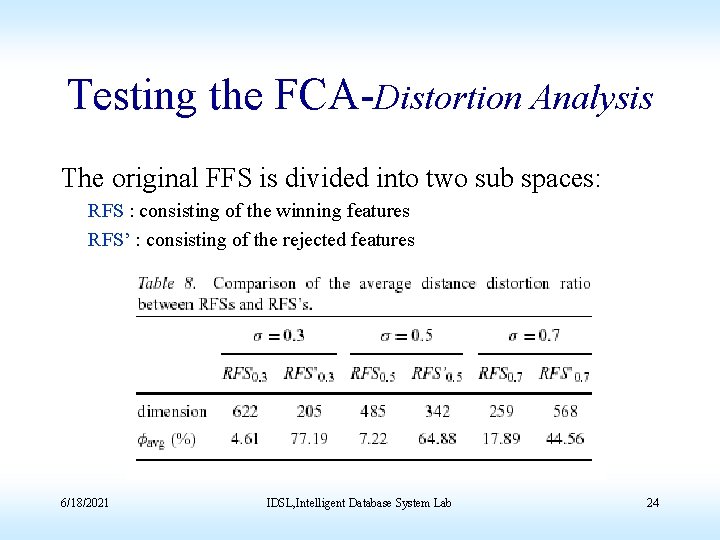

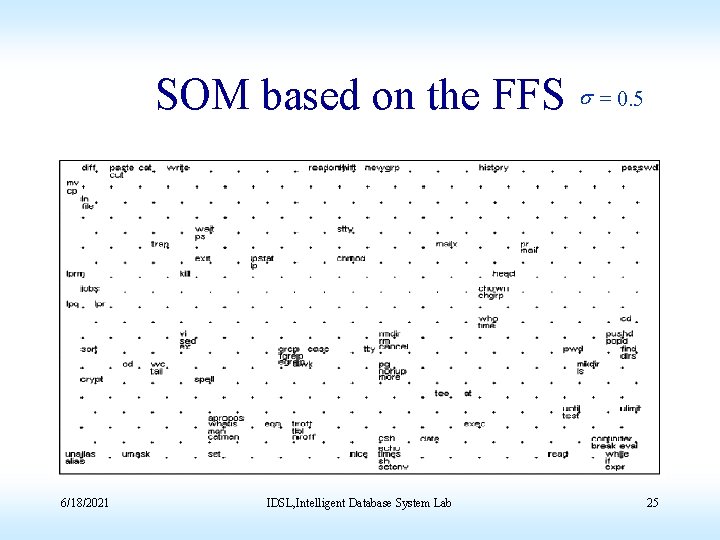

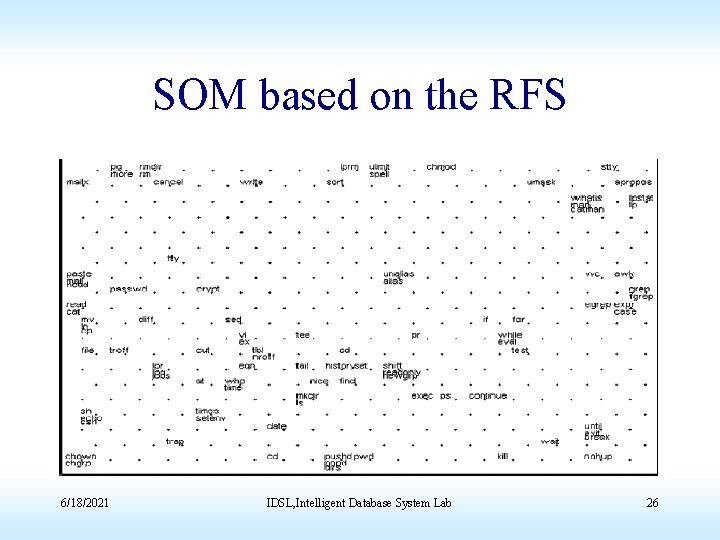

Testing the FCA: Distortion Analysis

The original FFS is divided into two subspaces:

- RFS: consisting of the winning features
- RFS’: consisting of the rejected features

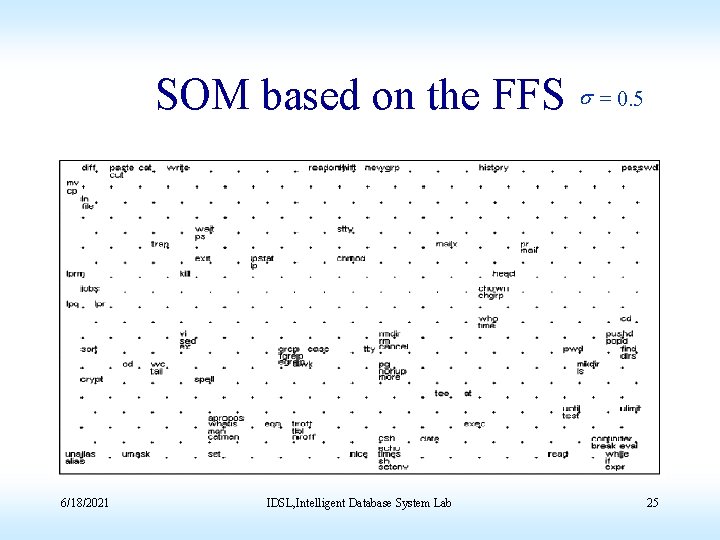

SOM based on the FFS, s = 0.5

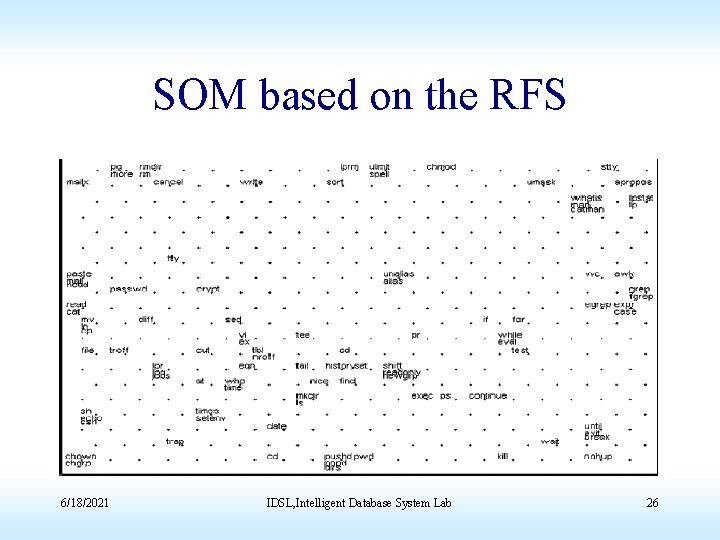

SOM based on the RFS

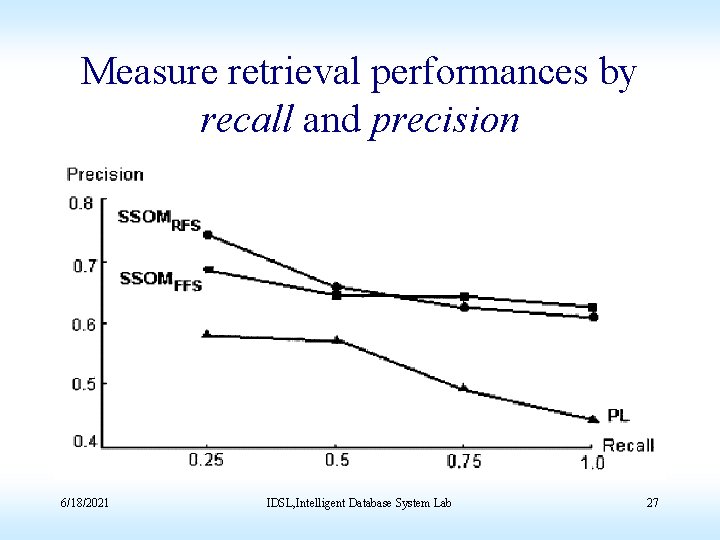

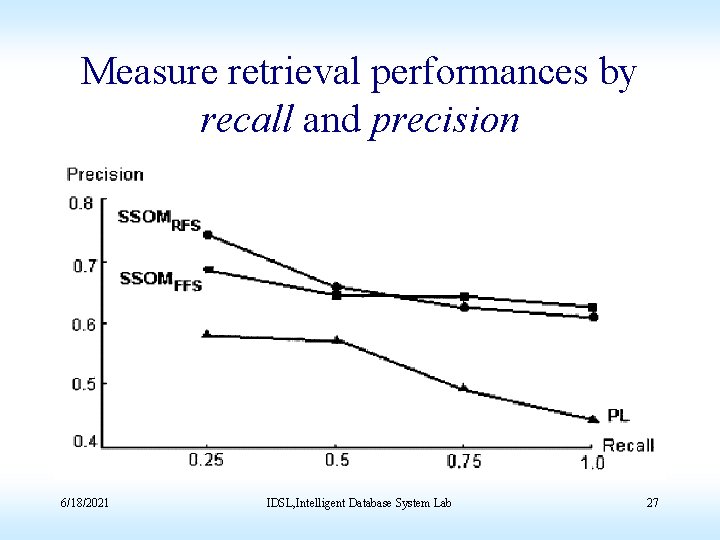

Measure retrieval performances by recall and precision
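
For reference, recall and precision can be computed as in the short Python sketch below, using the standard information-retrieval definitions: recall is the fraction of relevant documents that were retrieved, and precision is the fraction of retrieved documents that are relevant. The document identifiers are made up for illustration.

```python
def recall_precision(retrieved, relevant):
    """Return (recall, precision) for one query.

    recall    = |retrieved AND relevant| / |relevant|
    precision = |retrieved AND relevant| / |retrieved|
    """
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)
    recall = hits / len(relevant) if relevant else 0.0
    precision = hits / len(retrieved) if retrieved else 0.0
    return recall, precision


# Hypothetical query: 4 documents retrieved, 3 documents relevant, 2 in common.
recall, precision = recall_precision(retrieved=[1, 2, 3, 4], relevant=[2, 4, 5])
print(recall, precision)
```

Comparing these two values for a map trained on the full feature space and one trained on the reduced feature space shows how much retrieval quality the dimension reduction costs.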

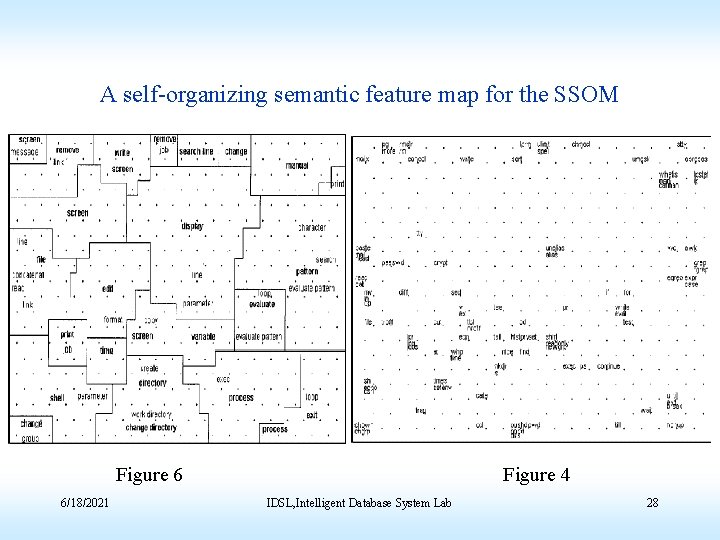

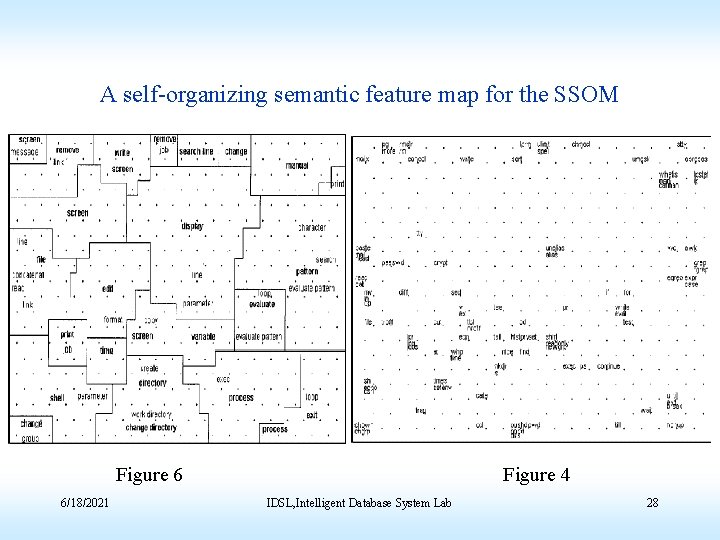

A self-organizing semantic feature map for the SSOM (Figure 4, Figure 6)

Conclusions

- The proposed FCA provides an effective dimension reduction method for the application of the SOM in document classification and retrieval.
- A quantitative metric, the average distance distortion ratio, provides an easy way to evaluate the quality of the resultant RFSs, making this method easier to apply.

Personal Opinion

- Maybe we can use Euclidean distance and the average distance distortion ratio to reduce features in our study.