Fast Depth-of-Field Rendering with Surface Splatting Jaroslav Křivánek


Fast Depth-of-Field Rendering with Surface Splatting
Jaroslav Křivánek, Jiří Žára, Kadi Bouatouch
CTU Prague, IRISA – INRIA Rennes, Computer Graphics Group

Goal
- Depth-of-field rendering with point-based objects
- Why point-based?
  - Efficient for complex objects
- Why depth-of-field?
  - Pleasing, natural-looking images

Overview
- Introduction
  - Point-based rendering
  - Depth-of-field
- Depth-of-field techniques
- Our contribution: point-based depth-of-field rendering
  - Basic approach
  - Extended method: depth-of-field with level of detail
- Results
- Discussion
- Conclusions

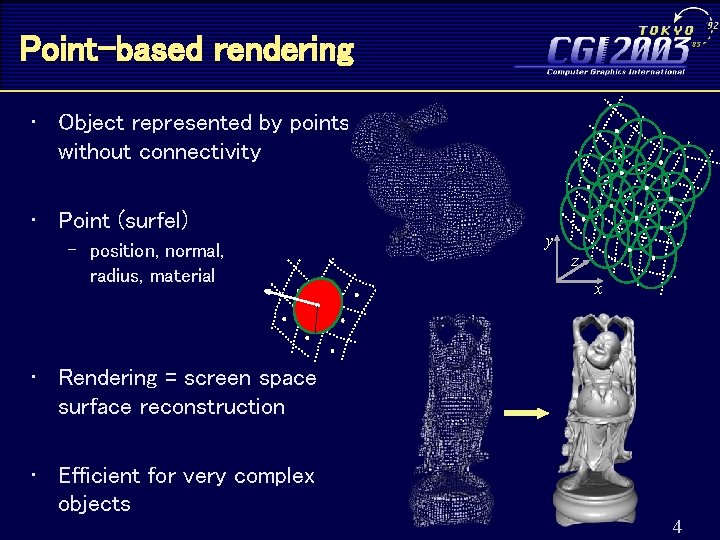

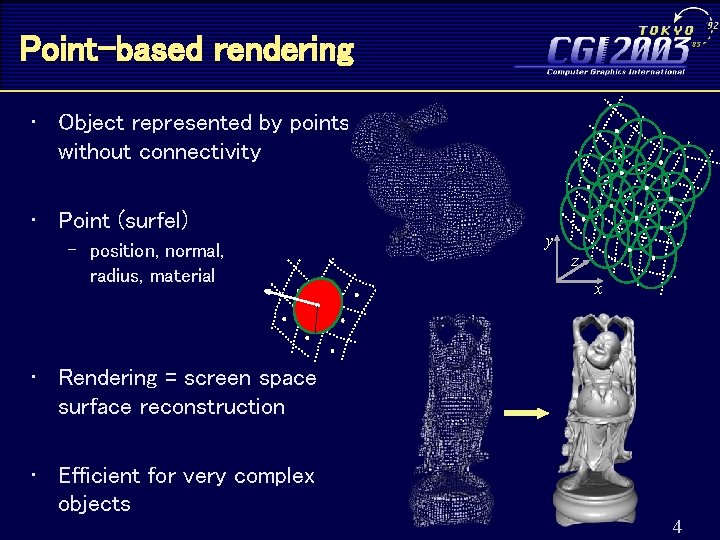

Point-based rendering
- Object represented by points without connectivity
- Point (surfel): position, normal, radius, material
- Rendering = screen-space surface reconstruction
- Efficient for very complex objects

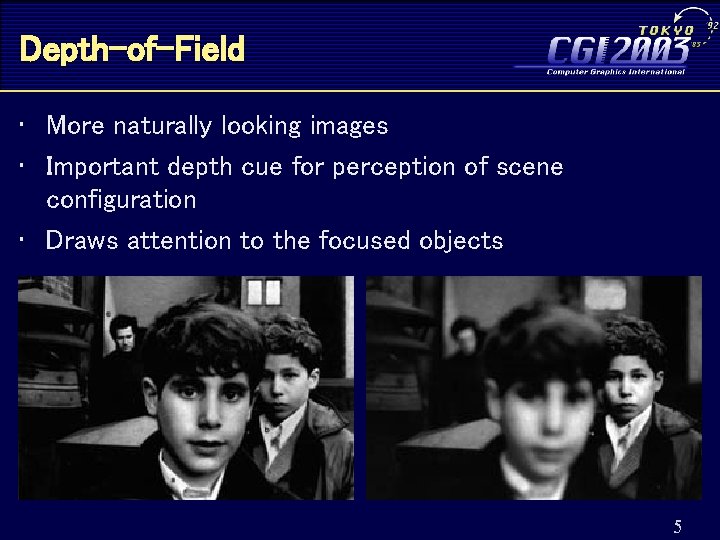

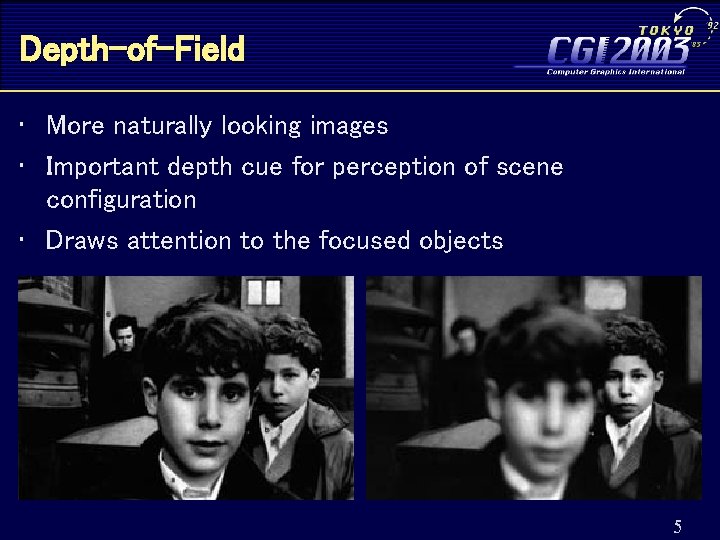

Depth-of-Field
- More natural-looking images
- Important depth cue for perceiving the scene configuration
- Draws attention to the focused objects

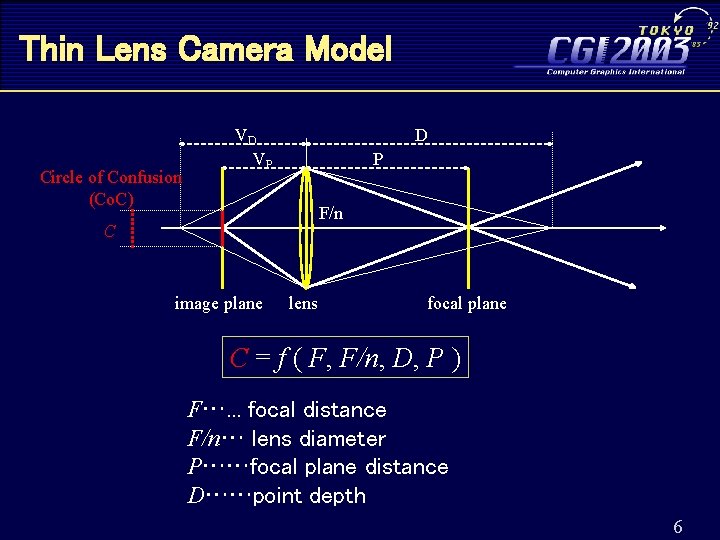

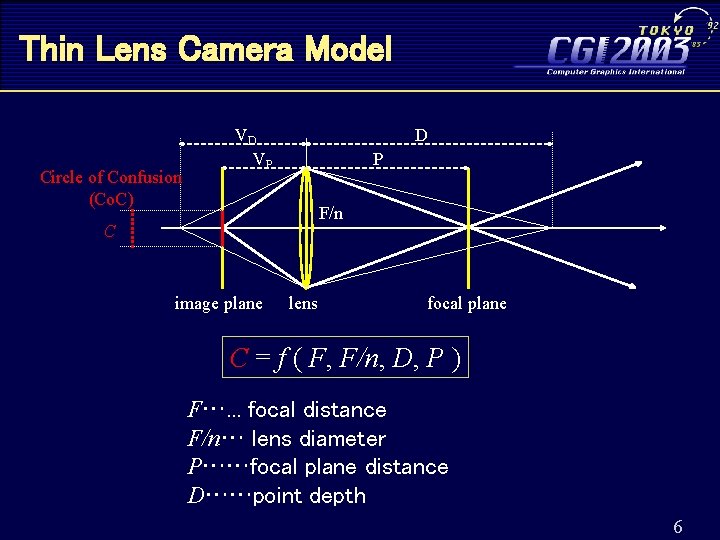

Thin Lens Camera Model
- Circle of confusion (CoC): $C = f(F, F/n, D, P)$
  - $F$: focal distance
  - $F/n$: lens diameter
  - $P$: focal plane distance
  - $D$: point depth
- Figure labels: image plane, lens, focal plane, $V_D$, $V_P$, $D$, $P$, $F/n$, $C$
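
The slide only states that the CoC is a function of $F$, $F/n$, $D$ and $P$. A minimal sketch of one common way to evaluate it, assuming the standard thin-lens equation and reading $F$ as the focal length of the lens and $n$ as the aperture number (both readings are assumptions, as is the function name), could look like this:

```python
def circle_of_confusion(F, n, P, D):
    """Diameter of the circle of confusion for a point at depth D.

    Assumed meaning of the parameters (all lengths in the same unit):
      F: focal length of the thin lens
      n: aperture number, so the lens diameter is F / n
      P: distance of the focal plane (the depth that is in focus)
      D: depth of the point being imaged
    """
    if P <= F or D <= F:
        raise ValueError("P and D must be larger than F for a real image")
    V_P = F * P / (P - F)  # image distance of the focal plane (thin-lens equation)
    V_D = F * D / (D - F)  # image distance of the point at depth D
    lens_diameter = F / n
    # Similar triangles: the light cone of diameter F/n converges at V_D,
    # and is cut by the image plane located at V_P.
    return abs(V_D - V_P) * lens_diameter / V_D
```

A point on the focal plane (`D == P`) gives a CoC of zero, and the CoC grows as the point moves away from the focal plane.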

![Depth-of-Field Techniques in CG: supersampling and multisampling](https://slidetodoc.com/presentation_image_h/792f6f9827c3c0bb83eebeacfcedd80d/image-7.jpg)

Depth-of-Field Techniques in CG
- Supersampling
  - Distributed ray tracing [Cook et al. 1984]
  - Sample the light paths through the lens
- Multisampling [Haeberli & Akeley 1990]
  - Several images from different viewpoints on the lens
  - Average the resulting images using an accumulation buffer
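
The multisampling idea above can be sketched in a few lines. This is only an illustration: `render` is a hypothetical callback that returns an image (a list of rows of floats) for a viewpoint offset on the lens, and the spiral sampling pattern is an assumption, not something the slide prescribes.

```python
import math

def lens_samples(count, lens_radius):
    """Points spread roughly uniformly over the lens disk (Vogel spiral)."""
    golden_angle = math.pi * (3.0 - math.sqrt(5.0))
    points = []
    for k in range(count):
        r = lens_radius * math.sqrt((k + 0.5) / count)
        theta = k * golden_angle
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

def accumulate(render, count, lens_radius):
    """Average `count` images rendered from different viewpoints on the lens.

    `render(dx, dy)` is a hypothetical renderer returning a 2D list of floats.
    """
    accumulation = None
    for dx, dy in lens_samples(count, lens_radius):
        image = render(dx, dy)
        if accumulation is None:
            accumulation = [[0.0] * len(row) for row in image]
        for y, row in enumerate(image):
            for x, value in enumerate(row):
                accumulation[y][x] += value / count  # equal weight per viewpoint
    return accumulation
```

The cost grows linearly with the number of viewpoints, which is why this approach serves as a reference solution rather than a fast method.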

![Depth-of-Field Techniques in CG: post-filtering (Potmesil & Chakravarty 1981)](https://slidetodoc.com/presentation_image_h/792f6f9827c3c0bb83eebeacfcedd80d/image-8.jpg)

Depth-of-Field Techniques in CG
- Post-filtering [Potmesil & Chakravarty 1981]
  - Out-of-focus pixels displayed as CoC
  - Intensity leakage, hypo-intensity
  - Slow for larger kernels
- Pipeline: image synthesizer → image + depth → focus processor (filtering) → image with DOF

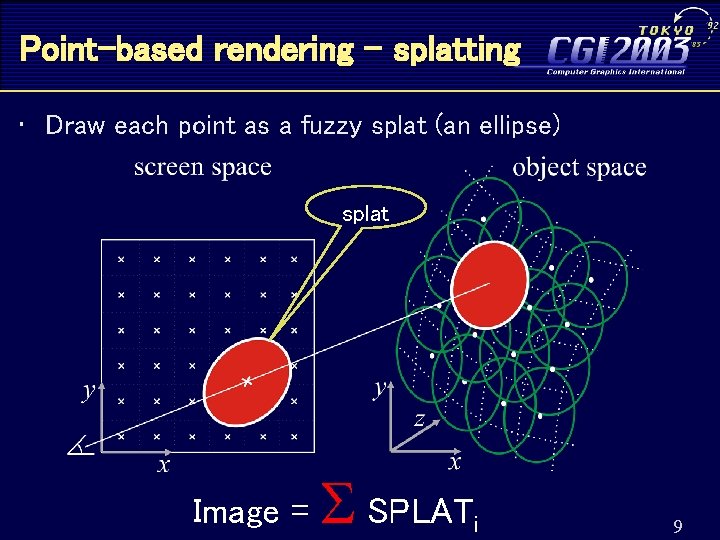

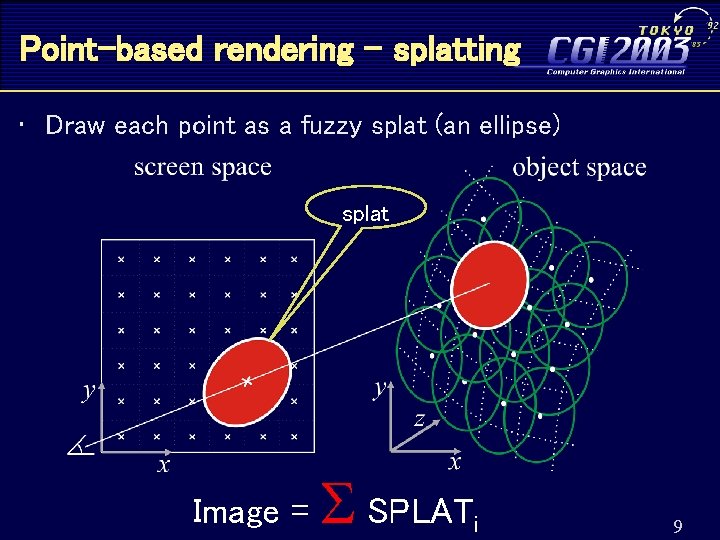

Point-based rendering - splatting
- Draw each point as a fuzzy splat (an ellipse)
- $\text{Image} = \sum_i \text{SPLAT}_i$
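
As a rough illustration of "image = sum of splats", the sketch below accumulates isotropic Gaussian splats into a small grayscale image. The isotropic footprint, the per-pixel normalization by the accumulated weights and the tuple layout `(cx, cy, sigma, color)` are simplifying assumptions made here; the slides use elliptical splats.

```python
import math

def splat_image(points, width, height):
    """Accumulate Gaussian splats and normalize by the summed weights.

    points: list of (cx, cy, sigma, color) tuples in pixel units (assumed layout).
    """
    color_sum = [[0.0] * width for _ in range(height)]
    weight_sum = [[0.0] * width for _ in range(height)]
    for cx, cy, sigma, color in points:
        for y in range(height):
            for x in range(width):
                d2 = (x - cx) ** 2 + (y - cy) ** 2
                w = math.exp(-0.5 * d2 / sigma ** 2)  # fuzzy splat weight
                color_sum[y][x] += w * color
                weight_sum[y][x] += w
    # Divide by the total weight so overlapping splats blend smoothly.
    return [
        [c / w if w > 1e-12 else 0.0 for c, w in zip(c_row, w_row)]
        for c_row, w_row in zip(color_sum, weight_sum)
    ]
```

A pixel midway between a black and a white splat of equal size comes out mid-gray, which is the smooth reconstruction that the fuzzy footprint is meant to provide.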

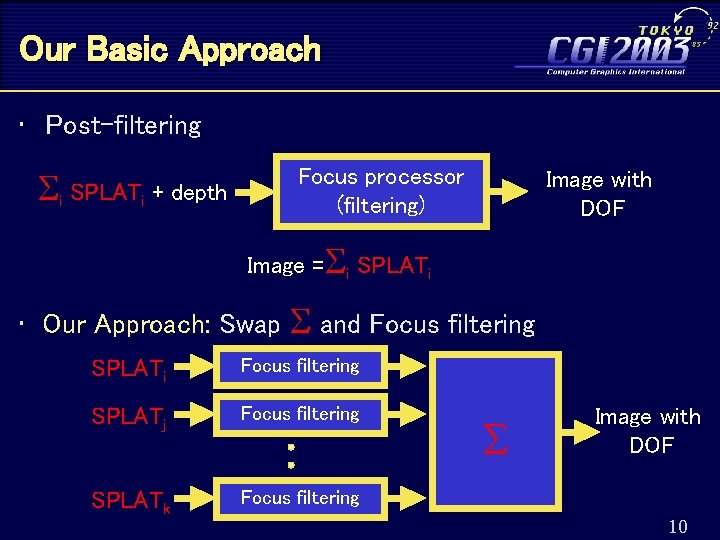

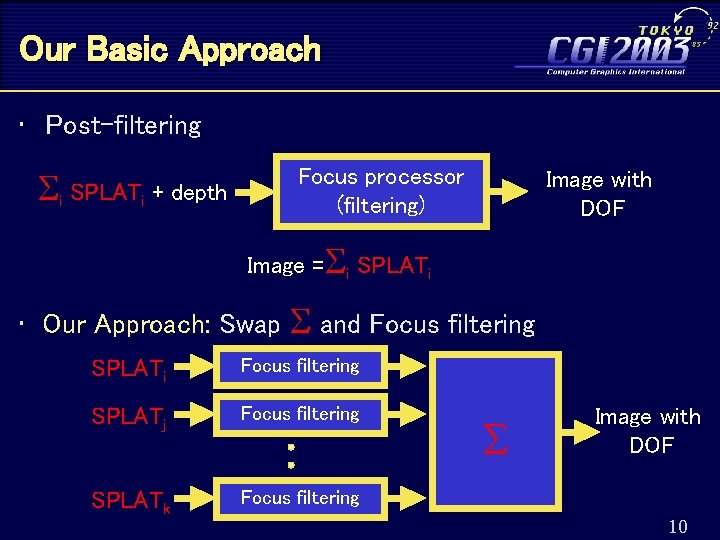

Our Basic Approach
- Post-filtering: $\text{Image} = \sum_i \text{SPLAT}_i$ → image + depth → focus processor (filtering) → image with DOF
- Our approach: swap $\sum$ and focus filtering
  - Each splat ($\text{SPLAT}_i$, $\text{SPLAT}_j$, $\text{SPLAT}_k$, ...) is focus-filtered individually, and the filtered splats are then summed into the image with DOF

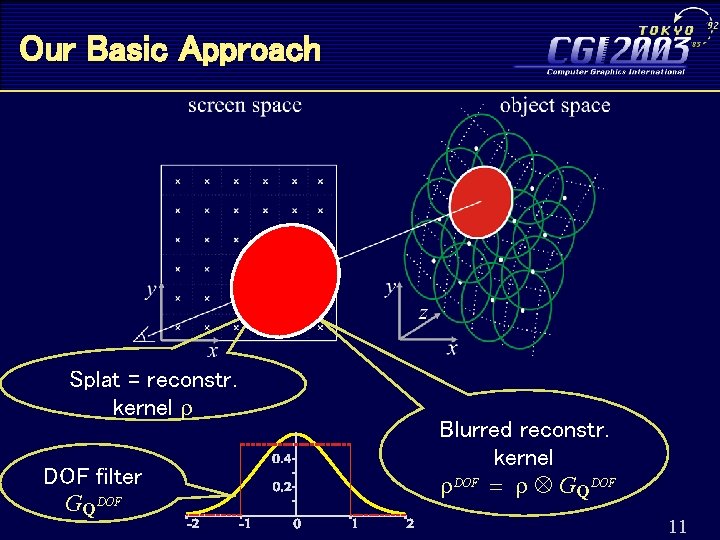

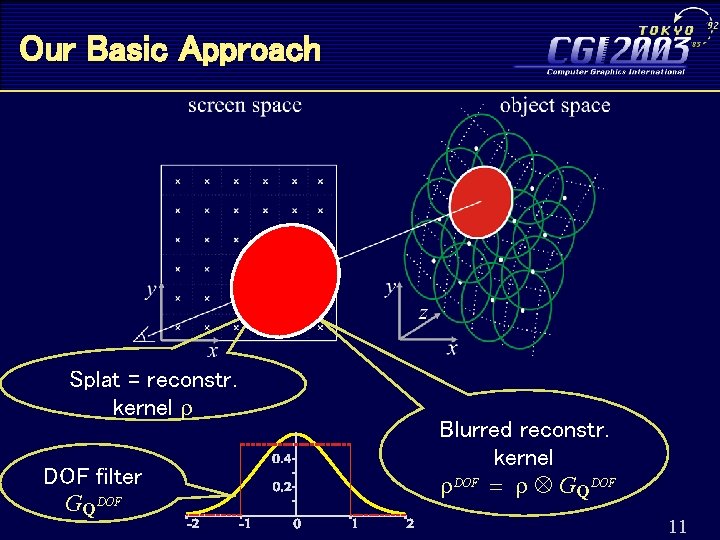

Our Basic Approach
- Splat = reconstruction kernel $r$
- DOF filter: $G_{Q_{DOF}}$
- Blurred reconstruction kernel: $r_{DOF} = r \otimes G_{Q_{DOF}}$
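
If the reconstruction kernel $r$ is itself a Gaussian, blurring it with the DOF filter stays cheap, because the convolution of two Gaussians is a Gaussian whose variance matrices add. The sketch below assumes that the matrix $Q$ in $G_Q$ plays the role of a 2×2 variance matrix; the function names are illustrative only.

```python
import math

def gaussian_2d(Q, x, y):
    """Normalized 2D Gaussian with variance matrix Q, evaluated at (x, y)."""
    a, b, c, d = Q[0][0], Q[0][1], Q[1][0], Q[1][1]
    det = a * d - b * c
    # x^T Q^{-1} x, with Q^{-1} = [[d, -b], [-c, a]] / det
    quad = (d * x * x - (b + c) * x * y + a * y * y) / det
    return math.exp(-0.5 * quad) / (2.0 * math.pi * math.sqrt(det))

def convolve_gaussians(Q_r, Q_dof):
    """Variance matrix of G_{Q_r} convolved with G_{Q_dof}: the matrices add."""
    return [[Q_r[i][j] + Q_dof[i][j] for j in range(2)] for i in range(2)]
```

The blurred kernel is wider and has a lower peak than the original one, which is why blurred splats cover many more pixels.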

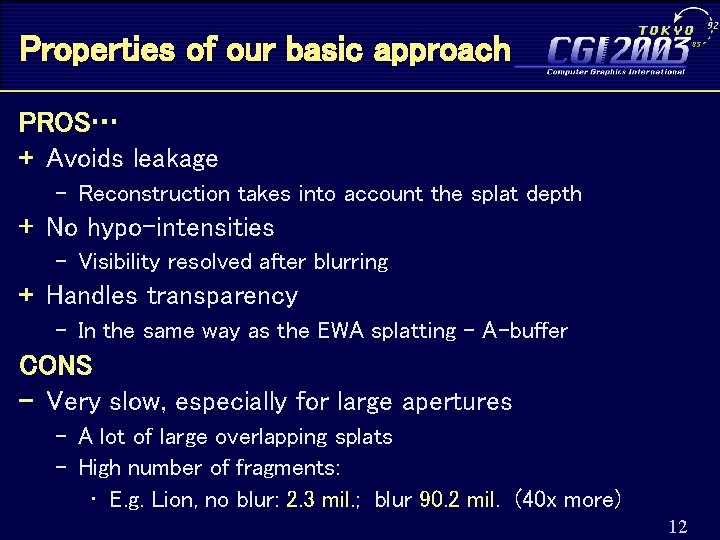

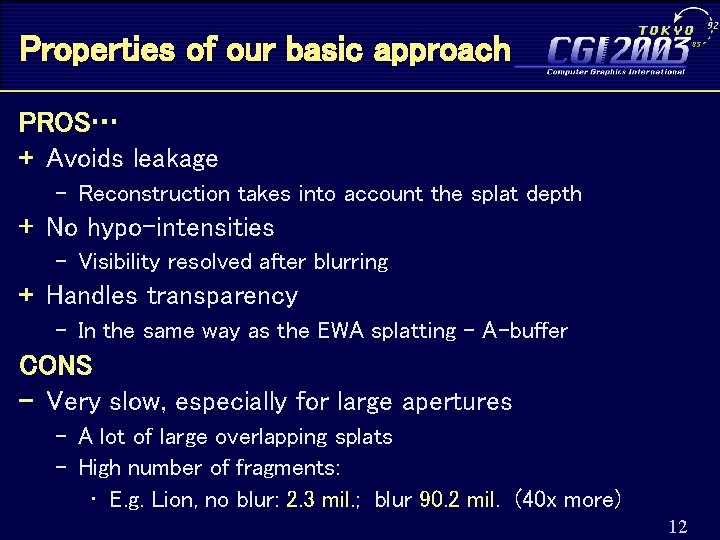

Properties of our basic approach
- Pros
  - Avoids leakage: reconstruction takes the splat depth into account
  - No hypo-intensities: visibility is resolved after blurring
  - Handles transparency: in the same way as EWA splatting (A-buffer)
- Cons
  - Very slow, especially for large apertures
  - Many large overlapping splats
  - High number of fragments, e.g. Lion: 2.3 mil. without blur, 90.2 mil. with blur (40× more)

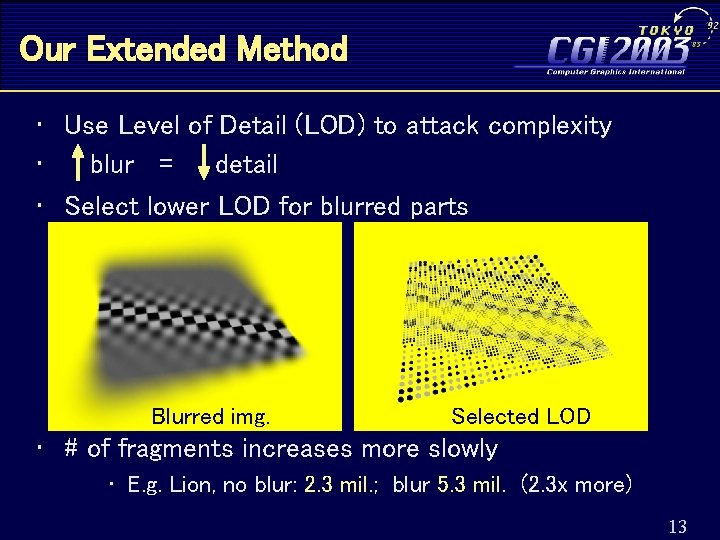

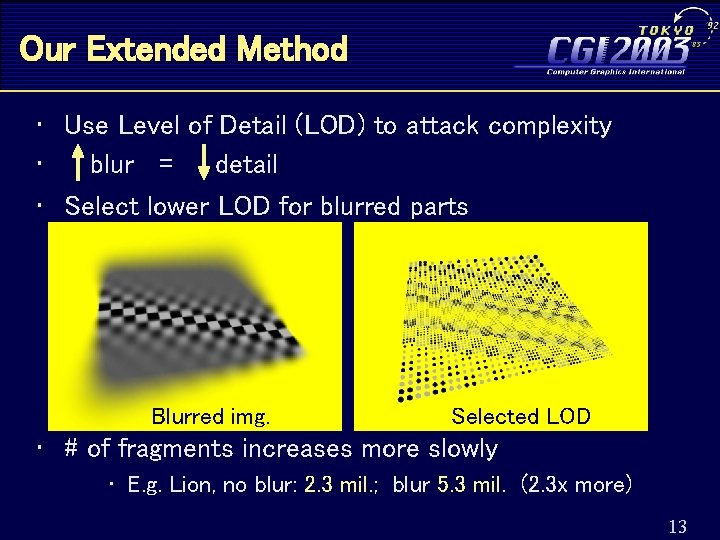

Our Extended Method
- Use level of detail (LOD) to attack complexity
- blur = detail
- Select lower LOD for blurred parts
- Figure panels: blurred image, selected LOD
- Number of fragments increases more slowly, e.g. Lion: 2.3 mil. without blur, 5.3 mil. with blur (2.3× more)

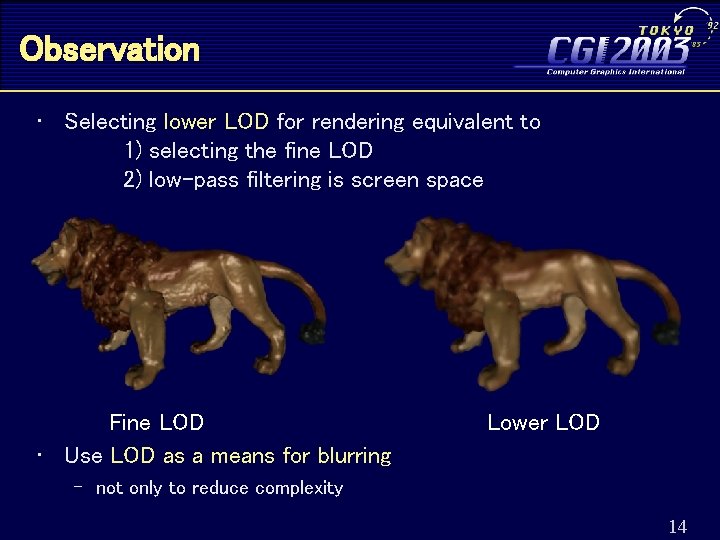

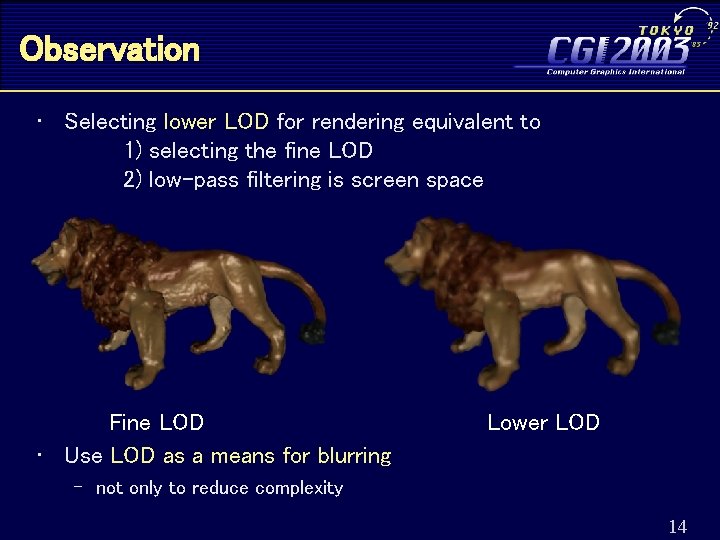

Observation
- Selecting a lower LOD for rendering is equivalent to
  1. selecting the fine LOD
  2. low-pass filtering in screen space
- Use LOD as a means for blurring, not only to reduce complexity
- Figure panels: fine LOD, lower LOD

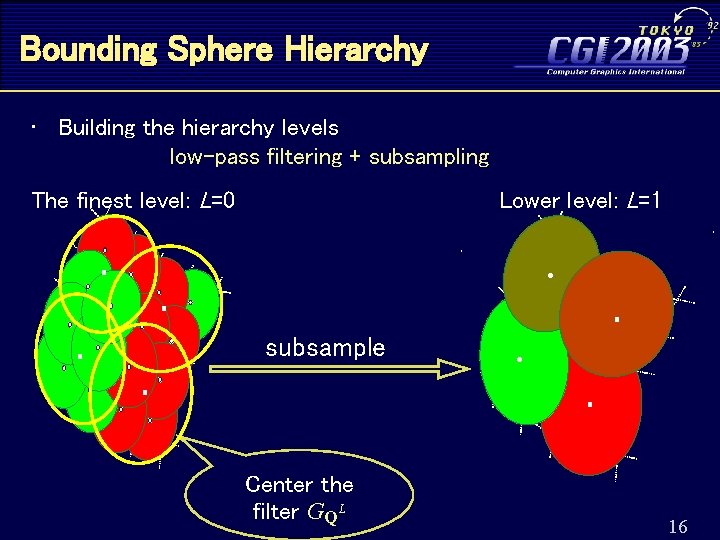

Effect of LOD Selection
- How can the effect of LOD selection be quantified in terms of blur in the resulting image?
- We use a bounding sphere hierarchy
  - QSplat [Rusinkiewicz & Levoy, 2000]

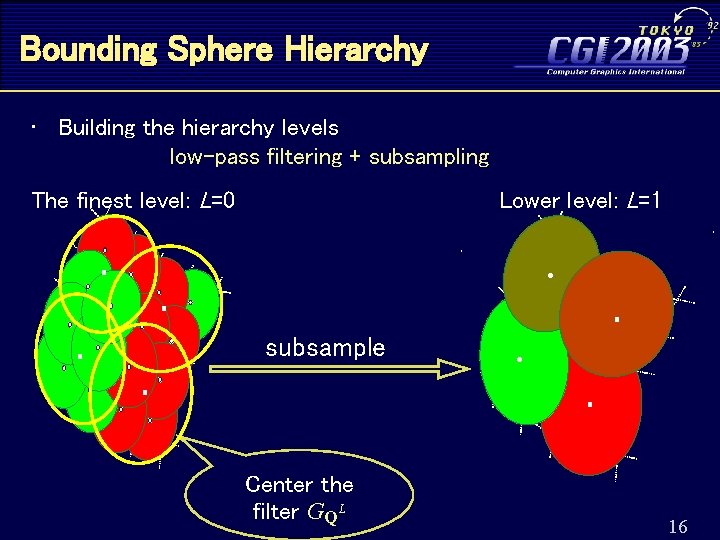

Bounding Sphere Hierarchy
- Building the hierarchy levels: low-pass filtering + subsampling
- Figure labels: the finest level, $L = 0$; lower level, $L = 1$; subsample; center the filter $G_{Q_L}$

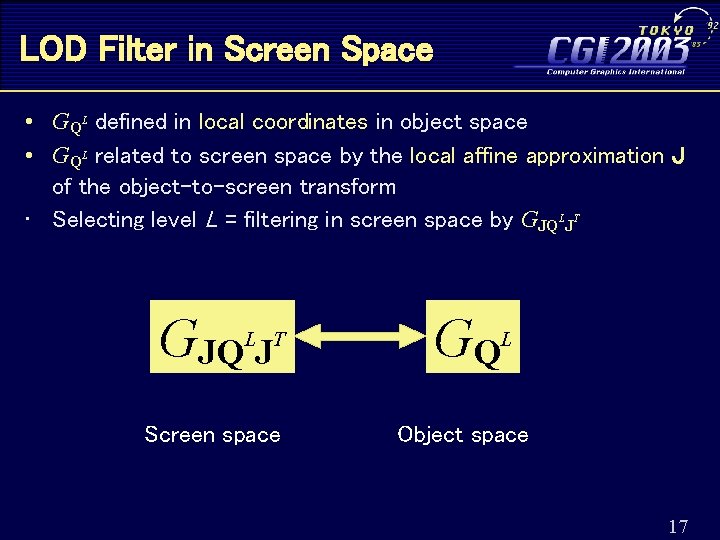

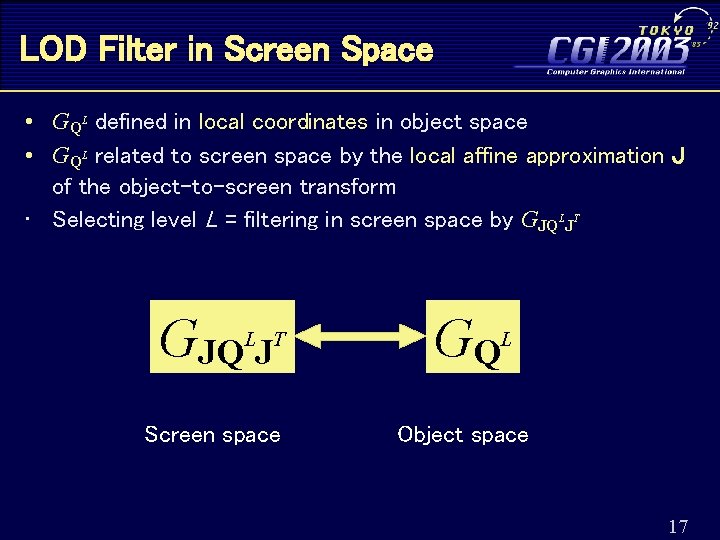

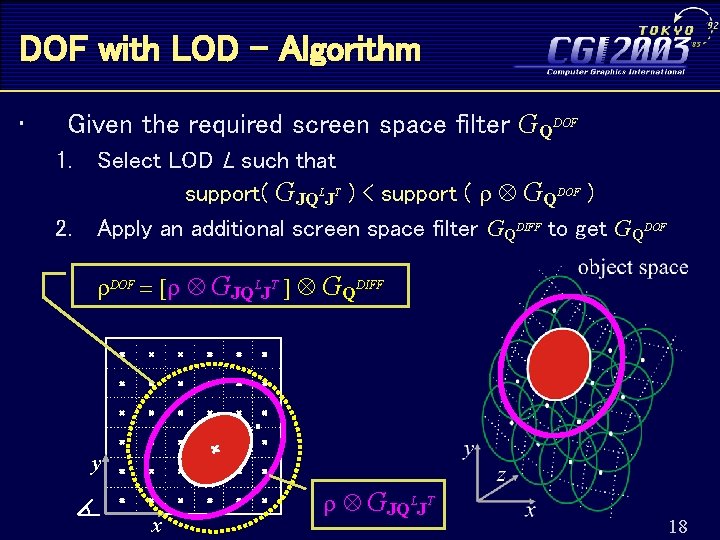

LOD Filter in Screen Space
- $G_{Q_L}$ is defined in local coordinates in object space
- $G_{Q_L}$ is related to screen space by the local affine approximation $J$ of the object-to-screen transform
- Selecting level $L$ = filtering in screen space by $G_{J Q_L J^T}$
- Figure labels: $G_{Q_L}$ in object space, $G_{J Q_L J^T}$ in screen space
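
Mapping the object-space LOD filter to screen space amounts to one small matrix product. A minimal sketch, assuming $Q_L$ and $J$ are given as 2×2 nested lists (the function name is illustrative):

```python
def to_screen_space(J, Q):
    """Return J Q J^T for 2x2 matrices given as nested lists."""
    JQ = [[sum(J[i][k] * Q[k][j] for k in range(2)) for j in range(2)]
          for i in range(2)]
    # (JQ J^T)[i][j] = sum_k JQ[i][k] * J[j][k]
    return [[sum(JQ[i][k] * J[j][k] for k in range(2)) for j in range(2)]
            for i in range(2)]
```

For example, a transform that doubles the x axis and halves the y axis turns a unit circular filter into an ellipse with variances 4 and 0.25.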

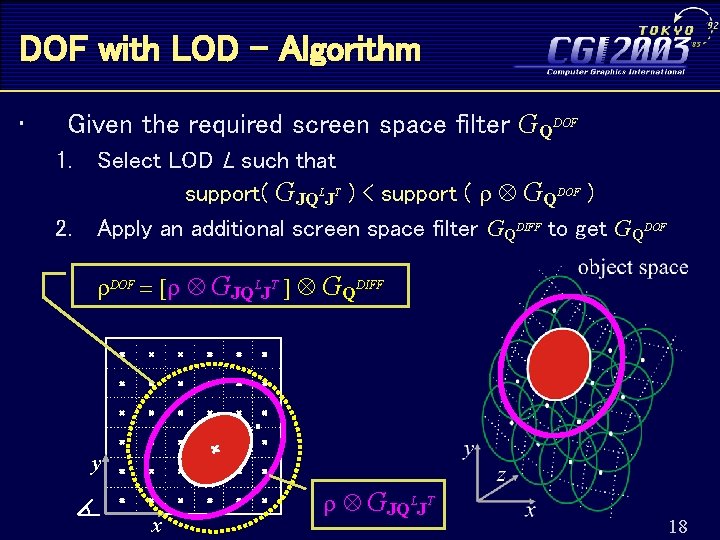

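DOF with LOD - Algorithm
- Given the required screen-space filter $G_{Q_{DOF}}$:
  1. Select LOD $L$ such that $\mathrm{support}(G_{J Q_L J^T}) < \mathrm{support}(r \otimes G_{Q_{DOF}})$
  2. Apply an additional screen-space filter $G_{Q_{DIFF}}$ to get $G_{Q_{DOF}}$
- $r_{DOF} = r \otimes G_{Q_{DOF}} = [\, r \otimes G_{J Q_L J^T} \,] \otimes G_{Q_{DIFF}}$
- Figure labels: $x$, $y$, $r$, $G_{J Q_L J^T}$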
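
The two steps can be sketched with scalars. This is a deliberately simplified illustration, not the authors' implementation: filters are assumed isotropic, variance stands in for "support", a single scale factor stands in for $J$, and the level is compared against the DOF filter alone (rather than against $r \otimes G_{Q_{DOF}}$ as on the slide) so that the remaining filter is never negative.

```python
def select_lod(level_variances, screen_scale, dof_variance):
    """Pick the coarsest LOD whose screen-space blur stays below the DOF blur.

    level_variances: object-space variance of the LOD filter per level,
                     level 0 being the finest, values non-decreasing (assumed).
    screen_scale:    isotropic stand-in for the affine map J.
    dof_variance:    screen-space variance of the required DOF filter.
    Returns (level, diff_variance), where diff_variance is the variance of the
    additional screen-space filter still to be applied.
    """
    chosen = 0
    for level, variance in enumerate(level_variances):
        if screen_scale ** 2 * variance < dof_variance:
            chosen = level
        else:
            break
    diff = dof_variance - screen_scale ** 2 * level_variances[chosen]
    return chosen, max(diff, 0.0)
```

With level variances `[0.0, 1.0, 4.0, 16.0]`, a scale of 0.5 and a DOF variance of 2.0, the sketch selects level 2 and leaves a residual variance of 1.0 for the additional filter.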

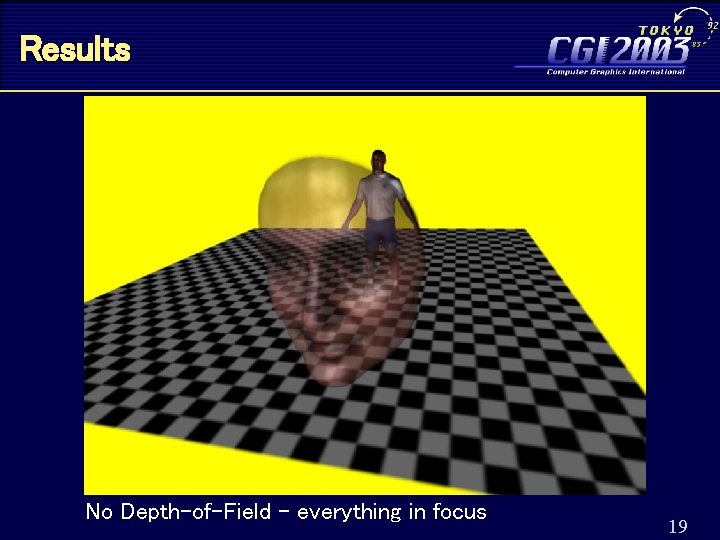

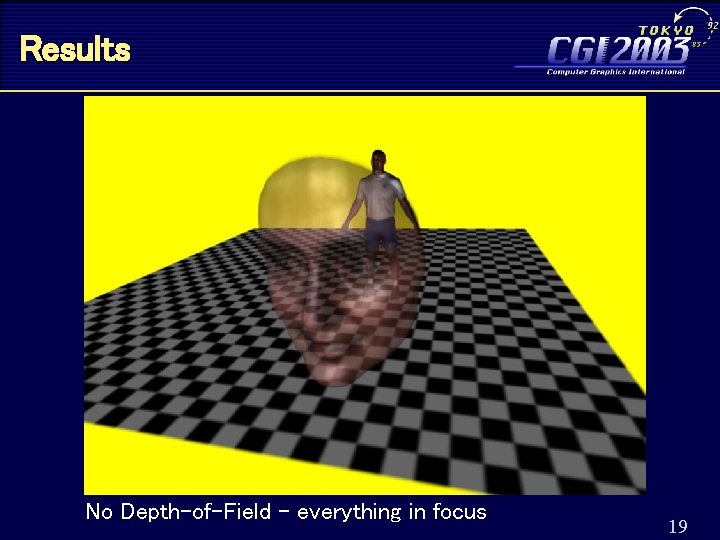

Results
- No depth-of-field: everything in focus

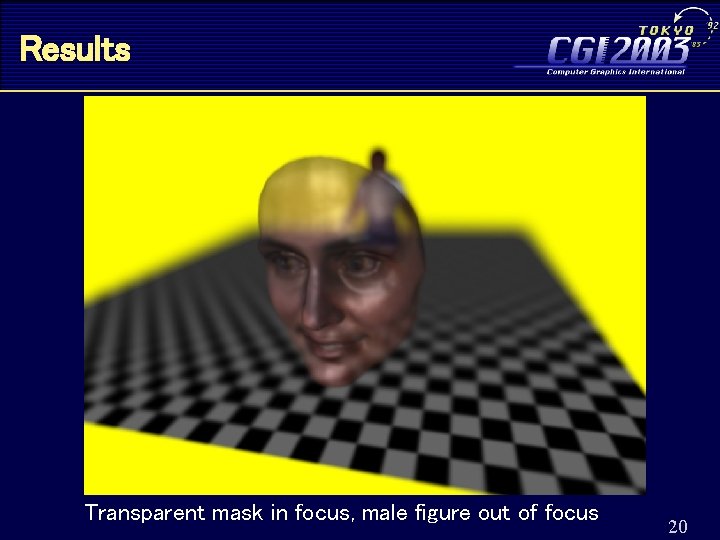

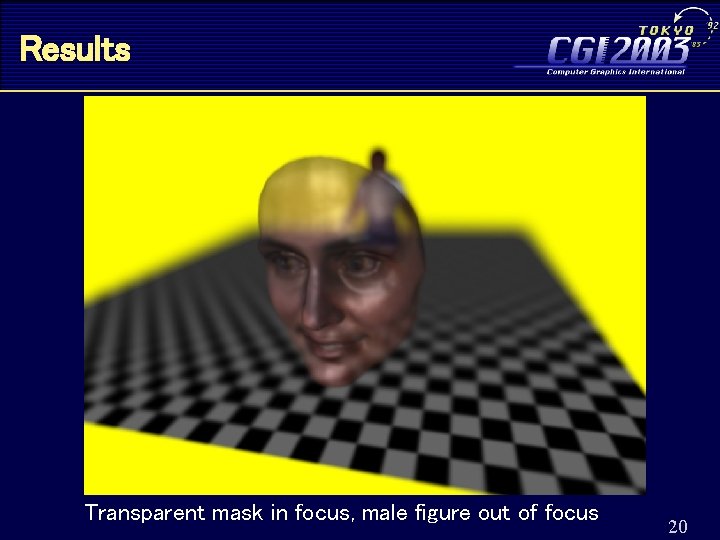

Results
- Transparent mask in focus, male figure out of focus

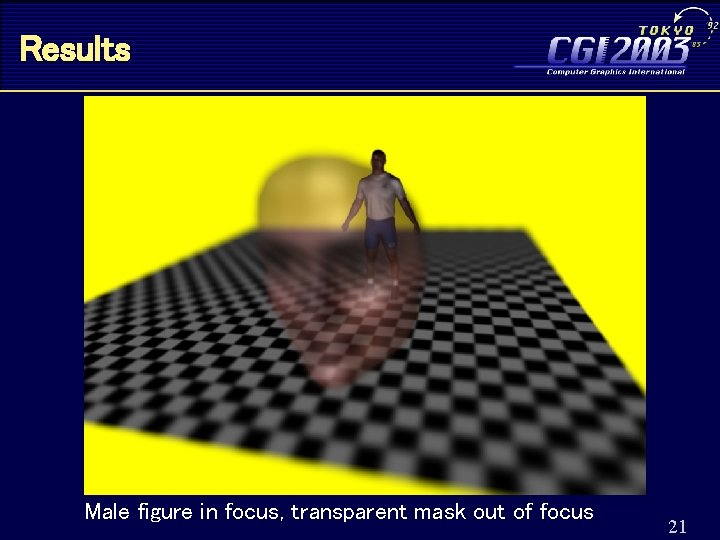

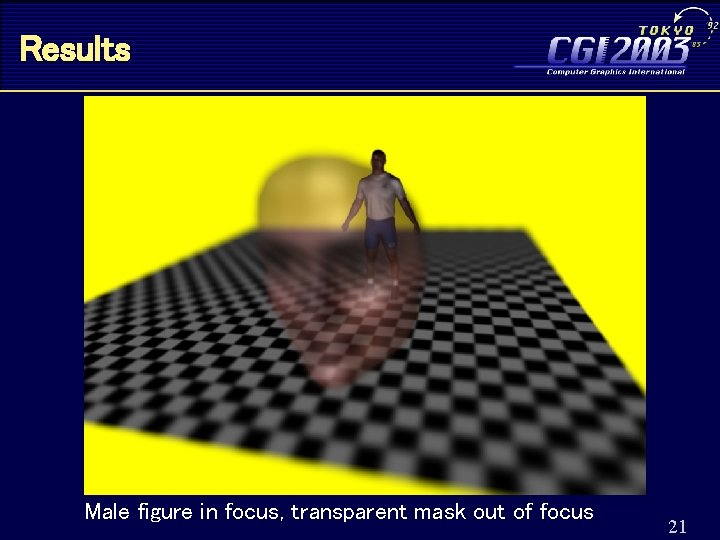

Results
- Male figure in focus, transparent mask out of focus

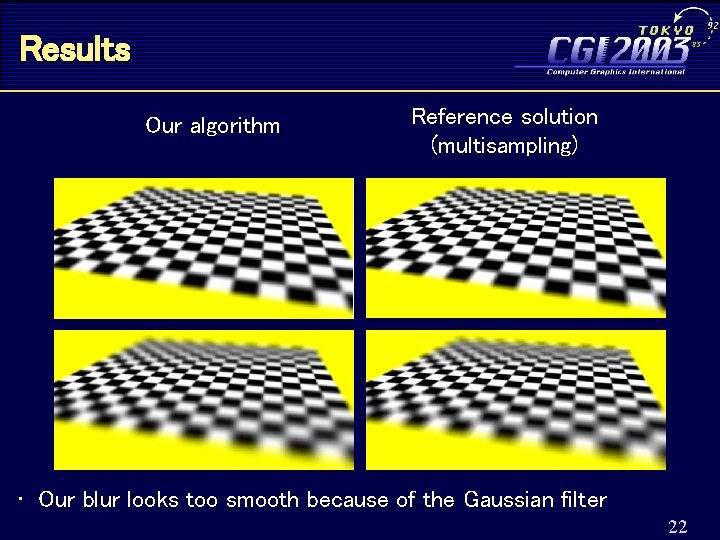

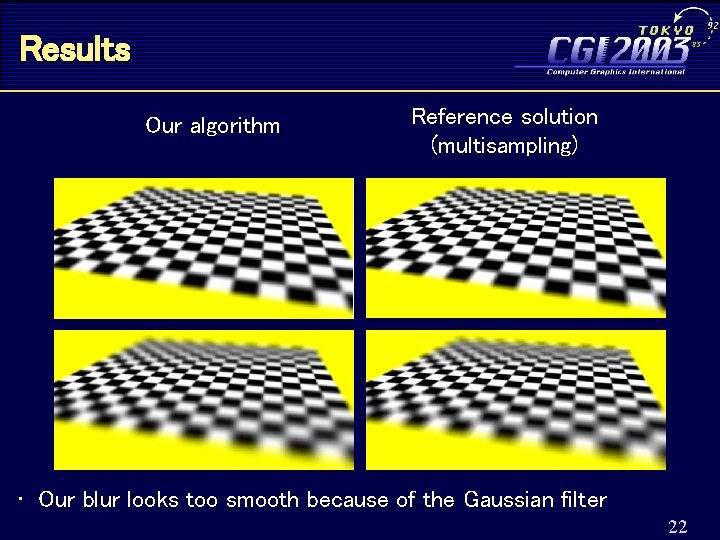

Results
- Figure panels: our algorithm, reference solution (multisampling)
- Our blur looks too smooth because of the Gaussian filter

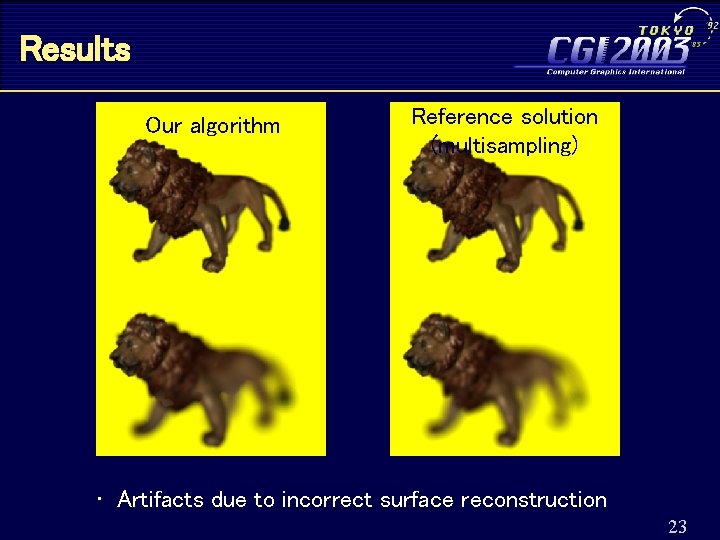

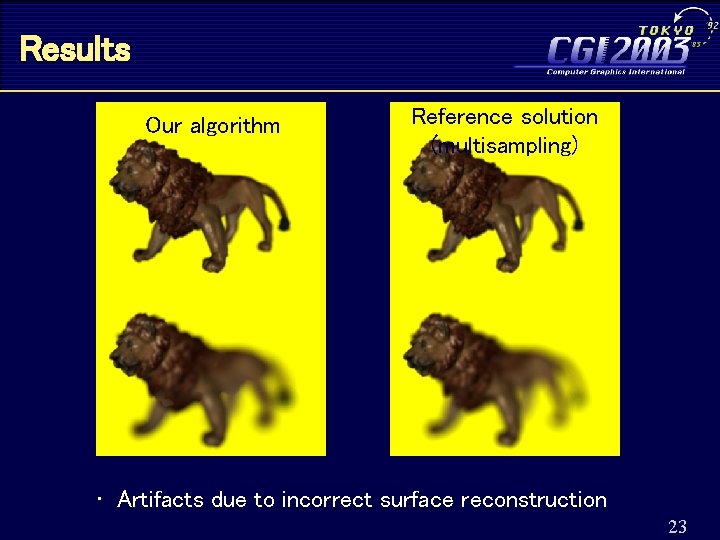

Results
- Figure panels: our algorithm, reference solution (multisampling)
- Artifacts due to incorrect surface reconstruction

Discussion
- Simplifying assumptions and limitations
  - Gaussian distribution of light within the CoC
    - Mostly OK
  - We blur the texture before lighting
    - We should blur after lighting
  - Possibly incorrect image reconstruction from blurred splats

Conclusion
- A novel algorithm for depth-of-field rendering
- LOD as a means for depth-blurring
- Pros
  - Transparency
  - Avoids intensity leakage
  - Running time independent of the DOF
- Cons
  - Only for point-based rendering
  - A number of artifacts can appear
- Ideal tool for interactive DOF previewing
  - Trial-and-error setting of camera parameters

Acknowledgement: Grant 2159/2002 MSMT Czech Republic