Fast Data apps with Alpakka Kafka connector and Akka Streams

- Slides: 45

Fast Data apps with Alpakka Kafka connector and Akka Streams. Sean Glover, Lightbend (@seg1o)

Who am I?

I’m Sean Glover (seg1o)

- Principal Engineer at Lightbend
- Member of the Fast Data Platform team
- Organizer of Scala Toronto (scalator)
- Contributor to various projects in the Kafka ecosystem, including Kafka, Alpakka Kafka (reactive-kafka), Strimzi, and DC/OS Commons SDK

“The Alpakka project is an initiative to implement a library of integration modules to build stream-aware, reactive pipelines for Java and Scala.”

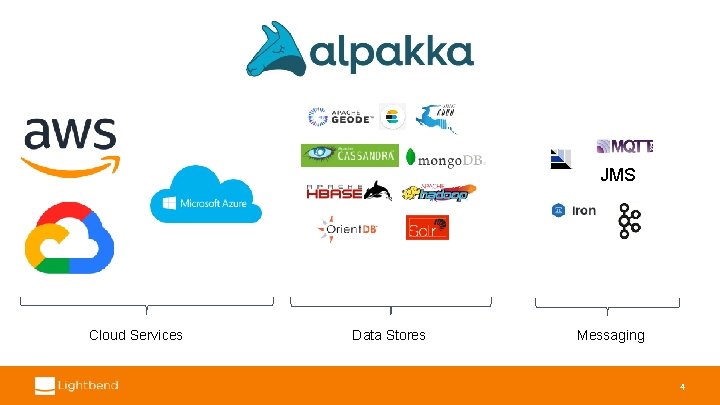

Alpakka integration categories: JMS, Cloud Services, Data Stores, Messaging

Alpakka Kafka connector: “This Alpakka Kafka connector lets you connect Apache Kafka to Akka Streams. It was formerly known as Akka Streams Kafka and even Reactive Kafka.”

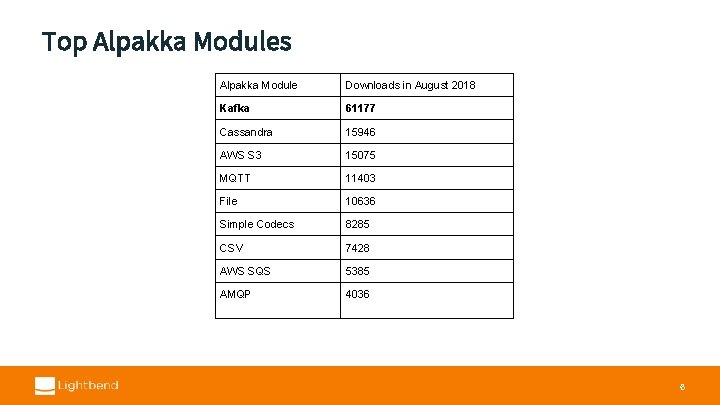

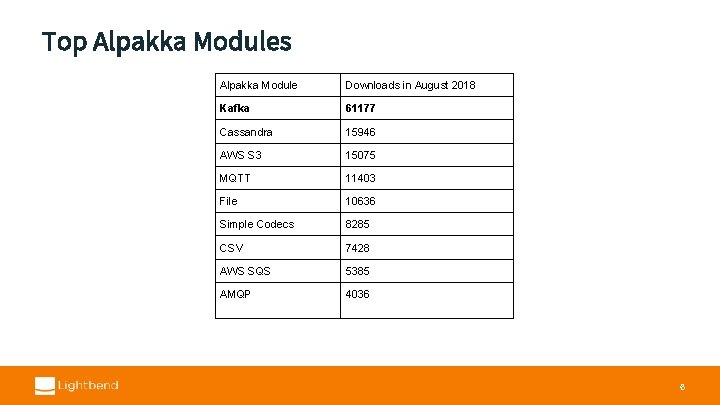

Top Alpakka Modules

| Alpakka Module | Downloads in August 2018 |
| --- | --- |
| Kafka | 61177 |
| Cassandra | 15946 |
| AWS S3 | 15075 |
| MQTT | 11403 |
| File | 10636 |
| Simple Codecs | 8285 |
| CSV | 7428 |
| AWS SQS | 5385 |
| AMQP | 4036 |

Akka Streams: “Akka Streams is a library toolkit to provide low-latency complex event processing streaming semantics using the Reactive Streams specification, implemented internally with an Akka actor system.”

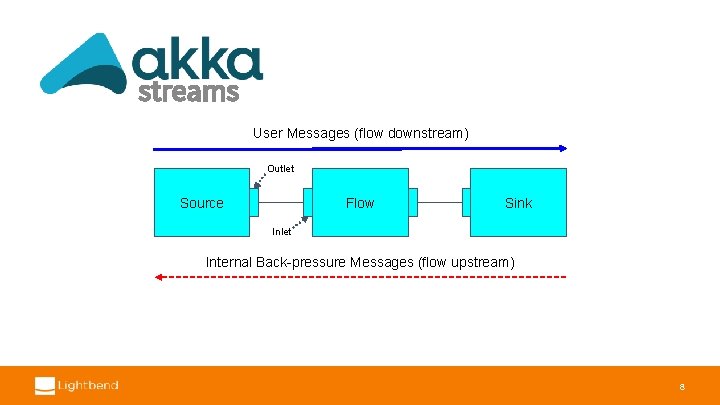

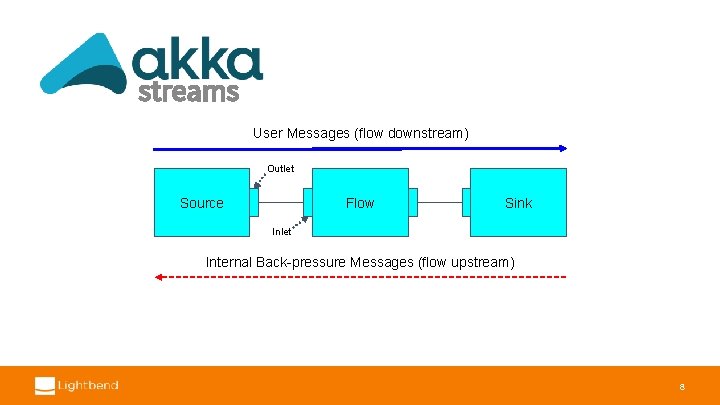

Akka Streams: user messages flow downstream from Source through Flow to Sink (outlet to inlet); internal back-pressure messages flow upstream.

Reactive Streams Specification: “Reactive Streams is an initiative to provide a standard for asynchronous stream processing with non-blocking back pressure.” http://www.reactive-streams.org/

Reactive Streams Libraries (logos, including Akka Streams; one is labelled “migrating to”). The spec is now part of JDK 9 as java.util.concurrent.Flow.

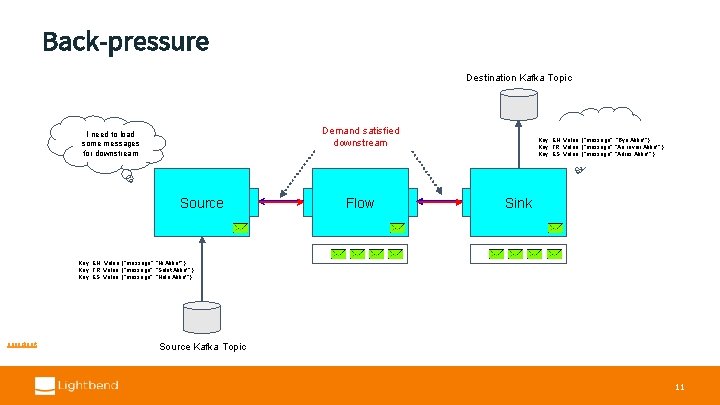

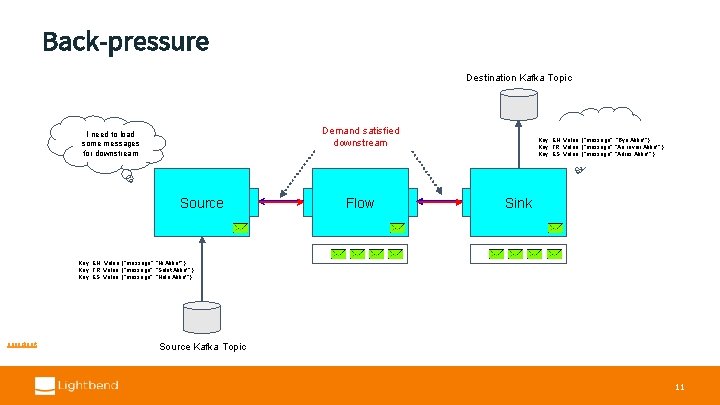

Back-pressure: messages move from a source Kafka topic through Source, Flow and Sink to a destination Kafka topic. A demand request is sent upstream; the demand is satisfied downstream.

- Flow: “I need some messages.”
- Source: “I need to load some messages for downstream.”

Example messages shown:

- Key: EN, Value: {“message”: “Hi Akka!”}
- Key: FR, Value: {“message”: “Salut Akka!”}
- Key: ES, Value: {“message”: “Hola Akka!”}
- Key: EN, Value: {“message”: “Bye Akka!”}
- Key: FR, Value: {“message”: “Au revoir Akka!”}
- Key: ES, Value: {“message”: “Adiós Akka!”}

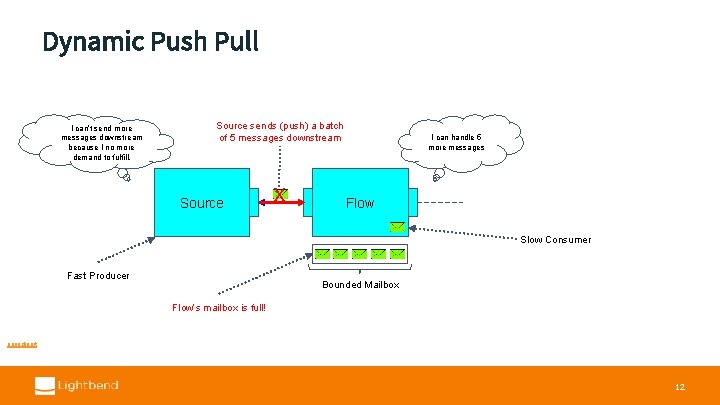

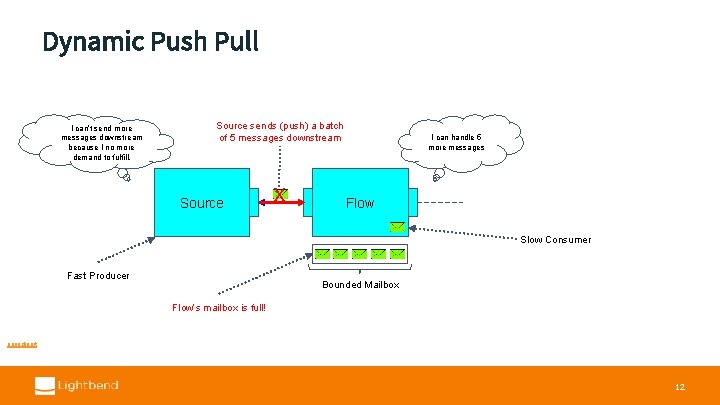

Dynamic Push Pull

- Flow (slow consumer, bounded mailbox) sends a demand request (pull) of 5 messages max: “I can handle 5 more messages.”
- Source (fast producer) sends (push) a batch of 5 messages downstream.
- Flow’s mailbox is full!
- Source: “I can’t send more messages downstream because I have no more demand to fulfill.”
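The push/pull exchange above can be sketched with the JDK 9 `java.util.concurrent.Flow` interfaces mentioned earlier. This is a minimal, synchronous illustration and not how Akka Streams is implemented internally; `DemandDrivenSource`, `SlowConsumer`, and `pull` are hypothetical names introduced here.

```scala
import java.util.concurrent.Flow
import scala.collection.mutable.ArrayBuffer

object BackPressureSketch {

  // Hypothetical fast producer: holds `total` messages but only pushes
  // as many as the downstream has asked for.
  final class DemandDrivenSource(total: Int) extends Flow.Publisher[String] {
    override def subscribe(subscriber: Flow.Subscriber[_ >: String]): Unit =
      subscriber.onSubscribe(new Flow.Subscription {
        private var next = 1
        private var done = false

        // The pull (demand of n) bounds the push (at most n messages).
        override def request(n: Long): Unit = {
          var demand = n
          while (!done && demand > 0 && next <= total) {
            subscriber.onNext(s"message-$next")
            next += 1
            demand -= 1
          }
          if (!done && next > total) {
            done = true
            subscriber.onComplete()
          }
        }

        override def cancel(): Unit = done = true
      })
  }

  // Hypothetical slow consumer: nothing arrives until it signals demand.
  final class SlowConsumer extends Flow.Subscriber[String] {
    val received = ArrayBuffer.empty[String]
    var completed = false
    private var subscription: Flow.Subscription = null

    override def onSubscribe(s: Flow.Subscription): Unit = subscription = s
    override def onNext(item: String): Unit = received += item
    override def onError(t: Throwable): Unit = throw t
    override def onComplete(): Unit = completed = true

    // "I can handle n more messages"
    def pull(n: Long): Unit = subscription.request(n)
  }
}
```

Subscribing a `SlowConsumer` to a `DemandDrivenSource(12)` delivers nothing until `pull(5)` is called, and each call releases at most five messages, which is the bounded behaviour the slide describes.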

Kafka: “Kafka is a distributed streaming system. It’s best suited to support fast, high-volume, and fault-tolerant data streaming platforms.” (Kafka Documentation)

When to use Alpakka Kafka over Kafka Streams?

1. To build back-pressure aware integrations
2. Complex Event Processing
3. A need to model the most complex of graphs

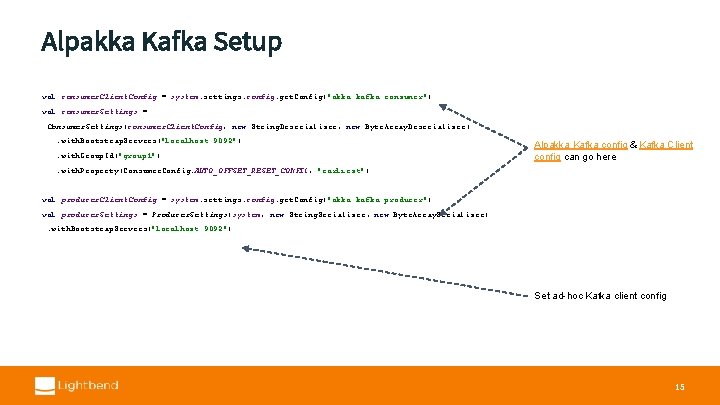

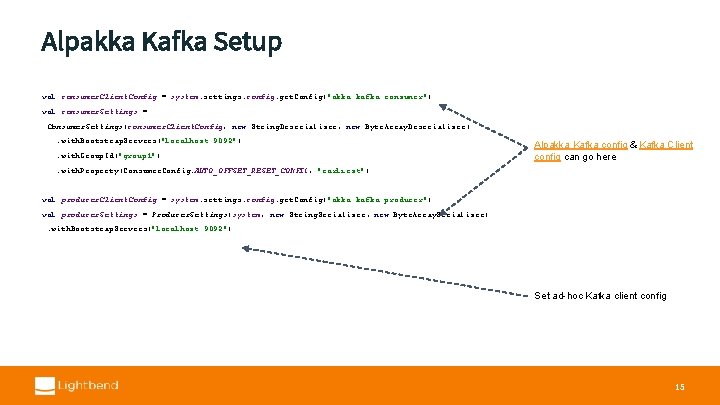

Alpakka Kafka Setup

    import akka.kafka.{ConsumerSettings, ProducerSettings}
    import org.apache.kafka.clients.consumer.ConsumerConfig
    import org.apache.kafka.common.serialization.{ByteArrayDeserializer, ByteArraySerializer, StringDeserializer, StringSerializer}

    // Assumes an ActorSystem named `system` is in scope.
    // Alpakka Kafka config & Kafka client config can go here.
    val consumerClientConfig = system.settings.config.getConfig("akka.kafka.consumer")
    val consumerSettings =
      ConsumerSettings(consumerClientConfig, new StringDeserializer, new ByteArrayDeserializer)
        .withBootstrapServers("localhost:9092")
        .withGroupId("group1")
        // Set ad-hoc Kafka client config.
        .withProperty(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, "earliest")

    val producerClientConfig = system.settings.config.getConfig("akka.kafka.producer")
    val producerSettings =
      ProducerSettings(system, new StringSerializer, new ByteArraySerializer)
        .withBootstrapServers("localhost:9092")

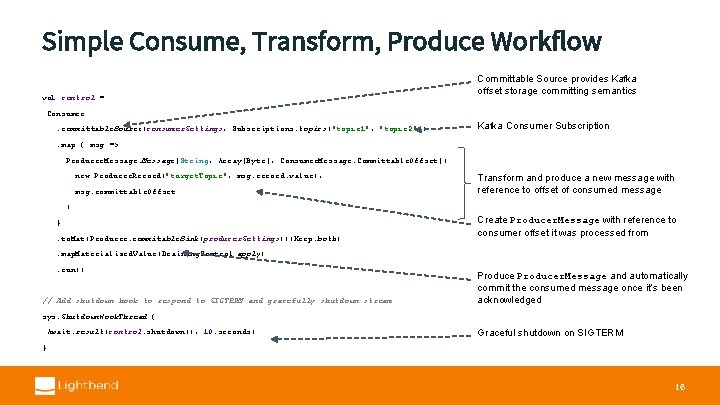

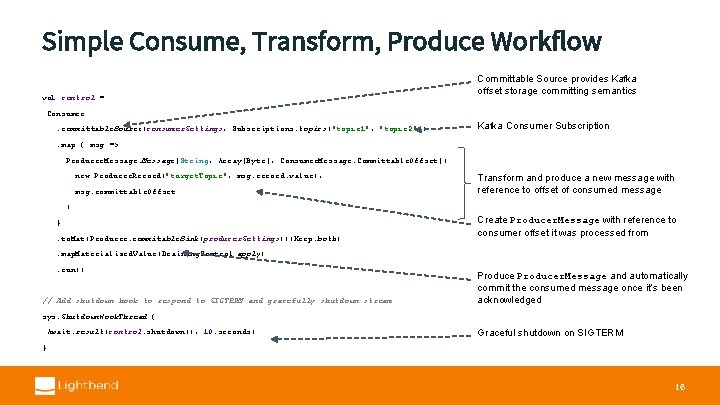

Simple Consume, Transform, Produce Workflow

    import akka.kafka.{ConsumerMessage, ProducerMessage, Subscriptions}
    import akka.kafka.scaladsl.{Consumer, Producer}
    import akka.kafka.scaladsl.Consumer.DrainingControl
    import akka.stream.scaladsl.Keep
    import org.apache.kafka.clients.producer.ProducerRecord
    import scala.concurrent.Await
    import scala.concurrent.duration._

    // Assumes consumerSettings, producerSettings and an implicit materializer are in scope.
    val control =
      Consumer
        // Committable Source provides Kafka offset storage committing semantics.
        // Subscriptions.topics is the Kafka consumer subscription.
        .committableSource(consumerSettings, Subscriptions.topics("topic1", "topic2"))
        // Transform and produce a new message with reference to the offset of the consumed message.
        .map { msg =>
          // Create a ProducerMessage with reference to the consumer offset it was processed from.
          ProducerMessage.Message[String, Array[Byte], ConsumerMessage.CommittableOffset](
            new ProducerRecord("targetTopic", msg.record.value),
            msg.committableOffset
          )
        }
        // Produce the ProducerMessage and automatically commit the consumed message once it has been acknowledged.
        .toMat(Producer.commitableSink(producerSettings))(Keep.both)
        .mapMaterializedValue(DrainingControl.apply)
        .run()

    // Add a shutdown hook to respond to SIGTERM and gracefully shut down the stream.
    sys.ShutdownHookThread {
      Await.result(control.shutdown(), 10.seconds)
    }

Transactional “Exactly-Once”

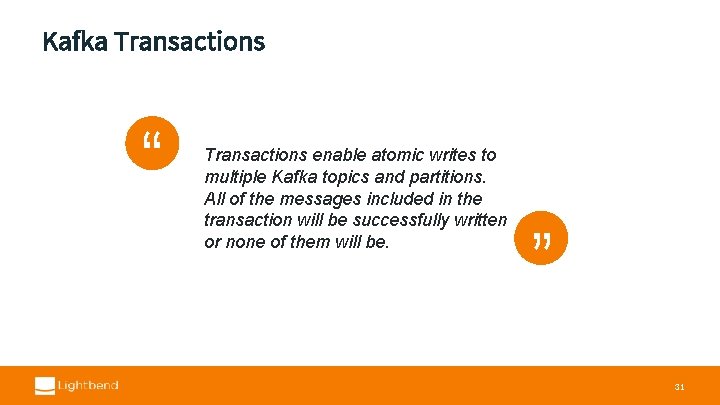

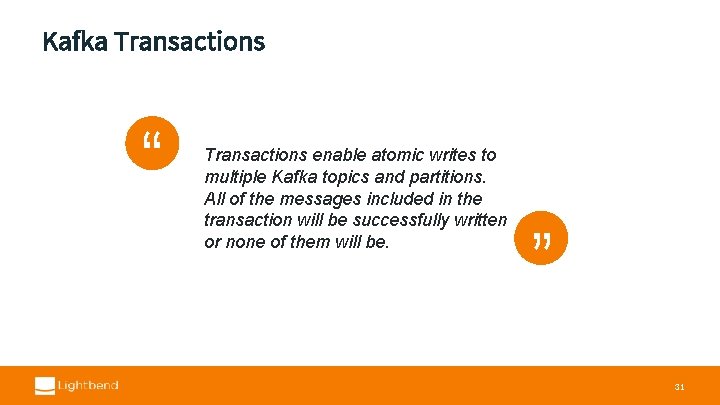

Kafka Transactions: “Transactions enable atomic writes to multiple Kafka topics and partitions. All of the messages included in the transaction will be successfully written or none of them will be.”

Message Delivery Semantics

- At most once
- At least once
- “Exactly once”
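The difference between the first two semantics comes down to whether the consumer commits its offset before or after processing. The sketch below is a plain in-memory simulation with no Kafka client involved; `consume`, `commitFirst`, and `crashAtOffset` are hypothetical names, and a single crash is injected between the two steps to show the effect.

```scala
object DeliverySemanticsSketch {

  // Returns the messages whose side effect was applied, in order,
  // including any duplicates caused by reprocessing after the crash.
  def consume(messages: Vector[String], commitFirst: Boolean, crashAtOffset: Int): Vector[String] = {
    var committed = 0                       // offset to resume from after a restart
    var effects = Vector.empty[String]      // side effects applied so far
    var crashPending = true                 // the simulated crash happens only once
    var offset = 0

    while (offset < messages.size) {
      val crashNow = crashPending && offset == crashAtOffset

      // First step: commit (at most once) or process (at least once).
      if (commitFirst) committed = offset + 1
      else effects :+= messages(offset)

      if (crashNow) {
        // Crash between the two steps, then restart from the last committed offset.
        crashPending = false
        offset = committed
      } else {
        // Second step: process (at most once) or commit (at least once).
        if (commitFirst) effects :+= messages(offset)
        else committed = offset + 1
        offset += 1
      }
    }
    effects
  }
}
```

With messages `a, b, c` and a crash at offset 1, committing first loses `b`, while committing last processes `b` twice. Neither achieves “exactly once” on its own, which is the gap Kafka transactions aim to close.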

Exactly Once Delivery vs Exactly Once Processing: “Exactly-once message delivery is impossible between two parties where failures of communication are possible.” (Two Generals/Byzantine Generals problem)

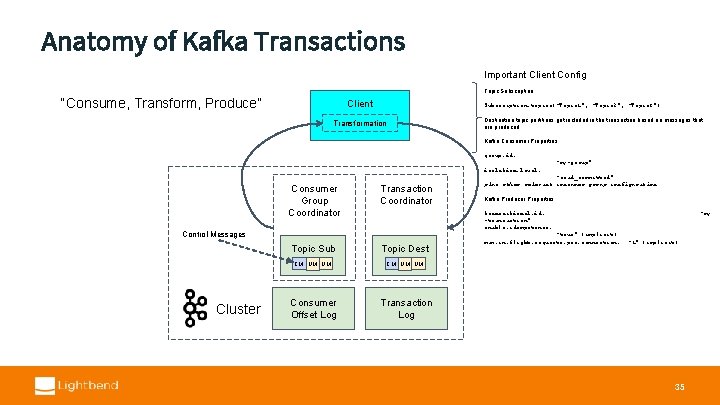

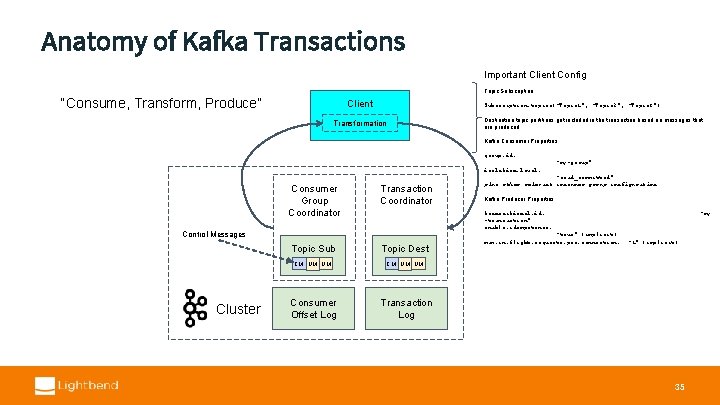

Anatomy of Kafka Transactions

“Consume, Transform, Produce” client:

- Topic subscription: Subscriptions.topics(“Topic1”, “Topic2”, “Topic3”)
- Transformation
- Destination topic partitions get included in the transaction based on messages that are produced.

Important client config:

- Kafka Consumer Properties: group.id: “my-group”; isolation.level: “read_committed”; plus other relevant consumer group configuration
- Kafka Producer Properties: transactional.id: “my-transaction”; enable.idempotence: “true” (implicit); max.in.flight.requests.per.connection: “1” (implicit)

Cluster: Consumer Group Coordinator (Consumer Offset Log), Transaction Coordinator (Transaction Log), the subscribed topic (Topic Sub) and the destination topic (Topic Dest). Partitions hold control messages (CM) and user messages (UM).

Kafka Features That Enable Transactions

1. Idempotent producer
2. Multiple partition atomic writes
3. Consumer read isolation level

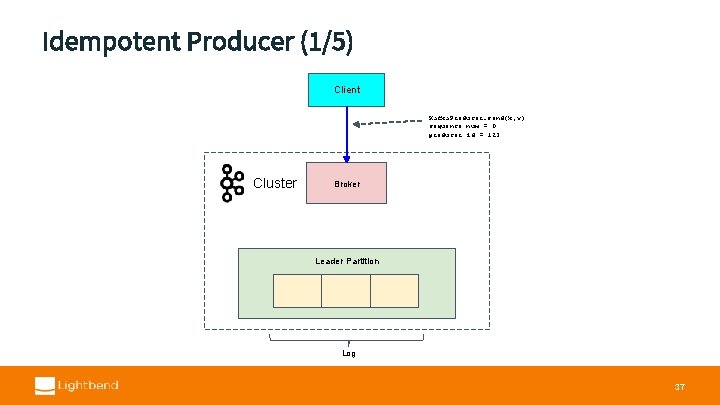

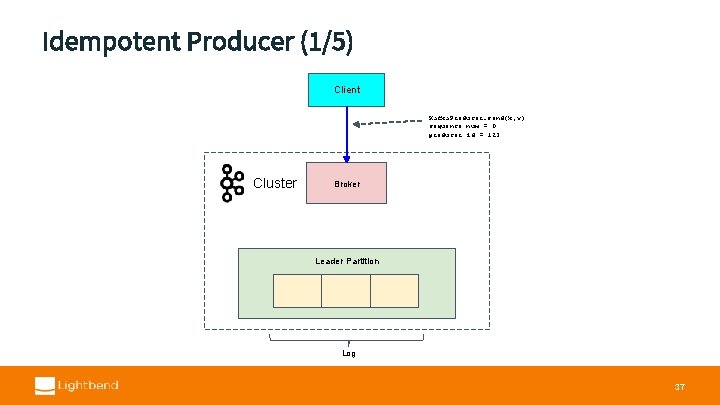

Idempotent Producer (1/5): the client calls KafkaProducer.send(k, v) with sequence num = 0 and producer id = 123. The record is sent to the broker in the cluster that hosts the leader partition log.

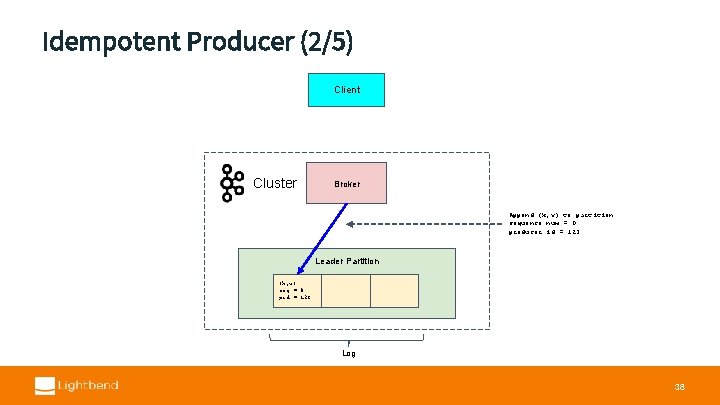

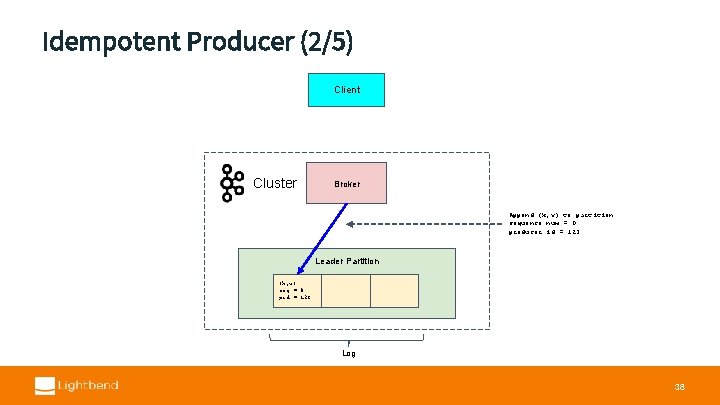

Idempotent Producer (2/5): the broker appends (k, v) to the leader partition log, stored with seq = 0 and pid = 123.

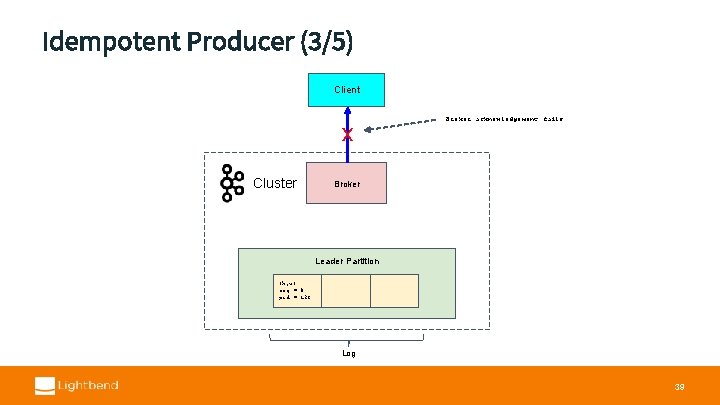

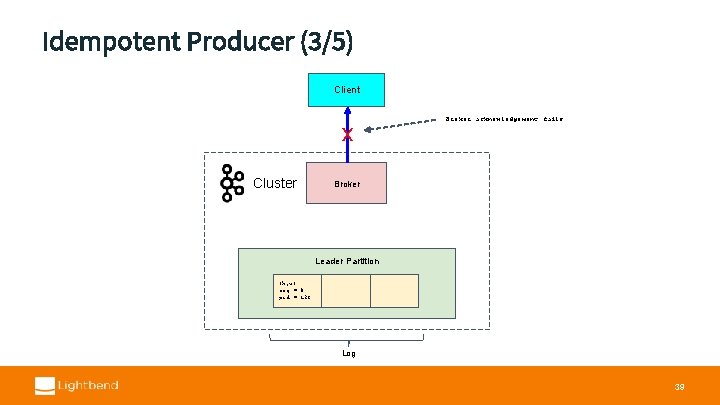

Idempotent Producer (3/5): the broker acknowledgement fails (x) for KafkaProducer.send(k, v) with sequence num = 0 and producer id = 123. The leader partition log still holds (k, v) with seq = 0 and pid = 123.

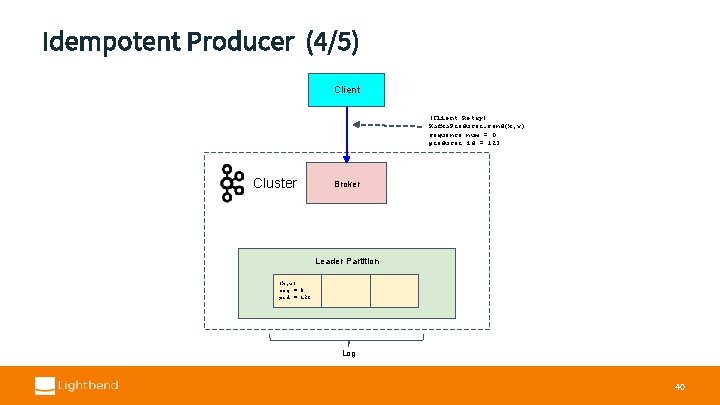

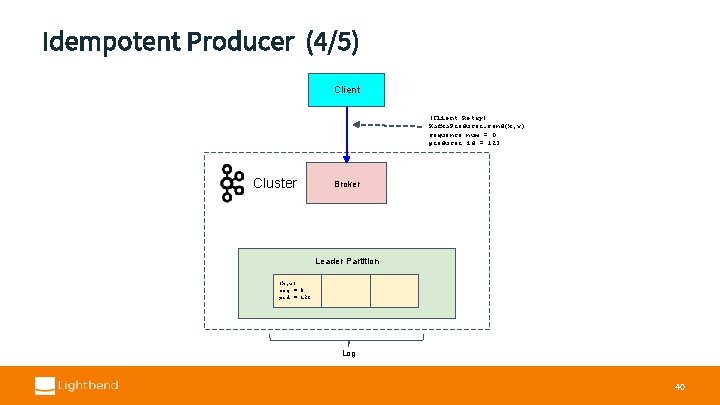

Idempotent Producer (4/5): the client retries KafkaProducer.send(k, v) with the same sequence num = 0 and producer id = 123. The leader partition log already holds (k, v) with seq = 0 and pid = 123.

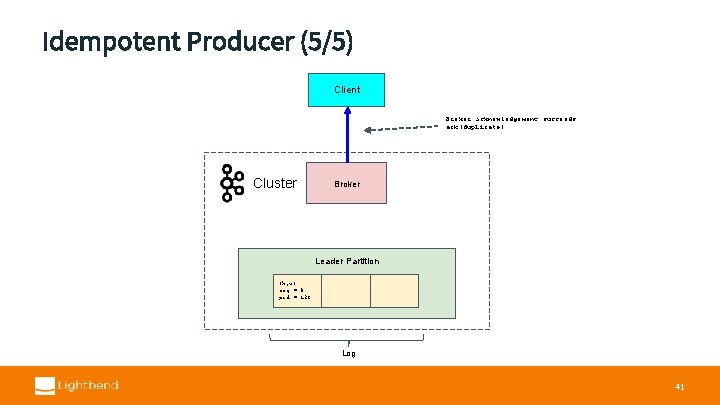

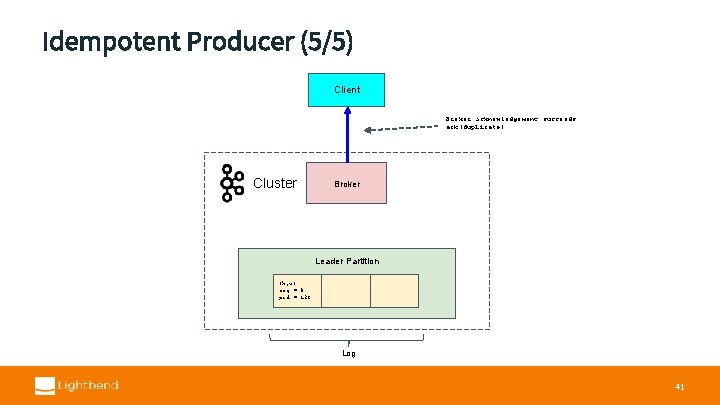

Idempotent Producer (5/5): the broker answers the retried KafkaProducer.send(k, v) (sequence num = 0, producer id = 123) with ack(duplicate), so the broker acknowledgement succeeds. The leader partition log still holds a single (k, v) with seq = 0 and pid = 123.
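The five steps above amount to broker-side de-duplication keyed on producer id and sequence number. The following is a simplified in-memory sketch under that assumption; `PartitionLeader`, `Record`, and the `Ack` values are hypothetical names, and a real broker performs stricter sequence checks than shown here.

```scala
import scala.collection.mutable

object IdempotentProducerSketch {

  final case class Record(key: String, value: String, producerId: Long, sequence: Int)

  sealed trait Ack
  case object Appended extends Ack
  case object Duplicate extends Ack

  // Hypothetical stand-in for the broker that hosts the leader partition log.
  final class PartitionLeader {
    val log = mutable.ArrayBuffer.empty[Record]

    // Highest sequence number appended so far, per producer id.
    private val lastSequence = mutable.Map.empty[Long, Int]

    def append(record: Record): Ack =
      if (lastSequence.get(record.producerId).exists(_ >= record.sequence)) {
        // Already in the log: acknowledge again without appending.
        Duplicate
      } else {
        log += record
        lastSequence(record.producerId) = record.sequence
        Appended
      }
  }
}
```

Sending the same record twice, as in the retry on slide 4/5, leaves one entry in the log and returns `Duplicate` for the second attempt.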

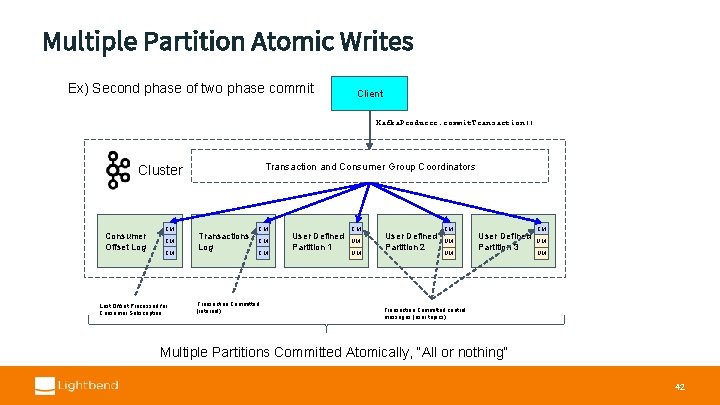

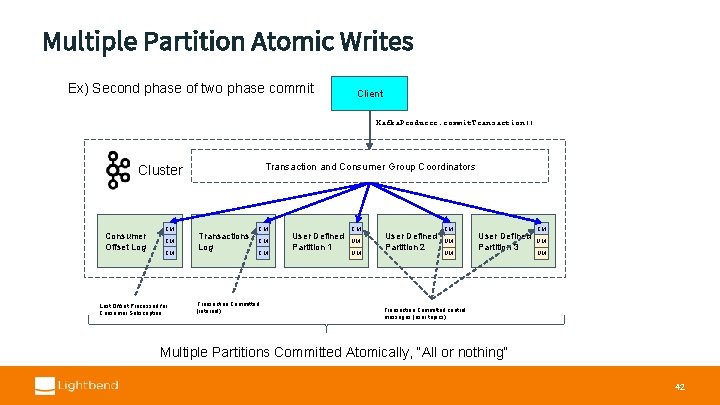

Multiple Partition Atomic Writes. Example: second phase of the two-phase commit.

- The client calls KafkaProducer.commitTransaction() against the Transaction and Consumer Group Coordinators in the cluster.
- Consumer Offset Log: control messages (CM) record the last offset processed for the consumer subscription.
- Transactions Log: control messages (CM) record that the transaction is committed (internal).
- User Defined Partitions 1, 2 and 3 (each shown as CM, UM, UM): transaction committed control messages (user topics).
- Multiple partitions are committed atomically: “all or nothing”.

Consumer Read Isolation Level: the client consumes from User Defined Partitions 1, 2 and 3 in the cluster (each shown as CM, UM, UM). Kafka Consumer Properties: isolation.level: “read_committed”
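How control messages and the read isolation level interact can be sketched with a toy partition log. This is a simplified model, not the Kafka wire format or client: `UserMessage`, `ControlMessage`, and both read functions are hypothetical, and the model assumes a reader in committed mode stops at the first message of a transaction that is still open.

```scala
object ReadIsolationSketch {

  sealed trait Entry
  // UM: a user message written inside transaction `txId`.
  final case class UserMessage(txId: Int, value: String) extends Entry
  // CM: a control message marking transaction `txId` as committed or aborted.
  final case class ControlMessage(txId: Int, committed: Boolean) extends Entry

  // Sees every user message, whatever the state of its transaction.
  def readUncommitted(log: Vector[Entry]): Vector[String] =
    log.collect { case UserMessage(_, value) => value }

  // Sees only user messages from committed transactions.
  def readCommitted(log: Vector[Entry]): Vector[String] = {
    val outcome: Map[Int, Boolean] =
      log.collect { case ControlMessage(txId, committed) => txId -> committed }.toMap

    // Stop at the first user message whose transaction has no control message yet.
    val stable = log.takeWhile {
      case UserMessage(txId, _) => outcome.contains(txId)
      case _: ControlMessage    => true
    }

    // Drop messages from aborted transactions.
    stable.collect { case UserMessage(txId, value) if outcome(txId) => value }
  }
}
```

In this model a committed transaction's messages become visible together once its control message is written, which mirrors the “all or nothing” behaviour described for multiple partition atomic writes.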

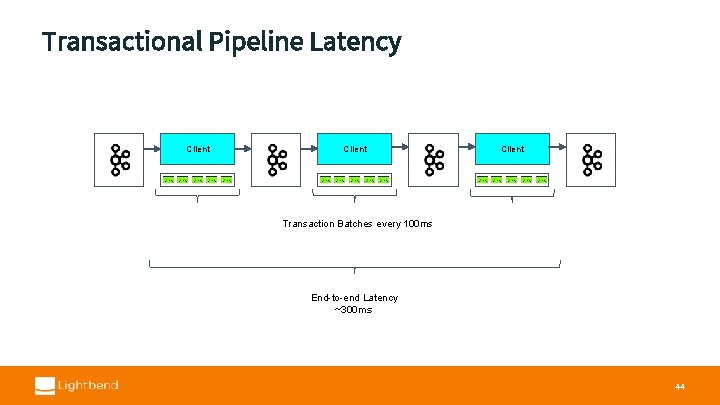

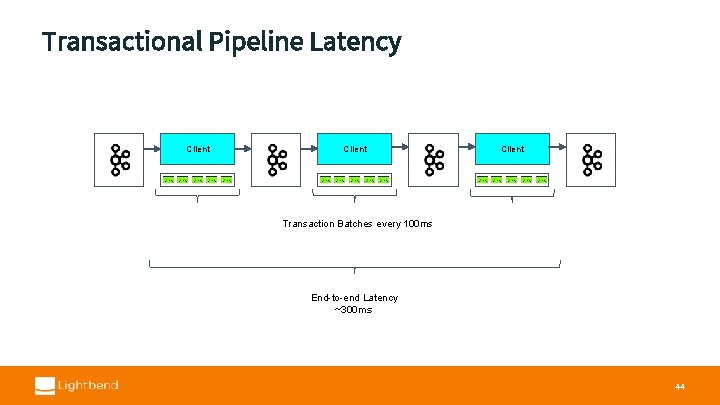

Transactional Pipeline Latency: the client commits transaction batches every 100 ms; end-to-end latency is ~300 ms.

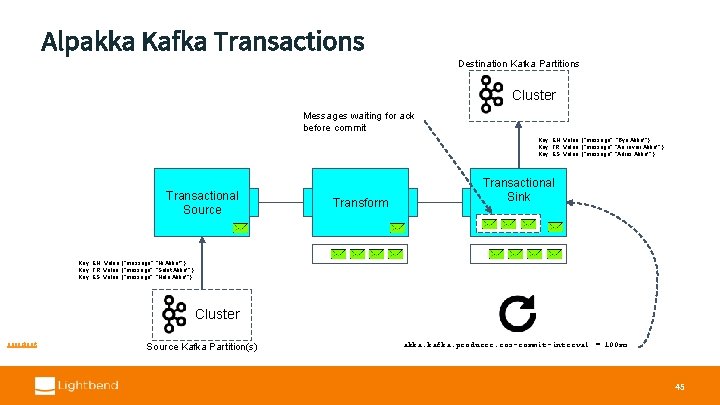

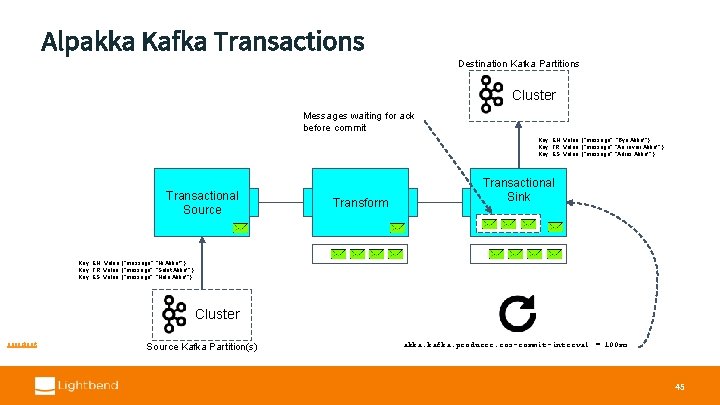

Alpakka Kafka Transactions: messages move from the source Kafka partition(s) through Transactional Source, Transform and Transactional Sink to the destination Kafka partitions. Messages wait for an ack before commit.

Example messages shown:

- Key: EN, Value: {“message”: “Hi Akka!”}
- Key: FR, Value: {“message”: “Salut Akka!”}
- Key: ES, Value: {“message”: “Hola Akka!”}
- Key: EN, Value: {“message”: “Bye Akka!”}
- Key: FR, Value: {“message”: “Au revoir Akka!”}
- Key: ES, Value: {“message”: “Adiós Akka!”}

Config: akka.kafka.producer.eos-commit-interval = 100ms

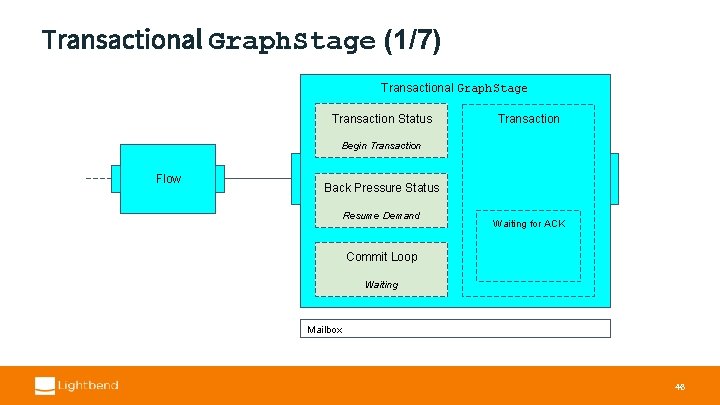

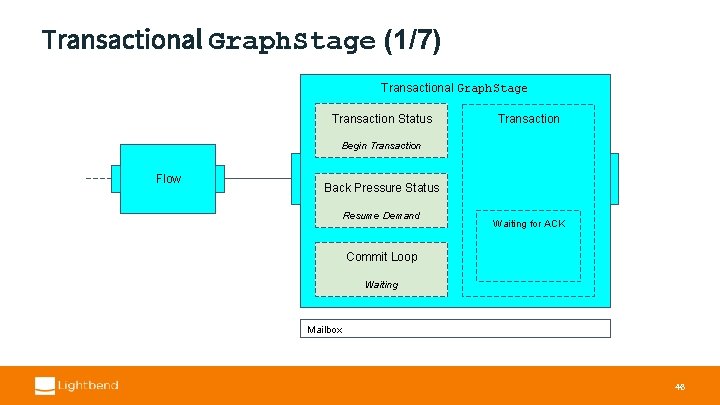

Transactional GraphStage (1/7). Transaction status: Begin Transaction. Back-pressure status: Resume Demand. Commit loop: waiting. Diagram components: transaction flow, waiting for ACK, commit loop, mailbox.

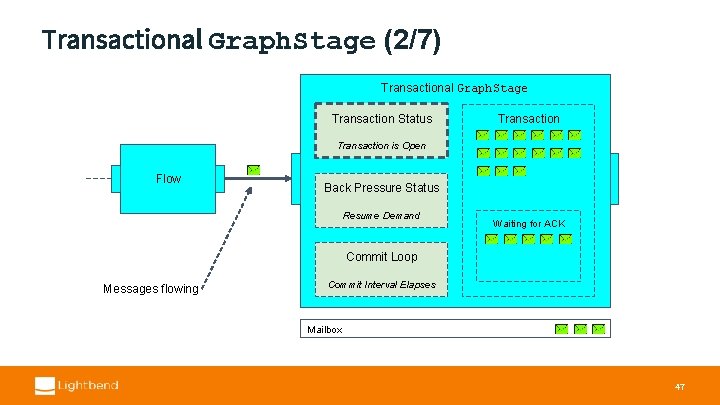

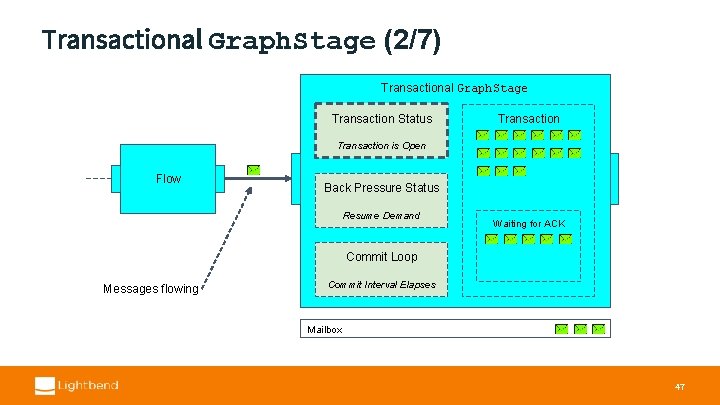

Transactional GraphStage (2/7). Transaction status: Transaction is Open. Back-pressure status: Resume Demand. Messages flowing. Commit loop: commit interval elapses.

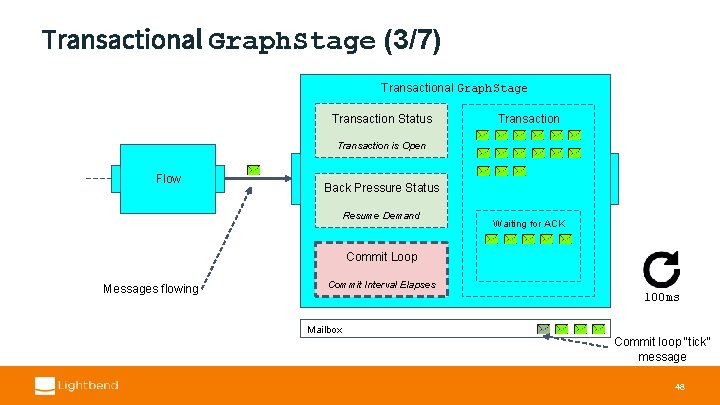

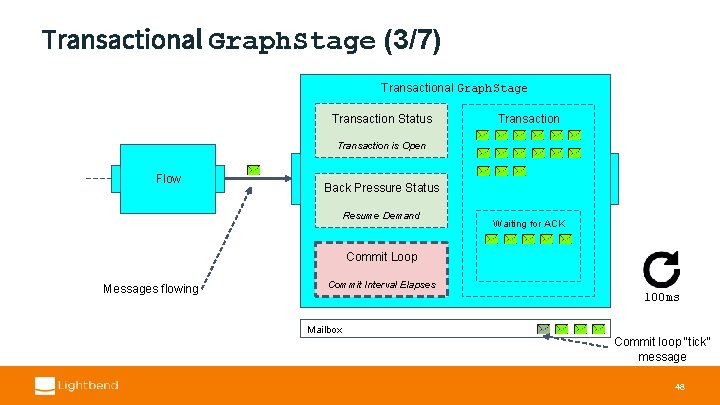

Transactional GraphStage (3/7). Transaction status: Transaction is Open. Back-pressure status: Resume Demand. Messages flowing. Commit loop: commit interval elapses (100 ms) and a commit loop “tick” message is placed in the mailbox.

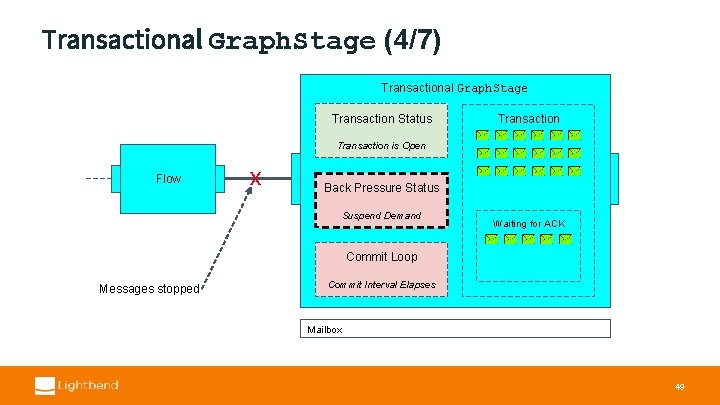

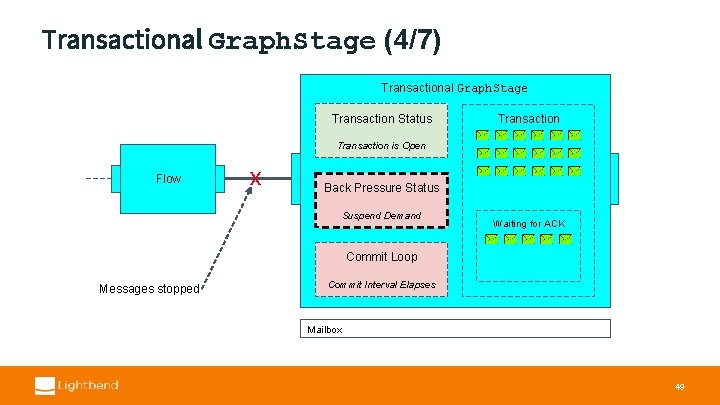

Transactional GraphStage (4/7). Transaction status: Transaction is Open. Back-pressure status: Suspend Demand (x). Messages stopped. Commit loop: commit interval elapses.

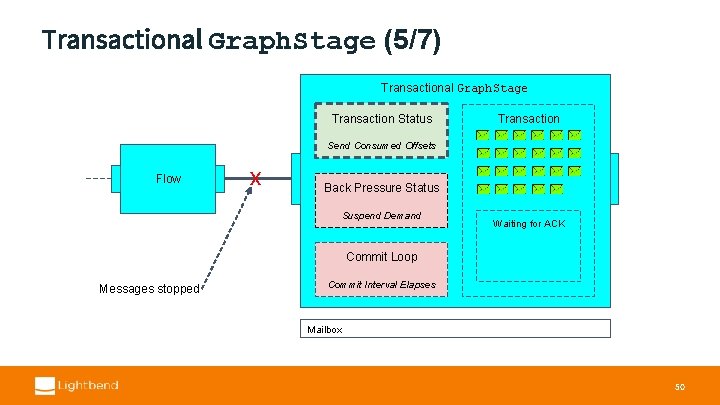

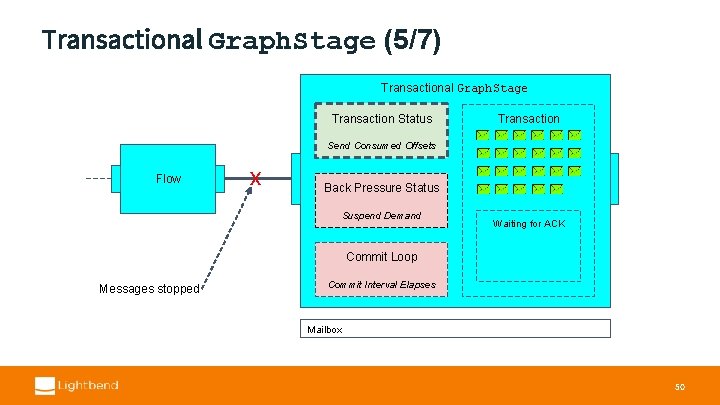

Transactional GraphStage (5/7). Transaction status: Send Consumed Offsets. Back-pressure status: Suspend Demand (x). Messages stopped. Commit loop: commit interval elapses.

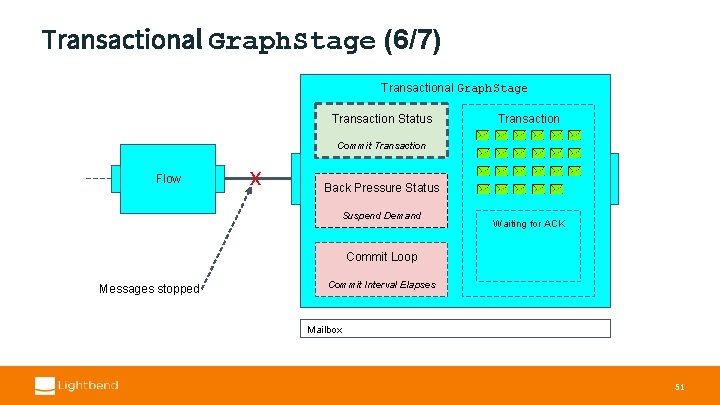

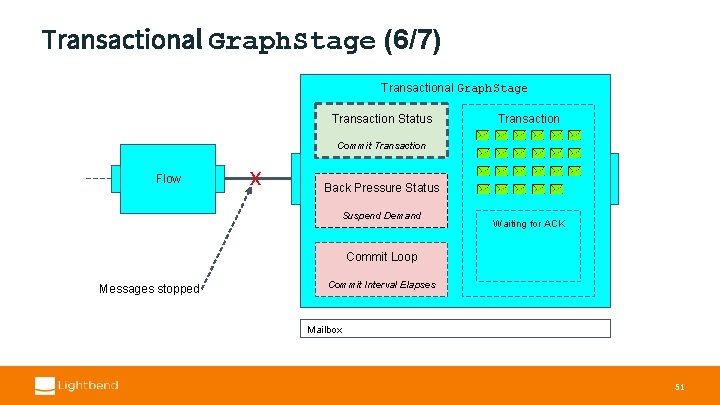

Transactional GraphStage (6/7). Transaction status: Commit Transaction. Back-pressure status: Suspend Demand (x). Messages stopped. Commit loop: commit interval elapses.

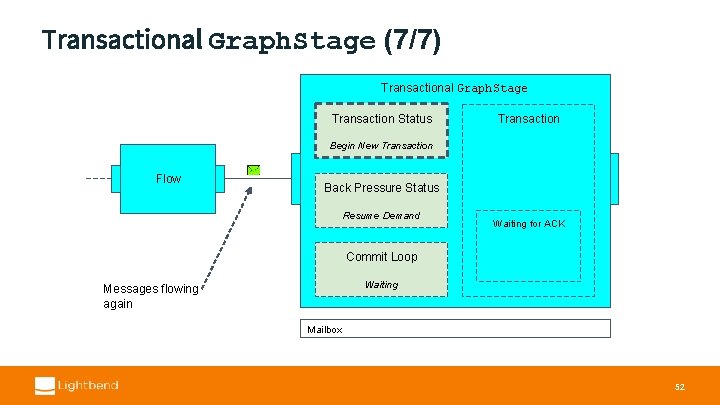

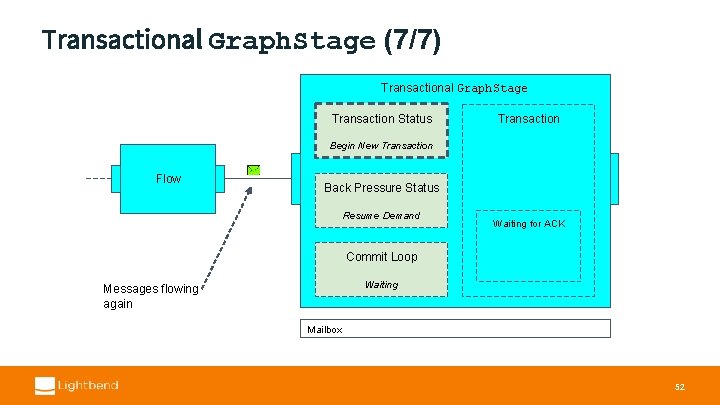

Transactional GraphStage (7/7). Transaction status: Begin New Transaction. Back-pressure status: Resume Demand. Messages flowing again. Commit loop: waiting.
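The seven steps above can be read as a small state machine: a commit tick suspends demand, the stage waits for outstanding acks, then commits and resumes demand. The sketch below models only that reading of the slides and is not the Alpakka Kafka GraphStage source; `Stage`, the event names, and the choice to ignore a tick on an empty batch are assumptions.

```scala
object TransactionalStageSketch {

  sealed trait Event
  case object MessageProduced extends Event // a message was handed to the producer
  case object MessageAcked extends Event    // the broker acknowledged one message
  case object CommitTick extends Event      // the commit interval elapsed

  final case class Stage(
      demandSuspended: Boolean = false,            // back-pressure status
      awaitingAck: Int = 0,                        // messages waiting for ACK
      batched: Int = 0,                            // messages in the open transaction
      committedBatches: Vector[Int] = Vector.empty // sizes of committed transactions
  ) {

    def handle(event: Event): Stage = event match {
      case MessageProduced if !demandSuspended =>
        copy(awaitingAck = awaitingAck + 1, batched = batched + 1)
      case MessageProduced =>
        this // demand is suspended, so nothing flows
      case CommitTick if batched == 0 =>
        this // assumption: nothing to commit, keep the transaction open
      case CommitTick =>
        copy(demandSuspended = true).maybeCommit
      case MessageAcked =>
        copy(awaitingAck = math.max(0, awaitingAck - 1)).maybeCommit
    }

    // Once demand is suspended and every message is acked: send offsets,
    // commit, begin a new transaction and resume demand.
    private def maybeCommit: Stage =
      if (demandSuspended && awaitingAck == 0)
        copy(demandSuspended = false, batched = 0, committedBatches = committedBatches :+ batched)
      else this
  }
}
```

Folding a sequence of events over `Stage()` shows the suspend, commit, resume cycle; a message offered while demand is suspended is ignored in this model, standing in for back-pressure.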

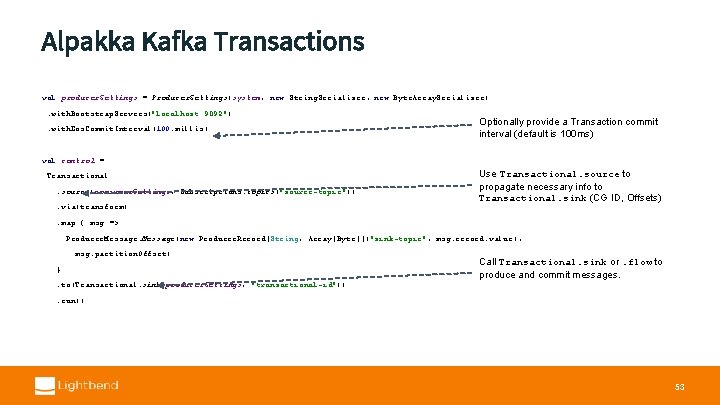

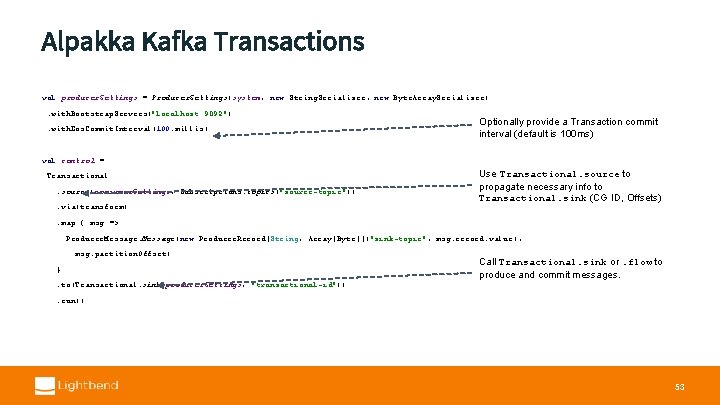

Alpakka Kafka Transactions

    // Assumes system, consumerSettings, transform and an implicit materializer are in scope.
    val producerSettings =
      ProducerSettings(system, new StringSerializer, new ByteArraySerializer)
        .withBootstrapServers("localhost:9092")
        // Optionally provide a transaction commit interval (default is 100 ms).
        .withEosCommitInterval(100.millis)

    val control =
      Transactional
        // Use Transactional.source to propagate necessary info to Transactional.sink (CG ID, offsets).
        .source(consumerSettings, Subscriptions.topics("source-topic"))
        .via(transform)
        .map { msg =>
          ProducerMessage.Message(
            new ProducerRecord[String, Array[Byte]]("sink-topic", msg.record.value),
            msg.partitionOffset
          )
        }
        // Call Transactional.sink or .flow to produce and commit messages.
        .to(Transactional.sink(producerSettings, "transactional-id"))
        .run()

New in Alpakka Kafka 1.0-M1

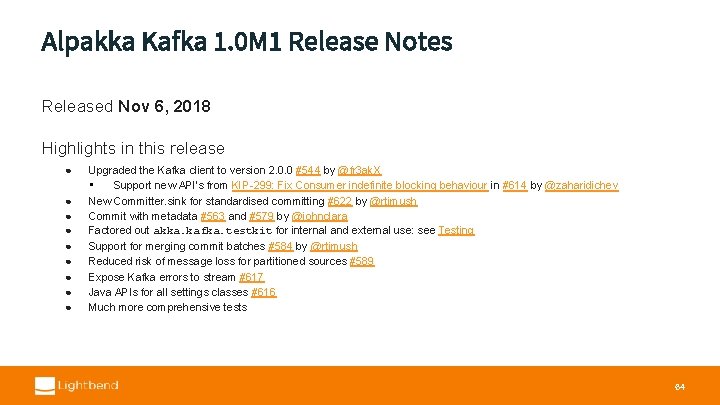

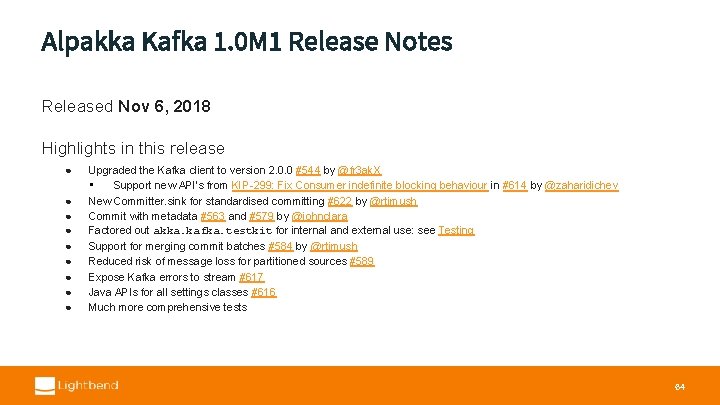

Alpakka Kafka 1.0-M1 Release Notes

Released Nov 6, 2018

Highlights in this release

- Upgraded the Kafka client to version 2.0.0 #544 by @fr3akX
  - Support new APIs from KIP-299: Fix Consumer indefinite blocking behaviour in #614 by @zaharidichev
- New Committer.sink for standardised committing #622 by @rtimush
- Commit with metadata #563 and #579 by @johnclara
- Factored out akka.kafka.testkit for internal and external use: see Testing
- Support for merging commit batches #584 by @rtimush
- Reduced risk of message loss for partitioned sources #589
- Expose Kafka errors to stream #617
- Java APIs for all settings classes #616
- Much more comprehensive tests

Conclusion


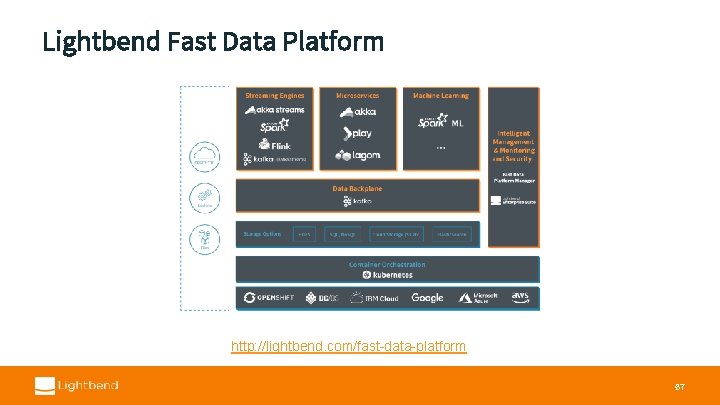

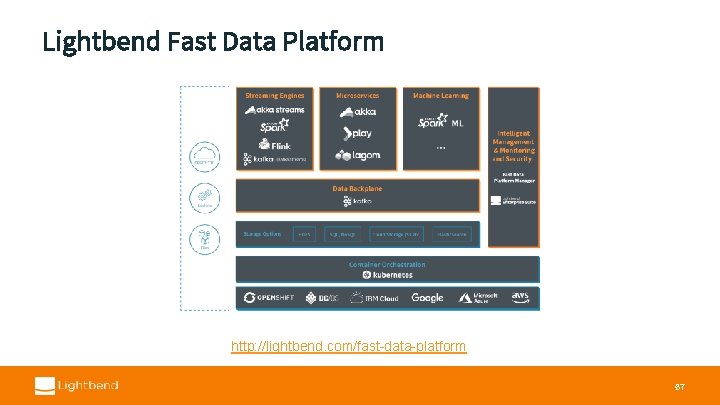

Lightbend Fast Data Platform: http://lightbend.com/fast-data-platform

Free eBook! https://bit.ly/2J9xmZm

Thank You! Sean Glover, @seg1o, in/seanaglover, sean.glover@lightbend.com