Fast and Efficient Implementation of Convolutional Neural Networks


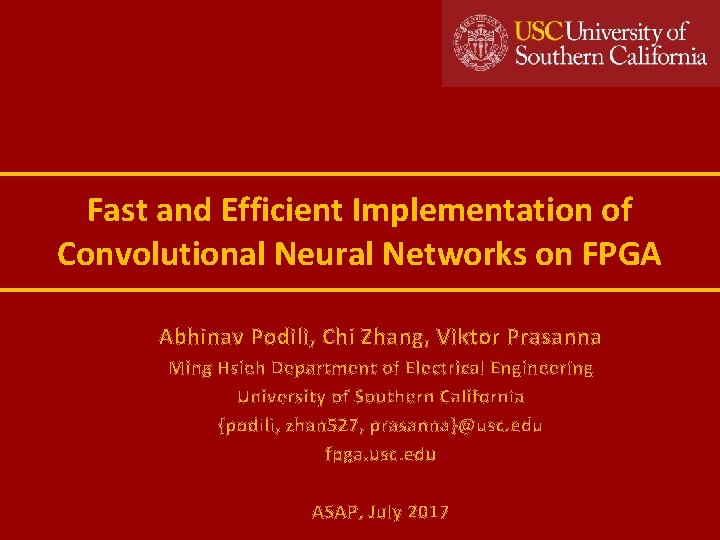

Fast and Efficient Implementation of Convolutional Neural Networks on FPGA
Abhinav Podili, Chi Zhang, Viktor Prasanna
Ming Hsieh Department of Electrical Engineering, University of Southern California
{podili, zhan527, prasanna}@usc.edu
fpga.usc.edu
ASAP, July 2017

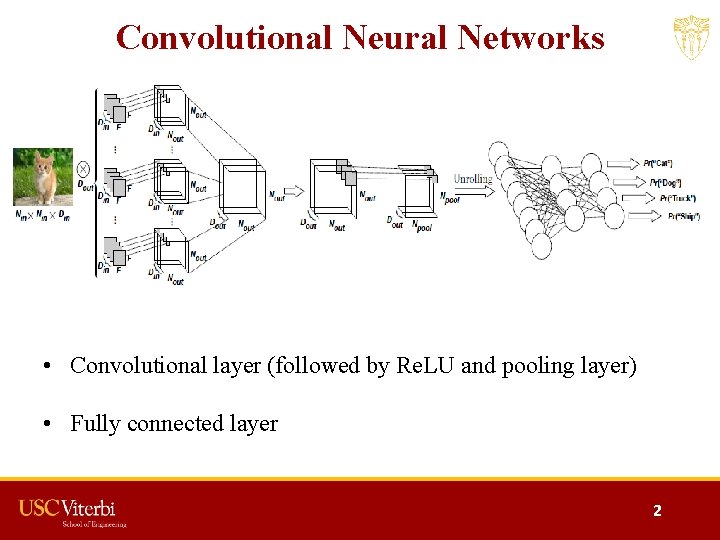

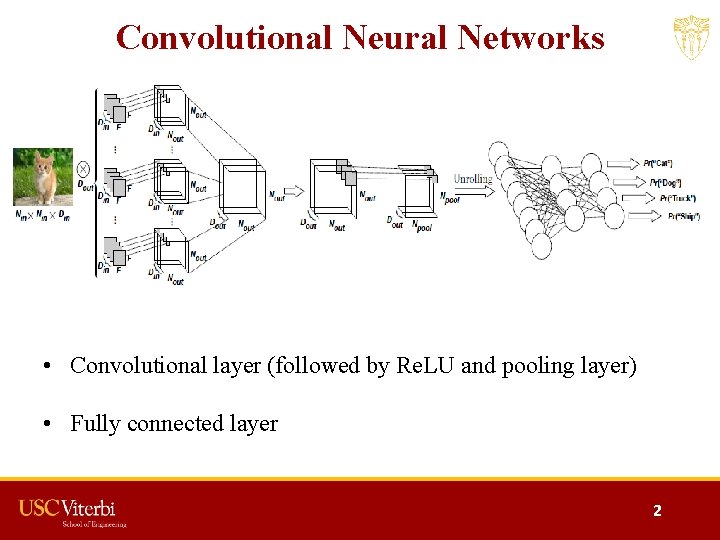

Convolutional Neural Networks
• Convolutional layer (followed by ReLU and pooling layers)
• Fully connected layer

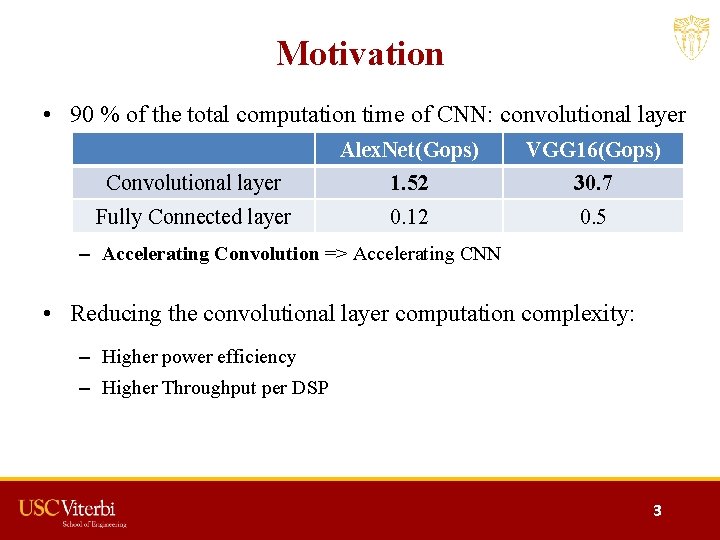

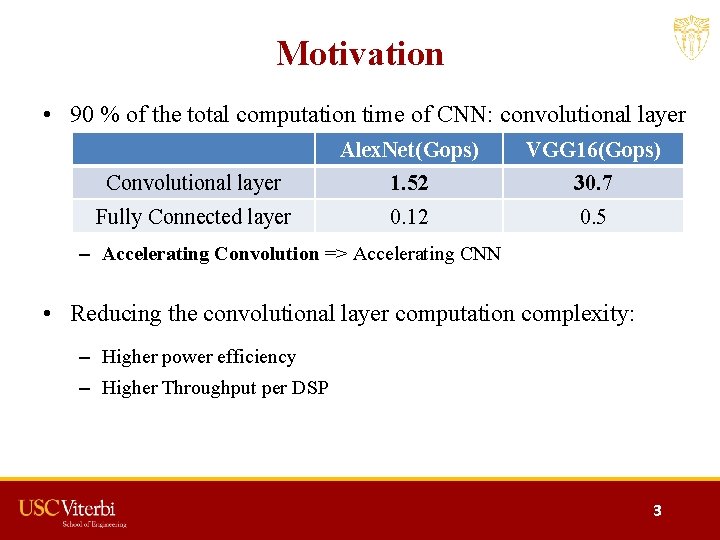

Motivation
• Convolutional layers account for about 90% of the total CNN computation time
  – Accelerating convolution => accelerating the CNN

| Layer | AlexNet (GOPs) | VGG16 (GOPs) |
|---|---|---|
| Convolutional layers | 1.52 | 30.7 |
| Fully connected layers | 0.12 | 0.5 |

• Reducing the computational complexity of the convolutional layers gives:
  – Higher power efficiency
  – Higher throughput per DSP
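As a quick check on the table, the share of work in the convolutional layers can be computed directly from the GOP counts. This is a minimal sketch that uses operation counts as a proxy for computation time, which is an assumption rather than a measurement.

```python
# Share of convolutional-layer operations, computed from the GOP counts in the table.
# Assumption: operation count is used as a proxy for computation time.
GOPS = {
    "AlexNet": {"conv": 1.52, "fc": 0.12},
    "VGG16": {"conv": 30.7, "fc": 0.5},
}


def conv_share(model: str) -> float:
    """Fraction of total (conv + FC) operations spent in convolutional layers."""
    ops = GOPS[model]
    return ops["conv"] / (ops["conv"] + ops["fc"])


if __name__ == "__main__":
    for name in GOPS:
        print(f"{name}: {conv_share(name):.1%} of operations are in conv layers")
```

AlexNet comes out at about 92.7% and VGG16 at about 98.4%, both above the 90% figure on the slide.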

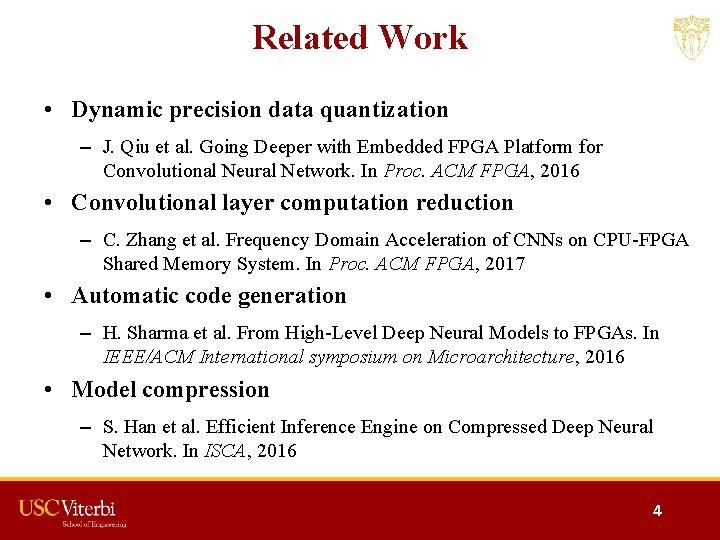

Related Work
• Dynamic-precision data quantization
  – J. Qiu et al., "Going Deeper with Embedded FPGA Platform for Convolutional Neural Network," in Proc. ACM FPGA, 2016
• Convolutional layer computation reduction
  – C. Zhang et al., "Frequency Domain Acceleration of CNNs on CPU-FPGA Shared Memory System," in Proc. ACM FPGA, 2017
• Automatic code generation
  – H. Sharma et al., "From High-Level Deep Neural Models to FPGAs," in Proc. IEEE/ACM International Symposium on Microarchitecture, 2016
• Model compression
  – S. Han et al., "Efficient Inference Engine on Compressed Deep Neural Network," in Proc. ISCA, 2016

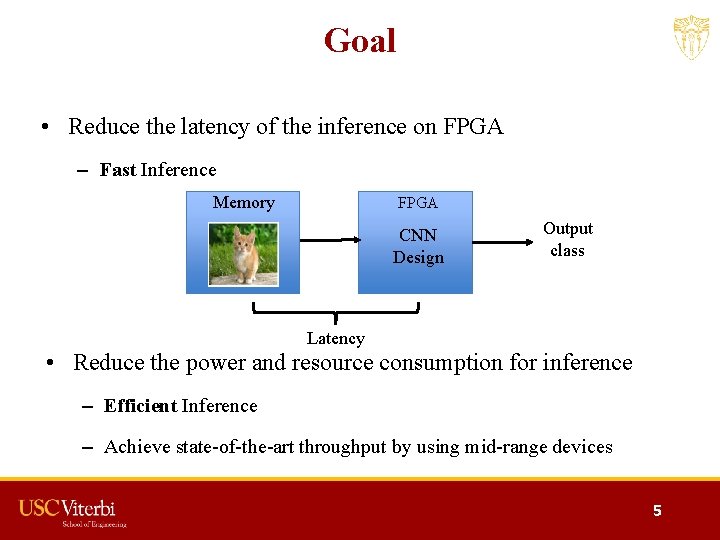

Goal
• Reduce the latency of inference on the FPGA (fast inference)
  [Figure: memory → FPGA CNN design → output class, with the inference latency marked]
• Reduce the power and resource consumption of inference (efficient inference)
  – Achieve state-of-the-art throughput using mid-range devices

![Results Summary (VGG16)](https://slidetodoc.com/presentation_image/1f60bdc70f8674f2551b3e5c560eea12/image-6.jpg)

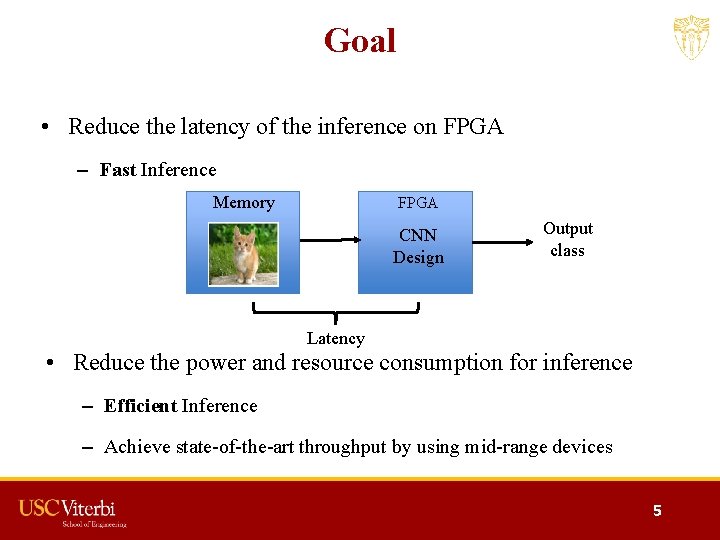

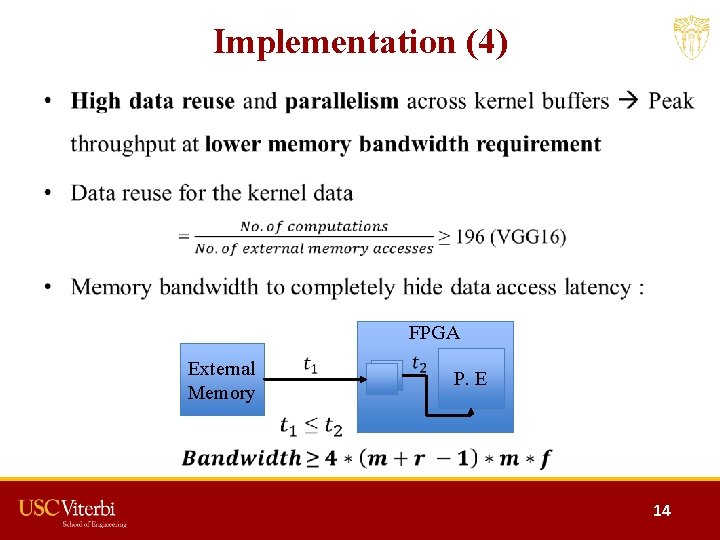

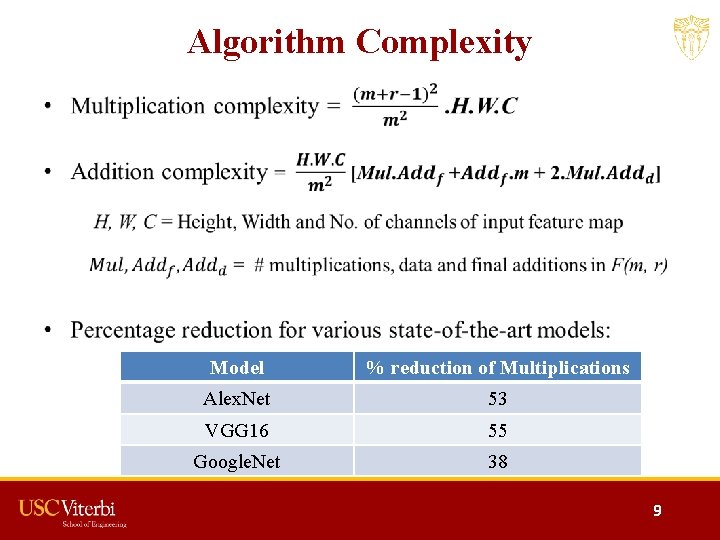

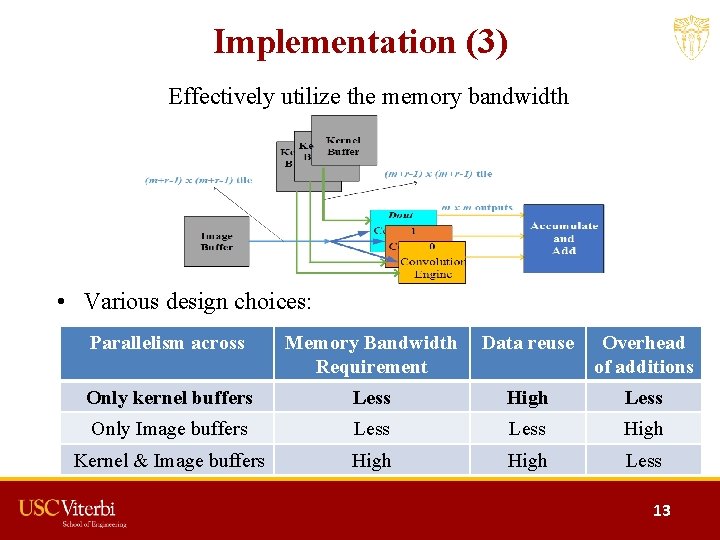

Results Summary

| Metric (VGG16) | [1] | Our work |
|---|---|---|
| Power (W) | 9.63 | 8.04 |
| Resource efficiency (GOP/s/multiplier) | 0.24 | 0.89 |
| Memory efficiency (GOP/s/MB) | 88.17 | 208.3 |
| Power efficiency (GOP/s/W) | 19.5 | 28.5 |

[1] J. Qiu et al., "Going Deeper with Embedded FPGA Platform for Convolutional Neural Network," ACM FPGA, 2016.

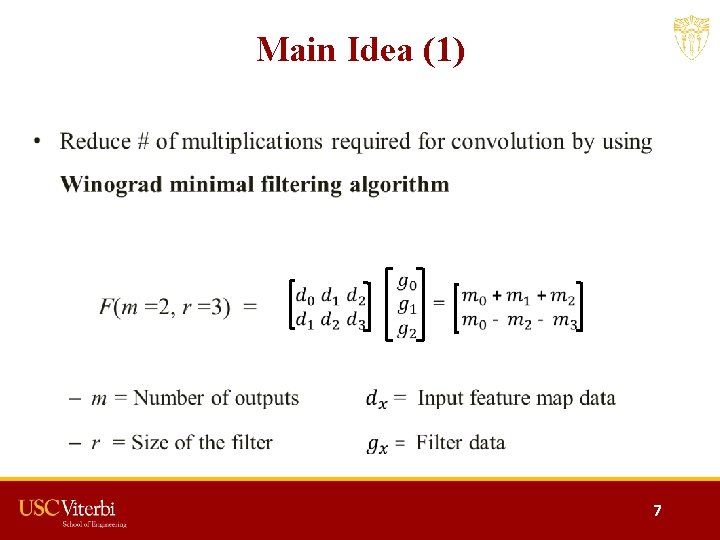

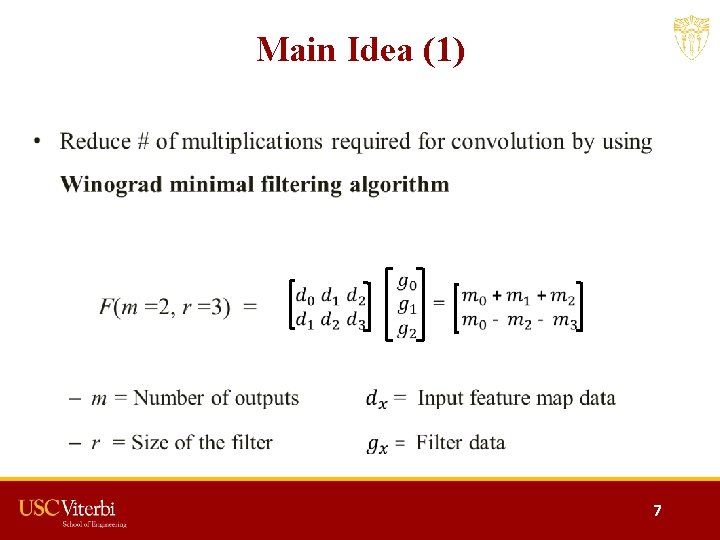

Main Idea (1)

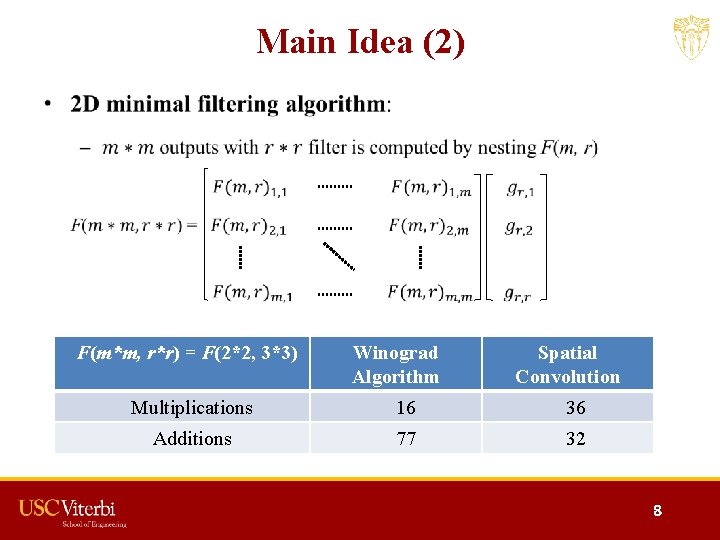

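Main Idea (2)
• Winograd algorithm F(m×m, r×r): computes an m×m output tile with an r×r filter; here F(2×2, 3×3)

| Operations per 2×2 output tile | Winograd algorithm | Spatial convolution |
|---|---|---|
| Multiplications | 16 | 36 |
| Additions | 77 | 32 |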
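To make F(2×2, 3×3) concrete, here is a minimal pure-Python sketch of one tile. It assumes the transform matrices B^T, G and A^T from Lavin and Gray's formulation (the slides do not list the matrices, so the exact variant is an assumption) and computes Y = A^T[(G g G^T) ⊙ (B^T d B)]A for a 4×4 input tile d and a 3×3 kernel g. The elementwise product is where the 16 multiplications come from; B^T and A^T contain only 0 and ±1, and the kernel transform can be precomputed once per kernel. The result is checked against direct spatial convolution (cross-correlation, as used in CNN layers).

```python
# Winograd minimal filtering F(2x2, 3x3) for one 4x4 input tile and one 3x3 kernel.
# Assumption: Lavin & Gray transform matrices; Fractions keep the arithmetic exact.
from fractions import Fraction

H = Fraction(1, 2)
BT = [[1, 0, -1, 0],      # input transform B^T (4x4)
      [0, 1, 1, 0],
      [0, -1, 1, 0],
      [0, 1, 0, -1]]
G = [[1, 0, 0],           # kernel transform G (4x3)
     [H, H, H],
     [H, -H, H],
     [0, 0, 1]]
AT = [[1, 1, 1, 0],       # output transform A^T (2x4)
      [0, 1, -1, -1]]


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def transpose(x):
    return [list(row) for row in zip(*x)]


def winograd_2x2_3x3(d, g):
    """2x2 output tile of correlating the 4x4 tile d with the 3x3 kernel g."""
    U = matmul(matmul(G, g), transpose(G))       # G g G^T, 4x4 (precomputable per kernel)
    V = matmul(matmul(BT, d), transpose(BT))     # B^T d B, 4x4 (0/+-1 coefficients)
    M = [[U[i][j] * V[i][j] for j in range(4)] for i in range(4)]  # 16 multiplications
    return matmul(matmul(AT, M), transpose(AT))  # A^T M A, 2x2 (0/+-1 coefficients)


def direct_2x2_3x3(d, g):
    """Reference spatial convolution: 9 multiplications per output, 36 in total."""
    return [[sum(d[i + u][j + v] * g[u][v] for u in range(3) for v in range(3))
             for j in range(2)] for i in range(2)]


if __name__ == "__main__":
    d = [[1, 2, 0, 1], [3, 1, 2, 2], [0, 1, 1, 3], [2, 0, 1, 1]]
    g = [[1, 0, -1], [2, 1, 0], [0, 1, 1]]
    assert winograd_2x2_3x3(d, g) == direct_2x2_3x3(d, g)
    print(direct_2x2_3x3(d, g))
```

Exact fractions are used only so the comparison is exact in Python; a hardware design would use fixed-point arithmetic instead.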

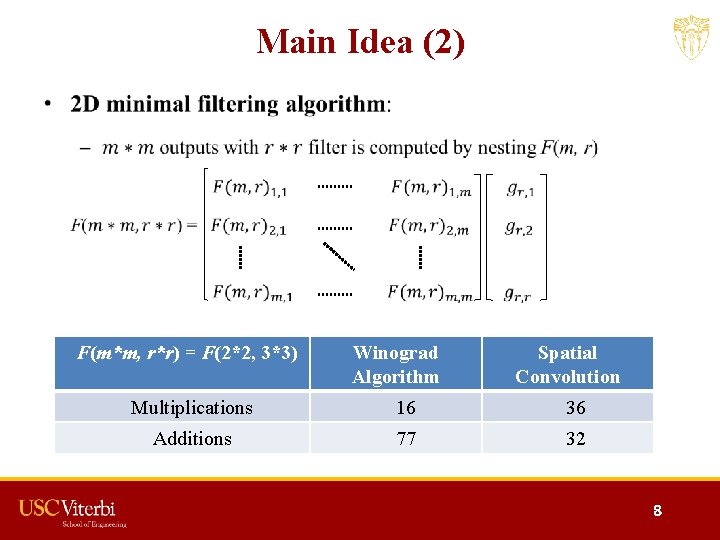

Algorithm Complexity

| Model | Reduction in multiplications (%) |
|---|---|
| AlexNet | 53 |
| VGG16 | 55 |
| GoogLeNet | 38 |
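For a layer with an r×r kernel and stride 1, spatial convolution needs r² multiplications per output pixel, whereas F(m×m, r×r) needs (m+r-1)² multiplications per m×m output tile. The sketch below evaluates the resulting reduction; it assumes the output size is divisible by m and ignores transform costs.

```python
# Multiplications per output pixel: spatial convolution vs. Winograd F(m x m, r x r).
# Assumptions: stride 1, output size divisible by m, transform costs ignored.
from fractions import Fraction


def spatial_mults_per_output(r: int) -> int:
    return r * r


def winograd_mults_per_output(m: int, r: int) -> Fraction:
    # One m x m output tile costs (m + r - 1)^2 elementwise multiplications.
    return Fraction((m + r - 1) ** 2, m * m)


def reduction(m: int, r: int) -> Fraction:
    """Fraction of multiplications removed relative to spatial convolution."""
    return 1 - winograd_mults_per_output(m, r) / spatial_mults_per_output(r)


if __name__ == "__main__":
    for m in (2, 4):
        print(f"F({m}x{m}, 3x3): {float(reduction(m, 3)):.1%} fewer multiplications")
```

For F(2×2, 3×3) this gives 5/9 ≈ 55.6%, consistent with the VGG16 row, since every VGG16 convolutional layer uses 3×3 kernels with stride 1. AlexNet and GoogLeNet also contain kernels of other sizes (for example AlexNet's 11×11 first layer and GoogLeNet's 1×1 layers), which is presumably why their reductions are lower.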

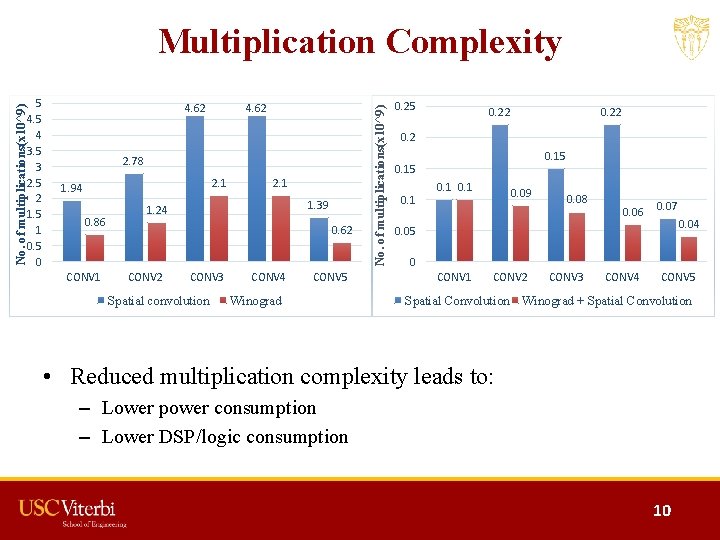

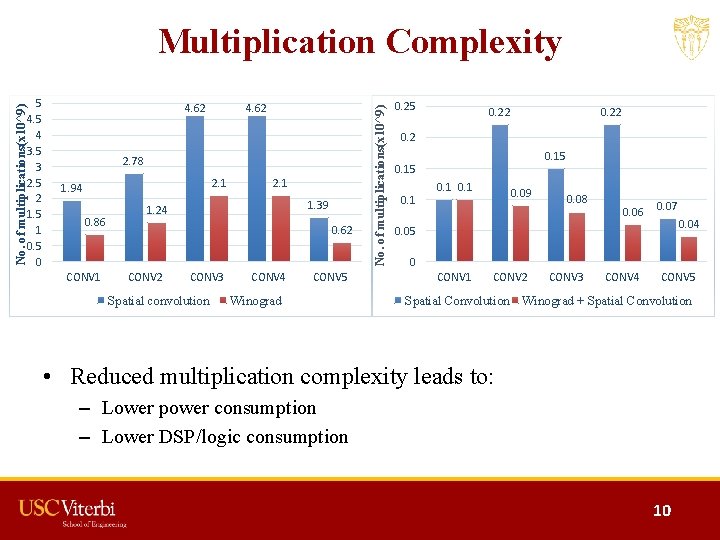

Multiplication Complexity
[Figure: number of multiplications (×10^9) per convolutional layer, CONV1 to CONV5. First panel: spatial convolution vs. Winograd. Second panel: spatial convolution vs. Winograd + spatial convolution.]
• Reduced multiplication complexity leads to:
  – Lower power consumption
  – Lower DSP/logic consumption
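To get a feel for per-layer counts of the kind plotted above, the sketch below tallies multiplications for the five convolutional groups of VGG16. It assumes a 224×224 input, 3×3 kernels, stride 1, "same" padding and F(2×2, 3×3) on every layer; the layer shapes are the standard VGG16 ones, grouped by pooling stage into CONV1 to CONV5.

```python
# Per-group multiplication counts for VGG16's 13 convolutional layers.
# Assumptions: 224x224 input, 3x3 kernels, stride 1, 'same' padding,
# Winograd F(2x2, 3x3) applied to every layer.

# (output height/width, input channels, output channels) for each conv layer, by group.
VGG16_GROUPS = {
    "CONV1": [(224, 3, 64), (224, 64, 64)],
    "CONV2": [(112, 64, 128), (112, 128, 128)],
    "CONV3": [(56, 128, 256), (56, 256, 256), (56, 256, 256)],
    "CONV4": [(28, 256, 512), (28, 512, 512), (28, 512, 512)],
    "CONV5": [(14, 512, 512), (14, 512, 512), (14, 512, 512)],
}


def spatial_mults(hw, cin, cout, r=3):
    """r*r multiplications per output pixel, per input/output channel pair."""
    return hw * hw * r * r * cin * cout


def winograd_mults(hw, cin, cout, m=2, r=3):
    """(m+r-1)^2 multiplications per m x m output tile, per channel pair."""
    tiles_per_side = (hw + m - 1) // m  # ceil(hw / m)
    return tiles_per_side ** 2 * (m + r - 1) ** 2 * cin * cout


if __name__ == "__main__":
    for group, layers in VGG16_GROUPS.items():
        s = sum(spatial_mults(*layer) for layer in layers)
        w = sum(winograd_mults(*layer) for layer in layers)
        print(f"{group}: spatial {s / 1e9:.2f}e9, Winograd {w / 1e9:.2f}e9")
```

Under these assumptions the spatial total is about 15.3 × 10^9 multiplications (about 30.7 GOP when each multiply-accumulate counts as two operations, matching the VGG16 figure on the Motivation slide), and every Winograd count is 4/9 of the corresponding spatial count.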

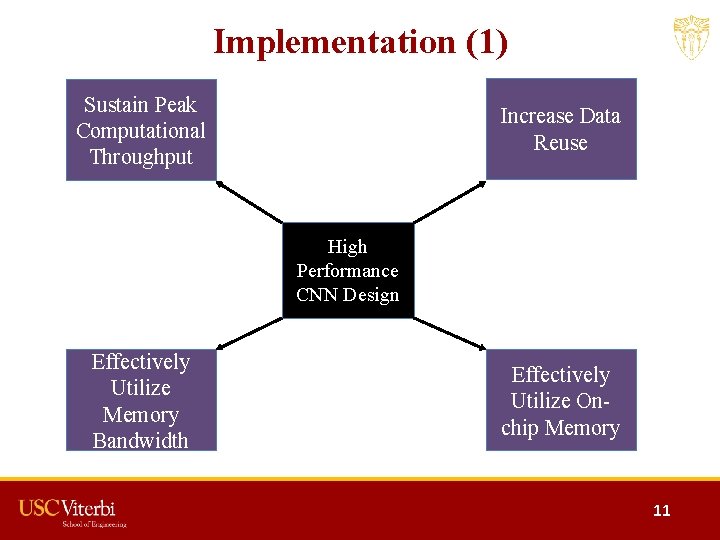

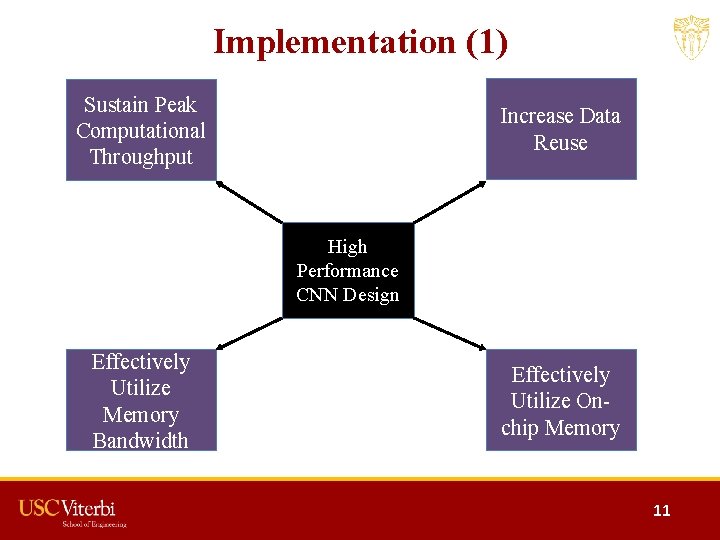

Implementation (1)
High-performance CNN design:
• Sustain peak computational throughput
• Increase data reuse
• Effectively utilize memory bandwidth
• Effectively utilize on-chip memory

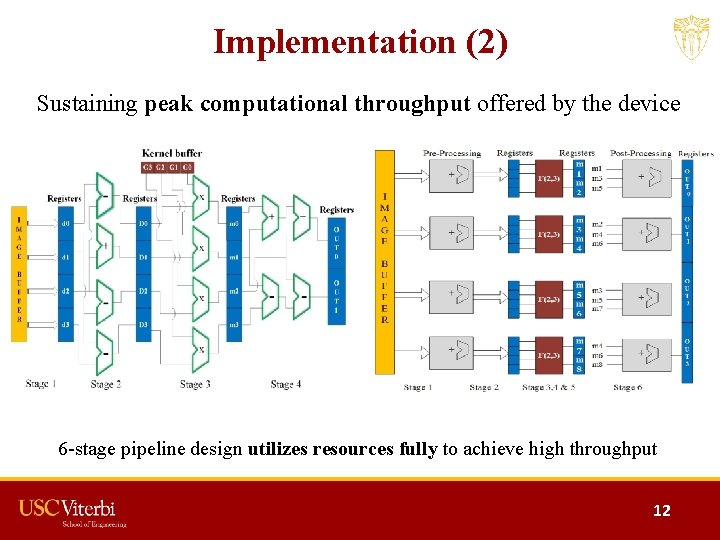

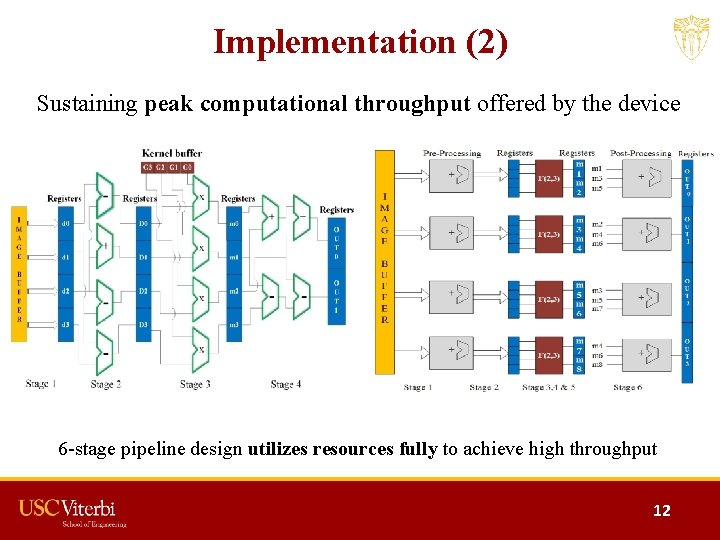

Implementation (2)
Sustaining the peak computational throughput offered by the device
• A 6-stage pipeline design fully utilizes the resources to achieve high throughput
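A back-of-the-envelope estimate shows what "peak" means for a Winograd engine. This sketch assumes (none of this is stated on the slides) that every multiplier completes one multiply per cycle, that all convolution work goes through F(2×2, 3×3), and that one multiply-accumulate counts as two operations. Each Winograd multiplication then stands in for 36/16 = 2.25 spatial multiply-accumulates.

```python
# Back-of-the-envelope effective peak throughput of a Winograd F(2x2, 3x3) engine.
# Assumptions: one multiply per multiplier per cycle, all work via F(2x2, 3x3),
# and one multiply-accumulate = 2 operations.


def effective_peak_gops(multipliers: int, freq_mhz: float, m: int = 2, r: int = 3) -> float:
    mults_per_second = multipliers * freq_mhz * 1e6
    # Each Winograd multiply replaces (m*m*r*r) / (m+r-1)^2 spatial MACs (36/16 = 2.25).
    equiv_macs_per_mult = (m * m * r * r) / (m + r - 1) ** 2
    return mults_per_second * equiv_macs_per_mult * 2 / 1e9


if __name__ == "__main__":
    print(f"{effective_peak_gops(256, 200):.1f} GOP/s")
```

With the 256 multipliers and 200 MHz clock listed in Experimental Results (1), this gives about 230.4 GOP/s, close to the measured 229.2 GOP/s, which is consistent with the pipeline keeping the multipliers busy almost every cycle.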

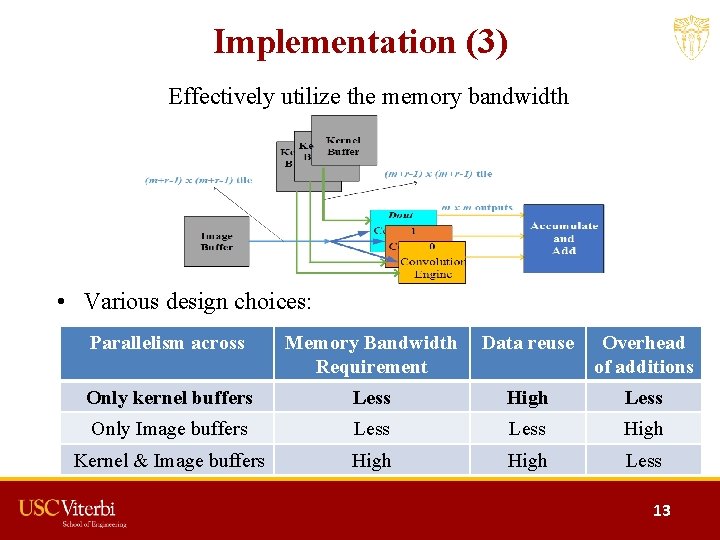

Implementation (3)
Effectively utilize the memory bandwidth
• Design choices (parallelism across; memory bandwidth requirement, data reuse, overhead of additions):
  – Only kernel buffers: Less, High, Less
  – Only image buffers: Less, High
  – Kernel & image buffers: High, Less

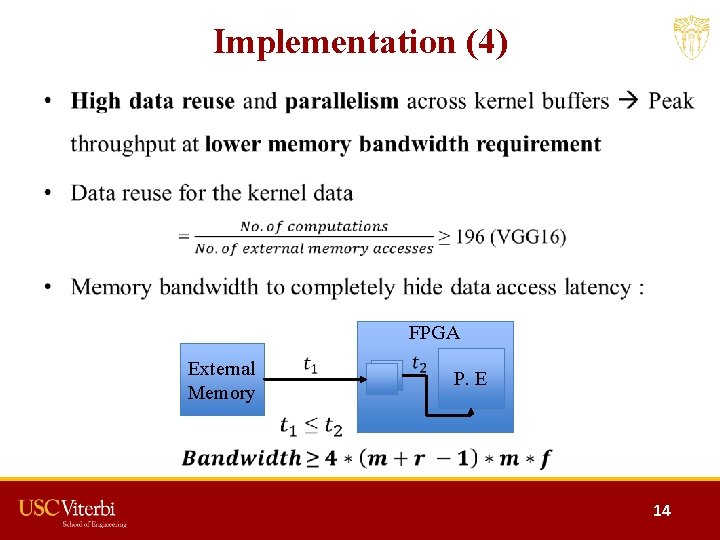

Implementation (4)
[Figure: FPGA, external memory, and processing elements (PE)]

Implementation (5)
Effectively use on-chip memory
• On-chip memory consumption depends on:
  – Number of buffers
  – Size of the buffers
• Storage optimization for image buffers targets the number of image buffers
• Storage optimization for kernel buffers targets the size of kernel buffers
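Kernel-buffer size depends on the form in which kernels are stored. As a hypothetical illustration (the slides do not say which form the design stores), a raw 3×3 kernel occupies 9 words, while its transformed version G g G^T for F(2×2, 3×3) occupies 16 words. The sketch compares the two for an illustrative block of kernels; the block shape is made up, and 32-bit words are assumed to match the 32-bit fixed-point precision in Experimental Results (1).

```python
# Kernel-buffer size for a block of kernels stored raw (3x3) vs. Winograd-transformed (4x4).
# Assumptions (hypothetical): 32-bit words; the block shape below is illustrative only.


def kernel_buffer_bytes(cin: int, cout: int, words_per_kernel: int, word_bytes: int = 4) -> int:
    """Bytes needed to hold cin x cout kernels of words_per_kernel words each."""
    return cin * cout * words_per_kernel * word_bytes


if __name__ == "__main__":
    cin, cout = 512, 16  # hypothetical block: 512 input channels, 16 output channels
    raw = kernel_buffer_bytes(cin, cout, 3 * 3)
    transformed = kernel_buffer_bytes(cin, cout, 4 * 4)
    print(f"raw: {raw / 1024:.0f} KiB, transformed: {transformed / 1024:.0f} KiB")
```

Storing transformed kernels costs 16/9 ≈ 1.78× the buffer space of raw kernels, in exchange for not transforming them on chip; which side of that trade-off the design takes is not stated on the slide.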

Data Layout

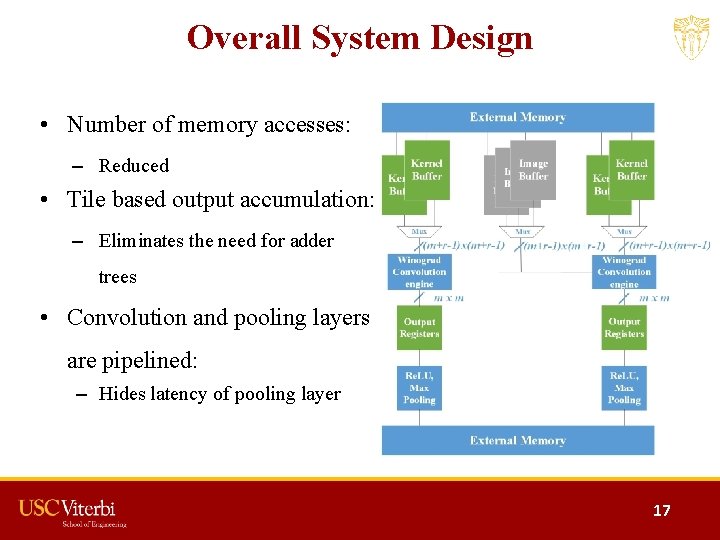

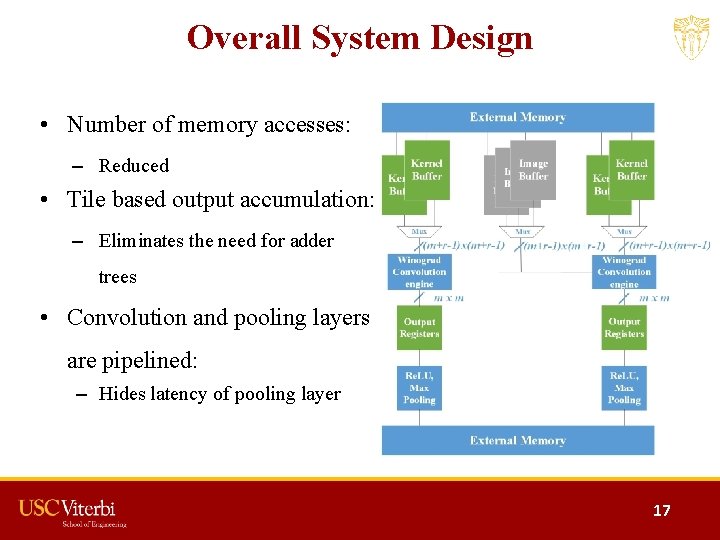

Overall System Design
• Number of memory accesses: reduced
• Tile-based output accumulation: eliminates the need for adder trees
• Convolution and pooling layers are pipelined: hides the latency of the pooling layer
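One way to see why tile-based accumulation and conv/pool pipelining fit a Winograd engine: with F(2×2, 3×3), the products for every input channel can be summed in the 4×4 transformed domain in one accumulator per tile, so only one output transform is needed per tile, and the resulting 2×2 output tile is exactly one 2×2, stride-2 max-pooling window (the pooling used by VGG16). The sketch below illustrates this under stated assumptions (Lavin-Gray transforms, ReLU applied before pooling); it is not the authors' hardware design. It checks the fused result against direct convolution followed by ReLU and pooling.

```python
# Per-tile pipeline sketch: accumulate over input channels in the Winograd domain,
# apply one output transform, then ReLU and 2x2/stride-2 max pooling on the 2x2 tile.
# Assumptions: F(2x2, 3x3), Lavin-Gray transform matrices, ReLU before pooling.
from fractions import Fraction

H = Fraction(1, 2)
BT = [[1, 0, -1, 0], [0, 1, 1, 0], [0, -1, 1, 0], [0, 1, 0, -1]]
G = [[1, 0, 0], [H, H, H], [H, -H, H], [0, 0, 1]]
AT = [[1, 1, 1, 0], [0, 1, -1, -1]]


def matmul(x, y):
    return [[sum(x[i][k] * y[k][j] for k in range(len(y))) for j in range(len(y[0]))]
            for i in range(len(x))]


def transpose(x):
    return [list(row) for row in zip(*x)]


def conv_relu_pool_tile(d_tiles, kernels):
    """d_tiles[c]: 4x4 input tile of channel c; kernels[c]: 3x3 kernel of channel c.
    Returns the single max-pooled value produced by this output tile."""
    acc = [[0] * 4 for _ in range(4)]  # one 4x4 accumulator in the Winograd domain
    for d, g in zip(d_tiles, kernels):
        U = matmul(matmul(G, g), transpose(G))
        V = matmul(matmul(BT, d), transpose(BT))
        for i in range(4):
            for j in range(4):
                acc[i][j] += U[i][j] * V[i][j]
    y = matmul(matmul(AT, acc), transpose(AT))  # one output transform per tile
    return max(max(0, val) for row in y for val in row)  # ReLU, then 2x2 max pool


def reference_tile(d_tiles, kernels):
    """Direct convolution summed over channels, then ReLU and 2x2 max pooling."""
    y = [[sum(d[i + u][j + v] * g[u][v]
              for d, g in zip(d_tiles, kernels)
              for u in range(3) for v in range(3))
          for j in range(2)] for i in range(2)]
    return max(max(0, val) for row in y for val in row)


if __name__ == "__main__":
    d_tiles = [[[1, 2, 0, 1], [3, 1, 2, 2], [0, 1, 1, 3], [2, 0, 1, 1]]] * 2
    kernels = [[[1, 0, -1], [2, 1, 0], [0, 1, 1]], [[0, 1, 0], [1, -2, 1], [0, 1, 0]]]
    assert conv_relu_pool_tile(d_tiles, kernels) == reference_tile(d_tiles, kernels)
```

The equivalence relies on the output transform being linear, so summing channels before or after A^T(·)A gives the same tile.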

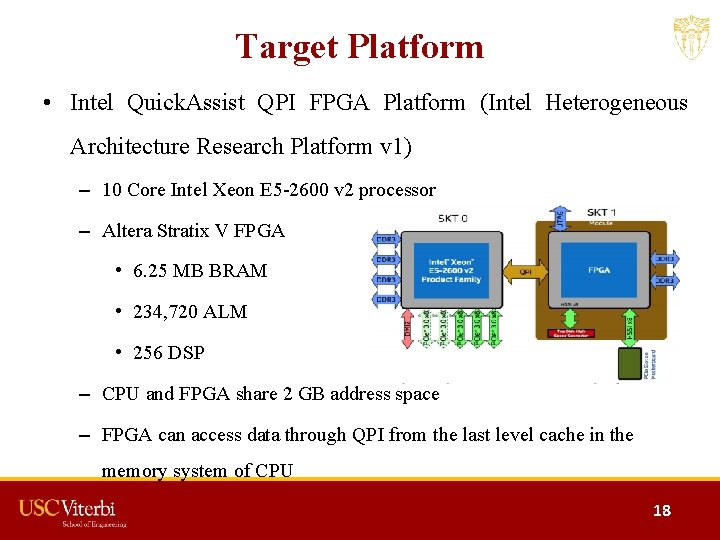

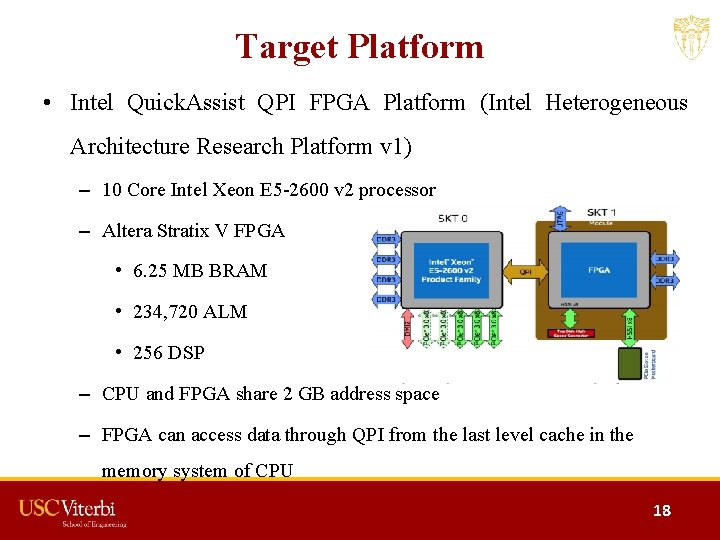

Target Platform
• Intel QuickAssist QPI FPGA Platform (Intel Heterogeneous Architecture Research Platform v1)
  – 10-core Intel Xeon E5-2600 v2 processor
  – Altera Stratix V FPGA
    – 6.25 MB BRAM
    – 234,720 ALMs
    – 256 DSPs
  – CPU and FPGA share a 2 GB address space
  – The FPGA can access data through QPI from the last-level cache in the CPU's memory system

![Experimental Results (1) (VGG16)](https://slidetodoc.com/presentation_image/1f60bdc70f8674f2551b3e5c560eea12/image-19.jpg)

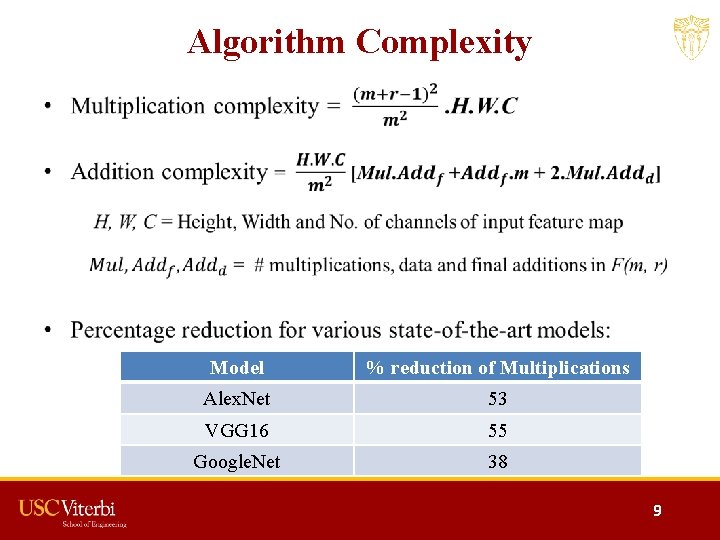

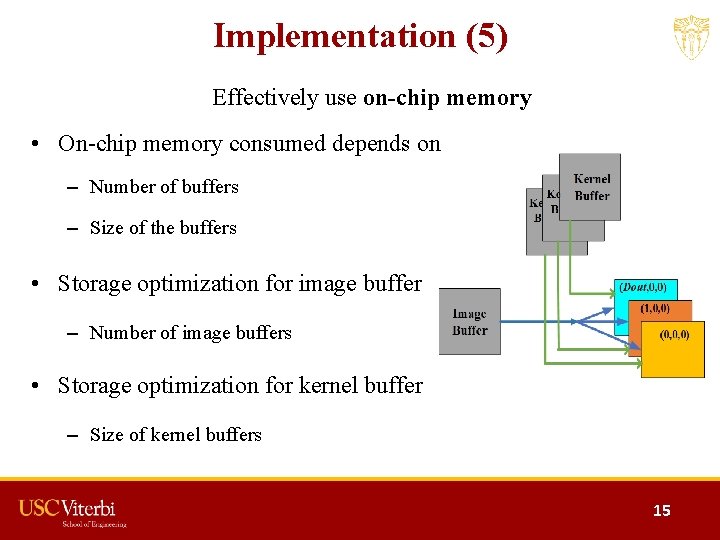

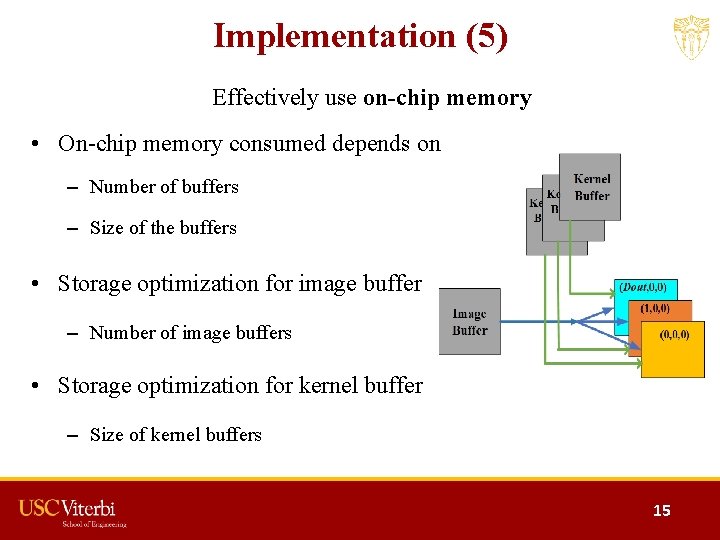

Experimental Results (1)

| Metric (VGG16) | [1] | Our work |
|---|---|---|
| Data precision | 16-bit fixed | 32-bit fixed |
| Frequency (MHz) | 150 | 200 |
| Memory (MB) | 2.13 | 1.05 |
| # Multipliers | 780 | 256 |
| Overall delay (ms) | 163.4 | 142.3 |
| Throughput (GOP/s) | 187.8 | 229.2 |

![Experimental Results (2) (VGG16)](https://slidetodoc.com/presentation_image/1f60bdc70f8674f2551b3e5c560eea12/image-20.jpg)

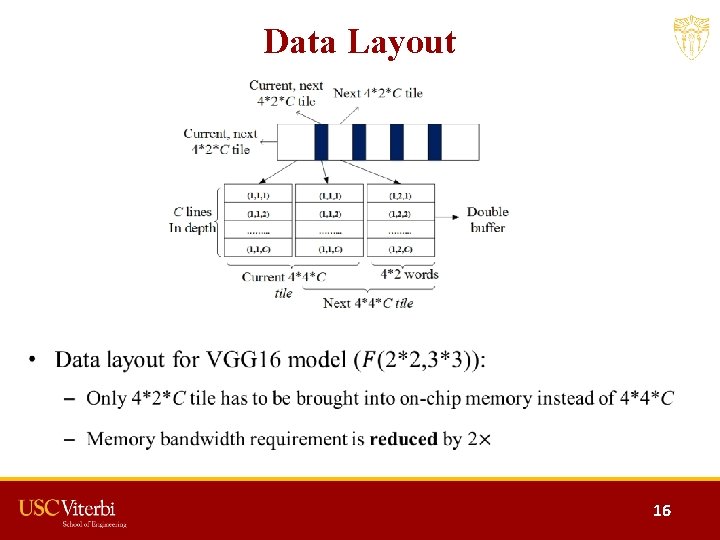

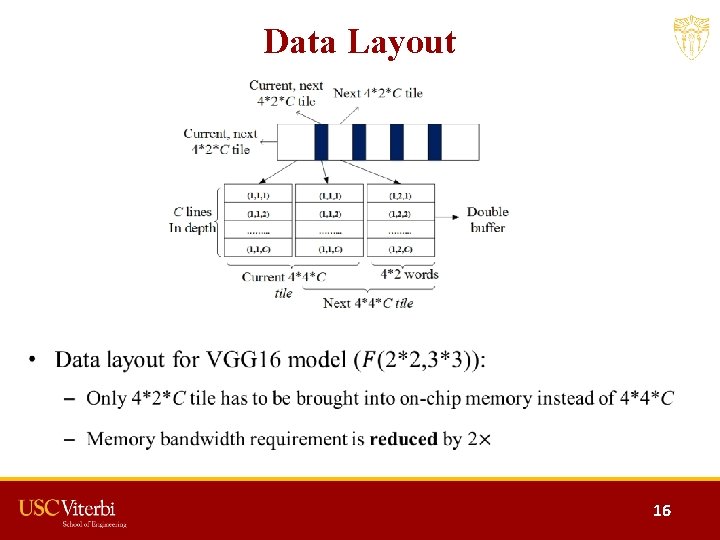

Experimental Results (2)

| Metric (VGG16) | [1] | Our work |
|---|---|---|
| Power (W) | 9.63 | 8.04 |
| Resource efficiency (GOP/s/multiplier) | 0.24 | 0.89 |
| Memory efficiency (GOP/s/MB) | 88.17 | 208.3 |
| Power efficiency (GOP/s/W) | 19.5 | 28.5 |
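The resource- and power-efficiency figures follow from the throughput, multiplier count and power columns of the two result tables. This small sketch recomputes them; the dictionary simply transcribes the reported numbers.

```python
# Recompute GOP/s per multiplier and GOP/s per watt from the reported raw numbers.
RESULTS = {
    "[1]": {"throughput_gops": 187.8, "multipliers": 780, "power_w": 9.63},
    "Our work": {"throughput_gops": 229.2, "multipliers": 256, "power_w": 8.04},
}


def efficiency(name: str) -> dict:
    """Throughput normalized by multiplier count and by power."""
    r = RESULTS[name]
    return {
        "gops_per_multiplier": r["throughput_gops"] / r["multipliers"],
        "gops_per_watt": r["throughput_gops"] / r["power_w"],
    }


if __name__ == "__main__":
    for name in RESULTS:
        e = efficiency(name)
        print(f"{name}: {e['gops_per_multiplier']:.3f} GOP/s/multiplier, "
              f"{e['gops_per_watt']:.1f} GOP/s/W")
```

[1] comes out at about 0.241 GOP/s per multiplier and 19.5 GOP/s/W, and our work at about 0.895 and 28.5, agreeing with the table to within 0.01.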

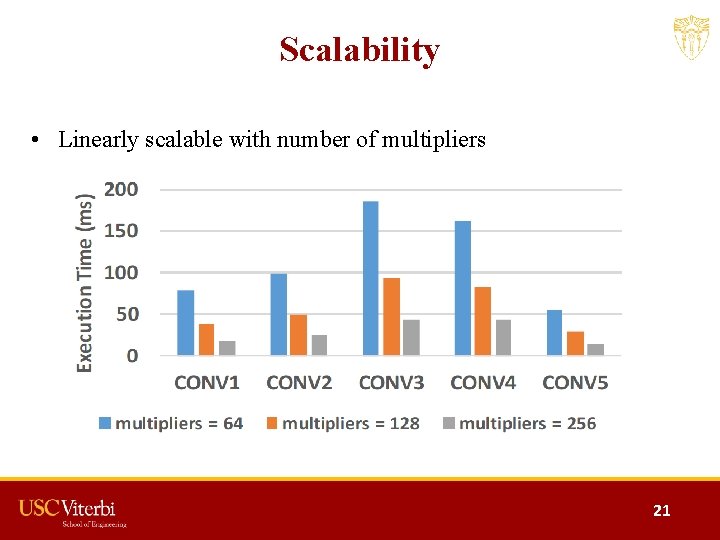

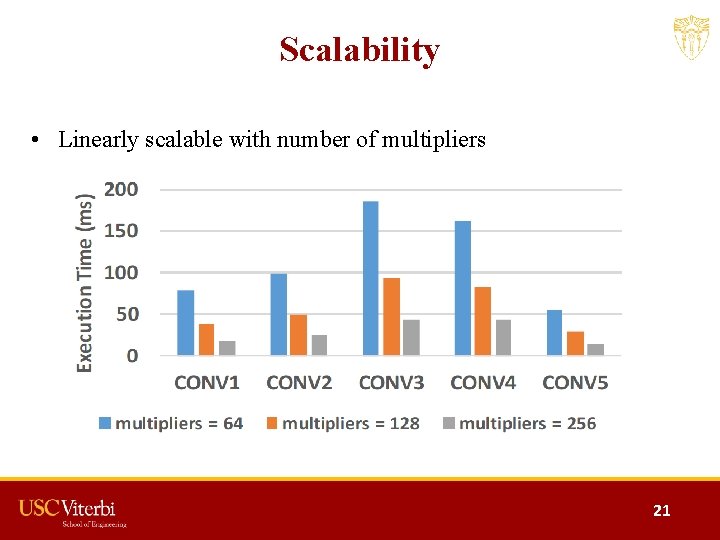

Scalability
• The design scales linearly with the number of multipliers

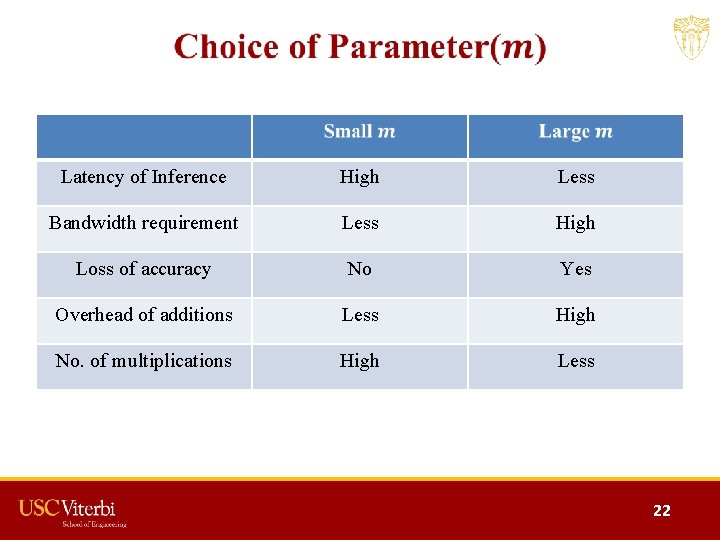

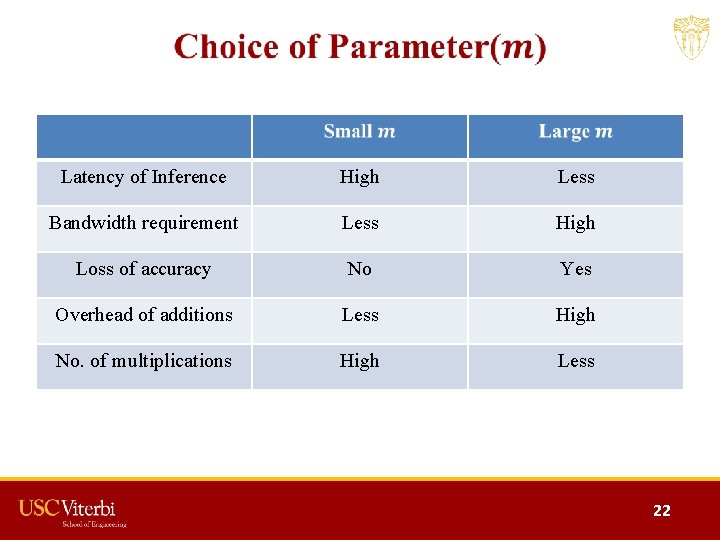

| Metric | Spatial convolution | Winograd |
|---|---|---|
| Latency of inference | High | Low |
| Bandwidth requirement | Low | High |
| Loss of accuracy | No | Yes |
| Overhead of additions | Low | High |
| No. of multiplications | High | Low |

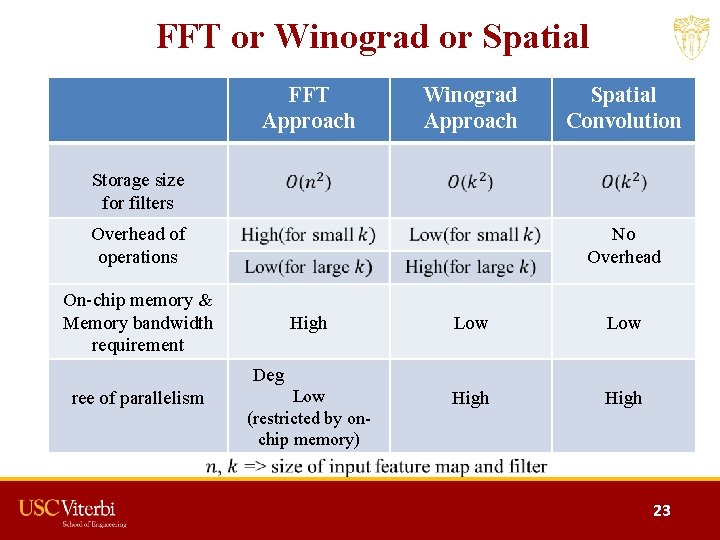

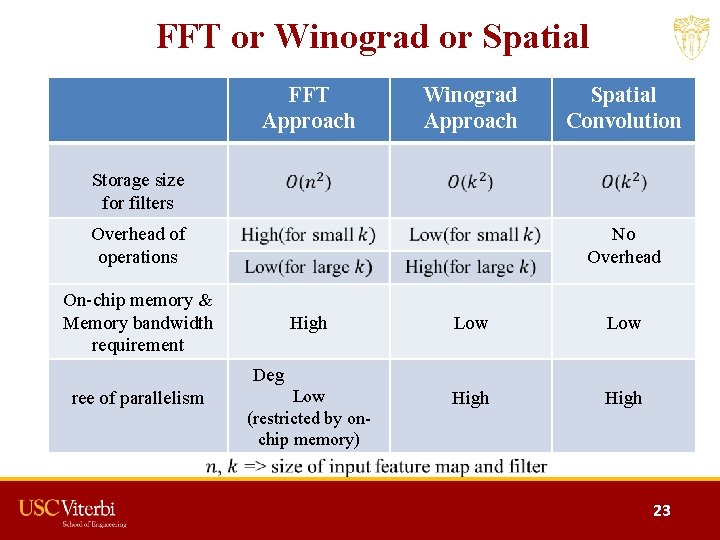

FFT or Winograd or Spatial?
FFT approach | Winograd approach | Spatial convolution
Storage size for filters; Overhead of operations: No overhead; On-chip memory & memory bandwidth requirement: High, Low; Low (restricted by on-chip memory), High; Degree of parallelism

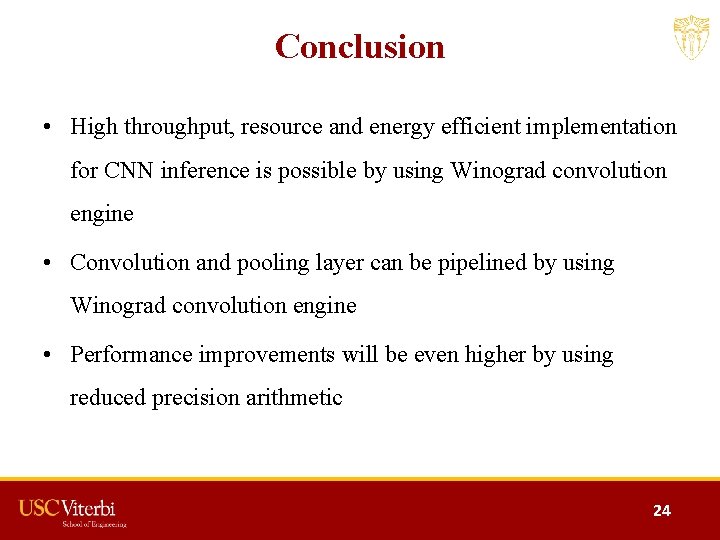

Conclusion
• A high-throughput, resource- and energy-efficient implementation of CNN inference is possible using a Winograd convolution engine
• With a Winograd convolution engine, the convolution and pooling layers can be pipelined
• Performance improvements would be even higher with reduced-precision arithmetic

Thank You
fpga.usc.edu