Fast Algorithms for Mining Frequent Itemsets


Fast Algorithms for Mining Frequent Itemsets
Doctoral dissertation draft
Advisor: Prof. 張真誠
Graduate student: 李育強
Dept. of Computer Science and Information Engineering, National Chung Cheng University
Date: May 31, 2007

Outline

- Introduction
- Background and Related Work
- NFP-Tree Structure
- Fast Share Measure (FSM) Algorithm
- Three Efficient Algorithms
- Direct Candidate Generation (DCG) Algorithm
- Isolated Item Discarding Strategy (IIDS)
- Maximum Item Conflict First (MICF) Sanitization Method
- Conclusions

Introduction

- Data mining techniques have been developed to find a small set of precious nuggets in reams of data (Cabena et al., 1998; Kantardzic, 2002)
- Mining association rules is one of the most important data mining problems
- It consists of two sub-problems (Agrawal & Srikant, 1994):
  - Identifying all frequent itemsets
  - Using these frequent itemsets to generate association rules
- The first sub-problem plays an essential role in mining association rules

Introduction (cont'd)

- Mining frequent itemsets
- Mining share-frequent itemsets
- Mining high utility itemsets
- Hiding sensitive patterns

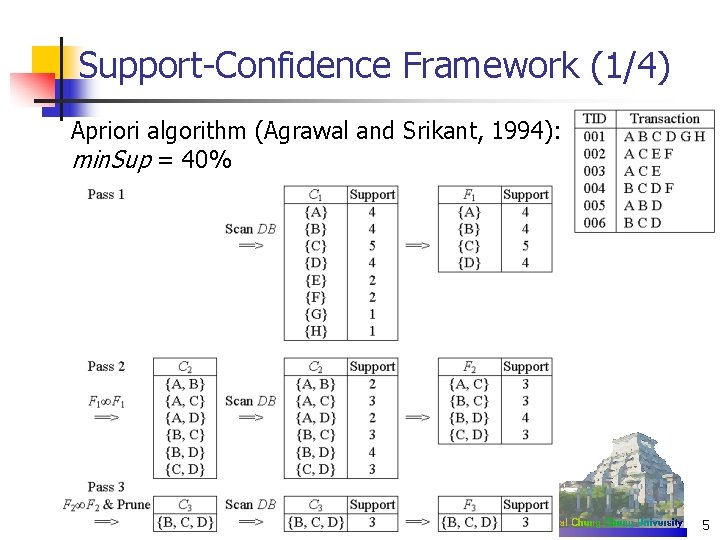

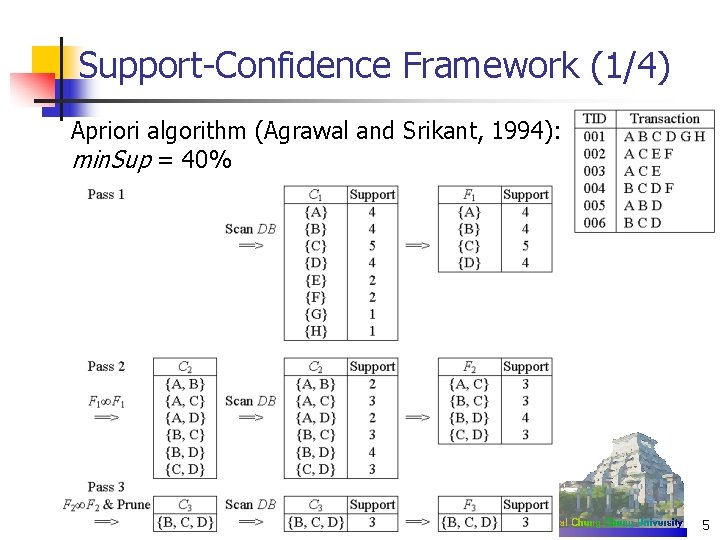

Support-Confidence Framework (1/4)

- Apriori algorithm (Agrawal and Srikant, 1994), minSup = 40%

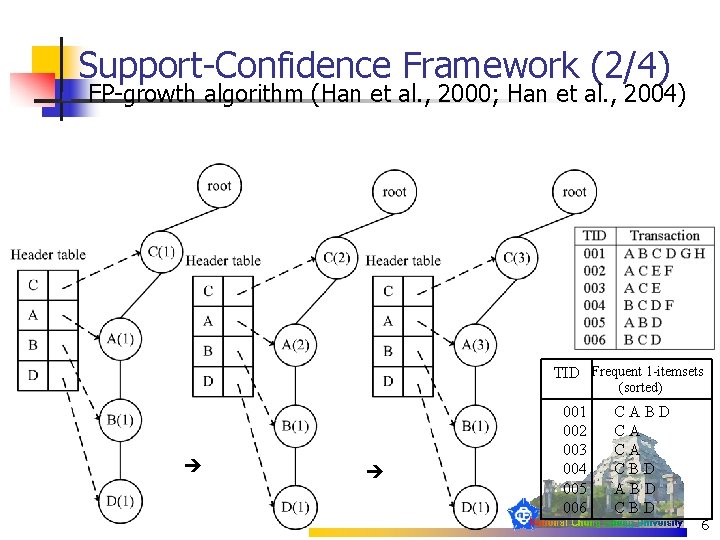

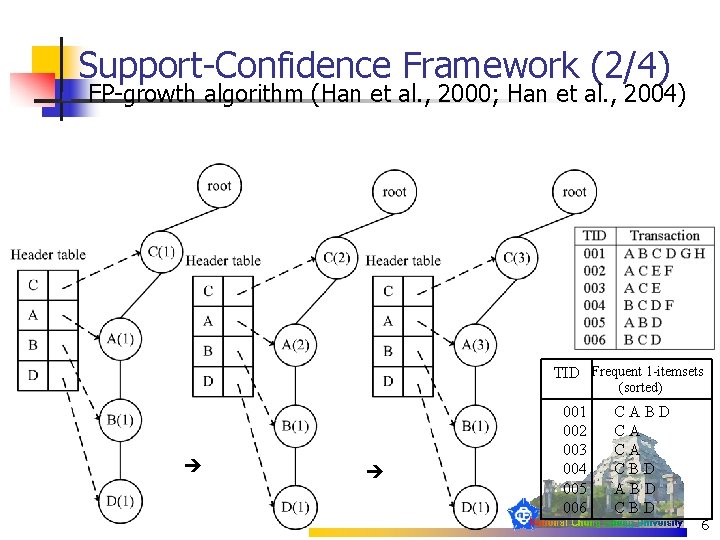
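The level-wise idea behind Apriori can be sketched as follows. This is a minimal illustration, not the dissertation's implementation: the function name `apriori` is hypothetical, and the six transactions are assumed to be the sorted frequent-item lists shown on the FP-growth slides (the original database appears only in the slide image).

```python
from itertools import combinations

def apriori(transactions, min_sup):
    """Return {itemset: support count} for itemsets whose support fraction >= min_sup."""
    tsets = [frozenset(t) for t in transactions]
    min_count = min_sup * len(tsets)

    # Pass 1: count single items and keep the frequent ones.
    current = {}
    for item in sorted({i for t in tsets for i in t}):
        count = sum(1 for t in tsets if item in t)
        if count >= min_count:
            current[frozenset([item])] = count

    frequent = dict(current)
    k = 2
    while current:
        prev = list(current)
        # Join step: unions of two frequent (k-1)-itemsets that have exactly k items.
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        # Prune step: every (k-1)-subset of a candidate must itself be frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        # Count step: one scan of the database per level.
        current = {}
        for c in candidates:
            count = sum(1 for t in tsets if c <= t)
            if count >= min_count:
                current[c] = count
        frequent.update(current)
        k += 1
    return frequent

# Assumed toy database (sorted frequent-item lists from the FP-growth slides).
db = ["CABD", "CA", "CA", "CBD", "ABD", "CBD"]
result = apriori(db, 0.4)
```

With minSup = 40% over six transactions, an itemset needs a count of at least 3, so {A, B} (count 2) is dropped at level 2 and never extended.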

Support-Confidence Framework (2/4)

- FP-growth algorithm (Han et al., 2000; Han et al., 2004)

| TID | Frequent 1-itemsets (sorted) |
| --- | --- |
| 001 | C A B D |
| 002 | C A |
| 003 | C A |
| 004 | C B D |
| 005 | A B D |
| 006 | C B D |

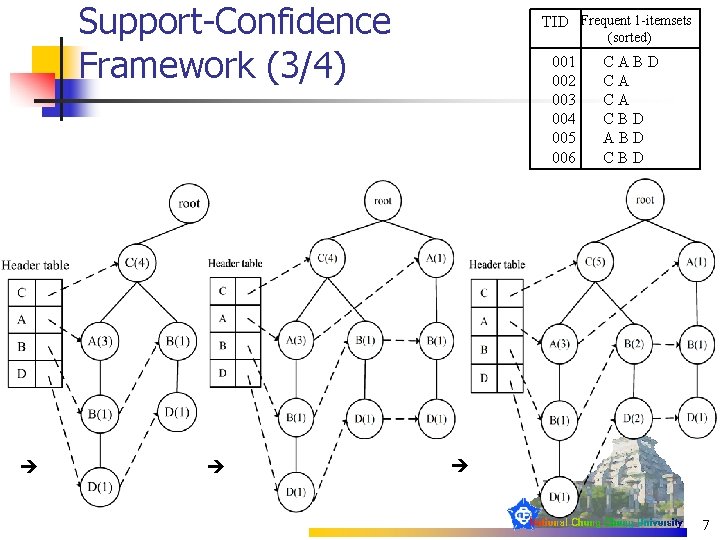

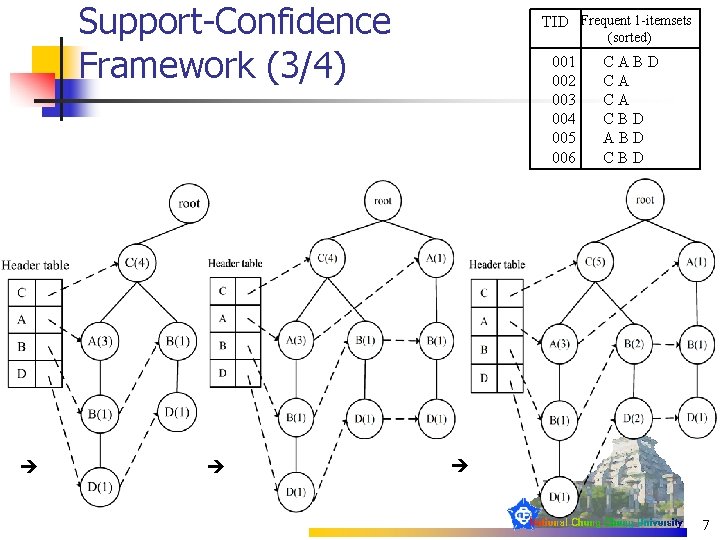
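FP-tree construction from the sorted item lists can be sketched as below. The class and function names (`FPNode`, `build_fp_tree`, `count_nodes`) are hypothetical, and the header table with node-links that FP-growth also maintains is omitted to keep the sketch short.

```python
class FPNode:
    """One prefix-tree node: an item label, a count, and child links."""
    def __init__(self, item, parent=None):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}

def build_fp_tree(sorted_transactions):
    """Insert each sorted transaction as a path from the root, sharing common prefixes."""
    root = FPNode(None)
    for transaction in sorted_transactions:
        node = root
        for item in transaction:
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
            child.count += 1
            node = child
    return root

def count_nodes(node):
    """Number of nodes below `node` (the root itself is not counted)."""
    return sum(1 + count_nodes(c) for c in node.children.values())

# Sorted frequent-item lists taken from the slide table.
tree = build_fp_tree(["CABD", "CA", "CA", "CBD", "ABD", "CBD"])
```

Transactions 001 to 003 share the prefix C, A, so the node for A under C ends with count 3, while transaction 005 starts a separate branch at A.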

Support-Confidence Framework (3/4)

| TID | Frequent 1-itemsets (sorted) |
| --- | --- |
| 001 | C A B D |
| 002 | C A |
| 003 | C A |
| 004 | C B D |
| 005 | A B D |
| 006 | C B D |

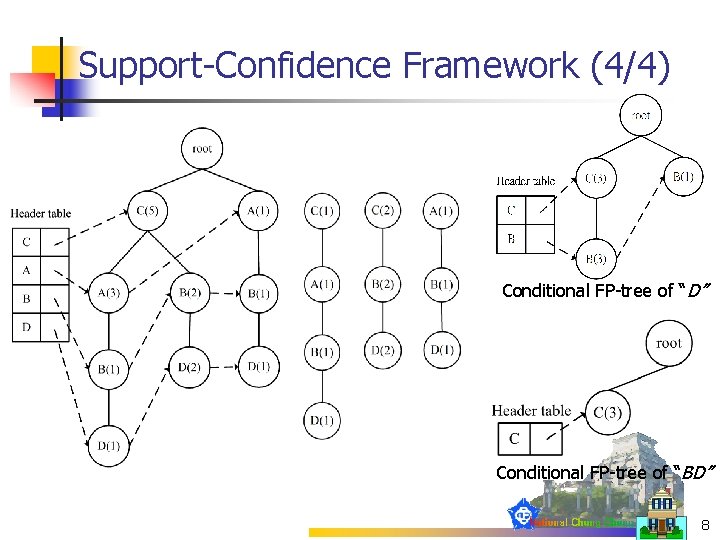

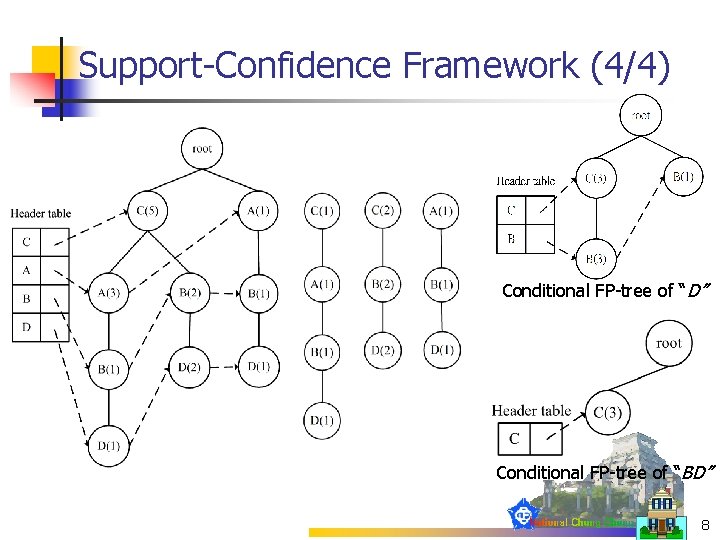

Support-Confidence Framework (4/4)

- Conditional FP-tree of "D"
- Conditional FP-tree of "BD"

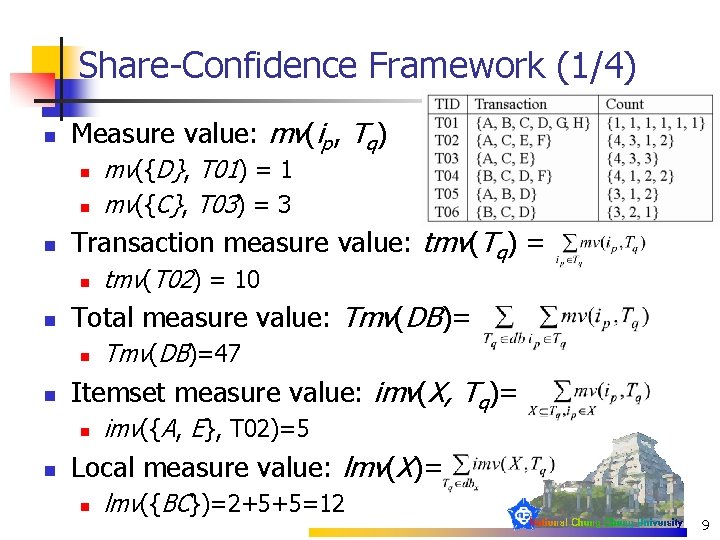

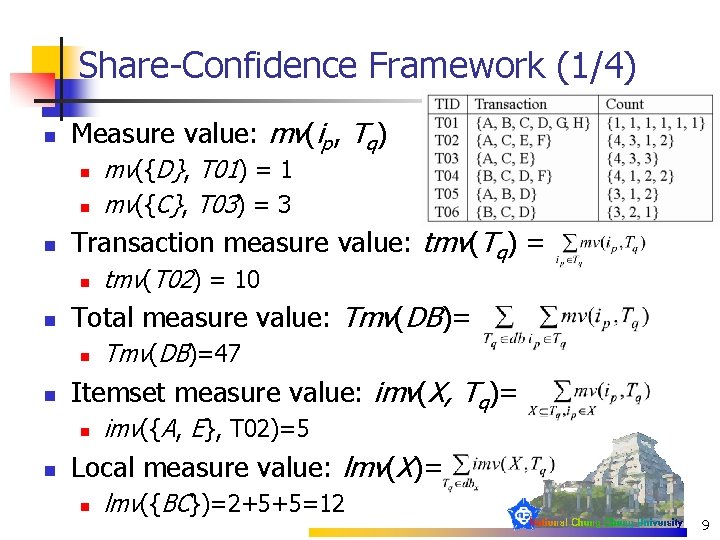

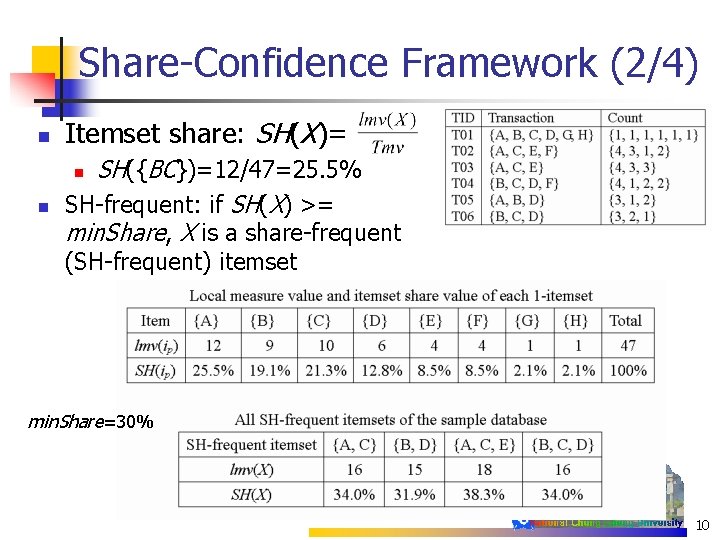
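The input to a conditional FP-tree is the conditional pattern base of the suffix item. A minimal sketch follows, assuming the sorted item lists from the earlier slides. For brevity it reads prefixes directly from the transactions instead of walking node-links in the tree, and the name `conditional_pattern_base` is hypothetical.

```python
from collections import Counter

def conditional_pattern_base(sorted_transactions, item):
    """Count the prefix that precedes `item` in each sorted transaction containing it."""
    base = Counter()
    for transaction in sorted_transactions:
        if item in transaction:
            prefix = transaction[:transaction.index(item)]
            if prefix:
                base[prefix] += 1
    return base

def item_counts(base):
    """Support of each item inside a conditional pattern base."""
    counts = Counter()
    for prefix, n in base.items():
        for item in prefix:
            counts[item] += n
    return counts

db = ["CABD", "CA", "CA", "CBD", "ABD", "CBD"]
base_d = conditional_pattern_base(db, "D")
counts_d = item_counts(base_d)
```

Under the assumed threshold of 3 transactions (40% of 6), only B and C stay frequent in the pattern base of D, which is consistent with the next slide conditioning on "BD".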

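Share-Confidence Framework (1/4)

- Measure value $mv(i_p, T_q)$: the measure value of item $i_p$ in transaction $T_q$
  - $mv(\{D\}, T_{01}) = 1$
  - $mv(\{C\}, T_{03}) = 3$
- Transaction measure value: $tmv(T_q) = \sum_{i_p \in T_q} mv(i_p, T_q)$
  - $tmv(T_{02}) = 10$
- Total measure value: $Tmv(DB) = \sum_{T_q \in DB} tmv(T_q)$
  - $Tmv(DB) = 47$
- Itemset measure value: $imv(X, T_q) = \sum_{i_p \in X} mv(i_p, T_q)$, where $X \subseteq T_q$
  - $imv(\{A, E\}, T_{02}) = 5$
- Local measure value: $lmv(X) = \sum_{T_q \in db_X} imv(X, T_q)$, where $db_X$ is the set of transactions that contain $X$
  - $lmv(\{BC\}) = 2 + 5 + 5 = 12$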

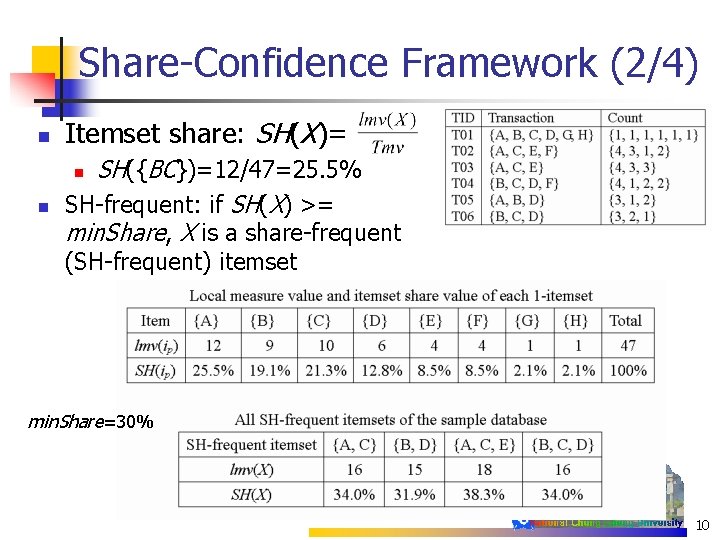

Share-Confidence Framework (2/4)

- Itemset share: $SH(X) = lmv(X) / Tmv(DB)$
  - $SH(\{BC\}) = 12/47 = 25.5\%$
- SH-frequent: if $SH(X) \ge minShare$, X is a share-frequent (SH-frequent) itemset
- minShare = 30%

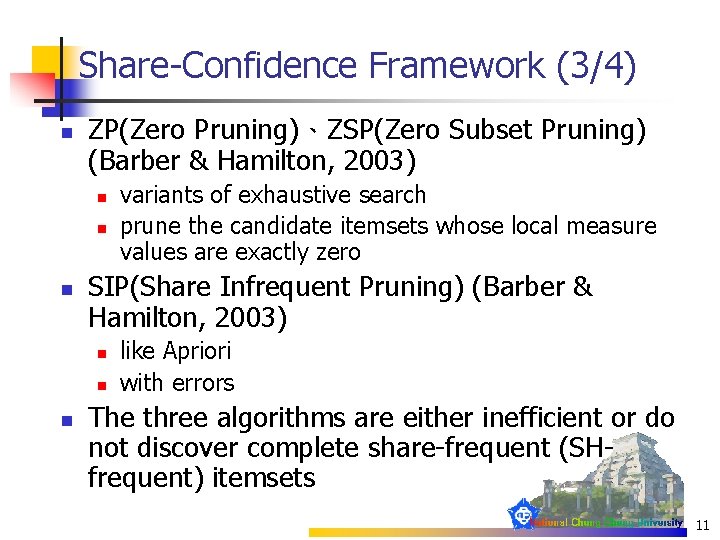

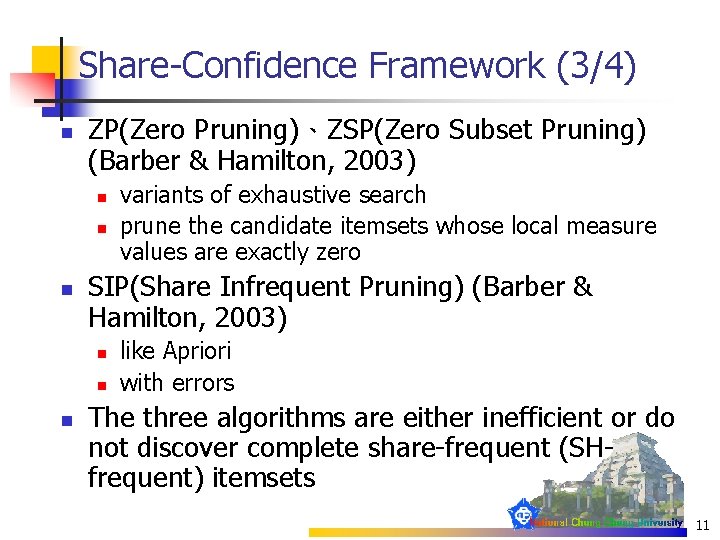

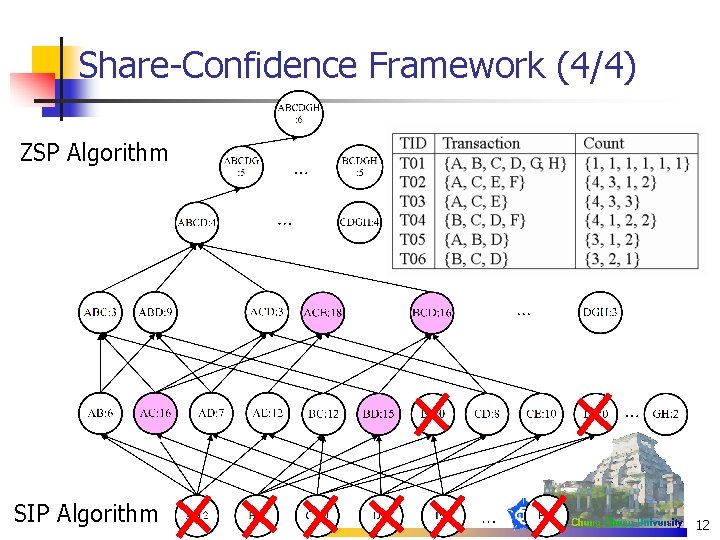
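The share measures can be computed directly from per-item quantities. The sketch below uses a small hypothetical database (`DB`), not the one in the slide images, so its numbers differ from the slide examples; the function names mirror the slide notation but are otherwise assumptions.

```python
# Hypothetical database: transaction id -> {item: measure value (e.g. quantity)}.
DB = {
    "T01": {"A": 1, "B": 1, "C": 1},
    "T02": {"B": 2, "C": 3, "D": 1},
    "T03": {"A": 2, "D": 1},
}

def tmv(transaction):
    """Transaction measure value: sum of the measure values of its items."""
    return sum(transaction.values())

def total_mv(db):
    """Total measure value Tmv(DB)."""
    return sum(tmv(t) for t in db.values())

def imv(itemset, transaction):
    """Itemset measure value of `itemset` inside one transaction that contains it."""
    return sum(transaction[i] for i in itemset)

def lmv(itemset, db):
    """Local measure value: imv summed over the transactions that contain the itemset."""
    return sum(imv(itemset, t) for t in db.values()
               if all(i in t for i in itemset))

def share(itemset, db):
    """Itemset share SH(X) = lmv(X) / Tmv(DB)."""
    return lmv(itemset, db) / total_mv(db)

def is_sh_frequent(itemset, db, min_share):
    return share(itemset, db) >= min_share
```

In this toy database, {B, C} has lmv 7 out of a total of 12 and is SH-frequent at minShare = 30%, while {A, D} with lmv 3 is not.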

Share-Confidence Framework (3/4)

- ZP (Zero Pruning) and ZSP (Zero Subset Pruning) (Barber & Hamilton, 2003)
  - Variants of exhaustive search
  - Prune the candidate itemsets whose local measure values are exactly zero
- SIP (Share Infrequent Pruning) (Barber & Hamilton, 2003)
  - Like Apriori, with errors
- The three algorithms are either inefficient or do not discover the complete set of share-frequent (SH-frequent) itemsets

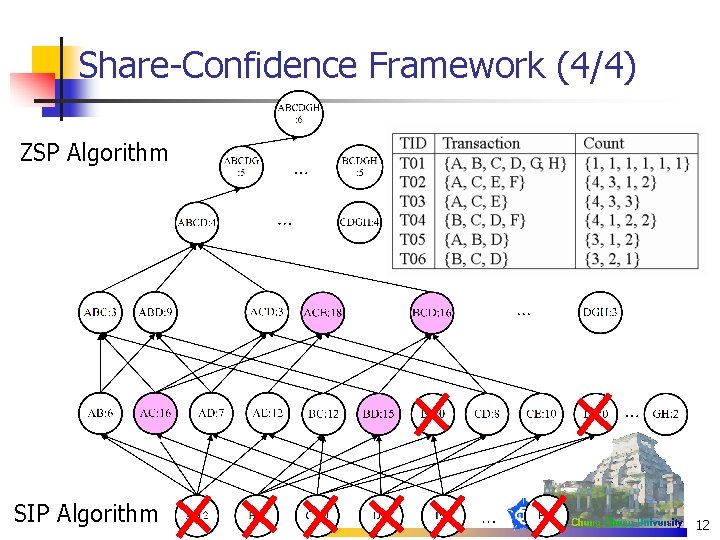

Share-Confidence Framework (4/4)

- ZSP Algorithm
- SIP Algorithm

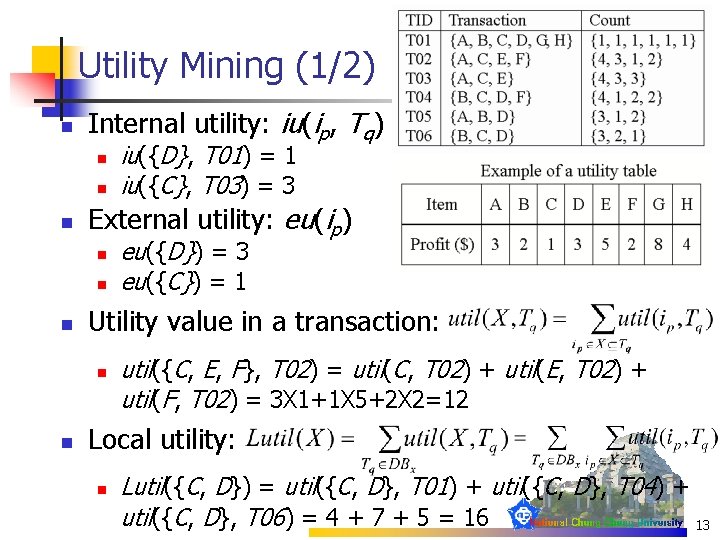

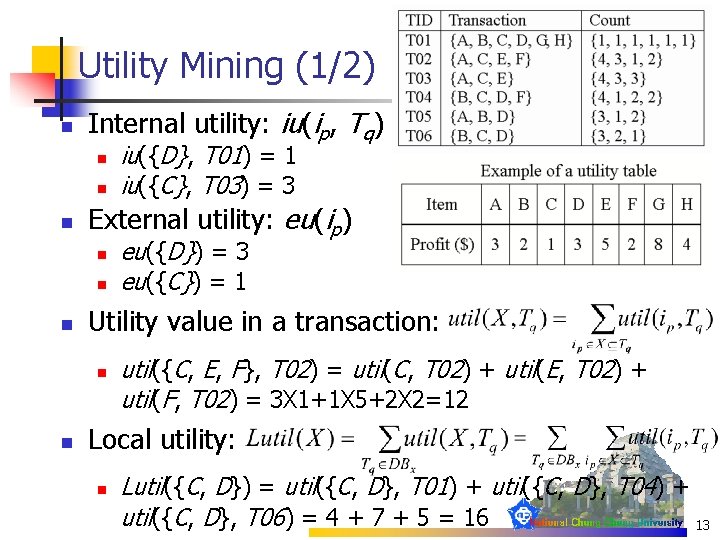

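Utility Mining (1/2)

- Internal utility: $iu(i_p, T_q)$
  - $iu(\{D\}, T_{01}) = 1$
  - $iu(\{C\}, T_{03}) = 3$
- External utility: $eu(i_p)$
  - $eu(\{D\}) = 3$
  - $eu(\{C\}) = 1$
- Utility value in a transaction: $util(X, T_q) = \sum_{i_p \in X} iu(i_p, T_q) \times eu(i_p)$
  - $util(\{C, E, F\}, T_{02}) = util(C, T_{02}) + util(E, T_{02}) + util(F, T_{02}) = 3 \times 1 + 1 \times 5 + 2 \times 2 = 12$
- Local utility: $Lutil(X) = \sum_{T_q \in db_X} util(X, T_q)$, where $db_X$ is the set of transactions that contain $X$
  - $Lutil(\{C, D\}) = util(\{C, D\}, T_{01}) + util(\{C, D\}, T_{04}) + util(\{C, D\}, T_{06}) = 4 + 7 + 5 = 16$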

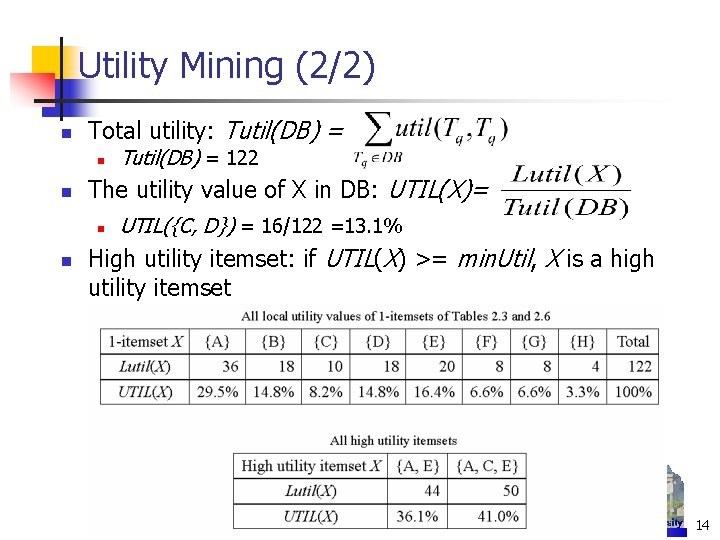

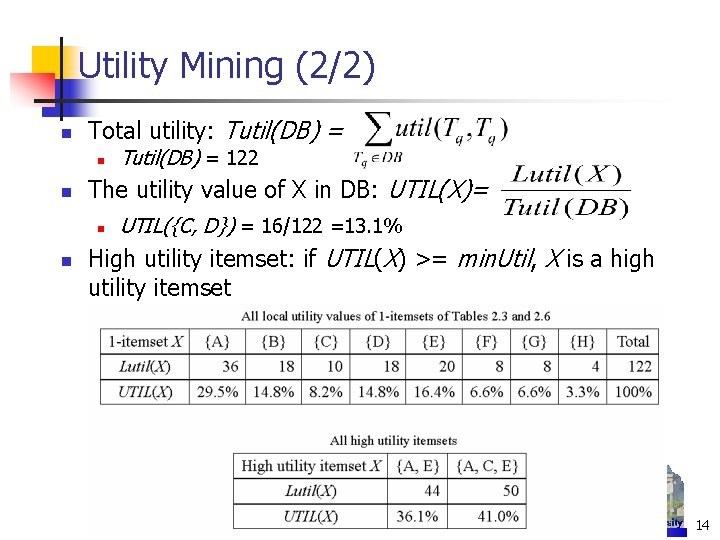

Utility Mining (2/2)

- Total utility: $Tutil(DB) = \sum_{T_q \in DB} util(T_q, T_q)$
  - $Tutil(DB) = 122$
- The utility value of X in DB: $UTIL(X) = Lutil(X) / Tutil(DB)$
  - $UTIL(\{C, D\}) = 16/122 = 13.1\%$
- High utility itemset: if $UTIL(X) \ge minUtil$, X is a high utility itemset

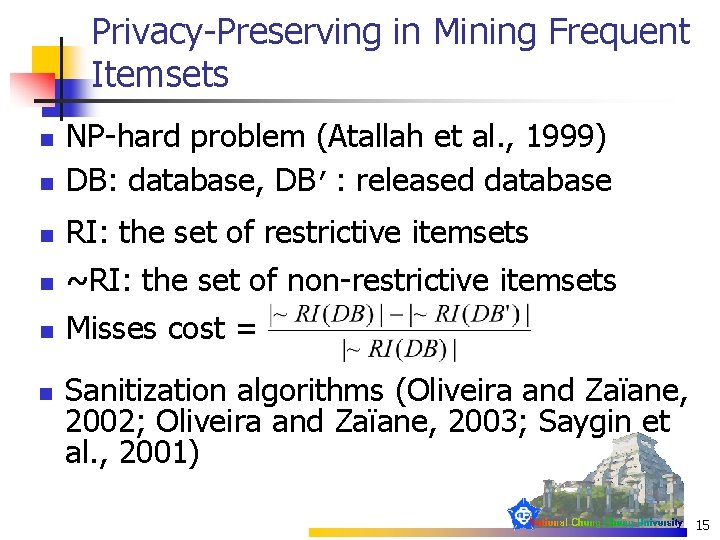

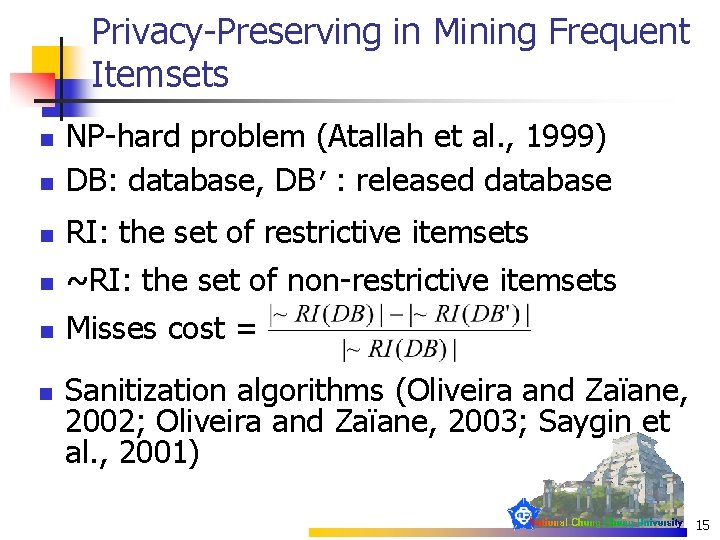
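The utility definitions translate into a few lines of code. In the sketch below, the external utilities and the quantities for T02 and T03 follow the slide examples; the quantities for T01, T04 and T06 are assumptions chosen so that the local utility of {C, D} reproduces 4 + 7 + 5 = 16. The database is only a fragment, so its total utility differs from the slide's 122.

```python
# External utility per item (eu); values follow the slide examples.
EU = {"C": 1, "D": 3, "E": 5, "F": 2}

# Fragment of a database: transaction id -> {item: internal utility (quantity)}.
# T01, T04 and T06 quantities are assumed; the real table is in the slide image.
DB = {
    "T01": {"C": 1, "D": 1},
    "T02": {"C": 3, "E": 1, "F": 2},
    "T03": {"C": 3},
    "T04": {"C": 4, "D": 1},
    "T06": {"C": 2, "D": 1},
}

def util(itemset, transaction):
    """Utility of an itemset in one transaction: sum of quantity times external utility."""
    return sum(transaction[i] * EU[i] for i in itemset)

def lutil(itemset, db):
    """Local utility: util summed over the transactions that contain the itemset."""
    return sum(util(itemset, t) for t in db.values()
               if all(i in t for i in itemset))

def tutil(db):
    """Total utility: the utility of every whole transaction, summed."""
    return sum(util(t.keys(), t) for t in db.values())

def utility_ratio(itemset, db):
    """UTIL(X) = Lutil(X) / Tutil(DB)."""
    return lutil(itemset, db) / tutil(db)
```

An itemset is then reported as high utility when `utility_ratio` is at least minUtil.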

Privacy-Preserving in Mining Frequent Itemsets

- NP-hard problem (Atallah et al., 1999)
- DB: database; DB': released database
- RI: the set of restrictive itemsets
- ~RI: the set of non-restrictive itemsets
- Misses cost $= \dfrac{|{\sim}RI(DB)| - |{\sim}RI(DB')|}{|{\sim}RI(DB)|}$, where ${\sim}RI(DB)$ and ${\sim}RI(DB')$ are the non-restrictive itemsets discovered from DB and DB'
- Sanitization algorithms (Oliveira and Zaïane, 2002; Oliveira and Zaïane, 2003; Saygin et al., 2001)

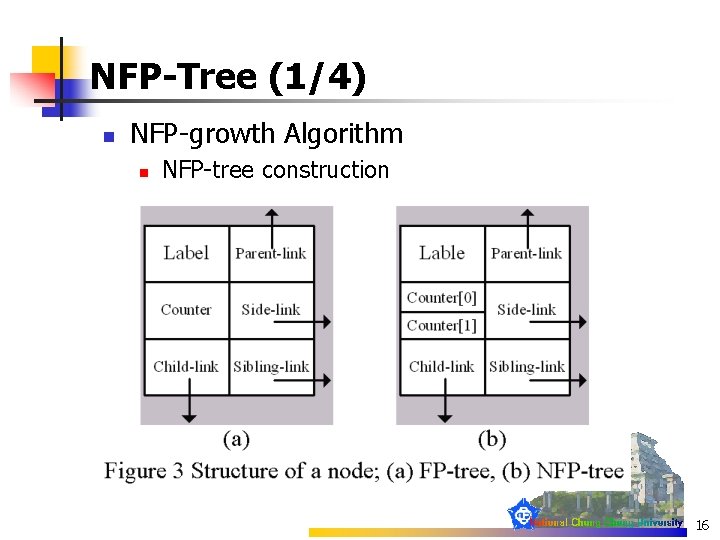

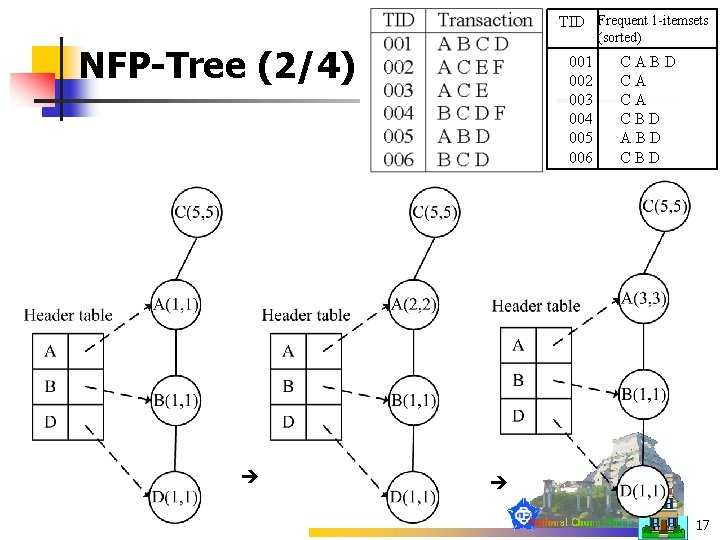

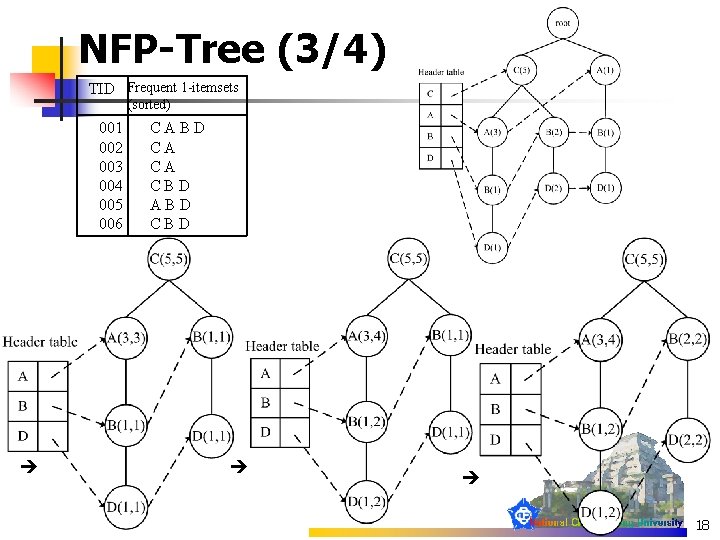

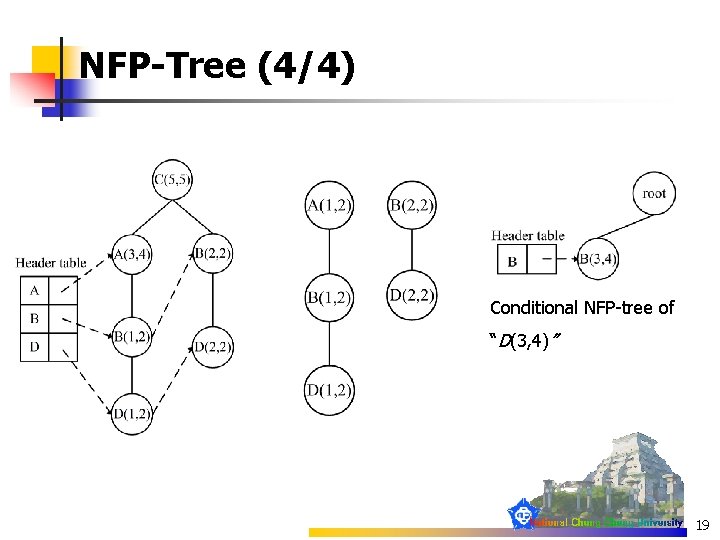

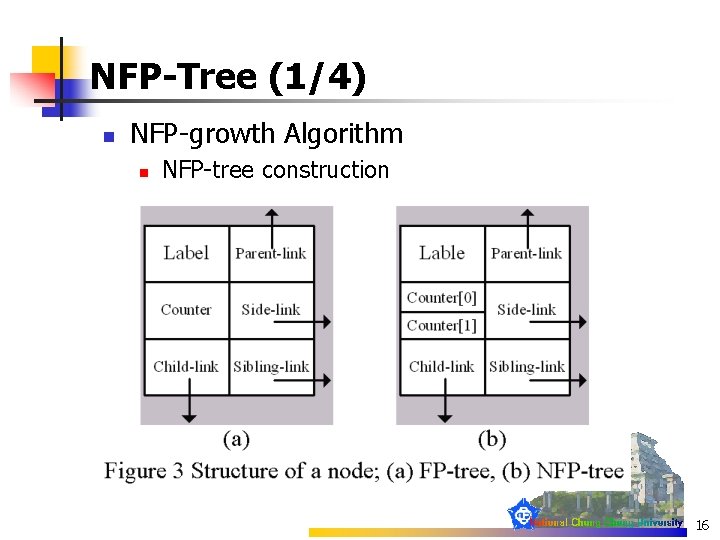
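A misses-cost calculation can be sketched as below. It assumes the usual reading of the measure: the fraction of non-restrictive frequent itemsets of DB that are no longer frequent in the released database DB'. The function name and the example itemsets are hypothetical.

```python
def misses_cost(nonrestrictive_before, nonrestrictive_after):
    """Fraction of non-restrictive frequent itemsets lost by sanitization.

    Assumes every itemset frequent in DB' was also frequent in DB, so the
    number lost equals |~RI(DB)| - |~RI(DB')|.
    """
    before = set(nonrestrictive_before)
    if not before:
        return 0.0
    lost = before - set(nonrestrictive_after)
    return len(lost) / len(before)

# Hypothetical example: four non-restrictive itemsets before, one lost afterwards.
before = {frozenset("AB"), frozenset("BC"), frozenset("CD"), frozenset("AD")}
after = {frozenset("AB"), frozenset("CD"), frozenset("AD")}
cost = misses_cost(before, after)
```

A lower value means the sanitization hid the restrictive itemsets while disturbing fewer legitimate patterns.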

NFP-Tree (1/4)

- NFP-growth algorithm
- NFP-tree construction

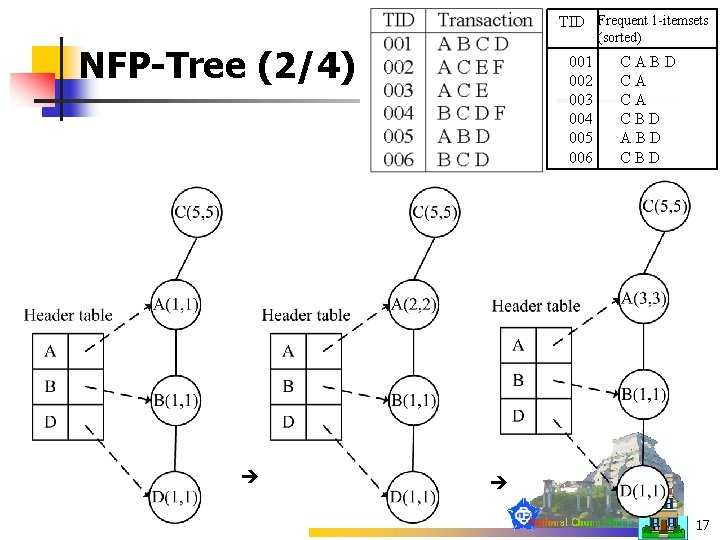

NFP-Tree (2/4)

| TID | Frequent 1-itemsets (sorted) |
| --- | --- |
| 001 | C A B D |
| 002 | C A |
| 003 | C A |
| 004 | C B D |
| 005 | A B D |
| 006 | C B D |

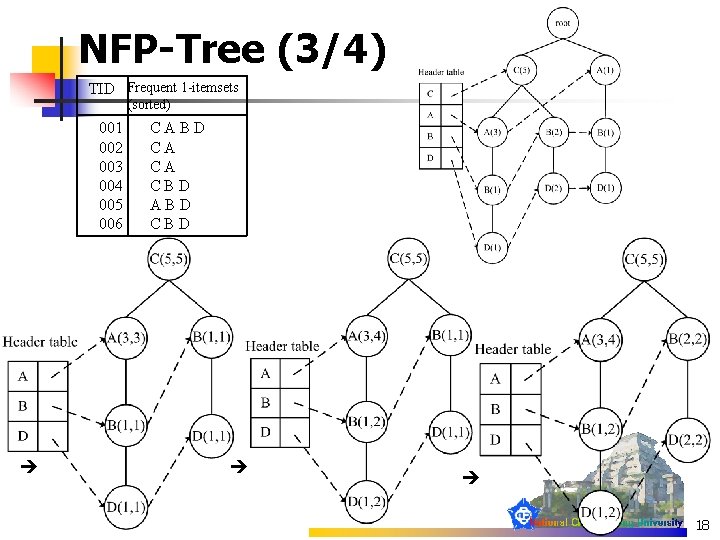

NFP-Tree (3/4)

| TID | Frequent 1-itemsets (sorted) |
| --- | --- |
| 001 | C A B D |
| 002 | C A |
| 003 | C A |
| 004 | C B D |
| 005 | A B D |
| 006 | C B D |

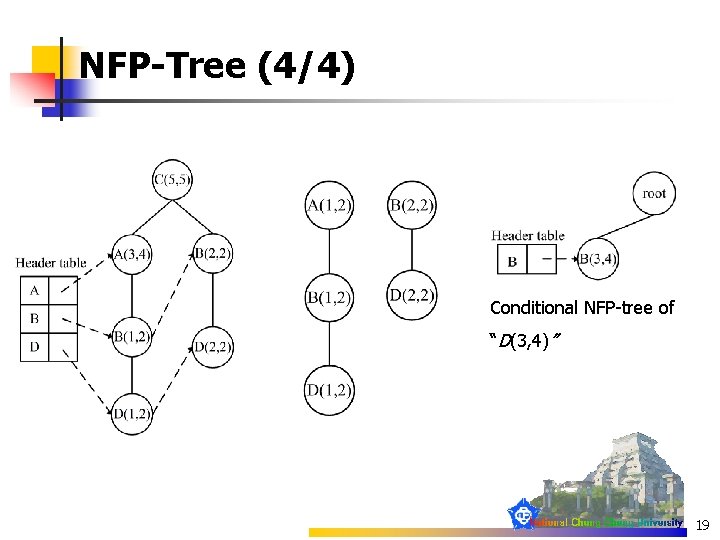

NFP-Tree (4/4)

- Conditional NFP-tree of "D(3, 4)"

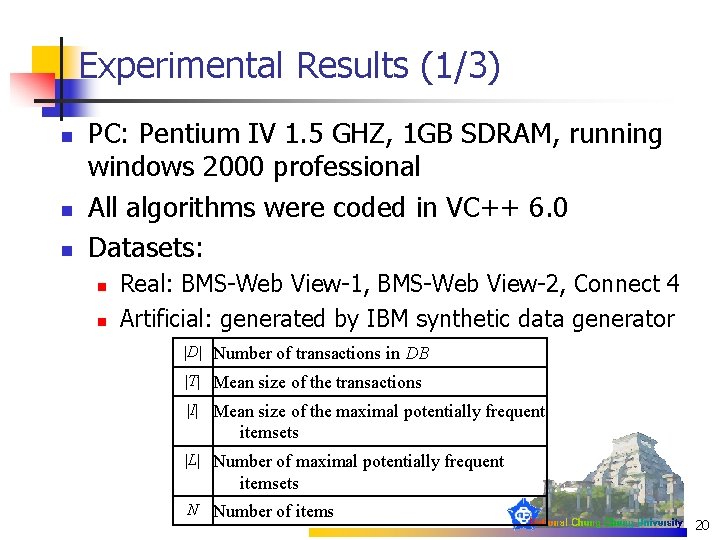

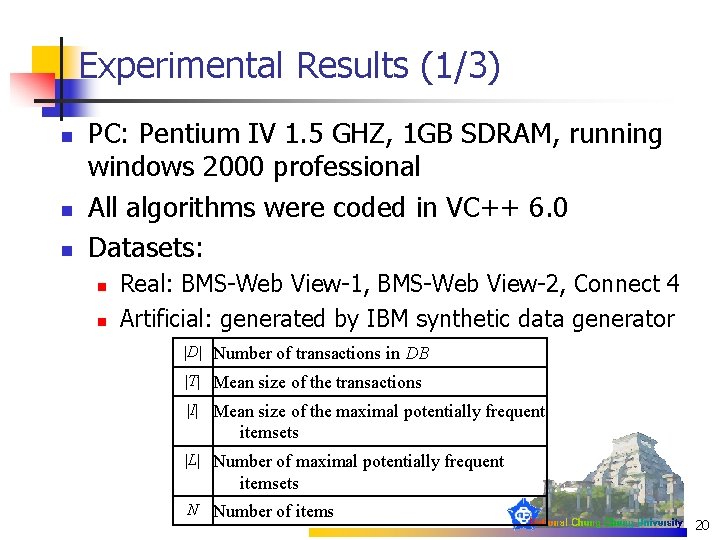

Experimental Results (1/3)

- PC: Pentium IV 1.5 GHz, 1 GB SDRAM, running Windows 2000 Professional
- All algorithms were coded in VC++ 6.0
- Datasets:
  - Real: BMS-WebView-1, BMS-WebView-2, Connect-4
  - Artificial: generated by the IBM synthetic data generator

| Parameter | Meaning |
| --- | --- |
| \|D\| | Number of transactions in DB |
| \|T\| | Mean size of the transactions |
| \|I\| | Mean size of the maximal potentially frequent itemsets |
| \|L\| | Number of maximal potentially frequent itemsets |
| N | Number of items |

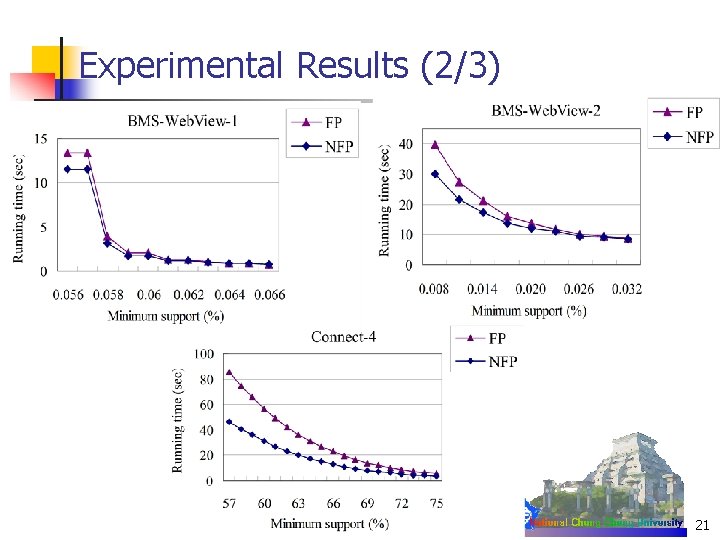

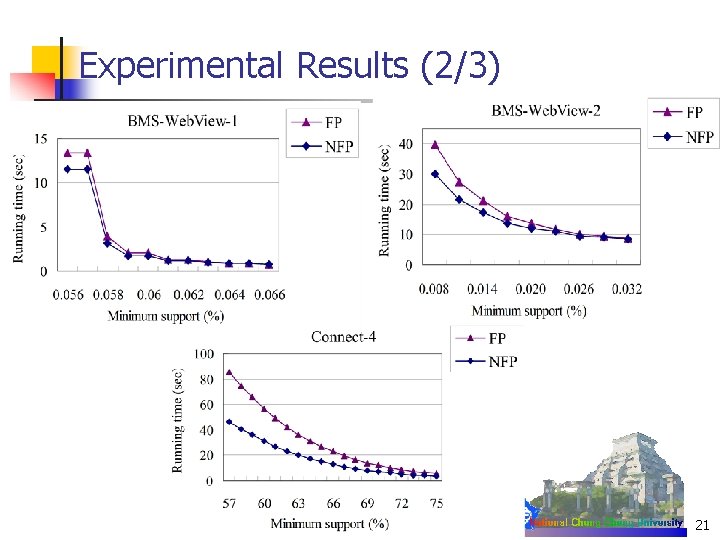

Experimental Results (2/3)

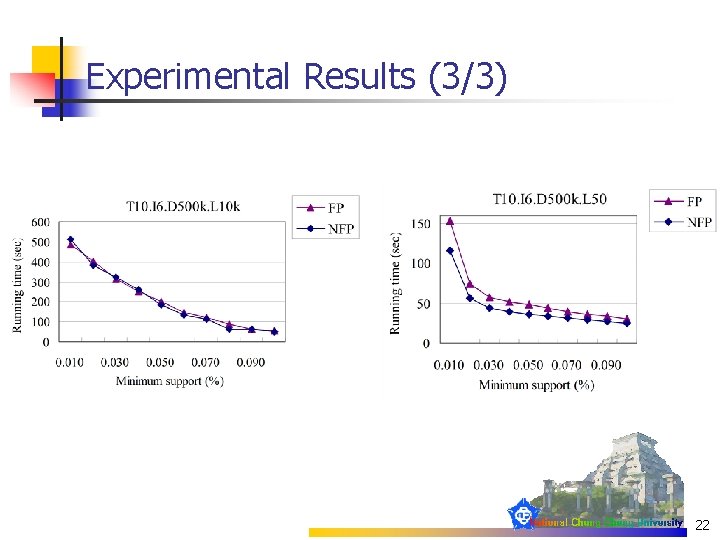

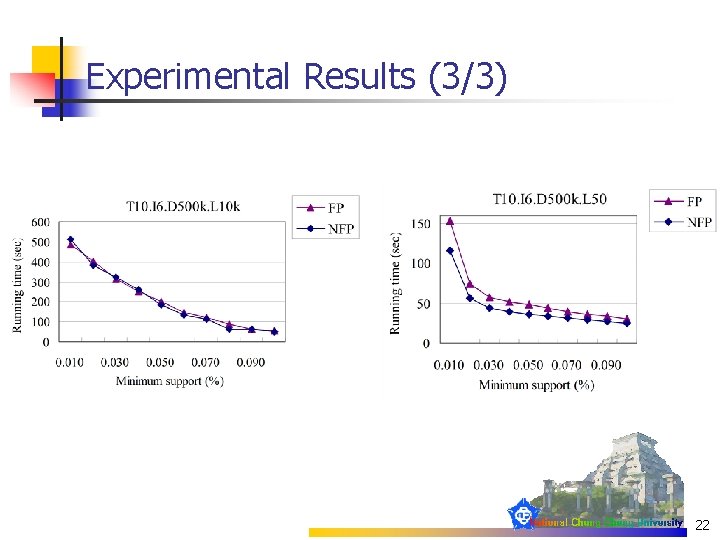

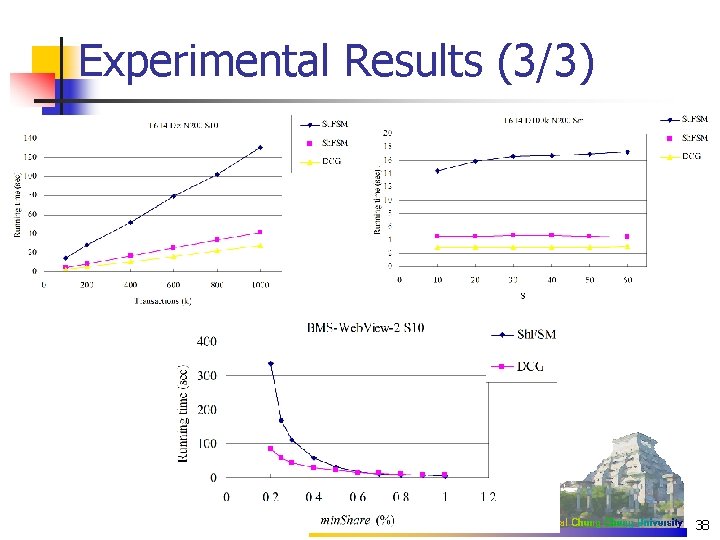

Experimental Results (3/3)

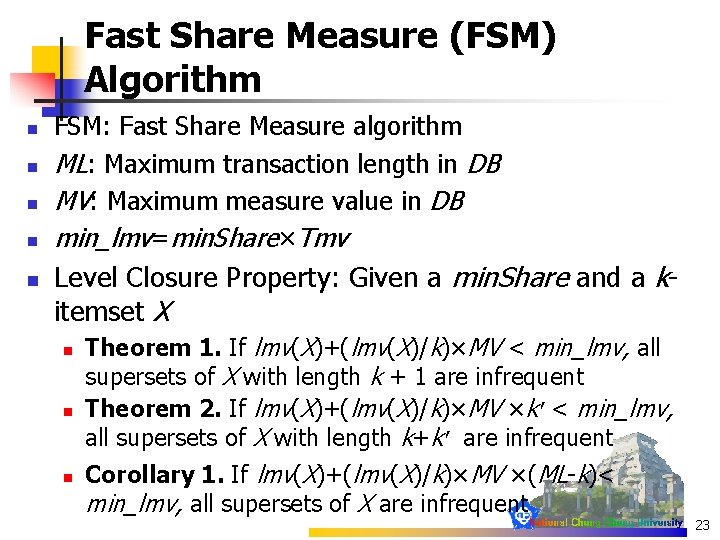

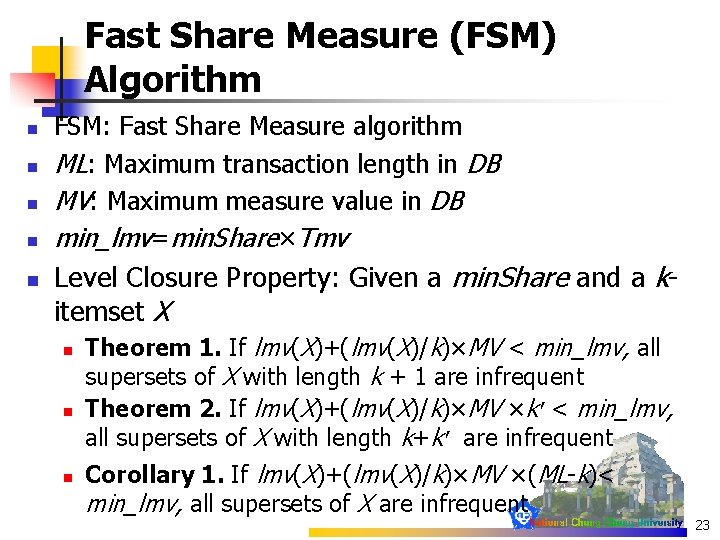

Fast Share Measure (FSM) Algorithm

- FSM: Fast Share Measure algorithm
- ML: maximum transaction length in DB
- MV: maximum measure value in DB
- $min\_lmv = minShare \times Tmv$
- Level closure property: given a minShare and a k-itemset X,
  - Theorem 1. If $lmv(X) + (lmv(X)/k) \times MV < min\_lmv$, all supersets of X with length k+1 are infrequent
  - Theorem 2. If $lmv(X) + (lmv(X)/k) \times MV \times k' < min\_lmv$, all supersets of X with length k+k' are infrequent
  - Corollary 1. If $lmv(X) + (lmv(X)/k) \times MV \times (ML - k) < min\_lmv$, all supersets of X are infrequent

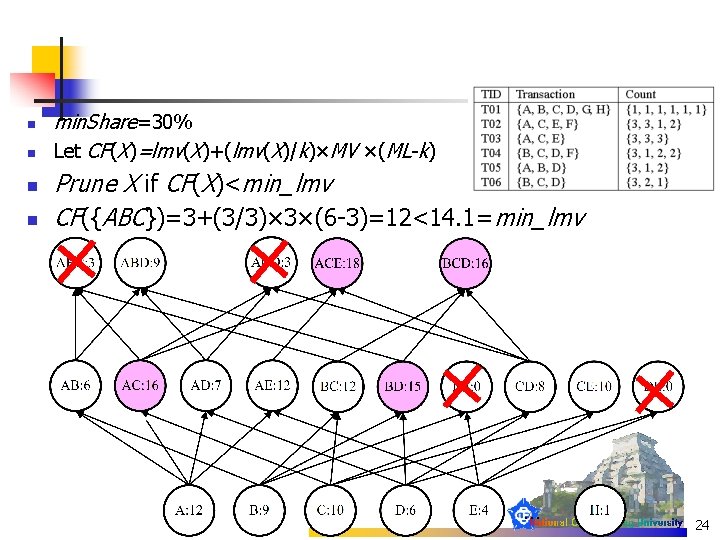

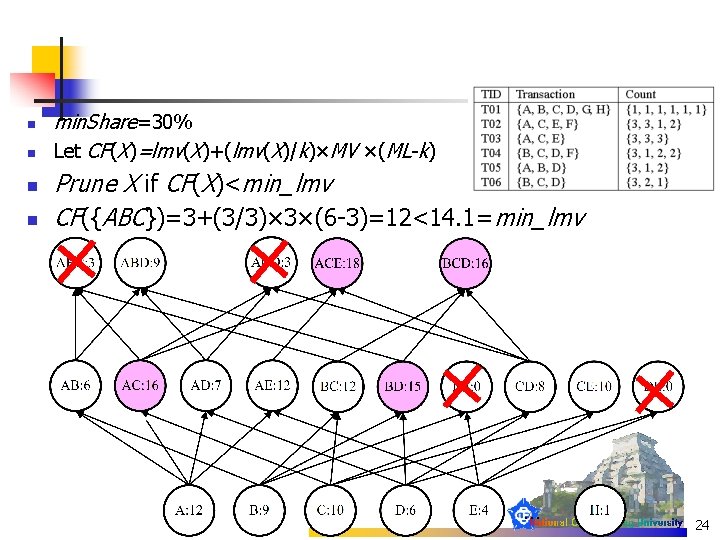

- minShare = 30%
- Let $CF(X) = lmv(X) + (lmv(X)/k) \times MV \times (ML - k)$
- Prune X if $CF(X) < min\_lmv$
- $CF(\{ABC\}) = 3 + (3/3) \times 3 \times (6 - 3) = 12 < 14.1 = min\_lmv$

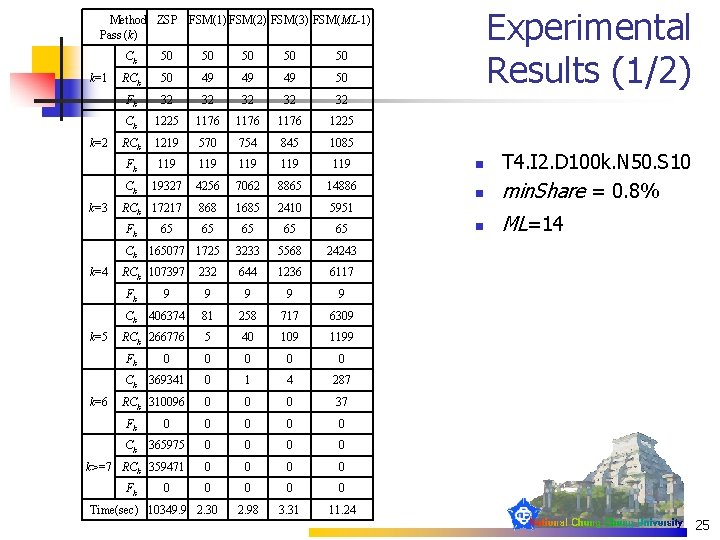

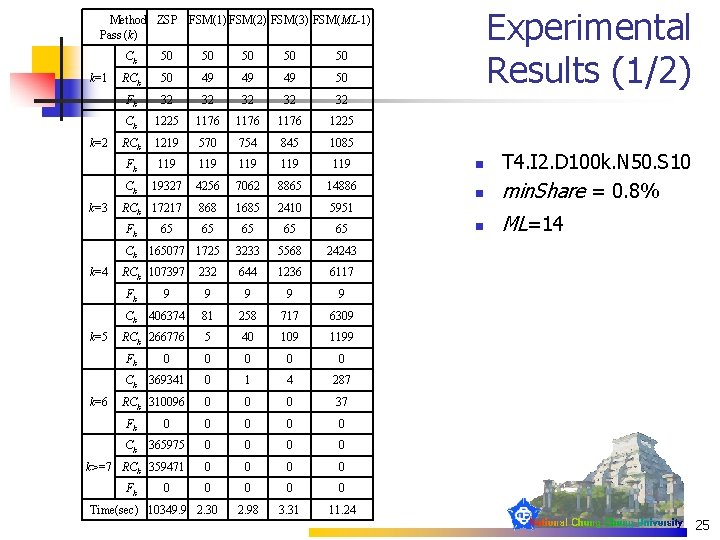
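The critical function and the level-wise bounds from the theorems above can be written as small helpers. This is a sketch with hypothetical function names; the numbers in the example are the ones from the slide (lmv = 3, k = 3, MV = 3, ML = 6, Tmv = 47, minShare = 30%).

```python
def level_bound(lmv_x, k, mv_max, k_prime):
    """Upper bound used in Theorem 2 for supersets of X with length k + k_prime."""
    return lmv_x + (lmv_x / k) * mv_max * k_prime

def critical_function(lmv_x, k, mv_max, ml):
    """CF(X): the bound of Corollary 1, i.e. level_bound with k_prime = ML - k."""
    return level_bound(lmv_x, k, mv_max, ml - k)

def can_prune(lmv_x, k, mv_max, ml, min_lmv):
    """True if no superset of X can be SH-frequent according to Corollary 1."""
    return critical_function(lmv_x, k, mv_max, ml) < min_lmv

# Slide example: CF({ABC}) with lmv = 3, k = 3, MV = 3, ML = 6.
min_lmv = 0.30 * 47
cf_abc = critical_function(3, 3, 3, 6)
prune_abc = can_prune(3, 3, 3, 6, min_lmv)
```

Because the bound grows with ML - k, it is loose for short itemsets in databases with long transactions, which motivates the tighter bounds introduced later in the deck.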

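Experimental Results (1/2)

| Pass (k) | Count | ZSP | FSM(1) | FSM(2) | FSM(3) | FSM(ML-1) |
| --- | --- | --- | --- | --- | --- | --- |
| k=1 | Ck | 50 | 50 | 50 | 50 | 50 |
| k=1 | RCk | 50 | 49 | 49 | 49 | 50 |
| k=1 | Fk | 32 | 32 | 32 | 32 | 32 |
| k=2 | Ck | 1225 | 1176 | 1176 | 1176 | 1225 |
| k=2 | RCk | 1219 | 570 | 754 | 845 | 1085 |
| k=2 | Fk | 119 | 119 | 119 | 119 | 119 |
| k=3 | Ck | 19327 | 4256 | 7062 | 8865 | 14886 |
| k=3 | RCk | 17217 | 868 | 1685 | 2410 | 5951 |
| k=3 | Fk | 65 | 65 | 65 | 65 | 65 |
| k=4 | Ck | 165077 | 1725 | 3233 | 5568 | 24243 |
| k=4 | RCk | 107397 | 232 | 644 | 1236 | 6117 |
| k=4 | Fk | 9 | 9 | 9 | 9 | 9 |
| k=5 | Ck | 406374 | 81 | 258 | 717 | 6309 |
| k=5 | RCk | 266776 | 5 | 40 | 109 | 1199 |
| k=5 | Fk | 0 | 0 | 0 | 0 | 0 |
| k=6 | Ck | 369341 | 0 | 1 | 4 | 287 |
| k=6 | RCk | 310096 | 0 | 0 | 0 | 37 |
| k=6 | Fk | 0 | 0 | 0 | 0 | 0 |
| k>=7 | Ck | 365975 | 0 | 0 | 0 | 0 |
| k>=7 | RCk | 359471 | 0 | 0 | 0 | 0 |
| k>=7 | Fk | 0 | 0 | 0 | 0 | 0 |
| Time (sec) | | 10349.9 | 2.30 | 2.98 | 3.31 | 11.24 |

- Dataset: T4.I2.D100k.N50.S10
- minShare = 0.8%
- ML = 14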

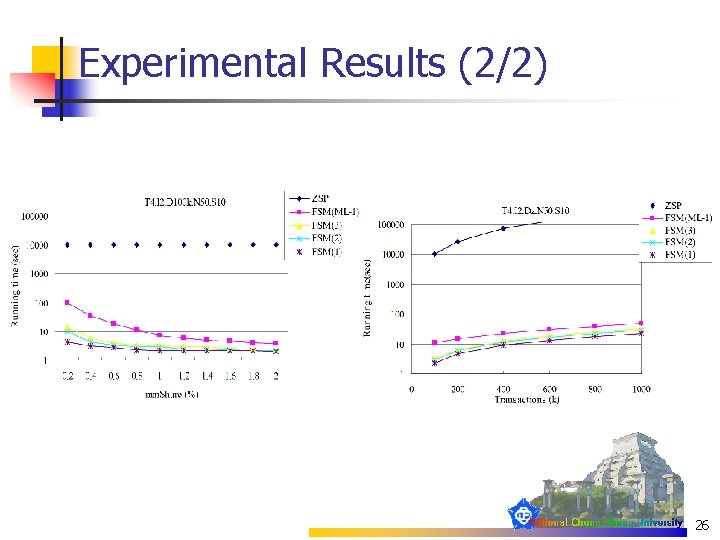

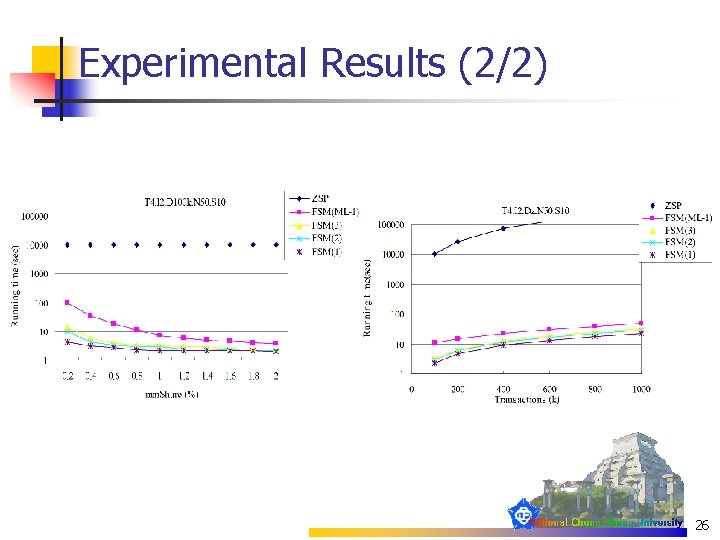

Experimental Results (2/2)

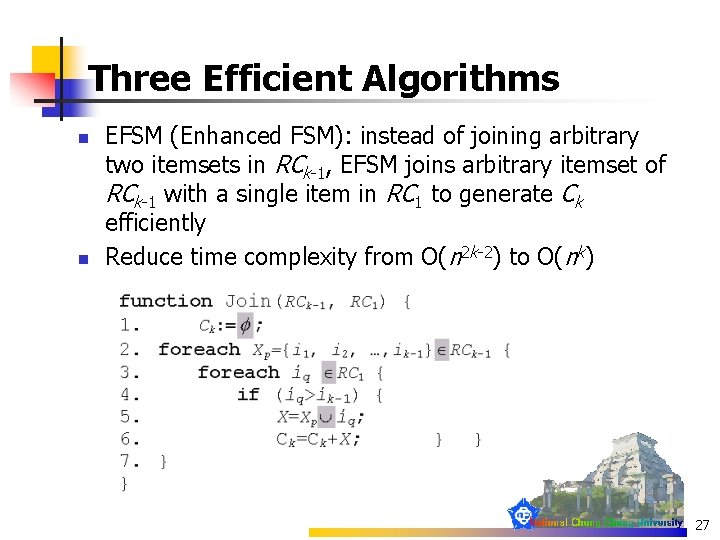

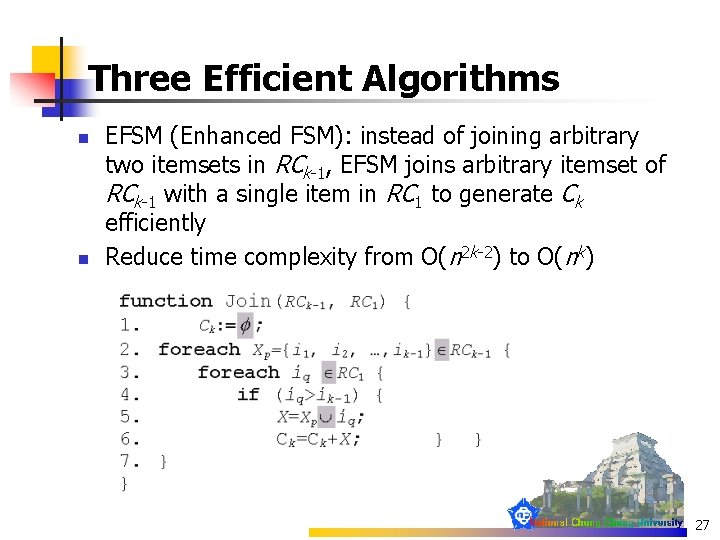

Three Efficient Algorithms

- EFSM (Enhanced FSM): instead of joining two arbitrary itemsets in $RC_{k-1}$, EFSM joins each itemset of $RC_{k-1}$ with a single item in $RC_1$ to generate $C_k$ efficiently
- This reduces the time complexity from $O(n^{2k-2})$ to $O(n^k)$

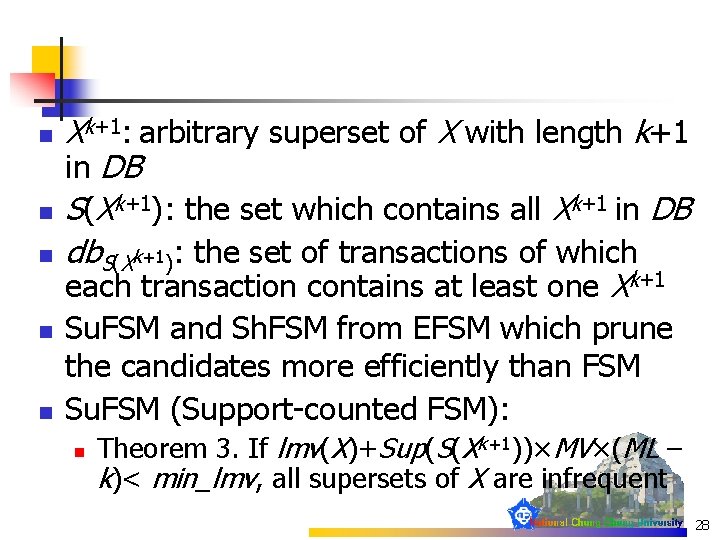

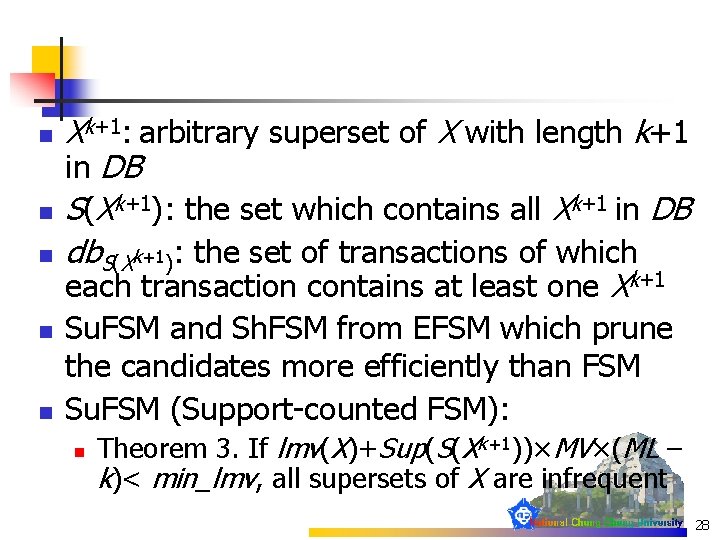
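The EFSM join step can be illustrated as follows. This is a simplified sketch: the name `efsm_candidates` is hypothetical, duplicates are removed with a set, and any ordering or pruning details of the actual EFSM procedure are not reproduced.

```python
def efsm_candidates(rc_prev, rc1_items):
    """Generate C_k by extending each itemset in RC_{k-1} with one item from RC_1."""
    candidates = set()
    for itemset in rc_prev:
        for item in rc1_items:
            if item not in itemset:
                # frozenset | set yields a frozenset, so candidates stay hashable.
                candidates.add(itemset | {item})
    return candidates

# Hypothetical remaining candidates after pass 2, and remaining single items.
rc2 = {frozenset("AB"), frozenset("AC")}
rc1 = ["A", "B", "C", "D"]
c3 = efsm_candidates(rc2, rc1)
```

Each of the at most n^(k-1) itemsets is paired with at most n single items, which is where the O(n^k) figure on the slide comes from, compared with pairing itemsets of RC_{k-1} with each other.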

- $X^{k+1}$: an arbitrary superset of X with length k+1 in DB
- $S(X^{k+1})$: the set that contains all $X^{k+1}$ in DB
- $dbS(X^{k+1})$: the set of transactions each of which contains at least one $X^{k+1}$
- SuFSM and ShFSM are derived from EFSM and prune the candidates more efficiently than FSM
- SuFSM (Support-counted FSM):
  - Theorem 3. If $lmv(X) + Sup(S(X^{k+1})) \times MV \times (ML - k) < min\_lmv$, all supersets of X are infrequent

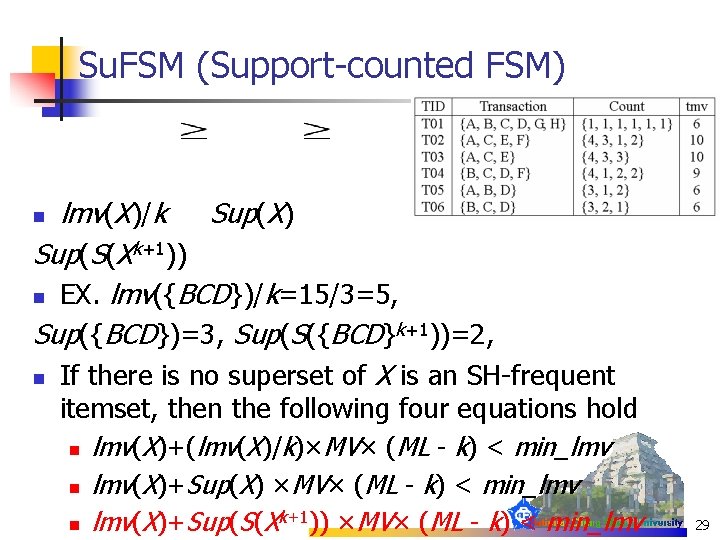

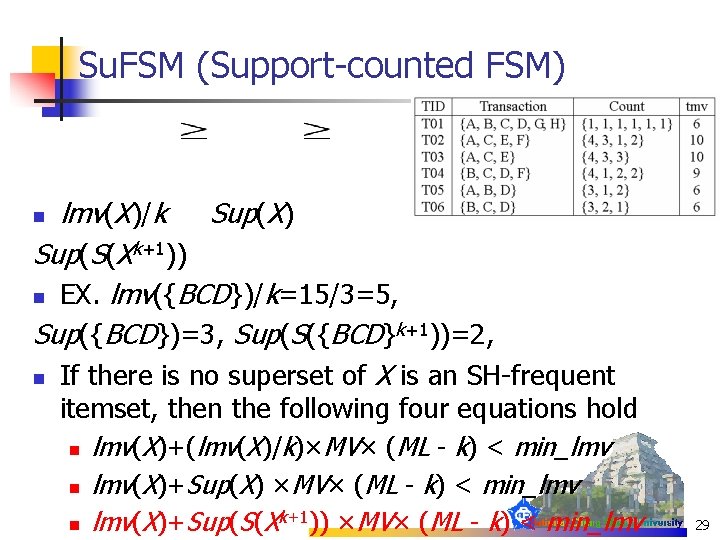

SuFSM (Support-counted FSM)

- $lmv(X)/k \ge Sup(X) \ge Sup(S(X^{k+1}))$
- Ex. $lmv(\{BCD\})/k = 15/3 = 5$, $Sup(\{BCD\}) = 3$, $Sup(S(\{BCD\}^{k+1})) = 2$
- If no superset of X is an SH-frequent itemset, then the following inequalities hold:
  - $lmv(X) + (lmv(X)/k) \times MV \times (ML - k) < min\_lmv$
  - $lmv(X) + Sup(X) \times MV \times (ML - k) < min\_lmv$
  - $lmv(X) + Sup(S(X^{k+1})) \times MV \times (ML - k) < min\_lmv$

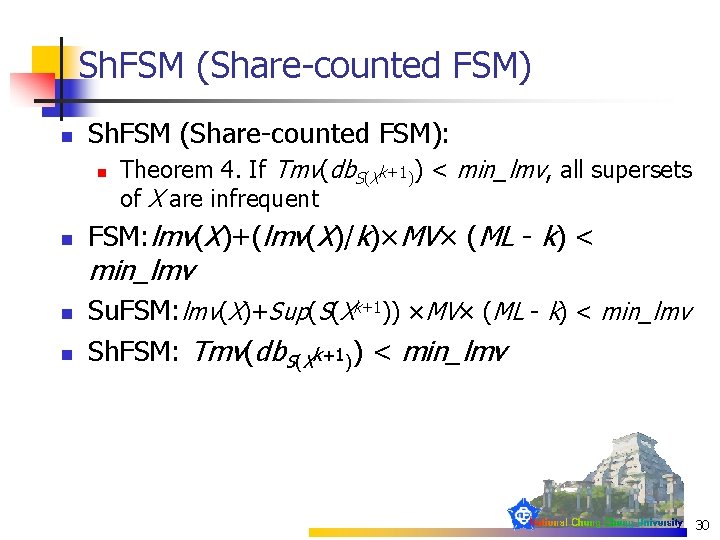

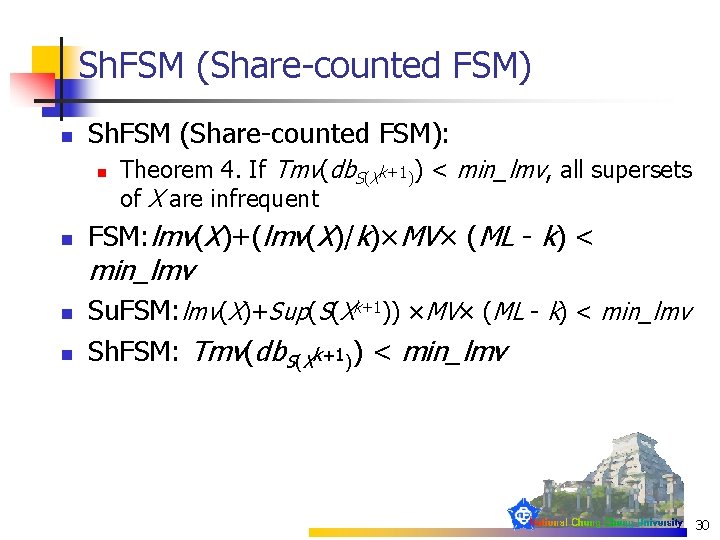

ShFSM (Share-counted FSM)

- ShFSM (Share-counted FSM):
  - Theorem 4. If $Tmv(dbS(X^{k+1})) < min\_lmv$, all supersets of X are infrequent
- Pruning conditions compared:
  - FSM: $lmv(X) + (lmv(X)/k) \times MV \times (ML - k) < min\_lmv$
  - SuFSM: $lmv(X) + Sup(S(X^{k+1})) \times MV \times (ML - k) < min\_lmv$
  - ShFSM: $Tmv(dbS(X^{k+1})) < min\_lmv$

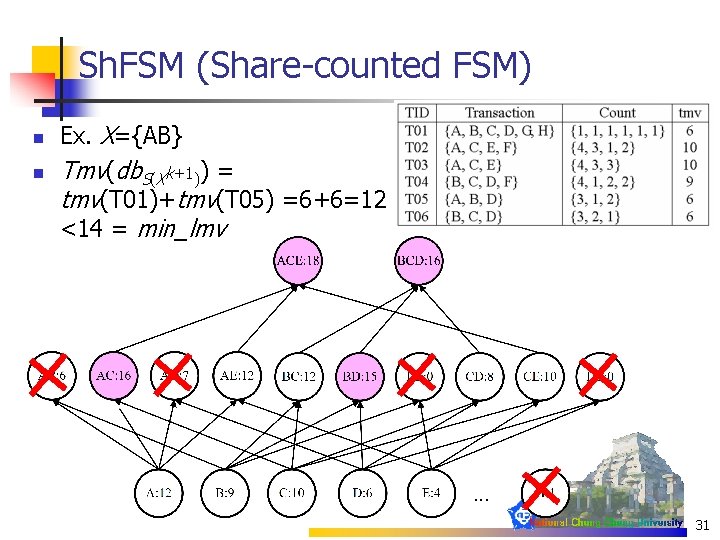

ShFSM (Share-counted FSM)

- Ex. X = {AB}
- $Tmv(dbS(X^{k+1})) = tmv(T_{01}) + tmv(T_{05}) = 6 + 6 = 12 < 14 = min\_lmv$

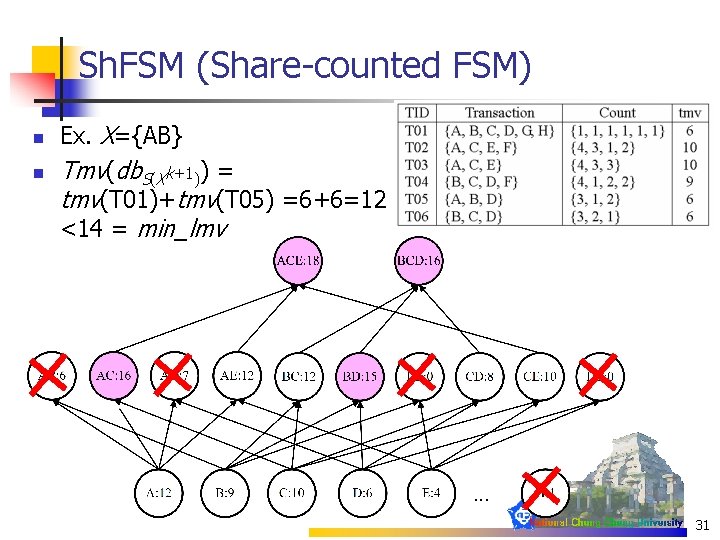

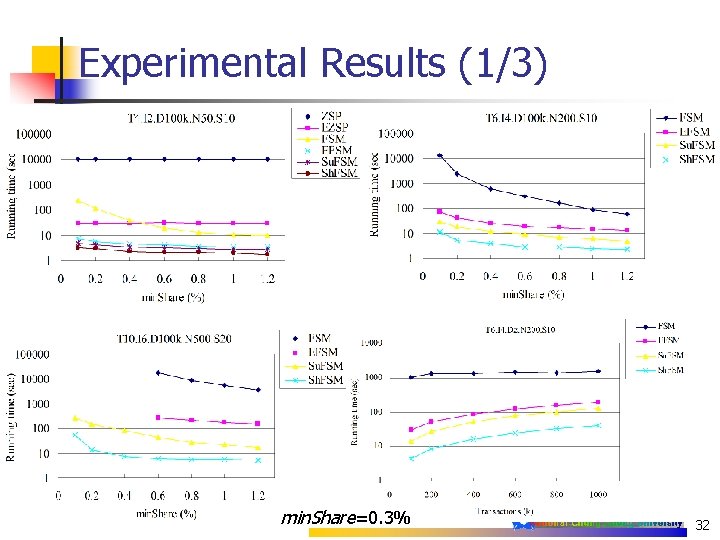
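The three pruning bounds (FSM, SuFSM, ShFSM) can be compared side by side on a small example. The database below is hypothetical, and the sketch assumes that Sup(S(X^{k+1})) counts the transactions that contain X together with at least one further item, i.e. the size of dbS(X^{k+1}).

```python
# Hypothetical database: transaction id -> {item: measure value}.
DB = {
    "T01": {"A": 1, "B": 1, "C": 1},
    "T02": {"B": 2, "C": 3, "D": 1},
    "T03": {"A": 2, "D": 1},
    "T04": {"B": 1, "C": 1},
}

def contains(transaction, itemset):
    return all(i in transaction for i in itemset)

def pruning_bounds(itemset, db):
    """Return the (FSM, SuFSM, ShFSM) upper bounds for supersets of `itemset`."""
    k = len(itemset)
    ml = max(len(t) for t in db.values())                    # maximum transaction length
    mv = max(q for t in db.values() for q in t.values())     # maximum measure value
    lmv = sum(sum(t[i] for i in itemset)
              for t in db.values() if contains(t, itemset))
    # dbS(X^{k+1}): transactions holding X plus at least one more item.
    db_s = [t for t in db.values() if contains(t, itemset) and len(t) > k]
    fsm = lmv + (lmv / k) * mv * (ml - k)
    sufsm = lmv + len(db_s) * mv * (ml - k)
    shfsm = sum(sum(t.values()) for t in db_s)
    return fsm, sufsm, shfsm

fsm_b, sufsm_b, shfsm_b = pruning_bounds({"B", "C"}, DB)
```

For {B, C} in this toy database the bounds are 22.5, 15 and 9. With a min_lmv of 9.8 (70% of the total measure value 14), only the ShFSM condition would prune the supersets of {B, C}.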

Experimental Results (1/3): minShare = 0.3%

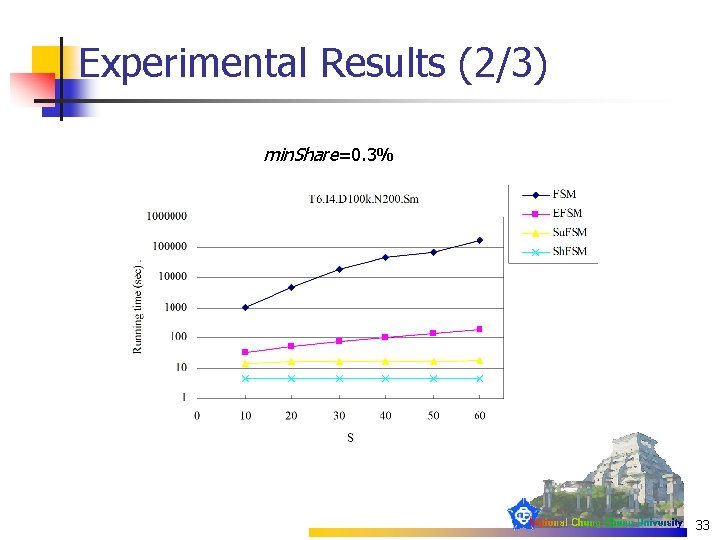

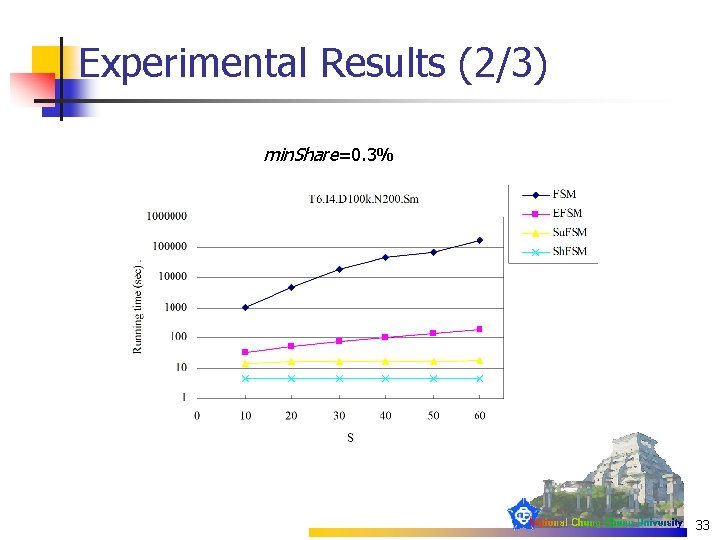

Experimental Results (2/3): minShare = 0.3%

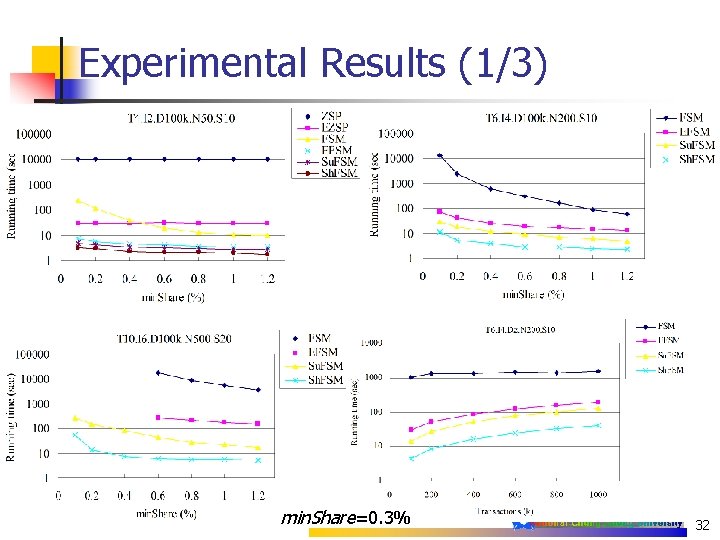

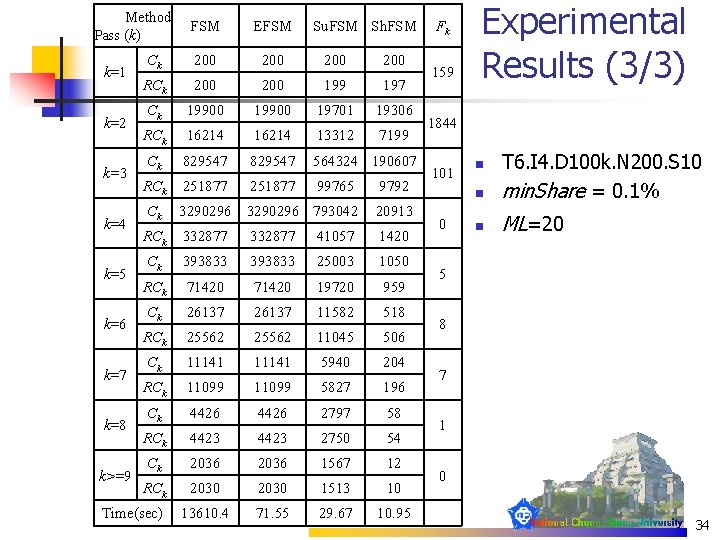

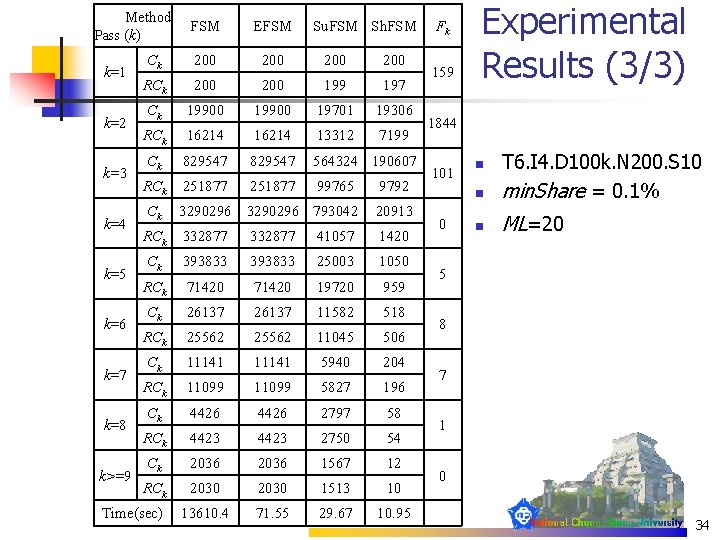

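Experimental Results (3/3)

| Pass (k) | Count | FSM | EFSM | SuFSM | ShFSM |
| --- | --- | --- | --- | --- | --- |
| k=1 | Ck | 200 | 200 | 200 | 200 |
| k=1 | RCk | 200 | 200 | 199 | 197 |
| k=2 | Ck | 19900 | 19900 | 19701 | 19306 |
| k=2 | RCk | 16214 | 16214 | 13312 | 7199 |
| k=3 | Ck | 829547 | 829547 | 564324 | 190607 |
| k=3 | RCk | 251877 | 251877 | 99765 | 9792 |
| k=4 | Ck | 3290296 | 3290296 | 793042 | 20913 |
| k=4 | RCk | 332877 | 332877 | 41057 | 1420 |
| k=5 | Ck | 393833 | 393833 | 25003 | 1050 |
| k=5 | RCk | 71420 | 71420 | 19720 | 959 |
| k=6 | Ck | 26137 | 26137 | 11582 | 518 |
| k=6 | RCk | 25562 | 25562 | 11045 | 506 |
| k=7 | Ck | 11141 | 11141 | 5940 | 204 |
| k=7 | RCk | 11099 | 11099 | 5827 | 196 |
| k=8 | Ck | 4426 | 4426 | 2797 | 58 |
| k=8 | RCk | 4423 | 4423 | 2750 | 54 |
| k>=9 | Ck | 2036 | 2036 | 1567 | 12 |
| k>=9 | RCk | 2030 | 2030 | 1513 | 10 |
| Time (sec) | | 13610.4 | 71.55 | 29.67 | 10.95 |

- FSM and EFSM share one value per row in the source table
- Fk values, in the order they appear in the source (their alignment to passes is not recoverable): 159, 1844, 101, 0, 5, 8, 7, 1, 0
- Dataset: T6.I4.D100k.N200.S10
- minShare = 0.1%
- ML = 20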

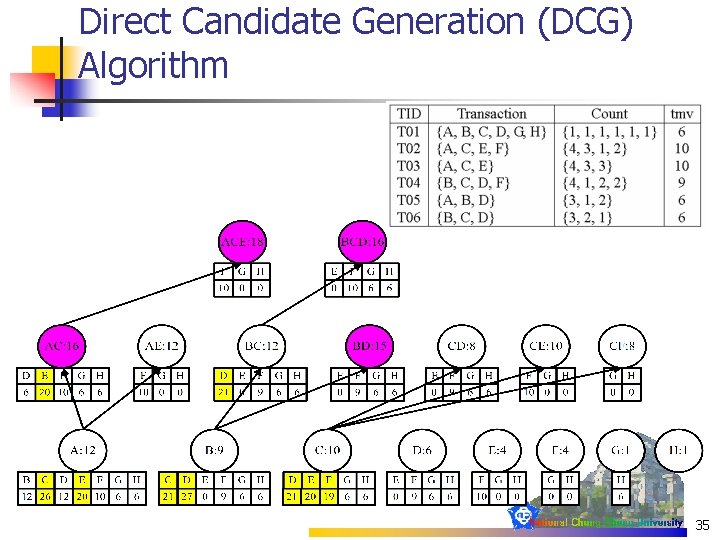

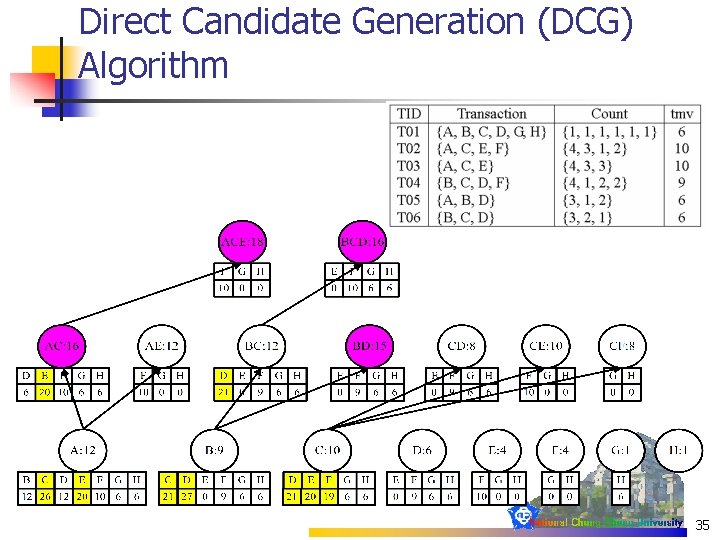

Direct Candidate Generation (DCG) Algorithm

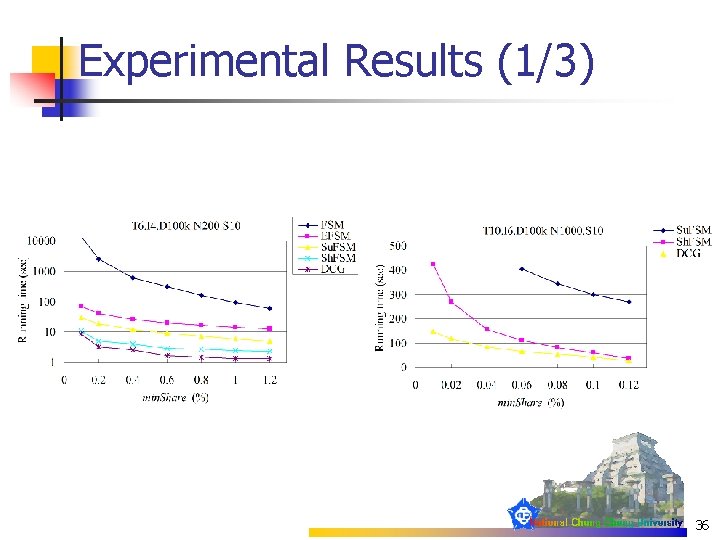

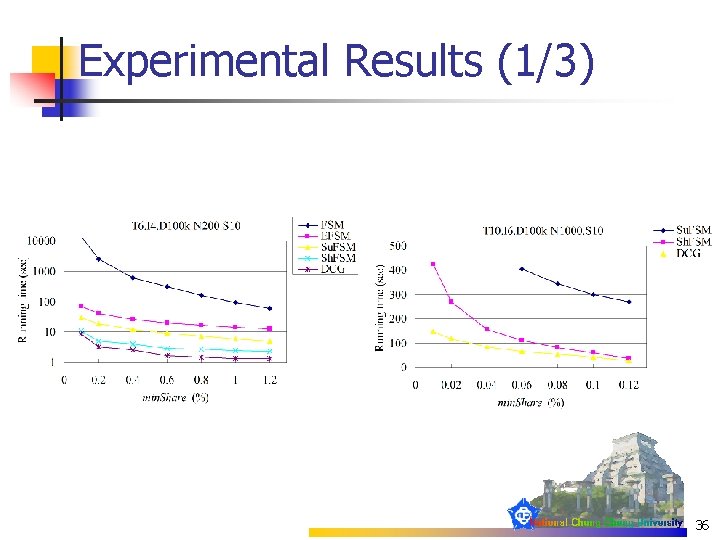

Experimental Results (1/3)

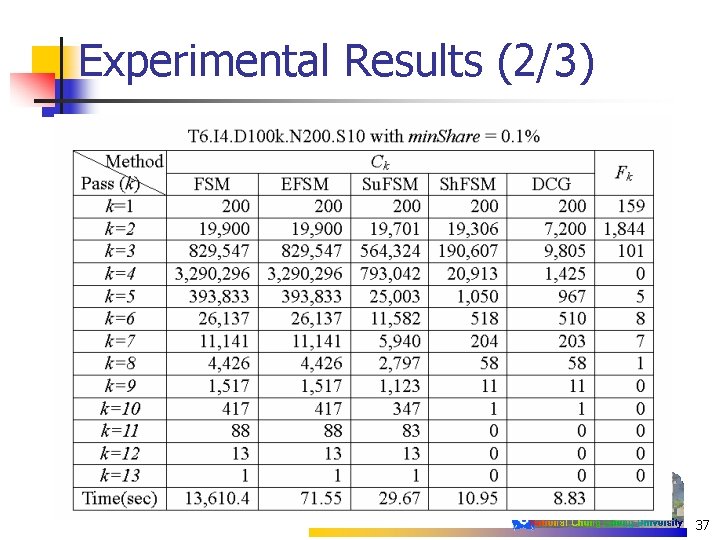

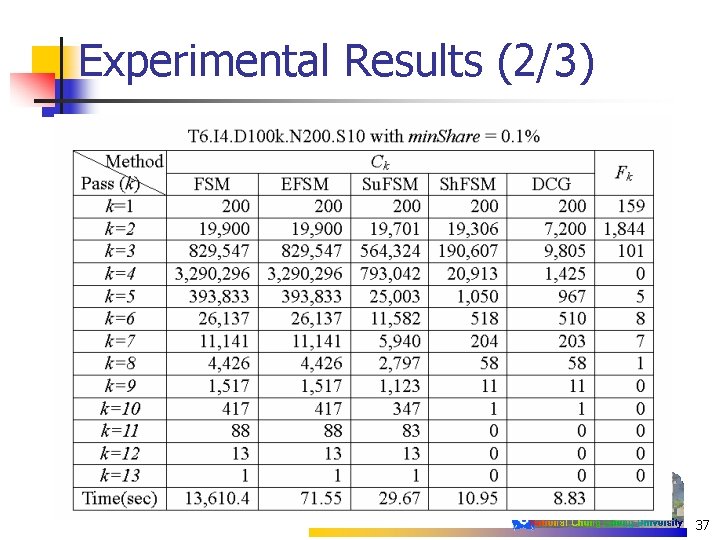

Experimental Results (2/3)

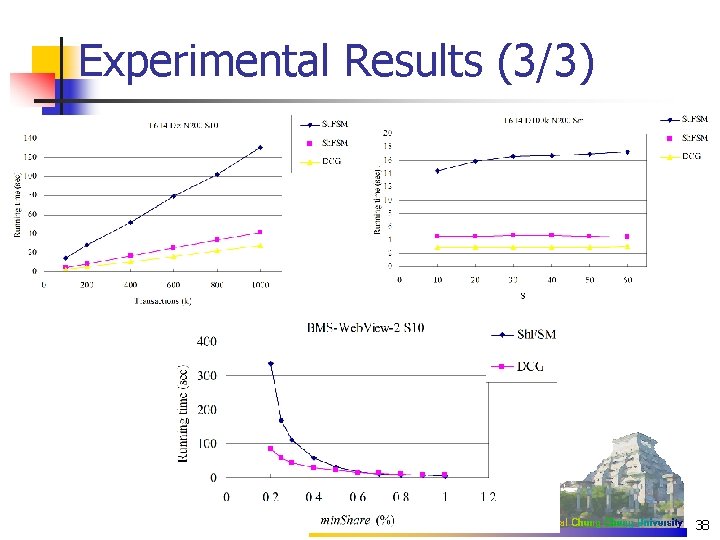

Experimental Results (3/3)

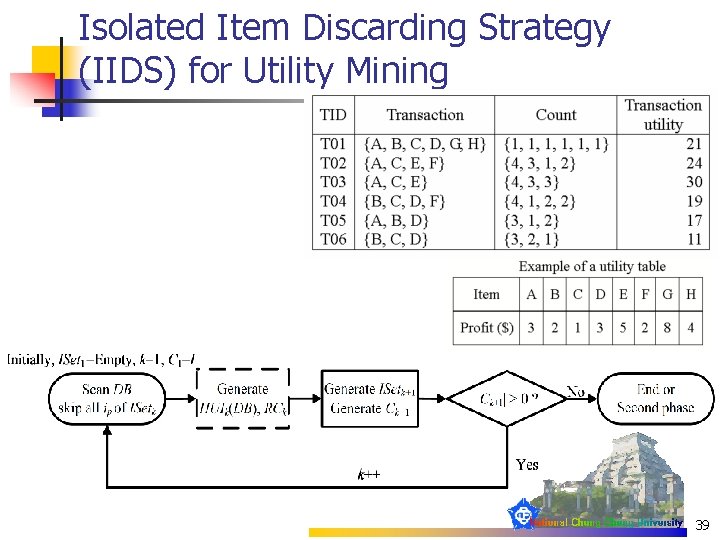

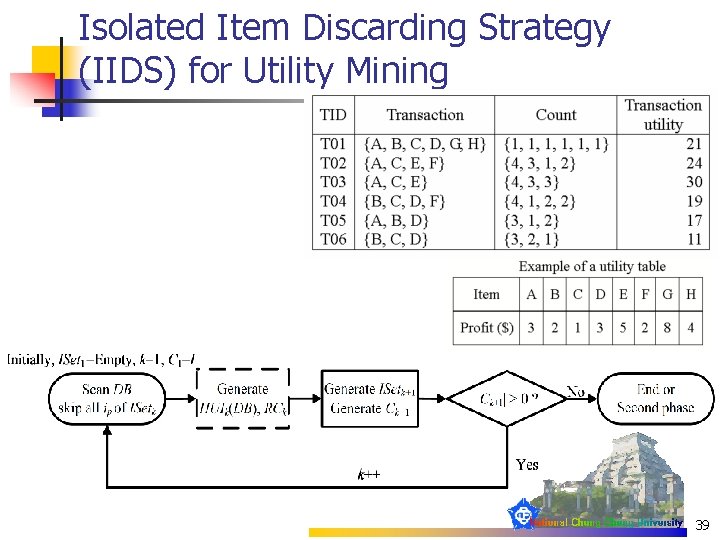

Isolated Item Discarding Strategy (IIDS) for Utility Mining

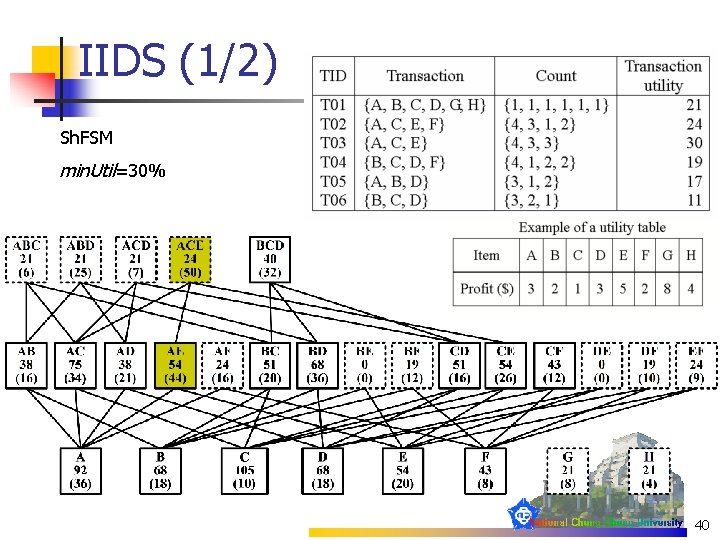

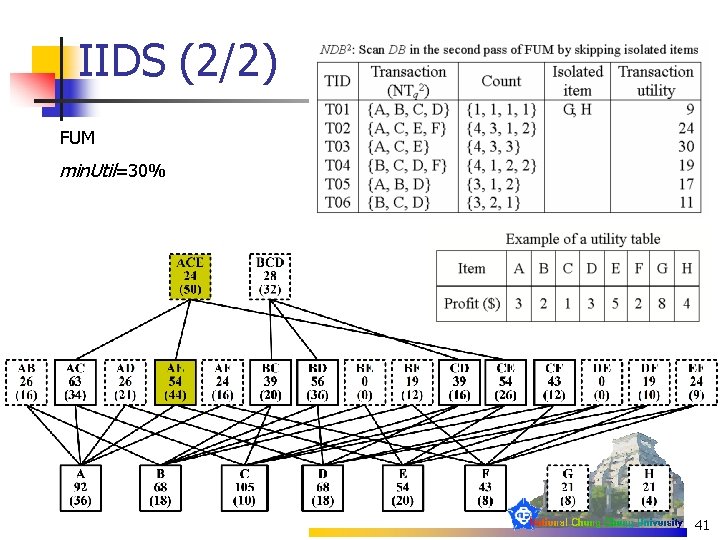

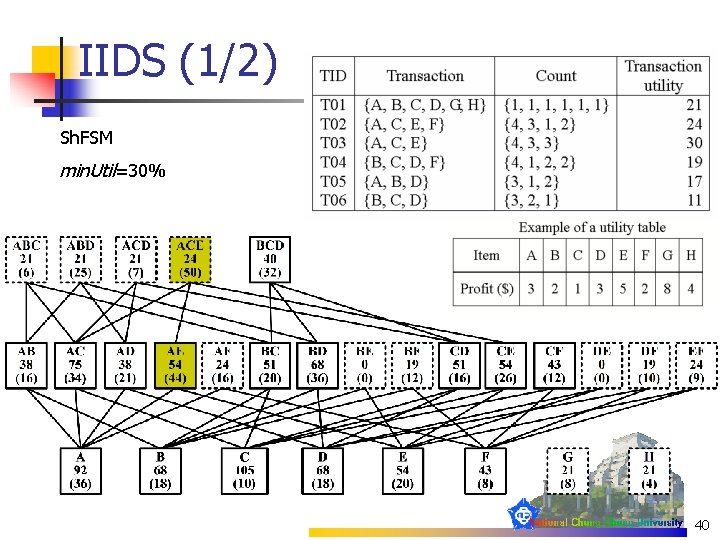

IIDS (1/2): ShFSM, minUtil = 30%

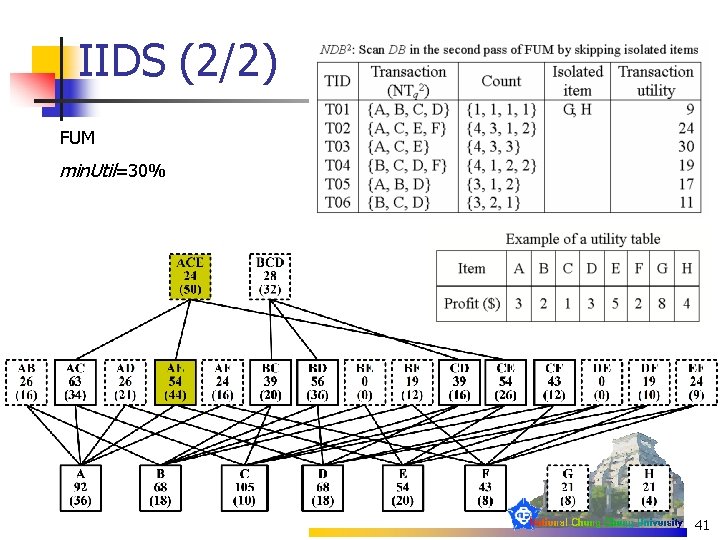

IIDS (2/2): FUM, minUtil = 30%

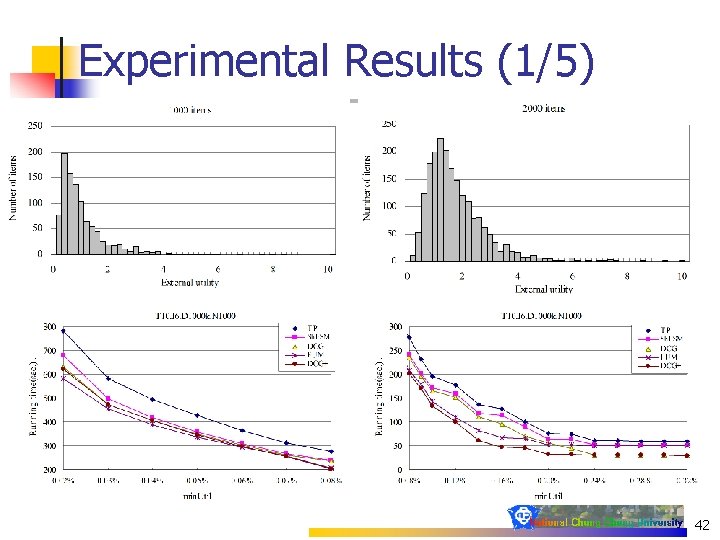

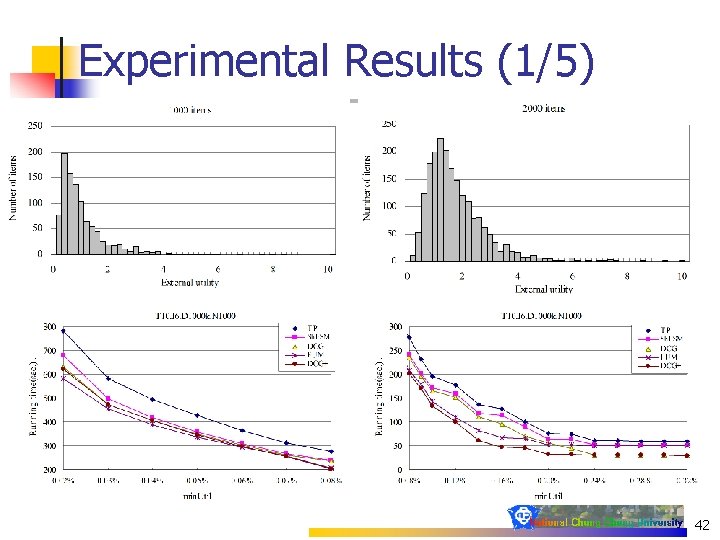

Experimental Results (1/5)

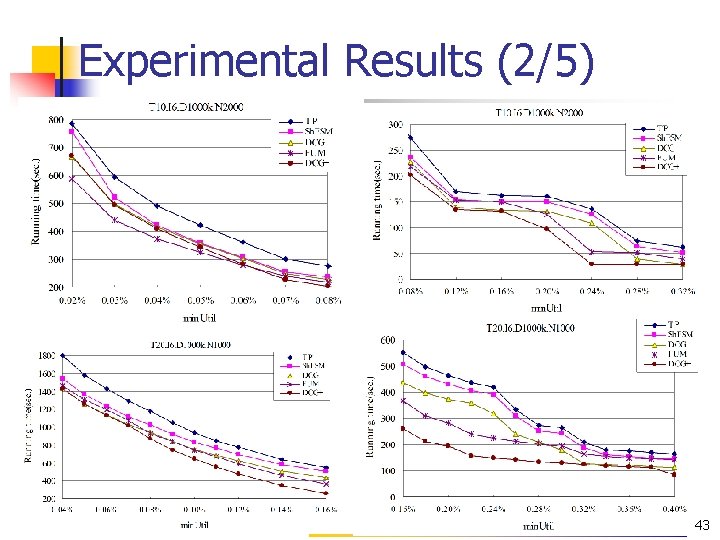

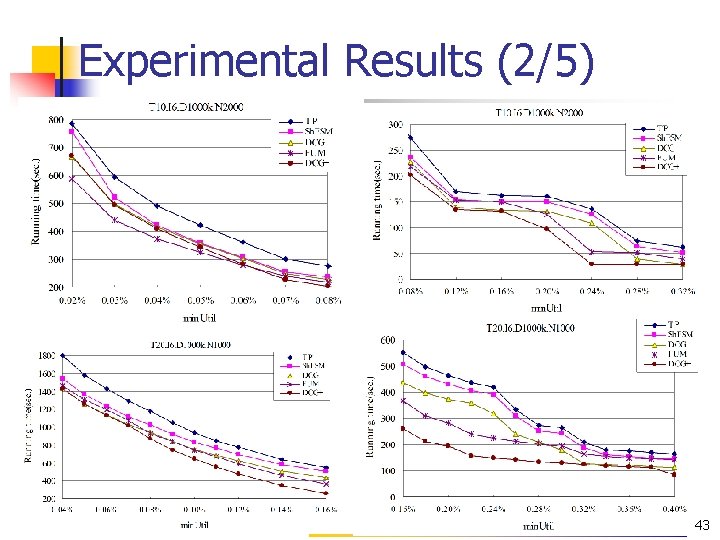

Experimental Results (2/5)

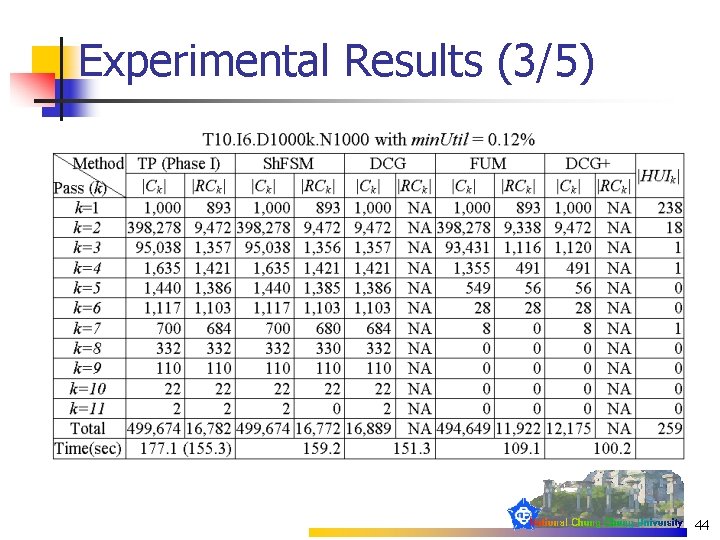

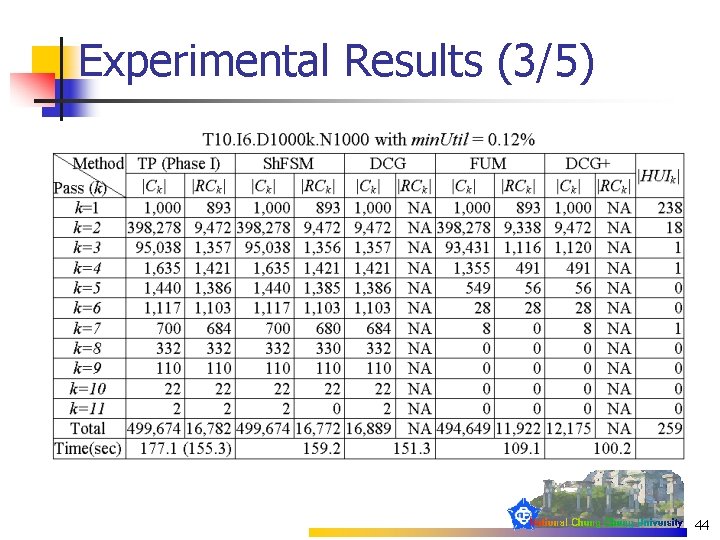

Experimental Results (3/5)

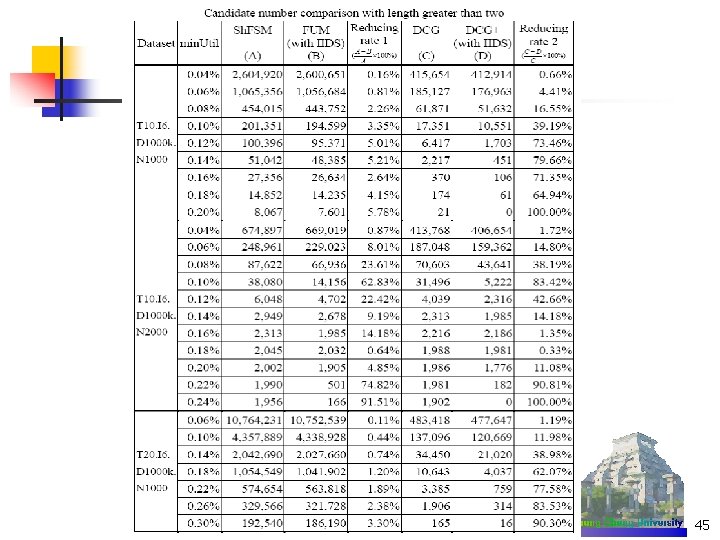

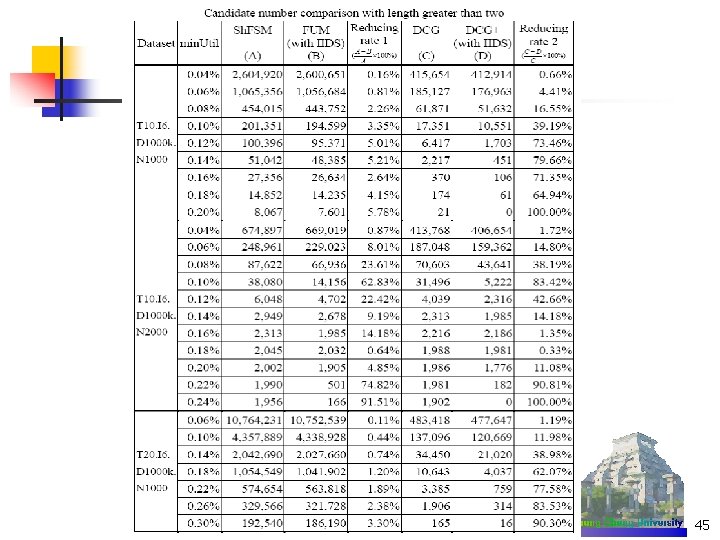

Experimental Results (4/5)

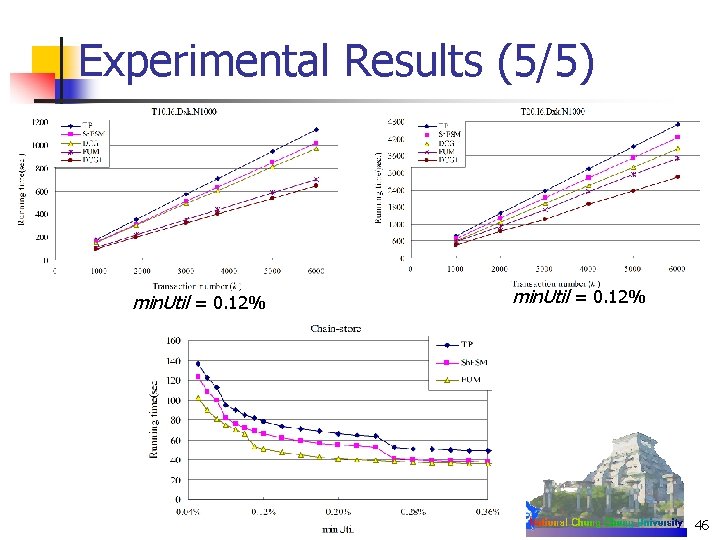

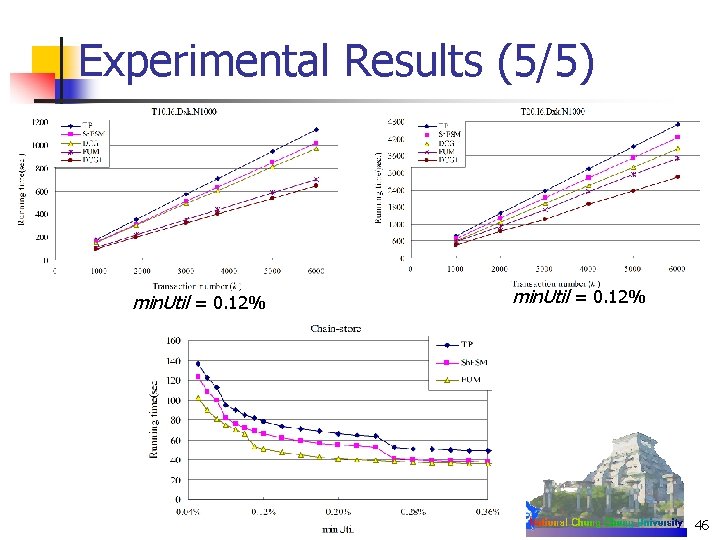

Experimental Results (5/5): minUtil = 0.12%

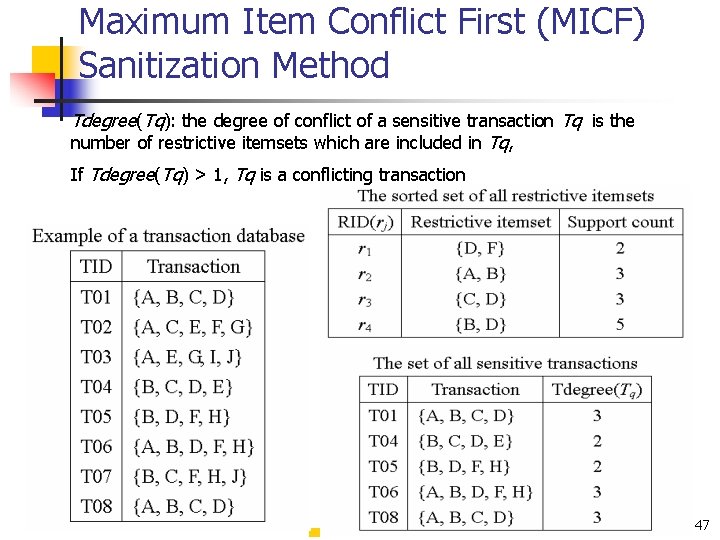

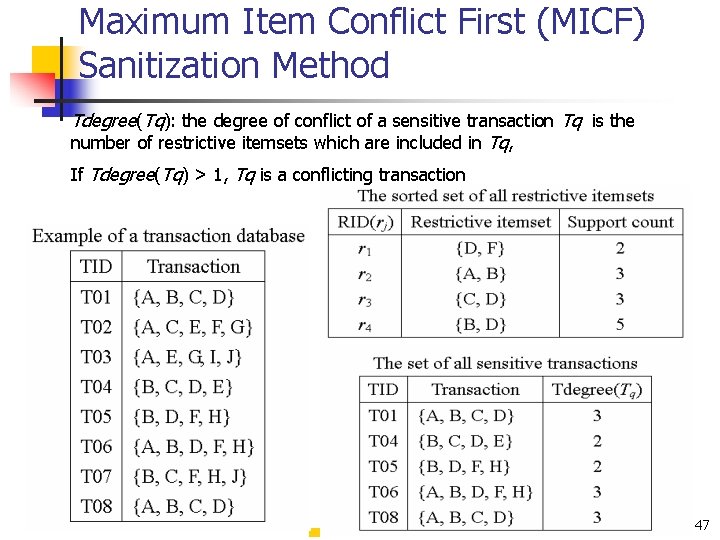

Maximum Item Conflict First (MICF) Sanitization Method

- Tdegree($T_q$): the degree of conflict of a sensitive transaction $T_q$, defined as the number of restrictive itemsets included in $T_q$
- If Tdegree($T_q$) > 1, $T_q$ is a conflicting transaction

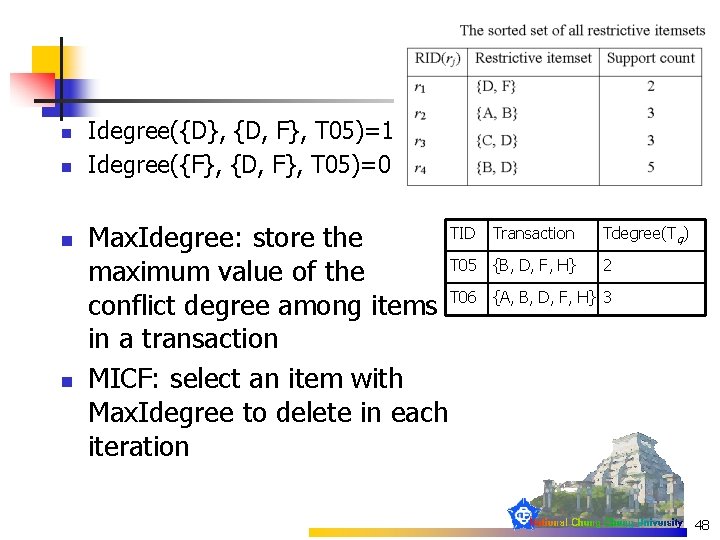

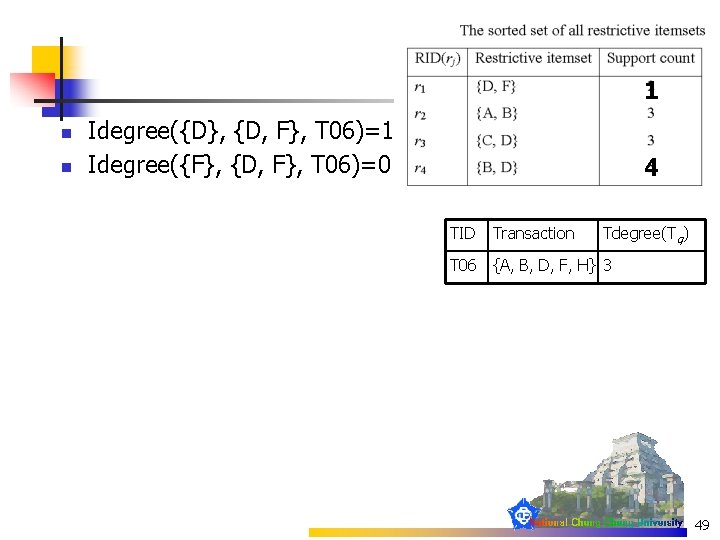

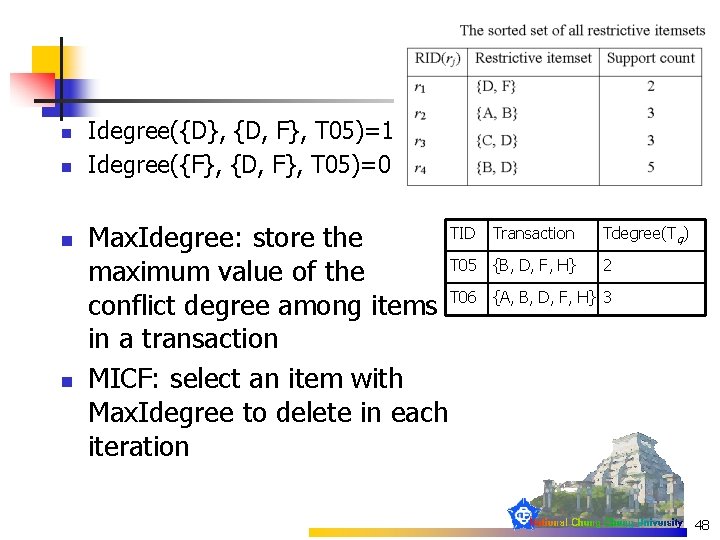

- Idegree({D}, {D, F}, T05) = 1
- Idegree({F}, {D, F}, T05) = 0
- MaxIdegree: stores the maximum value of the conflict degree among the items in a transaction
- MICF: select an item with MaxIdegree to delete in each iteration

| TID | Transaction | Tdegree(Tq) |
| --- | --- | --- |
| T05 | {B, D, F, H} | 2 |
| T06 | {A, B, D, F, H} | 3 |

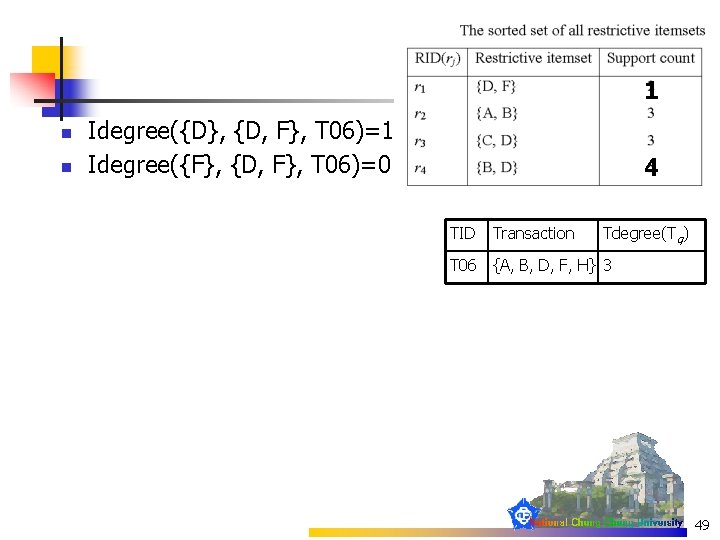

- Idegree({D}, {D, F}, T06) = 1
- Idegree({F}, {D, F}, T06) = 0

| TID | Transaction | Tdegree(Tq) |
| --- | --- | --- |
| T06 | {A, B, D, F, H} | 3 |

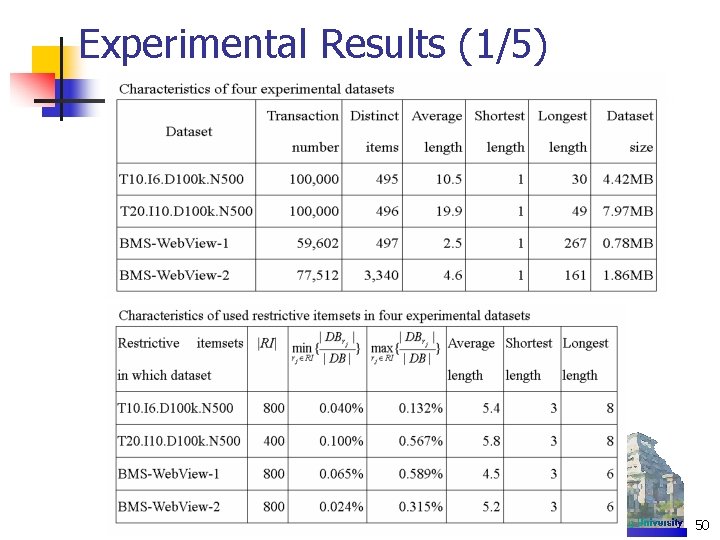

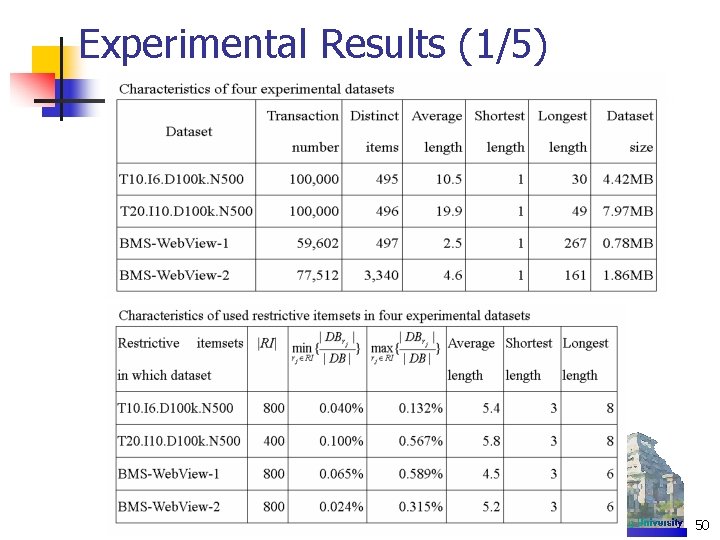
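The conflict degrees and the item choice made by MICF can be sketched as follows. Two things are assumptions here: the restrictive itemsets in `RI` are hypothetical (chosen so that the Tdegree and Idegree values match the slide examples for T05 and T06), and Idegree(item, X, Tq) is read as the number of restrictive itemsets other than X that are contained in Tq and include the item. Ties are broken by taking the first maximum found, which the slides do not specify.

```python
# Hypothetical restrictive itemsets; the actual set is only in the slide images.
RI = [frozenset("DF"), frozenset("BD"), frozenset("AH")]

def tdegree(transaction):
    """Number of restrictive itemsets contained in the transaction."""
    return sum(1 for r in RI if r <= transaction)

def idegree(item, itemset, transaction):
    """Assumed reading: other restrictive itemsets in the transaction that share `item`."""
    return sum(1 for r in RI
               if r != itemset and r <= transaction and item in r)

def micf_victim(transaction):
    """Pick an item with the maximum conflict degree among the restrictive itemsets present."""
    best_item, best_degree = None, -1
    for itemset in RI:
        if itemset <= transaction:
            for item in sorted(itemset):
                degree = idegree(item, itemset, transaction)
                if degree > best_degree:
                    best_item, best_degree = item, degree
    return best_item

T05 = frozenset("BDFH")
T06 = frozenset("ABDFH")
victim = micf_victim(T05)
```

Deleting D from T05 removes both restrictive itemsets contained in it at once, which is the effect the maximum-conflict-first choice aims for.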

Experimental Results (1/5)

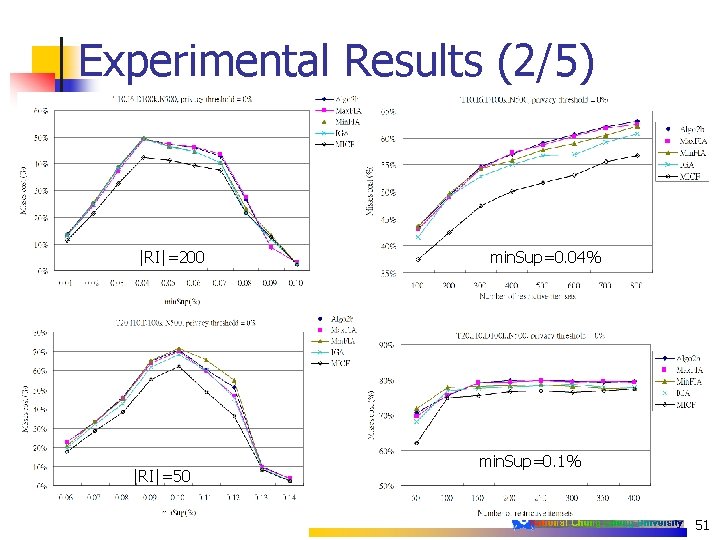

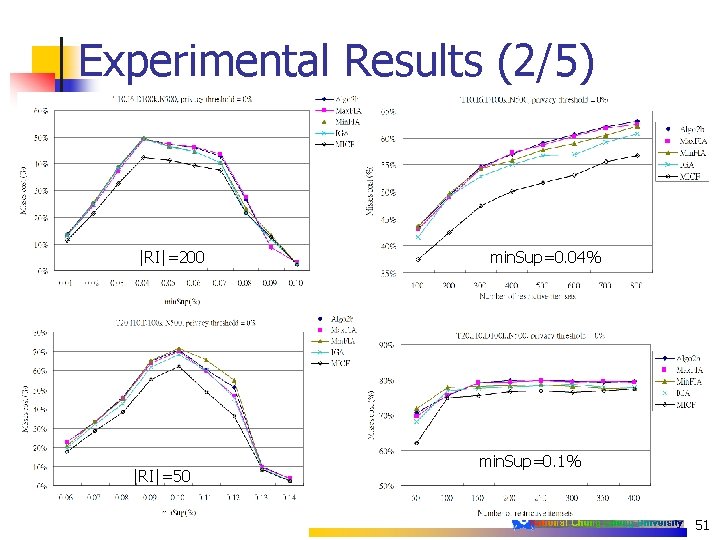

Experimental Results (2/5)

Plot labels: |RI| = 200; |RI| = 50; minSup = 0.04%; minSup = 0.1%

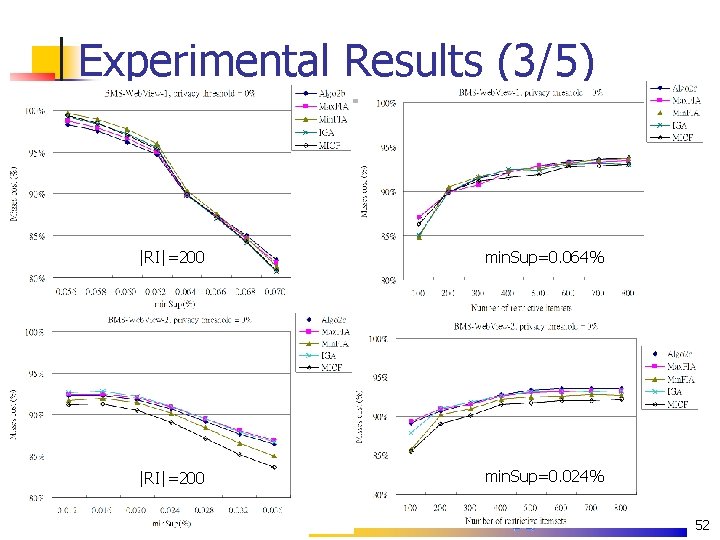

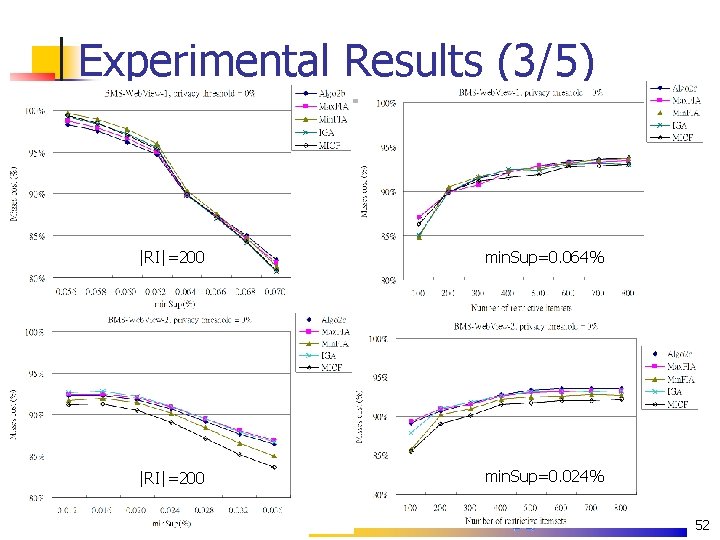

Experimental Results (3/5)

Plot labels: |RI| = 200, minSup = 0.064%; |RI| = 200, minSup = 0.024%

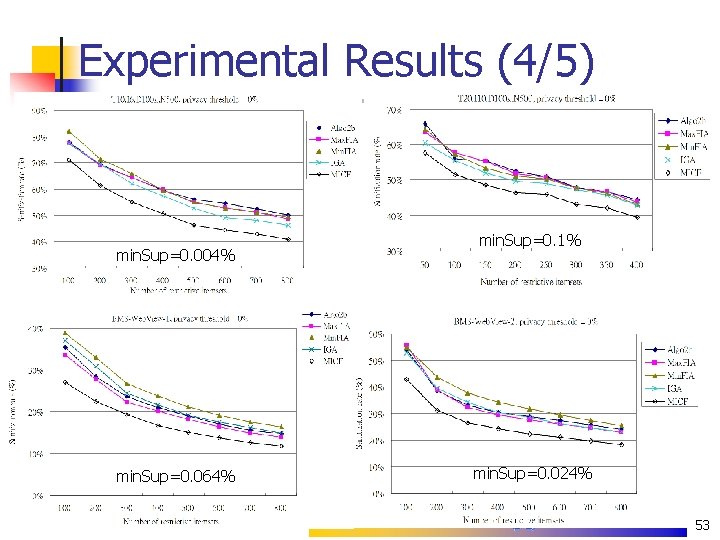

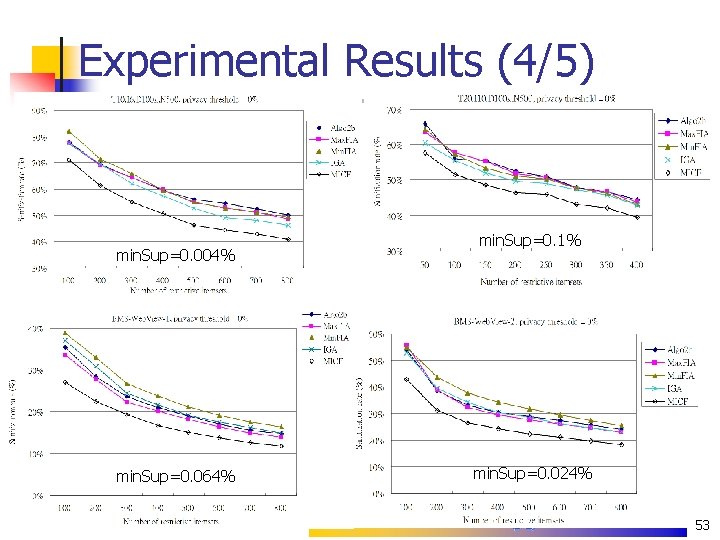

Experimental Results (4/5)

Plot labels: minSup = 0.004%; minSup = 0.064%; minSup = 0.1%; minSup = 0.024%

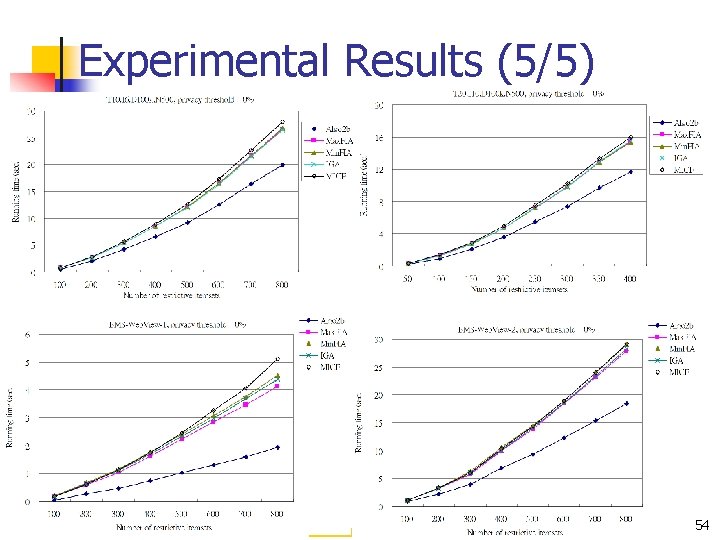

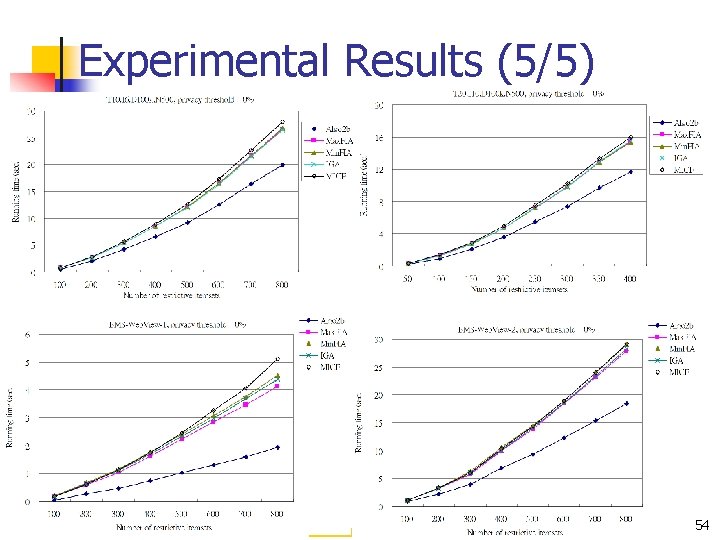

Experimental Results (5/5)

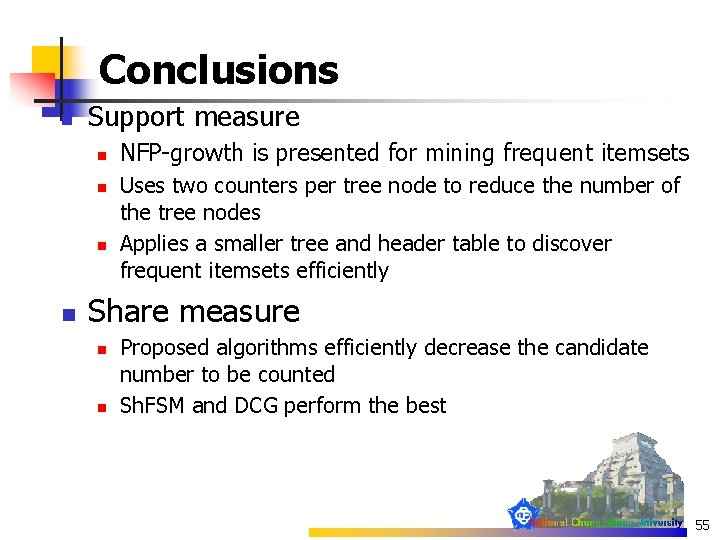

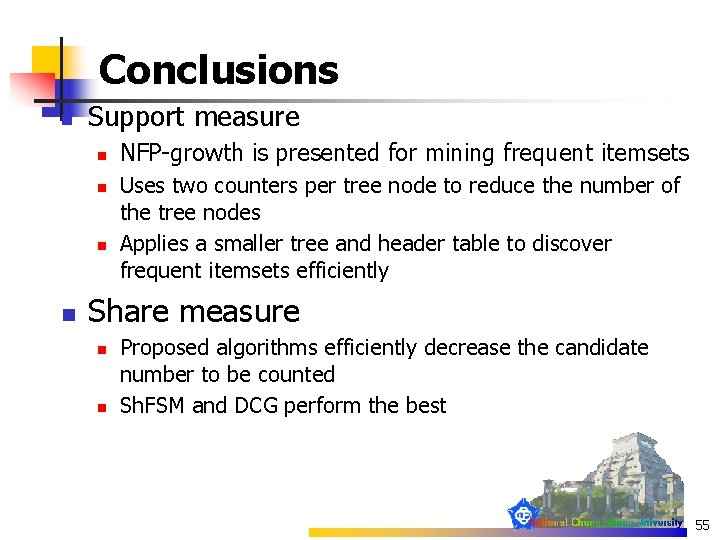

Conclusions

- Support measure
  - NFP-growth is presented for mining frequent itemsets
  - It uses two counters per tree node to reduce the number of tree nodes
  - It applies a smaller tree and header table to discover frequent itemsets efficiently
- Share measure
  - The proposed algorithms efficiently decrease the number of candidates to be counted
  - ShFSM and DCG perform the best

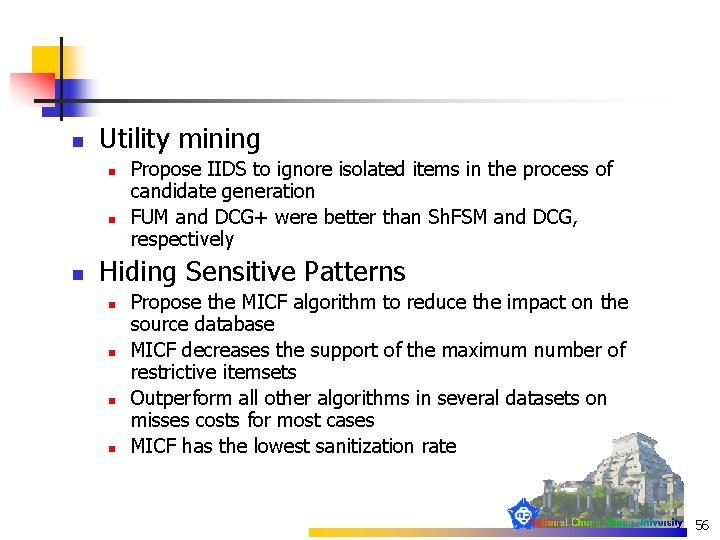

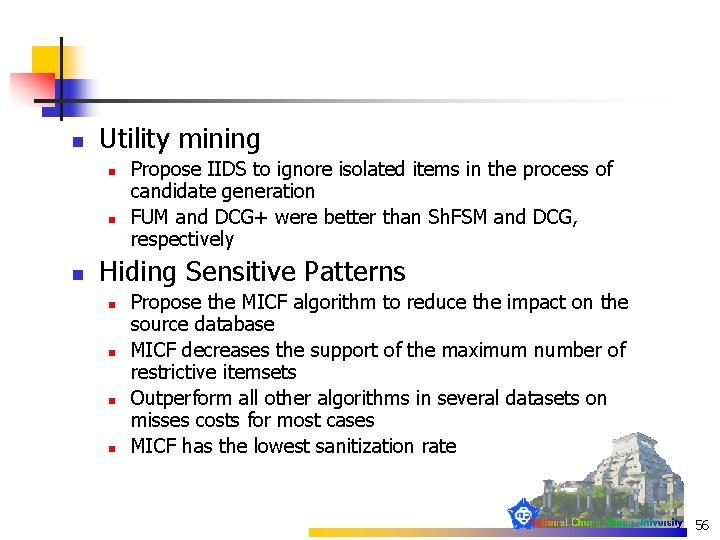

- Utility mining
  - IIDS is proposed to ignore isolated items in the process of candidate generation
  - FUM and DCG+ performed better than ShFSM and DCG, respectively
- Hiding sensitive patterns
  - The MICF algorithm is proposed to reduce the impact on the source database
  - MICF decreases the support of the maximum number of restrictive itemsets
  - MICF outperforms all other algorithms on misses cost in most cases across several datasets
  - MICF has the lowest sanitization rate

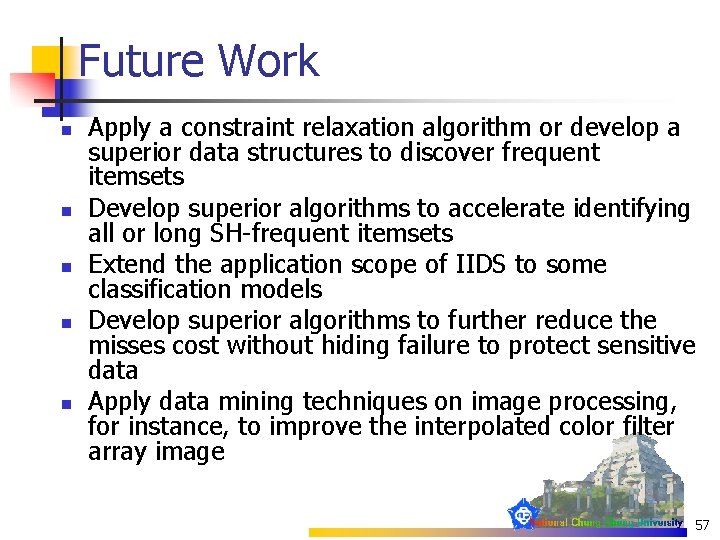

Future Work

- Apply a constraint relaxation algorithm or develop superior data structures to discover frequent itemsets
- Develop superior algorithms to accelerate the identification of all, or of long, SH-frequent itemsets
- Extend the application scope of IIDS to some classification models
- Develop superior algorithms to further reduce the misses cost without hiding failure, in order to protect sensitive data
- Apply data mining techniques to image processing, for instance to improve interpolated color filter array images

References

- D. Agrawal and C. Aggarwal, “On the design and quantification of privacy preserving data mining algorithms,” in Proc. 20th ACM Symposium on Principles of Database Systems, Santa Barbara, CA, pp. 247-255, May 2001.
- R. C. Agarwal, C. C. Aggarwal, and V. V. V. Prasad, “A tree projection algorithm for generation of frequent itemsets,” Journal of Parallel and Distributed Computing, vol. 61, no. 3, pp. 350-361, 2001.
- R. Agrawal, T. Imielinski, and A. Swami, “Mining association rules between sets of items in large databases,” in Proc. 1993 ACM SIGMOD Intl. Conf. on Management of Data, Washington, D.C., pp. 207-216, May 1993.
- R. Agrawal and R. Srikant, “Fast algorithms for mining association rules,” in Proc. 20th Intl. Conf. on Very Large Data Bases, Santiago, Chile, pp. 487-499, Sep. 1994.
- M. Atallah, E. Bertino, A. Elmagarmid, M. Ibrahim, and V. Verykios, “Disclosure limitation of sensitive rules,” in Proc. 1999 Workshop on Knowledge and Data Engineering Exchange, Chicago, IL, pp. 45-52, Nov. 1999.
- B. Barber and H. J. Hamilton, “Parametric algorithm for mining share frequent itemsets,” Journal of Intelligent Information Systems, vol. 16, no. 3, pp. 277-293, 2001.
- F. Berzal, J. C. Cubero, N. Marín, and J. M. Serrano, “TBAR: An efficient method for association rule mining in relational databases,” Data & Knowledge Engineering, vol. 37, no. 1, pp. 47-64, 2001.

- S. Brin, R. Motwani, J. D. Ullman, and S. Tsur, “Dynamic itemset counting and implication rules for market basket data,” in Proc. 1997 ACM SIGMOD Intl. Conf. on Management of Data, Tucson, AZ, pp. 255-264, May 1997.
- P. Cabena, P. Hadjinian, R. Stadler, J. Verhees, and A. Zanasi, “Discovering Data Mining from Concept to Implementation,” Prentice Hall PTR, New Jersey, 1998.
- C. L. Carter, H. J. Hamilton, and N. Cercone, “Share based measures for itemsets,” Lecture Notes in Computer Science 1263, 1st European Conf. on the Principles of Data Mining and Knowledge Discovery, H. J. Komorowski and J. M. Zytkow (eds.), Springer-Verlag, Berlin, pp. 14-24, 1997.
- G. Grahne and J. Zhu, “Efficiently using prefix-trees in mining frequent itemsets,” in Proc. IEEE ICDM Workshop on Frequent Itemset Mining Implementations, Melbourne, FL, Nov. 2003.
- J. Han, J. Pei, and Y. Yin, “Mining frequent patterns without candidate generation,” in Proc. 2000 ACM-SIGMOD Intl. Conf. on Management of Data, Dallas, TX, pp. 1-12, May 2000.
- J. Han, J. Pei, Y. Yin, and R. Mao, “Mining frequent patterns without candidate generation: A frequent pattern tree approach,” Data Mining and Knowledge Discovery, vol. 8, no. 1, pp. 53-87, 2004.

- T. Johnsten and V. V. Raghavan, “Impact of decision-region based classification mining algorithms on database security,” in Proc. IFIP WG 11.3 13th Intl. Conf. on Database Security, Seattle, WA, pp. 177-191, Jul. 1999.
- M. Kantardzic, “Data Mining: Concepts, Models, Methods, and Algorithms,” John Wiley & Sons, Inc., New York, 2002.
- S. R. M. Oliveira and O. R. Zaïane, “Privacy preserving frequent itemset mining,” in Proc. IEEE ICDM Workshop on Privacy, Security and Data Mining, Maebashi City, Japan, pp. 43-54, Dec. 2002.
- S. R. M. Oliveira and O. R. Zaïane, “Algorithms for balancing privacy and knowledge discovery in association rule mining,” in Proc. of 7th Intl. Database Engineering and Applications Symposium, Hong Kong, China, pp. 54-63, Jul. 2003.
- Y. Saygin, V. S. Verykios, and C. Clifton, “Using unknowns to prevent discovery of association rules,” ACM SIGMOD Record, vol. 30, no. 4, pp. 45-54, 2001.
- H. Yao and H. J. Hamilton, “Mining itemset utilities from transaction databases,” Data & Knowledge Engineering, vol. 59, no. 3, pp. 603-626, 2006.
- H. Yao, H. J. Hamilton, and C. J. Butz, “A foundational approach to mining itemset utilities from databases,” in Proc. 4th SIAM Intl. Conf. on Data Mining, Lake Buena Vista, FL, pp. 482-486, Apr. 2004.

Thank You!

Background and Related Work

- Support-Confidence Framework
  - Each item is a binary variable denoting whether an item was purchased
  - Apriori (Agrawal & Srikant, 1994) and Apriori-like algorithms (Agrawal et al., 1993; Berzal et al., 2001; Brin et al., 1997)
  - Pattern-growth algorithms (Agarwal et al., 2001; Grahne & Zhu, 2003; Han et al., 2000; Han et al., 2004)
- Share-Confidence Framework (Carter et al., 1997)
  - The support-confidence framework does not analyze the exact number of products purchased
  - The support count method does not measure the profit or cost of an itemset
  - Exhaustive search algorithm
  - Fast algorithms

- Utility mining (Yao et al., 2004; Yao and Hamilton, 2006)
  - A generalized form of the share-confidence framework
- Privacy-Preserving in Mining Frequent Itemsets
  - Classification rules (Agrawal & Aggarwal, 2001; Johnsten & Raghavan, 1999)
  - Association rules (Atallah et al., 1999; Oliveira & Zaïane, 2002)