FaceTrack: Tracking and summarizing faces from compressed video

- Slides: 32

FaceTrack: Tracking and summarizing faces from compressed video

Hualu Wang, Harold S. Stone*, Shih-Fu Chang
Dept. of Electrical Engineering, Columbia University
*NEC Research Institute

Presentation by Andy Rova
School of Computing Science, Simon Fraser University
March 15, 2005 • SFU CMPT 820

Introduction

- FaceTrack
  - System for both tracking and summarizing faces in compressed video data
- Tracking
  - Detect faces and trace them through time in video shots
- Summarizing
  - Cluster the faces across video shots and associate them with different people
- Compressed video
  - Avoids the costly overhead of decoding prior to face detection

System Overview

- The FaceTrack system’s goals are related to ideas discussed in previous presentations
  - A face-based video summary can help users decide if they want to download the whole video
  - The summary provides good visual indexing information for a database search engine

Problem definition

- The goal of the FaceTrack system is to take an input video sequence, generate a list of prominent faces that appear in the video, and determine the time periods in which each face appears

General Approach

- Track faces within shots
- Once tracking is done, group faces across video shots into faces of different people
- Output a list of faces for each sequence
  - For each face, list the shots where it appears, and when
- Face recognition is not performed
  - Very difficult in unconstrained videos due to the broad range of face sizes, numbers, orientations and lighting conditions

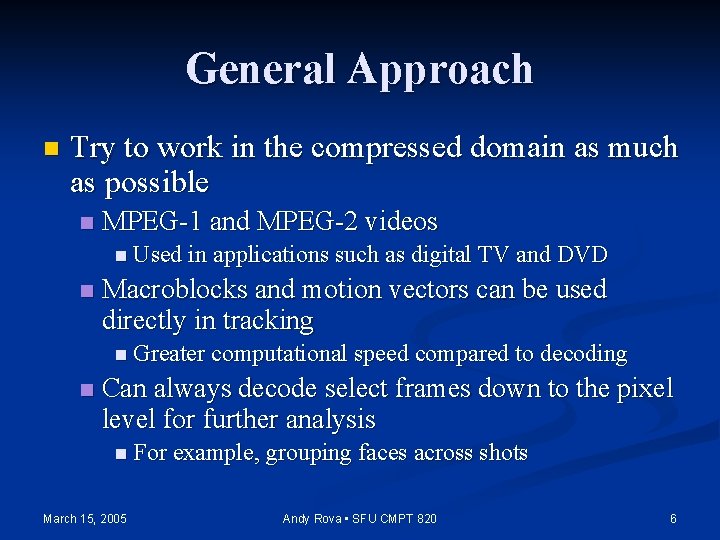

General Approach

- Try to work in the compressed domain as much as possible
  - MPEG-1 and MPEG-2 videos
    - Used in applications such as digital TV and DVD
  - Macroblocks and motion vectors can be used directly in tracking
  - Greater computational speed compared to decoding
- Can always decode select frames down to the pixel level for further analysis
  - For example, grouping faces across shots

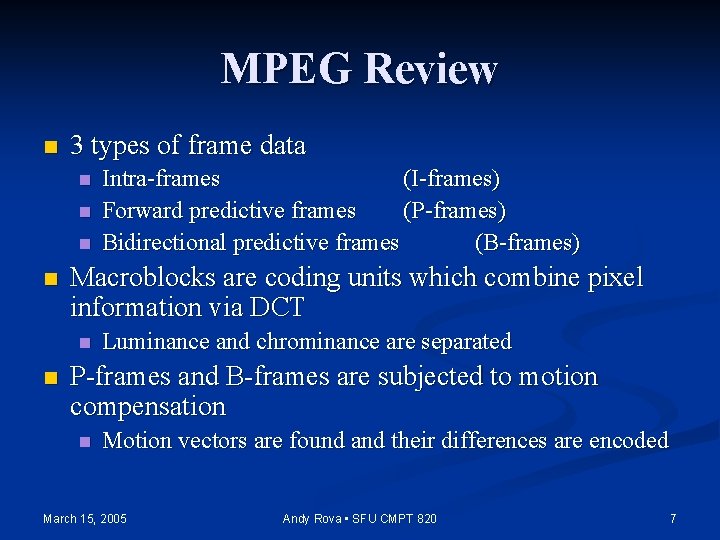

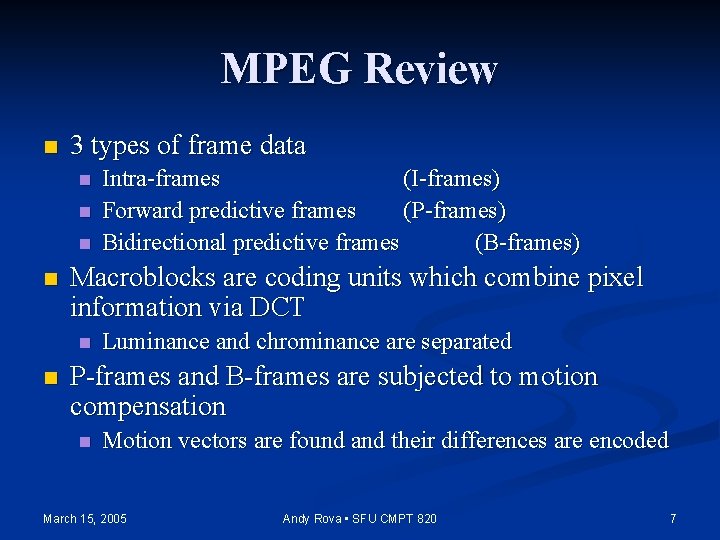

MPEG Review

- 3 types of frame data
  - Intra-frames (I-frames)
  - Forward predictive frames (P-frames)
  - Bidirectional predictive frames (B-frames)
- Macroblocks are coding units which combine pixel information via DCT
  - Luminance and chrominance are separated
- P-frames and B-frames are subjected to motion compensation
  - Motion vectors are found and their differences are encoded

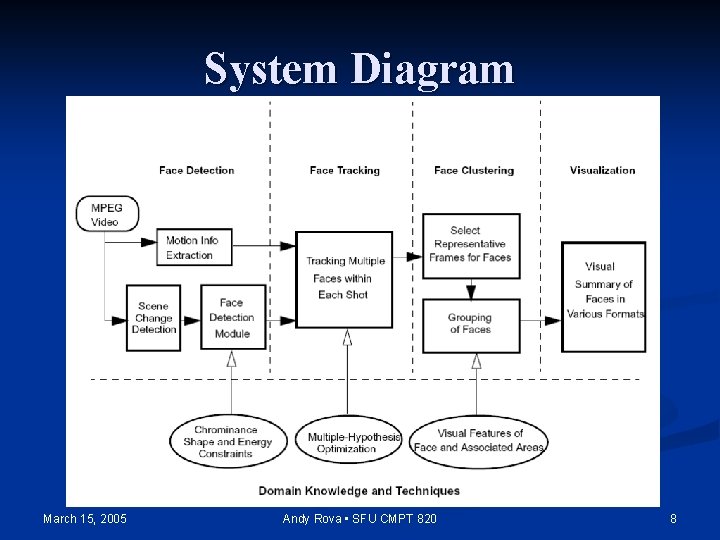

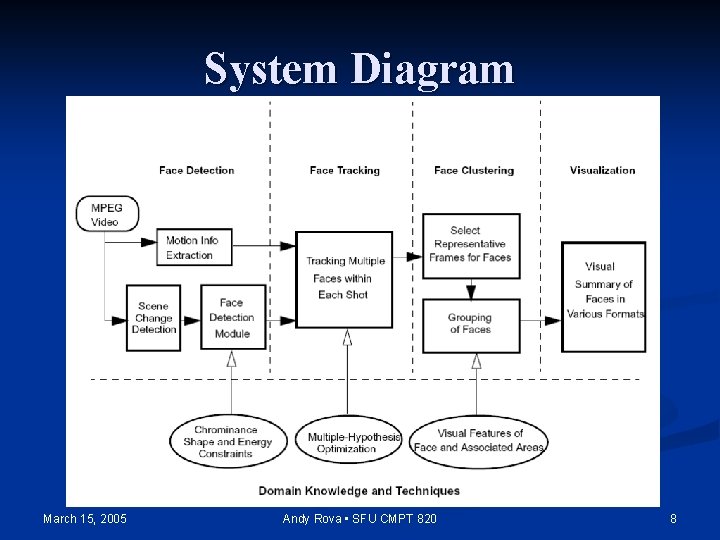

System Diagram

Face Tracking

- Challenges
  - Locations of detected faces may not be accurate, since the face detection algorithm works on 16 × 16 macroblocks
  - False alarms and misses
  - Multiple faces cause ambiguities when they move close to each other
  - The motion approximated by the MPEG motion vectors may not be accurate
- A tracking framework which can handle these issues in the compressed domain is needed

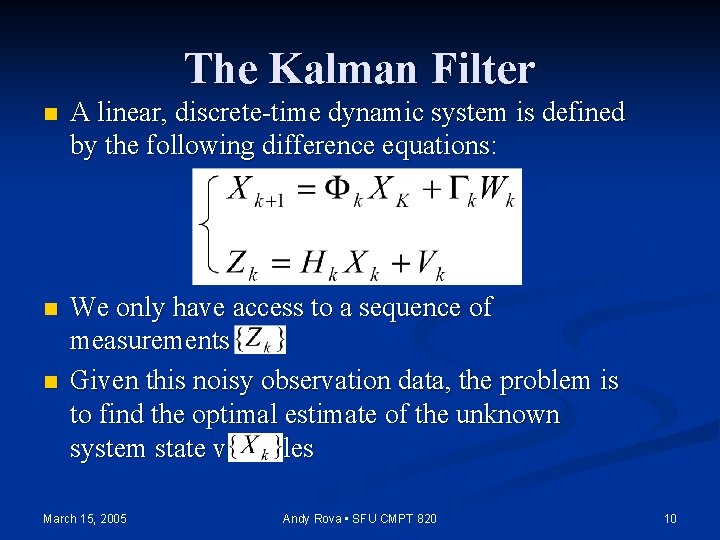

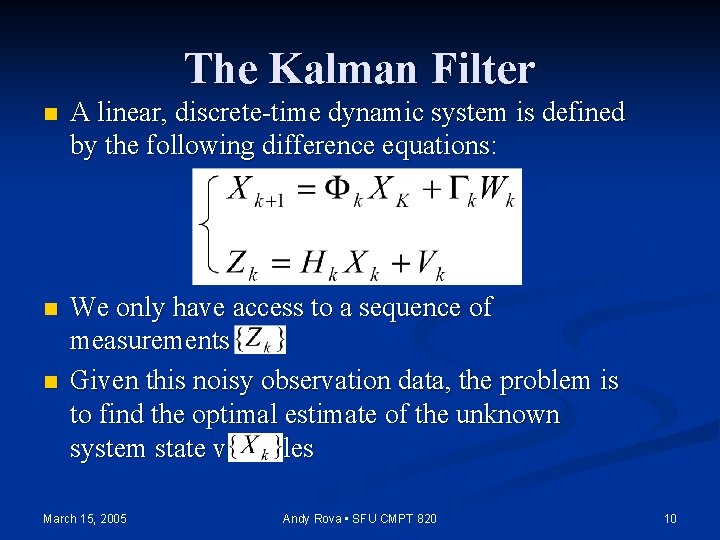

The Kalman Filter

- A linear, discrete-time dynamic system is defined by two difference equations: a state-transition equation and a measurement equation
- We only have access to a sequence of measurements
- Given this noisy observation data, the problem is to find the optimal estimate of the unknown system state variables
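The equations themselves were shown as images on the original slide. A standard textbook form of such a system is sketched below; the symbols $\Phi$, $H$, $w_k$ and $v_k$ are assumed names and may differ from the notation used in the paper.

```latex
\begin{aligned}
x_{k+1} &= \Phi\, x_k + w_k && \text{(state transition)} \\
z_k     &= H\, x_k + v_k    && \text{(measurement)}
\end{aligned}
```

Here $x_k$ is the unknown state, $z_k$ is the measurement at step $k$, and $w_k$ and $v_k$ are zero-mean process and measurement noise terms.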

The Kalman Filter

- The “filter” is actually an iterative algorithm which keeps taking in new observations
- The new states are successively estimated
- The error of the prediction of the observation is called the innovation
- The innovation is amplified by a gain matrix and used as a correction for the state prediction
- The corrected prediction is the new state estimate
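The predict/correct cycle described above can be sketched in a few lines. This is a minimal illustration, not the paper's implementation: it assumes a one-dimensional constant-velocity state (position, velocity), a position-only measurement, and illustrative noise variances `q` and `r`. The function name `kalman_step` and all parameter values are hypothetical.

```python
def kalman_step(x, P, z, T=0.1, q=1.0, r=4.0):
    """One predict/correct cycle for a 1-D state (position, velocity).

    x: (pos, vel) estimate; P: 2x2 covariance as nested lists; z: measured position.
    T is the update interval; q and r are assumed process/measurement noise variances.
    """
    pos, vel = x
    # Predict the state with F = [[1, T], [0, 1]]
    pos_pred = pos + T * vel
    vel_pred = vel
    # Predict the covariance: F P F^T + Q, with Q = diag(0, q)
    p00 = P[0][0] + T * (P[0][1] + P[1][0]) + T * T * P[1][1]
    p01 = P[0][1] + T * P[1][1]
    p10 = P[1][0] + T * P[1][1]
    p11 = P[1][1] + q
    # Innovation: measurement minus predicted measurement (H = [1, 0])
    innovation = z - pos_pred
    s = p00 + r  # innovation variance
    # Gain K = P_pred H^T / s
    k0 = p00 / s
    k1 = p10 / s
    # Correct the prediction with the gain-weighted innovation
    x_new = (pos_pred + k0 * innovation, vel_pred + k1 * innovation)
    # Updated covariance: (I - K H) P_pred
    P_new = [[(1 - k0) * p00, (1 - k0) * p01],
             [p10 - k1 * p00, p11 - k1 * p01]]
    return x_new, P_new, innovation
```

Calling `kalman_step` once per new observation, and feeding the returned state and covariance back in, gives the iterative behaviour the slide describes.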

The Kalman Filter

- In the FaceTrack system, the state vector of the Kalman filter is the kinematic information of the face
  - Position, velocity (and sometimes acceleration)
- The observation vector is the position of the detected face
  - May not be accurate
- The Kalman filter lets the system predict and update the position and parameters of the faces

The Kalman Filter

- The FaceTrack system uses a 0.1 second time interval for state updates
  - This corresponds to every I-frame and P-frame for a typical MPEG GOP structure
- GOP: “Group Of Pictures” frame structure
  - For example, IBBPBBP…
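The link between the GOP pattern and the 0.1 second interval can be checked with a small sketch. It assumes a frame rate of 30 frames per second and a 15-frame GOP given in display order; neither value is stated on the slide, and the function name `anchor_frame_times` is hypothetical.

```python
def anchor_frame_times(gop_pattern, num_frames, fps=30.0):
    """Return (frame_index, frame_type, time_in_seconds) for every I- or P-frame.

    gop_pattern is repeated cyclically and is assumed to be in display order;
    fps is an assumed frame rate.
    """
    anchors = []
    for i in range(num_frames):
        frame_type = gop_pattern[i % len(gop_pattern)]
        if frame_type in "IP":
            anchors.append((i, frame_type, i / fps))
    return anchors


# Hypothetical 15-frame GOP following the IBBPBBP... pattern
for index, frame_type, t in anchor_frame_times("IBBPBBPBBPBBPBB", 15):
    print(index, frame_type, round(t, 3))
```

Under these assumptions the I- and P-frames fall on every third frame, so consecutive state updates are 3/30 = 0.1 seconds apart.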

The Kalman Filter

- For I-frames, the face detector results are used directly
- For P-frames, the face detector results are more prone to false alarms
  - Instead, P-frame face locations are predicted (approximately) based on the MPEG motion vectors
  - These locations are then fed into the Kalman filter as observations
    - (In contrast with previous trackers, which assumed that the locations calculated from the motion vectors alone were correct)
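One simple way to turn motion vectors into a P-frame observation is sketched below. The slide does not describe the paper's actual projection procedure, so this is only an illustration: it assumes the vectors of the macroblocks covering the face have already been converted to forward displacements in pixels, and it uses the median (my choice, for robustness to outlier vectors). The function name `project_face_center` is hypothetical.

```python
from statistics import median


def project_face_center(prev_center, displacements):
    """Shift a face centre by the median displacement of its macroblocks.

    prev_center: (x, y) of the face in the previous anchor frame, in pixels.
    displacements: list of (dx, dy) per macroblock, ASSUMED to be forward
    displacements of image content in pixels (hypothetical input format).
    """
    if not displacements:
        # No usable vectors (e.g. only intra-coded macroblocks): assume no motion
        return prev_center
    dx = median(d[0] for d in displacements)
    dy = median(d[1] for d in displacements)
    return (prev_center[0] + dx, prev_center[1] + dy)
```

The projected centre is treated as a noisy observation for the Kalman filter rather than as the true position, which matches the contrast the slide draws with earlier trackers.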

The Face Tracking Framework

- How to discriminate new faces from previous ones during tracking?
  - The Mahalanobis distance is a quantitative indicator of how close the new observation is to the prediction
  - This can help separate new faces from existing tracks: if the Mahalanobis distance is greater than a certain threshold, then the newly detected face is unlikely to belong to a particular existing track
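The gating test can be sketched as follows for a 2-D face position. The innovation covariance `S` would normally come from the Kalman filter; here it is passed in directly. The function names and the default threshold `gate=3.0` are illustrative assumptions, not values from the paper.

```python
import math


def mahalanobis_2d(z, z_pred, S):
    """Mahalanobis distance between observation z and prediction z_pred.

    z, z_pred: (x, y) positions; S: 2x2 innovation covariance as nested lists.
    """
    dx = z[0] - z_pred[0]
    dy = z[1] - z_pred[1]
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    if det <= 0:
        raise ValueError("S must have a positive determinant")
    # Inverse of a 2x2 matrix, written out explicitly
    inv00 = S[1][1] / det
    inv01 = -S[0][1] / det
    inv10 = -S[1][0] / det
    inv11 = S[0][0] / det
    d2 = dx * (inv00 * dx + inv01 * dy) + dy * (inv10 * dx + inv11 * dy)
    return math.sqrt(d2)


def belongs_to_track(z, z_pred, S, gate=3.0):
    """True if the observation falls inside the track's gate (assumed threshold)."""
    return mahalanobis_2d(z, z_pred, S) <= gate
```

An observation that fails the gate for every existing track is a candidate for a new face track.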

The Face Tracking Framework

- In the case where two faces move close together, Mahalanobis distance alone cannot keep track of multiple faces
- Case where a face is missed or occluded:
  - Hypothesize the continuation of the face track
- Case of false alarm or faces close together:
  - Hypothesize creation of a new track
- The idea is to wait for new observation data before making the final decision about a track

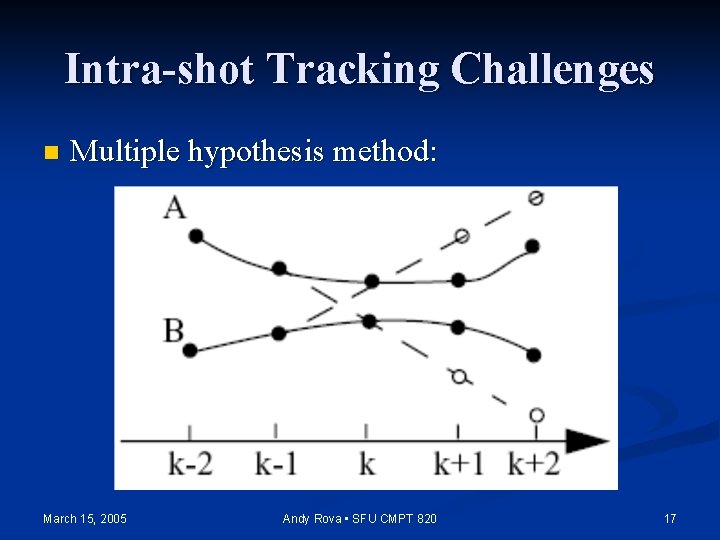

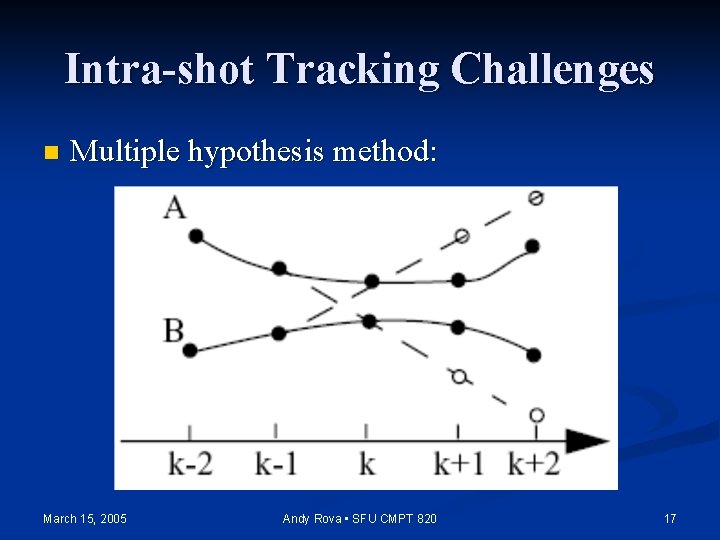

Intra-shot Tracking Challenges

- Multiple hypothesis method

Kalman Motion Models

- The Kalman filter is a framework which can model different types of motion, depending on the system matrices used
- Several models were tested for the paper, with varying results
- Intuition: who pays to research object tracking?
  - The military!
  - Hence many tracking models are based on trajectories that are unlike those that faces in video will likely exhibit
  - For example, in most commercial video, a human face will not maneuver like a jet or missile

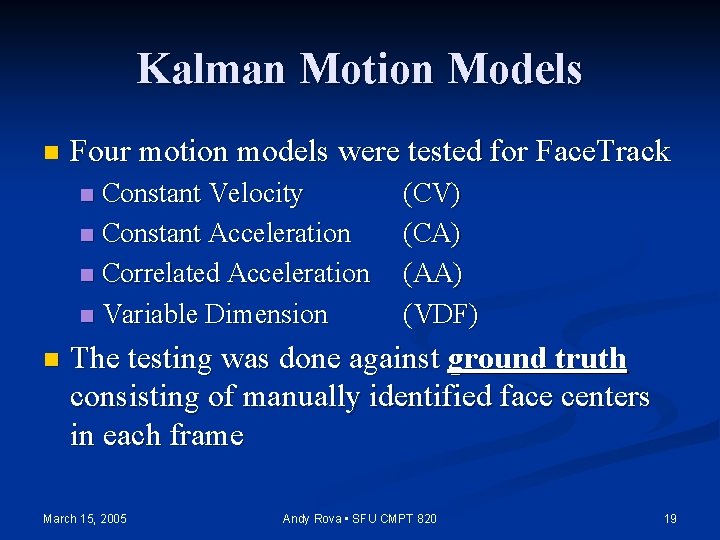

Kalman Motion Models

- Four motion models were tested for FaceTrack
  - Constant Velocity (CV)
  - Constant Acceleration (CA)
  - Correlated Acceleration (AA)
  - Variable Dimension (VDF)
- The testing was done against ground truth consisting of manually identified face centers in each frame

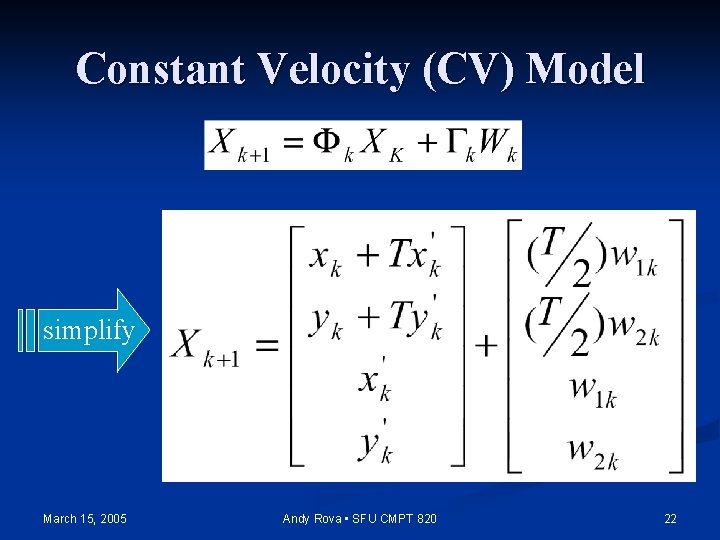

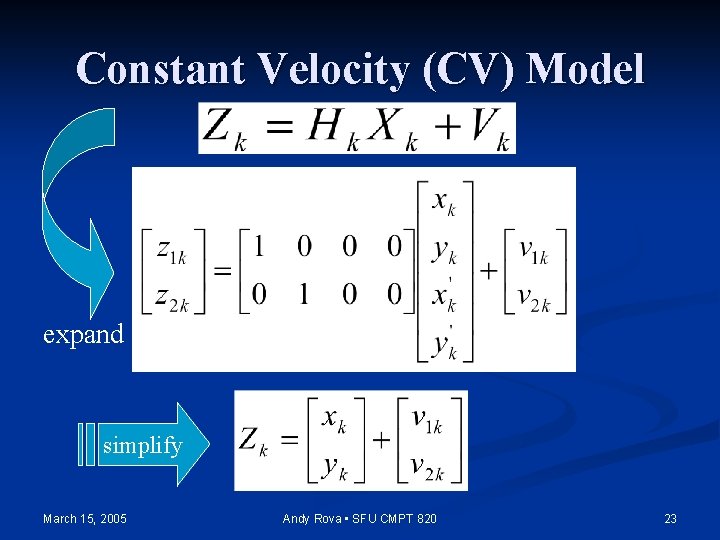

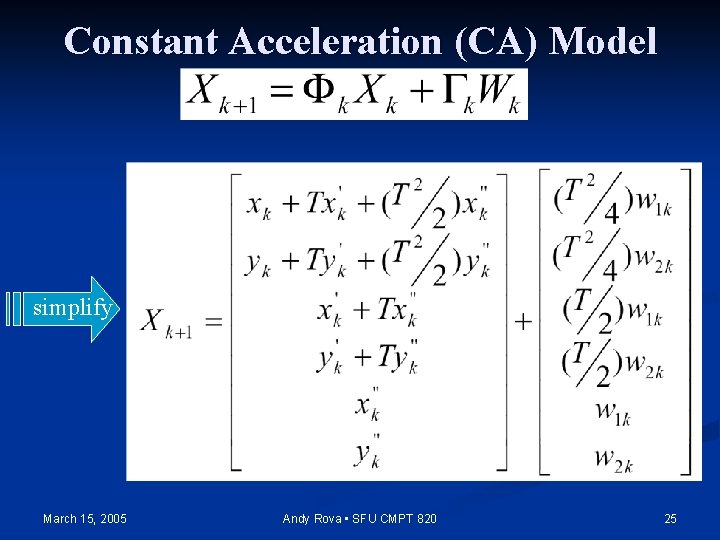

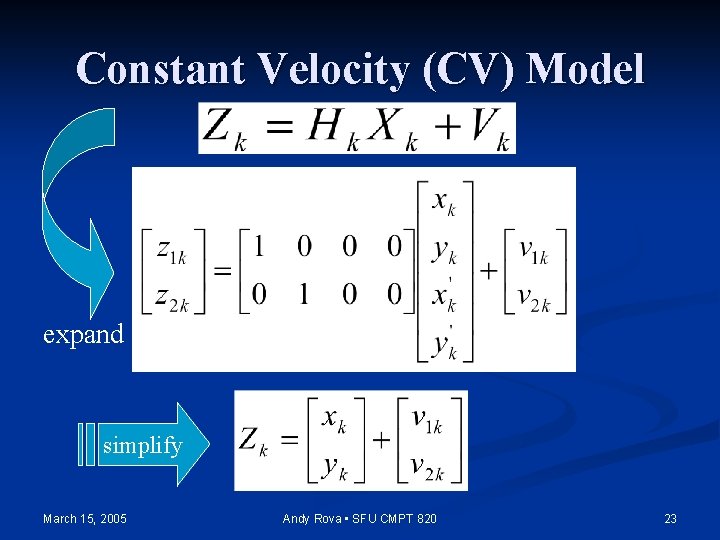

Kalman Motion Models

- Rather than go through the whole process in exact detail, the next several slides illustrate the differences between the CV and CA models
- Also, the matrices are expanded to show how the states are updated

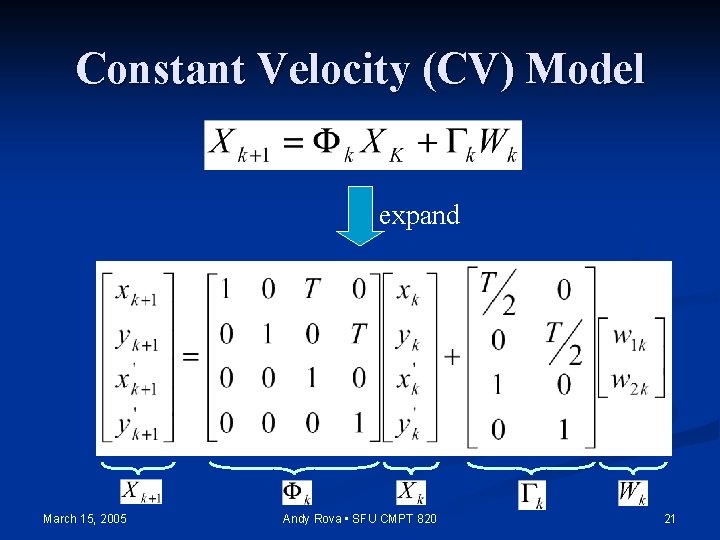

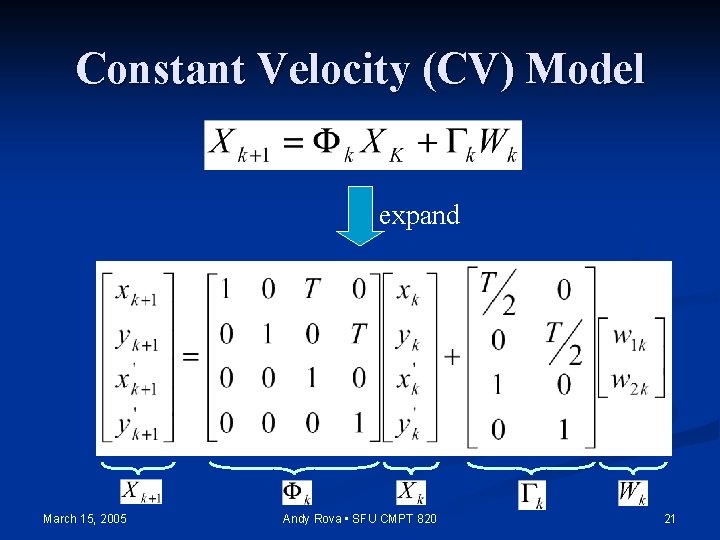

Constant Velocity (CV) Model (expanded form)

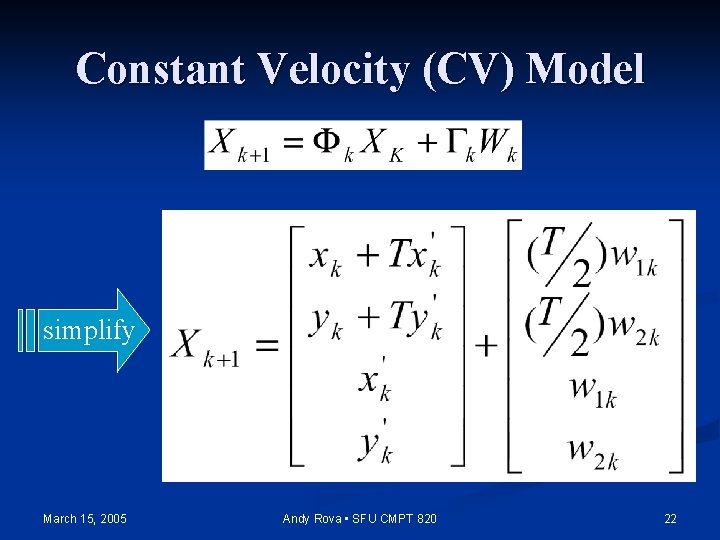

Constant Velocity (CV) Model (simplified form)

Constant Velocity (CV) Model (expanded, then simplified)
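The CV matrices were shown as images on the original slides. A standard constant-velocity model for one image axis is sketched below as an assumption about what those images contained; $p_k$ is position, $\dot{p}_k$ is velocity, $T$ is the update interval, and $w_k$, $v_k$ are assumed noise terms.

```latex
\begin{aligned}
\begin{bmatrix} p_{k+1} \\ \dot{p}_{k+1} \end{bmatrix}
&= \begin{bmatrix} 1 & T \\ 0 & 1 \end{bmatrix}
   \begin{bmatrix} p_k \\ \dot{p}_k \end{bmatrix} + w_k \\[4pt]
p_{k+1} &= p_k + T\,\dot{p}_k + w_k^{(1)} \\
\dot{p}_{k+1} &= \dot{p}_k + w_k^{(2)} \\[4pt]
z_k &= \begin{bmatrix} 1 & 0 \end{bmatrix}
       \begin{bmatrix} p_k \\ \dot{p}_k \end{bmatrix} + v_k
\end{aligned}
```

Expanding the matrix product row by row gives the two scalar update equations: position advances by velocity times $T$, and velocity stays constant apart from noise.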

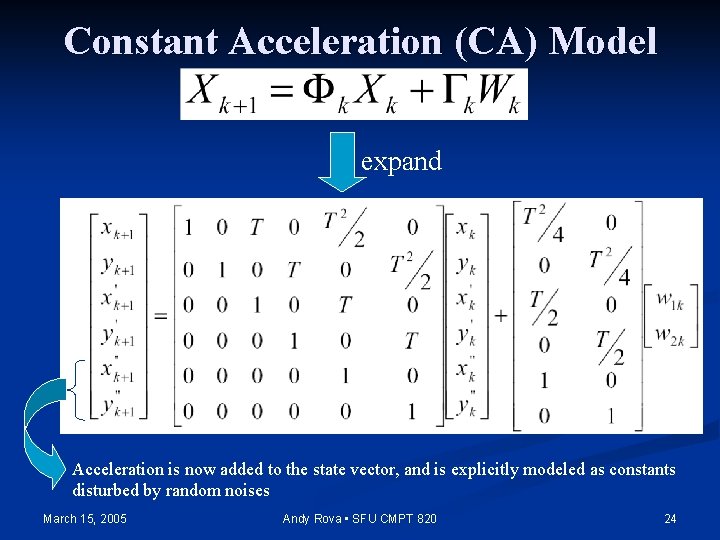

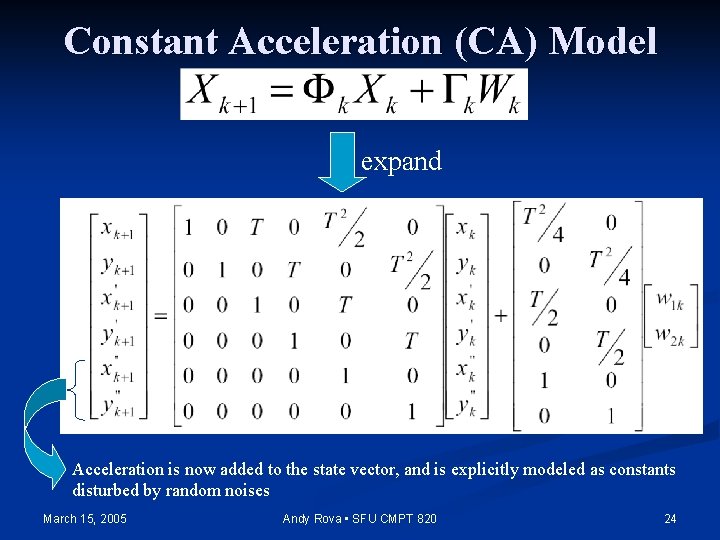

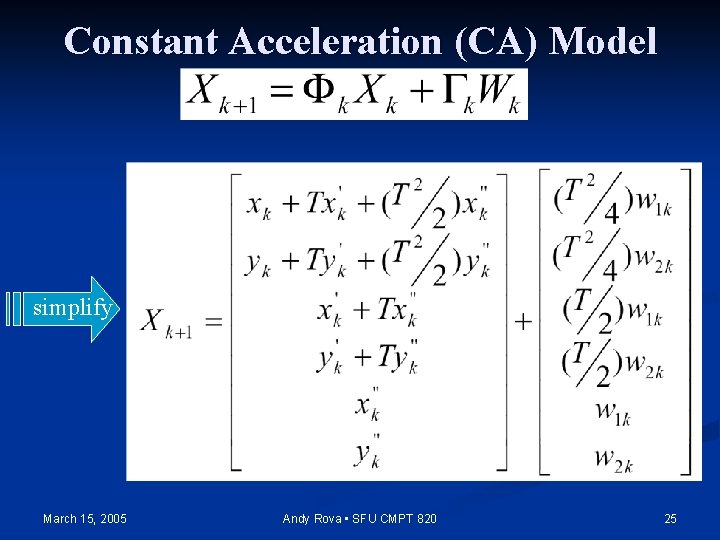

Constant Acceleration (CA) Model (expanded form)

- Acceleration is now added to the state vector, and is explicitly modeled as a constant disturbed by random noise

Constant Acceleration (CA) Model (simplified form)
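As with the CV model, the CA matrices were images on the original slides. A standard constant-acceleration model for one image axis is sketched below under the same assumed notation, with $\ddot{p}_k$ as the added acceleration state.

```latex
\begin{aligned}
\begin{bmatrix} p_{k+1} \\ \dot{p}_{k+1} \\ \ddot{p}_{k+1} \end{bmatrix}
&= \begin{bmatrix} 1 & T & T^2/2 \\ 0 & 1 & T \\ 0 & 0 & 1 \end{bmatrix}
   \begin{bmatrix} p_k \\ \dot{p}_k \\ \ddot{p}_k \end{bmatrix} + w_k \\[4pt]
p_{k+1} &= p_k + T\,\dot{p}_k + \tfrac{T^2}{2}\,\ddot{p}_k + w_k^{(1)} \\
\dot{p}_{k+1} &= \dot{p}_k + T\,\ddot{p}_k + w_k^{(2)} \\
\ddot{p}_{k+1} &= \ddot{p}_k + w_k^{(3)}
\end{aligned}
```

Compared with the CV model, the state grows from two to three components per axis, and the last row keeps acceleration constant up to noise.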

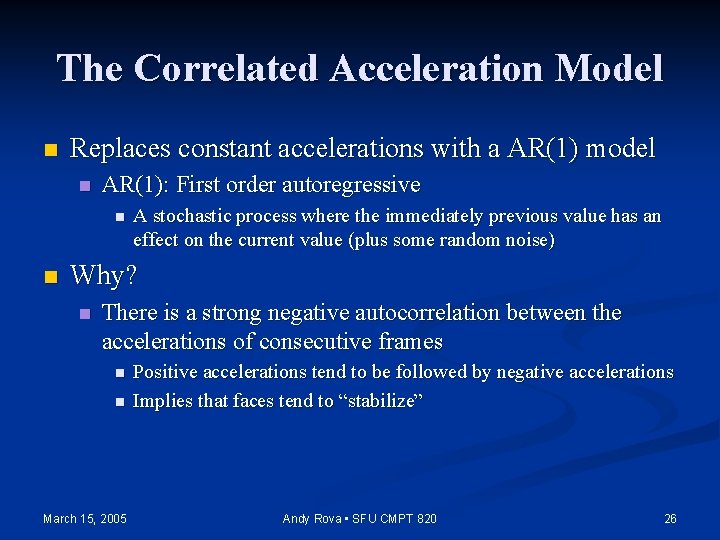

The Correlated Acceleration Model

- Replaces constant accelerations with an AR(1) model
  - AR(1): first-order autoregressive
  - A stochastic process where the immediately previous value has an effect on the current value (plus some random noise)
- Why?
  - There is a strong negative autocorrelation between the accelerations of consecutive frames
    - Positive accelerations tend to be followed by negative accelerations
    - Implies that faces tend to “stabilize”
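An AR(1) process can be written as a(k+1) = ρ·a(k) + noise, where a negative ρ produces the sign-flipping behaviour described above. The sketch below simulates such a process and measures its lag-1 autocorrelation. The coefficient -0.6 is purely illustrative and is not a value from the paper; the function names are hypothetical.

```python
import random


def simulate_ar1(rho, noise_std, n, seed=0):
    """Generate n samples of a(k+1) = rho * a(k) + Gaussian noise, starting from 0."""
    rng = random.Random(seed)
    a = 0.0
    samples = []
    for _ in range(n):
        a = rho * a + rng.gauss(0.0, noise_std)
        samples.append(a)
    return samples


def lag1_autocorrelation(xs):
    """Sample autocorrelation between consecutive values of xs."""
    mean = sum(xs) / len(xs)
    num = sum((xs[i] - mean) * (xs[i + 1] - mean) for i in range(len(xs) - 1))
    den = sum((x - mean) ** 2 for x in xs)
    return num / den


# Illustrative coefficient: negative rho makes consecutive accelerations oppose each other
accelerations = simulate_ar1(rho=-0.6, noise_std=1.0, n=5000)
print(lag1_autocorrelation(accelerations))
```

With a negative coefficient the measured autocorrelation comes out negative, which is the "stabilizing" pattern the slide attributes to face motion.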

The Variable Dimension Filter

- A system that switches between CV (constant velocity) and CA (constant acceleration) modes
- The dimension of the state vector changes when a maneuver is detected, hence “VDF”
- Developed for tracking highly maneuverable targets (probably military jets)

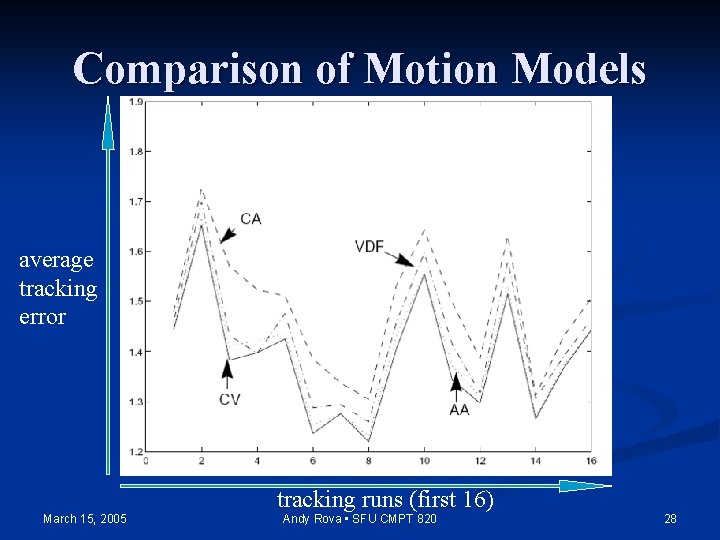

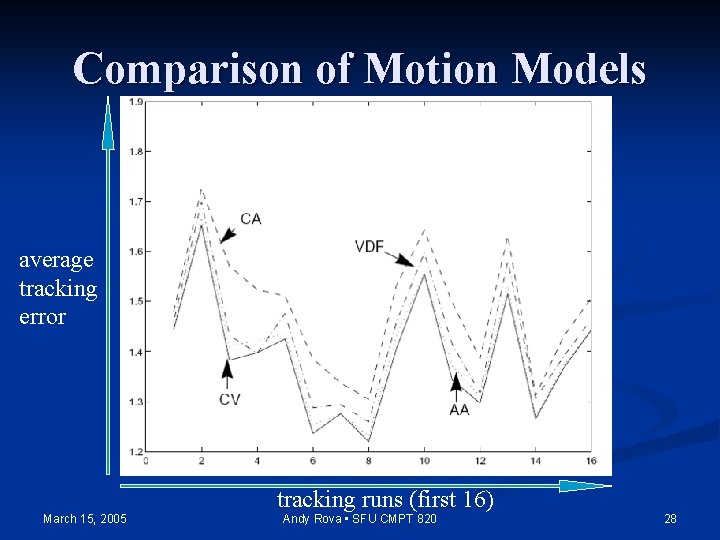

Comparison of Motion Models

[Figure: average tracking error across tracking runs (first 16)]

Comparison of Motion Models

- Why does CV perform best?
  - Small sampling interval justifies viewing face motion as piecewise linear movements
  - The face cannot achieve very high accelerations (as opposed to a jet fighter)
- AA also performs well because it fits the nature of the face motion well
  - Commercial video faces exhibit few persistent accelerations (negative autocorrelation)

Summarization Across Shots

- Select representative frames for tracked faces
  - Large, frontal-view faces are best
- Decode representative frames into the pixel domain
- Use clustering algorithms to group the faces into different persons
- Make use of domain knowledge
  - For example, people do not usually change clothes within a news segment, but often do change outfits within a sitcom episode

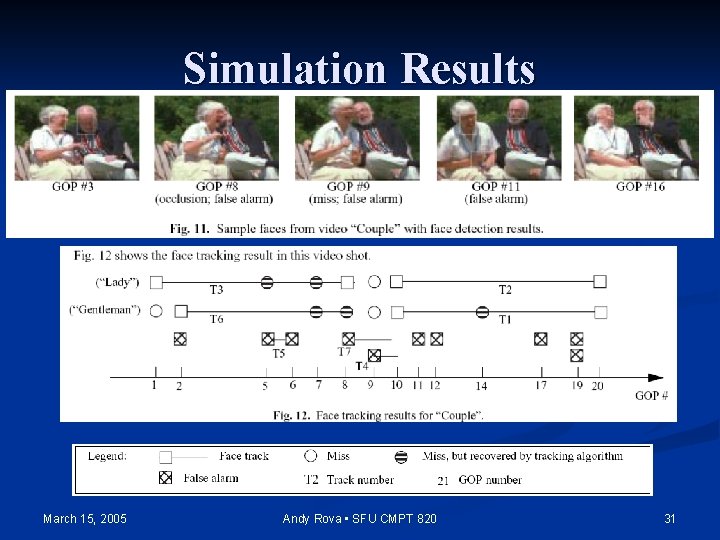

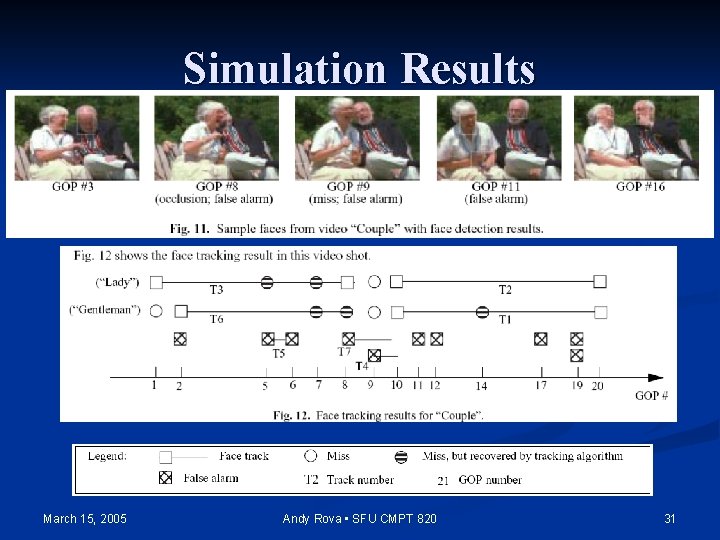

Simulation Results

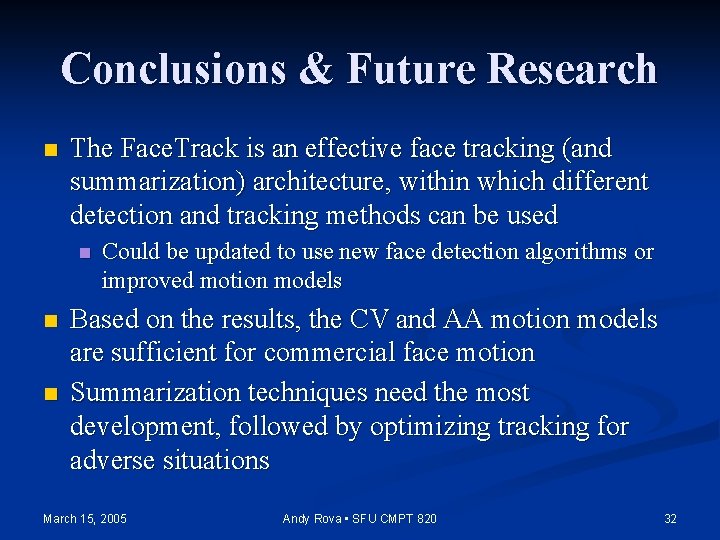

Conclusions & Future Research

- FaceTrack is an effective face tracking (and summarization) architecture, within which different detection and tracking methods can be used
  - Could be updated to use new face detection algorithms or improved motion models
- Based on the results, the CV and AA motion models are sufficient for commercial face motion
- Summarization techniques need the most development, followed by optimizing tracking for adverse situations