F5-HD: Fast Flexible FPGA-based Framework for Hyperdimensional Computing

F5-HD: Fast Flexible FPGA-based Framework for Hyperdimensional Computing

Sahand Salamat, Mohsen Imani, Behnam Khaleghi, Tajana Šimunić Rosing

System Energy Efficiency Lab, University of California San Diego

seelab.ucsd.edu

Machine Learning is Changing Our Lives

- Healthcare
- Self-driving cars
- Smart robots
- Finance
- Gaming

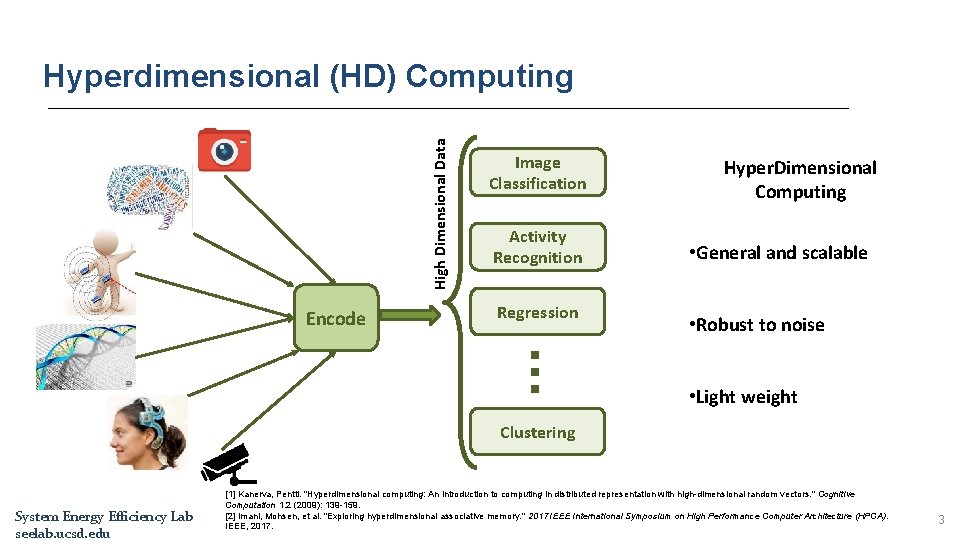

Hyperdimensional (HD) Computing

- Flow: high-dimensional data → encode → hyperdimensional computing
- Applications: image classification, activity recognition, regression, clustering
- Properties:
  - General and scalable
  - Robust to noise
  - Lightweight

[1] Kanerva, Pentti. "Hyperdimensional computing: An introduction to computing in distributed representation with high-dimensional random vectors." Cognitive Computation 1.2 (2009): 139-159.

[2] Imani, Mohsen, et al. "Exploring hyperdimensional associative memory." 2017 IEEE International Symposium on High Performance Computer Architecture (HPCA). IEEE, 2017.

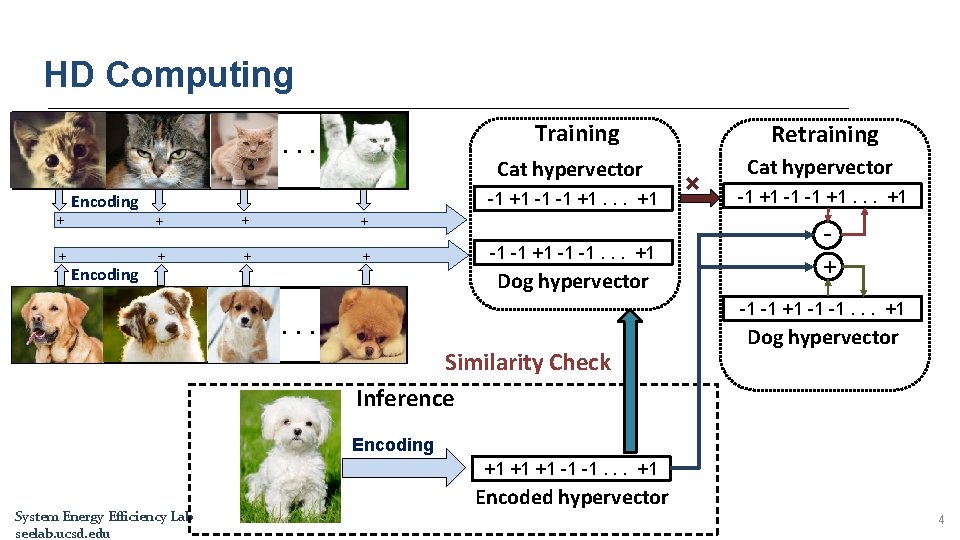

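HD Computing

- Training: every training sample is encoded into a hypervector, and the encoded hypervectors of each class are added element-wise to form the class hypervector.
  - Cat hypervector: -1 +1 -1 -1 +1 ... +1
  - Dog hypervector: -1 -1 +1 -1 -1 ... +1
- Retraining: encoded training samples are checked against the trained model, and a misprediction (marked × on the slide) triggers an update of the Cat and Dog class hypervectors.
- Inference: the input is encoded into a hypervector (e.g., +1 +1 +1 -1 -1 ... +1), and a similarity check against the class hypervectors selects the closest class.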
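
The three phases above can be sketched in a few lines of Python. This is a minimal illustration, not the F5-HD implementation: the samples are assumed to be already encoded into bipolar hypervectors, cosine similarity is used for the similarity check, and the retraining rule (subtract a mispredicted sample from the wrongly chosen class and add it to the correct class) is the update commonly used in HD classifiers and is an assumption here. All function names are hypothetical.

```python
import math
import random


def random_hv(rng, dim):
    """Random bipolar hypervector with elements in {-1, +1}."""
    return [rng.choice((-1, 1)) for _ in range(dim)]


def flip_noise(hv, flips, rng):
    """Copy of hv with `flips` random elements negated (stand-in for real encoded data)."""
    noisy = list(hv)
    for i in rng.sample(range(len(hv)), flips):
        noisy[i] = -noisy[i]
    return noisy


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def train(encoded, labels, num_classes):
    """Training: add every encoded hypervector to the hypervector of its class."""
    dim = len(encoded[0])
    model = [[0] * dim for _ in range(num_classes)]
    for hv, label in zip(encoded, labels):
        for i, v in enumerate(hv):
            model[label][i] += v
    return model


def predict(model, hv):
    """Inference: return the index of the most similar class hypervector."""
    return max(range(len(model)), key=lambda c: cosine(model[c], hv))


def retrain(model, encoded, labels, epochs=1):
    """Retraining (assumed rule): move mispredicted samples to the correct class."""
    for _ in range(epochs):
        for hv, label in zip(encoded, labels):
            guess = predict(model, hv)
            if guess != label:
                for i, v in enumerate(hv):
                    model[guess][i] -= v
                    model[label][i] += v
    return model
```

A typical call sequence would be `model = train(data, labels, num_classes)`, then `retrain(model, data, labels)`, then `predict(model, query)`.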

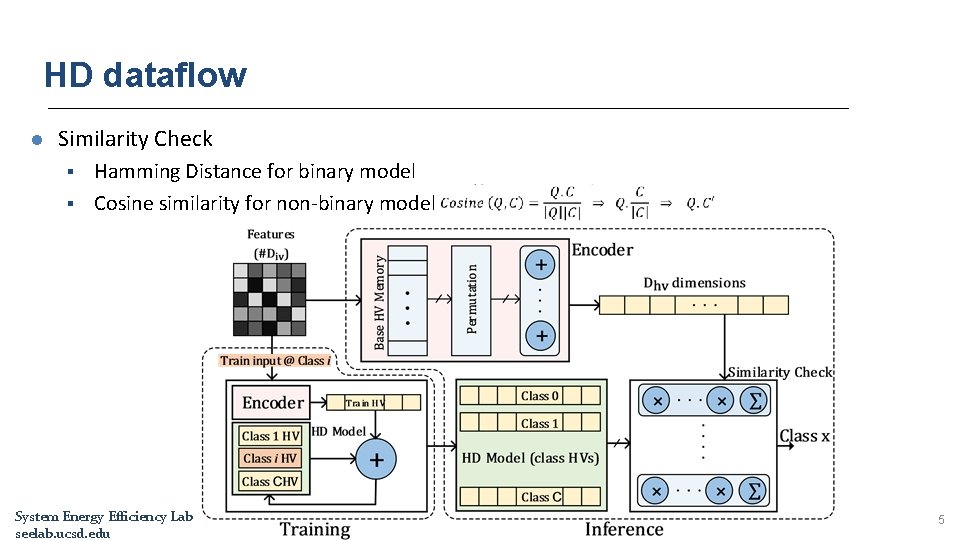

HD Dataflow

- Similarity check
  - Hamming distance for the binary model
  - Cosine similarity for the non-binary model
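
The two similarity metrics can be written out as follows. This is a sketch using plain Python lists; the function names are illustrative, and the packed-integer variant (XOR followed by a population count) is added only to show why the binary case maps well to bit-level hardware.

```python
import math


def hamming_distance(a, b):
    """Number of positions at which two equal-length binary hypervectors differ."""
    if len(a) != len(b):
        raise ValueError("hypervectors must have the same length")
    return sum(x != y for x, y in zip(a, b))


def hamming_distance_packed(a, b):
    """Same metric for hypervectors packed into integers: XOR, then count set bits."""
    return bin(a ^ b).count("1")


def cosine_similarity(a, b):
    """Cosine of the angle between two non-binary hypervectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def classify_binary(class_hvs, query):
    """Binary model: the closest class has the smallest Hamming distance."""
    return min(range(len(class_hvs)), key=lambda c: hamming_distance(class_hvs[c], query))


def classify_nonbinary(class_hvs, query):
    """Non-binary model: the closest class has the largest cosine similarity."""
    return max(range(len(class_hvs)), key=lambda c: cosine_similarity(class_hvs[c], query))
```

Note the opposite directions: a smaller Hamming distance means more similar, while a larger cosine similarity means more similar.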

HD Acceleration

- HD involves thousands of bit-level additions, multiplications, and accumulations
- These operations can be parallelized at the dimension level
  - ✓ FPGAs can provide huge parallelism
  - ✗ FPGA design requires extensive hardware expertise
  - ✗ FPGAs have long design cycles
- Application-specific template-based design
  - Several template-based FPGA implementations exist for neural networks [Micro'16][FCCM'17][FPGA'18]
  - No FPGA implementation framework for HD!

[1] Sharma, Hardik, et al. "From high-level deep neural models to FPGAs." The 49th Annual IEEE/ACM International Symposium on Microarchitecture. IEEE Press, 2016.

[2] Guan, Yijin, et al. "FP-DNN: An automated framework for mapping deep neural networks onto FPGAs with RTL-HLS hybrid templates." 2017 IEEE 25th Annual International Symposium on Field-Programmable Custom Computing Machines (FCCM). IEEE, 2017.

[3] Shen, Junzhong, et al. "Towards a uniform template-based architecture for accelerating 2D and 3D CNNs on FPGA." Proceedings of the 2018 ACM/SIGDA International Symposium on Field-Programmable Gate Arrays. ACM, 2018.

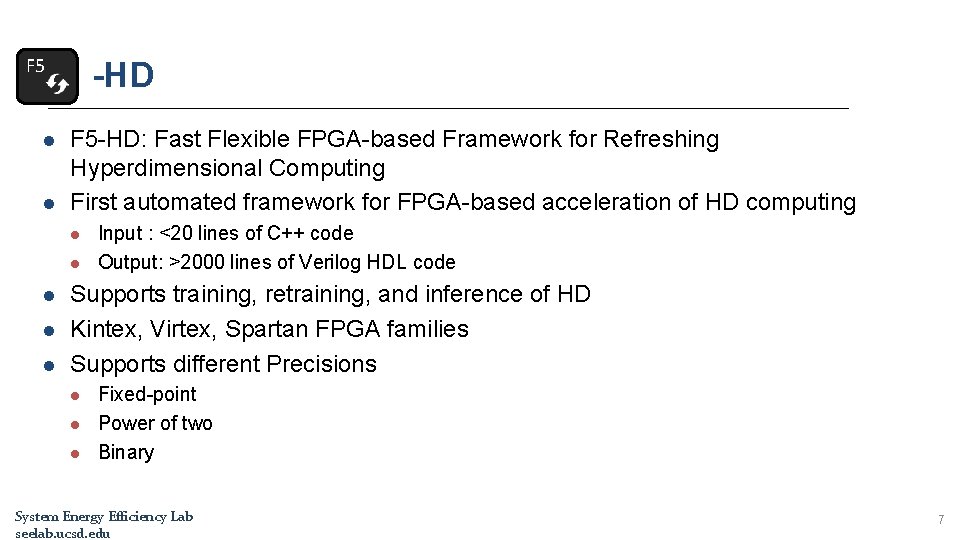

F5-HD

- F5-HD: Fast Flexible FPGA-based Framework for Refreshing Hyperdimensional Computing
- First automated framework for FPGA-based acceleration of HD computing
  - Input: <20 lines of C++ code
  - Output: >2000 lines of Verilog HDL code
- Supports training, retraining, and inference of HD
- Supports Kintex, Virtex, and Spartan FPGA families
- Supports different precisions
  - Fixed-point
  - Power of two
  - Binary
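
To make the three precisions concrete, the sketch below derives a binary and a power-of-two model from integer class-hypervector elements. The quantization rules are assumptions chosen for illustration (sign thresholding for binary, rounding the exponent to the nearest integer for power of two); the slides do not specify how F5-HD quantizes. The last function shows the usual motivation for power-of-two weights: a multiplication becomes a shift.

```python
import math


def to_binary(hv):
    """Binary model (assumed rule): 1 for non-negative elements, 0 otherwise."""
    return [1 if v >= 0 else 0 for v in hv]


def to_power_of_two(hv):
    """Power-of-two model (assumed rule): round each non-zero integer element
    to the signed power of two whose exponent is nearest to log2(|v|)."""
    quantized = []
    for v in hv:
        if v == 0:
            quantized.append(0)
            continue
        exponent = round(math.log2(abs(v)))
        quantized.append(int(math.copysign(2 ** exponent, v)))
    return quantized


def multiply_by_shift(x, weight):
    """Multiply integer x by a signed power-of-two weight using only a shift."""
    if weight == 0:
        return 0
    shift = abs(weight).bit_length() - 1  # exponent of the power of two
    product = x << shift
    return product if weight > 0 else -product
```

For example, `to_power_of_two([3, -5, 0, 1, 6])` maps the elements to powers of two, after which `multiply_by_shift` can replace a full multiplier.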

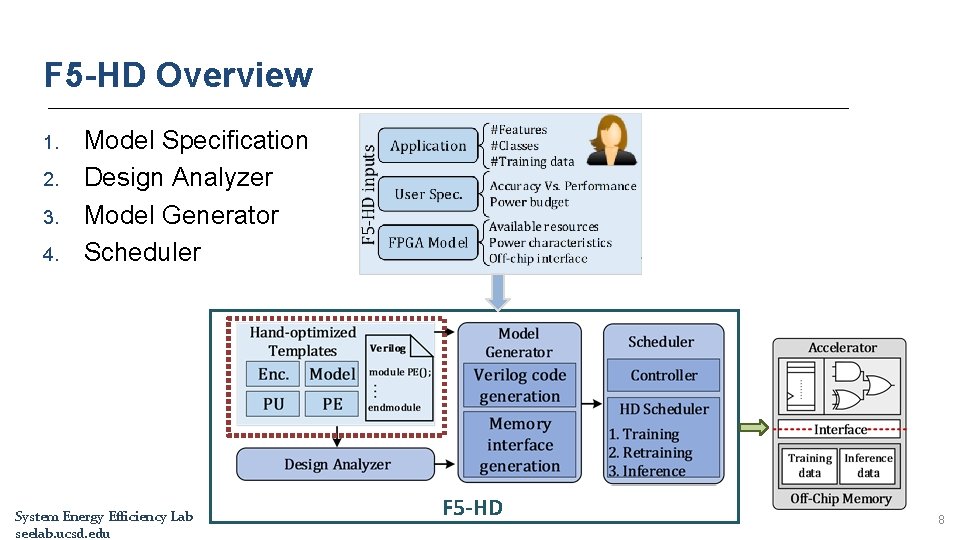

F5-HD Overview

1. Model Specification
2. Design Analyzer
3. Model Generator
4. Scheduler

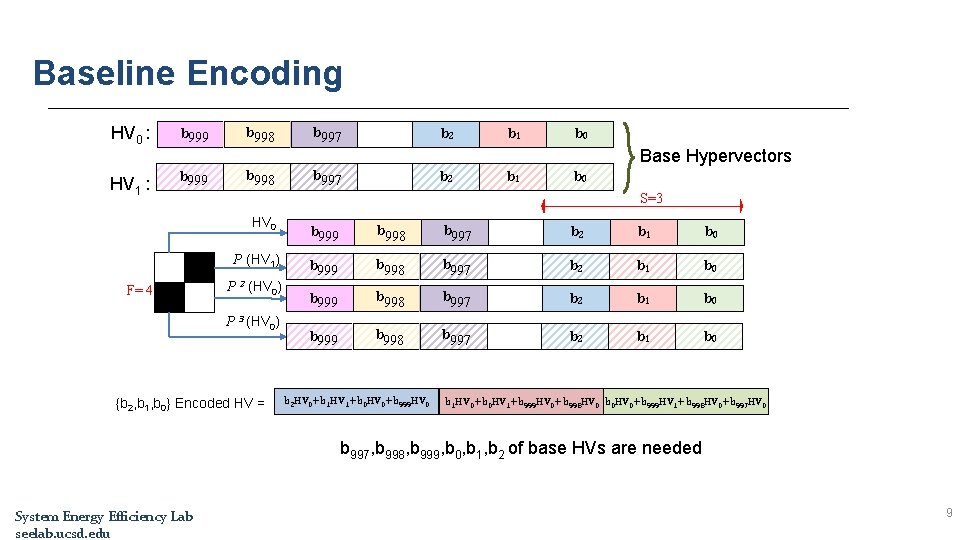

Baseline Encoding

- Base hypervectors:
  - HV0: b999 b998 b997 ... b2 b1 b0
  - HV1: b999 b998 ... b2 b1 b0
- S = 3, F = 4: the permuted inputs are HV0, P(HV1), P²(HV0), P³(HV0)
- Segment {b2, b1, b0} of the encoded HV:
  - b2 = b2 HV0 + b1 HV1 + b0 HV0 + b999 HV0
  - b1 = b1 HV0 + b0 HV1 + b999 HV0 + b998 HV0
  - b0 = b0 HV0 + b999 HV1 + b998 HV0 + b997 HV0
- Bits b997, b998, b999, b0, b1, b2 of the base HVs are needed
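
The index pattern in the baseline example can be reproduced with a short sketch. It assumes that the permutation P is a circular shift by one position and that feature i selects one of the base hypervectors (its "level") and is permuted i times, so encoded dimension d sums base bit (d - i) mod D over all features. This reading matches the three sums on the slide for levels [0, 1, 0, 0]; the function names are hypothetical.

```python
def rotate(hv, k):
    """Circular shift by k positions: result[d] == hv[(d - k) % len(hv)]."""
    k %= len(hv)
    return hv[-k:] + hv[:-k] if k else list(hv)


def encode(levels, base_hvs):
    """Full encoding: add the i-times permuted base hypervector of each feature i."""
    dim = len(base_hvs[0])
    encoded = [0] * dim
    for i, level in enumerate(levels):
        for d, v in enumerate(rotate(base_hvs[level], i)):
            encoded[d] += v
    return encoded


def encode_dimension(d, levels, base_hvs):
    """One encoded dimension computed directly from the base-hypervector bits."""
    dim = len(base_hvs[0])
    return sum(base_hvs[level][(d - i) % dim] for i, level in enumerate(levels))


def needed_base_indices(segment, num_features, dim):
    """Base-hypervector bit positions required to produce a segment of dimensions."""
    return sorted({(d - i) % dim for d in segment for i in range(num_features)})
```

For the slide's case, `needed_base_indices([0, 1, 2], 4, 1000)` yields the six positions 0, 1, 2, 997, 998, 999, that is S + F - 1 bits for a segment of S dimensions and F features, including bits that wrap around from the far end of the hypervector.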

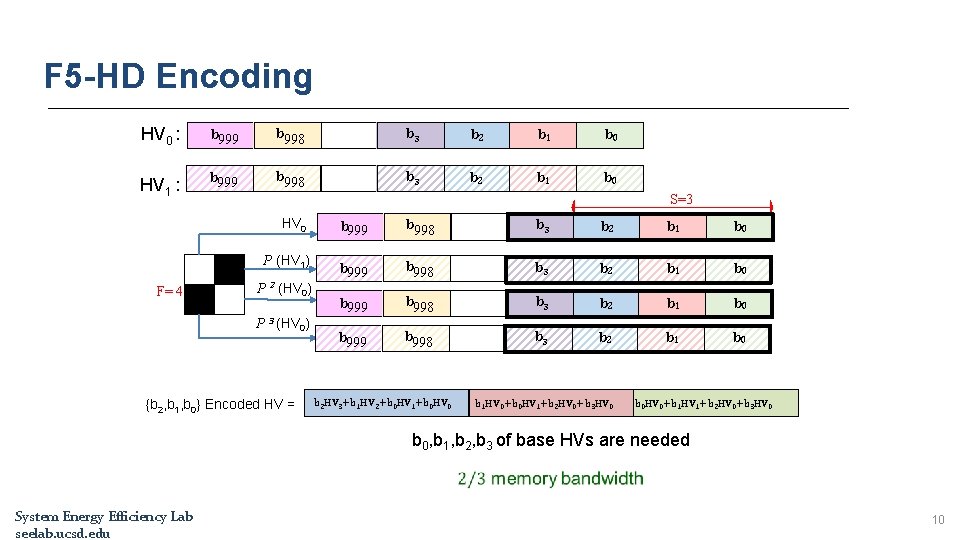

F5-HD Encoding

- Base hypervectors:
  - HV0: b999 b998 ... b3 b2 b1 b0
  - HV1: b999 b998 ... b3 b2 b1 b0
- S = 3, F = 4: the permuted inputs are HV0, P(HV1), P²(HV0), P³(HV0)
- Segment {b2, b1, b0} of the encoded HV:
  - b2 = b2 HV3 + b1 HV2 + b0 HV1 + b0 HV0
  - b1 = b1 HV0 + b0 HV1 + b2 HV0 + b3 HV0
  - b0 = b0 HV0 + b1 HV1 + b2 HV0 + b3 HV0
- Only bits b0, b1, b2, b3 of the base HVs are needed

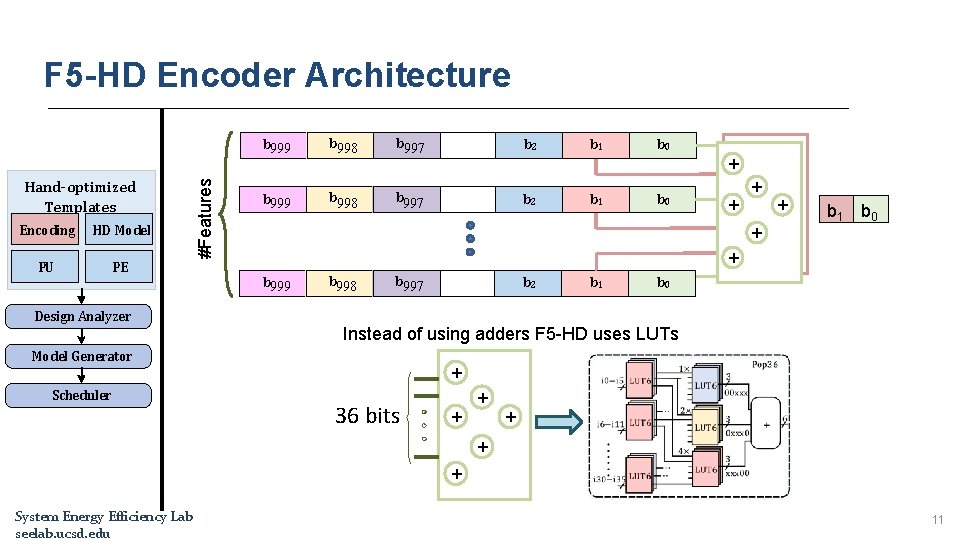

F5-HD Encoder Architecture

- Instead of using adders, F5-HD uses LUTs
- [Figure: encoder datapath. Labels: #Features; base-hypervector bits b999, b998, b997, ..., b2, b1, b0 feeding addition stages; 36 bits]
- [Sidebar: F5-HD components. Hand-optimized templates (Encoding, HD Model, PU, PE), Design Analyzer, Model Generator, Scheduler]

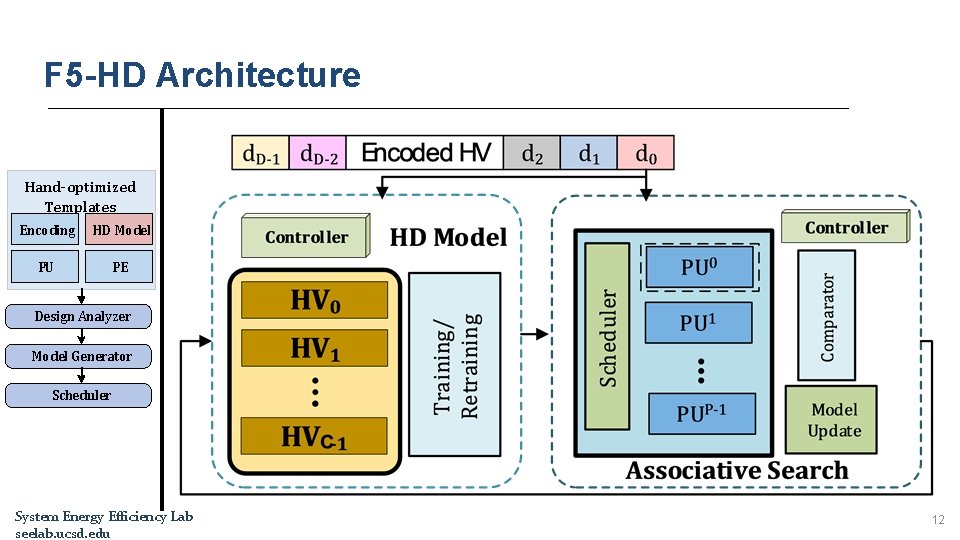

F5-HD Architecture

- Hand-optimized templates: Encoding, HD Model, PU, PE
- Design Analyzer
- Model Generator
- Scheduler

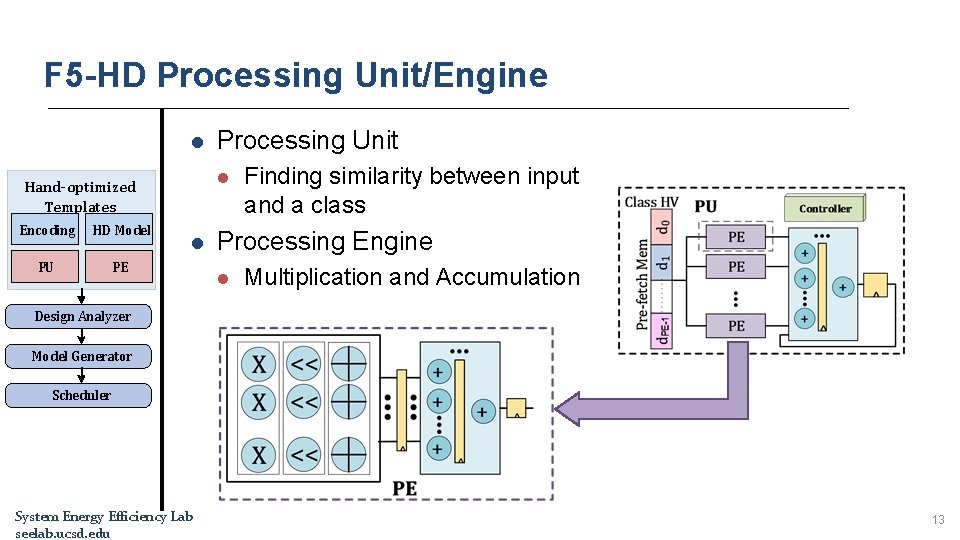

F5-HD Processing Unit/Engine

- Processing Unit (PU)
  - Finds the similarity between the input and a class
- Processing Engine (PE)
  - Performs multiplication and accumulation

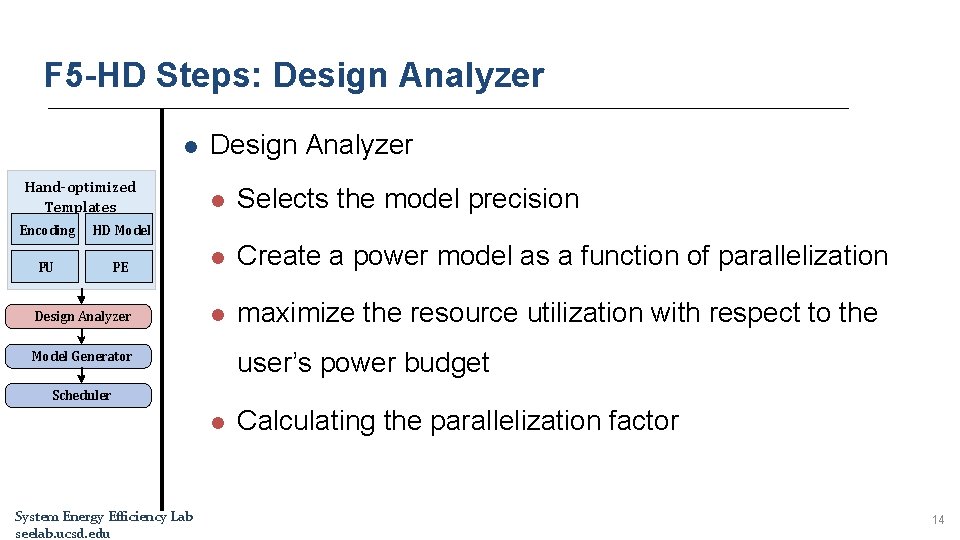

F5-HD Steps: Design Analyzer

- Design Analyzer
  - Selects the model precision
  - Creates a power model as a function of parallelization
  - Maximizes the resource utilization with respect to the user's power budget
  - Calculates the parallelization factor
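
One way to picture the last three steps is a search for the largest parallelization factor that fits both the power budget and the available resources. The sketch below is purely illustrative: the linear power model, the LUT counts, and every name and number in it are hypothetical, and the slides do not describe the actual model used by the Design Analyzer.

```python
def linear_power_model(static_watts, watts_per_unit):
    """Hypothetical power model: static power plus a fixed cost per parallel unit."""
    def power(parallel_units):
        return static_watts + watts_per_unit * parallel_units
    return power


def max_parallelization(power_budget_watts, power_model,
                        luts_per_unit, luts_available, max_units=4096):
    """Largest number of parallel units within the power budget and LUT count.

    Assumes power and resource usage both grow monotonically with the number
    of units, so the search can stop at the first violation.
    """
    best = 0
    for units in range(1, max_units + 1):
        if units * luts_per_unit > luts_available:
            break  # out of FPGA resources
        if power_model(units) > power_budget_watts:
            break  # over the user's power budget
        best = units
    return best


if __name__ == "__main__":
    model = linear_power_model(static_watts=1.0, watts_per_unit=0.0625)
    print(max_parallelization(5.0, model, luts_per_unit=2000, luts_available=100_000))
```

In this toy setting the design is resource-bound when LUTs run out before the power budget is reached, and power-bound otherwise; a return value of 0 means even a single unit does not fit.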

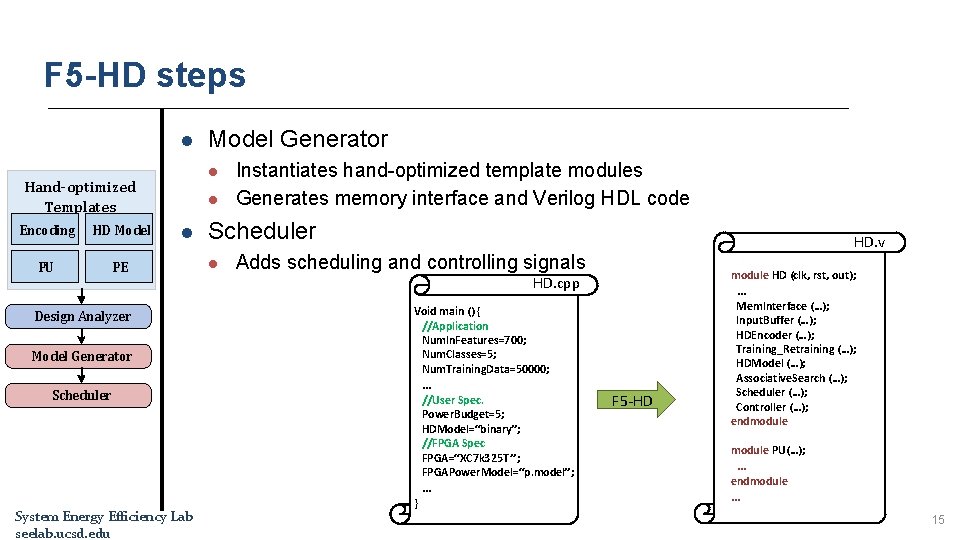

F5-HD Steps: Model Generator and Scheduler

- Model Generator
  - Instantiates hand-optimized template modules
  - Generates memory interface and Verilog HDL code
- Scheduler
  - Adds scheduling and controlling signals

HD.cpp (input to F5-HD):

    …
    void main() {
      // Application
      NumInFeatures = 700;
      NumClasses = 5;
      NumTrainingData = 50000;
      …
      // User Spec.
      PowerBudget = 5;
      HDModel = "binary";
      // FPGA Spec
      FPGA = "XC7k325T";
      FPGAPowerModel = "p.model";
    }
    …

HD.v (generated by F5-HD):

    module HD (clk, rst, out);
      MemInterface (…);
      InputBuffer (…);
      HDEncoder (…);
      Training_Retraining (…);
      HDModel (…);
      AssociativeSearch (…);
      Scheduler (…);
      Controller (…);
    endmodule
    PU(…);
    …
    endmodule
    …

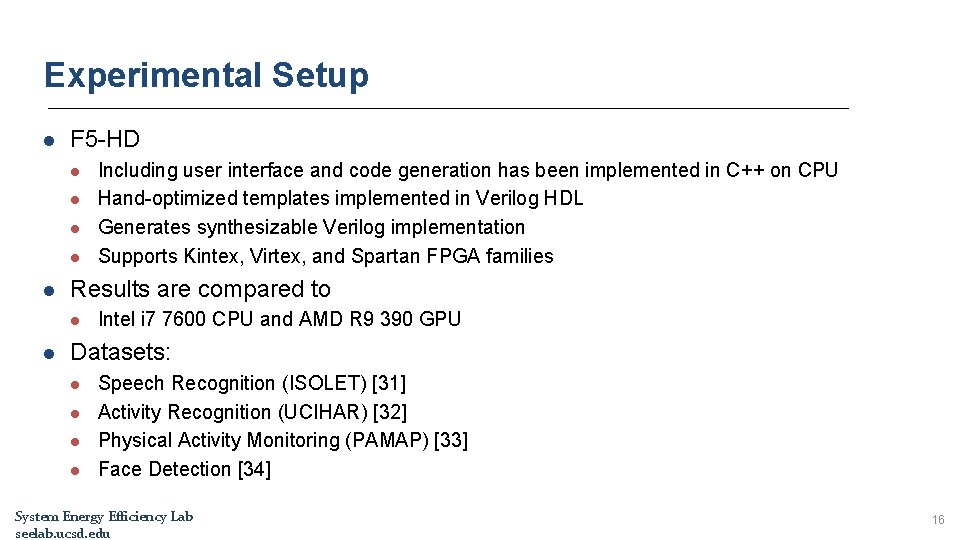

Experimental Setup

- F5-HD
  - The framework, including user interface and code generation, has been implemented in C++ on CPU
  - Hand-optimized templates are implemented in Verilog HDL
  - Generates a synthesizable Verilog implementation
  - Supports Kintex, Virtex, and Spartan FPGA families
- Results are compared to
  - Intel i7 7600 CPU and AMD R9 390 GPU
- Datasets:
  - Speech Recognition (ISOLET) [31]
  - Activity Recognition (UCIHAR) [32]
  - Physical Activity Monitoring (PAMAP) [33]
  - Face Detection [34]

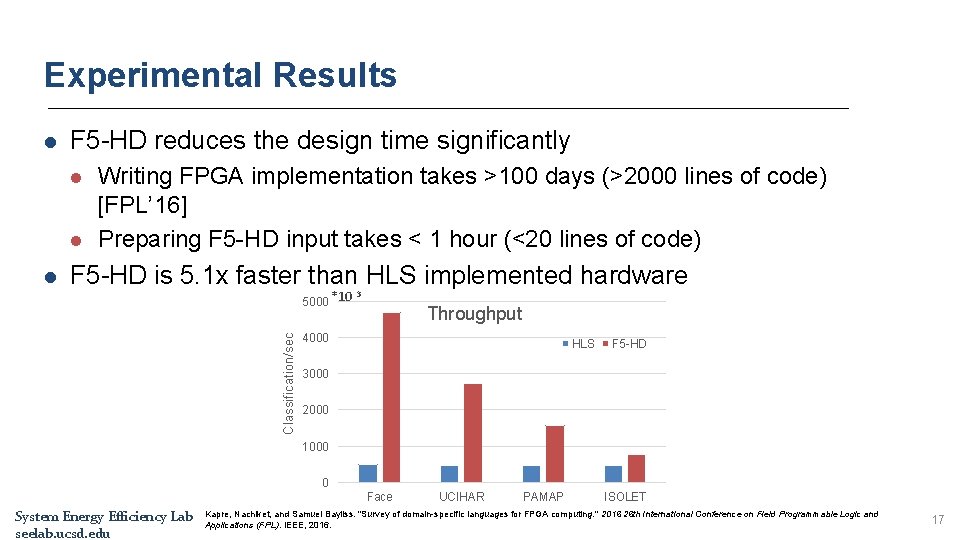

Experimental Results

- F5-HD reduces the design time significantly
  - Writing an FPGA implementation takes >100 days (>2000 lines of code) [FPL'16]
  - Preparing F5-HD input takes <1 hour (<20 lines of code)
- F5-HD is 5.1× faster than HLS-implemented hardware
- [Figure: throughput (×10³ classifications/sec, axis 0 to 5000) of HLS vs. F5-HD on Face, UCIHAR, PAMAP, and ISOLET]

[FPL'16] Kapre, Nachiket, and Samuel Bayliss. "Survey of domain-specific languages for FPGA computing." 2016 26th International Conference on Field Programmable Logic and Applications (FPL). IEEE, 2016.

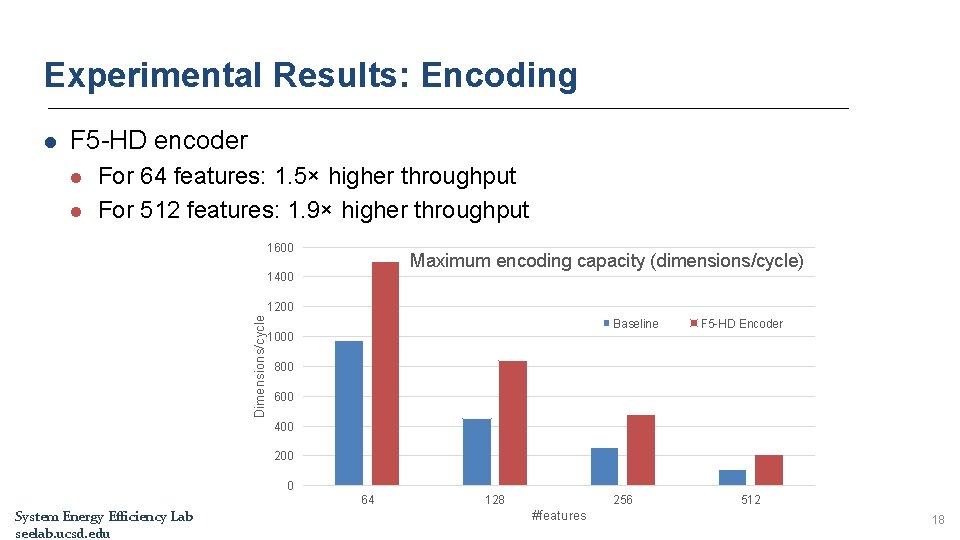

Experimental Results: Encoding

- F5-HD encoder
  - For 64 features: 1.5× higher throughput
  - For 512 features: 1.9× higher throughput
- [Figure: maximum encoding capacity (dimensions/cycle, axis 0 to 1600) of the baseline vs. the F5-HD encoder for 64, 128, 256, and 512 features]

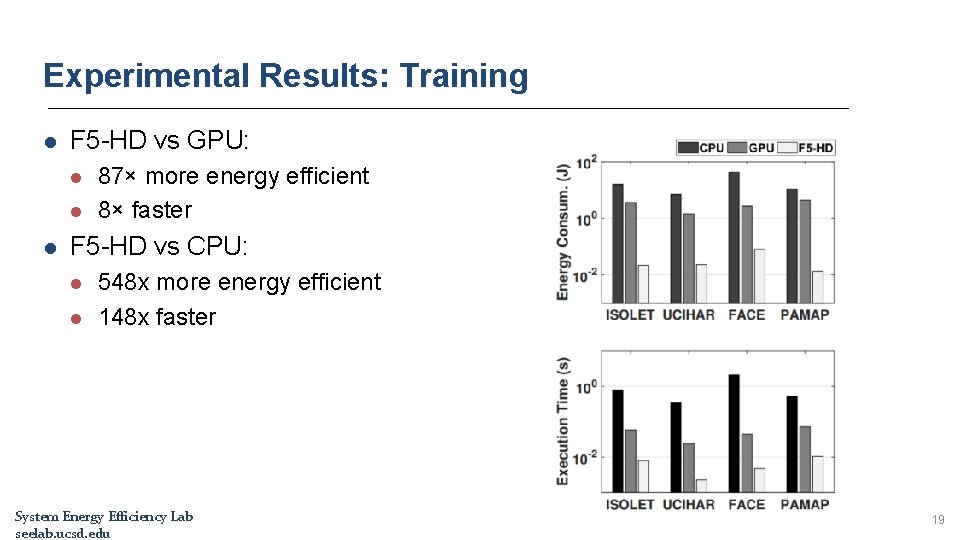

Experimental Results: Training

- F5-HD vs. GPU:
  - 87× more energy efficient
  - 8× faster
- F5-HD vs. CPU:
  - 548× more energy efficient
  - 148× faster

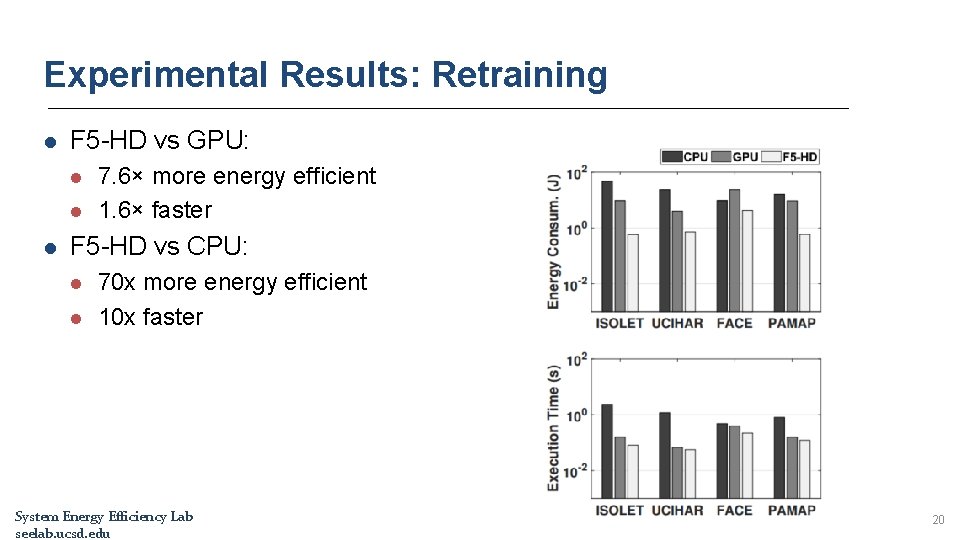

Experimental Results: Retraining

- F5-HD vs. GPU:
  - 7.6× more energy efficient
  - 1.6× faster
- F5-HD vs. CPU:
  - 70× more energy efficient
  - 10× faster

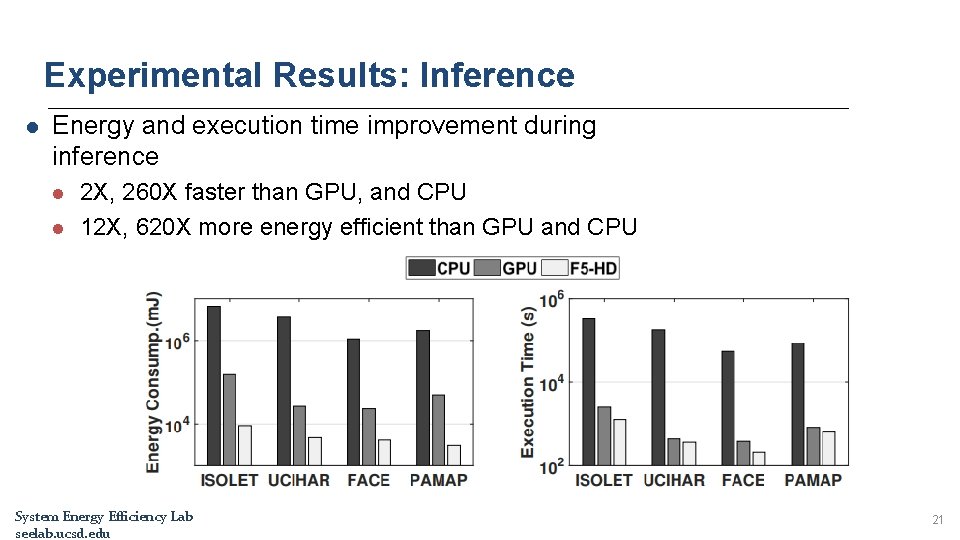

Experimental Results: Inference

- Energy and execution time improvement during inference
  - 2× and 260× faster than GPU and CPU, respectively
  - 12× and 620× more energy efficient than GPU and CPU, respectively

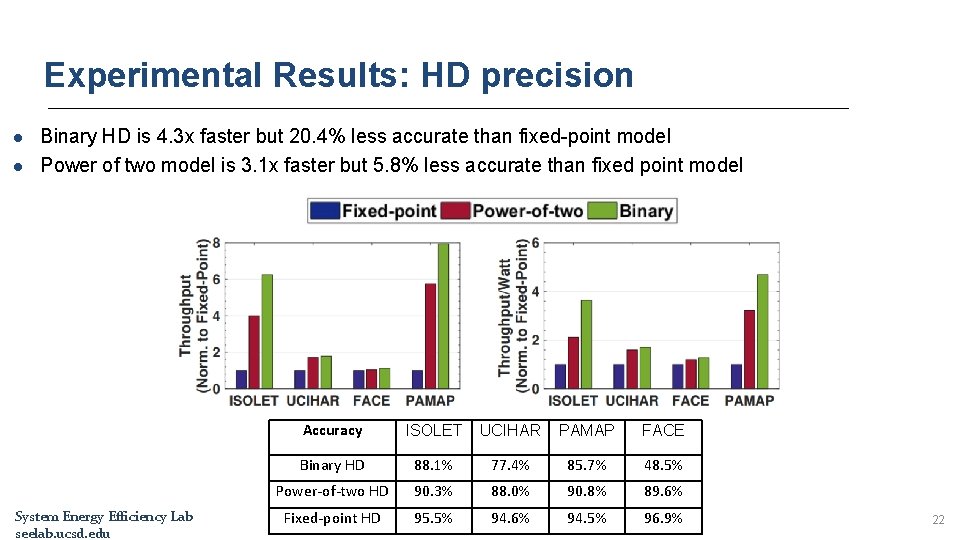

Experimental Results: HD Precision

- Binary HD is 4.3× faster but 20.4% less accurate than the fixed-point model
- The power-of-two model is 3.1× faster but 5.8% less accurate than the fixed-point model

| Accuracy | ISOLET | UCIHAR | PAMAP | FACE |
|---|---|---|---|---|
| Binary HD | 88.1% | 77.4% | 85.7% | 48.5% |
| Power-of-two HD | 90.3% | 88.0% | 90.8% | 89.6% |
| Fixed-point HD | 95.5% | 94.6% | 94.5% | 96.9% |
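
The accuracy gaps quoted on this slide can be cross-checked against the per-dataset table. The sketch below assumes the gaps are differences of the unweighted mean accuracy over the four datasets, measured in percentage points; that interpretation is an assumption, not something the slide states.

```python
# Accuracy (%) per dataset, in the order ISOLET, UCIHAR, PAMAP, FACE (from the table).
ACCURACY = {
    "Binary HD": [88.1, 77.4, 85.7, 48.5],
    "Power-of-two HD": [90.3, 88.0, 90.8, 89.6],
    "Fixed-point HD": [95.5, 94.6, 94.5, 96.9],
}


def mean(values):
    """Unweighted arithmetic mean."""
    return sum(values) / len(values)


def average_drop(model, reference="Fixed-point HD"):
    """Mean accuracy of the reference minus mean accuracy of the model, in points."""
    return mean(ACCURACY[reference]) - mean(ACCURACY[model])


if __name__ == "__main__":
    for name in ("Binary HD", "Power-of-two HD"):
        print(f"{name}: {average_drop(name):.2f} points below fixed-point")
```

Under that assumption the averages come out at roughly 20.4 to 20.5 points for binary and 5.7 points for power of two, close to the quoted 20.4% and 5.8%; the small difference for the power-of-two model may stem from rounding in the table entries.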

Conclusion

- F5-HD: an automated framework for FPGA-based acceleration of HD computing
- F5-HD reduces the design time from 3 months to less than an hour
- F5-HD supports:
  - Fixed-point, power-of-two, and binary models
  - Training, retraining, and inference of HD
  - Xilinx FPGAs
- F5-HD is:
  - ~5× faster than the HLS tool implementation
  - ~87× more energy efficient and ~8× faster during training than GPU
  - 12× more energy efficient and 2× faster during inference than GPU

- Slides: 23