Extending the ICARUS Cognitive Architecture

- Slides: 28

Extending the ICARUS Cognitive Architecture

Pat Langley
School of Computing and Informatics
Arizona State University
Tempe, Arizona USA

Thanks to D. Choi, T. Konik, U. Kutur, D. Nau, S. Ohlsson, S. Rogers, and D. Shapiro for their many contributions. This talk reports research partly funded by grants from DARPA IPTO, which is not responsible for its contents.

The ICARUS Architecture

ICARUS is a theory of the human cognitive architecture that posits:

1. Short-term memories are distinct from long-term stores
2. Memories contain modular elements cast as symbolic structures
3. Long-term structures are accessed through pattern matching
4. Cognition occurs in retrieval/selection/action cycles
5. Learning involves monotonic addition of elements to memory
6. Learning is incremental and interleaved with performance

It shares these assumptions with other cognitive architectures like Soar (Laird et al., 1987) and ACT-R (Anderson, 1993).

Distinctive Features of ICARUS

However, ICARUS also makes assumptions that distinguish it from these architectures:

1. Cognition is grounded in perception and action
2. Categories and skills are separate cognitive entities
3. Short-term elements are instances of long-term structures
4. Inference and execution are more basic than problem solving
5. Skill hierarchies are learned in a cumulative manner

Some of these tenets also appear in Bonasso et al.'s (2003) 3T, Freed's APEX, and Sun et al.'s (2001) CLARION.

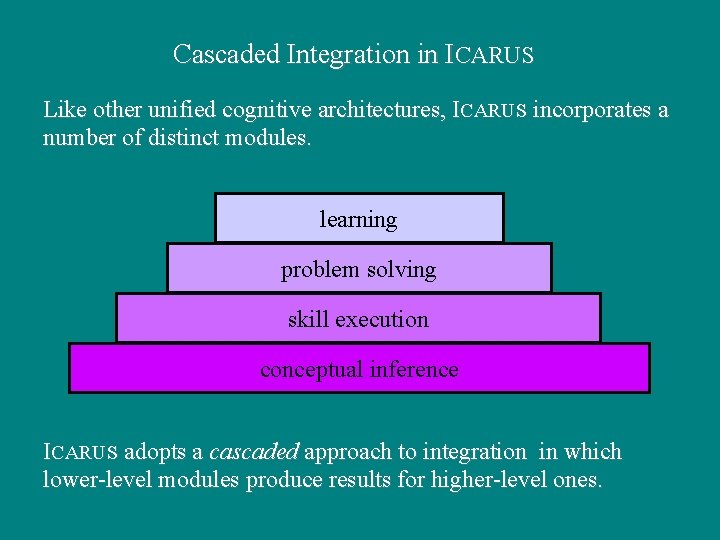

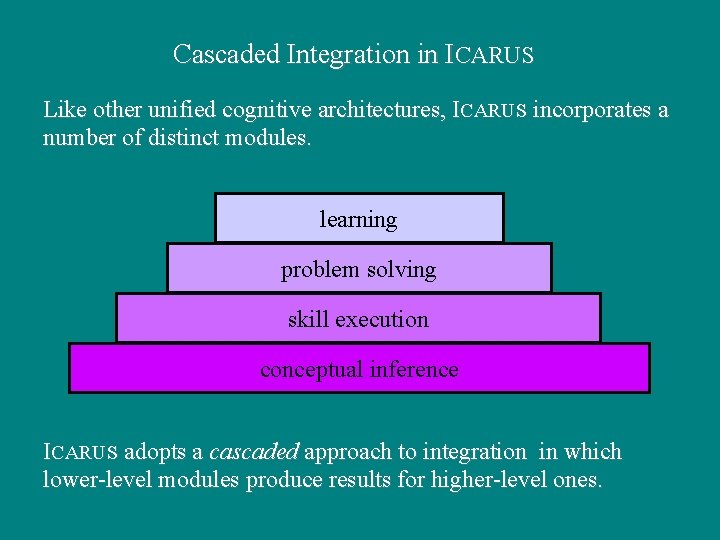

Cascaded Integration in ICARUS

Like other unified cognitive architectures, ICARUS incorporates a number of distinct modules.

[Figure: the modules, listed from top to bottom: learning, problem solving, skill execution, conceptual inference.]

ICARUS adopts a cascaded approach to integration in which lower-level modules produce results for higher-level ones.

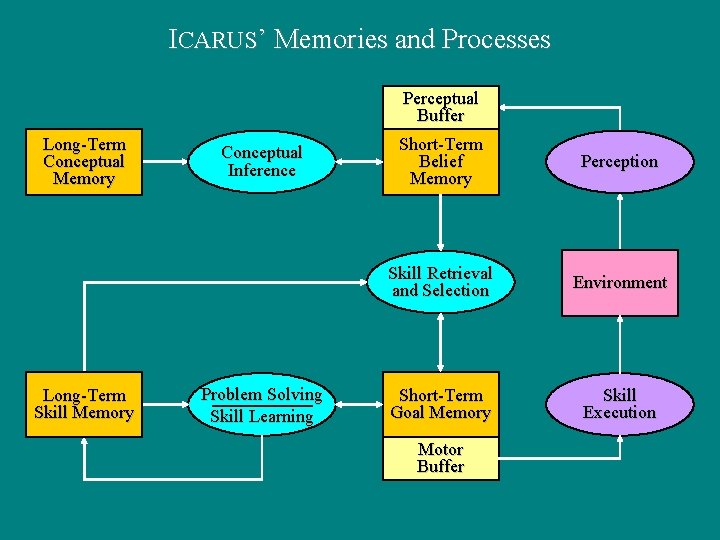

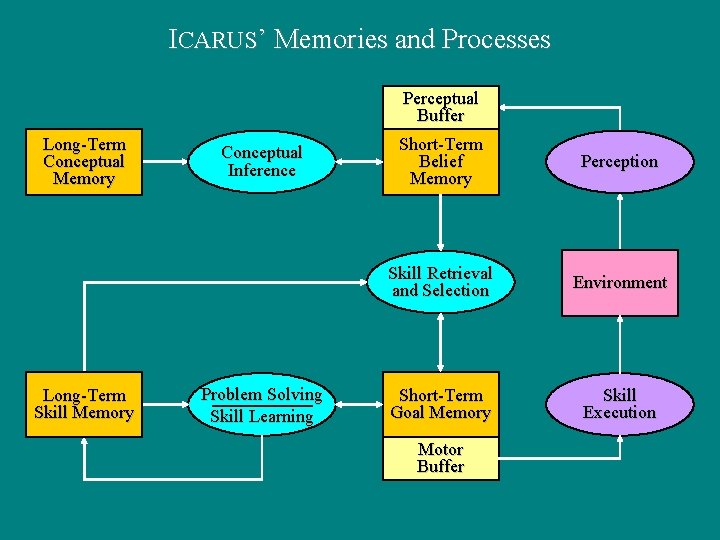

ICARUS' Memories and Processes

[Figure: diagram linking the Environment, Perception, Perceptual Buffer, Conceptual Inference, Long-Term Conceptual Memory, Short-Term Belief Memory, Long-Term Skill Memory, Short-Term Goal Memory, Skill Retrieval and Selection, Skill Execution, Motor Buffer, Problem Solving, and Skill Learning.]

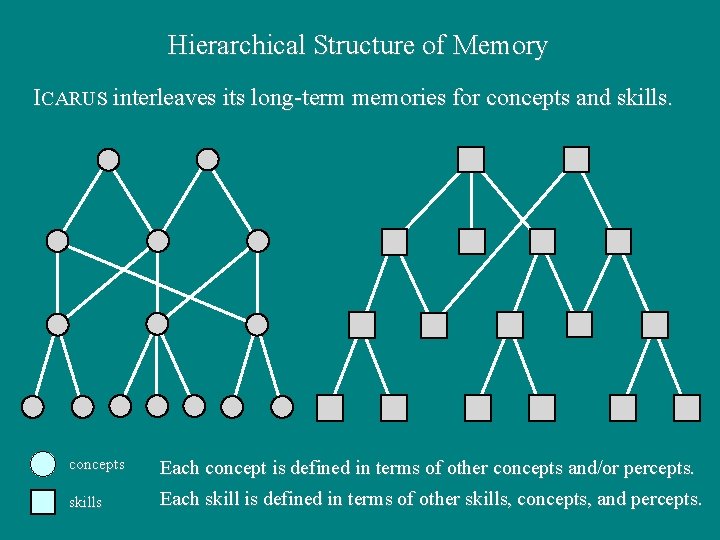

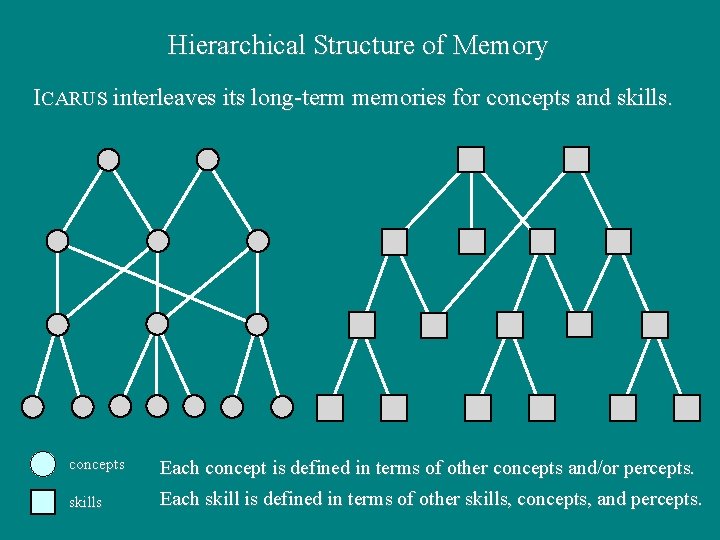

Hierarchical Structure of Memory

ICARUS interleaves its long-term memories for concepts and skills:

· Concepts: each concept is defined in terms of other concepts and/or percepts.
· Skills: each skill is defined in terms of other skills, concepts, and percepts.
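As a rough illustration of these two interleaved hierarchies, the Python sketch below encodes a few of the in-city driving concepts and skills that appear later in this talk as plain data structures. The `Concept` and `Skill` classes and the `concept_depth` helper are hypothetical names, not ICARUS's actual internals; variables, tests, and negation are dropped, and concepts not listed in the dictionary are assumed to be defined directly over percepts.

```python
from dataclasses import dataclass, field


@dataclass
class Concept:
    """Hypothetical encoding: a concept is defined by percepts and/or other concepts."""
    head: str
    percepts: list = field(default_factory=list)   # perceived object types it refers to
    relations: list = field(default_factory=list)  # lower-level concepts it is built from


@dataclass
class Skill:
    """Hypothetical encoding: a skill refers to percepts, concepts, and other skills."""
    head: str                                       # the concept the skill achieves
    percepts: list = field(default_factory=list)
    start: list = field(default_factory=list)       # concepts required to begin
    subgoals: list = field(default_factory=list)    # ordered heads of lower-level skills
    actions: list = field(default_factory=list)     # non-empty only for primitive skills

    def is_primitive(self):
        return bool(self.actions)


def concept_depth(name, concepts):
    """Levels above the percepts; names missing from `concepts` are assumed percept-level."""
    if name not in concepts:
        return 1
    parts = concepts[name].relations
    return 1 + max((concept_depth(r, concepts) for r in parts), default=0)


concepts = {c.head: c for c in [
    Concept("in-lane", percepts=["self", "line"]),
    Concept("driving-well-in-segment", percepts=["self", "segment", "line"],
            relations=["in-segment", "in-lane", "aligned-with-lane-in-segment",
                       "centered-in-lane", "steering-wheel-straight"]),
    # The original negates lane-to-right; this sketch ignores negation.
    Concept("in-rightmost-lane", percepts=["self", "segment", "line"],
            relations=["driving-well-in-segment", "last-lane", "lane-to-right"]),
]}

skills = [
    Skill("in-rightmost-lane", percepts=["self", "line"], start=["last-lane"],
          subgoals=["driving-well-in-segment"]),
    Skill("in-segment", percepts=["self", "intersection", "segment"],
          start=["in-intersection-for-right-turn"], actions=["steer 1"]),
]
```

Keeping concepts and skills as separate structures mirrors the second distinctive feature above, while the skill fields point back into the concept hierarchy by name.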

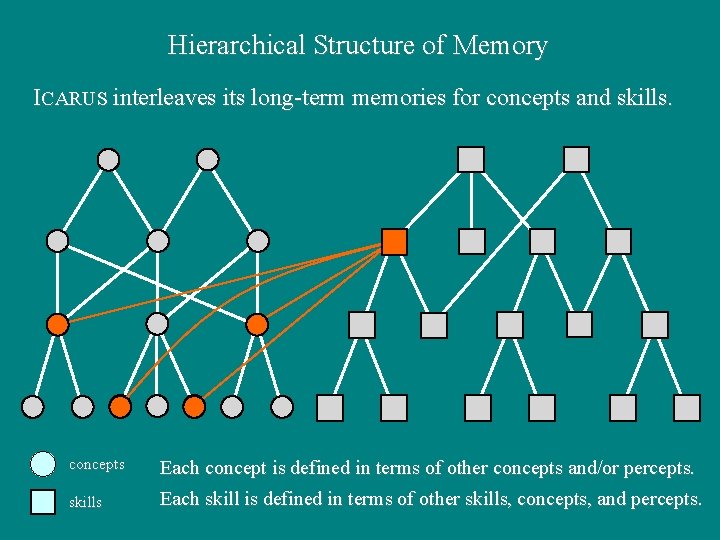

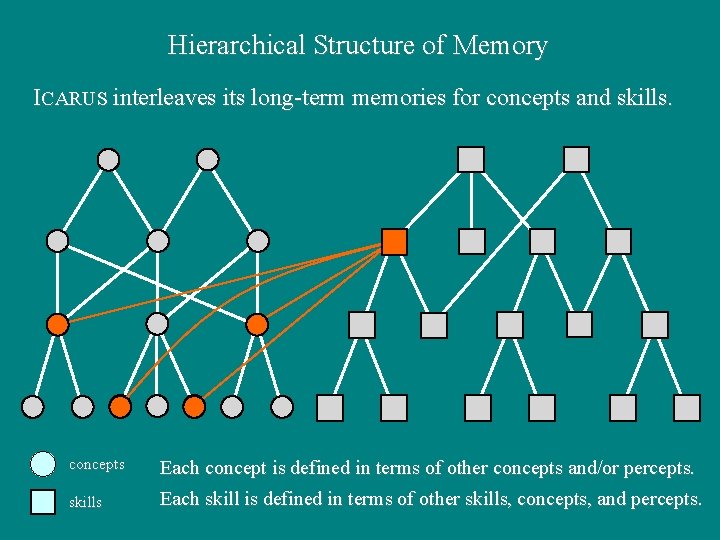


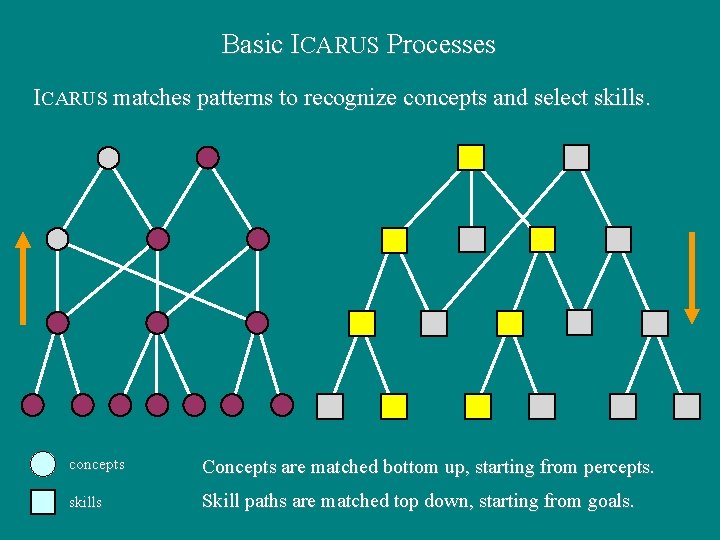

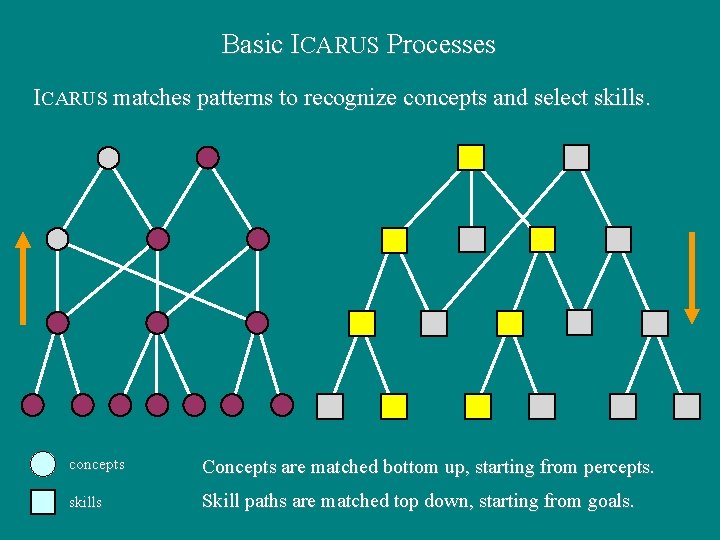

Basic ICARUS Processes

ICARUS matches patterns to recognize concepts and select skills:

· Concepts are matched bottom up, starting from percepts.
· Skill paths are matched top down, starting from goals.
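The two matching directions can be sketched in Python, under the simplifying assumption that percepts and concepts are ground propositional symbols rather than ICARUS's variable-bearing patterns. `infer` adds concepts bottom up until nothing changes; `skill_path` descends top down from a goal, through skills whose start conditions hold, to a primitive action. All symbol names in the example, including the percepts and the action `steer-toward-center`, are hypothetical.

```python
def infer(percepts, concepts):
    """Bottom-up inference: add every concept whose parts are all believed, to a fixpoint."""
    beliefs = set(percepts)
    changed = True
    while changed:
        changed = False
        for head, parts in concepts.items():
            if head not in beliefs and all(p in beliefs for p in parts):
                beliefs.add(head)
                changed = True
    return beliefs


def skill_path(goal, skills, beliefs):
    """Top-down selection: descend from a goal to an applicable primitive skill."""
    for skill in skills.get(goal, []):
        if not all(c in beliefs for c in skill["start"]):
            continue                              # this clause cannot start yet
        if "action" in skill:                     # primitive skill: the path ends here
            return [goal, skill["action"]]
        unmet = [s for s in skill["subgoals"] if s not in beliefs]
        if unmet:                                 # work on the first unmet subgoal
            rest = skill_path(unmet[0], skills, beliefs)
            if rest is not None:
                return [goal] + rest
    return None


percepts = {"line-close", "wheel-angle-zero"}     # hypothetical propositional percepts
concepts = {
    "in-lane": ["line-close"],
    "steering-wheel-straight": ["wheel-angle-zero"],
    "driving-well-in-segment": ["in-lane", "centered-in-lane", "steering-wheel-straight"],
}
skills = {
    "driving-well-in-segment": [{"start": ["steering-wheel-straight"],
                                 "subgoals": ["in-lane", "centered-in-lane",
                                              "steering-wheel-straight"]}],
    "centered-in-lane": [{"start": [], "action": "steer-toward-center"}],
}
beliefs = infer(percepts, concepts)
path = skill_path("driving-well-in-segment", skills, beliefs)
```

Here inference yields in-lane and steering-wheel-straight but not driving-well-in-segment, so the top-down pass selects the path through the one unmet subgoal, centered-in-lane.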

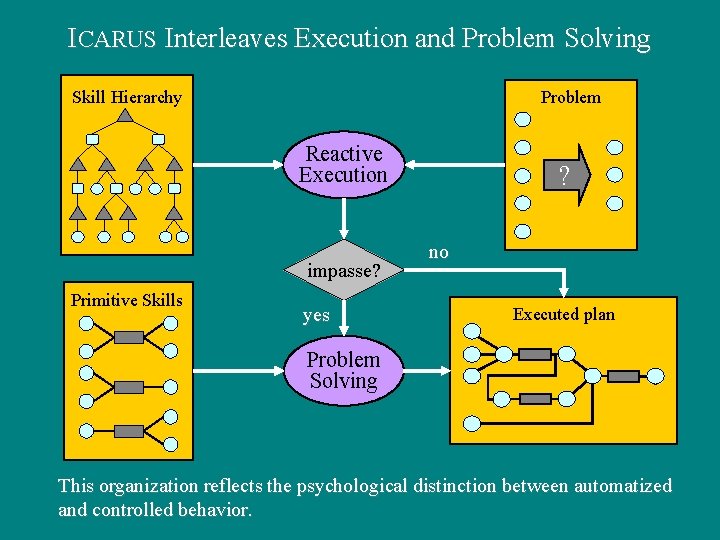

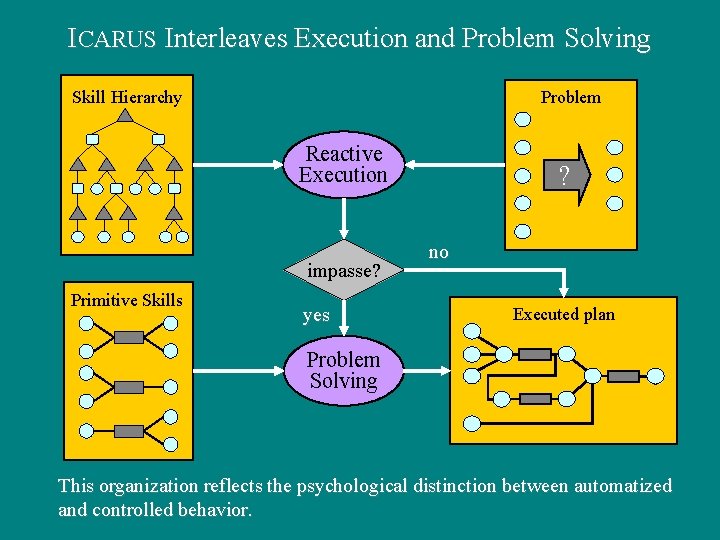

ICARUS Interleaves Execution and Problem Solving

[Figure: flowchart in which a problem is handed to reactive execution over the skill hierarchy and primitive skills; if no impasse arises, the result is an executed plan, and if an impasse arises, control passes to problem solving.]

This organization reflects the psychological distinction between automatized and controlled behavior.
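The flowchart's central decision can be sketched as a single Python function, assuming two hypothetical callables: `find_path`, which stands in for reactive execution over the skill hierarchy, and `solve`, which stands in for problem solving. The toy versions of both below exist only to exercise the branches.

```python
def cycle(goal, beliefs, find_path, solve):
    """One decision: reactive execution if a skill path applies, else problem solving."""
    if goal in beliefs:
        return ("satisfied", None)
    path = find_path(goal, beliefs)
    if path is not None:                        # no impasse: automatized behavior
        return ("execute", path[-1])            # run the primitive skill at the path's end
    return ("impasse", solve(goal, beliefs))    # impasse: controlled behavior


# Toy stand-ins for the skill hierarchy and the problem solver (hypothetical).
def find_path(goal, beliefs):
    return [goal, "steer-toward-center"] if goal == "centered-in-lane" else None


def solve(goal, beliefs):
    return f"means-ends analysis on {goal}"
```

Problem solving is reached only when the stored skills offer no applicable path, which is the sense in which execution is more basic than problem solving in ICARUS.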

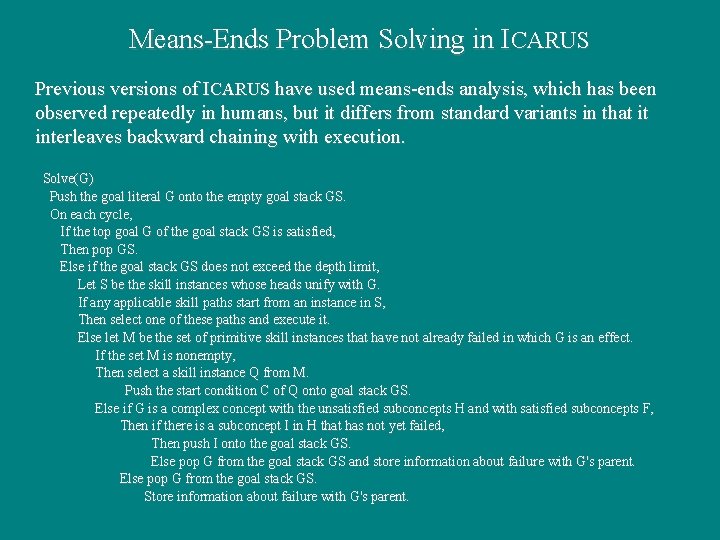

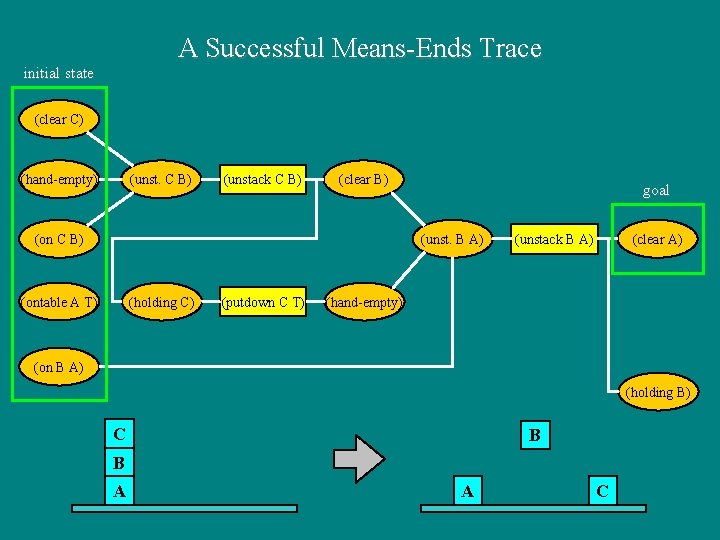

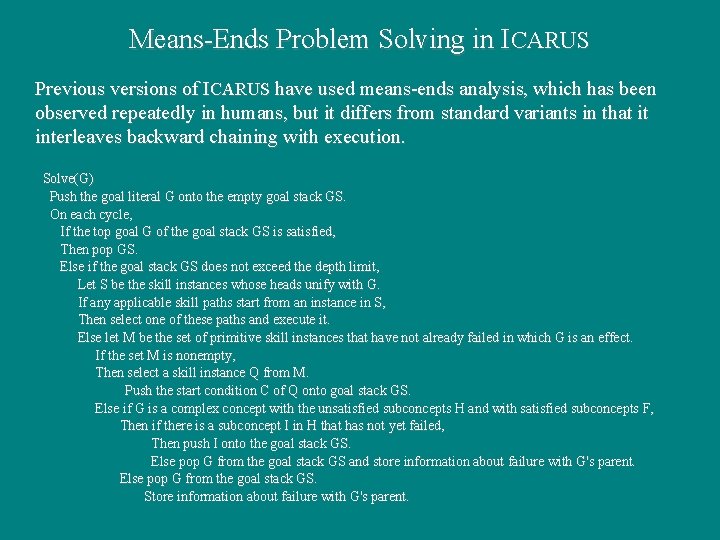

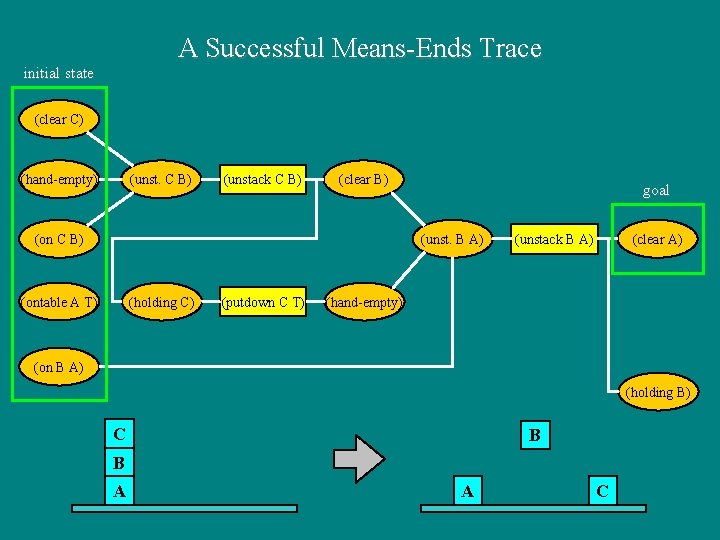

Means-Ends Problem Solving in ICARUS

Previous versions of ICARUS have used means-ends analysis, a strategy that has been observed repeatedly in human problem solving. ICARUS's variant differs from standard ones in that it interleaves backward chaining with execution:

    Solve(G)
      Push the goal literal G onto the empty goal stack GS.
      On each cycle,
        If the top goal G of the goal stack GS is satisfied,
        Then pop GS.
        Else if the goal stack GS does not exceed the depth limit,
          Let S be the skill instances whose heads unify with G.
          If any applicable skill paths start from an instance in S,
          Then select one of these paths and execute it.
          Else let M be the set of primitive skill instances that
               have not already failed in which G is an effect.
            If the set M is nonempty,
            Then select a skill instance Q from M.
                 Push the start condition C of Q onto goal stack GS.
            Else if G is a complex concept with the unsatisfied
                 subconcepts H and with satisfied subconcepts F,
            Then if there is a subconcept I in H that has not yet failed,
                 Then push I onto the goal stack GS.
                 Else pop G from the goal stack GS and store
                      information about failure with G's parent.
            Else pop G from the goal stack GS.
                 Store information about failure with G's parent.
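A minimal Python sketch of this goal-stack procedure follows, applied to the Blocks World problem from the means-ends trace at the end of the talk. It simplifies heavily, and each simplification is an assumption: skills are ground STRIPS-style operators with add and delete lists (a hypothetical encoding), only unstack and putdown exist, the table argument T is dropped, there are no nonprimitive skill paths or complex concepts, and the first unmet start literal of a chained skill is pushed rather than a single start concept. When no option remains, it pops the goal and marks the skill chosen for the parent as failed.

```python
from dataclasses import dataclass
from itertools import permutations


@dataclass(frozen=True)
class Skill:
    """Hypothetical primitive skill: start conditions plus add and delete effects."""
    name: str
    start: frozenset
    adds: frozenset
    deletes: frozenset


def blocks_skills(blocks):
    """Only unstack and putdown (an assumption), enough for the Blocks World trace."""
    skills = []
    for x, y in permutations(blocks, 2):
        cond = frozenset({("clear", x), ("hand-empty",), ("on", x, y)})
        skills.append(Skill(f"unstack {x} {y}", cond,
                            frozenset({("holding", x), ("clear", y)}), cond))
    for x in blocks:
        skills.append(Skill(f"putdown {x}", frozenset({("holding", x)}),
                            frozenset({("ontable", x), ("clear", x), ("hand-empty",)}),
                            frozenset({("holding", x)})))
    return skills


def means_ends(goal, state, skills, depth_limit=5, max_cycles=50):
    """Goal-stack backward chaining that executes a skill as soon as it applies."""
    state, stack, plan = set(state), [goal], []
    failed, chosen = set(), {}            # failed skill names; skill chained on per goal
    for _ in range(max_cycles):
        if not stack:
            return plan
        g = stack[-1]
        if g in state:                    # top goal satisfied: pop it
            stack.pop()
            continue
        options = [s for s in skills if g in s.adds and s.name not in failed]
        ready = [s for s in options if s.start <= state]
        if options and len(stack) <= depth_limit:
            if ready:                     # act in the world instead of planning further
                s = ready[0]
                state = (state - s.deletes) | s.adds
                plan.append(s.name)
            else:                         # chain backward on an unmet start condition
                s = min(options, key=lambda o: len(o.start - state))
                chosen[g] = s.name
                stack.append(min(s.start - state))
        else:                             # give up on g and blame the parent's choice
            stack.pop()
            if stack and stack[-1] in chosen:
                failed.add(chosen.pop(stack[-1]))
    return None


initial = {("clear", "C"), ("hand-empty",), ("on", "C", "B"),
           ("on", "B", "A"), ("ontable", "A")}
print(means_ends(("clear", "A"), initial, blocks_skills(["A", "B", "C"])))
# ['unstack C B', 'putdown C', 'unstack B A']
```

Because a skill runs as soon as its start conditions hold, the agent unstacks C before it has finished reasoning about how to free block A, which is the interleaving of chaining and execution that the procedure describes.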

Learning from Problem Solutions

ICARUS incorporates a mechanism for learning new skills that:

· operates whenever problem solving overcomes an impasse;
· incorporates only information available from the goal stack;
· generalizes beyond the specific objects concerned;
· depends on whether chaining involved skills or concepts;
· supports cumulative learning and within-problem transfer.

This skill creation process is fully interleaved with means-ends analysis and execution. Learned skills carry out forward execution in the environment rather than backward chaining in the mind.
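The generalization step can be sketched in Python, assuming ground literals are tuples such as ("on", "B", "A"). `variabilize` replaces each distinct object with a fresh variable, consistently across all literals of the new clause. `learn_from_concept_chaining` covers only the concept-chaining case, and its choice of fields (head from the goal, start condition from the subconcepts that were already satisfied, subgoals from the unsatisfied subconcepts in the order they were achieved) is an assumption suggested by the subconcepts F and H in the means-ends procedure, not a rule stated on these slides. The concept `unstackable` in the example is hypothetical.

```python
from itertools import count


def variabilize(literals):
    """Replace each distinct constant with a fresh variable, consistently across literals."""
    names, fresh = {}, count(1)
    result = []
    for pred, *args in literals:
        for a in args:
            if a not in names:
                names[a] = f"?x{next(fresh)}"
        result.append((pred, *(names[a] for a in args)))
    return result


def learn_from_concept_chaining(goal, satisfied, achieved):
    """Assumed clause layout: head = goal G; start = already-satisfied subconcepts F;
    subgoals = the unsatisfied subconcepts in the order problem solving achieved them."""
    lits = variabilize([goal, *satisfied, *achieved])
    n = len(satisfied)
    return {"head": lits[0], "start": lits[1:1 + n], "subgoals": lits[1 + n:]}


# Hypothetical concept: (unstackable B A) holds when (on B A), (clear B), (hand-empty).
skill = learn_from_concept_chaining(("unstackable", "B", "A"),
                                    satisfied=[("on", "B", "A")],
                                    achieved=[("clear", "B"), ("hand-empty",)])
```

The learned clause mentions ?x1 and ?x2 instead of B and A, so it applies to any pair of blocks in the same relation, which is what lets such skills transfer within and across problems.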

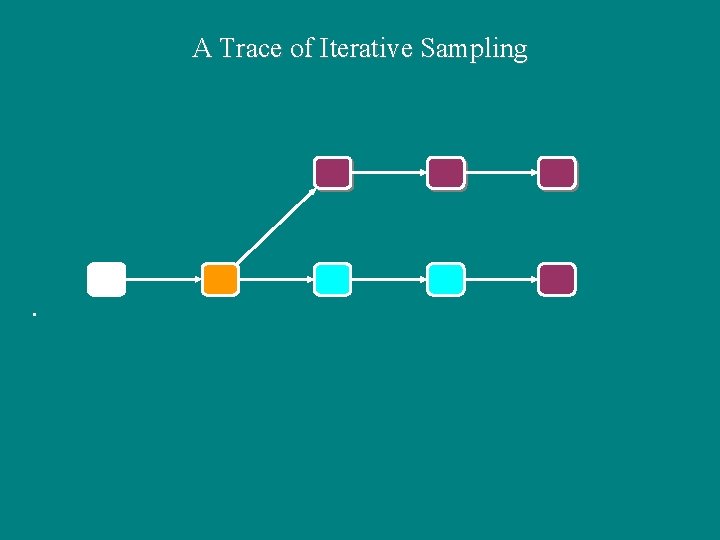

Forward Search and Mental Simulation

However, in some domains, humans carry out forward-chaining search with methods like progressive deepening (de Groot, 1978). In response, we are adding a new module to ICARUS that:

· performs mental simulation of a single trajectory consistent with its stored hierarchical skills;
· repeats this process to find a number of alternative paths from the current environmental state;
· selects the path that produces the best outcome to determine the next primitive skill to execute.

We refer to this memory-limited search method as hierarchical iterative sampling (Langley, 1992).
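The sampling loop can be sketched in a few lines of Python. This is plain, non-hierarchical iterative sampling under stated assumptions: `successors` is a hypothetical stand-in for whatever actions the stored skills would allow in a state, `value` scores the final simulated state, and `n_samples` and `depth` are hypothetical parameters (choosing them is one of the open issues noted in this talk). Only the current trajectory and the best first action so far are kept in memory.

```python
import random


def iterative_sampling(state, successors, value, n_samples=20, depth=5, rng=None):
    """Simulate n_samples random trajectories; return the first action of the best one."""
    rng = rng or random.Random(0)
    best_value, best_first = float("-inf"), None
    for _ in range(n_samples):
        s, first = state, None
        for _ in range(depth):            # one mental trajectory; no search tree is stored
            options = successors(s)       # (action, next_state) pairs the skills allow
            if not options:
                break
            action, s = rng.choice(options)
            if first is None:
                first = action
        v = value(s)                      # evaluate where this trajectory ends up
        if first is not None and v > best_value:
            best_value, best_first = v, first
    return best_first                     # the next primitive skill to execute


def line_moves(s):
    """Toy domain (hypothetical): move one step up or down a number line."""
    return [("inc", s + 1), ("dec", s - 1)]
```

Because each probe commits to a single path, memory stays constant in the number of samples, at the cost of possibly missing good paths that no sample happens to visit.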

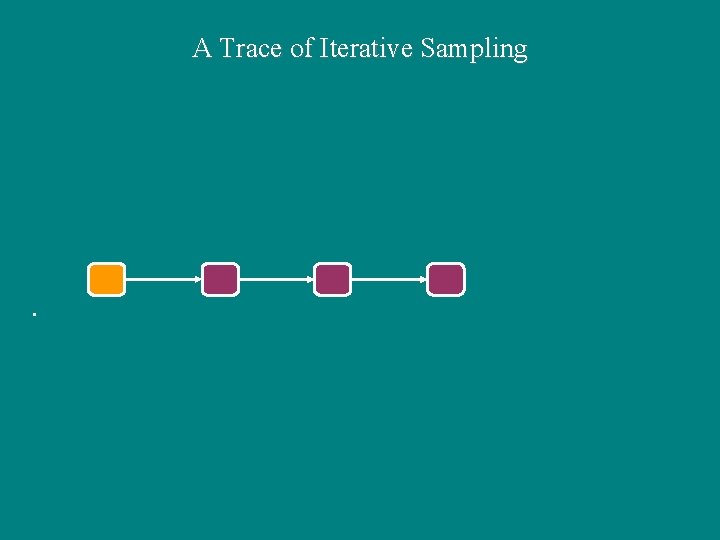

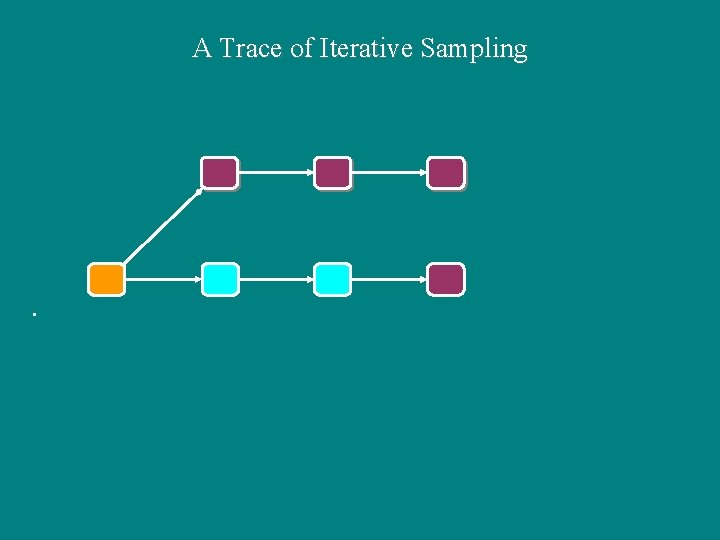

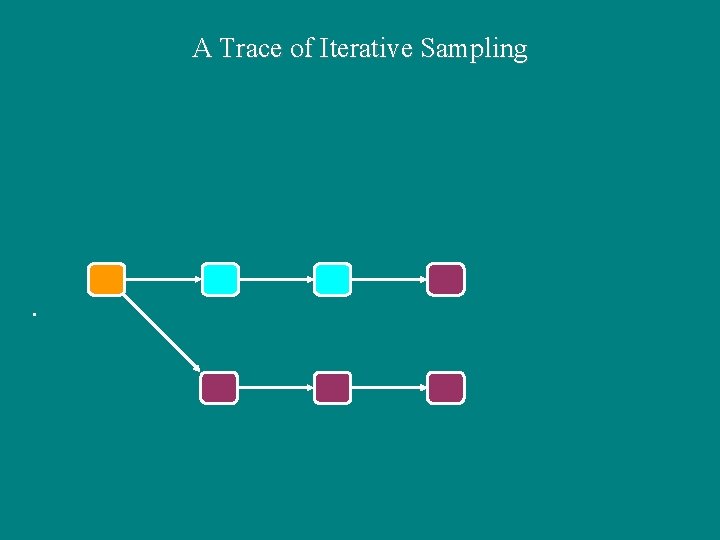

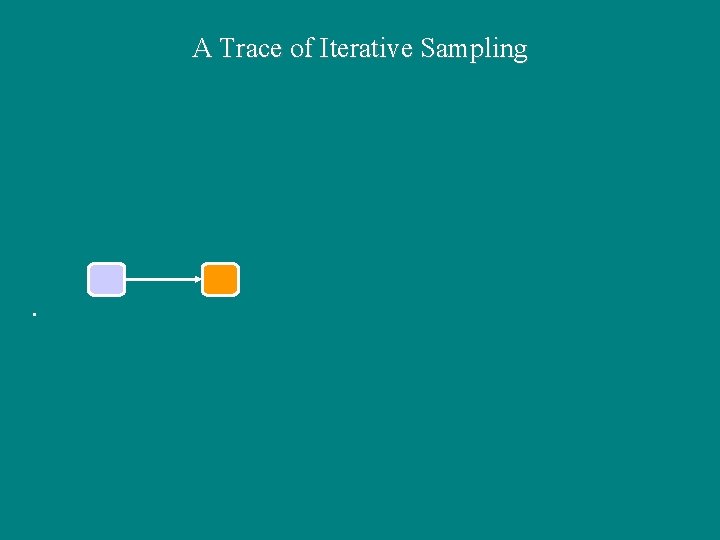

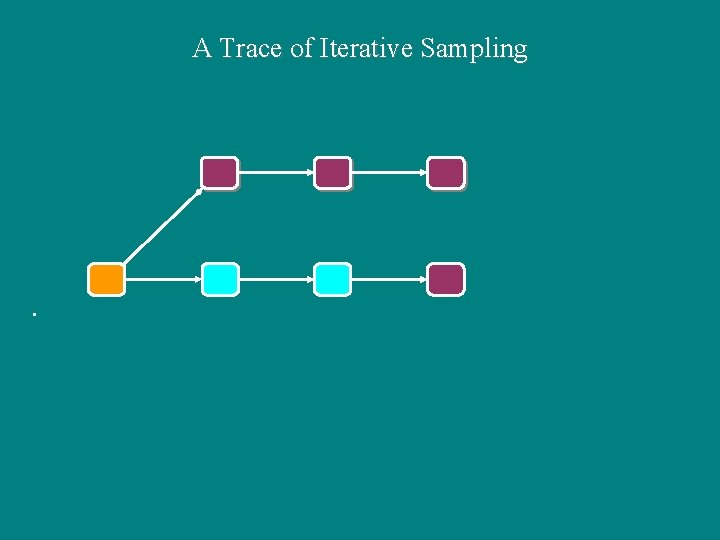

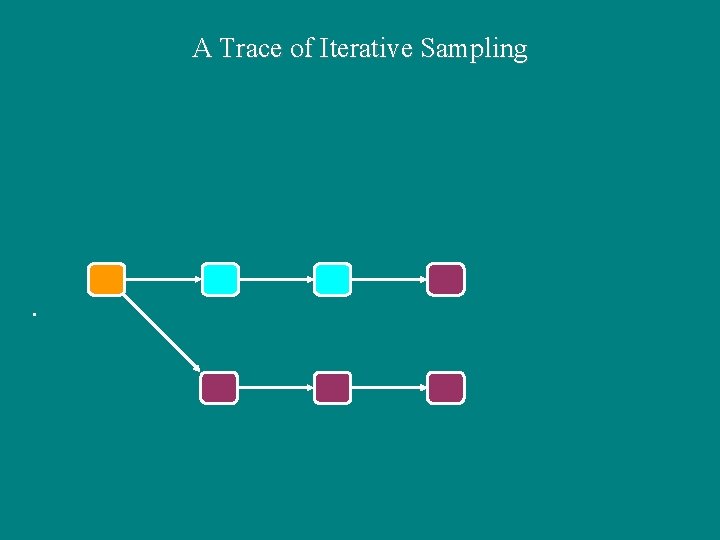

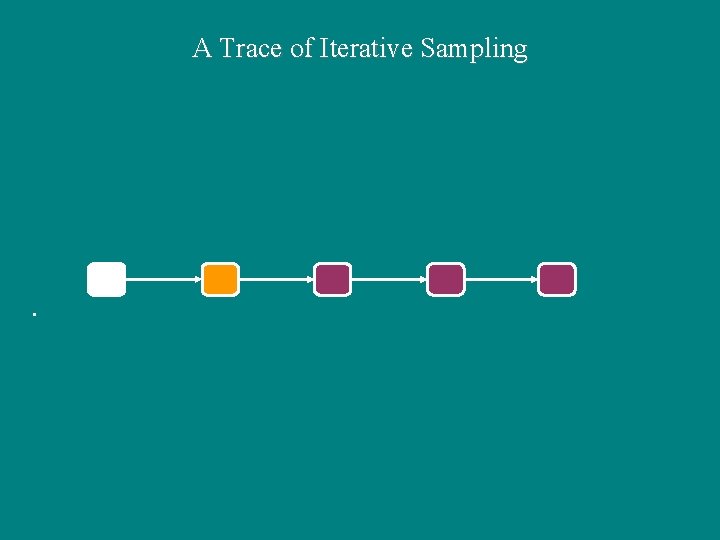

A Trace of Iterative Sampling

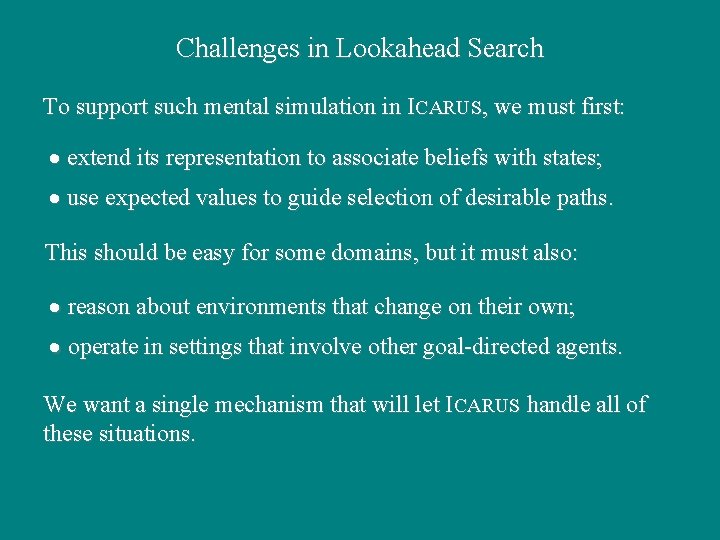

Challenges in Lookahead Search

To support such mental simulation in ICARUS, we must first:

· extend its representation to associate beliefs with states;
· use expected values to guide selection of desirable paths.

This should be easy for some domains, but it must also:

· reason about environments that change on their own;
· operate in settings that involve other goal-directed agents.

We want a single mechanism that will let ICARUS handle all of these situations.

More on Mental Simulation

We must address other issues to make this idea operational:

· determine the depth of lookahead and number of samples;
· ensure reasonable diversity among the sampled paths;
· explain when problem solvers chain backward and forward;
· use the results of forward search to drive skill acquisition.

Answering these questions will let ICARUS provide a more complete theory of human problem solving.

Learning from Undesirable Outcomes

Despite their best efforts, humans sometimes take actions that produce undesired results. We plan to model learning from such outcomes in ICARUS by:

· identifying conditions on the path that, if violated, would avoid the result;
· carrying out search to find another path that violates one of these conditions;
· analyzing the alternative path to learn skills that produce it;
· storing the new skills so as to mask older, problematic ones.

Learning from such counterfactual reasoning is an important human ability.

Plans for Evaluation

We propose to evaluate these extensions to ICARUS on two different testbeds:

· a simulated urban driving environment that contains other vehicles and pedestrians;
· a mobile robot that carries out joint activities with humans to achieve shared goals.

Both dynamic environments should illustrate the benefits of mental simulation and counterfactual learning.

Concluding Remarks

ICARUS is a unified theory of the cognitive architecture that:

· includes hierarchical memories for concepts and skills;
· interleaves conceptual inference with reactive execution;
· resorts to problem solving when it lacks routine skills;
· learns such skills from successful resolution of impasses.

However, we plan to extend the framework so it can support:

· forward-chaining search via repeated mental simulation;
· learning new skills through counterfactual reasoning.

These will let ICARUS more fully model human cognition.

End of Presentation

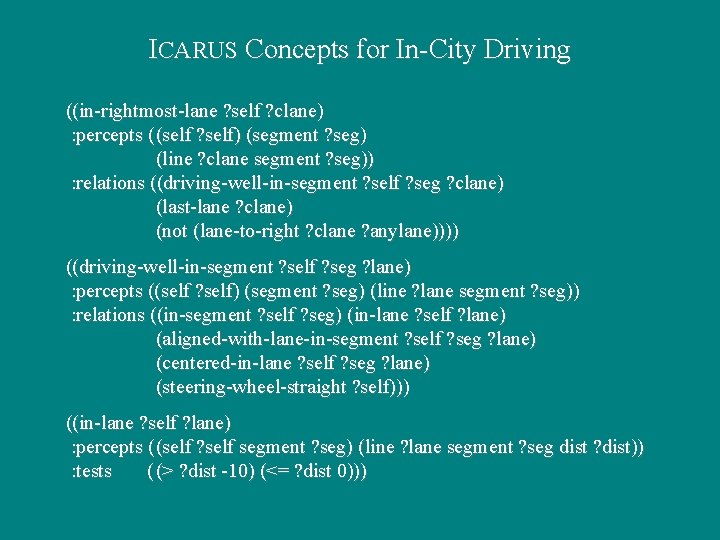

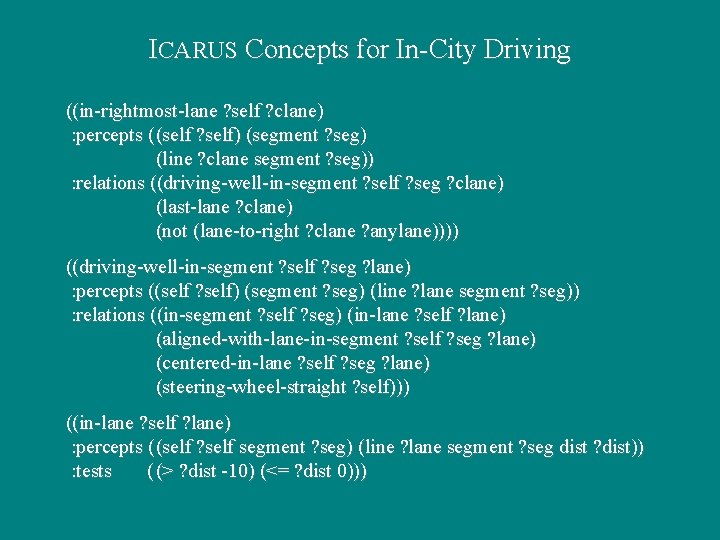

ICARUS Concepts for In-City Driving

    ((in-rightmost-lane ?self ?clane)
     :percepts ((self ?self) (segment ?seg) (line ?clane segment ?seg))
     :relations ((driving-well-in-segment ?self ?seg ?clane)
                 (last-lane ?clane)
                 (not (lane-to-right ?clane ?anylane))))

    ((driving-well-in-segment ?self ?seg ?lane)
     :percepts ((self ?self) (segment ?seg) (line ?lane segment ?seg))
     :relations ((in-segment ?self ?seg)
                 (in-lane ?self ?lane)
                 (aligned-with-lane-in-segment ?self ?seg ?lane)
                 (centered-in-lane ?self ?seg ?lane)
                 (steering-wheel-straight ?self)))

    ((in-lane ?self ?lane)
     :percepts ((self ?self segment ?seg) (line ?lane segment ?seg dist ?dist))
     :tests ((> ?dist -10) (<= ?dist 0)))
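To make the matching of the in-lane concept concrete, here is a Python sketch that treats percepts as dictionaries (a hypothetical encoding, not ICARUS's percept format) and returns one (in-lane ?self ?lane) instance for each self/line pair that shares a segment and passes the concept's two tests. The identifiers and distances in the example are made up for illustration, although the matched instance mirrors the belief (in-lane me g599) shown in this talk.

```python
def match_in_lane(percepts):
    """Instances of (in-lane ?self ?lane): both percepts bind the same ?seg, -10 < ?dist <= 0."""
    selves = [p for p in percepts if p["type"] == "self"]
    lines = [p for p in percepts if p["type"] == "line"]
    matches = []
    for me in selves:
        for line in lines:
            if line["segment"] != me["segment"]:   # shared variable ?seg must agree
                continue
            if -10 < line["dist"] <= 0:            # the concept's :tests
                matches.append(("in-lane", me["id"], line["id"]))
    return matches


percepts = [
    {"type": "self", "id": "me", "segment": "g550"},
    {"type": "line", "id": "g599", "segment": "g550", "dist": -5},
    {"type": "line", "id": "g601", "segment": "g550", "dist": 5},   # fails the tests
    {"type": "line", "id": "g700", "segment": "g522", "dist": -2},  # different segment
]
print(match_in_lane(percepts))  # [('in-lane', 'me', 'g599')]
```

The shared variable ?seg acts like a join condition between the two percepts, and the :tests act as a filter applied only after the variables are bound.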

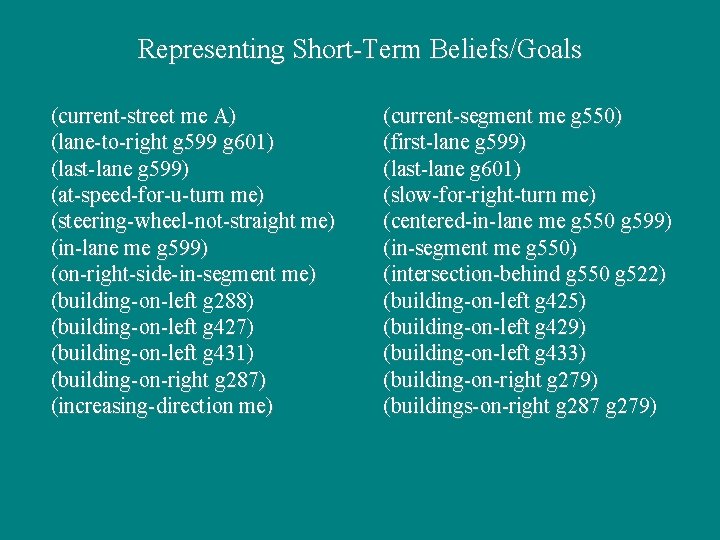

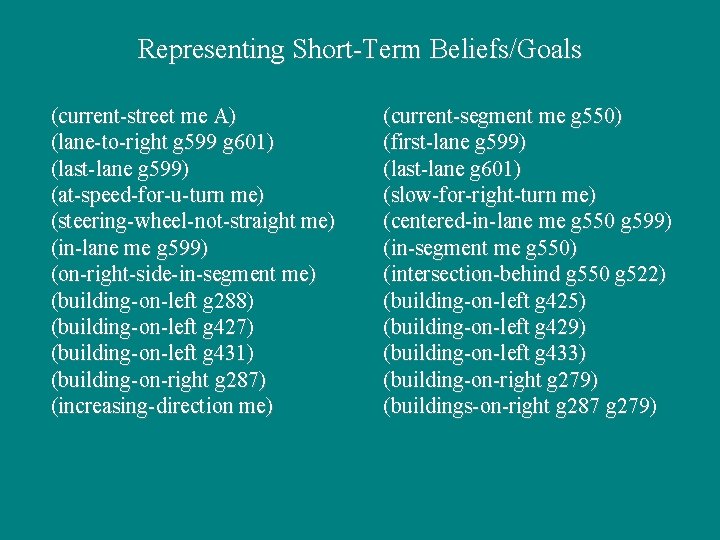

Representing Short-Term Beliefs/Goals

    (current-street me A) (lane-to-right g599 g601) (last-lane g599)
    (at-speed-for-u-turn me) (steering-wheel-not-straight me) (in-lane me g599)
    (on-right-side-in-segment me) (building-on-left g288) (building-on-left g427)
    (building-on-left g431) (building-on-right g287) (increasing-direction me)
    (current-segment me g550) (first-lane g599) (last-lane g601)
    (slow-for-right-turn me) (centered-in-lane me g550 g599) (in-segment me g550)
    (intersection-behind g550 g522) (building-on-left g425) (building-on-left g429)
    (building-on-left g433) (building-on-right g279) (buildings-on-right g287 g279)

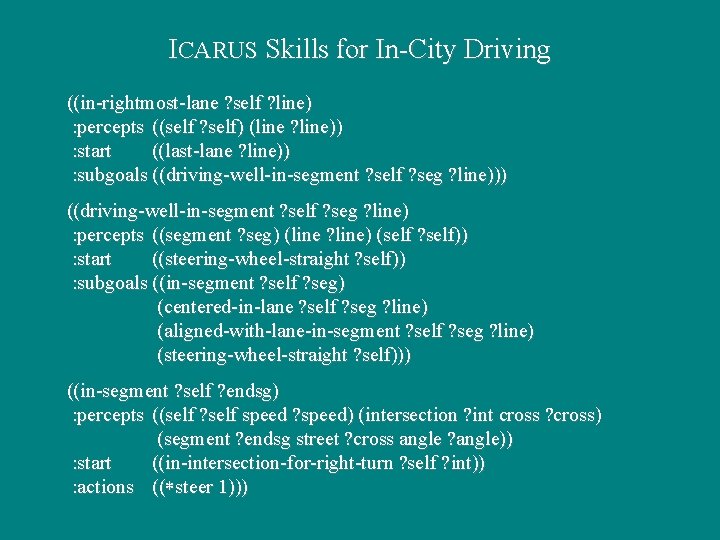

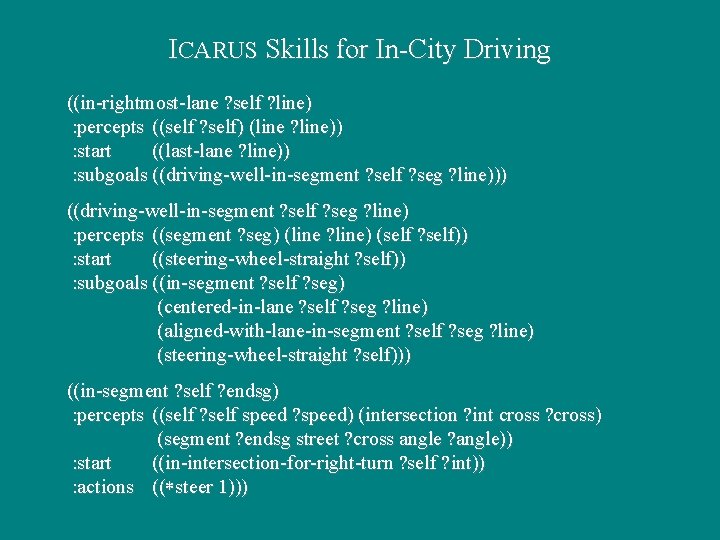

ICARUS Skills for In-City Driving

    ((in-rightmost-lane ?self ?line)
     :percepts ((self ?self) (line ?line))
     :start ((last-lane ?line))
     :subgoals ((driving-well-in-segment ?self ?seg ?line)))

    ((driving-well-in-segment ?self ?seg ?line)
     :percepts ((segment ?seg) (line ?line) (self ?self))
     :start ((steering-wheel-straight ?self))
     :subgoals ((in-segment ?self ?seg)
                (centered-in-lane ?self ?seg ?line)
                (aligned-with-lane-in-segment ?self ?seg ?line)
                (steering-wheel-straight ?self)))

    ((in-segment ?self ?endsg)
     :percepts ((self ?self speed ?speed)
                (intersection ?int cross ?cross)
                (segment ?endsg street ?cross angle ?angle))
     :start ((in-intersection-for-right-turn ?self ?int))
     :actions ((steer 1)))

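A Successful Means-Ends Trace

[Figure: a means-ends trace in the Blocks World, with drawings of blocks A, B, and C. It marks the initial state and the goal and shows the skills (unstack C B), (putdown C T), and (unstack B A), along with the literals involved: (clear C), (hand-empty), (on C B), (on B A), (ontable A T), (unst. C B), (unst. B A), (holding C), (holding B), (clear B), and (clear A).]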