
Ext2 on Singularity

Scott Finley, University of Wisconsin–Madison, CS 736 Project

Ext2 Defined

- Basic, default Linux file system
- Almost exactly the same design as FFS (the BSD Fast File System)
  - Disk broken into “block groups”
  - Superblock, inode/block bitmaps, etc.
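Because every block group has the same layout, finding an inode on disk is plain arithmetic once the superblock has been read. The following is a minimal Python sketch (not the project's C# code) using the standard ext2 on-disk offsets. The class and function names are illustrative, and only the few superblock fields needed for inode lookup are decoded.

```python
import struct
from dataclasses import dataclass

EXT2_SUPER_MAGIC = 0xEF53


@dataclass
class SuperBlock:
    block_size: int
    blocks_per_group: int
    inodes_per_group: int
    inode_size: int


def parse_superblock(raw: bytes) -> SuperBlock:
    """Decode the fields needed for inode lookup from the 1024-byte ext2
    superblock (which sits at byte offset 1024 of the volume)."""
    (magic,) = struct.unpack_from("<H", raw, 56)
    if magic != EXT2_SUPER_MAGIC:
        raise ValueError(f"bad ext2 magic {magic:#x}")
    (log_block_size,) = struct.unpack_from("<I", raw, 24)
    (blocks_per_group,) = struct.unpack_from("<I", raw, 32)
    (inodes_per_group,) = struct.unpack_from("<I", raw, 40)
    (rev_level,) = struct.unpack_from("<I", raw, 76)
    # Revision 0 volumes use fixed 128-byte inodes; later revisions record the size.
    inode_size = struct.unpack_from("<H", raw, 88)[0] if rev_level >= 1 else 128
    return SuperBlock(1024 << log_block_size, blocks_per_group, inodes_per_group, inode_size)


def locate_inode(sb: SuperBlock, ino: int) -> tuple[int, int]:
    """Map an inode number (numbering starts at 1) to its block group and its
    byte offset inside that group's inode table."""
    if ino < 1:
        raise ValueError("ext2 inode numbers start at 1")
    group, index = divmod(ino - 1, sb.inodes_per_group)
    return group, index * sb.inode_size
```

For example, with 8192 inodes per group and 256-byte inodes, inode 8193 is the first entry in block group 1's inode table. The group descriptor table (not decoded here) then gives the block where that inode table starts.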

Microsoft Research’s Singularity

- New from the ground up
- Reliability as the #1 goal
- Reevaluate conventional OS structure
- Leverage advances of the last 20 years
  - Languages and compilers
  - Static analysis of the whole system

My Goals

- Implement Ext2 on Singularity
- Focus on read support with caching
- Investigate how Singularity's design affects file system integration
- Investigate performance implications

Overview of Results

- I have Ext2 “working” on Singularity
  - Reading fully supported
  - Caching improves performance!
  - Limited write support
- Singularity design
  - Garbage collection hurts performance
  - Reliability is good: I couldn’t crash it.

Outline

1. Singularity
2. Details of my Ext2 implementation
3. Results

Singularity

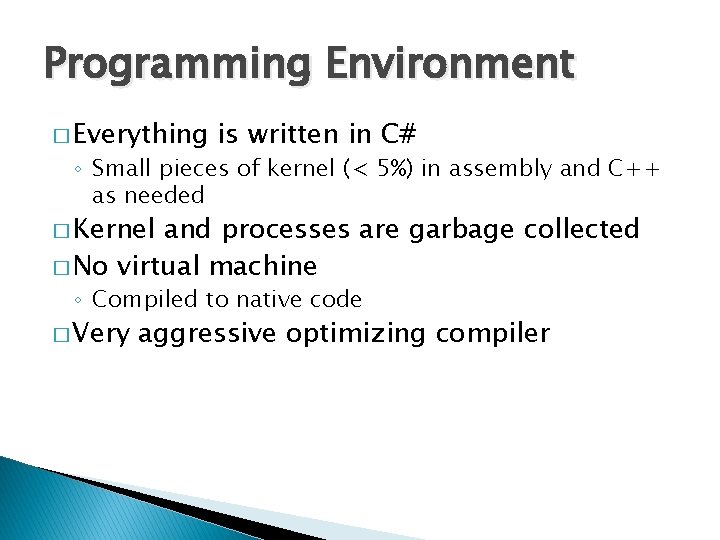

Programming Environment

- Everything is written in C#
  - Small pieces of the kernel (< 5%) in assembly and C++ as needed
- Kernel and processes are garbage collected
- No virtual machine
  - Compiled to native code
- Very aggressive optimizing compiler

Process Model

- Singularity is a microkernel
- Everything else is a SIP
  - “Software Isolated Process”
- No hardware-based memory isolation
  - SIP “object space” isolation guaranteed by static analysis and a safe language (C#)
  - Context switches are much faster

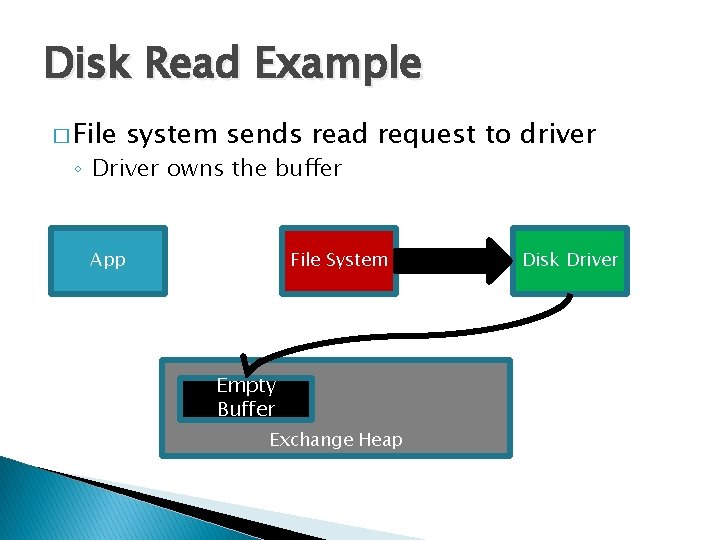

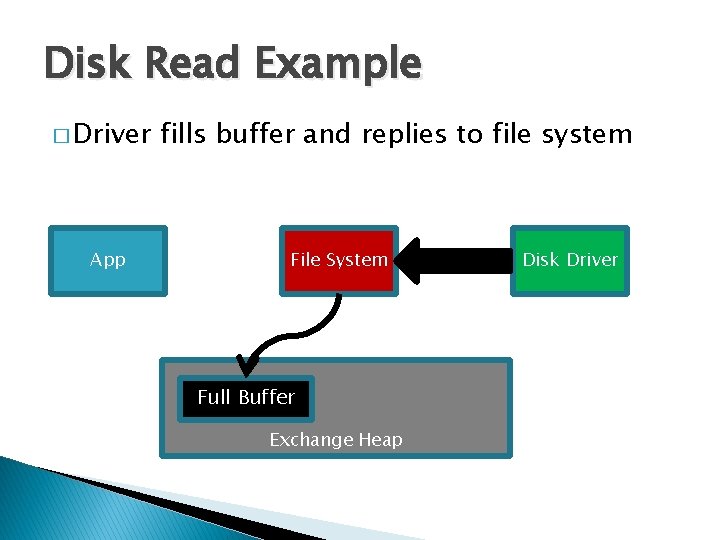

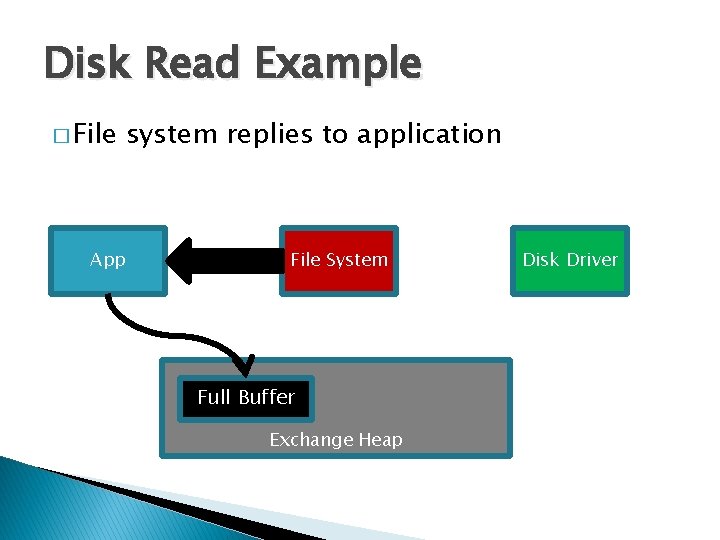

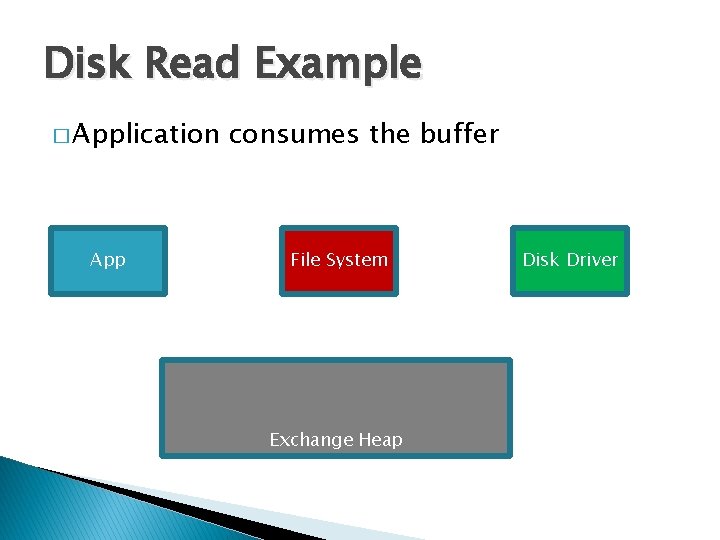

Communication Channels

- All SIP communication is via message channels
- No shared memory
- Messages and data passed via the Exchange Heap
- Object ownership is tracked
- Zero-copy data passing via pointers
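The ownership rule can be modeled in a few lines. This is a hypothetical Python sketch, not Singularity's API: Singularity enforces exchange-heap ownership statically, at compile time, while this model (`ExchangeBuffer`, `Channel`, and `OwnershipError` are made-up names) checks it at run time. Sending a buffer moves its owner, not its bytes.

```python
from collections import deque


class OwnershipError(Exception):
    """Raised when a process touches a buffer it does not own."""


class ExchangeBuffer:
    """A block of exchange-heap memory with exactly one owning process."""

    def __init__(self, owner: str, size: int):
        self.owner = owner
        self._data = bytearray(size)

    def access(self, who: str) -> bytearray:
        # Singularity rejects this statically; the model checks at run time.
        if who != self.owner:
            raise OwnershipError(f"{who} does not own this buffer; {self.owner} does")
        return self._data


class Channel:
    """A two-ended message channel; each message carries a buffer by reference."""

    def __init__(self, end_a: str, end_b: str):
        self._peer = {end_a: end_b, end_b: end_a}
        self._inbox = {end_a: deque(), end_b: deque()}

    def send(self, sender: str, tag: str, buf: ExchangeBuffer) -> None:
        if sender not in self._peer:
            raise ValueError(f"{sender} is not an endpoint of this channel")
        if buf.owner != sender:
            raise OwnershipError(f"{sender} cannot send a buffer it does not own")
        receiver = self._peer[sender]
        buf.owner = receiver            # ownership moves; the bytes are never copied
        self._inbox[receiver].append((tag, buf))

    def receive(self, receiver: str) -> tuple[str, ExchangeBuffer]:
        return self._inbox[receiver].popleft()
```

After the application sends a buffer on a channel to the file system, the file system's `receive` returns the very same object (zero copy), and any further access by the application raises `OwnershipError`.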

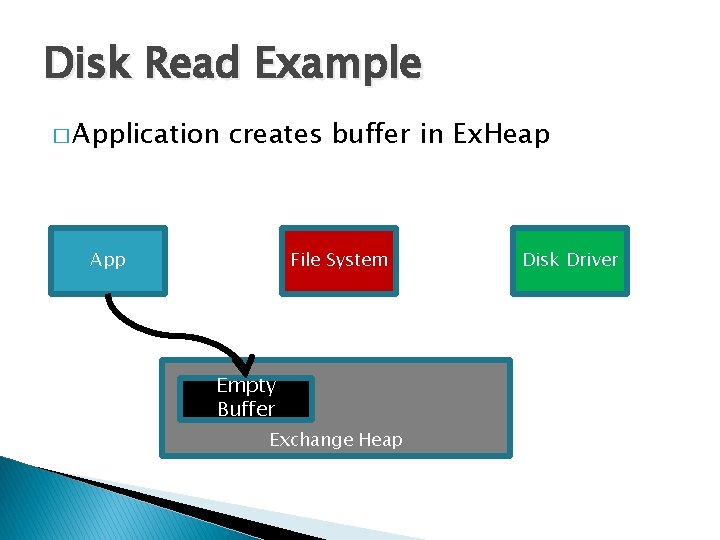

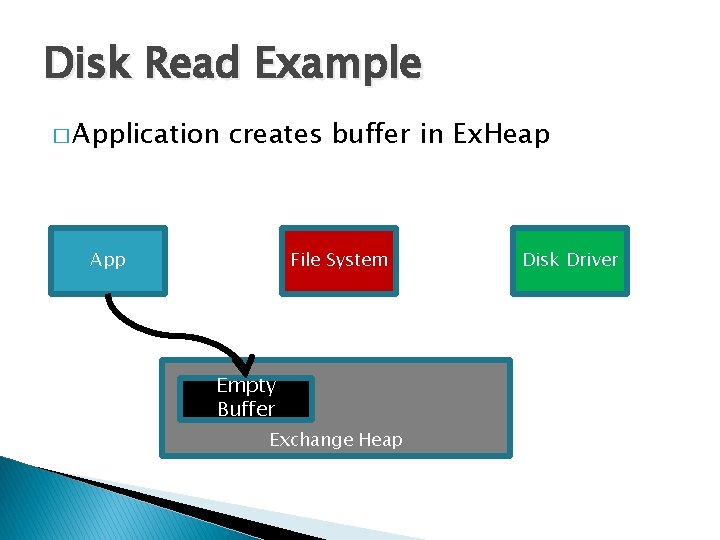

Disk Read Example

- Application creates an empty buffer in the Exchange Heap

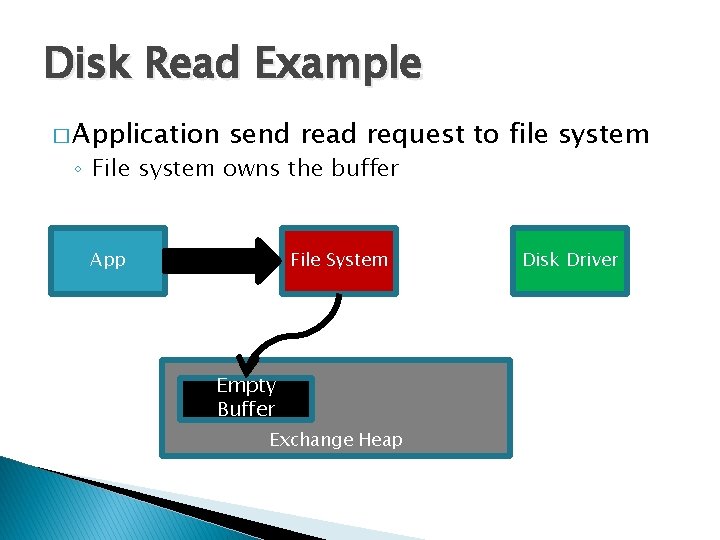

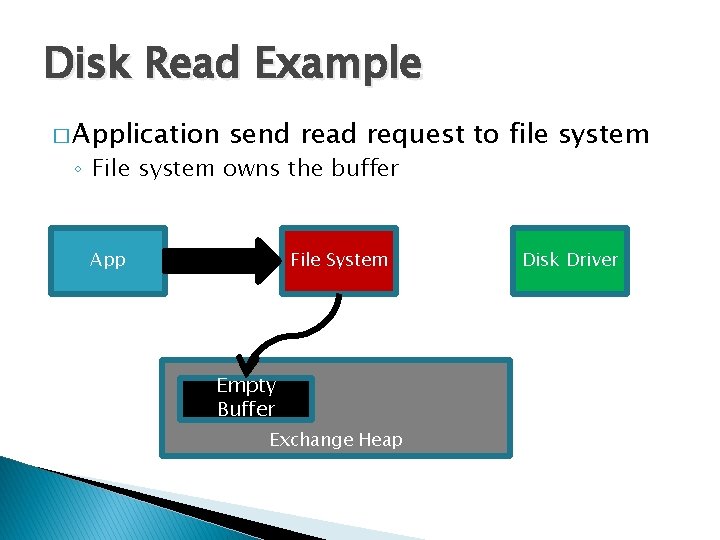

Disk Read Example

- Application sends a read request to the file system
  - File system owns the buffer

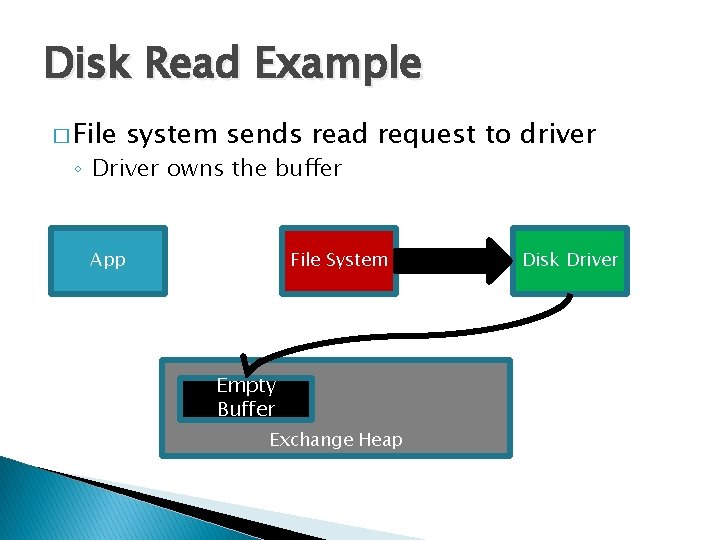

Disk Read Example

- File system sends a read request to the driver
  - Driver owns the buffer

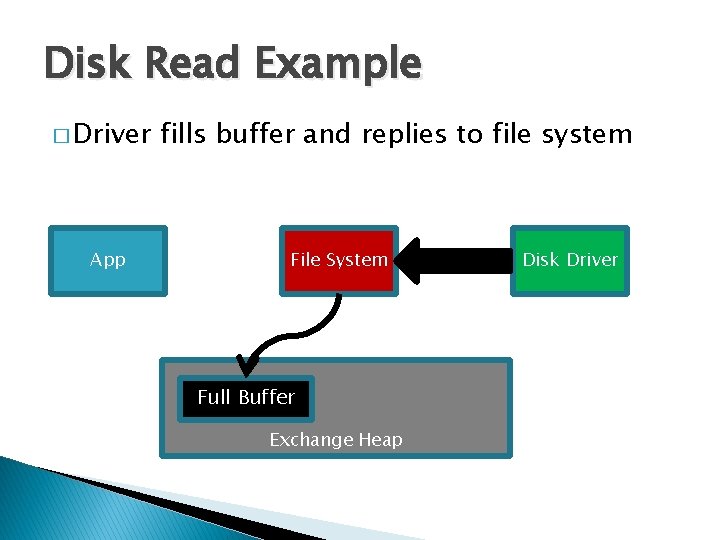

Disk Read Example

- Driver fills the buffer and replies to the file system

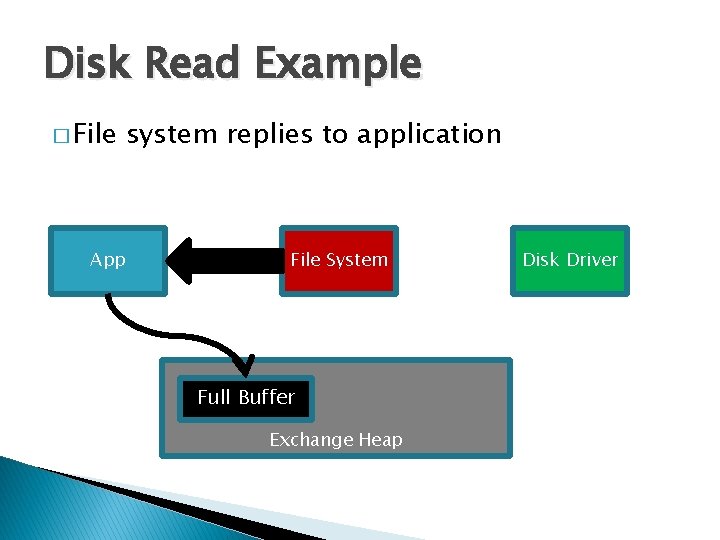

Disk Read Example

- File system replies to the application

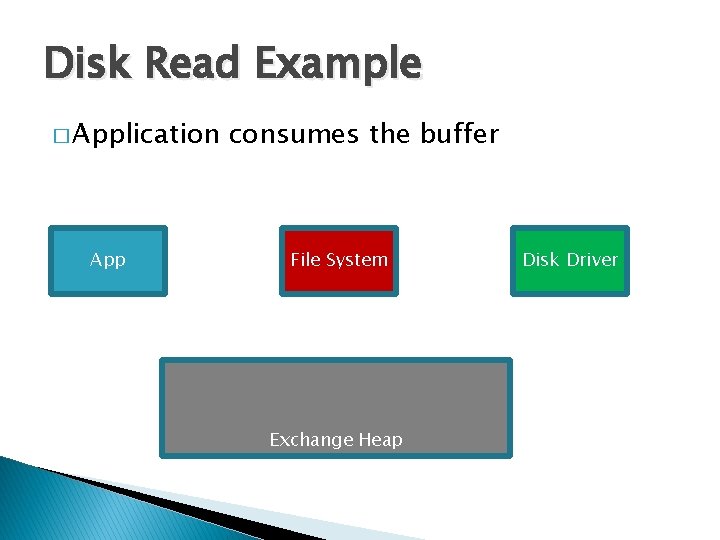

Disk Read Example

- Application consumes the buffer
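The six steps above can be replayed as a short simulation that records who owns the buffer after each message. This is a hypothetical Python sketch: the process names, the `hand_off` helper, and modeling the disk as a byte string are illustrative assumptions, not the project's code.

```python
from dataclasses import dataclass


@dataclass
class Buffer:
    owner: str
    data: bytearray
    full: bool = False


def hand_off(buf: Buffer, sender: str, receiver: str, step: str, trace: list) -> None:
    """Pass the buffer in a message: only its current owner may send it."""
    if buf.owner != sender:
        raise RuntimeError(f"{sender} tried to send a buffer owned by {buf.owner}")
    buf.owner = receiver
    trace.append((step, receiver))


def disk_read(disk: bytes, size: int) -> tuple[bytes, list]:
    """Replay the six slides. Returns the bytes the app consumed and an
    ownership trace of (step, owner after the step) pairs."""
    trace = []
    buf = Buffer(owner="App", data=bytearray(size))                    # 1. empty buffer
    trace.append(("allocate", buf.owner))
    hand_off(buf, "App", "FileSystem", "read request", trace)         # 2. app -> FS
    hand_off(buf, "FileSystem", "DiskDriver", "read request", trace)  # 3. FS -> driver
    chunk = disk[:size]                                                # 4. driver fills it...
    buf.data[:len(chunk)] = chunk
    buf.full = True
    hand_off(buf, "DiskDriver", "FileSystem", "reply", trace)         #    ...and replies
    hand_off(buf, "FileSystem", "App", "reply", trace)                # 5. FS -> app
    if buf.owner != "App" or not buf.full:
        raise RuntimeError("the app can only consume a full buffer it owns")
    return bytes(buf.data), trace                                      # 6. app consumes
```

Any out-of-order send (for example, the application reusing the buffer while the driver still owns it) raises immediately, which is the property Singularity checks at compile time instead.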

Ext2 Implementation

Required Pieces

- Ext2 Control: command-line application
- Ext2 Client Manager: manages mount points
- Ext2Fs: core file system functionality
- Ext2 Contracts: define the communication between the pieces

Ext2 Client Manager

- System service (SIP) launched at boot
- Accessible at a known location in the /dev directory
- Handles Ext2-specific work on Ext2 volumes and mount points
- Exports “Mount” and “Unmount”
  - Would also provide “Format” if implemented
- 300 lines of code

Ext2 Control

- Command-line application
- Gives command-line access to the Ext2 Client Manager interface
- Not used by other applications
- 500 lines of code

Ext2Fs

- Core Ext2 file system
- Separate instance (SIP) for each mount point
  - Exports the “Directory Service Provider” interface
- Clients open files and directories by attaching a communication channel
  - Internally, each channel is paired with an inode
- Reads implemented; writes in progress
- 2400 lines of code
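A rough model of how these pieces fit together: the client manager keeps one file system instance per mount point, and each instance pairs every open channel with an inode. This is a hypothetical Python sketch. In Singularity each `Ext2FsInstance` would be a separate SIP, integer channel ids stand in for real channels, and the dictionary directory is a toy replacement for reading directory blocks from disk.

```python
import itertools


class Ext2FsInstance:
    """Hypothetical stand-in for one Ext2Fs SIP serving a single mount point."""

    def __init__(self, device: str, mount_point: str, directory: dict[str, int]):
        self.device = device
        self.mount_point = mount_point
        # Toy directory: path relative to the mount point -> inode number.
        # A real Ext2Fs would walk directory blocks on disk instead.
        self._directory = directory
        self._open: dict[int, int] = {}   # channel id -> inode number
        self._next_channel = itertools.count(1)

    def bind(self, rel_path: str) -> int:
        """Open a file or directory: allocate a channel id paired with its inode."""
        ino = self._directory.get(rel_path)
        if ino is None:
            raise FileNotFoundError(rel_path)
        channel = next(self._next_channel)
        self._open[channel] = ino
        return channel

    def inode_for(self, channel: int) -> int:
        return self._open[channel]

    def close(self, channel: int) -> None:
        del self._open[channel]


class Ext2ClientManager:
    """Hypothetical mount-point registry: one Ext2FsInstance per mount point."""

    def __init__(self) -> None:
        self._mounts: dict[str, Ext2FsInstance] = {}

    def mount(self, device: str, mount_point: str, directory: dict[str, int]) -> Ext2FsInstance:
        if mount_point in self._mounts:
            raise ValueError(f"{mount_point} is already mounted")
        fs = Ext2FsInstance(device, mount_point, directory)  # would be a new SIP
        self._mounts[mount_point] = fs
        return fs

    def unmount(self, mount_point: str) -> None:
        if mount_point not in self._mounts:
            raise ValueError(f"{mount_point} is not mounted")
        del self._mounts[mount_point]

    def mounted(self) -> list[str]:
        return sorted(self._mounts)
```

For example, mounting a volume at `/mnt` and binding `/a/b.txt` on the returned instance yields a channel id whose `inode_for` lookup gives that file's inode number.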

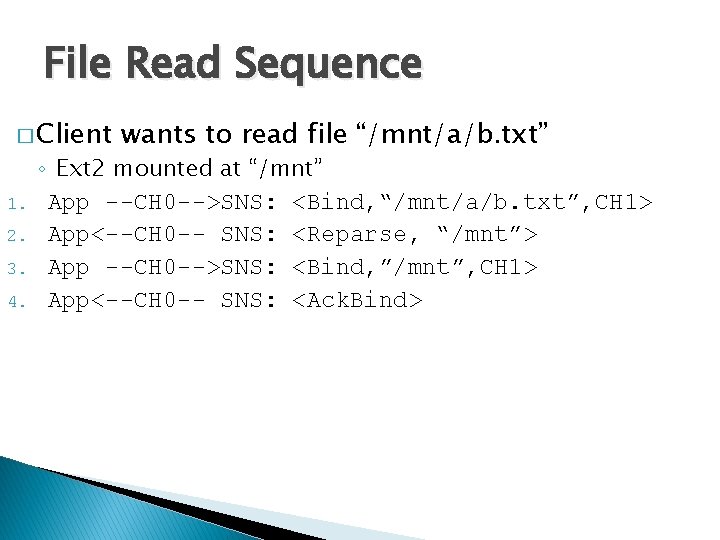

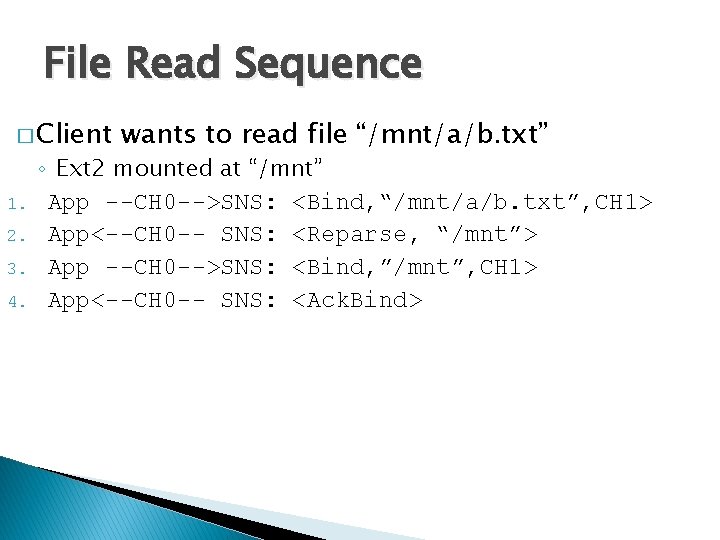

File Read Sequence

- Client wants to read the file “/mnt/a/b.txt”
  - Ext2 is mounted at “/mnt”

1. App --CH0--> SNS: `<Bind, "/mnt/a/b.txt", CH1>`
2. App <--CH0-- SNS: `<Reparse, "/mnt">`
3. App --CH0--> SNS: `<Bind, "/mnt", CH1>`
4. App <--CH0-- SNS: `<AckBind>`
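Steps 1 to 4 amount to the name service (SNS) noticing that the path crosses a mount point and telling the client to rebind there. A toy Python sketch of that exchange follows. The longest-prefix matching, and raising `FileNotFoundError` for unknown paths, are assumptions for illustration rather than documented Singularity behavior.

```python
def sns_bind(mounts: set[str], path: str) -> tuple[str, str]:
    """Toy name service. Returns ("AckBind", path) if `path` is itself a mount
    point, or ("Reparse", mount_point) if `path` lies below one."""
    if path in mounts:
        return ("AckBind", path)
    best = None
    for m in mounts:
        # Match whole path components only: "/mnt" covers "/mnt/a", not "/mntx".
        if path.startswith(m.rstrip("/") + "/") and (best is None or len(m) > len(best)):
            best = m
    if best is None:
        raise FileNotFoundError(path)
    return ("Reparse", best)


def resolve(mounts: set[str], path: str) -> tuple[str, str]:
    """Client side of steps 1-4: returns (mount point, path relative to it)."""
    reply, arg = sns_bind(mounts, path)        # steps 1-2: bind the full path
    if reply == "AckBind":
        return arg, "/"
    mount = arg
    reply, _ = sns_bind(mounts, mount)         # steps 3-4: bind the mount point itself
    if reply != "AckBind":
        raise RuntimeError(f"unexpected reply {reply!r} for {mount}")
    return mount, path[len(mount.rstrip("/")):]
```

With `/mnt` mounted, `resolve({"/mnt"}, "/mnt/a/b.txt")` returns `("/mnt", "/a/b.txt")`: the relative path the client then binds on the Ext2 file system.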

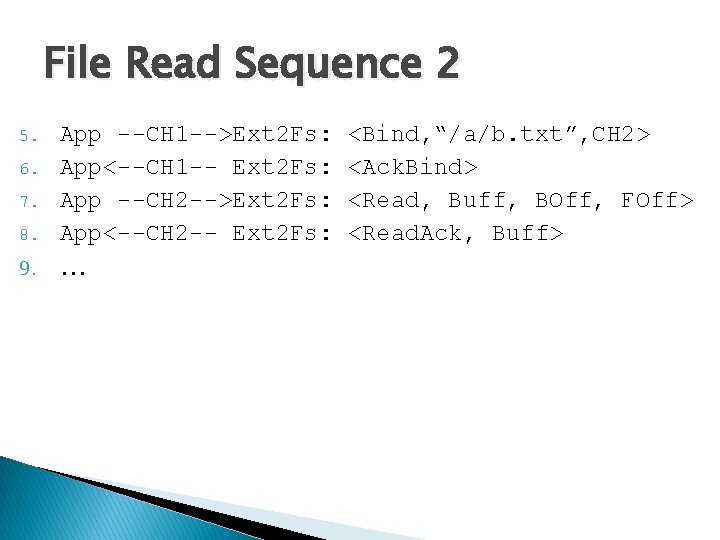

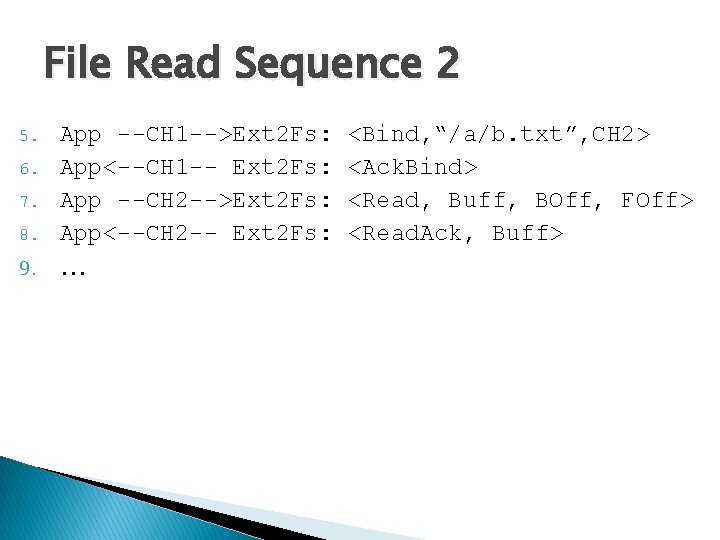

File Read Sequence (continued)

5. App --CH1--> Ext2Fs: `<Bind, "/a/b.txt", CH2>`
6. App <--CH1-- Ext2Fs: `<AckBind>`
7. App --CH2--> Ext2Fs: `<Read, Buff, BOff, FOff>`
8. App <--CH2-- Ext2Fs: `<ReadAck, Buff>`
9. …

Buff is the Exchange Heap buffer, BOff the offset into that buffer, and FOff the offset into the file.
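To serve a `<Read, Buff, BOff, FOff>` request, the file system must turn the file offset into a disk block. In ext2 an inode holds 12 direct block pointers followed by single, double, and triple indirect pointers (slots 12, 13, and 14 of `i_block`), and each indirect block holds `block_size / 4` 32-bit block numbers. The Python sketch below (the function name is illustrative) computes the chain of indices to follow. Every index after the first costs one extra indirect-block read, which is why caching block numbers matters for large files.

```python
def block_path(file_offset: int, block_size: int) -> tuple[int, list[int]]:
    """Return (offset within the data block, index path). The first index
    selects a slot in the inode's i_block array; each later index selects an
    entry inside the next indirect block."""
    per_block = block_size // 4          # 32-bit block numbers per indirect block
    logical, within = divmod(file_offset, block_size)
    if logical < 12:                     # direct blocks
        return within, [logical]
    logical -= 12
    if logical < per_block:              # single indirect
        return within, [12, logical]
    logical -= per_block
    if logical < per_block ** 2:         # double indirect
        return within, [13, logical // per_block, logical % per_block]
    logical -= per_block ** 2
    if logical < per_block ** 3:         # triple indirect
        return within, [14, logical // per_block ** 2,
                        (logical // per_block) % per_block, logical % per_block]
    raise ValueError("offset beyond the ext2 maximum for this block size")
```

With 1 KB blocks, for instance, offset 12 × 1024 is the first block reached through the single indirect pointer, so `block_path(12 * 1024, 1024)` returns `(0, [12, 0])`.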

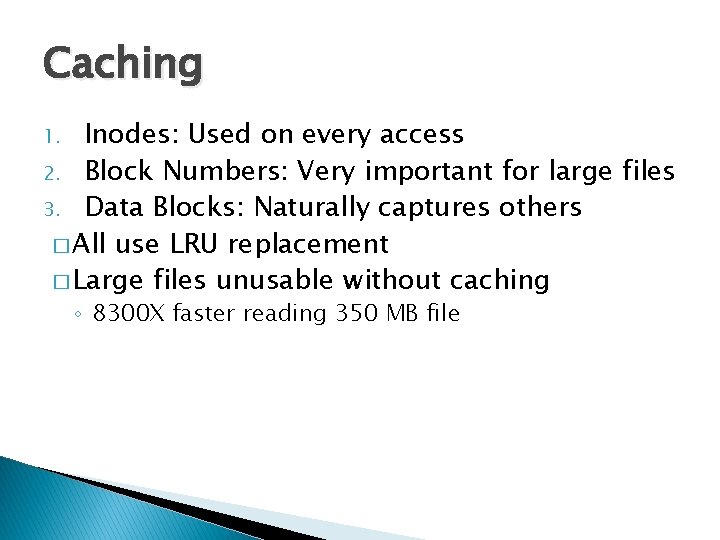

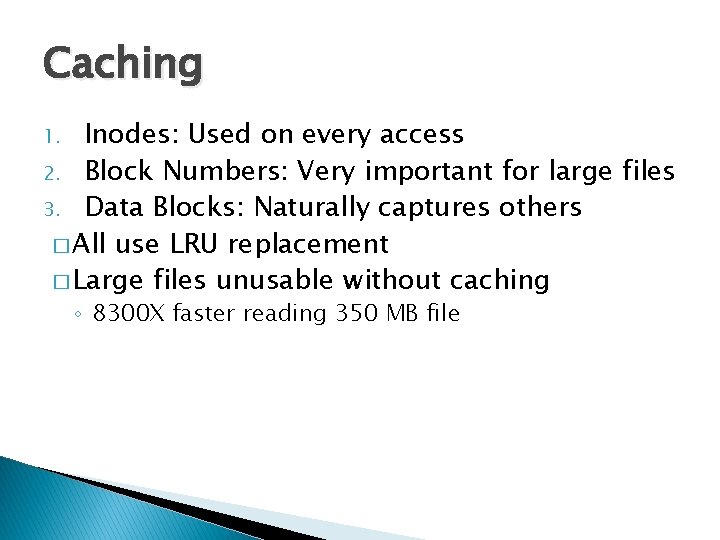

Caching

1. Inodes: used on every access
2. Block numbers: very important for large files
3. Data blocks: naturally captures the others

- All use LRU replacement
- Large files are unusable without caching
  - 8300 times faster reading a 350 MB file
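All three caches use LRU replacement. One common way to get LRU in Python is an `OrderedDict` whose order tracks recency. This is a minimal sketch, not the project's C# cache; the `load` callback and the hit/miss counters are assumptions. The same class could be instantiated once per cache (for example keyed by inode number, by inode and logical block, and by disk block number), though that keying is also illustrative.

```python
from collections import OrderedDict
from collections.abc import Callable, Hashable
from typing import Generic, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class LruCache(Generic[K, V]):
    """Fixed-capacity cache with least-recently-used eviction."""

    def __init__(self, capacity: int, load: Callable[[K], V]):
        if capacity < 1:
            raise ValueError("capacity must be at least 1")
        self._capacity = capacity
        self._load = load                 # called on a miss, e.g. a disk read
        self._entries = OrderedDict()     # key -> value, least recently used first
        self.hits = 0
        self.misses = 0

    def get(self, key: K) -> V:
        if key in self._entries:
            self._entries.move_to_end(key)       # now the most recently used
            self.hits += 1
            return self._entries[key]
        self.misses += 1
        value = self._load(key)
        self._entries[key] = value
        if len(self._entries) > self._capacity:
            self._entries.popitem(last=False)    # evict the least recently used
        return value
```

With capacity 2, the access pattern 1, 2, 1, 3, 1, 2 loads blocks 1, 2, 3, and 2 from disk: touching block 1 again protects it, so loading block 3 evicts block 2.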

Results

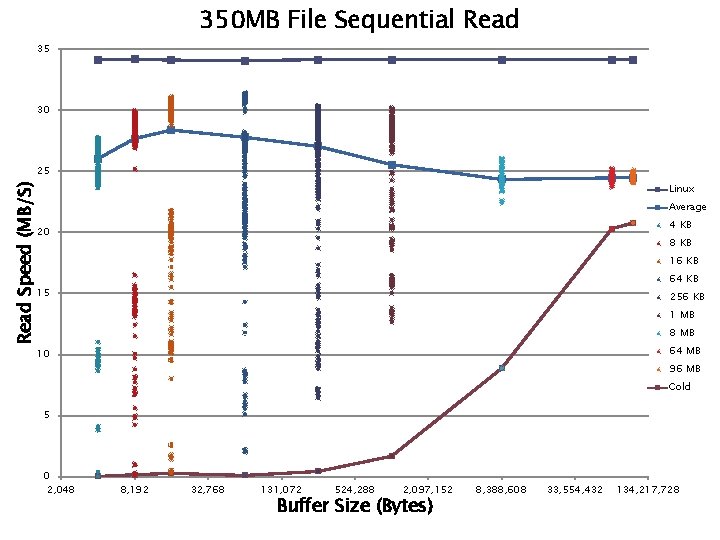

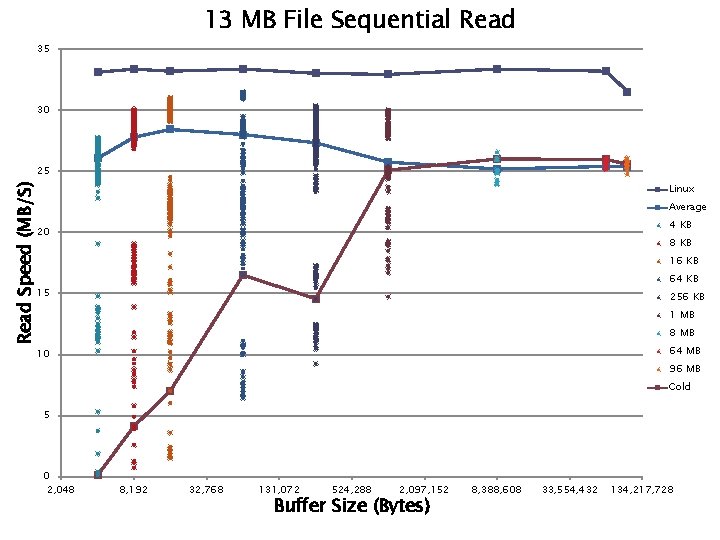

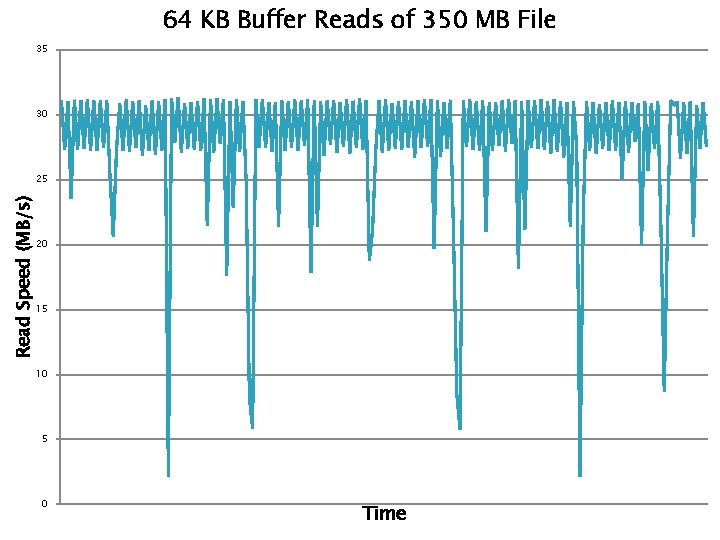

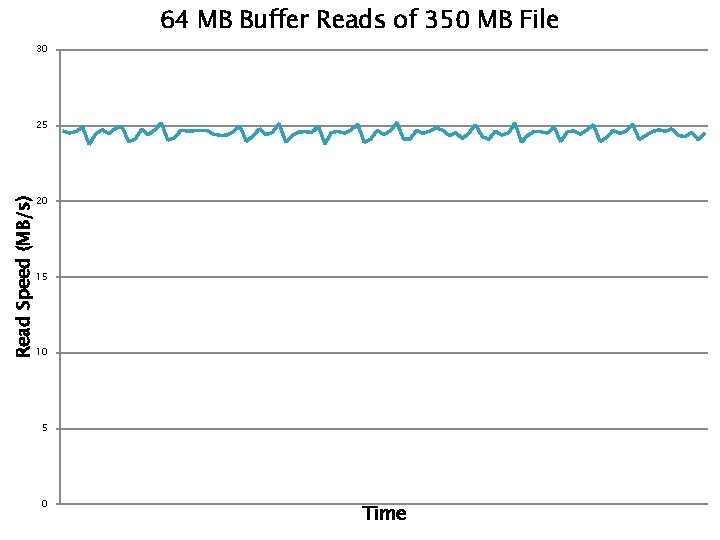

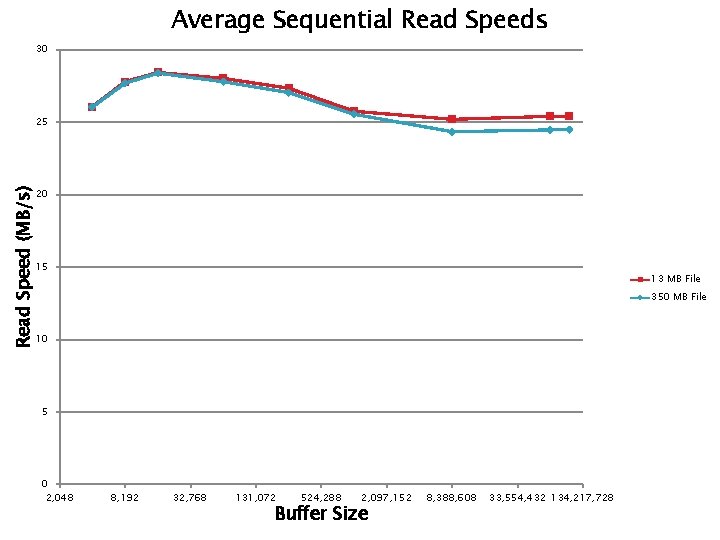

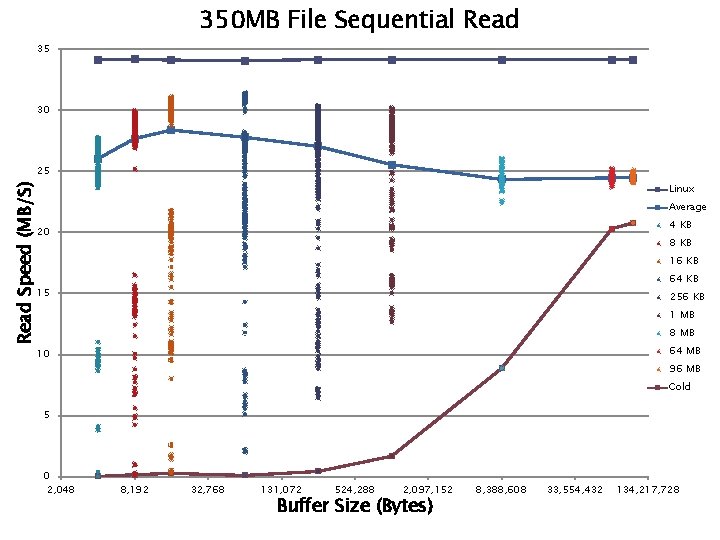

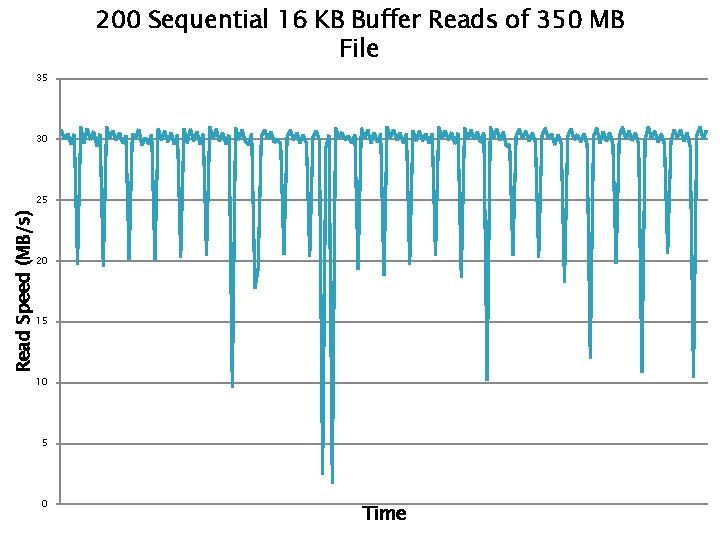

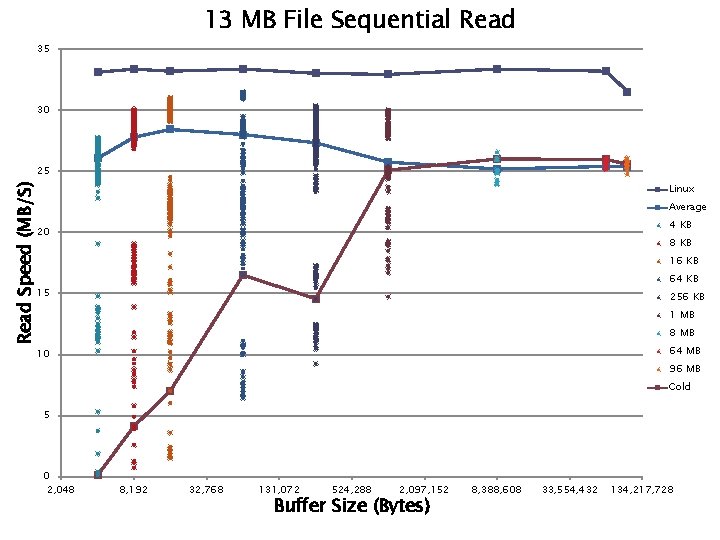

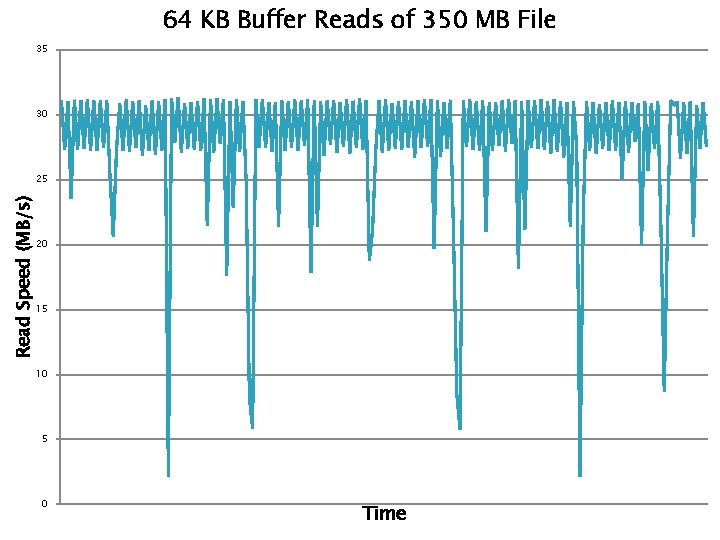

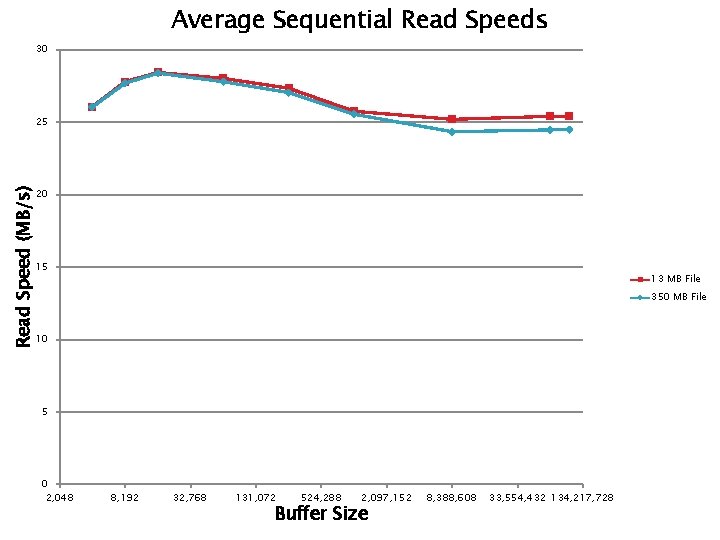

Testing

- Athlon 64 3200, 1 GB RAM
- Disk: 120 GB, 7200 RPM, 2 MB buffer, PATA
- Measured sequential reads
- Varied read buffer size from 4 KB to 96 MB
- Timed each request
- File sizes ranged from 13 MB to 350 MB
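The measurement approach (sequential reads, one fixed buffer size per run, every request timed) can be sketched in Python on an ordinary OS. This is an illustrative harness, not the tool used for these measurements. It treats 1 MB as 1,048,576 bytes, and it does not flush the operating system's cache, so reproducing a cold-cache run would need that done separately.

```python
import time


def sequential_read_speeds(path: str, buffer_size: int) -> tuple[float, list[float]]:
    """Read `path` front to back in `buffer_size` requests, timing each one.
    Returns (overall MB/s, list of per-request MB/s)."""
    view = memoryview(bytearray(buffer_size))
    per_request = []
    total = 0
    start = time.perf_counter()
    with open(path, "rb", buffering=0) as f:   # unbuffered: one read per request
        while True:
            t0 = time.perf_counter()
            n = f.readinto(view)
            t1 = time.perf_counter()
            if not n:                          # 0 bytes means end of file
                break
            total += n
            if t1 > t0:                        # skip requests too fast to time
                per_request.append(n / (1 << 20) / (t1 - t0))
    elapsed = time.perf_counter() - start
    overall = total / (1 << 20) / elapsed if elapsed > 0 else float("inf")
    return overall, per_request
```

Plotting the per-request list over time gives the kind of trace used for the fixed-buffer-size runs, where garbage-collection pauses would show up as dips.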

Figure: 350 MB File Sequential Read. Read speed (MB/s) vs. buffer size (bytes, 2,048 to 134,217,728); series: Linux Average, 4 KB, 8 KB, 16 KB, 64 KB, 256 KB, 1 MB, 8 MB, 64 MB, 96 MB, Cold.

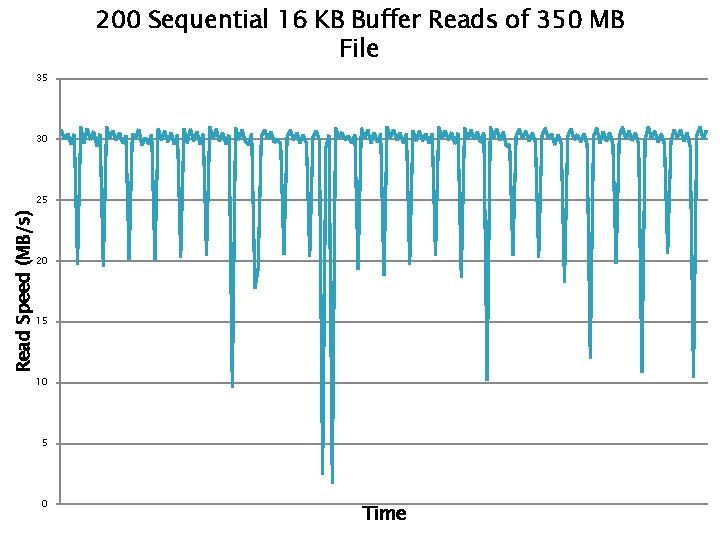

Figure: 200 Sequential 16 KB Buffer Reads of 350 MB File. Read speed (MB/s) over time.

Results

- Linux is faster
  - Not clear that the gap is fundamental
- Performance is not horrible
  - “Good enough” objective met
  - Garbage collection hurts, but not “too bad”
- Not sensitive to file size

Conclusion

- System programming in a modern language
- System programming with no crashes
- A microkernel is feasible
  - Hurts feature integration: mmap, cache sharing
  - Clean, simple interfaces

Questions

Extras

Figure: 13 MB File Sequential Read. Read speed (MB/s) vs. buffer size (bytes, 2,048 to 134,217,728); series: Linux Average, 4 KB, 8 KB, 16 KB, 64 KB, 256 KB, 1 MB, 8 MB, 64 MB, 96 MB, Cold.

Figure: 64 KB Buffer Reads of 350 MB File. Read speed (MB/s) over time.

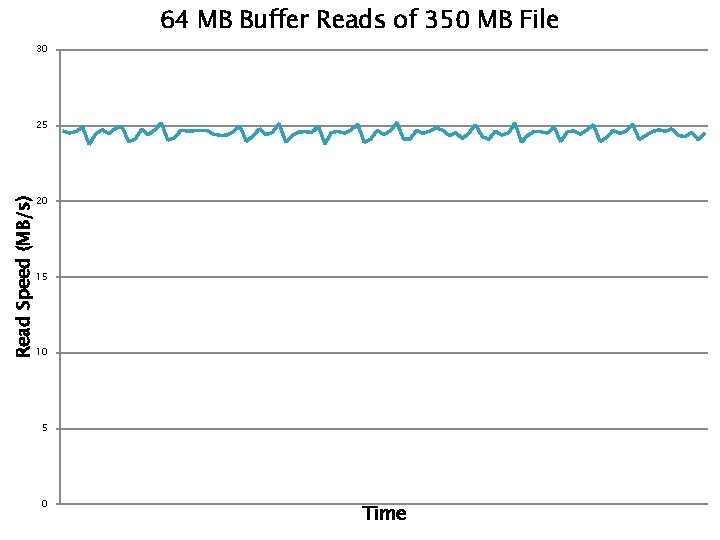

Figure: 64 MB Buffer Reads of 350 MB File. Read speed (MB/s) over time.

Figure: Average Sequential Read Speeds. Read speed (MB/s) vs. buffer size (bytes, 2,048 to 134,217,728) for the 13 MB and 350 MB files.