Exploiting Route Redundancy via Structured Peer to Peer Overlays

- Slides: 29

Exploiting Route Redundancy via Structured Peer to Peer Overlays

Ben Y. Zhao, Ling Huang, Jeremy Stribling, Anthony D. Joseph, and John D. Kubiatowicz
University of California, Berkeley
ICNP 2003, November 7, 2003
ravenben@eecs.berkeley.edu

Challenges Facing Network Applications

- Network connectivity is not reliable
  - Disconnections frequent in the wide-area Internet
  - IP-level repair is slow
    - Wide-area: BGP, 3 mins
    - Local-area: IS-IS, 5 seconds
- Next generation network applications
  - Mostly wide-area
  - Streaming media, VoIP, B2B transactions
  - Low tolerance of delay, jitter and faults
- Our work: transparent resilient routing infrastructure that adapts to faults in not seconds, but milliseconds

Talk Overview

- Motivation
- Why structured routing
- Structured Peer to Peer overlays
- Mechanisms and policy
- Evaluation
- Summary

Routing in “Mesh-like” Networks

- Previous work has shown reasons for long convergence [Labovitz 00, Labovitz 01]
- MinRouteAdver timer
  - Necessary to aggregate updates from all neighbors
  - Commonly set to 30 seconds
    - Contributes to lower bound of BGP convergence time
- Internet becoming more mesh-like [Kaat 99, Labovitz 99]
  - Worsens BGP convergence behavior
- Question
  - Can convergence be faster in context of structured routing?

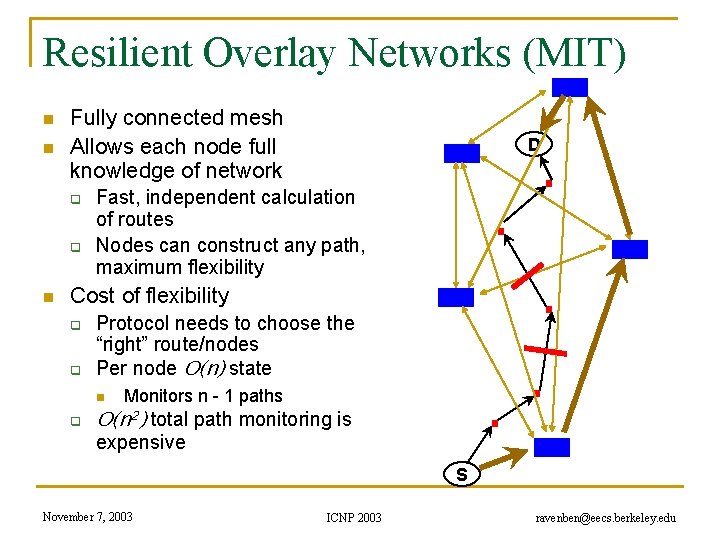

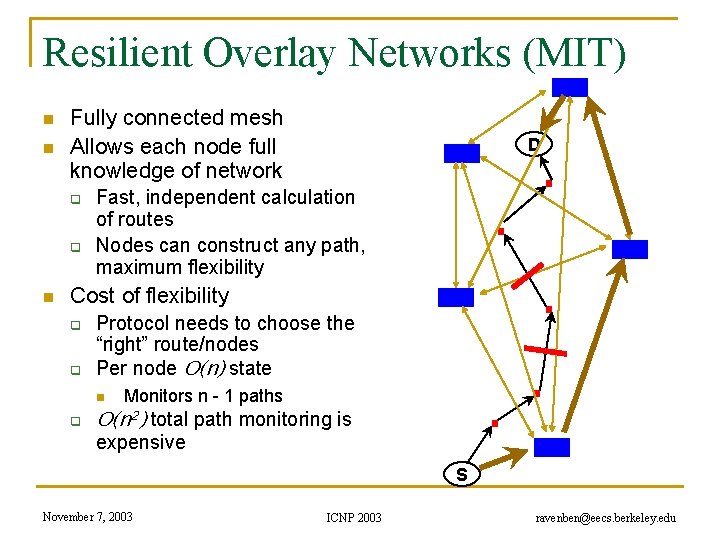

Resilient Overlay Networks (MIT)

- Fully connected mesh
- Allows each node full knowledge of network
  - Fast, independent calculation of routes
  - Nodes can construct any path, maximum flexibility
- Cost of flexibility
  - Protocol needs to choose the “right” route/nodes
  - Per node O(n) state
    - Monitors n - 1 paths
  - O(n^2) total path monitoring is expensive

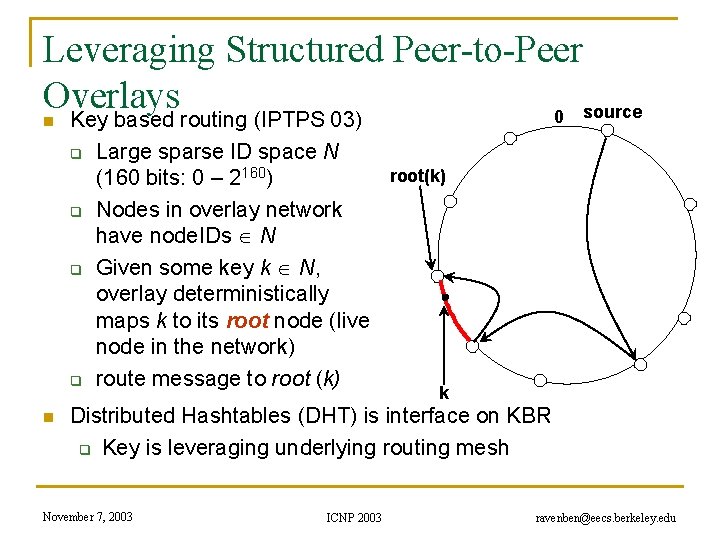

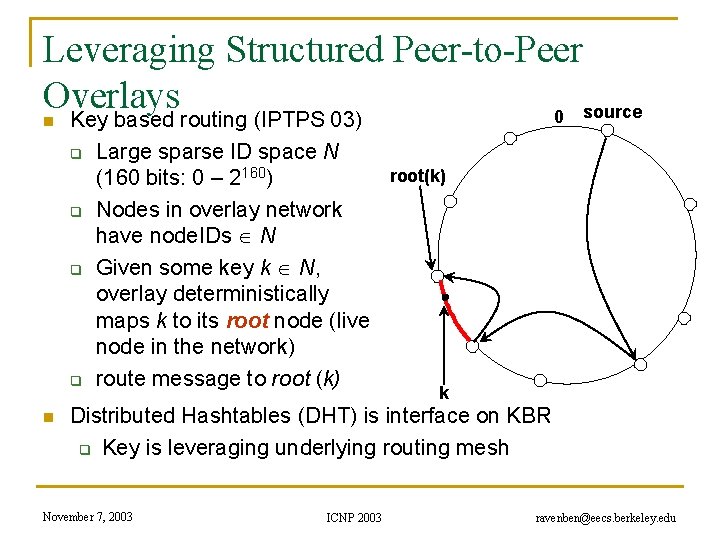

Leveraging Structured Peer-to-Peer Overlays

- Key based routing (IPTPS 03)
  - Large sparse ID space N (160 bits: 0 to 2^160)
  - Nodes in overlay network have nodeIDs ∈ N
  - Given some key k ∈ N, overlay deterministically maps k to its root node (live node in the network)
  - Route message to root(k)
- Distributed Hashtables (DHT) is interface on KBR
  - Key is leveraging underlying routing mesh

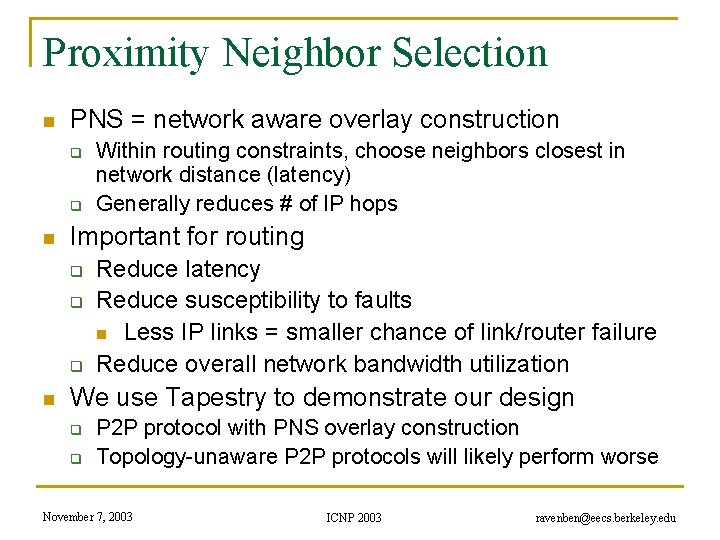

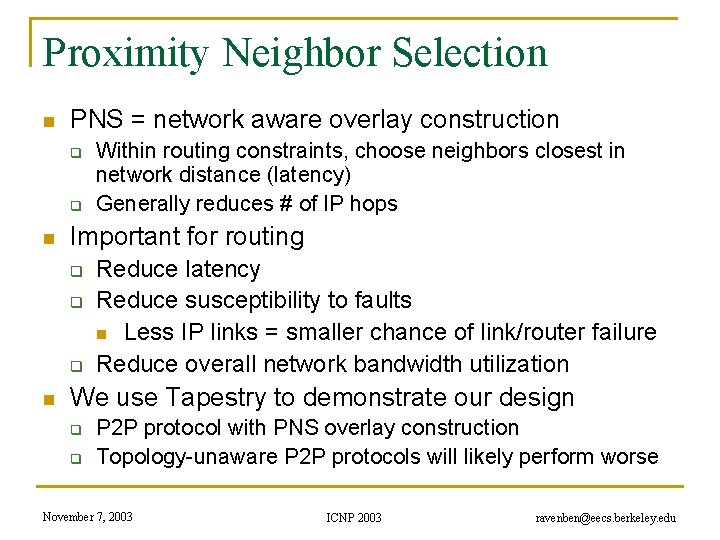

Proximity Neighbor Selection

- PNS = network aware overlay construction
  - Within routing constraints, choose neighbors closest in network distance (latency)
  - Generally reduces # of IP hops
- Important for routing
  - Reduce latency
  - Reduce susceptibility to faults
    - Fewer IP links = smaller chance of link/router failure
  - Reduce overall network bandwidth utilization
- We use Tapestry to demonstrate our design
  - P2P protocol with PNS overlay construction
  - Topology-unaware P2P protocols will likely perform worse

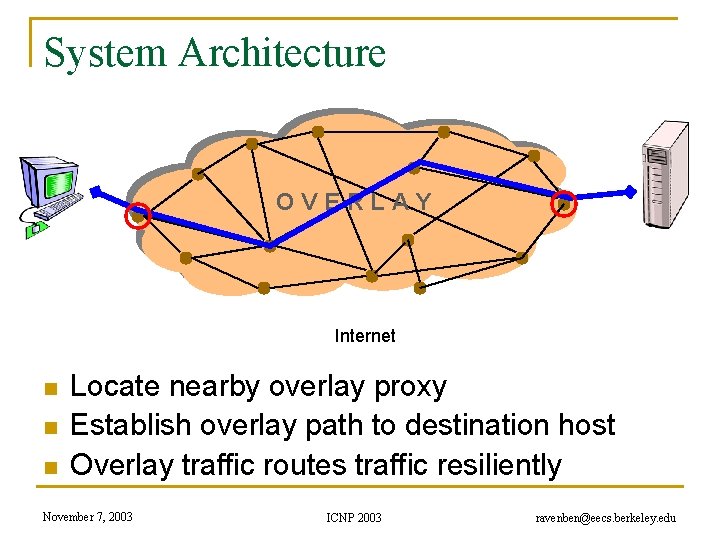

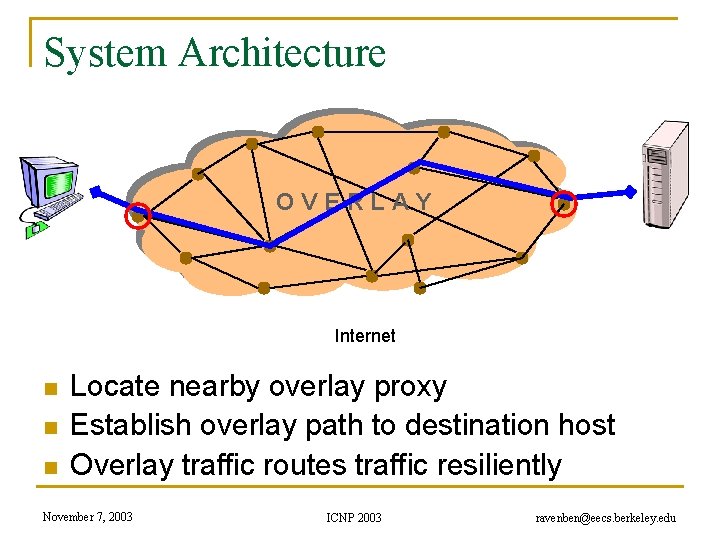

System Architecture

- Locate nearby overlay proxy
- Establish overlay path to destination host
- Overlay routes traffic resiliently

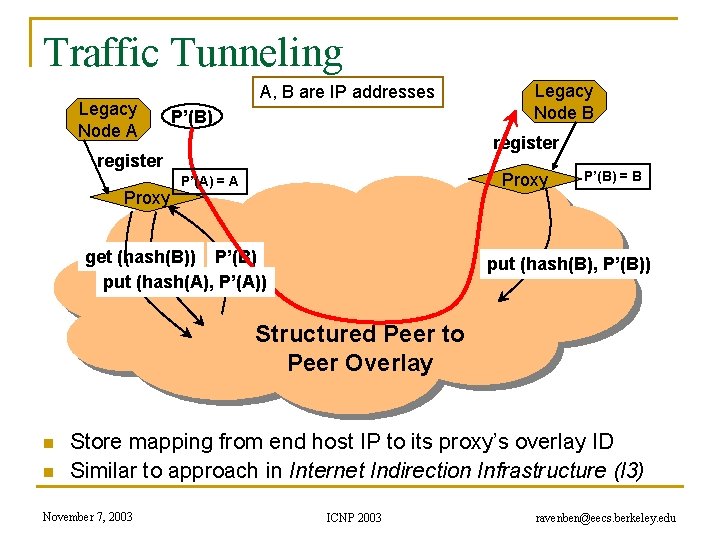

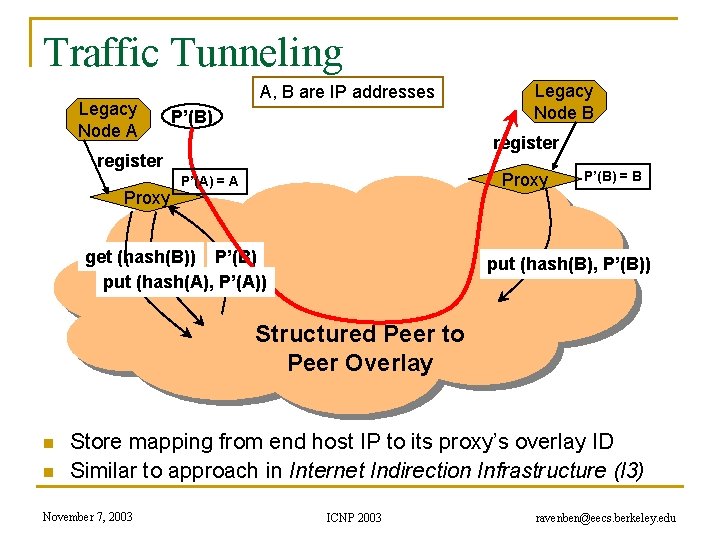

Traffic Tunneling

[Figure: legacy nodes A and B register with nearby proxies (A and B are IP addresses). Each proxy stores its mapping in the structured peer to peer overlay with put(hash(A), P’(A)) and put(hash(B), P’(B)); A's proxy looks up B's proxy with get(hash(B)), which returns P’(B).]

- Store mapping from end host IP to its proxy’s overlay ID
- Similar to approach in Internet Indirection Infrastructure (I3)
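The register and lookup flow on this slide can be sketched with an in-memory dictionary standing in for the overlay's storage. This is an illustration only: `ToyDHT`, `key_for`, `register`, `resolve`, the example addresses and IDs, and the choice of SHA-1 as the hash are all assumptions, not the authors' implementation or Tapestry's API.

```python
import hashlib
from typing import Dict, Optional


def key_for(ip: str) -> int:
    """Hash an end-host IP address into a 160-bit key (SHA-1 is an assumption)."""
    return int.from_bytes(hashlib.sha1(ip.encode("ascii")).digest(), "big")


class ToyDHT:
    """Hypothetical stand-in for the overlay's put/get storage interface."""

    def __init__(self) -> None:
        self._store: Dict[int, int] = {}

    def put(self, key: int, value: int) -> None:
        self._store[key] = value

    def get(self, key: int) -> Optional[int]:
        return self._store.get(key)  # None if the host never registered


def register(dht: ToyDHT, legacy_ip: str, proxy_overlay_id: int) -> None:
    """Proxy side: store the mapping from end-host IP to the proxy's overlay ID."""
    dht.put(key_for(legacy_ip), proxy_overlay_id)


def resolve(dht: ToyDHT, dest_ip: str) -> Optional[int]:
    """Sender's proxy: find the overlay ID to tunnel traffic for dest_ip to."""
    return dht.get(key_for(dest_ip))


dht = ToyDHT()
register(dht, "10.0.0.1", 0x2274)  # legacy node A behind one proxy (made-up values)
register(dht, "10.0.0.2", 0x2530)  # legacy node B behind another proxy
```

A sender's proxy would call `resolve(dht, "10.0.0.2")` and, if the result is not `None`, route the tunneled traffic through the overlay to that ID; an unregistered destination falls back to plain IP.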

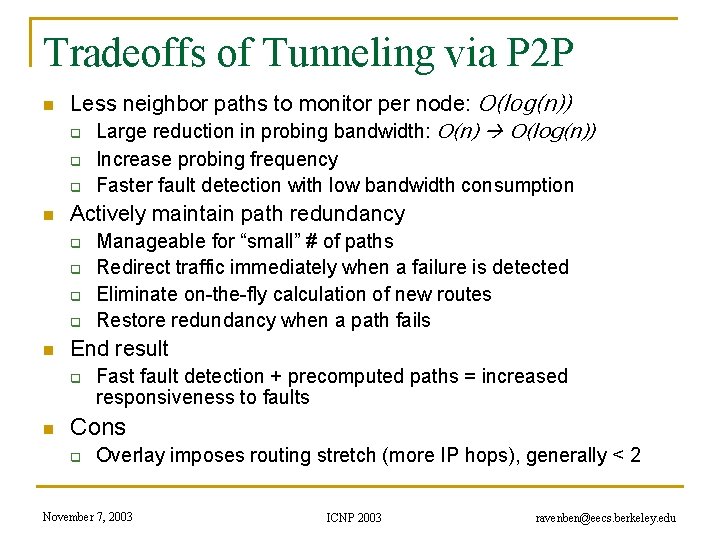

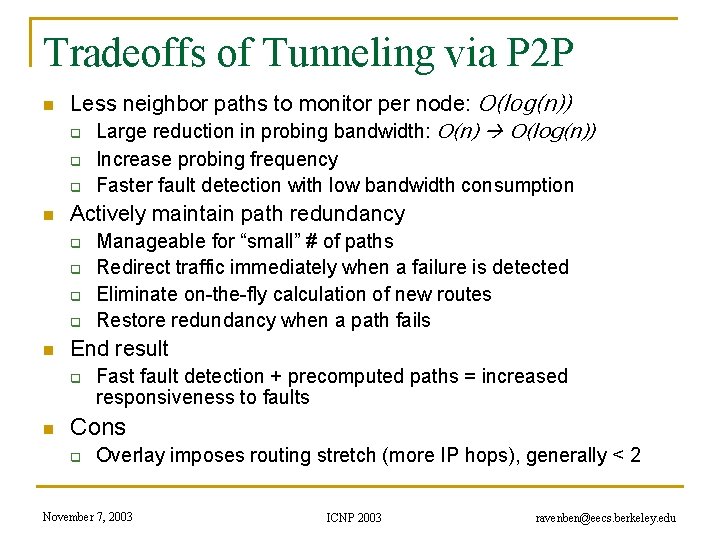

Tradeoffs of Tunneling via P2P

- Fewer neighbor paths to monitor per node: O(log(n))
  - Large reduction in probing bandwidth: O(n) → O(log(n))
  - Increase probing frequency
  - Faster fault detection with low bandwidth consumption
- Actively maintain path redundancy
  - Manageable for “small” # of paths
  - Redirect traffic immediately when a failure is detected
    - Eliminate on-the-fly calculation of new routes
  - Restore redundancy when a path fails
- End result
  - Fast fault detection + precomputed paths = increased responsiveness to faults
- Cons
  - Overlay imposes routing stretch (more IP hops), generally < 2
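As a rough illustration of the O(n) versus O(log(n)) monitoring cost, the sketch below counts monitored paths per node for a full mesh and for a structured overlay. The base-2 logarithm and the helper names are assumptions chosen for illustration; the real constant depends on the overlay's routing table layout.

```python
import math


def mesh_paths_per_node(n: int) -> int:
    """Full mesh: every node monitors a path to each of the other n - 1 nodes."""
    return n - 1


def structured_paths_per_node(n: int) -> int:
    """Structured overlay: on the order of log(n) neighbors (base 2 assumed here)."""
    return max(1, math.ceil(math.log2(n)))


for n in (64, 300, 5000):
    mesh = mesh_paths_per_node(n)
    structured = structured_paths_per_node(n)
    print(f"n={n}: mesh {mesh} paths/node ({n * mesh} total), "
          f"structured ~{structured} paths/node (~{n * structured} total)")
```

Because each node watches so few paths, it can afford to probe each of them much more often for the same bandwidth budget, which is where the faster fault detection comes from.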

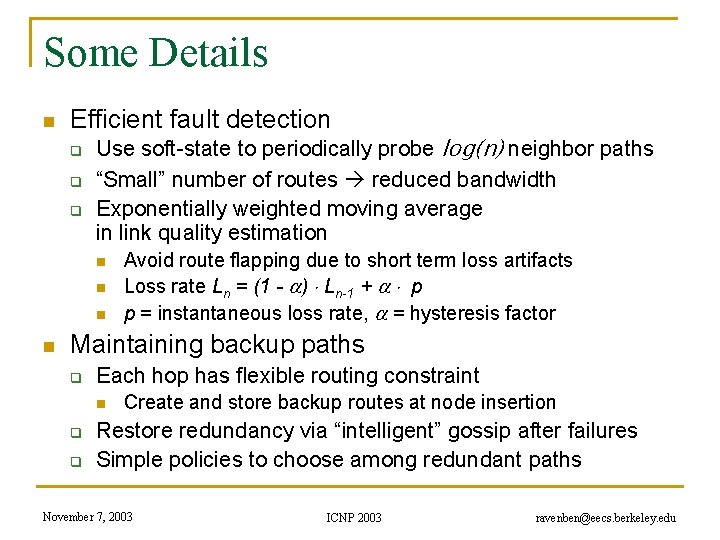

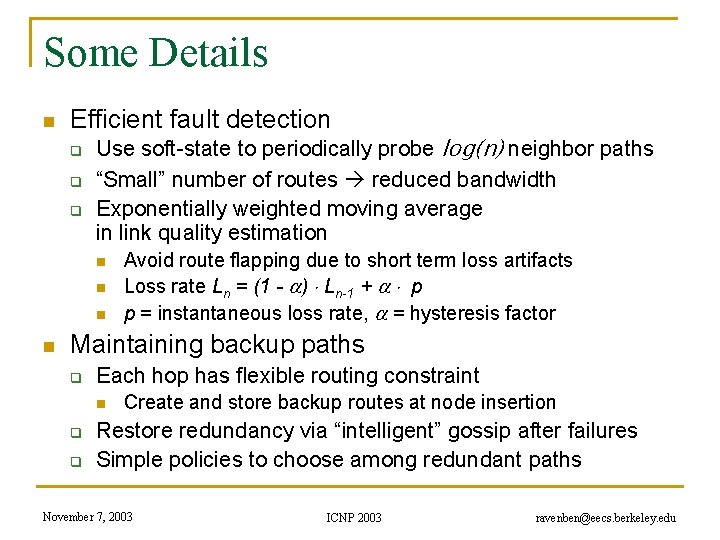

Some Details

- Efficient fault detection
  - Use soft-state to periodically probe log(n) neighbor paths
  - “Small” number of routes → reduced bandwidth
  - Exponentially weighted moving average in link quality estimation
    - Avoid route flapping due to short term loss artifacts
    - Loss rate $L_n = (1 - \alpha) L_{n-1} + \alpha p$
    - $p$ = instantaneous loss rate, $\alpha$ = hysteresis factor
- Maintaining backup paths
  - Each hop has flexible routing constraint
    - Create and store backup routes at node insertion
  - Restore redundancy via “intelligent” gossip after failures
  - Simple policies to choose among redundant paths
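The exponentially weighted moving average for loss rate can be sketched in a few lines. Here `alpha` stands for the hysteresis factor and `p` for the instantaneous loss rate; the function names, the initial estimate of 0.0, and the sample values are assumptions made for illustration.

```python
def update_loss_estimate(prev: float, p: float, alpha: float) -> float:
    """One EWMA step: new estimate = (1 - alpha) * previous estimate + alpha * p."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must be in [0, 1]")
    return (1.0 - alpha) * prev + alpha * p


def smooth(samples, alpha: float, initial: float = 0.0):
    """Return the running loss estimate after each instantaneous sample."""
    estimates = []
    estimate = initial
    for p in samples:
        estimate = update_loss_estimate(estimate, p, alpha)
        estimates.append(estimate)
    return estimates


# One fully lossy probe round (p = 1.0) amid clean ones moves the estimate only a little.
history = smooth([0.0, 0.0, 1.0, 0.0, 0.0], alpha=0.2)
```

With a small hysteresis factor, a single bad probe round nudges the estimate without pushing it across a routing threshold, which is the route-flapping protection the slide describes. A larger factor reacts faster but is more sensitive to short-term loss.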

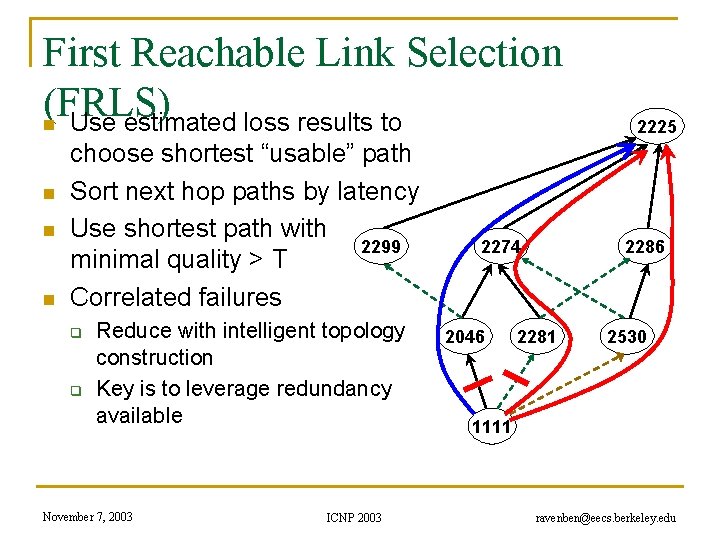

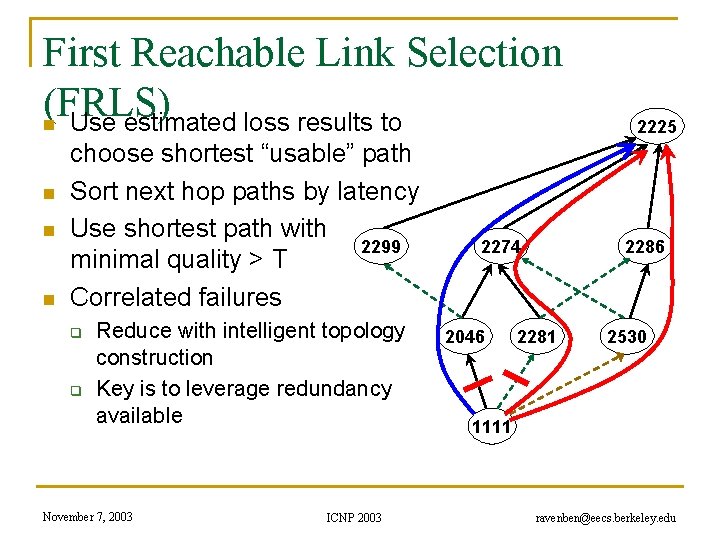

First Reachable Link Selection (FRLS)

- Use estimated loss results to choose shortest “usable” path
  - Sort next hop paths by latency
  - Use shortest path with minimal quality > T
- Correlated failures
  - Reduce with intelligent topology construction
- Key is to leverage redundancy available
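The selection policy can be sketched as a sort followed by a threshold check. This is a minimal sketch, assuming link quality is defined as one minus the estimated loss rate; the `NextHop` type, the function name, and the latency and loss values are hypothetical.

```python
from typing import List, NamedTuple, Optional


class NextHop(NamedTuple):
    node_id: str
    latency_ms: float
    loss_estimate: float  # smoothed loss rate in [0, 1]


def first_reachable_link(hops: List[NextHop], threshold: float) -> Optional[NextHop]:
    """Return the lowest-latency hop whose quality (1 - loss) exceeds threshold."""
    for hop in sorted(hops, key=lambda h: h.latency_ms):
        if 1.0 - hop.loss_estimate > threshold:
            return hop
    return None  # every candidate is below the threshold


candidates = [
    NextHop("2274", latency_ms=12.0, loss_estimate=0.45),  # primary, currently lossy
    NextHop("2286", latency_ms=30.0, loss_estimate=0.05),  # backup
    NextHop("2225", latency_ms=18.0, loss_estimate=0.10),  # backup
]
chosen = first_reachable_link(candidates, threshold=0.70)
```

In this example the lowest-latency hop fails the quality check, so the policy falls through to the next-closest backup. A `None` result corresponds to the case where no path clears the threshold.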

Evaluation

- Metrics for evaluation
  - How much routing resiliency can we exploit?
  - How fast can we adapt to faults?
  - What is the overhead of routing around a failure?
    - Proportional increase in end to end latency
    - Proportional increase in end to end bandwidth used
- Experimental platforms
  - Event-based simulations on transit stub topologies
    - Data collected over different 5000-node topologies
  - PlanetLab measurements
    - Microbenchmarks on responsiveness
    - Bandwidth measurements from 200+ node overlays
    - Multiple virtual nodes run per physical machine

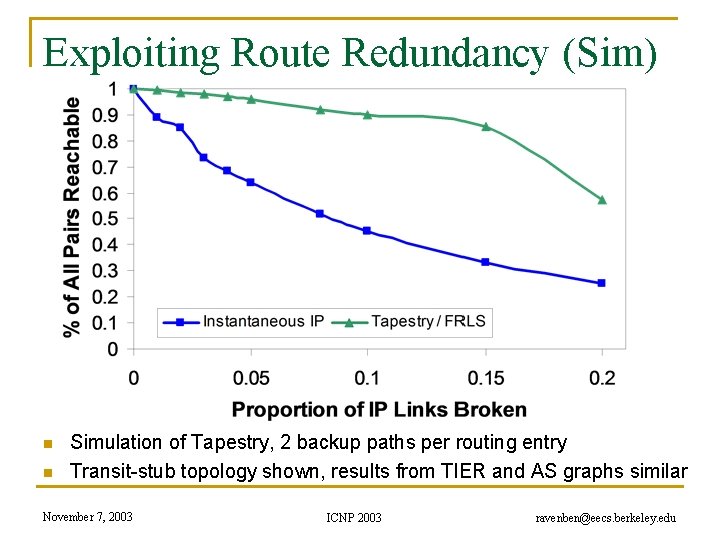

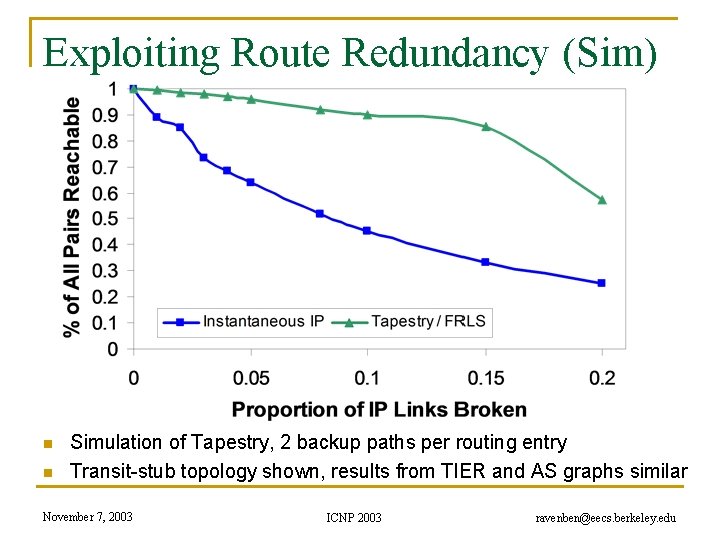

Exploiting Route Redundancy (Sim)

- Simulation of Tapestry, 2 backup paths per routing entry
- Transit-stub topology shown, results from TIERS and AS graphs similar

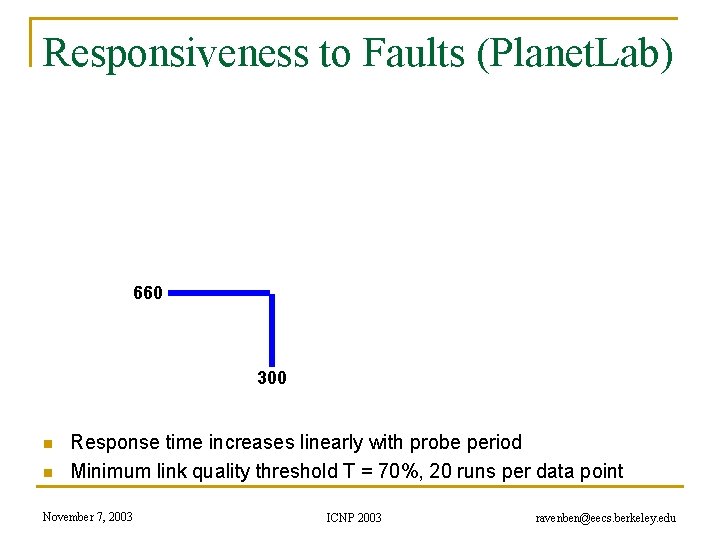

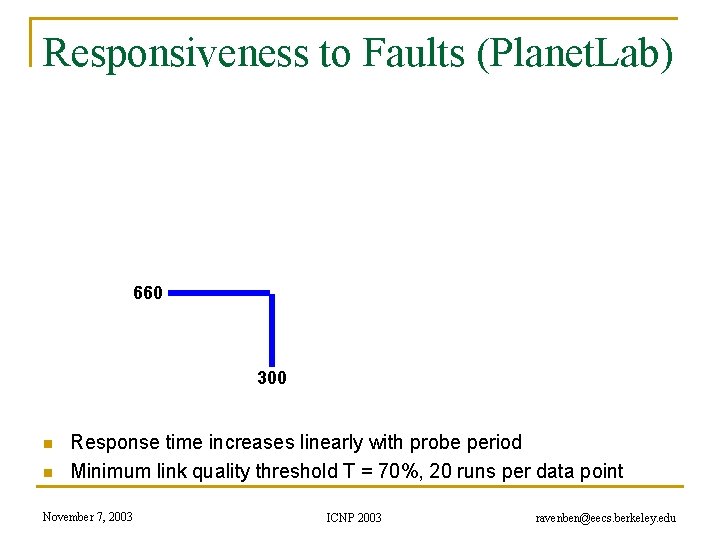

Responsiveness to Faults (PlanetLab)

- Response time increases linearly with probe period
- Minimum link quality threshold T = 70%, 20 runs per data point

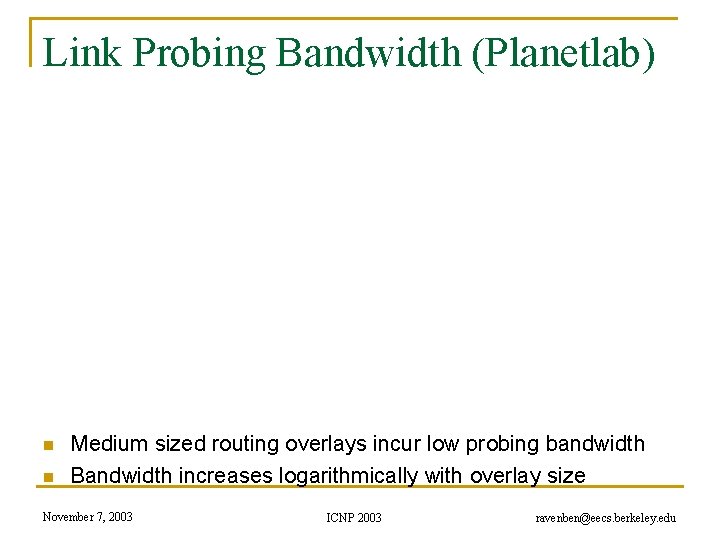

Link Probing Bandwidth (PlanetLab)

- Medium sized routing overlays incur low probing bandwidth
- Bandwidth increases logarithmically with overlay size

Related Work

- Redirection overlays
  - Detour (IEEE Micro 99)
  - Resilient Overlay Networks (SOSP 01)
  - Internet Indirection Infrastructure (SIGCOMM 02)
  - Secure Overlay Services (SIGCOMM 02)
- Topology estimation techniques
  - Adaptive probing (IPTPS 03)
  - Peer-based shared estimation (Zhuang 03)
  - Internet tomography (Chen 03)
  - Routing underlay (SIGCOMM 03)
- Structured peer-to-peer overlays
  - Tapestry, Pastry, Chord, CAN, Kademlia, Skipnet, Viceroy, Symphony, Koorde, Bamboo, X-Ring…

Conclusion

- Benefits of structure outweigh costs
  - Structured routing lowers path maintenance costs
    - Allows “caching” of backup paths for quick failover
  - Can no longer construct arbitrary paths
    - Structured routing with low redundancy gets very close to ideal in connectivity
    - Incur low routing stretch
- Fast enough for highly interactive applications
  - 300 ms beacon period → response time < 700 ms
  - On overlay networks of 300 nodes, b/w cost is 7 KB/s
- Future work
  - Deploying a public routing and proxy service on PlanetLab
  - Examine impact of
    - Network aware topology construction
    - Loss sensitive probing techniques

Questions…

- Related websites:
  - Tapestry: http://www.cs.berkeley.edu/~ravenben/tapestry
  - Pastry: http://research.microsoft.com/~antr/pastry
  - Chord: http://lcs.mit.edu/chord
- Acknowledgements
  - Thanks to Dennis Geels and Sean Rhea for their work on the BMark benchmark suite

Backup Slides

Another Perspective on Reachability

- Portion of all pairwise paths where no failure-free paths remain
- A path exists, but neither IP nor FRLS can locate the path
- FRLS finds path, where short-term IP routing fails
- Portion of all paths where IP and FRLS both route successfully

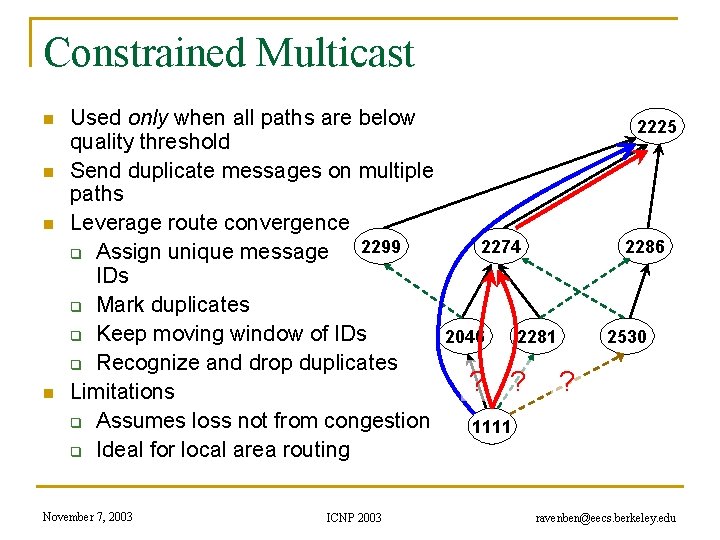

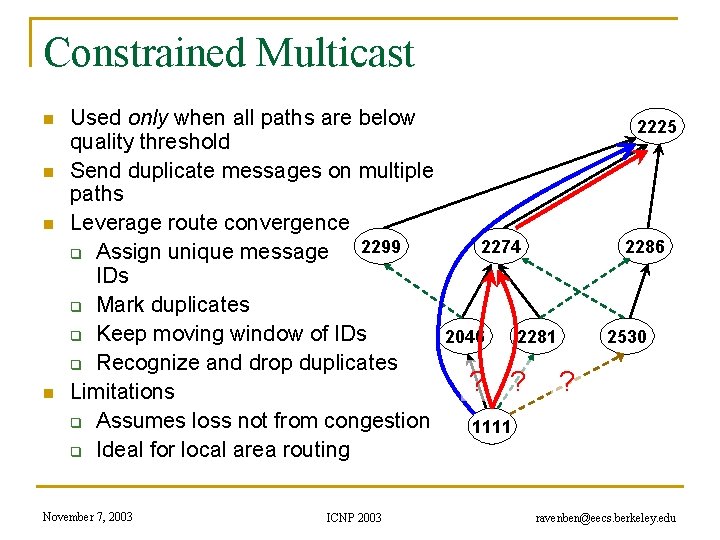

Constrained Multicast

- Used only when all paths are below quality threshold
- Send duplicate messages on multiple paths
- Leverage route convergence
  - Assign unique message IDs
  - Mark duplicates
  - Keep moving window of IDs
  - Recognize and drop duplicates
- Limitations
  - Assumes loss not from congestion
  - Ideal for local area routing
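The duplicate handling on this slide (unique message IDs, a moving window of recent IDs, dropping repeats where paths converge) can be sketched as follows. The class and function names, the window size, and the path labels are assumptions for illustration, and the sketch omits the explicit marking of duplicate copies.

```python
from collections import deque
from itertools import count


class DuplicateFilter:
    """Remember the most recent message IDs and reject repeats."""

    def __init__(self, window: int) -> None:
        self._order = deque()  # IDs in arrival order, oldest first
        self._seen = set()     # same IDs, for O(1) membership tests
        self._window = window

    def accept(self, msg_id: int) -> bool:
        """Return True for the first copy of msg_id, False for a duplicate."""
        if msg_id in self._seen:
            return False
        self._order.append(msg_id)
        self._seen.add(msg_id)
        if len(self._order) > self._window:
            self._seen.discard(self._order.popleft())  # slide the window
        return True


_next_id = count(1)


def multicast(payload: str, paths: list) -> list:
    """Sender: pick one message ID and emit a copy per outgoing path."""
    msg_id = next(_next_id)
    return [(path, msg_id, payload) for path in paths]


# Two copies travel over different backup paths and converge at one node.
copies = multicast("hello", paths=["via 2274", "via 2286"])
node = DuplicateFilter(window=128)
delivered = [payload for _, msg_id, payload in copies if node.accept(msg_id)]
```

The bounded window keeps per-node state constant; the trade-off is that a duplicate arriving after its ID has aged out of the window would be forwarded again.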

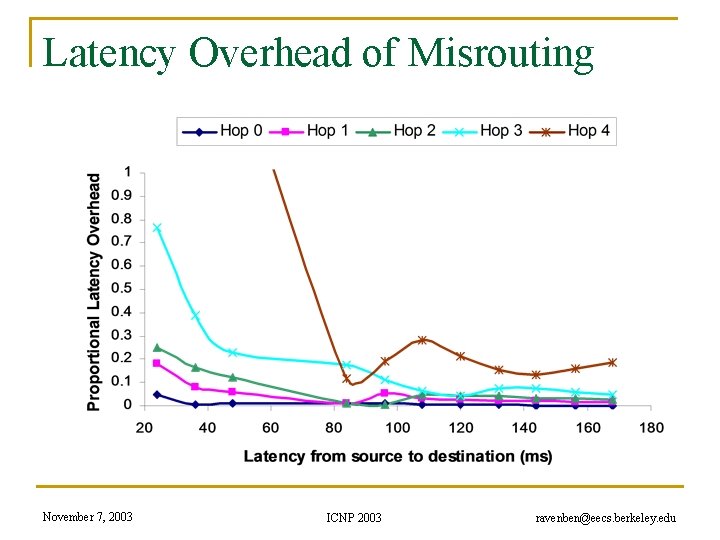

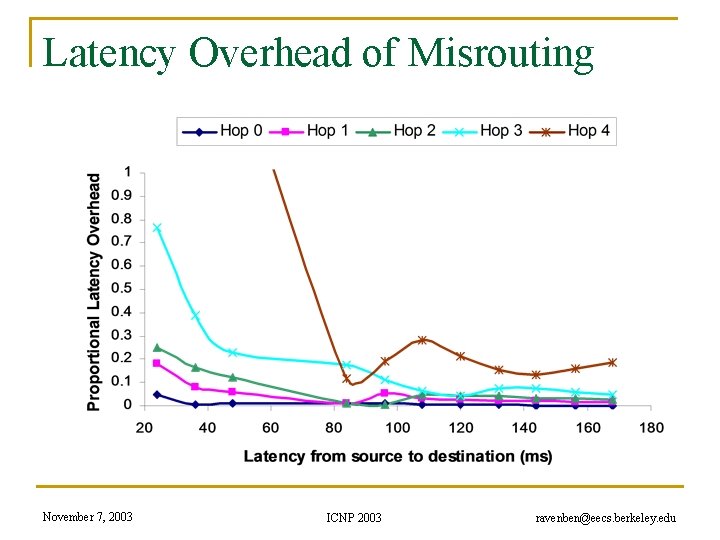

Latency Overhead of Misrouting

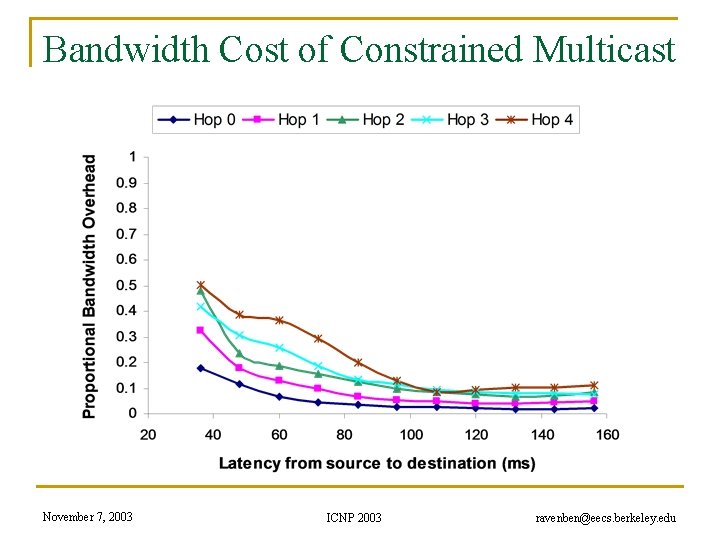

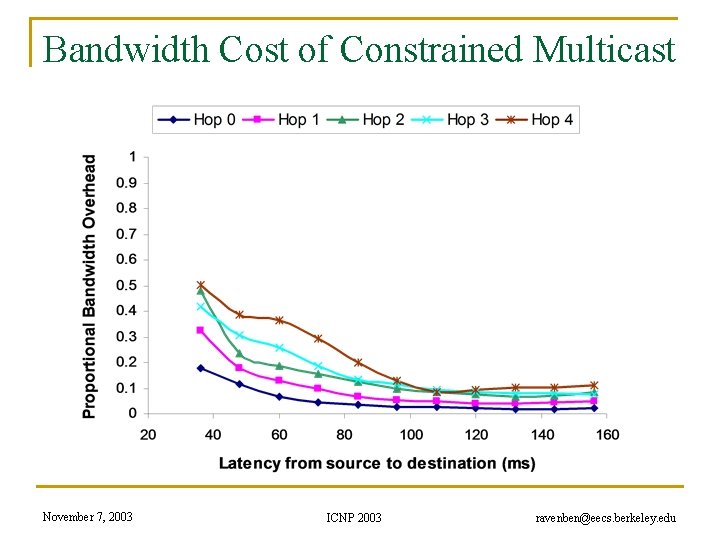

Bandwidth Cost of Constrained Multicast

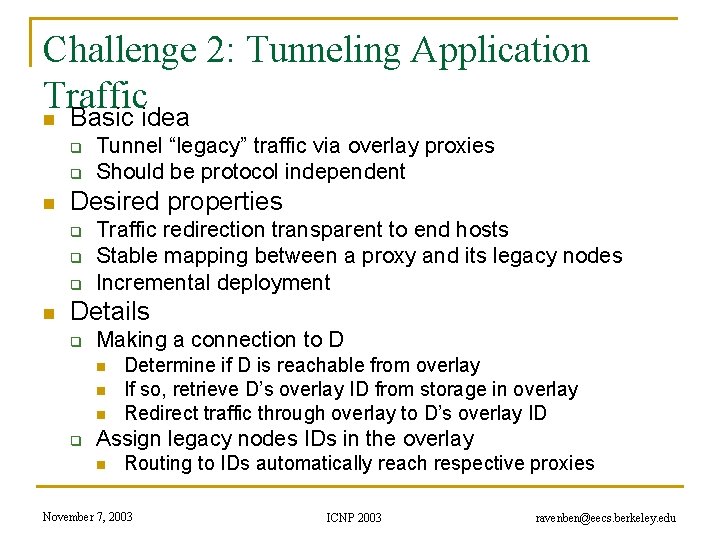

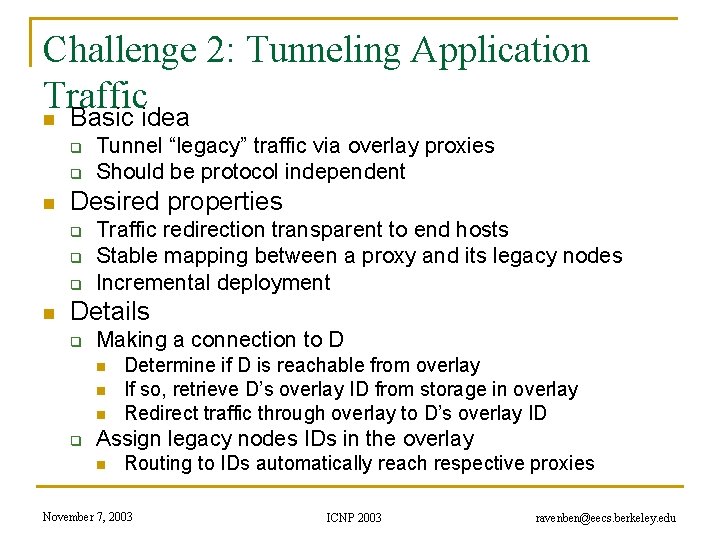

Challenge 2: Tunneling Application Traffic

- Basic idea
  - Tunnel “legacy” traffic via overlay proxies
  - Should be protocol independent
- Desired properties
  - Traffic redirection transparent to end hosts
  - Stable mapping between a proxy and its legacy nodes
  - Incremental deployment
- Details
  - Making a connection to D
    - Determine if D is reachable from overlay
    - If so, retrieve D’s overlay ID from storage in overlay
    - Redirect traffic through overlay to D’s overlay ID
  - Assign legacy nodes IDs in the overlay
    - Routing to IDs automatically reaches respective proxies

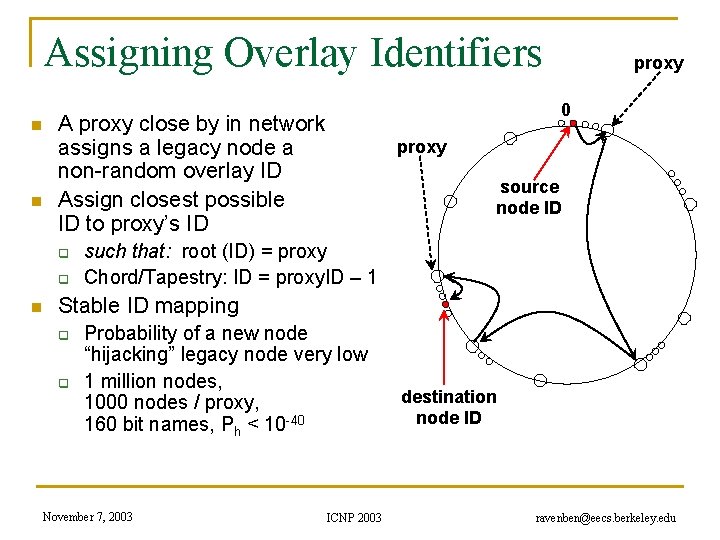

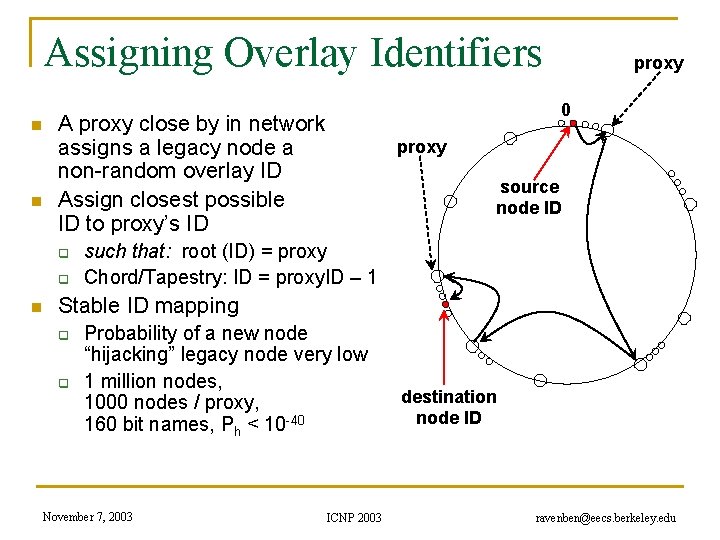

Assigning Overlay Identifiers

- A proxy close by in network assigns a legacy node a non-random overlay ID
- Assign closest possible ID to proxy’s ID
  - ID such that: root(ID) = proxy
  - Chord/Tapestry: ID = proxy.ID - 1
- Stable ID mapping
  - Probability of a new node “hijacking” legacy node very low
  - 1 million nodes, 1000 nodes / proxy, 160 bit names, P_h < 10^-40
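The ID assignment rule can be sketched under a Chord-style assumption that root(ID) is the first live node ID at or after ID. The helper names and example node IDs are hypothetical, and the probability function is one simple back-of-envelope model (a single uniformly random join landing in a proxy's block of legacy IDs), not necessarily the calculation behind the slide's figure.

```python
from bisect import bisect_left
from fractions import Fraction

ID_BITS = 160
ID_SPACE = 2 ** ID_BITS


def root(key: int, node_ids: list) -> int:
    """Successor-style root: first live node ID at or after key, wrapping at the top."""
    ordered = sorted(node_ids)
    i = bisect_left(ordered, key)
    return ordered[i % len(ordered)]


def assign_legacy_ids(proxy_id: int, how_many: int) -> list:
    """Give legacy nodes the IDs just below the proxy: proxy_id - 1, proxy_id - 2, ..."""
    return [(proxy_id - 1 - i) % ID_SPACE for i in range(how_many)]


def hijack_probability_per_join(legacy_per_proxy: int) -> Fraction:
    """Chance that one uniformly random new node ID lands inside a proxy's legacy-ID block."""
    return Fraction(legacy_per_proxy, ID_SPACE)


# Made-up overlay node IDs spread across the 160-bit space.
overlay_nodes = [0x1111 << 140, 0x2274 << 140, 0x2530 << 140]
proxy = overlay_nodes[1]
legacy_ids = assign_legacy_ids(proxy, how_many=1000)
```

Under this model every assigned legacy ID routes to its proxy, and a newly joined node could only take over one of them by drawing an ID inside the 1000-wide block directly below the proxy, which is a vanishingly small slice of a 160-bit space.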

Unused Slides

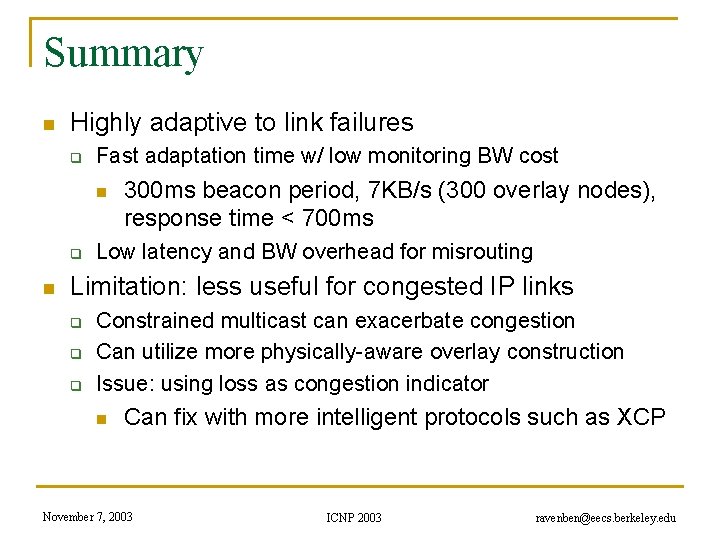

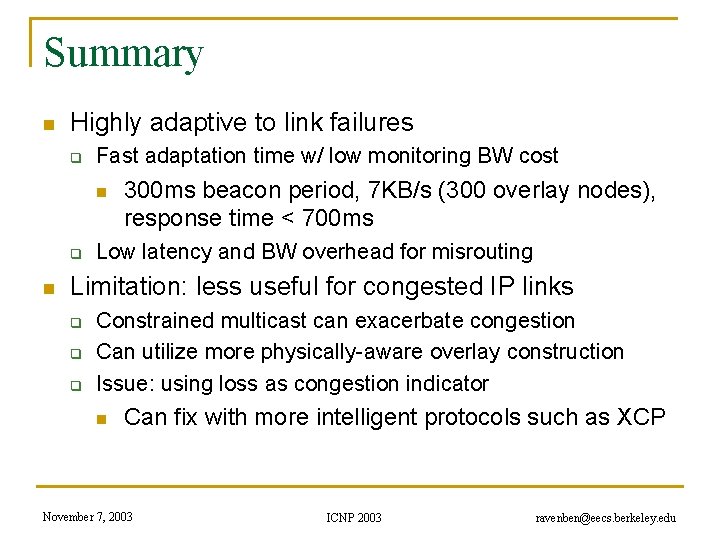

Summary

- Highly adaptive to link failures
  - Fast adaptation time w/ low monitoring BW cost
    - 300 ms beacon period, 7 KB/s (300 overlay nodes), response time < 700 ms
  - Low latency and BW overhead for misrouting
- Limitation: less useful for congested IP links
  - Constrained multicast can exacerbate congestion
  - Can utilize more physically-aware overlay construction
  - Issue: using loss as congestion indicator
    - Can fix with more intelligent protocols such as XCP

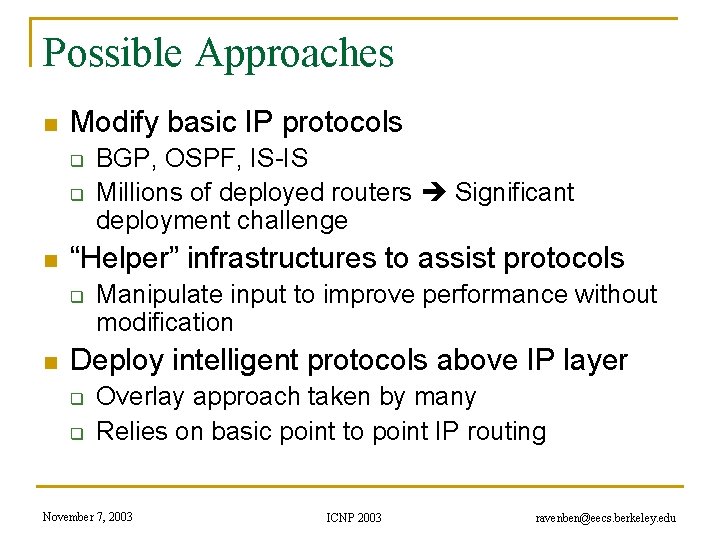

Possible Approaches

- Modify basic IP protocols
  - BGP, OSPF, IS-IS
  - Millions of deployed routers
  - Significant deployment challenge
- “Helper” infrastructures to assist protocols
  - Manipulate input to improve performance without modification
- Deploy intelligent protocols above IP layer
  - Overlay approach taken by many
  - Relies on basic point to point IP routing