
Exploiting Memory Hierarchy
Chapter 7
B. Ramamurthy
12/26/2021

Direct Mapped Cache: the Idea
(Figure: Cache and Main Memory)
• All addresses whose low-order bits (LSBs) are 001 map to the purple cache slot.
• All addresses whose low-order bits are 101 map to the blue cache slot.
• And so on.

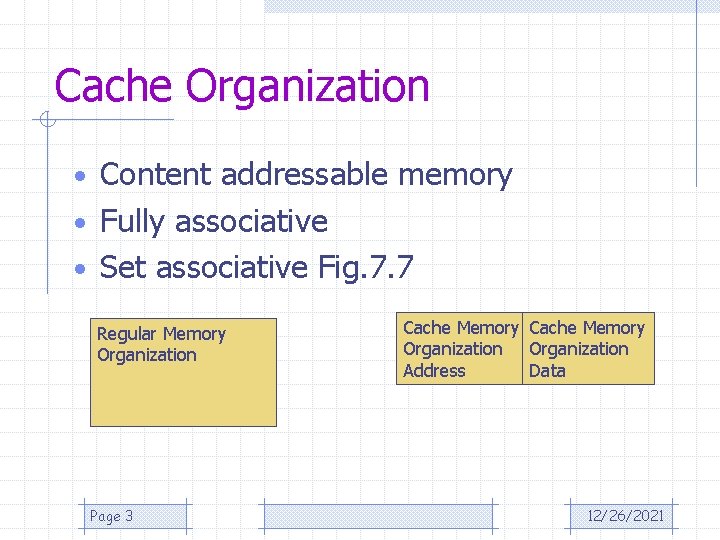
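A minimal Python sketch of the mapping in the figure, assuming an 8-slot cache indexed by block address (the names `NUM_SLOTS`, `slot_for`, and `slot_for_low_bits` are hypothetical). Because 8 is a power of two, taking the block address modulo 8 gives the same slot as keeping its 3 low-order bits:

```python
# Assumption: an 8-slot direct-mapped cache indexed by block address.
NUM_SLOTS = 8  # must be a power of two for the "low-order bits" shortcut to hold


def slot_for(block_address: int) -> int:
    """Slot a block address maps to: block address modulo the number of slots."""
    return block_address % NUM_SLOTS


def slot_for_low_bits(block_address: int) -> int:
    """Same slot, computed by keeping only the 3 low-order bits."""
    return block_address & (NUM_SLOTS - 1)


if __name__ == "__main__":
    for addr in range(32):
        assert slot_for(addr) == slot_for_low_bits(addr)
        # Show only the addresses that land in the two colored slots.
        if (addr & 0b111) in (0b001, 0b101):
            print(f"{addr:05b} -> slot {slot_for(addr):03b}")
```

Running it lists the addresses ending in 001 next to slot 001 and those ending in 101 next to slot 101.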

Cache Organization
• Content addressable memory
• Fully associative
• Set associative
Fig. 7.7 (figure panels: Regular Memory Organization; Cache Memory Organization; labels: Address, Data)

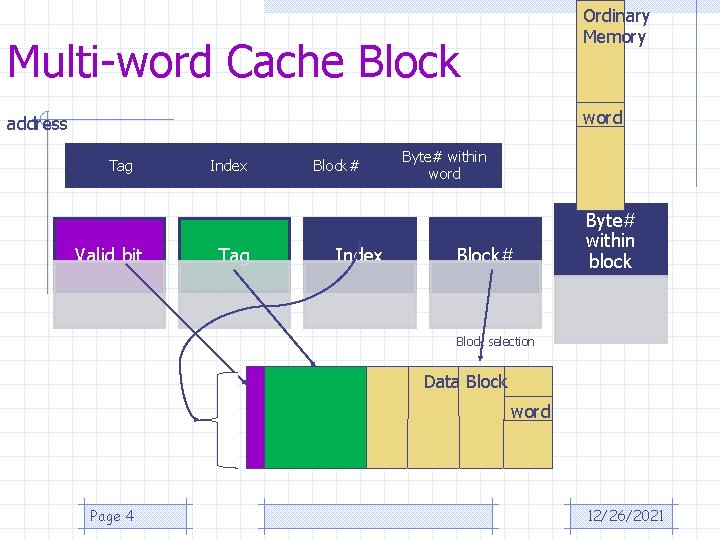
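The organizations above can be viewed as points on one spectrum. The hypothetical `SetAssociativeCache` below is a hit/miss sketch that assumes LRU replacement (the slides do not specify a policy): with one block per set it behaves as a direct-mapped cache, and with a single set it behaves as a fully associative (content-addressable) cache, where the tag is compared against every entry.

```python
from typing import List


class SetAssociativeCache:
    """Hit/miss model of a cache with `num_sets` sets of `ways` blocks each.

    ways == 1 gives a direct-mapped cache; num_sets == 1 gives a fully
    associative cache. LRU replacement is an assumption of this sketch.
    """

    def __init__(self, num_sets: int, ways: int) -> None:
        self.num_sets = num_sets
        self.ways = ways
        # Each set stores tags ordered from least to most recently used.
        self.sets: List[List[int]] = [[] for _ in range(num_sets)]

    def access(self, block_address: int) -> bool:
        """Return True on a hit; on a miss, load the block and return False."""
        index = block_address % self.num_sets  # which set to search
        tag = block_address // self.num_sets   # identifies the block within the set
        tags = self.sets[index]
        if tag in tags:  # compare against every block in the set (parallel in hardware)
            tags.remove(tag)
            tags.append(tag)  # mark as most recently used
            return True
        if len(tags) == self.ways:
            tags.pop(0)  # set is full: evict the least recently used block
        tags.append(tag)
        return False
```

For example, the block sequence 0, 8, 0, 8 misses every time in an 8-block direct-mapped cache (`SetAssociativeCache(8, 1)`, where both blocks compete for set 0), but hits on the second pass in a 2-way cache with 4 sets or in a fully associative cache with 8 blocks.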

Ordinary Memory vs. Multi-word Cache Block
(Figure labels: word address; Tag, Index, Byte# within word; Block#, Byte# within block; Valid bit; Block selection; Data; Block word)
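With multi-word blocks, the address is split into fields. A sketch assuming byte addresses, 4-byte words, and power-of-two sizes (`split_address` is a hypothetical helper); each field is extracted with shifts and masks:

```python
from typing import Tuple


def split_address(address: int, words_per_block: int, num_blocks: int,
                  bytes_per_word: int = 4) -> Tuple[int, int, int, int]:
    """Split a byte address into (tag, index, word within block, byte within word)."""
    for n in (bytes_per_word, words_per_block, num_blocks):
        if n <= 0 or n & (n - 1):
            raise ValueError("sizes must be powers of two")
    byte_bits = bytes_per_word.bit_length() - 1   # log2(bytes per word)
    word_bits = words_per_block.bit_length() - 1  # log2(words per block)
    index_bits = num_blocks.bit_length() - 1      # log2(blocks in cache)

    byte_in_word = address & (bytes_per_word - 1)
    word_in_block = (address >> byte_bits) & (words_per_block - 1)
    index = (address >> (byte_bits + word_bits)) & (num_blocks - 1)  # block selection
    tag = address >> (byte_bits + word_bits + index_bits)            # remaining upper bits
    return tag, index, word_in_block, byte_in_word
```

With one word per block and 8 blocks, `split_address(457, 1, 8)` returns `(14, 2, 0, 1)`, so the index is 2, the same cache block as the Floor/modulo example on the next slide.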

Address → Cache block#
• Floor(Address / #bytes per block) = block# in main memory
• (Block# in memory) % (#blocks in cache) = cache block#
• Example (4 bytes per block, 8 blocks in cache): Floor(457 / 4) = 114; 114 % 8 = 2

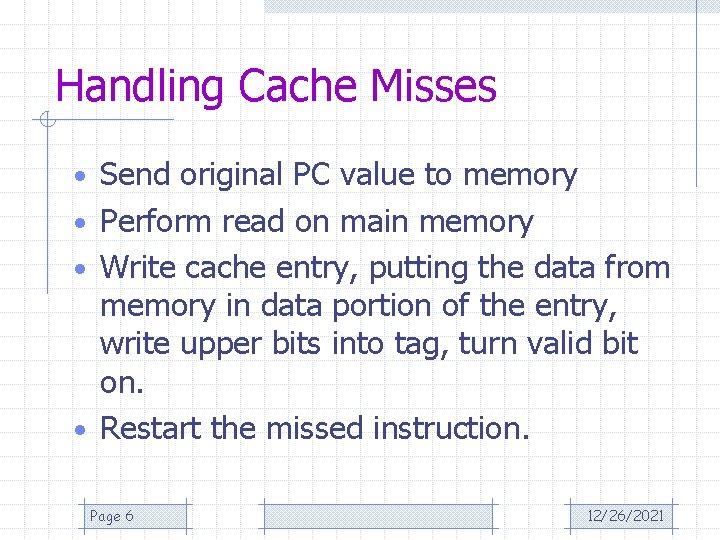
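The two steps translate directly into integer division and modulo. A sketch (the function name `cache_block_number` is hypothetical) that reproduces the worked example with 4 bytes per block and 8 cache blocks:

```python
def cache_block_number(address: int, bytes_per_block: int, blocks_in_cache: int) -> int:
    """Direct-mapped placement: byte address -> cache block number."""
    block_in_memory = address // bytes_per_block  # Floor(Address / #bytes per block)
    return block_in_memory % blocks_in_cache      # Block# in memory % #blocks in cache


print(cache_block_number(457, 4, 8))  # -> 2
```

Python's `//` is floor division, which matches Floor() for non-negative addresses.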

Handling Cache Misses
• Send the original PC value (the address of the missed instruction) to memory.
• Perform a read on main memory and wait for it to complete.
• Write the cache entry: put the data from memory in the data portion of the entry, write the upper bits of the address into the tag field, and turn the valid bit on.
• Restart the missed instruction.
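A toy model of these steps, assuming a word-addressed, direct-mapped cache with one-word blocks, where the address passed to `read` plays the role of the PC (`DirectMappedCache`, `read`, and `misses` are hypothetical names). On a miss it reads "memory", fills the data, tag, and valid bit, then restarts the access, which now hits:

```python
from typing import List


class DirectMappedCache:
    """Word-addressed direct-mapped cache with one word per block (an assumption)."""

    def __init__(self, num_blocks: int, memory: List[int]) -> None:
        self.num_blocks = num_blocks
        self.memory = memory  # stands in for main memory
        self.valid = [False] * num_blocks
        self.tag = [0] * num_blocks
        self.data = [0] * num_blocks
        self.misses = 0

    def read(self, word_address: int) -> int:
        index = word_address % self.num_blocks        # which cache entry
        upper_bits = word_address // self.num_blocks  # what the tag must match
        if self.valid[index] and self.tag[index] == upper_bits:
            return self.data[index]  # hit
        self.misses += 1
        # Send the address to memory and perform the read.
        value = self.memory[word_address]
        # Write the cache entry: data, upper address bits into the tag, valid bit on.
        self.data[index] = value
        self.tag[index] = upper_bits
        self.valid[index] = True
        # Restart the access; it now hits.
        return self.read(word_address)
```

With 8 entries, reading address 9 and then 17 causes two misses, because both map to entry 1 and the second read replaces the first block's tag.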

Handling Writes
• Write-through: a scheme in which writes always update both the cache and the memory, ensuring that data is always consistent between the two.
• Write-back: a scheme that handles writes by updating values only in the block in the cache, then writing the modified block to main memory when the block is replaced.
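A sketch contrasting the two policies by counting how many writes reach memory. It assumes a direct-mapped cache with one-word blocks, write-allocate on a write miss, and a dirty bit to mark modified blocks under write-back (`WriteCache` and its members are hypothetical names):

```python
from typing import Dict, List, Optional


class WriteCache:
    """Direct-mapped cache with one-word blocks that counts writes reaching memory.

    Assumptions: write-allocate on a write miss (safe here because a one-word
    block is overwritten in full), and only writes are modeled.
    """

    def __init__(self, num_blocks: int, policy: str) -> None:
        if policy not in ("write-through", "write-back"):
            raise ValueError(f"unknown policy: {policy}")
        self.num_blocks = num_blocks
        self.policy = policy
        self.tags: List[Optional[int]] = [None] * num_blocks
        self.data: List[int] = [0] * num_blocks
        self.dirty: List[bool] = [False] * num_blocks  # only set under write-back
        self.memory: Dict[int, int] = {}               # stands in for main memory
        self.memory_writes = 0

    def _write_memory(self, address: int, value: int) -> None:
        self.memory[address] = value
        self.memory_writes += 1

    def write(self, address: int, value: int) -> None:
        index = address % self.num_blocks
        tag = address // self.num_blocks
        if self.tags[index] != tag:
            # The block is being replaced; a modified (dirty) block is saved first.
            old_tag = self.tags[index]
            if self.dirty[index] and old_tag is not None:
                self._write_memory(old_tag * self.num_blocks + index, self.data[index])
                self.dirty[index] = False
            self.tags[index] = tag
        self.data[index] = value
        if self.policy == "write-through":
            self._write_memory(address, value)  # cache and memory updated together
        else:
            self.dirty[index] = True            # memory updated only on replacement
```

Writing address 5 three times costs three memory writes under write-through but none under write-back; under write-back, the single memory write happens only when a write to address 13 replaces that block.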

Example
• SPEC 2000
• CPI = 1.0 with no misses
• Each miss incurs 100 extra cycles; a miss occurs 10% of the time (0.1 misses per instruction).
• Average CPI: 1 + 100 × 0.1 = 1 + 10 = 11 cycles per instruction (not good!)
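The same arithmetic as a small function, assuming the miss rate is expressed as misses per instruction (the name `average_cpi` is hypothetical):

```python
def average_cpi(base_cpi: float, miss_rate: float, miss_penalty: float) -> float:
    """Average CPI = base CPI + (misses per instruction) * (miss penalty in cycles)."""
    return base_cpi + miss_rate * miss_penalty


print(average_cpi(1.0, 0.1, 100))  # -> 11.0
```

At 11 cycles per instruction, the processor spends 10 of every 11 cycles waiting on misses.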

An Example Cache: The Intrinsity FastMATH processor
• 12-stage pipeline
• When operating at peak speed, the processor can request both an instruction and a data word on every clock.
• Separate instruction and data caches are used.
• Each cache is 16 KB (4K words) with 16-word blocks.

Fig. 7.9
• 256 blocks with 16 words per block.
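The block count and the address fields follow from the cache size. A sketch assuming 32-bit byte addresses and 4-byte words (the function `field_widths` is hypothetical); for a 16 KB cache with 16-word blocks it yields 256 blocks, an 8-bit index, a 4-bit block offset, a 2-bit byte offset, and an 18-bit tag:

```python
from typing import Dict


def field_widths(cache_bytes: int, words_per_block: int,
                 bytes_per_word: int = 4, address_bits: int = 32) -> Dict[str, int]:
    """Block count and address field widths for a direct-mapped cache.

    Assumes power-of-two sizes, so (n - 1).bit_length() equals log2(n).
    """
    blocks = cache_bytes // (words_per_block * bytes_per_word)
    byte_offset = (bytes_per_word - 1).bit_length()   # selects the byte within a word
    block_offset = (words_per_block - 1).bit_length() # selects the word within a block
    index = (blocks - 1).bit_length()                 # selects the block
    tag = address_bits - index - block_offset - byte_offset
    return {"blocks": blocks, "tag": tag, "index": index,
            "block_offset": block_offset, "byte_offset": byte_offset}


print(field_widths(16 * 1024, 16))
```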
