
Explainable Recommendation: Perspectives from Knowledge Graph Reasoning and Natural Language Generation
Xiting Wang, Xing Xie
Microsoft Research Asia, Social Computing Group

Outline
• Basics
  • Definition and goals
  • Basic types of explanations and applications
• Recent advances
  • Structured: graph-based reasoning
  • Unstructured: natural language generation
• Challenges and future directions

Explainable Recommendation: Definition
• A recommender system that explains why an item is recommended
• Comparison with traditional recommendation
  • Traditional recommendation: what, who, when, where
  • Explainable recommendation: why
Example
• Traditional recommendation: 1. Fog Harbor Fish House 2. Thy's Noodles 3. Got Harvest …
• Explainable recommendation: Fog Harbor Fish House. "Their tan noodles are made of magic. The chili oil is really appetizing. Prices are reasonable. Similar to Thy's Noodles, which you may like."

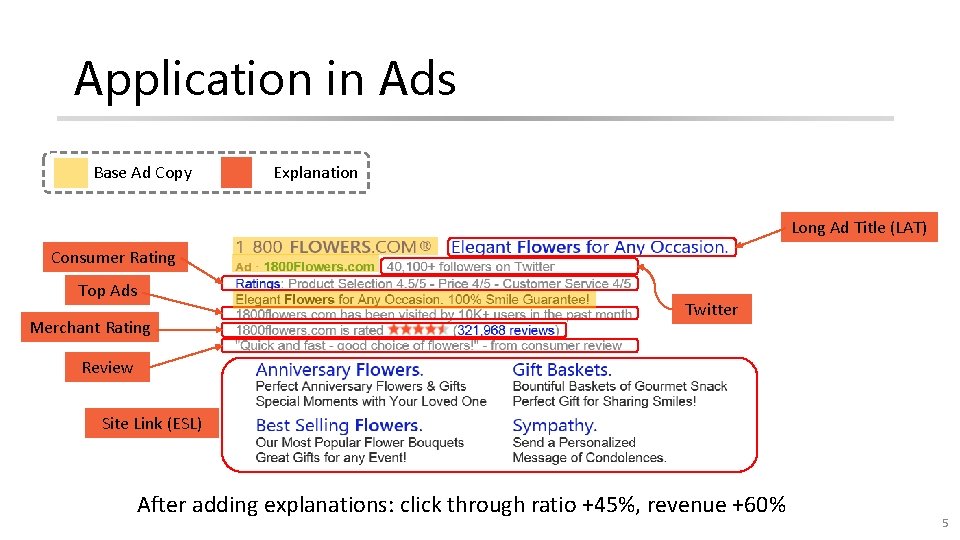

Application in Ads

Application in Ads
• Base ad copy vs. ad copy with explanations (figure labels: Base Ad Copy, Explanation, Long Ad Title (LAT), Consumer Rating, Top Ads, Merchant Rating, Twitter, Review, Site Link (ESL))
• After adding explanations: click-through rate +45%, revenue +60%

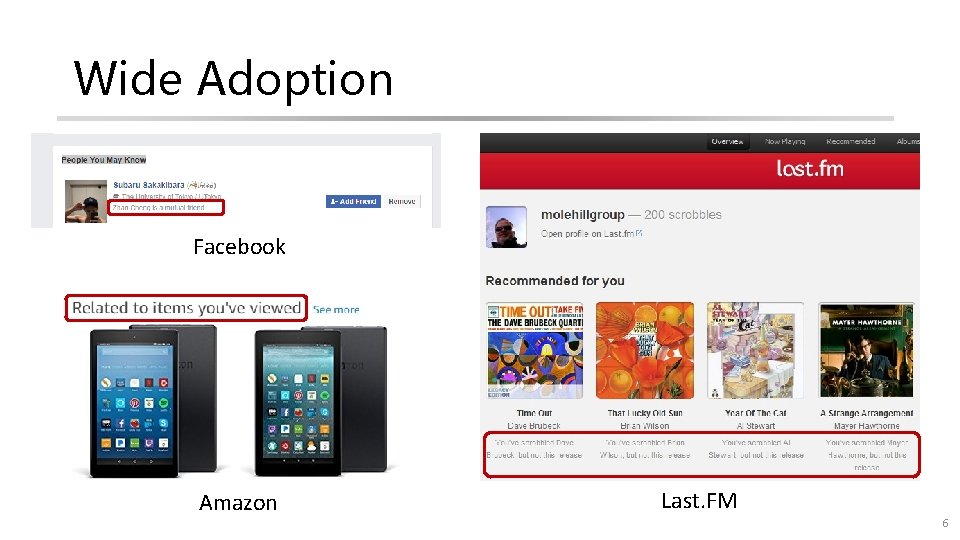

Wide Adoption: Facebook, Amazon, Last.fm

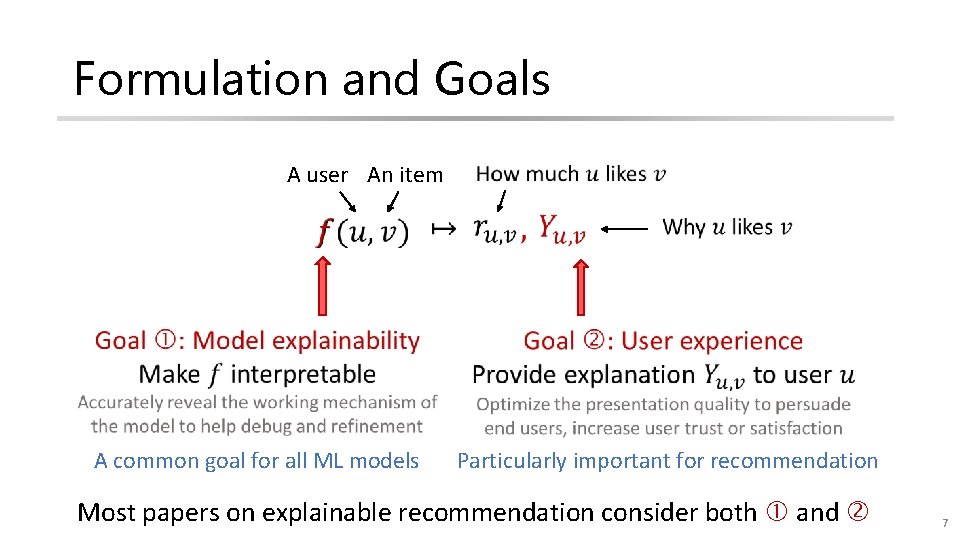

Formulation and Goals
• Input: a user and an item
• Goal 1: a common goal for all ML models
• Goal 2: particularly important for recommendation
• Most papers on explainable recommendation consider both goals

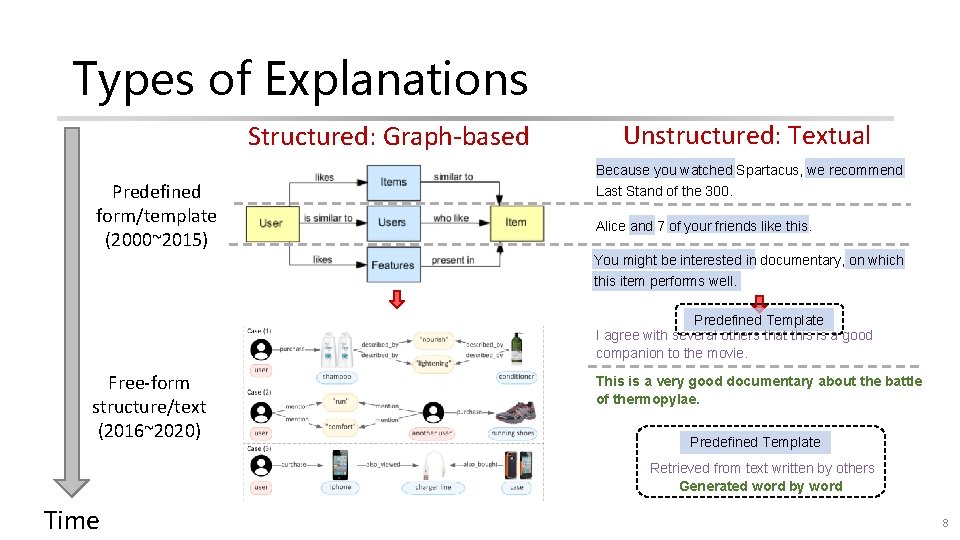

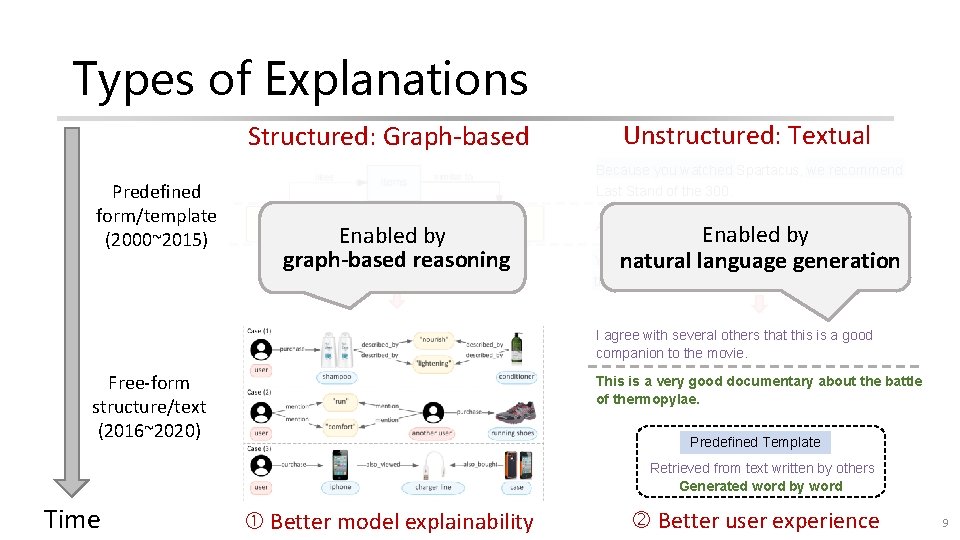


Types of Explanations
• Structured (graph-based) explanations, enabled by graph-based reasoning
• Unstructured (textual) explanations, enabled by natural language generation
• Predefined form/template (2000~2015), e.g.:
  • "Because you watched Spartacus, we recommend Last Stand of the 300."
  • "Alice and 7 of your friends like this."
  • "You might be interested in documentary, on which this item performs well."
• Free-form structure/text (2016~2020), e.g.:
  • "I agree with several others that this is a good companion to the movie." (retrieved from text written by others)
  • "This is a very good documentary about the battle of Thermopylae." (generated word by word)
• Over time, explanations move toward better model explainability and better user experience


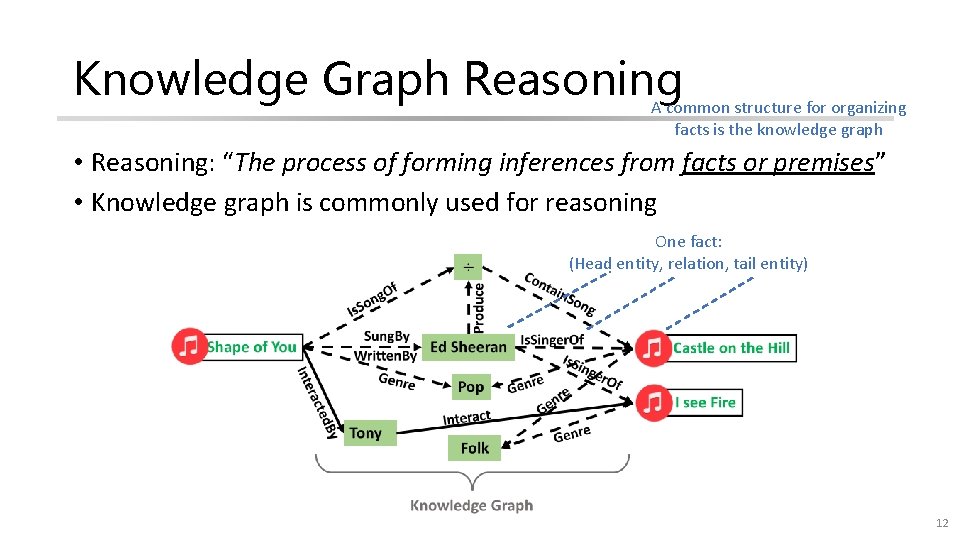

Knowledge Graph Reasoning
• A knowledge graph (KG) is a common structure for organizing facts
• One fact is a triple: (head entity, relation, tail entity)
• Reasoning: "the process of forming inferences from facts or premises"
• Knowledge graphs are commonly used for reasoning
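A knowledge graph stored as (head entity, relation, tail entity) triples can be indexed by head entity so that facts are cheap to look up. The sketch below is a minimal illustration only; the entity and relation names (`watched`, `has_genre`, `is_a`) and the helper functions are hypothetical and simply echo the examples used in these slides.

```python
from collections import defaultdict

# Hypothetical facts in (head entity, relation, tail entity) form.
TRIPLES = [
    ("Alice", "watched", "Spartacus"),
    ("Spartacus", "has_genre", "history"),
    ("Last Stand of the 300", "has_genre", "history"),
    ("Last Stand of the 300", "is_a", "documentary"),
]


def build_index(triples):
    """Group facts by head entity: head -> [(relation, tail), ...]."""
    index = defaultdict(list)
    for head, relation, tail in triples:
        index[head].append((relation, tail))
    return index


def tails(index, head, relation):
    """Return every tail t such that (head, relation, t) is a stored fact."""
    return [t for r, t in index.get(head, []) if r == relation]


index = build_index(TRIPLES)
print(tails(index, "Spartacus", "has_genre"))
```

Reasoning then amounts to chaining such lookups, e.g., following `has_genre` from Spartacus to a genre and back to other items of that genre.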

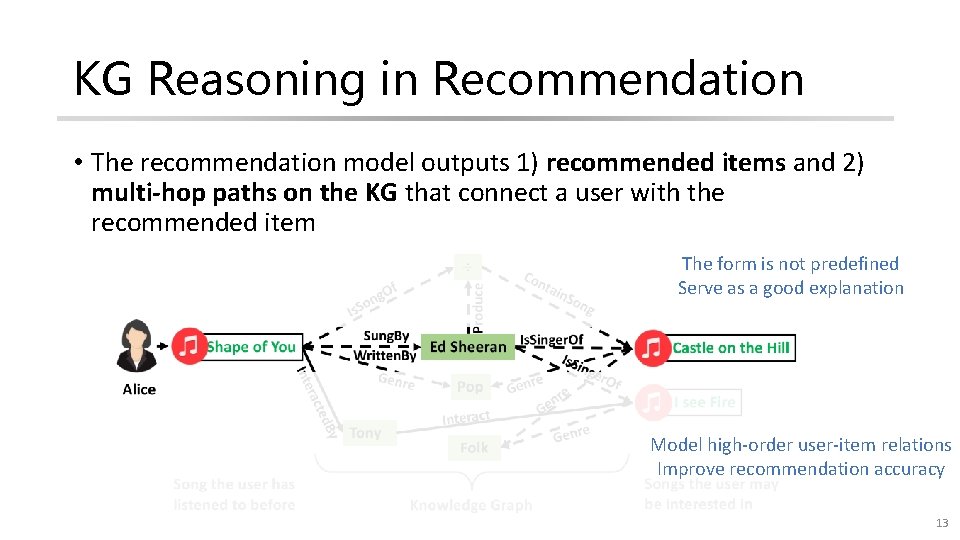

KG Reasoning in Recommendation
• The recommendation model outputs 1) recommended items and 2) multi-hop paths on the KG that connect a user with each recommended item
  • The form of a path is not predefined, so it serves as a good explanation
  • Paths model high-order user-item relations, which improves recommendation accuracy
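To make "multi-hop paths that connect a user with a recommended item" concrete, here is a minimal sketch that enumerates all simple paths up to a hop limit with depth-first search and renders each one as a readable explanation. It assumes a tiny hypothetical KG, and the `~relation` prefix for inverse edges is an illustrative convention, not one taken from the slides. Exhaustive enumeration like this grows quickly with the number of hops, which is why path finding is the hard part.

```python
from collections import defaultdict

# Hypothetical KG in (head, relation, tail) form.
TRIPLES = [
    ("Alice", "watched", "Spartacus"),
    ("Spartacus", "has_genre", "history"),
    ("Last Stand of the 300", "has_genre", "history"),
    ("Bob", "watched", "Spartacus"),
    ("Bob", "watched", "Last Stand of the 300"),
]


def build_graph(triples):
    """Adjacency list with an inverse edge ("~relation") for every fact."""
    graph = defaultdict(list)
    for h, r, t in triples:
        graph[h].append((r, t))
        graph[t].append(("~" + r, h))
    return graph


def find_paths(graph, source, target, max_hops):
    """Depth-first enumeration of simple paths with at most max_hops edges."""
    paths = []

    def dfs(node, path, visited):
        if node == target and path:
            paths.append(list(path))
            return
        if len(path) == max_hops:
            return
        for relation, nxt in graph[node]:
            if nxt not in visited:
                path.append((node, relation, nxt))
                visited.add(nxt)
                dfs(nxt, path, visited)
                visited.remove(nxt)
                path.pop()

    dfs(source, [], {source})
    return paths


def render(path):
    """Turn a list of (head, relation, tail) hops into an explanation string."""
    return " -> ".join([path[0][0]] + [f"[{r}] {t}" for _, r, t in path])


graph = build_graph(TRIPLES)
for p in find_paths(graph, "Alice", "Last Stand of the 300", max_hops=3):
    print(render(p))
```

With `max_hops=3` this finds two paths (via the shared genre and via a similar user); with `max_hops=2` it finds none.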

KG Reasoning in Recommendation
• Major challenge: a combinatorial problem that consists of two steps
  • Scoring: scoring a given item according to user preferences
  • Path finding: identifying feasible paths by exploring the KG (NP-hard)
• Existing methods fail to handle path finding efficiently

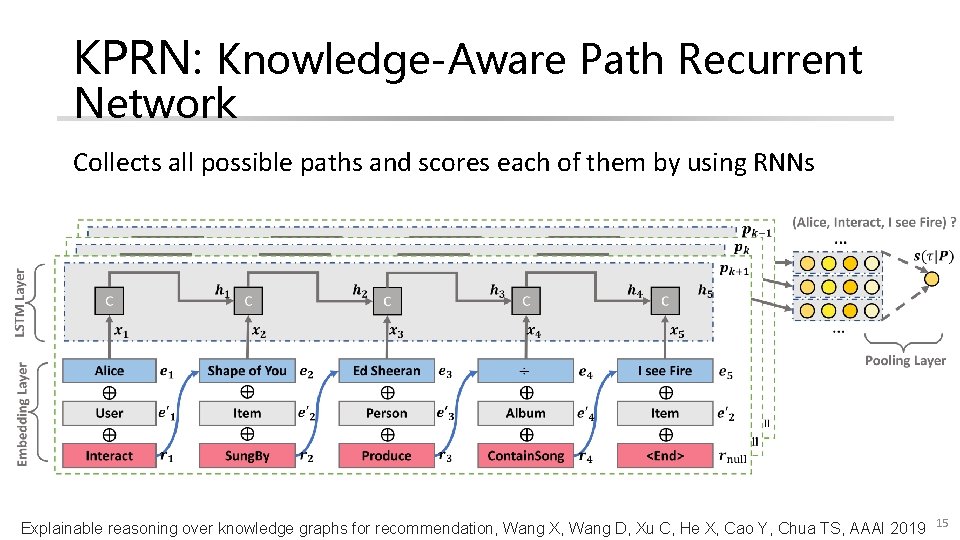

KPRN: Knowledge-Aware Path Recurrent Network
• Collects all possible paths between a user and an item and scores each of them with an RNN
Explainable reasoning over knowledge graphs for recommendation, Wang X, Wang D, Xu C, He X, Cao Y, Chua TS, AAAI 2019
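The idea of "collect paths, score each with an RNN" can be sketched as follows. This is a simplified, untrained illustration under stated assumptions: a plain tanh RNN stands in for KPRN's LSTM, embeddings and weights are random, the `"start"` relation on the first step is a hypothetical placeholder, and the per-path scores are combined with a log-sum-exp (soft maximum) pooling, which we assume here rather than quote from the paper.

```python
import math
import random

DIM = 4
rng = random.Random(0)


def rand_vec(n):
    return [rng.uniform(-0.5, 0.5) for _ in range(n)]


def matvec(matrix, vec):
    return [sum(a * b for a, b in zip(row, vec)) for row in matrix]


class PathScorer:
    """Untrained stand-in for a path RNN: a tanh RNN reads one
    (entity, relation) step at a time; a linear layer scores the last state."""

    def __init__(self):
        self.emb = {}
        self.w_in = [rand_vec(2 * DIM) for _ in range(DIM)]  # input weights
        self.w_h = [rand_vec(DIM) for _ in range(DIM)]       # recurrent weights
        self.w_out = rand_vec(DIM)                           # state -> score

    def embed(self, symbol):
        # Lazily create a random embedding for each entity/relation name.
        if symbol not in self.emb:
            self.emb[symbol] = rand_vec(DIM)
        return self.emb[symbol]

    def score_path(self, steps):
        h = [0.0] * DIM
        for entity, relation in steps:
            x = self.embed(entity) + self.embed(relation)  # list concatenation
            pre = [a + b for a, b in zip(matvec(self.w_in, x), matvec(self.w_h, h))]
            h = [math.tanh(v) for v in pre]
        return sum(w * v for w, v in zip(self.w_out, h))


def pool(scores, gamma=1.0):
    """Log-sum-exp pooling: gamma * log(sum(exp(s / gamma))), a soft maximum."""
    m = max(s / gamma for s in scores)
    return gamma * (m + math.log(sum(math.exp(s / gamma - m) for s in scores)))


scorer = PathScorer()
paths = [
    [("Alice", "start"), ("Spartacus", "watched"), ("history", "has_genre"),
     ("Last Stand of the 300", "~has_genre")],
    [("Alice", "start"), ("Spartacus", "watched"), ("Bob", "~watched"),
     ("Last Stand of the 300", "watched")],
]
path_scores = [scorer.score_path(p) for p in paths]
print(path_scores, pool(path_scores))
```

The soft maximum lets the highest-scoring path dominate the user-item score while still passing gradient to the others, and the highest-scoring path doubles as the explanation.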

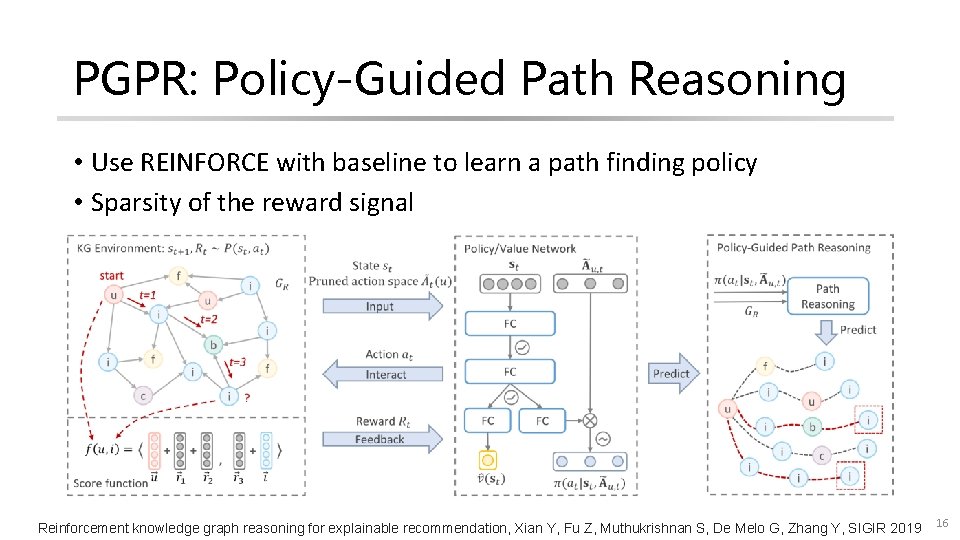

PGPR: Policy-Guided Path Reasoning
• Uses REINFORCE with baseline to learn a path-finding policy
• Limitation: the reward signal is sparse
Reinforcement knowledge graph reasoning for explainable recommendation, Xian Y, Fu Z, Muthukrishnan S, De Melo G, Zhang Y, SIGIR 2019
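A minimal sketch of REINFORCE with a baseline for learning a path-finding policy is shown below. It is a toy under explicit assumptions, not PGPR itself: the KG, node names, tabular softmax policy, moving-average baseline, and binary terminal reward are all hypothetical choices. The binary reward illustrates the sparsity problem: most sampled paths end at the wrong item and earn nothing.

```python
import math
import random

# Hypothetical toy KG: node -> list of (relation, next node).
GRAPH = {
    "u1": [("watched", "i1"), ("mentioned", "cheap")],
    "i1": [("has_genre", "documentary"), ("watched_by", "u2")],
    "cheap": [("describes", "i2"), ("describes", "i3")],
}


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def rollout(logits, start, hops, rng):
    """Sample a path by following the current policy for up to `hops` steps."""
    node, trajectory = start, []
    for _ in range(hops):
        if node not in GRAPH:
            break
        probs = softmax(logits[node])
        action = rng.choices(range(len(probs)), weights=probs)[0]
        trajectory.append((node, action, probs))
        node = GRAPH[node][action][1]
    return node, trajectory


def train(start="u1", target="i2", hops=2, episodes=500, lr=0.5, seed=0):
    rng = random.Random(seed)
    logits = {node: [0.0] * len(edges) for node, edges in GRAPH.items()}
    baseline = 0.0
    for _ in range(episodes):
        end, trajectory = rollout(logits, start, hops, rng)
        reward = 1.0 if end == target else 0.0  # sparse, terminal-only reward
        advantage = reward - baseline
        for node, action, probs in trajectory:
            for k, p in enumerate(probs):
                # d log pi(action) / d logit_k = 1[k == action] - p_k
                logits[node][k] += lr * advantage * ((1.0 if k == action else 0.0) - p)
        baseline = 0.9 * baseline + 0.1 * reward  # moving-average baseline
    return logits


def greedy_path(logits, start, hops):
    node, path = start, [start]
    for _ in range(hops):
        if node not in GRAPH:
            break
        probs = softmax(logits[node])
        node = GRAPH[node][probs.index(max(probs))][1]
        path.append(node)
    return path


logits = train()
print(greedy_path(logits, "u1", 2))
```

Subtracting the baseline does not change the expected gradient but reduces its variance; it also means failed episodes actively push probability away from the actions that led to them.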

KG Reasoning for Recommendation
• Major challenge: an NP-hard problem that consists of two steps
  • Scoring: scoring a given candidate item according to user preferences
  • Path finding: identifying feasible candidate paths by exploring the KG
• Existing methods lack supervision in path finding
  • Convergence issue: it is difficult for existing methods to converge quickly to a satisfactory solution
  • Explainability issue: since existing methods optimize only recommendation accuracy, there is no guarantee that the discovered paths are interpretable

43rd International ACM SIGIR Conference on Research and Development in Information Retrieval (SIGIR 2020)
Leveraging Demonstrations for Reinforcement Recommendation Reasoning over Knowledge Graphs
Kangzhi Zhao¹, Xiting Wang²*, Yuren Zhang³, Li Zhao², Zheng Liu², Chunxiao Xing¹ and Xing Xie²
¹Tsinghua University, ²Microsoft Research Asia, ³University of Science and Technology of China
*Corresponding author

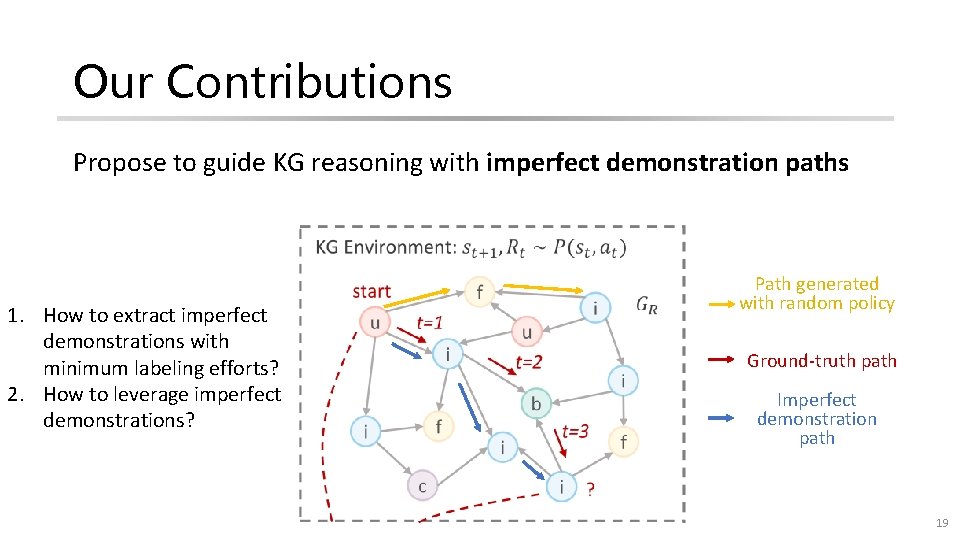

Our Contributions
• We propose to guide KG reasoning with imperfect demonstration paths
  1. How to extract imperfect demonstrations with minimum labeling effort?
  2. How to leverage imperfect demonstrations?
(Figure legend: path generated with a random policy; ground-truth path; imperfect demonstration path)

Demonstration Extraction
Three desirable properties for demonstrations:
• P1: Accessibility. The demonstrations can be obtained with minimum labeling effort.
• P2: Explainability. The demonstrations are more interpretable than randomly sampled paths.
• P3: Accuracy. The demonstrations lead to accurate recommendations, i.e., they connect users with items they interacted with.
Even if the extracted demonstrations are imperfect, we consider them useful if they satisfy these three properties.

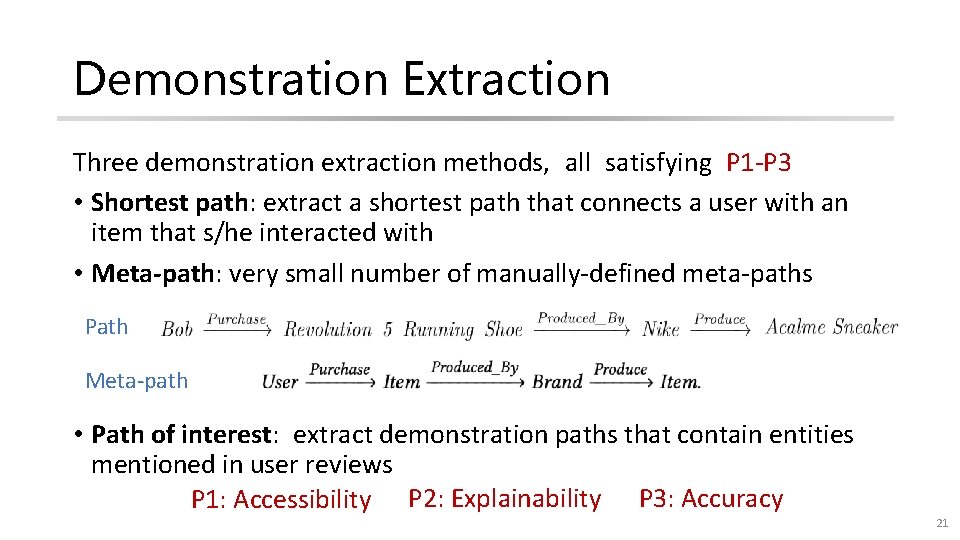

Demonstration Extraction
Three demonstration extraction methods, all satisfying P1-P3 (P1: accessibility, P2: explainability, P3: accuracy):
• Shortest path: extract a shortest path that connects a user with an item that s/he interacted with
• Meta-path: use a very small number of manually defined meta-paths
• Path of interest: extract demonstration paths that contain entities mentioned in user reviews
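The three extraction methods can be sketched on a tiny typed graph. This is an illustrative sketch only: the node names, the edges, and the assumption that user `u1` interacted with item `i2` are hypothetical; node types follow the U (user), I (item), W (word) convention used for the meta-paths in these slides. Shortest paths come from breadth-first search, while meta-path and path-of-interest demonstrations are obtained by filtering candidate paths.

```python
from collections import defaultdict, deque

# Hypothetical typed KG (U: user, I: item, W: word); u1 interacted with i2.
NODE_TYPE = {"u1": "U", "u2": "U", "i1": "I", "i2": "I", "w_spicy": "W"}
EDGES = [("u1", "i1"), ("u2", "i1"), ("u2", "i2"), ("u1", "w_spicy"), ("w_spicy", "i2")]


def build_adjacency(edges):
    adj = defaultdict(list)
    for a, b in edges:
        adj[a].append(b)
        adj[b].append(a)
    return adj


def shortest_path(adj, source, target):
    """Shortest-path demonstration: breadth-first search from user to item."""
    parent = {source: None}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adj[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None


def simple_paths(adj, source, target, max_hops):
    """All cycle-free paths with at most max_hops edges (candidates to filter)."""
    found, stack = [], [[source]]
    while stack:
        path = stack.pop()
        if path[-1] == target:
            found.append(path)
        elif len(path) - 1 < max_hops:
            stack.extend(path + [n] for n in adj[path[-1]] if n not in path)
    return found


def matches_meta_path(path, meta_path):
    """Meta-path demonstration: node types must follow a pattern like "U-I-U-I"."""
    return [NODE_TYPE[n] for n in path] == meta_path.split("-")


def is_path_of_interest(path, review_entities):
    """Path-of-interest demonstration: an intermediate entity appears in the review."""
    return any(n in review_entities for n in path[1:-1])


adj = build_adjacency(EDGES)
candidates = simple_paths(adj, "u1", "i2", max_hops=3)
print(shortest_path(adj, "u1", "i2"))
print([p for p in candidates if matches_meta_path(p, "U-I-U-I")])
print([p for p in candidates if is_path_of_interest(p, {"w_spicy"})])
```

All three need no per-path labeling (P1) and, because they end at an item the user interacted with, satisfy P3; how interpretable they are (P2) depends on the meta-paths and review entities chosen.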

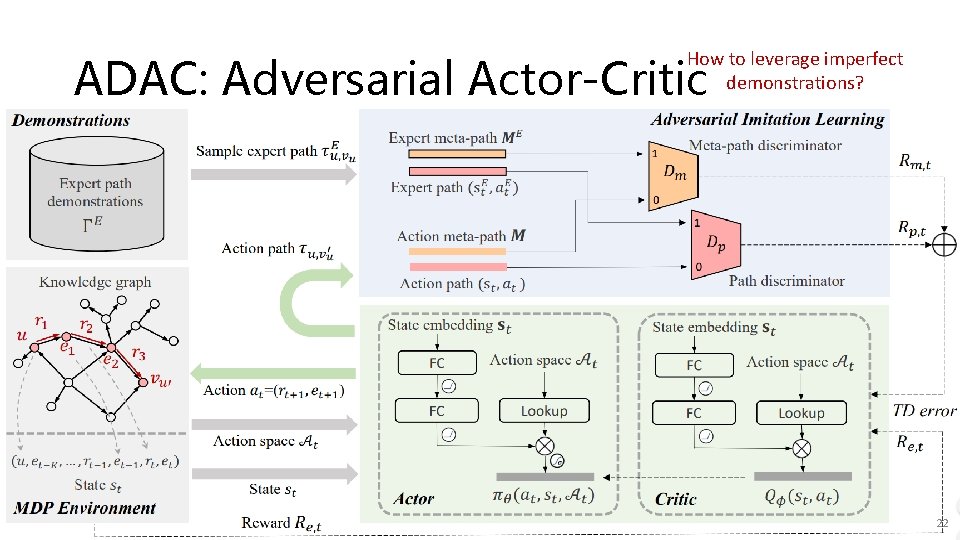

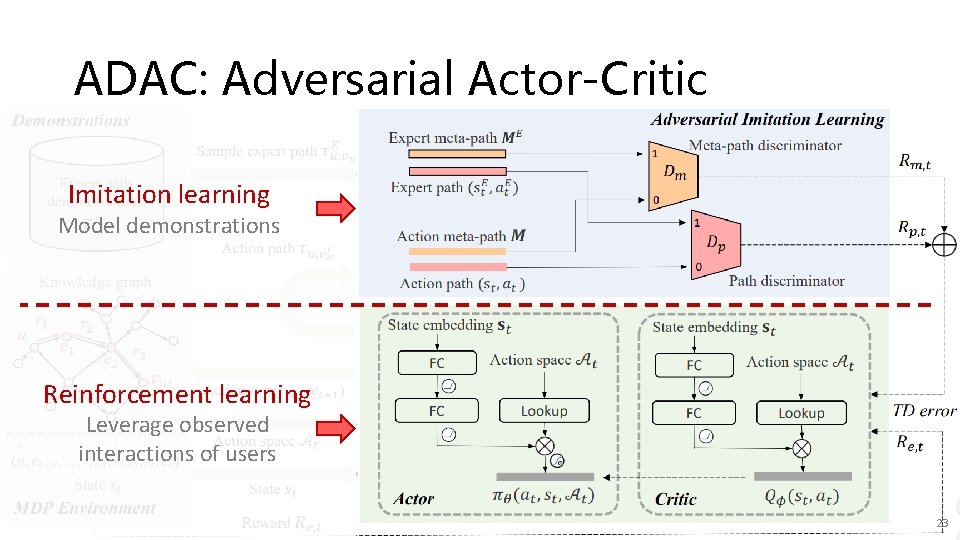

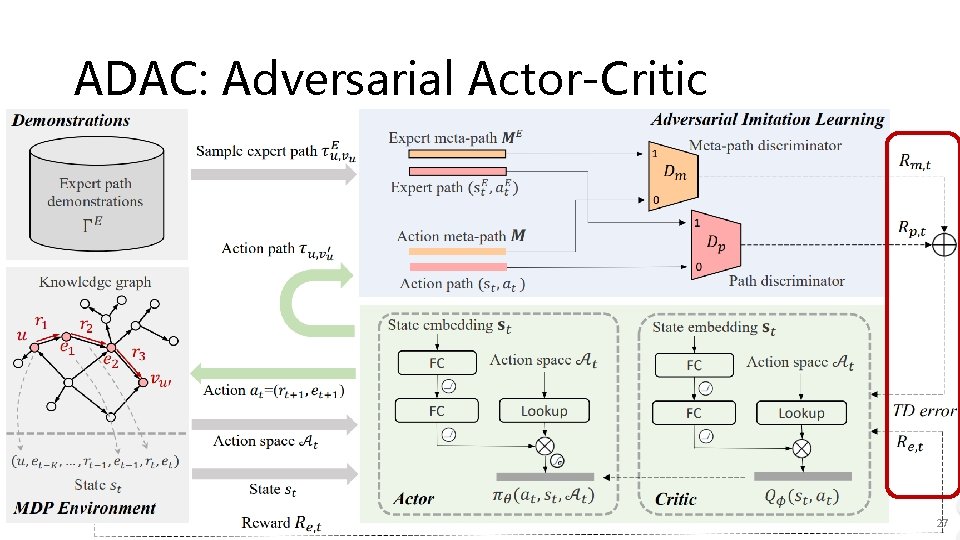

How to leverage imperfect demonstrations?
ADAC: Adversarial Actor-Critic

ADAC: Adversarial Actor-Critic
• Imitation learning: model the demonstrations
• Reinforcement learning: leverage the observed interactions of users

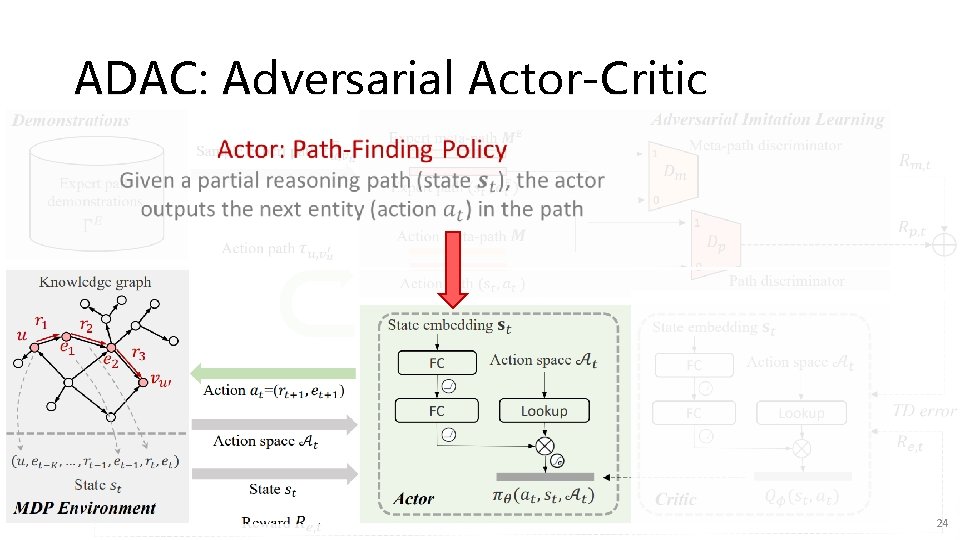

ADAC: Adversarial Actor-Critic

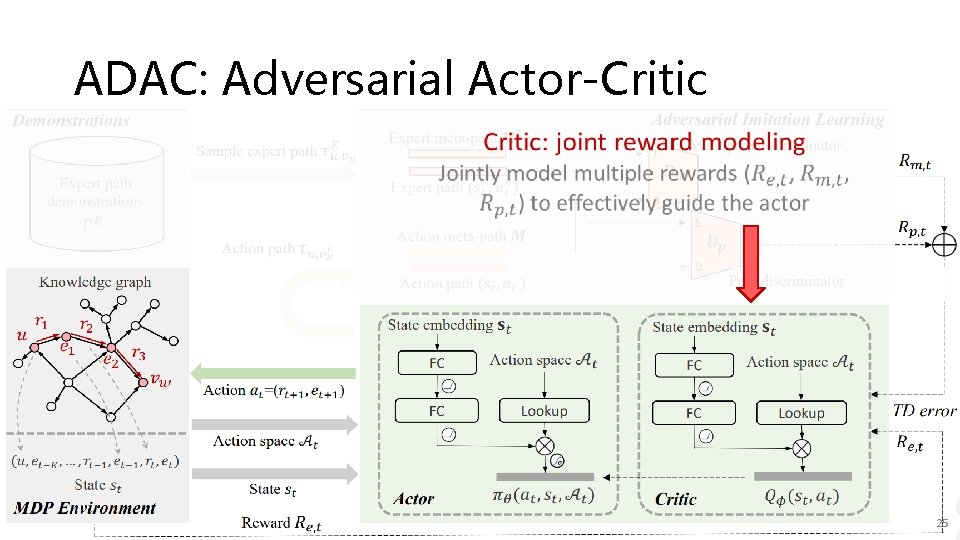

ADAC: Adversarial Actor-Critic

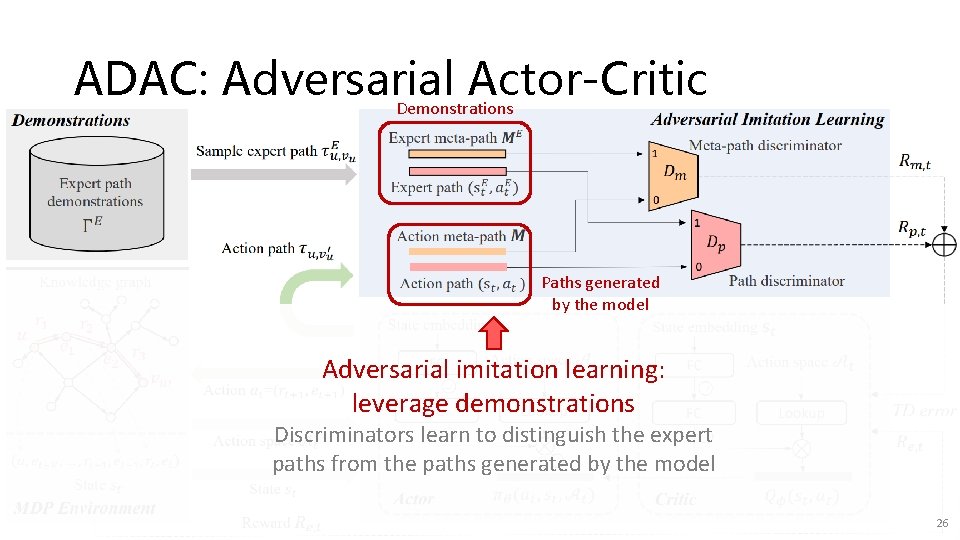

ADAC: Adversarial Actor-Critic
• Adversarial imitation learning leverages the demonstrations
• Discriminators learn to distinguish the expert (demonstration) paths from the paths generated by the model
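A discriminator that separates demonstration paths from model-generated paths, and the imitation reward derived from it, can be sketched as follows. This is a hypothetical simplification: the slides mention discriminators but not their form, so here a single logistic-regression discriminator over bag-of-relations features is assumed, and the reward `log D(path)` follows the common GAIL-style convention. The relation names and example paths are invented for illustration.

```python
import math

# Hypothetical relation vocabulary used to featurize paths.
RELATIONS = ["purchase", "mention", "described_by", "also_bought"]


def features(relations):
    """Bag-of-relations vector: how often each relation type occurs on the path."""
    return [relations.count(r) for r in RELATIONS]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def discriminate(w, b, relations):
    """D(path): estimated probability that the path is a demonstration."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, features(relations))) + b)


def train_discriminator(expert, generated, epochs=200, lr=0.1):
    """Logistic regression by SGD: label 1 for demonstrations, 0 for model paths."""
    w, b = [0.0] * len(RELATIONS), 0.0
    data = [(p, 1.0) for p in expert] + [(p, 0.0) for p in generated]
    for _ in range(epochs):
        for relations, label in data:
            error = label - discriminate(w, b, relations)
            x = features(relations)
            w = [wi + lr * error * xi for wi, xi in zip(w, x)]
            b += lr * error
    return w, b


def imitation_reward(w, b, relations):
    """GAIL-style reward log D(path): higher when a path looks like a demonstration."""
    return math.log(discriminate(w, b, relations) + 1e-8)


expert = [["purchase", "mention", "described_by"], ["mention", "described_by"]]
generated = [["purchase", "also_bought", "also_bought"], ["also_bought", "also_bought"]]
w, b = train_discriminator(expert, generated)
print(imitation_reward(w, b, ["mention", "described_by"]),
      imitation_reward(w, b, ["also_bought", "also_bought"]))
```

In an actor-critic setup this imitation reward would be combined with the reinforcement signal from observed interactions; how ADAC weights the two is not stated on these slides.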

ADAC: Adversarial Actor-Critic

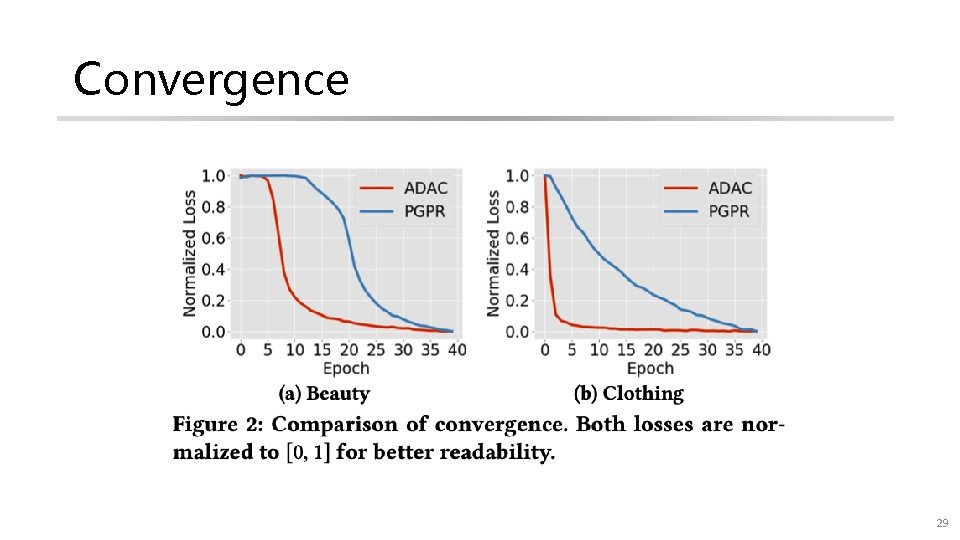

Convergence

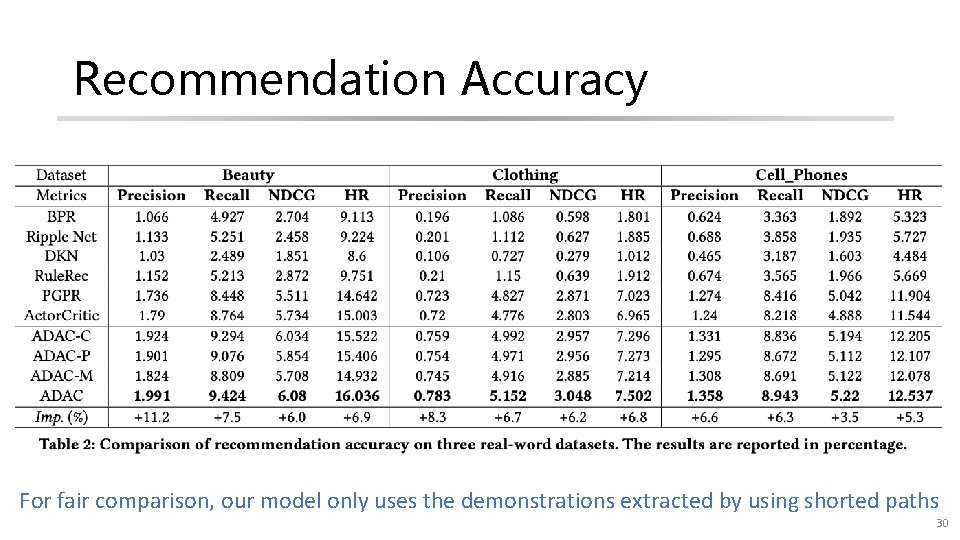

Recommendation Accuracy
For fair comparison, our model only uses the demonstrations extracted with shortest paths.

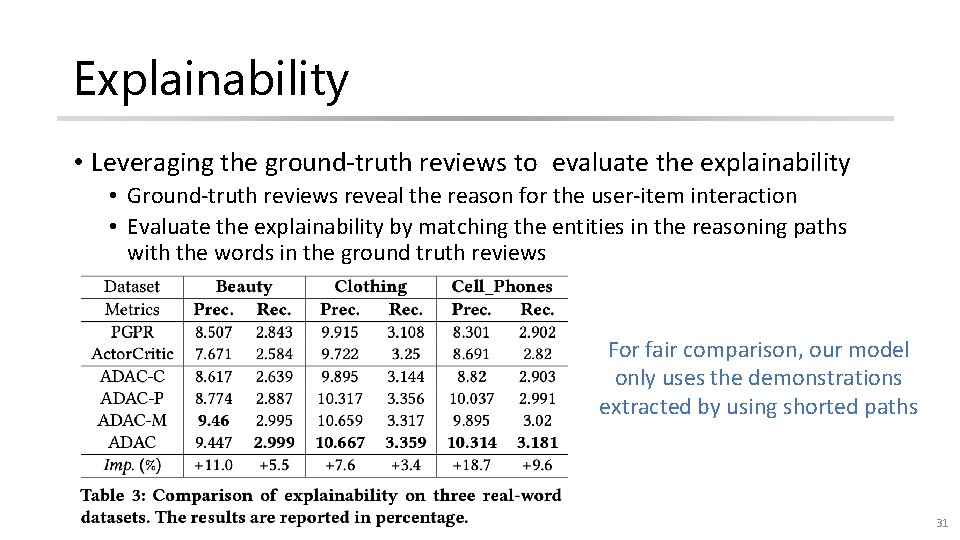

Explainability
• We leverage the ground-truth reviews to evaluate explainability
  • Ground-truth reviews reveal the reason for the user-item interaction
  • Explainability is evaluated by matching the entities in the reasoning paths with the words in the ground-truth reviews
• For fair comparison, our model only uses the demonstrations extracted with shortest paths
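One simple instance of "matching the entities in the reasoning paths with the words in the ground-truth reviews" is the fraction of path entities that occur in the review. The sketch below assumes single-word entities and a naive tokenizer; the function name and the exact metric are hypothetical, and the paper's measure may differ (e.g., precision/recall-style variants).

```python
import re


def path_review_overlap(path_entities, review):
    """Fraction of path entities that appear as words in the ground-truth review."""
    words = set(re.findall(r"[a-z0-9']+", review.lower()))
    entities = [e.lower() for e in path_entities]
    if not entities:
        return 0.0
    return sum(e in words for e in entities) / len(entities)


review = "The noodles are spicy and the service was quick."
print(path_review_overlap(["spicy", "noodles", "cheap"], review))
```

Averaging this score over all test user-item pairs gives a corpus-level explainability number.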

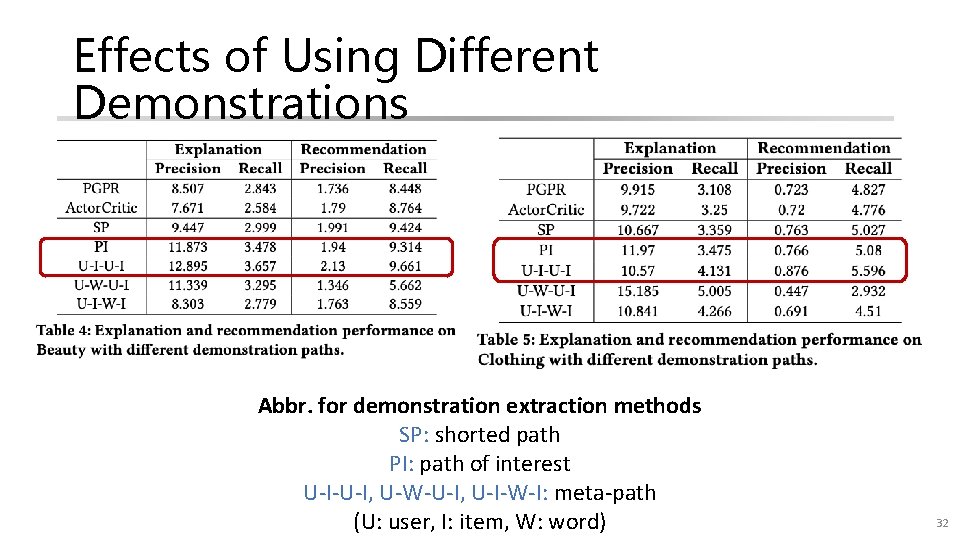

Effects of Using Different Demonstrations
Abbreviations for demonstration extraction methods:
• SP: shortest path
• PI: path of interest
• U-I-U-I, U-W-U-I, U-I-W-I: meta-paths (U: user, I: item, W: word)

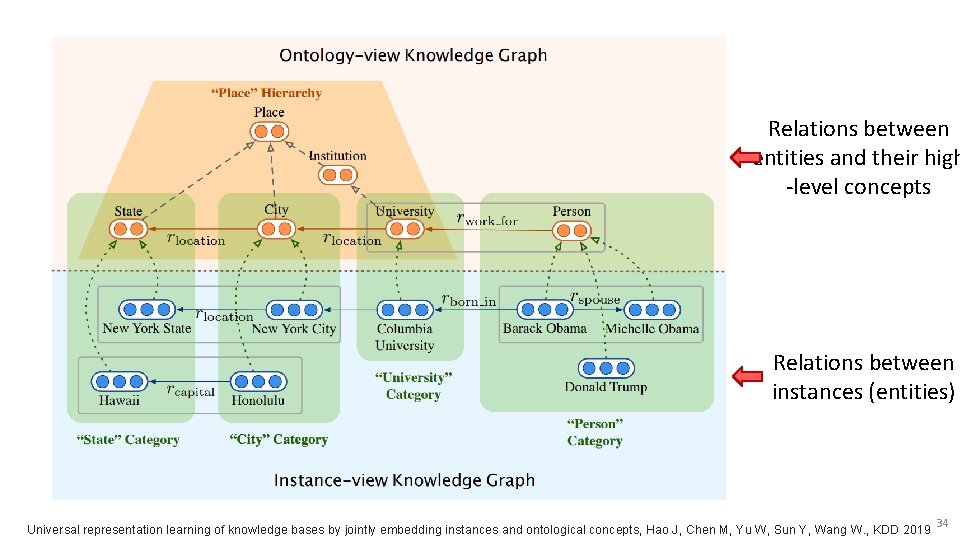

• Relations between entities and their high-level concepts
• Relations between instances (entities)
Universal representation learning of knowledge bases by jointly embedding instances and ontological concepts, Hao J, Chen M, Yu W, Sun Y, Wang W, KDD 2019

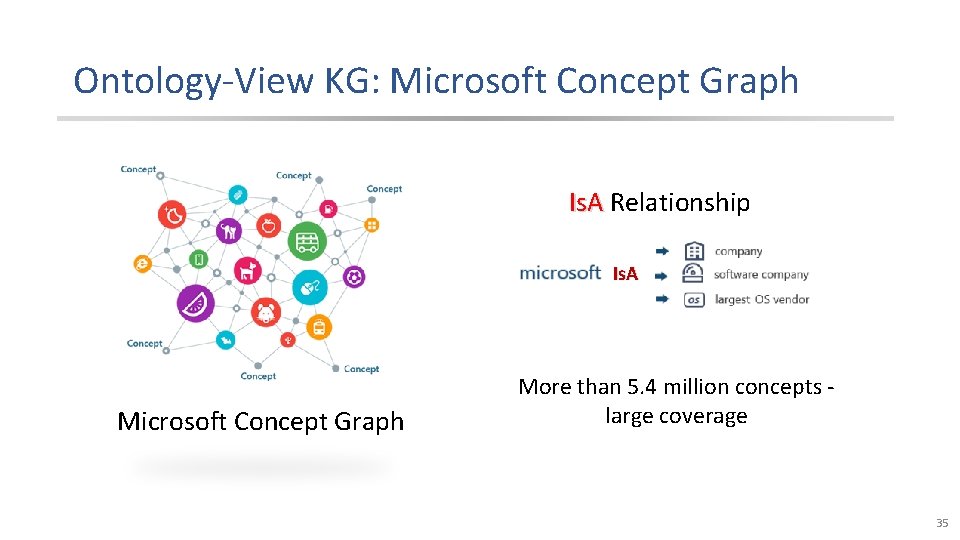

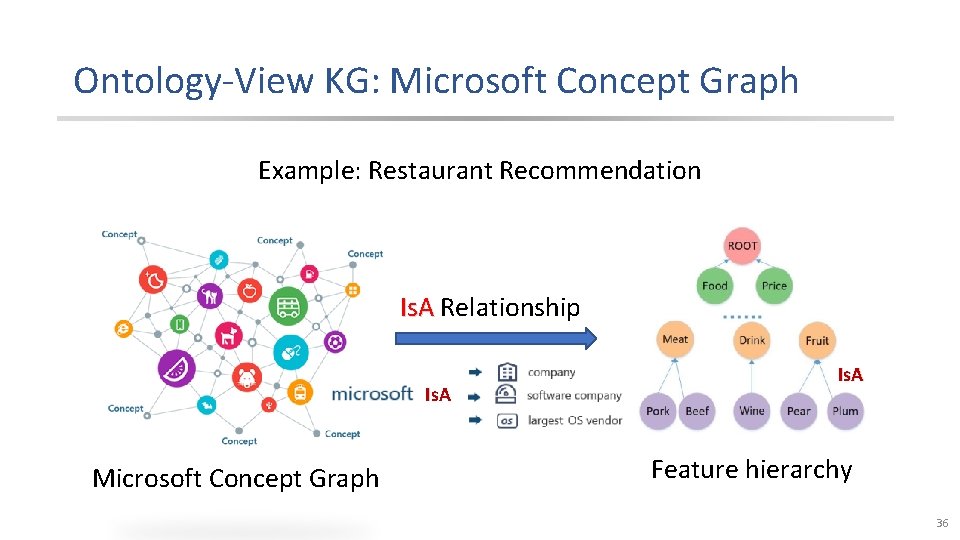

Ontology-View KG: Microsoft Concept Graph
• IsA relationships
• More than 5.4 million concepts: large coverage

Ontology-View KG: Microsoft Concept Graph
Example: restaurant recommendation
• IsA relationships from the Microsoft Concept Graph organize item features into a feature hierarchy
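A feature hierarchy built from IsA relationships can be represented as a child-to-parent map, and leaf-level signals (such as how often a user mentions a feature) can then be rolled up to every ancestor concept. The IsA edges, feature names, and mention counts below are hypothetical examples for restaurant recommendation, not data from the Microsoft Concept Graph.

```python
from collections import Counter

# Hypothetical IsA edges (child, parent) for restaurant features.
IS_A = [
    ("noodles", "food"),
    ("chili oil", "food"),
    ("price", "value"),
    ("food", "restaurant feature"),
    ("value", "restaurant feature"),
]
PARENT = dict(IS_A)


def ancestors(feature):
    """Walk IsA links upward until the root of the hierarchy."""
    chain = []
    while feature in PARENT:
        feature = PARENT[feature]
        chain.append(feature)
    return chain


def aggregate(mentions):
    """Roll leaf-level counts up so each concept covers all features below it."""
    totals = Counter()
    for feature, count in mentions.items():
        totals[feature] += count
        for parent in ancestors(feature):
            totals[parent] += count
    return totals


totals = aggregate({"noodles": 3, "chili oil": 1, "price": 2})
print(totals["food"], totals["value"], totals["restaurant feature"])
```

Because every level of the hierarchy is a named concept, interest scores at any level remain human-readable.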

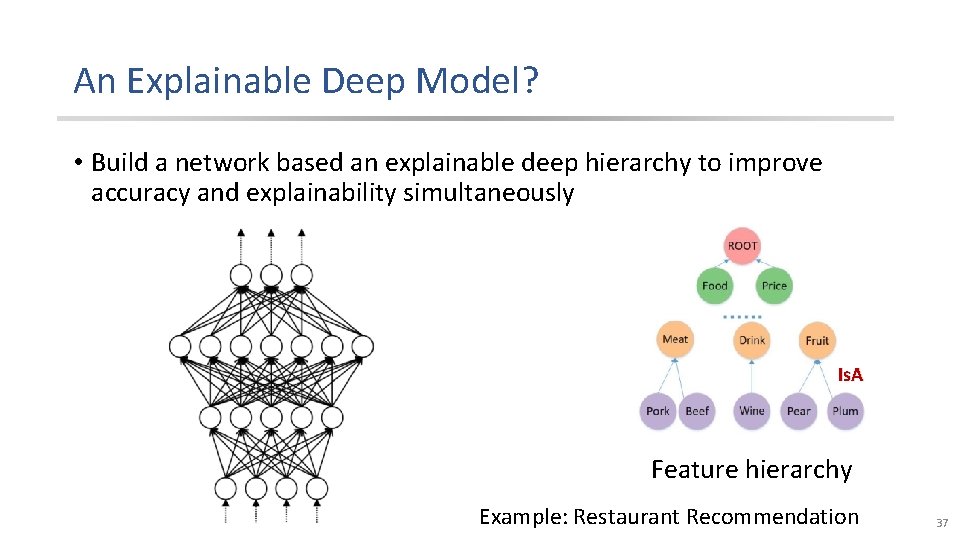

An Explainable Deep Model?
• Build a network based on an explainable deep hierarchy to improve accuracy and explainability simultaneously
(Figure: IsA feature hierarchy; example: restaurant recommendation)

The 33rd AAAI Conference on Artificial Intelligence (AAAI 2019), Honolulu, Hawaii, USA
Explainable Recommendation Through Attentive Multi-View Learning
Jingyue Gao¹,², Xiting Wang²*, Yasha Wang¹, Xing Xie²
¹Peking University, ²Microsoft Research Asia
*Corresponding author

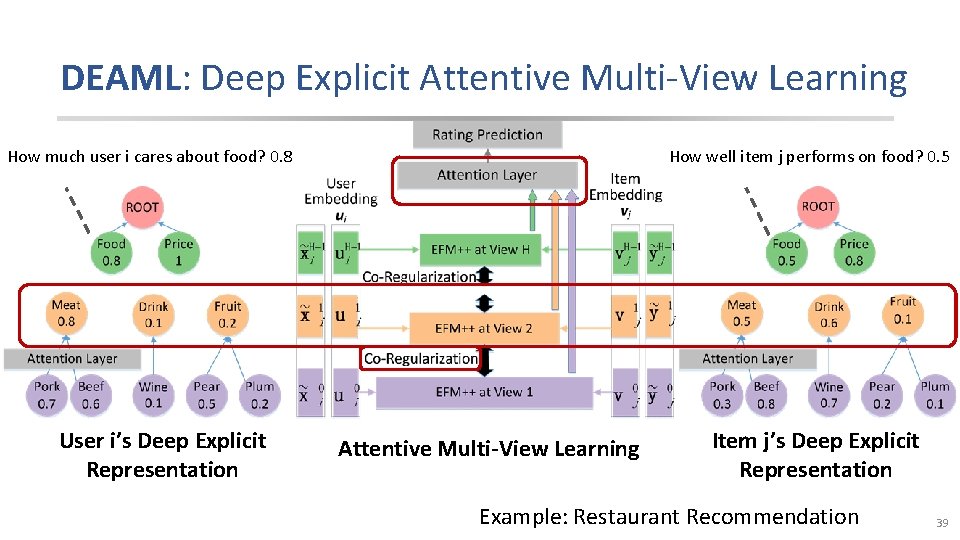

DEAML: Deep Explicit Attentive Multi-View Learning
Example: restaurant recommendation
• User i's deep explicit representation: how much does user i care about food? (e.g., 0.8)
• Item j's deep explicit representation: how well does item j perform on food? (e.g., 0.5)
• Attentive multi-view learning combines the two representations
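The role of the explicit representations can be sketched as follows: each view matches "how much the user cares" against "how well the item performs" feature by feature, attention weights combine the views, and the largest per-feature contribution doubles as an explanation. This is a simplified sketch under stated assumptions, not DEAML's actual architecture: we assume each view is one level of the feature hierarchy, use fixed attention logits instead of learned ones, and invent all names and values except the 0.8/0.5 food example from the slide.

```python
import math


def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def view_score(user_vec, item_vec):
    """Match explicit vectors: sum over features of (user cares) x (item performs)."""
    return sum(w * item_vec.get(f, 0.0) for f, w in user_vec.items())


def predict(user_views, item_views, attention_logits):
    """Combine per-view scores with softmax attention weights."""
    scores = [view_score(u, v) for u, v in zip(user_views, item_views)]
    weights = softmax(attention_logits)
    return sum(w * s for w, s in zip(weights, scores))


def top_reasons(user_vec, item_vec, k=1):
    """Features contributing most to a view's score, usable as an explanation."""
    contrib = {f: w * item_vec.get(f, 0.0) for f, w in user_vec.items()}
    return sorted(contrib.items(), key=lambda kv: kv[1], reverse=True)[:k]


# View 0: coarse features; view 1: finer features lower in the hierarchy.
user_i = [{"food": 0.8, "service": 0.2}, {"noodles": 0.6, "chili oil": 0.4}]
item_j = [{"food": 0.5, "service": 0.9}, {"noodles": 0.7, "chili oil": 0.3}]
print(predict(user_i, item_j, attention_logits=[0.0, 0.0]))
print(top_reasons(user_i[0], item_j[0]))
```

With equal attention the prediction is the mean of the two view scores (0.58 and 0.54), and food (0.8 × 0.5 = 0.4) is the strongest reason in the coarse view.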

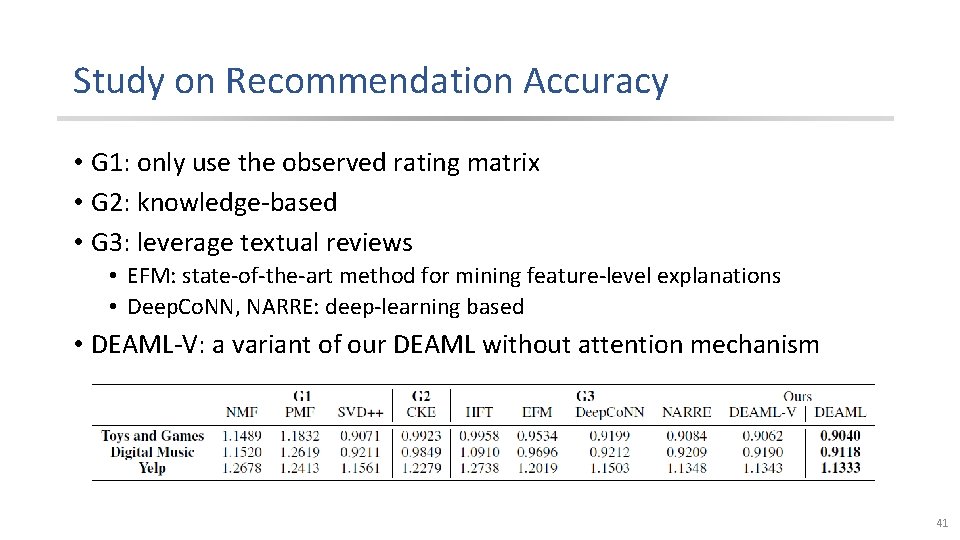

Study on Recommendation Accuracy
• G1: methods that only use the observed rating matrix
• G2: knowledge-based methods
• G3: methods that leverage textual reviews
• EFM: state-of-the-art method for mining feature-level explanations
• DeepCoNN, NARRE: deep-learning-based methods
• DEAML-V: a variant of our DEAML without the attention mechanism

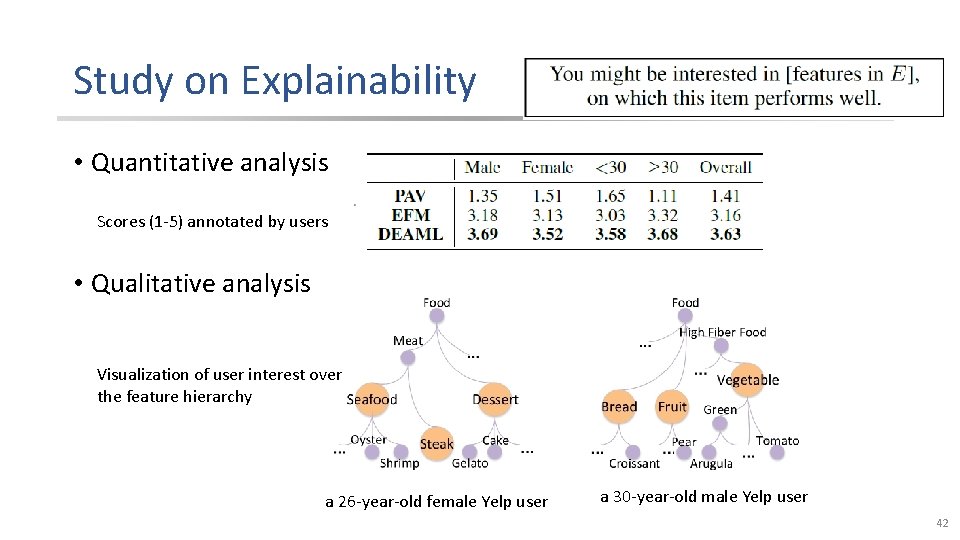

Study on Explainability
• Quantitative analysis: scores (1-5) annotated by users
• Qualitative analysis: visualization of user interest over the feature hierarchy (examples: a 26-year-old female Yelp user; a 30-year-old male Yelp user)


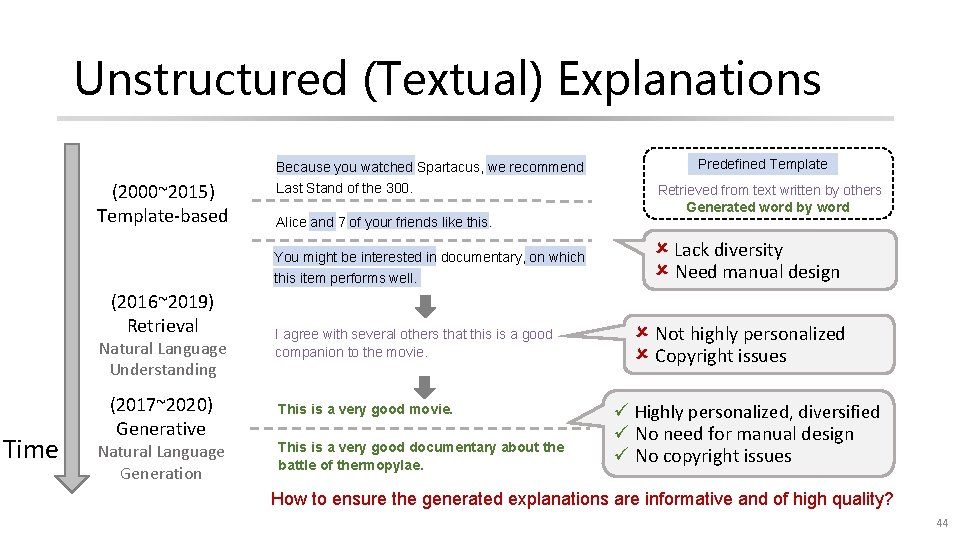

Unstructured (Textual) Explanations
• Template-based (2000~2015): predefined templates
  • "Because you watched Spartacus, we recommend Last Stand of the 300."
  • "Alice and 7 of your friends like this."
  • "You might be interested in documentary, on which this item performs well."
  • Drawbacks: lack diversity; need manual design
• Retrieval-based (2016~2019): natural language understanding; text retrieved from reviews written by others
  • "I agree with several others that this is a good companion to the movie."
  • Drawbacks: not highly personalized; copyright issues
• Generative (2017~2020): natural language generation; text generated word by word
  • "This is a very good movie." / "This is a very good documentary about the battle of Thermopylae."
  • Advantages: highly personalized and diversified; no need for manual design; no copyright issues
• Open question: how can we ensure that the generated explanations are informative and of high quality?
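Template-based explanations amount to filling slots in predefined sentences, which is why they need manual design and lack diversity. The minimal sketch below reproduces two of the example sentences; the template keys and slot names are hypothetical.

```python
# Hypothetical templates mirroring the predefined-template examples above.
TEMPLATES = {
    "item_based": "Because you watched {watched}, we recommend {item}.",
    "social": "{friend} and {count} of your friends like this.",
    "feature": "You might be interested in {feature}, on which this item performs well.",
}


def template_explanation(kind, **slots):
    """Fill a predefined template; every explanation of one kind reads the same."""
    return TEMPLATES[kind].format(**slots)


print(template_explanation("item_based", watched="Spartacus", item="Last Stand of the 300"))
print(template_explanation("social", friend="Alice", count=7))
```

Every new explanation style requires writing a new template by hand, and all users see the same sentence shape.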

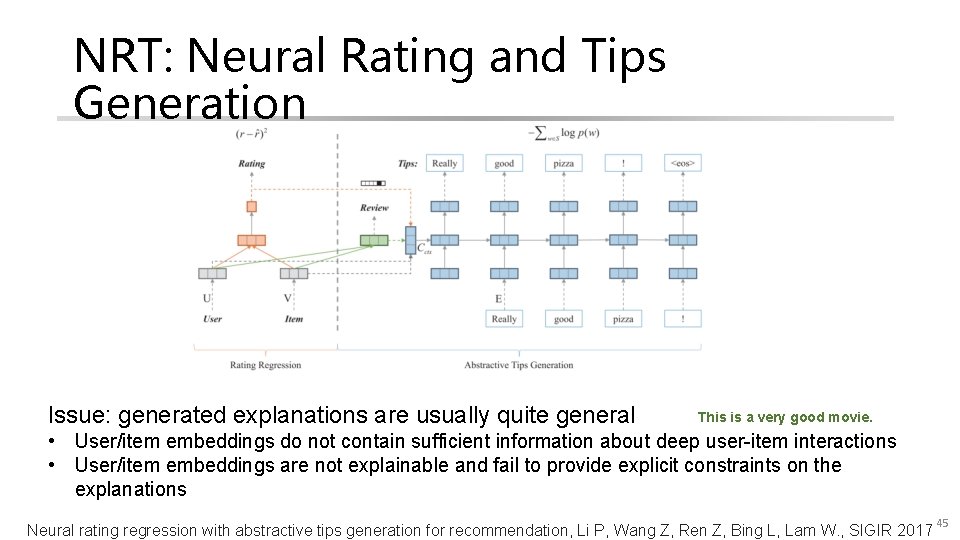

NRT: Neural Rating and Tips Generation
• Issue: generated explanations are usually quite general, e.g., "This is a very good movie."
  • User/item embeddings do not contain sufficient information about deep user-item interactions
  • User/item embeddings are not explainable and fail to provide explicit constraints on the explanations
Neural rating regression with abstractive tips generation for recommendation, Li P, Wang Z, Ren Z, Bing L, Lam W, SIGIR 2017

The 28th International Joint Conference on Artificial Intelligence (IJCAI 2019), August 10-16, 2019, Macao, China
Co-Attentive Multi-Task Learning for Explainable Recommendation
Zhongxia Chen¹,², Xiting Wang², Xing Xie², Tong Wu³, Guoqing Bu³, Yining Wang³ and Enhong Chen¹
September 26, 2019

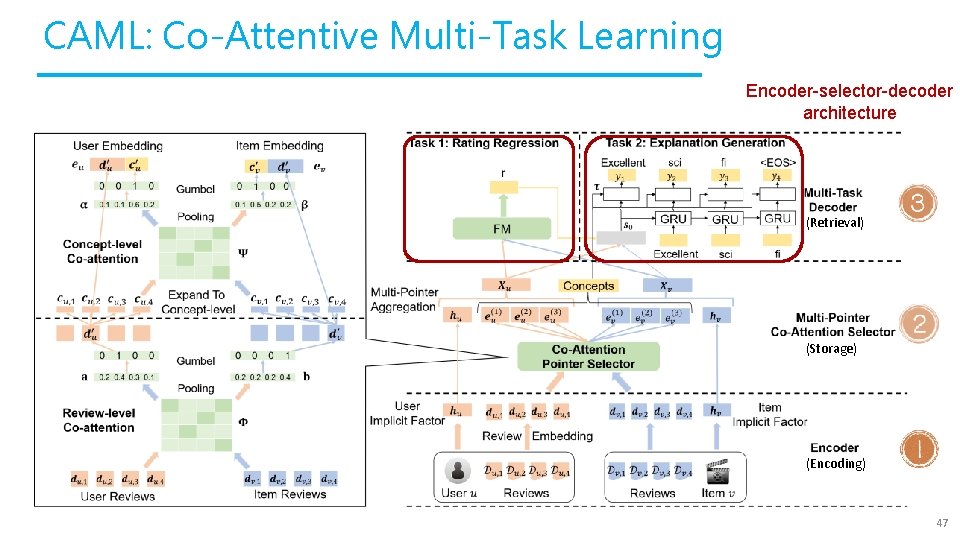


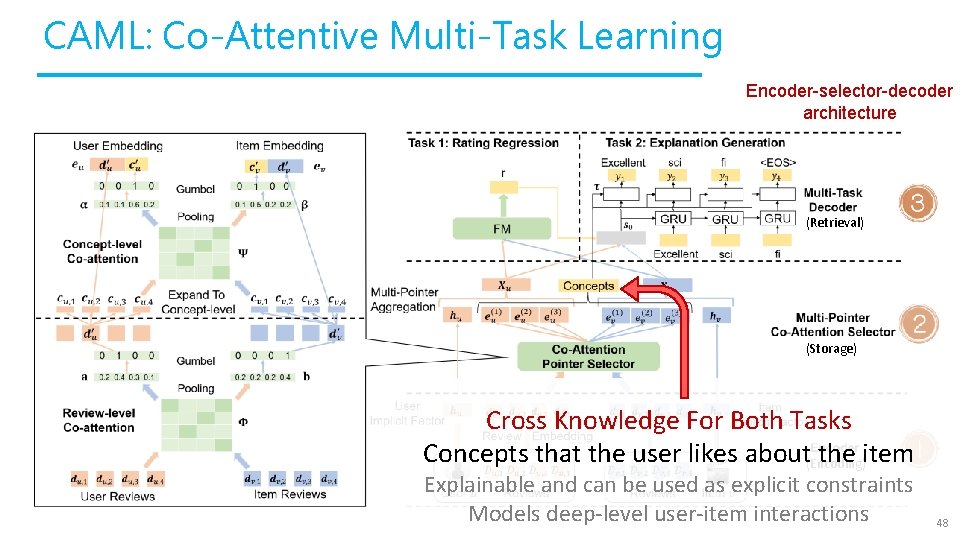

CAML: Co-Attentive Multi-Task Learning
• Encoder-selector-decoder architecture (figure labels: encoding, storage, retrieval)
• Concepts that the user likes about the item serve as cross knowledge for both tasks (recommendation and explanation generation)
  • These concepts are explainable and can be used as explicit constraints on the explanations
  • They model deep-level user-item interactions
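The idea of selecting "concepts that the user likes about the item" and using them as explicit constraints can be sketched as below. This is a hypothetical stand-in, not CAML's co-attention: each item concept is scored by its best cosine affinity to any concept in the user's history (a max-pooled affinity), the top-k are kept, and a generated explanation is checked for whether it mentions them. Concept names and 2-D vectors are invented for illustration.

```python
import math


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def select_concepts(user_concepts, item_concepts, k=2):
    """Score each item concept by its best affinity to any user concept; keep top k."""
    scored = []
    for name, vec in item_concepts.items():
        affinity = max(cosine(vec, u) for u in user_concepts.values())
        scored.append((affinity, name))
    scored.sort(reverse=True)
    return [name for _, name in scored[:k]]


def satisfies_constraints(explanation, concepts):
    """Explicit constraint: the explanation must mention every selected concept."""
    text = explanation.lower()
    return all(c in text for c in concepts)


user_concepts = {"noodles": [1.0, 0.0], "spicy": [0.9, 0.1]}
item_concepts = {"chili oil": [0.8, 0.2], "parking": [0.0, 1.0], "noodles": [1.0, 0.0]}
selected = select_concepts(user_concepts, item_concepts)
print(selected)
print(satisfies_constraints("Their noodles are great and the chili oil is really appetizing.", selected))
print(satisfies_constraints("This is a very good restaurant.", selected))
```

A generic sentence such as "This is a very good restaurant." fails the check, which is exactly the kind of overly general output that explicit constraints are meant to rule out.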

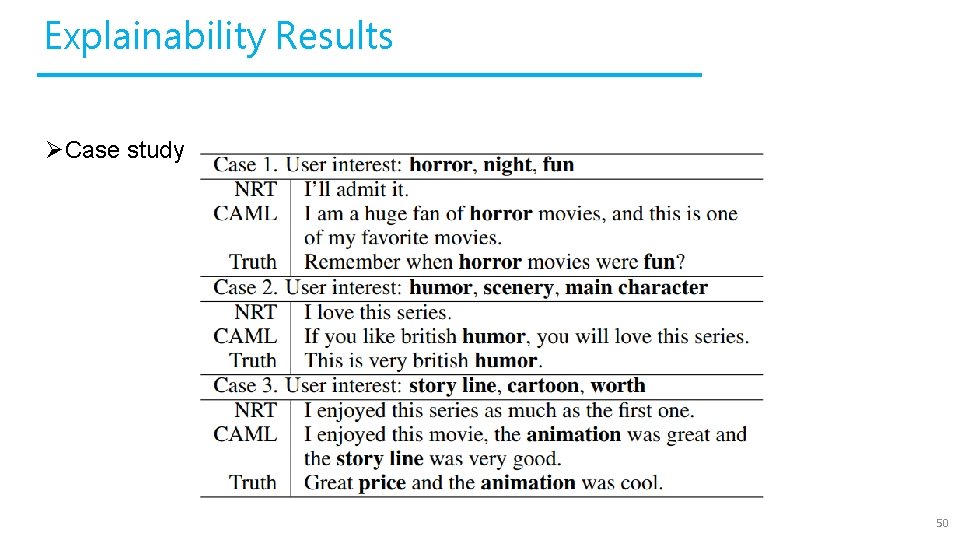

Explainability Results
• Case study

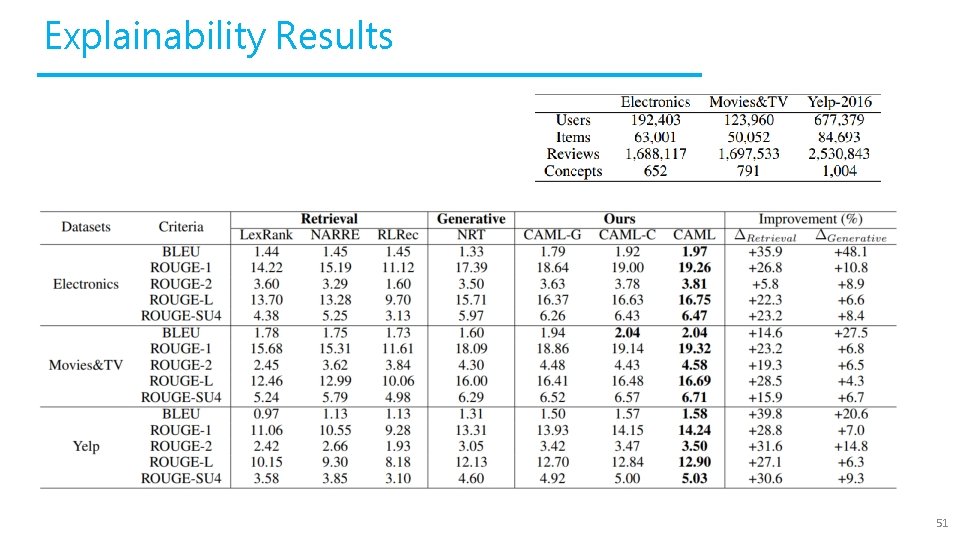

Explainability Results

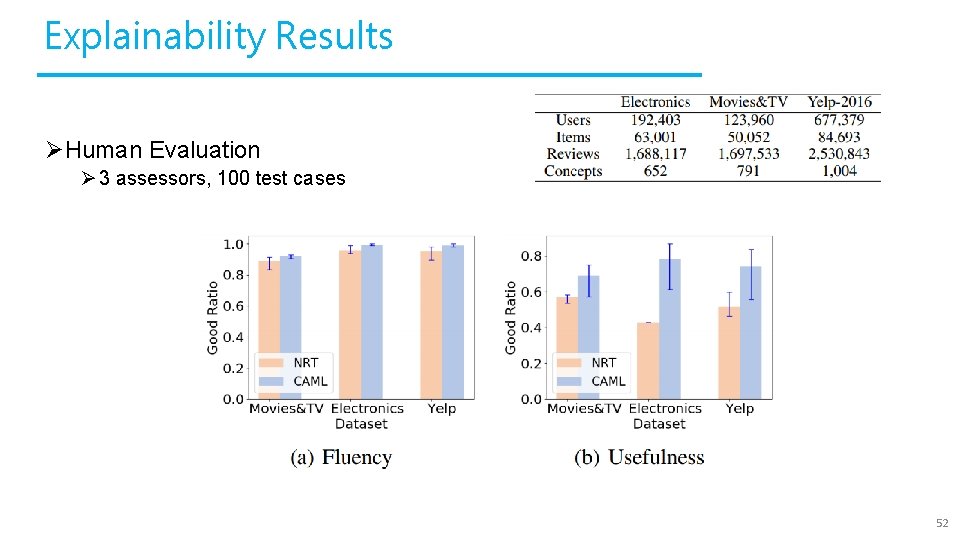

Explainability Results
• Human evaluation
• 3 assessors, 100 test cases

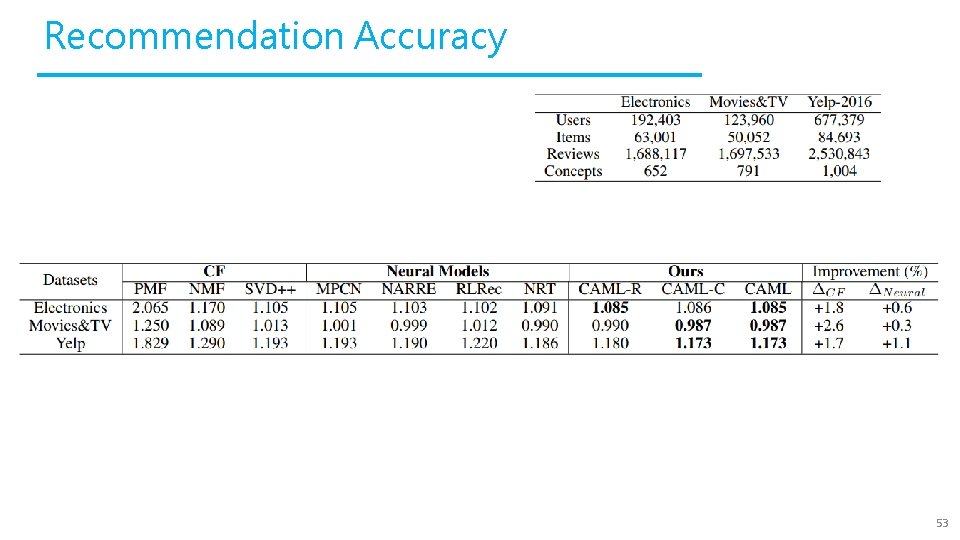

Recommendation Accuracy

Are Single-Round Explanations Enough?
• Explanations serve as a bridge between users and recommender systems
  • They help users understand the working mechanisms of the models
  • They trigger potential user feedback (inspiring users to tell the system when it is wrong)
• Example: "This is a very good documentary about the battle of Thermopylae." After seeing this explanation, a user may realize why the recommendation is wrong, i.e., the model provides the recommendation based on his/her previous interest in documentaries.
• Disadvantage of single-round explanations
  • The user cannot communicate his/her findings to the system, e.g., that his/her interest has recently shifted to thrillers.

The 29th International Joint Conference on Artificial Intelligence (IJCAI 2020)
Postponed to 2021, possibly in Kyoto, Japan

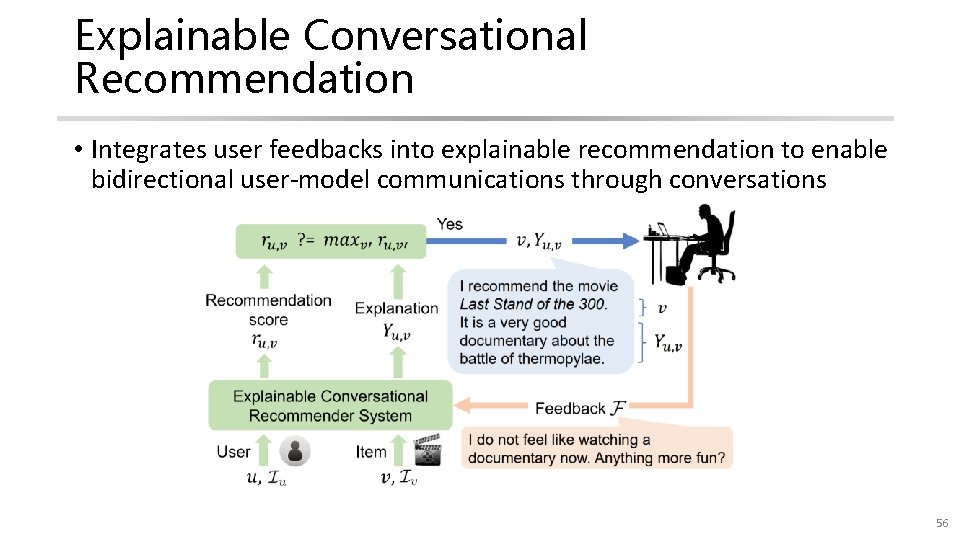


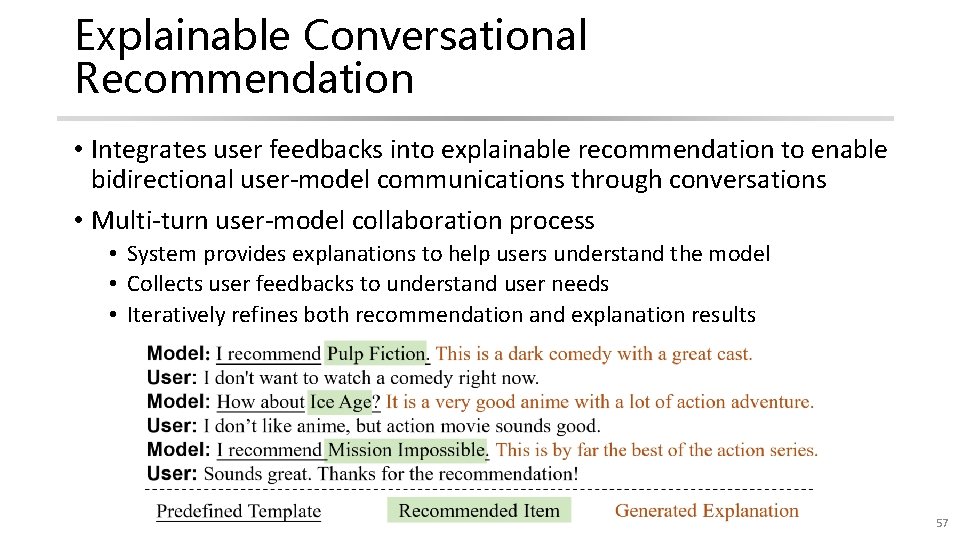

Explainable Conversational Recommendation
• Integrates user feedback into explainable recommendation to enable bidirectional user-model communication through conversations
• Multi-turn user-model collaboration process
  • The system provides explanations to help users understand the model
  • It collects user feedback to understand user needs
  • It iteratively refines both the recommendation and the explanation results

Goal
Explainable conversational recommendation is a promising but challenging multi-objective (O1-O3) problem:
• Generalization: significant and stable improvement of recommendation accuracy (O1) and explainability (O2)
• Satisfaction: the model should seldom violate the user requirements reflected in the feedback (O3)

Contribution
We introduce explainable conversational recommendation and develop a model that effectively integrates user feedback and fulfills all three objectives:
• We design an incremental multi-task learning framework that simultaneously achieves multiple objectives
• We propose a multi-view feedback integration method to achieve effective incremental model updates
  • Local view for satisfying user requirements (O3)
  • Global view for better generalizing user feedback (O1-O2)
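The interplay of the local and global views can be illustrated with a deliberately simplified two-round conversation. This toy is not the paper's incremental multi-task learning framework: the catalog, preference weights, and update rule are hypothetical. The local view is modeled as a hard constraint (never recommend an item carrying a rejected attribute), and the global view as a shift of the preference vector so the feedback also changes scores of items not mentioned in the conversation.

```python
# Hypothetical catalog: item -> attribute weights.
ITEMS = {
    "Last Stand of the 300": {"documentary": 1.0, "history": 0.8},
    "Planet Earth": {"documentary": 1.0, "nature": 0.6},
    "Gone Girl": {"thriller": 1.0},
    "Se7en": {"thriller": 0.9, "crime": 0.7},
}


def score(prefs, attrs):
    return sum(prefs.get(a, 0.0) * w for a, w in attrs.items())


def recommend(prefs, banned):
    """Best item plus the attribute used to explain it; items carrying a banned
    attribute are skipped so stated requirements are never violated (local view)."""
    candidates = [(score(prefs, attrs), name) for name, attrs in ITEMS.items()
                  if not banned.intersection(attrs)]
    if not candidates:
        return None, None
    _, best = max(candidates)
    reason = max(ITEMS[best], key=lambda a: prefs.get(a, 0.0) * ITEMS[best][a])
    return best, reason


def integrate_feedback(prefs, banned, disliked, liked=None, step=0.5):
    """Local view: add a hard constraint. Global view: shift the preference
    vector so the feedback also affects items not discussed in this turn."""
    banned.add(disliked)
    prefs[disliked] = prefs.get(disliked, 0.0) - step
    if liked is not None:
        prefs[liked] = prefs.get(liked, 0.0) + step


prefs, banned = {"documentary": 1.0, "history": 0.5}, set()
round_1 = recommend(prefs, banned)
print(round_1)
integrate_feedback(prefs, banned, disliked="documentary", liked="thriller")
round_2 = recommend(prefs, banned)
print(round_2)
```

In round 1 the system recommends a documentary and says so; after the user replies that their interest has shifted to thrillers, round 2 respects the constraint and explains the new pick with the new interest.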

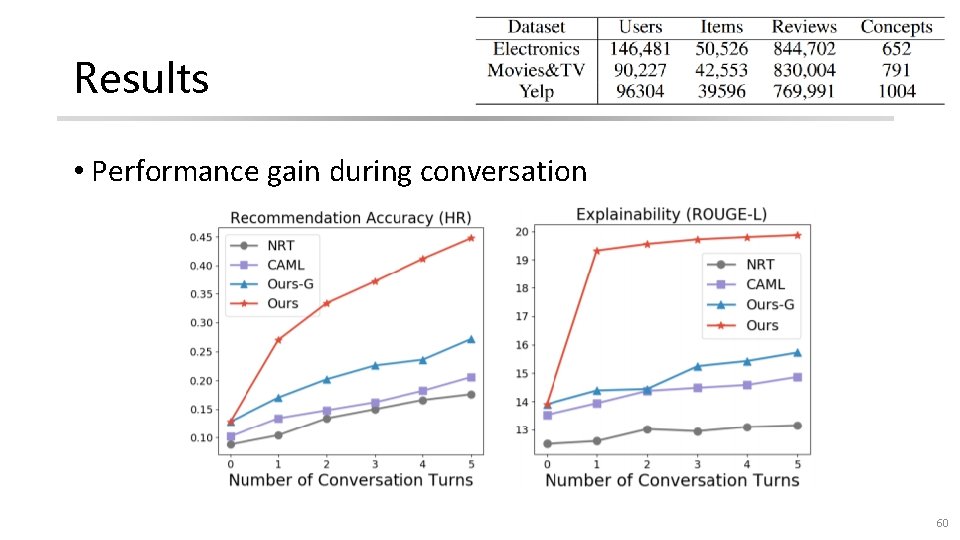

Results
• Performance gain during conversation

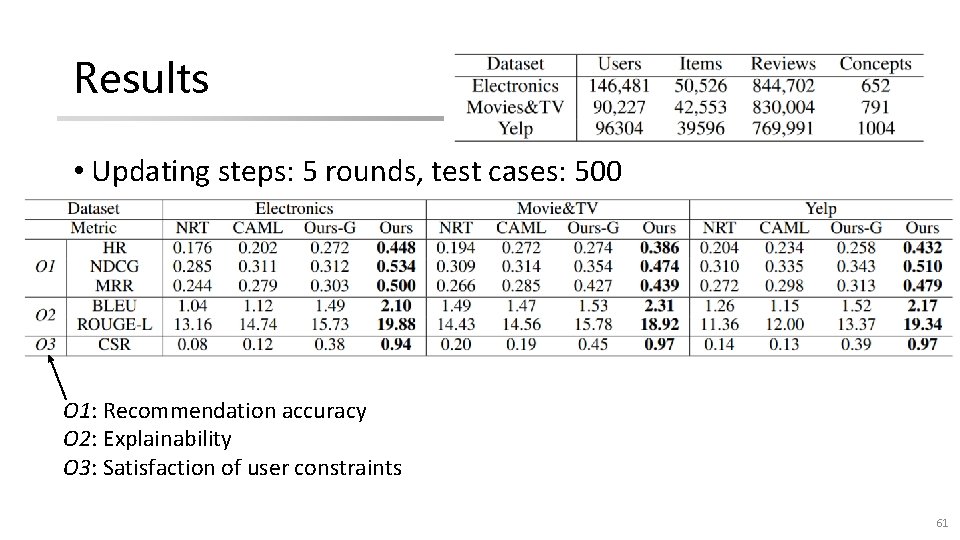

Results
• Updating steps: 5 rounds; test cases: 500
• O1: recommendation accuracy; O2: explainability; O3: satisfaction of user constraints

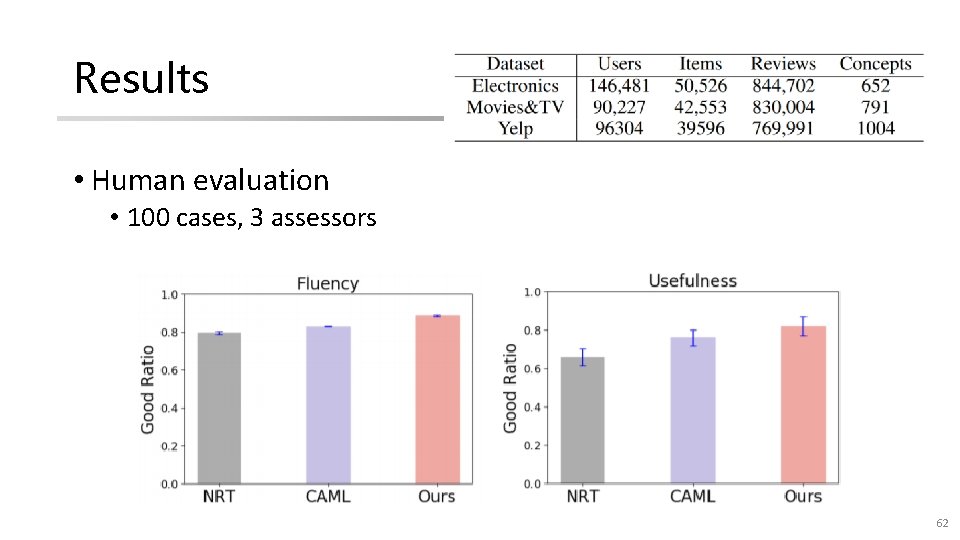

Results
• Human evaluation
• 100 cases, 3 assessors


Challenges and Future Directions
• Unstructured explanations
  • Fact-checking: how can we ensure the messages conveyed are facts?
  • Zero/low resource: how can we generate high-quality natural language explanations when we do not have enough ground-truth explanations?
• Structured explanations
  • Efficiency & robustness: handling very large and noisy knowledge graphs
  • Multi-modality: integrating other types of structured data, e.g., images
• Others
  • Offline evaluation: how can we evaluate user experience in the offline setting?
  • New paradigm: tighter integration of users and recommendation models

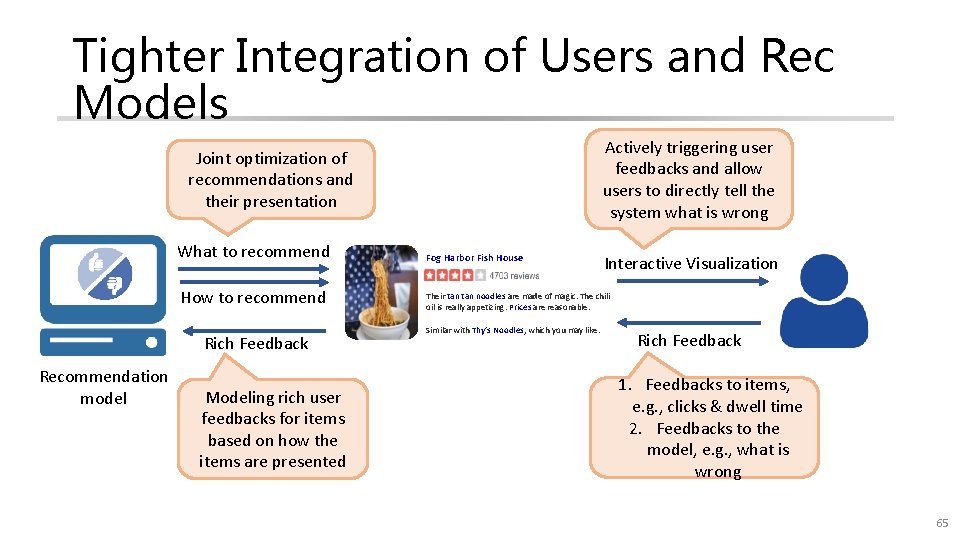

Tighter Integration of Users and Rec Models
• Actively trigger user feedback and allow users to tell the system directly what is wrong
• Jointly optimize recommendations and their presentation: what to recommend and how to recommend it (e.g., interactive visualization)
• Model rich user feedback on items based on how the items are presented
Example: Fog Harbor Fish House. "Their tan noodles are made of magic. The chili oil is really appetizing. Prices are reasonable. Similar to Thy's Noodles, which you may like."
Rich feedback:
1. Feedback on items, e.g., clicks & dwell time
2. Feedback on the model, e.g., what is wrong

Thanks

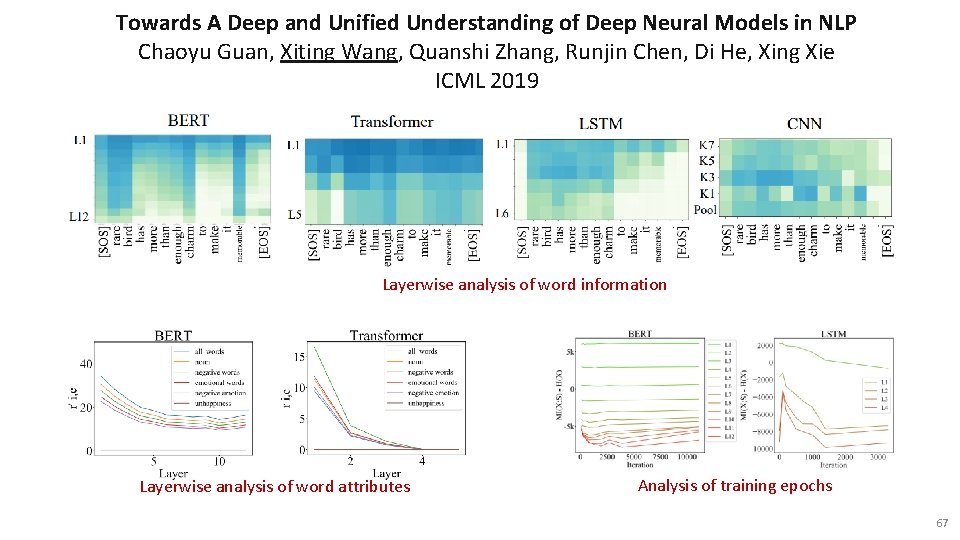

Towards A Deep and Unified Understanding of Deep Neural Models in NLP
Chaoyu Guan, Xiting Wang, Quanshi Zhang, Runjin Chen, Di He, Xing Xie, ICML 2019
• Layerwise analysis of word information
• Layerwise analysis of word attributes
• Analysis of training epochs
