Expert Systems n n Expert systems are AI

![Forward-chaining rules rule id 1: [1: has(X, hair)] ==> [assert(isa(X, mammal)), retract(all)]. rule id Forward-chaining rules rule id 1: [1: has(X, hair)] ==> [assert(isa(X, mammal)), retract(all)]. rule id](https://slidetodoc.com/presentation_image_h/80dc51b605070bbc9c6e8eb3d51b8045/image-19.jpg)

- Slides: 21

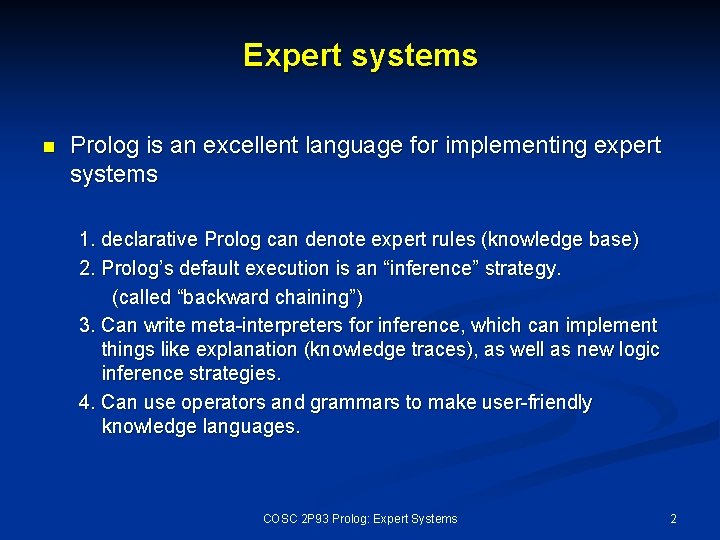

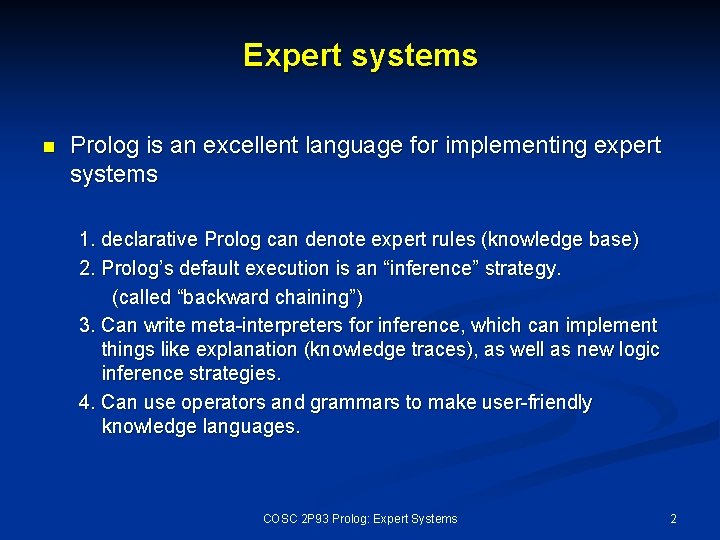

Expert Systems

- Expert systems are AI programs that solve a highly technical problem in some domain. Normally, such problems are solved by a human expert.
- An expert system encodes a human expert’s knowledge.
- Common areas:
  - medicine
  - science: chemistry, biology
  - engineering
  - agriculture
  - military
  - finance

COSC 2P93 Prolog: Expert Systems

Expert systems

Prolog is an excellent language for implementing expert systems:

1. It is declarative: Prolog can denote expert rules (the knowledge base).
2. Prolog’s default execution is an “inference” strategy (called “backward chaining”).
3. Meta-interpreters for inference can be written, which can implement features such as explanation (knowledge traces), as well as new logic inference strategies.
4. Operators and grammars can be used to make user-friendly knowledge languages.

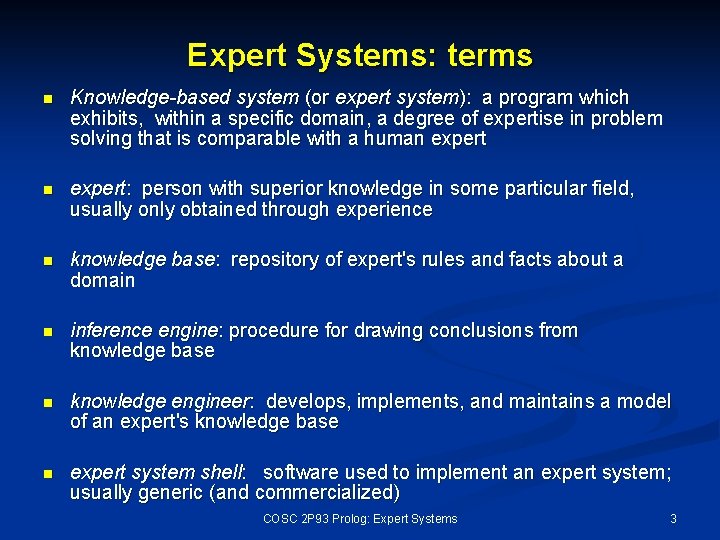

Expert Systems: terms

- Knowledge-based system (or expert system): a program that exhibits, within a specific domain, a degree of problem-solving expertise comparable to that of a human expert.
- Human expert: a person with superior knowledge in some particular field, usually obtained only through experience.
- Knowledge base: repository of the expert’s rules and facts about a domain.
- Inference engine: procedure for drawing conclusions from the knowledge base.
- Knowledge engineer: develops, implements, and maintains a model of an expert’s knowledge base.
- Expert system shell: software used to implement an expert system; usually generic (and commercialized).

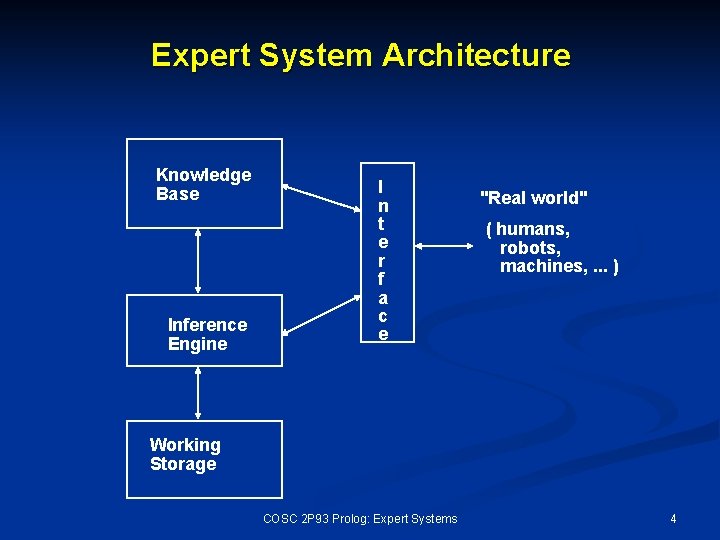

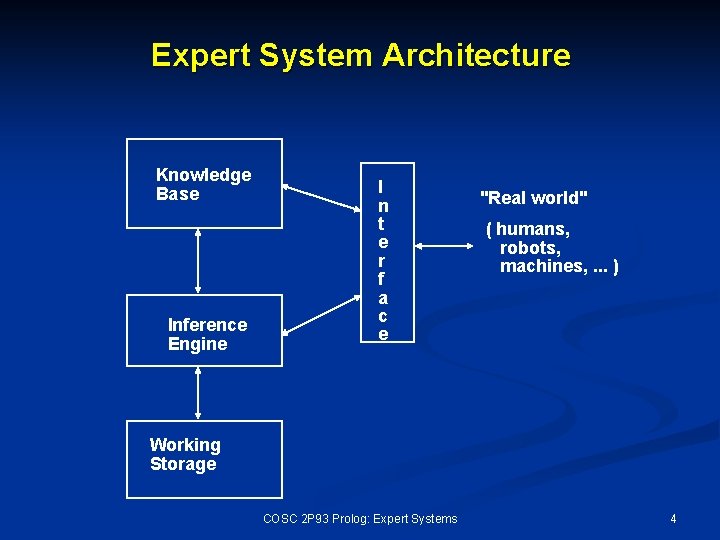

Expert System Architecture

[Figure: block diagram with the components Knowledge Base, Inference Engine, Working Storage, and an Interface to the “real world” (humans, robots, machines, ...).]

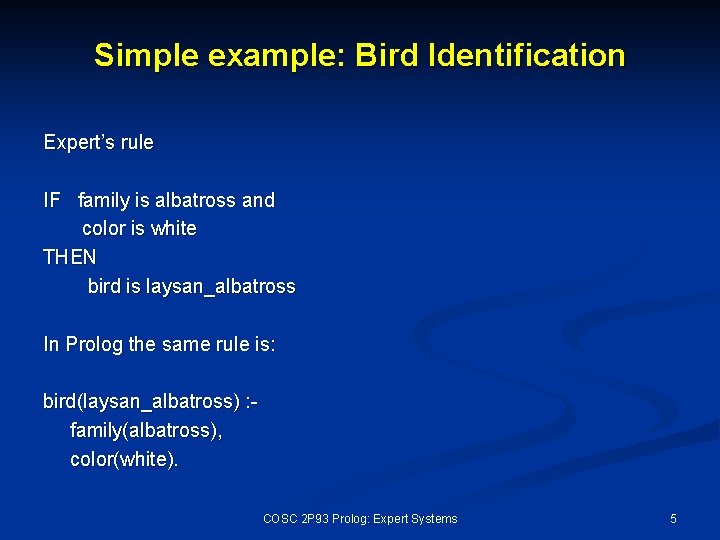

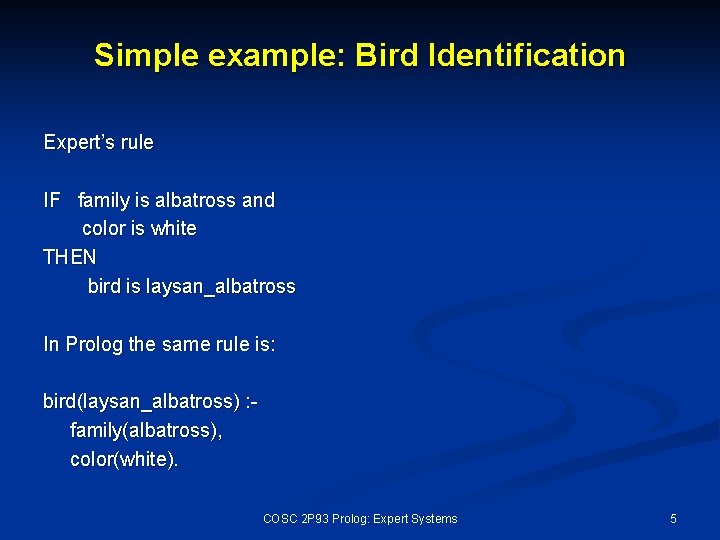

Simple example: Bird Identification

Expert’s rule:

    IF family is albatross and color is white
    THEN bird is laysan_albatross

In Prolog, the same rule is:

    bird(laysan_albatross) :-
        family(albatross),
        color(white).

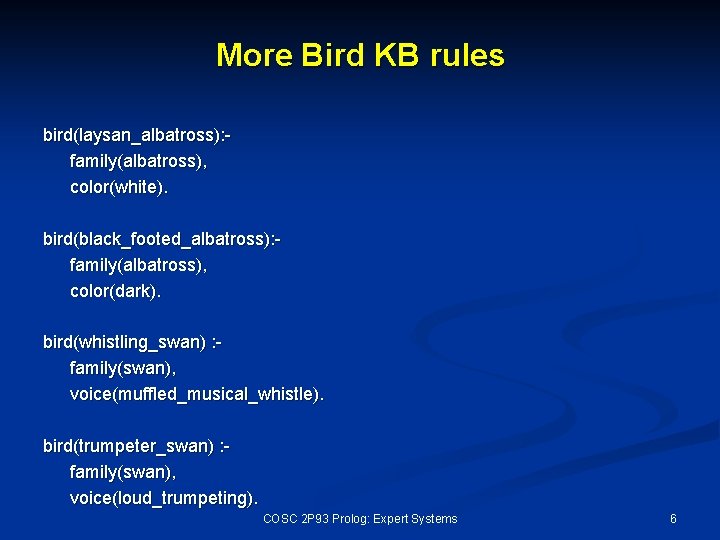

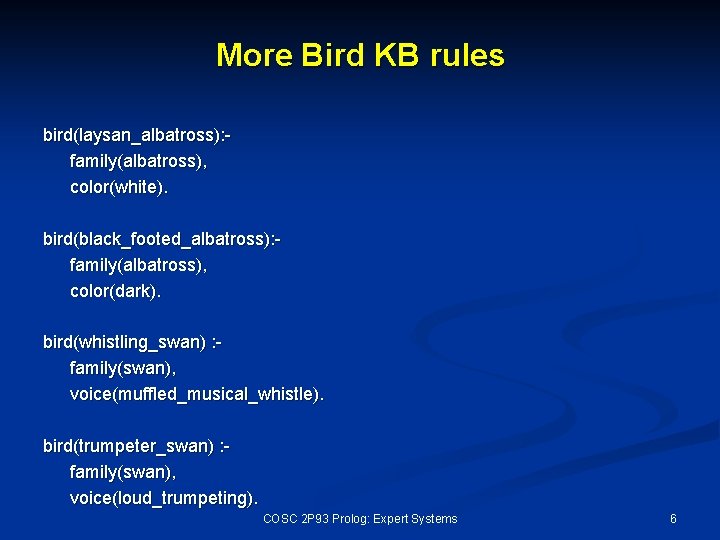

More Bird KB rules

    bird(laysan_albatross) :-
        family(albatross),
        color(white).
    bird(black_footed_albatross) :-
        family(albatross),
        color(dark).
    bird(whistling_swan) :-
        family(swan),
        voice(muffled_musical_whistle).
    bird(trumpeter_swan) :-
        family(swan),
        voice(loud_trumpeting).

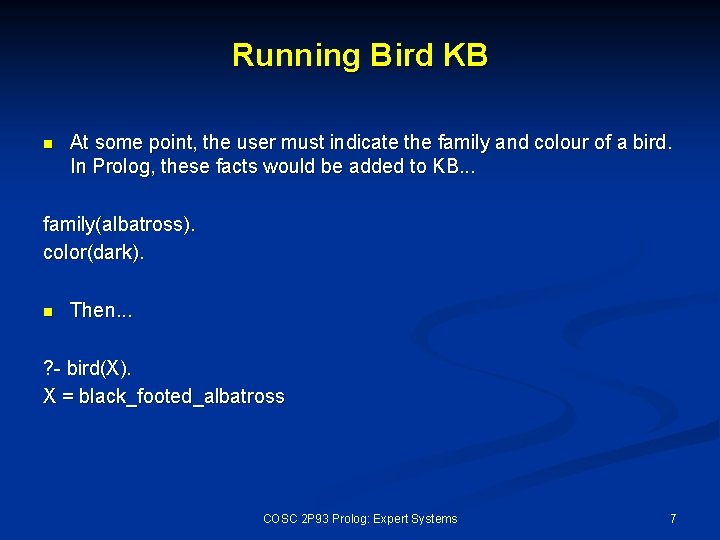

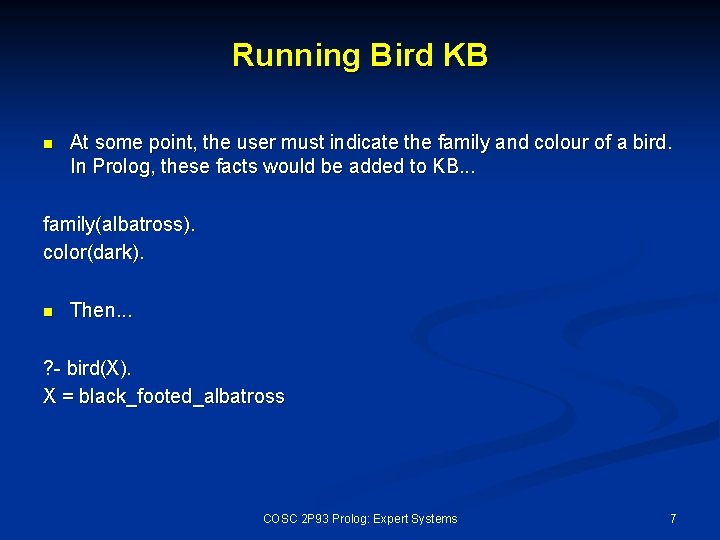

Running Bird KB

At some point, the user must indicate the family and colour of a bird. In Prolog, these facts would be added to the KB:

    family(albatross).
    color(dark).

Then:

    ?- bird(X).
    X = black_footed_albatross
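
The slides’ rules can be loaded and run as they stand once the user’s answers are stored as facts. The following is a minimal sketch, assuming SWI-Prolog; the helper `observe/2` is hypothetical (not from the slides) and simply replaces any earlier answers before a new consultation.

```prolog
% Answers given by the user are stored as facts, so the predicates
% that hold them are declared dynamic.
:- dynamic family/1, color/1, voice/1.

% Rules from the Bird KB slides.
bird(laysan_albatross) :-
    family(albatross),
    color(white).
bird(black_footed_albatross) :-
    family(albatross),
    color(dark).
bird(whistling_swan) :-
    family(swan),
    voice(muffled_musical_whistle).
bird(trumpeter_swan) :-
    family(swan),
    voice(loud_trumpeting).

% observe/2 is a hypothetical helper (not from the slides): it replaces
% any previous family/colour answers with new ones.
observe(Family, Color) :-
    retractall(family(_)),
    retractall(color(_)),
    assertz(family(Family)),
    assertz(color(Color)).
```

For example, `?- observe(albatross, dark), bird(X).` should give `X = black_footed_albatross`, as on the slide. Declaring the answer predicates `dynamic` makes `bird/1` simply fail for an attribute that has not been answered yet (for instance `voice/1` after `observe(swan, white)`), instead of raising an existence error.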

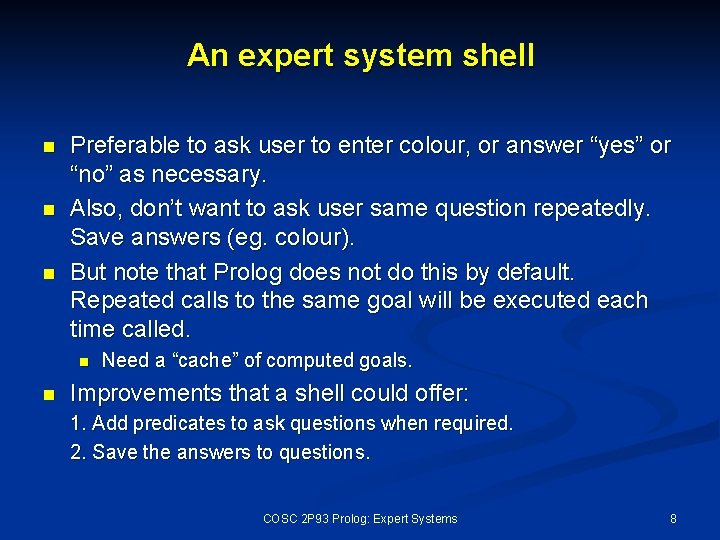

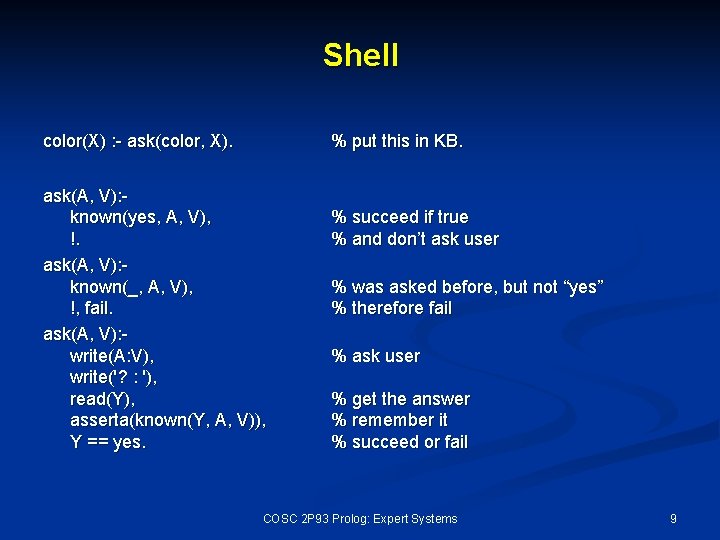

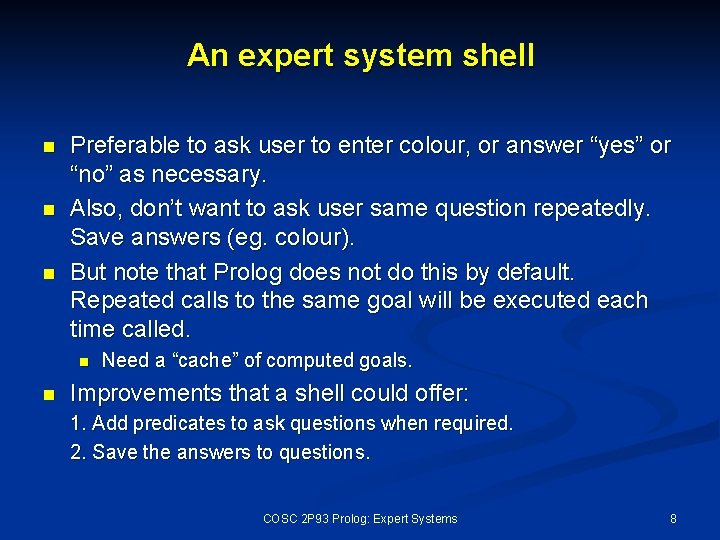

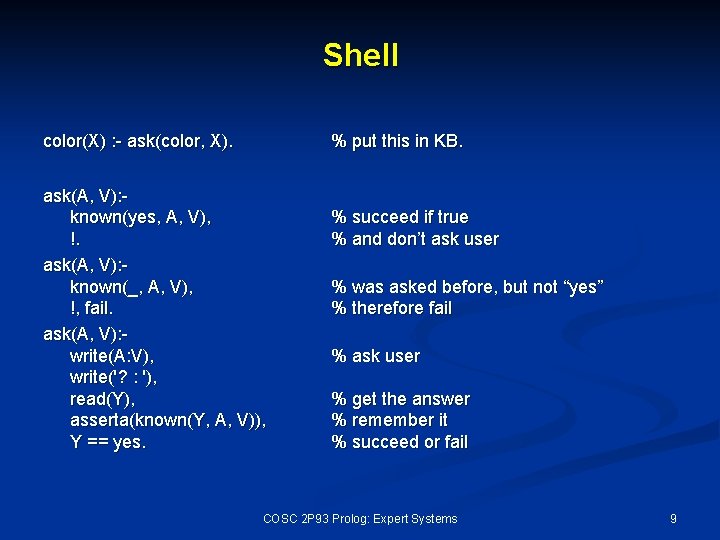

An expert system shell

- It is preferable to ask the user to enter the colour, or to answer “yes” or “no” as necessary.
- Also, we don’t want to ask the user the same question repeatedly, so answers (e.g. colour) should be saved.
  - But note that Prolog does not do this by default: repeated calls to the same goal are executed each time they are called.
  - We need a “cache” of computed goals.
- Improvements that a shell could offer:
  1. Add predicates to ask questions when required.
  2. Save the answers to questions.

Shell

    :- dynamic known/3.                    % the user's saved answers

    color(X) :- ask(color, X).             % put this in the KB

    ask(A, V) :- known(yes, A, V), !.      % succeed if true, and don't ask the user
    ask(A, V) :- known(_, A, V), !, fail.  % was asked before, but not "yes": therefore fail
    ask(A, V) :-
        write(A:V), write('? : '),         % ask the user
        read(Y),                           % get the answer
        asserta(known(Y, A, V)),           % remember it
        Y == yes.                          % succeed or fail
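
The caching can be checked without a terminal. In this sketch (assuming SWI-Prolog), the user’s replies come from a hypothetical table `user_answer/3` instead of `read/1`, and a hypothetical `asked/2` fact records each question actually put to the user; the `known/3` logic is the same as in the shell above.

```prolog
:- dynamic known/3, asked/2.

% Hypothetical stand-in for the user: the reply to each question.
user_answer(color, white, yes).
user_answer(color, dark, no).

% KB hook, as on the slide.
color(X) :- ask(color, X).

ask(A, V) :- known(yes, A, V), !.      % already answered "yes": succeed
ask(A, V) :- known(_, A, V), !, fail.  % already answered, but not "yes": fail
ask(A, V) :-
    assertz(asked(A, V)),              % hypothetical: count questions asked
    (   user_answer(A, V, Y) -> true   % scripted reply instead of read/1
    ;   Y = no
    ),
    asserta(known(Y, A, V)),           % remember the answer
    Y == yes.                          % succeed or fail
```

Calling `color(white)` twice puts the question only once; the second call is answered from `known/3`. A “no” is cached too, so later calls to `color(dark)` fail without asking again.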

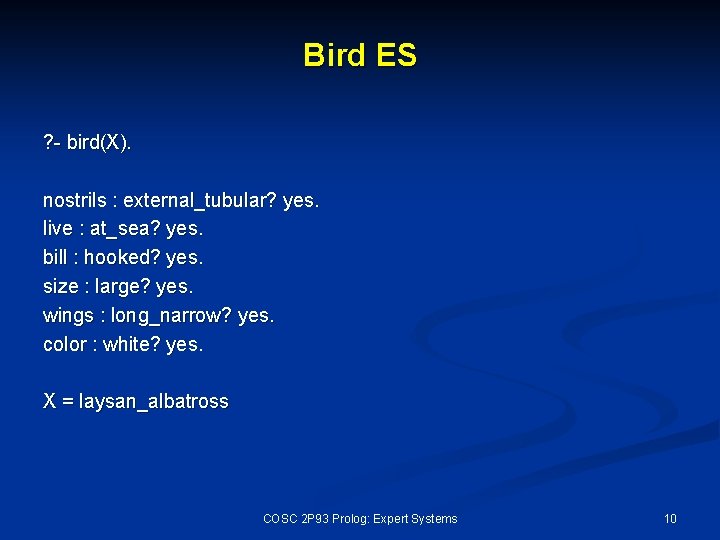

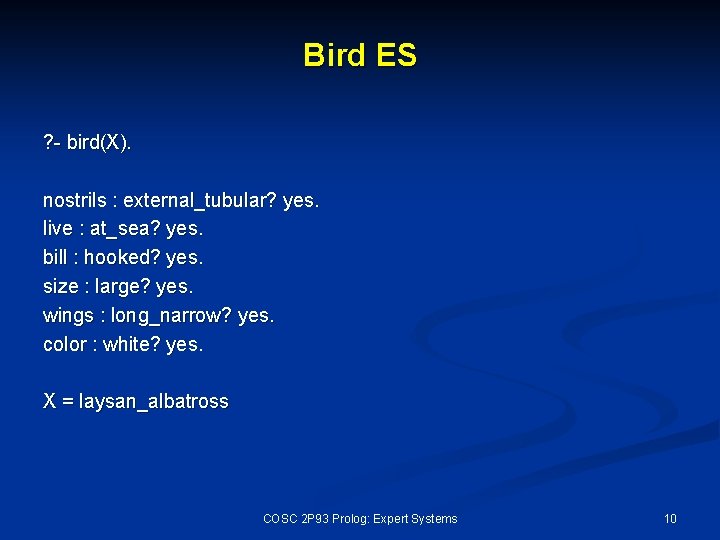

Bird ES

    ?- bird(X).
    nostrils : external_tubular? yes.
    live : at_sea? yes.
    bill : hooked? yes.
    size : large? yes.
    wings : long_narrow? yes.
    color : white? yes.
    X = laysan_albatross

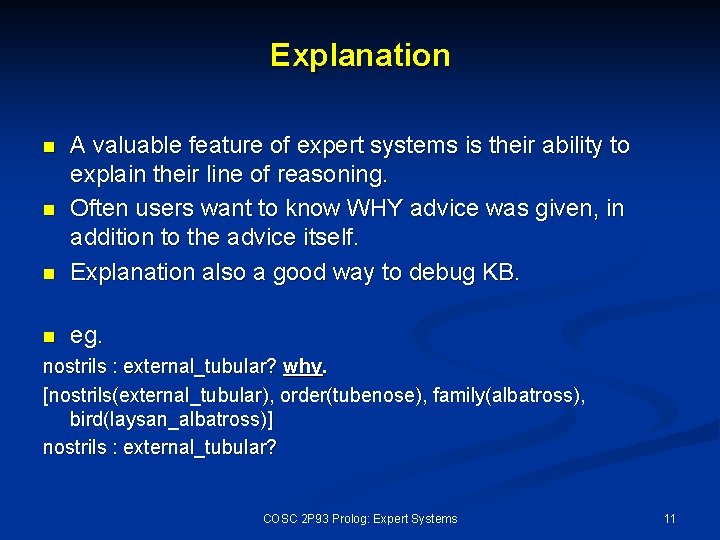

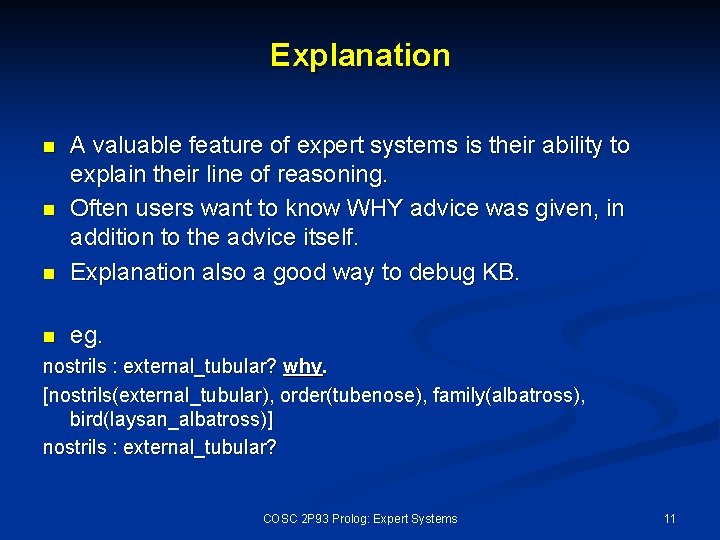

Explanation

- A valuable feature of expert systems is their ability to explain their line of reasoning. Users often want to know WHY advice was given, in addition to the advice itself.
- Explanation is also a good way to debug the KB.

For example:

    nostrils : external_tubular? why.
    [nostrils(external_tubular), order(tubenose), family(albatross), bird(laysan_albatross)]
    nostrils : external_tubular?

Explanation

- Why: explain the line of reasoning for this question.
  - Goes from the node UP to the root of the tree.
- How: how was some advice derived?
  - Goes from the node DOWN the branch.
- Why not: why was some other advice not given?
- If Prolog’s inference is used, then the above can be implemented with a meta-interpreter.
  - Very similar to the one that kept the proof tree for boolean logic.
  - Also similar to grammars that keep the parse tree.
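
“How” can be sketched with a meta-interpreter that returns the proof tree rather than a history list. The following is a minimal sketch, assuming SWI-Prolog; `how/2`, `premises/2`, `sub_goals/2`, and the `node/2` tree representation are hypothetical names, and the KB is a cut-down version of the bird example in which plain facts stand in for the user’s answers.

```prolog
:- use_module(library(lists)).

% Cut-down bird KB (simplified from the slides). Declared dynamic so
% that clause/2 may inspect it in any Prolog system.
:- dynamic bird/1, family/1, order/1, nostrils/1, live/1, size/1, color/1.

bird(laysan_albatross) :- family(albatross), color(white).
family(albatross) :- order(tubenose), size(large).
order(tubenose) :- nostrils(external_tubular), live(at_sea).
nostrils(external_tubular).        % facts stand in for user answers
live(at_sea).
size(large).
color(white).

% how(Goal, Tree): prove Goal by backward chaining and build its proof
% tree. node(G, Sub) means G was proved from Sub, where Sub is true
% (for a fact) or a conjunction of sub-trees.
how(true, true) :- !.
how((A, B), (TA, TB)) :- !,
    how(A, TA),
    how(B, TB).
how(Goal, node(Goal, Sub)) :-
    clause(Goal, Body),
    how(Body, Sub).

% premises(Tree, Goals): one step DOWN the branch, i.e. the goals
% directly beneath the root of a proof tree.
premises(node(_, Sub), Goals) :-
    sub_goals(Sub, Goals).

sub_goals(true, []).
sub_goals(node(G, _), [G]).
sub_goals((A, B), Goals) :-
    sub_goals(A, GA),
    sub_goals(B, GB),
    append(GA, GB, Goals).
```

Here `how(bird(B), T), premises(T, Ps)` answers “how was laysan_albatross concluded?” with `[family(albatross), color(white)]`; applying `premises/2` to a subtree continues down the branch.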

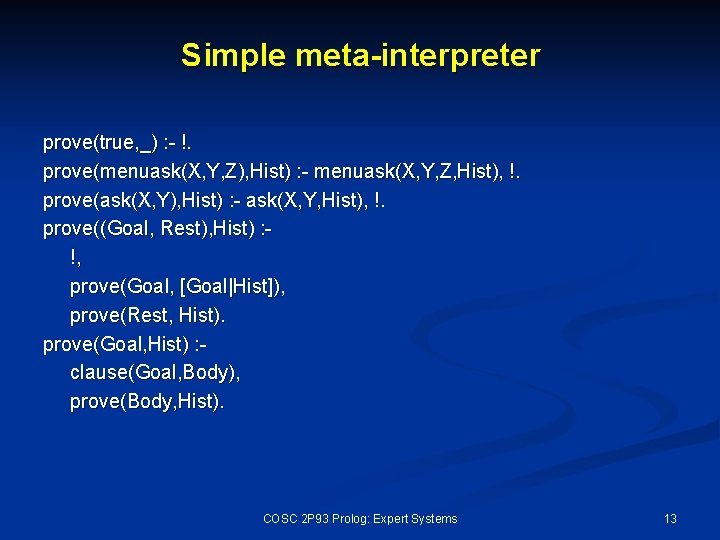

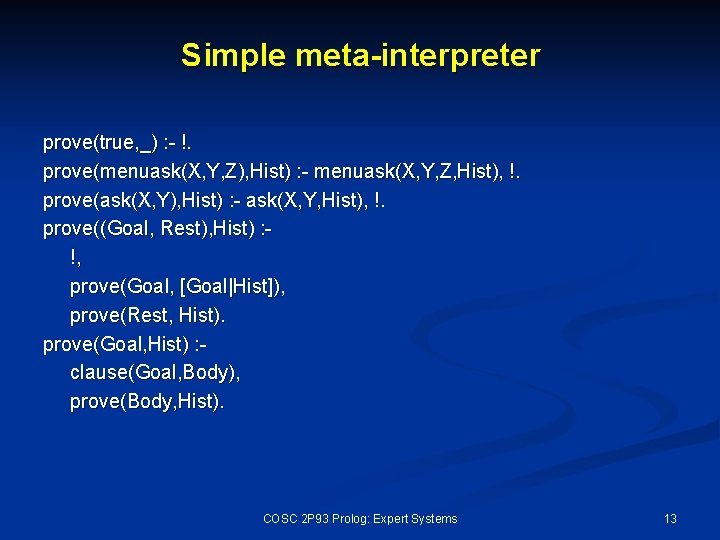

Simple meta-interpreter

    prove(true, _) :- !.
    prove(menuask(X, Y, Z), Hist) :- menuask(X, Y, Z, Hist), !.
    prove(ask(X, Y), Hist) :- ask(X, Y, Hist), !.
    prove((Goal, Rest), Hist) :- !,
        prove(Goal, Hist),
        prove(Rest, Hist).
    prove(Goal, Hist) :-
        clause(Goal, Body),
        prove(Body, [Goal|Hist]).    % record Goal, then prove its body

Meta-interpreter

- The 2nd argument of prove is the “explanation” list.
- Every time a goal is called, it is added to the list.
  - The list represents the goals from a node up the tree to the root.
- The explanation list is passed to the ask and menuask utilities.
  - If the user asks “why”, then the list can be written out.
  - It is best to write it out in pieces, in “English” format.
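
A self-contained sketch of this idea, assuming SWI-Prolog: a variant of the slides’ meta-interpreter in which each goal is pushed onto `Hist` when a clause is used to prove it, so that the list reaching an `ask` leaf runs from that node up to the root. The interactive `ask/3` is replaced by a hypothetical version that answers “yes” to everything and logs the why-list in a hypothetical `why_log/2` instead of printing it when the user types “why”.

```prolog
:- dynamic why_log/2.
:- dynamic bird/1, family/1, order/1, nostrils/1, live/1, bill/1,
           size/1, wings/1, color/1.

% Bird KB in the style of the slides; the leaves call ask/2, which
% prove/2 intercepts (ask/2 itself is never executed here).
bird(laysan_albatross) :- family(albatross), color(white).
family(albatross) :- order(tubenose), size(large), wings(long_narrow).
order(tubenose) :- nostrils(external_tubular), live(at_sea), bill(hooked).
nostrils(X) :- ask(nostrils, X).
live(X)     :- ask(live, X).
bill(X)     :- ask(bill, X).
size(X)     :- ask(size, X).
wings(X)    :- ask(wings, X).
color(X)    :- ask(color, X).

% prove(Goal, Hist): Hist holds the goals from the current node up to the root.
prove(true, _) :- !.
prove(ask(A, V), Hist) :- !,
    ask(A, V, Hist).
prove((Goal, Rest), Hist) :- !,
    prove(Goal, Hist),
    prove(Rest, Hist).
prove(Goal, Hist) :-
    clause(Goal, Body),
    prove(Body, [Goal|Hist]).

% Hypothetical non-interactive ask/3: every answer is "yes", and the
% why-list a real shell would print on "why" is logged instead.
ask(A, V, Hist) :-
    assertz(why_log(A:V, Hist)).
```

Running `prove(bird(X), [])` logs, for the nostrils question, `[nostrils(external_tubular), order(tubenose), family(albatross), bird(laysan_albatross)]`, the same list shown in the earlier explanation example.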

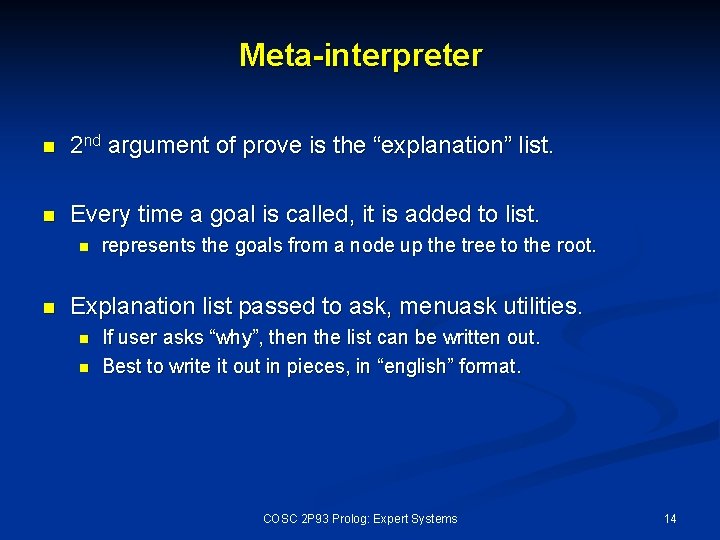

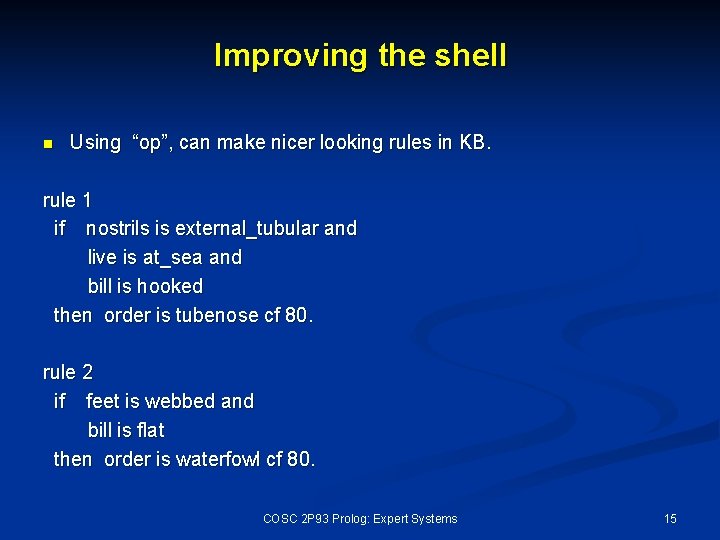

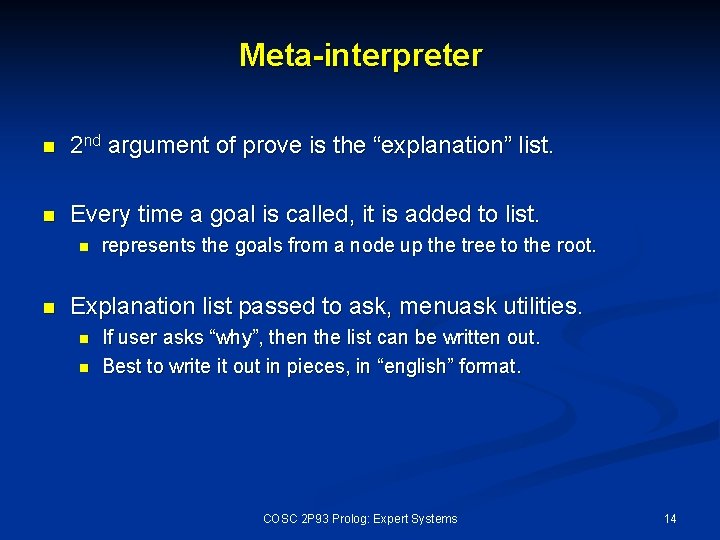

Improving the shell

Using op, we can make nicer-looking rules in the KB:

    rule 1 if nostrils is external_tubular and live is at_sea and bill is hooked
        then order is tubenose cf 80.
    rule 2 if feet is webbed and bill is flat
        then order is waterfowl cf 80.
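
The slides do not show the operator declarations. One possible set, assuming SWI-Prolog, is below; the priorities are an assumption, chosen so that `and` binds tighter than `then`, `then` tighter than `if`, and `cf` attaches to the conclusion. `conditions/2` is a hypothetical helper that turns the `and`-chain into a list.

```prolog
% Hypothetical operator declarations for the rule language.
:- op(990, fx, rule).
:- op(980, xfx, if).
:- op(970, xfx, then).
:- op(960, xfx, cf).
:- op(950, xfy, and).

% Rules exactly as written on the slide; each is a fact of rule/1.
rule 1 if nostrils is external_tubular and live is at_sea and bill is hooked
    then order is tubenose cf 80.
rule 2 if feet is webbed and bill is flat
    then order is waterfowl cf 80.

% conditions(+AndChain, -List): flatten "A and B and C" into [A, B, C].
conditions(A and B, [A|Bs]) :- !,
    conditions(B, Bs).
conditions(A, [A]).
```

With these declarations, `rule 1 if C then order is tubenose cf 80` is an ordinary query that binds `C` to the `and`-chain of conditions. Here `is` is only data (the standard `xfx 700` operator); it is never evaluated.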

Explanation

With nicer-looking rules, explanations and queries can be made more English-like:

    Are the nostrils external_tubular? why.
    The nostrils are external_tubular is necessary
    To show that the order is tubenose
    To show that the family is albatross
    To show that the bird is a laysan_albatross
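
Turning a why-list into sentences like these only takes `=..` and `format/2`. A minimal sketch, assuming SWI-Prolog and goals of the form `attr(Value)` as in the earlier why-list; `explain_why/1` and `goal_parts/2` are hypothetical names.

```prolog
% explain_why(+Hist): print a why-list (innermost goal first) as
% English-like sentences, one goal per line.
explain_why([Goal|Goals]) :-
    goal_parts(Goal, Attr, Val),
    format("The ~w is ~w is necessary~n", [Attr, Val]),
    forall(member(G, Goals),
           (   goal_parts(G, A, V),
               format("To show that the ~w is ~w~n", [A, V])
           )).

% goal_parts(+Goal, -Attr, -Val): split attr(Val) into its two parts.
goal_parts(Goal, Attr, Val) :-
    Goal =.. [Attr, Val].
```

The output follows the slide line for line, except that the slide also adjusts the grammar (“The nostrils are”, “is a laysan_albatross”); this sketch always writes “is” and no article.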

Uncertainty

- The previous rules had “cf 80” terms: certainty factors.
- Expertise is often vague, rather than black and white.
  - E.g. medical diagnoses: there could be likelihoods of different diseases.
  - Doctors want to consider all possibilities.
- Expert systems with uncertainty allow multiple conclusions to be reached.
  - An ordered list of conclusions (disease diagnoses) is generated at the end of a session, e.g. Measles CF 80, Chicken Pox CF 75, Yellow Fever CF 45.
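
The slides do not say how certainty factors are computed. One common choice is MYCIN-style arithmetic, sketched here on the slides’ 0 to 100 scale and for positive CFs only; this is an assumption, not necessarily the shell’s method. `rule_cf/3`, `combine/3`, and `rank/2` are hypothetical names, and the sketch assumes SWI-Prolog (for `sort/4`).

```prolog
:- use_module(library(lists)).

% rule_cf(+PremiseCFs, +RuleCF, -CF): a rule's conclusion gets
% CF = min(premise CFs) * RuleCF / 100 (conjunction = minimum).
rule_cf(PremiseCFs, RuleCF, CF) :-
    min_list(PremiseCFs, Min),
    CF is Min * RuleCF // 100.

% combine(+CF1, +CF2, -CF): two positive CFs for the same conclusion
% reinforce each other without exceeding 100.
combine(CF1, CF2, CF) :-
    CF is CF1 + CF2 * (100 - CF1) // 100.

% rank(+Pairs, -Ranked): order Conclusion-CF pairs by decreasing CF.
rank(Pairs, Ranked) :-
    findall(CF-C, member(C-CF, Pairs), Keyed),
    sort(0, @>=, Keyed, Sorted),          % descending, keeps duplicates
    findall(C-CF, member(CF-C, Sorted), Ranked).
```

For example, two rules concluding measles with CF 80 and CF 75 combine to 80 + 75 × 20 / 100 = 95, and `rank/2` turns an unordered set of diagnoses into the ordered list shown on the slide.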

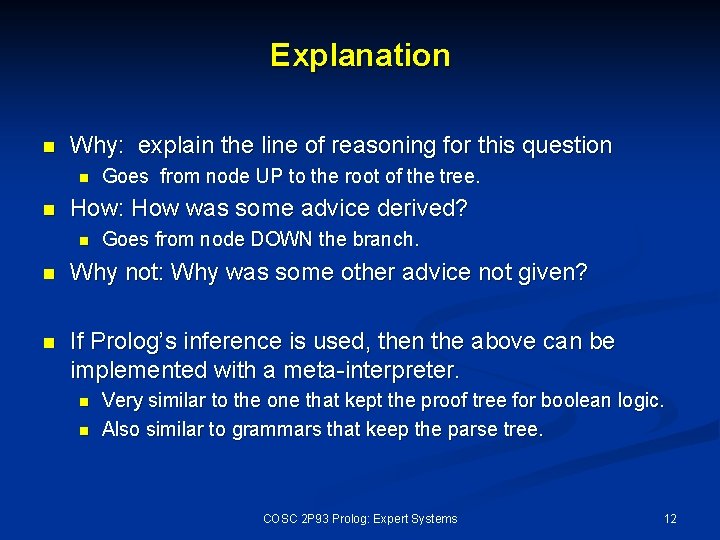

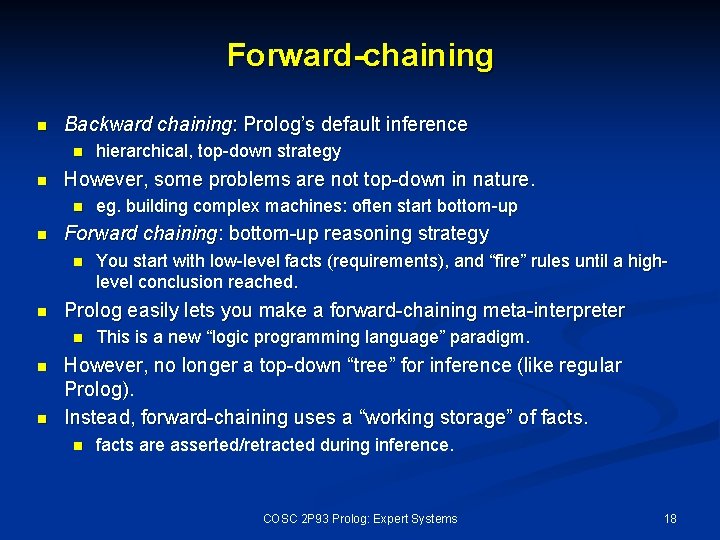

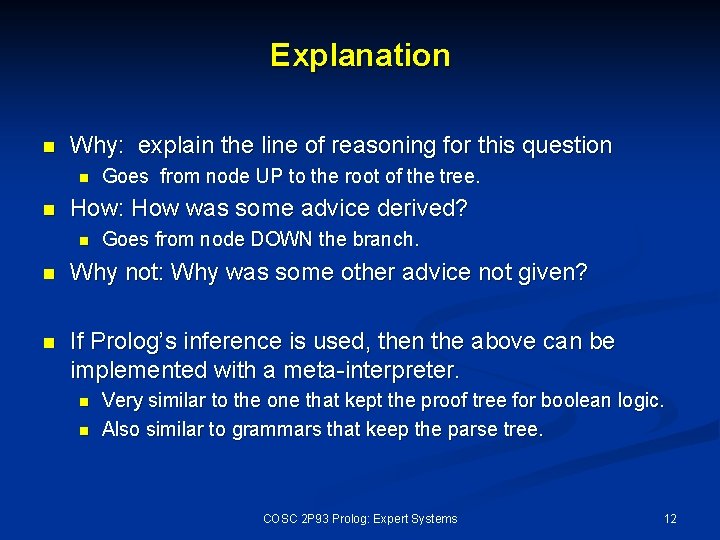

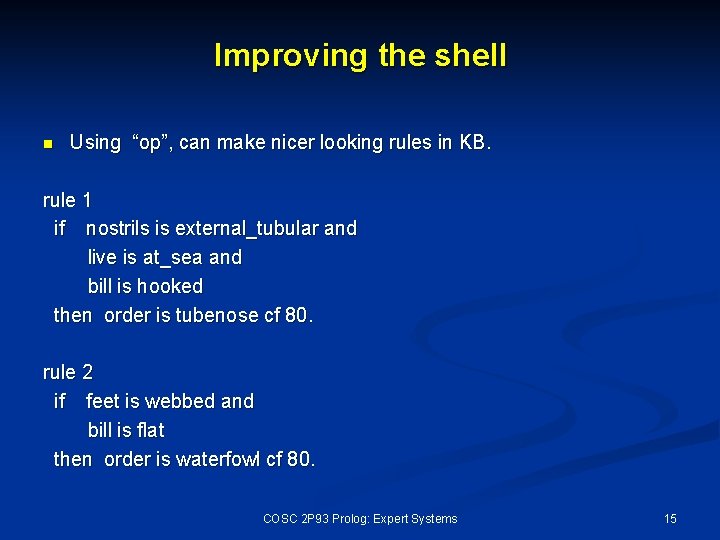

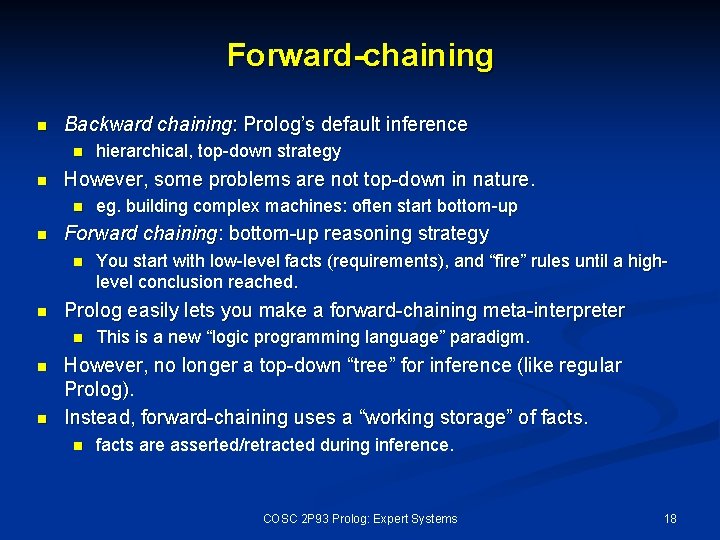

Forward-chaining

- Backward chaining: Prolog’s default inference.
  - A hierarchical, top-down strategy.
- However, some problems are not top-down in nature.
  - E.g. building complex machines: you often start bottom-up.
- Forward chaining: a bottom-up reasoning strategy.
  - You start with low-level facts (requirements), and “fire” rules until a high-level conclusion is reached.
- Prolog easily lets you make a forward-chaining meta-interpreter.
  - This is a new “logic programming language” paradigm.
  - However, there is no longer a top-down “tree” for inference (as in regular Prolog).
  - Instead, forward chaining uses a “working storage” of facts.
    - Facts are asserted/retracted during inference.

![Forwardchaining rules rule id 1 1 hasX hair assertisaX mammal retractall rule id Forward-chaining rules rule id 1: [1: has(X, hair)] ==> [assert(isa(X, mammal)), retract(all)]. rule id](https://slidetodoc.com/presentation_image_h/80dc51b605070bbc9c6e8eb3d51b8045/image-19.jpg)

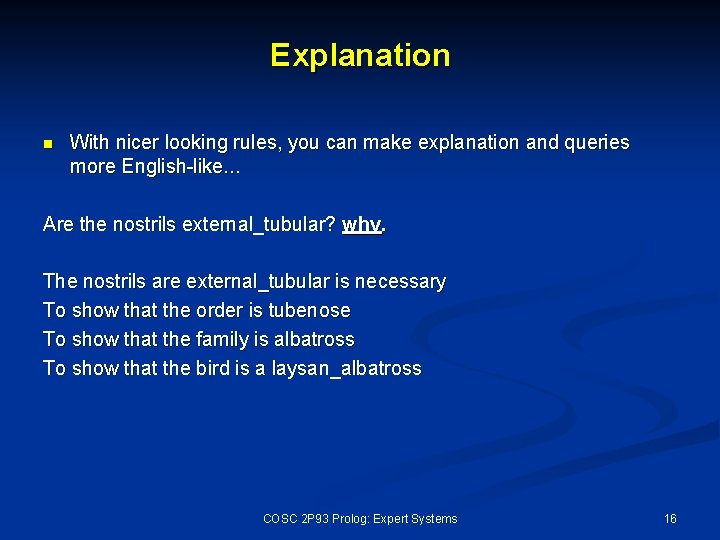

Forward-chaining rules

    rule id1: [1: has(X, hair)] ==> [assert(isa(X, mammal)), retract(all)].
    rule id3: [1: has(X, feathers)] ==> [assert(isa(X, bird)), retract(all)].

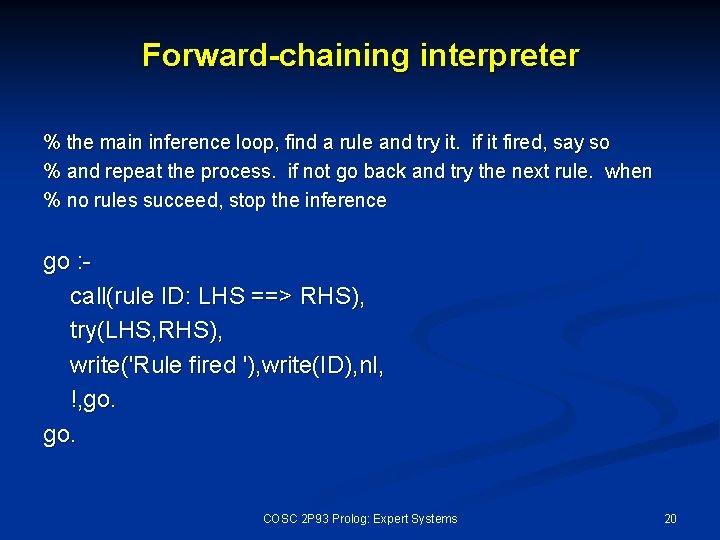

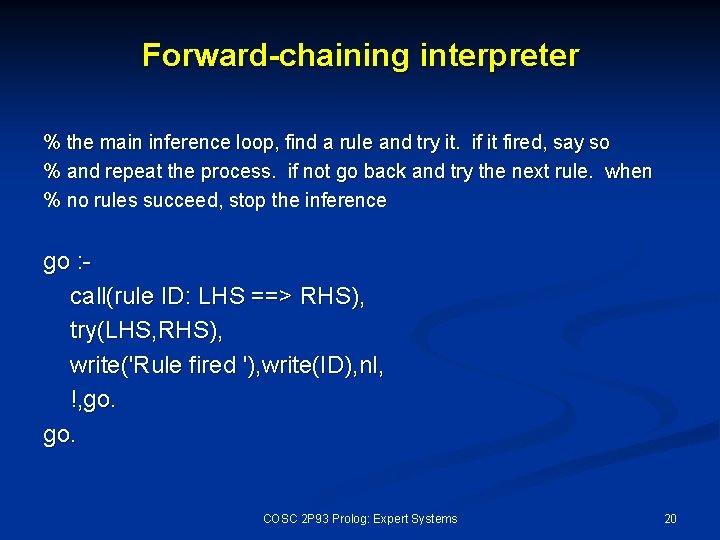

Forward-chaining interpreter

    % The main inference loop: find a rule and try it. If it fired, say so
    % and repeat the process; if not, go back and try the next rule. When
    % no rules succeed, stop the inference.
    go :-
        call(rule ID: LHS ==> RHS),
        try(LHS, RHS),
        write('Rule fired '), write(ID), nl,
        !,
        go.
    go.
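
A complete, runnable version of this loop also needs operator declarations and a `try/2`. The sketch below assumes SWI-Prolog; the operator priorities, the working-storage predicate `fact/1`, and the helpers `match/1`, `process/2`, and `take/2` are assumptions, a simplification of what a full shell would do (there is no conflict resolution: rules are simply tried in order). `N: Pattern` tags the N-th condition so that `retract(N)` or `retract(all)` can remove the facts that were matched; `:` keeps its standard priority.

```prolog
% Operators so that rules can be written as on the slide (priorities assumed).
:- op(810, fx, rule).
:- op(800, xfx, ==>).

% Working storage: the facts known so far.
:- dynamic fact/1.

% Rules from the slide. In the LHS, N: Pattern labels the N-th condition.
rule id1: [1: has(X, hair)] ==> [assert(isa(X, mammal)), retract(all)].
rule id3: [1: has(X, feathers)] ==> [assert(isa(X, bird)), retract(all)].

% Main loop, as on the slide: fire the first rule whose LHS matches,
% then start again; when no rule can fire, the second clause stops.
go :-
    call(rule ID: LHS ==> RHS),
    try(LHS, RHS),
    format("Rule fired ~w~n", [ID]),
    !,
    go.
go.

% try(+LHS, +RHS): every condition must match a fact in working
% storage; then the actions are carried out in order.
try(LHS, RHS) :-
    match(LHS),
    process(RHS, LHS).

match([]).
match([_: Pattern | Rest]) :-
    fact(Pattern),
    match(Rest).

process([], _).
process([Action | Rest], LHS) :-
    take(Action, LHS),
    process(Rest, LHS).

% Actions: add a fact, or remove the fact(s) matched by the LHS.
take(assert(X), _) :-
    assertz(fact(X)).
take(retract(all), LHS) :- !,
    forall(member(_: P, LHS), retract(fact(P))).
take(retract(N), LHS) :-
    member(N: P, LHS), !,
    retract(fact(P)).
```

Starting from `fact(has(tweety, feathers))`, rule `id3` fires, asserts `isa(tweety, bird)`, and retracts the triggering fact, so on the next pass no rule matches and the second `go` clause ends the inference.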

Conclusion

- Expert systems are one of the major commercial success stories of AI (along with data mining, vision, object-oriented programming, ...).
  - Tens of thousands of expert systems are in use.
  - If you qualify (or not) for a mortgage or credit card, an expert system probably made the decision!
- Prolog is commonly used as an expert system implementation language.
  - Its ability to interface with databases, other languages, and the WWW makes it ideal for implementing ES software.