Experiments in Utility Computing: Hadoop and Condor

- Slides: 17

Experiments in Utility Computing: Hadoop and Condor
• Sameer Paranjpye
• Y! Web Search

Outline
• Introduction
  – Application environment, motivation, development principles
• Hadoop and Condor
  – Description, Hadoop-Condor interaction

Condor Week 2006

Introduction

Web Search Application Environment
• Data intensive distributed applications
  – Crawling, Document Analysis and Indexing, Web Graphs, Log Processing, …
  – Highly parallel workloads
  – Bandwidth to data is a significant design driver
• Very large production deployments
  – Several clusters of 100s-1000s of nodes
  – Lots of data (billions of records, input/output of 10s of TB in a single run)

Why Condor and Hadoop?
• To date, our Utility Computing efforts have been conducted using a command-control model
  – Closed, “cathedral” style development
  – Custom built, proprietary solutions
• Hadoop and Condor
  – Experimental effort to leverage open source for infrastructure components
  – Current deployment: Cluster for supporting research computations
    • Multiple users, running ad-hoc, experimental programs

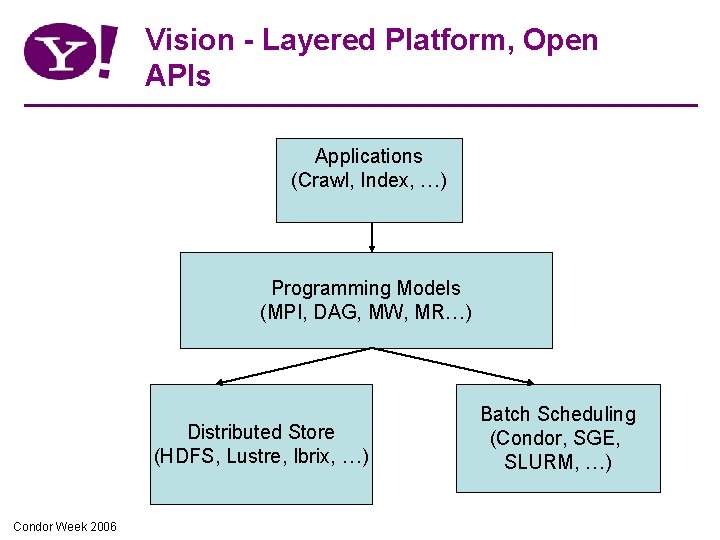

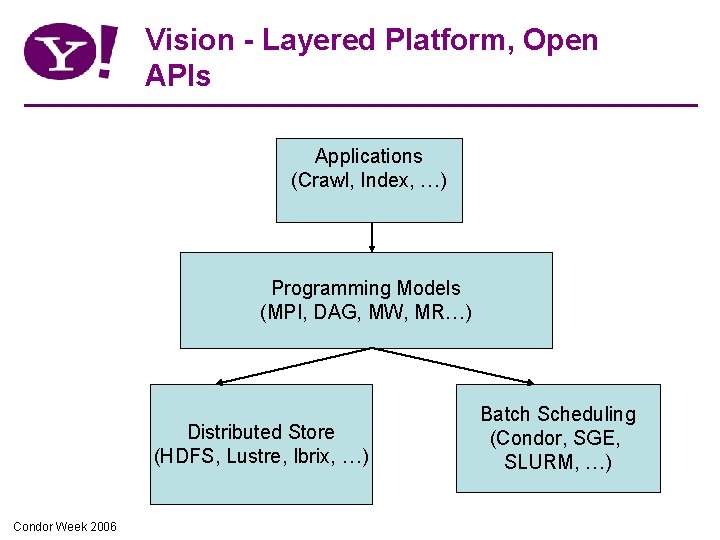

Vision - Layered Platform, Open APIs
• Applications (Crawl, Index, …)
• Programming Models (MPI, DAG, MW, MR, …)
• Distributed Store (HDFS, Lustre, Ibrix, …)
• Batch Scheduling (Condor, SGE, SLURM, …)

Development philosophy
• Adopt, Collaborate, Extend
• Open source commodity software
• Open APIs for interoperability
• Identify and use existing robust platform components
• Engage community and participate in developing nascent and emerging solutions

Hadoop and Condor

Hadoop
• Open source project developing
  – Distributed store
  – Implementation of Map/Reduce programming model
• Led by Doug Cutting
• Implemented in Java
• Alpha (0.1) release available for download
  – Apache distribution
• Genesis
  – Lucene and Nutch (Open source search)
  – Hadoop (factors out distributed compute/storage infrastructure)
• http://lucene.apache.org/hadoop
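For readers new to the Map/Reduce programming model named above, here is a minimal single-process sketch of the idea in Python. It is not Hadoop's Java API; the function names (`map_reduce`, `count_words_mapper`, `sum_reducer`) and the sample input are hypothetical and chosen only for illustration.

```python
from collections import defaultdict


def map_reduce(records, mapper, reducer):
    """Apply mapper to every record, group emitted values by key, reduce each group."""
    groups = defaultdict(list)
    for record in records:
        # A mapper may emit zero or more (key, value) pairs per record.
        for key, value in mapper(record):
            groups[key].append(value)
    # The reducer sees one key together with all values emitted for it.
    return {key: reducer(key, values) for key, values in groups.items()}


def count_words_mapper(line):
    """Hypothetical mapper: emit (word, 1) for every word in a line of text."""
    for word in line.split():
        yield word.lower(), 1


def sum_reducer(key, values):
    """Hypothetical reducer: total the counts for one word."""
    return sum(values)


counts = map_reduce(
    ["Hadoop and Condor", "Condor and HDFS"],
    count_words_mapper,
    sum_reducer,
)
```

In a distributed implementation the map calls and the per-key reduce calls run on different nodes; the sketch only shows the data flow between the two phases.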

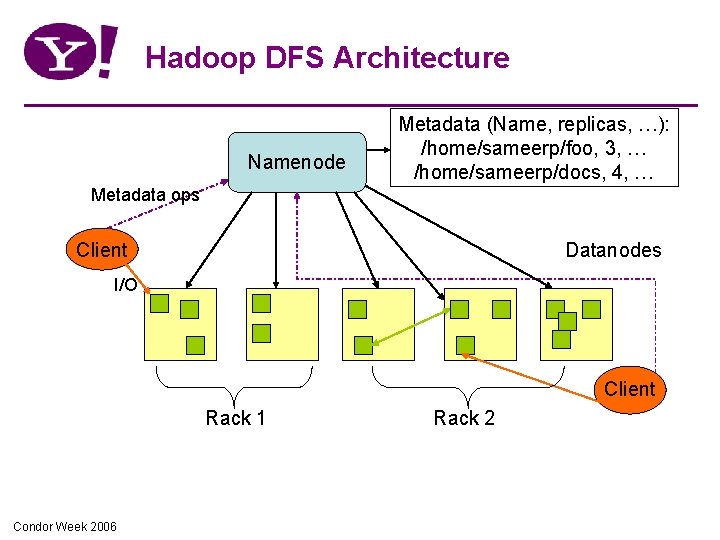

Hadoop DFS
• Distributed storage system
  – Files are divided into uniform sized blocks and distributed across cluster nodes
  – Block replication for failover
  – Checksums for corruption detection and recovery
  – DFS exposes details of block placement so that computation can be migrated to the data
• Notable differences from mainstream DFS work
  – Single ‘storage + compute’ cluster vs. separate clusters
  – Simple I/O centric API vs. attempts at POSIX compliance
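The block, replication, checksum, and placement ideas on this slide can be sketched in a few lines of Python. This is an illustration under stated assumptions, not Hadoop DFS code: the round-robin placement policy, the use of CRC32, and all names (`Block`, `split_into_blocks`, `is_corrupt`, `block_locations`) are hypothetical.

```python
import zlib
from dataclasses import dataclass


@dataclass
class Block:
    index: int
    data: bytes
    checksum: int   # CRC32 of data, recorded at write time (assumed checksum type)
    replicas: list  # names of the nodes holding a copy of this block


def split_into_blocks(data, block_size, nodes, replication):
    """Cut data into fixed-size blocks and assign each block to `replication` nodes."""
    if replication > len(nodes):
        raise ValueError("need at least as many nodes as replicas")
    blocks = []
    for index, offset in enumerate(range(0, len(data), block_size)):
        chunk = data[offset:offset + block_size]  # the final block may be shorter
        # Hypothetical round-robin placement; a real DFS would use its own policy.
        replicas = [nodes[(index + r) % len(nodes)] for r in range(replication)]
        blocks.append(Block(index, chunk, zlib.crc32(chunk), replicas))
    return blocks


def is_corrupt(block):
    """Detect corruption by recomputing the checksum over the stored bytes."""
    return zlib.crc32(block.data) != block.checksum


def block_locations(blocks):
    """Expose placement so a scheduler can run computation next to the data."""
    return {block.index: block.replicas for block in blocks}


blocks = split_into_blocks(b"x" * 10, block_size=4,
                           nodes=["a", "b", "c", "d", "e"], replication=3)
locations = block_locations(blocks)
```

When `is_corrupt` reports a bad copy, recovery would amount to reading the block from one of the other nodes listed in `replicas`.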

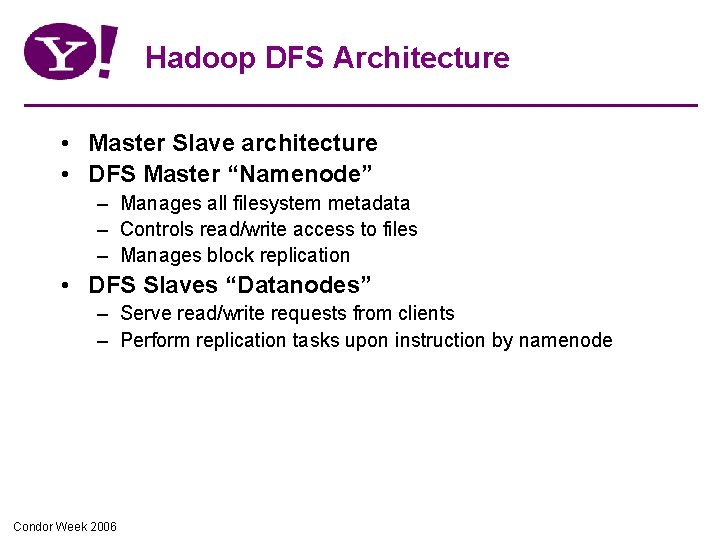

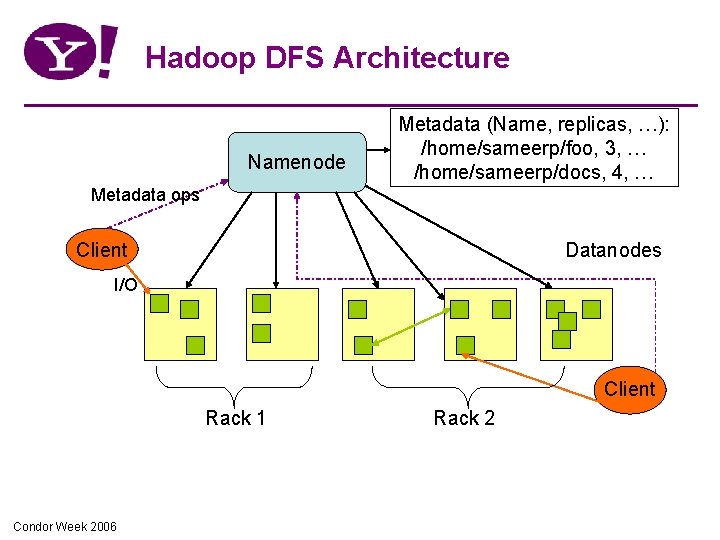

Hadoop DFS Architecture
• Master-slave architecture
• DFS Master “Namenode”
  – Manages all filesystem metadata
  – Controls read/write access to files
  – Manages block replication
• DFS Slaves “Datanodes”
  – Serve read/write requests from clients
  – Perform replication tasks upon instruction by namenode

[Figure: Hadoop DFS Architecture. Labels: Namenode with metadata (name, replicas, …), e.g. /home/sameerp/foo, 3, … and /home/sameerp/docs, 4, …; metadata ops; client I/O; clients; Datanodes in Rack 1 and Rack 2.]
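As a rough illustration of the namenode's role in managing replication, the sketch below tracks a target replica count per path and, when a datanode is lost, produces copy instructions for the surviving datanodes. It is a simplification under assumptions: it tracks whole files rather than blocks, and the class, method, and datanode names are hypothetical rather than taken from Hadoop.

```python
class Namenode:
    """Toy metadata master: knows replica targets and locations, never stores data."""

    def __init__(self):
        self.target_replicas = {}  # path -> desired number of replicas
        self.locations = {}        # path -> set of datanode names holding a copy

    def add_file(self, path, replicas, datanodes):
        self.target_replicas[path] = replicas
        self.locations[path] = set(datanodes)

    def datanode_failed(self, dead, live_nodes):
        """Forget the dead node and return (path, source, target) copy instructions."""
        instructions = []
        for path in sorted(self.locations):
            holders = self.locations[path]
            holders.discard(dead)
            if not holders:
                continue  # no surviving copy to replicate from
            missing = max(self.target_replicas[path] - len(holders), 0)
            source = sorted(holders)[0]  # any surviving holder can serve as source
            candidates = [n for n in live_nodes if n != dead and n not in holders]
            for target in candidates[:missing]:
                instructions.append((path, source, target))
                holders.add(target)
        return instructions


namenode = Namenode()
namenode.add_file("/home/sameerp/foo", 3, ["d1", "d2", "d3"])
instructions = namenode.datanode_failed("d2", ["d1", "d3", "d4", "d5"])
```

The instructions are only metadata; in the architecture described above, the datanodes would carry out the actual copy.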

Benchmarks

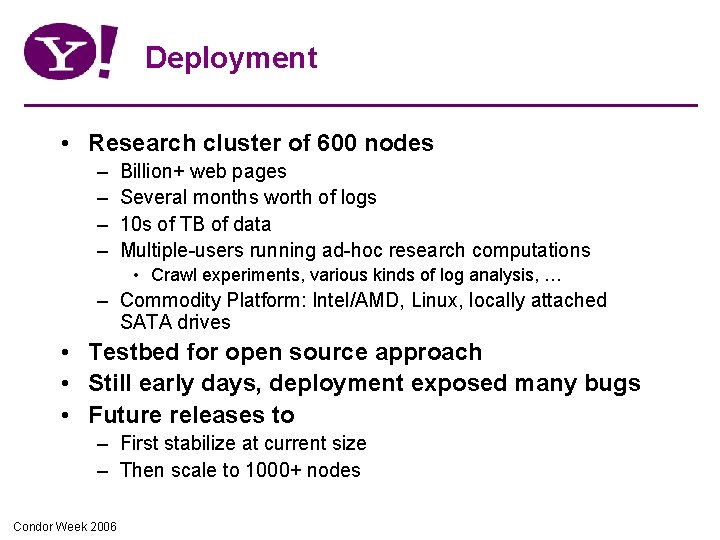

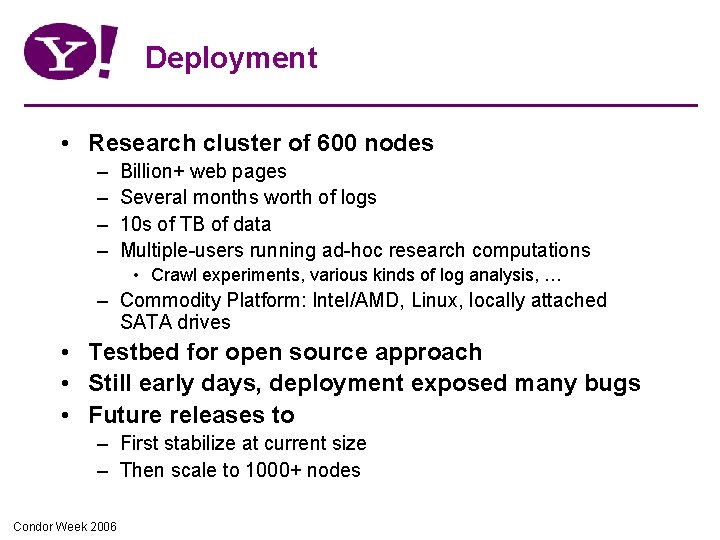

Deployment
• Research cluster of 600 nodes
  – Billion+ web pages
  – Several months' worth of logs
  – 10s of TB of data
  – Multiple users running ad-hoc research computations
    • Crawl experiments, various kinds of log analysis, …
  – Commodity platform: Intel/AMD, Linux, locally attached SATA drives
• Testbed for open source approach
• Still early days, deployment exposed many bugs
• Future releases to
  – First stabilize at current size
  – Then scale to 1000+ nodes

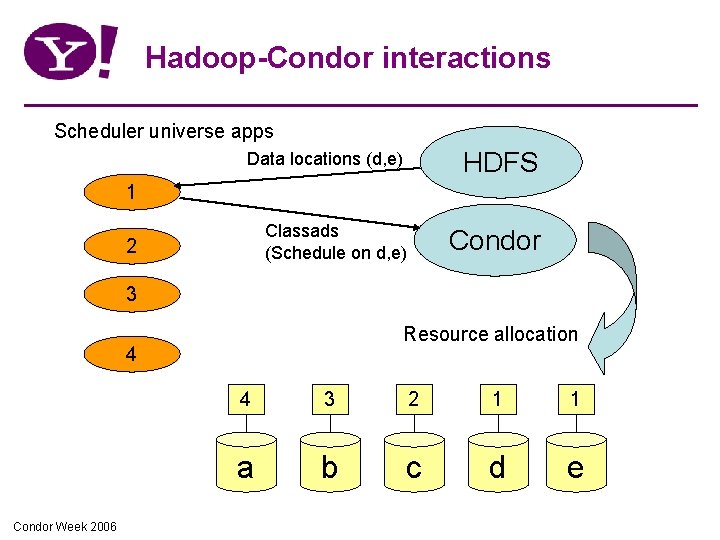

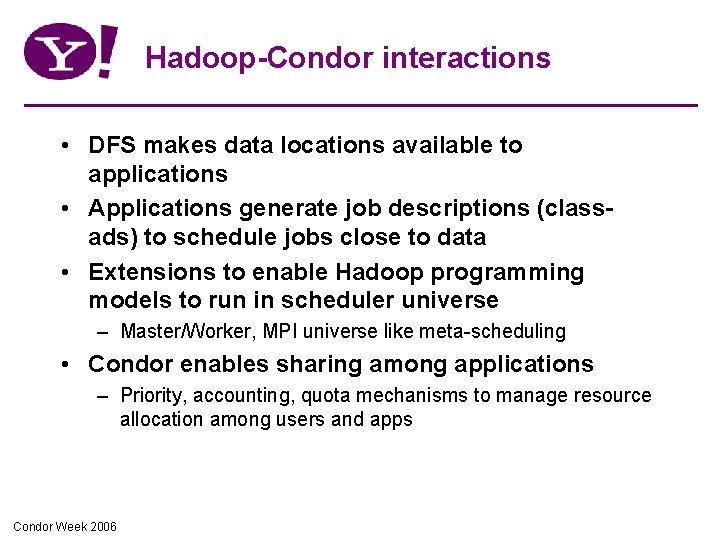

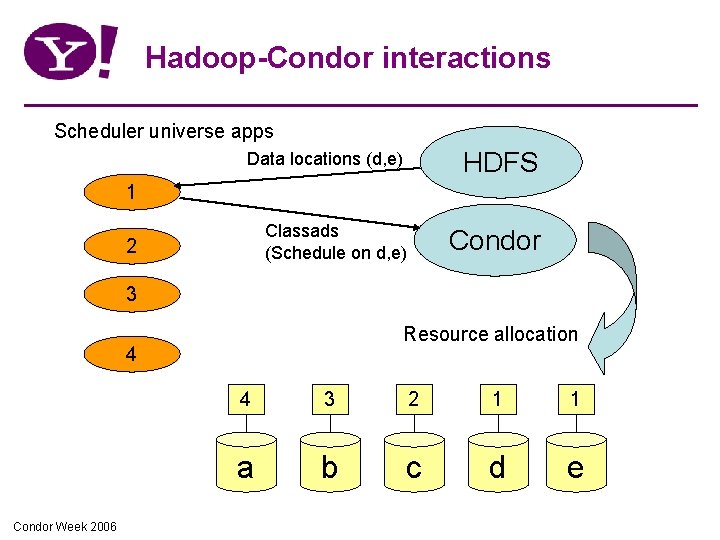

Hadoop-Condor interactions
• DFS makes data locations available to applications
• Applications generate job descriptions (classads) to schedule jobs close to data
• Extensions to enable Hadoop programming models to run in scheduler universe
  – Master/Worker, MPI universe like meta-scheduling
• Condor enables sharing among applications
  – Priority, accounting, quota mechanisms to manage resource allocation among users and apps
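One way an application might turn data locations into a job description is sketched below. The deck does not show the actual classads used, so this is an assumption: it builds a plain Condor submit description whose `requirements` expression restricts the job to the machines that hold the data. The helper name, executable, argument, and hostnames are hypothetical.

```python
def submit_description(executable, arguments, data_hosts):
    """Build a Condor submit description that pins a job to the hosts holding its data."""
    lines = [
        "universe = vanilla",
        f"executable = {executable}",
        f"arguments = {arguments}",
    ]
    if data_hosts:
        # Match only machines whose Machine attribute names a host with a local copy.
        clauses = " || ".join(f'(Machine == "{host}")' for host in data_hosts)
        lines.append(f"requirements = {clauses}")
    lines.append("queue")
    return "\n".join(lines)


# Hypothetical hosts standing in for nodes "d" and "e" reported by the DFS.
description = submit_description(
    "analyze_logs", "block-0007", ["d.example.com", "e.example.com"]
)
```

A hard `requirements` constraint leaves the job idle if those machines are busy. Expressing the same preference through `rank` instead would let Condor fall back to other nodes, at the cost of reading the data remotely.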

[Figure: Hadoop-Condor interactions. Labels: scheduler universe apps; data locations (d, e) from HDFS; classads (schedule on d, e) to Condor; resource allocation; nodes a, b, c, d, e.]

The end