Experiments in computer science Emmanuel Jeannot INRIA LORIA

- Slides: 10

Experiments in computer science. Emmanuel Jeannot, INRIA – LORIA. Aleae kick-off meeting, April 1st, 2009.

The discipline of computing: an experimental science

Studied objects (hardware, programs, data, protocols, algorithms, networks) are more and more complex.

Modern infrastructures:

- Processors have very nice features:
  - Cache
  - Hyperthreading
  - Multi-core
- The operating system impacts performance (process scheduling, socket implementation, etc.)
- The runtime environment plays a role (MPICH ≠ OpenMPI)
- Middleware has an impact (Globus ≠ GridSolve)
- Various parallel architectures that can be:
  - Heterogeneous
  - Hierarchical
  - Distributed
  - Dynamic

Experimental validation Emmanuel Jeannot 2/26

Analytic study

Purely analytical (math) models:

- Demonstration of properties (theorems)
- Models need to be tractable: oversimplification?
- Good for understanding the basics of the problem
- Most of the time, one still performs experiments (at least for comparison)

For a practical impact, an analytic study is not always possible or not sufficient.

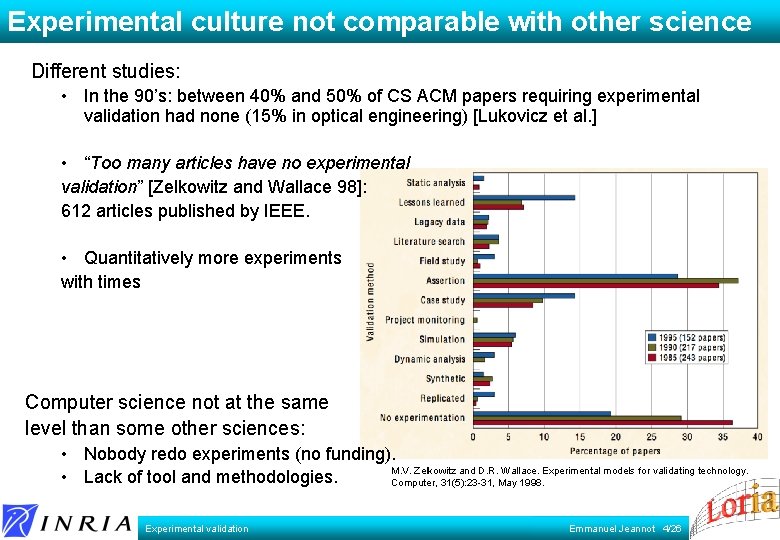

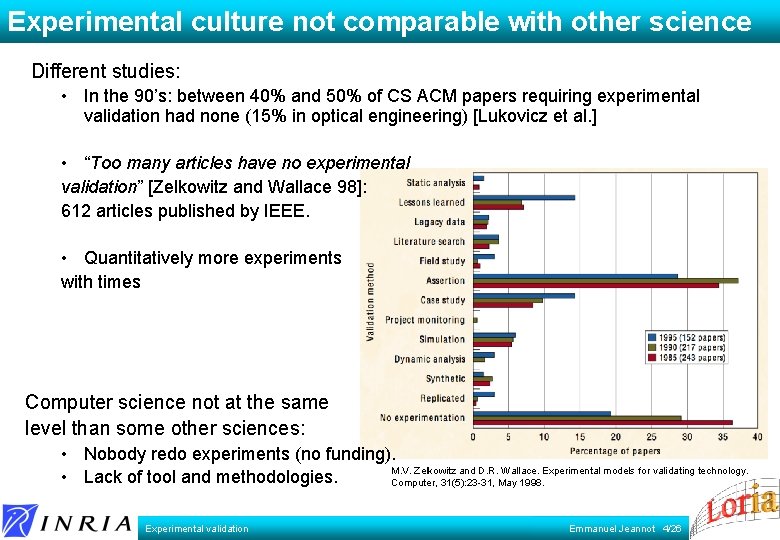

Experimental culture not comparable with other sciences

Different studies:

- In the 90’s: between 40% and 50% of CS ACM papers requiring experimental validation had none (15% in optical engineering) [Lukowicz et al.]
- “Too many articles have no experimental validation” [Zelkowitz and Wallace 98]: 612 articles published by IEEE.
- Quantitatively, more experiments over time.

Computer science is not at the same level as some other sciences:

- Nobody redoes experiments (no funding).
- Lack of tools and methodologies.

M. V. Zelkowitz and D. R. Wallace. Experimental models for validating technology. Computer, 31(5):23-31, May 1998.

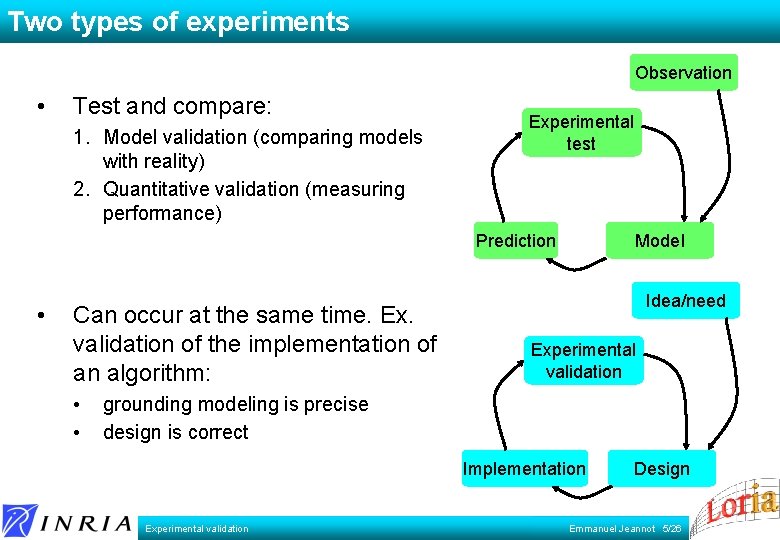

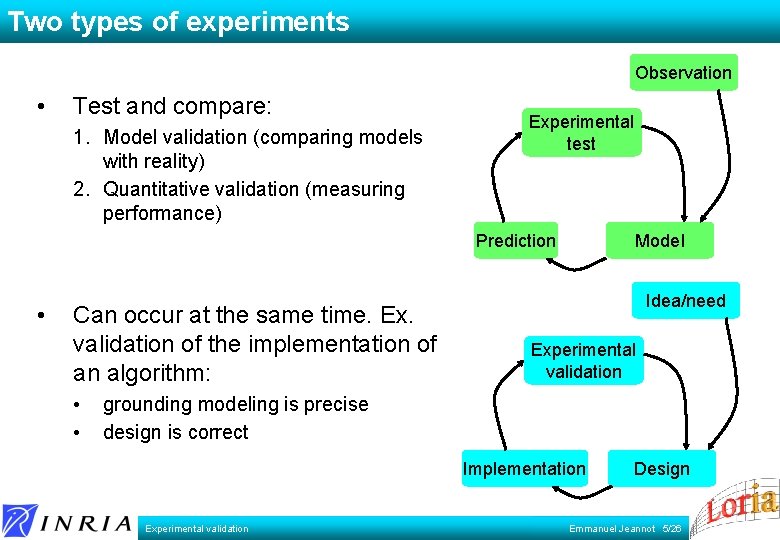

Two types of experiments

- Test and compare:
  1. Model validation (comparing models with reality)
  2. Quantitative validation (measuring performance)
- Both can occur at the same time. Example: validation of the implementation of an algorithm checks that:
  - the grounding modeling is precise
  - the design is correct

[Diagram labels: Observation, Experimental test, Prediction; Idea/need, Model, Design, Implementation, Experimental validation]
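Model validation, in the sense of comparing a model with reality, can be sketched as computing the relative error between what the model predicts and what an experiment measures. The snippet below is only an illustration: the function names, the 10% tolerance, and all numbers are hypothetical and do not come from the slides.

```python
def relative_errors(predicted, measured):
    """Relative error of each model prediction against the measured value."""
    if len(predicted) != len(measured):
        raise ValueError("predicted and measured must have the same length")
    return [abs(p - m) / abs(m) for p, m in zip(predicted, measured)]


def sizes_within_tolerance(sizes, predicted, measured, tolerance=0.10):
    """Input sizes for which the model stays within the given relative error."""
    errors = relative_errors(predicted, measured)
    return [s for s, e in zip(sizes, errors) if e <= tolerance]


# Hypothetical data: problem sizes, model predictions (s), measured run times (s).
sizes = [1_000, 10_000, 100_000, 1_000_000]
predicted = [0.010, 0.100, 1.000, 10.000]
measured = [0.0105, 0.104, 1.25, 16.0]

errors = relative_errors(predicted, measured)
valid_sizes = sizes_within_tolerance(sizes, predicted, measured)
print(valid_sizes)
```

With these made-up numbers the model tracks the measurements for the two smallest sizes and drifts for the larger ones, which is the kind of outcome that tells the experimenter where the model can be trusted.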

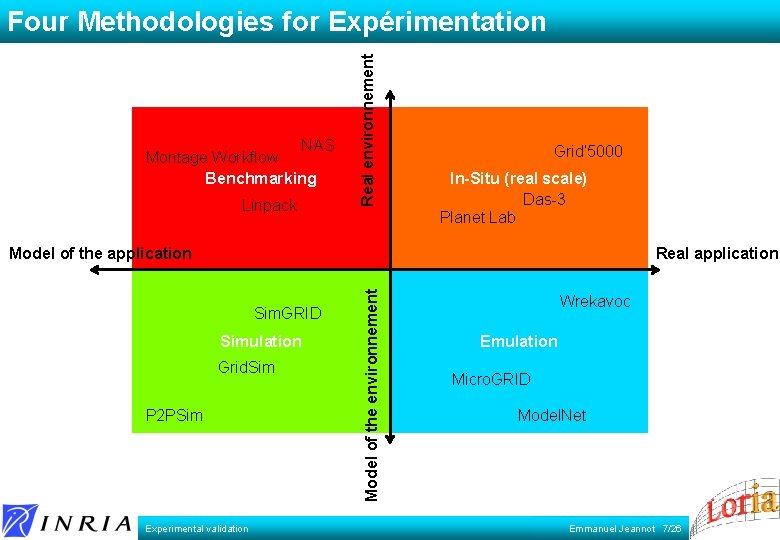

Experimental validation

A good alternative to analytical validation:

- Provides a comparison between algorithms or programs
- Provides a validation of the model or helps to define the validity domain of the model

Four methodologies:

- In-situ (real scale)
- Simulation
- Emulation
- Benchmarking

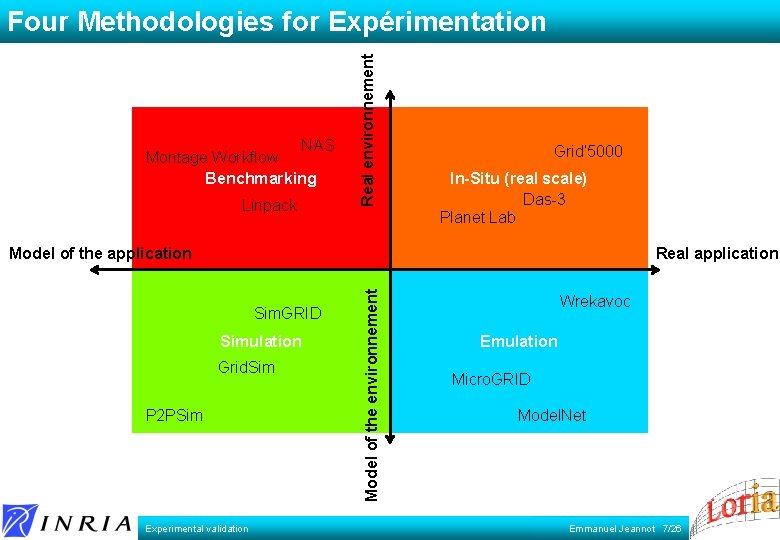

Four methodologies for experimentation

| | Real environment | Model of the environment |
|---|---|---|
| Real application | In-situ (real scale): Grid'5000, DAS-3, PlanetLab | Emulation: Wrekavoc, MicroGrid, ModelNet |
| Model of the application | Benchmarking: NAS, Linpack, Montage workflow | Simulation: SimGrid, GridSim, P2PSim |

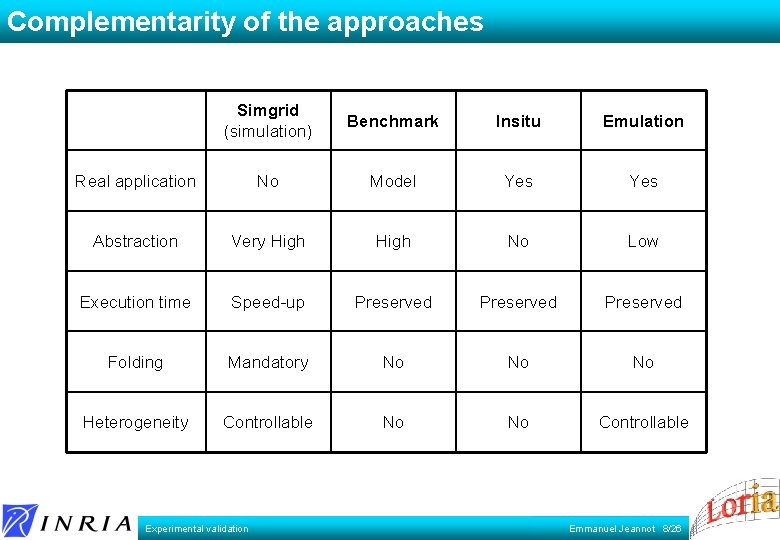

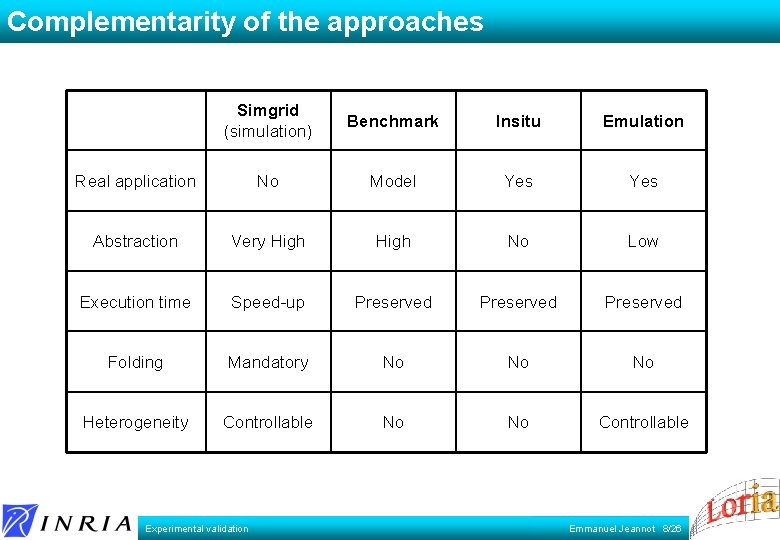

Complementarity of the approaches

Columns, in order: SimGrid (simulation), benchmark, in-situ, emulation.

- Real application: No, Model, Yes
- Abstraction: Very high, No, Low
- Execution time: Speed-up, Preserved
- Folding: Mandatory, No, No, No
- Heterogeneity: Controllable, No, No, Controllable

Simulation vs real scale

A good strategy:

1. Build benchmarks to assess the characteristics of the environments
2. Calibrate the model with real-scale experiments
3. Test real applications with benchmarks
4. Perform large-scale and reproducible experiments with simulation
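The calibration step of this strategy can be illustrated with a minimal sketch: fit the parameters of a simple model to real-scale measurements, then reuse the fitted model for larger, simulated scenarios. The linear cost model (time = latency + size / bandwidth), the function names, and the measurements below are assumptions made for illustration; the slides do not prescribe a particular model.

```python
def calibrate_linear_model(sizes, times):
    """Least-squares fit of times ~ latency + size / bandwidth.

    Returns (latency in seconds, bandwidth in bytes per second).
    """
    n = len(sizes)
    if n != len(times) or n < 2:
        raise ValueError("need at least two (size, time) pairs")
    mean_x = sum(sizes) / n
    mean_y = sum(times) / n
    sxx = sum((x - mean_x) ** 2 for x in sizes)
    if sxx == 0:
        raise ValueError("sizes must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(sizes, times))
    slope = sxy / sxx                  # seconds per byte
    latency = mean_y - slope * mean_x  # intercept of the fitted line
    return latency, 1.0 / slope


def predict_time(size, latency, bandwidth):
    """Transfer time predicted by the calibrated model."""
    return latency + size / bandwidth


# Hypothetical real-scale measurements: message sizes (bytes) and times (s).
sizes = [1e6, 2e6, 4e6, 8e6]
times = [0.011, 0.021, 0.041, 0.081]

latency, bandwidth = calibrate_linear_model(sizes, times)
# Use the calibrated model for a size that was not measured.
predicted = predict_time(16e6, latency, bandwidth)
print(latency, bandwidth, predicted)
```

A closed-form fit keeps the sketch dependency-free. A real calibration would need repeated measurements and a check that the linear model actually holds over the range of sizes used in the simulation.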

Conclusion

- Experimentation is important to validate our approach (models, solutions, etc.)
- But it has to be done carefully!
- This is an important part of the project
- Important: we are funded (partially) because we promised to use Grid'5000