Experimental Research

An experiment is a scientific investigation that sets out to determine the cause-and-effect relationship between two or more variables. A “good” experiment:

1. manipulates the independent variable (IV)
2. compares the groups in terms of the dependent variable (DV)
3. assures that only the IV causes the changes in the DV; if this is accomplished, the experiment has internal validity
4. minimizes confounding variables (any variable that varies along with the IV)
5. uses randomization
6. uses experimenter control
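Randomization in an experiment usually means random assignment of participants to conditions. The following is a minimal Python sketch, not a prescribed procedure: the participant IDs, the condition names, and the helper `randomly_assign` are all hypothetical. It shuffles the participants with a seeded generator and then deals them out to the conditions in turn, so group sizes differ by at most one.

```python
import random


def randomly_assign(participants, conditions, seed=None):
    """Shuffle participants, then deal them out to conditions in turn.

    Dealing round-robin after a shuffle keeps group sizes within one of
    each other while leaving each participant's group to chance.
    """
    rng = random.Random(seed)        # seeded generator for a reproducible assignment
    shuffled = list(participants)    # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    groups = {condition: [] for condition in conditions}
    for index, person in enumerate(shuffled):
        groups[conditions[index % len(conditions)]].append(person)
    return groups


# Hypothetical participant IDs and condition names, for illustration only.
people = [f"P{n:02d}" for n in range(1, 21)]
assignment = randomly_assign(people, ["control", "experimental"], seed=42)
for condition, members in assignment.items():
    print(condition, len(members), members)
```

The seed is fixed here only so the example is reproducible; in a real study it would be generated fresh and recorded.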

Independent or Dependent?

- Independent Variable
  - what the experimenter manipulates
  - the “cause” in the experiment
  - it is “independent” of all other variables
- Dependent Variable
  - what the experimenter measures
  - the “effect” in the experiment
  - it “depends” on the independent variable

Independent Variable: Factors and Levels

The experimental design depends on two things:

- The number of independent variables (or factors) used
  - one factor is nice and simple
  - two or more factors make it a factorial design
- The number of levels within each independent variable
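Because every level of one factor is combined with every level of the others, the number of conditions in a factorial design is the product of the numbers of levels. A short Python sketch with a hypothetical 2 x 3 design (the factor names and levels are made up for illustration) enumerates the cells with `itertools.product`:

```python
from itertools import product
from math import prod

# Hypothetical two-factor design: each key is an independent variable (factor),
# each list holds that factor's levels.
factors = {
    "noise": ["quiet", "loud"],
    "task_difficulty": ["easy", "medium", "hard"],
}

# Every combination of levels is one condition (cell) of the factorial design.
cells = list(product(*factors.values()))
n_cells = prod(len(levels) for levels in factors.values())

print(f"{' x '.join(str(len(v)) for v in factors.values())} design -> {n_cells} conditions")
for cell in cells:
    print(dict(zip(factors, cell)))
```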

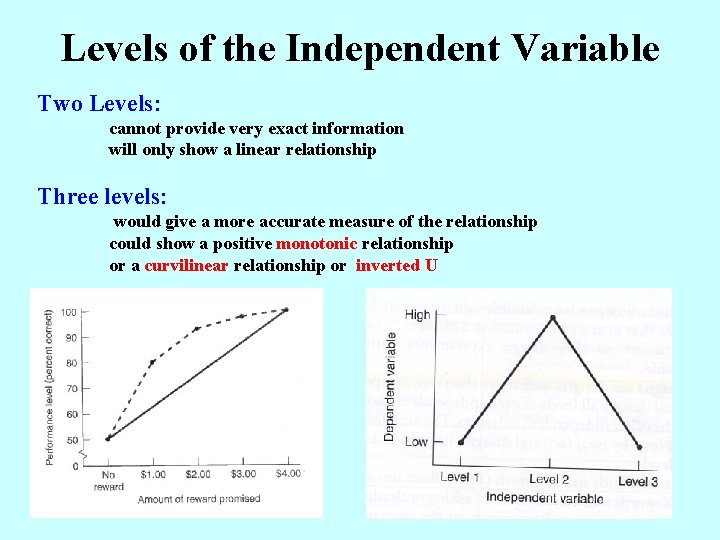

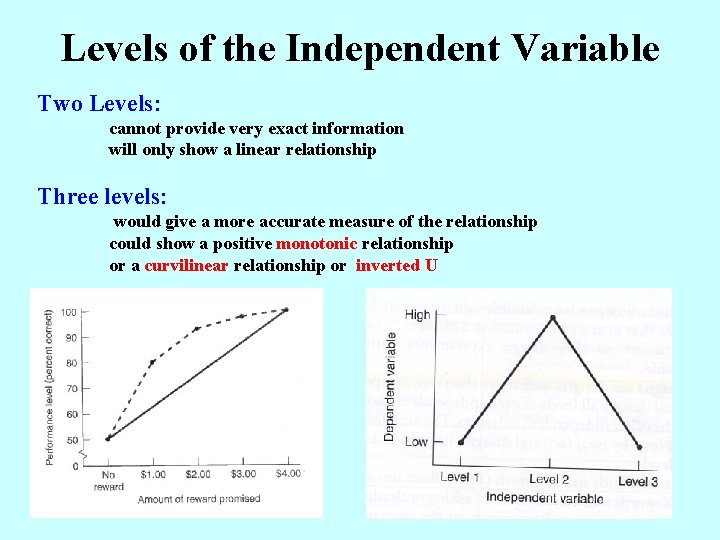

Levels of the Independent Variable

- Two levels
  - cannot provide very exact information
  - can show only a linear relationship, since two points are always joined by a straight line
- Three levels
  - give a more accurate measure of the relationship
  - can distinguish a positive monotonic relationship from a curvilinear one, such as an inverted U
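To see why two levels can only ever show a straight line, here is a crude Python sketch that labels the pattern of group means across ordered IV levels. The function `describe_shape` and the mean scores are hypothetical, and the labelling rule is a rough heuristic, not a statistical test (a real analysis would test trend components).

```python
def describe_shape(means):
    """Crudely label the pattern of DV group means across ordered IV levels.

    `means` holds one group mean per level, listed from the lowest to the
    highest level of the IV.
    """
    if len(means) < 2:
        raise ValueError("an IV needs at least two levels")
    steps = [later - earlier for earlier, later in zip(means, means[1:])]
    if len(means) == 2:
        # Two points are always joined by a straight line, so the only
        # possible answers are a linear trend or no difference at all.
        return "linear" if steps[0] != 0 else "no difference"
    if all(step > 0 for step in steps):
        return "positive monotonic"
    if all(step < 0 for step in steps):
        return "negative monotonic"
    # A peak or trough at an interior level means the trend changes direction.
    if means.index(max(means)) not in (0, len(means) - 1):
        return "curvilinear (inverted U)"
    if means.index(min(means)) not in (0, len(means) - 1):
        return "curvilinear (U-shaped)"
    return "other"


# Hypothetical mean scores at the low and high levels, then with a middle level added.
print(describe_shape([4.0, 6.0]))        # linear
print(describe_shape([4.0, 8.0, 6.0]))   # curvilinear (inverted U)
```

With only the low and high levels the pattern looks linear; adding a middle level with a higher mean reveals the inverted U.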

Dependent Variable Sensitivity

The DV should be sensitive enough to detect differences between groups.

- Ceiling effect
  - the IV seems to have no effect only because the task is so easy that participants quickly reach the maximum performance level
- Floor effect
  - the IV seems to have no effect only because the task is so difficult that participants cannot perform well
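A ceiling effect can be illustrated by capping scores at a test's maximum. In this Python sketch the maximum of 10 points and all scores are hypothetical: the treatment truly raises performance by 3 points, but because most scores hit the ceiling, the recorded difference is much smaller.

```python
from statistics import mean

MAX_SCORE = 10  # hypothetical test that cannot record more than 10 points


def recorded(true_scores, max_score=MAX_SCORE):
    """What the test records: any performance above the maximum is cut off."""
    return [min(score, max_score) for score in true_scores]


# Hypothetical true performance; the treatment really adds 3 points.
control_true = [8, 9, 10, 11, 12]
treatment_true = [11, 12, 13, 14, 15]

true_difference = mean(treatment_true) - mean(control_true)
observed_difference = mean(recorded(treatment_true)) - mean(recorded(control_true))
print(f"true difference: {true_difference:.1f}")          # 3.0
print(f"observed difference: {observed_difference:.1f}")  # 0.6
```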

Operational Definition

A definition of a variable that allows it to be concretely measured.

- A variable is an abstract concept
- It must be translated into a concrete form
  - so it can be measured
  - and studied empirically
- Helps to successfully communicate ideas to others
- Often a variety of measures are used

Dependent Variable Types

- Self-report measures
  - rating scales with descriptive anchors are most commonly used, e.g., Strongly Agree ___ ___ Strongly Disagree
- Behavioral measures
  - direct observations of behavior
  - typical behavioral measures:
    - occurrence: did the behavior occur?
    - absence: did the behavior fail to occur?
    - rate or frequency: how often did the behavior occur?
    - duration: how long did the behavior last?
    - reaction time: how soon after the stimulus did the behavior occur?
- Physiological measures
  - recordings of the body's responses
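The behavioral measures listed above can all be derived from a timestamped observation record. The Python sketch below assumes a hypothetical log format (one `Episode` per instance of the target behavior, with start and end times in seconds) and a hypothetical helper `behavioral_measures`; real coding schemes will differ.

```python
from dataclasses import dataclass


@dataclass
class Episode:
    start: float  # seconds from the start of the observation period
    end: float


def behavioral_measures(episodes, observation_length, stimulus_time):
    """Summarize one participant's coded behavior episodes (hypothetical log format)."""
    count = len(episodes)
    # Only episodes starting at or after the stimulus count toward reaction time.
    after_stimulus = [e.start for e in episodes if e.start >= stimulus_time]
    return {
        "occurred": count > 0,                               # occurrence (False = absence)
        "frequency": count,                                  # how often
        "rate_per_min": count / (observation_length / 60),   # frequency per minute
        "duration": sum(e.end - e.start for e in episodes),  # total time spent in the behavior
        "latency": (min(after_stimulus) - stimulus_time      # reaction time after the stimulus
                    if after_stimulus else None),
    }


log = [Episode(12.0, 15.5), Episode(40.0, 41.0), Episode(95.0, 99.5)]
print(behavioral_measures(log, observation_length=120, stimulus_time=30))
```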

Dependent Variable: Other Factors

- Multiple measures
  - it is often desirable to measure more than one DV
  - an IV effect on multiple, similar DVs increases confidence in the effect
  - it is useful to know whether the IV affects some DVs and not others
  - the order of the DV measures can be an issue
    - counterbalancing may be needed
    - always present the most important DV first
- Cost of the measures
  - some measures are more costly than others
- Ethics
  - may influence the choice of the DV
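Complete counterbalancing uses every possible order of the measures equally often. A minimal Python sketch with three hypothetical DV names and twelve hypothetical participants: `itertools.permutations` generates the 3! = 6 orders and `itertools.cycle` hands them out in rotation, so each order is used twice.

```python
from itertools import cycle, permutations

# Hypothetical dependent measures collected in the same session.
measures = ["mood_rating", "recall_test", "reaction_time"]

# Complete counterbalancing: every possible order of the measures is used.
orders = list(permutations(measures))

# Hand the orders out in rotation so each is used equally often.
participants = [f"P{n:02d}" for n in range(1, 13)]
schedule = dict(zip(participants, cycle(orders)))

for person, order in schedule.items():
    print(person, " -> ".join(order))
```

If, as the slide advises, the most important DV always comes first, one option is to fix it in first position and counterbalance only the remaining measures.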

Types of Data

- Categorical (qualitative) variables
  - Nominal, e.g., “yes/no”; “male/female”
  - Ordinal, e.g., “first, second, third”; “gold, silver, bronze”
- Continuous (quantitative) variables
  - Interval, e.g., “temperature”
  - Ratio, e.g., “exam scores”; “GPA”
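The type of data constrains which summary statistics are meaningful. The Python sketch below encodes the conventional guidance (mode for nominal, median for ordinal, mean for interval and ratio data); the `Scale` enum, the helper `typical_value`, and the example values are hypothetical, and ordinal categories are assumed to be coded as ranks.

```python
from enum import Enum
from statistics import mean, median_low, mode


class Scale(Enum):
    NOMINAL = "nominal"    # categories only
    ORDINAL = "ordinal"    # ordered categories
    INTERVAL = "interval"  # equal intervals, no true zero
    RATIO = "ratio"        # equal intervals and a true zero


def typical_value(values, scale):
    """Conventional measure of central tendency for each scale of measurement."""
    if scale is Scale.NOMINAL:
        return mode(values)        # only "which category is most common" is meaningful
    if scale is Scale.ORDINAL:
        return median_low(values)  # middle rank; median_low always returns an observed value
    return mean(values)            # equal intervals make averaging meaningful


print(typical_value(["yes", "no", "yes"], Scale.NOMINAL))  # yes
# Medal ranks coded as 1 = gold, 2 = silver, 3 = bronze.
print(typical_value([1, 2, 2, 3], Scale.ORDINAL))          # 2
# GPA values; the mean is about 3.3.
print(typical_value([3.2, 3.8, 2.9], Scale.RATIO))
```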

Other Types of Variables

- Situational variables
  - describe characteristics of a situation or environment
- Response variables
  - are the responses or behaviors of individuals
- Participant or subject variables
  - are individual differences, or the characteristics of the individuals
- Mediating variables
  - are the psychological processes that mediate the effects of a situational variable on a particular response

Who Do We Study?

- Subjects
  - are they really subjected to something against their will?
- Participants
  - or are they active, voluntary participants?
- Respondents
  - those who respond to a survey
- Informants
  - people with specific knowledge who assist the experimenter

Experimental Causality

- Temporal order
  - first manipulate the IV, then observe the result in the DV
- Covariance of the two variables
  - experimental subjects show the effect
  - control subjects do not show the effect
- Alternative explanations
  - the research design should control for these
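Covariance of the IV and DV is usually checked by comparing group means on the DV. This Python sketch computes Welch's t statistic for an experimental and a control group; the helper `welch_t` and the scores are hypothetical. It only quantifies the covariance: temporal order and the ruling out of alternative explanations come from the design, not from the statistic.

```python
from math import sqrt
from statistics import mean, variance


def welch_t(experimental, control):
    """Welch's t statistic for the difference between two independent group means."""
    difference = mean(experimental) - mean(control)
    # Standard error from each group's sample variance (n - 1 denominator);
    # Welch's version does not assume the two variances are equal.
    standard_error = sqrt(variance(experimental) / len(experimental)
                          + variance(control) / len(control))
    return difference / standard_error


# Hypothetical DV scores: the experimental group received the IV manipulation.
experimental_group = [14, 16, 15, 17, 18]
control_group = [11, 12, 10, 13, 12]
t = welch_t(experimental_group, control_group)
print(f"difference in means: {mean(experimental_group) - mean(control_group):.1f}, t = {t:.2f}")
```

For a p-value, SciPy's `scipy.stats.ttest_ind(a, b, equal_var=False)` runs the same Welch test.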

Experimental Validity

- Construct validity
  - adequacy of the operational definitions of variables
  - does the measure actually reflect the true meaning of the variable?
- Internal validity
  - the ability to draw conclusions about causal relationships from the data
  - can you make strong inferences about one variable affecting another?
- External validity
  - the extent to which the results can be generalized to other populations and other settings

Construct Validity

- Face validity: the content of the measure reflects the construct being measured
- Criterion-oriented validity: scores on the measure are related to a criterion of the construct
  - Predictive validity: scores predict future behavior
  - Concurrent validity: people who differ on the construct differ on the measure
- Convergent validity: scores are related to other measures of the same construct
- Discriminant validity: scores are not related to measures of different theoretical constructs

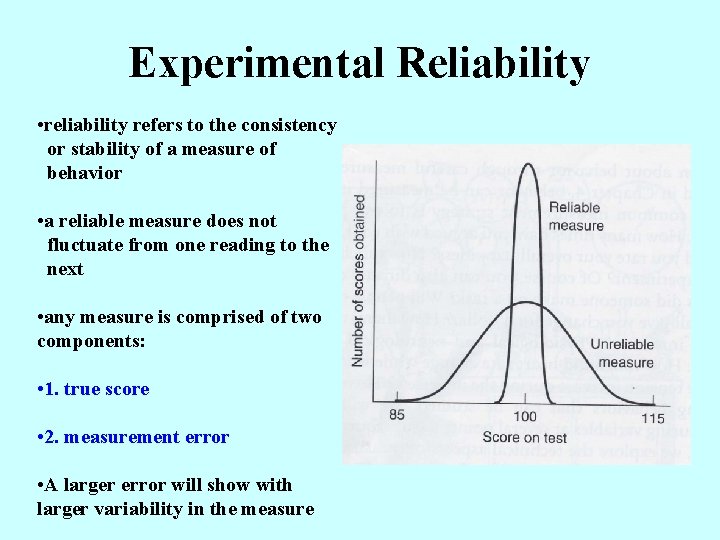

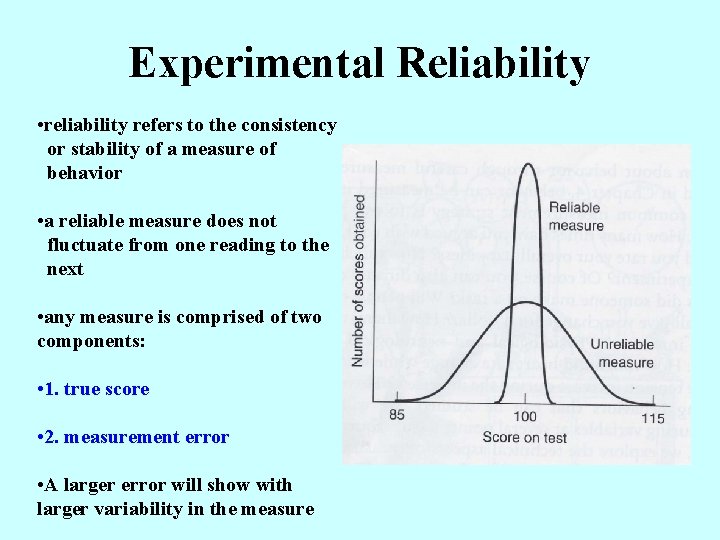

Experimental Reliability

- Reliability refers to the consistency or stability of a measure of behavior
- A reliable measure does not fluctuate from one reading to the next
- Any measurement is composed of two components:
  1. true score
  2. measurement error
- A larger measurement error shows up as greater variability in the measure
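The true-score model can be written as X = T + E: observed score = true score + measurement error. The following Python simulation, with a hypothetical true score of 50 and normally distributed errors (an assumption made for illustration), takes 1,000 repeated readings at two error levels; the larger error produces a larger spread of readings around the same true score.

```python
import random
from statistics import mean, stdev

TRUE_SCORE = 50.0  # hypothetical person's true score


def repeated_readings(error_sd, n_readings=1000, seed=1):
    """Observed score = true score + random measurement error (assumed normal, mean 0)."""
    rng = random.Random(seed)
    return [TRUE_SCORE + rng.gauss(0, error_sd) for _ in range(n_readings)]


precise = repeated_readings(error_sd=1.0)
noisy = repeated_readings(error_sd=5.0)
print(f"low error:  mean {mean(precise):.1f}, SD {stdev(precise):.2f}")
print(f"high error: mean {mean(noisy):.1f}, SD {stdev(noisy):.2f}")
```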

Types of Reliability

- Test-retest reliability
  - measures the same individuals at two different points in time
  - an alternate-forms reliability test can also be used
- Internal consistency reliability
  - measured at only one point in time
  - split-half reliability correlates scores on one half of the test with scores on the other half
  - Cronbach's alpha is based on the correlation of each item with every other item and on the number of items
  - items that do not correlate well with the others can be eliminated
- Interrater reliability
  - the extent to which raters agree in their assessments
  - measured with, e.g., Cohen's kappa
- Reliability and accuracy of measures
  - reliability tells us about measurement error
  - it does not tell us about the validity of the measure

Experimental Control

- Involves control of all variables
- All extraneous variables are kept constant
- Variables held constant cannot be responsible for the cause-and-effect relationship
- All participants must be treated the same

Controls: Participants

- Demand characteristics
  - participants form expectations about the study and behave differently as a result
  - they typically "help" the scientists by supporting the hypothesis
  - deception helps to counter demand characteristics
  - filler items can be used on surveys to disguise the true DV
  - participants can be asked about their perceptions of the study
  - not really an issue in nonparticipant field settings and naturalistic observation
- Placebo groups
  - typically used in drug studies
  - simulate the drug-taking protocol, but with a sugar pill in place of the drug
  - placebo effect: just thinking you are taking medicine can improve your condition
  - can be a very powerful effect
- Confederates and deception are used to keep the subjects from knowing the true nature of the experiment

Controls: Experimenter

All subjects need to be treated the same, and the experiment must remain consistent from start to finish.

- Experimenter bias
  - the experimenter inadvertently influences the outcome of the experiment
  - may give one group of subjects hints on how to answer a question
  - could treat the control and experimental groups differently
  - can be biased in the way subjective evaluations are scored
  - can make mistakes in data recording
  - "teacher expectancy" can influence performance
  - just knowing about the study can influence the results
  - assume the bias will happen unless it is corrected for
- Solutions to experimenter bias
  - blind designs
    - single-blind experimental design
    - double-blind experimental design
  - running participants simultaneously
  - well-trained experimenters who practice consistent behaviors
  - automatic/computerized scoring
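Blinding can be implemented by replacing condition names with codes before the data reach the experimenter. This Python sketch is hypothetical (the helper `blind_conditions`, the participant IDs, and the drug/placebo conditions are illustrative): it assigns random letter codes to conditions and returns a key that someone other than the experimenter holds until scoring is finished. It handles only the labels; a double-blind drug study also needs the placebo to be indistinguishable from the drug.

```python
import random
import string


def blind_conditions(assignment, rng=None):
    """Replace condition names with letter codes.

    `assignment` maps participant ID -> condition name. Returns the blinded
    sheet for the experimenter who runs the sessions and scores the data,
    plus the key that someone else holds until scoring is finished.
    """
    rng = rng or random.SystemRandom()
    conditions = sorted(set(assignment.values()))
    if len(conditions) > 26:
        raise ValueError("this sketch supports at most 26 conditions")
    letters = list(string.ascii_uppercase[:len(conditions)])
    rng.shuffle(letters)                    # which letter stands for which condition is left to chance
    key = dict(zip(letters, conditions))    # letter -> condition, kept away from the experimenter
    code_for = {condition: letter for letter, condition in key.items()}
    blinded = {pid: code_for[condition] for pid, condition in assignment.items()}
    return blinded, key


# Hypothetical drug study with a placebo group.
assignment = {"P01": "drug", "P02": "placebo", "P03": "drug", "P04": "placebo"}
blinded, key = blind_conditions(assignment)
print(blinded)  # letter codes only; which letter means "drug" varies from run to run

# Unblinding after scoring: translate the codes back.
unblinded = {pid: key[code] for pid, code in blinded.items()}
```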