Experimental Design: Research vs. Experiment

- Slides: 50


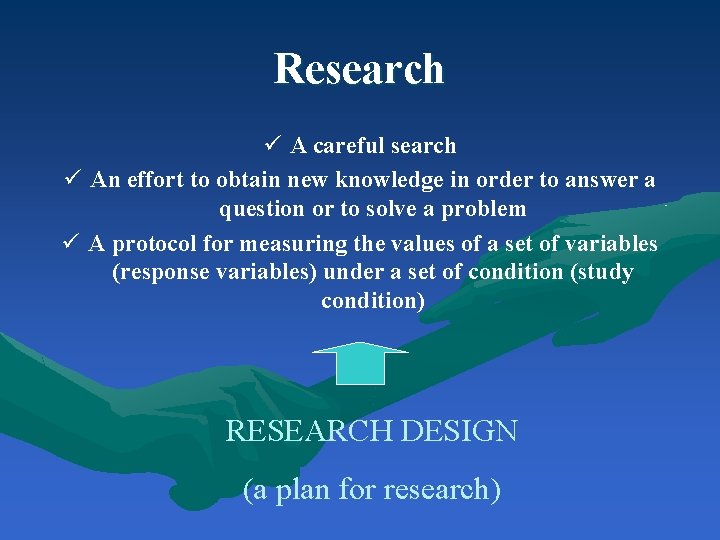

Research

- A careful search
- An effort to obtain new knowledge in order to answer a question or to solve a problem
- A protocol for measuring the values of a set of variables (response variables) under a set of conditions (study conditions)

Research design: a plan for research.

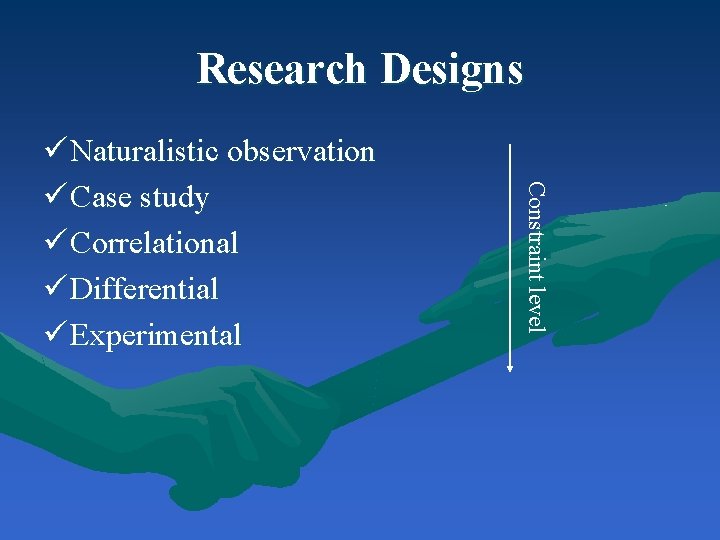

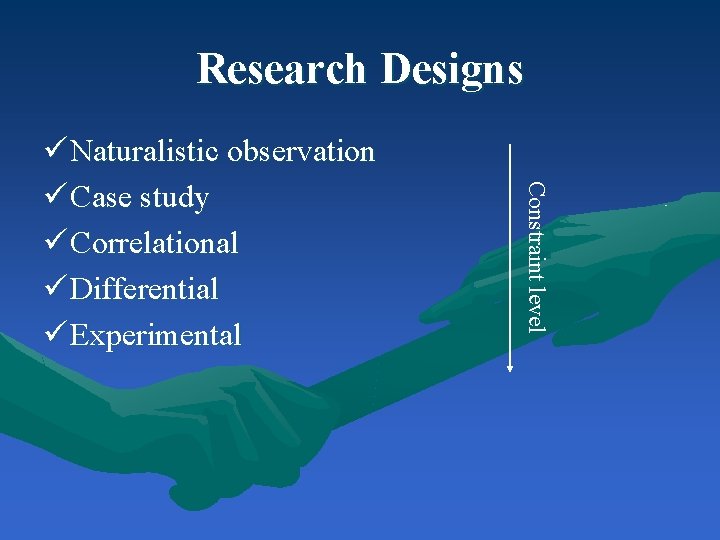

Research Designs by Constraint Level (lowest to highest)

- Naturalistic observation
- Case study
- Correlational
- Differential
- Experimental

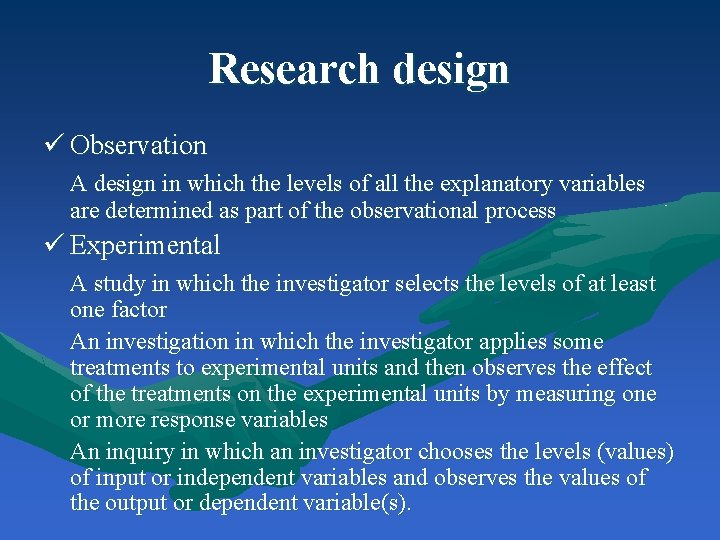

Research Design

- Observational: a design in which the levels of all the explanatory variables are determined as part of the observational process.
- Experimental:
  - A study in which the investigator selects the levels of at least one factor.
  - An investigation in which the investigator applies some treatments to experimental units and then observes the effect of the treatments on the experimental units by measuring one or more response variables.
  - An inquiry in which an investigator chooses the levels (values) of input or independent variables and observes the values of the output or dependent variable(s).

Strengths of Observation

- Can be used to generate hypotheses
- Can be used to negate a proposition
- Can be used to identify contingent relationships

Limitations of Observation

- Cannot be used to test hypotheses
- Poor representativeness
- Poor replicability
- Observer bias

Strengths of Experimental Designs

- Causation can be determined (if the experiment is properly designed)
- The researcher has considerable control over the variables of interest
- Can be designed to evaluate multiple independent variables

Limitations of Experimental Designs

- Not ethical in many situations
- Often more difficult and costly

Design of Experiments

- Define the objectives of the experiment and the population of interest.
- Identify all sources of variation.
- Choose an experimental design and specify the experimental procedure.

Defining the Objectives

- What questions do you hope to answer as a result of your experiment?
- To what population do these answers apply?

Defining the Objectives

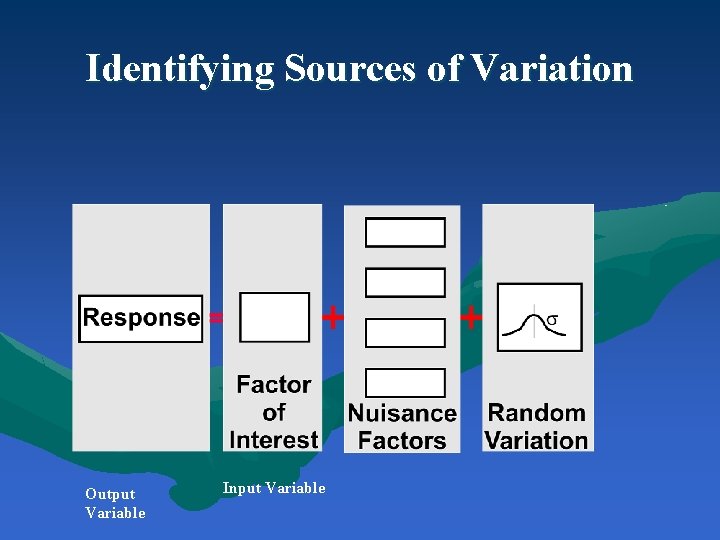

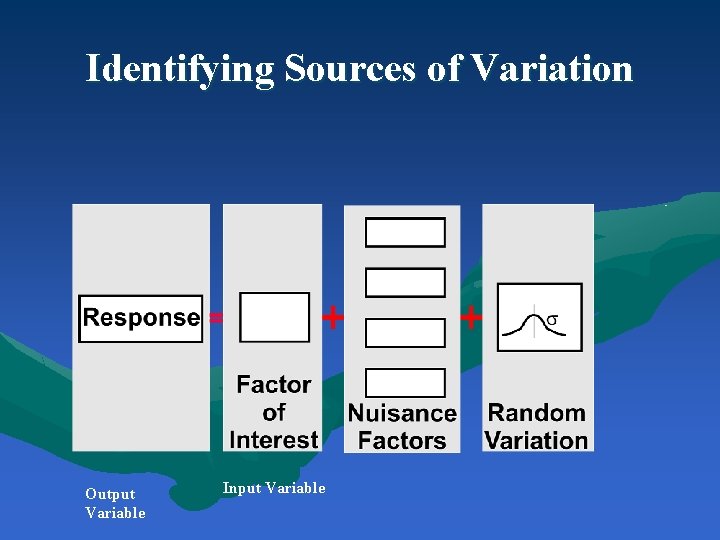

Identifying Sources of Variation

(Figure labels: input variable, output variable.)

Choosing an Experimental Design

What is an experimental design?

Experimental Design

- A plan and a structure to test hypotheses in which the analyst controls or manipulates one or more variables
- A protocol for measuring the values of a set of variables
- It contains independent and dependent variables

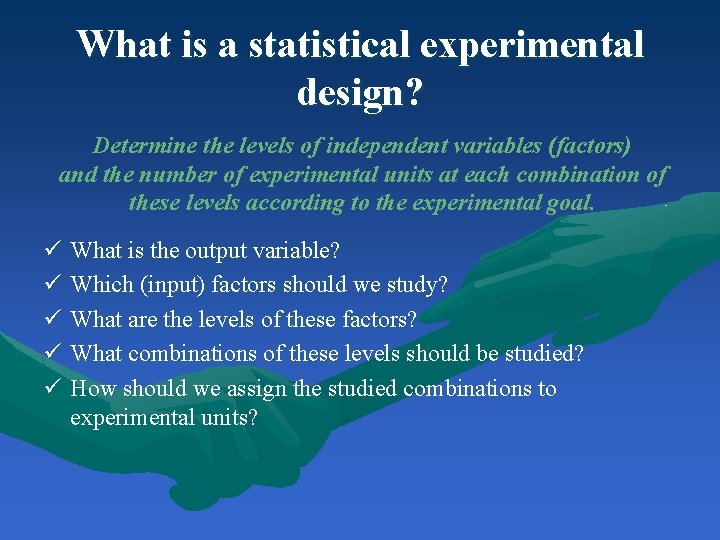

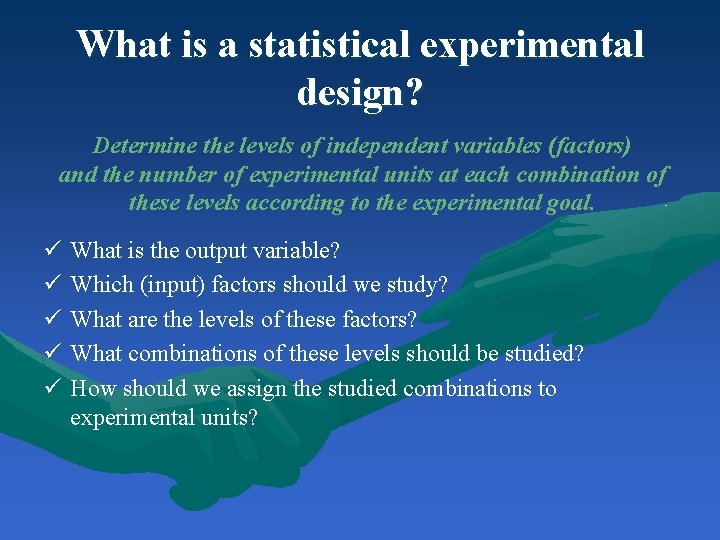

What Is a Statistical Experimental Design?

A statistical experimental design determines the levels of the independent variables (factors) and the number of experimental units at each combination of these levels, according to the experimental goal. It answers:

- What is the output variable?
- Which (input) factors should we study?
- What are the levels of these factors?
- What combinations of these levels should be studied?
- How should we assign the studied combinations to experimental units?

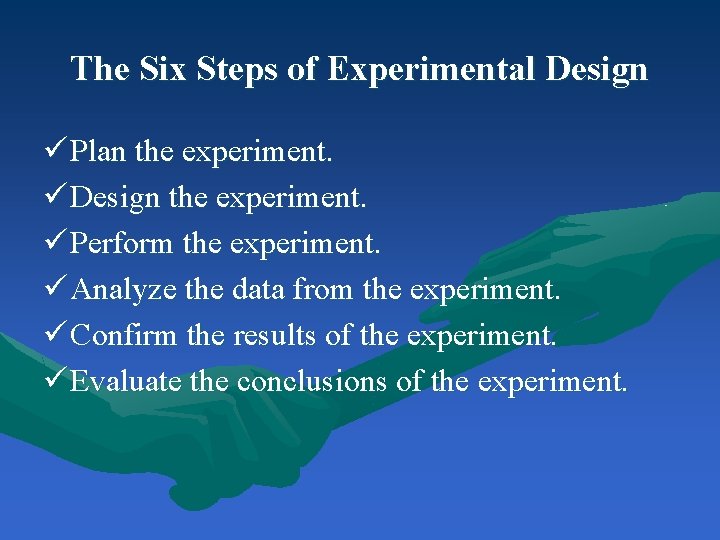

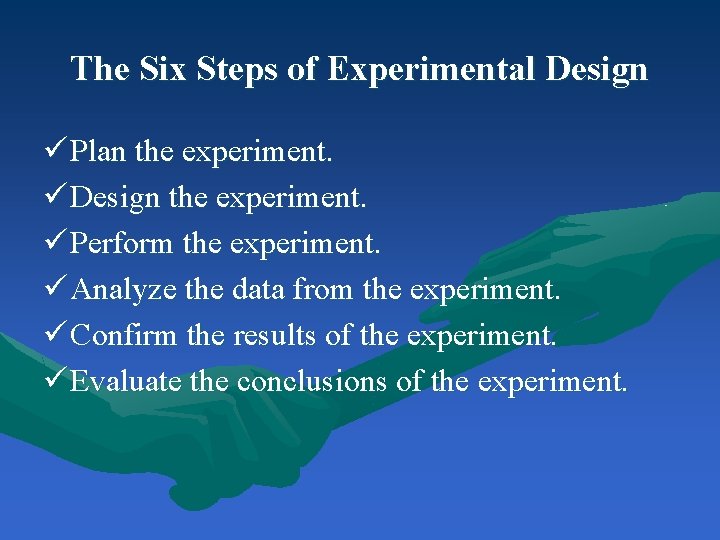

The Six Steps of Experimental Design

1. Plan the experiment.
2. Design the experiment.
3. Perform the experiment.
4. Analyze the data from the experiment.
5. Confirm the results of the experiment.
6. Evaluate the conclusions of the experiment.

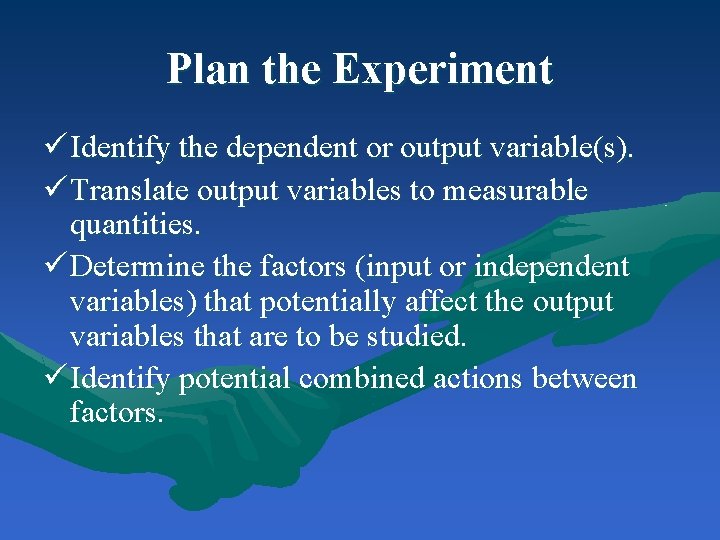

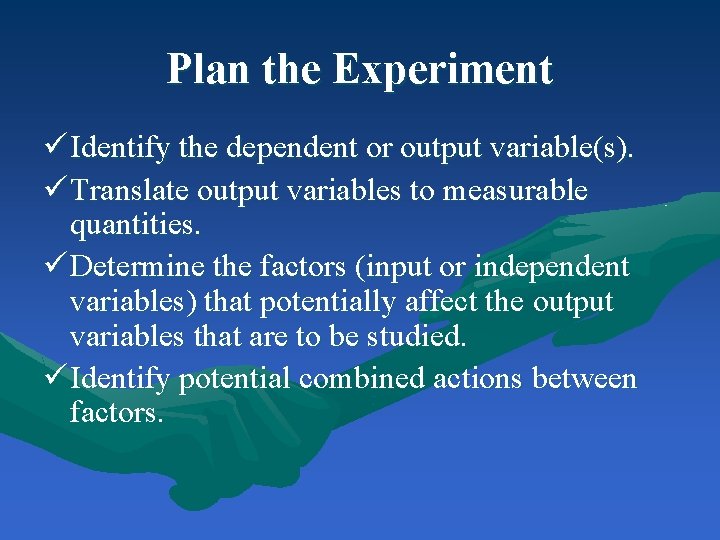

Plan the Experiment

- Identify the dependent or output variable(s).
- Translate output variables into measurable quantities.
- Determine the factors (input or independent variables) that potentially affect the output variables that are to be studied.
- Identify potential interactions between factors.

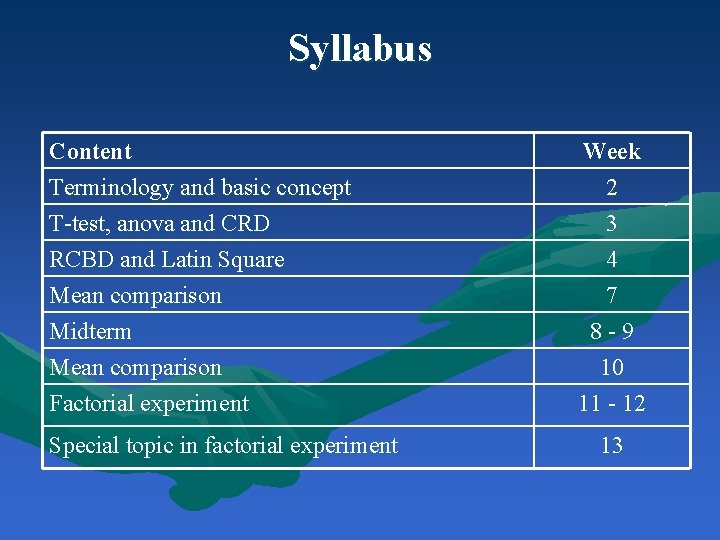

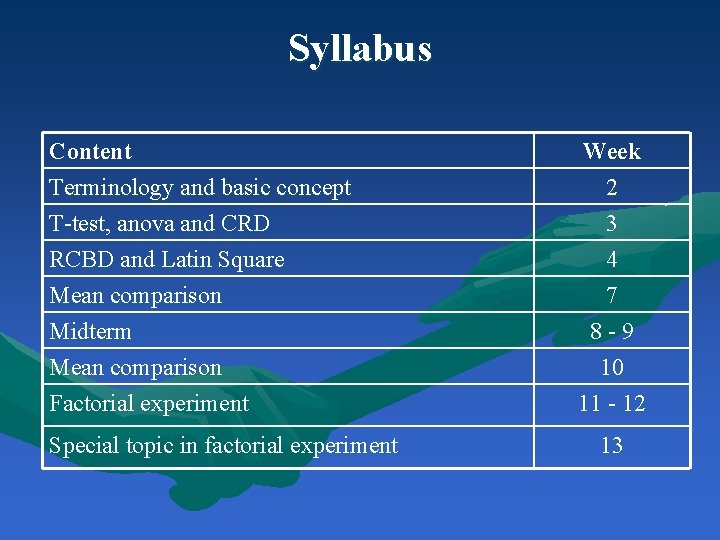

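Syllabus Content

| Week | Topic |
|---|---|
| 2 | Terminology and basic concepts |
| 3 | t-test, ANOVA and completely randomized design (CRD) |
| 4 | Randomized complete block design (RCBD) and Latin square |
| 7 | Mean comparison |
| 8-9 | Midterm |
| 10 | Mean comparison |
| 11-12 | Factorial experiment |
| 13 | Special topics in factorial experiments |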

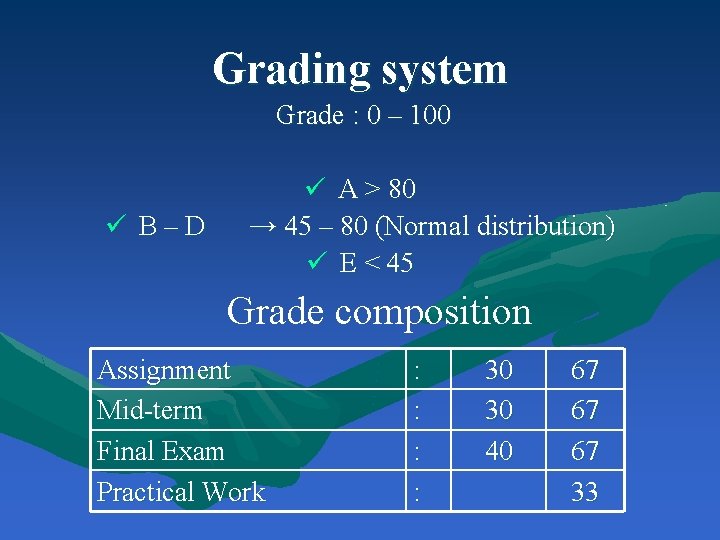

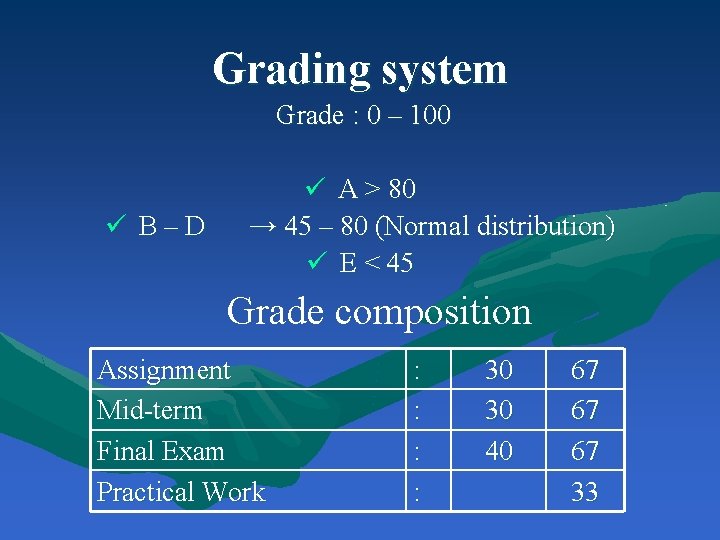

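Grading System

Grades are on a 0-100 scale:

- A: > 80
- B-D: 45-80 (assigned according to a normal distribution)
- E: < 45

Grade composition:

| Component | Weight (%) |
|---|---|
| Assignment | 30 |
| Mid-term | 30 |
| Final exam | 40 |

Assignment, mid-term and final exam together make up 67% of the grade; practical work makes up 33%.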

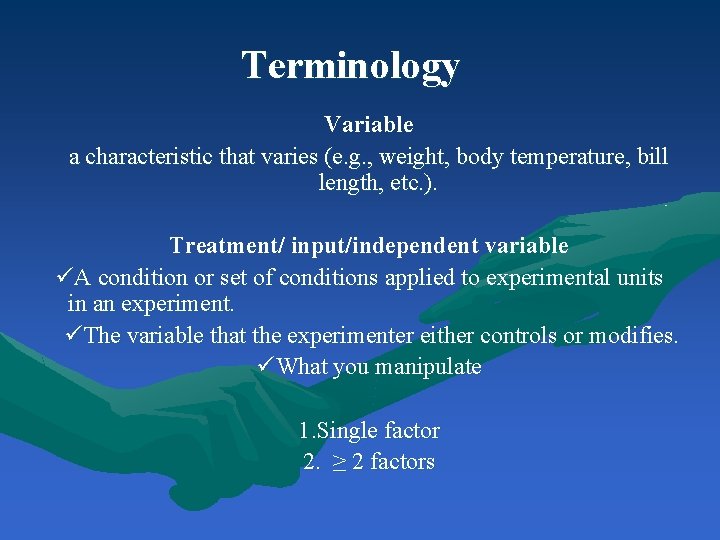

Terminology

Variable: a characteristic that varies (e.g., weight, body temperature, bill length).

Treatment / input / independent variable:

- A condition or set of conditions applied to experimental units in an experiment.
- The variable that the experimenter either controls or modifies.
- What you manipulate.

An experiment may involve:

1. A single factor
2. Two or more factors

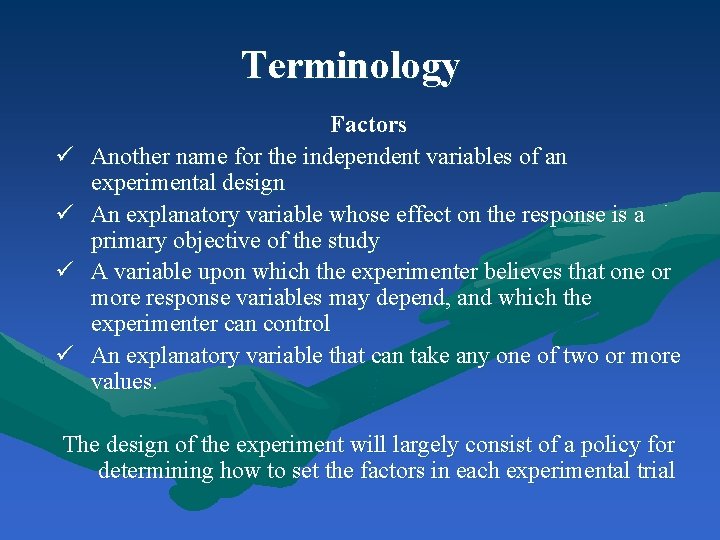

Terminology: Factors

- Another name for the independent variables of an experimental design.
- An explanatory variable whose effect on the response is a primary objective of the study.
- A variable upon which the experimenter believes that one or more response variables may depend, and which the experimenter can control.
- An explanatory variable that can take any one of two or more values.

The design of the experiment will largely consist of a policy for determining how to set the factors in each experimental trial.

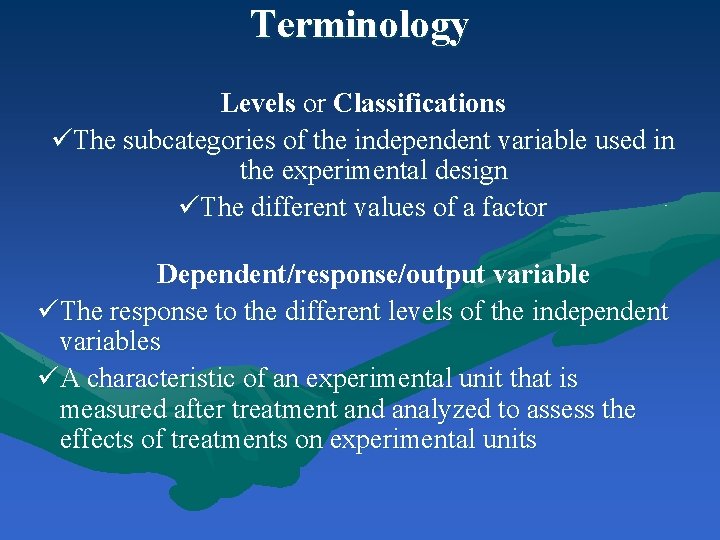

Terminology

Levels or classifications:

- The subcategories of the independent variable used in the experimental design.
- The different values of a factor.

Dependent / response / output variable:

- The response to the different levels of the independent variables.
- A characteristic of an experimental unit that is measured after treatment and analyzed to assess the effects of treatments on experimental units.

Terminology

- Treatment factor: a factor whose levels are chosen and controlled by the researcher to understand how one or more response variables change in response to varying levels of the factor.
- Treatment design: the collection of treatments used in an experiment.
- Full factorial treatment design: a treatment design in which the treatments consist of all possible combinations involving one level from each of the treatment factors.
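A full factorial treatment design can be generated mechanically as the Cartesian product of the factors' levels. The Python sketch below is illustrative only: the factor names and levels are hypothetical, borrowed from the salt-water and high-fat-diet example used later in these slides.

```python
from itertools import product


def full_factorial(factors):
    """Return every treatment (one level from each factor) as a dict."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*factors.values())]


# Hypothetical factors and levels, for illustration only.
factors = {
    "water": ["plain", "salt"],
    "diet": ["normal", "high-fat"],
}

for treatment in full_factorial(factors):
    print(treatment)
```

With two factors at two levels each this gives 2 × 2 = 4 treatments; in general the count is the product of the numbers of levels, which is why full factorial designs become expensive quickly.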

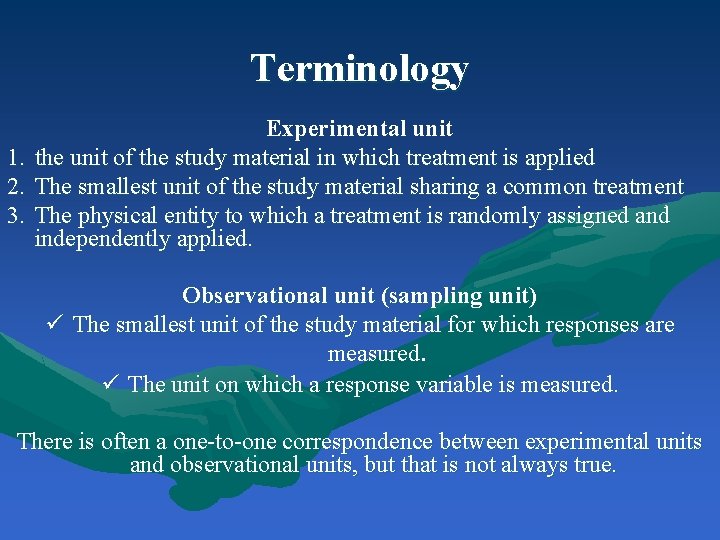

Terminology

Experimental unit:

1. The unit of study material to which a treatment is applied.
2. The smallest unit of study material sharing a common treatment.
3. The physical entity to which a treatment is randomly assigned and independently applied.

Observational unit (sampling unit):

- The smallest unit of study material for which responses are measured.
- The unit on which a response variable is measured.

There is often a one-to-one correspondence between experimental units and observational units, but that is not always true.
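When several observational units belong to one experimental unit, the experimental unit, not the individual measurement, is the replicate. A minimal sketch, assuming hypothetical repeated BP readings per mouse, collapses the readings to one mean per mouse before treatments are compared. Averaging is only one simple way to respect the experimental unit; mixed models are another.

```python
from statistics import mean

# Hypothetical data: three BP readings (observational units) per mouse
# (experimental unit). The treatment is assigned to the whole mouse.
readings = {
    "mouse1": ("salt", [131, 129, 133]),
    "mouse2": ("salt", [127, 125, 126]),
    "mouse3": ("plain", [118, 121, 119]),
    "mouse4": ("plain", [122, 120, 124]),
}


def per_unit_means(readings):
    """Collapse observational units to one value per experimental unit."""
    by_treatment = {}
    for treatment, values in readings.values():
        by_treatment.setdefault(treatment, []).append(mean(values))
    return by_treatment


units = per_unit_means(readings)
# The number of replicates per treatment is the number of mice, not readings.
print({t: len(v) for t, v in units.items()})
```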

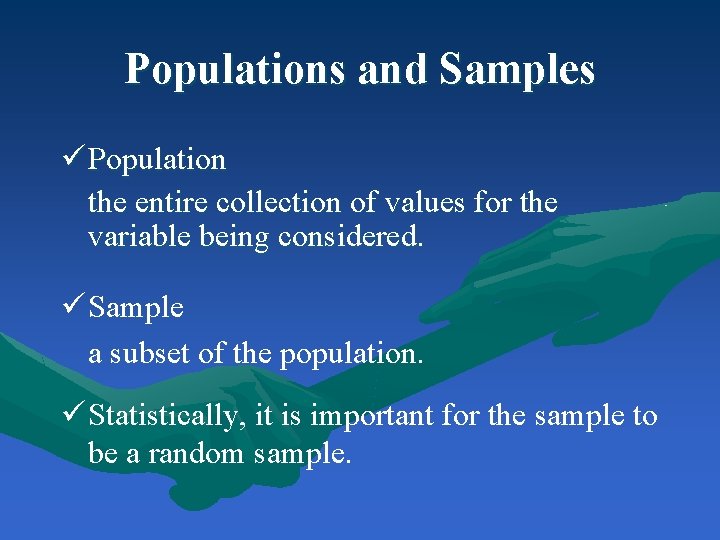

Populations and Samples

- Population: the entire collection of values for the variable being considered.
- Sample: a subset of the population.
- Statistically, it is important for the sample to be a random sample.

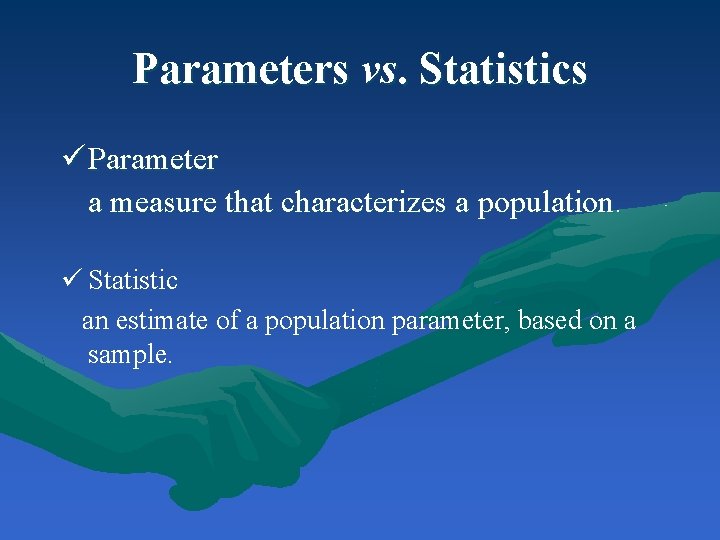

Parameters vs. Statistics

- Parameter: a measure that characterizes a population.
- Statistic: an estimate of a population parameter, based on a sample.
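The distinction can be made concrete with a small simulation. This sketch assumes a hypothetical, normally distributed population of body weights; the population mean is the parameter, and the mean of a simple random sample is the statistic that estimates it.

```python
import random
from statistics import mean

random.seed(1)

# Hypothetical population of 10,000 body weights (grams).
population = [random.gauss(25.0, 3.0) for _ in range(10_000)]
mu = mean(population)  # parameter: characterizes the whole population

sample = random.sample(population, 20)  # simple random sample, n = 20
x_bar = mean(sample)  # statistic: estimate of mu based on the sample

print(f"parameter mu = {mu:.2f}, statistic x_bar = {x_bar:.2f}")
```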

Basic Principles

1. Formulate the question/goal in advance
2. Comparison/control
3. Replication
4. Randomization
5. Stratification (blocking)
6. Factorial experiments

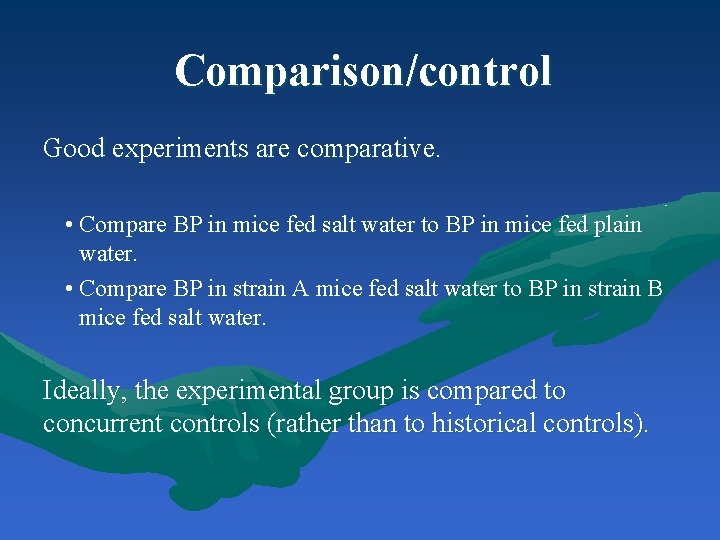

Comparison/Control

Good experiments are comparative.

- Compare blood pressure (BP) in mice fed salt water to BP in mice fed plain water.
- Compare BP in strain A mice fed salt water to BP in strain B mice fed salt water.

Ideally, the experimental group is compared to concurrent controls (rather than to historical controls).

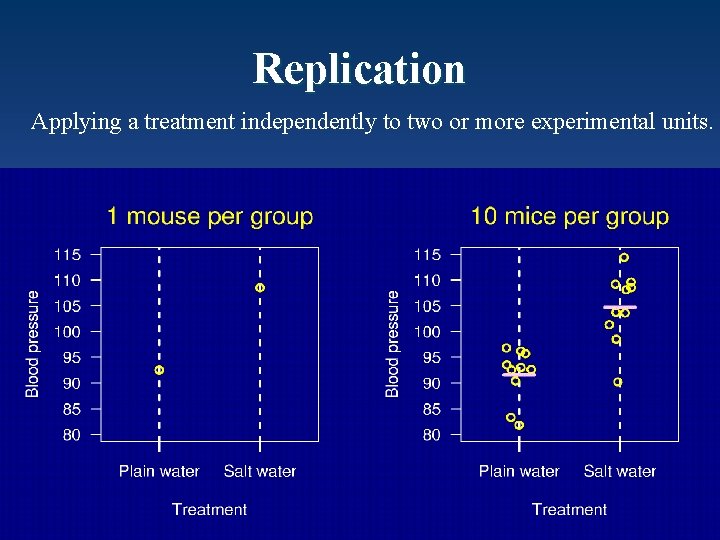

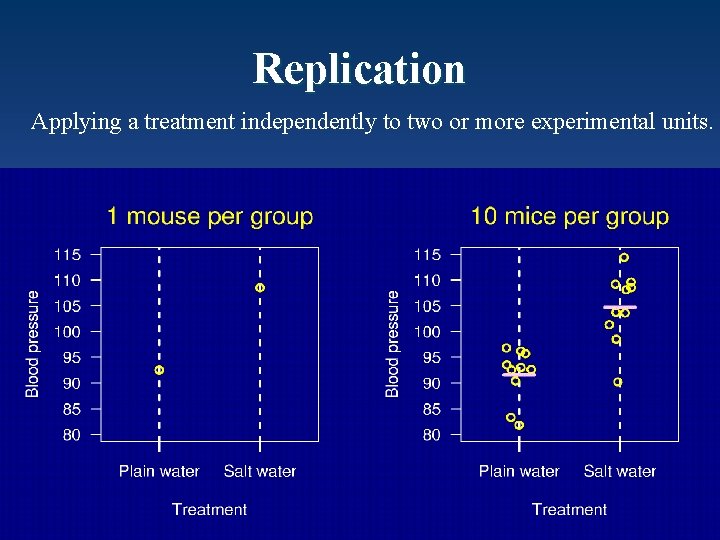

Replication

Applying a treatment independently to two or more experimental units.

Why Replicate?

- Reduce the effect of uncontrolled variation (i.e., increase precision).
- Quantify uncertainty.
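Replication is what makes uncertainty quantifiable: with a single unit per treatment there is no estimate of variability at all. The sketch below, using hypothetical BP values, computes the mean and its standard error from independent replicates; the standard error shrinks roughly in proportion to 1/√n as replicates are added.

```python
from math import sqrt
from statistics import mean, stdev


def summarize(replicates):
    """Mean and standard error of the mean from independent replicates."""
    n = len(replicates)
    if n < 2:
        raise ValueError("need at least two replicates to estimate variability")
    return mean(replicates), stdev(replicates) / sqrt(n)


# Hypothetical BP values from six independently treated mice.
bp = [128, 135, 131, 126, 133, 129]
m, se = summarize(bp)
print(f"mean = {m:.1f}, SE = {se:.2f}")
```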

Randomization

- Random assignment of treatments to experimental units.
- Experimental subjects (“units”) should be assigned to treatment groups at random.

At random does not mean haphazardly. One needs to explicitly randomize using:

- A computer, or
- Coins, dice or cards.
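Explicit randomization with a computer can be as simple as shuffling a balanced list of treatment labels. The following is a sketch of a completely randomized assignment with equal group sizes; the mouse identifiers, the two treatments and the seed are hypothetical.

```python
import random


def randomize(units, treatments, seed=None):
    """Completely randomized assignment with equal group sizes."""
    if len(units) % len(treatments):
        raise ValueError("number of units must be a multiple of the number of treatments")
    rng = random.Random(seed)
    # Balanced list of labels, then a random permutation of it.
    labels = treatments * (len(units) // len(treatments))
    rng.shuffle(labels)
    return dict(zip(units, labels))


mice = [f"mouse{i}" for i in range(1, 13)]
assignment = randomize(mice, ["salt water", "plain water"], seed=2024)
for mouse, treatment in assignment.items():
    print(mouse, treatment)
```

Recording the seed makes the allocation reproducible, so the randomization can be checked or repeated later.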

Why Randomize?

- Avoid bias.
  - For example, the first six mice you grab may have intrinsically higher BP.
- Control the role of chance.
  - Randomization allows the later use of probability theory, and so gives a solid foundation for statistical analysis.

Stratification (Blocking)

Grouping similar experimental units together and assigning different treatments within such groups of experimental units.

If you anticipate a difference between morning and afternoon measurements:

- Ensure that within each period, there are equal numbers of subjects in each treatment group.
- Take account of the difference between periods in your analysis.
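A randomized block design applies the same shuffling idea separately inside each block, which guarantees equal numbers per treatment within each period. In this sketch the blocks (morning and afternoon sessions), unit names and seed are hypothetical.

```python
import random


def block_randomize(blocks, treatments, seed=None):
    """Randomize treatments separately within each block so that every block
    contains each treatment equally often (randomized block design)."""
    rng = random.Random(seed)
    assignment = {}
    for block, units in blocks.items():
        if len(units) % len(treatments):
            raise ValueError(f"block {block!r}: size must be a multiple of the number of treatments")
        labels = treatments * (len(units) // len(treatments))
        rng.shuffle(labels)  # randomization happens within the block only
        for unit, label in zip(units, labels):
            assignment[unit] = (block, label)
    return assignment


# Hypothetical: 8 mice measured in the morning, 8 in the afternoon.
blocks = {
    "morning": [f"m{i}" for i in range(1, 9)],
    "afternoon": [f"a{i}" for i in range(1, 9)],
}
plan = block_randomize(blocks, ["salt water", "plain water"], seed=7)
for unit, (block, treatment) in plan.items():
    print(block, unit, treatment)
```

The block label is kept with each assignment so that the period can be included as a term in the analysis.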

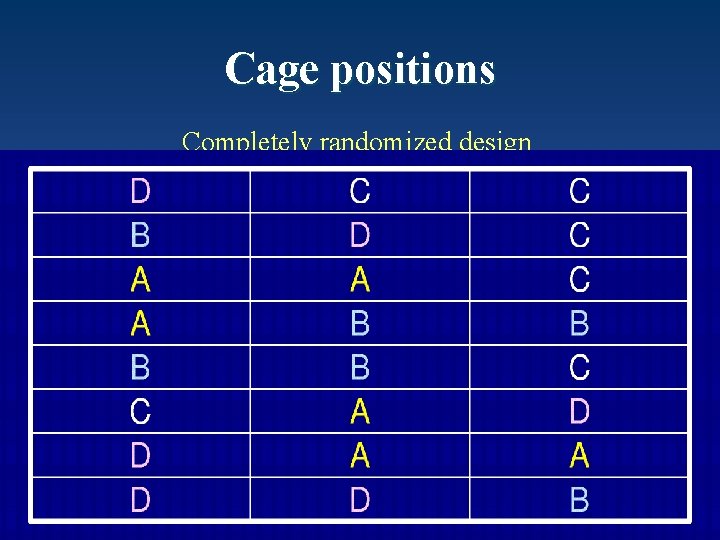

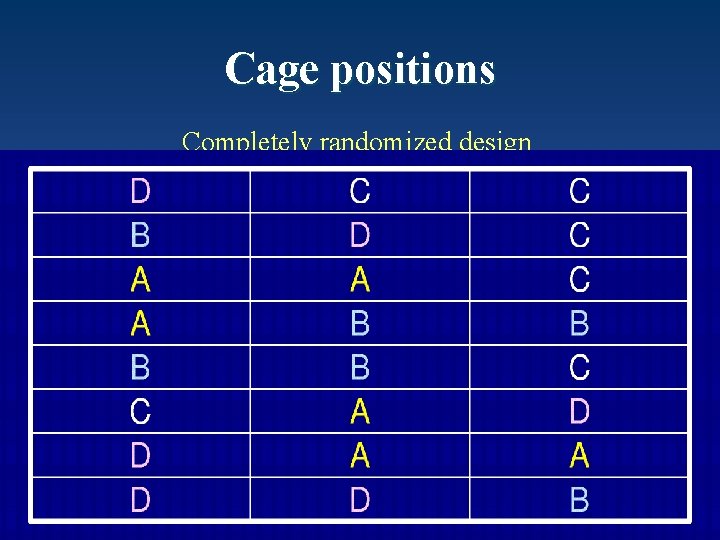

Cage positions Completely randomized design

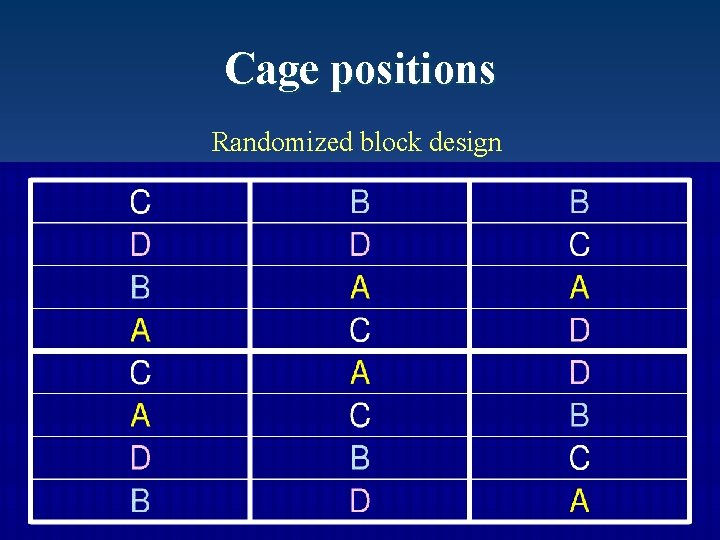

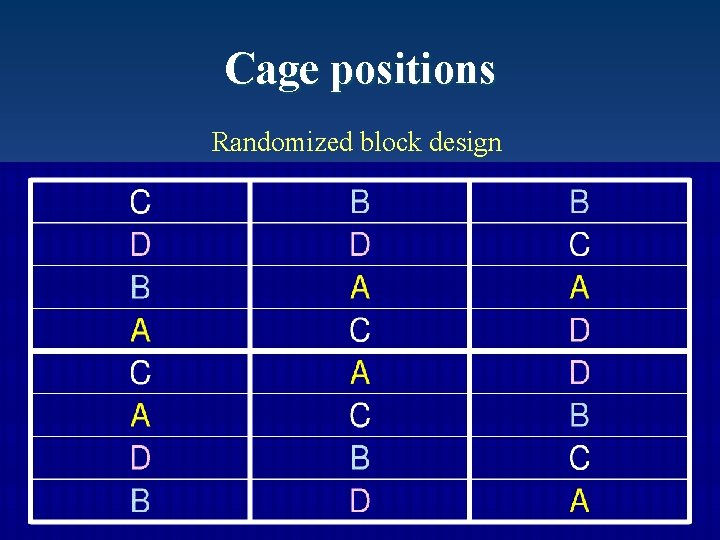

Cage positions Randomized block design

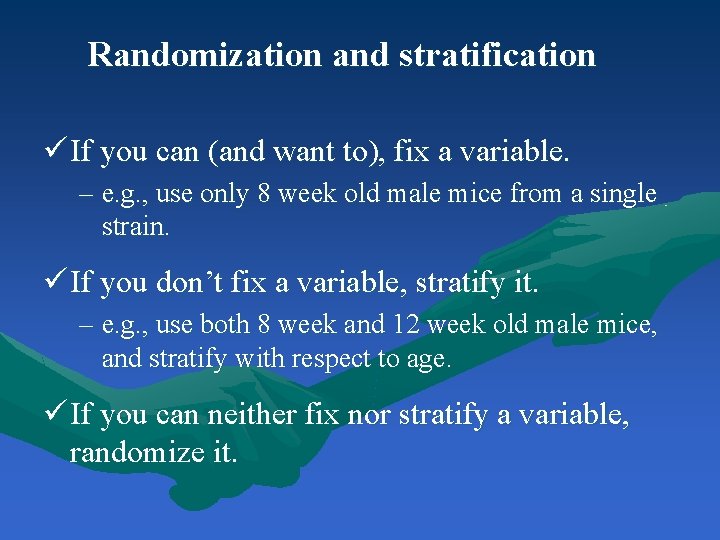

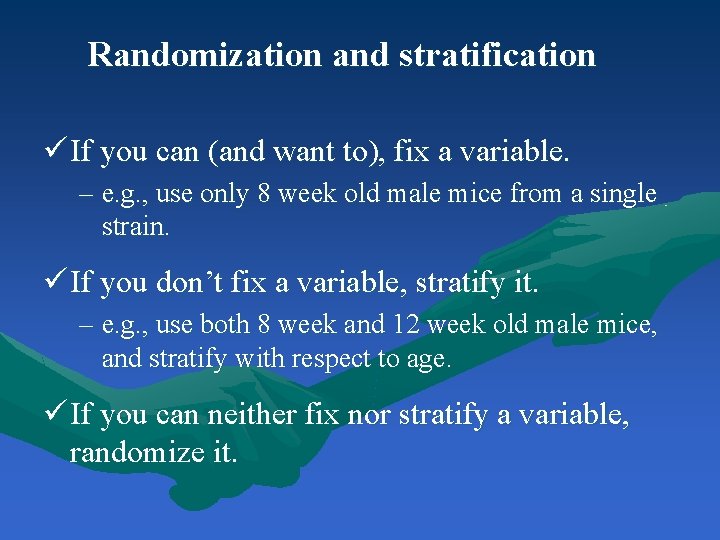

Randomization and Stratification

- If you can (and want to), fix a variable.
  - E.g., use only 8-week-old male mice from a single strain.
- If you don’t fix a variable, stratify it.
  - E.g., use both 8-week-old and 12-week-old male mice, and stratify with respect to age.
- If you can neither fix nor stratify a variable, randomize it.

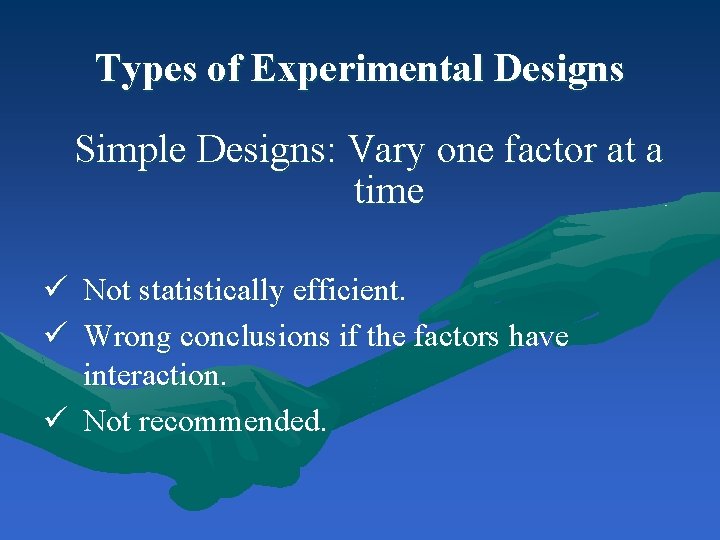

Types of Experimental Designs

Simple designs: vary one factor at a time.

- Not statistically efficient.
- Wrong conclusions if the factors interact.
- Not recommended.

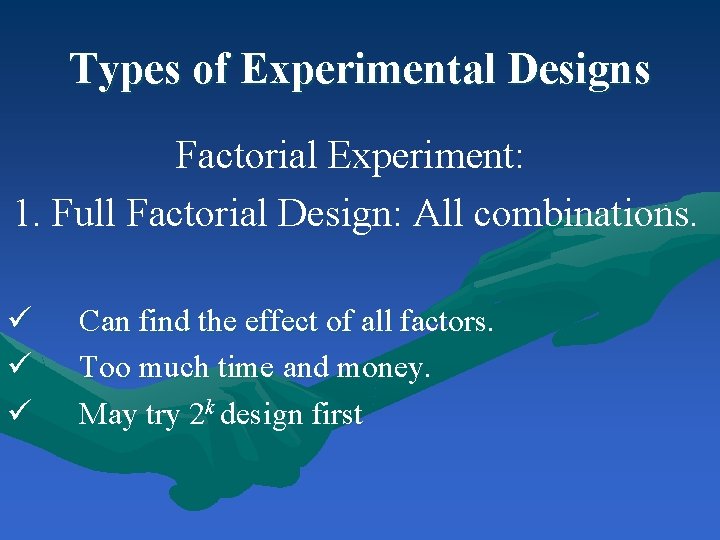

Types of Experimental Designs

Factorial experiments:

1. Full factorial design: all combinations of factor levels.
   - Can find the effect of all factors.
   - Can take too much time and money.
   - May try a $2^k$ design first.
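A $2^k$ design uses two levels (low and high) for each of $k$ factors, giving $2^k$ runs. The sketch below codes the levels as -1 and +1, a common convention assumed here rather than taken from the slides.

```python
from itertools import product


def two_level_design(k):
    """All 2**k runs of a two-level full factorial, coded -1 (low) / +1 (high)."""
    return [list(run) for run in product((-1, 1), repeat=k)]


for run in two_level_design(3):
    print(run)
```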

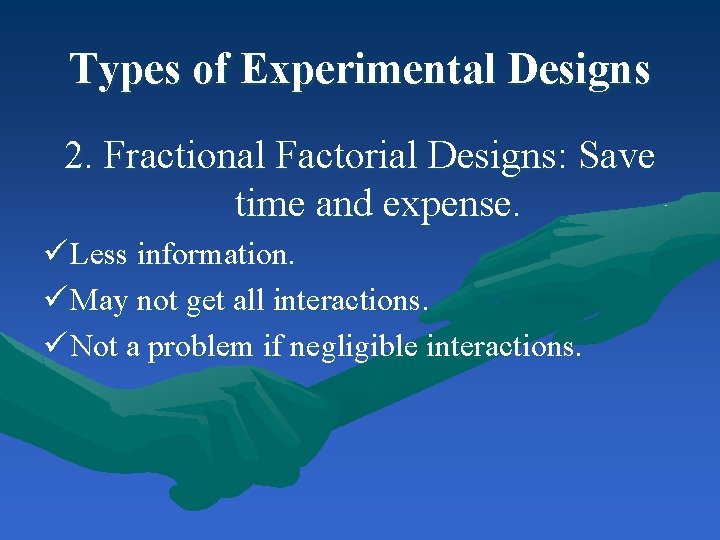

Types of Experimental Designs

2. Fractional factorial designs: save time and expense.
   - Less information.
   - May not get all interactions.
   - Not a problem if interactions are negligible.
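To illustrate how a fractional design saves runs and what it gives up, the sketch below builds a half fraction of a $2^3$ design (4 runs instead of 8) by setting the third factor's column equal to the product of the first two. The generator C = AB is an assumed, standard choice; the price is that the main effect of C cannot be separated from the AB interaction, which is harmless only if that interaction is negligible.

```python
from itertools import product


def half_fraction_2_3():
    """2**(3-1) fractional factorial: full 2**2 in A and B, with C = A*B.
    Defining relation I = ABC, so C is aliased with the AB interaction."""
    return [(a, b, a * b) for a, b in product((-1, 1), repeat=2)]


runs = half_fraction_2_3()
for a, b, c in runs:
    print(a, b, c)
```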

Common Mistakes in Experimentation

1. The variation due to experimental error is ignored.
2. Important parameters are not controlled.
3. Effects of different factors are not isolated.
4. Simple one-factor-at-a-time designs are used.
5. Interactions are ignored.
6. Too many experiments are conducted. Better: conduct the study in two phases.

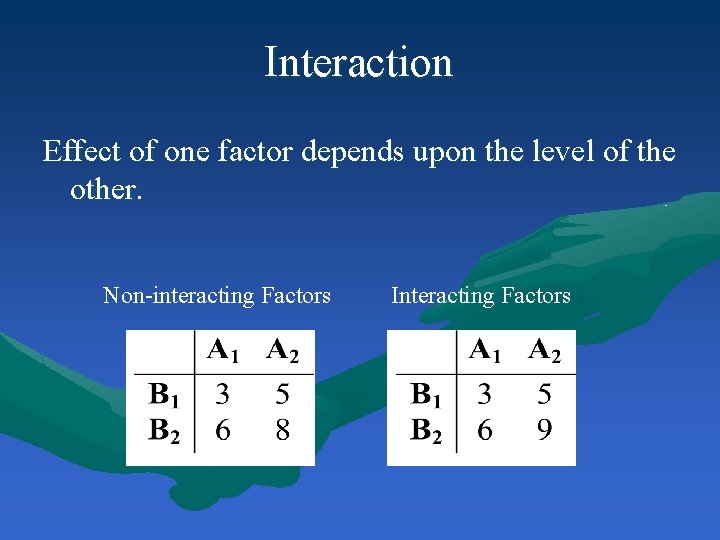

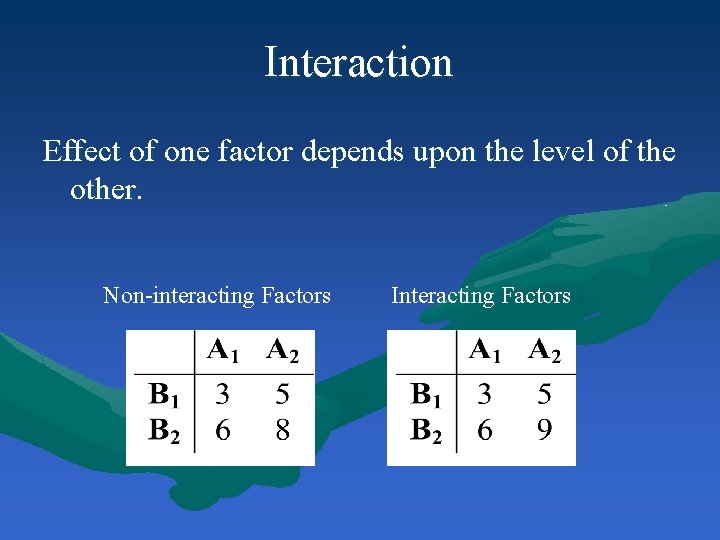

Interaction

The effect of one factor depends upon the level of the other.

(Figure panels: non-interacting factors; interacting factors.)

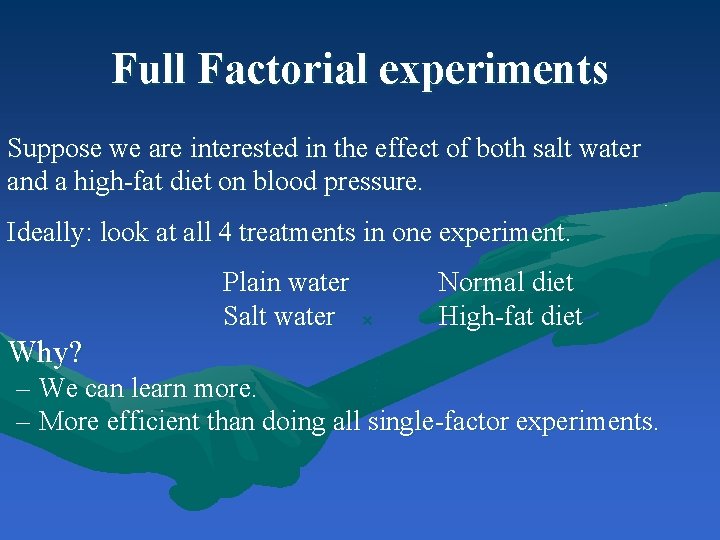

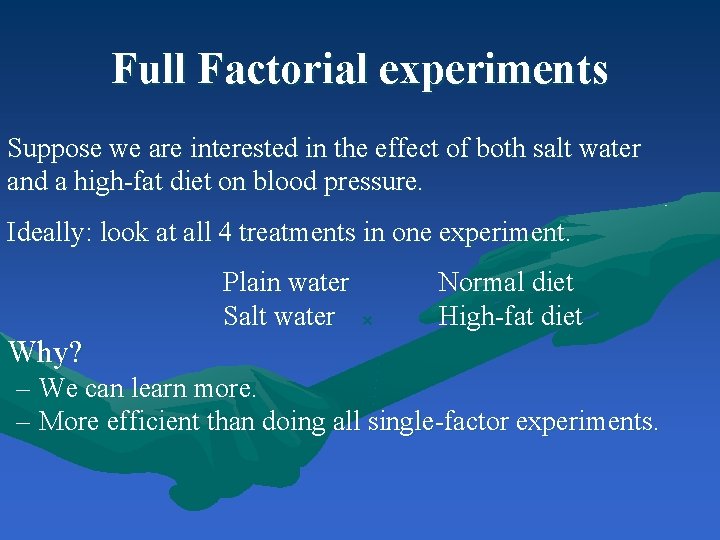

Full Factorial Experiments

Suppose we are interested in the effect of both salt water and a high-fat diet on blood pressure. Ideally, look at all 4 treatments, water (plain, salt) × diet (normal, high-fat), in one experiment.

Why?

- We can learn more.
- It is more efficient than doing all single-factor experiments.
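With all four treatments observed, both main effects and the interaction can be estimated from the treatment means. The numbers below are hypothetical; the interaction is computed as a difference of differences (the salt effect on the high-fat diet minus the salt effect on the normal diet), and some texts scale this contrast differently.

```python
# Hypothetical mean BP for each of the four treatments in the 2 x 2 design.
means = {
    ("plain", "normal"): 120.0,
    ("salt", "normal"): 130.0,
    ("plain", "high-fat"): 125.0,
    ("salt", "high-fat"): 145.0,
}


def effects(m):
    salt_normal = m[("salt", "normal")] - m[("plain", "normal")]  # salt effect, normal diet
    salt_highfat = m[("salt", "high-fat")] - m[("plain", "high-fat")]  # salt effect, high-fat diet
    diet_plain = m[("plain", "high-fat")] - m[("plain", "normal")]  # diet effect, plain water
    diet_salt = m[("salt", "high-fat")] - m[("salt", "normal")]  # diet effect, salt water
    return {
        "salt (main)": (salt_normal + salt_highfat) / 2,
        "diet (main)": (diet_plain + diet_salt) / 2,
        # Difference of differences; 0 would mean no interaction.
        "salt x diet": salt_highfat - salt_normal,
    }


print(effects(means))
# {'salt (main)': 15.0, 'diet (main)': 10.0, 'salt x diet': 10.0}
```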

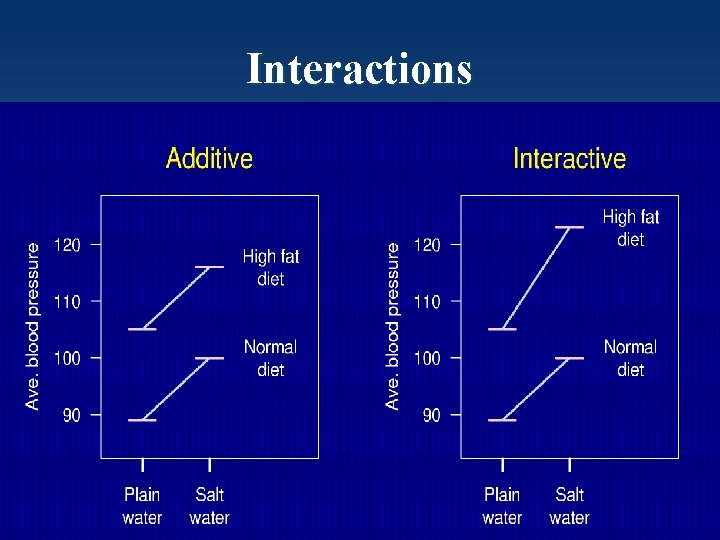

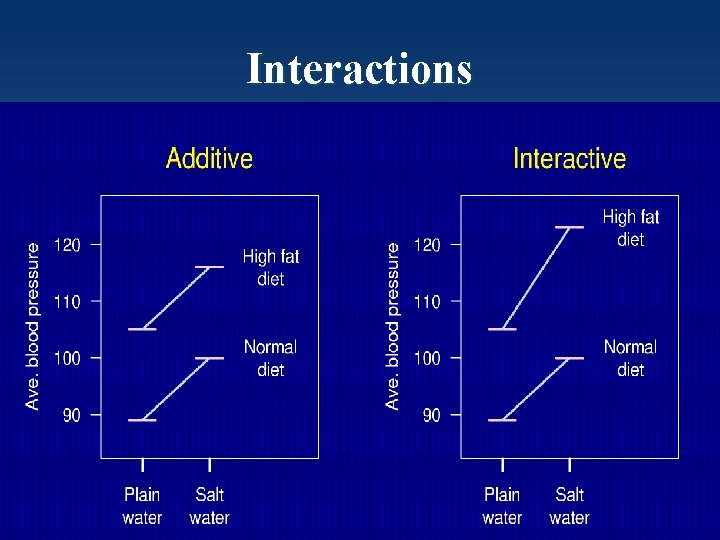

Interactions

Other Points

- Blinding
  - Measurements made by people can be influenced by unconscious biases.
  - Ideally, dissections and measurements should be made without knowledge of the treatment applied.
- Internal controls
  - It can be useful to use the subjects themselves as their own controls (e.g., consider the response after vs. before treatment).
  - Why? Increased precision.
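Using subjects as their own controls increases precision because between-subject variation cancels in the after-minus-before differences. The sketch uses hypothetical before/after BP values; the unpaired standard error is computed only for contrast, since treating paired data as independent groups would be the wrong analysis.

```python
from math import sqrt
from statistics import mean, stdev

# Hypothetical BP of six mice before and after treatment.
before = [118, 131, 124, 140, 122, 135]
after = [123, 137, 128, 146, 126, 141]

# Each mouse acts as its own control: analyze within-mouse changes.
diffs = [a - b for a, b in zip(after, before)]
paired_se = stdev(diffs) / sqrt(len(diffs))

# For contrast only: SE if the same numbers came from two independent groups.
unpaired_se = sqrt(stdev(before) ** 2 / len(before) + stdev(after) ** 2 / len(after))

print(f"mean change = {mean(diffs):.2f}")
print(f"SE paired = {paired_se:.2f}, SE if unpaired = {unpaired_se:.2f}")
```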

Other Points

- Representativeness
  - Are the subjects/tissues you are studying really representative of the population you want to study?
  - Ideally, your study material is a random sample from the population of interest.

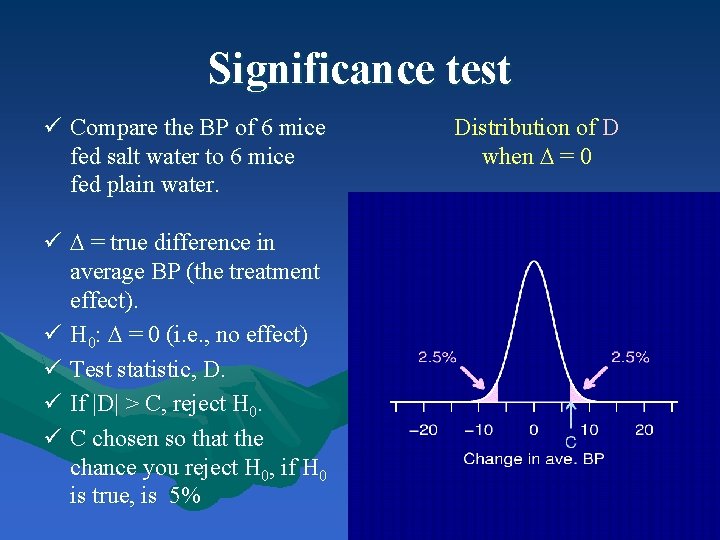

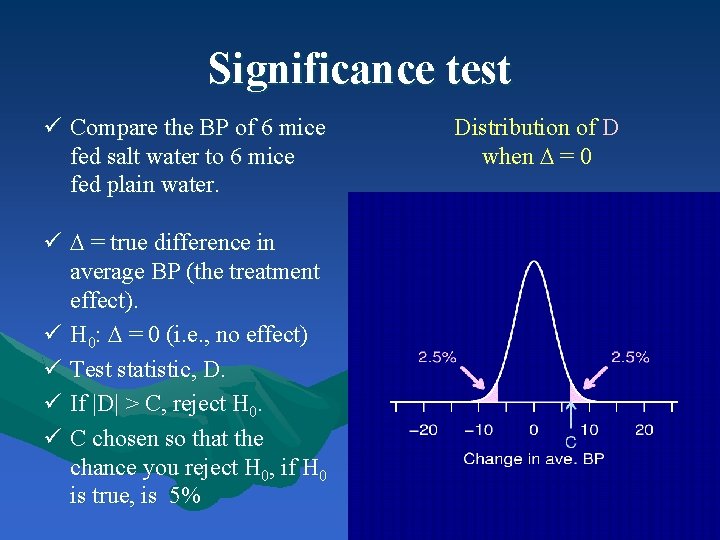

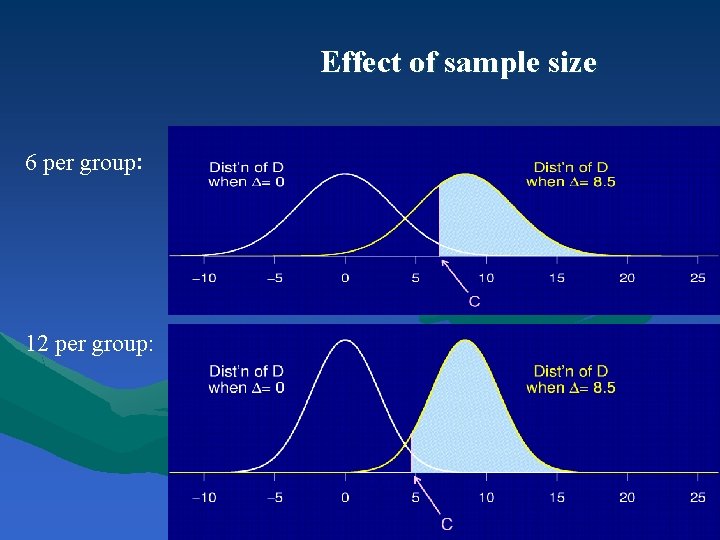

Significance Test

- Compare the BP of 6 mice fed salt water to 6 mice fed plain water.
- $\Delta$ = true difference in average BP (the treatment effect).
- $H_0$: $\Delta = 0$ (i.e., no effect).
- Compute a test statistic, $D$.
- If $|D| > C$, reject $H_0$.
- $C$ is chosen so that the chance you reject $H_0$, if $H_0$ is true, is 5%.

(Figure: distribution of $D$ when $\Delta = 0$.)
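The slides do not name a specific test statistic. As one concrete, assumption-light choice, the sketch below uses a permutation test (a swapped-in technique, not necessarily the one intended here): $D$ is the difference in group means, and $C$ is taken from the distribution of $|D|$ obtained by randomly relabelling the pooled data, which approximates the distribution of $D$ when $\Delta = 0$. The BP values are hypothetical.

```python
import random


def _mean(values):
    return sum(values) / len(values)


def permutation_test(x, y, n_perm=10_000, alpha=0.05, seed=0):
    """Two-sided test of H0: Delta = 0 using D = mean(x) - mean(y).
    C is the (1 - alpha) quantile of |D| under random relabelling."""
    rng = random.Random(seed)
    d_obs = _mean(x) - _mean(y)
    pooled = list(x) + list(y)
    n_x = len(x)
    null_abs = []
    for _ in range(n_perm):
        rng.shuffle(pooled)  # random relabelling mimics H0: no treatment effect
        null_abs.append(abs(_mean(pooled[:n_x]) - _mean(pooled[n_x:])))
    null_abs.sort()
    c = null_abs[int((1 - alpha) * n_perm)]  # critical value C
    p = sum(d >= abs(d_obs) for d in null_abs) / n_perm
    return d_obs, c, p, abs(d_obs) > c


# Hypothetical BP values, 6 mice per group.
salt = [135, 141, 129, 138, 144, 132]
plain = [121, 126, 118, 130, 124, 119]
d, c, p, reject = permutation_test(salt, plain)
print(f"D = {d:.2f}, C = {c:.2f}, p = {p:.4f}, reject H0: {reject}")
```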

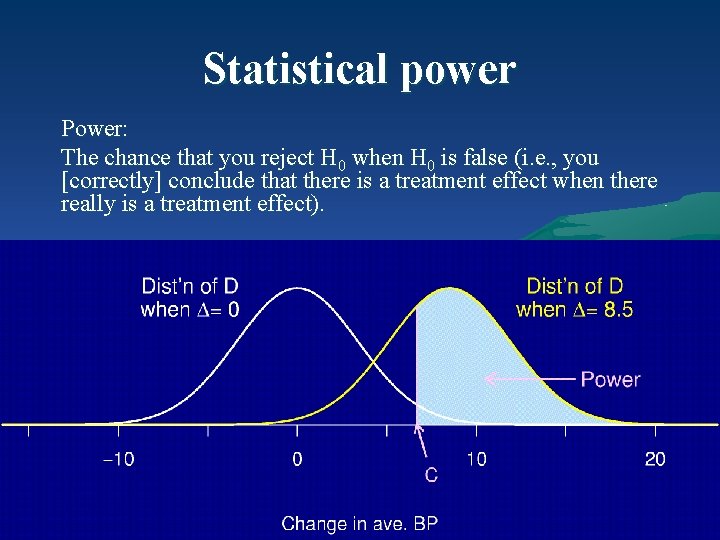

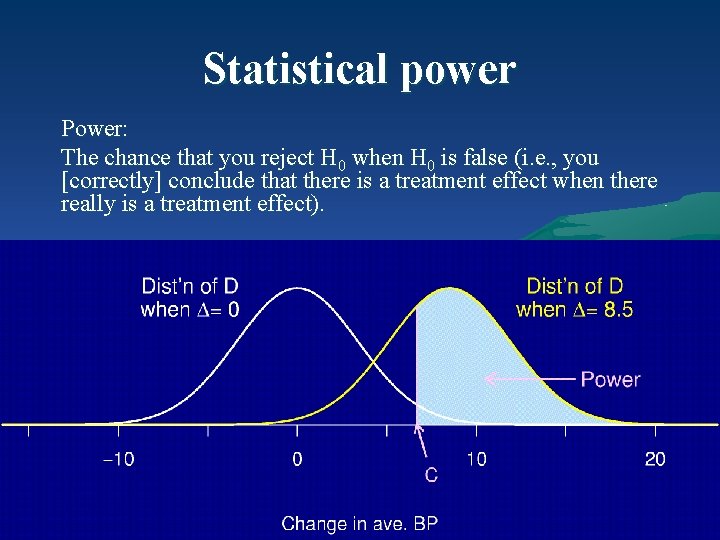

Statistical Power

Power: the chance that you reject $H_0$ when $H_0$ is false (i.e., you [correctly] conclude that there is a treatment effect when there really is a treatment effect).

Power Depends On…

- The structure of the experiment
- The method for analyzing the data
- The size of the true underlying effect
- The variability in the measurements
- The chosen significance level ($\alpha$)
- The sample size

Note: We usually try to determine the sample size to give a particular power (often 80%).
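Several of these dependencies can be seen in a textbook normal-approximation power formula for comparing two group means, each with $n$ units, using a two-sided test: power $\approx \Phi\left(\frac{\Delta}{\sigma\sqrt{2/n}} - z_{1-\alpha/2}\right) + \Phi\left(-\frac{\Delta}{\sigma\sqrt{2/n}} - z_{1-\alpha/2}\right)$. The sketch assumes the standard deviation $\sigma$ is known and uses hypothetical values for $\Delta$ and $\sigma$; for small groups it somewhat overstates the power of the corresponding t-test.

```python
from math import sqrt
from statistics import NormalDist


def power_two_sample(delta, sigma, n, alpha=0.05):
    """Approximate power of a two-sided, two-sample z-test with n units per
    group (normal approximation, sigma assumed known)."""
    z = NormalDist()
    z_crit = z.inv_cdf(1 - alpha / 2)
    shift = delta / (sigma * sqrt(2 / n))  # standardized true effect
    return z.cdf(shift - z_crit) + z.cdf(-shift - z_crit)


# Hypothetical effect (delta) and measurement SD (sigma).
for n in (6, 12):
    print(n, round(power_two_sample(delta=10, sigma=8, n=n), 3))
```

Using 6 and 12 per group mirrors the sample-size comparison that follows: for the same effect and variability, doubling the group size raises the power.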

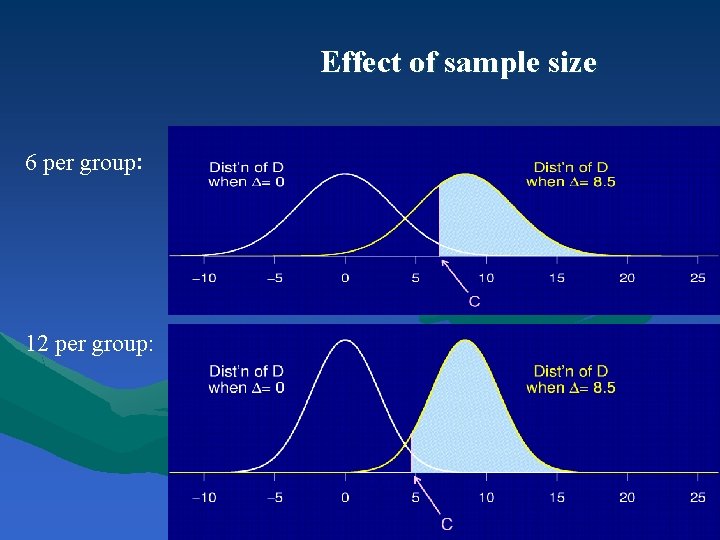

Effect of Sample Size

(Figure panels: 6 per group; 12 per group.)

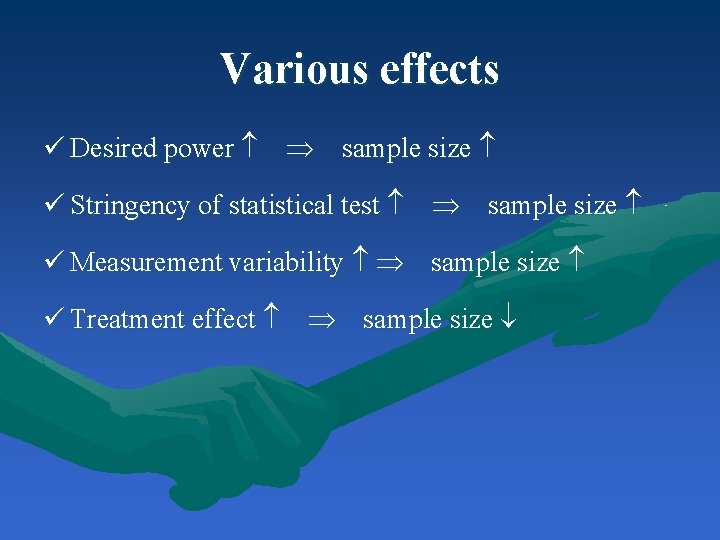

Various Effects

- Greater desired power → larger sample size
- More stringent statistical test (smaller $\alpha$) → larger sample size
- Greater measurement variability → larger sample size
- Larger treatment effect → smaller sample size
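Inverting the same normal approximation gives a sample size per group, $n = 2\,(z_{1-\alpha/2} + z_{1-\beta})^2\,\sigma^2/\Delta^2$, rounded up, where $1-\beta$ is the desired power. The sketch below, with hypothetical $\Delta$ and $\sigma$, reproduces each of the four effects listed above; a t-based calculation would give a slightly larger $n$.

```python
from math import ceil
from statistics import NormalDist


def n_per_group(delta, sigma, power=0.80, alpha=0.05):
    """Approximate sample size per group for a two-sided two-sample z-test."""
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return ceil(2 * ((z_alpha + z_beta) * sigma / delta) ** 2)


# Hypothetical baseline: effect of 10 BP units, SD of 8.
base = n_per_group(delta=10, sigma=8)
print("baseline:", base)
print("higher power:", n_per_group(delta=10, sigma=8, power=0.90))
print("stricter alpha:", n_per_group(delta=10, sigma=8, alpha=0.01))
print("more variability:", n_per_group(delta=10, sigma=12))
print("larger effect:", n_per_group(delta=15, sigma=8))
```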