Expectation Maximization for GMM: Comp 344 Tutorial, Kai Zhang

- Slides: 13

GMM

- Model the data distribution by a weighted combination of Gaussian functions.
- Given a set of sample points, how do we estimate the parameters of the GMM?

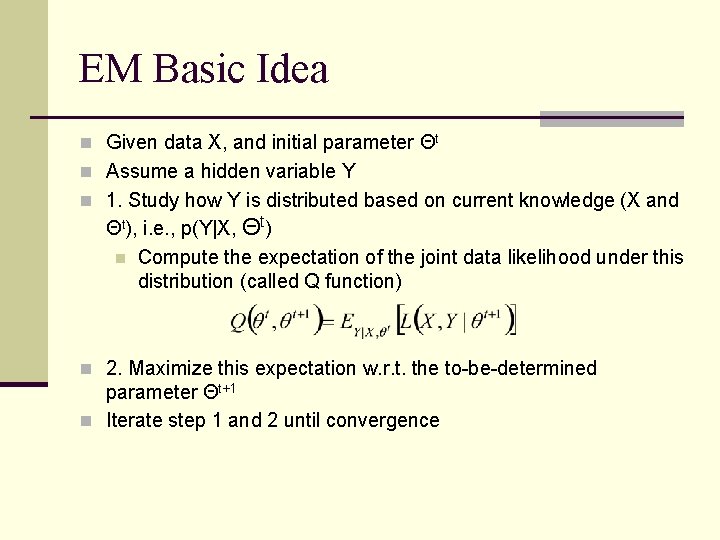

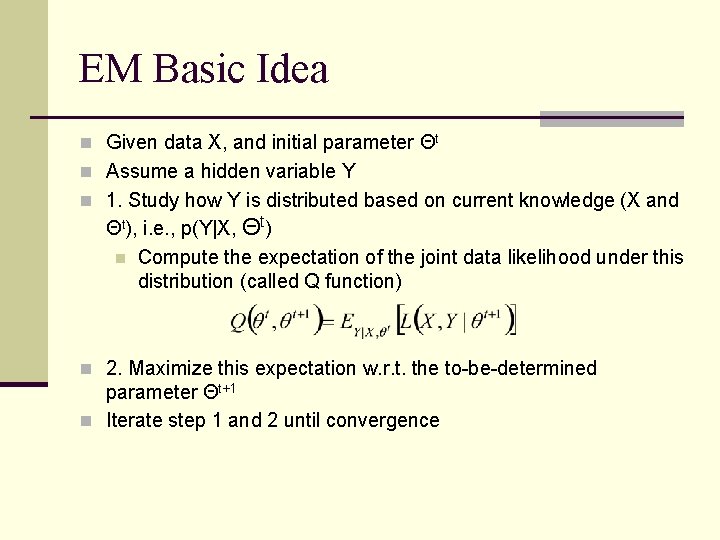
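The sketch below evaluates the mixture density p(x) = Σₖ pₖ N(x | μₖ, σₖ²). It assumes one-dimensional data, so each covariance Σₖ reduces to a scalar variance. The helper names `gaussian_pdf` and `gmm_pdf` and the example parameters are hypothetical.

```python
import math


def gaussian_pdf(x, mean, var):
    """Density of a one-dimensional Gaussian N(x | mean, var)."""
    return math.exp(-(x - mean) ** 2 / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def gmm_pdf(x, priors, means, variances):
    """Mixture density p(x) = sum_k p_k * N(x | mu_k, var_k)."""
    # The mixing weights (priors) must sum to 1 for p(x) to be a pdf.
    if abs(sum(priors) - 1.0) > 1e-9:
        raise ValueError("priors must sum to 1")
    return sum(p * gaussian_pdf(x, m, v)
               for p, m, v in zip(priors, means, variances))


# Hypothetical two-component mixture: weight 0.7 centered at 0, weight 0.3 at 5.
print(gmm_pdf(0.0, [0.7, 0.3], [0.0, 5.0], [1.0, 1.0]))
```

Each component is a proper density, so a numerical integral of `gmm_pdf` over a wide interval comes out at 1.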

EM Basic Idea

- Given data X and the current parameter estimate Θᵗ (initially a starting guess), assume a hidden variable Y.
  1. Study how Y is distributed given the current knowledge (X and Θᵗ), i.e., p(Y | X, Θᵗ). Compute the expectation of the joint (complete-data) log-likelihood under this distribution; this is called the Q function.
  2. Maximize this expectation with respect to the parameter to be determined, Θᵗ⁺¹.
- Iterate steps 1 and 2 until convergence.

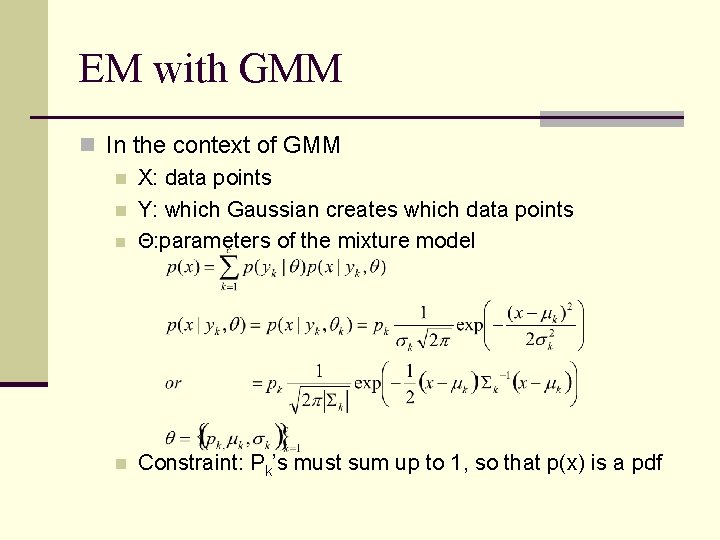

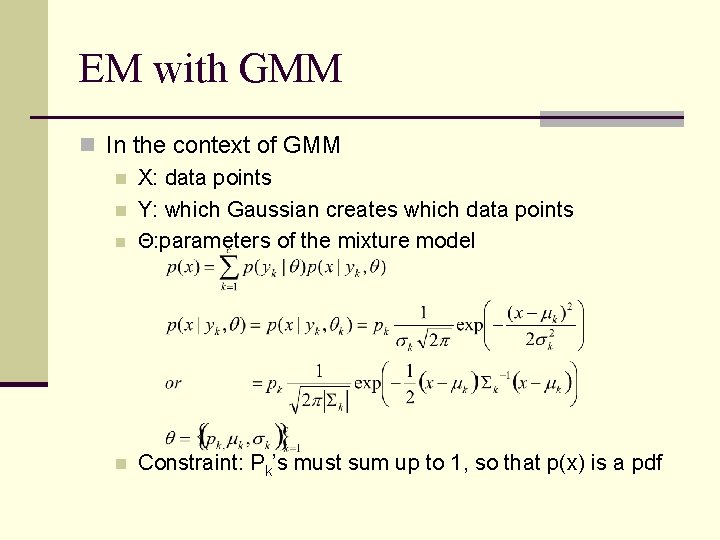
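In symbols, the two steps are commonly written as follows. This is the standard textbook form; the notation in the slide images may differ. The sum over Y assumes a discrete hidden variable, which holds for a GMM.

```latex
\begin{aligned}
\text{E step:}\quad & Q(\Theta, \Theta^{t}) = \sum_{Y} p(Y \mid X, \Theta^{t}) \, \log p(X, Y \mid \Theta) \\
\text{M step:}\quad & \Theta^{t+1} = \arg\max_{\Theta} \, Q(\Theta, \Theta^{t})
\end{aligned}
```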

EM with GMM

- In the context of GMM:
  - X: the data points
  - Y: which Gaussian component generated each data point
  - Θ: the parameters of the mixture model (priors pₖ, means μₖ, covariances Σₖ)
- Constraint: the pₖ's must sum to 1, so that p(x) is a valid pdf.

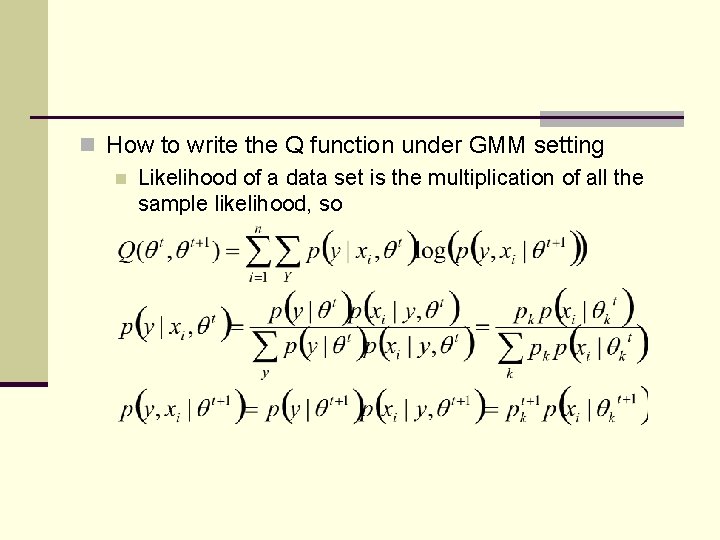

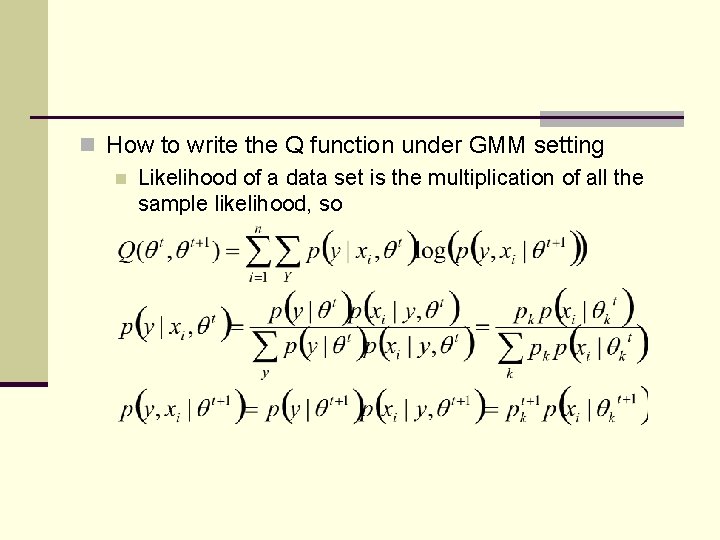
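The constraint guarantees a valid pdf because each Gaussian density integrates to 1. The mixture therefore integrates to the sum of its weights (written here for m components, as in the initialization step):

```latex
\int p(x \mid \Theta)\,dx
  = \sum_{k=1}^{m} p_k \int \mathcal{N}(x \mid \mu_k, \Sigma_k)\,dx
  = \sum_{k=1}^{m} p_k
  = 1,
\qquad p_k \ge 0 .
```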

- How do we write the Q function in the GMM setting?
- The likelihood of a data set is the product of the likelihoods of all samples (assuming the samples are independent), so

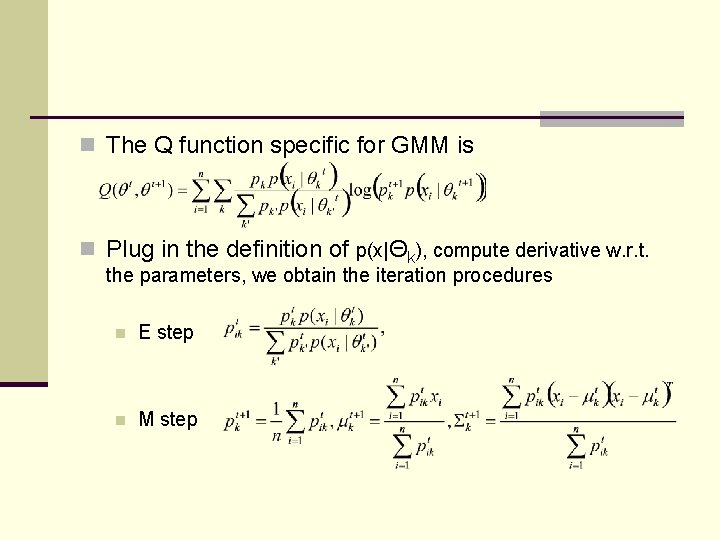

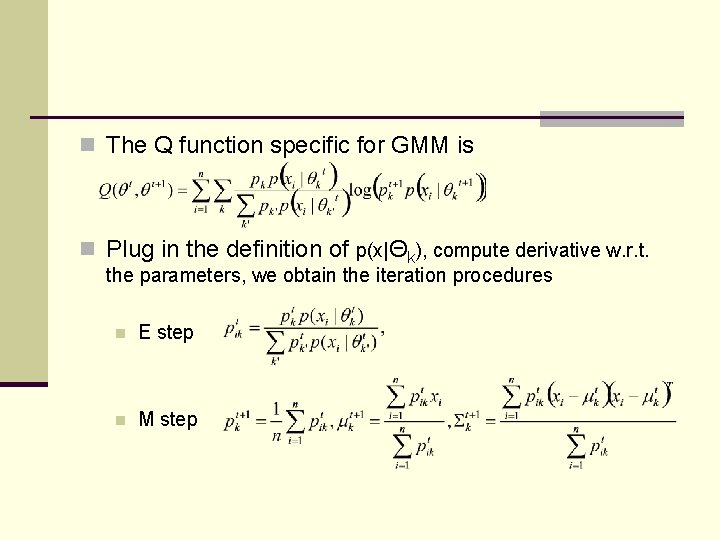
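Because the data-set likelihood is a product over samples, it is usually handled through its logarithm. The log turns the product into a sum and avoids floating-point underflow on large data sets. This is a minimal one-dimensional sketch; the function name `gmm_log_likelihood` and the scalar-variance setting are assumptions made for illustration.

```python
import math


def gmm_log_likelihood(data, priors, means, variances):
    """log L = sum_i log( sum_k p_k * N(x_i | mu_k, var_k) ) for 1-D data."""
    total = 0.0
    for x in data:
        # Likelihood of a single sample under the mixture.
        p_x = sum(
            p * math.exp(-(x - m) ** 2 / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)
            for p, m, v in zip(priors, means, variances)
        )
        # The product over samples becomes a sum of logs.
        total += math.log(p_x)
    return total


data = [-0.5, 0.1, 4.8, 5.3]
print(gmm_log_likelihood(data, [0.5, 0.5], [0.0, 5.0], [1.0, 1.0]))
```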

- The Q function specific to GMM is
- Plugging in the definition of p(x|Θₖ) and setting the derivatives with respect to the parameters to zero, we obtain the iteration procedure:
  - E step
  - M step

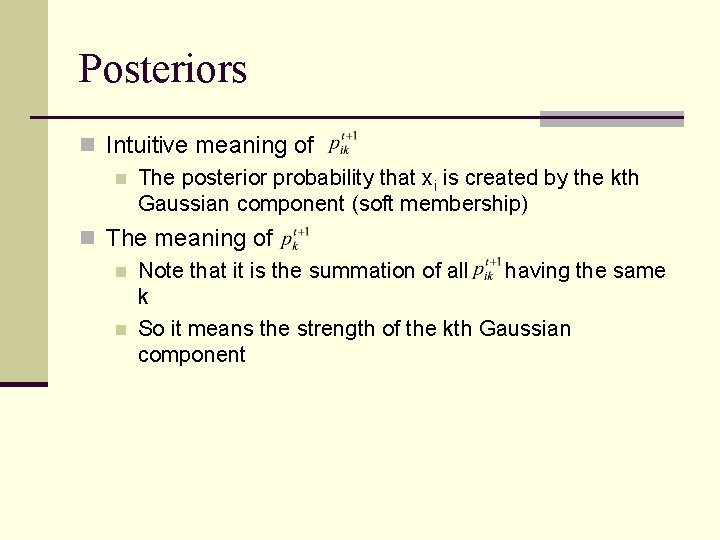

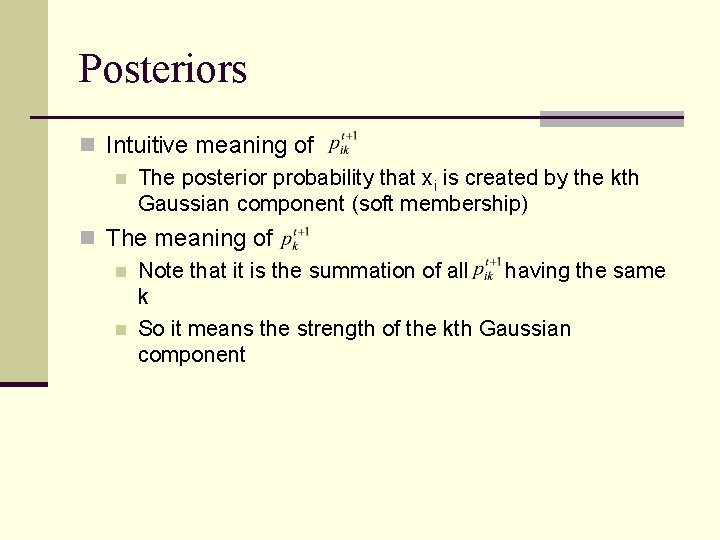
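For reference, below are the standard EM updates for a GMM with m components and N samples. The slide images are not reproduced here, so this is the common textbook form rather than a transcription of the slides. The update for pₖ comes from maximizing Q subject to Σₖ pₖ = 1 with a Lagrange multiplier.

```latex
\begin{aligned}
Q(\Theta, \Theta^{t}) &= \sum_{i=1}^{N} \sum_{k=1}^{m} p(k \mid x_i, \Theta^{t}) \left[ \log p_k + \log \mathcal{N}(x_i \mid \mu_k, \Sigma_k) \right] \\[4pt]
\text{E step:}\quad p(k \mid x_i, \Theta^{t}) &= \frac{p_k^{t}\, \mathcal{N}(x_i \mid \mu_k^{t}, \Sigma_k^{t})}{\sum_{j=1}^{m} p_j^{t}\, \mathcal{N}(x_i \mid \mu_j^{t}, \Sigma_j^{t})} \\[4pt]
\text{M step:}\quad p_k^{t+1} &= \frac{1}{N} \sum_{i=1}^{N} p(k \mid x_i, \Theta^{t}) \\
\mu_k^{t+1} &= \frac{\sum_{i} p(k \mid x_i, \Theta^{t})\, x_i}{\sum_{i} p(k \mid x_i, \Theta^{t})} \\
\Sigma_k^{t+1} &= \frac{\sum_{i} p(k \mid x_i, \Theta^{t})\, (x_i - \mu_k^{t+1})(x_i - \mu_k^{t+1})^{\top}}{\sum_{i} p(k \mid x_i, \Theta^{t})}
\end{aligned}
```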

Posteriors

- Intuitive meaning of p(k | xᵢ, Θᵗ):
  - the posterior probability that xᵢ was generated by the kth Gaussian component (soft membership).
- Meaning of Σᵢ p(k | xᵢ, Θᵗ):
  - it is the sum of the posteriors of all samples for the same k,
  - so it measures the strength of the kth Gaussian component.
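To make the posterior and its sum over samples concrete, the sketch below computes the posterior matrix for 1-D data under assumed parameters. Each sample's posteriors over k sum to 1, which is the soft membership. Summing one component's posteriors over all samples gives its strength, and dividing that strength by N gives the usual M-step update of the prior pₖ. The helper name `posteriors` and the parameter values are hypothetical.

```python
import math


def posteriors(data, priors, means, variances):
    """Return post[i][k] = p(k | x_i, Theta) for 1-D data via the Bayes rule."""
    post = []
    for x in data:
        # Joint p_k * N(x | mu_k, var_k) for every component k.
        joint = [p * math.exp(-(x - m) ** 2 / (2.0 * v)) / math.sqrt(2.0 * math.pi * v)
                 for p, m, v in zip(priors, means, variances)]
        total = sum(joint)
        post.append([j / total for j in joint])
    return post


data = [-0.3, 0.2, 2.5, 4.9, 5.4]
post = posteriors(data, [0.5, 0.5], [0.0, 5.0], [1.0, 1.0])
# Strength of component k: the sum of its posteriors over all samples.
strength = [sum(row[k] for row in post) for k in range(2)]
new_priors = [s / len(data) for s in strength]
print(strength, new_priors)
```

The strengths always add up to the number of samples, so the re-estimated priors automatically satisfy the sum-to-one constraint.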

Comments

- GMM can be viewed as performing
  - density estimation, in the form of a combination of a number of Gaussian functions;
  - clustering, where clusters correspond to the Gaussian components and cluster assignment can be achieved through the Bayes rule.
- GMM produces exactly what the Bayes decision rule needs: the prior probabilities and the class-conditional probabilities.
- So GMM + Bayes rule can compute the posterior probabilities, and hence solve the clustering problem.

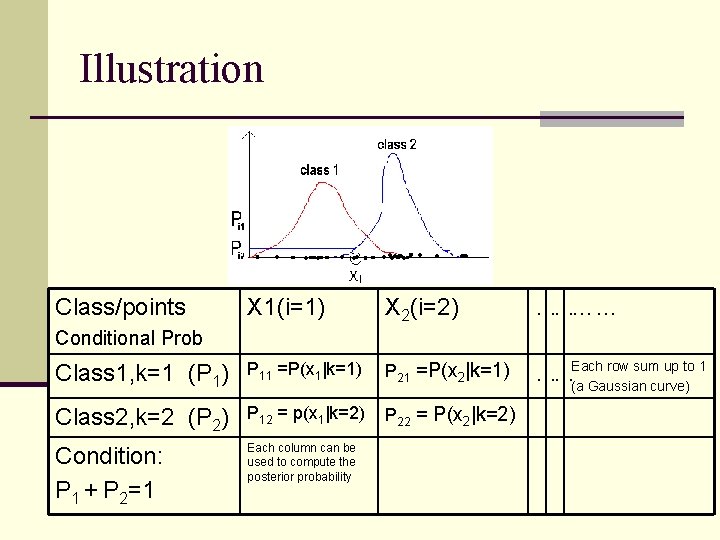

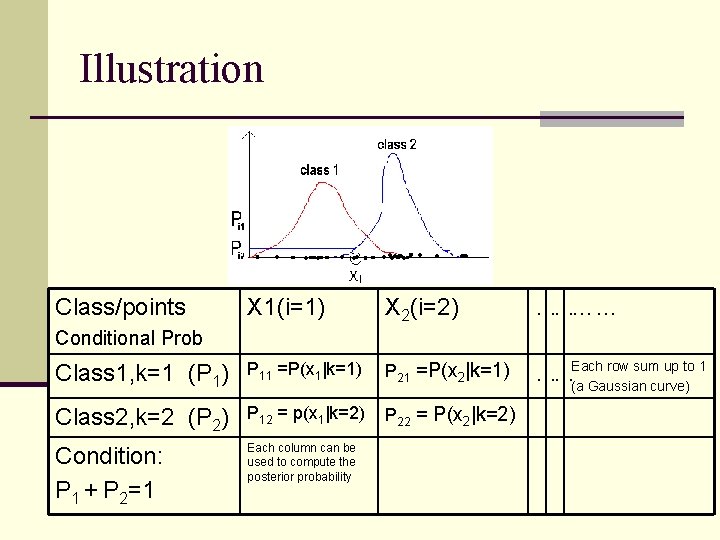
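The sketch below shows the clustering view. Given the priors and class-conditional probabilities that a GMM provides, the Bayes rule gives the posteriors, and each point is assigned to the component with the largest posterior. The function `bayes_cluster` and the numbers are made up for illustration.

```python
def bayes_cluster(priors, cond):
    """Cluster points with the Bayes rule.

    priors[k] = p_k and cond[k][i] = p(x_i | k).
    Returns (post, labels): post[i][k] = p(k | x_i) and labels[i] = argmax_k p(k | x_i).
    """
    n_points = len(cond[0])
    post, labels = [], []
    for i in range(n_points):
        # Bayes rule: p(k | x_i) is proportional to p_k * p(x_i | k).
        joint = [priors[k] * cond[k][i] for k in range(len(priors))]
        evidence = sum(joint)
        column = [j / evidence for j in joint]
        post.append(column)
        # Hard assignment: the component with the largest posterior.
        labels.append(max(range(len(column)), key=column.__getitem__))
    return post, labels


# Made-up values for illustration (each row of cond sums to 1).
priors = [0.5, 0.5]
cond = [[0.6, 0.25, 0.15],   # p(x_i | component 0)
        [0.1, 0.35, 0.55]]   # p(x_i | component 1)
post, labels = bayes_cluster(priors, cond)
print(labels)  # → [0, 1, 1]
```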

Illustration

| Class / points | x₁ (i=1) | x₂ (i=2) | … |
|---|---|---|---|
| Class 1, k=1 (prior P₁) | P₁₁ = p(x₁ \| k=1) | P₂₁ = p(x₂ \| k=1) | … |
| Class 2, k=2 (prior P₂) | P₁₂ = p(x₁ \| k=2) | P₂₂ = p(x₂ \| k=2) | … |

- The entries are the conditional probabilities. Each row is one Gaussian curve and sums to 1.
- Condition: P₁ + P₂ = 1.
- Each column can be used to compute the posterior probabilities for that point.

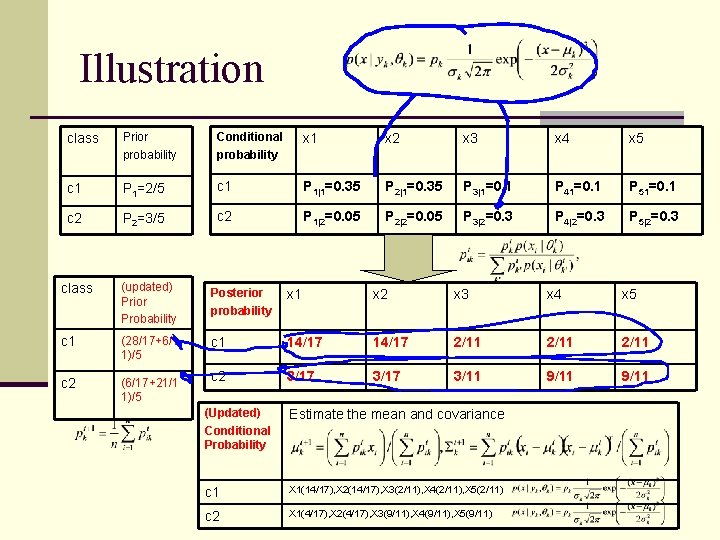

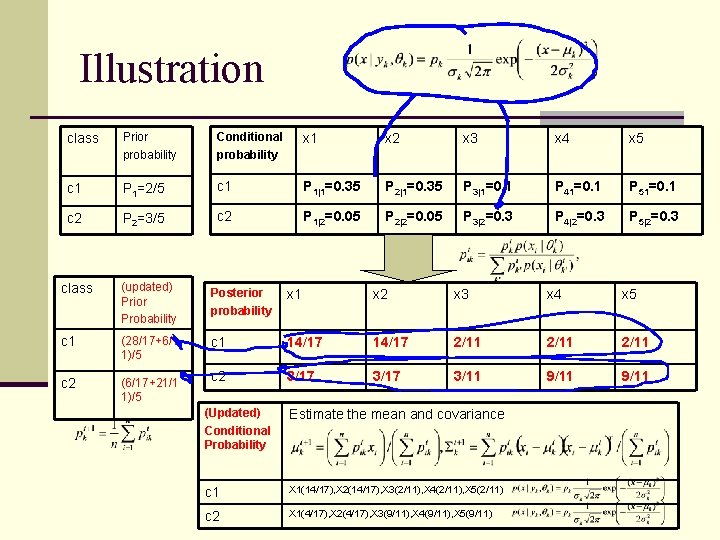

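Illustration

Prior and conditional probabilities:

| Class | Prior probability | p(x₁ \| c) | p(x₂ \| c) | p(x₃ \| c) | p(x₄ \| c) | p(x₅ \| c) |
|---|---|---|---|---|---|---|
| c₁ | P₁ = 2/5 | 0.35 | 0.35 | 0.1 | 0.1 | 0.1 |
| c₂ | P₂ = 3/5 | 0.05 | 0.05 | 0.3 | 0.3 | 0.3 |

Posterior probabilities and updated priors:

| Class | Updated prior probability | p(c \| x₁) | p(c \| x₂) | p(c \| x₃) | p(c \| x₄) | p(c \| x₅) |
|---|---|---|---|---|---|---|
| c₁ | (28/17 + 6/11)/5 = 82/187 | 14/17 | 14/17 | 2/11 | 2/11 | 2/11 |
| c₂ | (6/17 + 27/11)/5 = 105/187 | 3/17 | 3/17 | 9/11 | 9/11 | 9/11 |

Updated conditional probability: estimate the mean and covariance of each class from all five points, each weighted by its posterior:
- c₁: x₁ (14/17), x₂ (14/17), x₃ (2/11), x₄ (2/11), x₅ (2/11)
- c₂: x₁ (3/17), x₂ (3/17), x₃ (9/11), x₄ (9/11), x₅ (9/11)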

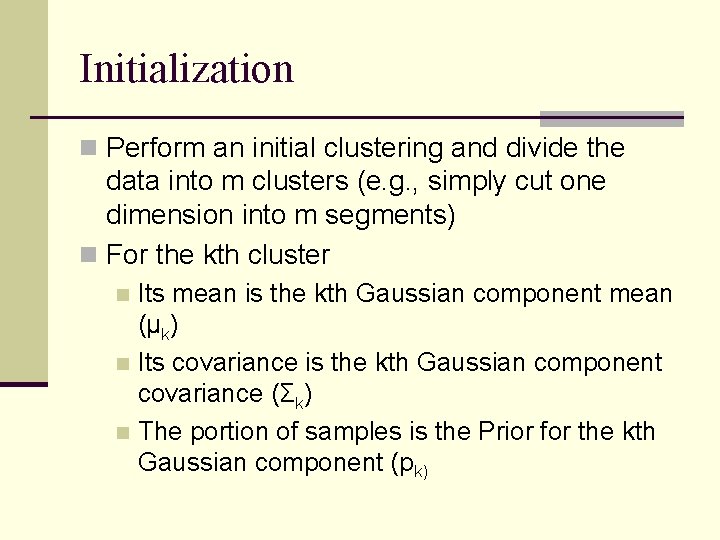

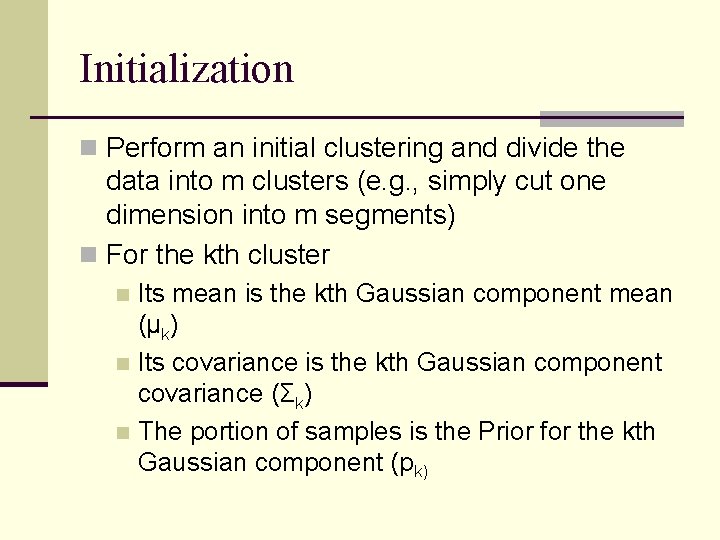
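The arithmetic in this illustration can be checked with exact fractions. The sketch below uses only the priors and conditional probabilities given in the illustration. It applies the Bayes rule to each point (each column), then averages each class's posteriors to obtain the updated priors.

```python
from fractions import Fraction as F

priors = [F(2, 5), F(3, 5)]
# cond[k][i] = p(x_i | c_k), from the illustration.
cond = [[F(35, 100), F(35, 100), F(1, 10), F(1, 10), F(1, 10)],
        [F(5, 100), F(5, 100), F(3, 10), F(3, 10), F(3, 10)]]

# E step: posterior p(c_k | x_i) for each point.
post = []
for i in range(5):
    joint = [priors[k] * cond[k][i] for k in range(2)]
    post.append([j / sum(joint) for j in joint])

# M step (priors only): average each class's posteriors over the 5 points.
new_priors = [sum(post[i][k] for i in range(5)) / 5 for k in range(2)]

print([str(p[0]) for p in post])     # → ['14/17', '14/17', '2/11', '2/11', '2/11']
print([str(p) for p in new_priors])  # → ['82/187', '105/187']
```

The updated priors are 82/187 = (28/17 + 6/11)/5 and 105/187 = (6/17 + 27/11)/5, and they sum to 1.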

Initialization

- Perform an initial clustering and divide the data into m clusters (e.g., simply cut one dimension into m segments).
- For the kth cluster:
  - its mean is the kth Gaussian component mean (μₖ);
  - its covariance is the kth Gaussian component covariance (Σₖ);
  - its fraction of the samples is the prior for the kth Gaussian component (pₖ).

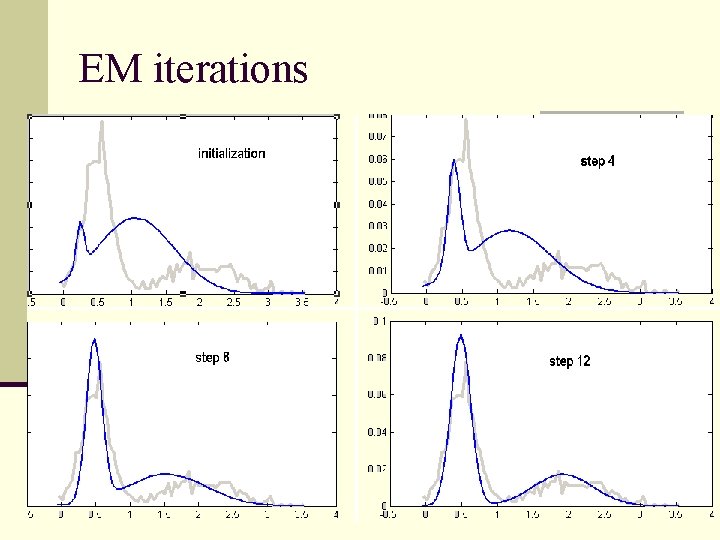

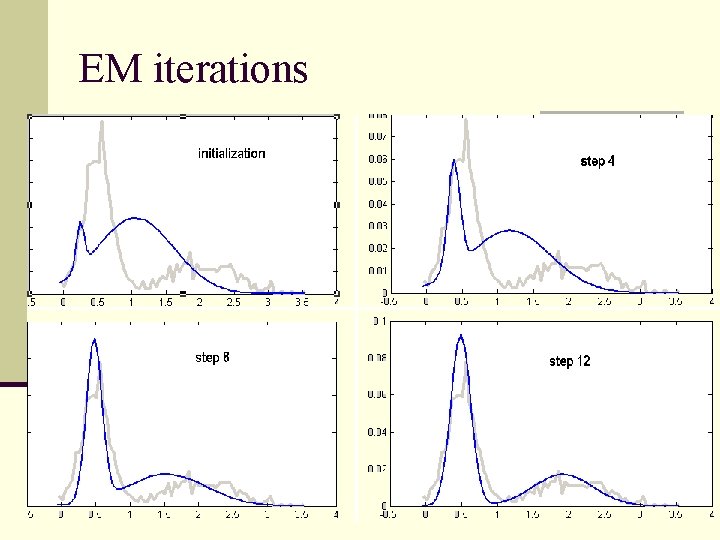
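The sketch below implements the "cut one dimension into m segments" initialization for one-dimensional data. It assumes equal-width segments and that every segment receives at least one sample. The function `init_by_segments` and the variance floor `min_var` are hypothetical additions, not from the slides.

```python
def init_by_segments(data, m, min_var=1e-6):
    """Initialize a 1-D GMM by cutting the data range into m equal-width segments.

    Returns (priors, means, variances), one entry per segment.
    """
    lo, hi = min(data), max(data)
    if hi == lo:
        raise ValueError("all samples are equal; cannot cut the range into segments")
    width = (hi - lo) / m
    clusters = [[] for _ in range(m)]
    for x in data:
        # Segment index; the maximum value is clamped into the last segment.
        k = min(int((x - lo) / width), m - 1)
        clusters[k].append(x)

    priors, means, variances = [], [], []
    for members in clusters:
        if not members:
            raise ValueError("empty segment; try a smaller m or another initial clustering")
        mu = sum(members) / len(members)
        var = sum((x - mu) ** 2 for x in members) / len(members)
        priors.append(len(members) / len(data))  # fraction of samples -> prior p_k
        means.append(mu)                          # cluster mean -> mu_k
        variances.append(max(var, min_var))       # cluster variance -> sigma_k^2 (floored)
    return priors, means, variances


print(init_by_segments([0.0, 1.0, 2.0, 10.0, 11.0, 12.0], m=2))
```

The floor keeps a segment that contains identical samples from producing a zero variance, which would make the Gaussian density undefined.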

EM iterations

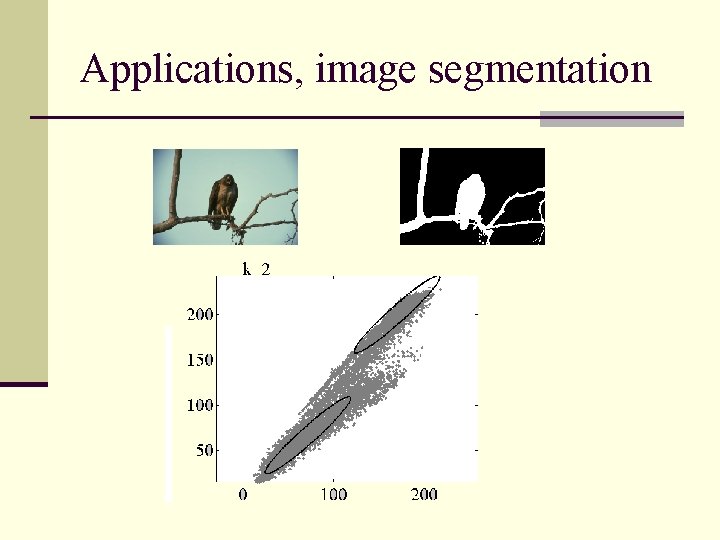

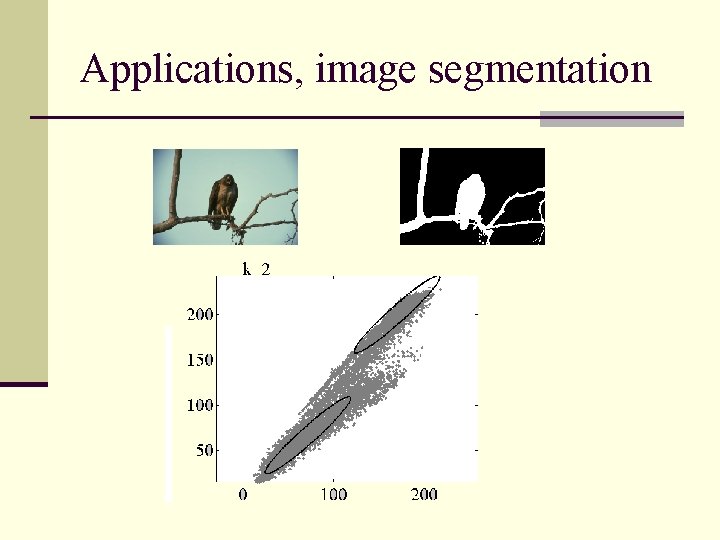
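The sketch below runs the EM iteration loop on one-dimensional data. It assumes the initial parameters come from an initialization such as the one described earlier. The E step works in log space with the log-sum-exp trick so that very small densities do not underflow. The function names, the stopping tolerance `tol`, the iteration cap `max_iter`, and the variance floor `min_var` are hypothetical choices, not from the slides.

```python
import math


def log_gauss(x, mean, var):
    """log N(x | mean, var) for a one-dimensional Gaussian."""
    return -0.5 * math.log(2.0 * math.pi * var) - (x - mean) ** 2 / (2.0 * var)


def em_gmm_1d(data, priors, means, variances, tol=1e-8, max_iter=200, min_var=1e-6):
    """Run EM for a 1-D GMM starting from the given initial parameters.

    Stops when the log-likelihood improves by less than tol.
    Returns (priors, means, variances, ll), where ll is the log-likelihood
    computed in the last E step.
    """
    priors, means, variances = list(priors), list(means), list(variances)
    n, m = len(data), len(priors)
    prev_ll = -math.inf
    for _ in range(max_iter):
        # E step: posteriors p(k | x_i), computed in log space for stability.
        post, ll = [], 0.0
        for x in data:
            logs = [math.log(priors[k]) + log_gauss(x, means[k], variances[k])
                    for k in range(m)]
            top = max(logs)
            log_px = top + math.log(sum(math.exp(v - top) for v in logs))  # log-sum-exp
            ll += log_px
            post.append([math.exp(v - log_px) for v in logs])

        # M step: re-estimate each component from its posterior-weighted samples.
        for k in range(m):
            nk = sum(post[i][k] for i in range(n))  # strength of component k
            priors[k] = nk / n
            means[k] = sum(post[i][k] * data[i] for i in range(n)) / nk
            var = sum(post[i][k] * (data[i] - means[k]) ** 2 for i in range(n)) / nk
            variances[k] = max(var, min_var)  # added safeguard against variance collapse

        # Stop once the log-likelihood no longer improves by at least tol.
        if ll - prev_ll < tol:
            break
        prev_ll = ll
    return priors, means, variances, ll


data = [0.0, 0.2, -0.2, 0.1, -0.1, 5.0, 5.2, 4.8, 5.1, 4.9]
priors, means, variances, ll = em_gmm_1d(data, [0.5, 0.5], [1.0, 4.0], [1.0, 1.0])
print(priors, means, variances)
```

Starting from means 1 and 4, the two components move to the two groups of points. The means settle near 0 and 5, with equal priors.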

Applications: image segmentation