
- Slides: 11

Expectation Maximization: A “Gentle” Introduction
Scott Morris
Department of Computer Science

Basic Premise
• Given a set of observed data, X, what is the underlying model that produced X?
  – Example distributions: Gaussian, Poisson, Uniform
• Assume we know (or can intuit) what type of model produced the data
• Model has m parameters (Θ1, …, Θm)
  – Parameters are unknown; we would like to estimate them

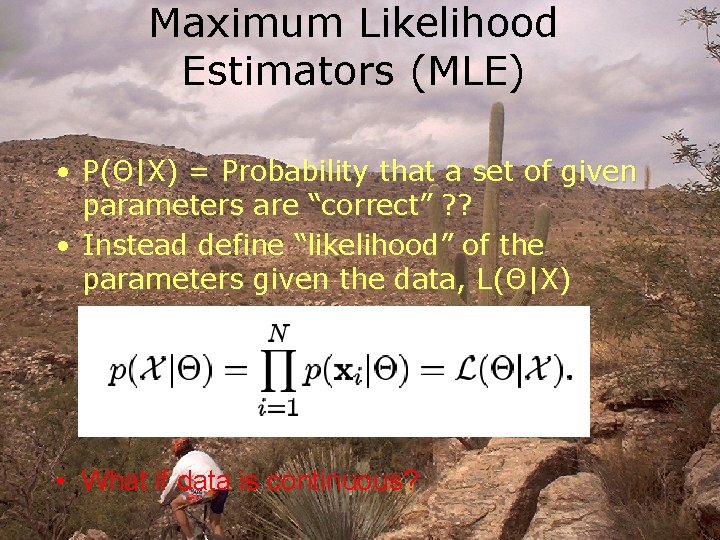

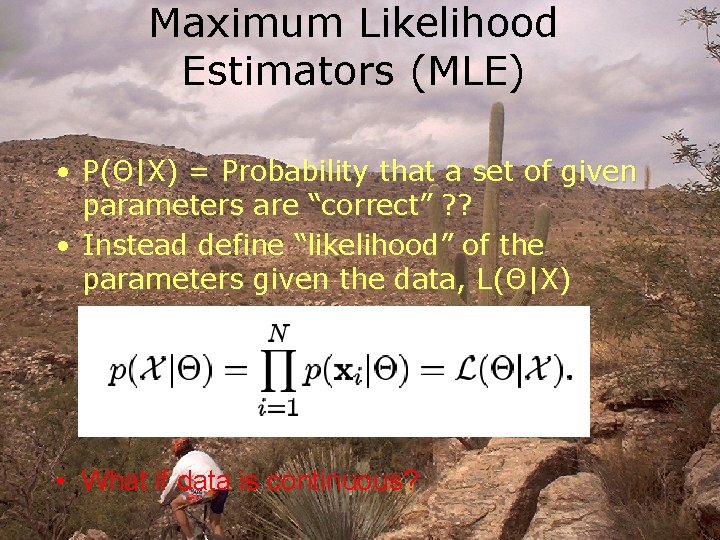

Maximum Likelihood Estimators (MLE)
• P(Θ|X) = probability that a given set of parameters is “correct”??
• Instead, define the “likelihood” of the parameters given the data, L(Θ|X)
• What if the data is continuous?

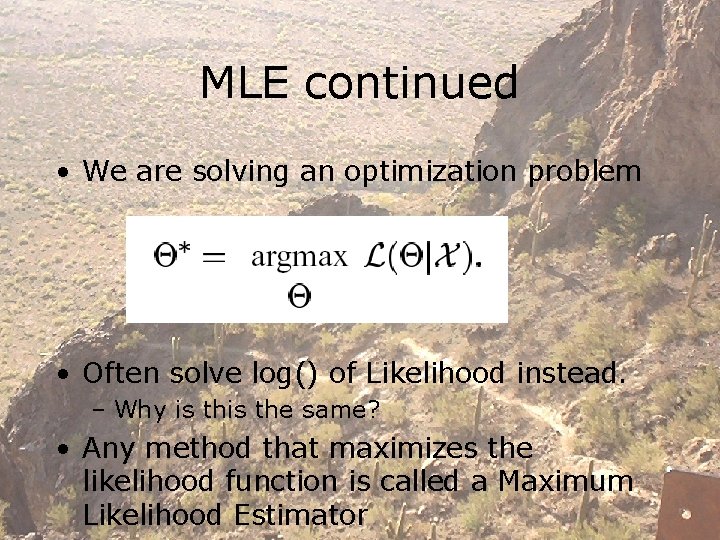

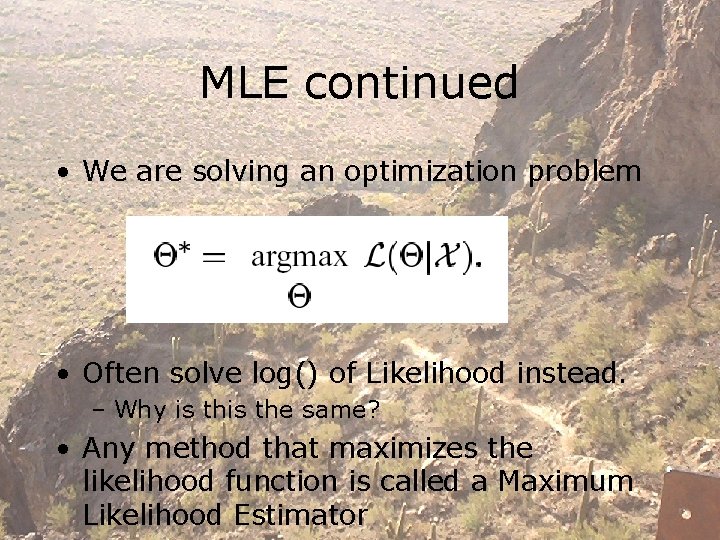
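For continuous data, the usual answer is to replace probabilities with density values. The following is a minimal sketch of that idea, assuming i.i.d. observations from a 1-D Gaussian; the function names and the sample values are hypothetical and are not taken from the slides.

```python
import math

def gaussian_pdf(x, mu, sigma):
    """Density of a 1-D normal distribution at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def likelihood(data, mu, sigma):
    """L(theta | X): product of densities over i.i.d. observations."""
    result = 1.0
    for x in data:
        result *= gaussian_pdf(x, mu, sigma)
    return result

def log_likelihood(data, mu, sigma):
    """Sum of log-densities; numerically safer than the raw product."""
    return sum(math.log(gaussian_pdf(x, mu, sigma)) for x in data)

data = [4.8, 5.1, 5.3, 4.9, 5.0]  # made-up observations
print(likelihood(data, 5.0, 0.2))  # parameters close to the data
print(likelihood(data, 3.0, 0.2))  # parameters far from the data
```

Parameters that sit close to the data receive a much larger likelihood than parameters that sit far away, which is what the estimator exploits.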

MLE continued
• We are solving an optimization problem
• Often maximize the log() of the likelihood instead
  – Why is this the same?
• Any method that maximizes the likelihood function is called a Maximum Likelihood Estimator

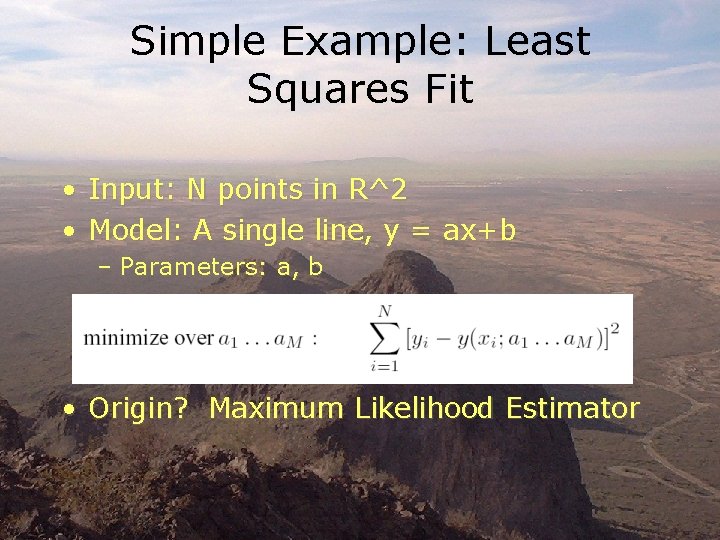

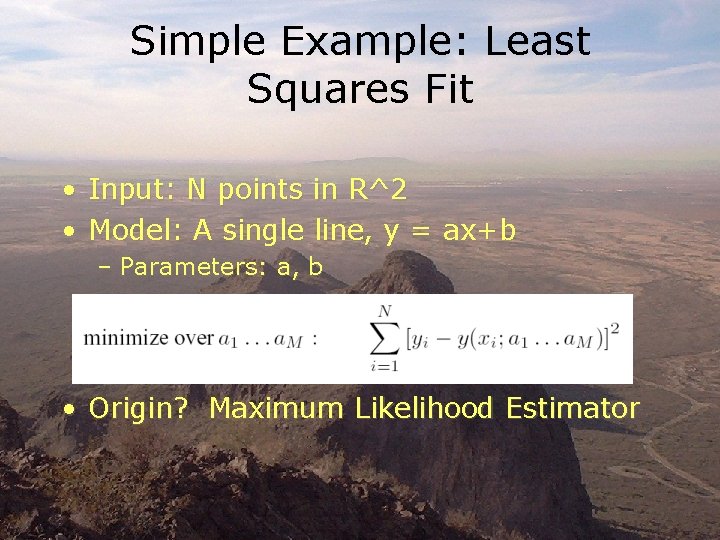
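Because log() is strictly increasing, the likelihood and the log-likelihood are maximized by the same parameter value. A small sketch of this, assuming a coin-flip (Bernoulli) model with made-up counts and a simple grid search rather than any method from the slides:

```python
import math

heads, tails = 7, 3  # made-up coin-flip counts

def likelihood(p):
    """Likelihood of head-probability p for the observed counts."""
    return p ** heads * (1.0 - p) ** tails

def log_likelihood(p):
    """Log of the same likelihood."""
    return heads * math.log(p) + tails * math.log(1.0 - p)

# Candidate values of p strictly inside (0, 1).
grid = [i / 100 for i in range(1, 100)]
best_by_likelihood = max(grid, key=likelihood)
best_by_log_likelihood = max(grid, key=log_likelihood)
print(best_by_likelihood, best_by_log_likelihood)
```

Both searches select the same grid point, the observed fraction of heads.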

Simple Example: Least Squares Fit
• Input: N points in R^2
• Model: a single line, y = ax + b
  – Parameters: a, b
• Origin? Maximum Likelihood Estimator
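One way to see the connection: if the y values are assumed to carry i.i.d. Gaussian noise around the line (an assumption made here, not stated on the slide), then maximizing the likelihood is the same as minimizing the sum of squared errors. A minimal sketch with hypothetical function names and made-up points:

```python
import math

def least_squares_line(points):
    """Closed-form least squares estimates of a and b for y = a*x + b."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return a, b

def gaussian_log_likelihood(points, a, b, sigma=1.0):
    """Log-likelihood of (a, b), assuming Gaussian noise with std sigma in y."""
    n = len(points)
    sse = sum((y - (a * x + b)) ** 2 for x, y in points)
    return -0.5 * n * math.log(2.0 * math.pi * sigma ** 2) - sse / (2.0 * sigma ** 2)

points = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8), (4.0, 9.1)]  # made-up data
a, b = least_squares_line(points)
print(a, b, gaussian_log_likelihood(points, a, b))
```

Nudging a or b away from the least squares values lowers the log-likelihood, since only the squared-error term depends on them.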

Expectation Maximization
• An elaborate technique for maximizing the likelihood function
• Often used when observed data is incomplete
  – Due to problems in the observation process
  – Due to unknown or difficult distribution function(s)
• Iterative process
• Still a local technique: it converges to a local, not necessarily global, maximum

EM likelihood function
• Observed data X; assume missing data Y
• Let Z = (X, Y) be the complete data
  – Joint density function
  – p(z|Θ) = p(x, y|Θ) = p(y|x, Θ) p(x|Θ)
• Define a new likelihood function L(Θ|Z) = p(X, Y|Θ)
• X and Θ are constants, so L() is a random variable dependent on the random variable Y

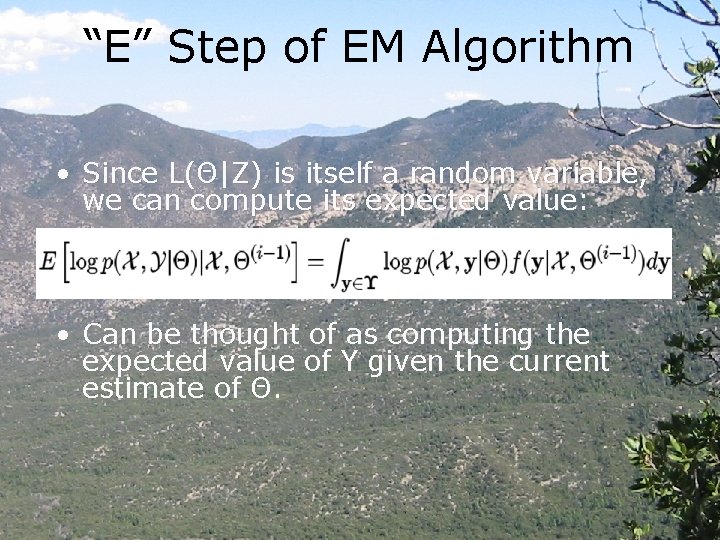

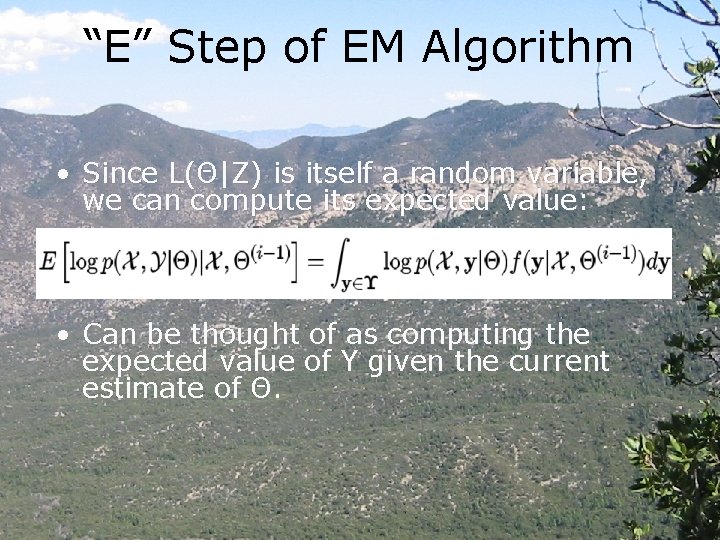

“E” Step of EM Algorithm
• Since L(Θ|Z) is itself a random variable, we can compute its expected value:
• Can be thought of as computing the expected value of Y given the current estimate of Θ

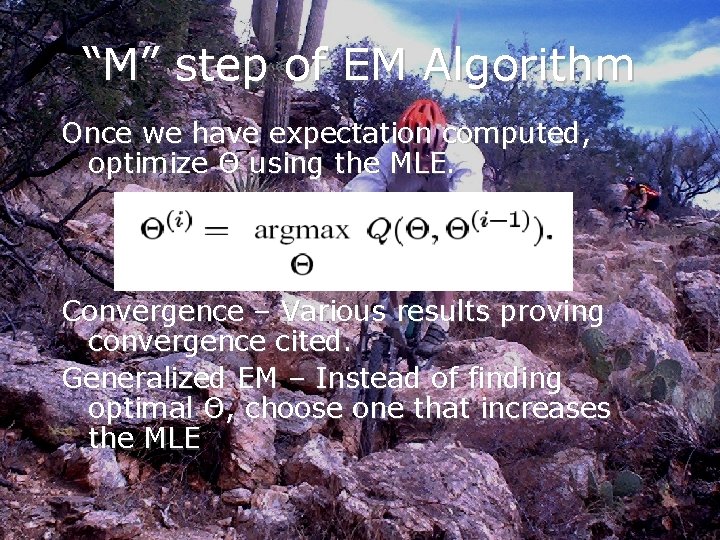

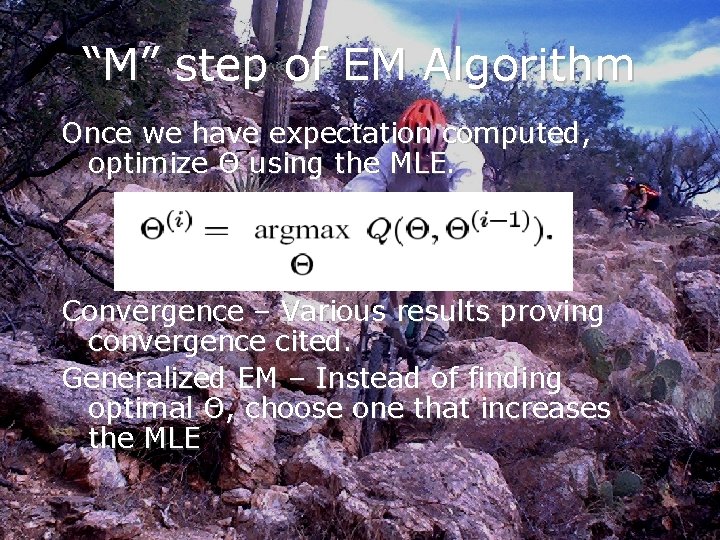
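For reference, the E-step expectation is commonly written as below. This is the standard textbook form, taken over the complete-data log-likelihood; the symbol Q and the iteration index i do not appear in the slide text and are assumptions here, so the notation may differ from the slide's own equation.

```latex
Q\bigl(\Theta, \Theta^{(i-1)}\bigr)
  = E\left[ \log p(X, Y \mid \Theta) \,\middle|\, X, \Theta^{(i-1)} \right]
  = \int_{y} \log p(X, y \mid \Theta) \; p\bigl(y \mid X, \Theta^{(i-1)}\bigr) \, dy
```

Here Θ^{(i-1)} is the current parameter estimate, held fixed, and Θ is the free variable that the next step optimizes.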

“M” Step of EM Algorithm
• Once we have the expectation computed, optimize Θ using the MLE
• Convergence
  – Various results proving convergence are cited
• Generalized EM
  – Instead of finding the optimal Θ, choose one that increases the expected likelihood

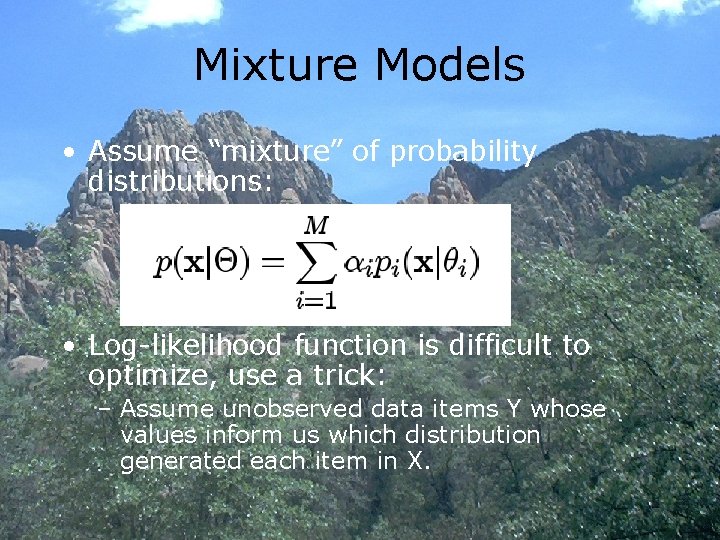

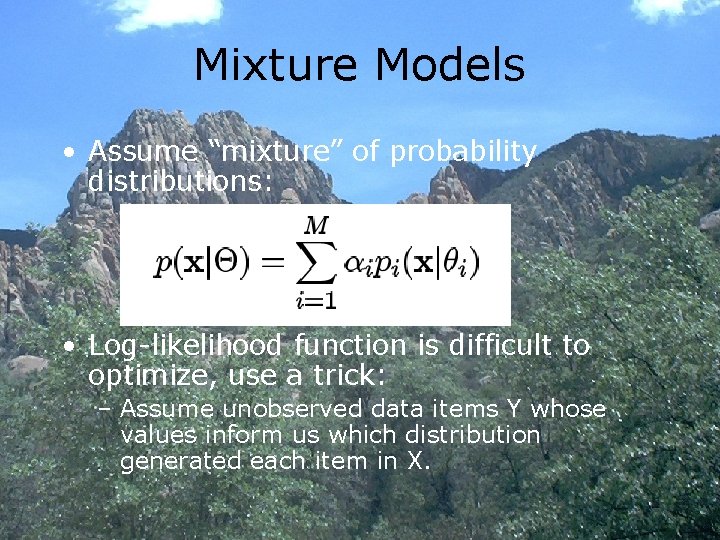

Mixture Models
• Assume a “mixture” of probability distributions:
• The log-likelihood function is difficult to optimize, so use a trick:
  – Assume unobserved data items Y whose values tell us which distribution generated each item in X

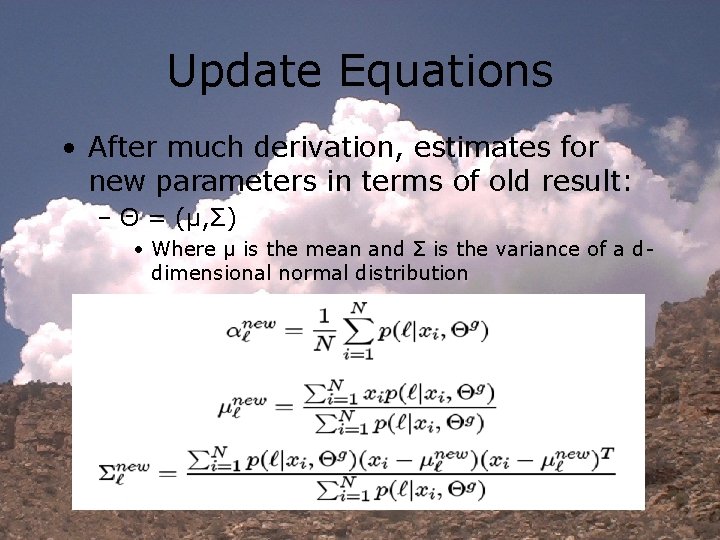

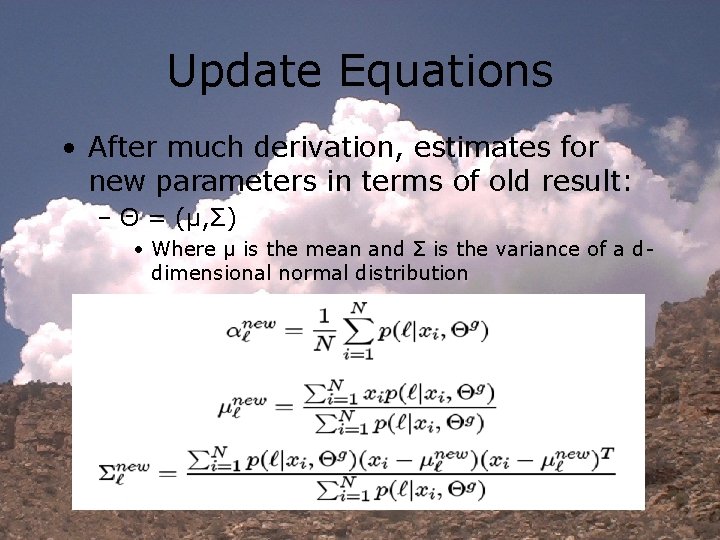
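The hidden labels Y are never observed, but given a current parameter guess one can compute how probable each label is for each data item. A minimal sketch of that computation, assuming a two-component 1-D Gaussian mixture; the function names, parameter values, and data are hypothetical:

```python
import math

def gaussian_pdf(x, mu, var):
    """Density of a 1-D normal distribution with mean mu and variance var."""
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def responsibilities(data, weights, means, variances):
    """Posterior probability that each component generated each data item."""
    result = []
    for x in data:
        # Joint weight of (component, x) for every component.
        joint = [w * gaussian_pdf(x, m, v)
                 for w, m, v in zip(weights, means, variances)]
        total = sum(joint)
        result.append([j / total for j in joint])
    return result

data = [0.1, -0.2, 4.9, 5.2]  # made-up observations
resp = responsibilities(data, [0.5, 0.5], [0.0, 5.0], [1.0, 1.0])
for x, row in zip(data, resp):
    print(x, row)
```

Each row sums to one, and items near 0 are attributed almost entirely to the first component while items near 5 go to the second.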

Update Equations
• After much derivation, estimates for the new parameters in terms of the old ones result:
  – Θ = (μ, Σ)
• Where μ is the mean and Σ is the covariance matrix of a d-dimensional normal distribution
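The slide's own equations are not reproduced in the text, so the following is only a sketch of the commonly used Gaussian-mixture updates, simplified to one dimension (a scalar variance instead of Σ). The mixing weights, the variance floor, the starting values, and the synthetic data are all assumptions made for illustration.

```python
import math
import random

def gaussian_pdf(x, mu, var):
    """Density of a 1-D normal distribution with mean mu and variance var."""
    return math.exp(-((x - mu) ** 2) / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

def log_likelihood(data, weights, means, variances):
    """Log-likelihood of the observed data under the mixture."""
    return sum(
        math.log(sum(w * gaussian_pdf(x, m, v)
                     for w, m, v in zip(weights, means, variances)))
        for x in data
    )

def em_step(data, weights, means, variances):
    """One EM iteration: new parameters computed from the old ones."""
    k, n = len(weights), len(data)
    # E step: posterior probability of each component for each item.
    resp = []
    for x in data:
        joint = [w * gaussian_pdf(x, m, v)
                 for w, m, v in zip(weights, means, variances)]
        total = sum(joint)
        resp.append([j / total for j in joint])
    # M step: weighted averages using those posteriors.
    new_w, new_m, new_v = [], [], []
    for j in range(k):
        nj = sum(r[j] for r in resp)
        mu = sum(r[j] * x for r, x in zip(resp, data)) / nj
        var = sum(r[j] * (x - mu) ** 2 for r, x in zip(resp, data)) / nj
        new_w.append(nj / n)
        new_m.append(mu)
        new_v.append(max(var, 1e-6))  # assumed floor to avoid a collapsed component
    return new_w, new_m, new_v

random.seed(0)
data = ([random.gauss(0.0, 1.0) for _ in range(200)]
        + [random.gauss(6.0, 1.0) for _ in range(200)])  # synthetic data
weights, means, variances = [0.5, 0.5], [1.0, 4.0], [1.0, 1.0]
history = [log_likelihood(data, weights, means, variances)]
for _ in range(30):
    weights, means, variances = em_step(data, weights, means, variances)
    history.append(log_likelihood(data, weights, means, variances))
print(weights, means, variances)
```

Tracking the log-likelihood in `history` gives a simple sanity check: it should never decrease from one iteration to the next. Different starting values can lead to a different local maximum.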