Evidencing Value in Teaching and Learning: [going with and beyond] impact, instrumentality and indicators

![Evidencing Value in Teaching and Learning: [going with and beyond] impact, instrumentality and indicators Evidencing Value in Teaching and Learning: [going with and beyond] impact, instrumentality and indicators](https://slidetodoc.com/presentation_image_h/0882e6c4463f9abf62bf9a35170a6168/image-1.jpg)

Evidencing Value in Teaching and Learning: [going with and beyond] impact, instrumentality and indicators

Roni Bamber, April 2016

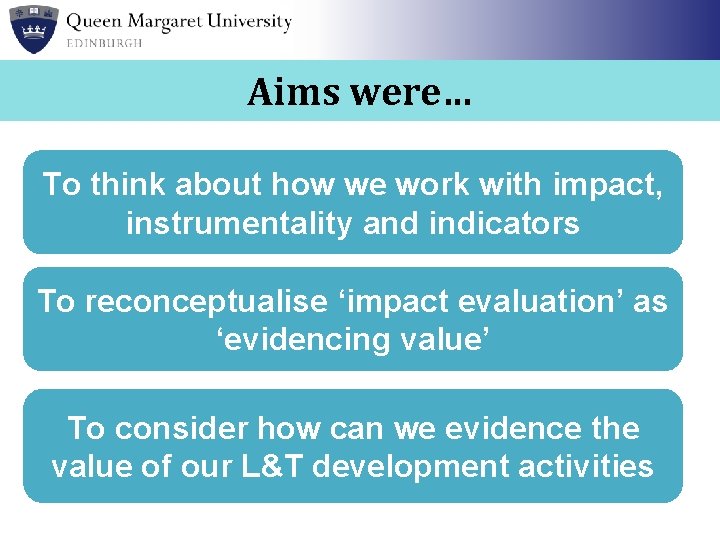

Aims
• To think about how we work with impact, instrumentality and indicators
• To reconceptualise ‘impact evaluation’ as ‘evidencing value’
• To consider how we can evidence the value of our L&T development activities

Evaluating – Evidencing

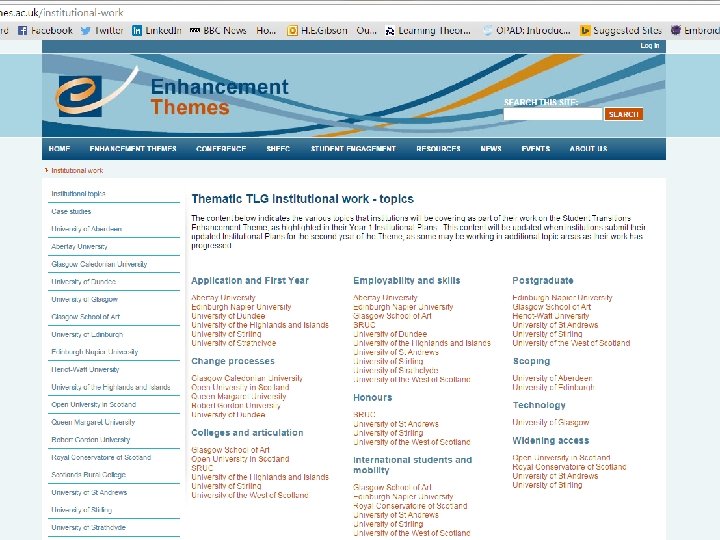

Student Transitions Enhancement Theme
• Into HE
• Through HE
• Out of HE

Image: http://commons.wikimedia.org/wiki/File:Nymphalidae_-_Danaus_plexippus_Chrysalis-1.JPG

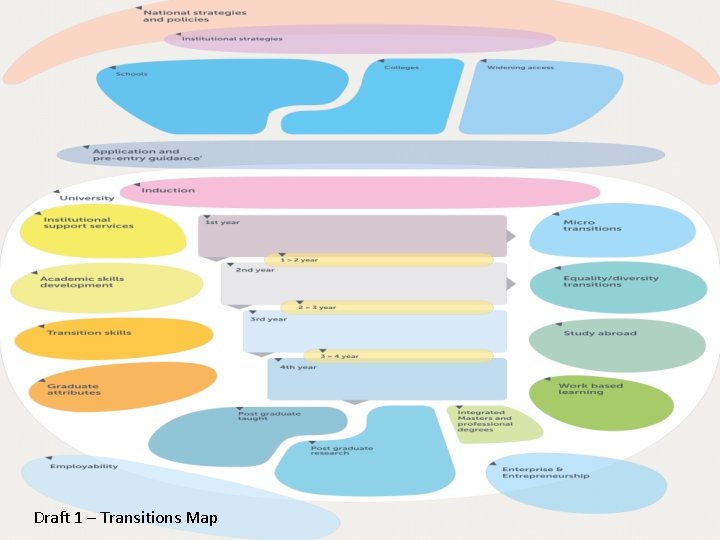

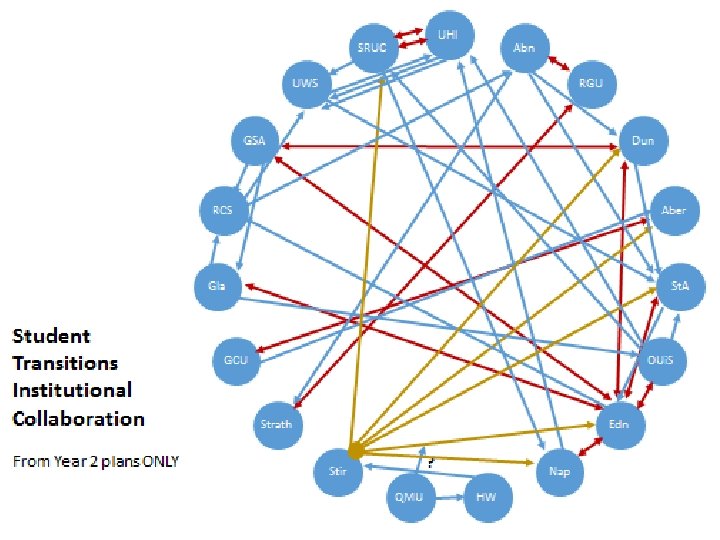

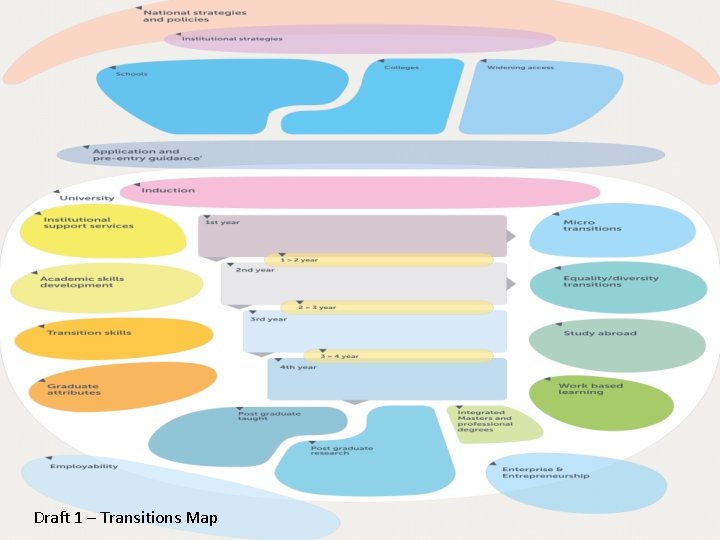

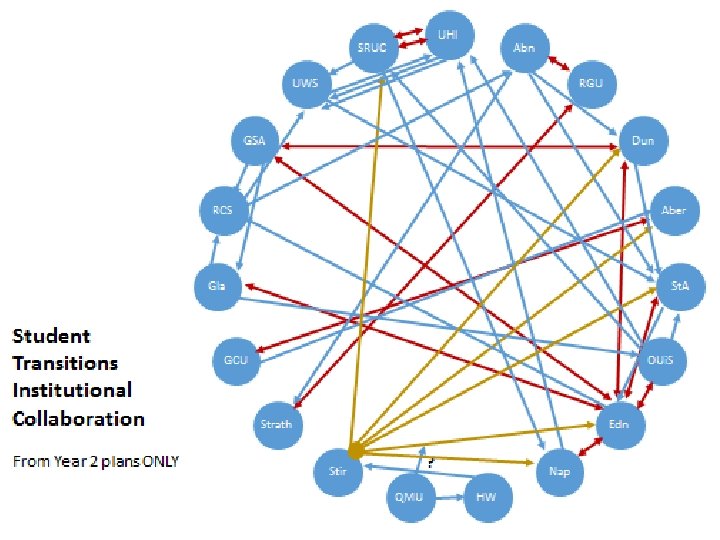

Draft 1 – Transitions Map

Student Network Projects:
• Non-traditional students
• Students’ perspectives on transition

Transition skills and strategies

Institutional syntheses / case studies

EXAMPLE ACTIVITIES

1 How can we work with impact, instrumentality and indicators?

The Impact Myth

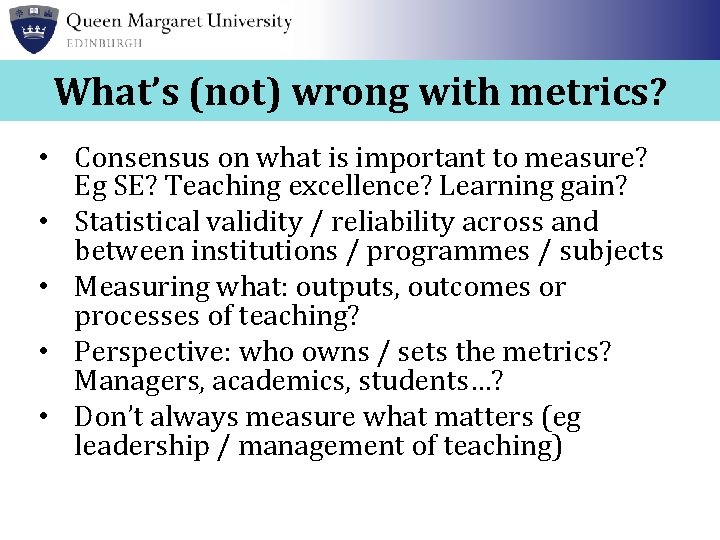

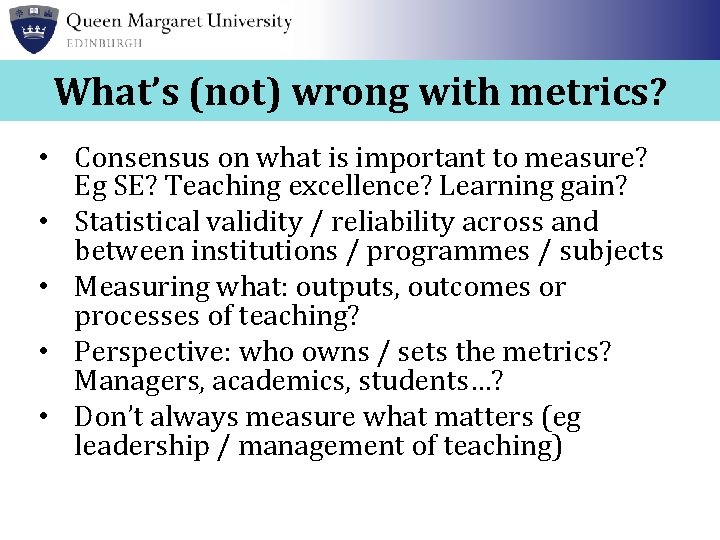

What’s (not) wrong with metrics?
• Consensus on what is important to measure? Eg SE? Teaching excellence? Learning gain?
• Statistical validity / reliability across and between institutions / programmes / subjects
• Measuring what: outputs, outcomes or processes of teaching?
• Perspective: who owns / sets the metrics? Managers, academics, students…?
• Don’t always measure what matters (eg leadership / management of teaching)

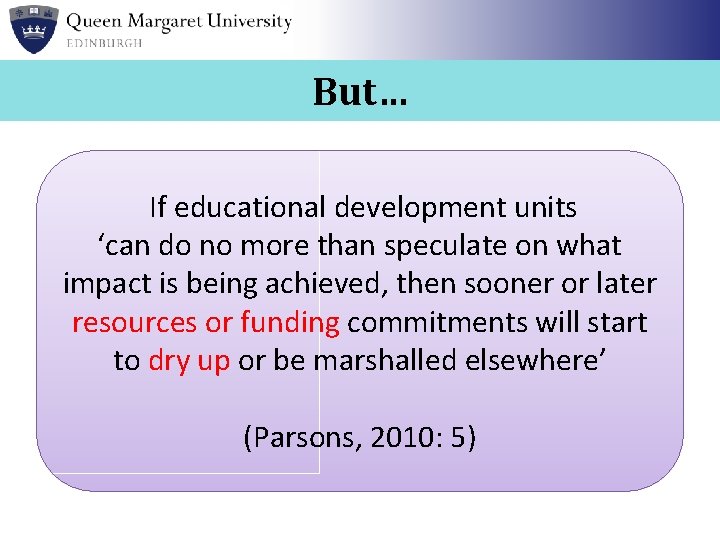

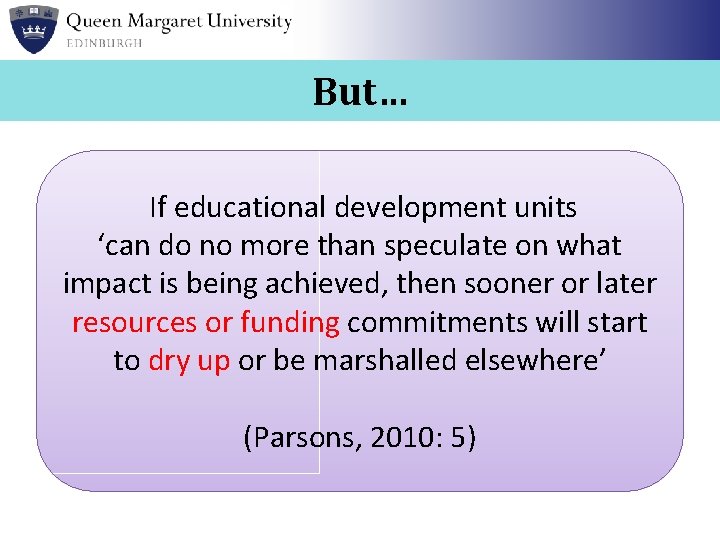

But… If educational development units ‘can do no more than speculate on what impact is being achieved, then sooner or later resources or funding commitments will start to dry up or be marshalled elsewhere’ (Parsons, 2010: 5)

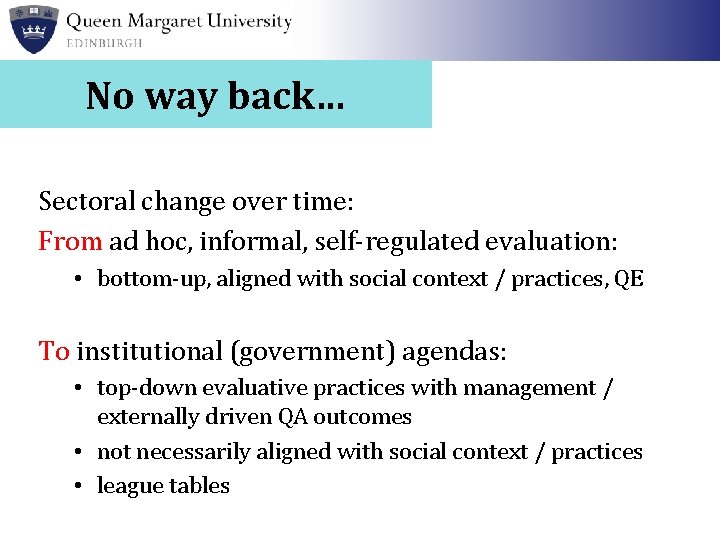

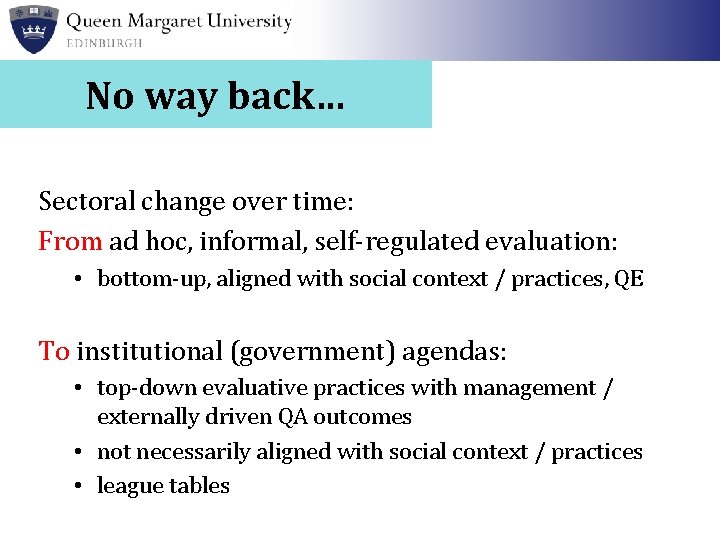

No way back…

Sectoral change over time:

From ad hoc, informal, self-regulated evaluation:
• bottom-up, aligned with social context / practices, QE

To institutional (government) agendas:
• top-down evaluative practices with management / externally driven QA outcomes
• not necessarily aligned with social context / practices
• league tables

Managerialism, accountability, education

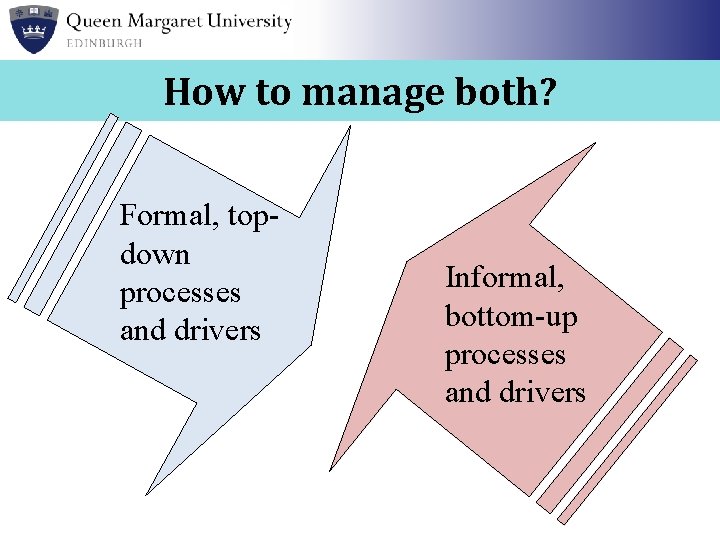

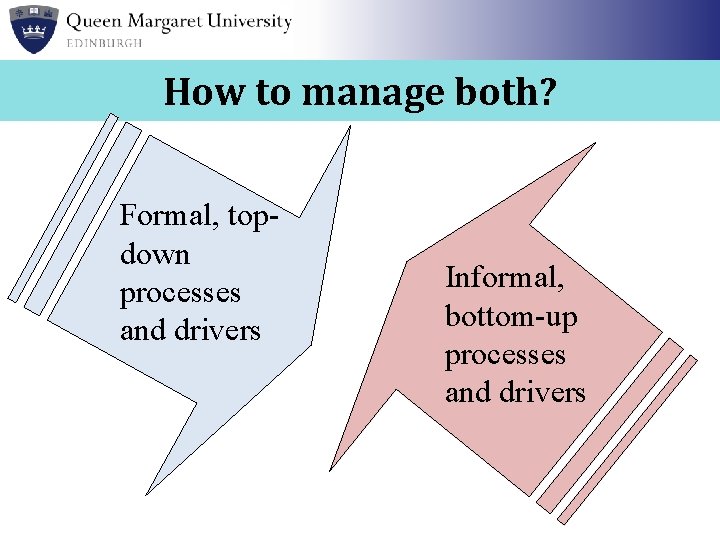

How to manage both?
• Formal, top-down processes and drivers
• Informal, bottom-up processes and drivers

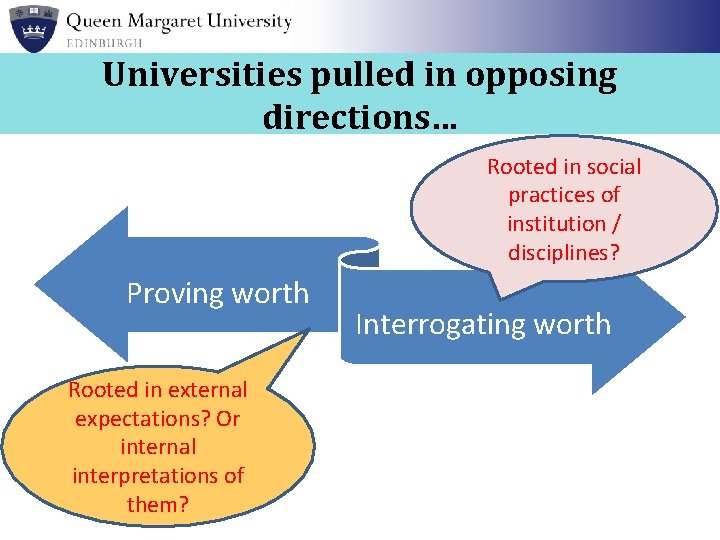

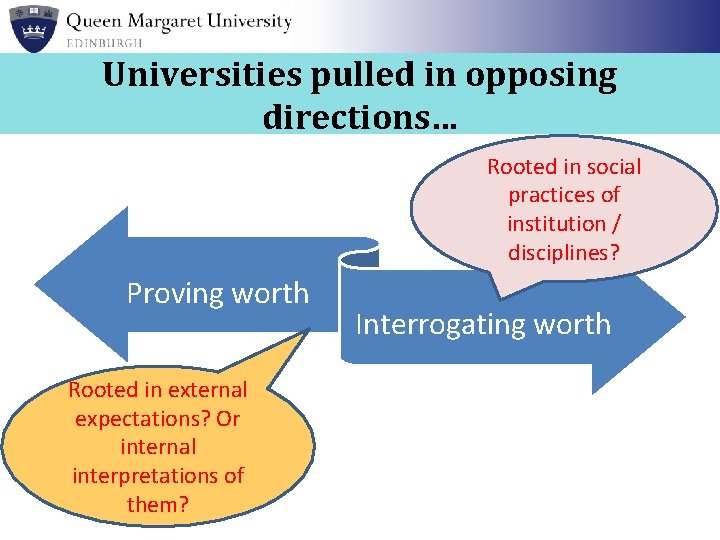

Universities pulled in opposing directions…
• Rooted in social practices of institution / disciplines?
• Proving worth
• Rooted in external expectations? Or internal interpretations of them?
• Interrogating worth

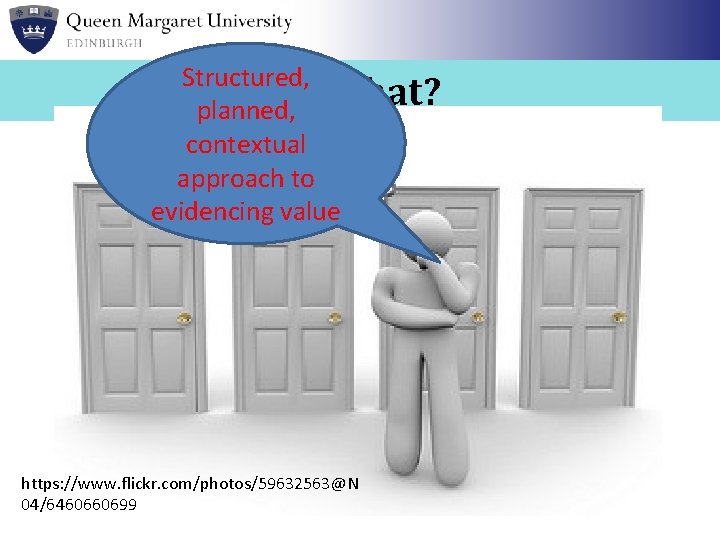

So what?

Structured, planned, contextual approach to evidencing value

Image: https://www.flickr.com/photos/59632563@N04/6460660699

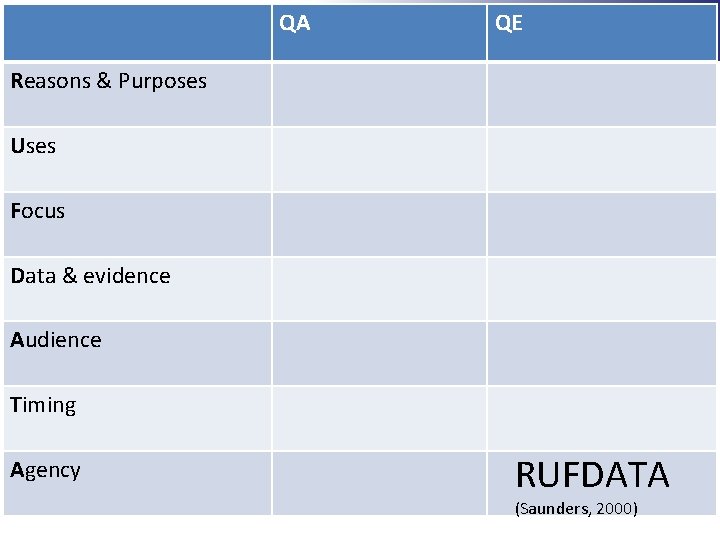

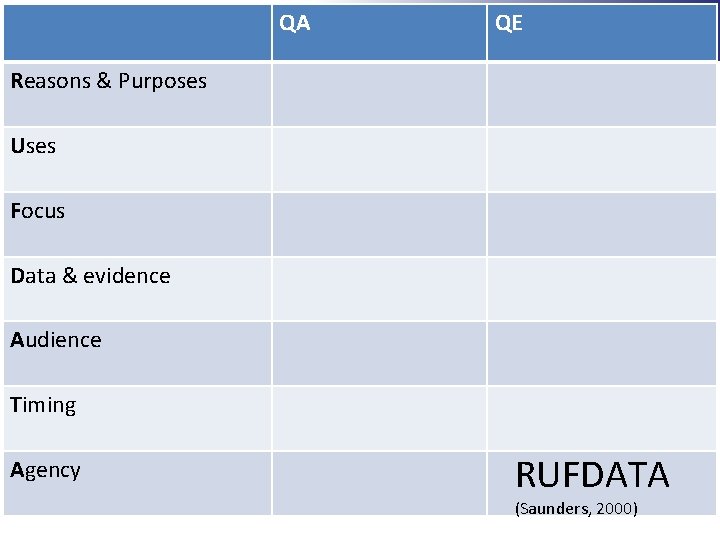

RUFDATA (Saunders, 2000)

QA / QE
• Reasons & Purposes
• Uses
• Focus
• Data & evidence
• Audience
• Timing
• Agency

2 How to reconceptualise ‘impact evaluation’ as ‘evidencing value’?

Evidence
• ‘a guide to truth: evidence as sign, symptom, or mark’ (Kelly, 2008)
• Evidence-informed practice

What constitutes good evidence? Nutley et al (2013)
• Depends on what we want to know, for what purposes and context of use
• Need range of types / qualities of evidence for range of contexts
• ‘Evidence journey’ – always under development

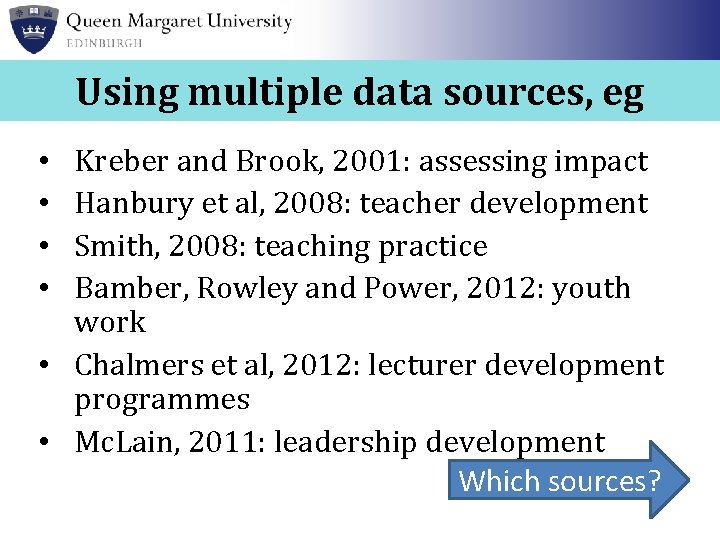

Using multiple data sources, eg
• Kreber and Brook, 2001: assessing impact
• Hanbury et al, 2008: teacher development
• Smith, 2008: teaching practice
• Bamber, Rowley and Power, 2012: youth work
• Chalmers et al, 2012: lecturer development programmes
• McLain, 2011: leadership development

Which sources?

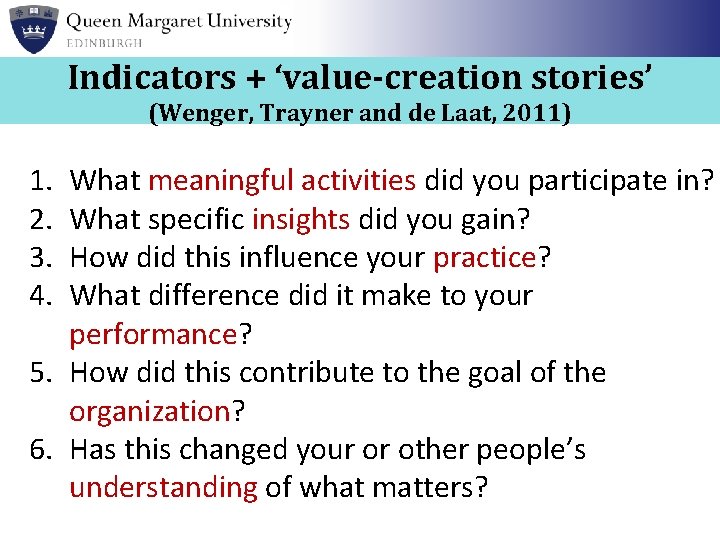

Indicators + ‘value-creation stories’ (Wenger, Trayner and de Laat, 2011)
1. What meaningful activities did you participate in?
2. What specific insights did you gain?
3. How did this influence your practice?
4. What difference did it make to your performance?
5. How did this contribute to the goal of the organization?
6. Has this changed your or other people’s understanding of what matters?

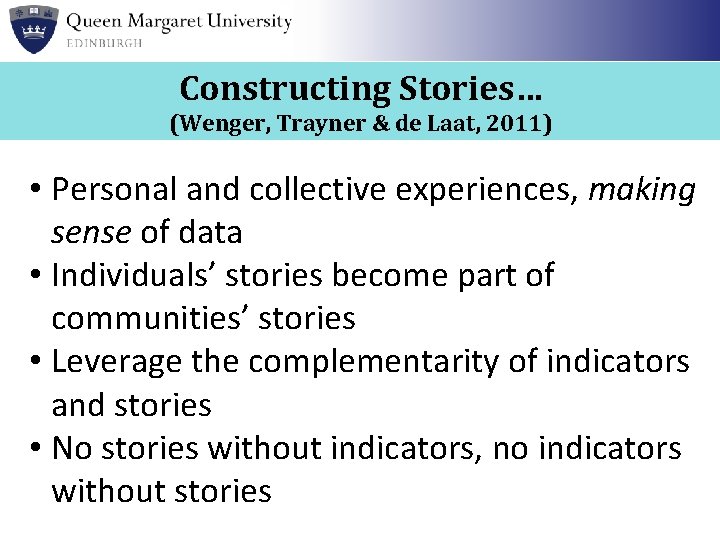

Constructing Stories… (Wenger, Trayner & de Laat, 2011)
• Personal and collective experiences, making sense of data
• Individuals’ stories become part of communities’ stories
• Leverage the complementarity of indicators and stories
• No stories without indicators, no indicators without stories

Put the stories into the mix of evidence

3 How can we evidence the value of our L&T development activities?

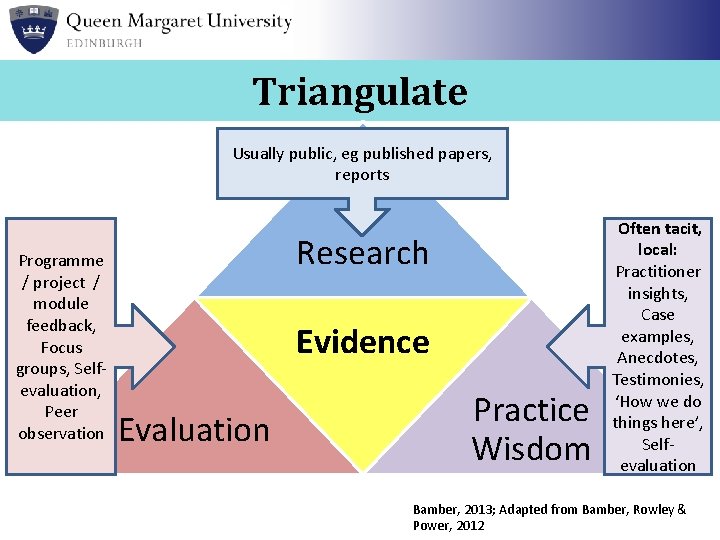

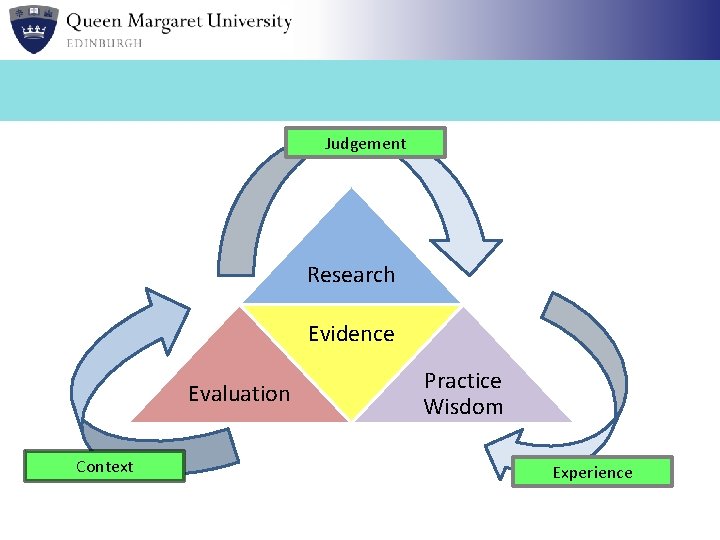

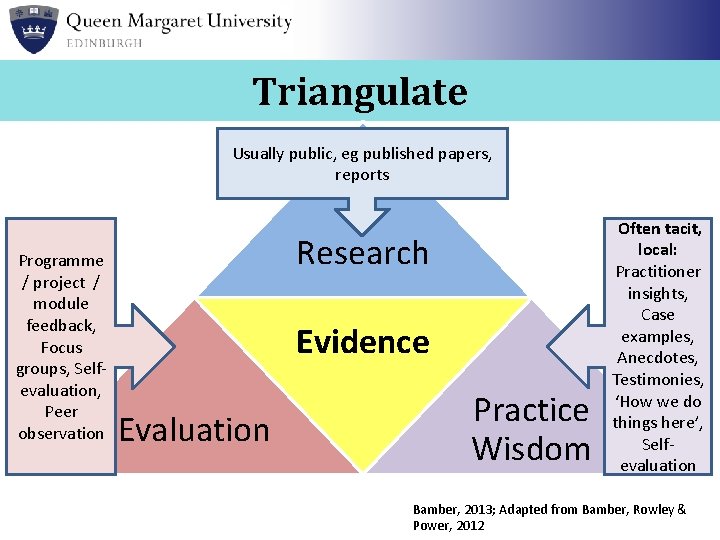

The Evidence Mix
• Research Evidence
• Evaluation
• Practice Wisdom

(Bamber, 2013; adapted from Bamber, Rowley & Power, 2012)

Triangulate
• Research Evidence: usually public, eg published papers, reports
• Evaluation: programme / project / module feedback, focus groups, self-evaluation, peer observation
• Practice Wisdom: often tacit, local: practitioner insights, case examples, anecdotes, testimonies, ‘How we do things here’, self-evaluation

(Bamber, 2013; adapted from Bamber, Rowley & Power, 2012)

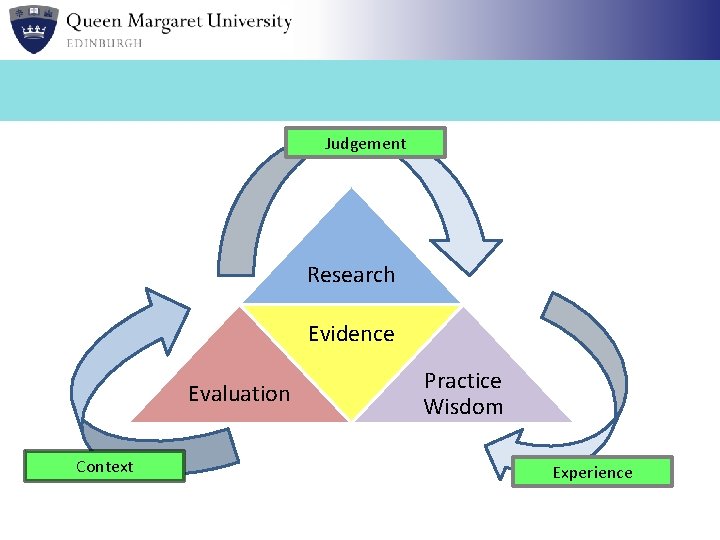

Judgement (diagram labels): Research Evidence, Evaluation, Practice Wisdom, Context, Experience

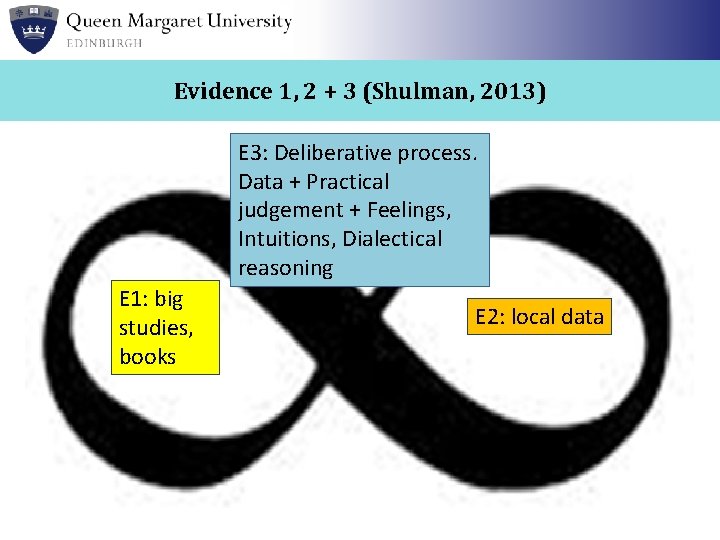

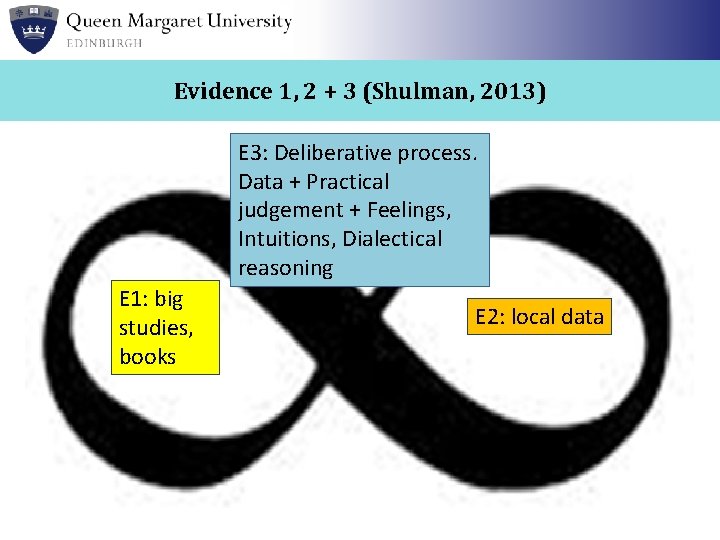

Evidence 1, 2 + 3 (Shulman, 2013)
• E1: big studies, books
• E2: local data
• E3: Deliberative process. Data + Practical judgement + Feelings, Intuitions, Dialectical reasoning

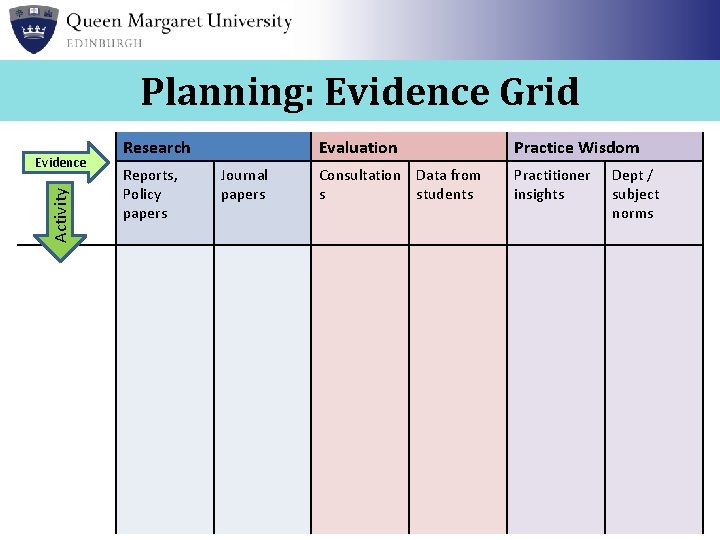

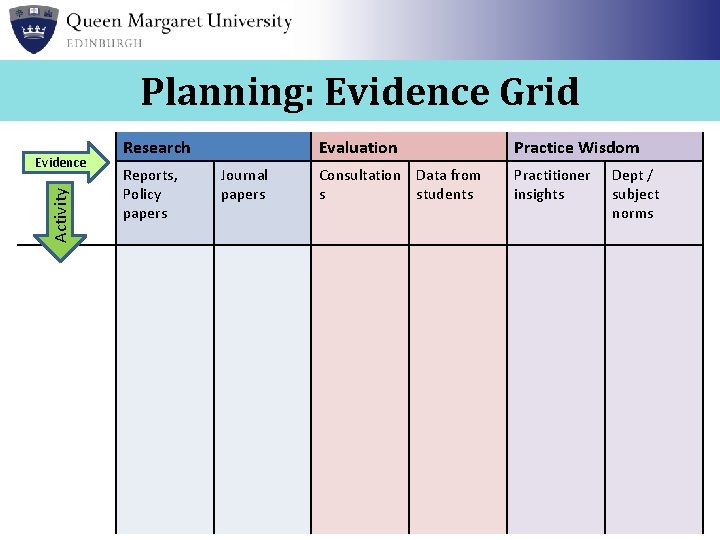

Planning: Evidence Grid (Activity / Evidence)

| Evidence type | Sources |
| --- | --- |
| Research | Reports, policy papers; Journal papers |
| Evaluation | Consultation; Data from students |
| Practice Wisdom | Practitioner insights; Dept / subject norms |

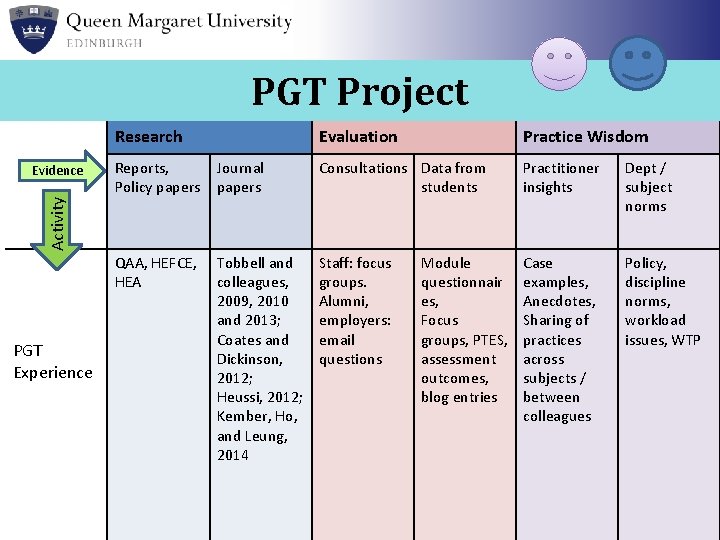

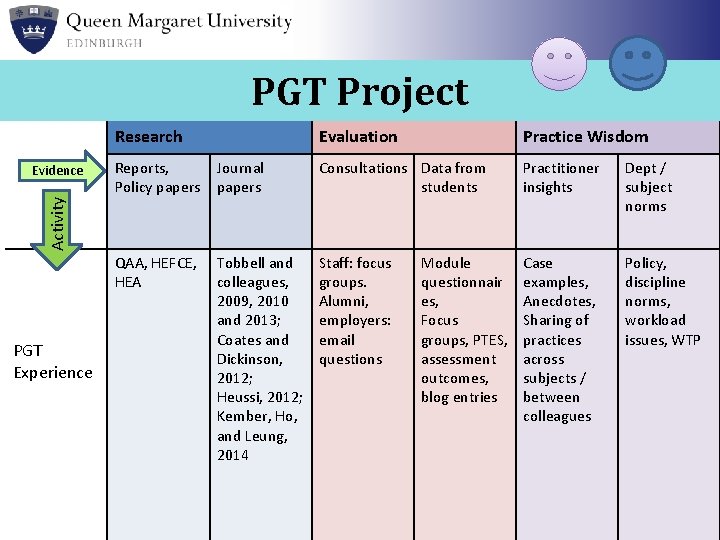

PGT Project (Activity: PGT Experience)

| Evidence type | Source | Evidence |
| --- | --- | --- |
| Research | Reports, policy papers | QAA, HEFCE, HEA |
| Research | Journal papers | Tobbell and colleagues, 2009, 2010 and 2013; Coates and Dickinson, 2012; Heussi, 2012; Kember, Ho, and Leung, 2014 |
| Evaluation | Consultations | Staff: focus groups. Alumni, employers: email questions |
| Evaluation | Data from students | Module questionnaires, focus groups, PTES, assessment outcomes, blog entries |
| Practice Wisdom | Practitioner insights | Case examples, anecdotes, sharing of practices across subjects / between colleagues |
| Practice Wisdom | Dept / subject norms | Policy, discipline norms, workload issues, WTP |

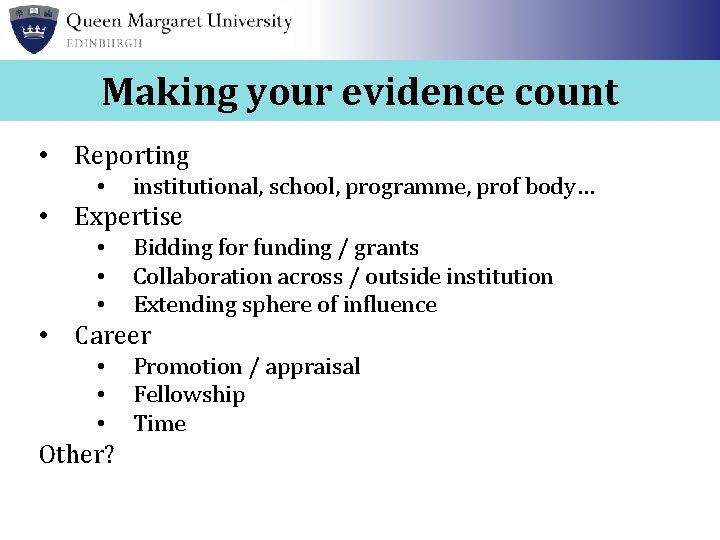

Making your evidence count
• Reporting: institutional, school, programme, prof body…
• Expertise: bidding for funding / grants; collaboration across / outside institution; extending sphere of influence
• Career: promotion / appraisal; fellowship; time
• Other?

Making your evidence count For self-confidence: ‘we had underestimated the lack of confidence of people… “T&L is just what I do” – they’re not confident about doing all the other stuff that is in the framework’ (HEA, 2013)

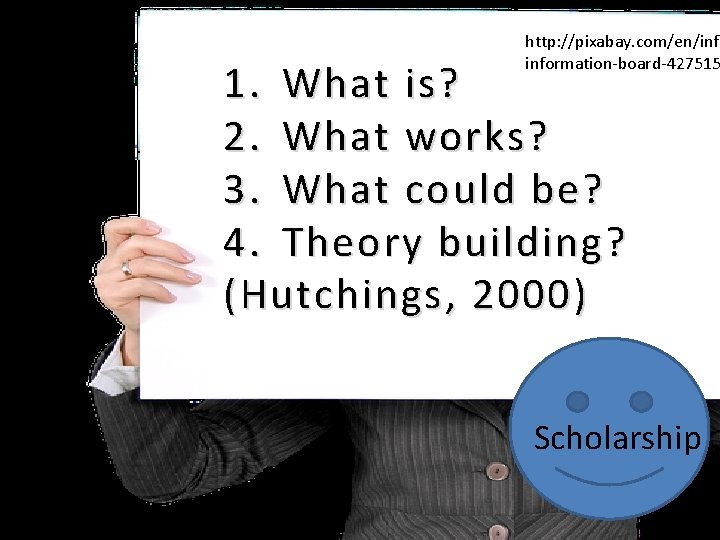

Image: http://pixabay.com/en/information-board-427515

Mapping onto Nelson’s Groups (DATE)

ANYTHING TO SAY RE MY ANALYSIS?

Hutchings (2000):
1. What is?
2. What works?
3. What could be?
4. Theory building?

Nelson’s groups:
• Reports on particular classes
• Reflections on years of teaching experience
• Larger contexts, comparisons
• Formal research
• Summaries and analyses of prior studies

Scholarship

Aims were…
• To think about how we work with impact, instrumentality and indicators
• To reconceptualise ‘impact evaluation’ as ‘evidencing value’
• To consider how we can evidence the value of our L&T development activities

References

Bamber, V. (Ed) (2013). Evidencing the Value of Educational Development. SEDA Special No 34. ISBN 978-1-902435-56-5

Bamber, J., Rowley, C. & Power, A. (2012). Speaking evidence to youth work – and vice versa. Journal of Youth Work – research and positive practices in work with young people, 10, pp. 37-56.

HEA (2013). Promoting teaching: Making evidence count. York: HEA.

Hutchings, P. (2000). Opening lines: Approaches to the scholarship of teaching and learning. Menlo Park, CA: Carnegie Foundation.

Kelly, T. (2008). Evidence. In The Stanford Encyclopedia of Philosophy, E. N. Zalta (Ed). http://plato.stanford.edu/archives/fall2008/entries/evidence/ (Last accessed 12-1-2013).

Nutley, S., Powell, A. & Davies, H. (2013). What counts as good evidence? Provocation paper for the alliance for useful evidence. St Andrews: Research Unit for Research Utilisation.

Parsons, D. (2010). HOST Research and Guidance Series. Number Three: a guide to impact assessment. Host Policy Research: Horsham, UK.

Saunders, M. (2000). Beginning an Evaluation with RUFDATA: Theorizing a Practical Approach to Evaluation Planning. Journal of Evaluation, Vol 6 (1): 7-21.

Saunders, M., Trowler, P. & Bamber, V. (2011). Reconceptualising Evaluative Practices in Higher Education. London: Open University Press.

Wenger, E., Trayner, B. & de Laat, M. (2011). Promoting and assessing value creation in communities and networks: A conceptual framework. Rapport 18, Ruud de Moor Centrum, Open University of the Netherlands, Heerlen, Netherlands.

Thank You

Questions and Comments?